3. Simulations

Due to the nature of the tune estimation problem, any BBQ data that has been collected thus far contains the spectra from the BBQ system and tune estimates from the BQ algorithm. Thus, when using logged data, it cannot be assumed that the logged tune values are correct. Because of this limitation, another source of paired spectra and tune values had to be obtained to train and test the ML approaches. Previous work approached this problem by noting that, since the motion of a particle in a circular accelerator can be described by a Hill’s type equation, the shape of a frequency spectrum obtained by the BBQ system can be approximated by a second order system simulation [7].

The first part of this work continues to use simulations, this time to generate a large dataset of spectrum and tune value pairs which can be used to train neural network models. Specifically, the frequency spectrum of a second order system is given by the following formula:

$$|G(\omega)| = \frac{\omega_0^2}{\sqrt{(2\zeta\omega_0\omega)^2 + (\omega_0^2 - \omega^2)^2}} + \mathcal{N}(0, \sigma) \tag{1}$$

where we can obtain $\omega_0$ by using the following:

$$\omega_{res} = \omega_0\sqrt{1 - 2\zeta^2} \tag{2}$$

In Equation (1), $\mathcal{N}(0, \sigma)$ denotes an additive Gaussian noise term with zero mean and $\sigma$ standard deviation, while $\zeta$ is the damping factor which controls the width of the resonance peak obtained. In addition, a finite value of $\zeta$ also shifts the true position of the resonance in $|G(\omega)|$ according to Equation (2).
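As a minimal sketch of how such a spectrum could be computed, the snippet below evaluates the standard magnitude response of a second order system and adds zero-mean Gaussian noise. The function name, the frequency grid and all numerical values are hypothetical and chosen only for illustration; this is not the simulator used in this work.

```python
import numpy as np


def second_order_spectrum(omega, omega_res, zeta, sigma, rng=None):
    """Magnitude response of a second order system plus Gaussian noise.

    omega_res is the desired resonance position; the natural frequency
    omega_0 is derived from it so that the peak lands at omega_res.
    """
    if not 0.0 < zeta < 1.0 / np.sqrt(2.0):
        raise ValueError("a resonance peak requires 0 < zeta < 1/sqrt(2)")
    rng = np.random.default_rng() if rng is None else rng
    # Invert omega_res = omega_0 * sqrt(1 - 2 zeta^2) to get omega_0.
    omega_0 = omega_res / np.sqrt(1.0 - 2.0 * zeta**2)
    magnitude = omega_0**2 / np.sqrt(
        (2.0 * zeta * omega_0 * omega) ** 2 + (omega_0**2 - omega**2) ** 2
    )
    return magnitude + rng.normal(0.0, sigma, size=omega.shape)


# Hypothetical example: 100 bins over an arbitrary angular-frequency window.
omega = np.linspace(1.0, 2.0, 100)
spectrum = second_order_spectrum(omega, omega_res=1.4, zeta=0.01, sigma=0.0)
peak_bin = int(np.argmax(spectrum))
```

With the noise switched off, the maximum of the returned spectrum falls on the bin closest to the requested resonance, which is the property that makes the simulated tune label exact.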

All of the ML models considered in this work require a fixed length input and can only provide a fixed length output. For the models that estimate the tune from a frequency spectrum, it would have been possible to feed in the entire spectrum; however, this would require a model with a large input length, implying a large number of parameters to train. This approach is not necessary since, in real operational conditions, the spectral region in which to find the tune frequency is generally well known.

In this work, the frequency window was chosen to be 100 frequency bins long, while guaranteeing that the tune peak lies within this frequency window. This value was chosen to be slightly larger than the frequency windows chosen during real machine operation using the BQ algorithm. The average operational frequency window obtained from a sample of parameters used in the BBQ system for the beam during FLATTOP is around 80 frequency bins long. It was empirically observed however that sometimes the dominant peak lay close to the edges of the chosen window, which subsequently limits the performance of the BQ algorithm.

Figure 4 compares the new window length to that used in operation. As can be observed, the tune estimates around the 15 s mark are close to the edge of the operational window. This example also paints a clearer picture of the bounds on the inputs used throughout this work: in all cases, the chosen input size is adequate and representative of real operation.
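The windowing described above can be sketched as follows. How the 100-bin window was actually positioned is not stated here, so centring it on the operational window and shifting it back inside the spectrum at the edges are assumptions; the function name and the bin indices are hypothetical.

```python
import numpy as np

WINDOW_BINS = 100  # window length used in this work


def fixed_length_window(spectrum, op_start, op_stop, n_bins=WINDOW_BINS):
    """Return (start, stop) of an n_bins-long window centred on the
    operational window [op_start, op_stop)."""
    if len(spectrum) < n_bins:
        raise ValueError("spectrum is shorter than the requested window")
    centre = (op_start + op_stop) // 2
    start = centre - n_bins // 2
    # Shift the window back inside the spectrum if it overruns either end.
    start = max(0, min(start, len(spectrum) - n_bins))
    return start, start + n_bins


# Hypothetical example: an 80-bin operational window in a 1024-bin spectrum.
full_spectrum = np.zeros(1024)
start, stop = fixed_length_window(full_spectrum, op_start=300, op_stop=380)
window = full_spectrum[start:stop]
```

In this example the 80-bin operational window sits 10 bins away from each edge of the wider window, which gives the margin motivated by the peaks observed near the operational window edges.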

It is important to note that the absolute magnitudes of the spectra are not needed. For example, the vertical axis in Figure 1 is calculated by the BBQ system to be representative of the real power in each frequency bin. For training neural networks, however, the input data must be normalised to lie in either the range $[0, 1]$ or $[-1, 1]$. This is due to the type of activation functions that are used between layers in a neural network, which are designed to operate in normalised space. Consequently, the real power of the spectra need not be generated by the simulator. Another important detail is that the value of the tune, while equivalent to $\omega_{res}$, had to be normalised with respect to the frequency window passed to the model. This is not detrimental to the operation of the model, as the frequency window is chosen by the operators and the real value of the tune can easily be recovered in Hertz.
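A minimal sketch of both normalisation steps is given below. Min-max scaling to $[0, 1]$ is assumed here as one possible choice, and the function names and window bounds in Hertz are hypothetical.

```python
import numpy as np


def normalise_spectrum(window):
    """Rescale a clipped spectrum to the range [0, 1] (min-max scaling)."""
    window = np.asarray(window, dtype=float)
    span = window.max() - window.min()
    if span == 0.0:
        return np.zeros_like(window)  # flat input: nothing to scale
    return (window - window.min()) / span


def tune_to_normalised(f_tune, f_lo, f_hi):
    """Express a tune frequency as a fraction of the window [f_lo, f_hi]."""
    return (f_tune - f_lo) / (f_hi - f_lo)


def tune_to_hertz(t_norm, f_lo, f_hi):
    """Map a normalised tune back to Hertz for the same window."""
    return f_lo + t_norm * (f_hi - f_lo)


# Hypothetical window bounds and tune frequency, in Hz.
f_lo, f_hi = 3000.0, 3500.0
t_norm = tune_to_normalised(3125.0, f_lo, f_hi)
f_back = tune_to_hertz(t_norm, f_lo, f_hi)
scaled = normalise_spectrum([2.0, 4.0, 6.0])
```

Because the mapping is linear in the window bounds, a model trained on normalised tunes can be used with any operator-chosen window, with the conversion to Hertz applied afterwards.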

By performing a Monte Carlo simulation of the $\omega_{res}$ and $\zeta$ required by the second order model as shown in Equation (1), we can explore a myriad of possible spectra, with an exact value of the resonant frequency for each spectrum. $\omega_{res}$ was sampled from the bounds of the frequency window in radians and $\zeta$ was sampled from a uniform distribution. The normalised amplitude of the injected 50 Hz harmonics was also drawn at random and, after adding the harmonics to the second order spectrum, a simple linear digital filter of size 3 was passed forward and backward over the spectrum in order to give width to the harmonics, as can be observed in Figure 1. The spectrum is then normalised again, and $\omega_{res}$ is found in terms of the normalised frequency range. Hence, by using this generated dataset, we can expect the ANN to generalise well and provide a robust tool which can reliably estimate the tune even from a BBQ spectrum directly.
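The generation procedure can be sketched as below. All sampling bounds, the noise level, the harmonic spacing in bins and the filter taps are hypothetical placeholders, and the size-3 filter is a simple smoothing kernel standing in for the filter used in this work.

```python
import numpy as np


def normalise(x):
    """Min-max scale to [0, 1]."""
    return (x - x.min()) / (x.max() - x.min())


def smooth_forward_backward(x, taps):
    """Apply a short FIR filter forward, then backward over the result."""
    forward = np.convolve(x, taps, mode="same")
    return np.convolve(forward[::-1], taps, mode="same")[::-1]


def simulate_pair(rng, n_bins=100, w_lo=1.0, w_hi=2.0,
                  zeta_bounds=(0.005, 0.05),       # hypothetical bounds
                  sigma=0.5,                       # hypothetical noise level
                  harmonic_spacing=10,             # hypothetical, in bins
                  harmonic_amp_bounds=(0.0, 0.5),  # hypothetical bounds
                  taps=(0.25, 0.5, 0.25)):         # hypothetical size-3 filter
    omega = np.linspace(w_lo, w_hi, n_bins)
    # Monte Carlo draw of the resonance position and damping factor.
    omega_res = rng.uniform(w_lo, w_hi)
    zeta = rng.uniform(*zeta_bounds)
    omega_0 = omega_res / np.sqrt(1.0 - 2.0 * zeta**2)
    spectrum = omega_0**2 / np.sqrt(
        (2.0 * zeta * omega_0 * omega) ** 2 + (omega_0**2 - omega**2) ** 2
    )
    spectrum = normalise(spectrum + rng.normal(0.0, sigma, n_bins))
    # Inject equally spaced harmonics with a random normalised amplitude.
    offset = rng.integers(0, harmonic_spacing)
    spectrum[offset::harmonic_spacing] += rng.uniform(*harmonic_amp_bounds)
    # Give the harmonics some width, then normalise again.
    spectrum = normalise(smooth_forward_backward(spectrum, np.asarray(taps)))
    # Label: resonance position as a fraction of the frequency window.
    tune = (omega_res - w_lo) / (w_hi - w_lo)
    return spectrum, tune


def simulate_dataset(n_samples, seed=0):
    rng = np.random.default_rng(seed)
    pairs = [simulate_pair(rng) for _ in range(n_samples)]
    spectra = np.stack([s for s, _ in pairs])
    tunes = np.array([t for _, t in pairs])
    return spectra, tunes


spectra, tunes = simulate_dataset(1000)
```

Each row of `spectra` is a 100-bin normalised spectrum and the matching entry of `tunes` is its exact normalised resonance, which is the pairing that logged BBQ data cannot provide.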

Figure 5 illustrates a normalised real spectrum clipped to the relevant frequency window, along with a second order simulation. The procedure described above was performed iteratively to locate suitable values of $\omega_{res}$, $\zeta$ and $\sigma$ for the simulated spectrum. As can be observed, the shape of the simulated tune peak matches that observed in real BBQ spectra.

Author Contributions

Conceptualisation, L.G., G.V.; methodology, L.G., G.V. and D.A.; software, L.G.; validation, L.G., G.V. and D.A.; formal analysis, L.G.; investigation, L.G.; resources, L.G. and G.V.; data curation, L.G.; writing—original draft preparation, L.G.; writing—review and editing, L.G., G.V. and D.A.; visualisation, L.G.; supervision, G.V. and D.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was carried out in association with CERN.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

We would like to thank Thibaut Lefevre, Manuel Gonzalez Berges, Stephen Jackson, Marek Gasior and Tom Levens for their contributions in acquiring resources and reviewing the results obtained in this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Baird, S. Accelerators for Pedestrians; Technical Report AB-Note-2007-014. CERN-AB-Note-2007-014. PS-OP-Note-95-17-Rev-2. CERN-PS-OP-Note-95-17-Rev-2; CERN: Meyrin, Switzerland, 2007.

2. Steinhagen, R.J. LHC Beam Stability and Feedback Control-Orbit and Energy. Ph.D. Thesis, RWTH Aachen University, 2007. Available online: https://cds.cern.ch/record/1054826 (accessed on 29 April 2021).

3. Gasior, M.; Jones, R. High sensitivity tune measurement by direct diode detection. In Proceedings of the 7th European Workshop on Beam Diagnostics and Instrumentation for Particle Accelerators (DIPAC 2005), Lyons, France, 6–8 June 2005; p. 4.

4. Gasior, M. Tuning the LHC. BE Newsletter 2012, 4, 5–6.

5. Alemany, R.; Lamont, M.; Page, S. Functional specification: LHC Modes; Technical Report LHC-OP-ES-0005; CERN: Meyrin, Switzerland, 2007.

6. Kostoglou, S.; Arduini, G.; Papaphilippou, Y.; Sterbini, G.; Intelisano, L. Origin of the 50 Hz harmonics in the transverse beam spectrum of the Large Hadron Collider. Phys. Rev. Accel. Beams 2021, 24, 034001.

7. Grech, L.; Valentino, G.; Alves, D.; Gasior, M.; Jackson, S.; Jones, R.; Levens, T.; Wenninger, J. An Alternative Processing Algorithm for the Tune Measurement System in the LHC. In Proceedings of the 9th International Beam Instrumentation Conference (IBIC 2020), Santos, Brazil, 14–18 September 2020.

8. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 29 April 2021).

9. Lindsay, G.W. Convolutional Neural Networks as a Model of the Visual System: Past, Present, and Future. J. Cogn. Neurosci. 2020, 1–15.

10. Shrivastava, A.; Pfister, T.; Tuzel, O.; Susskind, J.; Wang, W.; Webb, R. Learning from simulated and unsupervised images through adversarial training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2107–2116.

11. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems 27, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

12. Matsuoka, K. Noise injection into inputs in back-propagation learning. IEEE Trans. Syst. Man Cybern. 1992, 22, 436–440.

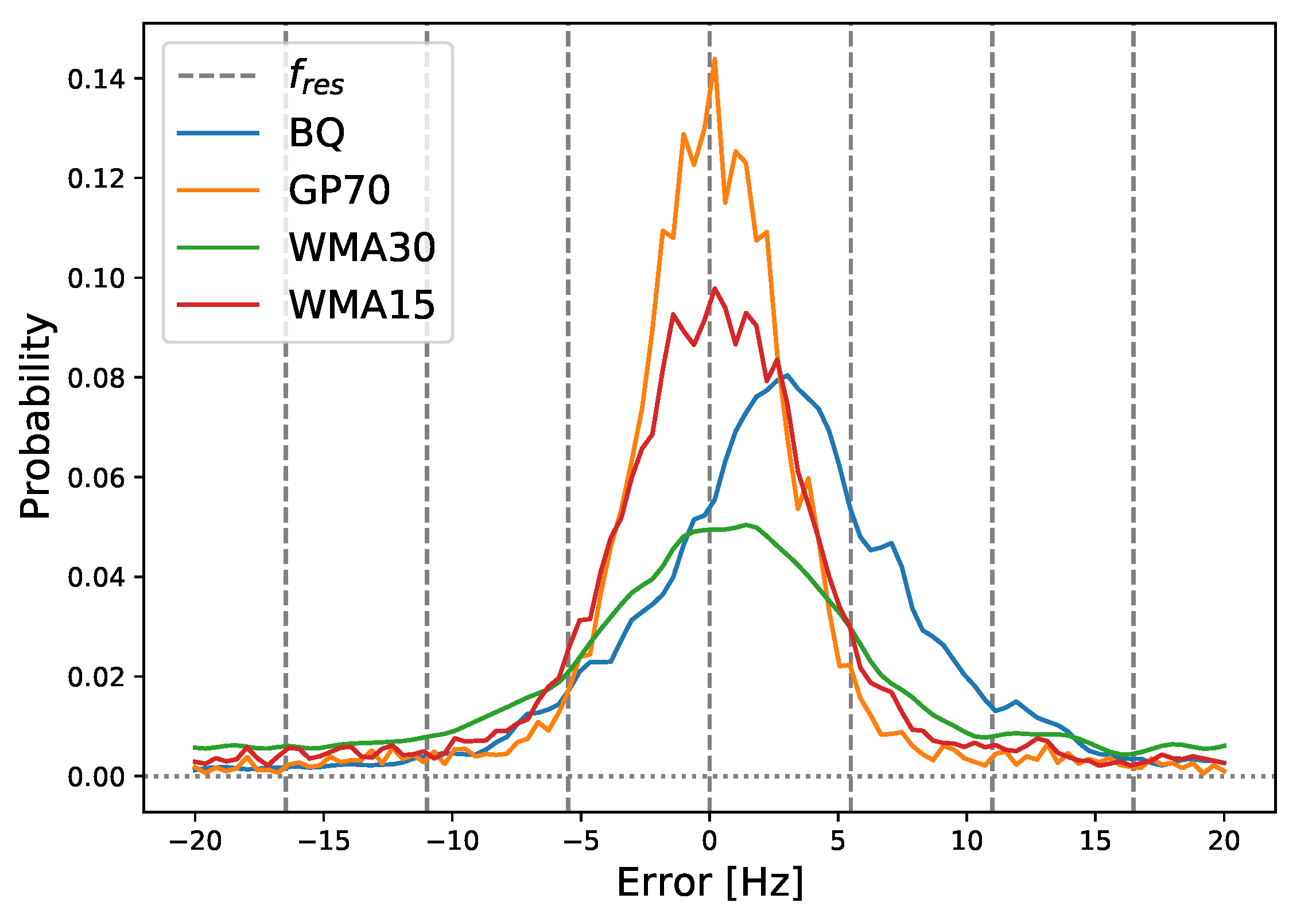

Figure 1. Example of 50 Hz harmonics present in the BBQ spectrum, obtained from LHC Fill 6890, Beam 2, horizontal plane in the FLATTOP beam mode.

Figure 2. Present BQ tune estimation algorithm [7].

Figure 3. Probability density plot of the errors obtained by non-ML algorithms. The errors are the differences between the respective tune estimates and the ground-truth resonance of the simulated data. BQ is the algorithm used until the LHC Run 2; GP70 uses Gaussian Processes with a Radial Basis Function kernel having a length scale of 70; WMA30 and WMA15 use a weighted moving average with a half-window length of 30 and 15, respectively. GP and WMA were introduced in [7].

Figure 4. A comparison of the operational frequency windowing used by the BQ algorithm during the LHC Run 2 with the new window length used in this work. The tunes were obtained from LHC Fill 6890, horizontal plane of Beam 1 in the FLATTOP beam mode. During FLATTOP the optics are changed and thus the tune shifts to a new frequency.

Figure 5. Second order simulation of a real spectrum obtained from the BBQ system.

Figure 6. Probability density of the errors obtained by fully-connected networks. The errors are the differences between the predicted tunes and the resonances used to simulate the spectra.

Figure 7. Network using convolutional layers.

Figure 8. Probability density of the errors obtained by convolutional networks. The errors are the differences between the predicted tunes and the resonances used to simulate the spectra.

Figure 9. Error distribution of the tune estimates of trained ML models and algorithmic approaches with respect to the ground-truth resonance. ML#1 and ML#5 are the best DNN and CNN, respectively; BQ is the algorithm used in the LHC until Run 2; GP70 uses Gaussian Processes with an RBF kernel having a length scale of 70; WMA15 and WMA30 use a Weighted Moving Average with half-window lengths of 15 and 30, respectively.

Figure 10. Comparison of validation losses when using simulated and real spectra, respectively. Note that the dataset containing the real spectra was used to obtain tune estimates from BQ, GP70 and WMA30, thus creating 3 separate validation datasets.

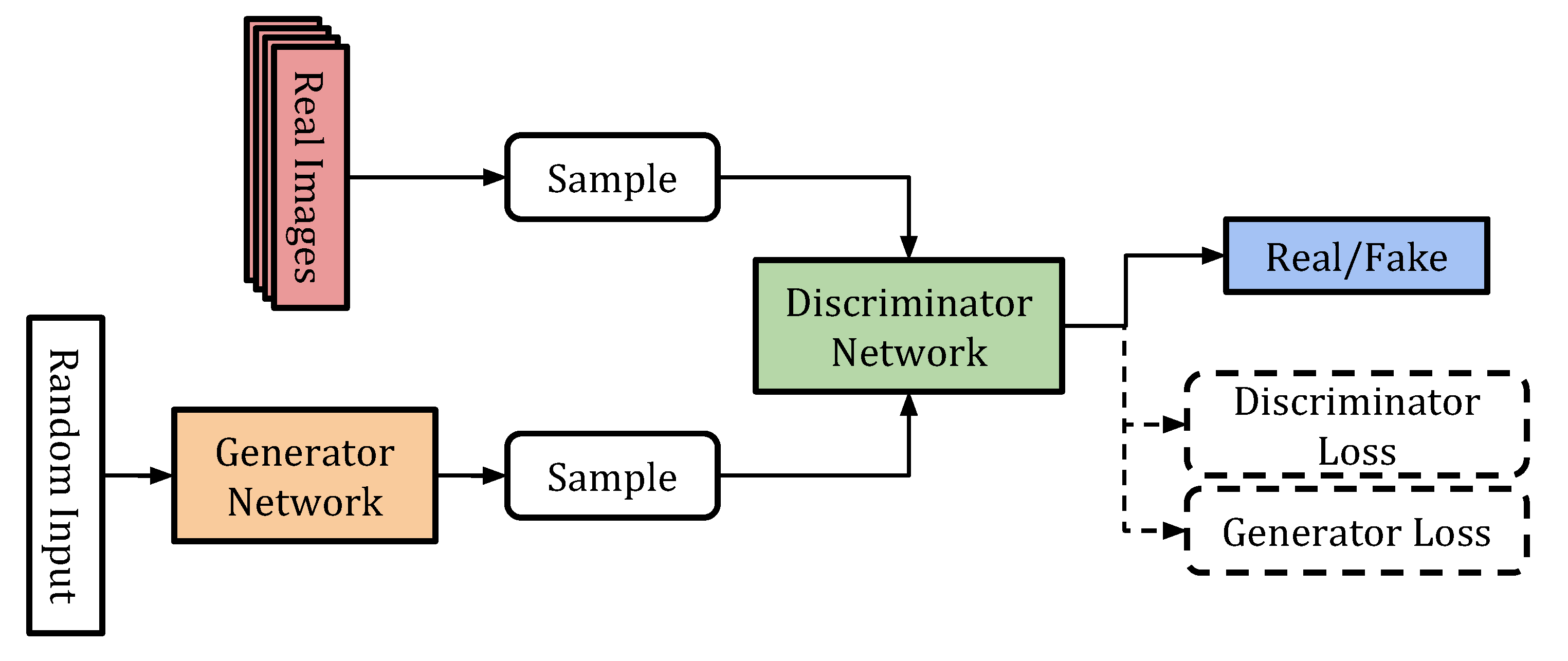

Figure 11. Overview of the Generative Adversarial Network architecture.

Figure 12. Overview of the SimGAN architecture.

Figure 13. Results from a trained SimGAN. The green line represents the tune estimate from the BQ algorithm on the refined spectrum.

Figure 14. (Background) Heat map obtained by post-processing BBQ spectra from LHC Fill 6768, Beam 1, horizontal plane, in the transition from PRERAMP to RAMP. (Foreground) Superimposed are the mean and the scaled standard deviation of the tune evolution as estimated by different tune estimation algorithms and ML models. (a) The original algorithm used in the LHC until Run 2 (BQ); (b) tune estimation using Gaussian Processes with an RBF kernel having a length scale of 70 (GP70) [7]; (c) tune estimation using a Weighted Moving Average with a window half length of 15 (WMA15) [7]; (d) the best DNN model trained using simulated spectra (ML#1); (e) the best 1D CNN model trained using simulated spectra (ML#5); (f) DNN model with the same architecture as ML#1 and trained using spectra refined by SimGAN.

Figure 15. (Background) Heat map obtained by post-processing BBQ spectra from LHC Fill 6768, Beam 1, horizontal plane, in the transition from RAMP to FLATTOP. (Foreground) Superimposed are the mean and the scaled standard deviation of the tune evolution as estimated by different tune estimation algorithms and ML models. Three regions of interest are also marked (dashed white circles). (a) The original algorithm used in the LHC until Run 2 (BQ); (b) tune estimation using Gaussian Processes with an RBF kernel having a length scale of 70 (GP70) [7]; (c) tune estimation using a Weighted Moving Average with a window half length of 15 (WMA15) [7]; (d) the best DNN model trained using simulated spectra (ML#1); (e) the best 1D CNN model trained using simulated spectra (ML#5); (f) DNN model with the same architecture as ML#1 and trained using spectra refined by SimGAN.

Figure 16. Probability distribution of the tune estimation stability, obtained from Fill 6768, Beam 1, horizontal plane using spectra from PRERAMP to FLATTOP. Slow and Fast correspond to stability measures having time constants of 10 s and 2 s, respectively (Equation (7)). The threshold was chosen in the early design of the beam-based feedback systems and used in operation during the LHC Run 2. BQ was the original tune estimation algorithm used until the LHC Run 2; GP70 uses Gaussian Processes with an RBF kernel of length scale 70; WMA15 uses a Weighted Moving Average with a half-window length of 15; ML#1 and ML#5 were the best performing DNN and CNN networks, respectively, trained with simulated spectra; ML-Refined had the same architecture as ML#1 and was trained with refined spectra.

Table 1. Model architectures presented for fully-connected networks.

| Model | Layer 1 | Layer 2 | Layer 3 | # parameters |
|---|---|---|---|---|
| ML#0 | 150 | 50 | 10 | 23,221 |
| ML#1 | 300 | 100 | 20 | 62,441 |
| ML#2 | 500 | 250 | 50 | 188,351 |
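The last column of Table 1 can be checked with a short calculation. The sketch below assumes a 100-bin input, one bias per unit and a single output neuron; these assumptions are ours, and the function name is hypothetical, but together they reproduce the tabulated counts.

```python
def dense_parameter_count(n_inputs, hidden_units, n_outputs=1):
    """Weights plus biases of a fully-connected network."""
    sizes = [n_inputs, *hidden_units, n_outputs]
    return sum(
        fan_in * fan_out + fan_out  # weight matrix + bias vector
        for fan_in, fan_out in zip(sizes, sizes[1:])
    )


# Hidden layer widths taken from Table 1.
ARCHITECTURES = {
    "ML#0": (150, 50, 10),
    "ML#1": (300, 100, 20),
    "ML#2": (500, 250, 50),
}

dense_counts = {
    name: dense_parameter_count(100, units)
    for name, units in ARCHITECTURES.items()
}
# dense_counts == {"ML#0": 23221, "ML#1": 62441, "ML#2": 188351}
```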

Table 2. Model architectures presented for CNNs. For each convolutional layer, f is the number of filters, k the kernel size and s the stride.

| Model | Layer 1 f | Layer 1 k | Layer 1 s | Layer 2 f | Layer 2 k | Layer 2 s | Layer 3 f | Layer 3 k | Layer 3 s | Dense | # parameters |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ML#3 | 32 | 3 | 3 | 16 | 3 | 3 | 8 | 3 | 3 | 20 | 2,753 |
| ML#4 | 32 | 3 | 1 | 16 | 3 | 1 | 8 | 3 | 1 | 20 | 18,113 |
| ML#5 | 64 | 3 | 3 | 32 | 3 | 1 | 16 | 3 | 1 | 20 | 18,905 |
| ML#6 | 128 | 3 | 3 | 64 | 3 | 3 | 16 | 3 | 3 | 20 | 29,561 |
| ML#7 | 64 | 3 | 1 | 32 | 3 | 1 | 16 | 3 | 1 | 20 | 40,025 |
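The parameter counts in Table 2 can be reproduced in the same way. The sketch below reads the f, k and s columns as the number of filters, kernel size and stride, and assumes a single-channel 100-bin input, "same" padding (so each layer outputs ceil(length / stride) samples), a flatten step, a dense layer and a single output neuron. These assumptions are ours and the function name is hypothetical; they are consistent with every row of the table.

```python
import math


def cnn_parameter_count(conv_layers, dense_units, n_inputs=100, n_outputs=1):
    """Parameter count of a 1D CNN followed by one dense layer and an output."""
    length, channels, total = n_inputs, 1, 0
    for filters, kernel, stride in conv_layers:
        total += filters * (channels * kernel + 1)  # kernels + biases
        length = math.ceil(length / stride)         # "same" padding assumed
        channels = filters
    flat = length * channels                        # flattened feature vector
    total += flat * dense_units + dense_units       # dense layer
    total += dense_units * n_outputs + n_outputs    # output layer
    return total


# (filters, kernel size, stride) per layer, taken from Table 2.
CNN_ARCHITECTURES = {
    "ML#3": [(32, 3, 3), (16, 3, 3), (8, 3, 3)],
    "ML#4": [(32, 3, 1), (16, 3, 1), (8, 3, 1)],
    "ML#5": [(64, 3, 3), (32, 3, 1), (16, 3, 1)],
    "ML#6": [(128, 3, 3), (64, 3, 3), (16, 3, 3)],
    "ML#7": [(64, 3, 1), (32, 3, 1), (16, 3, 1)],
}

cnn_counts = {
    name: cnn_parameter_count(layers, dense_units=20)
    for name, layers in CNN_ARCHITECTURES.items()
}
```

The calculation makes the effect of the stride visible: ML#3 and ML#4 share the same filters, but the stride of 3 shrinks the flattened vector from 800 to 32 values, which accounts for most of the difference in size.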

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).