TextQ—A User Friendly Tool for Exploratory Text Analysis

Abstract

:1. Introduction

1.1. Background and Motivation

1.2. Related Work

2. Materials and Methods

2.1. Software and Hardware

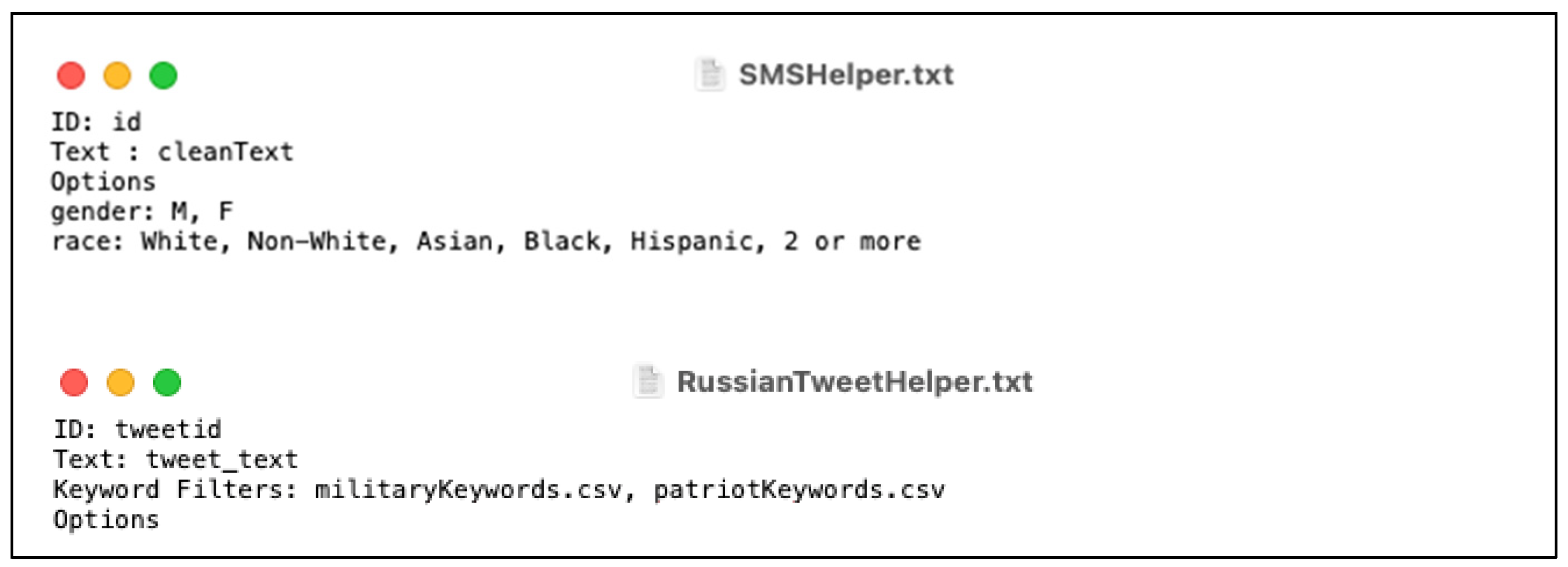

2.2. Data Preparation

2.3. TextQ Interface

3. Results

3.1. Run Time

3.2. Case Study 1—Cyberbullying Collection

3.3. Case Study 2—Tweets from Russian Influence Operations

#StopImportingIslam

#StopImportingIslam  #JihadistNOTWelcome

#JihadistNOTWelcome  #SayNoToIslam

#SayNoToIslam  #DeathCult

#DeathCult4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Marr, B. How much data do we create every day? The mind-blowing stats everyone should read. Forbes, 21 May 2018; 1–5. [Google Scholar]

- Statista. Available online: https://www.statista.com/statistics/195140/new-user-generated-content-uploaded-by-users-per-minute/ (accessed on 5 November 2021).

- quanthub. Available online: https://quanthub.com/data-scientist-shortage-2020/ (accessed on 5 November 2021).

- Alexa, M.; Zuell, C. Text Analysis Software: Commonalities, Differences and Limitations: The Results of a Review. Qual. Quant. 2000, 34, 299–321. [Google Scholar] [CrossRef]

- Wiechmann, D.; Fuhs, S. Concordancing software. Corpus Linguist. Linguist. Theory 2006, 2, 107–127. [Google Scholar] [CrossRef]

- Diesner, J. ConText: Software for the Integrated Analysis of Text Data and Network Data. 2014. Available online: http://jdiesnerlab.ischool.illinois.edu/calls/ICA2014/Diesner_ICA_2014.pdf (accessed on 5 November 2021).

- LIWC. Available online: http://liwc.wpengine.com/ (accessed on 5 November 2021).

- Welbers, K.; Van Atteveldt, W.; Benoit, K. Text analysis in R. Commun. Methods Meas. 2017, 11, 245–265. [Google Scholar] [CrossRef]

- Kim, S.W.; Gil, J.M. Research paper classification systems based on TF-IDF and LDA schemes. Hum. Cent. Comput. Inf. Sci. 2019, 9, 30. [Google Scholar] [CrossRef]

- Willard, N.E. Cyberbullying and Cyberthreats: Responding to the Challenge of Online Social Aggression, Threats, and Distress; Research Press: Champaign, IL, USA, 2007. [Google Scholar]

- Olweus, D. Bullying at School: What We Know and What We Can Do; Blackwell: Oxford, UK; Cambridge, MA, USA, 1993. [Google Scholar]

- Lenhart, A.; Smith, A.; Anderson, M.; Duggan, M.; Perrin, A. Teens, Technology, and Friendships. 2015. Available online: https://www.pewresearch.org/internet/2015/08/06/teens-technology-and-friendships/ (accessed on 5 November 2021).

- Edwards, L.; Kontostathis, A. Reclaiming Privacy: Reconnecting Victims of Cyberbullying and Cyberpredation. In Proceedings of the Reconciling Privacy with Social Media Workshop, Held in conjunction with the 2012 ACM Conference on Computer Supported Cooperative Work, Seattle, WA, USA, 11–15 February 2012. [Google Scholar]

- Edwards, A.; Demoll, D.; Edwards, L. Detecting Cyberbullying Activity Across Platforms. In Proceedings of the 17th International Conference on Information Technology–New Generations (ITNG 2020), Las Vegas, NV, USA, 5–8 April 2020; Springer: Cham, Switzerland; pp. 45–50. [Google Scholar]

- Twitter Transparency. Available online: https://transparency.twitter.com/en/reports/information-operations.html (accessed on 5 November 2021).

- Weinberg, D.; Dawson, J. Military Narratives and Profiles in Russian Influence Operations on Twitter. 2021. Available online: https://osf.io/preprints/socarxiv/b9a2m/ (accessed on 5 November 2021).

| Test Description | Number Selected | Time to Load | Time to Parse | Term Analysis | Bigram Analysis |

|---|---|---|---|---|---|

| 10,000 Tweets * | 575 | 1.896 | 0.002 | 0.091 | 0.092 |

| 10,000 Tweets *—Inverted | 9424 | 1.898 | 0.026 | 1.227 | 1.352 |

| Full Twitter Dataset * (~1.5 million tweets) | 111,584 | 351.784 | 0.427 | 15.746 | 16.553 |

| Full Twitter Dataset * Inverted | 1,274,528 | 359.538 | 4.776 | 167.225 | 178.918 |

| Cyberbully SMS Data (Males only) | 88,597 | 0.460 | 0.143 | 11.927 | 12.152 |

| Cyberbully SMS Data (Females only) | 122,004 | 0.475 | 0.211 | 16.358 | 16.467 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Edwards, A.; Sullivan, M.; Itkowsky, E.; Weinberg, D. TextQ—A User Friendly Tool for Exploratory Text Analysis. Information 2021, 12, 508. https://doi.org/10.3390/info12120508

Edwards A, Sullivan M, Itkowsky E, Weinberg D. TextQ—A User Friendly Tool for Exploratory Text Analysis. Information. 2021; 12(12):508. https://doi.org/10.3390/info12120508

Chicago/Turabian StyleEdwards, April, MaryLyn Sullivan, Ezrah Itkowsky, and Dana Weinberg. 2021. "TextQ—A User Friendly Tool for Exploratory Text Analysis" Information 12, no. 12: 508. https://doi.org/10.3390/info12120508

APA StyleEdwards, A., Sullivan, M., Itkowsky, E., & Weinberg, D. (2021). TextQ—A User Friendly Tool for Exploratory Text Analysis. Information, 12(12), 508. https://doi.org/10.3390/info12120508