A Domain-Adaptable Heterogeneous Information Integration Platform: Tourism and Biomedicine Domains

Abstract

1. Introduction

- Implementing a set of functionalities that deals with heterogeneous information by using NLP technologies and concept recognition.

- Meeting W3C Semantic Web criteria. Most mashup applications do not use W3C standards and cannot be accessed automatically, which reduces their functionality.

- Automatically incorporating higher-level machine learning functionalities by integrating information recommendation and enriching the recommendations via sentiment analysis.

2. Related Work

2.1. Information Integration

- Data exchange. This involves the transformation of information depending on how well the database schema from which the data are extracted is defined, and on how well the destination database is defined (how the data are to be arranged).

- Data integration. The data to extract may be in databases or other sources (with other schemas), but it must all end up in a single schema.

- Peer-to-peer integration. All of the peers are autonomous and independent; therefore, there is no single schema.

2.2. Mashups

- A provider of content (data layer). Sources usually provide their data via an application programming interface (API) or web protocols, such as really simple syndication (RSS), representational state transfer (REST), and web services. RDF modelling is performed in this layer, the data are filtered via a SPARQL query, and the output elements are grouped under a SPARQL design and then published.

- A mashup site (processing layer). This web application offers integrated information based on different data sources. It extracts the information from the data layer, manages the applications involved, and prepares the output data for visualization via languages such as Java or via web services.

- A browser client (presentation layer). This is the mashup interface. Browsers find content and display it via HTML, Ajax, JavaScript, or toolkits with similar functionality.

2.3. Recommendation Systems

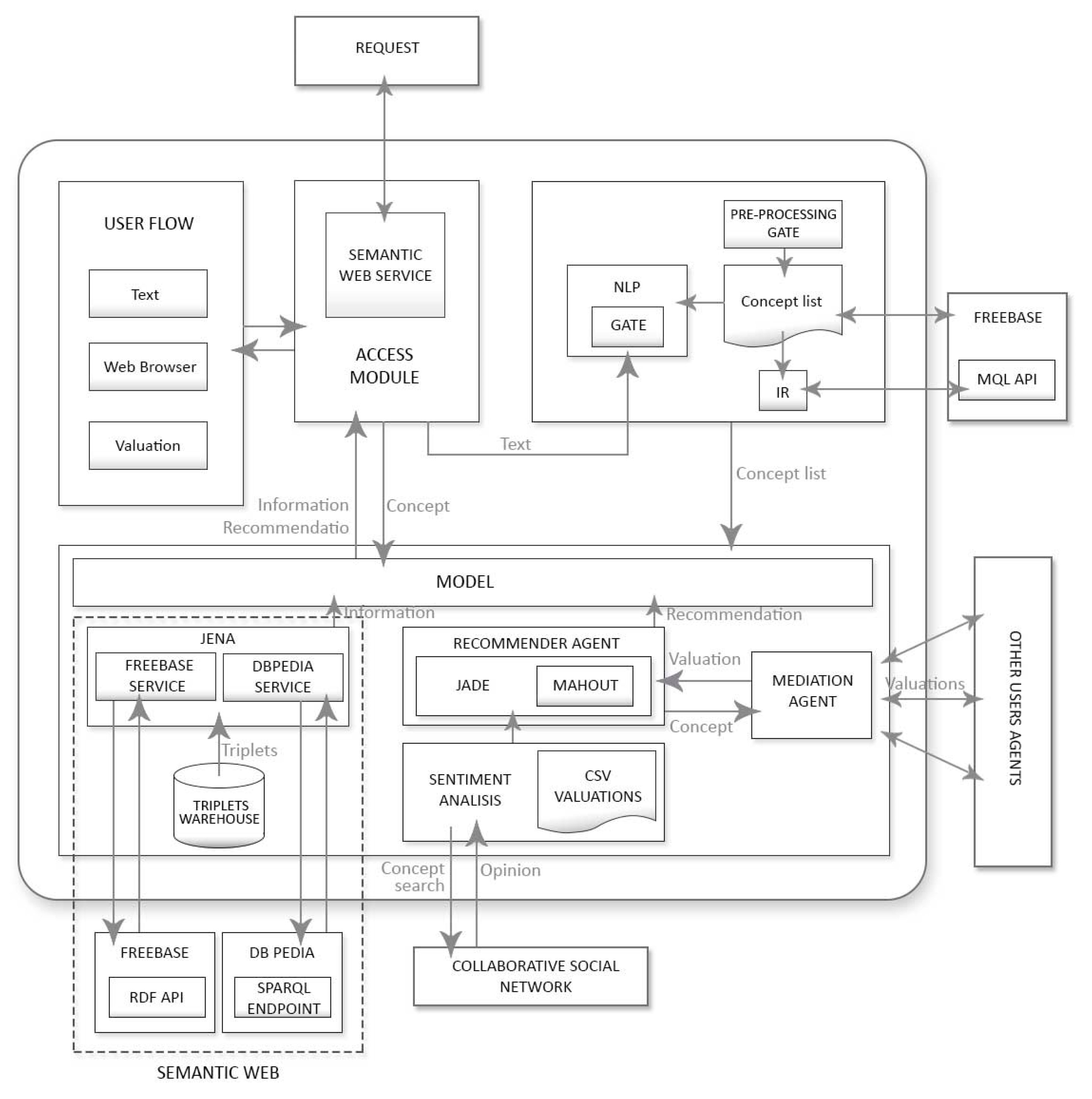

3. Intelligent Domain-Adaptable Platform (IDAP)

- End-user flow and access module

- Natural language processing and concept recognition module

- The Semantic Web module

- Recommendation intelligent agents module

- Semantic Web service

The following subsections describe these modules.

3.1. End-User Flow and Access Module

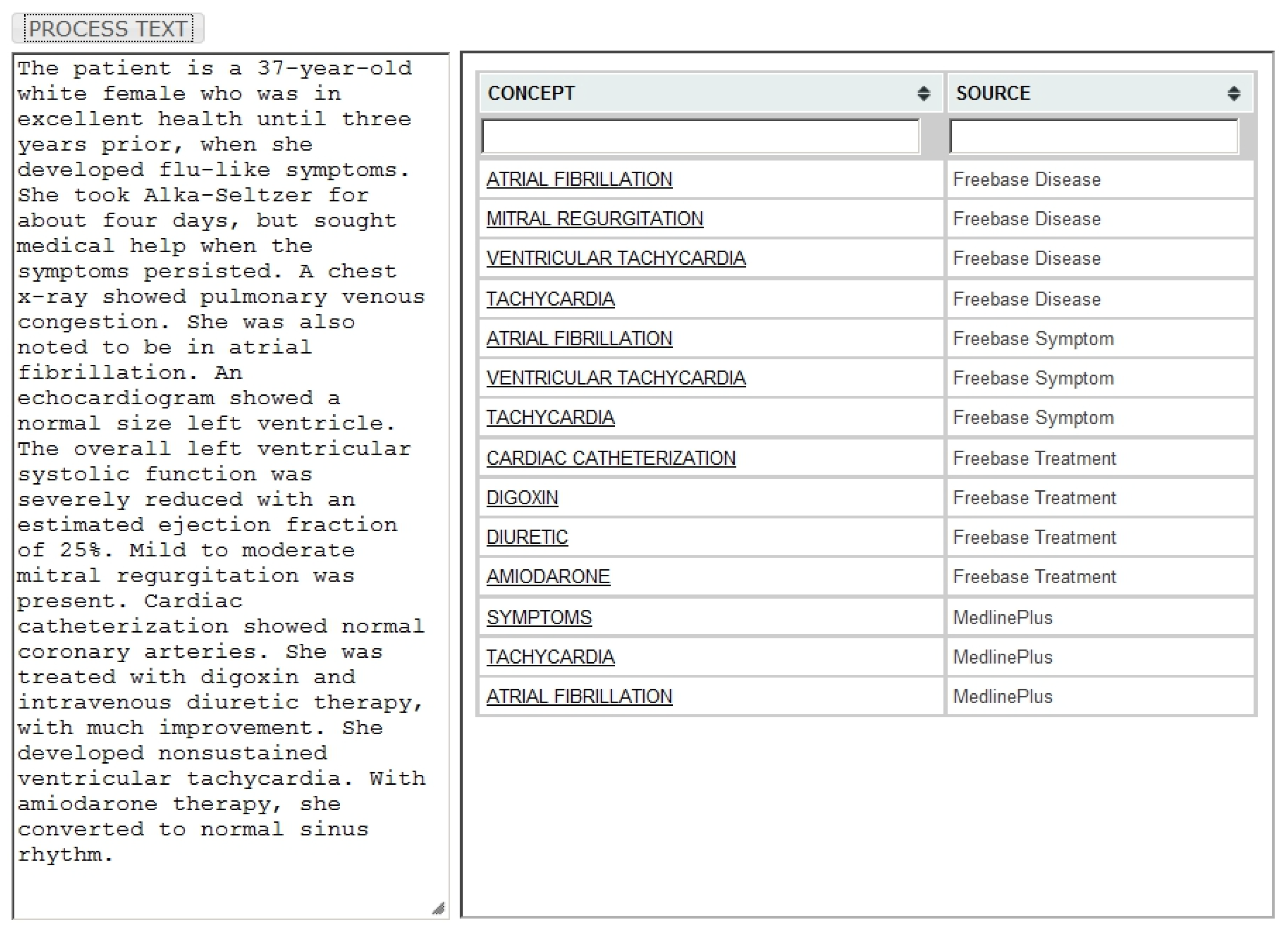

3.2. Natural Language Processing and Concept Recognition Module

- Language resources (LRs), which represent entities such as documents, corpora, or ontologies.

- Processing resources (PRs), which represent entities that are mainly algorithms, such as analyzers, generators, and so on.

- Visual resources (VRs), which represent the viewing and editing of graphic interface components.

GATE provides two operational mechanisms: a graphical one and a Java interface. The development environment can be used to display the data structures produced and consumed during processing, as well as to debug and obtain performance measures. Among the different programming options for integrating this software into the proposed platform, we chose to develop and test with the graphical user interface (GUI) ‘Developer’, which makes use of the logic for the NLP module. GATE is distributed along with an information extraction system, ANNIE (A Nearly-New Information Extraction system), which incorporates a wide range of resources that carry out language analysis tasks. The Gazetteer is one of its components. Based on predefined lists, the Gazetteer recognizes the previously mentioned concepts. These lists, in turn, allow features to be attached to each concept; in the present proposal, they are primarily used to store the Freebase identifier.
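The list-based recognition described above can be illustrated outside GATE. The following is a minimal sketch in plain Python, not GATE's or ANNIE's actual API: the `GAZETTEER` entries, the `annotate` helper, and the identifiers are hypothetical placeholders chosen only to show how a list entry can carry a Freebase identifier as a feature.

```python
import re

# Hypothetical gazetteer list: surface form -> feature dict.
# The identifiers are placeholders, not real Freebase IDs.
GAZETTEER = {
    "prado museum": {"type": "attraction", "freebase_id": "/m/placeholder_1"},
    "madrid": {"type": "city", "freebase_id": "/m/placeholder_2"},
}


def annotate(text, gazetteer=GAZETTEER):
    """Return (start, end, surface, features) for each list entry found in text."""
    annotations = []
    lowered = text.lower()
    # Try longer entries first so multi-word concepts win over their substrings.
    for entry in sorted(gazetteer, key=len, reverse=True):
        pattern = r"\b" + re.escape(entry) + r"\b"
        for match in re.finditer(pattern, lowered):
            start, end = match.span()
            # Skip matches that overlap an annotation already recorded.
            if any(start < a_end and a_start < end
                   for a_start, a_end, _, _ in annotations):
                continue
            annotations.append((start, end, text[start:end], gazetteer[entry]))
    return sorted(annotations, key=lambda a: a[0])


found = annotate("We visited the Prado Museum in Madrid.")
for start, end, surface, features in found:
    print(start, end, surface, features["freebase_id"])
```

Matching is case-insensitive and whole-word here; a production gazetteer would typically also handle tokenization and inflected forms, which this sketch ignores.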

3.3. Use of Freebase in Semantic Access

3.4. The Semantic Web Module

- controlling the storage and retrieval of the information (cities, attractions, means of transport, users, valuations) kept in the triple store.

- handling the information retrieved from Freebase to filter the data.

- converting the data retrieved from DBPedia into triples.
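The conversion step in the last item can be pictured as mapping each field of a retrieved record to one subject-predicate-object statement. A minimal sketch, assuming a flat dictionary as input and N-Triples as the output syntax; the `BASE` namespace and the `record_to_triples` helper are hypothetical and do not come from the platform itself.

```python
# Hypothetical base namespace for platform resources (an assumption).
BASE = "http://example.org/idap/"


def escape_literal(value):
    """Escape a string for use inside an N-Triples literal."""
    return (value.replace("\\", "\\\\").replace('"', '\\"')
                 .replace("\n", "\\n").replace("\r", "\\r"))


def record_to_triples(record_id, record):
    """Turn a flat dict into N-Triples lines, one triple per field."""
    subject = f"<{BASE}resource/{record_id}>"
    lines = []
    for field, value in sorted(record.items()):
        predicate = f"<{BASE}property/{field}>"
        if isinstance(value, str) and value.startswith(("http://", "https://")):
            obj = f"<{value}>"  # treat URLs as references to other resources
        else:
            obj = f'"{escape_literal(str(value))}"'  # everything else as a plain literal
        lines.append(f"{subject} {predicate} {obj} .")
    return lines


triples = record_to_triples("madrid", {
    "label": "Madrid",
    "sameAs": "http://dbpedia.org/resource/Madrid",
})
print("\n".join(triples))
```

The resulting lines could then be loaded into whichever triple store the platform uses; the sketch deliberately stops before that step.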

3.5. Recommendation Intelligent Agent’s Module

- A data model: defines the users’ input data; each line has the following format: userID, ItemID, Preference_value.

- A preference value: can be any real value; high values mean strong end-user preference. In the proposed model, a range between 1.0 and 10.0 was used; 1.0 indicates little interest and 10.0 indicates items stored as favorites.

- A similarity criterion: it measures the similarity between two different items and is defined by the Pearson correlation.

- A recommender: includes the collaborative filtering recommendation model that can be defined as item–item- or user–user-based.
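The four elements above can be combined in a few lines. The following is a minimal sketch of the item-item variant in plain Python, not the platform's implementation: the sample data, the helper names, and the choice to ignore non-positive similarities when predicting are all assumptions made for illustration.

```python
import csv
import io
from math import sqrt

# Hypothetical preference data in the userID,itemID,preference format (1.0 to 10.0).
RAW = """\
1,101,8.0
1,102,6.0
1,103,7.0
2,101,9.0
2,102,7.0
2,103,9.0
3,101,7.0
3,102,5.0
"""


def load_preferences(text):
    """Parse CSV lines into {item_id: {user_id: preference}}."""
    by_item = {}
    for user_id, item_id, value in csv.reader(io.StringIO(text)):
        by_item.setdefault(item_id, {})[user_id] = float(value)
    return by_item


def pearson(a, b):
    """Pearson correlation over users who rated both items; None if undefined."""
    common = sorted(set(a) & set(b))
    if len(common) < 2:
        return None
    xs = [a[u] for u in common]
    ys = [b[u] for u in common]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None
    return cov / sqrt(var_x * var_y)


def predict(by_item, user_id, target_item):
    """Similarity-weighted average of the user's ratings on positively correlated items."""
    num = den = 0.0
    for item_id, ratings in by_item.items():
        if item_id == target_item or user_id not in ratings:
            continue
        sim = pearson(by_item[target_item], ratings)
        if sim is None or sim <= 0:
            continue  # assumption: only positively correlated items contribute
        num += sim * ratings[user_id]
        den += sim
    return num / den if den else None


prefs = load_preferences(RAW)
estimate = predict(prefs, "3", "103")
print(estimate)
```

A user-user variant would follow the same shape with the roles of users and items swapped: similarities are computed between users, and the prediction averages what similar users gave the target item.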

3.6. Semantic Web Service

4. Tourism Domain Use Case

- Tourist attractions: monuments, parks, museums, events, etc. This type of result also includes locations and events that are defined as attractions with changing locations.

- Accommodation: hotels, bed and breakfasts, backpacker hostels, or any place to stay.

- Travel destination: a location where a person can go for a holiday.

5. Biomedicine Domain Use Case

6. Assessment of the Platform and Test of Adaptability

6.1. Assessment of the Platform in the Tourism Domain

6.1.1. Assessment of the Platform: Methodology

6.1.2. Task A Results: End-User Usability and Capabilities Experience

6.1.3. Task A Results: Platform Functionalities

- Natural language processing and concept recognition (Q10).

- Information integration sources (Q11). The system recovers information from different sources (Freebase, DBPedia, Expedia, and Trip Advisor).

- Depth of information (Q12). The system shows detailed information on different concepts.

- Basic recommendation (Q13). The system indicates whether a concept is recommendable or not.

- Advanced suggestions (Q14). Depending on user preferences, the system shows new recommendations.

- Concept evaluation (Q15). The end-user can provide feedback to the system and to his/her own profile through concept evaluation.

6.1.4. Task B Results: End-User Enrichment Functionalities Experience

6.2. Assessment of the Platform in the Biomedical Domain

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Performance Tasks

Appendix A.1. Task 1

Appendix A.2. Task 2

Appendix B. Questionnaire


| Recommendation | Score |
|---|---|
| Not recommended | From 0 to 2 |
| Little recommended | From 2 to 3 |
| Recommended | From 3 to 4 |
| Very recommended | From 4 to 4.5 |
| Impressive | From 4.5 to 5 |
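The score bands in this table amount to a threshold lookup. A minimal sketch, assuming that each band includes its upper bound (the table does not say which band a boundary score such as 2 or 3 belongs to) and that the "Very recommended" band runs from 4 to 4.5; `label_for` is a hypothetical helper name.

```python
# Upper bound of each band, in ascending order.
# Assumptions: bands include their upper bound; "Very recommended" spans 4 to 4.5.
BANDS = [
    (2.0, "Not recommended"),
    (3.0, "Little recommended"),
    (4.0, "Recommended"),
    (4.5, "Very recommended"),
    (5.0, "Impressive"),
]


def label_for(score):
    """Map a score in [0, 5] to its recommendation label."""
    if not 0.0 <= score <= 5.0:
        raise ValueError(f"score out of range: {score}")
    for upper, label in BANDS:
        if score <= upper:
            return label


for sample in (1.0, 3.5, 4.2, 4.8):
    print(sample, label_for(sample))
```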

| Functionality | The Functionality Is Easy to Use (a) | The Functionality Is Necessary (b) | The Functionality Is Agreeable (c) | I Was Informed about This Functionality (d) |
|---|---|---|---|---|
| Natural language processing and concept recognition (Q10) | 57% | 43% | 64% | 57% |
| Information integration; sources (Q11) | 79% | 64% | 50% | 50% |
| Depth of extra information (Q12) | 50% | 57% | 57% | 71% |
| Basic recommendation (Q13) | 79% | 57% | 71% | 50% |
| Advanced suggestions (Q14) | 79% | 64% | 43% | 64% |
| Concept evaluation (Q15) | 86% | 79% | 71% | 71% |

| System | Statement | 1 Strongly Disagree | 2 Disagree | 3 Neutral | 4 Agree | 5 Strongly Agree | Mode | Median |
|---|---|---|---|---|---|---|---|---|
| BioAnnote | Q1 | 1 | 0 | 1 | 5 | 4 | 4 | 4 |
| BioAnnote | Q2 | 0 | 0 | 1 | 2 | 8 | 5 | 5 |
| Cleim | Q1 | 0 | 0 | 1 | 5 | 5 | 4–5 | 4 |
| Cleim | Q2 | 0 | 0 | 0 | 3 | 8 | 5 | 5 |
| MedCMap | Q1 | 0 | 0 | 3 | 2 | 6 | 5 | 5 |
| MedCMap | Q2 | 0 | 0 | 2 | 2 | 7 | 5 | 5 |
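The mode and median columns can be recomputed from the response counts. A minimal sketch using Python's standard `statistics` module, with counts copied from three of the Q1 rows; the helper names are illustrative only.

```python
from statistics import median, multimode


def expand(counts):
    """Turn counts for scores 1..5 into a sorted list of individual responses."""
    return [score for score, n in enumerate(counts, start=1) for _ in range(n)]


def summarize(counts):
    """Return (modes, median) for one row of Likert counts."""
    responses = expand(counts)
    return multimode(responses), median(responses)


rows = {
    "BioAnnote Q1": [1, 0, 1, 5, 4],
    "Cleim Q1": [0, 0, 1, 5, 5],
    "MedCMap Q1": [0, 0, 3, 2, 6],
}
for name, counts in rows.items():
    print(name, *summarize(counts))
```

For Cleim Q1 the counts for 4 and 5 tie, which is why the table reports the mode as 4–5; with 11 responses the median is the sixth value in sorted order.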

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gil, R.M.; de Buenaga Rodríguez, M.; Galisteo, F.A.; Páez, D.G.; García-Cuesta, E. A Domain-Adaptable Heterogeneous Information Integration Platform: Tourism and Biomedicine Domains. Information 2021, 12, 435. https://doi.org/10.3390/info12110435
