Predicting Stock Movements: Using Multiresolution Wavelet Reconstruction and Deep Learning in Neural Networks

Abstract

1. Introduction

2. Related Work

2.1. Predictability of Stock Price Movement

2.2. Multiresolution Reconstruction Using Wavelets

2.3. Neural Networks

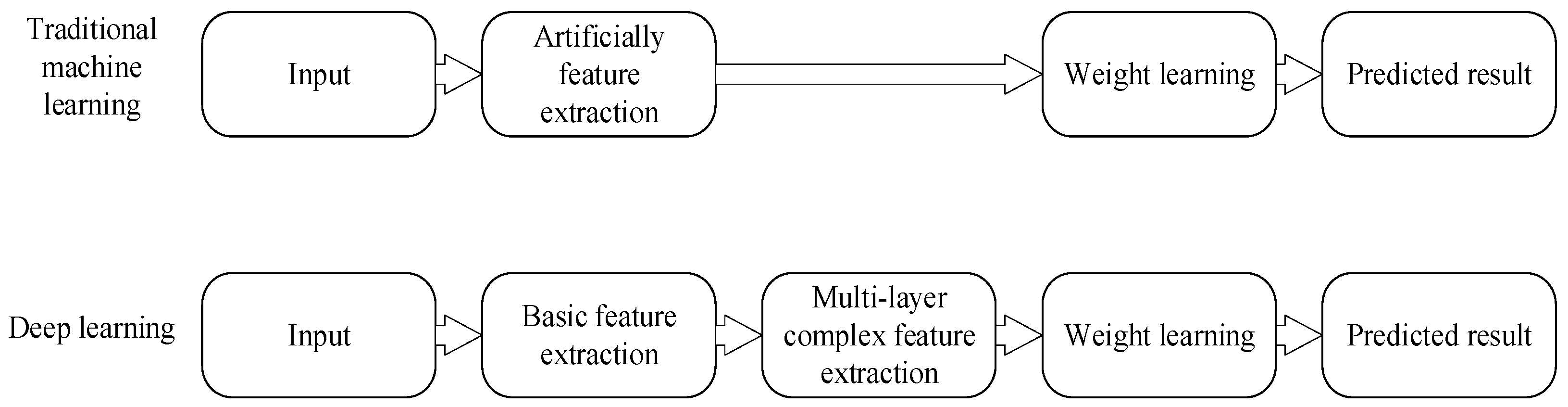

2.4. Deep Learning

2.5. Wavelet and Deep Neural Networks

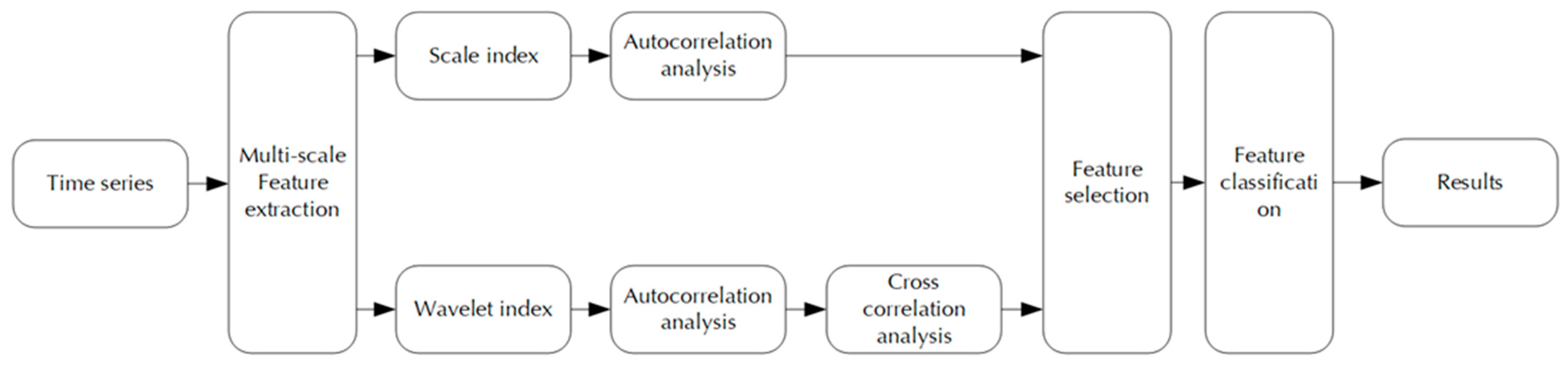

3. Multiresolution Wavelet Analysis and Correlation Analysis Model

3.1. Multi-Scale Analysis for Time Series

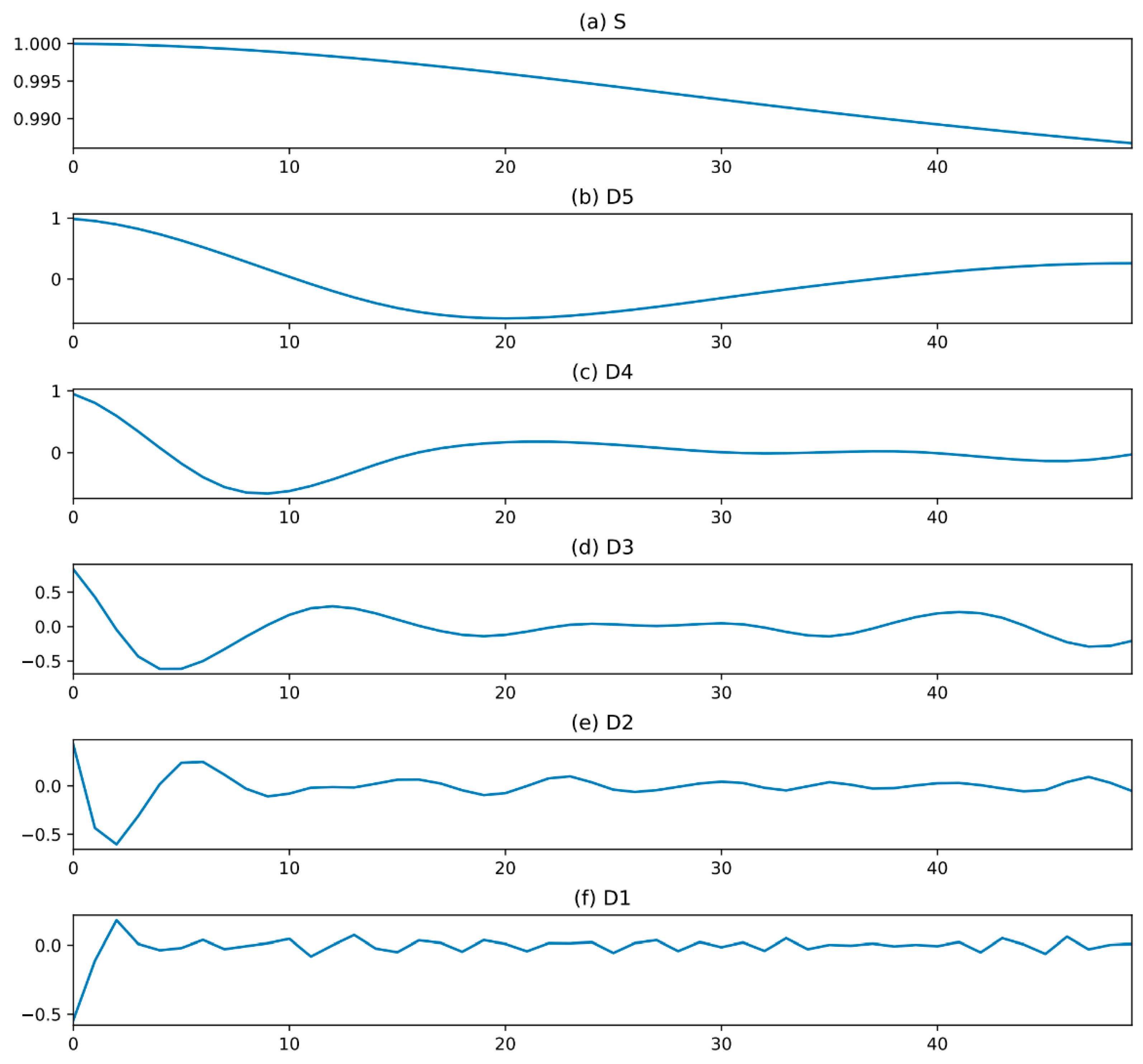

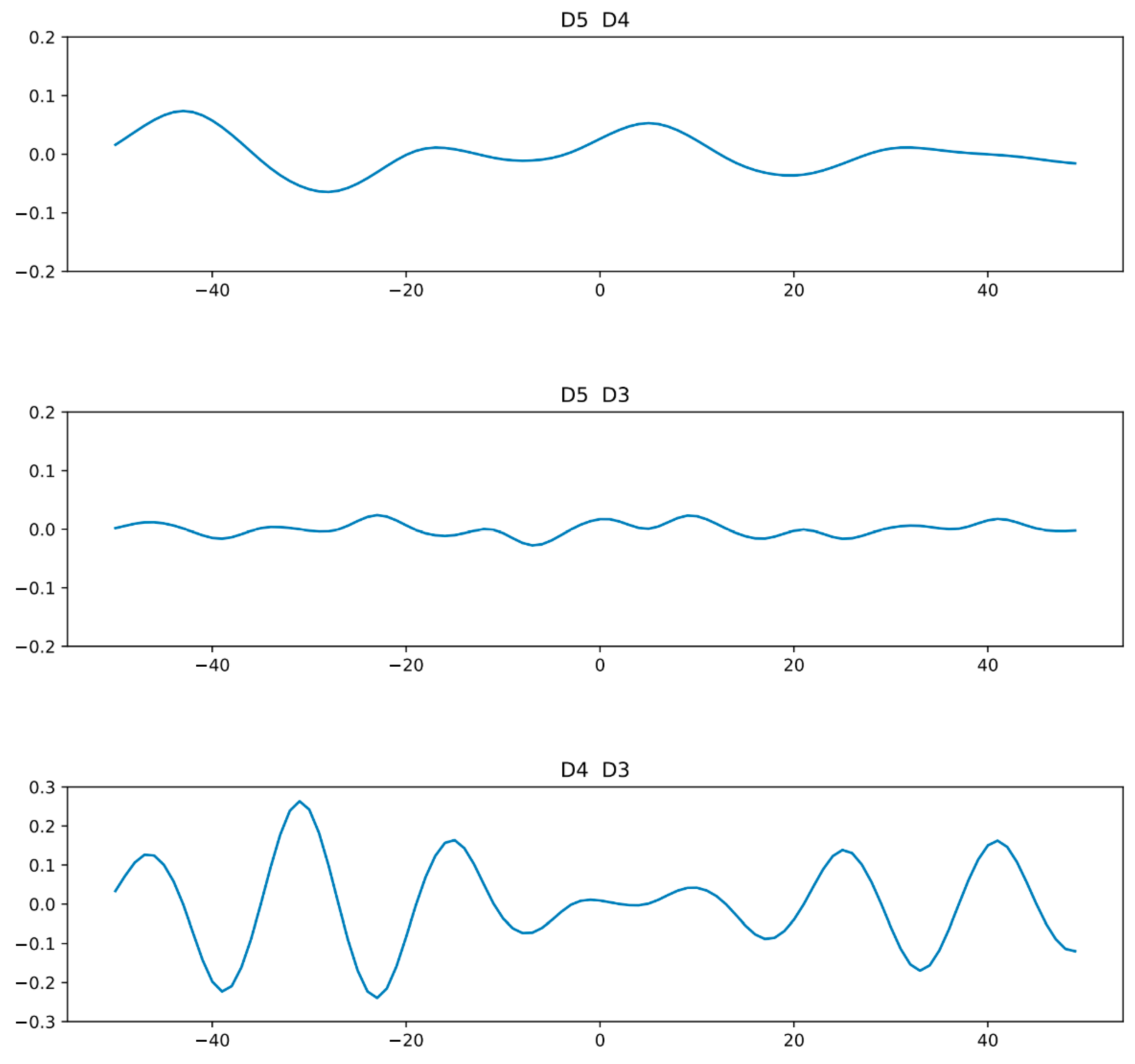

3.2. Correlation Analysis of Time Series

4. Empirical Study

4.1. Data Collection

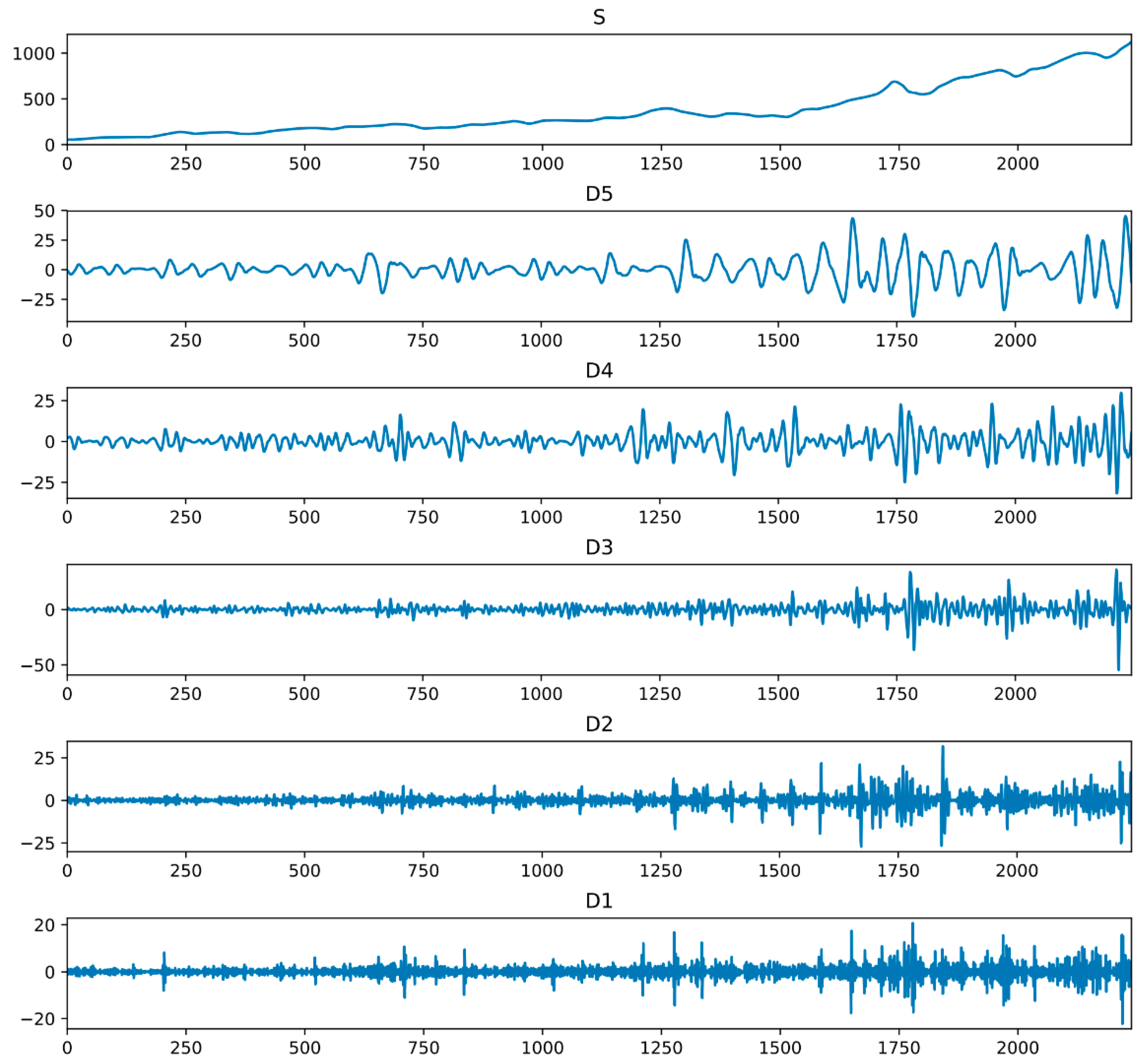

4.2. Multiresolution Reconstruction and Coefficient Selection

4.3. Results and Analysis

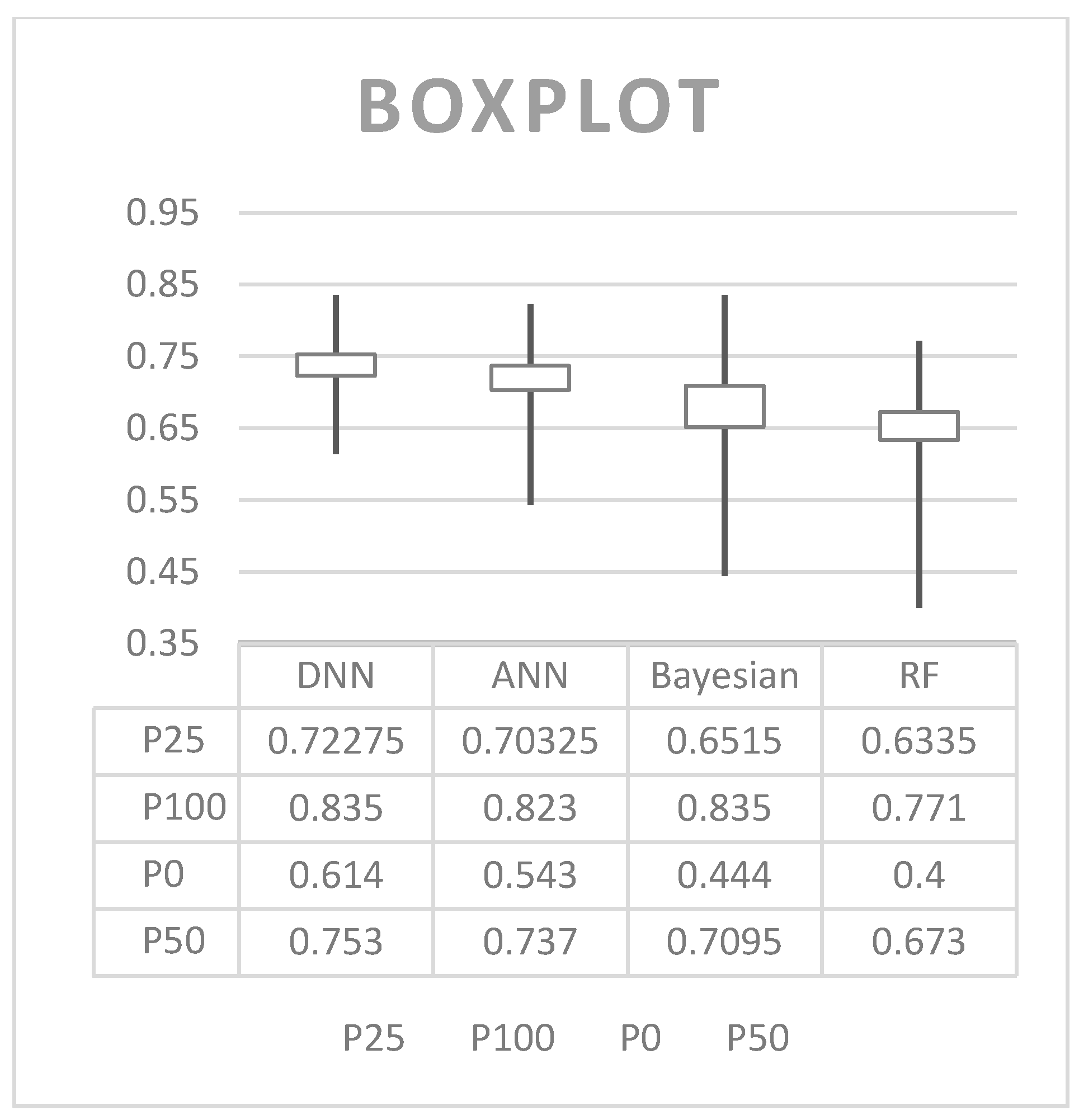

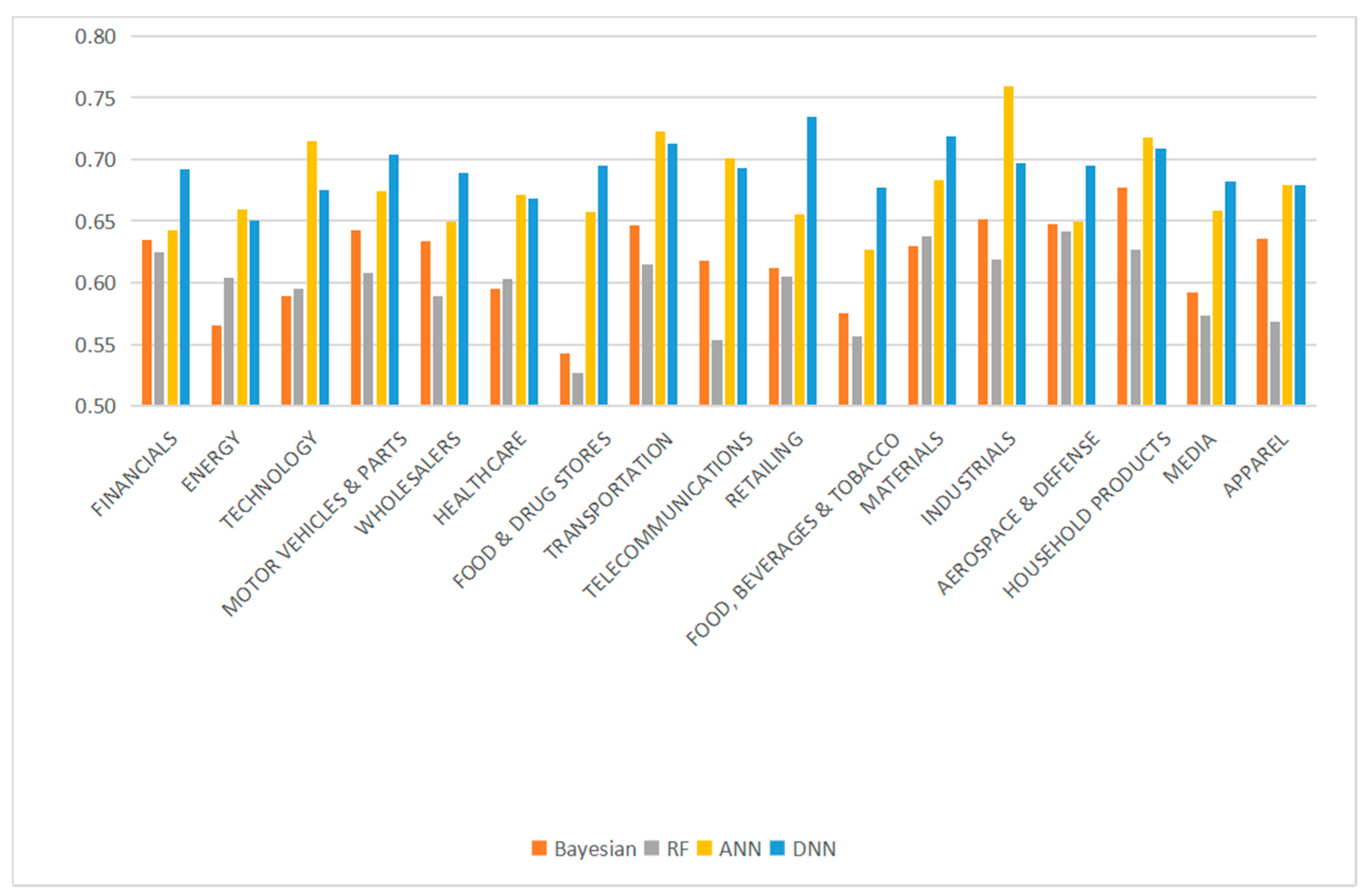

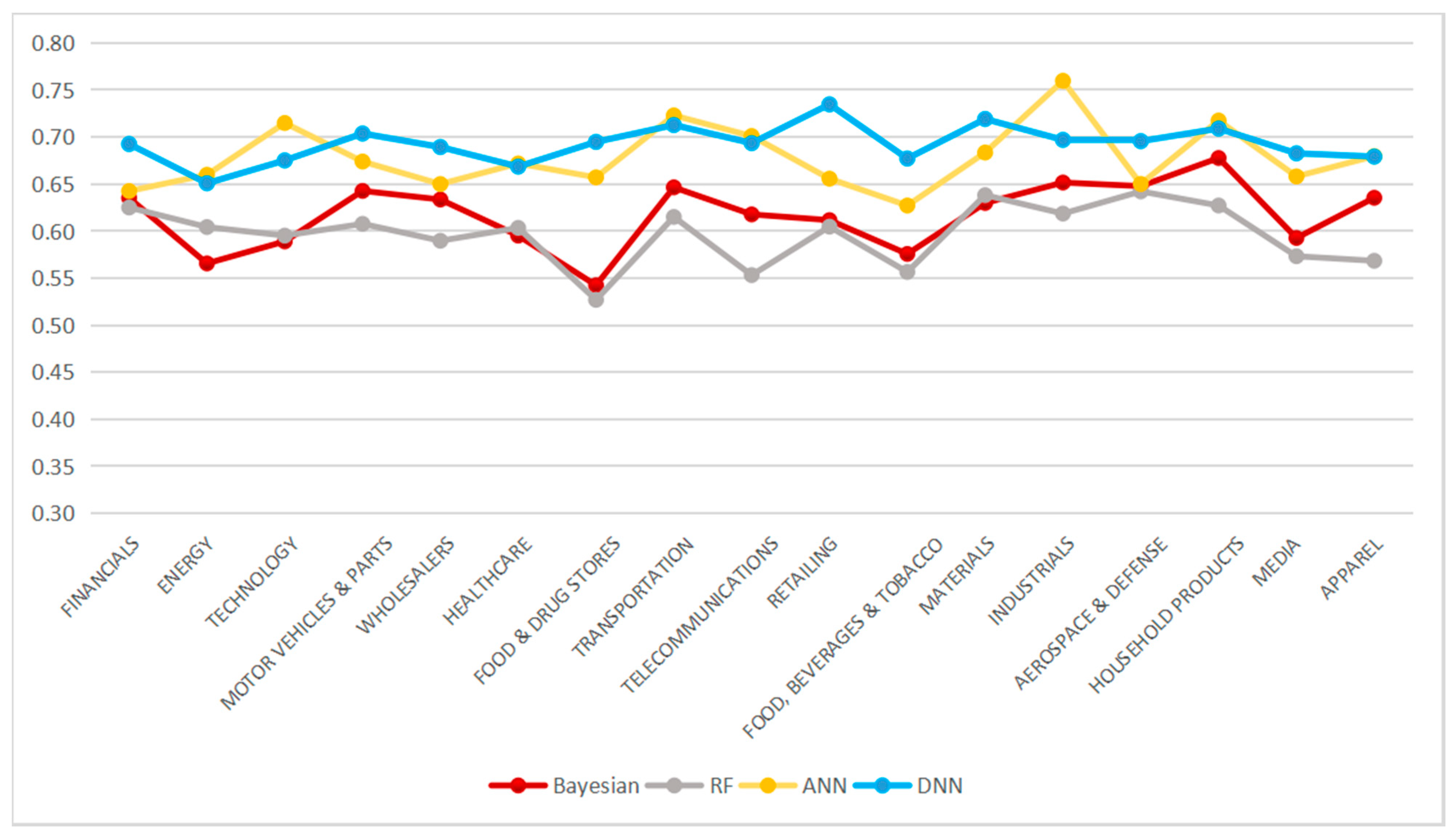

4.3.1. Comparison Results with Other Baseline Algorithms

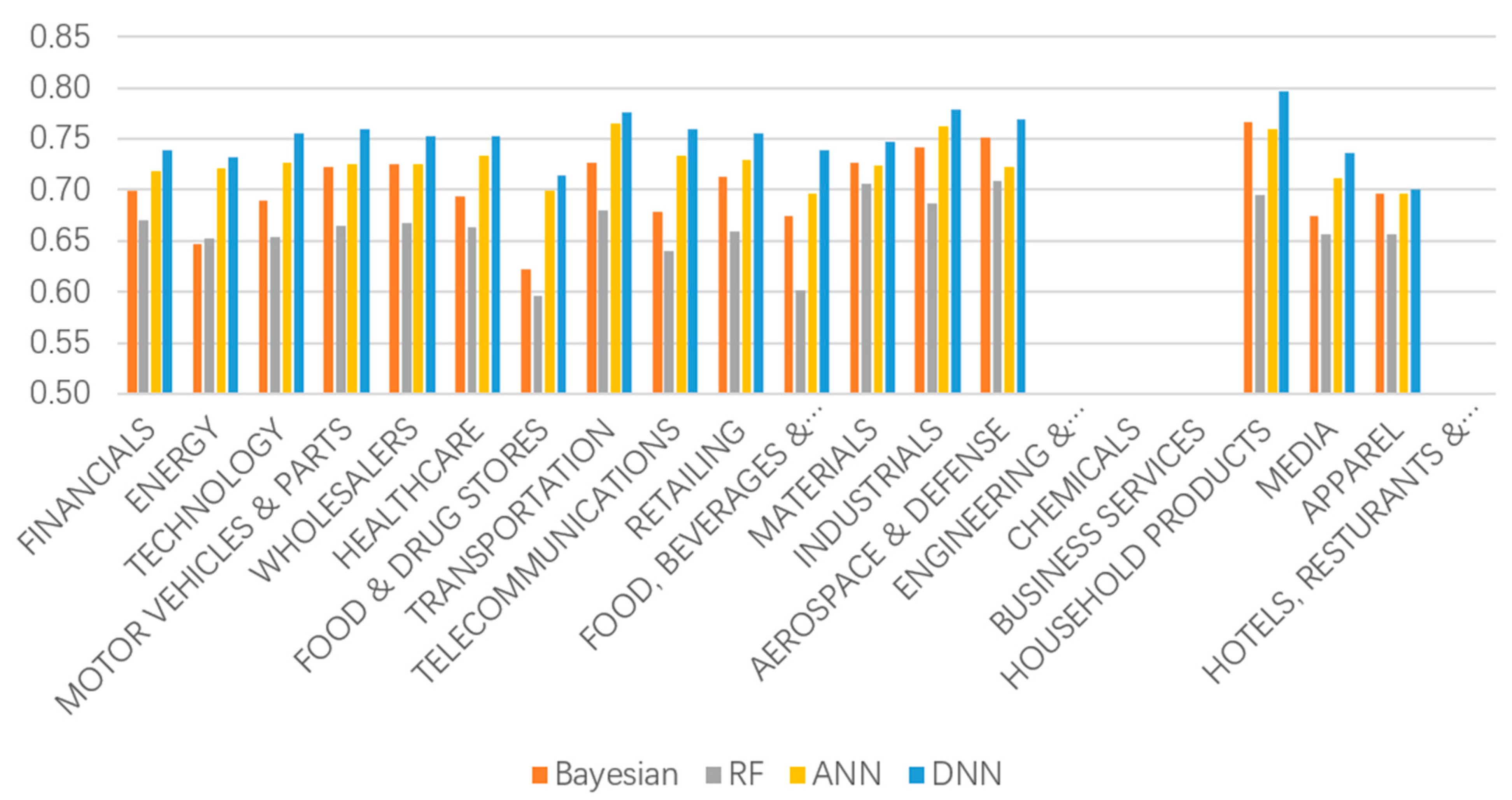

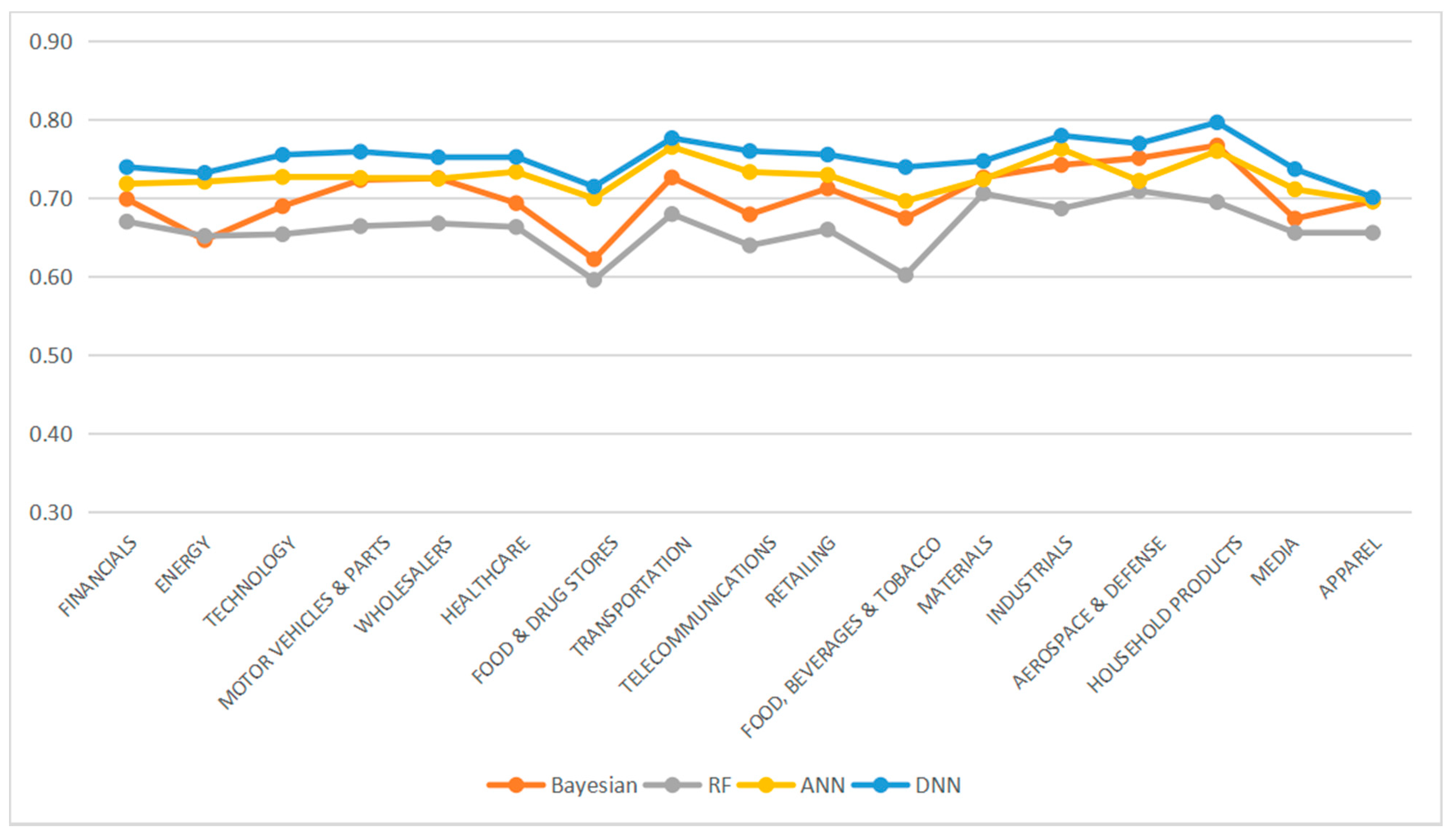

4.3.2. Results between Different Industries

5. Conclusions and Future Work

5.1. Conclusions

5.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ballings, M.; Poel, D.V.D.; Hespeels, N.; Gryp, R. Evaluating multiple classifiers for stock price direction prediction. Expert Syst. Appl. 2015, 42, 7046–7056.

- Chong, E.; Han, C.; Park, F.C. Deep learning networks for stock market analysis and prediction: Methodology, data representations, and case studies. Expert Syst. Appl. 2017, 83, 187–205.

- Guresen, E.; Kayakutlu, G.; Daim, T.U. Using artificial neural network models in stock market index prediction. Expert Syst. Appl. 2011, 38, 10389–10397.

- Hsu, C.-M. A hybrid procedure for stock price prediction by integrating self-organizing map and genetic programming. Expert Syst. Appl. 2011, 38, 14026–14036.

- Thanh, H.T.P.; Meesad, P. Stock Market Trend Prediction Based on Text Mining of Corporate Web and Time Series Data. J. Adv. Comput. Intell. Intell. Inform. 2014, 18, 22–31.

- Dan, J.; Guo, W.; Shi, W.; Fang, B.; Zhang, T. PSO Based Deterministic ESN Models for Stock Price Forecasting. J. Adv. Comput. Intell. Intell. Inform. 2015, 19, 312–318.

- Lei, L. Wavelet Neural Network Prediction Method of Stock Price Trend Based on Rough Set Attribute Reduction. Appl. Soft Comput. 2018, 62, 923–932.

- Chen, Y.; Hao, Y. A feature weighted support vector machine and K-nearest neighbor algorithm for stock market indices prediction. Expert Syst. Appl. 2017, 80, 340–355.

- Addison, P.S. The Illustrated Wavelet Transform Handbook: Introductory Theory and Applications in Science, Engineering, Medicine and Finance; CRC Press: Boca Raton, FL, USA, 2017.

- Bengio, Y. Learning Deep Architectures for AI. Found. Trends® Mach. Learn. 2009, 2, 1–127.

- Malkiel, B.G.; Fama, E.F. Efficient Capital Markets: A Review of Theory and Empirical Work. J. Financ. 1970, 25, 383–417.

- Singh, R.; Srivastava, S. Stock prediction using deep learning. Multimed. Tools Appl. 2017, 76, 18569–18584.

- Tsinaslanidis, P.; Kugiumtzis, D. A prediction scheme using perceptually important points and dynamic time warping. Expert Syst. Appl. 2014, 41, 6848–6860.

- Schumaker, R.P.; Chen, H. A quantitative stock prediction system based on financial news. Inf. Process. Manag. 2009, 45, 571–583.

- Tsibouris, G.; Zeidenberg, M. Testing the efficient markets hypothesis with gradient descent algorithms. In Neural Networks in the Capital Markets; Wiley: Chichester, UK, 1995; pp. 127–136.

- Thomsett, M.C. Mastering Fundamental Analysis; Dearborn Financial Publishing: Chicago, IL, USA, 1998.

- Thomsett, M.C. Mastering Technical Analysis; Dearborn Trade Publishing: Chicago, IL, USA, 1999.

- Hellström, T.; Holmström, K. Predictable Patterns in Stock Returns. Sweden. 1998. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.12.8541 (accessed on 7 September 2021).

- Teixeira, L.A.; de Oliveira, A.L.I. A method for automatic stock trading combining technical analysis and nearest neighbor classification. Expert Syst. Appl. 2010, 37, 6885–6890.

- Hagenau, M.; Hauser, M.; Liebmann, M.; Neumann, D. Reading All the News at the Same Time: Predicting Mid-term Stock Price Developments Based on News Momentum. In Proceedings of the 2013 46th Hawaii International Conference on System Sciences, Maui, HI, USA, 7–10 January 2013; pp. 1279–1288.

- Daubechies, I. Ten Lectures on Wavelets; SIAM: Philadelphia, PA, USA, 1992.

- Chen, A.-S.; Leung, M.T.; Daouk, H. Application of neural networks to an emerging financial market: Forecasting and trading the Taiwan Stock Index. Comput. Oper. Res. 2003, 30, 901–923.

- Hadavandi, E.; Shavandi, H.; Ghanbari, A. Integration of genetic fuzzy systems and artificial neural networks for stock price forecasting. Knowl. Based Syst. 2010, 23, 800–808.

- Kim, K.-J.; Han, I. Genetic algorithms approach to feature discretization in artificial neural networks for the prediction of stock price index. Expert Syst. Appl. 2000, 19, 125–132.

- Rather, A.M.; Agarwal, A.; Sastry, V. Recurrent neural network and a hybrid model for prediction of stock returns. Expert Syst. Appl. 2015, 42, 3234–3241.

- Saad, E.; Prokhorov, D.; Wunsch, D. Comparative study of stock trend prediction using time delay, recurrent and probabilistic neural networks. IEEE Trans. Neural Netw. 1998, 9, 1456–1470.

- Ticknor, J.L. A Bayesian regularized artificial neural network for stock market forecasting. Expert Syst. Appl. 2013, 40, 5501–5506.

- Nagaya, S.; Chenli, Z.; Hasegawa, O. A Proposal of Stock Price Predictor Using Associated Memory. J. Adv. Comput. Intell. Intell. Inform. 2011, 15, 145–155.

- White, H. Economic prediction using neural networks: The case of IBM daily stock returns. In Proceedings of the IEEE International Conference on Neural Networks, San Diego, CA, USA, 24–27 July 1988.

- Wuthrich, B.; Cho, V.; Leung, S.; Permunetilleke, D.; Sankaran, K.; Zhang, J. Daily stock market forecast from textual web data. In Proceedings of the SMC’98 Conference Proceedings. 1998 IEEE International Conference on Systems, Man, and Cybernetics (Cat. No.98CH36218), San Diego, CA, USA, 14 October 1998; Volume 1–5, pp. 2720–2725.

- Groth, S.S.; Muntermann, J. An intraday market risk management approach based on textual analysis. Decis. Support Syst. 2011, 50, 680–691.

- Enke, D.; Mehdiyev, N. Stock Market Prediction Using a Combination of Stepwise Regression Analysis, Differential Evolution-based Fuzzy Clustering, and a Fuzzy Inference Neural Network. Intell. Autom. Soft Comput. 2013, 19, 636–648.

- Chiang, W.-C.; Enke, D.; Wu, T.; Wang, R. An adaptive stock index trading decision support system. Expert Syst. Appl. 2016, 59, 195–207.

- Arévalo, A.; Niño, J.; Hernández, G.; Sandoval, J. High-Frequency Trading Strategy Based on Deep Neural Networks. In Intelligent Computing Methodologies; Huang, D.S., Han, K., Hussain, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9773.

- Zhong, X.; Enke, D. Forecasting daily stock market return using dimensionality reduction. Expert Syst. Appl. 2017, 67, 126–139.

- Ejbali, R.; Zaied, M. A dyadic multi-resolution deep convolutional neural wavelet network for image classification. Multimed. Tools Appl. 2018, 77, 6149–6163.

- Siegelmann, H.T.; Sontag, E. Turing computability with neural nets. Appl. Math. Lett. 1991, 4, 77–80.

- Zhang, Q.; Benveniste, A. Wavelet networks. IEEE Trans. Neural Netw. 1992, 3, 889–898.

- Zhang, J.; Walter, G.; Miao, Y.; Lee, W.N.W. Wavelet neural networks for function learning. IEEE Trans. Signal Process. 1995, 43, 1485–1497.

- Bakshi, B.; Stephanopoulos, G. Wave-net: A multiresolution, hierarchical neural network with localized learning. AIChE J. 1993, 39, 57–81.

- Yamanaka, S.; Morikawa, K.; Yamamura, O.; Morita, H.; Huh, J.Y. The Wavelet Transform of Pulse Wave and Electrocardiogram Improves Accuracy of Blood Pressure Estimation in Cuffless Blood Pressure Measurement. Circulation 2016, 134 (Suppl. 1), A14155.

- Xu, M. Study on the Wavelet and Frequency Domain Methods of Financial Volatility Analysis. Ph.D. Thesis, Tianjin University, Tianjin, China, 2004.

- Al Shalabi, L.; Shaaban, Z.; Kasasbeh, B. Data Mining: A Preprocessing Engine. J. Comput. Sci. 2006, 2, 735–739.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Deng, J.; Sun, J.; Peng, W.; Hu, Y.; Zhang, D. Application of neural networks for predicting hot-rolled strip crown. Appl. Soft Comput. 2019, 78, 119–131.

| Authors (Year) | Method | Sample Period | Forecast Type | Accuracy |
|---|---|---|---|---|
| Wuthrich et al. (1998) [30] | ANNs, rule-based | 6-Dec-1997 to 6-Mar-1998 (daily) | Market direction (up, steady or down) | 43.6% |
| Groth and Muntermann (2011) [31] | ANNs | 1-Aug-2003 to 31-Jul-2005 (daily) | Trading signal (stock price) | - |
| Enke and Mehdiyev (2013) [32] | Fuzzy NNs, fuzzy clustering | Jan-1980 to Jan-2010 (daily) | Stock price | - |
| Chiang, Enke, Wu, and Wang (2016) [33] | ANNs, particle swarm optimization | Jan-2008 to Dec-2010 (daily) | Trading signal (stock price) | - |
| Arévalo, Niño, Hernández, and Sandoval (2016) [34] | DNNs | 2-Sep-2008 to 7-Nov-2008 (1 min) | Stock price | 66% |
| Zhong and Enke (2017) [35] | ANNs, dimension reduction | 1-Jun-2003 to 31-May-2013 (daily) | Market direction (up or down) | 58.1% |
| Singh and Srivastava (2017) [12] | DNNs, dimension reduction | 19-Aug-2004 to 10-Dec-2015 (daily) | Stock price | - |
| Lei (2018) [7] | Wavelet NNs, rough set (RS) | 2009 to 2014, five different stock markets | Stock price trend | 65.62–66.75% |
| Our approach | Deep learning in RNNs, MRA | 2013 to 2017, three different stock markets and the S&P 500 stock index | Stock price movement | |

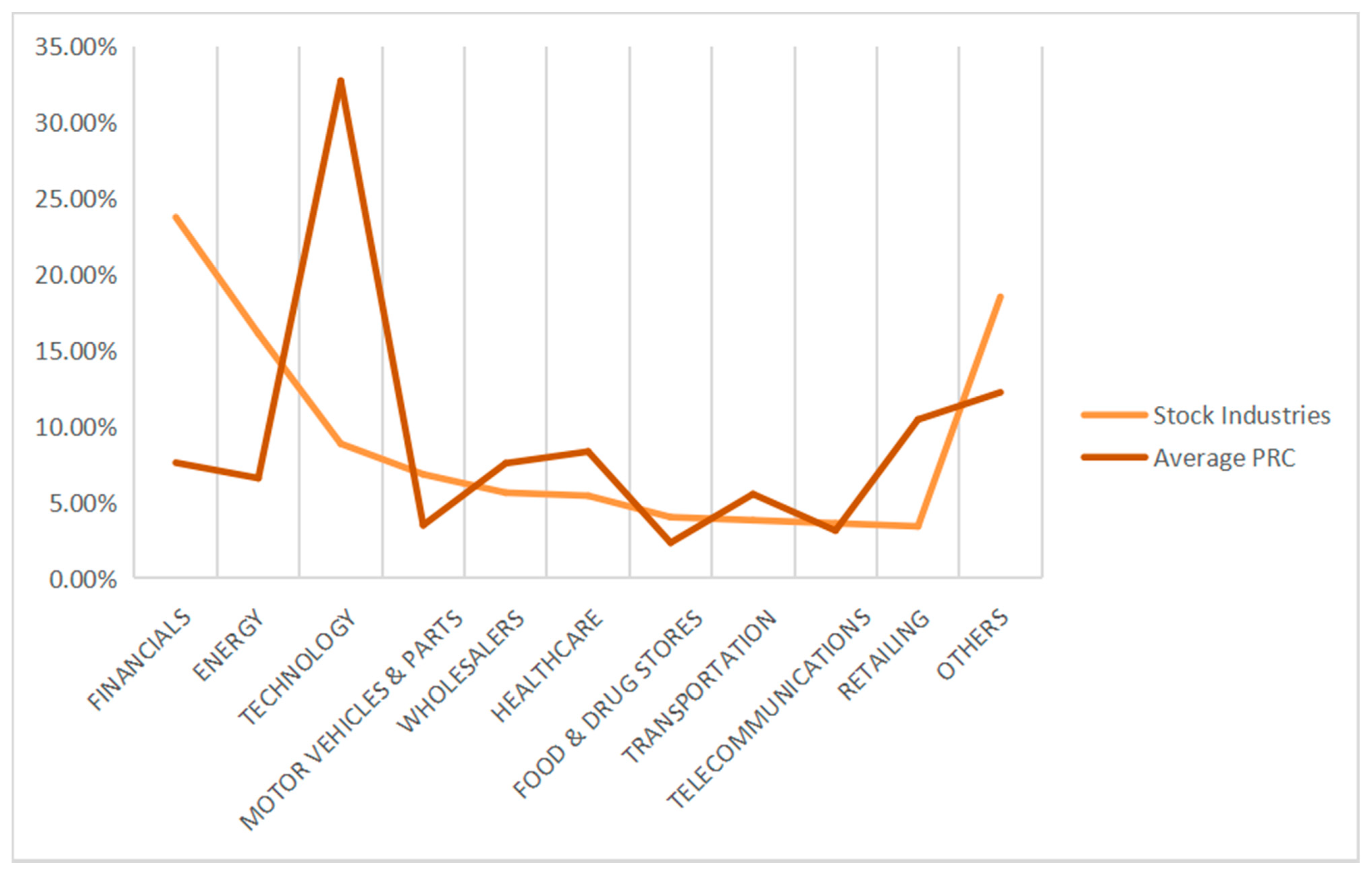

| Stock Industries | % |
|---|---|
| Financials | 23.74 |
| Energy | 16.10 |
| Technology | 8.85 |
| Motor Vehicles and Parts | 6.84 |
| Wholesalers | 5.63 |
| Healthcare | 5.43 |
| Food and Drug Stores | 4.02 |
| Transportation | 3.82 |
| Telecommunications | 3.62 |
| Retailing | 3.42 |
| Food, Beverages and Tobacco | 3.22 |
| Materials | 3.22 |
| Industrials | 3.02 |
| Aerospace and Defense | 2.82 |
| Engineering and Construction | 2.62 |
| Chemicals | 1.41 |
| Business Services | 0.60 |
| Household Products | 0.60 |
| Media | 0.60 |
| Apparel | 0.40 |
| Hotels, Restaurants and Leisure | 0.00 |

| Stock Industries | Bayesian (Baseline) | RF (Baseline) | ANN (Baseline) | DNN (Our Model) |
|---|---|---|---|---|
| Financials | 0.60 | 0.61 | 0.63 | 0.71 |
| Energy | 0.56 | 0.61 | 0.69 | 0.65 |
| Technology | 0.59 | 0.57 | 0.65 | 0.69 |
| Motor Vehicles and Parts | 0.66 | 0.58 | 0.71 | 0.68 |
| Wholesalers | 0.65 | 0.58 | 0.72 | 0.70 |
| Healthcare | 0.60 | 0.60 | 0.72 | 0.71 |
| Food and Drug Stores | 0.53 | 0.52 | 0.63 | 0.64 |
| Transportation | 0.64 | 0.63 | 0.76 | 0.72 |
| Telecommunications | 0.60 | 0.58 | 0.70 | 0.69 |
| Retailing | 0.62 | 0.58 | 0.66 | 0.68 |
| Food, Beverages and Tobacco | 0.58 | 0.56 | 0.67 | 0.67 |
| Materials | 0.66 | 0.65 | 0.71 | 0.72 |
| Industrials | 0.65 | 0.62 | 0.76 | 0.74 |
| Aerospace and Defense | 0.65 | 0.64 | 0.70 | 0.71 |
| Engineering and Construction | | | | |
| Chemicals | | | | |
| Business Services | | | | |
| Household Products | 0.68 | 0.63 | 0.72 | 0.71 |
| Media | 0.59 | 0.57 | 0.66 | 0.68 |
| Apparel | 0.64 | 0.57 | 0.68 | 0.68 |
| Hotels, Restaurants and Leisure | | | | |
| AVG | 0.62 | 0.59 | 0.69 | 0.69 |
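
As a sanity check on the AVG row, the sketch below recomputes each column's mean from the per-industry accuracies listed in the table above. It assumes that AVG is an unweighted mean over the 17 industries with reported values, which the source does not state; the names `ROWS`, `MODELS`, and `column_averages` are illustrative only and do not come from the paper.

```python
# Accuracies copied from the table above, ordered as (Bayesian, RF, ANN, DNN).
# Industries whose cells are empty in the table are left out (assumption of this sketch).
ROWS = {
    "Financials": (0.60, 0.61, 0.63, 0.71),
    "Energy": (0.56, 0.61, 0.69, 0.65),
    "Technology": (0.59, 0.57, 0.65, 0.69),
    "Motor Vehicles and Parts": (0.66, 0.58, 0.71, 0.68),
    "Wholesalers": (0.65, 0.58, 0.72, 0.70),
    "Healthcare": (0.60, 0.60, 0.72, 0.71),
    "Food and Drug Stores": (0.53, 0.52, 0.63, 0.64),
    "Transportation": (0.64, 0.63, 0.76, 0.72),
    "Telecommunications": (0.60, 0.58, 0.70, 0.69),
    "Retailing": (0.62, 0.58, 0.66, 0.68),
    "Food, Beverages and Tobacco": (0.58, 0.56, 0.67, 0.67),
    "Materials": (0.66, 0.65, 0.71, 0.72),
    "Industrials": (0.65, 0.62, 0.76, 0.74),
    "Aerospace and Defense": (0.65, 0.64, 0.70, 0.71),
    "Household Products": (0.68, 0.63, 0.72, 0.71),
    "Media": (0.59, 0.57, 0.66, 0.68),
    "Apparel": (0.64, 0.57, 0.68, 0.68),
}

MODELS = ("Bayesian", "RF", "ANN", "DNN")


def column_averages(rows):
    """Return the unweighted mean accuracy per model, rounded to two decimals."""
    n = len(rows)
    return {
        model: round(sum(values[i] for values in rows.values()) / n, 2)
        for i, model in enumerate(MODELS)
    }


averages = column_averages(ROWS)
print(averages)
```

Under that assumption, the rounded means match the AVG row (0.62, 0.59, 0.69, 0.69).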


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peng, L.; Chen, K.; Li, N. Predicting Stock Movements: Using Multiresolution Wavelet Reconstruction and Deep Learning in Neural Networks. Information 2021, 12, 388. https://doi.org/10.3390/info12100388
