Multidocument Arabic Text Summarization Based on Clustering and Word2Vec to Reduce Redundancy

Abstract

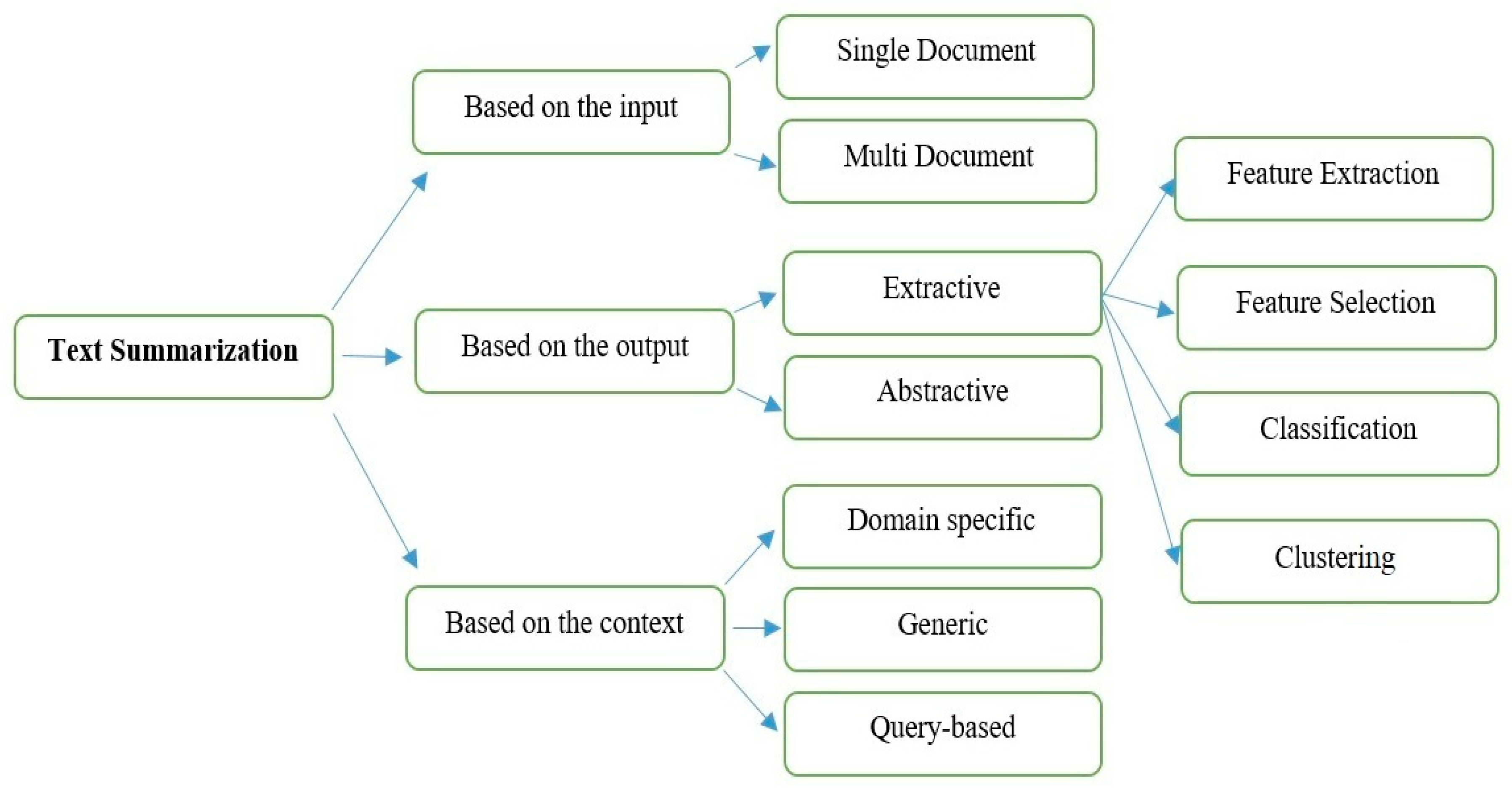

1. Introduction

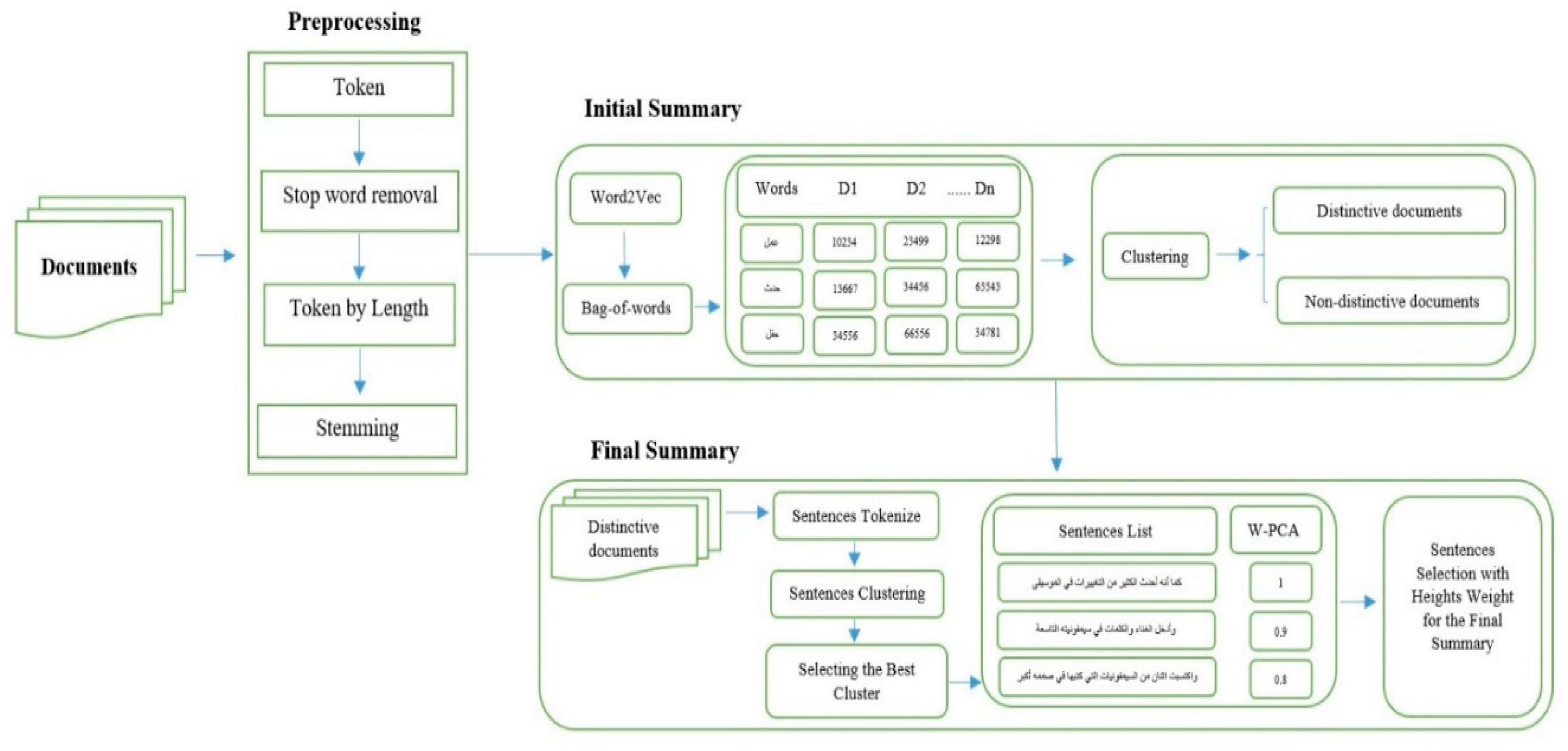

- We have adopted a continuous bag-of-words (CBOW) Word2Vec model to capture word semantics and relationships while retaining contextual information.

- We have applied the k-means algorithm to cluster Arabic text for summarization, which can help increase the efficiency of the model.

- We have adopted a statistical model, weighted principal component analysis (W-PCA), based on a list of features to address the sentence ranking and selection problems.
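The CBOW objective in the first contribution can be illustrated with a small sketch. CBOW predicts each word from the words around it, so its training data is a set of (context, target) pairs, and its projection layer averages the input vectors of the context words. The function names, the window size, and the toy embedding values below are hypothetical, and the paper's actual training configuration is not reproduced here. In practice, a library such as gensim's `Word2Vec` (where `sg=0` selects CBOW) would handle training.

```python
from typing import Dict, List, Tuple


def cbow_pairs(tokens: List[str], window: int = 2) -> List[Tuple[List[str], str]]:
    """Build (context, target) training pairs as used by a CBOW model.

    For each position, the context is up to `window` tokens on each side.
    """
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        context = left + right
        if context:  # a one-token sentence offers no context to learn from
            pairs.append((context, target))
    return pairs


def average_context(context: List[str], embeddings: Dict[str, List[float]]) -> List[float]:
    """CBOW projection step: average the input vectors of the context words."""
    vectors = [embeddings[w] for w in context]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


if __name__ == "__main__":
    print(cbow_pairs(["a", "b", "c", "d"], window=1))
    # [(['b'], 'a'), (['a', 'c'], 'b'), (['b', 'd'], 'c'), (['c'], 'd')]
```

Averaging the context vectors, rather than concatenating them, is what makes CBOW insensitive to word order within the window. This is also why it trains quickly on small corpora.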

2. Related Work

2.1. Machine Learning Method

2.2. Semantic Method

2.3. Cluster-Based Method

2.4. Statistical Method

2.5. Graph Method

2.6. Optimization Method

2.7. Discourse Method

3. The Challenge of Redundancy

- Arabic is written with diacritics and is highly derivational, which makes morphological analysis difficult.

- Arabic words are often ambiguous, since the word-formation system is based on tri-literal roots from which many related words are derived.

- Broken plurals: a broken plural is an irregular plural form of a noun or adjective, found in Semitic languages such as Arabic, that is formed by changing the word's internal pattern rather than by adding a suffix.

- A character can take different written forms depending on its position in a word.
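Arabic preprocessing pipelines commonly reduce the variation described above through character normalization before tokenization. The following minimal sketch assumes one common rule set: fold presentation forms, strip diacritics and tatweel, unify alef variants, and map alef maqsura to ya and ta marbuta to ha. These rules and the name `normalize_arabic` are illustrative assumptions and are not necessarily the authors' preprocessing.

```python
import re
import unicodedata

# Harakat, tanween, shadda and sukun occupy U+064B..U+0652.
DIACRITICS = re.compile("[\u064B-\u0652]")
TATWEEL = "\u0640"  # kashida, used only to stretch words visually

# Illustrative letter-variant mapping; real systems differ in which variants they merge.
VARIANTS = str.maketrans({
    "\u0622": "\u0627",  # alef with madda above -> bare alef
    "\u0623": "\u0627",  # alef with hamza above -> bare alef
    "\u0625": "\u0627",  # alef with hamza below -> bare alef
    "\u0649": "\u064A",  # alef maqsura -> ya
    "\u0629": "\u0647",  # ta marbuta -> ha
})


def normalize_arabic(text: str) -> str:
    # NFKC folds legacy positional presentation-form code points
    # (isolated/initial/medial/final glyphs) back to the base letters.
    text = unicodedata.normalize("NFKC", text)
    text = DIACRITICS.sub("", text)
    text = text.replace(TATWEEL, "")
    return text.translate(VARIANTS)
```

In ordinary Unicode text, each letter is stored as a single code point, and its positional shape is chosen at rendering time. Only text containing presentation-form code points needs the NFKC step.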

4. Proposed System

5. Experimental Results

5.1. Data Collection

5.2. Text Preprocessing

5.3. Initial Summary Generation

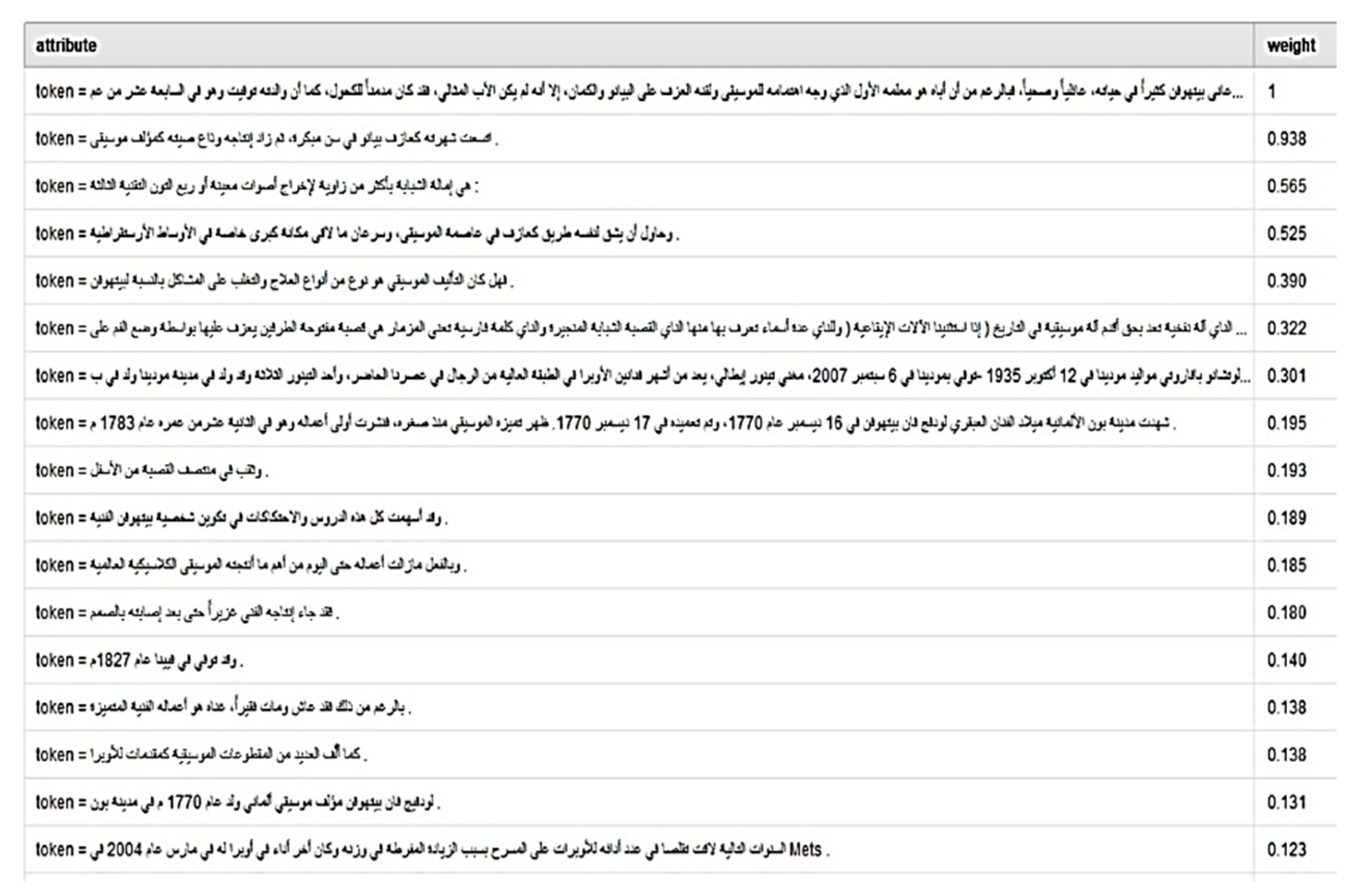

5.4. Final Summary Generation

6. Evaluation and Comparison

7. Discussion

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Aliguliyev, R.M.; Isazade, N.R.; Abdi, A.; Idris, N.J.E.S. COSUM: Text summarization based on clustering and optimization. Wiley Online Libr. 2019, 36, e12340.

2. Sanchez-Gomez, J.M.; Vega-Rodríguez, M.A.; Pérez, C.J. Comparison of automatic methods for reducing the Pareto front to a single solution applied to multi-document text summarization. Knowl.-Based Syst. 2019, 174, 123–136.

3. Verma, P.; Om, H. MCRMR: Maximum coverage and relevancy with minimal redundancy based multi-document summarization. Expert Syst. Appl. 2019, 120, 43–56.

4. Patel, D.B.; Shah, S.; Chhinkaniwala, H.R. Fuzzy logic based multi Document Summarization with improved sentence scoring and redundancy removal technique. Expert Syst. Appl. 2019.

5. Mallick, C.; Das, A.K.; Dutta, M.; Das, A.K.; Sarkar, A. Graph-Based Text Summarization Using Modified TextRank. In Soft Computing in Data Analytics; Springer: Berlin, Germany, 2019; pp. 137–146.

6. Kanapala, A.; Pal, S.; Pamula, R. Text summarization from legal documents: A survey. Artif. Intell. Rev. 2019, 51, 371–402.

7. Belkebir, R.; Guessoum, A. A supervised approach to Arabic text summarization using AdaBoost. In New Contributions in Information Systems and Technologies; Springer: Berlin, Germany, 2015; pp. 227–236.

8. Amato, F.; Marrone, S.; Moscato, V.; Piantadosi, G.; Picariello, A.; Sansone, C. HOLMeS: eHealth in the Big Data and Deep Learning Era. MDPI Inf. 2019, 10, 34.

9. Gerani, S.; Carenini, G.; Ng, R.T. Modeling content and structure for abstractive review summarization. Comput. Speech Lang. 2019, 53, 302–331.

10. Abualigah, L.; Bashabsheh, M.Q.; Alabool, H.; Shehab, M. Text Summarization: A Brief Review. In Recent Advances in NLP: The Case of Arabic Language; Springer: Berlin, Germany, 2020; pp. 1–15.

11. Suleiman, D.; Awajan, A.A.; Al Etaiwi, W. Arabic Text Keywords Extraction using Word2vec. In Proceedings of the 2019 2nd International Conference on New Trends in Computing Sciences (ICTCS), Amman, Jordan, 9–11 October 2019; pp. 1–7.

12. Amato, F.; Moscato, V.; Picariello, A.; Sperlì, G. Extreme events management using multimedia social networks. Future Gener. Comput. Syst. 2019, 94, 444–452.

13. Al-Abdallah, R.Z.; Al-Taani, A.T. Arabic Text Summarization using Firefly Algorithm. In Proceedings of the 2019 Amity International Conference on Artificial Intelligence (AICAI), Dubai, United Arab Emirates, 4–6 February 2019; pp. 61–65.

14. Wei, F.; Li, W.; Lu, Q.; He, Y. A document-sensitive graph model for multi-document summarization. Knowl. Inf. Syst. 2010, 22, 245–259.

15. Wan, X.; Yang, J. Multi-document summarization using cluster-based link analysis. In Proceedings of the 31st Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Singapore, 20–24 July 2008; pp. 299–306.

16. Amato, F.; Castiglione, A.; Mercorio, F.; Mezzanzanica, M.; Moscato, V.; Picariello, A.; Sperlì, G. Multimedia story creation on social networks. Future Gener. Comput. Syst. 2018, 86, 412–420.

17. Al-Radaideh, Q.A.; Bataineh, D.Q. A hybrid approach for Arabic text summarization using domain knowledge and genetic algorithms. Cogn. Comput. 2018, 10, 651–669.

18. Al-Abdallah, R.Z.; Al-Taani, A.T. Arabic single-document text summarization using particle swarm optimization algorithm. Procedia Comput. Sci. 2017, 117, 30–37.

19. Lagrini, S.; Redjimi, M.; Azizi, N. Automatic Arabic Text Summarization Approaches. Int. J. Comput. Appl. 2017, 164.

20. Bialy, A.A.; Gaheen, M.A.; ElEraky, R.; ElGamal, A.; Ewees, A.A. Single Arabic Document Summarization Using Natural Language Processing Technique. In Recent Advances in NLP: The Case of Arabic Language; Springer: Berlin, Germany, 2020; pp. 17–37.

21. Al Qassem, L.M.; Wang, D.; Al Mahmoud, Z.; Barada, H.; Al-Rubaie, A.; Almoosa, N.I. Automatic Arabic summarization: A survey of methodologies and systems. Procedia Comput. Sci. 2017, 117, 10–18.

22. Badry, R.M.; Moawad, I.F. A Semantic Text Summarization Model for Arabic Topic-Oriented. In Proceedings of the International Conference on Advanced Machine Learning Technologies and Applications, Cairo, Egypt, 28–30 March 2019; pp. 518–528.

23. El-Haj, M.; Kruschwitz, U.; Fox, C. Using Mechanical Turk to Create a Corpus of Arabic Summaries; University of Essex: Essex, UK, 2010.

24. Alami, N.; Meknassi, M.; En-nahnahi, N. Enhancing unsupervised neural networks based text summarization with word embedding and ensemble learning. Expert Syst. Appl. 2019, 123, 195–211.

25. Blagec, K.; Xu, H.; Agibetov, A.; Samwald, M. Neural sentence embedding models for semantic similarity estimation in the biomedical domain. BMC Bioinform. 2019, 20, 178.

26. Elbarougy, R.; Behery, G.; El Khatib, A. Extractive Arabic Text Summarization Using Modified PageRank Algorithm. Int. Conf. Adv. Mach. Learn. Technol. Appl. 2019.

27. Deng, X.; Li, Y.; Weng, J.; Zhang, J. Feature selection for text classification: A review. Multimed. Tools Appl. 2019, 78, 3797–3816.

28. Mosa, M.A.; Anwar, A.S.; Hamouda, A. A survey of multiple types of text summarization with their satellite contents based on swarm intelligence optimization algorithms. Knowl.-Based Syst. 2019, 163, 518–532.

29. Adhvaryu, N.; Balani, P. Survey: Part-Of-Speech Tagging in NLP. In Proceedings of the International Journal of Research in Advent Technology (E-ISSN: 2321-9637) Special Issue 1st International Conference on Advent Trends in Engineering, Science and Technology “ICATEST 2015”, Amravati, Maharashtra, India, 8 March 2015.

30. Abuobieda, A.; Salim, N.; Albaham, A.T.; Osman, A.H.; Kumar, Y.J. Text summarization features selection method using pseudo genetic-based model. In Proceedings of the 2012 International Conference on Information Retrieval & Knowledge Management, Kuala Lumpur, Malaysia, 13–15 March 2012; pp. 193–197.

31. Al-Saleh, A.B.; Menai, M.E.B. Automatic Arabic text summarization: A survey. Artif. Intell. Rev. 2016, 45, 203–234.

32. Li, H. Multivariate time series clustering based on common principal component analysis. Neurocomputing 2019, 349, 239–247.

| Word | Prefixes | Infixes | Suffixes | Meaning |
|---|---|---|---|---|
| دارسون | - | ا | و + ن | Scholars |
| مدرسات | م | - | ا + ت | Teachers |
| المدارس | ا + ل + م | ا | - | Schools |
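The affix table above suggests why light stemming, which strips common prefixes and suffixes without analyzing roots, only partly handles Arabic morphology. The following minimal sketch uses deliberately small, hypothetical affix lists and a hypothetical `light_stem` function. Real light stemmers use longer, carefully ordered lists. This is not presented as the authors' stemmer.

```python
# Hypothetical, deliberately small affix lists (longest first within each group).
PREFIXES = ("وال", "بال", "كال", "فال", "لل", "ال")
SUFFIXES = ("ات", "ون", "ين", "ان", "ها", "ية", "ة", "ه", "ي")


def light_stem(word: str, min_len: int = 3) -> str:
    """Strip at most one prefix and one suffix, keeping at least `min_len` letters."""
    for p in PREFIXES:
        if word.startswith(p) and len(word) - len(p) >= min_len:
            word = word[len(p):]
            break
    for s in SUFFIXES:
        if word.endswith(s) and len(word) - len(s) >= min_len:
            word = word[:-len(s)]
            break
    return word


if __name__ == "__main__":
    for w in ("دارسون", "مدرسات", "المدارس"):
        print(w, "->", light_stem(w))
```

The sound plurals lose their suffixes (دارسون becomes دارس and مدرسات becomes مدرس). By contrast, المدارس only loses its article and keeps the infix alef as مدارس. Affix stripping therefore does not map this broken plural back to its singular, which is the difficulty described under broken plurals in Section 3.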

| The Name of the Corpus | Essex Arabic Summaries Corpus (EASC) |
|---|---|
| Number of Documents | 153 |
| Number of Sentences | 1652 |
| Number of Words | 29,045 |
| Number of Distinct Words | 12,785 |
| Number of Gold-Standard Summaries | 10 (one for each category) |

| Name of Category | Cluster-0 Distance | Cluster-1 Distance | Best Cluster | Distinctive Documents |
|---|---|---|---|---|
| Music and Art | 0.694 | 0.678 | Cluster-1 | 1, 2, 3, 4, and 10 |
| Education | 0.639 | 0.534 | Cluster-1 | 5, 6, and 7 |
| Tourism | 0.660 | 0.700 | Cluster-0 | 2, 3, 4, 5, 7, and 9 |
| Environment | 0.770 | 0.484 | Cluster-1 | 1, 5, and 8 |
| Health | 0.583 | 0.722 | Cluster-0 | 2, 3, 4, and 10 |
| Finance | 0.675 | 0.694 | Cluster-0 | 1, 2, 3, 7, and 9 |
| Politics | 0.724 | 0.612 | Cluster-1 | 3, 6, 7, and 8 |
| Science and Technology | 0.709 | 0.706 | Cluster-1 | 5, 7, 8, 9, and 10 |
| Religion | 0.533 | 0.687 | Cluster-0 | 1, 3, and 5 |
| Sport | 0.537 | 0.716 | Cluster-0 | 1, 2, and 10 |
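In every row of the cluster table above, the cluster marked as best is the one with the lower reported distance. This suggests the value behaves like a compactness measure, where lower means tighter. The following minimal sketch works under that assumption. It runs k-means with k = 2 over sentence or document vectors (for example, averaged Word2Vec vectors) and scores each cluster by the mean Euclidean distance of its members to the centroid. It then picks the lowest-scoring cluster. The first-k initialization, the Euclidean metric, and all function names are illustrative assumptions. The paper's exact distance measure is not reproduced here.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def centroid(points: List[Vector]) -> List[float]:
    return [sum(dim) / len(points) for dim in zip(*points)]


def kmeans(points: List[Vector], k: int, max_iter: int = 100) -> Tuple[List[int], List[List[float]]]:
    """Plain Lloyd's k-means; initial centroids are the first k points (an assumption)."""
    centroids = [list(p) for p in points[:k]]
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean).
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each non-empty cluster's centroid to its members' mean.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = centroid(members)
    return labels, centroids


def mean_distances(points: List[Vector], labels: List[int], centroids: List[List[float]]) -> List[float]:
    """Mean member-to-centroid distance per cluster (inf for an empty cluster)."""
    result = []
    for c, center in enumerate(centroids):
        members = [p for p, lab in zip(points, labels) if lab == c]
        result.append(sum(math.dist(p, center) for p in members) / len(members) if members else math.inf)
    return result


def best_cluster(distances: Sequence[float]) -> int:
    """Index of the most compact cluster (lowest mean distance)."""
    return min(range(len(distances)), key=lambda c: distances[c])
```

Applied to the reported values, `best_cluster([0.694, 0.678])` returns 1, which matches Cluster-1 for Music and Art. `best_cluster([0.660, 0.700])` returns 0, which matches Cluster-0 for Tourism.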

| Name of Category | ROUGE-1 Recall | ROUGE-1 Precision | ROUGE-1 F-score | ROUGE-2 Recall | ROUGE-2 Precision | ROUGE-2 F-score |
|---|---|---|---|---|---|---|
| Music and Art | 0.672 | 0.501 | 0.574 | 0.70 | 0.427 | 0.517 |
| Education | 0.603 | 0.579 | 0.590 | 0.580 | 0.351 | 0.431 |
| Tourism | 0.504 | 0.304 | 0.379 | 0.548 | 0.360 | 0.405 |
| Environment | 0.532 | 0.307 | 0.389 | 0.464 | 0.308 | 0.355 |
| Finance | 0.605 | 0.589 | 0.467 | 0.558 | 0.339 | 0.418 |
| Health | 0.695 | 0.60 | 0.644 | 0.621 | 0.498 | 0.552 |
| Politics | 0.548 | 0.407 | 0.467 | 0.447 | 0.319 | 0.345 |
| Religion | 0.608 | 0.6 | 0.603 | 0.587 | 0.534 | 0.559 |
| Science and Technology | 0.607 | 0.564 | 0.584 | 0.615 | 0.421 | 0.501 |
| Sport | 0.364 | 0.313 | 0.336 | 0.462 | 0.418 | 0.332 |
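The scores in the ROUGE table follow the standard ROUGE-N definitions. Recall is the fraction of reference n-grams matched by the system summary, and precision is the fraction of system n-grams that appear in the reference. The F-score is their harmonic mean. The following minimal sketch assumes whitespace-tokenized (ideally normalized) text and a single reference summary. Official ROUGE toolkits add options such as stemming and multiple references, which are not reproduced here, and the function names are illustrative.

```python
from collections import Counter
from typing import List, Tuple


def ngram_counts(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f_score(recall: float, precision: float) -> float:
    """Harmonic mean of recall and precision (F1)."""
    return 2 * recall * precision / (recall + precision) if recall + precision else 0.0


def rouge_n(candidate: List[str], reference: List[str], n: int = 1) -> Tuple[float, float, float]:
    """ROUGE-N recall, precision, and F-score against a single reference summary."""
    cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
    overlap = sum((cand & ref).values())  # matches clipped to the smaller count
    recall = overlap / sum(ref.values()) if ref else 0.0
    precision = overlap / sum(cand.values()) if cand else 0.0
    return recall, precision, f_score(recall, precision)
```

As a consistency check against the table, `f_score(0.695, 0.60)` gives approximately 0.644, which is the ROUGE-1 F-score reported for Health.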

| Author(s) & Year | Arabic Corpus | Method | F-score |
|---|---|---|---|
| [18] Al-Abdallah 2017 | EASC | Particle Swarm Optimization (single document) | 0.553 |
| [13] Al-Abdallah 2019 | EASC | Firefly Algorithm (single document) | 0.57 |
| [17] Al-Radaideh 2018 | EASC | Genetic Algorithms (single document) | 0.605 |
| [11] Suleiman 2019 | The Universal Declaration of Human Rights | Word2Vec and Clustering (single document) | 0.63 |
| Our approach | EASC | Word2Vec, Clustering, and Statistical-based methods (multidocument) | 0.644 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Abdulateef, S.; Khan, N.A.; Chen, B.; Shang, X. Multidocument Arabic Text Summarization Based on Clustering and Word2Vec to Reduce Redundancy. Information 2020, 11, 59. https://doi.org/10.3390/info11020059
