Multi-Sensor Activity Monitoring: Combination of Models with Class-Specific Voting

Abstract

1. Introduction

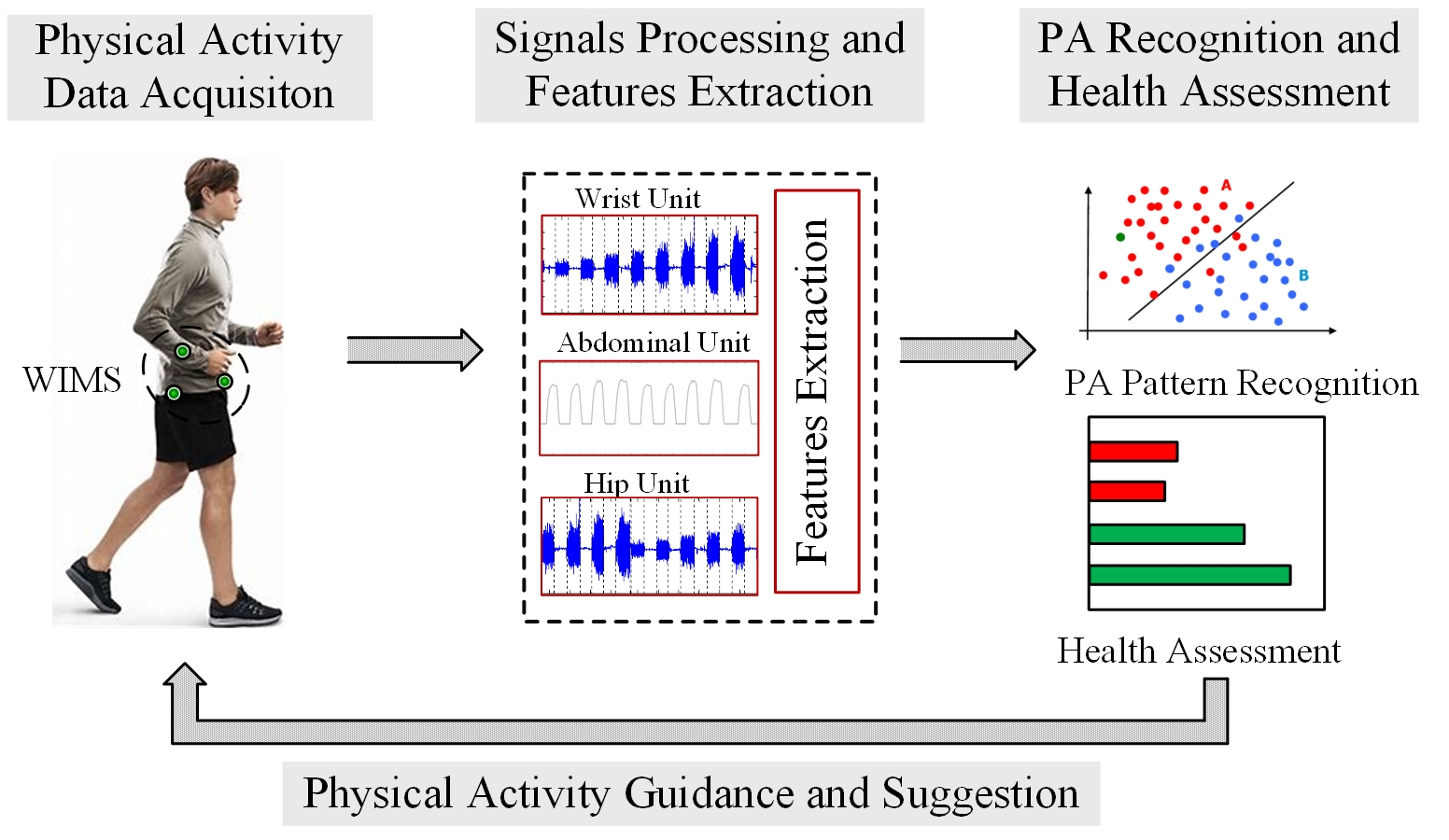

2. Model Combination Method

2.1. Model Combination System Design

2.2. Class-Specific Weighted Majority Voting

3. Multi-Sensor Measurement Platform

3.1. Multi-Sensor Monitoring System Based on the WIMS

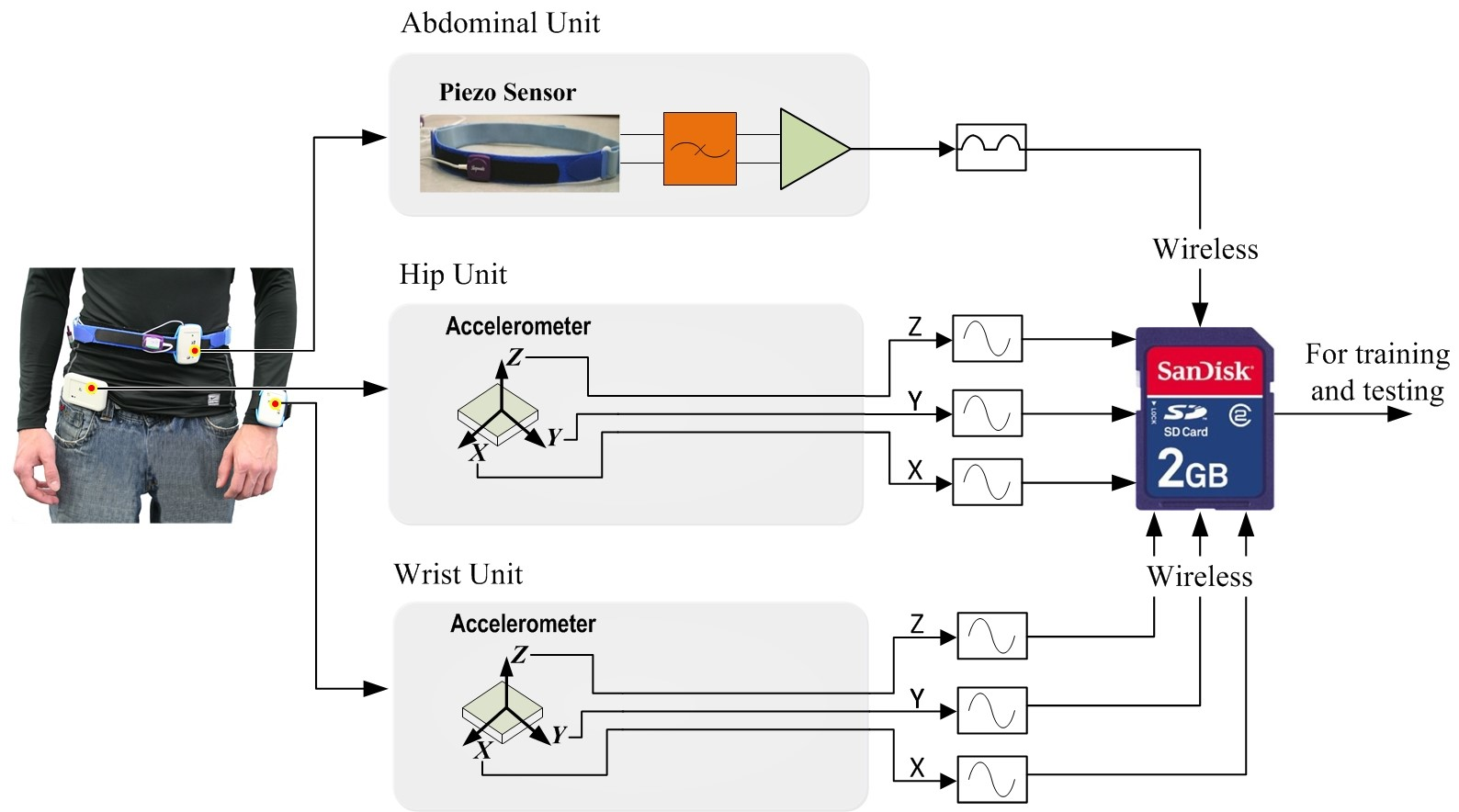

3.2. WIMS System Design and Realization

- Hip Unit: one ADXL345 tri-axial accelerometer worn at the hip, measuring the body motions that characterize the degree of physical activity (PA) of the lower body;

- Wrist Unit: one ADXL345 tri-axial accelerometer worn on the wrist, measuring the arm and hand motions that characterize the PA of the upper body;

- Abdominal Unit: one piezoelectric-crystal ventilation sensor wrapped around the abdomen, measuring the expansion and contraction caused by the subject’s respiration (breathing rate and volume).

4. Experiment Design and Data Processing

4.1. Design of Experiments

4.2. Data Processing

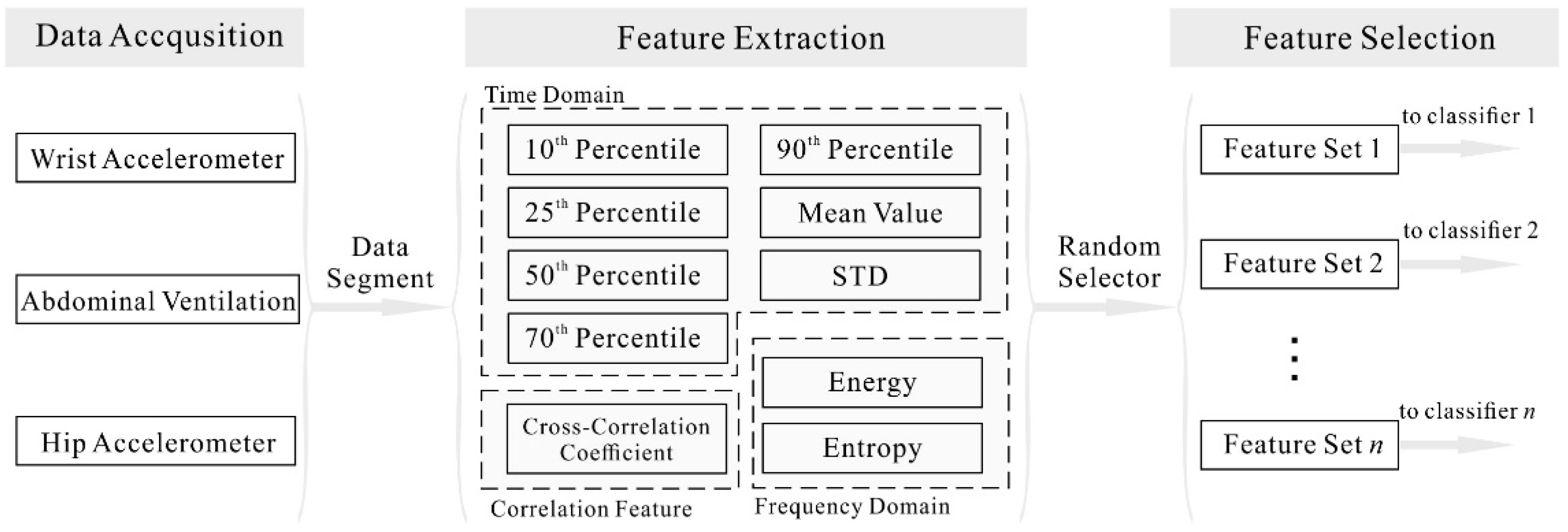

4.2.1. Sensor Sets Generation and Diversification

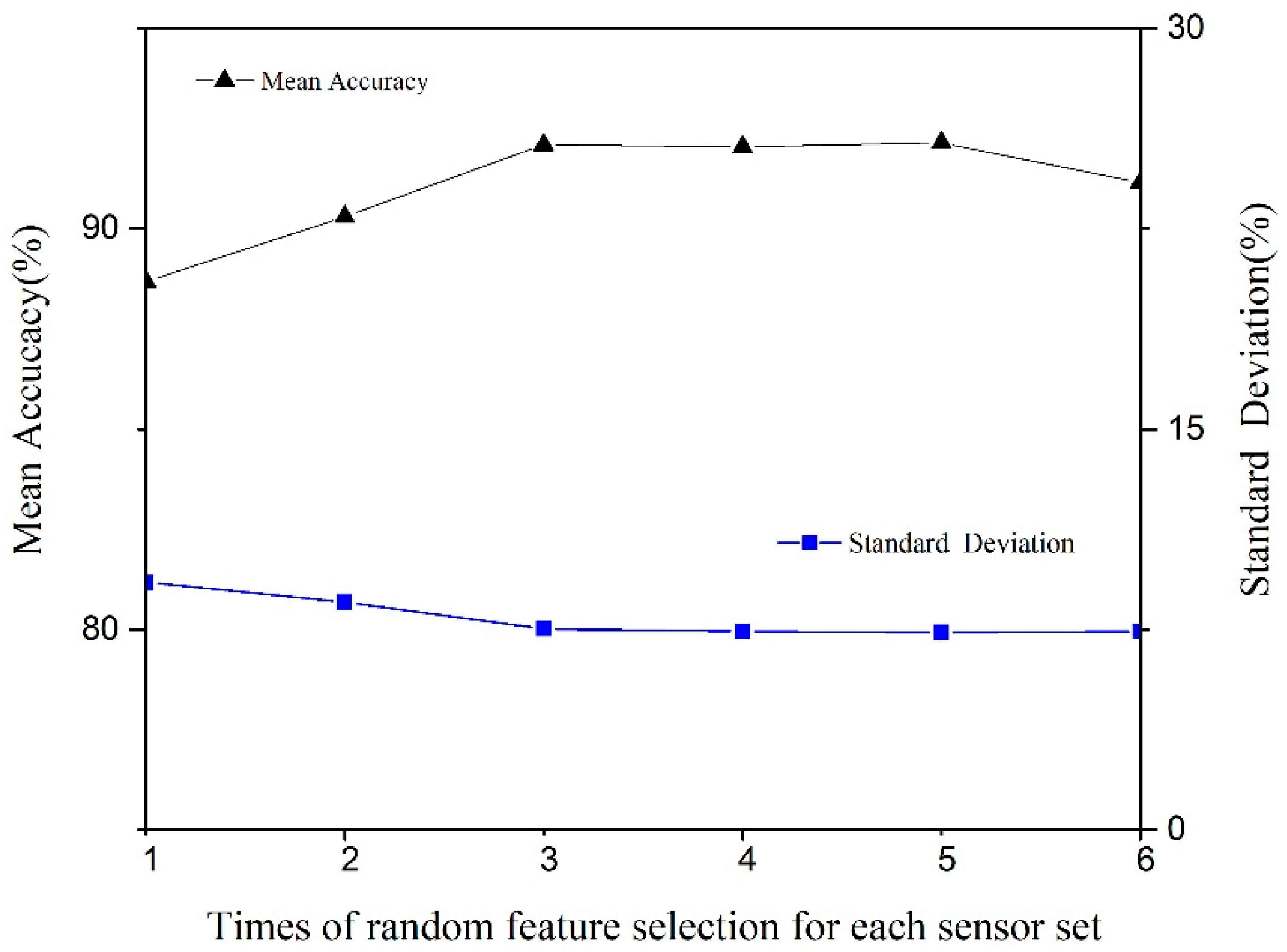

4.2.2. Feature Extraction and Selection

4.2.3. Model Selection, Training, and Testing

5. Results and Discussion

5.1. Separate Classifier Results

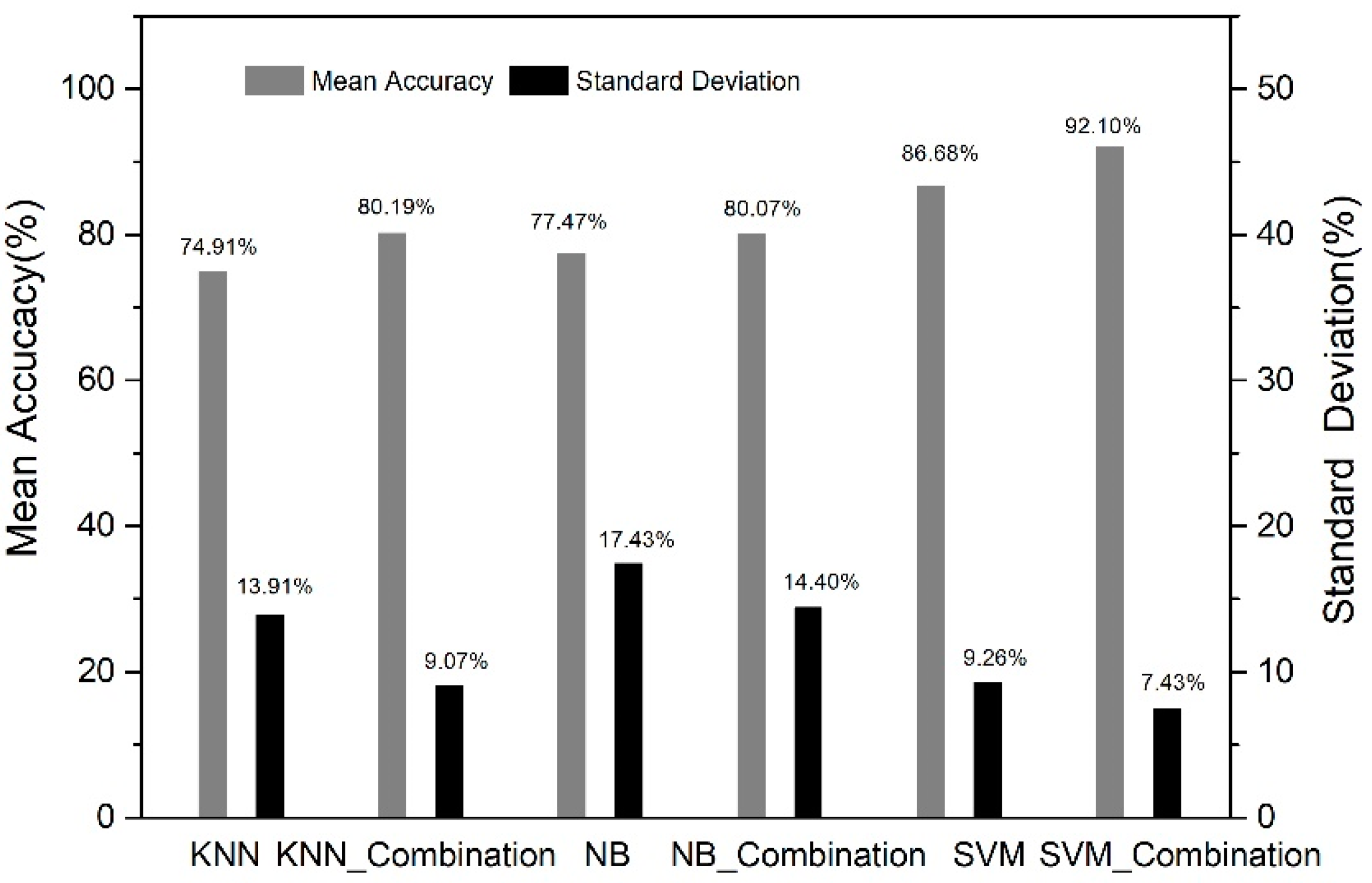

5.2. PA Types Identification of Different Classifier Clusters

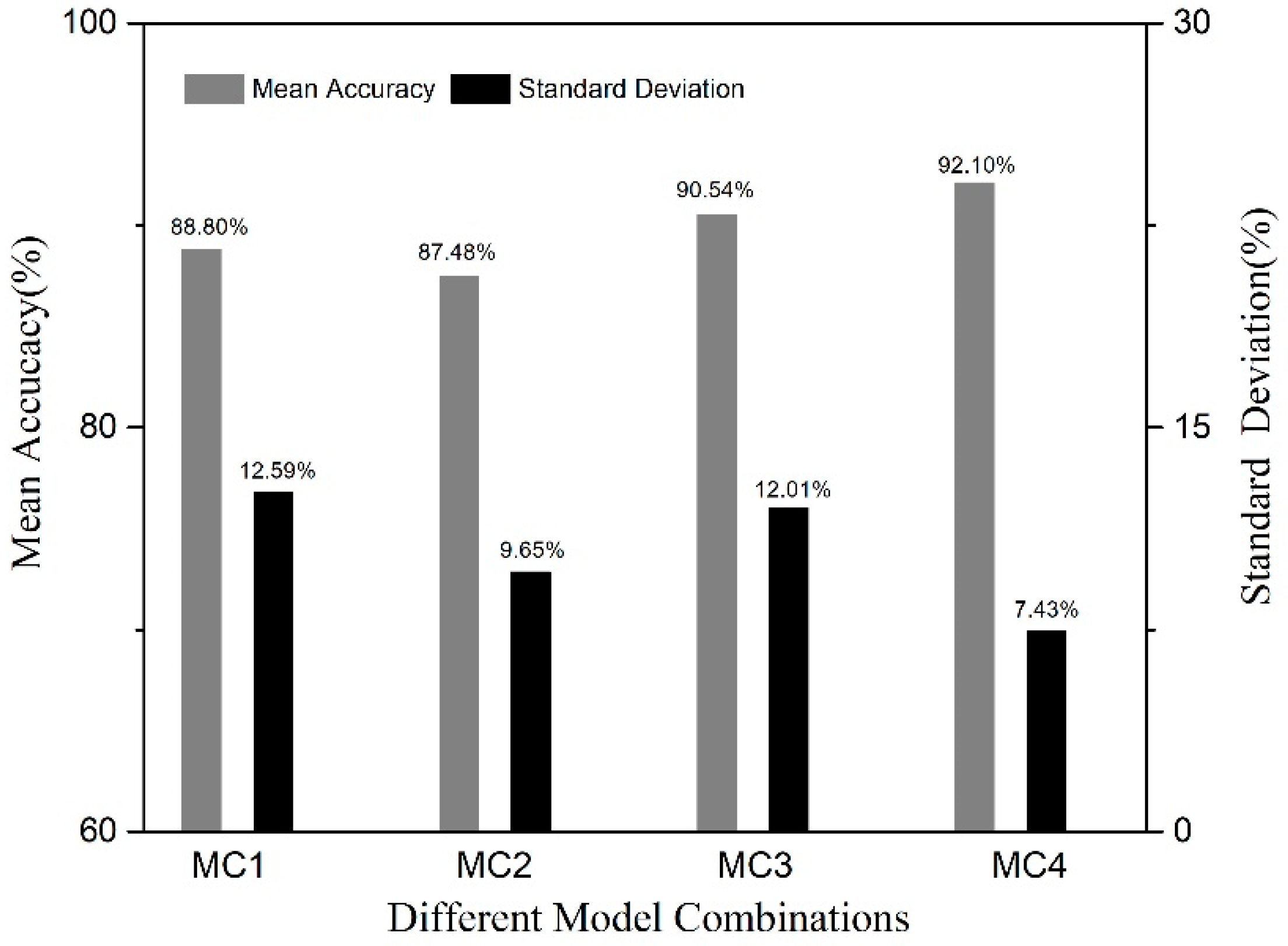

5.3. Results of Different Model Combinations

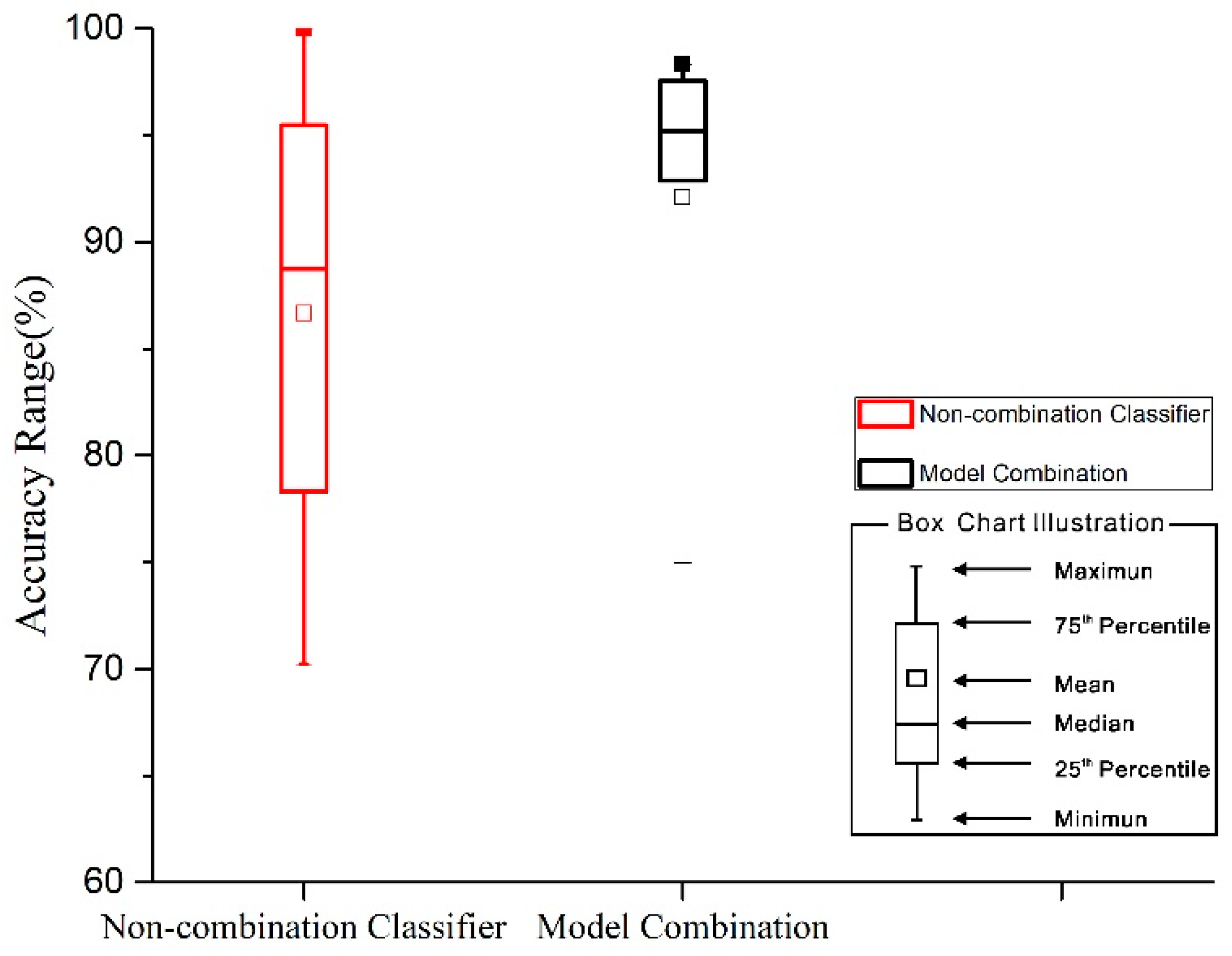

5.4. Comparison between Model Combination and Non-Combination Classifiers

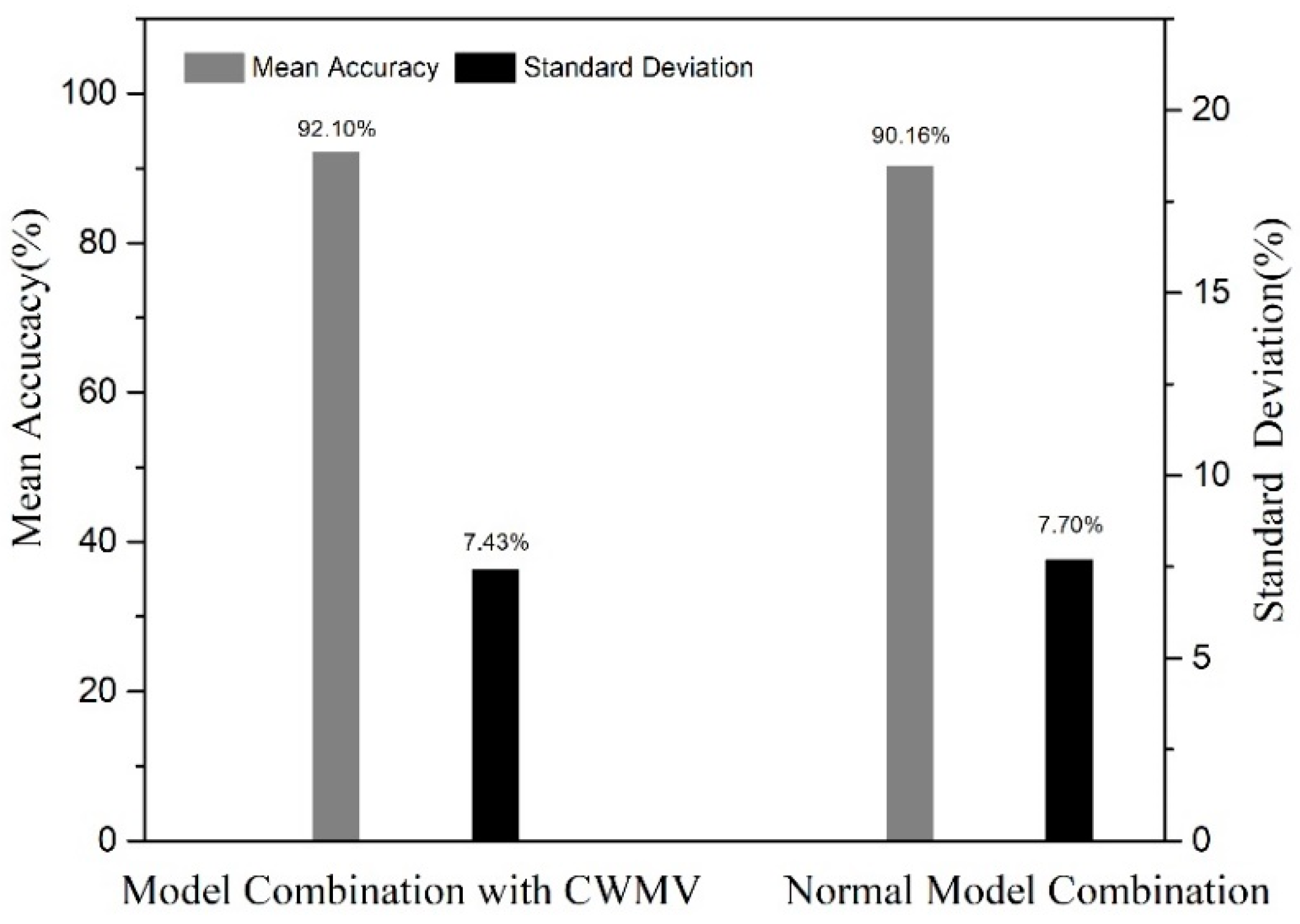

5.5. Comparison Between Voting Methods

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Characteristics | Distribution | | | Statistics | |
|---|---|---|---|---|---|
| | Category | Number | Percentage | Mean | Standard Deviation |
| Gender | F | 59 | 53.6% | N/A | N/A |
| | M | 51 | 46.4% | | |
| Age (years) | 20–30 | 30 | 27.3% | 38.7182 | 11.8385 |
| | 30–40 | 28 | 25.5% | | |
| | 40–50 | 25 | 22.7% | | |
| | 50–60 | 27 | 24.5% | | |
| Mass (kg) | <50 | 2 | 1.8% | 71.2500 | 14.8677 |
| | 50–60 | 26 | 23.7% | | |
| | 60–70 | 34 | 30.9% | | |
| | 70–80 | 13 | 11.8% | | |
| | 80–90 | 21 | 19.1% | | |
| | >90 | 14 | 12.7% | | |
| Height (cm) | 150–160 | 16 | 14.5% | 169.555 | 9.2474 |
| | 160–170 | 43 | 39.1% | | |
| | 170–180 | 33 | 30.0% | | |
| | >180 | 18 | 16.4% | | |
| BMI (kg/m²) | <18.5 | 1 | 0.9% | 25.0211 | 4.2116 |
| | 18.5–25 | 65 | 59.1% | | |
| | 25–30 | 30 | 27.3% | | |
| | >30 | 14 | 12.7% | | |

| Activities | Category | Abbr. |
|---|---|---|
| Computer work | Sedentary activity | CW |
| Filing paper | | FP |
| Moving boxes | Household and other | MB |
| Vacuuming | | VA |
| Cycling with 1-kp resistance | | C1 |
| Treadmill at 3.0 mph | Moderate locomotion | T3 |
| Treadmill at 4.0 mph | Vigorous activity | T4 |
| Treadmill at 6.0 mph | | T6 |
| Tennis | | TE |
| Basketball | | BA |

| PA Types | The Voting Weights (%) | | | | | | |
|---|---|---|---|---|---|---|---|
| | C1 | C2 | C3 | C4 | C5 | C6 | C7 |
| CW | 75.29 | 95.29 | 0 | 98.82 | 96.47 | 82.35 | 97.65 |
| C1 | 83.45 | 82.07 | 51.03 | 94.48 | 91.72 | 88.97 | 93.79 |
| T3 | 58.24 | 92.94 | 70.59 | 93.53 | 92.35 | 62.35 | 92.94 |
| T4 | 67.23 | 84.87 | 9.24 | 92.44 | 84.87 | 68.91 | 92.44 |
| FP | 100.0 | 16.13 | 0 | 98.39 | 64.52 | 100.0 | 98.39 |
| VA | 96.77 | 80.65 | 0 | 98.39 | 69.35 | 93.55 | 95.16 |
| BA | 76.19 | 42.86 | 0 | 73.81 | 61.90 | 76.19 | 73.81 |
| MB | 91.67 | 45.24 | 22.62 | 86.90 | 54.76 | 91.67 | 86.90 |
| TE | 80.65 | 58.06 | 0 | 77.42 | 56.45 | 82.26 | 79.03 |
| T6 | 95.24 | 97.62 | 0 | 95.24 | 90.48 | 100.0 | 100.0 |
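The voting-weight table can be read as a lookup used during class-specific weighted voting. The following is a minimal sketch under one assumed reading of that scheme: each classifier votes for the PA type it predicts, the vote counts with that classifier's weight for the predicted type, and the type with the largest summed weight wins. The function name `cwmv` and the example predictions are hypothetical; only the weights come from the table. Note that `C1` appears both as a classifier name (column) and as the abbreviation for cycling (row).

```python
# Voting weights (%) copied from the table: WEIGHTS[pa_type] lists the
# weights of classifiers C1..C7 for that PA type, in column order.
CLASSIFIERS = ["C1", "C2", "C3", "C4", "C5", "C6", "C7"]
WEIGHTS = {
    "CW": [75.29, 95.29, 0, 98.82, 96.47, 82.35, 97.65],
    "C1": [83.45, 82.07, 51.03, 94.48, 91.72, 88.97, 93.79],
    "T3": [58.24, 92.94, 70.59, 93.53, 92.35, 62.35, 92.94],
    "T4": [67.23, 84.87, 9.24, 92.44, 84.87, 68.91, 92.44],
    "FP": [100.0, 16.13, 0, 98.39, 64.52, 100.0, 98.39],
    "VA": [96.77, 80.65, 0, 98.39, 69.35, 93.55, 95.16],
    "BA": [76.19, 42.86, 0, 73.81, 61.90, 76.19, 73.81],
    "MB": [91.67, 45.24, 22.62, 86.90, 54.76, 91.67, 86.90],
    "TE": [80.65, 58.06, 0, 77.42, 56.45, 82.26, 79.03],
    "T6": [95.24, 97.62, 0, 95.24, 90.48, 100.0, 100.0],
}


def cwmv(predictions):
    """Combine per-classifier predictions by class-specific weighted voting.

    predictions maps a classifier name ("C1".."C7") to the PA type it predicts.
    Returns the winning PA type and the summed weight per candidate type.
    This is an assumed formulation, not necessarily the authors' exact rule.
    """
    scores = {}
    for classifier, pa_type in predictions.items():
        weight = WEIGHTS[pa_type][CLASSIFIERS.index(classifier)]
        scores[pa_type] = scores.get(pa_type, 0.0) + weight
    winner = max(scores, key=scores.get)
    return winner, scores


# Hypothetical predictions from the three classifiers C1, C2 and C3.
winner, scores = cwmv({"C1": "FP", "C2": "CW", "C3": "CW"})
print(winner, scores)
```

In this hypothetical case a plain majority vote would choose CW (two votes against one), whereas the weighted vote chooses FP: classifier C1 carries a weight of 100.0 for FP, while C2 and C3 together contribute only 95.29 + 0 for CW.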

| Model Combination | Definition of Different Combinations |
|---|---|
| MC1 | Classifier of C7. |
| MC2 | A combination of classifiers of C1, C2 and C3. |
| MC3 | A combination of classifiers of C4, C5 and C6. |
| MC4 | A combination of all the classifiers (C1 + C2 + C3 + C4 + C5 + C6 + C7). |

| Method | Predicted PA Type | True PA Type | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | CW | C1 | T3 | T4 | FP | VA | BA | MB | TE | T6 |
| Model Combination with CWMV | CW | 97.56 | 0 | 0 | 0 | 7.12 | 0 | 0 | 0 | 0 | 0 |
| | C1 | 0 | 92.91 | 0.86 | 0 | 0 | 2.38 | 0 | 0 | 0 | 0 |
| | T3 | 0 | 0 | 75.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | T4 | 0 | 0 | 2.59 | 94.95 | 0 | 0 | 0 | 0 | 0 | 0 |
| | FP | 2.44 | 5.51 | 2.59 | 2.02 | 92.86 | 0 | 0 | 5.77 | 0 | 0 |
| | VA | 0 | 1.57 | 18.97 | 0 | 0 | 97.62 | 0 | 1.92 | 4.55 | 0 |
| | BA | 0 | 0 | 0 | 3.03 | 0 | 0 | 95.56 | 11.54 | 0 | 1.67 |
| | MB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 80.77 | 0 | 0 |
| | TE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 95.45 | 0 |
| | T6 | 0 | 0 | 0 | 0 | 0 | 0 | 4.44 | 0 | 0 | 98.33 |
| SVM | CW | 70.21 | 0.88 | 0 | 0 | 16.67 | 0 | 0 | 0 | 0 | 0 |
| | C1 | 21.28 | 90.27 | 1.89 | 0 | 1.67 | 9.09 | 0 | 0 | 0 | 0 |
| | T3 | 0 | 0 | 78.30 | 0 | 0 | 7.27 | 0 | 0 | 0 | 0 |
| | T4 | 0 | 0 | 0 | 90.48 | 0 | 1.82 | 0 | 2.13 | 0 | 0 |
| | FP | 8.51 | 5.31 | 0.94 | 0.95 | 81.67 | 5.45 | 0 | 8.51 | 0 | 0 |
| | VA | 0 | 3.54 | 17.92 | 0.95 | 0 | 76.36 | 0 | 2.13 | 0 | 0 |
| | BA | 0 | 0 | 0 | 7.62 | 0 | 0 | 100.0 | 0 | 4.55 | 3.17 |
| | MB | 0 | 0 | 0.94 | 0 | 0 | 0 | 0 | 87.23 | 0 | 0 |
| | TE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 95.45 | 0 |
| | T6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 96.83 |
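Each true-PA-type column of the confusion table sums to roughly 100, so the diagonal entries can be read as per-class recognition rates in percent. The sketch below, under that assumption, compares the two methods class by class and takes an unweighted mean of the diagonal. That mean is only an illustrative summary: it is not an overall accuracy reported by the source, since the per-class sample counts are not given here. All variable names are hypothetical.

```python
# Diagonal entries (%) copied from the confusion table, in the column order
# CW, C1, T3, T4, FP, VA, BA, MB, TE, T6.
PA_TYPES = ["CW", "C1", "T3", "T4", "FP", "VA", "BA", "MB", "TE", "T6"]
CWMV_DIAGONAL = [97.56, 92.91, 75.00, 94.95, 92.86, 97.62, 95.56, 80.77, 95.45, 98.33]
SVM_DIAGONAL = [70.21, 90.27, 78.30, 90.48, 81.67, 76.36, 100.0, 87.23, 95.45, 96.83]


def unweighted_mean(values):
    """Arithmetic mean that treats every PA type equally."""
    return sum(values) / len(values)


cwmv_mean = unweighted_mean(CWMV_DIAGONAL)
svm_mean = unweighted_mean(SVM_DIAGONAL)

# PA types for which the single SVM has the higher diagonal entry.
svm_better = [
    pa_type
    for pa_type, combined, single in zip(PA_TYPES, CWMV_DIAGONAL, SVM_DIAGONAL)
    if single > combined
]

print(f"CWMV mean: {cwmv_mean:.2f}  SVM mean: {svm_mean:.2f}")
print("SVM higher for:", svm_better)
```

Read this way, the model combination has the higher diagonal entry for most PA types, while the single SVM remains higher for T3, BA and MB.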

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mo, L.; Zeng, L.; Liu, S.; Gao, R.X. Multi-Sensor Activity Monitoring: Combination of Models with Class-Specific Voting. Information 2019, 10, 197. https://doi.org/10.3390/info10060197