Abstract

The least squares support vector machine (LSSVM) is a popular data-driven modeling method that performs well and has been applied successfully in a wide range of applications. In this paper, we propose a novel coupled least squares support vector ensemble machine (C-LSSVEM). The proposed coupled ensemble improves robustness and yields better classification performance than a single-model approach. C-LSSVEM selects appropriate kernel types and kernel parameters through a coupling strategy in which a set of classifiers is trained simultaneously, and it minimizes the total loss of the ensemble in kernel space. We thereby form an ensemble regressor by co-optimizing and weighting the base regressors. Experiments on artificial datasets, UCI classification and regression datasets, handwritten digit datasets and the NWPU-RESISC45 dataset indicate that C-LSSVEM achieves lower regression loss and higher classification accuracy than the selected state-of-the-art regression and classification techniques.

1. Introduction

The least squares support vector machine (LSSVM), developed by Suykens et al. [3], is a variant of the standard support vector machine (SVM) [1,2]. LSSVM has been applied successfully in many studies, including nonlinear optimal control, classification and regression, as well as real-world pattern recognition problems such as image classification, visual tracking and fault detection [4,5,6,7]. LSSVM uses equality constraints instead of inequality constraints. As a result, its Karush–Kuhn–Tucker (KKT) conditions form a system of linear equations with a closed-form solution, whereas conventional SVMs solve a quadratic programming (QP) problem iteratively. Training an LSSVM is therefore simpler than training an SVM. Furthermore, LSSVM is easy to construct and can avoid over-fitting to achieve high generalization performance, which has made it popular and widely used. Recent work has also improved the robustness of LSSVM. For instance, Lu et al. [8] proposed a robust spatiotemporal LSSVM modeling method for a distributed parameter system (DPS) with disturbances, in which a spatial kernel function is first constructed to describe the nonlinear relation among spatial positions. Liu et al. [9] proposed WLSSVM-PTS, a robust method based on weighted LSSVM and penalized trimmed squares, which achieves robust regression in noisy environments by assigning a weight to each training sample.

Despite its computational advantages and attractive features, LSSVM has some drawbacks. For example, its performance is sensitive to the choice of kernel type and kernel parameters, and selecting a kernel function can be very difficult given the wide diversity of available kernels. Moreover, optimizing the parameters is computationally demanding because of the repeated evaluations required by cross-validation procedures [10]. To address this problem, we use an ensemble model. An ensemble model combines several weak learners to form a strong learner. Well-known ensemble models include Random Forest (RF) [11,12], Gradient Boosting [13,14] and Tree Regression [15,16].

Motivated by these developments, we propose a novel coupled least squares support vector ensemble machine (C-LSSVEM). The proposed coupled ensemble improves robustness and yields better classification performance than a single-model approach. C-LSSVEM can choose appropriate kernel types and kernel parameters, and it minimizes the total loss of the ensemble in kernel space. We thereby form an ensemble regressor by co-optimizing and weighting base kernel regressors. To improve on the robustness of a single model, the coupling idea is used to train the base models of the ensemble simultaneously. The proposed method is related to, but different from, existing coupled methods in face recognition [17,18], artificial neural networks [19,20] and partial least squares [21,22]. Furthermore, C-LSSVEM differs from and improves on [23] by integrating the coupling strategy into the optimization of the base regressors.

To the best of our knowledge, this is the first coupled ensemble framework for LSSVM. The main contributions of this paper are as follows:

- The proposed model uses an ensemble to choose suitable kernel types and kernel parameters, and it minimizes the total loss of the ensemble in kernel space. We thereby form an ensemble regressor by co-optimizing and weighting base kernel regressors.

- Our method trains the base models with a coupling strategy: the local minimizers of the individual base models are tied together so that they solve the training optimization problem jointly. This makes the base models more robust and improves classification performance.

- Experiments on artificial datasets, UCI datasets and handwritten digit datasets indicate that the proposed model achieves lower regression loss and higher classification accuracy than the state-of-the-art methods compared. Additionally, we test our model on the NWPU-RESISC45 dataset using deep features extracted with AlexNet and VGGNet, where it also achieves the highest classification accuracy among the compared methods.

2. Related Work

LSSVM has been addressed in many prior studies. In this section, we introduce related work on LSSVM and ensemble models.

2.1. Least Squares Support Vector Machine

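LSSVM follows the same classification principle as SVM but differs in how the hyperplane is obtained. SVM finds the hyperplane by solving a quadratic programming problem with inequality constraints, whereas LSSVM replaces these with equality constraints and uses a squared-error loss. This change in the structure of the loss function reduces training to solving a linear system, which greatly reduces the computational effort. LSSVM then uses this hyperplane to fit the sample points. LSSVM is generally used for optimal control, classification and regression problems [3,24]; its regression form is called LSSVR. LSSVR approximates a function from a given training sample $\{(x_i, y_i)\}_{i=1}^{N}$, with $x_i \in \mathbb{R}^d$ and $y_i \in \mathbb{R}$. The regression function is written in the feature space as

$$f(x) = w^\top \varphi(x) + b, \tag{1}$$

where $w$ is the weight vector, $b \in \mathbb{R}$ is the bias and $\varphi(\cdot)$ is the mapping to the high-dimensional feature space. The optimization problem of LSSVR is

$$\min_{w,\, b,\, e} \; J(w, e) = \frac{1}{2} w^\top w + \frac{\gamma}{2} \sum_{i=1}^{N} e_i^2 \tag{2}$$

subject to

$$y_i = w^\top \varphi(x_i) + b + e_i, \quad i = 1, \dots, N, \tag{3}$$

where $e_i$ is the error on the $i$-th sample and $\gamma > 0$ is the regularization parameter.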
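As a minimal sketch of why LSSVR training reduces to one linear solve, the NumPy code below solves the standard dual of this problem, assuming the formulation of Suykens et al. [3] with squared errors $e_i$ and regularization parameter $\gamma$ (called `reg` in the code). Eliminating $w$ and $e$ from the KKT conditions gives the system $\begin{bmatrix} 0 & \mathbf{1}^\top \\ \mathbf{1} & K + I/\gamma \end{bmatrix} \begin{bmatrix} b \\ \alpha \end{bmatrix} = \begin{bmatrix} 0 \\ y \end{bmatrix}$ and the predictor $f(x) = \sum_i \alpha_i k(x, x_i) + b$. The RBF kernel, its width, the toy data and all helper names are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def rbf_gram(A, B, gamma=1.0):
    """Kernel matrix with entries exp(-gamma * ||a_i - b_j||^2)."""
    sq = (A**2).sum(axis=1)[:, None] + (B**2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))   # clip round-off negatives

def lssvr_fit(K, y, reg):
    """Solve the LSSVR KKT system for the dual weights alpha and the bias b."""
    n = K.shape[0]
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = 1.0                    # first row: sum_i alpha_i = 0
    A[1:, 0] = 1.0                    # bias column
    A[1:, 1:] = K + np.eye(n) / reg   # K + I / gamma
    rhs = np.concatenate(([0.0], y))
    sol = np.linalg.solve(A, rhs)     # a single linear solve, no QP
    return sol[1:], sol[0]

def lssvr_predict(K_new, alpha, b):
    """f(x_j) = sum_i alpha_i k(x_j, x_i) + b, where K_new[j, i] = k(x_j, x_i)."""
    return K_new @ alpha + b

# Toy usage (hypothetical data): noisy samples of sin(x).
rng = np.random.default_rng(0)
X = np.sort(rng.uniform(-3.0, 3.0, size=(50, 1)), axis=0)
y = np.sin(X[:, 0]) + 0.1 * rng.standard_normal(50)
reg = 10.0
K = rbf_gram(X, X, gamma=1.0)
alpha, b = lssvr_fit(K, y, reg)
y_fit = lssvr_predict(K, alpha, b)
```

Because every sample carries an equality constraint, the training residuals satisfy $y_i - f(x_i) = \alpha_i / \gamma$, so in general every training point receives a nonzero dual weight; this is the price LSSVM pays for replacing the QP with a linear system.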

Recent research has extended the LSSVM method. For example, Zheng et al. [25] proposed a model that combines the wavelet technique and LSSVM with an improved PSO to forecast dissolved gases in oil-immersed transformers. Wen et al. [26] presented a method that integrates machine learning and complexity theory to assess node relevance in complex networks using LSSVM, and their experimental results show the accuracy and efficacy of the method.

2.2. Ensemble Regression

Ensemble learning is a machine learning paradigm in which multiple models, such as decision trees, neural networks and SVMs, are combined to solve a particular problem [27]. Typical ensemble methods include Adaboost [28], random forests [29] and gradient boosted machines [30]. All these methods encourage diversity among the base learners to some extent, so that individual errors compensate for one another and the ensemble achieves better expected performance.

Adaboost is a common iterative ensemble algorithm [31] that trains a new classifier on the training dataset in each iteration and then classifies all samples to assess the importance of each sample. Misclassified samples receive higher weights in the next round of training. The process stops when the error rate is small enough or a maximum number of iterations is reached. Moghimi et al. [32] proposed a vehicle detection method, based on the Viola–Jones boosting technique, that locates vehicles and estimates their size in digital images. Their experimental results showed that the accuracy, completeness and quality of their method are better than those of previous techniques. Yin et al. [33] proposed a video text localization method based on Adaboost. Their experimental results showed that the method not only localizes text of various fonts, sizes and colors in video images effectively but also does so quickly and accurately enough to meet the requirements of video text localization.
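The reweighting step described above can be made concrete with one round of discrete (binary) AdaBoost in the Freund–Schapire form. This is a minimal sketch, assuming labels in {−1, +1}; the function name and toy labels are hypothetical. The weak classifier receives the weight α = ½ ln((1 − ε)/ε), where ε is its weighted error; each misclassified sample's weight is multiplied by e^α, each correct one's by e^−α, and the weights are renormalized.

```python
import numpy as np

def adaboost_reweight(w, y, pred):
    """One round of discrete AdaBoost for labels in {-1, +1}.

    w    : current sample weights (non-negative, summing to 1)
    y    : true labels
    pred : predictions of the weak classifier trained in this round
    Returns the classifier weight alpha and the renormalized sample weights.
    """
    miss = pred != y
    eps = np.clip(np.sum(w[miss]), 1e-12, 1 - 1e-12)   # weighted error, kept away from 0 and 1
    alpha = 0.5 * np.log((1.0 - eps) / eps)             # weight of this weak classifier
    w_new = w * np.exp(-alpha * y * pred)               # misclassified samples grow
    return alpha, w_new / w_new.sum()

y = np.array([1, 1, -1, -1, 1])
pred = np.array([1, -1, -1, -1, 1])      # a single mistake, on sample 1
w0 = np.full(5, 0.2)                     # uniform initial weights
alpha, w1 = adaboost_reweight(w0, y, pred)
```

With one mistake out of five uniformly weighted samples (ε = 0.2), α = ln 2, and after renormalization the misclassified sample carries half of the total weight (0.5), while each correct sample drops to 0.125.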

The random forest, proposed by Breiman [29], is an ensemble approach that can also be viewed as a form of nearest neighbor predictor. It uses multiple decision trees to train on and predict a sample. Melville et al. [34] presented a random forest classification approach for identifying and mapping three types of lowland native grassland communities in the Tasmanian Midlands region. The results indicated that remote sensing is a viable method for identifying lowland native grassland communities in the Tasmanian Midlands, and that repeated classification and statistical significance testing can be used to identify optimal datasets for vegetation community mapping. Jog et al. [35] presented a supervised random forest image synthesis approach, called REPLICA, that learns a nonlinear regression to predict the intensities of alternate tissue contrasts given specific input tissue contrasts.

Gradient boosting is an ensemble technique in which the predictors are built sequentially rather than independently. It is one of the most powerful techniques for building predictive models. Li et al. [36] used an extreme gradient boosting regression tree model to analyze Twitter signals as a proxy for user sentiment and to predict price fluctuations of a small-cap alternative cryptocurrency, ZClassic. Their model is the first academic proof of concept that social media platforms such as Twitter can serve as a powerful social signal for predicting price movements in the highly speculative alternative cryptocurrency ("alt-coin") market. More recently, Touzani et al. [37] presented an energy consumption baseline modeling method based on a gradient boosting machine and assessed it with testing procedures on a large dataset of 410 commercial buildings. The results showed that the gradient boosting machine improved the R-squared prediction accuracy and the CV(RMSE) in more than 80 percent of the cases compared with an industry best-practice model based on piecewise linear regression and with a random forest algorithm.
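To illustrate the sequential construction, the sketch below implements squared-loss gradient boosting with one-split regression trees (stumps) on a single feature. It is a minimal illustration, not the XGBoost or gradient boosting machine implementations used in [36,37]; the learning rate, number of rounds and toy data are assumptions. Each new stump is fitted to the current residuals, which are the negative gradient of the loss ½(y − f)², and is added with shrinkage.

```python
import numpy as np

def fit_stump(x, r):
    """Least-squares regression stump (one split) on a 1-D feature x for targets r."""
    order = np.argsort(x)
    xs, rs = x[order], r[order]
    best_sse, thr, lv, rv = np.inf, xs[0], rs.mean(), rs.mean()
    for k in range(1, len(xs)):
        if xs[k] == xs[k - 1]:
            continue                                   # cannot split between equal values
        left, right = rs[:k], rs[k:]
        sse = ((left - left.mean())**2).sum() + ((right - right.mean())**2).sum()
        if sse < best_sse:
            best_sse, thr = sse, 0.5 * (xs[k - 1] + xs[k])
            lv, rv = left.mean(), right.mean()

    def predict(z):
        return np.where(z <= thr, lv, rv)
    return predict

def gradient_boost(x, y, n_rounds=50, lr=0.1):
    """Squared-loss gradient boosting; returns the ensemble and per-round training MSE."""
    f0 = float(y.mean())
    pred = np.full(len(y), f0)
    stumps, history = [], []
    for _ in range(n_rounds):
        residual = y - pred              # negative gradient of 0.5 * (y - f)^2
        h = fit_stump(x, residual)
        pred = pred + lr * h(x)          # each stump corrects its predecessors
        stumps.append(h)
        history.append(float(np.mean((y - pred)**2)))

    def model(z):
        return f0 + lr * sum(h(z) for h in stumps)
    return model, history

rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, 200)
y = np.sin(2 * np.pi * x) + 0.1 * rng.standard_normal(200)
model, history = gradient_boost(x, y)
```

Because each stump is a least-squares fit to the current residuals, adding it with a learning rate in (0, 1] never increases the training MSE, so `history` is non-increasing.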

3. The Proposed Method

In this section, we describe the novel coupled least squares support vector ensemble machine (C-LSSVEM). The following subsections present kernel theory and the proposed model, respectively.

3.1. Kernel Theory

Kernel methods map the data into a high-dimensional feature space, where each coordinate corresponds to one feature of the data items. In that space, a variety of methods can be used to find relations in the data. Since the mapping can be quite general (e.g., not necessarily linear), the relations found in this way are accordingly very general. Different kernels have been proposed to suit different situations and applications. A Mercer kernel is a continuous, symmetric and positive semidefinite function: for any finite set of distinct points $x_1, \dots, x_N$, the matrix $[k(x_i, x_j)]_{i,j=1}^{N}$ is positive semidefinite.

The basic properties of kernel functions follow from Mercer's theorem [38], and admissible kernel functions must satisfy Mercer's conditions. This study uses the polynomial function, the radial basis function (RBF) and the Gaussian function as kernel functions:

- The Polynomial kernel

- The RBF kernel (Radial Basis Function)

- The Gaussian kernel

where a, b and c are the polynomial kernel parameters used in the experiments, while the RBF and Gaussian kernels are frequently used in practice because they can generate nonparametric classification functions. $x_i$ and $x_j$ represent feature vectors in the input space, and $K$ denotes the Gram matrix obtained from the samples, which is symmetric and positive semidefinite:

$$K = \begin{bmatrix} k(x_1, x_1) & \cdots & k(x_1, x_N) \\ \vdots & \ddots & \vdots \\ k(x_N, x_1) & \cdots & k(x_N, x_N) \end{bmatrix}. \tag{7}$$
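The three kernels and the Mercer condition can be checked numerically on a finite sample. The sketch below assumes common forms for the kernels (the paper's exact parameterizations may differ): polynomial (a·⟨x_i, x_j⟩ + b)^c, RBF exp(−γ‖x_i − x_j‖²) and Gaussian exp(−‖x_i − x_j‖²/(2σ²)). It builds each Gram matrix and verifies that it is symmetric with no negative eigenvalues up to round-off; the parameter values and random data are illustrative.

```python
import numpy as np

def sq_dists(A, B):
    """Pairwise squared Euclidean distances between the rows of A and B."""
    d = (A**2).sum(axis=1)[:, None] + (B**2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.maximum(d, 0.0)                  # clip tiny negatives from round-off

def poly_kernel(A, B, a=1.0, b=1.0, c=2):
    # assumed form: (a * <x_i, x_j> + b) ** c
    return (a * A @ B.T + b) ** c

def rbf_kernel(A, B, gamma=0.5):
    # assumed form: exp(-gamma * ||x_i - x_j||^2)
    return np.exp(-gamma * sq_dists(A, B))

def gaussian_kernel(A, B, sigma=1.0):
    # assumed form: exp(-||x_i - x_j||^2 / (2 * sigma^2))
    return np.exp(-sq_dists(A, B) / (2.0 * sigma**2))

def is_psd(K, tol=1e-8):
    """Finite-sample Mercer check: symmetric, no eigenvalue below -tol * scale."""
    scale = max(1.0, float(np.abs(K).max()))
    return bool(np.allclose(K, K.T) and np.linalg.eigvalsh(K).min() >= -tol * scale)

rng = np.random.default_rng(0)
X = rng.standard_normal((30, 4))
grams = {name: kernel(X, X) for name, kernel in
         [("poly", poly_kernel), ("rbf", rbf_kernel), ("gauss", gaussian_kernel)]}
```

Under these assumed forms the RBF and Gaussian kernels coincide when γ = 1/(2σ²), which is why the sample values γ = 0.5 and σ = 1 give the same matrix. A symmetric matrix such as [[0, 1], [1, 0]] fails the check because it has the eigenvalue −1.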

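Given a set of labeled examples $\{(x_i, y_i)\}_{i=1}^{N}$, the standard framework estimates an unknown function by minimizing:

$$f^{*} = \arg\min_{f \in \mathcal{H}_K} \frac{1}{N} \sum_{i=1}^{N} V(x_i, y_i, f) + \lambda \lVert f \rVert_K^2, \tag{8}$$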

where $V$ is a loss function, such as the squared loss $(y_i - f(x_i))^2$ for regularized least squares or the hinge loss $\max(0, 1 - y_i f(x_i))$ for SVM. $\lVert f \rVert_K^2$ imposes a smoothness condition on possible solutions in the reproducing kernel Hilbert space $\mathcal{H}_K$, and $\lambda > 0$ trades off the two terms. Moreover, the classical representer theorem states that the solution of this minimization problem exists and can be written as:

$$f^{*}(x) = \sum_{i=1}^{N} \alpha_i k(x_i, x). \tag{9}$$

Hence, the problem reduces to optimizing over the finite-dimensional space of coefficients $\alpha_i$, which is the algorithmic basis of SVM, regularized least squares and other regression methods.
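For the squared loss, this reduction to finitely many coefficients gives a closed form. Substituting $f = \sum_j \alpha_j k(x_j, \cdot)$ into the regularized objective with the squared loss yields $\frac{1}{N}\lVert y - K\alpha \rVert^2 + \lambda\, \alpha^\top K \alpha$, which is minimized by $\alpha = (K + \lambda N I)^{-1} y$. The minimal sketch below, with a Gaussian kernel, λ and toy data chosen as assumptions, computes these coefficients.

```python
import numpy as np

def gaussian_gram(A, B, sigma=1.0):
    """Gram matrix with entries exp(-||a_i - b_j||^2 / (2 * sigma^2))."""
    d = (A**2).sum(axis=1)[:, None] + (B**2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(d, 0.0) / (2.0 * sigma**2))

def rls_coefficients(K, y, lam):
    """Representer coefficients for regularized least squares in the RKHS.

    Minimizes (1/N) * ||y - K alpha||^2 + lam * alpha^T K alpha,
    whose stationarity condition is (K + lam * N * I) alpha = y.
    """
    n = K.shape[0]
    return np.linalg.solve(K + lam * n * np.eye(n), y)

def objective(K, y, alpha, lam):
    """Regularized empirical risk evaluated at f = sum_i alpha_i k(x_i, .)."""
    r = y - K @ alpha
    return r @ r / len(y) + lam * alpha @ K @ alpha

rng = np.random.default_rng(2)
X = rng.uniform(-2.0, 2.0, size=(40, 1))
y = np.cos(X[:, 0]) + 0.05 * rng.standard_normal(40)
lam = 1e-3
K = gaussian_gram(X, X)
alpha = rls_coefficients(K, y, lam)
```

The objective is a convex quadratic in α, so random perturbations of the returned coefficients should never lower it; predicting at a new point then needs only the kernel values between that point and the N training samples.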

3.2. Coupled Least Squares Support Vector Ensemble Machine (C-LSSVEM)

In this subsection, we introduce our coupled least squares support vector ensemble method, in which diverse kernel types and kernel parameters are used to construct the base regressors. The proposed kernel ensemble method is presented as follows.

Diverse kernels are constructed from the data samples. Suppose we are given a training set X with regression targets and a testing set without regression targets, in which each training sample is paired with its real value. N is the number of training samples, and the number of testing samples is defined analogously. Each base regressor is built as a kernel regressor.

Different kernel types and parameter choices produce different regression results. To obtain a better regression ensemble model, the base kernel regressors are combined in our coupled least squares support vector ensemble framework, where they are coupled and weighted as described below. To simplify the whole model, we introduce a new variable , which equals . The proposed coupled least squares support vector ensemble machine (C-LSSVEM) model is as follows:

where L is the number of base regressors; w denotes the weight vector of the individual base kernel regression models, subject to its weight constraint; the i-th base Gram matrix enters the i-th base regressor; the coupling error is defined between the (i + 1)-th and the i-th base regressors; a weight column vector assigns a weight to every training sample; and a bias term is associated with the i-th base regressor.

Taking the derivatives of Equation (11) with respect to the model variables and setting them to zero, we obtain the following:

To derive

To derive

To derive

To derive

Substituting the resulting expressions into Equations (14) and (15), we obtain the following equations:

Since our approach aims to select suitable kernel types and parameters for the individual kernel regressors and to obtain an optimal weight vector for the base regressors, we minimize the loss that measures the performance of the base kernel regression models.

We replace each weight w_i with w_i^r, where r is a parameter that controls the weights of the multiple kernels. The reason is that a linear program attains its optimal solution at an extreme point of the feasible region: with r = 1, either w_i = 1 or w_i = 0, so only a single kernel is chosen in the optimal solution. This contradicts our goal of exploiting the rich complementary information of multiple kernels, since the optimal result should balance several kernels. The value of r is set manually to obtain a suitable w. We can further derive that:

where the per-kernel term denotes the loss of each kernel. According to Equation (18), the optimal weights of the ensemble can be obtained, with r serving as the parameter that yields a suitable w. We obtain the ensemble regression model by combining the base kernel models linearly, and the proposed kernel ensemble regressor is constructed using Formula (19).
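Assuming Equation (18) is the closed-form solution of the weighting problem described above, namely minimizing Σ_i w_i^r ℓ_i subject to Σ_i w_i = 1 and w_i ≥ 0 with r > 1, where ℓ_i is the loss of the i-th base kernel regressor (the symbol ℓ_i is ours), setting the derivative of the Lagrangian to zero gives r·w_i^(r−1)·ℓ_i = const, hence w_i ∝ ℓ_i^(−1/(r−1)). The sketch below implements that solution and the linear combination Σ_i w_i f_i(x); the exact form of Formula (19) is also an assumption here, and the per-kernel losses are hypothetical.

```python
import numpy as np

def ensemble_weights(losses, r=2.0):
    """Minimizer of sum_i w_i**r * loss_i over the simplex (sum w = 1, w >= 0), r > 1.

    Losses must be strictly positive.
    """
    losses = np.asarray(losses, dtype=float)
    if r <= 1.0:
        raise ValueError("r must exceed 1; at r = 1 the optimum is a single kernel")
    w = losses ** (-1.0 / (r - 1.0))     # from r * w_i**(r - 1) * loss_i = const
    return w / w.sum()                   # enforce sum_i w_i = 1

def ensemble_predict(base_preds, w):
    """Weighted linear combination of L base predictions; base_preds has shape (L, n)."""
    return np.asarray(w) @ np.asarray(base_preds, dtype=float)

losses = [0.5, 1.0, 2.0]                 # hypothetical per-kernel losses
w = ensemble_weights(losses, r=2.0)      # proportional to 1 / loss when r = 2
```

Smaller losses receive larger weights. As r approaches 1 the weights concentrate on the best kernel (the single-kernel case discussed above), and as r grows they approach a uniform average of the base regressors.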

The C-LSSVEM method is summarized in Algorithm 1.

Algorithm 1. The proposed C-LSSVEM method.

4. Experimental Results

This section demonstrates the generalization advantage of the proposed coupled ensemble multiple-kernel method (C-LSSVEM) over other regression methods, namely ridge regression (RR), support vector regression (SVR), random forest (RF), gradient boosting regression (GBR), decision tree regression (DTR) and extreme gradient boosting (XGBoost) [39]. To validate the performance of the proposed method, an artificial dataset and UC Irvine (UCI) regression and classification datasets are used. The experimental settings and results on the different datasets are discussed in the following subsections.

4.1. Experimental Settings

In each experiment, two-thirds of the data in each dataset are used for training and one-third for testing. The training and testing data of each dataset are selected randomly, and each experiment is repeated 10 times on each dataset.
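The evaluation protocol (random two-thirds/one-third splits, repeated 10 times and reported as mean ± standard deviation) can be sketched as follows. The ridge regressor, the seed and the synthetic data are placeholders chosen for illustration; any `fit`/`predict` pair, including an LSSVM ensemble, could be plugged in. Whether the original experiments stratified or otherwise constrained the splits is not stated, so plain random permutations are assumed.

```python
import numpy as np

def repeated_holdout(X, y, fit, predict, n_runs=10, train_frac=2/3, seed=0):
    """Test MSE over repeated random train/test splits: (mean, std, per-run list)."""
    rng = np.random.default_rng(seed)
    n = len(y)
    n_train = int(round(train_frac * n))
    scores = []
    for _ in range(n_runs):
        perm = rng.permutation(n)                 # a fresh random split each run
        train, test = perm[:n_train], perm[n_train:]
        model = fit(X[train], y[train])
        residual = y[test] - predict(model, X[test])
        scores.append(float(np.mean(residual**2)))
    return float(np.mean(scores)), float(np.std(scores)), scores

# Placeholder model: ridge regression with an intercept column.
def ridge_fit(X, y, lam=1e-2):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return np.linalg.solve(Xb.T @ Xb + lam * np.eye(Xb.shape[1]), Xb.T @ y)

def ridge_predict(w, X):
    return np.hstack([X, np.ones((len(X), 1))]) @ w

rng = np.random.default_rng(3)
X = rng.standard_normal((90, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.standard_normal(90)
mean_mse, std_mse, runs = repeated_holdout(X, y, ridge_fit, ridge_predict)
print(f"MSE = {mean_mse:.4f} ± {std_mse:.4f}")
```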

The proposed method couples several LSSVM models in an ensemble. The polynomial kernel in Equation (4) is used as the elementary model of the ensemble for all datasets. This kernel has three parameters (a, b and c), and different parameter values lead to different experimental results. Specifically, we set parameters a, b, and c as , and respectively. The parameter L in Equation (10) is the number of base polynomial kernel models. For the generalization ability of an ensemble regressor, it is helpful to have enough base models; however, too many base models may reduce the generalization capacity of the ensemble and lead to lower classification accuracy. Therefore, L is carefully set as in our experiments, and 20 combinations of the three parameters (a, b and c) are selected.

4.2. Experimental Results

In this section, we discuss the overall performance of the proposed C-LSSVEM method and all the comparative methods on the artificial dataset and the UCI regression and classification datasets. The results are reported in Table 1, Table 2 and Table 3, with the best result on each dataset highlighted in bold.

Table 1.

MSE results (Average ± Std) of different methods on eight UCI datasets.

Table 2.

MAE results (Average ± Std) of different methods on eight UCI datasets.

Table 3.

Classification accuracy results (%) (Average ± Std) of different methods on five UCI datasets.

4.2.1. Artificial Dataset

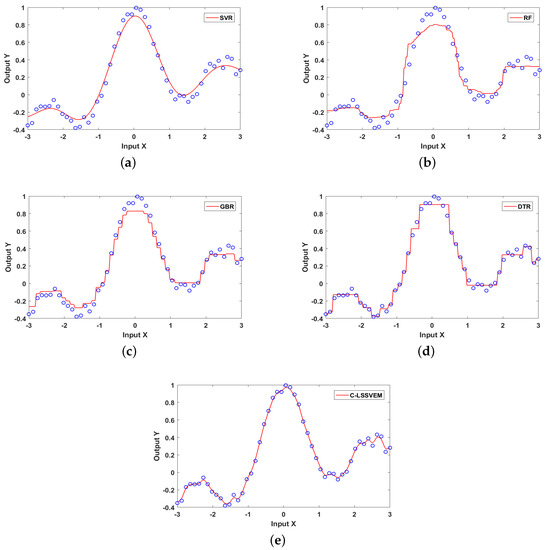

The artificial dataset is used to illustrate the performance of the proposed method and the comparative methods visually. Since sampling the input space in this way is impractical in most real cases, we use it only for demonstration purposes, to visualize the regression effects in Figure 1.

Figure 1.

The fitting of five different methods on artificial dataset. (a) SVR; (b) RF; (c) GBR; (d) DTR; (e) C-LSSVEM.

Fifty data points are generated from a scalar function corrupted by observation noise, using the following model:

We visualize the regression effects of five regression methods, namely SVR, RF, GBR, DTR and C-LSSVEM. For all methods, the data points are spread uniformly over the x-axis, as shown in the experimental graph, and the additive term in the model denotes the noise.

As Figure 1 shows, the fit of the proposed C-LSSVEM method is better than that of the other comparative methods in this experiment, and its loss is the lowest among the regression methods above. Like SVR, the GBR and DTR methods fit well over much of the x-axis but poorly midway along it. Our proposed method (Figure 1e) shows better regression performance than the comparative methods, which we attribute to the combined benefits of the ensemble and kernel methods.

4.2.2. UCI Datasets

(1) Regression

Several regression datasets with diverse features are used to validate the performance of our proposed model. We selected eight publicly available benchmark datasets from the UCI repository, namely Abalone, Bodyfat, Concrete, Mg, Mpg, RedWine, Space and Housing. A detailed summary of these datasets is presented in Table 4 [40]. The evaluation criteria are the Mean Absolute Error (MAE) and the Mean Squared Error (MSE), given as:

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert, \qquad \mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2,$$

where $y_i$ and $\hat{y}_i$ are the real output and the model output, respectively, and $n$ is the number of samples. The mean and standard deviation of the MSE and MAE are used to evaluate the performance of the proposed method.
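A direct transcription of the two criteria, with a small worked example (the numbers are illustrative only): for errors 0.5, −0.5, 0 and −1, MAE = (0.5 + 0.5 + 0 + 1)/4 = 0.5 and MSE = (0.25 + 0.25 + 0 + 1)/4 = 0.375.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error: (1/n) * sum_i (y_i - yhat_i)^2."""
    d = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(d**2))

def mae(y_true, y_pred):
    """Mean absolute error: (1/n) * sum_i |y_i - yhat_i|."""
    d = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(d)))

y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(mse(y_true, y_pred), mae(y_true, y_pred))   # 0.375 0.5
```

MSE squares each error, so the single error of 1 contributes two-thirds of the MSE but only half of the MAE; this is why the two tables can rank methods differently on datasets with a few large errors.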

Table 4.

Descriptions of the UCI datasets.

Table 1 presents the average MSE and the corresponding standard deviations after running each method ten times. The results show that our approach performs much better than all the comparative methods. On the Abalone dataset, for example, the MSE of our approach is lower than that of RR by 1.042, SVR by 1.853, RF by 0.401, GBR by 0.656, DTR by 1.167 and XGBoost by 1.28. On the Concrete dataset, most approaches perform relatively poorly; our approach nevertheless outperforms RF, the next-best method on this dataset, by 3.022, and the worst method, SVR, by 238.6. The standard deviations also indicate that our approach has the best stability, as it consistently records the lowest deviations. The lower MSE and standard deviation values indicate that the proposed approach handles nonlinear datasets well through kernel methods and obtains stable regression performance through the coupling of ensemble methods.

We further demonstrate the merits of coupling kernel and ensemble methods using MAE as the performance measure. Table 2 reports the results of the six regression approaches on the selected benchmark datasets. Our proposed C-LSSVEM again consistently outperforms all the comparative methods. It records the best result of 1.6580 on the Abalone dataset, ahead of the RF approach by 0.0206. C-LSSVEM also attains the best performance of 12.873 and 7.074 on the Bodyfat and Concrete datasets, respectively, whereas SVR has the poorest performance, 15.673, on the Concrete dataset.

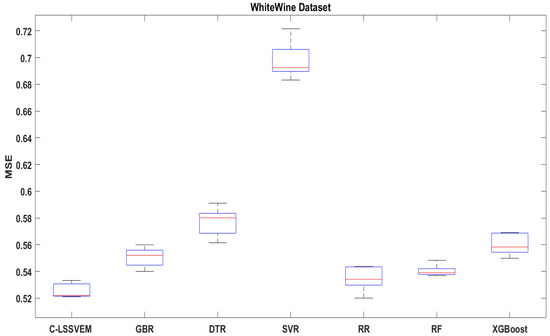

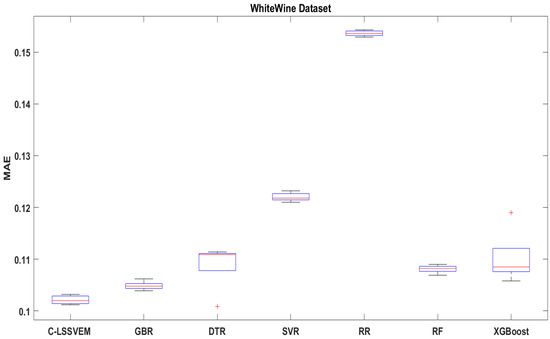

Using box plots of MSE and MAE on the WhiteWine dataset, a regression dataset of 4898 instances with 11 features collected from variants of the Portuguese “Vinho Verde” wine, we again show the superiority of our approach over the comparative methods in Figure 2 and Figure 3.

Figure 2.

The MSE box plot of six regression methods on the WhiteWine dataset.

Figure 3.

The MAE box plot of six regression methods on the WhiteWine dataset.

From the results in Figure 2 and Figure 3, SVR and RR recorded the highest MSE and MAE, respectively, among all the comparative regression methods, which we attribute to poor parameter selection for SVR and RR. The same figures show that C-LSSVEM has the lowest MSE and MAE among all the comparative methods, making it the best-performing method. RF also performed well on the WhiteWine dataset in terms of both MAE and MSE.

From the above discussion, we conclude that the proposed C-LSSVEM approach performs well on all the UCI datasets chosen for our experiments, and that it is able to select suitable kernels and corresponding parameters, which significantly enhances regression performance.

(2) Classification

Although all the models discussed in the previous sections are regression models, we also apply them to classification tasks to further confirm the performance of the proposed C-LSSVEM. Five open datasets from UCI (http://archive.ics.uci.edu/ml/index.php), namely Breast-cancer, Pima, Sonar, Australian and German, are used. We compare our method with five algorithms: RR, SVR, RF, K-Nearest Oracles Eliminate (KNORA-E) [41] and Overall Local Accuracy (OLA) [42]. The last two are state-of-the-art techniques for dynamic classifier and ensemble selection available in DESlib. Basic information on these datasets is shown in Table 5.
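The paper's exact label encoding for applying regressors to classification is not given here. One common approach, assumed purely for illustration, encodes each class as a one-vs-rest target in {−1, +1}, fits one regressor per class and predicts the class with the largest output. The sketch uses a hypothetical ridge regressor and synthetic three-class data; any regressor with the same `fit`/`predict` shape could replace it.

```python
import numpy as np

def one_vs_rest_targets(labels, classes):
    """Column k is +1 where the label equals classes[k] and -1 elsewhere."""
    return np.where(labels[:, None] == classes[None, :], 1.0, -1.0)

def classify_by_regression(fit, predict, X_train, y_train, X_test):
    """Fit one regressor per class and return the class with the largest output."""
    classes = np.unique(y_train)
    T = one_vs_rest_targets(y_train, classes)
    scores = np.column_stack([predict(fit(X_train, T[:, k]), X_test)
                              for k in range(len(classes))])
    return classes[np.argmax(scores, axis=1)]

# Hypothetical base regressor: ridge regression with an intercept column.
def ridge_fit(X, t, lam=1e-2):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return np.linalg.solve(Xb.T @ Xb + lam * np.eye(Xb.shape[1]), Xb.T @ t)

def ridge_predict(w, X):
    return np.hstack([X, np.ones((len(X), 1))]) @ w

# Synthetic three-class data: well-separated Gaussian blobs.
rng = np.random.default_rng(4)
centers = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])
y = np.repeat(np.arange(3), 50)
X = centers[y] + 0.5 * rng.standard_normal((150, 2))
pred = classify_by_regression(ridge_fit, ridge_predict, X, y, X)
accuracy = np.mean(pred == y)
```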

Table 5.

Descriptions of the five datasets.

Table 3 summarizes the average classification accuracies and corresponding standard deviations of the comparative methods on the selected UCI datasets, to further ascertain the efficacy of our approach. The proposed C-LSSVEM obtains higher accuracy than the other approaches on all the datasets used in the experiment. For instance, on the Breast-cancer dataset, C-LSSVEM records a mean accuracy of 99.9246%, with SVR and OLA lagging behind by 1.0992 and 1.5568 percentage points, respectively. KNORA-E has the worst performance, lagging behind our approach by 3.9757 percentage points. The standard deviations confirm that C-LSSVEM is more stable than the other baseline approaches, since it records the lowest values among the comparative models.

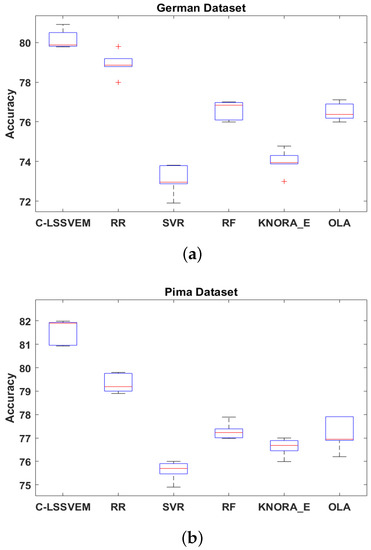

Additionally, we compare the performance of the different approaches using box plots on two datasets (German and Pima), as illustrated in Figure 4.

Figure 4.

The accuracy of six regression methods on two UCI datasets. (a) German; (b) Pima.

Figure 4 shows box plots of the performance of all models on the German and Pima datasets. Consistent with our earlier discussion, Figure 4 confirms the superior classification accuracy of our method, with C-LSSVEM ranking highest among the comparative models on both datasets. The relatively narrow boxes also confirm the stability of our model relative to the other approaches. On both the German and Pima datasets, the classical RR approach performs second best, and C-LSSVEM still outperforms it along with all the other comparative methods in our study.

4.2.3. Handwritten Digit Datasets

In this section, the handwritten digit datasets MNIST (http://www.cad.zju.edu.cn/home/dengcai/Data/data.html) and USPS (http://www.cad.zju.edu.cn/home/dengcai/Data/data.html) are used in our experiments to perform the classification task with six methods, namely Adaboost (AB) [43], RR, RF, Simple Vote Rule [44], QFWEC [45] and C-LSSVEM. Detailed descriptions are shown in Table 6, and the accuracies are reported in Table 7.

Table 6.

Descriptions of the handwritten digit datasets.

Table 7.

Classification accuracy result (%) (Average ± Std) of different methods on Handwritten digits datasets.

Compared with the other methods in Table 7, C-LSSVEM records the highest mean accuracy on both datasets: 93.8726 ± 0.3979 on MNIST and 94.0768 ± 0.5994 on USPS. On MNIST, it outperforms Adaboost by 19.6381, RR by 24.0191, RF by 5.6933, SVR by 5.9459 and QFWEC by 10.2055 percentage points. QFWEC achieves a high mean accuracy on USPS, second only to our approach, although its result on MNIST is not satisfactory. In addition, the Simple Vote Rule and RF perform well on both datasets, while Adaboost performs poorly on the USPS dataset. The standard deviations in Table 7 indicate that C-LSSVEM is the most stable of the compared methods, as it records the lowest values in our experiments. These results on USPS and MNIST show that the proposed approach is effective for handwritten digit recognition.

4.2.4. NWPU-RESISC45 Dataset

In this subsection, we test our model on a large dataset with features learned by deep networks. Deep learning can learn high-level features from data through structures composed of multiple nonlinear transformations. We therefore test our model on deep features extracted by two CNN models, AlexNet [46] and VGGNet [47], which were chosen for their strong performance in feature learning and classification. The details of these models are listed in Table 8.

Table 8.

Details of the AlexNet and VGGNet models.

The NWPU-RESISC45 dataset [48] is used in this subsection. It consists of 31,500 remote sensing images divided into 45 scene classes. Each class includes 700 images with a size of pixels in the red green blue (RGB) color space. The images were extracted by experts in remote sensing image interpretation from Google Earth (Google Inc.), which maps the Earth by superimposing images obtained from satellite imagery, aerial photography and geographic information systems (GIS) onto a 3D globe. This dataset is of the largest scale in terms of both the number of scene classes and the total number of images. Its rich image variations, large within-class diversity and high between-class similarity make it rather challenging. Compared with existing scene classification datasets, NWPU-RESISC45 has three notable characteristics: large scale, rich image variation, and high within-class diversity together with high between-class similarity. Figure 5 shows two samples of each class from this dataset.

Figure 5.

Some example images from the NWPU-RESISC45 dataset.

To perform a comprehensive comparison, five comparative methods, namely Adaboost (AB), RR, RF, Simple Vote Rule and QFWEC, are used in the experiment. The accuracy results are reported in Table 9.

Table 9.

The accuracy results (%) (Average ± Std) of different methods on deep features.

Table 9 shows that C-LSSVEM outperforms all the comparative methods on deep features from both AlexNet and VGGNet, which indicates the effectiveness of the proposed method on diverse deep features. C-LSSVEM achieves higher accuracy and shows better robustness than all the comparative models. On AlexNet deep features, Adaboost performs worst, with a mean accuracy of 53.3628, and RF and RR perform similarly, differing by 0.2004. On VGGNet deep features, Adaboost again has the lowest performance, with a mean accuracy of 51.8674. QFWEC performs fairly well on both feature sets, and the Simple Vote Rule performs better on AlexNet features than on VGGNet features. C-LSSVEM achieves classification accuracies of 67.2631 on AlexNet deep features and 64.7485 on VGGNet deep features, the best among the compared methods.

5. Conclusions

In this paper, a novel coupled least squares support vector ensemble machine is presented. We address the problem of how to combine diverse base kernel regressors. The proposed coupled ensemble model improves robustness and yields better classification performance than a single-model approach. It selects appropriate kernel types and kernel parameters through a coupling strategy with a set of classifiers, and it forms an ensemble regressor by co-optimizing and weighting the base kernel regressors. Experiments on artificial datasets, UCI classification and regression datasets, handwritten digit datasets and the NWPU-RESISC45 dataset indicate that C-LSSVEM achieves lower regression loss and higher classification accuracy than the selected state-of-the-art regression and classification techniques.

In future work, we aim to extend our model by replacing the squared loss in its objective function with other losses, such as the ε-insensitive loss and the hinge loss. We will also explore other ways to update the weights of the base kernel regressors. Furthermore, we will look for more effective ways to use end-to-end deep learning models.
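For reference, the sketch below gives elementwise definitions of the three losses involved, for a target y and a prediction f. The first is the squared loss used by least squares formulations. The second is the ε-insensitive loss of standard support vector regression. The third is the hinge loss of standard SVM classification, with labels in {-1, +1}. How these losses would enter the C-LSSVEM objective is left open. The function names and the default `eps=0.1` are illustrative assumptions.

```python
import numpy as np

def squared_loss(y, f):
    """Least squares loss (y - f)^2; every residual is penalized."""
    return (y - f) ** 2

def eps_insensitive_loss(y, f, eps=0.1):
    """epsilon-insensitive loss max(0, |y - f| - eps); residuals within eps cost nothing."""
    return np.maximum(0.0, np.abs(y - f) - eps)

def hinge_loss(y, f):
    """Hinge loss max(0, 1 - y * f) for labels y in {-1, +1}."""
    return np.maximum(0.0, 1.0 - y * f)

# Regression residuals of 0.05, 0.5 and 1.0
y_reg = np.array([1.0, 1.0, 1.0])
f_reg = np.array([1.05, 1.5, 0.0])
print(squared_loss(y_reg, f_reg))
print(eps_insensitive_loss(y_reg, f_reg))

# Classification scores: confident correct, wrong side, correct but inside the margin
y_cls = np.array([1, -1, 1])
f_cls = np.array([2.0, 0.5, 0.3])
print(hinge_loss(y_cls, f_cls))
```

Unlike the squared loss, both alternatives ignore small errors: residuals inside the ε-tube, or correctly classified points outside the margin. This is what makes their solutions sparse in standard SVMs.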

Author Contributions

X.-J.S. and D.K.W. designed the algorithm; D.K.W. performed the experiments; X.-J.S. analyzed the results and provided supervision; D.K.W. drafted the manuscript; and X.-J.S. reviewed the paper.

Funding

This work was funded in part by the National Natural Science Foundation of China (No. 61572240).

Acknowledgments

The authors thank Elias Ocquaye, Abeo Timothy Apasiba, Ernest Ganaa, and Huang Chang Bin for their kind assistance, and Rita Keddy for their encouragement.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Song, S.; Zhan, Z.; Long, Z.; Zhang, J.; Yao, L. Comparative study of SVM methods combined with voxel selection for object category classification on fMRI data. PLoS ONE 2011, 6, e17191.

- Oliveira, P.P.d.M., Jr.; Nitrini, R.; Busatto, G.; Buchpiguel, C.; Sato, J.R.; Amaro, E., Jr. Use of SVM methods with surface-based cortical and volumetric subcortical measurements to detect Alzheimer’s disease. J. Alzheimer’s Dis. 2010, 19, 1263–1272.

- Suykens, J.A.; Van Gestel, T.; De Brabanter, J. Least Squares Support Vector Machines; World Scientific: London, UK, 2002.

- Long, B.; Xian, W.; Li, M.; Wang, H. Improved diagnostics for the incipient faults in analog circuits using LSSVM based on PSO algorithm with Mahalanobis distance. Neurocomputing 2014, 133, 237–248.

- Yang, L.; Yang, S.; Li, S.; Zhang, R.; Liu, F.; Jiao, L. Coupled compressed sensing inspired sparse spatial-spectral LSSVM for hyperspectral image classification. Knowl. Based Syst. 2015, 79, 80–89.

- Mehrkanoon, S.; Suykens, J.A. Learning solutions to partial differential equations using LS-SVM. Neurocomputing 2015, 159, 105–116.

- Gao, Y.; Shan, X.; Hu, Z.; Wang, D.; Li, Y.; Tian, X. Extended compressed tracking via random projection based on MSERs and online LS-SVM learning. Pattern Recognit. 2016, 59, 245–254.

- Lu, X.; Zou, W.; Huang, M. Robust spatiotemporal LS-SVM modeling for nonlinear distributed parameter system with disturbance. IEEE Trans. Ind. Electron. 2017, 64, 8003–8012.

- Liu, J.; Wang, Y.; Fu, C.; Guo, J.; Yu, Q. A robust regression based on weighted LSSVM and penalized trimmed squares. Chaos Solitons Fractals 2016, 89, 328–334.

- An, S.; Liu, W.; Venkatesh, S. Fast cross-validation algorithms for least squares support vector machine and kernel ridge regression. Pattern Recognit. 2007, 40, 2154–2162.

- Wu, H.; Cai, Y.; Wu, Y.; Zhong, R.; Li, Q.; Zheng, J.; Lin, D.; Li, Y. Time series analysis of weekly influenza-like illness rate using a one-year period of factors in random forest regression. Biosci. Trends 2017, 11, 292–296.

- Svetnik, V.; Liaw, A.; Tong, C.; Culberson, J.C.; Sheridan, R.P.; Feuston, B.P. Random forest: A classification and regression tool for compound classification and QSAR modeling. J. Chem. Inf. Comput. Sci. 2003, 43, 1947–1958.

- Wang, Y.; Feng, D.; Li, D.; Chen, X.; Zhao, Y.; Niu, X. A mobile recommendation system based on logistic regression and Gradient Boosting Decision Trees. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 1896–1902.

- Zhang, F.; Du, B.; Zhang, L. Scene classification via a gradient boosting random convolutional network framework. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1793–1802.

- Rathore, S.S.; Kumar, S. A decision tree regression based approach for the number of software faults prediction. ACM SIGSOFT Softw. Eng. Notes 2016, 41, 1–6.

- Schmidt, U.; Jancsary, J.; Nowozin, S.; Roth, S.; Rother, C. Cascades of regression tree fields for image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 677–689.

- Lei, Z.; Li, S.Z. Coupled spectral regression for matching heterogeneous faces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1123–1128.

- Zhang, W.; Wang, X.; Tang, X. Coupled information-theoretic encoding for face photo-sketch recognition. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 513–520.

- Kalteh, A.M. Monthly river flow forecasting using artificial neural network and support vector regression models coupled with wavelet transform. Comput. Geosci. 2013, 54, 1–8.

- Adamowski, J.; Chan, H.F.; Prasher, S.O.; Sharda, V.N. Comparison of multivariate adaptive regression splines with coupled wavelet transform artificial neural networks for runoff forecasting in Himalayan micro-watersheds with limited data. J. Hydroinformat. 2012, 14, 731–744.

- Edmunds, C.W.; Hamilton, C.; Kim, K.; Andre, N.; Labbe, N. Rapid Detection of Ash and Inorganics in Bioenergy Feedstocks Using Fourier Transform Infrared Spectroscopy Coupled with Partial Least-Squares Regression. Energy Fuels 2017, 31, 6080–6088.

- Yang, T.; Zhou, R.; Jiang, D.; Fu, H.; Su, R.; Liu, Y.; Su, H. Rapid Detection of Pesticide Residues in Chinese Herbal Medicines by Fourier Transform Infrared Spectroscopy Coupled with Partial Least Squares Regression. J. Spectrosc. 2016, 2016, 1–9.

- Shen, X.J.; Dong, Y.; Gou, J.P.; Zhan, Y.Z.; Fan, J. Least squares kernel ensemble regression in Reproducing Kernel Hilbert Space. Neurocomputing 2018, 311, 235–244.

- Suykens, J.A.; Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 1999, 9, 293–300.

- Zheng, H.; Zhang, Y.; Liu, J.; Wei, H.; Zhao, J.; Liao, R. A novel model based on wavelet LS-SVM integrated improved PSO algorithm for forecasting of dissolved gas contents in power transformers. Electr. Power Syst. Res. 2018, 155, 196–205.

- Wen, X.; Tu, C.; Wu, M.; Jiang, X. Fast ranking nodes importance in complex networks based on LS-SVM method. Phys. A Stat. Mech. Appl. 2018, 506, 11–23.

- Polikar, R. Ensemble based systems in decision making. IEEE Circuits Syst. Mag. 2006, 6, 21–45.

- Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Freund, Y.; Schapire, R.E. Experiments with a new boosting algorithm. In Proceedings of the Thirteenth International Conference on Machine Learning (ICML), 1996; pp. 148–156.

- Moghimi, M.M.; Nayeri, M.; Pourahmadi, M.; Moghimi, M.K. Moving Vehicle Detection Using AdaBoost and Haar-Like Feature in Surveillance Videos. arXiv 2018, arXiv:1801.01698.

- Yin, F.; Wu, R.; Yu, X.; Sun, G. Video text localization based on Adaboost. Multimed. Tools Appl. 2018, 78, 5345–5354.

- Melville, B.; Lucieer, A.; Aryal, J. Object-based random forest classification of Landsat ETM+ and WorldView-2 satellite imagery for mapping lowland native grassland communities in Tasmania, Australia. Int. J. Appl. Earth Obs. Geoinf. 2018, 66, 46–55.

- Jog, A.; Carass, A.; Roy, S.; Pham, D.L.; Prince, J.L. Random forest regression for magnetic resonance image synthesis. Med. Image Anal. 2017, 35, 475–488.

- Li, T.R.; Chamrajnagar, A.S.; Fong, X.R.; Rizik, N.R.; Fu, F. Sentiment-based prediction of alternative cryptocurrency price fluctuations using gradient boosting tree model. arXiv 2018, arXiv:1805.00558.

- Touzani, S.; Granderson, J.; Fernandes, S. Gradient boosting machine for modeling the energy consumption of commercial buildings. Energy Build. 2018, 158, 1533–1543.

- Mercer, J. XVI. Functions of positive and negative type, and their connection with the theory of integral equations. Phil. Trans. R. Soc. Lond. A 1909, 209, 415–446.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Wornyo, D.K.; Shen, X.J.; Dong, Y.; Wang, L.; Huang, S.C. Co-regularized kernel ensemble regression. World Wide Web 2018, 22, 717–734.

- Oliveira, D.V.; Cavalcanti, G.D.; Porpino, T.N.; Cruz, R.M.; Sabourin, R. K-Nearest Oracles Borderline Dynamic Classifier Ensemble Selection. arXiv 2018, arXiv:1804.06943.

- Cruz, R.M.; Sabourin, R.; Cavalcanti, G.D. Prototype selection for dynamic classifier and ensemble selection. Neural Comput. Appl. 2018, 29, 447–457.

- Hong, H.; Liu, J.; Bui, D.T.; Pradhan, B.; Acharya, T.D.; Pham, B.T.; Zhu, A.X.; Chen, W.; Ahmad, B.B. Landslide susceptibility mapping using J48 Decision Tree with AdaBoost, Bagging and Rotation Forest ensembles in the Guangchang area (China). Catena 2018, 163, 399–413.

- Morvant, E.; Habrard, A.; Ayache, S. Majority Vote of Diverse Classifiers for late Fusion; Joint IAPR International Workshops on Statistical Techniques in Pattern Recognition (SPR) and Structural and Syntactic Pattern Recognition (SSPR); Springer: Berlin, Germany, 2014; pp. 153–162.

- Cai, C.; Wornyo, D.K.; Wang, L.; Shen, X. Building Weighted Classifier Ensembles Through Classifiers Pruning. In Proceedings of the International Conference on Internet Multimedia Computing and Service, Qingdao, China, 23–25 August 2017; pp. 131–139.

- Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Hasan, M.; Van Esesn, B.C.; Awwal, A.A.S.; Asari, V.K. The History Began from AlexNet: A Comprehensive Survey on Deep Learning Approaches. arXiv 2018, arXiv:1803.01164.

- Muhammad, U.; Wang, W.; Chattha, S.P.; Ali, S. Pre-trained VGGNet Architecture for Remote-Sensing Image Scene Classification. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20 August 2018; pp. 1622–1627.

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).