3.2.1. Additive Syntropy and Entropy of Reflection

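The first thing we encounter when analyzing the informational aspects of the reflection of system $A$ is its attributive information $I_A$, which the system reflects about itself as a holistic entity. This information is reflected by the system through its $N$ parts $B_1, B_2, \ldots, B_N$, which, in the case of the informational closeness of the system, coincide with the sets $K_1, K_2, \ldots, K_N$, that is, $B_i = K_i$. At the same time, each part $B_i$ reflects a certain amount of information about the system, equal to the syntropy $I_{AB_i}$, which according to Equation (17) has the form:

$$I_{AB_i} = \frac{M_{B_i}}{M_A} \log_2 M_{B_i}, \tag{19}$$

where $M_A$ is the total number of elements of system $A$ and $M_{B_i}$ is the number of elements in part $B_i$.

Accordingly, the total amount of information about system $A$ that is actually reproduced through its $N$ parts is the additive syntropy $I_\Sigma$ of reflection, equal to the sum of the partial syntropies (19):

$$I_\Sigma = \sum_{i=1}^{N} I_{AB_i} = \sum_{i=1}^{N} \frac{M_{B_i}}{M_A} \log_2 M_{B_i}. \tag{20}$$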

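Let us compare the information being reflected, $I_A$, and the information that has been reflected, $I_\Sigma$. To this end, we multiply the right-hand sides of Equations (14) and (20) by $M_A$, the total number of elements of the system, and divide by $\log_2 M_A$. As a result of this operation, for $N \geq 2$ we get the inequality

$$M_A > \sum_{i=1}^{N} M_{B_i} \frac{\log_2 M_{B_i}}{\log_2 M_A},$$

where $M_{B_i}$ is the number of elements in the $i$-th part, from which it follows that

$$I_A > I_\Sigma. \tag{21}$$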

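Inequality (21) says that not all information about system $A$ as a single whole is reflected through the totality of its parts: there is always some part of the information $I_A$ that remains unreflected. We denote this non-reflected information by $S$ and define its value as the difference between the information being reflected, $I_A$, and the information that has been reflected, $I_\Sigma$:

$$S = I_A - I_\Sigma. \tag{22}$$

Let us represent (22) in expanded form, with $M_A$ the total number of elements of the system and $M_{B_i}$ the number of elements in its $i$-th part, and carry out simple transformations:

$$S = \log_2 M_A - \sum_{i=1}^{N} \frac{M_{B_i}}{M_A} \log_2 M_{B_i} = -\sum_{i=1}^{N} \frac{M_{B_i}}{M_A} \log_2 \frac{M_{B_i}}{M_A}. \tag{23}$$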

If we now multiply the right-hand side of Equation (23) by the total number of elements of the system and use natural logarithms, we get a mathematical copy of the statistical weight of a thermodynamic system, which is a measure of its entropy [17]. This circumstance gives us a reason to call the unreflected information $S$ the entropy of reflection. That is, the entropy of reflection is the information about a discrete system that is not reflected (not reproduced) through the collection of its parts. In other words, the entropy $S$ characterizes the inadequacy, or uncertainty, of the reflection of a discrete system through its parts, while the additive syntropy is a measure of the adequacy, or definiteness, of this reflection.
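The step to statistical weight can be spelled out. The sketch below is not taken from the source. It assumes that Equation (23) has the Shannon-like form implied by the surrounding text, writes $M$ for the total number of elements and $m_i$ for the number of elements in the $i$-th part (both symbols are assumptions), takes the statistical weight $W$ to be the usual multinomial coefficient, and uses Stirling's approximation $\ln n! \approx n \ln n - n$.

```latex
% Entropy of reflection with natural logarithms, multiplied by M
M S = M \ln M - \sum_{i=1}^{N} m_i \ln m_i

% Multinomial statistical weight and its logarithm via Stirling
W = \frac{M!}{\prod_{i=1}^{N} m_i!}, \qquad
\ln W \approx (M \ln M - M) - \sum_{i=1}^{N} (m_i \ln m_i - m_i)
      = M \ln M - \sum_{i=1}^{N} m_i \ln m_i

% the linear terms cancel because \sum_i m_i = M, hence  M S \approx \ln W
```

Under these assumptions, $M S$ coincides with the leading-order approximation of $\ln W$, which appears to be the sense in which the text speaks of a mathematical copy.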

Until now, our reasoning has never used the concept of probability; we simply did not need it. At the same time, the quotient $M_{B_i}/M_A$ of the number of elements in the $i$-th part to the total number of elements can be interpreted, from the standpoint of probability theory, as the probability $p_i$ of encountering an element with the corresponding characteristic among all the elements of system $A$. With this interpretation of the quotient, the entropy of reflection $S$ is represented as follows:

$$S = -\sum_{i=1}^{N} p_i \log_2 p_i. \tag{24}$$

Comparison of Equation (2) and Equation (24) shows that the reflection entropy $S$ and the Shannon entropy $H$ do not differ mathematically from each other, that is,

$$S = H. \tag{25}$$

Equation (2) and Equation (23) are obtained in different ways, have different content-related interpretations, and are intended to measure different types of information. Therefore, to some extent, equality (25) is unexpected. In this connection, it is appropriate to recall that, regarding such similarities between different measures, Kolmogorov said that “such mathematical analogies should always be emphasized, since the concentration of attention on them contributes to the progress of science” [18] (p. 39). In addition, we note that in Shannon’s theory [7] the derivation of the entropy $H$ is based on three axioms (conditions), whereas STI is founded on only two axioms.
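The coincidence of the two measures can be checked numerically. The following is a minimal sketch, assuming that the additive syntropy is the sum of (m_i / M) log2(m_i) over the parts and that the attributive information is log2(M); the function names and the 16-element sample are hypothetical and serve only as an illustration.

```python
import math
from collections import Counter

def additive_syntropy(part_sizes):
    """Sum of (m_i / M) * log2(m_i) over the parts (assumed form of Eq. 20)."""
    total = sum(part_sizes)
    return sum(m / total * math.log2(m) for m in part_sizes)

def reflection_entropy(part_sizes):
    """Attributive information log2(M) minus additive syntropy (Eq. 22)."""
    return math.log2(sum(part_sizes)) - additive_syntropy(part_sizes)

def shannon_entropy(labels):
    """Shannon entropy, in bits, of the empirical label frequencies."""
    total = len(labels)
    counts = Counter(labels).values()
    return -sum(c / total * math.log2(c / total) for c in counts)

# Hypothetical system: 16 elements labelled by the value of one characteristic.
labels = list("aaaaaaaabbbbccdd")
sizes = list(Counter(labels).values())  # part sizes 8, 4, 2, 2

S = reflection_entropy(sizes)   # obtained as a difference, no probabilities used
H = shannon_entropy(labels)     # obtained from relative frequencies
print(S, H)
```

The first value is computed purely from element counts, the second from relative frequencies; for this sample both come out at 1.75 bits.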

Equality (25) indicates that STI and Shannon’s theory of information, though dealing with different types of information, are at the same time mathematically interrelated. The nature of this relationship, as will be shown in Section 5.2, has an integrative code character. Moreover, we note the following.

In Shannon’s theory, certain requirements are imposed a priori on the entropy $H$ of the set of probabilities [7], and the entire theory, in fact, begins with the derivation of Formula (2). In STI, in turn, no preliminary conditions are set for the entropy of reflection $S$; it is obtained simply as the difference between the information being reflected and the information that has been reflected. That is, in the chronological sequence in which the information-synergetic functions are obtained, the entropy of reflection $S$ is the closing member. This fact suggests that the synergetic information with which STI deals is genetically primary with respect to the probabilistic information measured in Shannon’s theory (we will come to the same conclusion, but from other standpoints, in Section 5.2). This is fully consistent with Kolmogorov’s conclusion that “information theory should precede probability theory, but not rely on it” [19] (p. 35). That is, STI can be viewed as a verification of this conclusion of a recognized authority in the field of information theory.

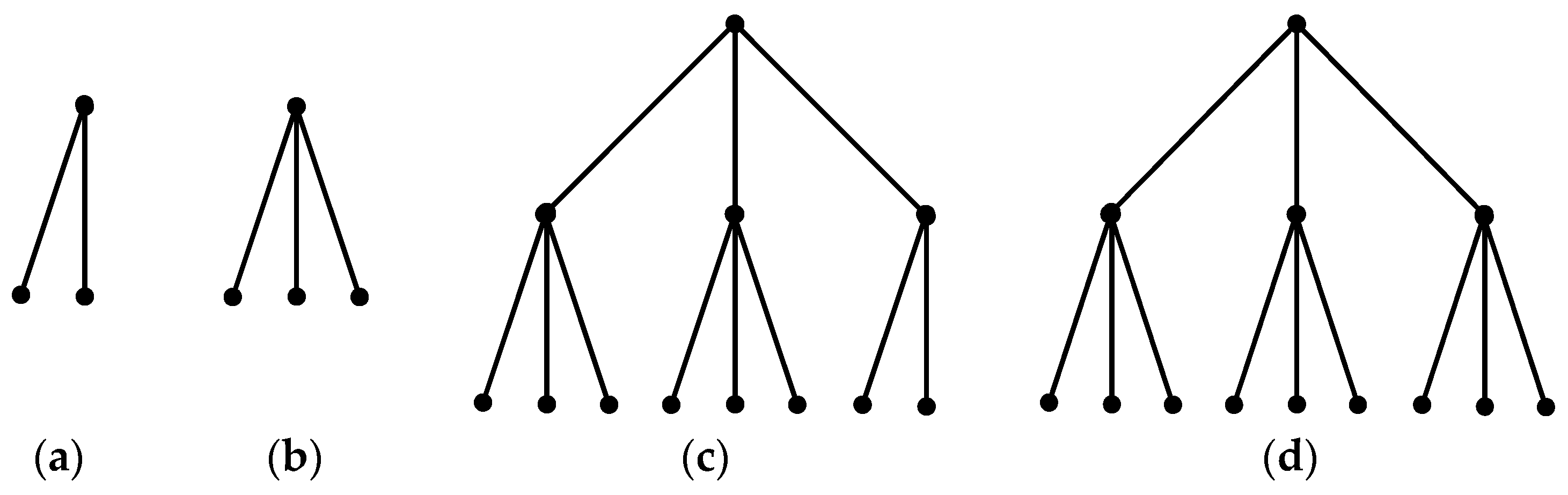

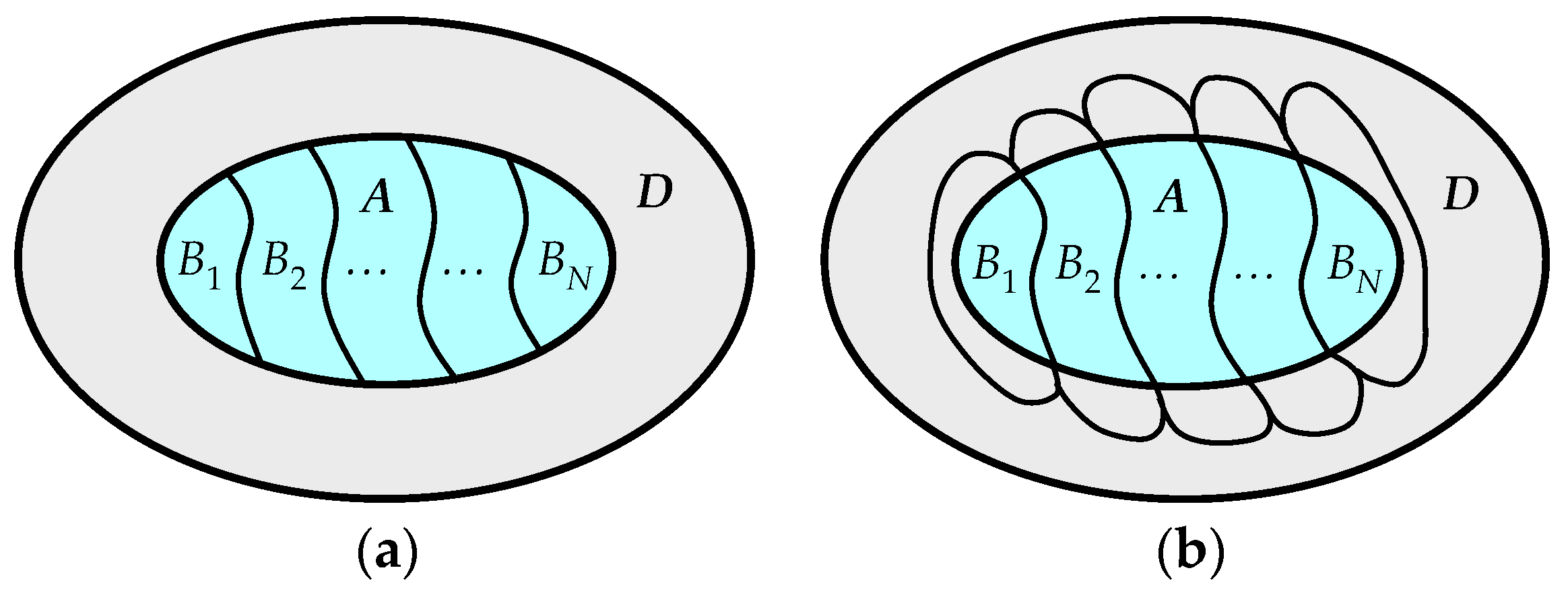

3.2.2. Chaos and Order of Discrete Systems

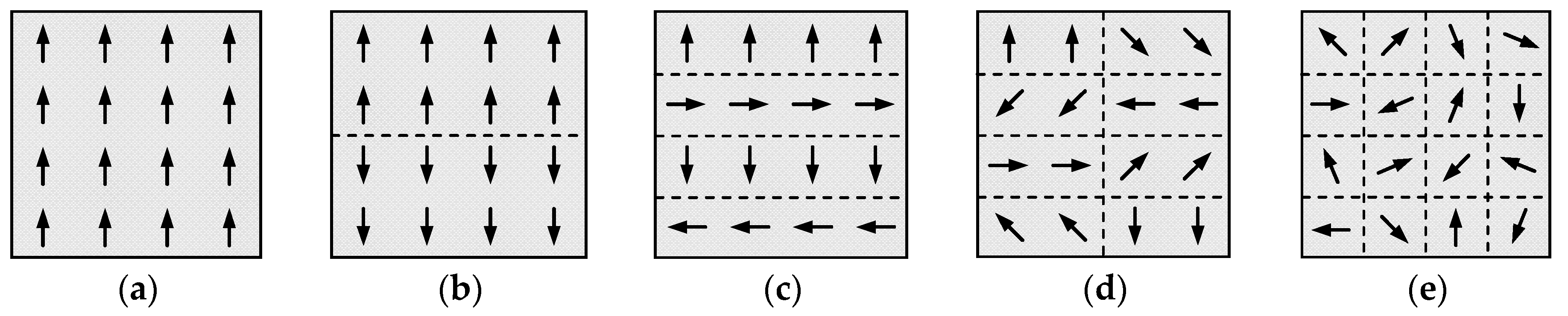

The structure of any discrete system with a finite set of elements can be characterized with the help of such concepts as order and disorder, or chaos. At the same time, these concepts are not unambiguously defined in modern science. Therefore, before turning to the informational analysis of chaos and order in the structure of discrete systems, we first fix the interpretation that will be used in the rest of the presentation.

So, we say that there is complete order in the structure of the system in terms of the values of a certain attribute if all elements have the same value of this attribute. That is, when the elements of the system do not differ from each other in terms of this attribute, we say that the structure of the system is maximally ordered. In contrast, complete chaos in the structure of the system occurs when each element of the system has its own individual value of the attribute. That is, in this case all the elements differ from each other, and we say that the structure of the system is maximally chaotic.

This is formalized as follows: for complete order, $N = 1$, and for complete chaos, $N = M_A$, where $N$ is the number of parts and $M_A$ is the total number of elements of the system. In other cases, when $1 < N < M_A$, the system is in an intermediate state between complete chaos and complete order, and we say that the structure of the system is characterized by both chaos and orderliness. In this case, if the system changes its state in such a way that $N$ increases, then order develops into chaos, whereas if $N$ decreases, then chaos is transformed into order. Taking these comments into account, we now turn to the informational analysis of the structural features of discrete systems in terms of chaos and order.

An analysis of (20) and (23) shows that the values of the additive syntropy $I_\Sigma$ and the entropy of reflection $S$ depend on the number of parts of the system and on how the parts relate to each other in the number of elements. The more chaotic the structure of the system is, that is, the more parts are identified in its composition and the less the parts differ from each other in the number of elements, the larger is $S$ and the smaller is $I_\Sigma$. Conversely, the more order there is in the structure of the system, that is, the smaller the number of its parts and the more the parts differ from each other in the number of elements, the larger is $I_\Sigma$ and the smaller is $S$. This indicates that $I_\Sigma$ and $S$ depend on the degree of orderliness and randomness of the structures of discrete systems and can be considered as measures of order and chaos, respectively. Let us show this using a concrete example.

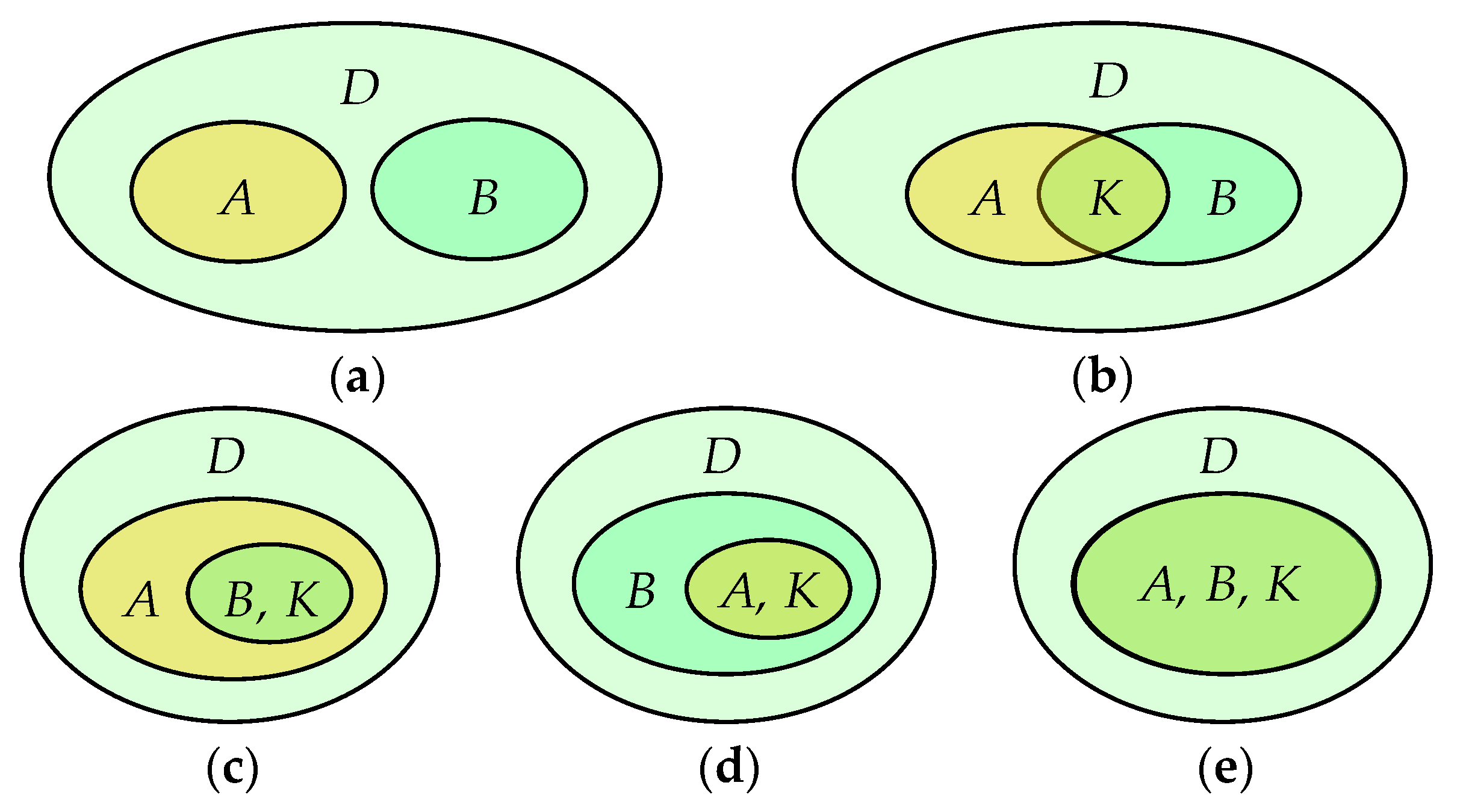

Figure 7 shows various states of a discrete system consisting of 16 elements, which are characterized by the “direction of motion” characteristic. According to the values of this characteristic, the system successively takes 5 states, which correspond to its division into 1, 2, 4, 8, and 16 parts with the same number of elements.

In the state of the system in Figure 7a, all elements move in the same direction and an ideal order is observed in the structure of the system. In Figure 7e we have the polar opposite: each element of the system has an individual direction of movement, and the structure of the system is maximally chaotic. The states of the system in Figure 7b–d are intermediate between those in Figure 7a,e and are characterized by the fact that both chaos and orderliness are observed in the structure of the system. Calculating the values of the additive syntropy $I_\Sigma$ and the entropy of reflection $S$ for each state of the system by Equation (20) and Equation (23), we successively obtain: $I_\Sigma = 4, 3, 2, 1, 0$; $S = 0, 1, 2, 3, 4$. That is, an increase in chaos and a decrease in order in the structure of the system in Figure 7 correspond to a decrease in the values of $I_\Sigma$ and an increase in the values of $S$.
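The calculation for the five states of Figure 7 can be reproduced with a few lines of code. This is a sketch under the assumption that the additive syntropy is the sum of (m_i / M) log2(m_i) over the parts and that the entropy of reflection is log2(M) minus that sum; the function name `syntropy_and_entropy` is hypothetical.

```python
import math

def syntropy_and_entropy(part_sizes):
    """Return (additive syntropy, entropy of reflection) for the given part sizes."""
    total = sum(part_sizes)
    i_sigma = sum(m / total * math.log2(m) for m in part_sizes)
    s = math.log2(total) - i_sigma
    return i_sigma, s

M = 16  # number of elements of the system in Figure 7
# States (a)-(e): division into 1, 2, 4, 8, 16 parts of equal size.
states = {label: [M // n] * n for label, n in zip("abcde", (1, 2, 4, 8, 16))}
results = {label: syntropy_and_entropy(sizes) for label, sizes in states.items()}

for label, (i_sigma, s) in results.items():
    n_parts = len(states[label])
    print(f"({label}) N={n_parts:2d}  I_sigma={i_sigma:.2f}  S={s:.2f}")
```

For equal parts the two values reduce to log2(M / N) and log2(N), so the syntropy falls from 4 to 0 while the entropy rises from 0 to 4 as the system passes from state (a) to state (e).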

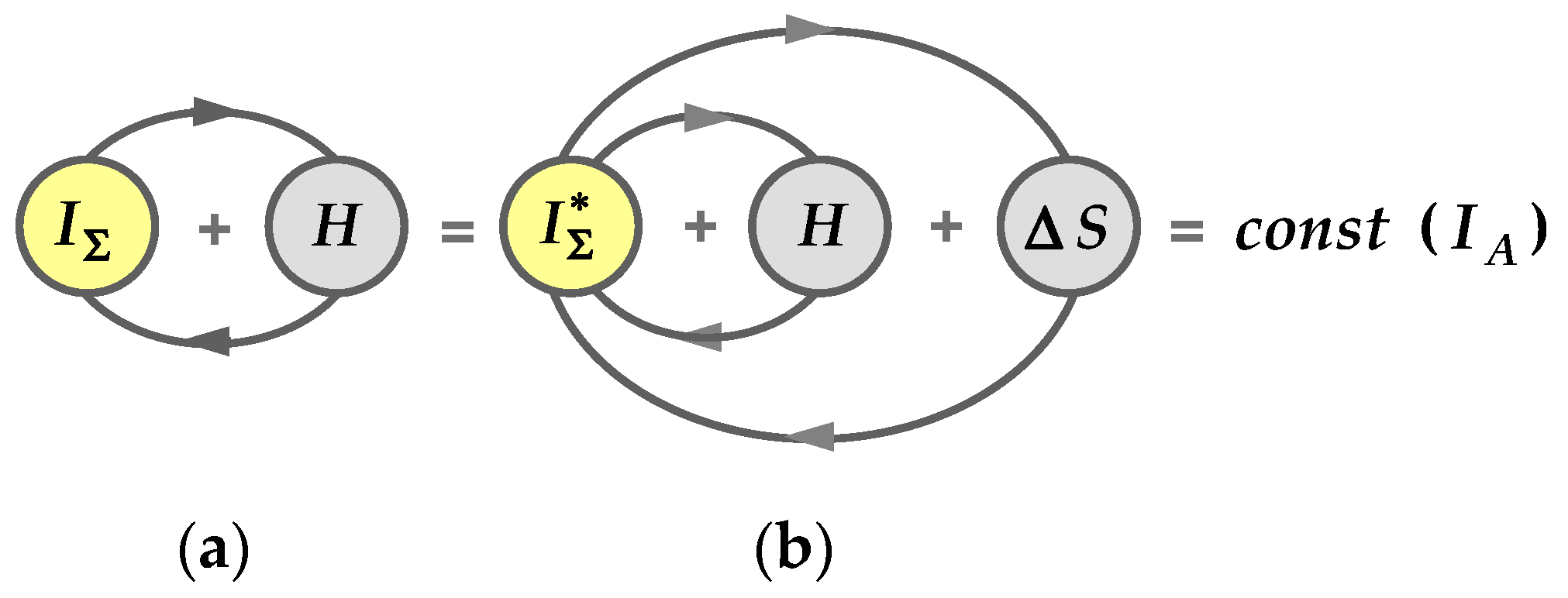

Additive syntropy $I_\Sigma$, as the reflected information, and the entropy of reflection $S$, as the non-reflected information, are integral parts of the information being reflected, $I_A$, that is:

$$I_\Sigma + S = I_A. \tag{26}$$

At the same time, as was shown, the values of $I_\Sigma$ and $S$ characterize the structure of a discrete system from the standpoints of order and chaos. Therefore, Equation (26) can also be interpreted as the following expression:

$$\text{order} + \text{chaos} = \text{const}. \tag{27}$$

That is, it can be argued that Equation (26) and its interpretation (27) can be viewed as the law of conservation of the sum of chaos and order, which is valid for any structural transformation of the system that leaves the total number of its elements unchanged. In other words, no matter what we do with the system without changing the total number of its elements, no matter into how many parts the system is divided by the values of any characteristic, and whatever the relations between the parts in terms of the number of elements, the sum of chaos and order in the structure of the system always remains constant. For example, for all the states of the system in Figure 7, the sum of the additive syntropy and the entropy of reflection equals $\log_2 16 = 4$.

Let us now consider the specific features of the relationship between the additive syntropy $I_\Sigma$ and the entropy of reflection $S$ for a constant number of elements of the system and a varying number of its parts, which may relate to each other in the number of elements in an arbitrary way. First, in accordance with Equations (14), (20), (23) and (26), we define the maximum and minimum values of $I_\Sigma$ and $S$ for a fixed $N$.

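When $N = M_A$, where $M_A$ is the total number of elements, all parts of the system consist of only one element and, according to Equation (20), we have $I_\Sigma = 0$. Thus, it follows from Equation (26) that, in this case, $S = I_A = \log_2 M_A$. In other words, for $N = M_A$ the syntropy $I_\Sigma$ assumes the minimal value, and the entropy $S$ the maximal value, of all those possible for any $N$. Since in this case all parts of the system are equal to one another in the number of elements, $M_{B_1} = M_{B_2} = \ldots = M_{B_N}$, in all other cases, when $N < M_A$ and $M_{B_1} = M_{B_2} = \ldots = M_{B_N}$, we should likewise expect that the syntropy $I_\Sigma$ is minimal and the entropy $S$ is maximal. That is:

$$I_\Sigma^{\min} = \log_2 \frac{M_A}{N}, \tag{28}$$

$$S^{\max} = \log_2 N. \tag{29}$$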

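In turn, the additive syntropy assumes its maximum value when the number of elements in one part is equal to $M_A - N + 1$, where $M_A$ is the total number of elements, and each of the other $N - 1$ parts includes only one element, that is:

$$I_\Sigma^{\max} = \frac{M_A - N + 1}{M_A} \log_2 (M_A - N + 1). \tag{30}$$

Accordingly, the minimum entropy of reflection is equal to:

$$S^{\min} = \log_2 M_A - \frac{M_A - N + 1}{M_A} \log_2 (M_A - N + 1). \tag{31}$$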

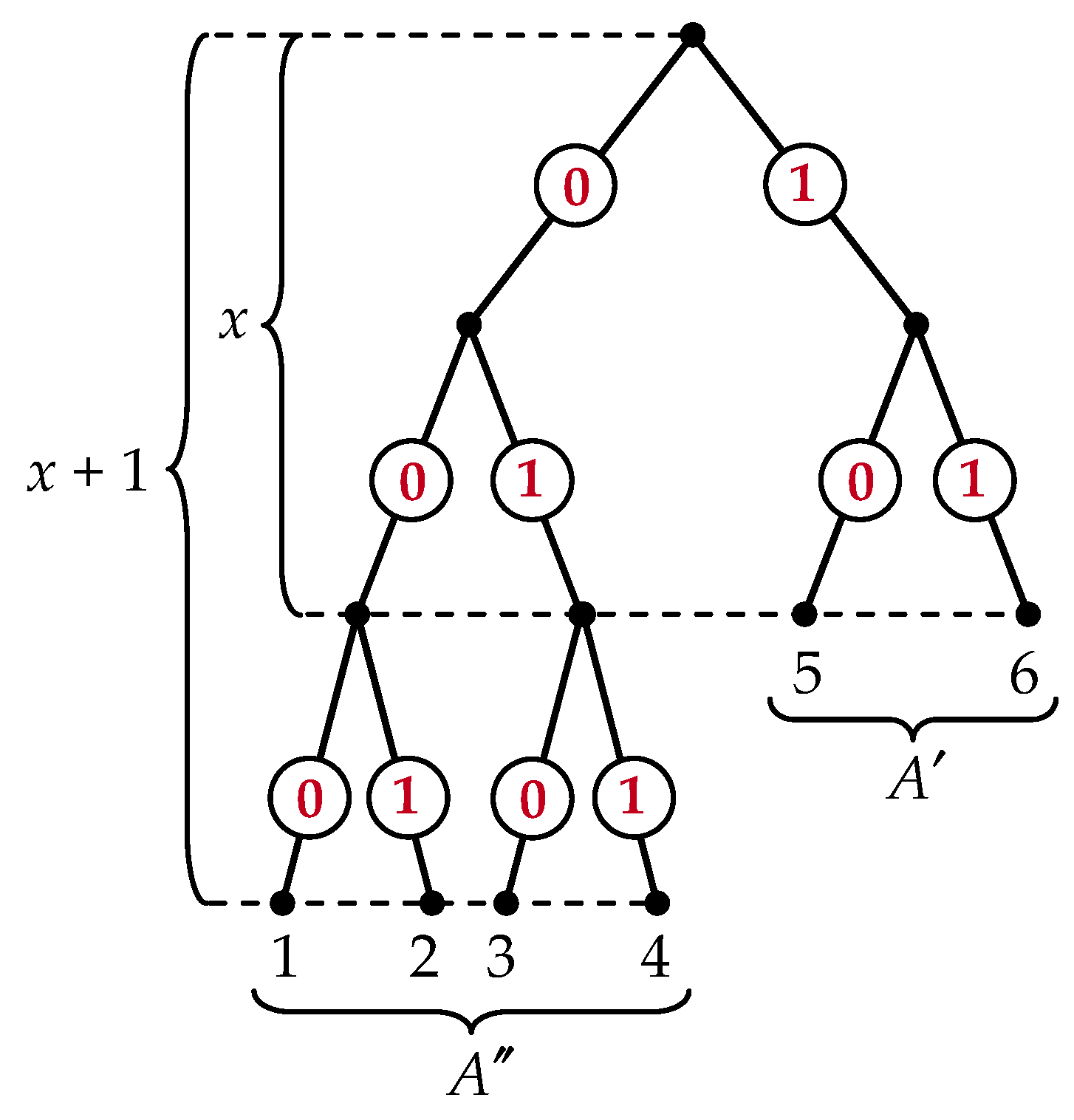

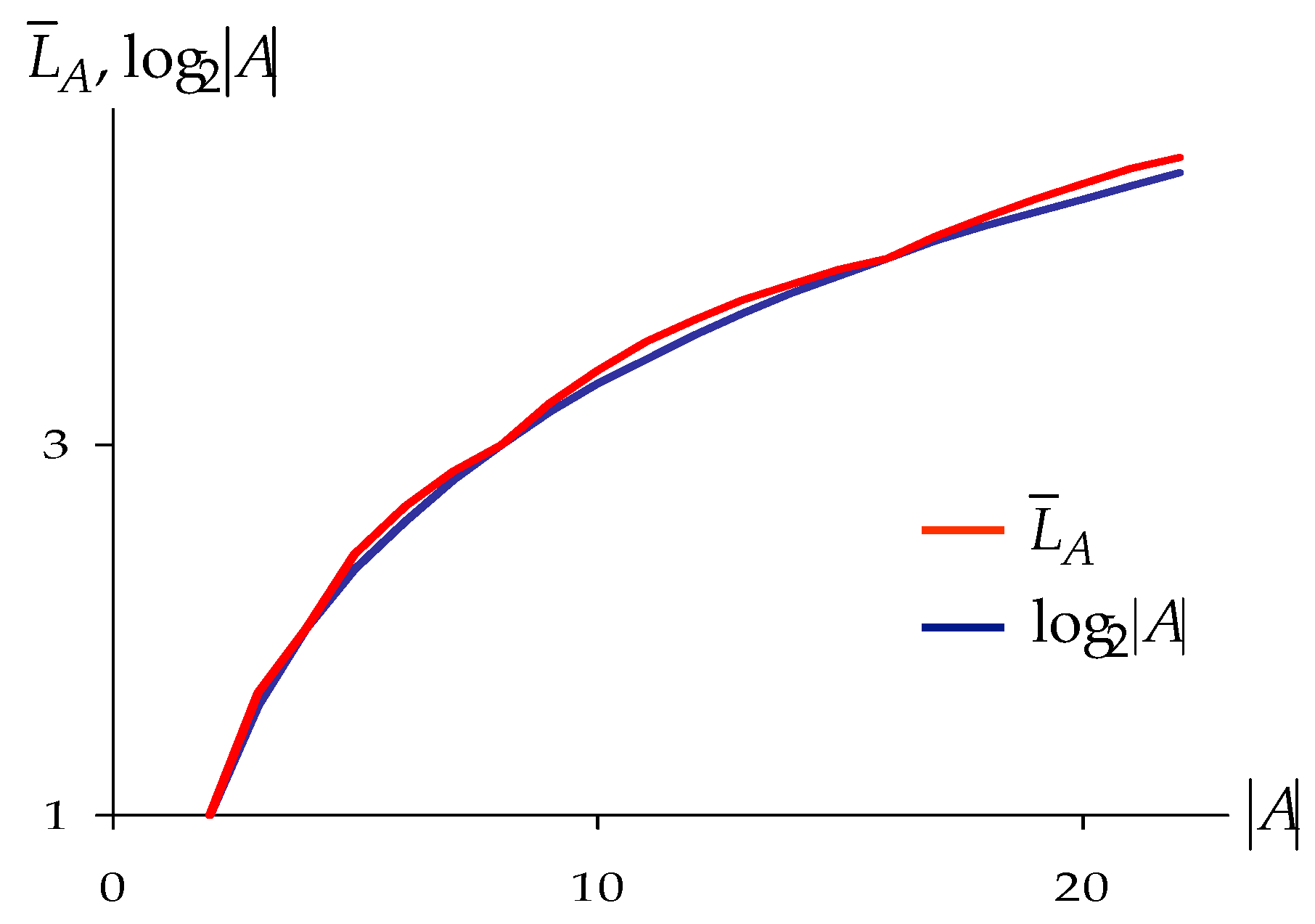

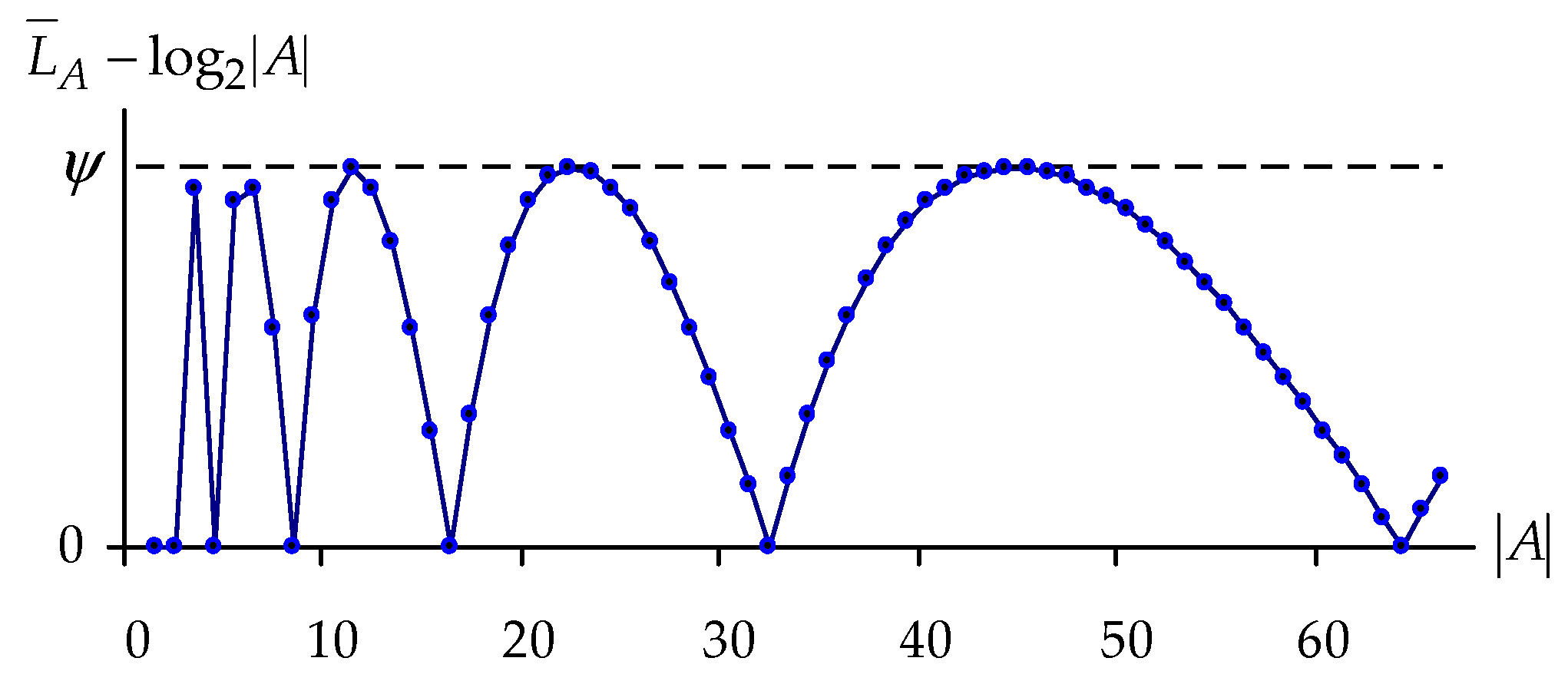

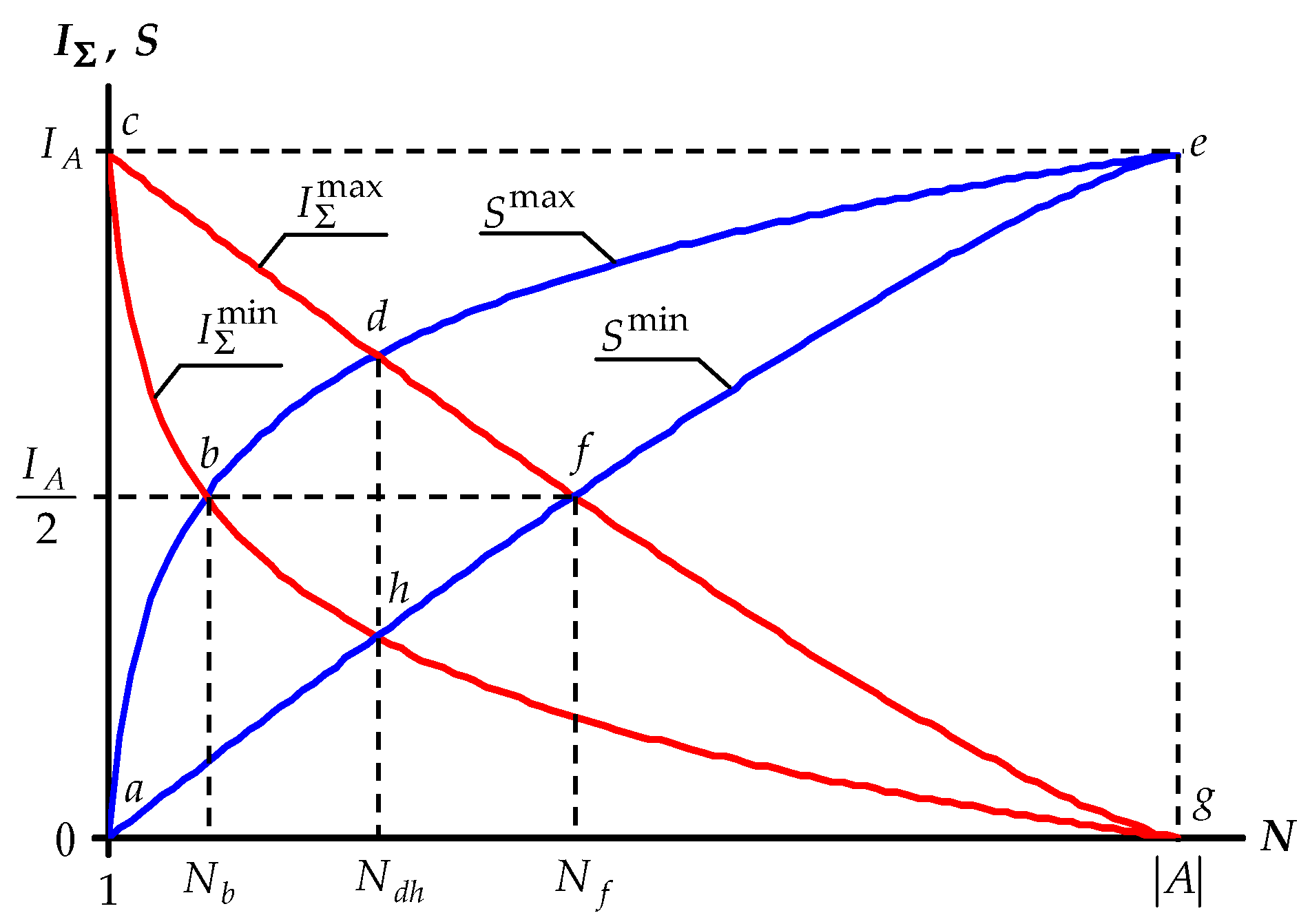
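The extreme values for a fixed number of parts can be checked by brute force. The sketch below assumes that the additive syntropy is the sum of (m_i / M) log2(m_i), that its minimum for N equal parts is log2(M / N), and that its maximum is reached when one part holds M - N + 1 elements and every other part holds one; the helper names and the choice M = 12, N = 4 are hypothetical.

```python
import math

def additive_syntropy(part_sizes):
    """Sum of (m_i / M) * log2(m_i) over the parts."""
    total = sum(part_sizes)
    return sum(m / total * math.log2(m) for m in part_sizes)

def partitions(total, parts, largest=None):
    """Yield non-increasing tuples of `parts` positive integers summing to `total`."""
    if largest is None:
        largest = total
    if parts == 1:
        if 1 <= total <= largest:
            yield (total,)
        return
    for first in range(min(largest, total - parts + 1), 0, -1):
        if first * parts < total:
            break  # remaining parts cannot exceed `first`, so the sum is unreachable
        for rest in partitions(total - first, parts - 1, first):
            yield (first,) + rest

def syntropy_bounds(total, parts):
    """Assumed closed forms for the minimum and maximum additive syntropy."""
    lower = math.log2(total / parts)
    big = total - parts + 1
    upper = big / total * math.log2(big)
    return lower, upper

M, N = 12, 4
lower, upper = syntropy_bounds(M, N)
values = [additive_syntropy(p) for p in partitions(M, N)]
print(min(values), lower)
print(max(values), upper)
```

Since the entropy of reflection is the complement of the syntropy to log2(M), its maximum and minimum follow from the same enumeration. When N does not divide M, equal parts are impossible and log2(M / N) is only a lower bound that is not attained.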

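We construct the graphs of the dependence of the maximum and minimum values of the additive syntropy and of the entropy of reflection on the number $N$ of parts of the system for a fixed total number of elements, and analyze the resulting diagram, which we will call the information field of reflection of discrete systems (Figure 8).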

The graphs constructed in the figure form two contours, the entropic one abdefha and the syntropic one cdfghbc, which localize the ranges of all possible values of the additive syntropy $I_\Sigma$ and the entropy of reflection $S$. The intersection of these contours at the points b and f, where the equalities $I_\Sigma^{\min} = S^{\max}$ and $I_\Sigma^{\max} = S^{\min}$ are observed, allows us, using the projections of these points on the horizontal axis, to identify three intervals of values of $N$: the left, the central, and the right ones. Let us consider the features of the relationships between $I_\Sigma$ and $S$ in these intervals, and first let us determine the values $N'$ and $N''$ of $N$ at the points b and f.

At point b, according to Equations (28) and (29), we have the equation $\log_2 (M_A / N) = \log_2 N$, where $M_A$ is the total number of elements of the system, the solution of which yields $N' = \sqrt{M_A}$ (hereinafter, the values of $N$ are rounded to the nearest larger integer). A similar equation for the point f, formed by the right-hand sides of (30) and (31), has no analytical solution. That is, the value of $N''$ can only be determined numerically, as follows. For $N = 1, 2, \ldots, M_A$ the values of $I_\Sigma^{\max}$ and $S^{\min}$ are computed, and as the value of $N''$ we take the value of $N$ after which $S^{\min} > I_\Sigma^{\max}$. The values of $N''$ calculated in this way lead to a regression equation for $N''$.

Thus, we have three intervals of values of $N$:

the left one: $1 \leq N < N'$;

the central one: $N' \leq N \leq N''$;

the right one: $N'' < N \leq M_A$.

The systems that fall into the left interval according to the value of $N$ are characterized by the fact that, for any relations between their parts in terms of the number of elements, the inequality $I_\Sigma > S$ between the additive syntropy $I_\Sigma$ and the entropy of reflection $S$ is preserved. That is, in the left interval of the values of $N$, the structural orderliness of the system is always greater than its randomness. In the right interval the opposite situation is observed: the inequality $I_\Sigma < S$ holds for any structural transformation of the system, which corresponds to the predominance of chaos over order in the structure of the system. In the central interval, the regions of the possible values of $I_\Sigma$ and $S$ intersect, as a result of which various relations between them are possible ($I_\Sigma > S$, $I_\Sigma = S$, or $I_\Sigma < S$), and there is a tendency towards structural harmony of chaos and order.

In other words, in the left interval of the values of $N$ we observe an irreversible predominance of order over chaos in the structure of the system, and in the right interval, on the contrary, an irreversible predominance of chaos over order. In the central interval, in turn, the predominance of either order over chaos or chaos over order is reversible. Any discrete system with a finite set of elements, divided by the values of any characteristic into $N$ parts, inevitably falls into one of the specified intervals, which allows all systems to be classified into three types according to the specific features of these intervals:

ordered (the left interval);

harmonious (the central interval);

chaotic (the right interval).

For example, the system in Figure 7, consisting of 16 elements, will be classified in its various states as follows: (a) ordered ($N = 1$); (b) ordered ($N = 2$); (c) harmonious ($N = 4$); (d) chaotic ($N = 8$); (e) chaotic ($N = 16$).
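The classification can be sketched in code. The version below rests on several assumptions that are not confirmed by the source: the left boundary of the central interval is the square root of the number of elements rounded up, the right boundary is the last number of parts for which the maximum syntropy is still at least the minimum entropy, and the central interval includes both boundaries. The names `interval_bounds` and `classify` are hypothetical.

```python
import math

def max_syntropy(total, parts):
    """Syntropy when one part holds total - parts + 1 elements and the rest hold one."""
    big = total - parts + 1
    return big / total * math.log2(big)

def interval_bounds(total):
    """Return the assumed (left, right) boundaries of the central interval."""
    n_left = math.isqrt(total - 1) + 1   # ceil(sqrt(total)) for total >= 1
    half = math.log2(total) / 2          # I_max >= S_min  <=>  I_max >= log2(M) / 2
    n_right = max(n for n in range(1, total + 1)
                  if max_syntropy(total, n) >= half)
    return n_left, n_right

def classify(total, parts):
    """Classify a system of `total` elements divided into `parts` parts."""
    n_left, n_right = interval_bounds(total)
    if parts < n_left:
        return "ordered"
    if parts <= n_right:
        return "harmonious"
    return "chaotic"

bounds = interval_bounds(16)
kinds = [classify(16, n) for n in (1, 2, 4, 8, 16)]
print(bounds, kinds)
```

With these conventions the 16-element system has a central interval from 4 to 7 parts, and the five states of Figure 7 are classified as ordered, ordered, harmonious, chaotic, and chaotic, in agreement with the example in the text.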

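The division of the values of $N$ into the left, central, and right intervals is not exhaustive. In addition, the central interval is subdivided into left and right parts according to the value $N'''$, which corresponds to the combined projection of the points d and h. We note the features of these parts, and first we determine the value $N'''$.

At the point d, the equality $I_\Sigma^{\max} = S^{\max}$ between the maximum values of the additive syntropy and the entropy of reflection is observed, and at the point h, the equality $I_\Sigma^{\min} = S^{\min}$ between their minimum values. It follows that the value $N'''$ must satisfy the equation:

$$\frac{M_A - N + 1}{M_A} \log_2 (M_A - N + 1) = \log_2 N, \tag{32}$$

where $M_A$ is the total number of elements of the system. Equation (32) has no analytical solution; as a result, the values of $N'''$ can only be obtained numerically (by analogy with the numerical determination of the right boundary of the central interval above). Such numerical solutions lead to a regression equation for $N'''$.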
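A numerical solution of this kind can be sketched with plain bisection. The code assumes that the equation in question equates the maximum additive syntropy, ((M - N + 1) / M) log2(M - N + 1), with the maximum entropy of reflection, log2(N), and that N is treated as a continuous variable; both the form of the equation and the function names are assumptions made for illustration.

```python
import math

def gap(total, n):
    """Maximum syntropy minus maximum entropy at (possibly fractional) n."""
    big = total - n + 1
    return big / total * math.log2(big) - math.log2(n)

def solve_boundary(total, tol=1e-9):
    """Bisection on [1, total]: gap is positive at n = 1 and negative at n = total."""
    lo, hi = 1.0, float(total)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if gap(total, mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

root = solve_boundary(16)
print(root)
```

For a system of 16 elements the root lies between 5 and 6 parts. Bisection is used here only because the left-hand side decreases and the right-hand side increases with N, which guarantees a single crossing; it is not necessarily the procedure used by the author.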

Thus, the left and right parts of the central interval consist, respectively, of the values of $N$ below and above the combined projection of the points d and h.

The interrelationships of the additive syntropy $I_\Sigma$ and the entropy of reflection $S$ in the left and right parts of the central interval are characterized by the fact that in the left part $I_\Sigma^{\max} > S^{\max}$ and $I_\Sigma^{\min} > S^{\min}$, and in the right part $I_\Sigma^{\max} < S^{\max}$ and $I_\Sigma^{\min} < S^{\min}$. That is, in the structure of systems from the left part there is a tendency towards the dominance of order over chaos, which weakens as $N$ increases, and in the structure of systems from the right part the opposite tendency towards the prevalence of chaos over order is manifested, which strengthens as $N$ increases. These features of the interrelations between $I_\Sigma$ and $S$ in the left and right parts of the central interval, as well as the equality $I_\Sigma = S$, observed in each of these parts along the bf line, provide grounds for further dividing harmonious systems into three kinds:

ordered harmonious (the left part of the central interval);

balanced harmonious (the entire central interval with $I_\Sigma = S$);

chaotic harmonious (the right part of the central interval).

Thus, all discrete systems with a finite set of elements are divided into three types according to the proportion of chaos and order in their structure; these types include five sorts of systems.

3.2.3. Structural Organization and Development of Discrete Systems

It is obvious that the structural organization of a discrete system depends on the randomness and orderliness of its structure. Moreover, the more order there is in the structure of the system, the higher its level of organization, and vice versa, the more disorder, the lower the level of organization. Accordingly, to quantify the structural organization of systems, one can use a function whose arguments are measures of chaos and order. As such a function of structural organization, we can use the ratio of the additive syntropy $I_\Sigma$ to the entropy of reflection $S$, which will be called the $R$-function ($R$ is the first letter of the word reflection):

$$R = \frac{I_\Sigma}{S}. \tag{33}$$

The greater the values of the $R$-function, the higher the structural organization of the system. The values of $R$ also indicate what predominates in the structure of the system, chaos or order, and to what extent. For example, if $R > 1$, then order prevails in the structure of the system; otherwise, when $R < 1$, chaos prevails. When $R = 1$, chaos and order balance each other, and the structural organization of the system is in equilibrium. Accordingly, in the left interval of the values of $N$, systems always have $R > 1$, in the right interval, $R < 1$, whereas in the central interval the structural organization of the systems may be diverse, that is, $R > 1$, $R = 1$, or $R < 1$. When all elements of the system have one and the same characteristic, that is, when $N = 1$ and $S = 0$, the ratio is undefined and the value of $R$ is set by convention. For example, for the states of the system in Figure 7, whose additive syntropy and entropy $S$ were determined above, we have the following values: (a) the conventional value for $S = 0$; (b) $R = 3$; (c) $R = 1$; (d) $R \approx 0.33$; (e) $R = 0$.
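The diversity of structural organization within the central interval can be illustrated numerically. The sketch below assumes that the additive syntropy is the sum of (m_i / M) log2(m_i) and that the entropy of reflection is its complement to log2(M); the function name `r_function`, the choice to return `None` when the entropy is zero, and the two sample divisions of 16 elements into 6 parts are all hypothetical.

```python
import math

def r_function(part_sizes):
    """Ratio of additive syntropy to entropy of reflection, or None if S = 0."""
    total = sum(part_sizes)
    i_sigma = sum(m / total * math.log2(m) for m in part_sizes)
    s = math.log2(total) - i_sigma
    if math.isclose(s, 0.0, abs_tol=1e-12):
        return None  # ratio undefined; the text assigns a value by convention
    return i_sigma / s

# Two hypothetical divisions of 16 elements into the same number of parts (N = 6).
near_equal = r_function([3, 3, 3, 3, 2, 2])      # parts of similar size
one_dominant = r_function([11, 1, 1, 1, 1, 1])   # one large part, five singletons
balanced = r_function([4, 4, 4, 4])              # state (c) of Figure 7

print(near_equal, one_dominant, balanced)
```

With the same number of parts, the nearly equal division gives a ratio below 1 and the division with one dominant part gives a ratio above 1, which is the reversibility that distinguishes the central interval.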

Apart from structural organization, discrete systems are also characterized by the level of their development, which is related to the ratio of chaos and order in the structure of the system and depends on the total number of system elements, the number of its parts and the distribution of the latter in terms of the number of elements. For a quantitative assessment of the structural development of systems, it seems natural to use the values of a certain function, the arguments of which are the additive syntropy and the entropy of reflection S. The behavior of such a function must be made consistent with the general laws of structural development, which can be represented as follows.

When $N = 1$, all elements of the system have the same distinguishing feature, the division of the system into parts is absent, and the structure of the system is not developed. In this case, $I_\Sigma = I_A$ and $S = 0$, where $I_\Sigma$ is the additive syntropy and $I_A = \log_2 M_A$ is the attributive information of a system of $M_A$ elements. In the interval $1 < N < M_A$, various groups of elements with the same values of the attribute are formed, as a result of which the system is divided into $N$ parts and its structural development occurs, for which $I_\Sigma > 0$ and $S > 0$. In the state of the system when $N = M_A$, each element has an individual value of the attribute, and the system is split up into parts consisting of only one element. This corresponds to the complete destruction of any structure, that is, in this state of the system there is no structural development and $I_\Sigma = 0$, $S = I_A$.

It follows from the above that when a system moves from the state $N = 1$ to the state $N = M_A$, where $M_A$ is the total number of elements, the values of the function of structural development must first increase from zero to a certain maximum and then decrease again to zero. As such a function, it is natural to take the product of the additive syntropy $I_\Sigma$ and the entropy of reflection $S$, which we will call the $D$-function of the structural development of discrete systems ($D$ is the first letter of the word development):

$$D = I_\Sigma S. \tag{34}$$

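The structural development function (34) assumes the minimum value $D = 0$ when $N = 1$ and when $N = M_A$ (the total number of elements), whereas it has the maximum value $D_{\max}$ when the additive syntropy and the entropy of reflection are equal, $I_\Sigma = S$. The latter is explained by the fact that the product of two non-negative numbers with a constant sum is maximal when the numbers are equal. That is, the values of the development function (34) are the greater, the smaller the absolute value $|I_\Sigma - S|$ is, and, accordingly, we have:

$$D_{\max} = \frac{I_A^2}{4} \quad \text{at} \quad I_\Sigma = S = \frac{I_A}{2}, \tag{35}$$

where $I_A = I_\Sigma + S$ is the attributive information of the system.

In other words, the system reaches the maximum possible level of development when chaos and order in its structure are equal to each other.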
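The dependence of the development function on the difference between syntropy and entropy can be made explicit with a one-line identity. The sketch below assumes the notation $I_\Sigma$ for the additive syntropy and $I_A$ for the constant sum of syntropy and entropy; these symbols are assumptions, and the derivation is a standard algebraic step rather than a quotation from the source.

```latex
% Constant sum (law of conservation of chaos and order): I_A = I_\Sigma + S
4 D = 4 I_\Sigma S = (I_\Sigma + S)^2 - (I_\Sigma - S)^2 = I_A^2 - (I_\Sigma - S)^2

% hence
D = \frac{I_A^2 - (I_\Sigma - S)^2}{4} \le \frac{I_A^2}{4},
\qquad \text{with equality if and only if } I_\Sigma = S
```

For a fixed number of elements, the development function therefore decreases as the absolute difference between syntropy and entropy grows, and vanishes when one of the two equals $I_A$.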

In the general case, systems with different numbers of elements may have the same value of the development function $D$. If, for example, two systems $A_1$ and $A_2$ are given such that $D_{A_1} = D_{A_2}$ and $M_{A_1} < M_{A_2}$, that is, $A_1$ has fewer elements, then clearly the system $A_1$ is at a higher level of development than the system $A_2$. Therefore, when comparing the structural development of various systems, it is expedient to use the relative value of development, the ratio of $D$ to its maximum possible value $D_{\max}$, which is convenient to express as a percentage:

$$D_{\text{rel}} = \frac{D}{D_{\max}} \cdot 100\%. \tag{36}$$

In order to have a visual representation of the behavior of the structural development function, Table 3 shows its absolute and relative values, as well as the values of the quantities on which they depend, for different states of the discrete system in Figure 7.

It is seen from the table that $D$ and its relative value are maximal when the additive syntropy equals the entropy of reflection, $I_\Sigma = S$, and, accordingly, $R = 1$.
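Values of this kind can be recomputed for the five states of Figure 7. The sketch below assumes that the development function is the product of additive syntropy and entropy of reflection, that its maximum for a given system is a quarter of the squared attributive information log2(M), and that the relative value is the percentage of that maximum; the function name `development_row` is hypothetical, and the printed rows are a recomputation, not a copy of Table 3.

```python
import math

def development_row(part_sizes):
    """Return (syntropy, entropy, D, relative D in percent) for the given part sizes."""
    total = sum(part_sizes)
    i_a = math.log2(total)                       # attributive information
    i_sigma = sum(m / total * math.log2(m) for m in part_sizes)
    s = i_a - i_sigma
    d = i_sigma * s
    d_max = i_a ** 2 / 4                         # assumes total > 1, so d_max > 0
    return i_sigma, s, d, 100 * d / d_max

part_counts = (1, 2, 4, 8, 16)
rows = [development_row([16 // n] * n) for n in part_counts]

for n, (i_sigma, s, d, d_rel) in zip(part_counts, rows):
    print(f"N={n:2d}  I_sigma={i_sigma:.0f}  S={s:.0f}  D={d:.0f}  D_rel={d_rel:.0f}%")
```

Under these assumptions the development function rises from 0 to its maximum of 4 at four parts, where syntropy and entropy are both 2, and falls back to 0 at sixteen parts.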