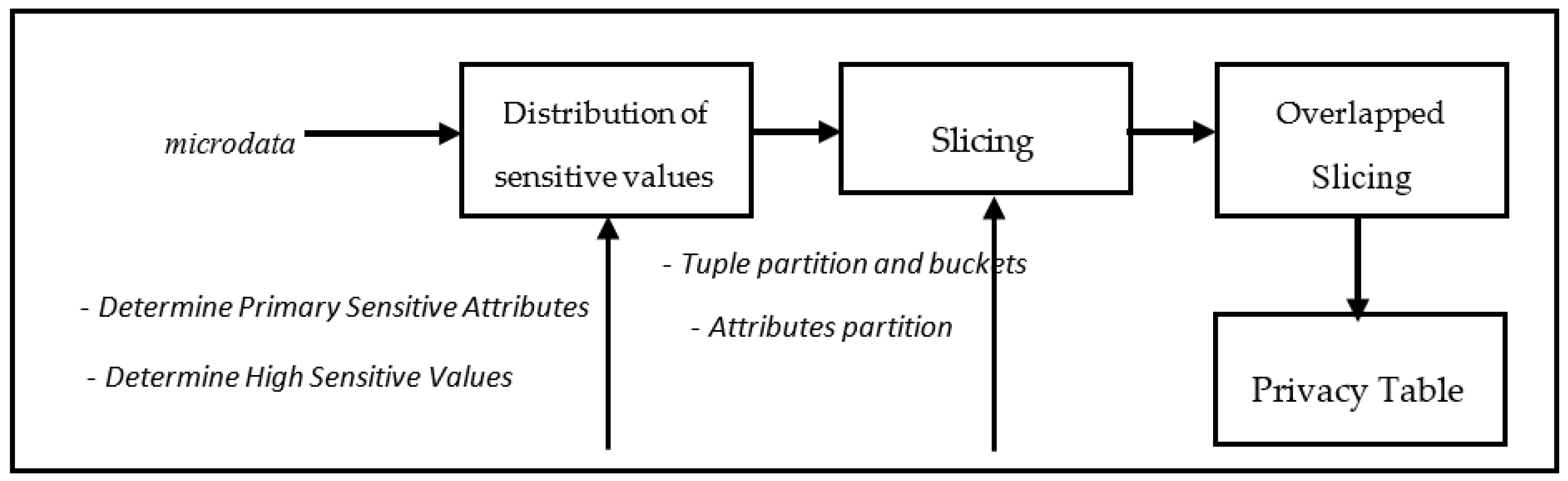

Processes 2–4 are the main processes of our proposed method. In data preprocessing, we removed missing values and designated the quasi-identifier attributes and the sensitive attributes. We collected the data, which are microdata, from the UCI Machine Learning Repository. In the following subsections, we explain processes 2–5.

4.1. The Distribution of Sensitive Values

Many studies on PPDP do not set their sensitive attributes, even though doing so is important to prevent a QI group, or bucket, from obtaining more sensitive values than the others. This distribution also reduces the probability of a relational attack, an attack that often compromises privacy in tables containing multiple sensitive attributes.

Before discussing the distribution, we describe the relational attack. A relational attack comprises three types of attack: the elimination attack [9], the full functional dependency attack [9], and the association disclosure attack [13]. These three attacks concern multiple sensitive attributes because they exploit relations between sensitive attributes and quasi-identifier attributes [9] or among sensitive attributes [9,13]. Since their characteristics are similar, we group these three attacks under the term relational attack.

As in our previous works [20,21], we distribute the sensitive values. Two steps are performed to distribute the microdata table evenly:

In our model, as described in previous works, a table with more than three sensitive attributes is dissociated into two or more sub-tables, each containing at most three sensitive attributes. Each sub-table has the same QI attributes, in a different order after being set. In this paper, we assume only two sensitive attributes, as shown in Table 7.
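The dissociation rule can be sketched in Python. This is a minimal illustration under our own assumptions, not the authors' implementation: rows are dictionaries, the helper name `dissociate` is hypothetical, and sensitive attributes are grouped consecutively in their given order (the text does not say how attributes are assigned to sub-tables). The reordering of QI attributes mentioned above is not modeled.

```python
from typing import Dict, List, Sequence

Row = Dict[str, object]


def dissociate(rows: Sequence[Row], qi: List[str], sensitive: List[str],
               max_sa: int = 3) -> List[List[Row]]:
    """Split a table into sub-tables that all keep the QI attributes and hold
    at most `max_sa` sensitive attributes each (hypothetical helper).

    Assumption: sensitive attributes are grouped consecutively in the order
    given; a table with at most `max_sa` of them yields a single sub-table.
    """
    groups = [sensitive[i:i + max_sa] for i in range(0, len(sensitive), max_sa)]
    # Each sub-table projects every row onto the QI attributes plus one group.
    return [[{a: row[a] for a in qi + group} for row in rows] for group in groups]


if __name__ == "__main__":
    table = [{"Age": 39, "Sex": "M", "S1": "a", "S2": "b", "S3": "c",
              "S4": "d", "S5": "e"}]
    for sub in dissociate(table, ["Age", "Sex"], ["S1", "S2", "S3", "S4", "S5"]):
        print(sorted(sub[0]))
```

With five sensitive attributes, the example produces two sub-tables: one with S1 to S3 and one with S4 and S5, both sharing Age and Sex.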

To set the sensitive attributes, we first determine the High-Sensitive Values (HSVs) of each sensitive attribute. An HSV is a sensitive value that carries confidential information and tends to embarrass its owner if revealed. The remaining sensitive values, which are not categorized as HSVs, are called Less Sensitive Values (LSVs).

In Table 7, the HSVs of Disease are HIV and Cancer, while those of Occupation are Cook and Driver. Next, we determine the Primary Sensitive Attribute (PSA), the sensitive attribute that contains more HSVs than the others. The other sensitive attributes are called Contributory Sensitive Attributes (CSAs), and the PSA is placed before the CSAs. In Table 7, Disease has four HSVs (two HIV and two Cancer), while Occupation has only three (two Cook and one Driver). Therefore, Disease becomes the PSA of this table and is placed before Occupation.
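The HSV counting and PSA selection described above can be sketched as follows. This is an illustrative sketch under our assumptions: the helper name `order_sensitive_attributes` is hypothetical, the HSV sets are supplied by hand, and ties are broken by the order in which the attributes are listed. The six sample rows are invented so that their HSV counts (four for Disease, three for Occupation) match those reported for Table 7; they are not the records of Table 7.

```python
from typing import Dict, List, Sequence, Set, Tuple

Row = Dict[str, str]


def order_sensitive_attributes(rows: Sequence[Row], hsv: Dict[str, Set[str]]
                               ) -> Tuple[List[str], Dict[str, int]]:
    """Count HSVs per sensitive attribute and order the attributes so that the
    PSA (the attribute with the most HSVs) comes first, followed by the CSAs."""
    counts = {attr: sum(1 for row in rows if row[attr] in values)
              for attr, values in hsv.items()}
    # sorted() is stable even with reverse=True, so ties keep the order of `hsv`.
    order = sorted(counts, key=lambda attr: counts[attr], reverse=True)
    return order, counts


if __name__ == "__main__":
    hsv = {"Disease": {"HIV", "Cancer"}, "Occupation": {"Cook", "Driver"}}
    rows = [  # hypothetical rows, not Table 7
        {"Disease": "HIV", "Occupation": "Nurse"},
        {"Disease": "Cancer", "Occupation": "Cook"},
        {"Disease": "Flu", "Occupation": "Teacher"},
        {"Disease": "HIV", "Occupation": "Police"},
        {"Disease": "Cancer", "Occupation": "Cook"},
        {"Disease": "Flu", "Occupation": "Driver"},
    ]
    order, counts = order_sensitive_attributes(rows, hsv)
    print("PSA:", order[0], "CSA:", order[1:], counts)
```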

Next, we distribute the sensitive values evenly and form buckets, or QI groups, according to the parameter k of k-anonymity. We employ the distributional model of [20] but omit generalization and suppression. Since steps 1 to 3 of our distributional model have already been performed, we proceed with steps 4 and 5. The distribution rules are as follows:

Distribute the tuples that contain HSVs in the PSA evenly across the buckets or groups:

If all tuples containing HSVs in the PSA have been distributed evenly but some buckets or groups are not yet filled, put tuples containing HSVs in the CSA into those groups or buckets; otherwise, put the tuples into buckets randomly.

If every bucket has been filled with a tuple containing an HSV from the PSA but HSVs in the PSA remain, start again from the first bucket and continue distributing the remaining tuples that contain HSVs.

Check whether the table satisfies the p-sensitive privacy guarantee. If it does not, exchange a non-HSV tuple in the violating bucket with a tuple from another bucket, provided that the other bucket still satisfies p-sensitivity after the exchange.
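Steps 4 and 5 can be sketched in Python as a round-robin assignment followed by a swap-based repair. This is a simplified sketch, not the exact model of [20]. The helper names are hypothetical; p-sensitivity is taken to mean that every sensitive attribute has at least p distinct values in each bucket; the number of buckets is ⌊n/k⌋, with leftover tuples enlarging the first buckets; and the repair tries a single exchange per violating bucket.

```python
import random
from typing import Callable, Dict, List, Optional, Sequence, Set

Row = Dict[str, str]


def is_p_sensitive(bucket: Sequence[Row], sa: Sequence[str], p: int) -> bool:
    """True if every sensitive attribute shows at least p distinct values."""
    return all(len({row[a] for row in bucket}) >= p for a in sa)


def try_swap(b: List[Row], buckets: List[List[Row]], sa: Sequence[str], p: int,
             is_hsv: Callable[[Row], bool]) -> bool:
    """Exchange one non-HSV tuple of bucket b with a tuple of another bucket so
    that both buckets are p-sensitive; return False if no such swap exists."""
    for x, r in enumerate(b):
        if is_hsv(r):
            continue                              # only non-HSV tuples move out
        for other in buckets:
            if other is b:
                continue
            for y, s in enumerate(other):
                b[x], other[y] = s, r             # tentative exchange
                if is_p_sensitive(b, sa, p) and is_p_sensitive(other, sa, p):
                    return True
                b[x], other[y] = r, s             # undo
    return False


def distribute(rows: Sequence[Row], hsv: Dict[str, Set[str]], psa: str,
               csa: List[str], k: int, p: int = 2,
               seed: Optional[int] = None) -> List[List[Row]]:
    rng = random.Random(seed)
    nb = max(1, len(rows) // k)                   # number of buckets
    cap = [k] * nb
    for j in range(len(rows) - nb * k):           # leftovers enlarge early buckets
        cap[j % nb] += 1

    sa = [psa] + csa

    def hsv_in(row: Row, attrs: Sequence[str]) -> bool:
        return any(row[a] in hsv[a] for a in attrs)

    psa_rows = [r for r in rows if hsv_in(r, [psa])]
    csa_rows = [r for r in rows if not hsv_in(r, [psa]) and hsv_in(r, csa)]
    rest = [r for r in rows if not hsv_in(r, sa)]
    rng.shuffle(rest)                             # LSV-only tuples go in randomly

    buckets: List[List[Row]] = [[] for _ in range(nb)]
    i = 0
    # Round robin: PSA-HSV tuples first (wrapping back to bucket 0 when every
    # bucket has one), then CSA-HSV tuples, then the shuffled remainder.
    for r in psa_rows + csa_rows + rest:
        while len(buckets[i % nb]) >= cap[i % nb]:
            i += 1                                # skip full buckets
        buckets[i % nb].append(r)
        i += 1

    for b in buckets:                             # step 5: p-sensitivity repair
        if not is_p_sensitive(b, sa, p):
            try_swap(b, buckets, sa, p, lambda r: hsv_in(r, sa))
    return buckets
```

Because PSA-HSV tuples are dealt out first, no bucket receives more than ⌈h/nb⌉ of them, where h is their total count.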

Table 8 shows the result of the sensitive attribute distribution, which satisfies p-sensitivity. Table 8 shows that each group satisfies k-anonymity with k = 3 and also satisfies p-sensitivity, because each group contains more than one distinct sensitive value. We did not generalize or suppress this table because it is not the released table. We anonymize it in the overlapped slicing stage, again without generalization or suppression, because generalizing or suppressing a table causes information loss.

Now, we formalize the distribution. If T is a microdata table, then T can be viewed horizontally and vertically. Horizontally, microdata T is:

where T is a microdata table that has been anonymized, QI are the quasi-identifier attributes, and S are the sensitive attributes. The quasi-identifier attributes are generalized and/or suppressed to obtain anonymity groups. The ith sensitive attribute contains some HSVs (High-Sensitive Values) and LSVs (Less Sensitive Values). These form the microdata table horizontally, while vertically, T is:

where T is a microdata table that has been anonymized, Bi is a bucket or quasi-identifier group that contains at least k records, k is the parameter in k-anonymity, and ri is the ith record. The distribution then places records in their matching buckets, subject to a requirement on the number of HSVs in each bucket relative to k. This requirement cannot be satisfied if the total number of HSVs is too large, since the HSVs in a bucket could then exceed k.

4.2. Slicing on Multiple Sensitive Attributes

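Slicing of microdata T is performed by attribute partition and tuple partition. Microdata T can be viewed as a collection of attributes $A = \{A_1, A_2, \ldots, A_d\}$ with attribute domains $D[A_1], D[A_2], \ldots, D[A_d]$. A tuple $t \in T$ can be represented as $t = (t[A_1], t[A_2], \ldots, t[A_d])$, where $t[A_i]$ is the value of tuple t for attribute $A_i$, $1 \le i \le d$.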

An attribute partition divides the attributes into several subsets such that each attribute belongs to exactly one subset; each subset of attributes is called a column. Specifically, if there are c columns $C_1, C_2, \ldots, C_c$, then $\bigcup_{i=1}^{c} C_i = A$ and $C_{i_1} \cap C_{i_2} = \emptyset$ for any $1 \le i_1 \ne i_2 \le c$. With a single sensitive attribute, the sensitive attribute is placed in the last column for ease of representation. With multiple sensitive attributes, the last column $C_c$ contains more than one attribute and represents the multiple sensitive attributes.

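A tuple partition divides T into several subsets such that each tuple belongs to exactly one subset; each subset of tuples is called a bucket. If there are b buckets $B_1, B_2, \ldots, B_b$, then $\bigcup_{i=1}^{b} B_i = T$ and $B_{i_1} \cap B_{i_2} = \emptyset$ for any $1 \le i_1 \ne i_2 \le b$. At this point, a bucket is not yet generalized or suppressed; whether it is depends on the framework used to anonymize the microdata.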
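The two partitions can be sketched as follows, assuming tuples are dictionaries and the tuple partition (the list of buckets) is already given. The helper names are hypothetical: `check_attribute_partition` tests the conditions above (the columns cover A and are pairwise disjoint), and `slice_table` projects every bucket onto every column. No permutation is applied here, since the text introduces random permutation only for overlapped slicing.

```python
from typing import Dict, List, Sequence, Tuple

Row = Dict[str, object]
SlicedBucket = List[List[Tuple[object, ...]]]  # one list of value tuples per column


def check_attribute_partition(attrs: Sequence[str],
                              columns: Sequence[Sequence[str]]) -> None:
    """Raise ValueError unless every attribute lies in exactly one column,
    i.e. the union of the columns is A and the columns are disjoint."""
    flat = [a for col in columns for a in col]
    if sorted(flat) != sorted(attrs) or len(set(flat)) != len(flat):
        raise ValueError("columns are not a partition of the attributes")


def slice_table(buckets: Sequence[Sequence[Row]],
                columns: Sequence[Sequence[str]]) -> List[SlicedBucket]:
    """Project each bucket of a tuple partition onto each column."""
    return [[[tuple(row[a] for a in col) for row in bucket] for col in columns]
            for bucket in buckets]


if __name__ == "__main__":
    cols = [["Age", "Sex"], ["Zip", "Disease", "Occupation"]]
    check_attribute_partition(["Age", "Sex", "Zip", "Disease", "Occupation"], cols)
    bucket = [  # hypothetical records
        {"Age": 39, "Sex": "M", "Zip": 11360, "Disease": "HIV", "Occupation": "Cook"},
        {"Age": 23, "Sex": "F", "Zip": 11222, "Disease": "Flu", "Occupation": "Nurse"},
    ]
    print(slice_table([bucket], cols))
```

The sample column layout mirrors the two-column slicing used in this section, with `Zip` standing in for Zip code.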

In this stage, we apply a slicing method for multiple sensitive attributes, adapted from slicing for a single sensitive attribute [15]. This stage partitions the attributes and the tuples, and then forms columns that contain more than one attribute.

Table 9 shows the result of the attribute partition, which partitions the table vertically by attribute. Slicing in Table 9 produces five columns, each containing exactly one attribute: Age, Zip code, and Sex are QI attributes, while Disease and Occupation are sensitive attributes.

Table 10 shows the result of the tuple partition, which partitions the table horizontally. Each horizontal partition is treated as a bucket whose number of tuples follows k in k-anonymity. Next, slicing forms two columns, each containing several attributes: one column holds the QI attributes and the other holds the sensitive attributes. However, one of the three QI attributes is moved into the sensitive column to preserve attribute correlation. The two most correlated attributes, Age and Sex, are joined in the first column, while Zip code is placed in the second column.
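The text does not state how the correlation between attributes is measured. As an assumption, the sketch below uses the mean-square contingency coefficient φ², normalized by min(d1, d2) − 1 so that it lies in [0, 1], and treats attribute values as categorical (a numeric attribute such as Age would first need discretizing). The function names and the four sample rows are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, Sequence, Tuple

Row = Dict[str, object]


def phi_squared(rows: Sequence[Row], a: str, b: str) -> float:
    """Mean-square contingency coefficient between categorical attributes a, b:
    phi^2 = 1/(min(d_a, d_b) - 1) * sum_ij (f_ij - f_i * f_j)^2 / (f_i * f_j),
    where f are relative frequencies and d_a, d_b are the numbers of values."""
    n = len(rows)
    fa = Counter(r[a] for r in rows)
    fb = Counter(r[b] for r in rows)
    fab = Counter((r[a], r[b]) for r in rows)
    d = min(len(fa), len(fb))
    if d < 2:
        return 0.0  # a constant attribute cannot be correlated with anything
    total = 0.0
    for va, ca in fa.items():
        for vb, cb in fb.items():
            expected = (ca / n) * (cb / n)
            total += (fab[(va, vb)] / n - expected) ** 2 / expected
    return total / (d - 1)


def most_correlated_pair(rows: Sequence[Row], attrs: Sequence[str]) -> Tuple[str, str]:
    """Return the pair of attributes with the largest phi^2."""
    return max(combinations(attrs, 2), key=lambda pair: phi_squared(rows, *pair))


if __name__ == "__main__":
    rows = [  # hypothetical, already discretized values
        {"Age": "young", "Sex": "F", "Zip": "A"},
        {"Age": "young", "Sex": "F", "Zip": "B"},
        {"Age": "old", "Sex": "M", "Zip": "A"},
        {"Age": "old", "Sex": "M", "Zip": "B"},
    ]
    print(most_correlated_pair(rows, ["Age", "Sex", "Zip"]))
```

The pair returned would form the first column, and the remaining QI attribute would join the sensitive column.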

Table 11 shows the result of the slicing method. The first column has two attributes, Age and Sex, and the second column contains three attributes, Zip code, Disease, and Occupation. Zip code is a QI attribute, but it is put in the column containing the sensitive attributes to preserve attribute correlation.

4.3. Overlapped Slicing on Multiple Sensitive Attributes

Overlapped slicing places one sensitive attribute from the sensitive column into the QI column as well, so that the attribute appears in both columns. It extends the slicing method, and we apply it to multiple sensitive attributes. In overlapped slicing, the table not only follows the slicing method, but the tuples in each bucket are also randomly permuted. The aim is to improve data utility, while privacy is maintained by permuting the tuples within each bucket.

The attribute chosen from the sensitive column is a CSA, because a CSA is unlikely to embarrass its holder. A microdata table T is given as:

If a sensitive attribute is a CSA, it is placed in the QI column while remaining in the sensitive column. Vertically, the sensitive values in each bucket are randomly permuted.

Each tuple in a bucket consists of its QI values and the values of its sensitive attributes; for record r1, these are the values of attributes S1, S2, and S3. We then randomly permute the sensitive values within the bucket, so that, for example, record 1 in the bucket may carry the sensitive values that originally belonged to record 3 in the same bucket.

In Table 12, Disease is overlapped in the first and second columns. Overlapping this attribute provides better data utility [15]. To maintain the privacy guarantee, the tuples in each bucket of the second column are randomly permuted.

Table 13 depicts how random permutation is performed in overlapped slicing. This random permutation creates fake tuples, which do not affect information loss. In Table 13, the values in each bucket of the second column are randomly permuted to break the linkage between the two columns. As shown in Table 12, in the first bucket, the values {(39, M, HIV), (29, M, Cancer), (23, F, Flu)} are randomly permuted, as are the values {(11360, HIV, Cook), (11222, Flu, Nurse), (12666, Cancer, Police)}, so the linkage between the two columns within a bucket is disguised. For example, the first tuple of group 2, {(35, M, Cancer), (11660, Cancer, Teacher)}, is permuted to {(35, M, Cancer), (11234, Flu, Police)}. The latter is a fake tuple because it differs from the original tuple.
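The construction can be sketched in Python. The sketch is ours, not the authors' implementation: `overlapped_slice` is a hypothetical name, rows are dictionaries, and only the second column is shuffled (shuffling both columns independently gives the same random re-pairing up to row order). The sample bucket re-pairs the first-bucket values quoted above under the assumption that each original record's two columns agree on Disease.

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple

Row = Dict[str, object]
SlicedTuple = Tuple[Tuple[object, ...], Tuple[object, ...]]


def overlapped_slice(buckets: Sequence[Sequence[Row]], col1: Sequence[str],
                     col2: Sequence[str],
                     seed: Optional[int] = None) -> List[List[SlicedTuple]]:
    """Two-column overlapped slicing (hypothetical helper).

    col1 and col2 must share at least one attribute (the overlapped one).
    Inside every bucket the column-2 value tuples are shuffled, which breaks
    the link between the columns and may create fake tuples.
    """
    if not set(col1) & set(col2):
        raise ValueError("the columns must share an overlapped attribute")
    rng = random.Random(seed)
    sliced: List[List[SlicedTuple]] = []
    for bucket in buckets:
        first = [tuple(r[a] for a in col1) for r in bucket]
        second = [tuple(r[a] for a in col2) for r in bucket]
        rng.shuffle(second)  # random permutation within this bucket only
        sliced.append(list(zip(first, second)))
    return sliced


if __name__ == "__main__":
    bucket = [  # assumed pairing of the first-bucket values
        {"Age": 39, "Sex": "M", "Zip": 11360, "Disease": "HIV", "Occupation": "Cook"},
        {"Age": 29, "Sex": "M", "Zip": 12666, "Disease": "Cancer", "Occupation": "Police"},
        {"Age": 23, "Sex": "F", "Zip": 11222, "Disease": "Flu", "Occupation": "Nurse"},
    ]
    for row in overlapped_slice([bucket], ["Age", "Sex", "Disease"],
                                ["Zip", "Disease", "Occupation"], seed=7)[0]:
        print(row)
```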

We assume the table has been clustered into buckets based on the quasi-identifier. We briefly formalize overlapped slicing below, where OT denotes the overlapped table.

Create Overlapped Table

Here, Q is the set of quasi-identifier attributes and S is the set of sensitive attributes. Q and S are then overlapped: a sensitive attribute is put into Q if and only if it is a CSA (Contributory Sensitive Attribute).

If there is more than one CSA, the CSA to be overlapped is chosen randomly.

Create Fake Tuples (by Random Tuple Permutation)

For each bucket $b_i$, the tuples are randomly permuted, where $b_i$ is a bucket containing tuples $t_i$ and $t_i$ is the $i$th tuple in the bucket.

Overlapped slicing thus yields a microdata table that satisfies both attribute overlapping and random tuple permutation within each bucket.
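Whether a permuted tuple is fake can be checked mechanically. A minimal sketch, assuming hypothetical helpers `is_fake` and `count_fake`, and assuming that a sliced tuple counts as fake when no original record in the bucket matches both of its column values. The sample reuses the group 2 example from this section; the second original record's Age and Sex are invented for illustration.

```python
from typing import Dict, Sequence, Tuple

Row = Dict[str, object]
SlicedTuple = Tuple[Tuple[object, ...], Tuple[object, ...]]


def is_fake(tup: SlicedTuple, col1: Sequence[str], col2: Sequence[str],
            originals: Sequence[Row]) -> bool:
    """A sliced tuple is fake if no original record of the bucket has both its
    column-1 values and its column-2 values."""
    first, second = tup
    return not any(tuple(r[a] for a in col1) == first
                   and tuple(r[a] for a in col2) == second
                   for r in originals)


def count_fake(sliced_bucket: Sequence[SlicedTuple], col1: Sequence[str],
               col2: Sequence[str], originals: Sequence[Row]) -> int:
    """Number of fake tuples in one sliced bucket."""
    return sum(is_fake(t, col1, col2, originals) for t in sliced_bucket)


if __name__ == "__main__":
    col1, col2 = ["Age", "Sex", "Disease"], ["Zip", "Disease", "Occupation"]
    originals = [
        {"Age": 35, "Sex": "M", "Zip": 11660, "Disease": "Cancer", "Occupation": "Teacher"},
        {"Age": 41, "Sex": "F", "Zip": 11234, "Disease": "Flu", "Occupation": "Police"},
    ]
    permuted = [((35, "M", "Cancer"), (11234, "Flu", "Police")),
                ((41, "F", "Flu"), (11660, "Cancer", "Teacher"))]
    print(count_fake(permuted, col1, col2, originals))
```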