Perceptual Fusion of Electronic Chart and Marine Radar Image

Abstract

1. Introduction

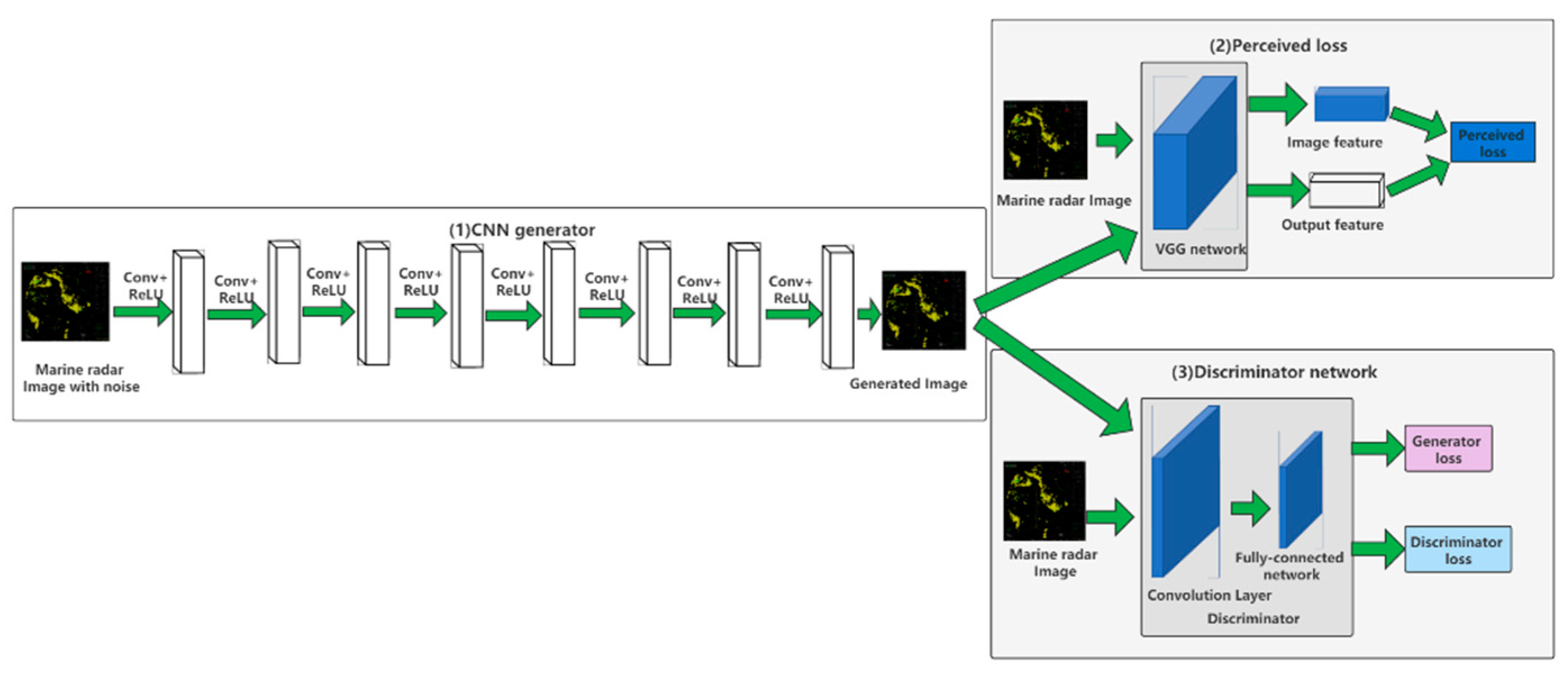

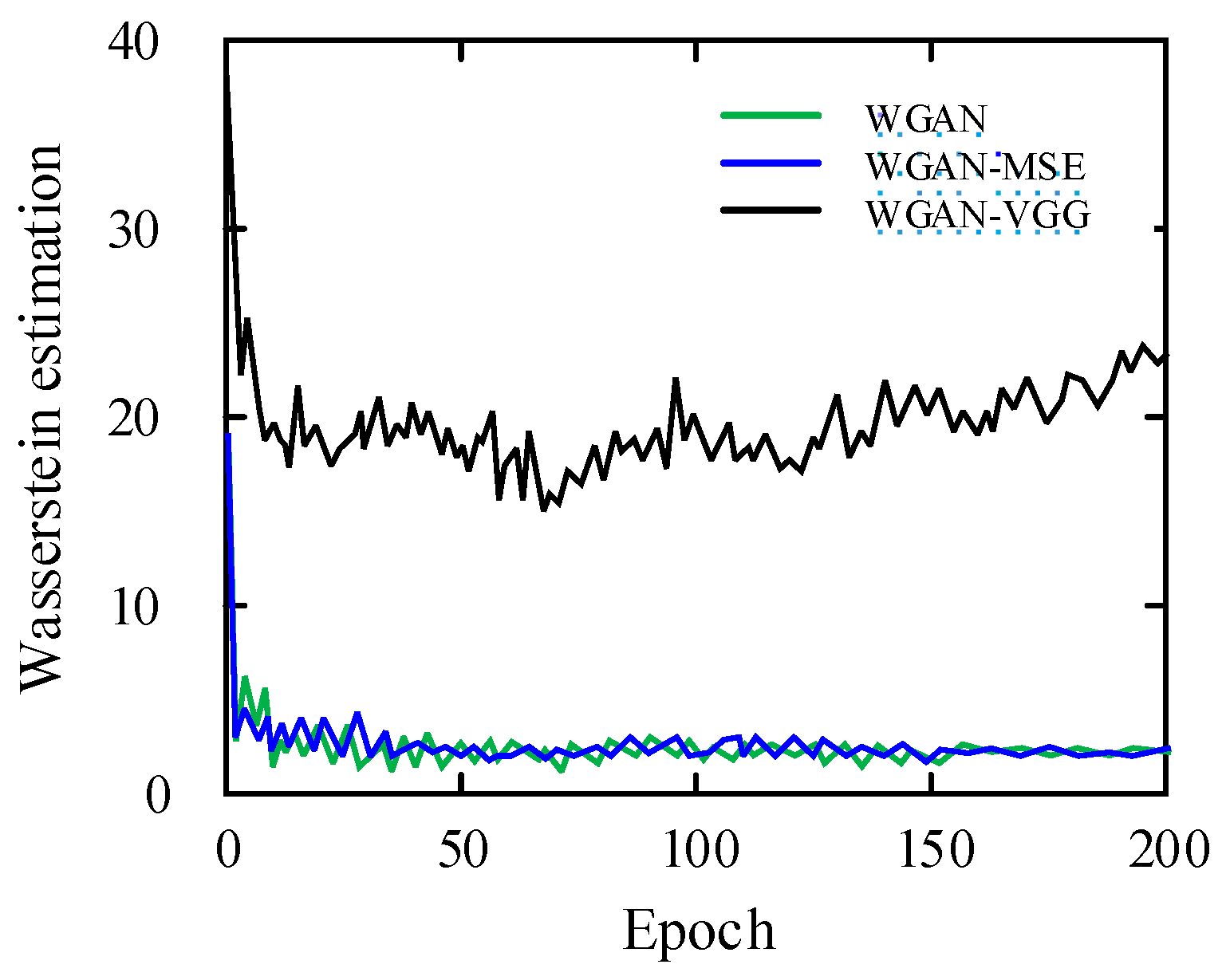

2. Image Denoising Algorithm Based on WGAN

3. Image Registration

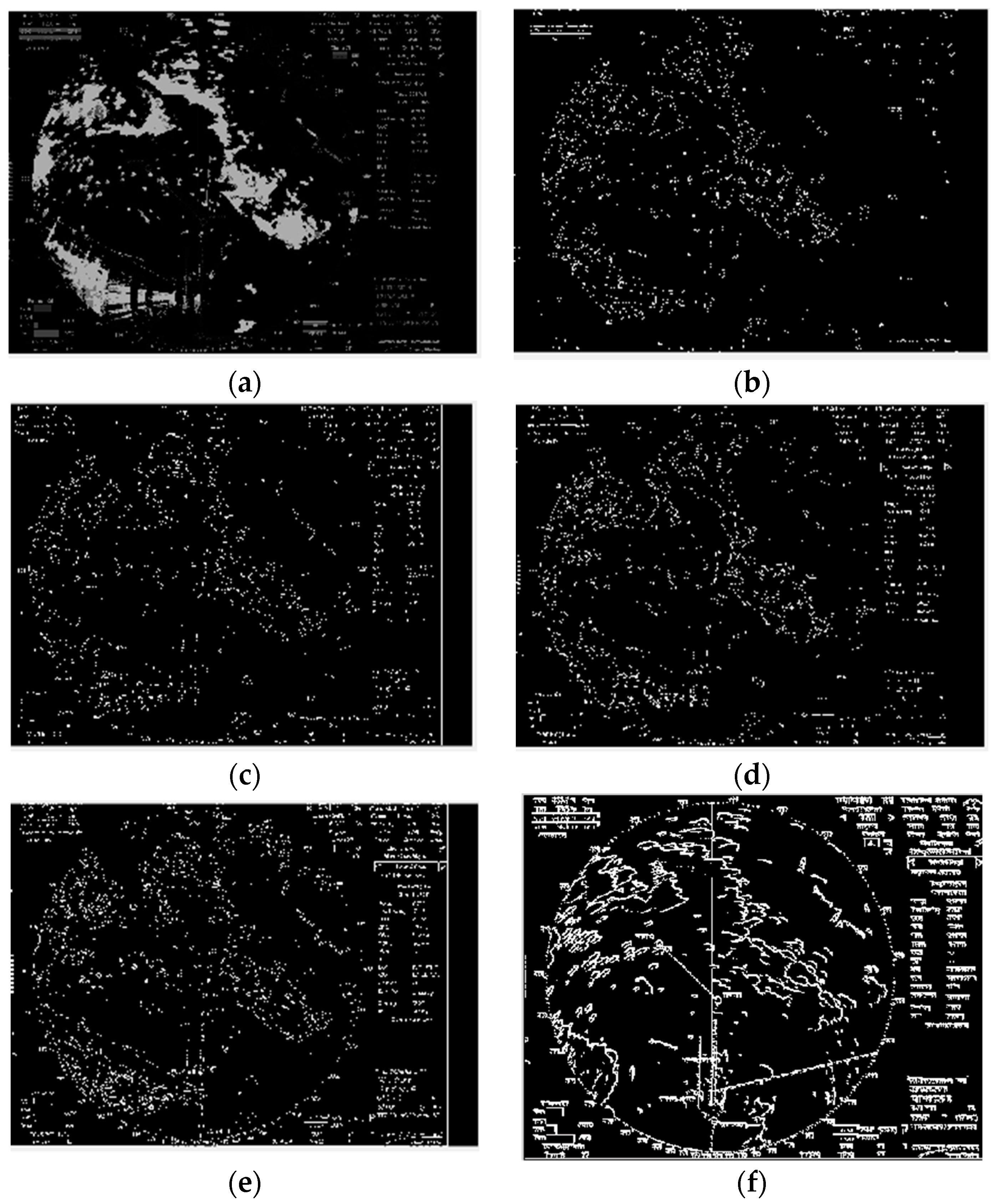

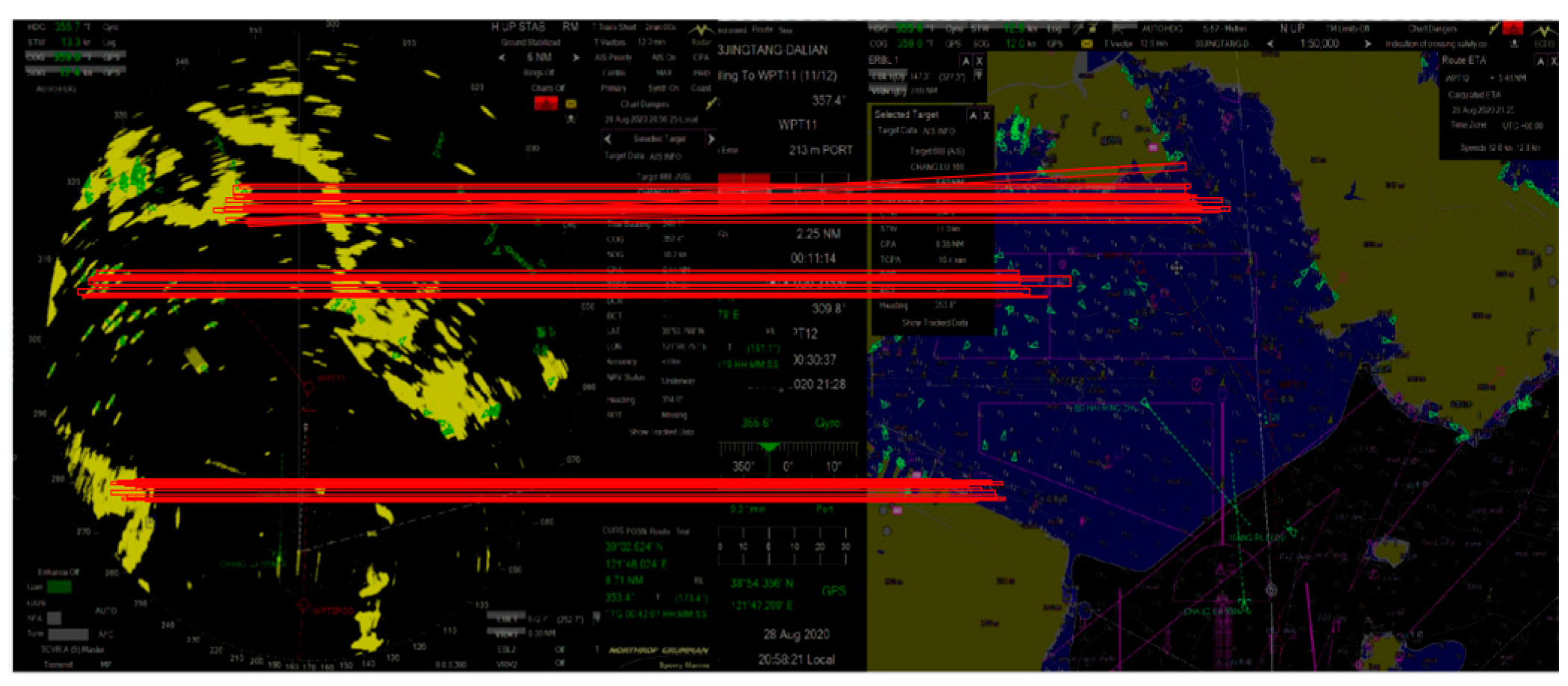

3.1. Local Adaptive Canny Edge Detection

- Choose the number of sub-images into which the marine radar echo image is divided, and segment the entire image accordingly.

- Calculate the local high threshold Qs and low threshold Qe for each sub-image; based on the experiments in this research, Qe is set to 0.4 Qs.

- Apply a Gaussian filter to suppress Gaussian noise, and calculate the magnitude and direction of the gradient [21].

- Apply non-maximum suppression to the gradient magnitudes to remove non-edge pixels and retain candidate edges, highlighting the most probable edge pixels.

- Use Qs and Qe to identify and link the edges of each sub-image. A pixel whose gradient magnitude is greater than Qs is marked as an edge pixel, whereas a pixel whose gradient magnitude is lower than Qe is classified as background. The processed sub-images are then merged into a complete image.
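The steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The text does not give the formula for the local high threshold Qs. The rule used here is therefore an assumption: the block mean plus `k` standard deviations of the gradient magnitude, with a small hypothetical `min_high` floor. The relation Qe = 0.4 Qs follows the text. The 5×5 Gaussian kernel with σ = 1, the Sobel operator and 8-connected hysteresis linking are also assumptions. For simplicity, smoothing, gradient computation and non-maximum suppression run on the whole image, and only the thresholds vary per block. This avoids seams where the sub-images are merged.

```python
import numpy as np


def _correlate(img, kernel):
    """Same-size 2D correlation with edge-replicated borders (pure NumPy)."""
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def _gaussian_kernel(size=5, sigma=1.0):
    """Normalized 2D Gaussian kernel (size and sigma are assumed values)."""
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def _non_max_suppression(mag, ang):
    """Keep a pixel only if it is a local maximum along its gradient direction."""
    h, w = mag.shape
    out = np.zeros_like(mag)
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            a = ang[i, j]
            if a < 22.5 or a >= 157.5:      # horizontal gradient
                n1, n2 = mag[i, j - 1], mag[i, j + 1]
            elif a < 67.5:                  # down-right diagonal (rows grow downward)
                n1, n2 = mag[i - 1, j - 1], mag[i + 1, j + 1]
            elif a < 112.5:                 # vertical gradient
                n1, n2 = mag[i - 1, j], mag[i + 1, j]
            else:                           # down-left diagonal
                n1, n2 = mag[i - 1, j + 1], mag[i + 1, j - 1]
            if mag[i, j] >= n1 and mag[i, j] >= n2:
                out[i, j] = mag[i, j]
    return out


def local_adaptive_canny(img, blocks=(2, 2), k=2.0, low_ratio=0.4, min_high=1e-6):
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    # Gaussian smoothing, then Sobel gradient magnitude and direction.
    smooth = _correlate(img, _gaussian_kernel())
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    gx, gy = _correlate(smooth, kx), _correlate(smooth, kx.T)
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0
    nms = _non_max_suppression(mag, ang)

    # Per-block high threshold Qs. HYPOTHETICAL rule: mean + k * std of the
    # block's gradient magnitude, floored at min_high. Qe = low_ratio * Qs.
    qs_map = np.zeros_like(mag)
    rows = np.linspace(0, h, blocks[0] + 1).astype(int)
    cols = np.linspace(0, w, blocks[1] + 1).astype(int)
    for r0, r1 in zip(rows[:-1], rows[1:]):
        for c0, c1 in zip(cols[:-1], cols[1:]):
            block = mag[r0:r1, c0:c1]
            qs_map[r0:r1, c0:c1] = max(block.mean() + k * block.std(), min_high)
    strong = nms > qs_map                                  # above Qs: edge
    weak = (nms > 0) & (nms >= low_ratio * qs_map)         # below Qe: background

    # Hysteresis: grow strong edges into 8-connected weak pixels until stable.
    edges = strong.copy()
    while True:
        padded = np.pad(edges, 1)
        grown = edges.copy()
        for di in (-1, 0, 1):
            for dj in (-1, 0, 1):
                grown |= padded[1 + di:1 + di + h, 1 + dj:1 + dj + w] & weak
        if np.array_equal(grown, edges):
            return edges
        edges = grown
```

On a synthetic 32×32 image with a vertical step edge, `local_adaptive_canny(img, blocks=(2, 2))` should return a boolean map that marks only the pixels next to the step. Because Qs is computed per block, a low-contrast region is judged against its own statistics rather than a single global threshold.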

3.2. Image Conversion

3.2.1. Mapping Relationship

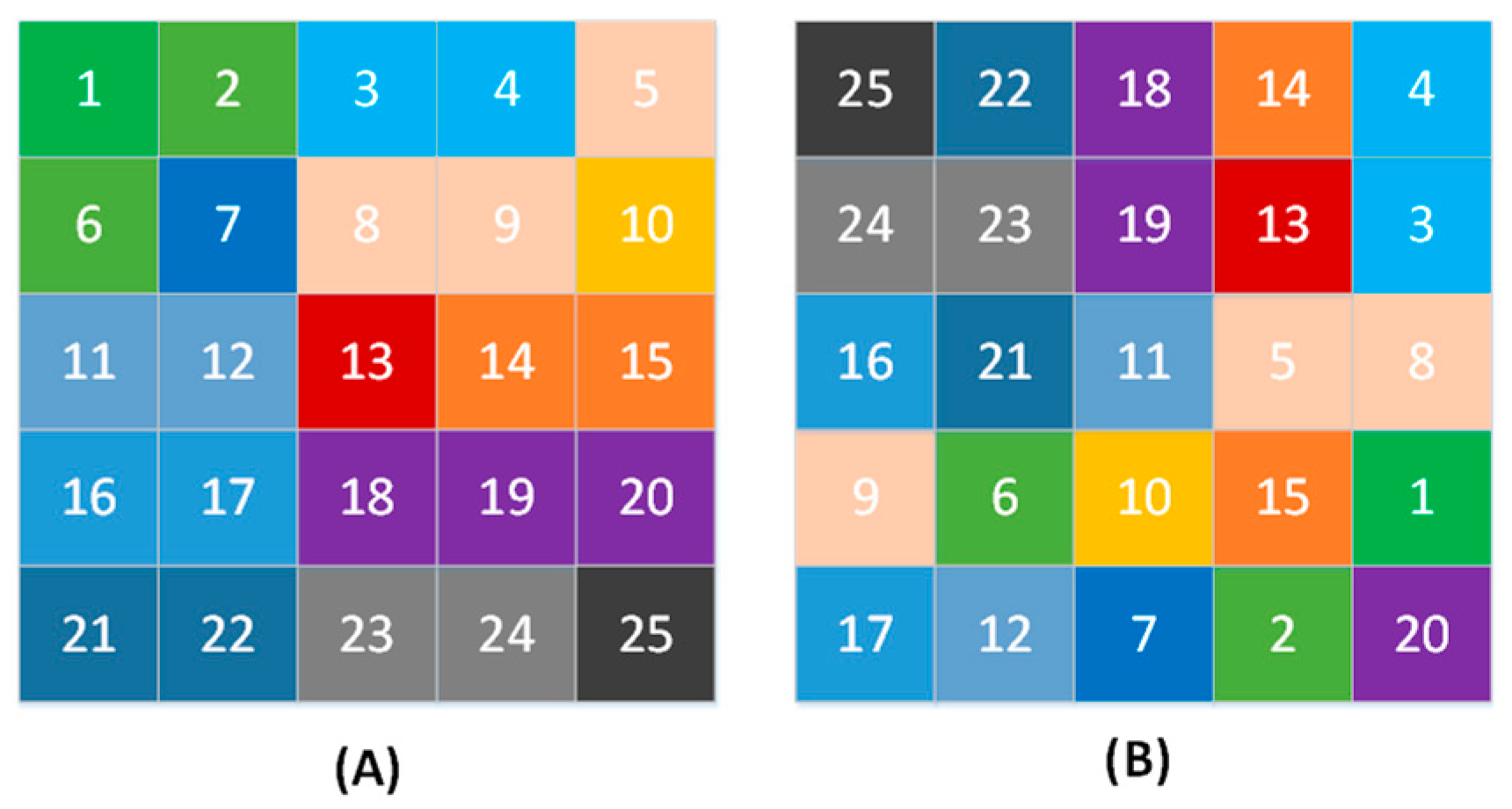

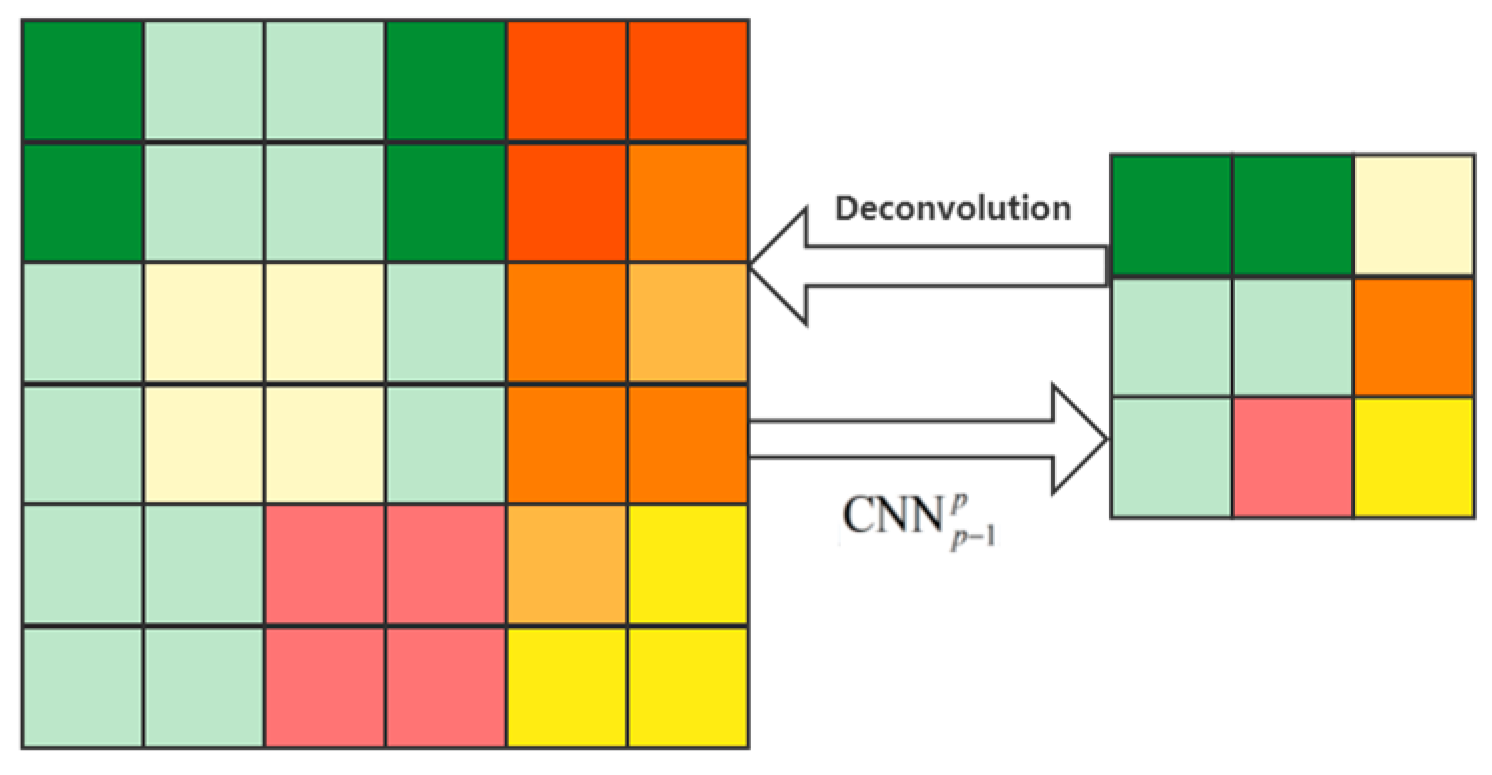

3.2.2. Restructure

3.3. Image Matching

4. Fusion Algorithm

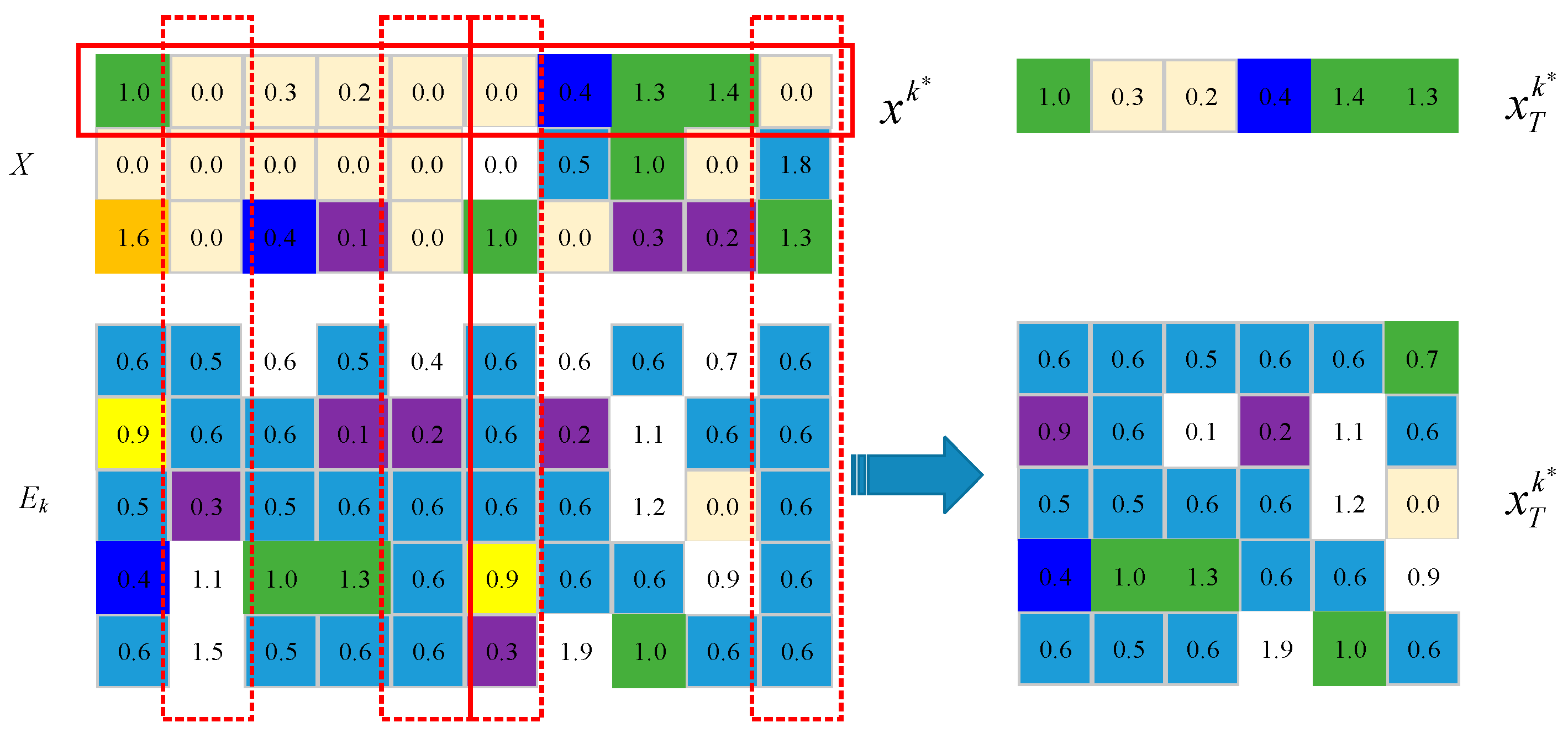

4.1. Sparse Theory

4.2. Fast Fourier Transform

4.3. Dictionary Learning

4.4. Fusion Algorithm

- For the region coefficients of radar echo image R, the approximate coefficient after image fusion is denoted as .

- Compute the relative standard deviations of and , denoted as and .

- Compute the absolute value of the difference between and , denoted as .

- Compute the energy value of .

- Compare the three values , and , and select the most representative parameter, denoted as .

5. Experimental Results and Analysis

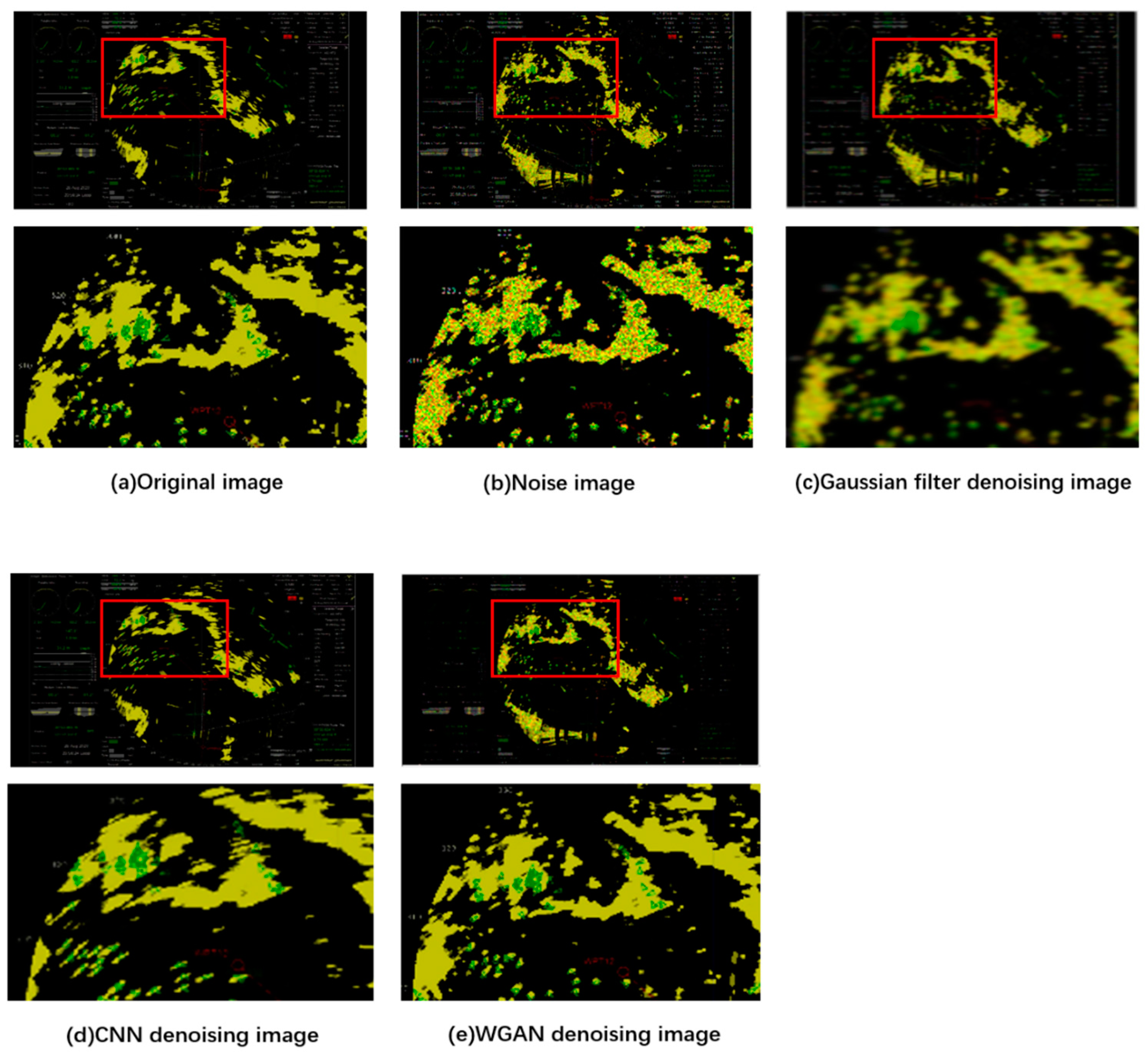

5.1. Image Denoising and Edge Detection

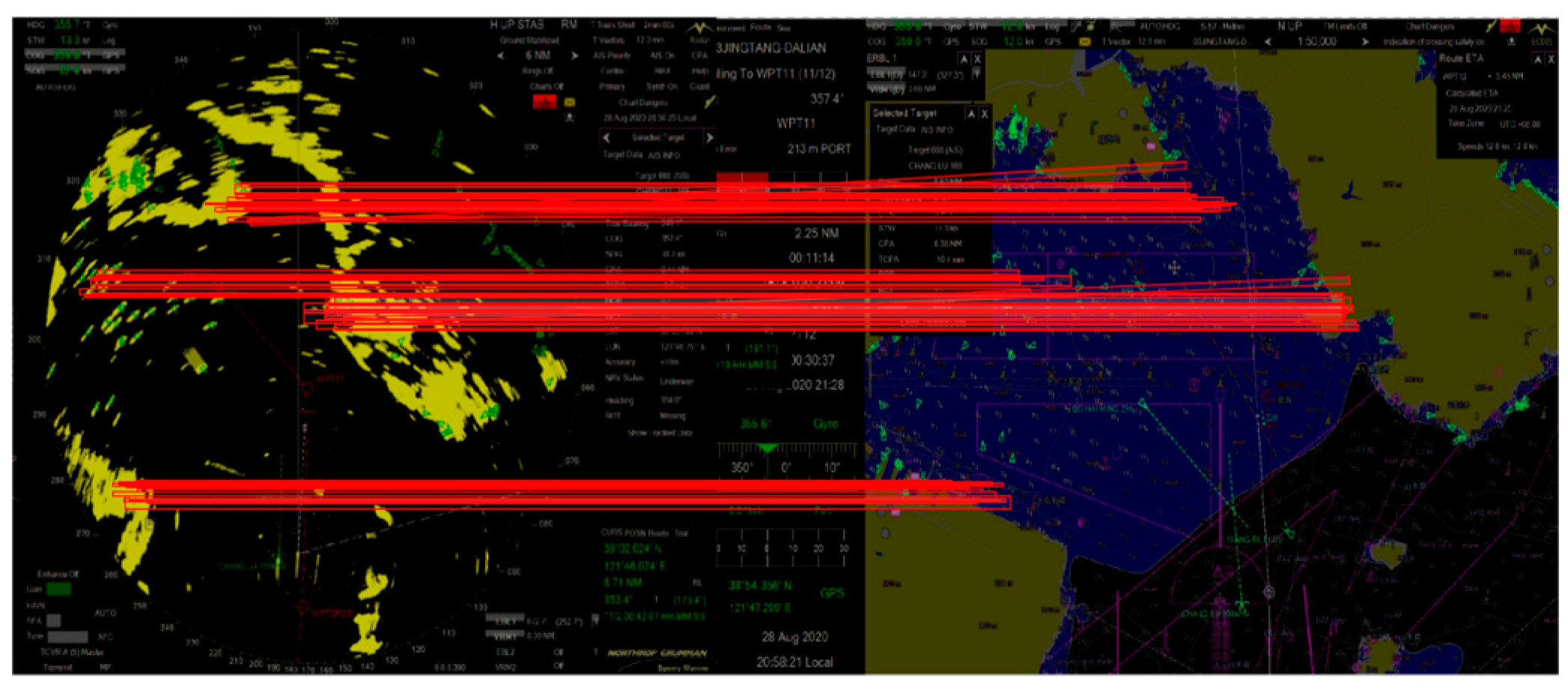

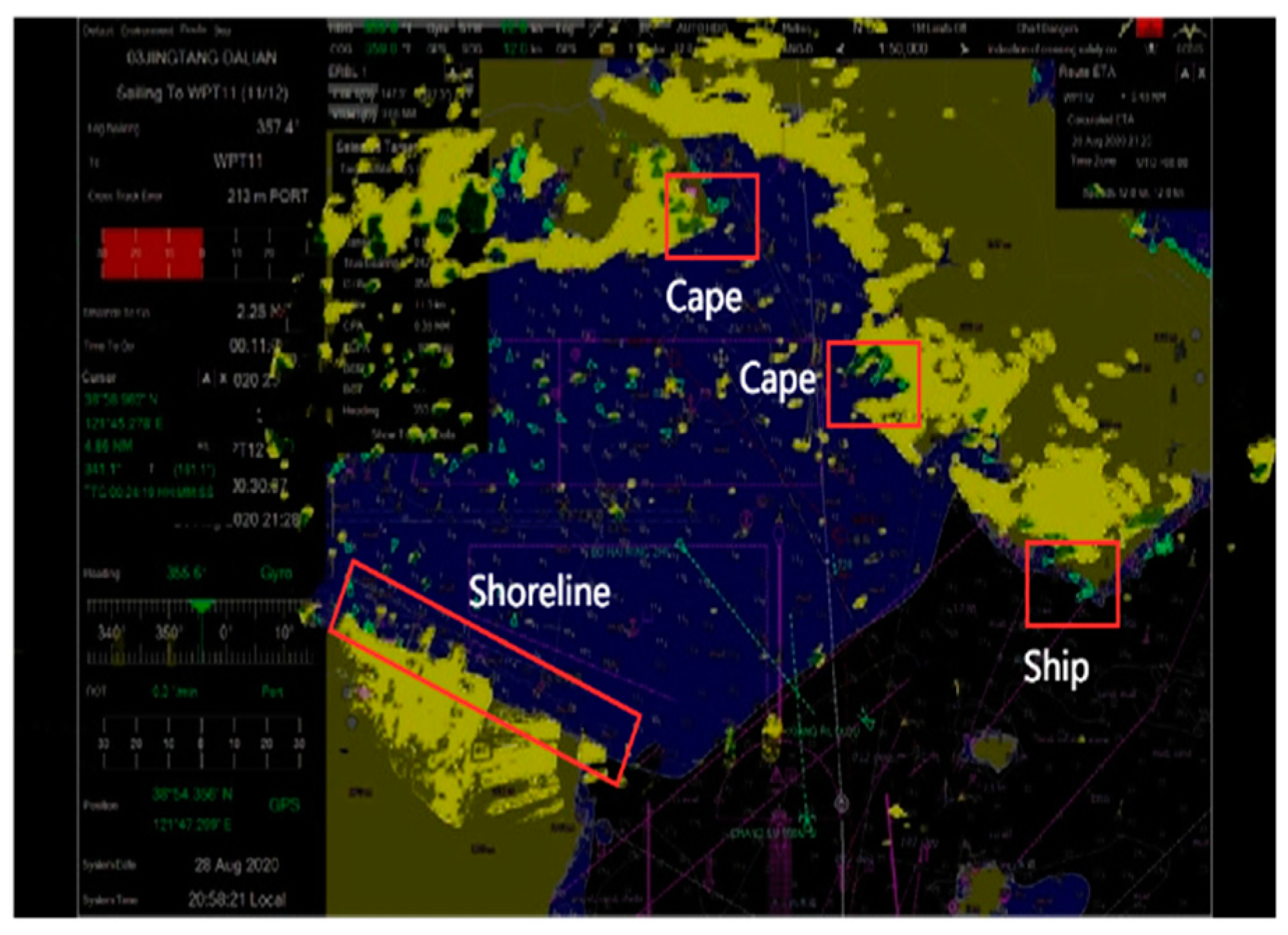

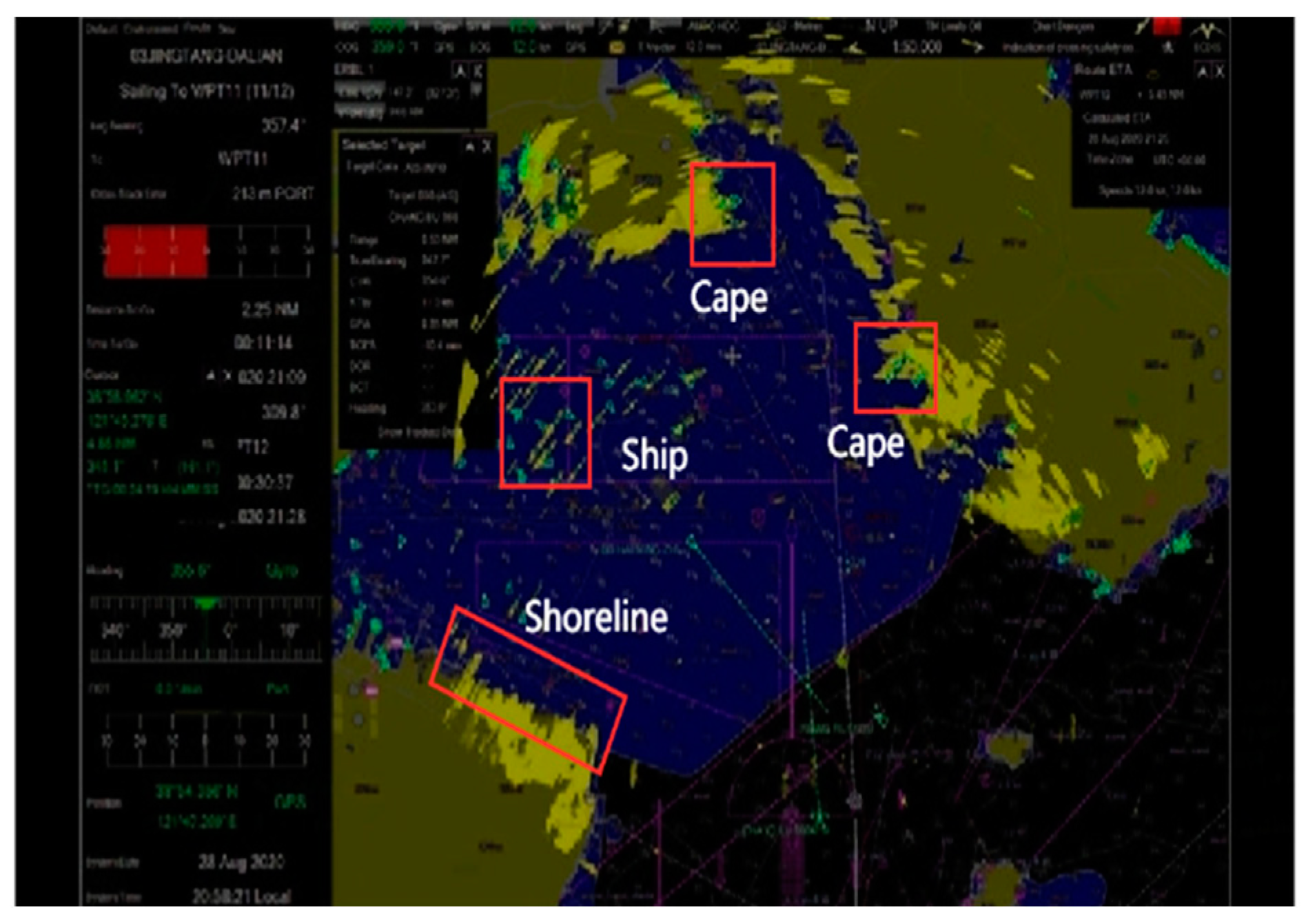

5.2. Image Registration

5.3. Image Fusion Effect

5.4. Image Fusion Quality Evaluation

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhang, C.; Cao, C.; Guo, C.; Li, T.; Guo, M. Navigation multisensor fault diagnosis approach for an unmanned surface vessel adopted particle-filter method. IEEE Sens. J. 2021, 1, 1–8.

- Abbasi, A.; Monadjemi, A.; Fang, L.; Rabbani, H.; Zhang, Y. Three-dimensional optical coherence tomography image denoising through multi-input fully-convolutional networks. Comput. Biol. Med. 2019, 108, 1–8.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Adv. Neural Inf. Process. Syst. 2014, 3, 2672–2680.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Zhang, J.; Ma, W.; Wu, Y.; Jiao, L. Multimodal remote sensing image registration based on image transfer and local features. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1210–1214.

- Meng, Y.; Zhang, Z.; Yin, H.; Ma, T. Automatic detection of particle size distribution by image analysis based on local adaptive canny edge detection and modified circular Hough transform. Micron 2018, 106, 34–41.

- Zhang, D.H.; Zhang, Y.J.; Zhang, C. Faster R-CNN based data fusion of electronic charts and radar images. Syst. Eng. Electron. 2020, 42, 1267–1273.

- Li, H.Q.; Yu, Q.C.; Fang, M. Application of Otsu thresholding method on canny operator. Comput. Eng. Des. 2008, 29, 2297–2299.

- Liu, Z.; Li, H.; Zhang, L.; Zhou, W.; Tian, Q. Cross-Indexing of Binary SIFT Codes for Large-Scale Image Search. IEEE Trans. Image Process. 2014, 23, 2047–2057.

- Zhou, Z.; Wu, Q.M.J.; Wan, S.; Sun, W.; Sun, X. Integrating SIFT and CNN Feature Matching for Partial-Duplicate Image Detection. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 4, 593–604.

- Donderi, D.C.; Mercer, R.; Hong, M.B.; Skinner, D. Simulated Navigation Performance with Marine Electronic Chart and Information Display Systems (ECDIS). J. Navig. 2004, 57, 189–202.

- Liu, W.T.; Ma, J.X.; Zhuang, X.B. Research on radar image & chart graph overlapping technique in ECDIS. Navig. China 2005, 62, 59–63.

- Yang, G.L.; Dou, Y.B.; Zheng, R.C. Method of image overlay on radar and electronic chart. J. Chin. Inert. Technol. 2010, 18, 181–184.

- Guo, M.; Guo, C.; Zhang, C.; Zhang, D.; Gao, Z. Fusion of ship perceptual information for electronic navigational chart and radar images based on deep learning. J. Navig. 2020, 73, 192–211.

- Vishwakarma, A.; Bhuyan, M.K. Image fusion using adjustable non-subsampled shearlet transform. IEEE Trans. Instrum. Meas. 2019, 68, 3367–3378.

- Yang, Q.; Yan, P.; Zhang, Y.; Yu, H.; Shi, Y.; Mou, X.; Kalra, M.K.; Zhang, Y.; Sun, L.; Wang, G. Low-dose CT image denoising using a generative adversarial network with wasserstein distance and perceptual loss. IEEE Trans. Med. Imaging 2018, 37, 1348–1357.

- Ma, X.; Hu, S.; Liu, S.; Fang, J.; Xu, S. Multi-focus image fusion based on joint sparse representation and optimum theory. Signal Process. Image Commun. 2019, 78, 125–134.

- Chen, L.; Qu, H.; Zhao, J.; Chen, B.; Principe, J.C. Efficient and robust deep learning with Correntropy-induced loss function. Neural Comput. Appl. 2016, 27, 1019–1031.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; Volume 90, pp. 770–778.

- Hu, S.; Zhang, H. Image edge detection based on FCM and improved canny operator in NSST domain. In Proceedings of the 2018 14th IEEE International Conference on Signal Processing (ICSP), Beijing, China, 12–16 August 2018; pp. 363–368.

- Bai, X.; Peng, X. Radar image series denoising of space targets based on gaussian process regression. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4659–4669.

- Liao, J.; Yao, Y.; Yuan, L.; Hua, G.; Kang, S.B. Visual attribute transfer through deep image analogy. ACM Trans. Graph. 2017, 36, 1–15.

- Yuan, L.; Zhao, Q.; Gui, L.; Cao, J. High-dimension tensor completion via gradient-based optimization under tensor-train format. Signal Process. Image Commun. 2018, 73, 53–61.

- Elhamifar, E.; Vidal, R. Sparse subspace clustering: Algorithm, theory, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2765–2781.

- Kircheis, M.; Potts, D. Direct inversion of the nonequispaced fast Fourier transform. Linear Algebra Appl. 2019, 575, 106–140.

- Kreutz-Delgado, K.; Murray, J.F.; Rao, B.D.; Engan, K.; Lee, T.-W.; Sejnowski, T.J. Dictionary learning algorithms for sparse representation. Neural Comput. 2003, 15, 349–396.

- El Helou, M.; Süsstrunk, S. Blind universal bayesian image denoising with gaussian noise level learning. IEEE Trans. Image Process. 2020, 29, 4885–4897.

- Chen, H.; Zhang, Y.; Kalra, M.K.; Lin, F.; Chen, Y.; Liao, P.; Zhou, J.; Wang, G. Low-dose CT with a residual encoder-decoder convolutional neural network (RED-CNN). IEEE Trans. Med. Imaging 2017, 36, 2524–2535.

- Li, Z.; Shi, W.; Xing, Q.; Miao, Y.; He, W.; Yang, H.; Jiang, Z. Low-dose CT image denoising with improving WGAN and hybrid loss function. Comput. Math. Methods Med. 2021, 13, 10435–10440.

- Anselmo, M.; Giammarresi, D.; Madonia, M. Sets of pictures avoiding overlaps. Int. J. Found. Comput. Sci. 2019, 30, 875–898.

| Method | Gaussian Filter | CCN | WGAN |
|---|---|---|---|
| PSNR (dB) | 39.52 | 41.39 | 42.58 |
| SSI | 0.7903 | 0.8460 | 0.9223 |
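This excerpt does not define the PSNR values reported in these tables. A common definition is PSNR = 10 log10(peak² / MSE) dB. The minimal sketch below assumes 8-bit images with a peak value of 255; the paper's own convention (peak value and reference image) is not stated here.

```python
import numpy as np


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB, assuming `peak` is the maximum pixel value."""
    reference = np.asarray(reference, dtype=float)
    test = np.asarray(test, dtype=float)
    mse = np.mean((reference - test) ** 2)  # mean squared error
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))
```

For example, a uniform error of 5 grey levels gives MSE = 25 and a PSNR of 10 log10(255² / 25), or about 34.2 dB. Higher values indicate a result closer to the reference.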

| Method | Canny | Roberts | Sobel | LoG | Improved Canny |
|---|---|---|---|---|---|
| PSNR (dB) | 40.23 | 41.85 | 41.92 | 40.36 | 43.31 |
| SSI | 0.6346 | 0.6931 | 0.7679 | 0.7240 | 0.8342 |
| Run time (s) | 0.26 | 0.37 | 0.43 | 0.35 | 0.15 |

| Method | RMSE | CCN | PSNR (dB) | Precision Rate |
|---|---|---|---|---|
| SIFT | 0.3471 | 386 | 44.3 | 95% |
| SURF | 0.4126 | 336 | 39.1 | 82% |

| Method | IVIF | Qo | Qw | CO |
|---|---|---|---|---|
| Proposed Method | 0.8663 | 0.8620 | 0.7796 | 0.9366 |
| Faster R-CNN | 0.6953 | 0.6528 | 0.7525 | 0.8311 |
| Wavelet Transform | 0.7402 | 0.7682 | 0.7678 | 0.8917 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, C.; Fang, M.; Yang, C.; Yu, R.; Li, T. Perceptual Fusion of Electronic Chart and Marine Radar Image. J. Mar. Sci. Eng. 2021, 9, 1245. https://doi.org/10.3390/jmse9111245
