Monthly and Quarterly Sea Surface Temperature Prediction Based on Gated Recurrent Unit Neural Network

Abstract

1. Introduction

2. Materials and Methods

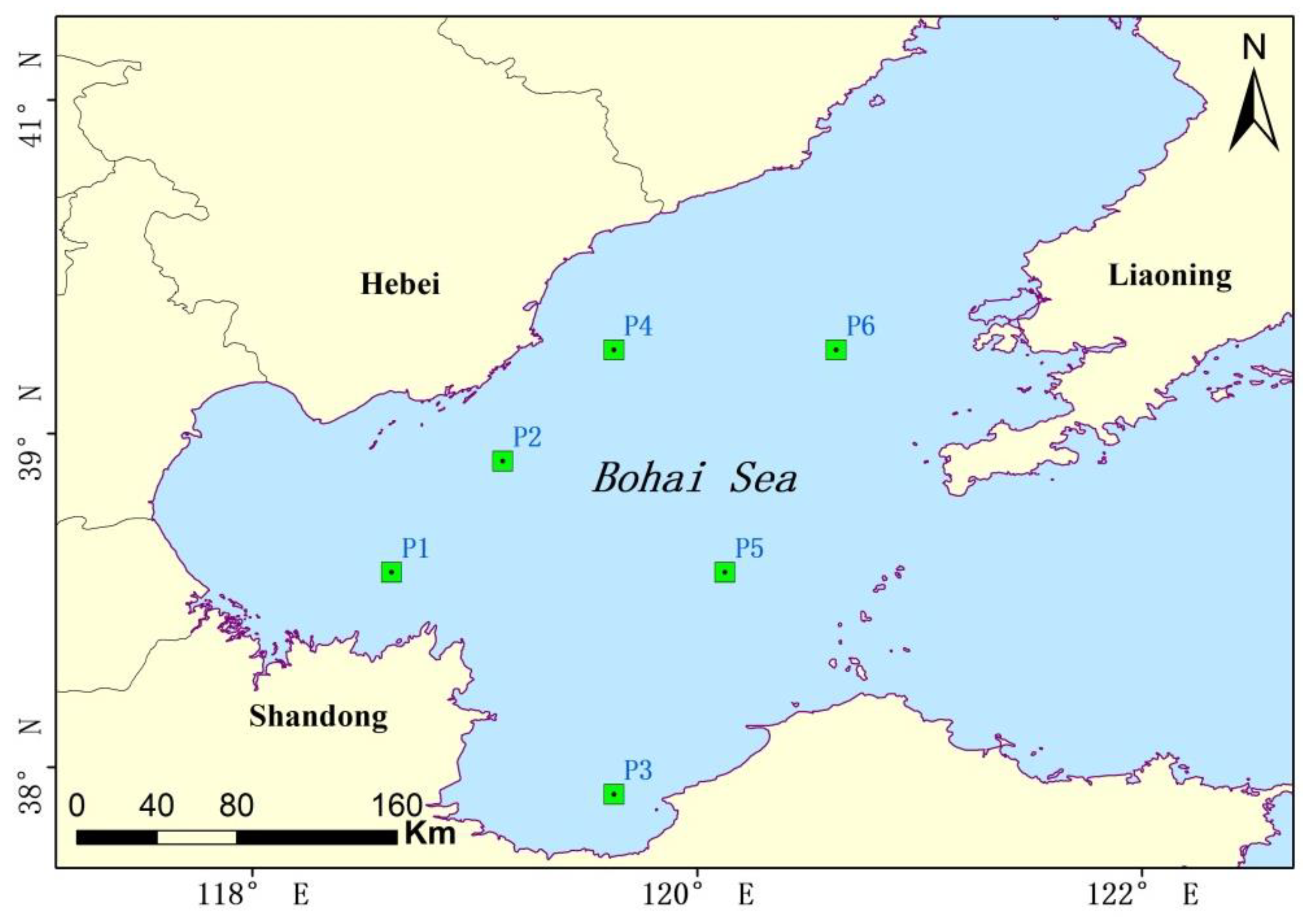

2.1. Study Area

2.2. Data Source

3. Methods

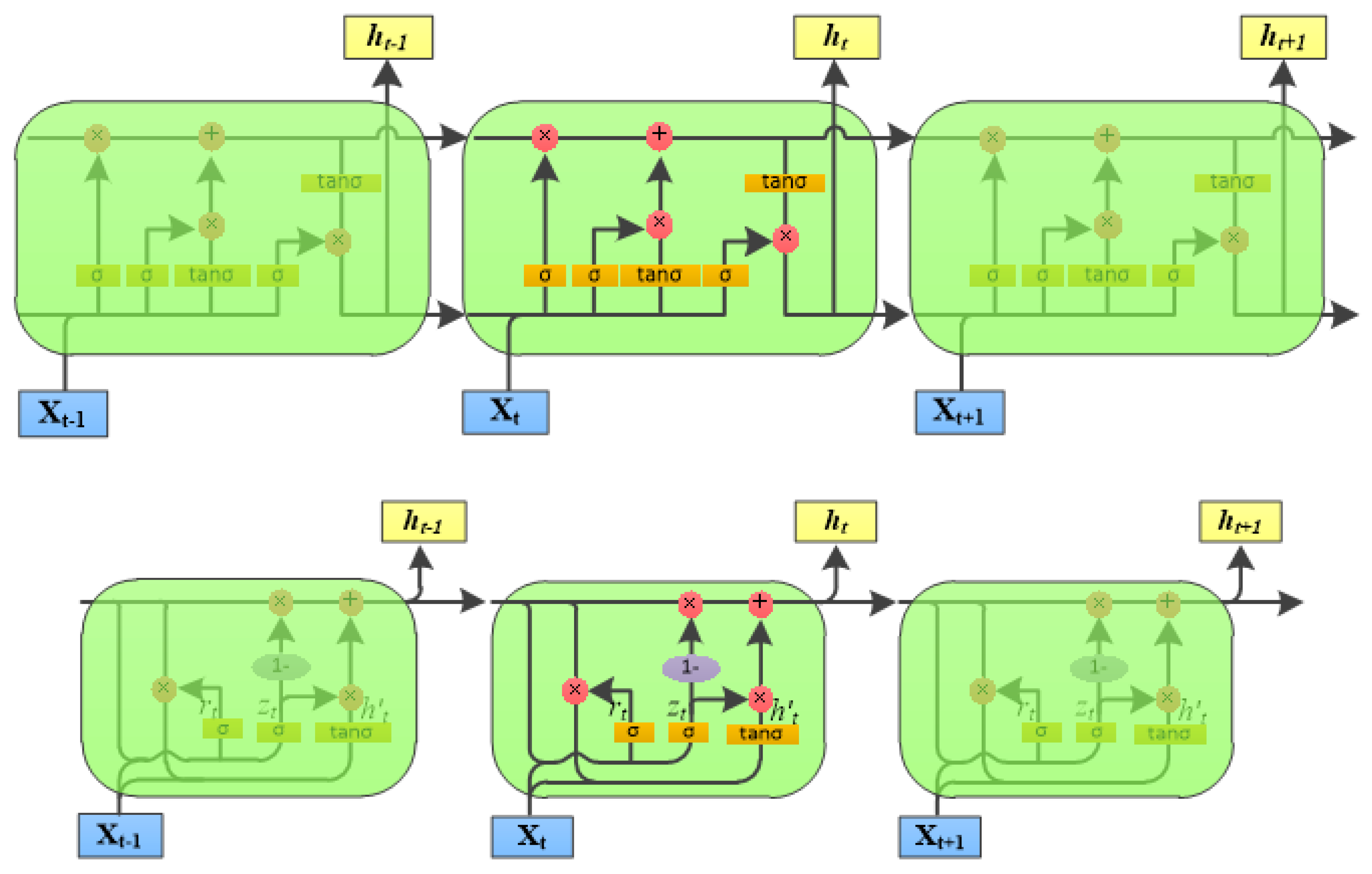

3.1. GRU Neural Network Model

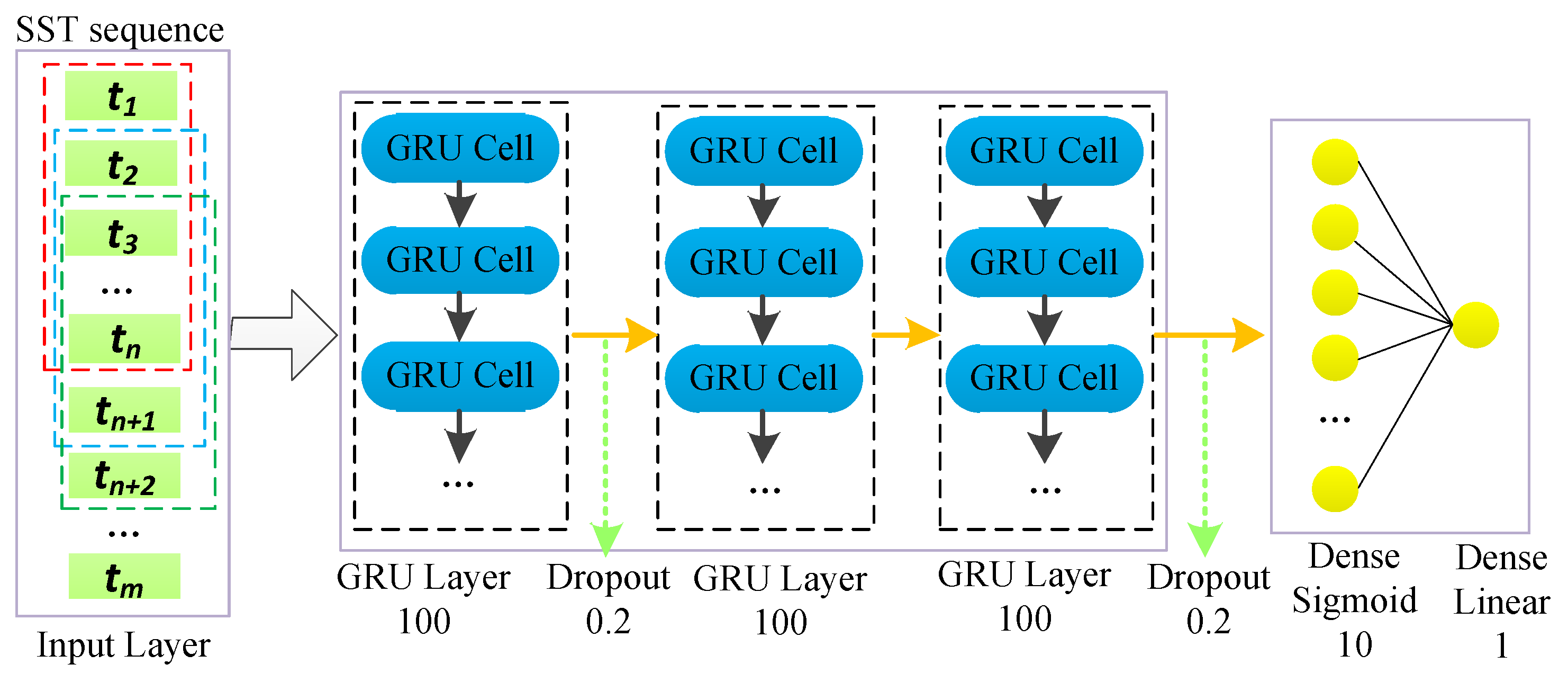

3.2. Construction of GRU Model for Medium- and Long-term SST Prediction

3.3. Data Preprocessing

4. Results and Discussion

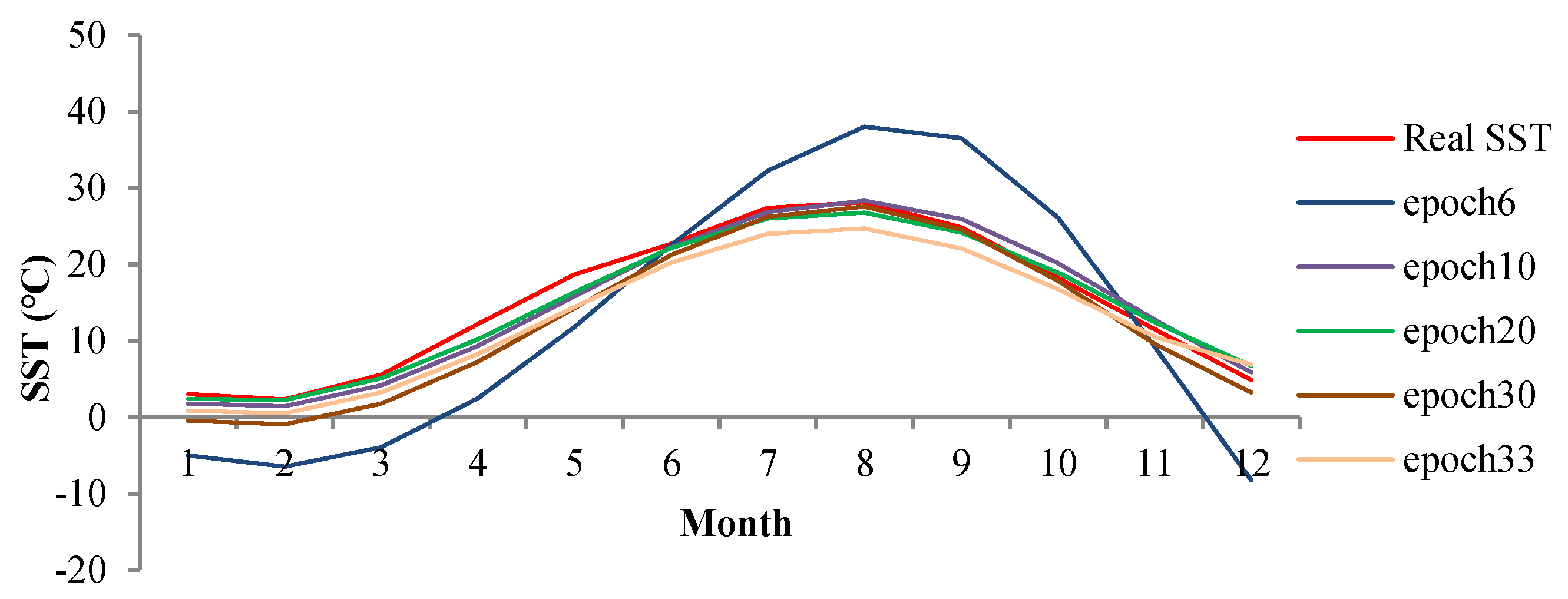

4.1. Experiment Setup

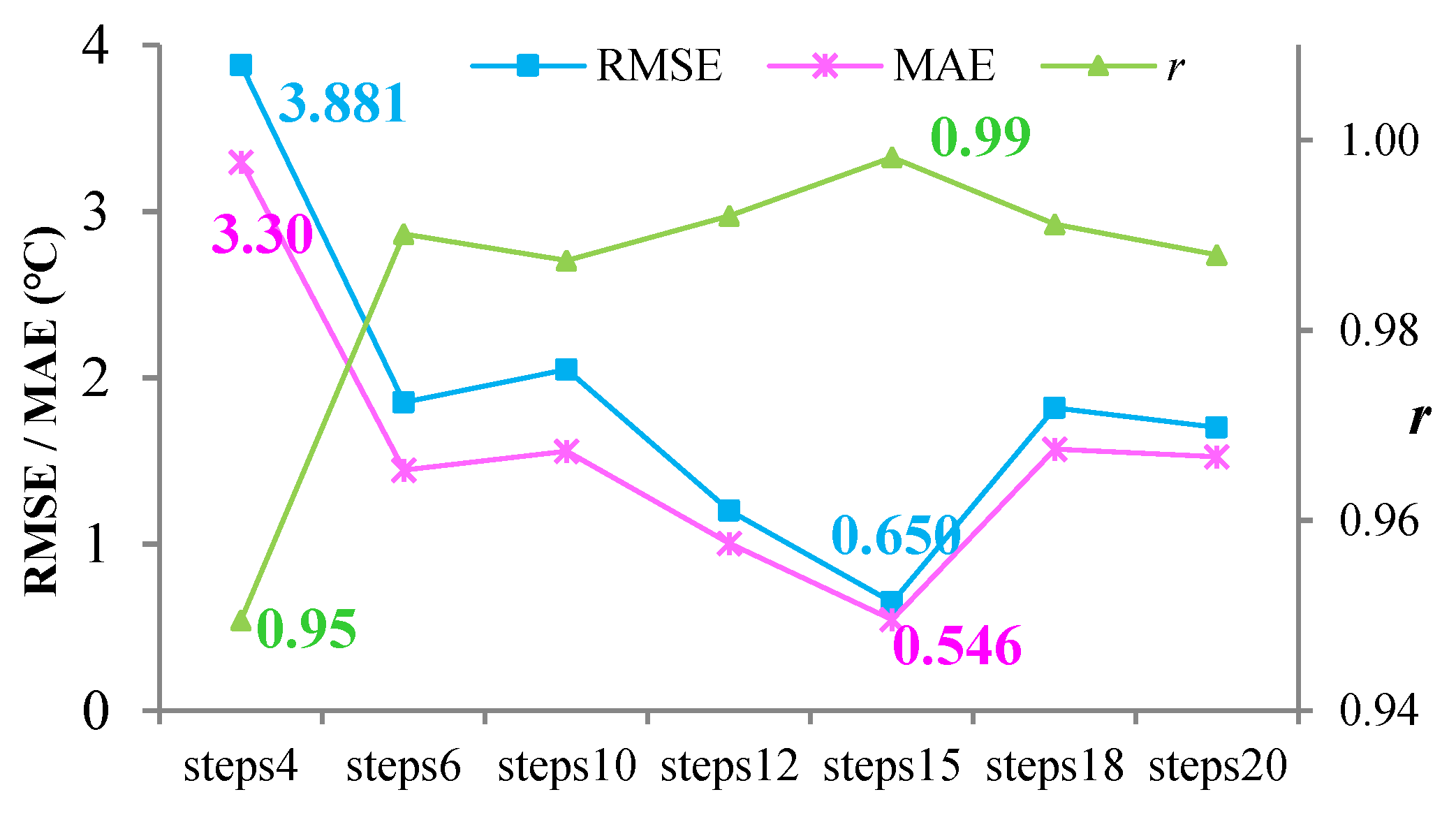

4.2. Results of Using Different Parameters

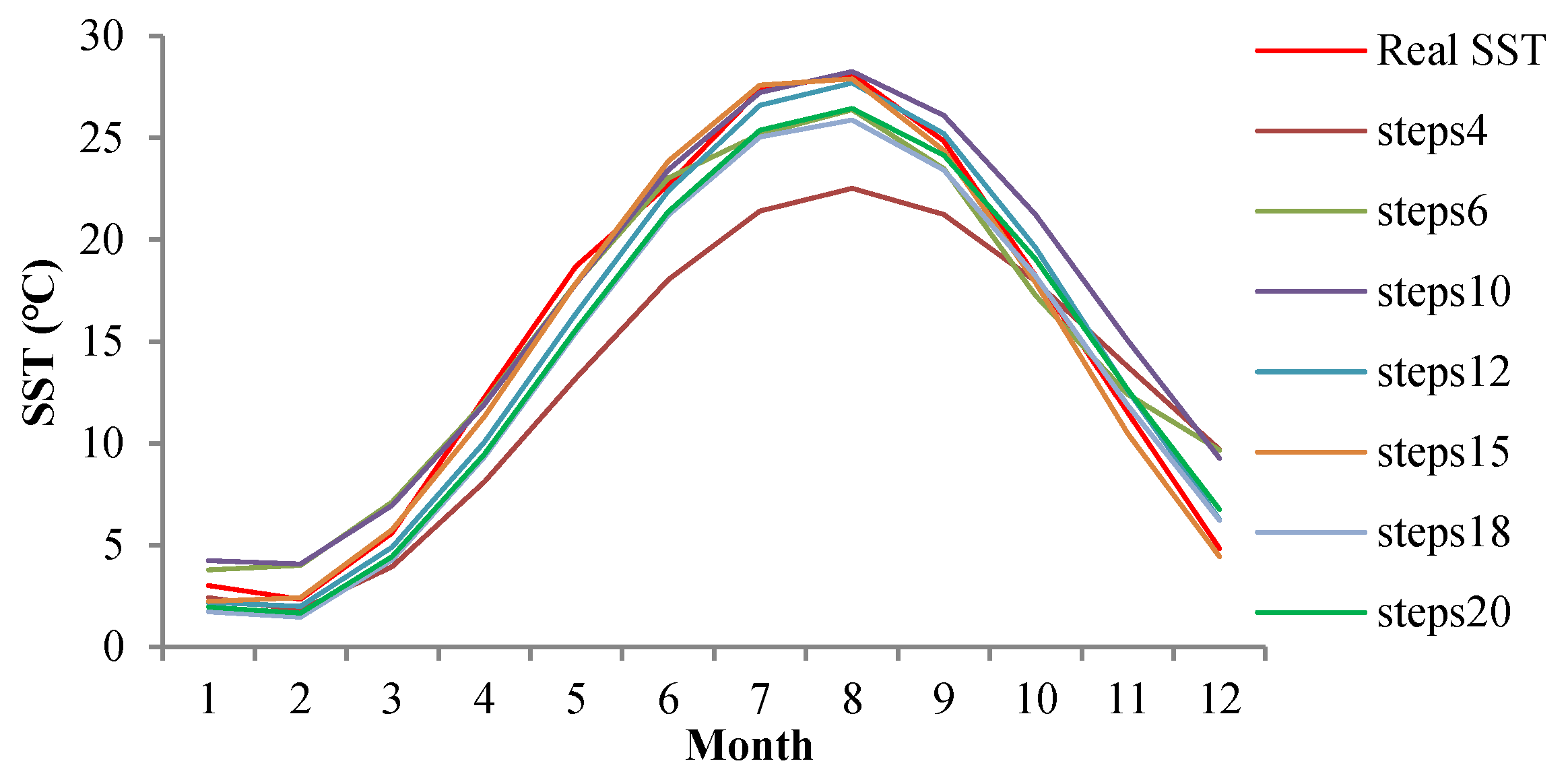

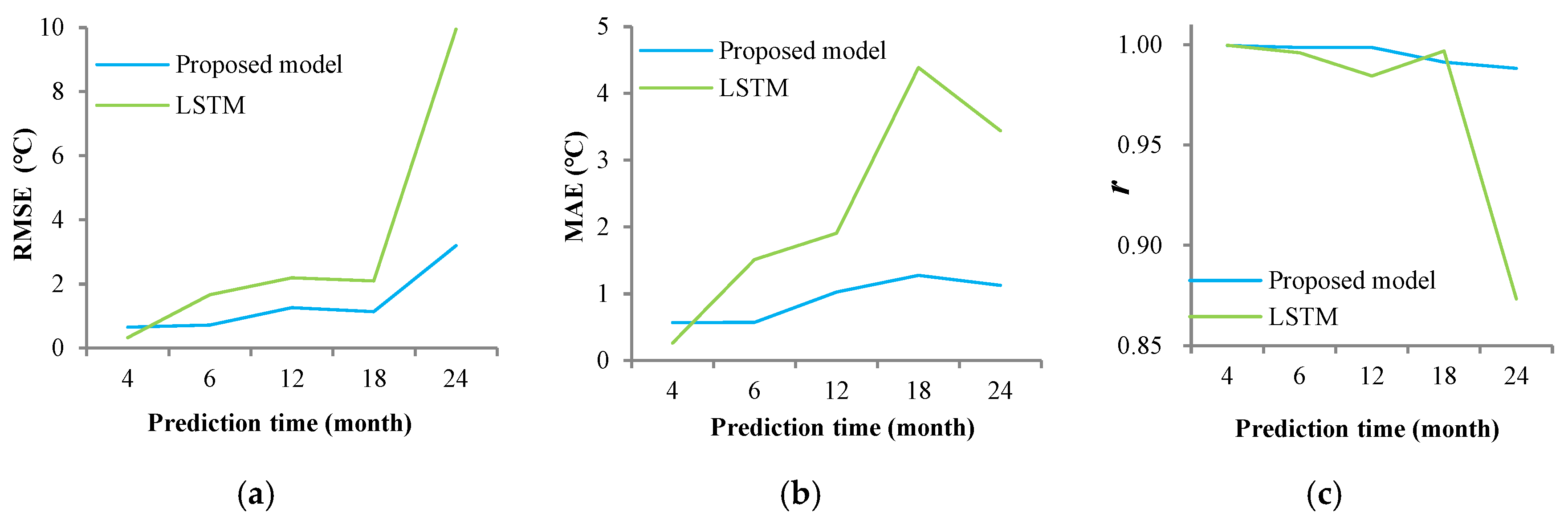

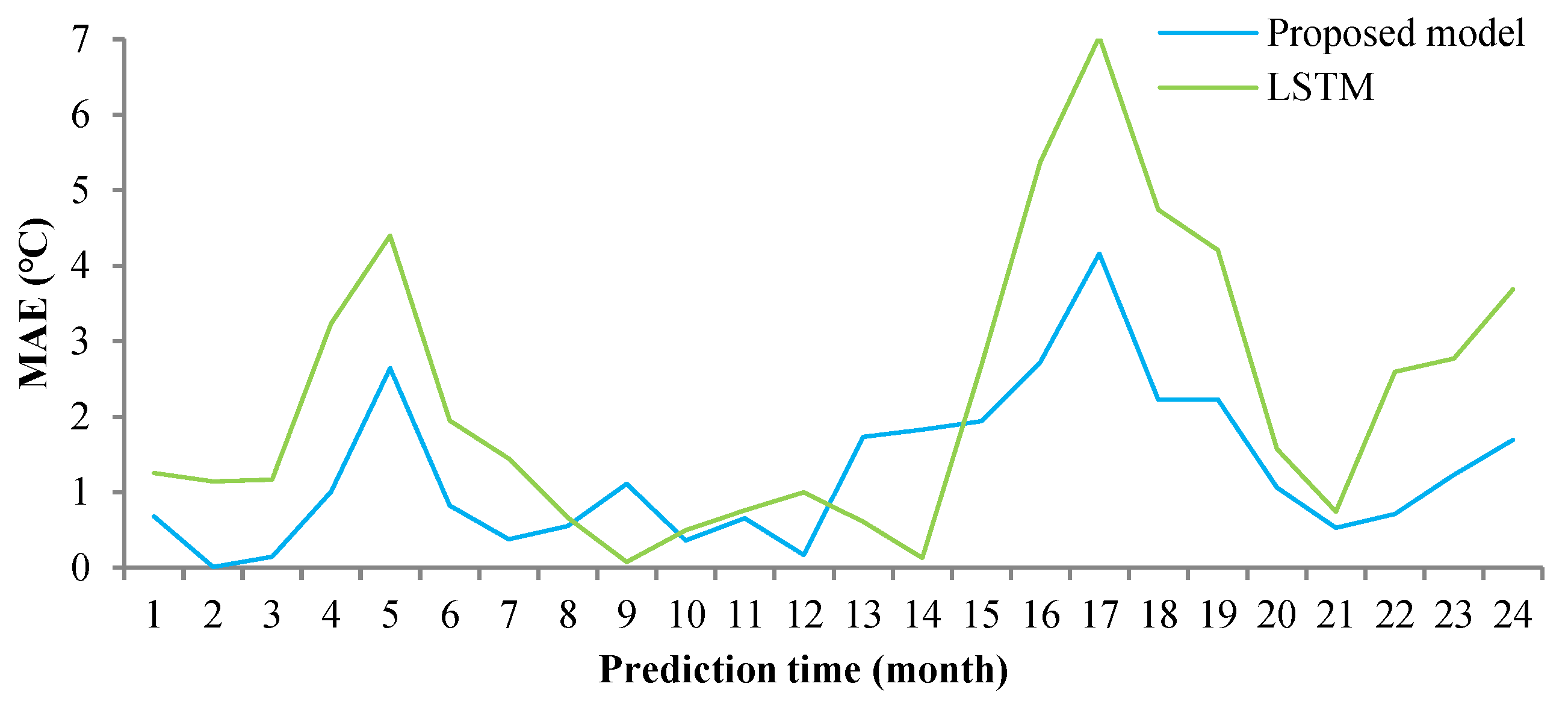

4.3. Monthly Data Prediction

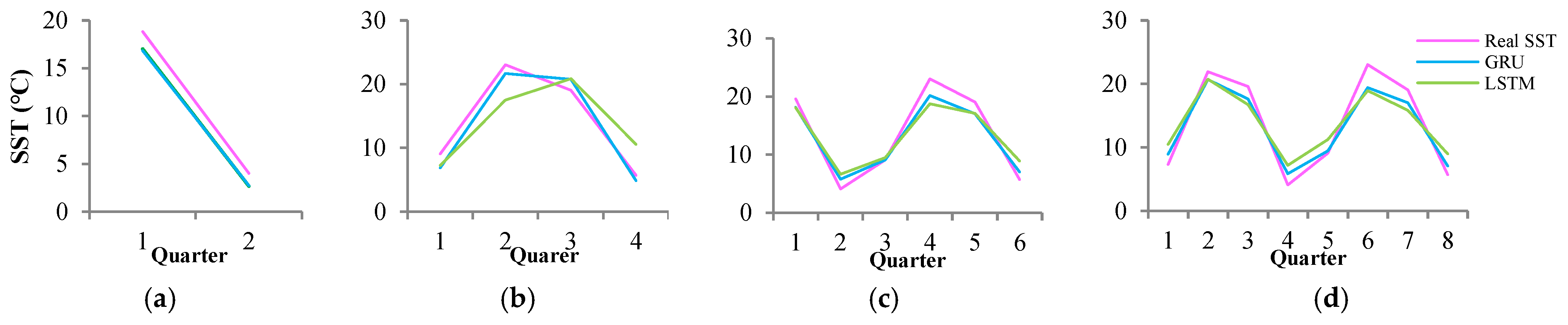

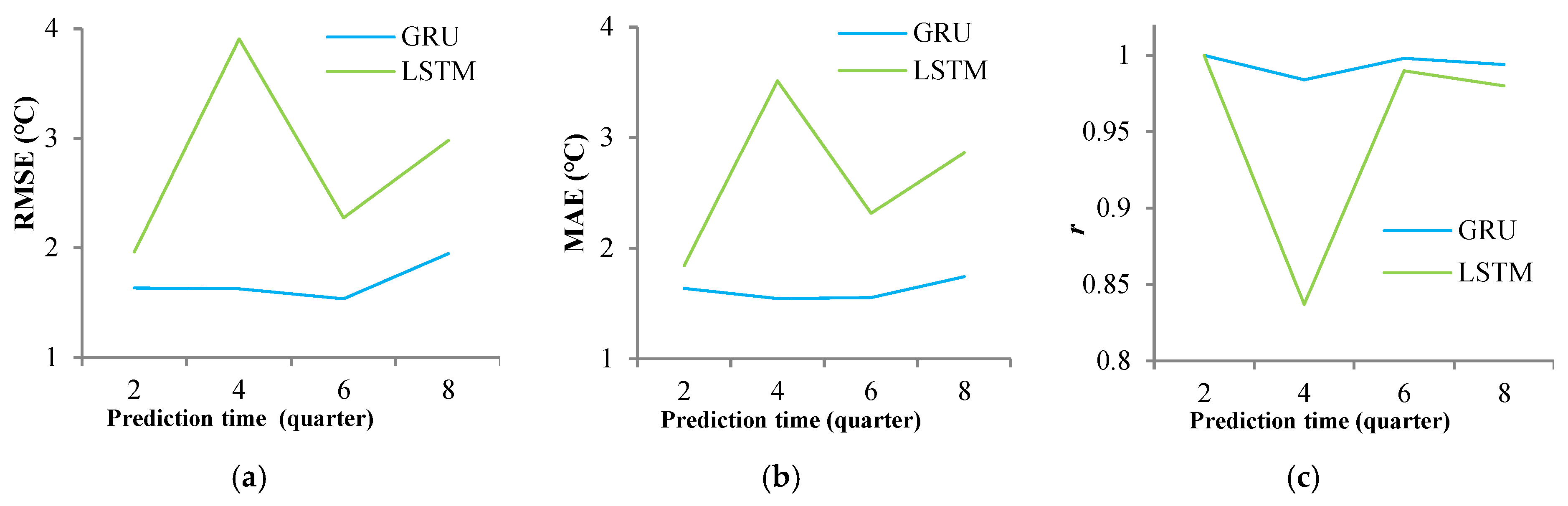

4.4. Quarterly Data Prediction

5. Conclusions

- (1) The designed GRU-based SST prediction model fits the trend of the observed SST closely and is highly reliable. The model also tends toward conservative SST estimates; that is, the predicted SST is lower than the observed value. LSTM shows the same tendency.

- (2) At the monthly time scale, RMSE and MAE mostly fall within 0–2.5 °C; at the quarterly time scale, they mostly fall within 0–2 °C. The correlation coefficient r exceeds 0.98 at both time scales, indicating high prediction accuracy.

- (3) The proposed model produces more stable results than LSTM, and its advantage becomes more evident as the prediction length increases.
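Conclusions (1) and (2) rest on RMSE, MAE, the correlation coefficient r, and the sign of the prediction error. The following is a minimal plain-Python sketch of the conventional definitions of these metrics. The helper names and the mean-bias measure (mean of predicted minus observed, negative for a model that under-predicts) are illustrative additions, not taken from the paper, and the sample values are hypothetical.

```python
import math
from statistics import fmean


def rmse(observed, predicted):
    """Root-mean-square error: sqrt(mean((p - o)^2))."""
    return math.sqrt(fmean((p - o) ** 2 for o, p in zip(observed, predicted)))


def mae(observed, predicted):
    """Mean absolute error: mean(|p - o|)."""
    return fmean(abs(p - o) for o, p in zip(observed, predicted))


def pearson_r(observed, predicted):
    """Pearson correlation coefficient between observed and predicted series."""
    mo, mp = fmean(observed), fmean(predicted)
    cov = sum((o - mo) * (p - mp) for o, p in zip(observed, predicted))
    var_o = sum((o - mo) ** 2 for o in observed)
    var_p = sum((p - mp) ** 2 for p in predicted)
    return cov / math.sqrt(var_o * var_p)


def mean_bias(observed, predicted):
    """Mean signed error; a negative value indicates systematic under-prediction."""
    return fmean(p - o for o, p in zip(observed, predicted))


# Hypothetical monthly SST values (°C), not data from the paper.
obs = [2.0, 8.0, 20.0, 14.0]
pred = [1.5, 7.0, 19.0, 13.5]

print(f"RMSE={rmse(obs, pred):.3f}  MAE={mae(obs, pred):.3f}  "
      f"r={pearson_r(obs, pred):.4f}  bias={mean_bias(obs, pred):.3f}")
```

A high r with a negative mean bias is the pattern described in conclusion (1). The model follows the seasonal cycle closely but sits slightly below the observations.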

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Legend (GRU unit diagram; the legend symbols were lost in extraction): the sigmoid and tanh neural network layers; the pointwise multiplication and addition operations; the operation that subtracts its input from 1; the input time-series data; and the output data.
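The legend lists the building blocks of a GRU cell. The plain-Python sketch below wires them together for one time step. It assumes the widely used GRU formulation: update gate z_t = σ(W_z x_t + U_z h_{t−1} + b_z), reset gate r_t = σ(W_r x_t + U_r h_{t−1} + b_r), candidate state h̃_t = tanh(W_h x_t + U_h (r_t ⊙ h_{t−1}) + b_h), and output h_t = (1 − z_t) ⊙ h_{t−1} + z_t ⊙ h̃_t. The paper's own equations are not reproduced in this section, and some libraries swap the roles of z_t and 1 − z_t, so treat this as a sketch. The weights are random and untrained, and all function names are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def vadd(*vectors):
    """Pointwise sum of equally long vectors (the 'addition' node)."""
    return [sum(parts) for parts in zip(*vectors)]


def vmul(a, b):
    """Pointwise (Hadamard) product (the 'multiplication' node)."""
    return [x * y for x, y in zip(a, b)]


def gru_step(x, h_prev, p):
    """One GRU time step: input x_t and previous state h_{t-1} -> new state h_t."""
    # Update gate z_t and reset gate r_t (sigmoid layers).
    z = [sigmoid(v) for v in vadd(matvec(p["Wz"], x), matvec(p["Uz"], h_prev), p["bz"])]
    r = [sigmoid(v) for v in vadd(matvec(p["Wr"], x), matvec(p["Ur"], h_prev), p["br"])]
    # Candidate state (tanh layer) uses the reset-gated previous state.
    h_cand = [math.tanh(v) for v in vadd(matvec(p["Wh"], x),
                                          matvec(p["Uh"], vmul(r, h_prev)), p["bh"])]
    # The "1 -" operation, then a gated blend of the old state and the candidate.
    one_minus_z = [1.0 - v for v in z]
    return vadd(vmul(one_minus_z, h_prev), vmul(z, h_cand))


def init_params(n_in, n_hidden, seed=0):
    """Random, untrained weights; a real model would learn these from data."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for g in ("z", "r", "h"):
        p["W" + g] = mat(n_hidden, n_in)
        p["U" + g] = mat(n_hidden, n_hidden)
        p["b" + g] = [0.0] * n_hidden
    return p


def run_gru(series, n_hidden=4, seed=0):
    """Feed a univariate series through the cell and return the final hidden state."""
    p = init_params(1, n_hidden, seed)
    h = [0.0] * n_hidden
    for value in series:
        h = gru_step([value], h, p)
    return h


print(run_gru([0.1, 0.5, -0.3, 0.8]))
```

Each new state is a convex blend of the previous state and a tanh output. Starting from zeros, the hidden state therefore stays strictly between −1 and 1.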

| Site | Mean (°C) | Monthly coldest (°C) | Monthly warmest (°C) | Monthly std. dev. (°C) | Quarterly coldest (°C) | Quarterly warmest (°C) | Quarterly std. dev. (°C) |
|---|---|---|---|---|---|---|---|
| P1 | 13.12 | −0.44 | 27.05 | 8.48 | 2.18 | 24.09 | 7.69 |
| P2 | 12.99 | 0.22 | 26.51 | 8.16 | 2.65 | 23.13 | 7.46 |
| P3 | 13.59 | −0.31 | 28.59 | 8.90 | 1.83 | 26.06 | 8.09 |
| P4 | 12.86 | −0.22 | 26.57 | 8.33 | 2.11 | 23.36 | 7.62 |
| P5 | 13.13 | 0.66 | 26.79 | 7.86 | 3.13 | 23.21 | 7.19 |
| P6 | 12.53 | 0.26 | 25.82 | 7.95 | 2.75 | 22.66 | 7.29 |
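In the table above, the quarterly series has a higher coldest value, a lower warmest value, and a smaller standard deviation than the monthly series at every site. This is expected if each quarterly value is the mean of three monthly values. Averaging clips the extremes, and, by the law of total variance, the variance of equal-sized block means never exceeds the variance of the underlying values. This section does not state how the quarterly data were derived, so the three-month averaging below is an assumption. The function names and the sample year of SST values are hypothetical. The sketch uses the population standard deviation; `statistics.stdev` would give the sample version.

```python
from statistics import fmean, pstdev


def to_quarterly(monthly):
    """Average consecutive three-month blocks (assumes the series starts at a quarter boundary)."""
    if len(monthly) % 3 != 0:
        raise ValueError("monthly series length must be a multiple of 3")
    return [fmean(monthly[i:i + 3]) for i in range(0, len(monthly), 3)]


def summarize(series):
    """Mean, coldest, warmest and population standard deviation of a series."""
    return {
        "mean": fmean(series),
        "coldest": min(series),
        "warmest": max(series),
        "std": pstdev(series),
    }


# One hypothetical year of monthly mean SST (°C), not data from the paper.
monthly = [1.0, 2.0, 6.0, 11.0, 17.0, 22.0, 25.0, 26.0, 22.0, 16.0, 9.0, 3.0]
quarterly = to_quarterly(monthly)

m, q = summarize(monthly), summarize(quarterly)
for key in ("mean", "coldest", "warmest", "std"):
    print(f"{key:8s} monthly={m[key]:6.2f} quarterly={q[key]:6.2f}")
```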

| Model | Site | Pre_4m¹ RMSE | Pre_4m¹ MAE | Pre_6m¹ RMSE | Pre_6m¹ MAE | Pre_12m¹ RMSE | Pre_12m¹ MAE | Pre_18m¹ RMSE | Pre_18m¹ MAE | Pre_24m¹ RMSE | Pre_24m¹ MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| LSTM | P1 | 0.631 | 0.567 | 1.345 | 1.176 | 1.603 | 1.373 | 1.386 | 1.108 | 2.881 | 2.238 |
| LSTM | P2 | 0.324 | 0.263 | 1.670 | 1.512 | 2.189 | 1.908 | 2.097 | 4.389 | 9.954 | 3.440 |
| LSTM | P3 | 1.000 | 0.937 | 1.742 | 1.582 | 1.772 | 1.486 | 1.973 | 1.736 | 3.785 | 2.644 |
| LSTM | P4 | 0.616 | 0.545 | 1.423 | 1.183 | 4.248 | 3.288 | 1.958 | 1.750 | 1.986 | 1.494 |
| LSTM | P5 | 0.517 | 0.457 | 2.298 | 1.929 | 1.899 | 1.565 | 1.496 | 1.247 | 1.775 | 1.402 |
| LSTM | P6 | 0.231 | 0.200 | 1.711 | 1.384 | 1.466 | 1.246 | 2.645 | 2.060 | 1.328 | 0.986 |
| Proposed model | P1 | 1.362 | 1.286 | 0.598 | 0.552 | 1.247 | 1.168 | 1.112 | 1.026 | 1.613 | 1.275 |
| Proposed model | P2 | 0.653 | 0.569 | 0.717 | 0.570 | 1.258 | 1.027 | 1.129 | 1.275 | 3.196 | 1.124 |
| Proposed model | P3 | 0.677 | 0.539 | 1.370 | 1.261 | 1.128 | 0.933 | 1.645 | 1.461 | 2.891 | 2.353 |
| Proposed model | P4 | 1.205 | 1.160 | 1.216 | 1.061 | 1.293 | 1.153 | 1.271 | 1.018 | 1.419 | 1.083 |
| Proposed model | P5 | 0.862 | 0.807 | 1.089 | 0.908 | 1.256 | 1.044 | 1.355 | 1.158 | 1.636 | 1.286 |
| Proposed model | P6 | 1.155 | 1.106 | 1.159 | 1.477 | 0.929 | 0.810 | 1.676 | 1.294 | 1.167 | 0.991 |
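RMSE is the quadratic mean of the absolute errors and MAE is their arithmetic mean. For the same set of errors, MAE ≤ RMSE therefore always holds, with equality only when every absolute error is identical. This gives a quick consistency check on a results table. The plain-Python sketch below copies the monthly RMSE/MAE pairs from the table above and lists any entries that violate the inequality. The names `MONTHLY`, `HORIZONS` and `mae_exceeds_rmse` are illustrative. A flagged entry cannot be an exact RMSE/MAE pair for one set of errors and is worth re-checking against the underlying data. The same check applies to the quarterly table.

```python
# RMSE/MAE pairs copied from the monthly prediction table: for each (model, site),
# values alternate RMSE, MAE for Pre_4m, Pre_6m, Pre_12m, Pre_18m, Pre_24m.
HORIZONS = ["Pre_4m", "Pre_6m", "Pre_12m", "Pre_18m", "Pre_24m"]
MONTHLY = {
    ("LSTM", "P1"): [0.631, 0.567, 1.345, 1.176, 1.603, 1.373, 1.386, 1.108, 2.881, 2.238],
    ("LSTM", "P2"): [0.324, 0.263, 1.670, 1.512, 2.189, 1.908, 2.097, 4.389, 9.954, 3.440],
    ("LSTM", "P3"): [1.000, 0.937, 1.742, 1.582, 1.772, 1.486, 1.973, 1.736, 3.785, 2.644],
    ("LSTM", "P4"): [0.616, 0.545, 1.423, 1.183, 4.248, 3.288, 1.958, 1.750, 1.986, 1.494],
    ("LSTM", "P5"): [0.517, 0.457, 2.298, 1.929, 1.899, 1.565, 1.496, 1.247, 1.775, 1.402],
    ("LSTM", "P6"): [0.231, 0.200, 1.711, 1.384, 1.466, 1.246, 2.645, 2.060, 1.328, 0.986],
    ("Proposed model", "P1"): [1.362, 1.286, 0.598, 0.552, 1.247, 1.168, 1.112, 1.026, 1.613, 1.275],
    ("Proposed model", "P2"): [0.653, 0.569, 0.717, 0.570, 1.258, 1.027, 1.129, 1.275, 3.196, 1.124],
    ("Proposed model", "P3"): [0.677, 0.539, 1.370, 1.261, 1.128, 0.933, 1.645, 1.461, 2.891, 2.353],
    ("Proposed model", "P4"): [1.205, 1.160, 1.216, 1.061, 1.293, 1.153, 1.271, 1.018, 1.419, 1.083],
    ("Proposed model", "P5"): [0.862, 0.807, 1.089, 0.908, 1.256, 1.044, 1.355, 1.158, 1.636, 1.286],
    ("Proposed model", "P6"): [1.155, 1.106, 1.159, 1.477, 0.929, 0.810, 1.676, 1.294, 1.167, 0.991],
}


def mae_exceeds_rmse(table, horizons):
    """List (model, site, horizon, rmse, mae) entries where MAE > RMSE.

    For any error vector MAE <= RMSE, so such an entry cannot be an exact pair.
    """
    flagged = []
    for (model, site), values in table.items():
        for i, horizon in enumerate(horizons):
            rmse, mae = values[2 * i], values[2 * i + 1]
            if mae > rmse:
                flagged.append((model, site, horizon, rmse, mae))
    return flagged


for entry in mae_exceeds_rmse(MONTHLY, HORIZONS):
    print(entry)
```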

| Model | Location | Pre_2q¹ RMSE | Pre_2q¹ MAE | Pre_4q¹ RMSE | Pre_4q¹ MAE | Pre_6q¹ RMSE | Pre_6q¹ MAE | Pre_8q¹ RMSE | Pre_8q¹ MAE |
|---|---|---|---|---|---|---|---|---|---|
| LSTM | P1 | 1.676 | 1.636 | 2.150 | 2.023 | 2.810 | 3.045 | 3.482 | 3.336 |
| LSTM | P2 | 1.964 | 1.842 | 3.907 | 3.513 | 2.276 | 2.315 | 2.980 | 2.865 |
| LSTM | P3 | 3.006 | 2.334 | 2.829 | 2.039 | 2.175 | 2.042 | 3.426 | 2.711 |
| LSTM | P4 | 2.698 | 2.683 | 2.292 | 1.975 | 1.245 | 0.993 | 1.748 | 1.496 |
| LSTM | P5 | 1.578 | 1.325 | 2.751 | 2.433 | 0.727 | 0.699 | 1.395 | 1.025 |
| LSTM | P6 | 1.856 | 1.587 | 2.214 | 1.882 | 1.593 | 1.737 | 2.415 | 2.264 |
| Proposed model | P1 | 1.593 | 1.575 | 2.153 | 1.848 | 1.86 | 1.976 | 1.577 | 1.305 |
| Proposed model | P2 | 1.636 | 1.636 | 1.628 | 1.545 | 1.536 | 1.553 | 1.948 | 1.743 |
| Proposed model | P3 | 2.239 | 1.955 | 0.993 | 0.794 | 1.692 | 1.679 | 3.423 | 2.896 |
| Proposed model | P4 | 2.302 | 2.165 | 1.673 | 1.267 | 0.937 | 0.909 | 0.892 | 0.687 |
| Proposed model | P5 | 1.326 | 1.012 | 2.635 | 2.393 | 0.714 | 0.705 | 1.049 | 0.883 |
| Proposed model | P6 | 1.504 | 1.153 | 1.870 | 1.763 | 1.135 | 1.126 | 1.600 | 1.275 |
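One simple way to summarise the quarterly table is to average RMSE over the six sites for each horizon. The plain-Python sketch below does this for both models, using RMSE values copied from the table above. It is an illustrative summary, not an analysis reported in the paper, and the names `RMSE`, `HORIZONS` and `site_mean_rmse` are hypothetical. A site average hides the per-site spread, so it complements the table rather than replacing it.

```python
from statistics import fmean

# RMSE values (°C) copied from the quarterly prediction table, one list per site,
# ordered Pre_2q, Pre_4q, Pre_6q, Pre_8q.
HORIZONS = ["Pre_2q", "Pre_4q", "Pre_6q", "Pre_8q"]
RMSE = {
    "LSTM": {
        "P1": [1.676, 2.150, 2.810, 3.482],
        "P2": [1.964, 3.907, 2.276, 2.980],
        "P3": [3.006, 2.829, 2.175, 3.426],
        "P4": [2.698, 2.292, 1.245, 1.748],
        "P5": [1.578, 2.751, 0.727, 1.395],
        "P6": [1.856, 2.214, 1.593, 2.415],
    },
    "Proposed model": {
        "P1": [1.593, 2.153, 1.86, 1.577],
        "P2": [1.636, 1.628, 1.536, 1.948],
        "P3": [2.239, 0.993, 1.692, 3.423],
        "P4": [2.302, 1.673, 0.937, 0.892],
        "P5": [1.326, 2.635, 0.714, 1.049],
        "P6": [1.504, 1.870, 1.135, 1.600],
    },
}


def site_mean_rmse(per_site):
    """Average RMSE over sites for each horizon (columns of the per-site lists)."""
    return [fmean(column) for column in zip(*per_site.values())]


summary = {model: site_mean_rmse(sites) for model, sites in RMSE.items()}
for i, horizon in enumerate(HORIZONS):
    print(f"{horizon}: LSTM={summary['LSTM'][i]:.3f}  "
          f"proposed={summary['Proposed model'][i]:.3f}")
```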

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Pan, X.; Jiang, T.; Sui, B.; Liu, C.; Sun, W. Monthly and Quarterly Sea Surface Temperature Prediction Based on Gated Recurrent Unit Neural Network. J. Mar. Sci. Eng. 2020, 8, 249. https://doi.org/10.3390/jmse8040249
