Joint Representation and Recognition for Ship-Radiated Noise Based on Multimodal Deep Learning

Abstract

1. Introduction

- Building on deep learning (DL) methods, the paper introduces multimodal-DL methods for ship-radiated noise recognition and demonstrates their advantages over both DL methods and traditional methods.

- The paper proposes a multimodal-DL framework, the multimodal-CNNs, which models the two modalities simultaneously.

- The paper proposes a canonical correlation analysis (CCA)-based strategy to build a more discriminative joint representation of the two single modalities and to perform joint recognition on it.
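The details of the CCA-based strategy are not given in this section. As a hedged illustration only, the sketch below shows generic CCA feature-level fusion: the features of each modality are projected onto their leading canonical directions (the directions along which the two modalities are maximally correlated), and the projected features are concatenated into one joint vector. The function name `cca_fuse`, the ridge term `reg`, and the choice of concatenation (rather than, say, summation `Zx + Zy`) are assumptions, not the authors' method.

```python
import numpy as np

def cca_fuse(X, Y, k, reg=1e-4):
    """Hypothetical CCA fusion of X (n x p) and Y (n x q) features.

    Returns the k canonical variates of each modality, their concatenation
    (a joint representation), and the k leading canonical correlations.
    """
    p, q = X.shape[1], Y.shape[1]
    if k > min(p, q):
        raise ValueError("k cannot exceed min(p, q)")
    n = X.shape[0]
    # Center each modality
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    # Within-modality covariances (ridge-regularized) and cross-covariance
    Sxx = Xc.T @ Xc / (n - 1) + reg * np.eye(p)
    Syy = Yc.T @ Yc / (n - 1) + reg * np.eye(q)
    Sxy = Xc.T @ Yc / (n - 1)

    def inv_sqrt(S):
        # Symmetric inverse square root via eigendecomposition
        w, V = np.linalg.eigh(S)
        return V @ np.diag(1.0 / np.sqrt(w)) @ V.T

    Wx, Wy = inv_sqrt(Sxx), inv_sqrt(Syy)
    # Singular vectors of the whitened cross-covariance give the canonical
    # directions; singular values are the canonical correlations
    U, s, Vt = np.linalg.svd(Wx @ Sxy @ Wy, full_matrices=False)
    A = Wx @ U[:, :k]        # p x k projection for modality X
    B = Wy @ Vt.T[:, :k]     # q x k projection for modality Y
    Zx, Zy = Xc @ A, Yc @ B
    return Zx, Zy, np.hstack([Zx, Zy]), s[:k]
```

In a pipeline like the one described, `X` and `Y` would be the penultimate-layer features of the two single-modality networks; whether the paper uses those features, and how many canonical components it keeps, is not stated here.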

2. Related Work

3. Multimodal Deep Learning Methods for Ship-Radiated Noise Recognition

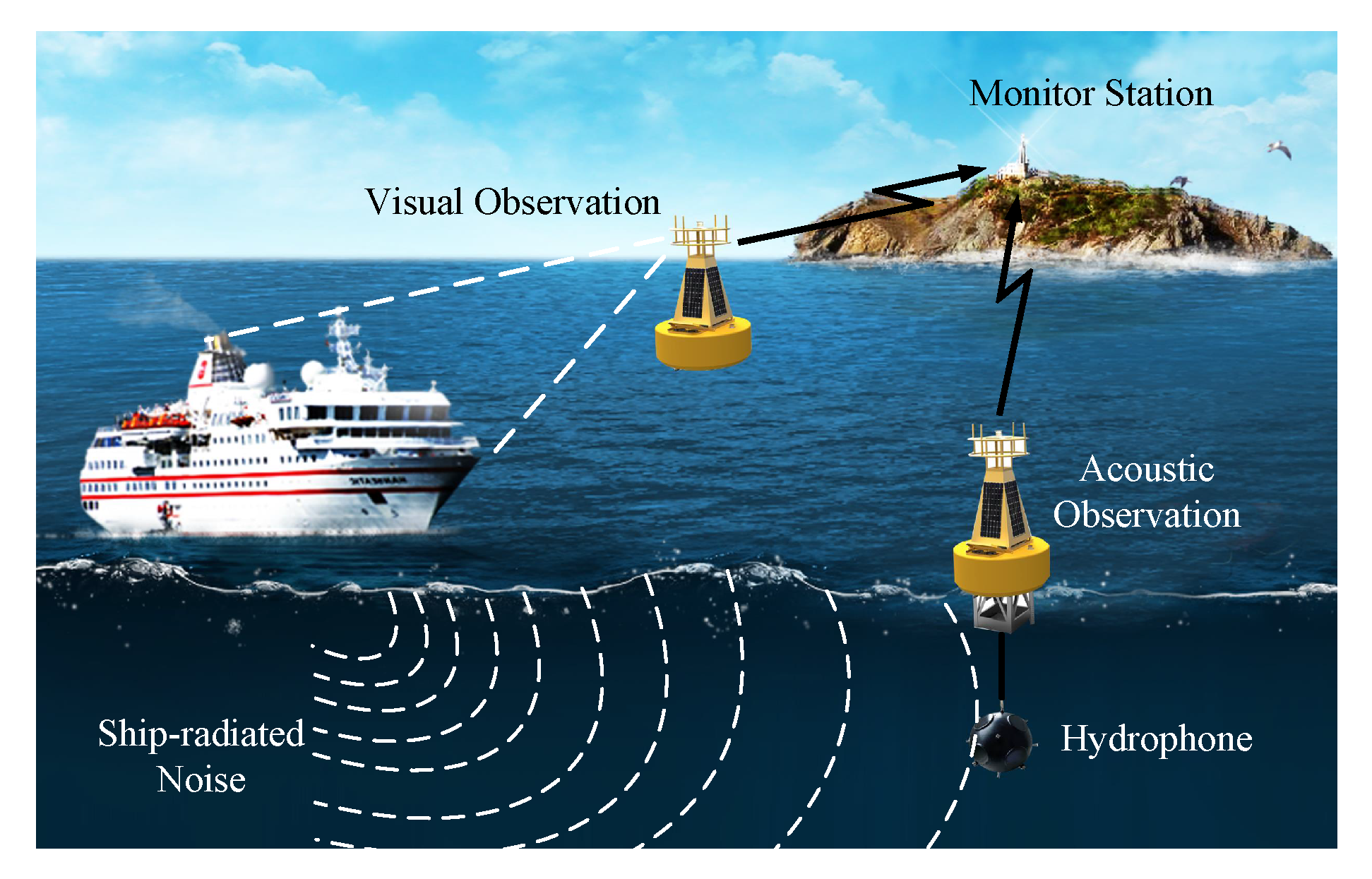

3.1. Application Scenario

3.2. The Multimodal-CNNs Framework

3.3. Training Method for Multimodal-CNNs Framework

3.4. CCA-Based Strategy

4. Experiments and Discussion

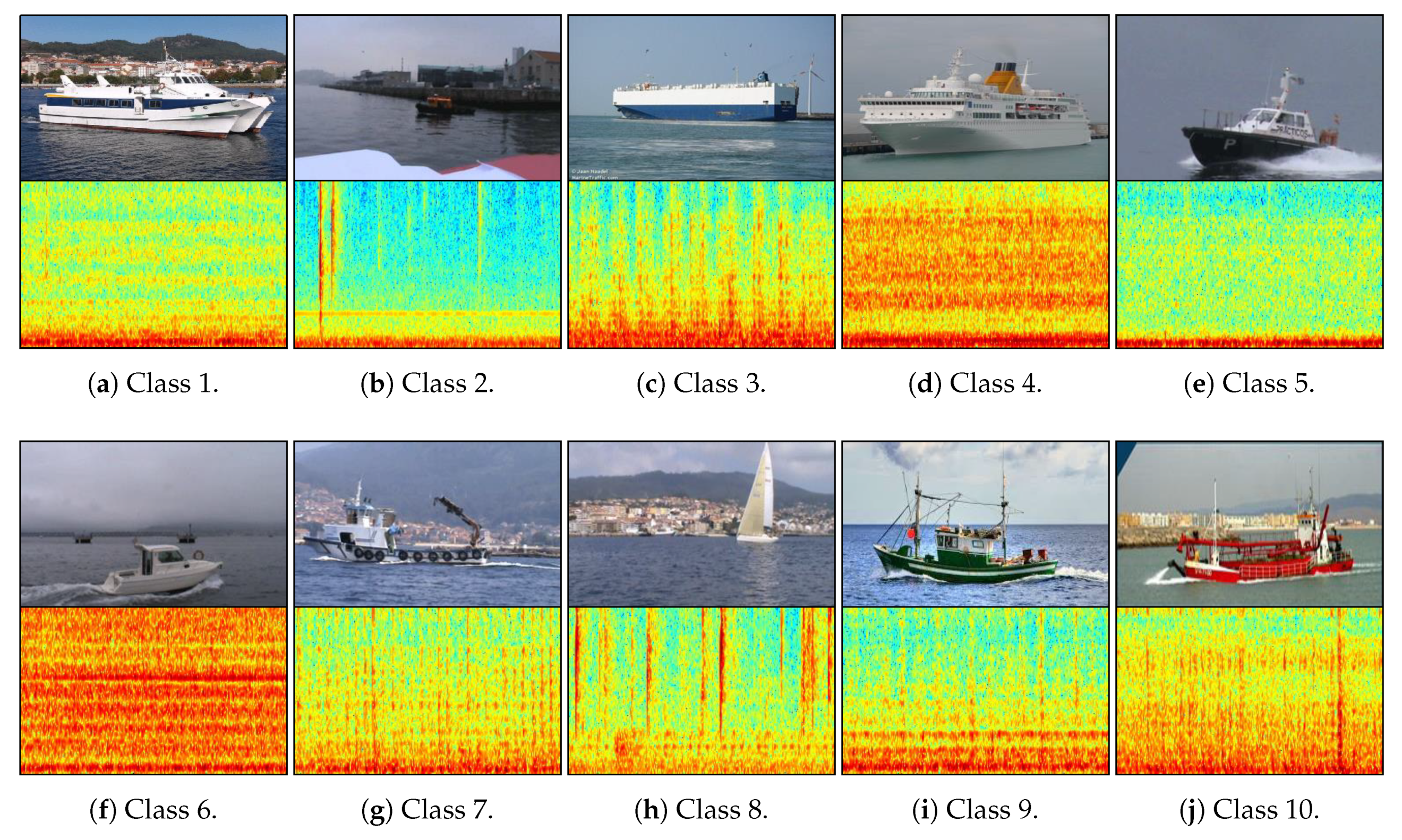

4.1. Experiment Setting

4.2. Single-Modality Consideration

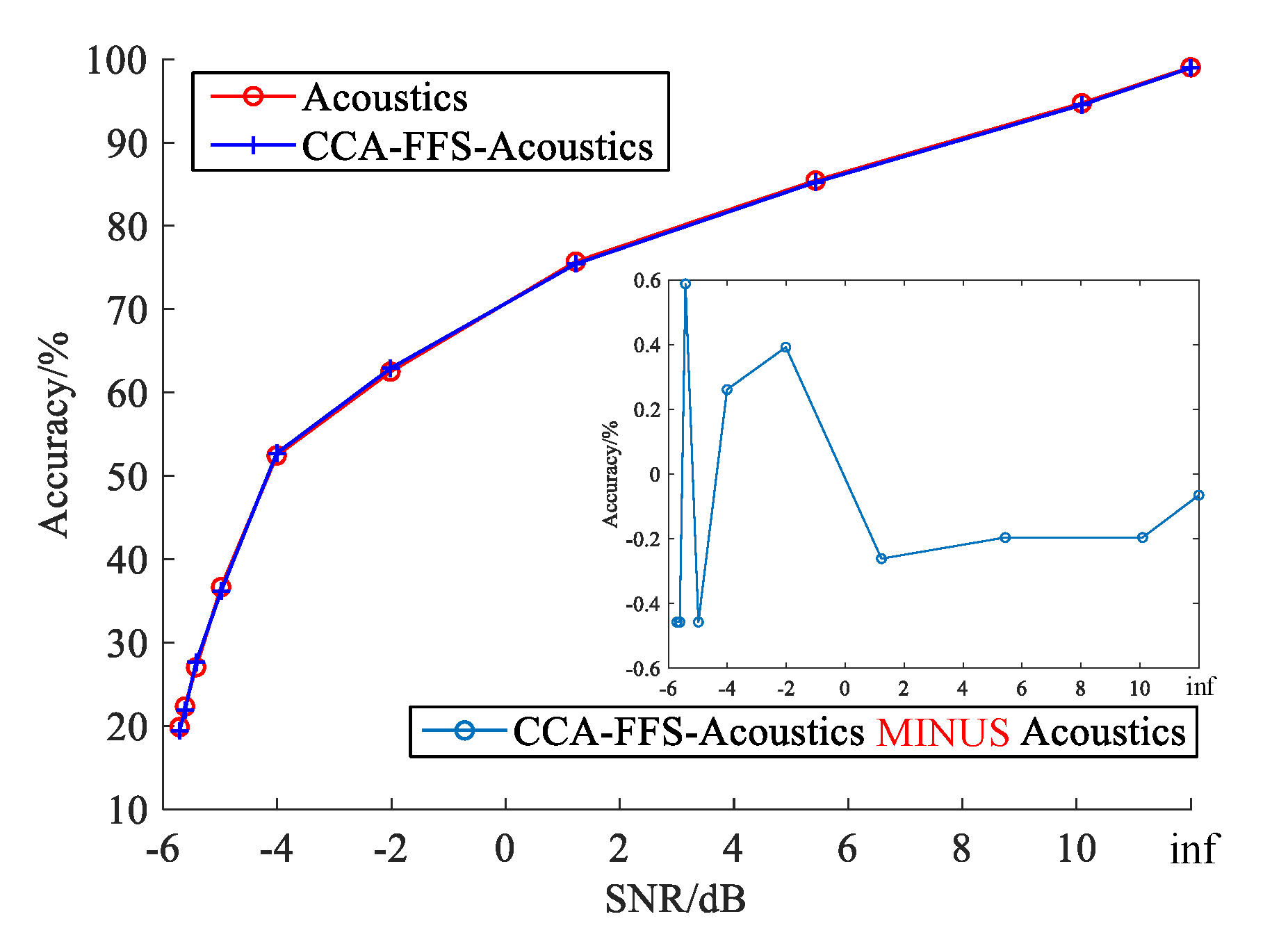

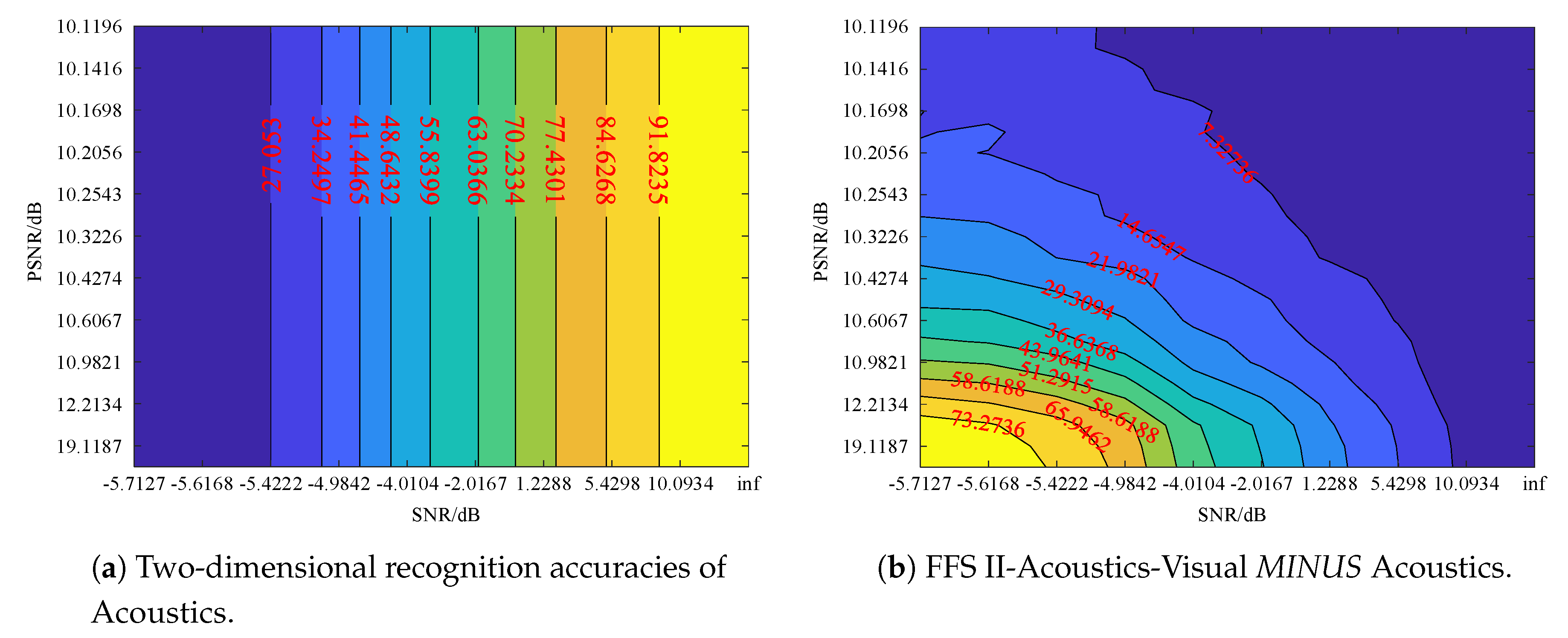

4.2.1. The Acoustics Modality

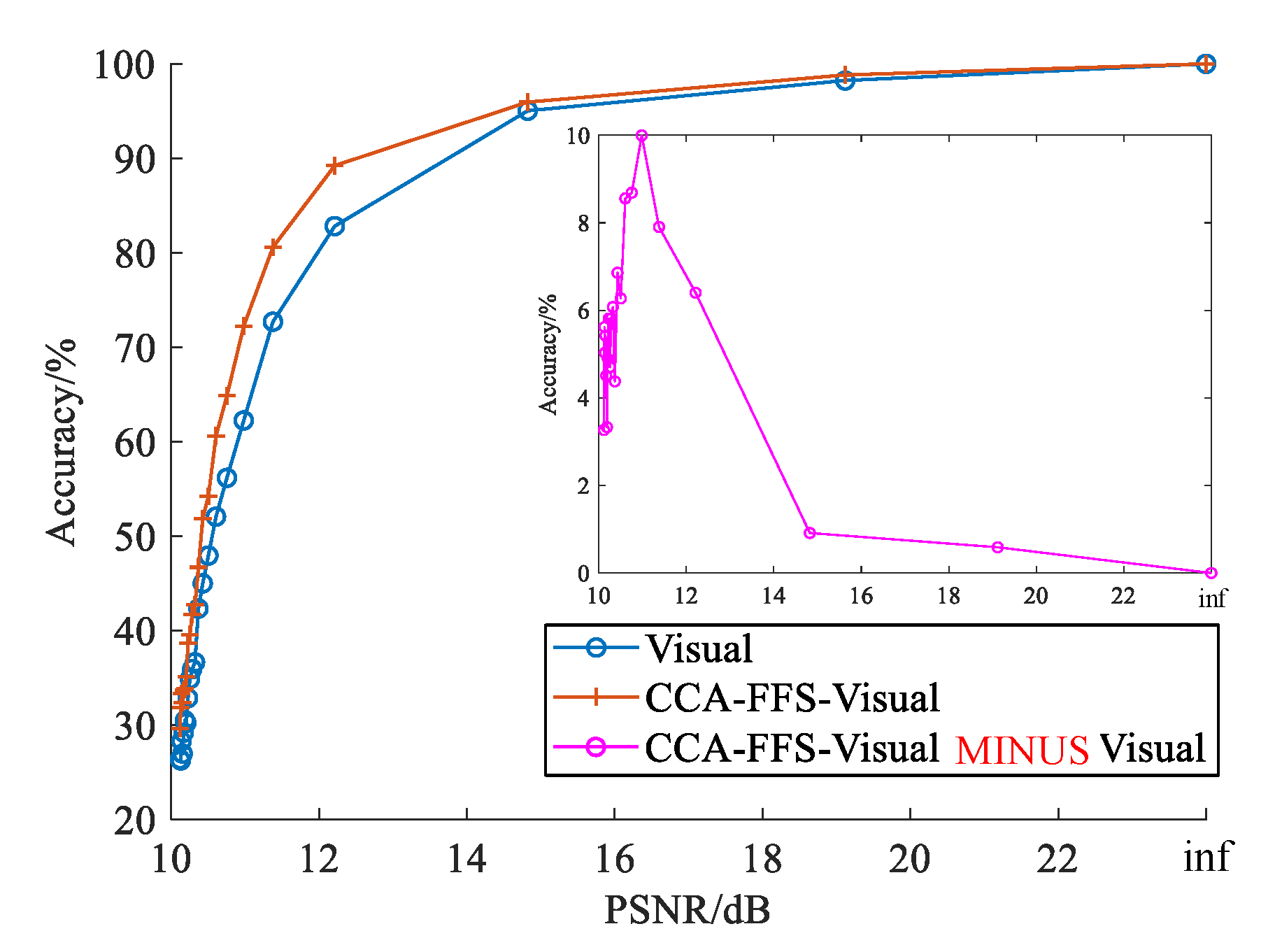

4.2.2. The Visual Modality

4.3. Multi-Modalities Consideration

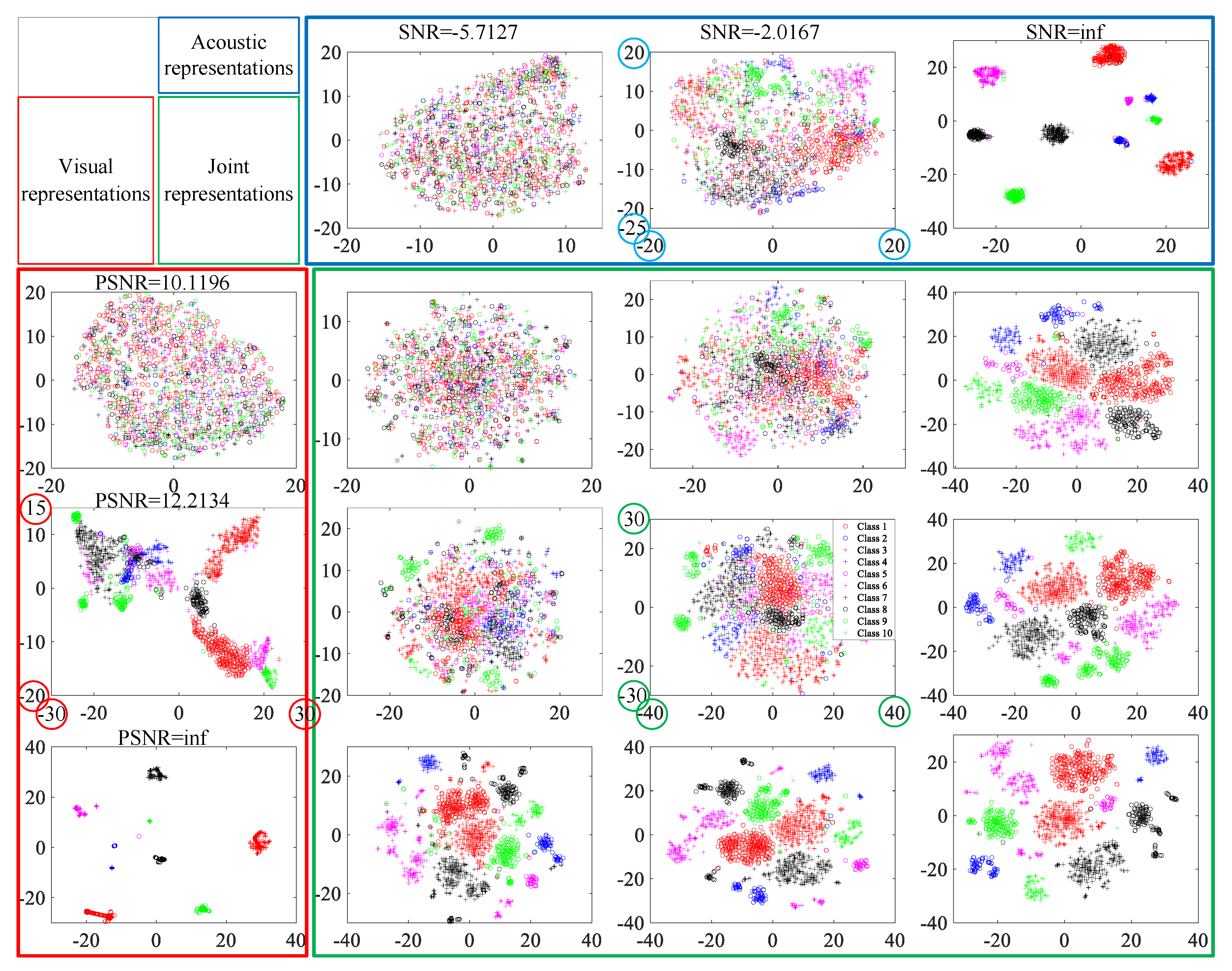

4.4. Joint Representation

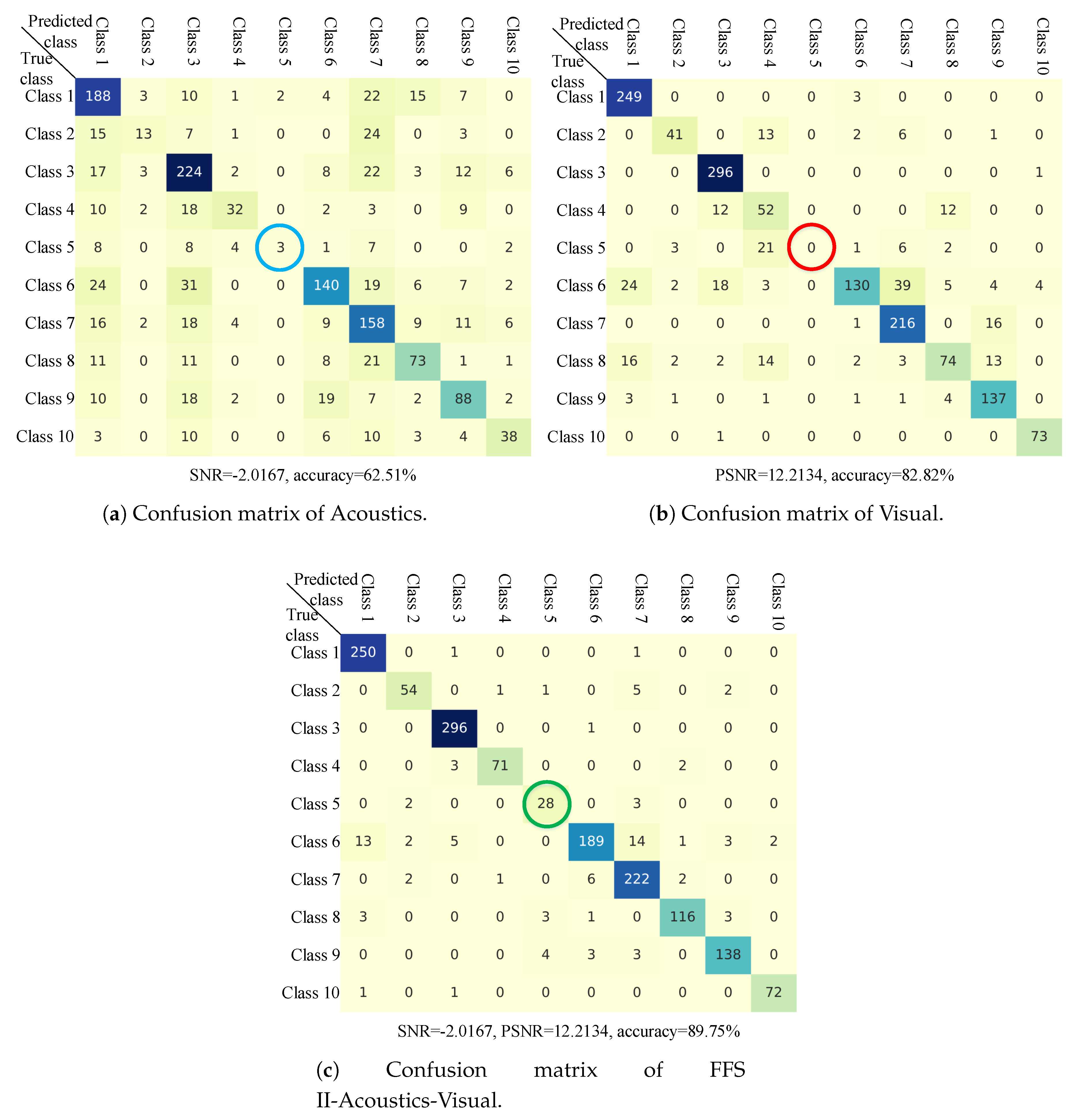

4.5. Joint Recognition

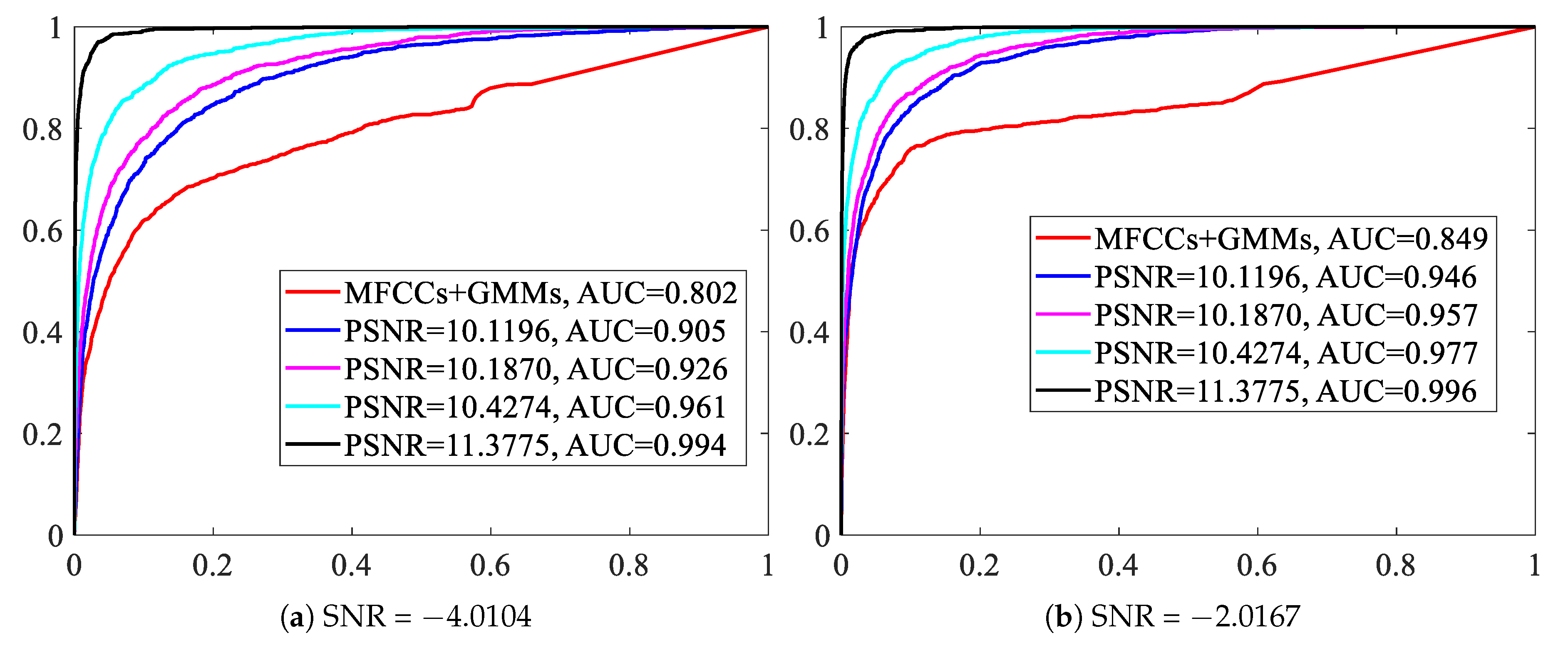

4.6. Overall Comparison

4.6.1. The Acoustics Modality

4.6.2. The Visual Modality

4.6.3. The Baseline

5. Conclusions

6. Future Work

Author Contributions

Funding

Conflicts of Interest


1. We recognize 10 classes of ships in this paper; recognizing a specific individual ship works in the same way.

2. To obtain samples at different SNRs, Gaussian white noise of different amplitudes is added to the original samples. The "inf" in Figure 4 means that no noise is added to the original samples.

3. We likewise add Gaussian white noise of different amplitudes to the original pictures to obtain pictures at different PSNRs; "inf" again means that no noise is added to the original pictures.
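The noise injection in notes 2 and 3 can be sketched as follows. This is a minimal sketch, assuming SNR is defined as the ratio of mean signal power to noise power in dB and PSNR as 10·log10(MAX²/MSE) with an 8-bit peak of 255; the function names and the exact mapping from noise amplitude to SNR/PSNR are assumptions, since the source only says that "different amplitudes" of Gaussian white noise are added.

```python
import numpy as np

def add_noise_snr(signal, snr_db, rng=None):
    """Hypothetical helper: add white Gaussian noise at a target SNR (dB).

    snr_db = np.inf returns an unmodified copy (the "inf" case).
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(signal, dtype=float)
    if np.isinf(snr_db):
        return x.copy()
    p_signal = np.mean(x ** 2)                   # mean signal power
    p_noise = p_signal / 10 ** (snr_db / 10)     # noise power for the target SNR
    return x + rng.normal(0.0, np.sqrt(p_noise), size=x.shape)

def psnr(reference, test, max_val=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical inputs."""
    mse = np.mean((np.asarray(reference, float) - np.asarray(test, float)) ** 2)
    return np.inf if mse == 0 else 10 * np.log10(max_val ** 2 / mse)

def add_noise_psnr(image, psnr_db, max_val=255.0, rng=None):
    """Hypothetical helper: add Gaussian noise whose variance equals the MSE
    implied by psnr_db, i.e. sigma = max_val / 10**(psnr_db / 20).
    No clipping is applied, so the achieved PSNR matches the target on average.
    """
    rng = np.random.default_rng() if rng is None else rng
    img = np.asarray(image, dtype=float)
    if np.isinf(psnr_db):
        return img.copy()
    sigma = max_val / 10 ** (psnr_db / 20)
    return img + rng.normal(0.0, sigma, size=img.shape)
```

Clipping noisy pixels back to [0, 255] would be natural for stored images but would lower the effective noise power, so the achieved PSNR would drift above the target.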

| Class | ID | Training Set (Number of Samples) | Testing Set (Number of Samples) |
|---|---|---|---|
| Class 1: Passenger ferries | 60, 61, 62 | 554 | 252 |
| Class 2: Tugboats | 15, 31 | 143 | 63 |
| Class 3: RO-RO vessels | 18, 19, 20 | 789 | 297 |
| Class 4: Ocean liners | 22, 24, 25 | 159 | 76 |
| Class 5: Pilot boats | 29, 30 | 105 | 33 |
| Class 6: Motorboats | 50, 51, 52, 70, 72, 77, 79 | 487 | 229 |
| Class 7: Mussel boats | 46, 47, 48, 49, 66 | 497 | 233 |
| Class 8: Sailboats | 37, 56, 57, 68 | 282 | 126 |
| Class 9: Fishing boats | 73, 74, 75, 76 | 366 | 148 |
| Class 10: Dredgers | 80, 93, 94, 95, 96 | 188 | 74 |
| Total | | 3570 | 1531 |
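The totals row of the dataset table can be checked, and the overall split and class imbalance read off, with a few lines of Python. The counts are copied from the table; the split fraction and the largest-to-smallest ratio are derived quantities computed here for illustration, not figures stated in the source.

```python
# Sample counts per class, copied from the table: (training, testing)
counts = {
    "Class 1: Passenger ferries": (554, 252),
    "Class 2: Tugboats": (143, 63),
    "Class 3: RO-RO vessels": (789, 297),
    "Class 4: Ocean liners": (159, 76),
    "Class 5: Pilot boats": (105, 33),
    "Class 6: Motorboats": (487, 229),
    "Class 7: Mussel boats": (497, 233),
    "Class 8: Sailboats": (282, 126),
    "Class 9: Fishing boats": (366, 148),
    "Class 10: Dredgers": (188, 74),
}

train_total = sum(train for train, _ in counts.values())
test_total = sum(test for _, test in counts.values())
# Share of all samples assigned to the training set
train_fraction = train_total / (train_total + test_total)
# Largest / smallest training class: a simple measure of class imbalance
train_sizes = [train for train, _ in counts.values()]
imbalance = max(train_sizes) / min(train_sizes)

for name, (train, test) in counts.items():
    print(f"{name:28s} train share = {train / (train + test):.3f}")
print(f"totals: {train_total} / {test_total}, "
      f"train fraction = {train_fraction:.3f}, imbalance = {imbalance:.1f}")
```

The sums reproduce the table's totals of 3570 and 1531, which corresponds to a train fraction of about 0.700 (a roughly 70/30 split), while the largest training class (RO-RO vessels) is about 7.5 times the smallest (pilot boats).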

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yuan, F.; Ke, X.; Cheng, E. Joint Representation and Recognition for Ship-Radiated Noise Based on Multimodal Deep Learning. J. Mar. Sci. Eng. 2019, 7, 380. https://doi.org/10.3390/jmse7110380
