Robust Forward-Looking Sonar-Image Mosaicking Without External Sensors for Autonomous Deep-Sea Mining

Abstract

1. Introduction

2. Related Work

2.1. Review of Sonar-Image Denoising Methods

2.2. Review of Sonar-Image Matching and Mosaicking Algorithms

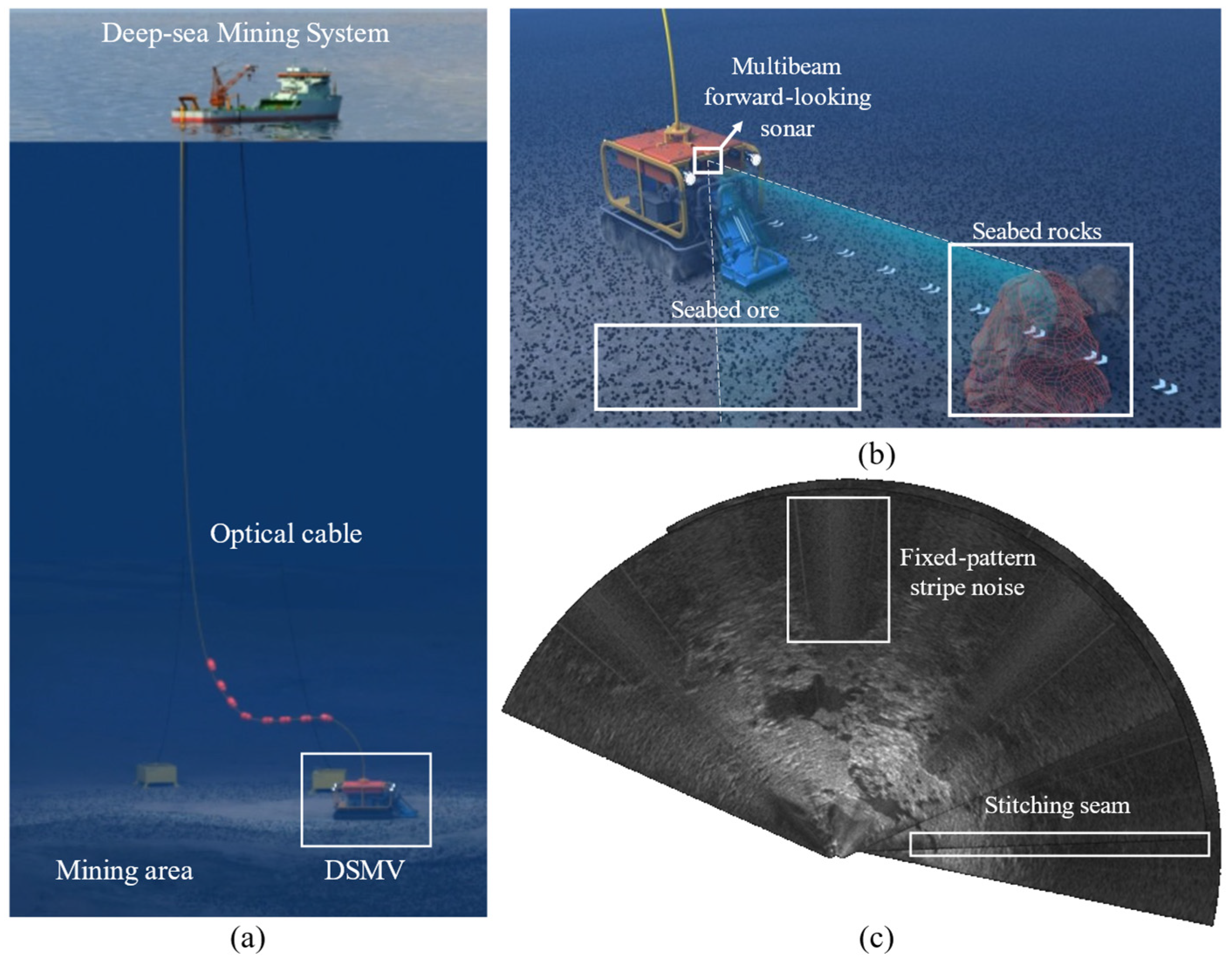

3. Methodology

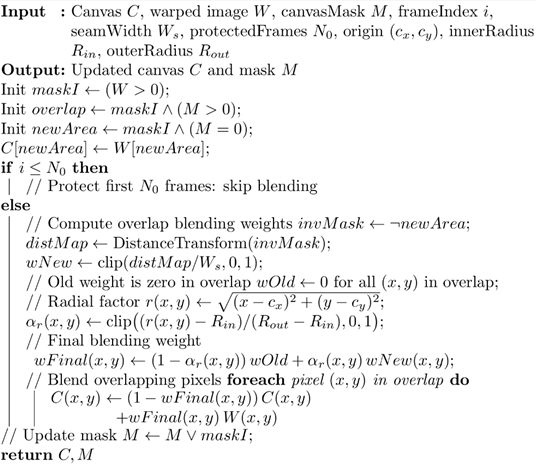

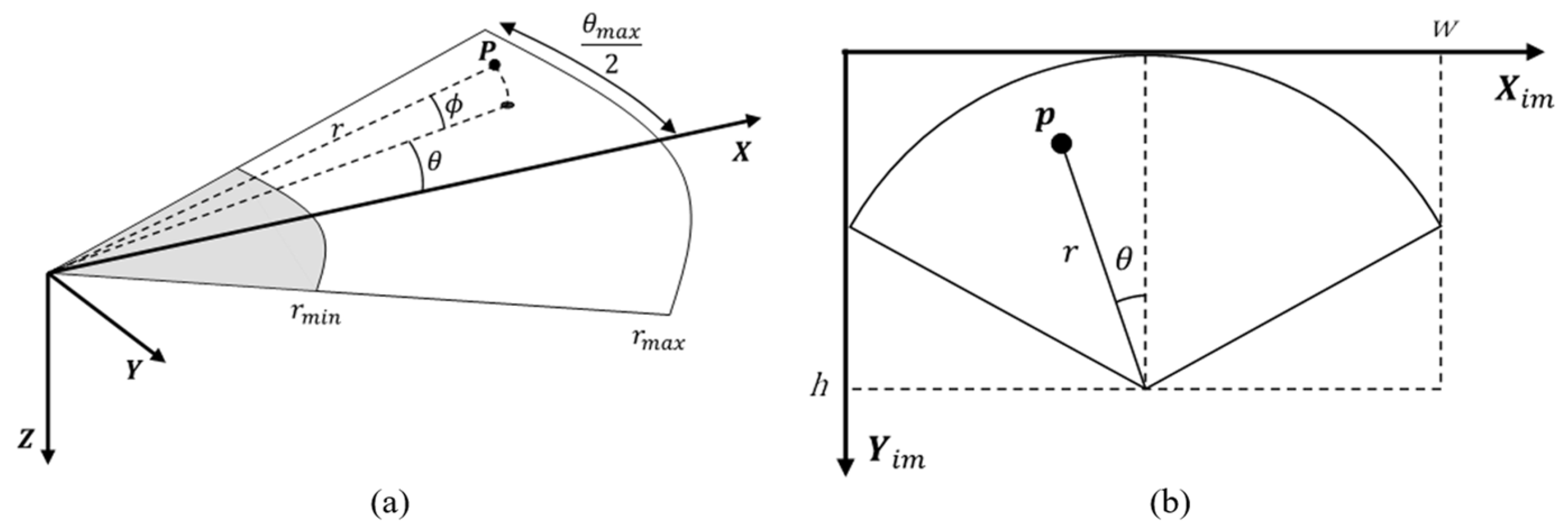

3.1. Imaging Model and Platform Perturbation

3.2. Brightness Normalization and Noise Suppression

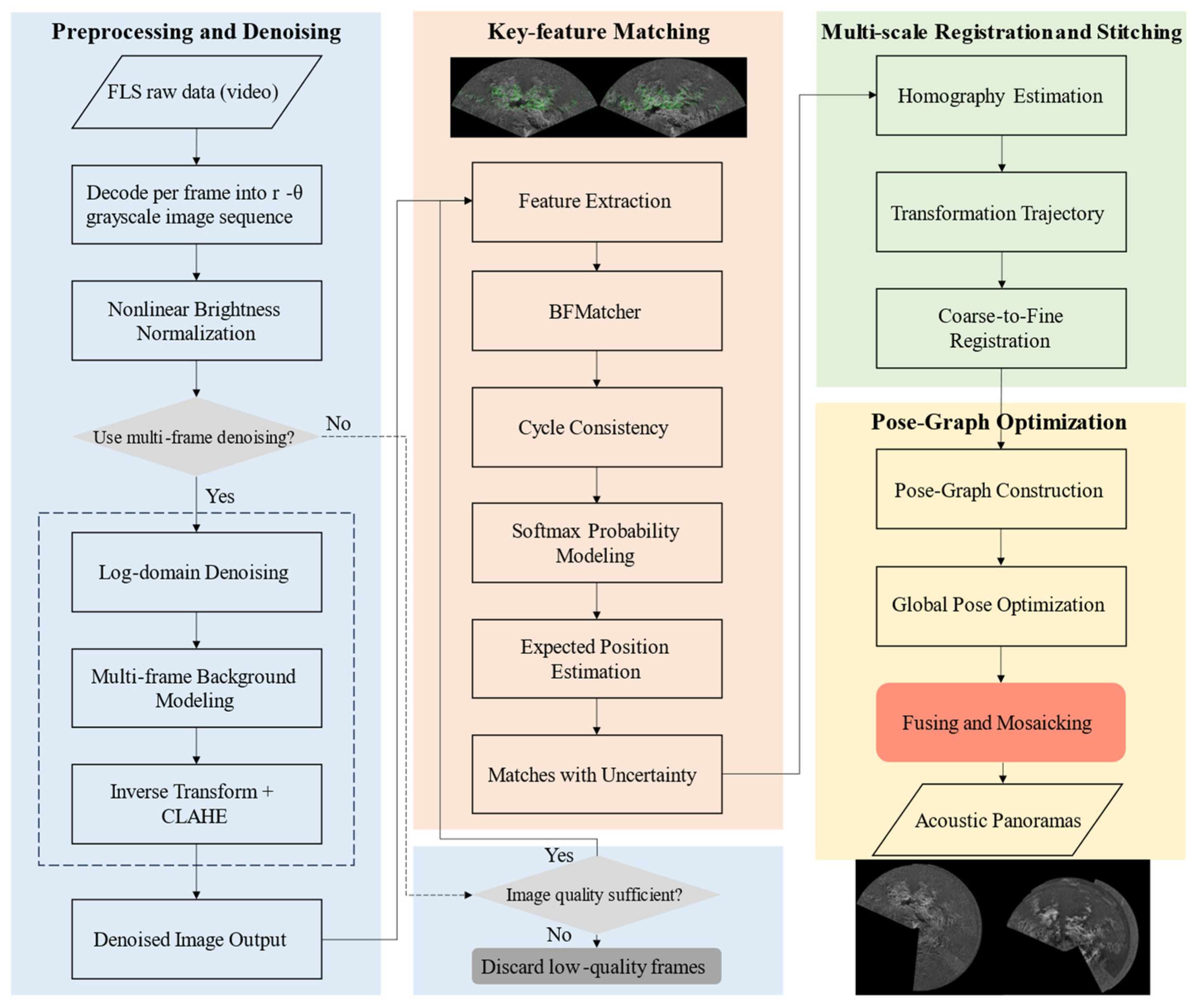

Algorithm 1: Two-stage preprocessing and denoising framework for FLS images.

3.3. Key-Point Matching Optimization for FLS Images

3.4. Multi-Scale Registration and Stitching

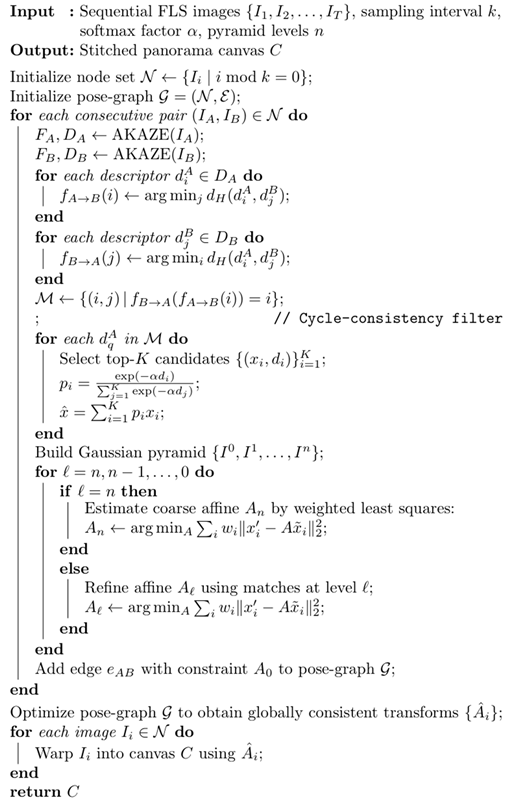

Algorithm 2: Uncertainty-aware multi-scale registration for FLS images.

Algorithm 3: Protected-frame radial-adaptive blending algorithm.

4. Experiments and Discussion

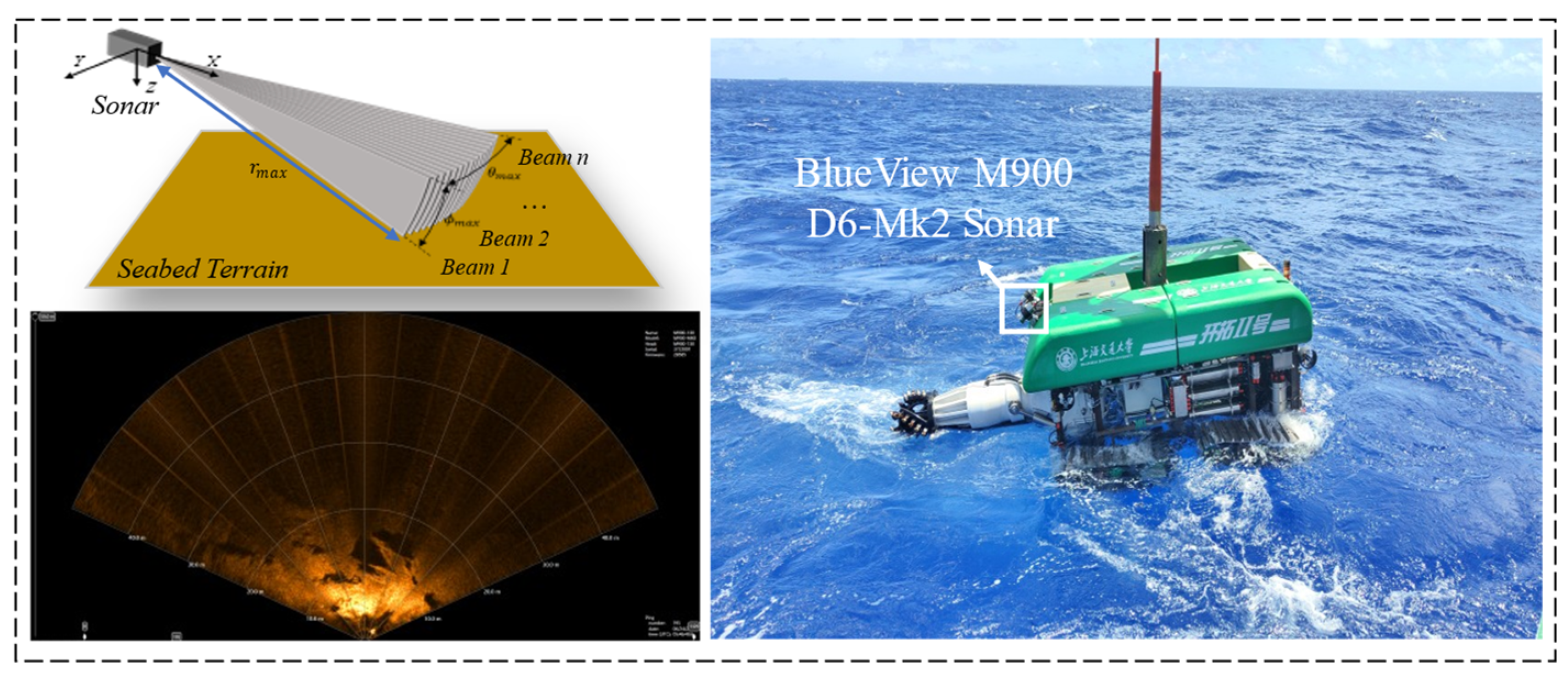

4.1. Experimental Platform and Dataset

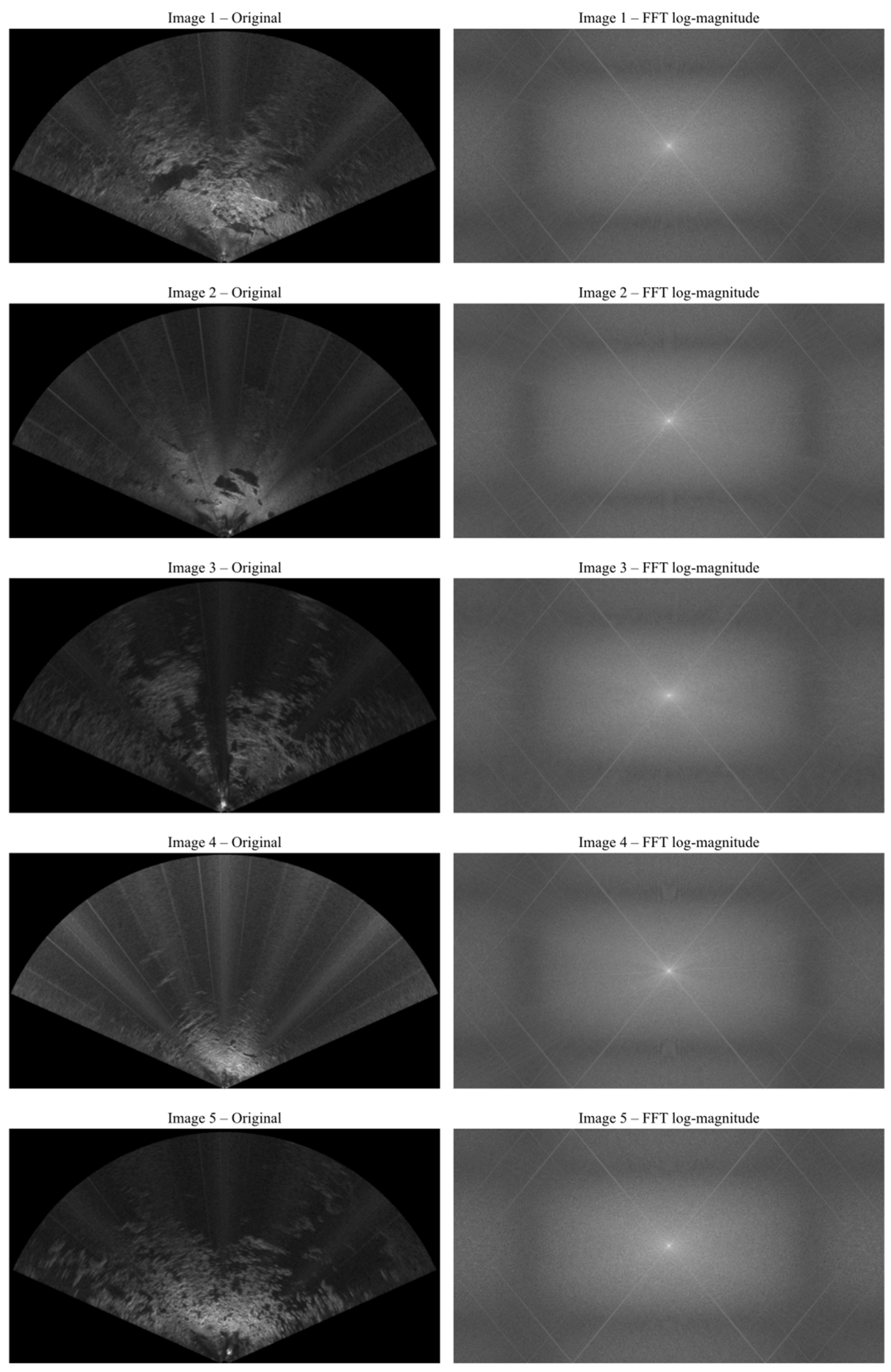

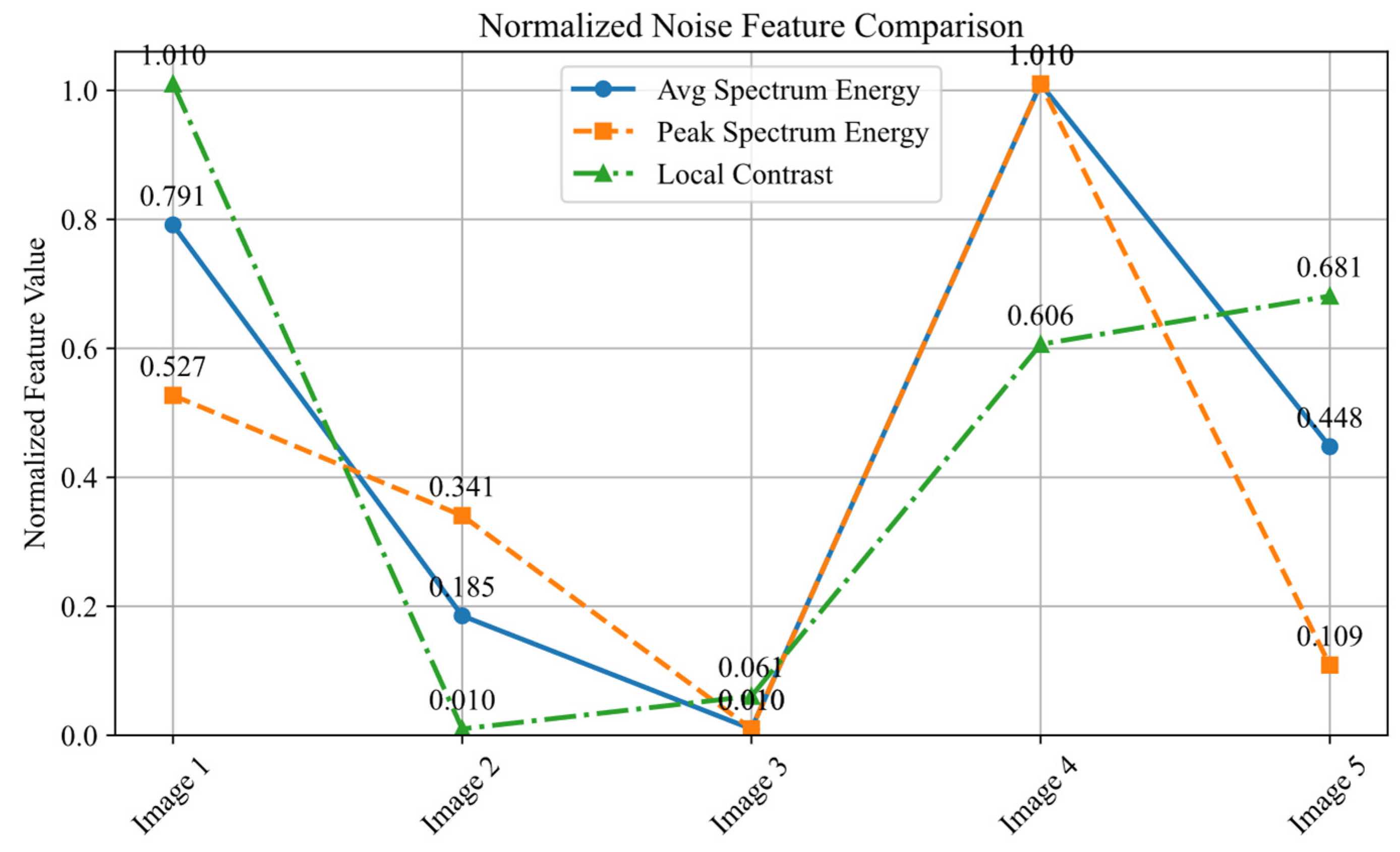

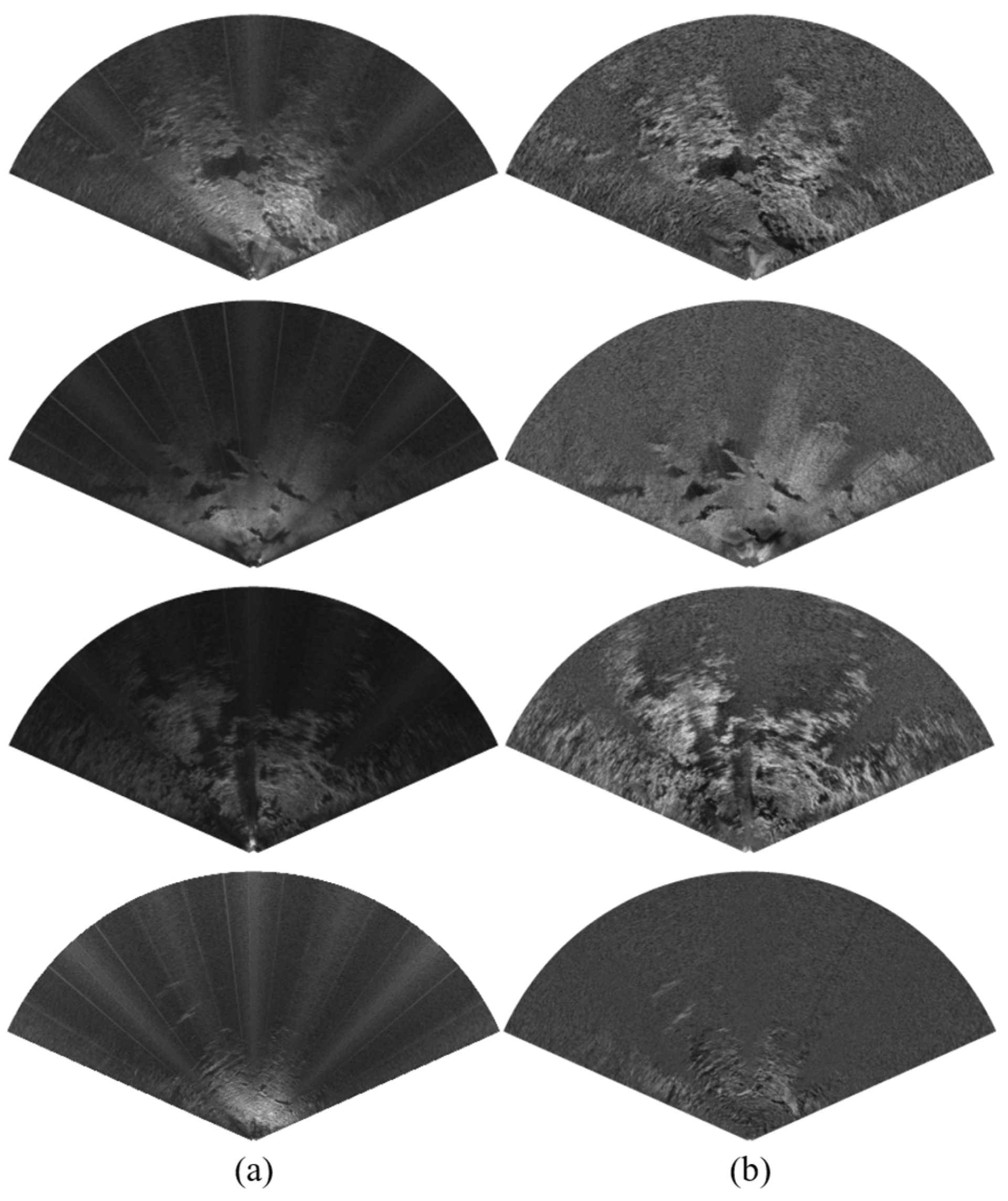

4.2. No-Reference Quantitative Evaluation of Denoising Results

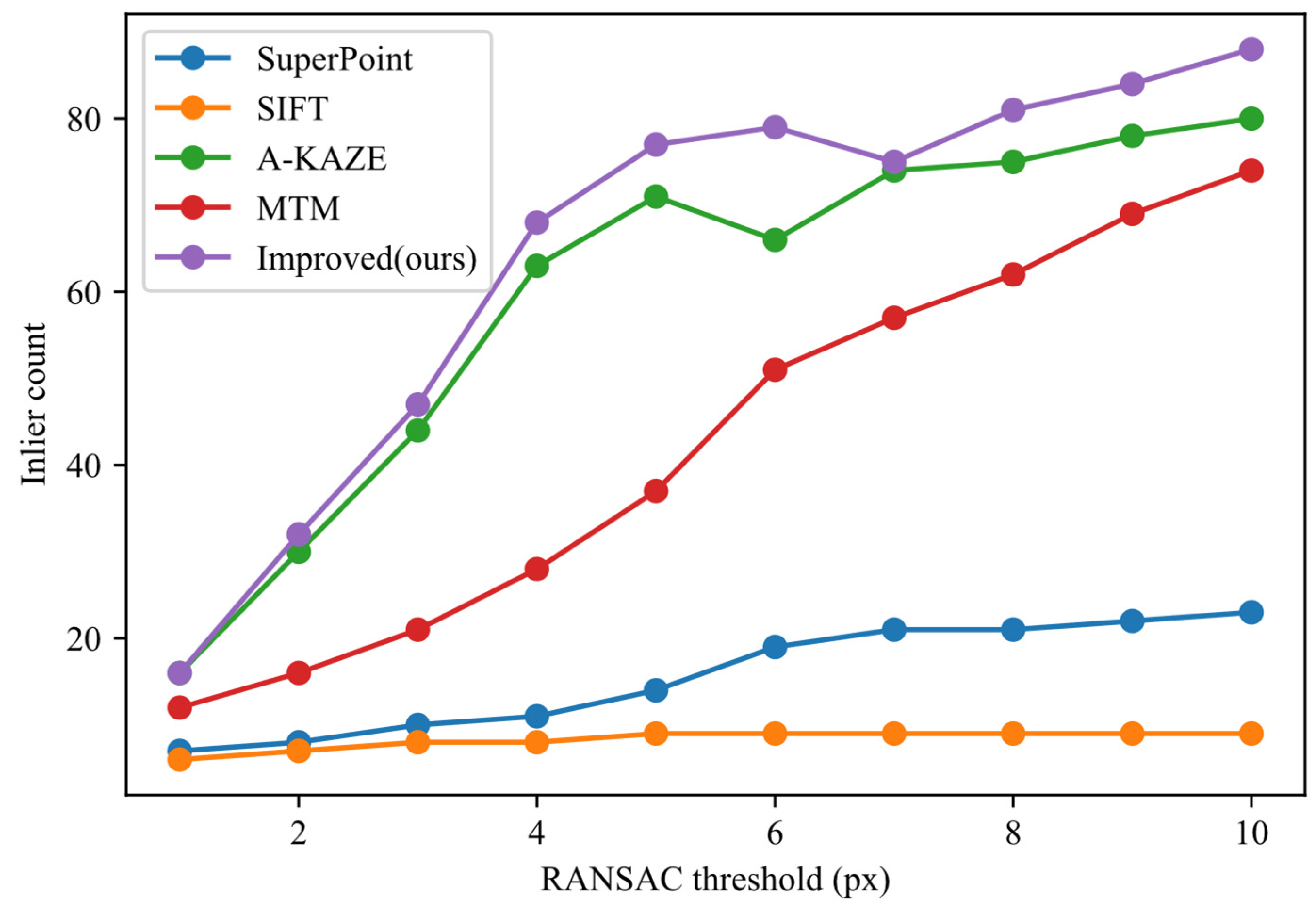

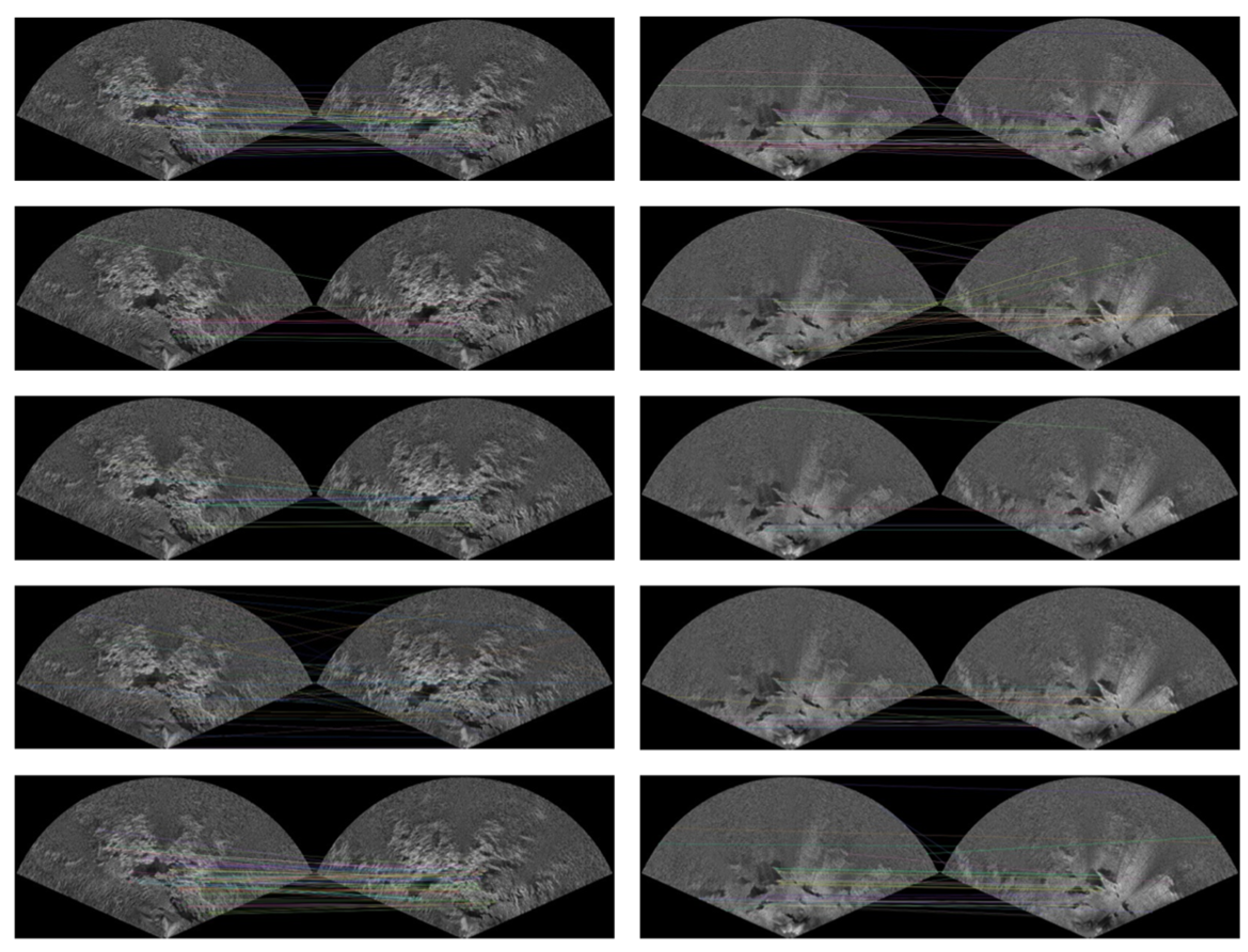

4.3. Performance Evaluation of Two-Frame Feature Matching and Registration

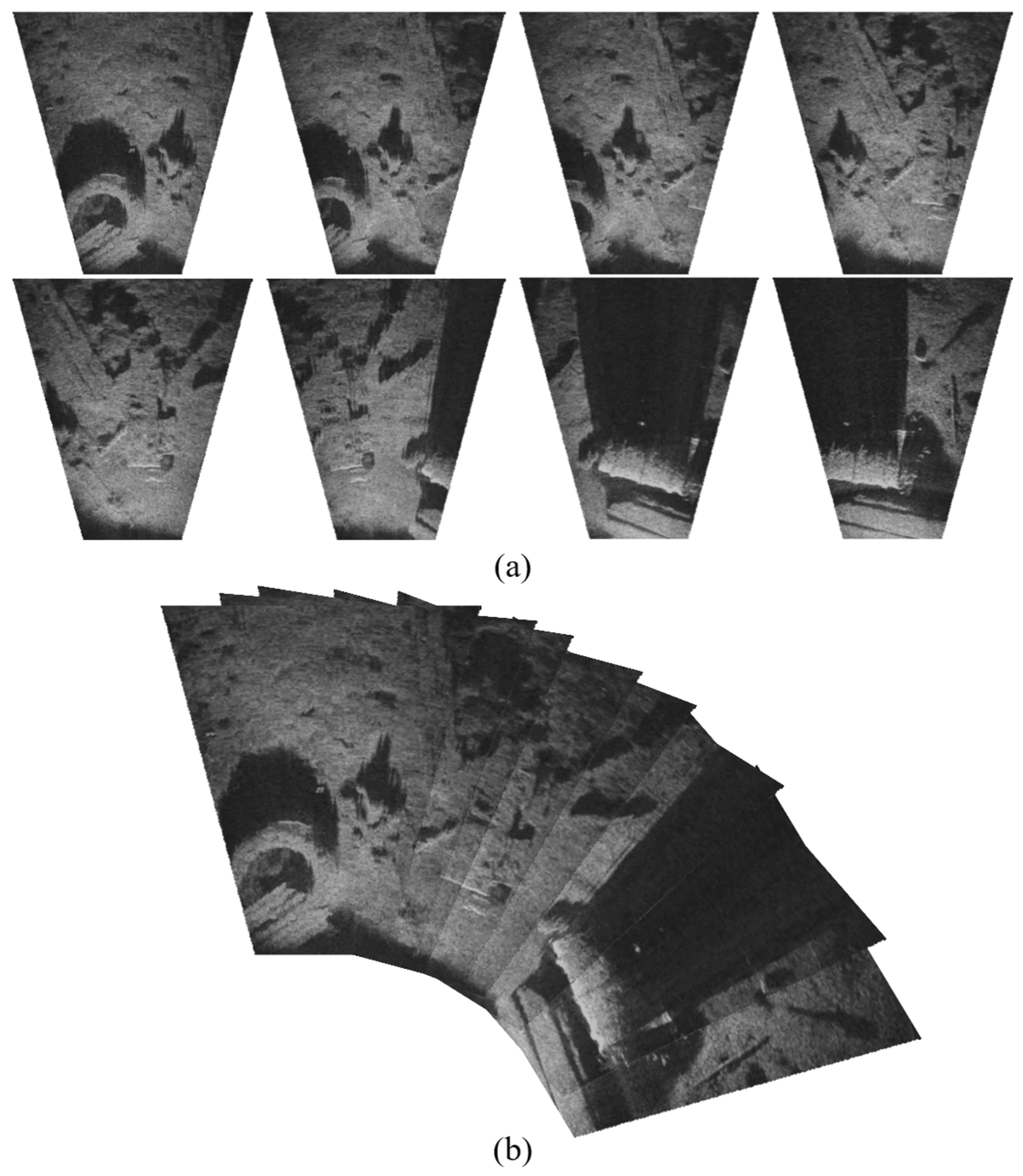

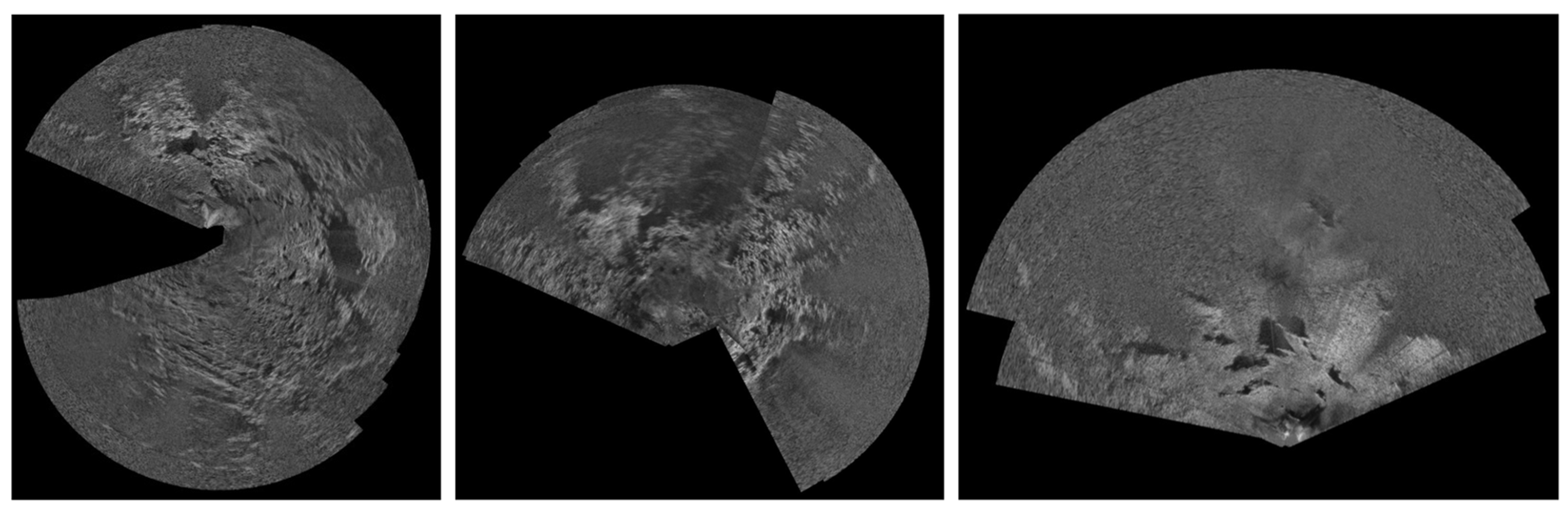

4.4. Demonstration of Deep-Sea Image Stitching Using Multi-Frame Matching

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Operating Frequency | 900 kHz |
| Field of View | 130° |
| Maximum Detection Range | 100 m |
| Optimal Detection Range | 2–60 m |
| Horizontal Beamwidth | 1° |
| Vertical Beamwidth | 20° |
| Maximum Number of Beams | 768 |
| Beam Spacing | 0.18° |
| Range Resolution | 1.3 cm |
| Update Rate | Up to 25 Hz |

| Scene ID | NIQE (Before) | BRISQUE (Before) | NIQE (After) | BRISQUE (After) |
|---|---|---|---|---|
| S1 | 5.138 | 43.451 | 6.016 | 54.147 |
| S2 | 4.665 | 38.006 | 5.609 | 53.102 |
| S3 | 4.987 | 40.441 | 5.477 | 46.230 |
| S4 | 4.914 | 37.371 | 5.555 | 50.392 |
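
For readers who want to compare the before and after columns directly, the following minimal Python sketch computes the per-scene change and the four-scene mean for each metric. The numbers are transcribed from the table above; the names `scores`, `deltas`, and `mean_before_after` are illustrative and do not come from the paper, and the sketch does not reproduce how the authors computed NIQE or BRISQUE.

```python
# Scores transcribed from the NIQE/BRISQUE table above.
# Each tuple is (before, after). All names here are illustrative assumptions.
scores = {
    "S1": {"niqe": (5.138, 6.016), "brisque": (43.451, 54.147)},
    "S2": {"niqe": (4.665, 5.609), "brisque": (38.006, 53.102)},
    "S3": {"niqe": (4.987, 5.477), "brisque": (40.441, 46.230)},
    "S4": {"niqe": (4.914, 5.555), "brisque": (37.371, 50.392)},
}


def deltas(metric):
    """Per-scene change (after minus before), rounded to three decimals."""
    return {
        scene: round(values[metric][1] - values[metric][0], 3)
        for scene, values in scores.items()
    }


def mean_before_after(metric):
    """Mean of the before column and mean of the after column over all scenes."""
    n = len(scores)
    before = sum(values[metric][0] for values in scores.values()) / n
    after = sum(values[metric][1] for values in scores.values()) / n
    return before, after


if __name__ == "__main__":
    for metric in ("niqe", "brisque"):
        before, after = mean_before_after(metric)
        print(metric, "mean before:", round(before, 3), "mean after:", round(after, 3))
        print(metric, "per-scene change:", deltas(metric))
```

Both scores are higher in the "After" column for every scene; how that direction should be read for sonar imagery is a matter for the discussion in Section 4.2, not for this sketch.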

| Scene ID | Trajectory Type | Frame Count | SSR (%) | PRE (%) |
|---|---|---|---|---|
| S1 | Non-loop | 16 | 93.8 | 383 |
| S2 | Loop | 27 | 92.6 | 247 |
| S3 | Loop | 31 | 90.3 | 256 |
| S4 | Non-loop | 25 | 92 | 303 |
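
The surviving text does not define the SSR column, so the following is only an arithmetic observation on the table, not the authors' definition. Each reported SSR value matches, to one decimal place, an integer count divided by that scene's frame count. The Python sketch below recovers that count; the helper name `nearest_count` and the variable `rows` are illustrative assumptions.

```python
# (scene, frame count, reported SSR in percent), transcribed from the table above.
rows = [("S1", 16, 93.8), ("S2", 27, 92.6), ("S3", 31, 90.3), ("S4", 25, 92.0)]


def nearest_count(frames, ssr_percent):
    """Integer k for which 100 * k / frames is closest to the reported percentage."""
    return round(frames * ssr_percent / 100)


if __name__ == "__main__":
    for scene, frames, ssr in rows:
        k = nearest_count(frames, ssr)
        implied = 100 * k / frames
        # Print the reported value next to the ratio implied by the integer count.
        print(f"{scene}: reported {ssr}%, implied {k}/{frames} = {implied:.2f}%")
```

Whether the underlying count is of frames or of frame pairs cannot be determined from the table alone.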

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Yang, J.; Lu, C.; Zhang, E.; Xu, W. Robust Forward-Looking Sonar-Image Mosaicking Without External Sensors for Autonomous Deep-Sea Mining. J. Mar. Sci. Eng. 2025, 13, 1291. https://doi.org/10.3390/jmse13071291
