Impacts of Wind Assimilation on Error Correction of Forecasted Dynamic Loads from Wind, Wave, and Current for Offshore Wind Turbines

Abstract

1. Introduction

2. Model and Data

2.1. Dynamic Load Forecasting Model for Wind Turbines

2.1.1. COAWST Model

2.1.2. Data Assimilation Module

2.1.3. Bias Correction Algorithm

2.1.4. Dynamic Load Calculation Module

- (a) Aerodynamic Loads

- (b) Wave Loads

- (c) Current Loads
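The three load types listed above are commonly estimated with a rotor thrust formula and Morison's equation. The following is a minimal sketch under those assumptions, not necessarily the exact formulation used in this study. The function names, default densities, and coefficient values are illustrative, and the thrust coefficient is held constant here although in practice it varies between the cut-in, rated, and cut-out wind speeds.

```python
import numpy as np


def thrust_load(wind_speed, rotor_area, thrust_coeff=0.8, air_density=1.225):
    """Rotor thrust in newtons: 0.5 * rho_air * A * C_T * U^2.

    thrust_coeff is assumed constant (illustrative value).
    """
    u = np.asarray(wind_speed, dtype=float)
    return 0.5 * air_density * rotor_area * thrust_coeff * u ** 2


def morison_force_per_length(velocity, acceleration, diameter,
                             drag_coeff=1.0, inertia_coeff=2.0,
                             water_density=1025.0):
    """Morison force per unit height of a vertical cylinder (N/m).

    Drag term:    0.5 * rho * C_D * A * u * |u|, with A = D * 1 (projected area)
    Inertia term: rho * C_M * V * du/dt,         with V = pi * D^2 / 4 * 1
    Coefficient defaults are illustrative assumptions.
    """
    u = np.asarray(velocity, dtype=float)
    du_dt = np.asarray(acceleration, dtype=float)
    projected_area = diameter              # per unit height
    displaced_volume = np.pi * diameter ** 2 / 4.0
    drag = 0.5 * water_density * drag_coeff * projected_area * u * np.abs(u)
    inertia = water_density * inertia_coeff * displaced_volume * du_dt
    return drag + inertia


def current_force_per_length(current_speed, diameter, drag_coeff=1.0,
                             water_density=1025.0):
    """Steady current load per unit height: the drag term only (N/m)."""
    return morison_force_per_length(current_speed, 0.0, diameter,
                                    drag_coeff=drag_coeff,
                                    water_density=water_density)
```

Integrating the per-unit-height forces over the submerged length of the monopile would give the total wave or current load; the sketch leaves that step out because it depends on the vertical velocity profile.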

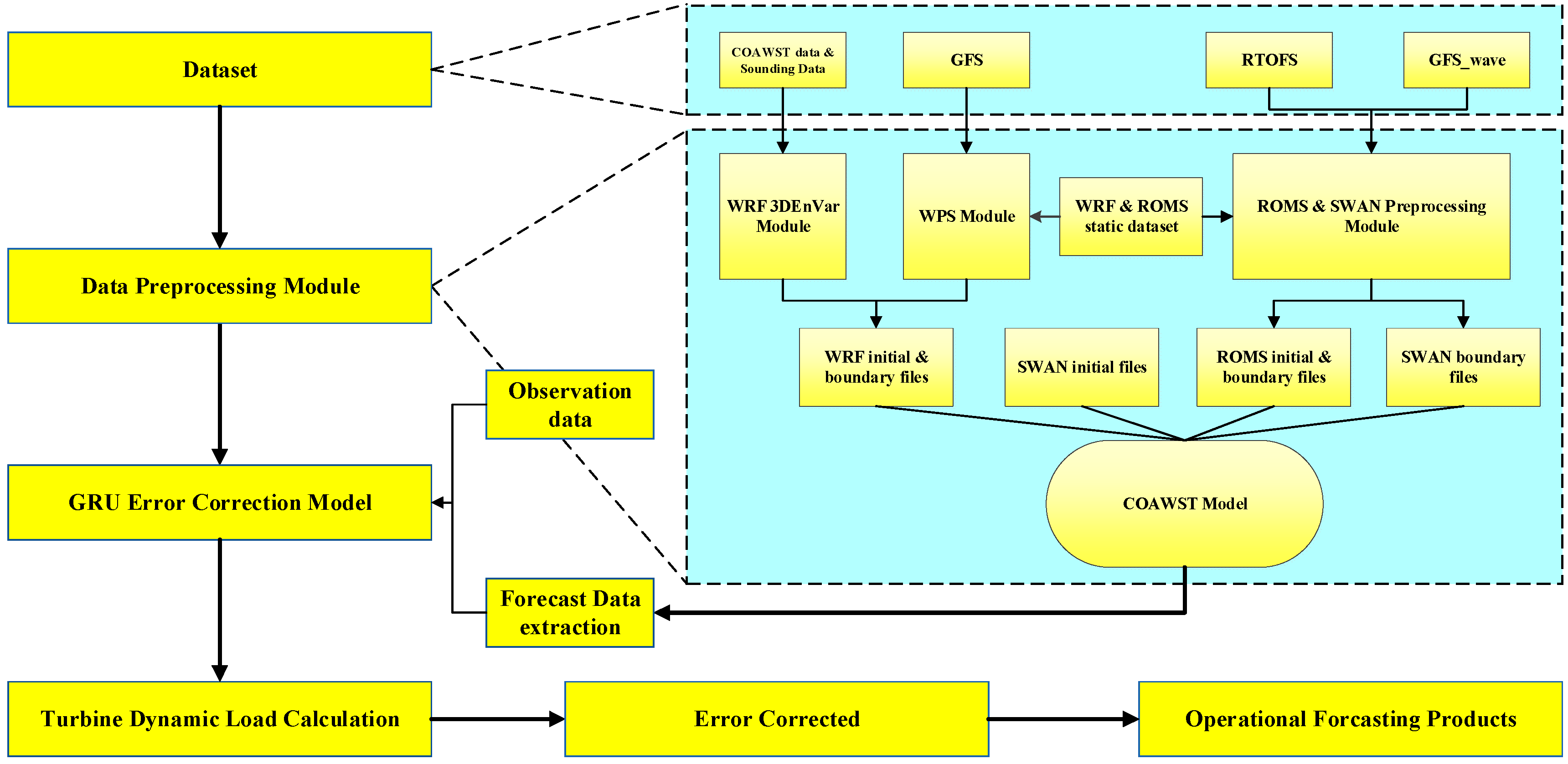

2.1.5. Flowchart of the Forecasting System

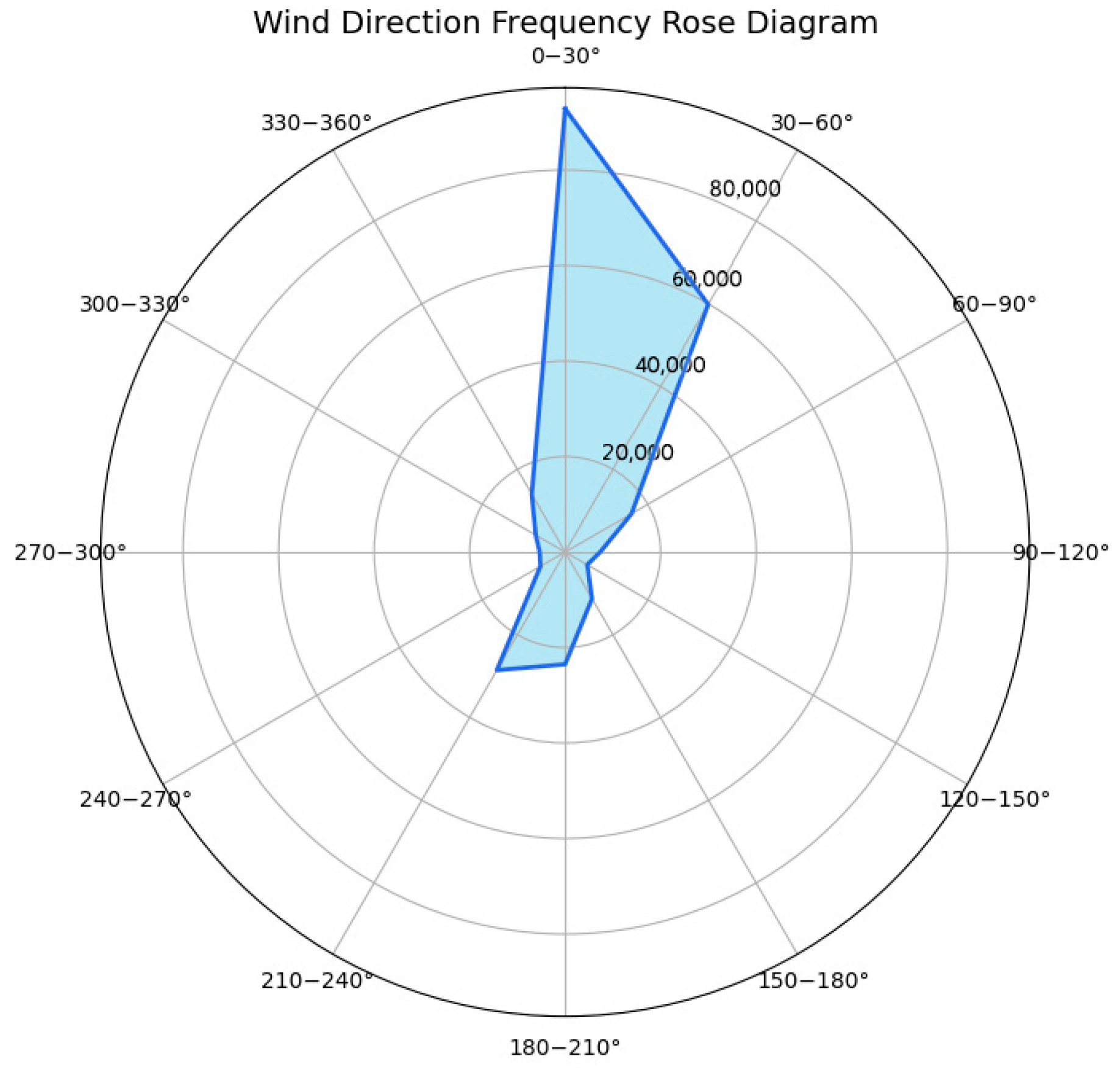

2.2. Data Description

3. Experimental Design

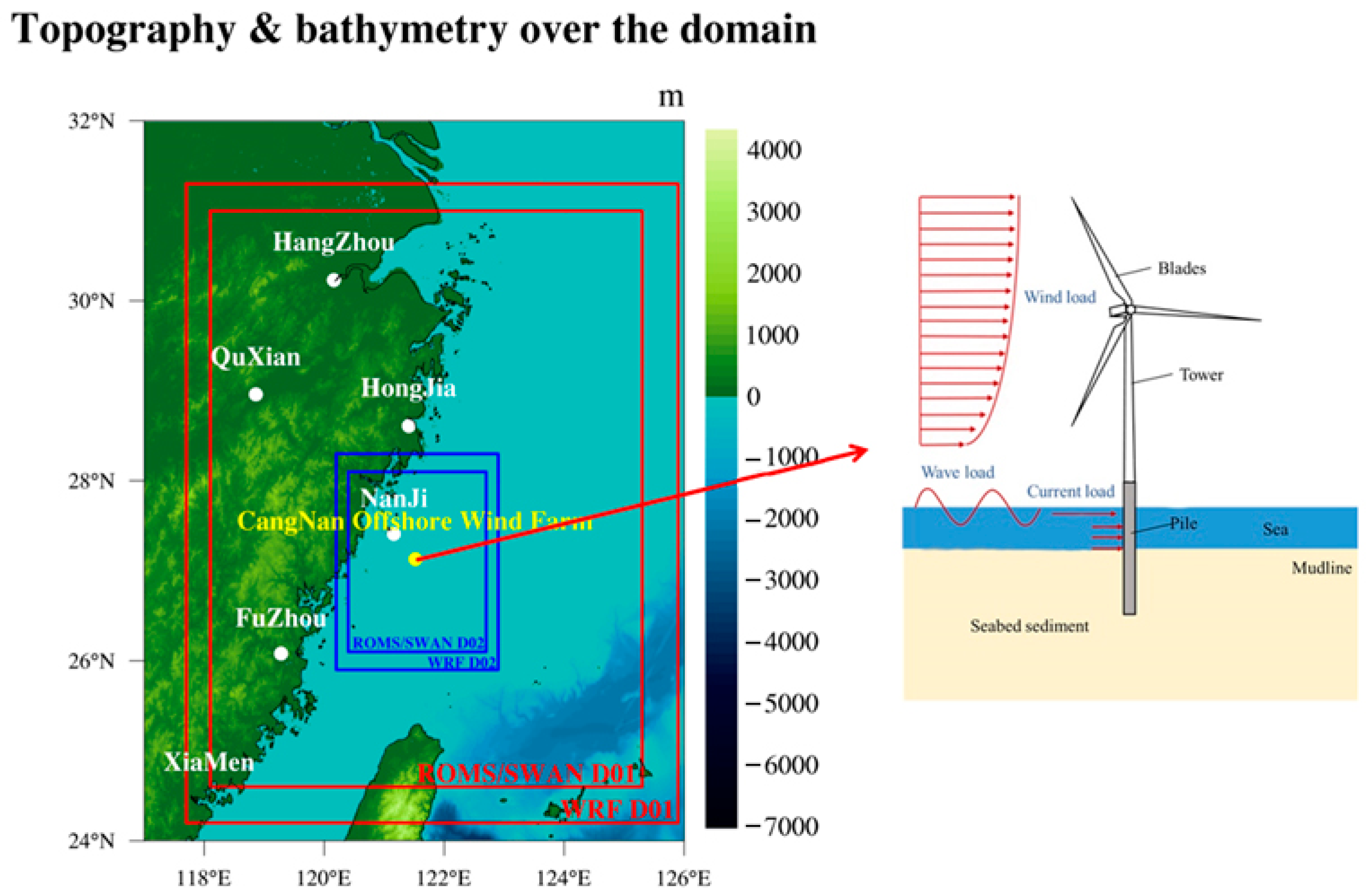

3.1. Model Configuration

3.2. Design of Sensitivity Tests

4. Results

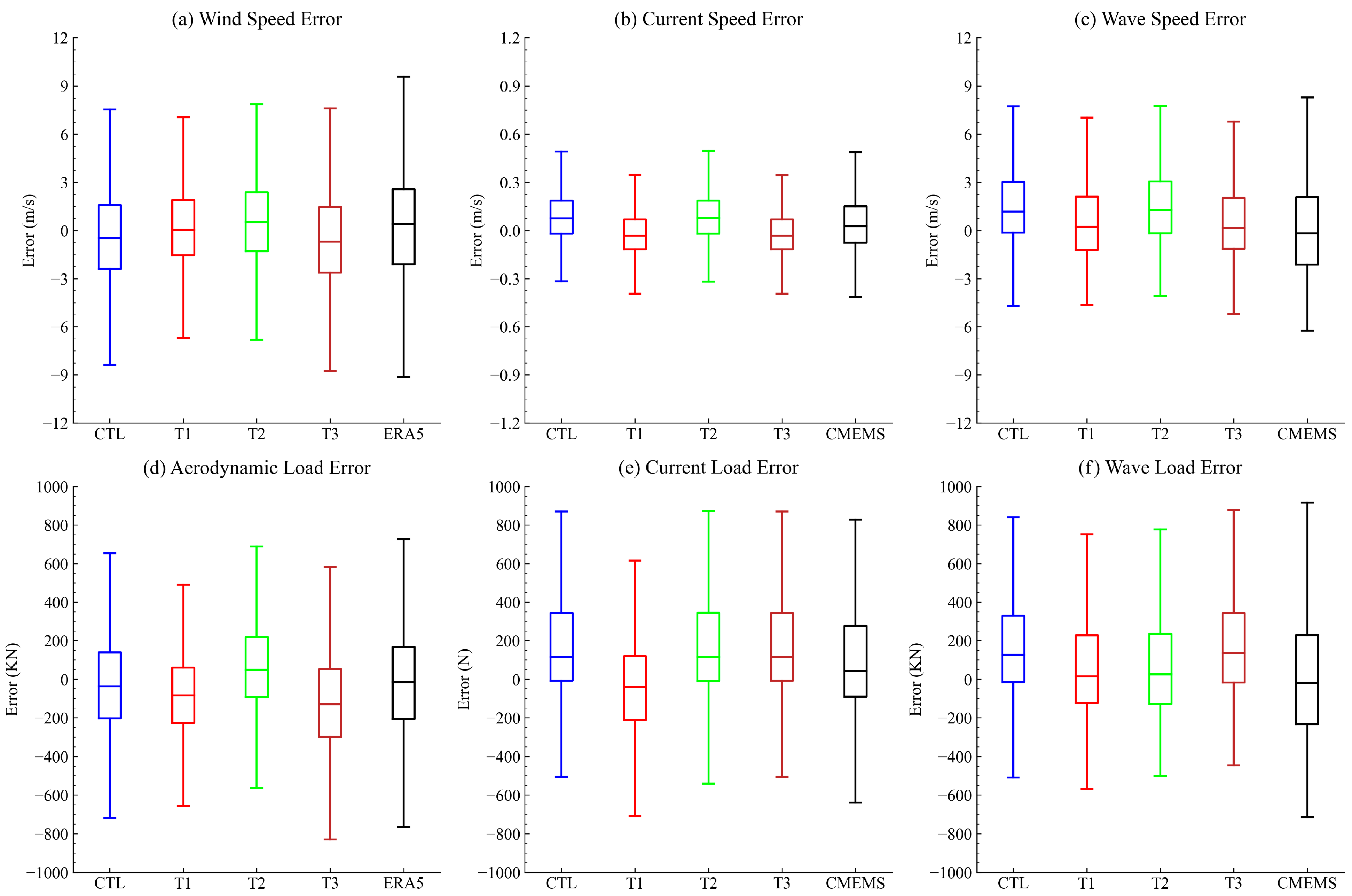

4.1. Error Distribution of Forecasted Dynamic Factors

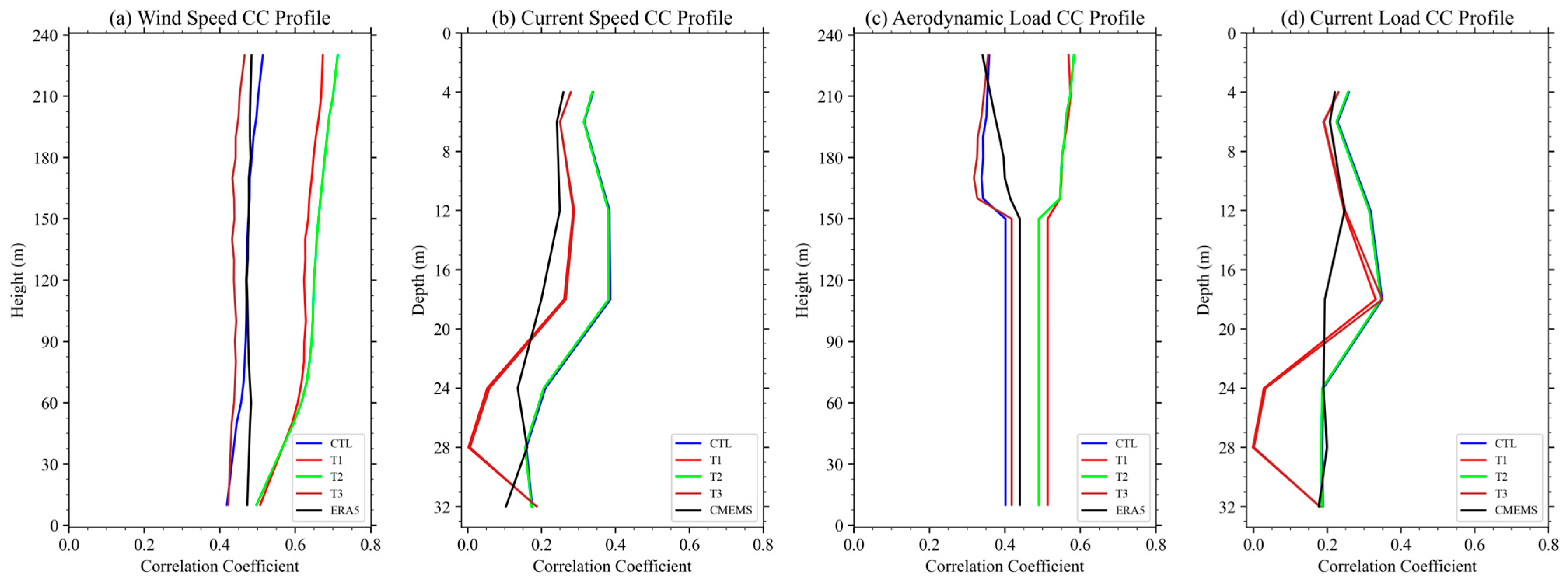

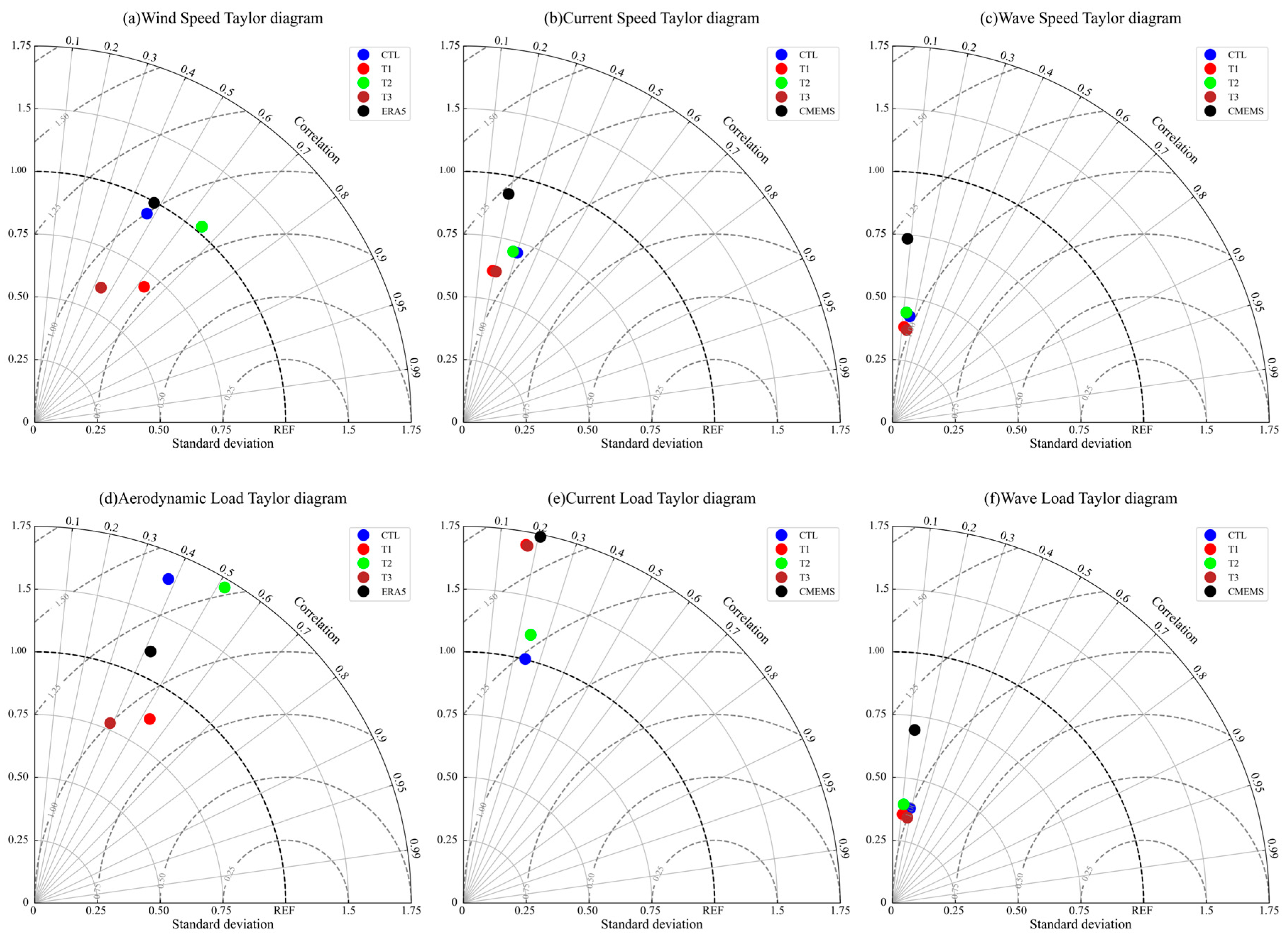

4.2. Statistical Characteristics of Predicted Dynamic Factors

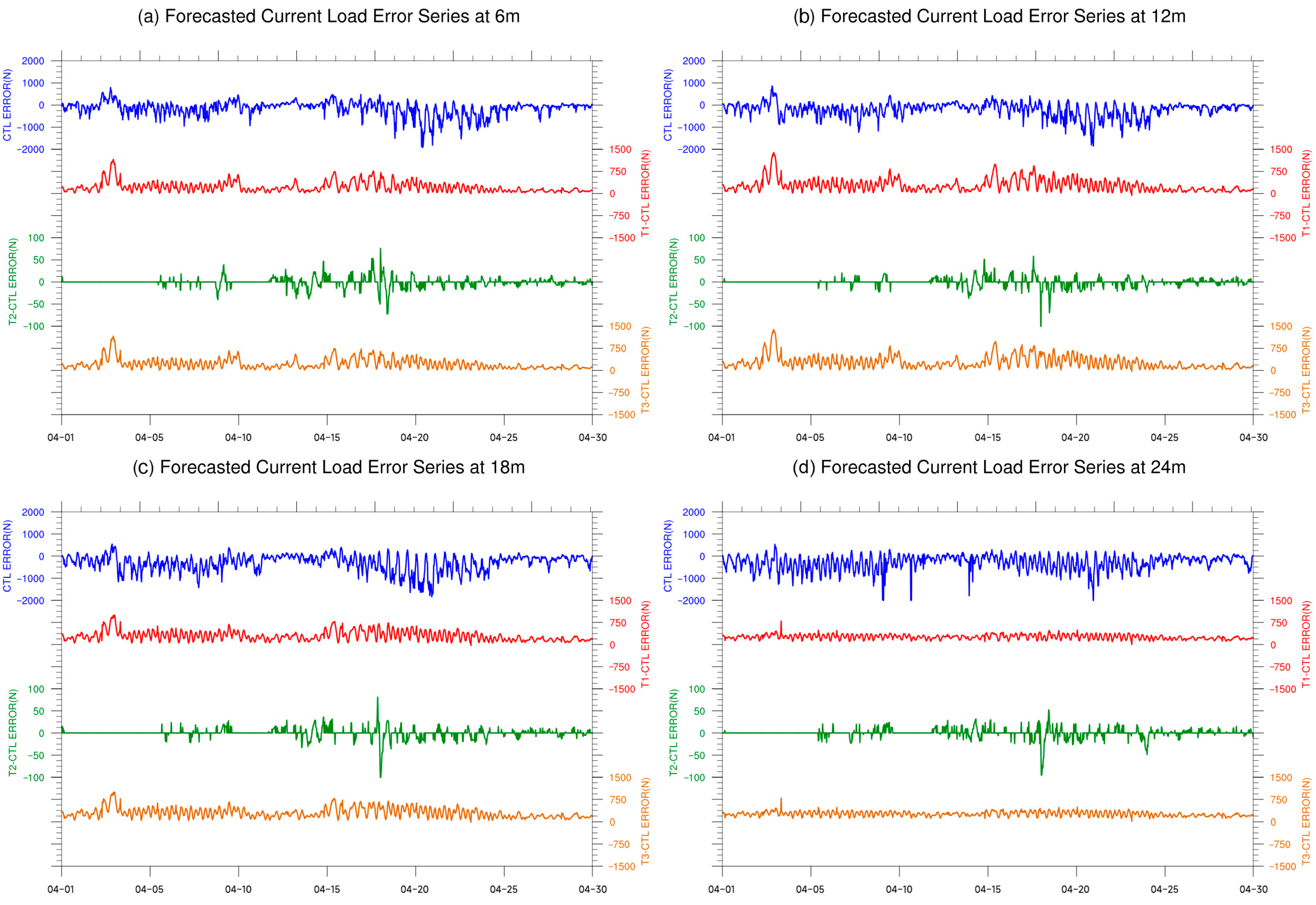

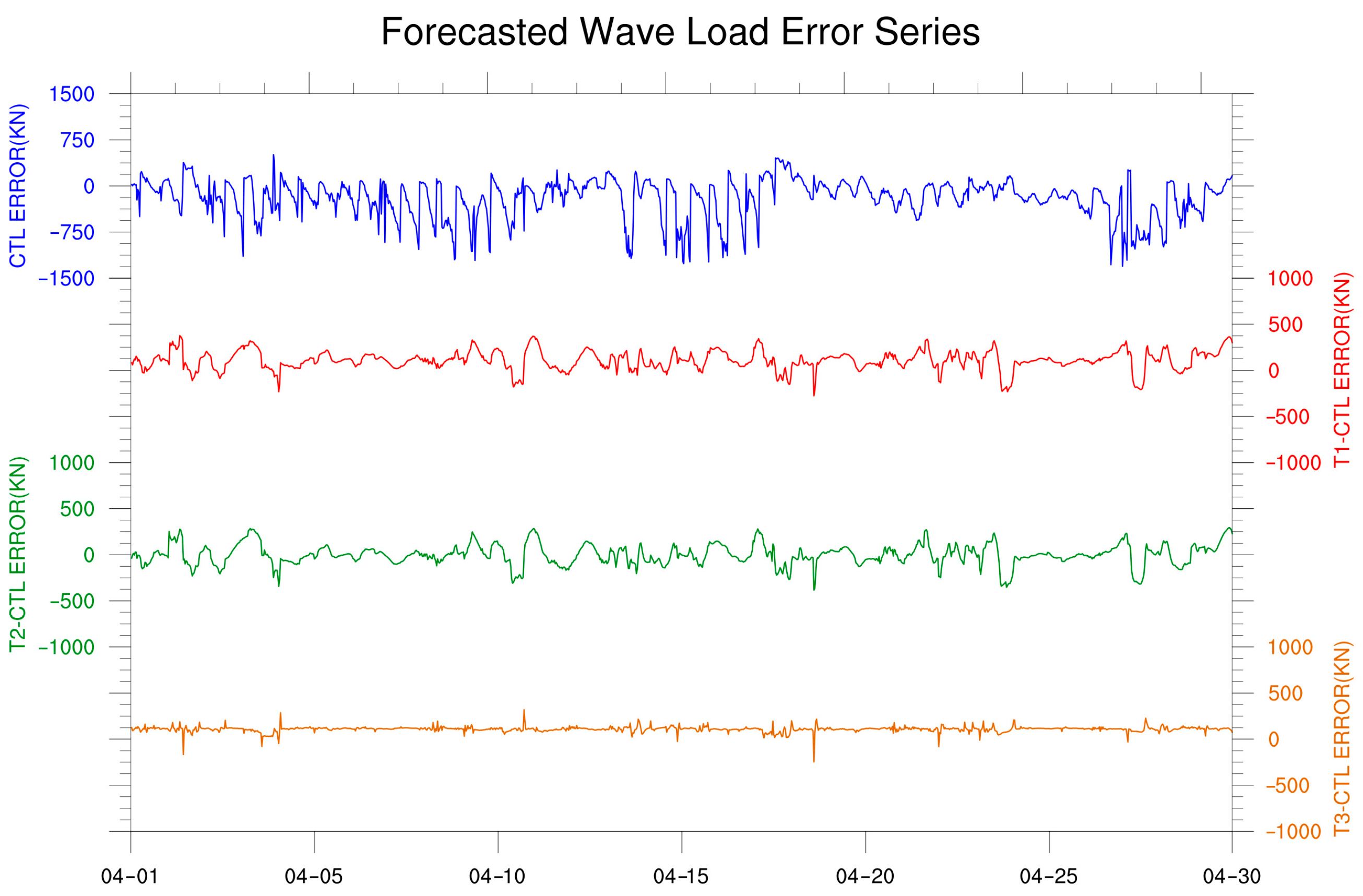

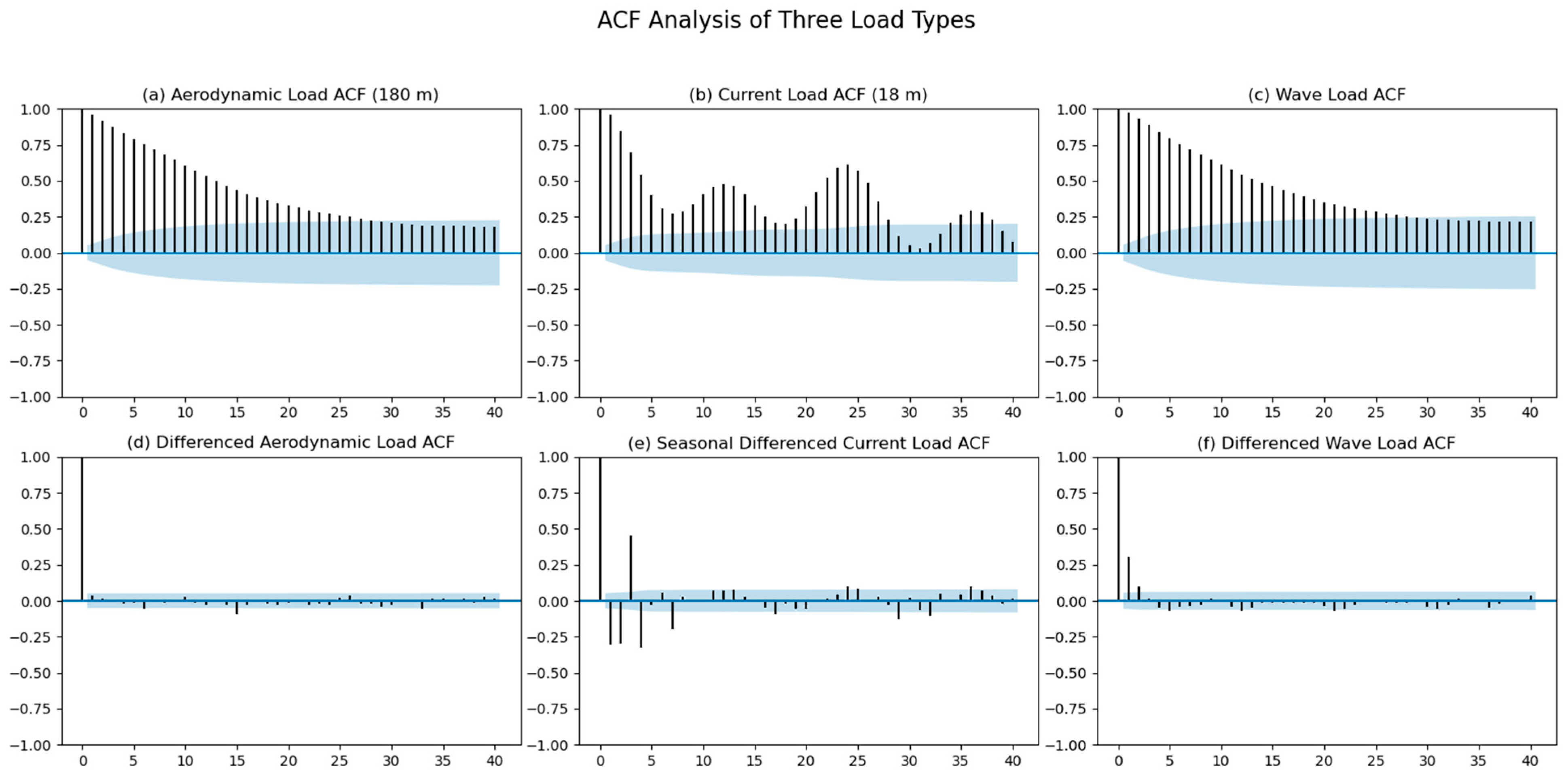

4.3. Temporal Variability of Predicted Dynamic Factors

5. Discussion and Conclusions

- The atmospheric assimilation process effectively reduces forecasting errors, particularly for aerodynamic loads on offshore wind turbines, and can be further enhanced through integration with deep learning-based error correction. During validation, the T3 test, which applied only error correction, reduced RMSEs of aerodynamic, current, and wave loads by approximately 8%, 6.9%, and 12.9%, respectively, compared to the control test (CTL). In contrast, the T1 test, which incorporated both atmospheric assimilation and error correction, achieved greater reductions of about 13%, 6.7%, and 10.09%, respectively. Overall, the combined approach produced forecasts that were more consistent with observational data and outperformed ERA5 and CMEMS reanalysis datasets across multiple evaluation metrics, including RMSE and correlation coefficient.

- While atmospheric assimilation effectively reduced forecast errors for environmental wind speed and aerodynamic loads at turbine height, it had a limited impact on the forecast accuracy of current speed, wave speed, and their associated dynamic loads. For wave loads, atmospheric assimilation introduced minor fluctuations in the simulation results, but the overall changes in error and standard deviation were not substantial. For current load forecasts, the differences caused by atmospheric assimilation were negligible and can be regarded as noise-level effects.

- The error correction module based on the GRU algorithm, although effective in reducing dynamic load forecasting errors, primarily corrects the mean bias of the forecast results. This process tends to suppress the temporal variability or fluctuations in the forecasts. Consequently, the corrected results exhibit limited improvement in terms of correlation coefficient and standard deviation. In contrast, atmospheric assimilation does not significantly alter the temporal variability of the original forecast. In the case of aerodynamic load forecasting, where the improvement was most significant, the integration of atmospheric assimilation effectively compensated for the decline in the correlation coefficient between the corrected forecasts and actual observations. However, for wave and current load forecasts, atmospheric assimilation had minimal impact on enhancing the correlation coefficient.

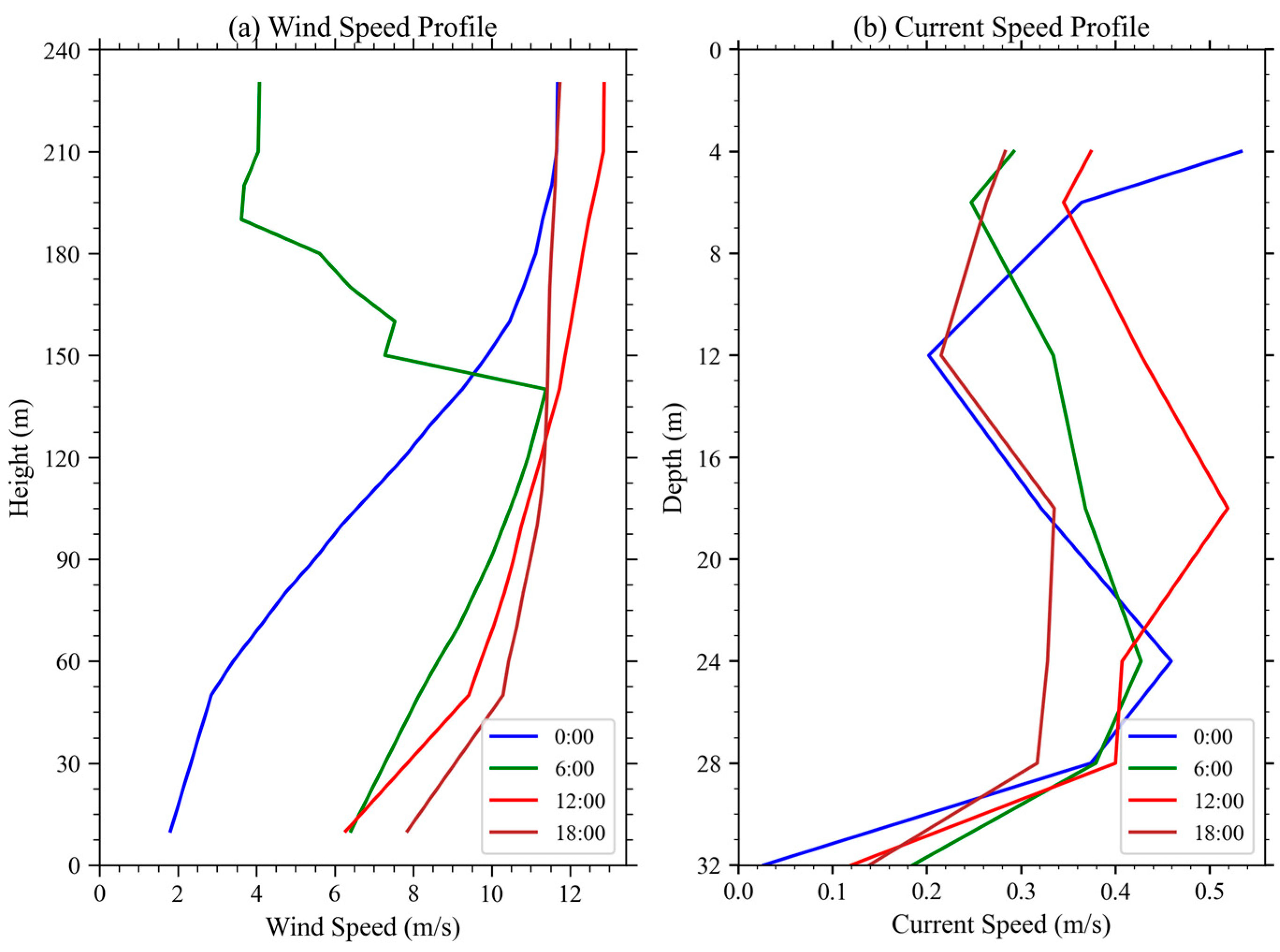

- The forecast errors for aerodynamic and current loads exhibited vertical variations that were generally consistent with the magnitude of their baseline values. At higher altitudes or shallower depths, where wind and current speeds tend to be greater, the corresponding load forecast errors were also relatively larger. However, the vertical variation in correlation coefficients was minimal, indicating that the forecast accuracy, in terms of trend consistency with observations, remained relatively stable across different heights.
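The observation that error correction mainly removes mean bias, and therefore lowers RMSE without improving the correlation coefficient, can be illustrated with a small numerical sketch. The example below uses synthetic data and a constant-offset correction as a simplified stand-in for the GRU module; the series, noise level, and bias value are all illustrative assumptions.

```python
import numpy as np


def rmse(forecast, obs):
    """Root mean square error between forecast and observation."""
    return float(np.sqrt(np.mean((forecast - obs) ** 2)))


def corr(forecast, obs):
    """Pearson correlation coefficient."""
    return float(np.corrcoef(forecast, obs)[0, 1])


rng = np.random.default_rng(0)

# Synthetic "observed" series (illustrative, not data from the study).
obs = 10.0 + 2.0 * np.sin(np.linspace(0.0, 6.0 * np.pi, 200))

# Forecast with a constant bias plus random error.
forecast = obs + 1.5 + rng.normal(0.0, 1.0, obs.size)

# Simplified correction: subtract the mean bias only.
corrected = forecast - np.mean(forecast - obs)

print("RMSE before/after:", rmse(forecast, obs), rmse(corrected, obs))
print("Corr before/after:", corr(forecast, obs), corr(corrected, obs))
print("Std  before/after:", float(np.std(forecast)), float(np.std(corrected)))
```

Because subtracting a constant shifts the series without changing its shape, the RMSE falls while the correlation coefficient and standard deviation stay the same. Raising the correlation requires a change in the forecast's temporal evolution, which is the role the conclusions above attribute to atmospheric assimilation for aerodynamic loads.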

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviations | |
|---|---|
| AR | Autoregressive Model |
| ARMA | Autoregressive Moving Average |
| ARIMA | Autoregressive Integrated Moving Average |
| ANN | Artificial Neural Network |
| CMEMS | Copernicus Marine Environment Monitoring Service |
| COAWST | Coupled Ocean-Atmosphere-Wave-Sediment Transport |
| ECMWF | European Centre for Medium-Range Weather Forecasts |
| ERA5 | ECMWF Reanalysis v5 |
| FL | Fuzzy Logic |
| GF | Grell-Freitas |
| GFS | Global Forecast System |
| GRU | Gated Recurrent Unit |
| JTWC | Joint Typhoon Warning Center |
| LS-SVM | Least Squares Support Vector Machine |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| MCT | Model Coupling Toolkit |
| MOI | Mercator Ocean International |
| RMSE | Root Mean Square Error |
| ROMS | Regional Ocean Modeling System |
| SWAN | Simulating Waves Nearshore |
| WRF | Weather Research and Forecasting |
| WSM6 | WRF Single-Moment 6-Class |
| 3D EnVar | Three-Dimensional Ensemble Variational Assimilation |

| Symbols | |
|---|---|
| Projected Area of the Unit Cylindrical Height Perpendicular to the Direction of Wave Propagation | |
| Swept Area of the Rotor | |
| Drag Coefficient | |
| Drag Coefficient in the Direction Perpendicular to the Cylinder Axis | |
| Inertia Coefficient | |
| Thrust Coefficient | |
| Drag Coefficient | |
| Diameter of the Monopile | |
| The Turbine Load | |
| Current Load | |
| Tower Wind Load | |
| Wave Load | |
| Wind Speed | |
| Cut-In Wind Speed | |
| Rated Wind Speed | |
| Cut-Out Wind Speed | |
| Wave Velocity | |
| The Average Wind Speed at Height Z | |
| Displacement Volume of the Unit Cylindrical Height | |
| Air Density | |
| Seawater Density | |


| | WRF | ROMS | SWAN |
|---|---|---|---|
| Time Step | 30 s | 60 s | 180 s |
| Grid Nesting | Yes | Yes | Yes |
| Outer Grid Number | 100 × 100 | 97 × 97 | 97 × 97 |
| Inner Grid Number | 100 × 100 | 97 × 97 | 97 × 97 |
| Horizontal Grid Resolution | 9 km for outer grid, 3 km for inner grid | 9 km for outer grid, 3 km for inner grid | 9 km for outer grid, 3 km for inner grid |
| Vertical Layer Number | 51 | 16 | None |
| Initial Data Source | GFS | RTOFS | GFS_wave |
| Variable Exchange Interval | 1800 s | 1800 s | 1800 s |

| | CTL | T1 | T2 | T3 | ERA5 | CMEMS |
|---|---|---|---|---|---|---|
| Wind speed | 3.51 m/s | 2.68 m/s | 2.97 m/s | 3.19 m/s | 3.55 m/s | - |
| Aerodynamic load | 319.21 kN | 275.59 kN | 278.38 kN | 344.82 kN | 319.93 kN | - |
| Wave speed | 3.19 m/s | 2.83 m/s | 3.28 m/s | 2.77 m/s | - | 3.23 m/s |
| Wave load | 373.58 kN | 335.85 kN | 382.93 kN | 327.67 kN | - | 377.48 kN |
| Current speed | 0.17 m/s | 0.13 m/s | 0.16 m/s | 0.14 m/s | - | 0.17 m/s |
| Current load | 336.26 N | 315.51 N | 336.76 N | 312.78 N | - | 334.02 N |
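Relative changes against the control test can be derived directly from tabulated values like those above. The sketch below assumes the table reports RMSE for each experiment (an assumption, since the table carries no caption here) and computes the percentage reduction of each test relative to CTL for the three load rows. The dictionary layout and function name are illustrative.

```python
# Load rows copied from the table above; assumed to be RMSE values.
rmse_table = {
    "Aerodynamic load (kN)": {"CTL": 319.21, "T1": 275.59, "T2": 278.38, "T3": 344.82},
    "Wave load (kN)": {"CTL": 373.58, "T1": 335.85, "T2": 382.93, "T3": 327.67},
    "Current load (N)": {"CTL": 336.26, "T1": 315.51, "T2": 336.76, "T3": 312.78},
}


def pct_reduction(baseline, value):
    """Percentage reduction relative to baseline (negative means an increase)."""
    return 100.0 * (baseline - value) / baseline


# Reduction of every test relative to the control test, per load type.
reductions = {
    name: {test: pct_reduction(row["CTL"], val)
           for test, val in row.items() if test != "CTL"}
    for name, row in rmse_table.items()
}

for name, row in reductions.items():
    summary = ", ".join(f"{test}: {pct:+.2f}%" for test, pct in row.items())
    print(f"{name}: {summary}")
```

A positive value indicates a lower error than CTL, and a negative value indicates a higher one.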

| | CTL | T1 | T2 | T3 | ERA5 | CMEMS |
|---|---|---|---|---|---|---|
| Wind speed | 0.47 | 0.65 | 0.63 | 0.44 | 0.47 | - |
| Aerodynamic load | 0.38 | 0.53 | 0.51 | 0.39 | 0.42 | - |
| Wave speed | 0.16 | 0.11 | 0.14 | 0.12 | - | 0.08 |
| Wave load | 0.18 | 0.11 | 0.11 | 0.17 | - | 0.12 |
| Current speed | 0.28 | 0.18 | 0.27 | 0.19 | - | 0.19 |
| Current load | 0.24 | 0.17 | 0.24 | 0.18 | - | 0.21 |

| | OBS | CTL | T1 | T2 | T3 | ERA5 | CMEMS |
|---|---|---|---|---|---|---|---|
| Wind speed (m²/s²) | 3.21 | 3.43 | 2.34 | 3.49 | 2.04 | 3.42 | - |
| Aerodynamic load (10⁶ N²) | 284.69 | 270.74 | 277.69 | 294.96 | 236.27 | 289.94 | - |
| Wave speed (m²/s²) | 2.56 | 1.09 | 0.98 | 1.13 | 0.95 | - | 1.88 |
| Wave load (10⁶ N²) | 299.78 | 110.96 | 102.29 | 111.59 | 101.59 | - | 201.27 |
| Current speed (m²/s²) | 0.13 | 0.093 | 0.092 | 0.094 | 0.091 | - | 0.12 |
| Current load (N²) | 298.31 | 140.24 | 228.32 | 140.59 | 227.83 | - | 244.22 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zou, J.; Yang, S.; Liu, X.; Wang, H.; Liu, L.; Guo, X.; Zhang, H.; Qiu, Z.; Gai, Z. Impacts of Wind Assimilation on Error Correction of Forecasted Dynamic Loads from Wind, Wave, and Current for Offshore Wind Turbines. J. Mar. Sci. Eng. 2025, 13, 1211. https://doi.org/10.3390/jmse13071211
