1. Introduction

For marine mammals, behavior provides key insights into health status, social interactions, and environmental adaptation. By systematically monitoring and analyzing these behaviors, researchers and caretakers can detect early signs of distress, illness, or abnormal activity, enabling timely intervention and better care. However, manual behavior observation is labor-intensive, subjective, and often affected by observer fatigue, experience level, and environmental conditions. These challenges become more pronounced in large-scale monitoring, such as tracking multiple animals in expansive enclosures or natural habitats. Consequently, there is an increasing demand for automated behavior recognition systems to minimize human intervention.

Recent advancements in computer vision and machine learning have made automated behavior recognition possible. However, existing systems face major limitations: they typically rely on predefined behavior categories and require large labeled datasets for training, which makes it difficult to accommodate new or unexpected behaviors.

To address these challenges, this study proposes a flexible, user-driven animal behavior recognition system that leverages DeepLabCut-based pose estimation and a Long Short-Term Memory (LSTM) network to analyze animal movements over time. The system introduces an incremental learning framework, enabling users to seamlessly add new behaviors with minimal annotation while retaining previously learned ones. Compared to traditional approaches, this system reduces annotation effort, enhances adaptability, and supports diverse research settings without requiring frequent model retraining.

By improving behavior recognition accuracy, adaptability, and usability, this system provides a more practical and scalable solution for monitoring animal welfare and facilitating behavioral research across different species and environments.

Animal behavior serves as a fundamental indicator of physical and mental well-being, making its accurate assessment crucial for both conservation and animal care. Monitoring animal behavior in controlled environments, such as aquariums, zoos, and research facilities, helps identify stress, illness, and environmental factors that may impact welfare. Similarly, in wild populations, behavioral patterns reflect ecosystem health, human disturbances, and habitat conditions.

Animals with complex social structures and high cognitive abilities often exhibit behavioral changes that can signify underlying health concerns or environmental stressors. However, manual tracking of animal behaviors over extended periods is impractical, especially when monitoring multiple individuals across large habitats. Existing automated methods are often constrained by fixed behavior categories, high data annotation requirements, and limited adaptability, making them less effective in real-world applications.

This study aims to develop a scalable and user-adaptive system that enables continuous learning and real-time observation of animal behaviors. By allowing users to incrementally update behavior models with minimal annotation, the system enhances its adaptability and ensures more efficient, accurate, and accessible behavior recognition. Beyond simply detecting behaviors, this system is designed to support early identification of behavior shifts that may indicate stress or health issues, allowing caretakers to intervene before conditions worsen. Furthermore, automating behavior recognition reduces the workload of caregivers, allowing them to focus on animal care rather than manual monitoring.

Ultimately, this research not only seeks to enhance animal care in controlled environments but also contributes to wildlife conservation by providing an efficient and adaptable behavior recognition tool. A flexible and expandable model applicable across different species and research environments would be invaluable for both scientists and conservationists working to protect biodiversity and preserve ecosystems.

The primary objective of this research is to develop an expandable and user-driven animal behavior recognition system that improves upon conventional approaches. Specifically, the system aims to achieve the following:

Reduce Annotation Effort

Unlike traditional models that require extensive labeled datasets, this system updates behavior models using a small number of annotated video samples, significantly reducing the time and expertise required for data preparation.

Enable Incremental Learning Without Full Retraining

Implement an incremental learning framework that allows users to seamlessly add new behavior categories while maintaining recognition of previously learned behaviors. This ensures adaptability without requiring full model retraining.

Enhance Scalability Across Species and Environments

Design a model that is not restricted to specific species and that adapts to various research settings, including zoos, aquariums, and field studies.

Develop a User-Friendly Interface

Create an intuitive annotation and training interface that allows both researchers and non-experts to contribute new behavior data, ensuring broader accessibility and ease of use.

Through these advancements, the proposed system surpasses traditional methods by offering a more efficient, scalable, and user-adaptive solution for real-time animal behavior analysis.

2. Related Works

2.1. Paper Surveys of Animal Behavior Observation

Observing animal behavior is fundamental to ethology and offers insights into the physiological and psychological states of animals across various environments. Traditional observation methods have long documented specific actions and interaction patterns, forming the basis for understanding animal welfare and ecological adaptations. Recent studies have expanded this field, developing systematic behavioral catalogs and assessing observed behaviors in both captive and wild settings.

Behavioral observation in captivity provides a controlled setting for studying animal welfare. For instance, Delfour et al. [1] found that behavioral diversity is a positive welfare indicator that is influenced by factors such as social grouping and environmental enrichment. Similarly, Jensen et al. [2] showed that dolphins exhibit increased vigilance and surface-looking behaviors before shows, indicating anticipation of positive reinforcement. In [3], they further linked frequent anticipatory behavior to cognitive bias, suggesting a potential indicator of negative affective states.

In natural environments, behavioral observations provide critical insights into individual behavioral phenotypes. Zenth et al. [4] examined the role of endocrine levels, personality traits, and social environment in shaping behaviors of Galápagos sea lion pups. The study emphasized that controlled environments may fail to capture complex interactions between these factors and highlighted how population declines can reduce behavioral diversity and adaptability.

Together, these studies underscore the importance of behavioral observation in assessing animal welfare, social interactions, and ecological adaptations. Methods such as ethograms and anticipatory behavior analysis provide valuable data for improving care practices and supporting conservation efforts. However, traditional observation methods remain labor-intensive and subjective, emphasizing the need for automated and scalable solutions to enhance efficiency and objectivity in behavioral monitoring.

2.2. Animal Behavior Observation

2.2.1. Sensor-Based Techniques

Sensor-based techniques are invaluable for studying animal behavior and physiology, particularly in environments where direct observation is limited. Devices such as accelerometers, GPS trackers, and physiological monitors allow researchers to infer behaviors with high precision by collecting detailed data on movements, locations, and biological states. In [5], bio-logging sensors were used to classify bottlenose dolphin behaviors, achieving over 90% accuracy. The study highlighted the importance of data preprocessing and algorithmic optimization, showing that effective classification is possible even with sparse observational data. Similarly, Shorter et al. [6] explored high-resolution DTAGs to monitor the daily routines of bottlenose dolphins. The study quantified activity levels and diving behaviors, revealing energy-efficient swimming patterns. These findings emphasize how sensor data can enhance understanding of animal behavior and health.

Non-invasive physiological monitoring has also proven effective. In [7], a suction cup with an ECG electrode successfully recorded heart rate changes in Risso’s dolphins, providing valuable insights into their respiratory-related heart rate oscillations. This method demonstrated feasibility for field use, expanding its application to free-ranging cetaceans. These studies showcase the versatility of sensor-based techniques in capturing movement and physiological data, offering non-invasive tools for monitoring animals in both captive and wild settings. However, challenges remain, including reliance on annotated data for training and ensuring minimal impact on animal welfare.

2.2.2. Vision-Based Techniques

Vision-based techniques, which have become indispensable in animal behavior studies, leverage advancements in computer vision to enable automated tracking, detection, and classification. These methods are particularly powerful for monitoring animals in complex environments, reducing reliance on labor-intensive manual annotation and improving accuracy. In [8], the researchers utilized convolutional neural networks (CNNs) combined with Kalman filters to track bottlenose dolphins in a zoo environment. Fixed overhead cameras collected approximately 100 h of video data, generating over 12 million location estimates. The framework quantified habitat use and swimming kinematics, revealing patterns such as increased kinematic diversity near midday and activity hotspots around animal care specialists and enrichment areas. The study demonstrated the effectiveness of CNNs for large-scale tracking and behavioral analysis. Similarly, in [9], a CNN-based approach was employed to track individual fish within schools. By detecting fish heads and associating detections across frames using motion state predictions, the method successfully handled challenges such as frequent occlusions and similar appearances among individuals. The proposed framework outperformed other state-of-the-art tracking methods, highlighting CNNs’ robustness in multi-individual tracking scenarios.

YOLO (You Only Look Once) models, as a subset of the CNN architecture, have further advanced the field by providing real-time detection capabilities. In [10], YOLO-NAS and YOLOv8 were applied to detect sea lions in their natural habitat. YOLOv8 excelled under complex environmental conditions, while YOLO-NAS performed better at detecting smaller individuals and larger groups. This work underscores the potential of YOLO models for automating species counting and habitat monitoring, streamlining conservation efforts for protected species. A similar application of YOLO technology can be found in [11], where the researchers developed a model to detect the dorsal fins of the endangered Sousa chinensis dolphin. Using over 3000 images from Leizhou Bay, the model achieved a recognition accuracy of 90.98%. This real-time detection capability offers an effective method for monitoring specific anatomical features, aiding in the conservation of this endangered species.

These studies illustrate the versatility and efficiency of vision-based techniques in diverse animal behavior scenarios. By combining CNN architectures with advanced post-processing methods, researchers can achieve detailed spatial and temporal insights into animal movements and interactions. However, challenges such as computational demands, environmental variability, and data quality remain, necessitating further advancements in algorithmic optimization and hardware capabilities.

2.2.3. Pose Estimation Methods

Pose estimation methods are crucial for studying animal movement and behavior, providing skeletal keypoint data that supports research in biomechanics, ethology, and social interactions. These methods extract spatial information from video footage, enabling precise movement tracking and analysis. With advancements in machine learning, pose estimation has become highly adaptable, allowing researchers to apply it across various species and behaviors.

DeepLabCut has emerged as a leading tool due to its flexibility and efficiency, requiring minimal labeled data through transfer learning. Its capabilities extend to multi-animal tracking and identity prediction, as demonstrated in [12,13]. These studies highlight DeepLabCut’s ability to handle occlusions and visually similar individuals, ensuring accurate tracking even in complex group interactions. Additionally, DeepLabCut integrates well into research workflows, offering active learning and graphical user interfaces (GUIs) for enhanced usability.

OpenPose, originally developed for human pose estimation, is another widely recognized tool. It excels in real-time analysis and pretrained model applications, making it effective for human-centric studies. For example, Blanco et al. [14] adapted OpenPose for primate studies, achieving accuracy comparable to human pose models. Similarly, Washabaugh et al. [15] evaluated OpenPose, MoveNet, and DeepLabCut, showing that OpenPose performed well in extracting human hip and knee kinematics.

Despite its strengths, OpenPose has limitations in complex animal research. It struggles with multi-animal tracking, occlusions, and species with unique anatomical structures. In contrast, DeepLabCut enables researchers to define custom keypoints and adapt models for specific species, and its robustness in handling occlusions and identity tracking ensures consistent data collection in challenging environments. Both tools have significantly advanced pose estimation in animal research: OpenPose provides a user-friendly solution for real-time and simple animal movement analysis, whereas DeepLabCut’s precision, adaptability, and multi-animal tracking capabilities make it the preferred choice for detailed and species-specific behavior studies. As pose estimation technologies continue to evolve, they are expected to further enhance the understanding of motion, interactions, and behavior across ecological and experimental settings.

2.3. Behavior Classification Models

Behavior classification models are essential for analyzing animal behaviors from various data sources, including video, accelerometry, and other sensor inputs. These models utilize deep learning techniques, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), to process complex and time-dependent datasets. Recent advancements have shown that integrating spatial and temporal features significantly improves behavior classification accuracy.

One example can be found in [16], where the researchers combined DeepLabCut for pose estimation with the AquaAI Dolphin Decoder (ADD) and a BiLSTM model to classify six distinct dolphin behaviors. This integration achieved over 90% accuracy, demonstrating BiLSTM’s ability to capture temporal dependencies in behavior sequences. Deep learning models have also been applied to underwater animal detection. In [17], the researchers used CNNs for spatial feature extraction and LSTM networks for temporal sequence learning, improving classification accuracy in low-visibility underwater environments. Their findings highlight the importance of combining color, texture, and handcrafted features with neural networks to enhance detection robustness. Beyond RNNs, hybrid CNN–RNN architectures have proven effective for behavior classification. Qiao et al. [18] integrated a CNN (Inception-V3) to extract spatial features from video data, which were then processed by BiLSTM to model temporal dependencies. The framework demonstrated that longer video sequences yield better classification accuracy, reinforcing the value of combining CNNs and BiLSTM for sequential behavior analysis.

These studies collectively highlight the strengths of combining spatial and temporal models for behavior classification. While CNNs excel at extracting spatial features, RNNs, LSTMs, and GRUs effectively model time-dependent behavioral patterns. Among these, GRU models have shown promise for resource-limited applications due to their computational efficiency. Furthermore, hybrid models integrating CNNs with BiLSTM or GRU offer a comprehensive solution for complex animal behavior analysis. As behavior classification continues to evolve, future research may explore multi-modal data fusion, such as integrating accelerometry with pose estimation or employing advanced architectures like transformers and Temporal Convolutional Networks (TCNs). These innovations have the potential to further enhance accuracy, scalability, and adaptability, enabling more precise and automated monitoring of animal behavior and welfare.

2.4. Challenges and Opportunities in Animal Behavior Recognition

Despite significant advancements in animal behavior recognition, the field still faces notable challenges, particularly in the cost and complexity of introducing new behaviors and adapting to diverse scenarios. Existing systems often rely heavily on large annotated datasets for model training, requiring substantial time and expertise in animal behavior science. For instance, Tseng et al. [19] highlighted the dependency on extensive labeled data for behavior classification, making it difficult to expand the system to recognize new behaviors or adapt to new environments. Similarly, multi-animal scenarios introduce further complexity, such as occlusions and diverse behavior patterns, which reduce classification accuracy. As noted in [12], frequent interactions and similarities between animals pose significant challenges to current systems, limiting their generalizability and robustness. These challenges reveal critical limitations in the adaptability and scalability of current technologies, particularly when rapid updates or new behavior classifications are needed.

To address these issues, this study introduces a user-driven animal behavior recognition system that allows users to autonomously add new behaviors by uploading and annotating a small amount of video data. Unlike traditional systems that require full retraining for updates, this approach seamlessly integrates new behaviors, significantly reducing time and cost. This functionality enhances accessibility for a wider range of users while ensuring flexibility for diverse research and management applications. As technology advances, user-driven models will continue to expand in capability. Compared to conventional systems that rely on specialized expertise and large datasets, the proposed approach offers a more efficient and sustainable solution for behavior recognition. By lowering entry barriers and improving adaptability, it supports broader applications in animal welfare monitoring, environmental conservation, and behavioral research. With its emphasis on cost reduction and usability, this system has the potential to transform how animal behavior recognition is applied across various fields.

3. Methods

3.1. System Overview

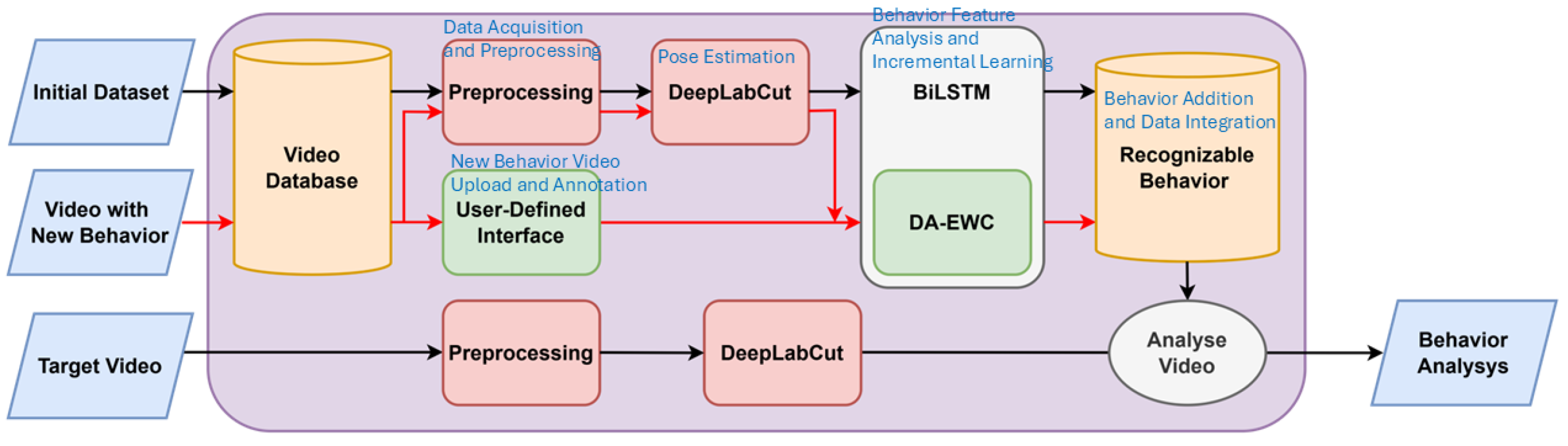

The overall system architecture is illustrated in Figure 1 and is divided into five stages:

Data Acquisition and Preprocessing: The system acquires video data from sixteen network cameras installed above the dolphin pools. These videos are transmitted to a PC for preprocessing, which enhances the image quality by applying contrast enhancement and sharpening.

Pose Estimation: DeepLabCut is used to identify the key skeleton points of the dolphins, ensuring accurate pose estimation.

New Behavior Video Upload and Annotation: Dolphin caretakers upload videos of new behaviors, which are automatically annotated by the system. Users confirm or modify the annotations.

Behavior Feature Analysis and Incremental Learning: The system extracts time-series features of the new behaviors and incorporates them into the model through incremental learning. BiLSTM models capture the temporal dependencies, and Elastic Weight Consolidation (EWC) ensures that the model retains the ability to recognize old behaviors while learning new ones.

Behavior Addition and Data Integration: The new behaviors are added to the system’s behavior library after learning. They can then be automatically recognized and classified in future video data. The behavior recognition results are organized and exported as structured files, thereby helping users track and analyze dolphin behaviors over time.

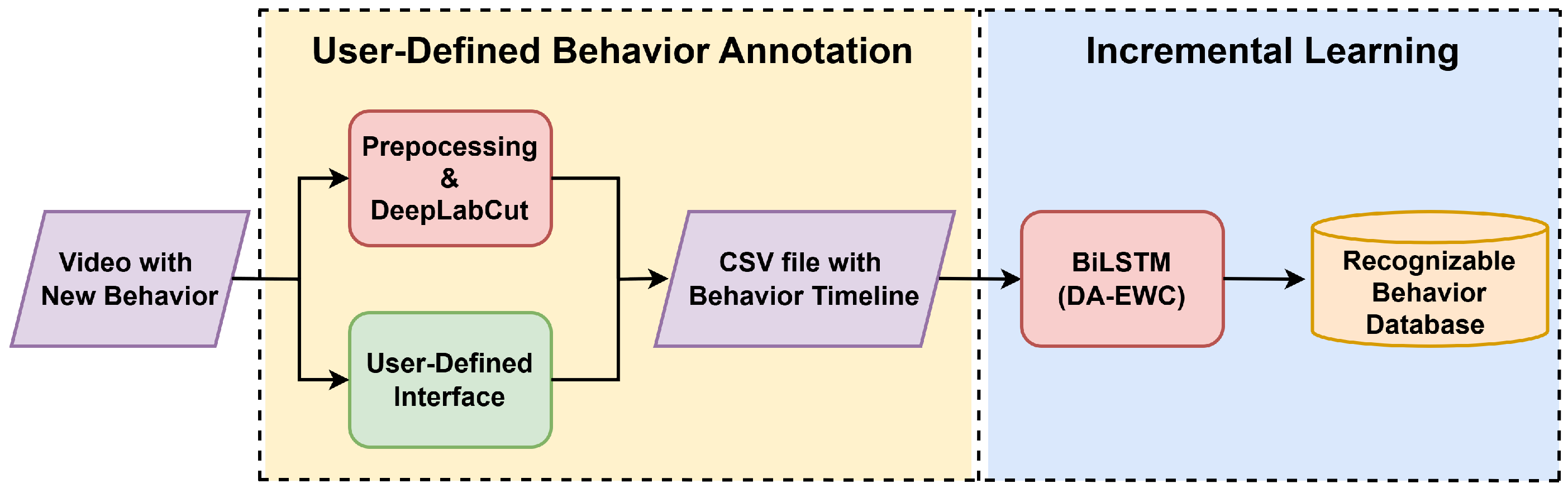

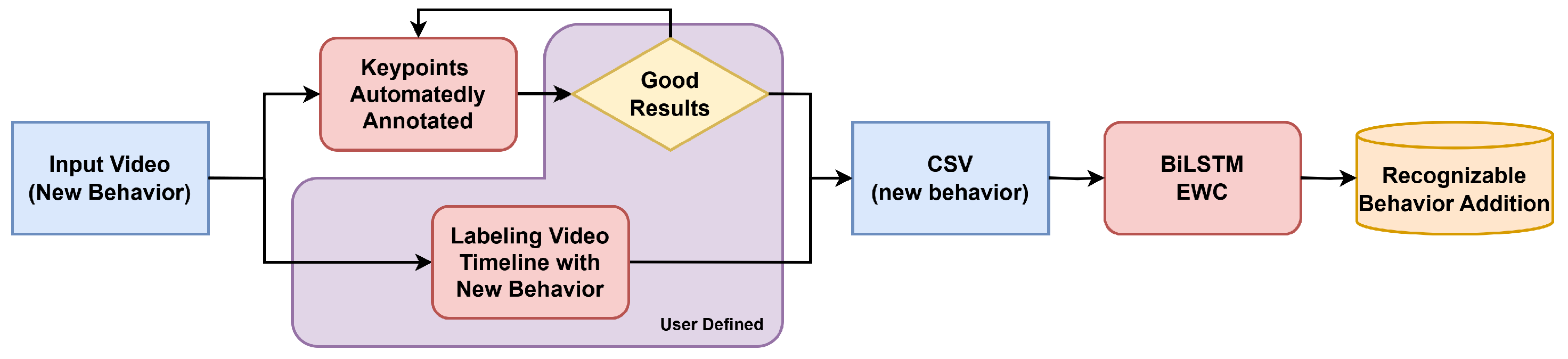

The proposed system introduces a user-driven behavior recognition framework that enables seamless integration of new behaviors through incremental learning. The process is divided into two major components: user-defined behavior annotation and incremental learning, as illustrated in Figure 2.

Users begin by providing video data containing new behaviors, which undergo a preprocessing and keypoint detection pipeline using DeepLabCut. This step extracts skeletal keypoints and refines the video data, ensuring optimal feature extraction. Additionally, a user-defined interface allows researchers and caretakers to manually annotate behaviors, defining the start and end times for each action. The output of this module is a CSV file containing behavior timelines, which serves as structured input for the incremental learning process.
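As a minimal sketch of how such a behavior timeline could feed a sequence model, the example below expands start and end times into one label per frame at the 5 frames per second used in this study. The column names (`behavior`, `start_s`, `end_s`) and the `none` background label are assumptions made for illustration; the actual CSV layout is not specified here.

```python
import csv
import io

FPS = 5  # frame rate stated in the text


def timeline_to_frame_labels(csv_text, n_frames, fps=FPS, background="none"):
    """Expand (behavior, start_s, end_s) rows into one label per frame."""
    labels = [background] * n_frames
    for row in csv.DictReader(io.StringIO(csv_text)):
        first = int(round(float(row["start_s"]) * fps))
        last = int(round(float(row["end_s"]) * fps))
        # Clamp to the clip length; the end time is treated as exclusive.
        for i in range(max(first, 0), min(last, n_frames)):
            labels[i] = row["behavior"]
    return labels


# Hypothetical timeline for a 4-second clip (20 frames at 5 fps).
example_csv = (
    "behavior,start_s,end_s\n"
    "chasing,0.0,1.0\n"
    "playful_swimming,2.0,3.0\n"
)
frame_labels = timeline_to_frame_labels(example_csv, n_frames=20)
```

Per-frame labels of this kind can be paired with the per-frame keypoint coordinates to form training sequences.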

Once new behaviors are annotated, the system employs Bidirectional Long Short-Term Memory (BiLSTM) with Dynamic Adaptive Elastic Weight Consolidation (DA-EWC) to incrementally update the behavior recognition model. Unlike traditional models that require full retraining, the DA-EWC mechanism ensures that newly added behaviors do not overwrite previously learned knowledge, thereby preserving classification accuracy over time. The updated model is then stored in the Recognizable Behavior Database, making the system capable of recognizing both existing and newly integrated behaviors.
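The idea behind weight consolidation can be illustrated with the standard EWC penalty, which adds to the new-task loss a term of the form (λ/2) Σᵢ Fᵢ (θᵢ − θᵢ*)², where θ* are the weights learned on earlier behaviors and F is a per-weight importance estimate (the diagonal Fisher information). The sketch below implements only this standard penalty on plain lists of numbers; the dynamic adaptive part of DA-EWC is not described in this section and is therefore not modelled, and the value of `lam` is an arbitrary placeholder.

```python
def ewc_penalty(params, old_params, fisher, lam):
    """Standard EWC term: (lam / 2) * sum_i F_i * (theta_i - theta_old_i)^2."""
    return 0.5 * lam * sum(
        f * (p - p_old) ** 2
        for p, p_old, f in zip(params, old_params, fisher)
    )


def total_loss(new_task_loss, params, old_params, fisher, lam=100.0):
    """Loss on the new behaviors plus the consolidation penalty."""
    return new_task_loss + ewc_penalty(params, old_params, fisher, lam)


# Toy example: three weights, the first one important for old behaviors.
old = [0.5, -1.0, 2.0]
fisher = [10.0, 0.0, 1.0]
moved_important = [1.5, -1.0, 2.0]    # changes a high-importance weight
moved_unimportant = [0.5, 0.0, 2.0]   # changes a zero-importance weight

penalty_important = ewc_penalty(moved_important, old, fisher, lam=1.0)
penalty_unimportant = ewc_penalty(moved_unimportant, old, fisher, lam=1.0)
```

Moving a weight that mattered for earlier behaviors is penalized, while weights with low importance remain free to adapt to the new behavior.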

3.2. Data Acquisition and Image Preprocessing

3.2.1. Data Acquisition

Data acquisition involves collecting video footage of dolphin behaviors. In this study, the system uses 15 HS-T057SJ-D (HISHARP, Taoyuan, Taiwan) cameras with a 5 MP resolution (2880 × 1620), strategically positioned above the dolphin pools at Farglory Ocean Park. These cameras capture high-definition videos at a frame rate of 5 frames per second, providing clear and detailed views of the dolphins’ behaviors. The system records approximately 300 h of footage, which is essential for capturing a wide range of dolphin activities over an extended period. The overhead camera setup ensures a consistent and unobstructed view of the dolphins’ movements, which is crucial for accurate pose estimation and behavior analysis. The recorded video data are then transmitted to a central PC for further processing in the subsequent stages of the system.

Figure 3 shows the dolphins as seen from the overhead cameras.

3.2.2. Image Preprocessing

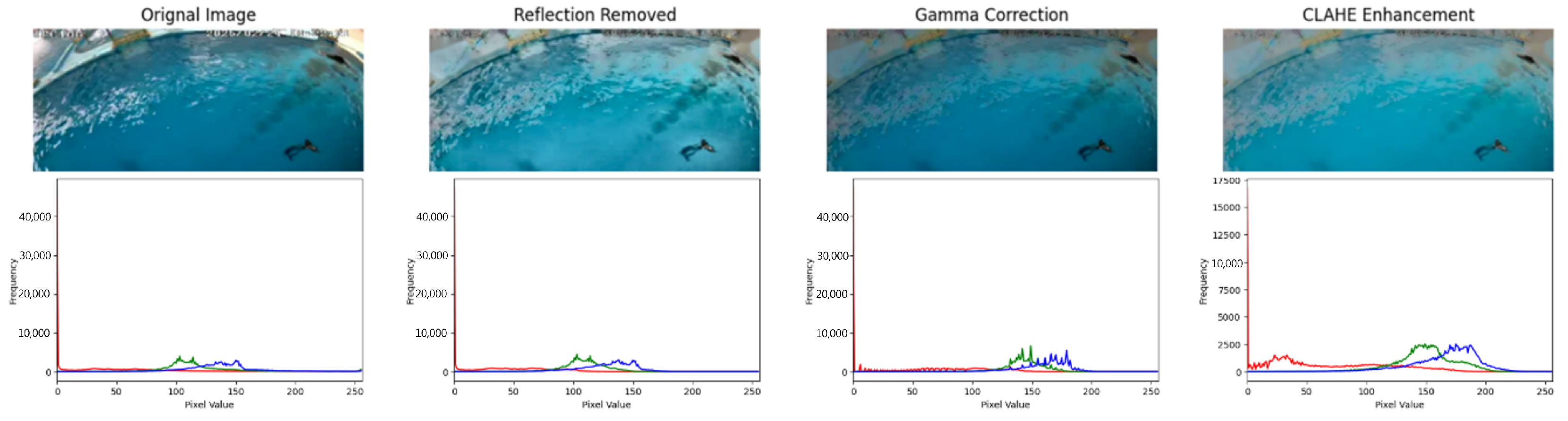

Preprocessing is particularly important due to the challenges posed by the aquatic environment where the dolphins are located. Water surface reflections can create bright, distracting spots in images, making it difficult to accurately detect keypoints. Uneven lighting conditions further complicate visibility, affecting the clarity of the dolphins’ bodies across different environments. Additionally, low contrast can obscure contours, reducing the accuracy of pose estimation and behavior classification. To address these challenges, appropriate preprocessing techniques are applied to minimize the impact of reflections, enhance visibility under varying lighting conditions, and improve the clarity of body features, ultimately ensuring more precise behavior recognition.

In this study, preprocessing is applied to improve image quality for accurate pose estimation and behavior analysis. Navier–Stokes-based inpainting removes water surface reflections by filling in reflective areas with surrounding pixel information, ensuring clearer body outlines. Gamma correction adjusts brightness non-linearly, enhancing both dark and bright regions while preserving mid-tone details, thereby improving overall visibility under varying lighting conditions. CLAHE further enhances contrast by locally adjusting brightness, making the dolphins’ contours more distinguishable. Together, these techniques refine raw image data, reducing environmental interference and enhancing system performance.
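Of the three steps, gamma correction is the simplest to illustrate. The sketch below builds a 256-entry lookup table for 8-bit intensities using out = 255 · (in / 255)^γ and applies it to a small grayscale patch stored as nested lists. The value γ = 0.7 is an assumed placeholder, not a parameter reported in this study. Inpainting and CLAHE are omitted; image libraries such as OpenCV provide them (for example `cv2.inpaint` with the `cv2.INPAINT_NS` flag and `cv2.createCLAHE`), although the text does not state which implementation was used.

```python
def gamma_lut(gamma):
    """256-entry lookup table: out = 255 * (in / 255) ** gamma."""
    return [round(255 * (v / 255) ** gamma) for v in range(256)]


def apply_lut(image, lut):
    """Apply a lookup table to a grayscale image given as rows of 0..255 ints."""
    return [[lut[pixel] for pixel in row] for row in image]


# gamma < 1 brightens dark regions; 0.7 is a hypothetical setting.
lut = gamma_lut(0.7)
dark_patch = [[0, 32, 64], [128, 192, 255]]
brightened = apply_lut(dark_patch, lut)
```

Because the mapping is non-linear, black and white stay fixed while intermediate intensities are lifted.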

Figure 4 shows how preprocessing affects the RGB histograms.

3.3. Pose Estimation Model

3.3.1. Model Framework and Definition of Keypoints

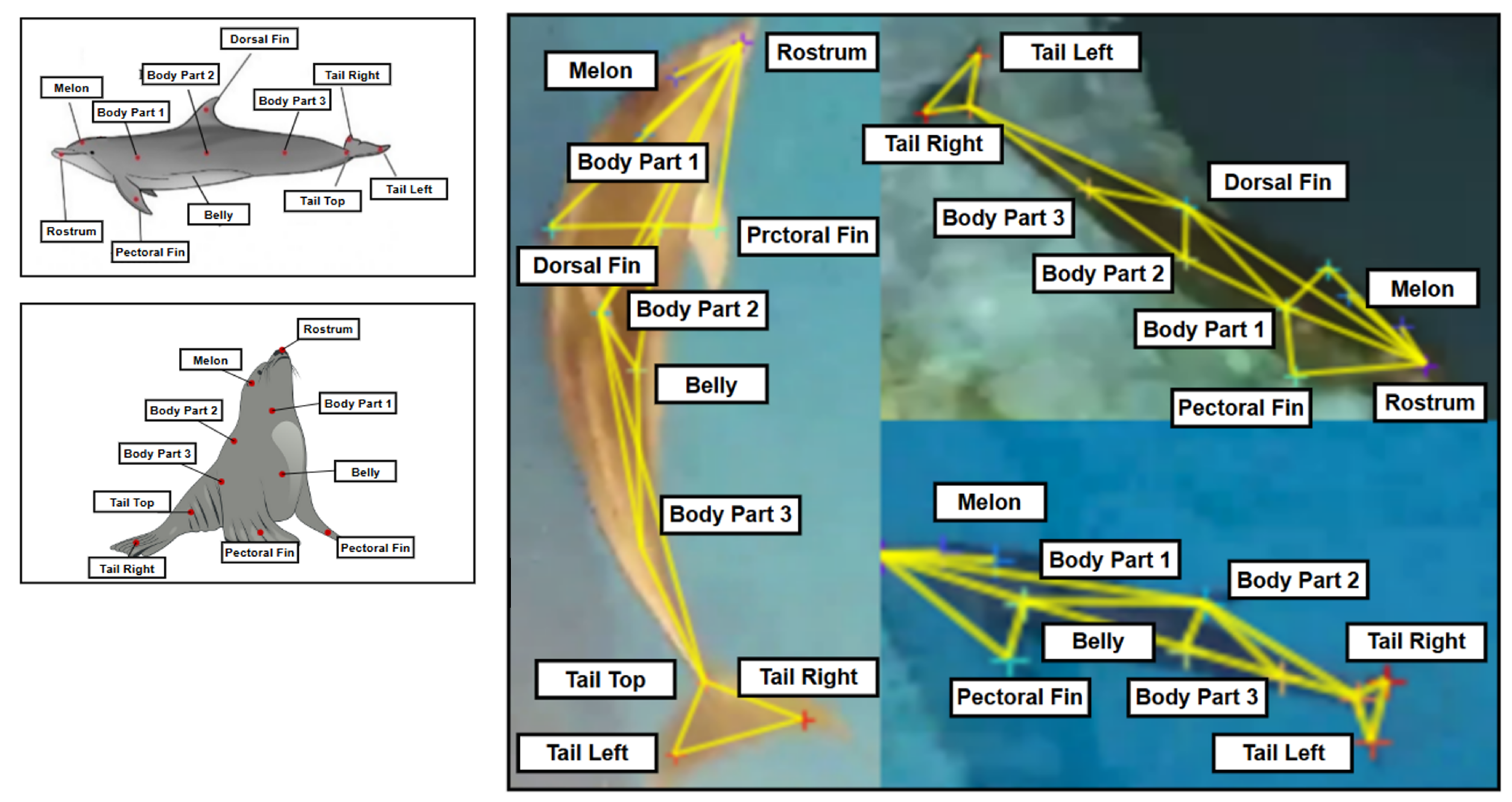

To accurately analyze marine mammal behavior, it is essential to identify and track the key anatomical points on a dolphin’s body. In this study, we labeled the marine mammal skeleton using 11 keypoints: rostrum, melon, dorsal fin, pectoral fin, belly, and tail fin, as well as other critical parts of the body. These keypoints are fundamental for pose estimation and behavior classification, as they provide clear and consistent reference locations for analyzing the dolphin’s movements and posture.

We extracted one image per second from the video, creating a dataset of 30,000 labeled dolphin and sea lion skeleton images with 11 keypoints.

Figure 5 shows the 11 keypoints of the dolphin and sea lion. Accurate annotations are crucial for the system’s performance, enabling precise movement detection and behavior recognition. This dataset serves as the foundation for training DeepLabCut, the core pose estimation model in the behavior recognition system.
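Extracting one image per second from footage recorded at 5 frames per second amounts to keeping every fifth frame. The helper below is a small illustration of that index arithmetic; the function name and signature are hypothetical and not part of the system described here.

```python
def sample_frame_indices(n_frames, source_fps, target_fps):
    """Indices of frames to keep when reducing source_fps to target_fps."""
    if not 0 < target_fps <= source_fps:
        raise ValueError("target_fps must be in (0, source_fps]")
    step = source_fps / target_fps
    indices, k = [], 0
    while int(k * step) < n_frames:
        indices.append(int(k * step))
        k += 1
    return indices


# A 4-second clip at 5 fps (20 frames), sampled at 1 image per second.
kept = sample_frame_indices(n_frames=20, source_fps=5, target_fps=1)
```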

DeepLabCut (DLC) is a deep learning-based pose estimation framework designed for tracking keypoints in animals. It utilizes a convolutional neural network (CNN) architecture to extract spatial features from input images and predict keypoint locations with high accuracy.

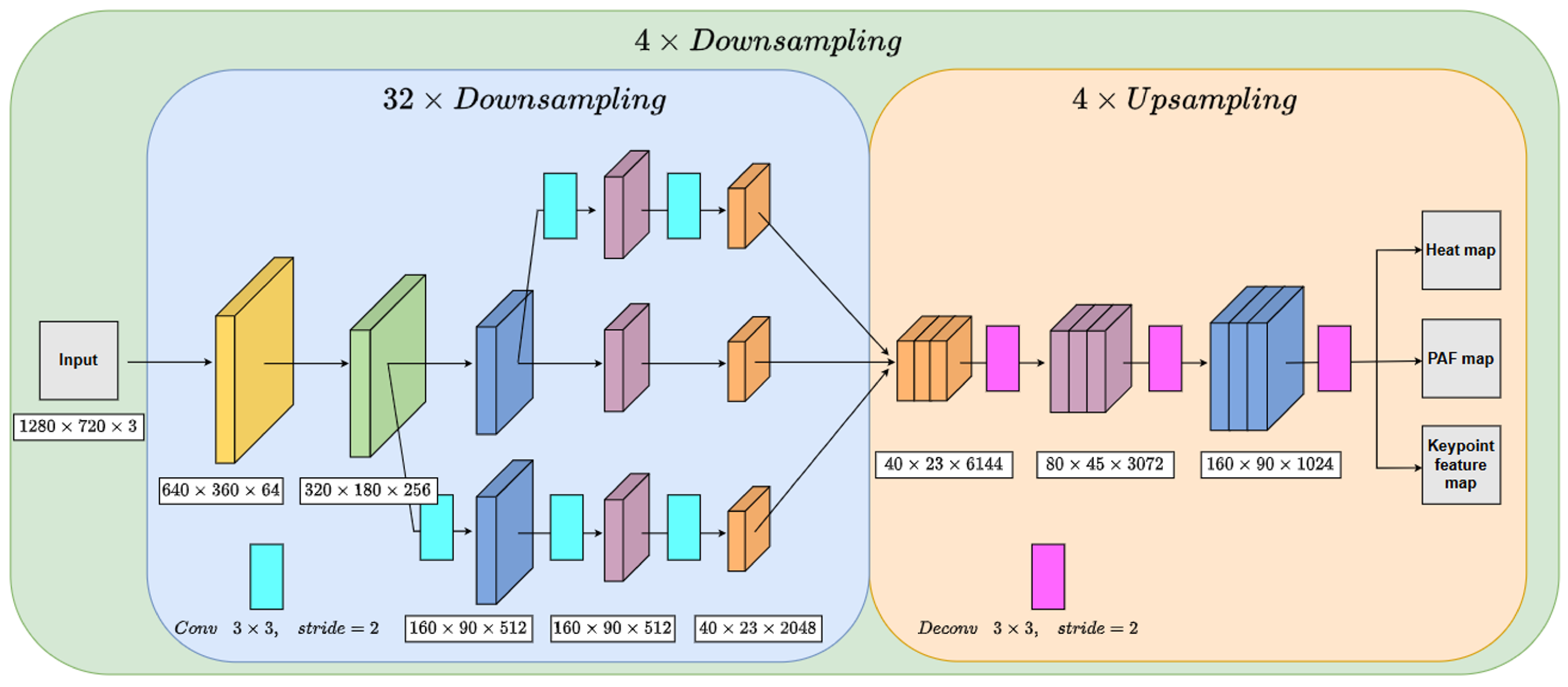

Figure 6 shows the structure of DeepLabCut, which consists of the following stages:

Input: The model takes an RGB image (e.g., 1280 × 720 × 3) as input, which contains the target animal for keypoint detection. The image is processed through multiple convolutional layers for feature extraction.

Downsampling (Feature Extraction): The network progressively reduces the image resolution while increasing feature depth, using 3 × 3 convolutional layers with a stride of 2. The extracted feature maps capture the hierarchical spatial information necessary for keypoint localization.

Upsampling (Keypoint Prediction): The network reconstructs high-resolution feature maps through deconvolution layers, generating three outputs: heatmaps (keypoint probability), Part Affinity Field (PAF) maps (keypoint connectivity), and keypoint feature maps (contextual information).

DeepLabCut (DLC) leverages ResNet50 as its backbone for feature extraction, enabling precise keypoint detection. ResNet50 is a deep convolutional neural network (CNN) with residual connections, enabling the efficient learning of spatial features while mitigating the vanishing gradient problem. It processes input images through multiple stages of downsampling, progressively refining feature representations for pose estimation.

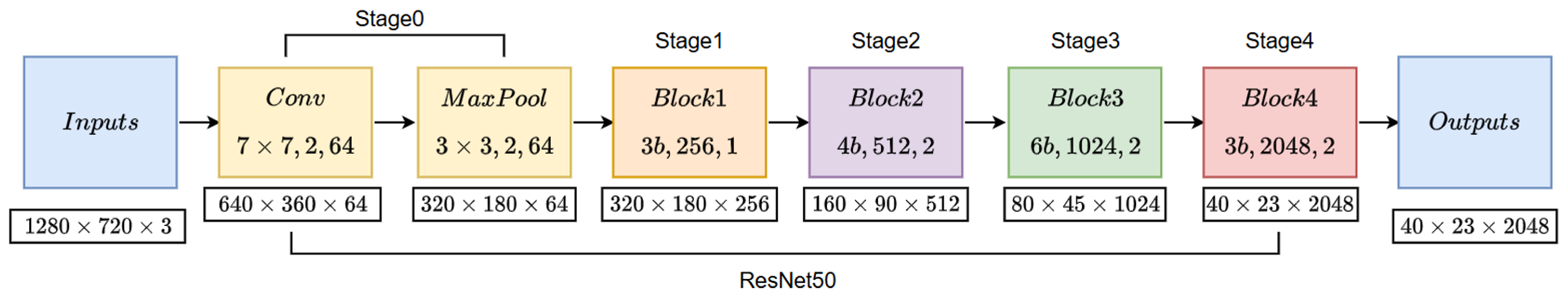

Figure 7 shows the structure of ResNet50, which consists of the following stages:

Input: The network takes an RGB image (1280 × 720 × 3) as input. The initial 7 × 7 convolutional layer (stride = 2, 64 filters) extracts basic edge and texture features, followed by a 3 × 3 max-pooling layer (stride = 2) to reduce spatial dimensions.

Feature Extraction (Residual Blocks): ResNet50 consists of four main stages of residual blocks:

- Stage 1: Block 1 (3 bottleneck layers, 256 filters) extracts mid-level features while maintaining a 320 × 180 resolution.
- Stage 2: Block 2 (4 bottleneck layers, 512 filters) further downsamples the feature maps to a 160 × 90 resolution.
- Stage 3: Block 3 (6 bottleneck layers, 1024 filters) captures high-level semantic information at an 80 × 45 resolution.
- Stage 4: Block 4 (3 bottleneck layers, 2048 filters) refines deep feature representations at a 40 × 23 resolution.

Output: The final feature maps (40 × 23 × 2048) are passed to the pose estimation module in DLC, generating heatmaps and Part Affinity Fields (PAFs) for keypoint localization.
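The resolutions listed above follow from repeated stride-2 downsampling of the 1280 × 720 input. The short calculation below reproduces them, assuming padding such that each stride-2 layer maps a side of length n to ⌈n/2⌉ (which is why 45 becomes 23 rather than 22); the function names are illustrative only.

```python
import math


def halve(size):
    """Spatial size after one stride-2 layer, assuming ceil(n / 2) per side."""
    width, height = size
    return (math.ceil(width / 2), math.ceil(height / 2))


def resnet50_feature_sizes(width, height):
    """Feature-map sizes after the stem and each of the four residual stages."""
    sizes = {}
    size = halve((width, height))   # 7 x 7 convolution, stride 2
    sizes["conv1"] = size
    size = halve(size)              # 3 x 3 max-pooling, stride 2
    sizes["stage1"] = size          # stage 1 keeps this resolution
    for name in ("stage2", "stage3", "stage4"):
        size = halve(size)          # each later stage downsamples once
        sizes[name] = size
    return sizes


sizes = resnet50_feature_sizes(1280, 720)
```

The overall reduction factor is 32, so the final feature map is 40 × 23 for a 1280 × 720 frame.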

In DeepLabCut, ResNet50 serves as a feature extractor, progressively learning spatial patterns from input images. Its residual connections help preserve information across layers and keep the deep network trainable, which is crucial for pose estimation. After feature extraction, DLC generates heatmaps that predict keypoint locations with confidence scores, enabling precise body posture analysis.
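Reading a keypoint from a heatmap can be sketched as an argmax over confidence scores. The example below works on heatmaps stored as nested lists and is a simplification: it ignores any sub-pixel refinement or rescaling to image coordinates that a real pipeline may apply, and the `min_confidence` threshold and keypoint names are assumptions for illustration.

```python
def locate_keypoint(heatmap):
    """Return (x, y, confidence) of the highest-scoring heatmap cell."""
    best = (0, 0, heatmap[0][0])
    for y, row in enumerate(heatmap):
        for x, score in enumerate(row):
            if score > best[2]:
                best = (x, y, score)
    return best


def locate_all(heatmaps, min_confidence=0.5):
    """Map each keypoint name to its location, or None if confidence is low."""
    result = {}
    for name, heatmap in heatmaps.items():
        x, y, score = locate_keypoint(heatmap)
        result[name] = (x, y, score) if score >= min_confidence else None
    return result


# Toy heatmaps for two keypoints (hypothetical values).
toy_heatmaps = {
    "rostrum": [[0.0, 0.1, 0.0], [0.2, 0.9, 0.1]],
    "dorsal_fin": [[0.1, 0.2], [0.3, 0.2]],
}
keypoints = locate_all(toy_heatmaps)
```

Discarding low-confidence detections in this way is one option for handling occluded or submerged body parts before the coordinates are passed to the behavior classifier.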

3.3.2. Model Training and Optimization

To ensure accurate and robust keypoint detection, we utilized a series of optimization techniques during training. These optimizations helped improve keypoint localization accuracy, prevent overfitting, and adapt the model to varying environmental conditions. In this study, we applied heatmap-based keypoint localization, progressive training, and early stopping to enhance the model’s performance. These techniques are summarized as follows:

Heatmap-based Keypoint Localization: DLC generated 2D probability maps (heatmaps) for each keypoint, where the pixel values represent the confidence scores for the keypoint positions. The Root Mean Square Error (RMSE) between predicted and annotated keypoint positions was used to evaluate localization accuracy and to verify that the model learned precise keypoint placements.

Early Stopping: To prevent overfitting, the training process was monitored for validation loss improvements. If the model failed to improve after 10 consecutive epochs, training was halted, and the best-performing model weights were retained. This approach optimized computational efficiency while maintaining high generalization capability.

Progressive Training: To enhance model adaptability and avoid overfitting, progressive training was implemented. Instead of training the model with all behaviors at once, we gradually introduced increasing complexity in two stages:

Initial Training on Basic Keypoints

The model was first trained to recognize 11 anatomical keypoints using a dataset of 1200 frames from four individuals. Training was conducted over 10 epochs with a batch size of 32, primarily focusing on learning the body structure. The model achieved an RMSE of 4.3 pixels, indicating a reasonable ability to detect keypoints but with some limitations in fine-grained movement recognition.

Introducing Behavioral Context

The model was further trained to recognize keypoints during different behaviors (e.g., social interactions such as chasing and playful swimming). This phase included 2000 frames with increased variability in lighting and movement dynamics. After 5 additional epochs, the RMSE slightly increased to 5.0 pixels, reflecting the challenge of adapting to more dynamic body positions.

By training the model incrementally, progressive training helped prevent overfitting and enabled the system to adapt effectively to new behaviors and environmental conditions. This approach allowed the model to build a robust understanding of keypoints before introducing complexity, resulting in faster convergence and improved generalization.
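The evaluation metric and the stopping rule described above can be sketched as follows. The RMSE here is one common definition (the square root of the mean squared Euclidean distance between predicted and annotated keypoints, in pixels) and may differ in detail from the value reported by the training framework. The early-stopping function operates on a precomputed list of validation losses rather than on a real training loop, and its name and return values are illustrative.

```python
import math


def rmse(predicted, annotated):
    """Root mean squared Euclidean error, in pixels, over paired keypoints."""
    squared = [
        (px - ax) ** 2 + (py - ay) ** 2
        for (px, py), (ax, ay) in zip(predicted, annotated)
    ]
    return math.sqrt(sum(squared) / len(squared))


def early_stopping(val_losses, patience=10):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses."""
    best_loss, best_epoch, stale = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # A real loop would checkpoint the model weights here.
            best_loss, best_epoch, stale = loss, epoch, 0
        else:
            stale += 1
            if stale >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Loss improves for three epochs, then stalls for twelve.
losses = [1.0, 0.8, 0.7] + [0.75] * 12
best_epoch, stop_epoch = early_stopping(losses, patience=10)
error_px = rmse([(3.0, 4.0)], [(0.0, 0.0)])
```

With a patience of 10, training halts ten epochs after the last improvement and the weights from the best epoch are the ones retained.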

3.4. Uploading and Annotating New Behavior Videos

3.4.1. Overview of System Functions

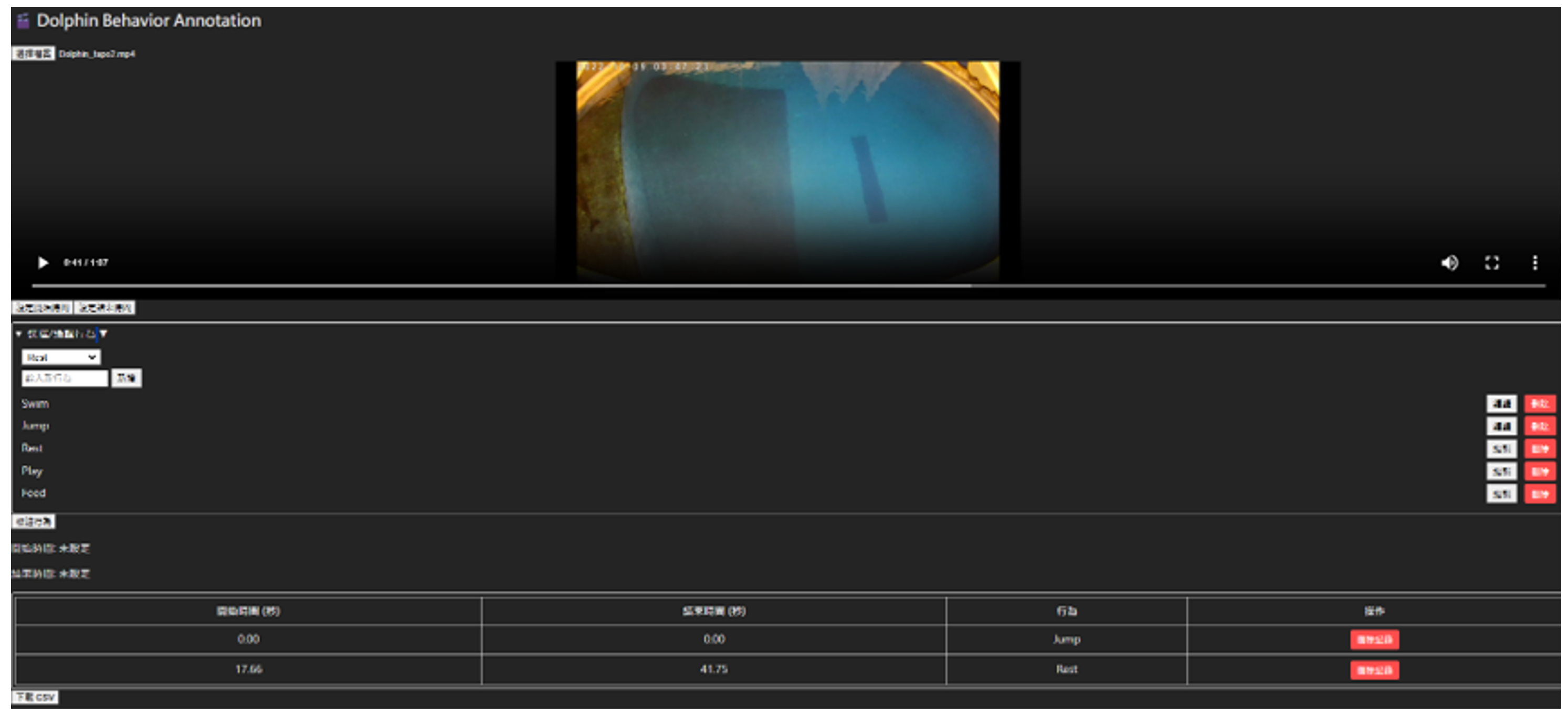

The structure of the user-defined interface is shown in Figure 8. The new behavior video uploading and annotation module is a critical component of the system, enabling dynamic behavior expansion and continuous learning. It provides a streamlined and accurate way to annotate and integrate new behavior data into the system. The module supports the following primary functions:

Uploading New Behavior Videos: Users can upload videos containing new behaviors in various formats (e.g., MP4 or AVI).

Automated Initial Annotation: The system uses pre-trained models to automatically generate behavior classifications and keypoint annotations.

Interactive User Corrections: Users can review and modify the automated annotations via an intuitive interface to ensure accuracy.

Data Storage and Integration: Confirmed behavior data are stored in the system’s behavior library for subsequent analysis and classification.

3.4.2. Technical Implementation

The structure of the user-defined interface is shown in Figure 9. The system supports multiple video formats, including MP4, AVI, and MOV, and ensures video integrity and resolution compatibility during the upload process. Once a video is uploaded, it is segmented into static frames at a rate of 5 frames per second to align with the system’s data processing requirements. Basic preprocessing techniques are applied to enhance image quality, optimizing conditions for keypoint detection:

Automated Keypoint Localization: For automated annotation, the system integrates a pre-trained DeepLabCut model to detect and label 11 skeletal keypoints on dolphins. The model generates heatmaps for each keypoint, identifying the highest-confidence pixel as the predicted location.

Interactive User Annotation: The interactive annotation module allows users to review and modify behavior labels through an intuitive interface. Users can upload and preview videos, set behavior labels by marking start and end times, and manually adjust keypoint positions when necessary. The system provides tools for adding, editing, and deleting behavior labels, ensuring that annotations are accurate and free of overlap. To facilitate further analysis, users can export their annotations as a CSV file, preserving structured data for additional processing.
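The 5 frames-per-second segmentation step described in this subsection amounts to choosing which source frames to keep. The following sketch computes those frame indices for a given source frame rate; the helper name `frames_to_keep` and the rounding rule are assumptions made for illustration, and the actual decoding of frames (for example with a video library) is left out.

```python
def frames_to_keep(total_frames, source_fps, target_fps=5.0):
    """Indices of source frames to keep when downsampling to target_fps."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    if target_fps >= source_fps:
        # Nothing to drop: the video is already at or below the target rate.
        return list(range(total_frames))
    step = source_fps / target_fps  # source frames per kept frame
    indices = []
    k = 0
    while True:
        idx = int(round(k * step))
        if idx >= total_frames:
            break
        indices.append(idx)
        k += 1
    return indices

# A hypothetical 2-second clip recorded at 30 fps yields 10 frames at 5 fps.
kept = frames_to_keep(total_frames=60, source_fps=30.0)
```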

The system stores user-confirmed annotations in standardized formats such as JSON and CSV, ensuring seamless integration with future training and analysis processes. Newly annotated behaviors are incorporated into the system’s behavior library, allowing for automatic recognition in subsequent video data. Each video containing new behavior annotations is assigned a unique identifier, enabling efficient indexing and retrieval for future reference and continued refinement of the behavior classification system.
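As a rough illustration of how confirmed annotations might be checked for overlap and exported, the sketch below uses only Python's standard `csv` and `io` modules. The column names (`video_id`, `label`, `start`, `end`), the identifier `vid-001`, and the helper names are hypothetical; the system's actual schema is not specified in the text.

```python
import csv
import io

def find_overlaps(segments):
    """Return (earlier, later) label pairs where a segment starts before an
    earlier segment has ended. Times are in seconds."""
    ordered = sorted(segments, key=lambda s: s["start"])
    overlaps = []
    latest = None  # segment with the largest end time seen so far
    for seg in ordered:
        if latest is not None and seg["start"] < latest["end"]:
            overlaps.append((latest["label"], seg["label"]))
        if latest is None or seg["end"] > latest["end"]:
            latest = seg
    return overlaps

def to_csv(video_id, segments):
    """Serialize behavior segments to CSV text, one row per labeled segment."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["video_id", "label", "start", "end"],
        lineterminator="\n",
    )
    writer.writeheader()
    for seg in sorted(segments, key=lambda s: s["start"]):
        writer.writerow({"video_id": video_id, **seg})
    return buffer.getvalue()

# Hypothetical annotations for one uploaded video.
segments = [
    {"label": "rest", "start": 2.5, "end": 6.0},
    {"label": "jump", "start": 0.0, "end": 2.5},
]
csv_text = to_csv("vid-001", segments)
```

Running the overlap check before export is one way to enforce the requirement that behavior labels do not overlap.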

3.5. Behavior Analysis and Incremental Learning

3.5.1. Recurrent Neural Networks (RNNs) and BiLSTM for Behavior Recognition

Recurrent neural networks (RNNs) are widely used in time-series analysis due to their ability to capture temporal dependencies in sequential data. Unlike traditional neural networks, RNNs process information step by step, allowing them to retain contextual information over time. However, standard RNNs suffer from vanishing gradient issues, making them inefficient for learning long-term dependencies.

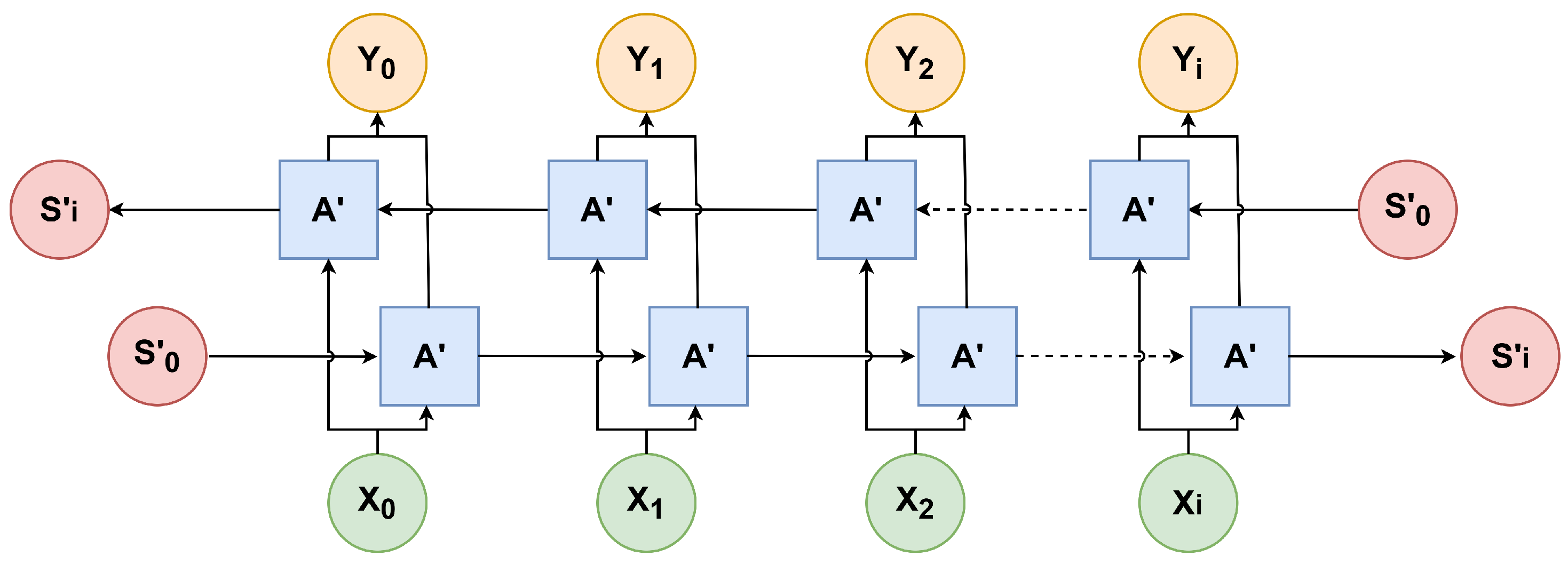

The Bidirectional Long Short-Term Memory (BiLSTM) network structure shown in Figure 10 enhances behavior recognition by processing sequences in both forward and backward directions. Unlike standard LSTMs, which only retain information from past frames, BiLSTM consists of two parallel LSTM layers: one processes inputs from left to right and the other from right to left.

In the diagram, X represents the input feature sequences, such as the dolphin’s keypoint coordinates over time. Each input is processed by two LSTM layers (denoted as A’), capturing contextual information from both past and future frames. The hidden states from these two directions are combined to generate a more comprehensive representation of the movement sequence. The outputs Y correspond to the behavior predictions at each time step. This bidirectional structure enables the model to detect motion cues more effectively, improving the accuracy of behavior classification. By leveraging information from both preceding and succeeding frames, BiLSTM ensures that subtle yet crucial movement transitions are not overlooked, making it particularly advantageous for recognizing complex behaviors in animal movement analysis.

In the unrolled BiLSTM diagram, the solid horizontal arrows pointing right along the time axis represent the forward LSTM hidden states being passed sequentially from the initial state through each time step; conversely, the solid horizontal arrows pointing left represent the backward LSTM hidden states being propagated in reverse from the initial state at the end of the sequence. The dashed arrows indicate that the intermediate, repeated structures across multiple time steps have been omitted for clarity, and the red circles at the left and right mark the BiLSTM’s initial and final hidden states at the start and end of the sequence, which can be used to initialize downstream classifiers or to carry state information across sequences.

In this system, BiLSTM is used to classify dolphin behaviors based on skeletal movement extracted from 11 keypoints detected by DeepLabCut. These keypoints include the rostrum, dorsal fin, and tail fin, as well as other critical body parts. The model processes sequential frames to learn the motion dynamics, analyzing features such as the following:

Velocity and acceleration of keypoints;

Relative angles between body parts;

Trajectory and movement direction;

Temporal consistency of motion patterns.
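The motion features listed above can be derived from keypoint trajectories with simple finite differences and vector geometry. The sketch below is one possible formulation, assuming each keypoint track is a list of (x, y) pixel coordinates sampled at 5 frames per second; the function names are illustrative and not taken from the system's code.

```python
import math

def velocities(track, fps=5.0):
    """Finite-difference velocity (pixels/s) of one keypoint track [(x, y), ...]."""
    dt = 1.0 / fps
    return [
        ((x1 - x0) / dt, (y1 - y0) / dt)
        for (x0, y0), (x1, y1) in zip(track, track[1:])
    ]

def accelerations(track, fps=5.0):
    """Finite-difference acceleration (pixels/s^2) from consecutive velocities."""
    return velocities(velocities(track, fps), fps)

def joint_angle(a, b, c):
    """Angle in degrees at keypoint b between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return 0.0  # degenerate case: two keypoints coincide
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))

def heading(p0, p1):
    """Movement direction in degrees, measured from the positive x-axis."""
    return math.degrees(math.atan2(p1[1] - p0[1], p1[0] - p0[0]))
```

For example, `joint_angle(rostrum, dorsal_fin, tail_fin)` would give a body-bend angle at the dorsal fin for one frame, assuming those three keypoints are available.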

To enhance accuracy, data normalization and smoothing techniques (such as Gaussian filtering) are applied before feeding the extracted features into the BiLSTM model. The final processed time-series data are segmented into fixed-length windows, with each segment representing a specific time interval. This structured approach ensures robust behavior classification, even for complex and overlapping behaviors.
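A minimal sketch of this preprocessing chain, in plain Python, is given below. The kernel width, the min-max normalization, and the window length and stride are all assumptions chosen for illustration, since the text does not specify them.

```python
import math

def gaussian_kernel(sigma=1.0, radius=2):
    """Normalized 1D Gaussian weights of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def smooth(series, sigma=1.0, radius=2):
    """Gaussian smoothing; edge values are repeated at the boundaries."""
    kernel = gaussian_kernel(sigma, radius)
    n = len(series)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp index to valid range
            acc += w * series[j]
        out.append(acc)
    return out

def min_max_normalize(series):
    """Scale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0 for _ in series]
    return [(v - lo) / (hi - lo) for v in series]

def sliding_windows(frames, length, stride):
    """Split a sequence into fixed-length windows; a short tail is dropped."""
    return [frames[i:i + length]
            for i in range(0, len(frames) - length + 1, stride)]

# Hypothetical x-coordinates of one keypoint over 10 frames.
xs = [10.0, 11.0, 30.0, 12.0, 13.0, 14.0, 15.0, 16.0, 17.0, 18.0]
windows = sliding_windows(min_max_normalize(smooth(xs)), length=4, stride=2)
```

Overlapping windows (stride smaller than length) are one common choice when behaviors can begin at arbitrary frames.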

3.5.2. Incremental Learning for Adapting to New Behaviors

To ensure that the model does not overfit to newly introduced behaviors, the system employs a balanced training strategy by selectively sampling data to maintain an equilibrium between old and new behavior instances during training. A small subset of previously labeled behavior data is incorporated into the training set, reinforcing the model’s ability to recognize earlier behaviors while integrating new ones. This strategy prevents performance degradation on past behaviors, enabling the BiLSTM to maintain robustness as it adapts to an expanding dataset.

The incremental learning process is initiated when the user provides new behavior annotations via a CSV file. The model then selectively retrains itself using a combination of newly introduced data and a portion of previously labeled behavior data. This ensures that while adapting to new behavior patterns, the model retains its ability to classify past behaviors. To evaluate the effectiveness of this process, the system assesses performance on both old and new behavior categories, ensuring that incremental learning does not compromise classification accuracy.
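The balanced sampling idea can be sketched as a small rehearsal routine: all new samples are kept, and a capped number of stored samples per previously learned behavior is mixed in. The function name `build_training_set`, the per-label cap, and the sample identifiers below are hypothetical.

```python
import random

def build_training_set(new_samples, old_samples_by_label, per_label, seed=0):
    """Combine all new samples with at most `per_label` replayed samples
    from each previously learned behavior, then shuffle."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    replay = []
    for label in sorted(old_samples_by_label):
        pool = old_samples_by_label[label]
        replay.extend(rng.sample(pool, min(per_label, len(pool))))
    combined = list(new_samples) + replay
    rng.shuffle(combined)
    return combined

# Hypothetical (sample_id, label) pairs.
new = [("n%d" % i, "side swimming") for i in range(6)]
old = {
    "jump": [("j%d" % i, "jump") for i in range(20)],
    "rest": [("r%d" % i, "rest") for i in range(3)],
}
train = build_training_set(new, old, per_label=5)
```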

The system achieves this by leveraging Bayes’ theorem for parameter updates, ensuring that the model incorporates new knowledge while preserving prior learning. The posterior probability of the model parameters $\theta$ given new behavior data $D$ is expressed as shown in Equation (1):

$$P(\theta \mid D) = \frac{P(D \mid \theta)\,P(\theta)}{P(D)} \tag{1}$$

where $P(\theta \mid D)$ is the probability of event $\theta$ occurring after observing event $D$ (posterior probability), $P(D \mid \theta)$ is the probability of event $D$ occurring given that $\theta$ has already occurred (likelihood function), $P(\theta)$ is the prior probability of event $\theta$, and $P(D)$ is the total probability of event $D$.

To mitigate catastrophic forgetting, the system employs the Fisher Information Matrix (FIM) to quantify the importance of each model parameter in recognizing previously learned behaviors. The Fisher Information Matrix is defined as shown in Equation (2):

$$I(\theta) = \mathbb{E}\left[\left(\frac{\partial \log P(D \mid \theta)}{\partial \theta}\right)^{2}\right] \tag{2}$$

where a higher value of $I(\theta)$ indicates that a parameter is crucial for past behavior classification. Using Elastic Weight Consolidation (EWC), a regularization term is incorporated into the loss function to prevent drastic changes to these critical parameters, as shown in Equation (3):

$$L(\theta) = L_{\text{new}}(\theta) + \frac{\lambda}{2} \sum_{i} I_i \left(\theta_i - \theta_i^{*}\right)^{2} \tag{3}$$

where $L_{\text{new}}(\theta)$ represents the loss computed from new behavior data, $\lambda$ is a regularization factor, $I_i$ is the Fisher information for parameter $\theta_i$, and $\theta_i^{*}$ denotes the optimal parameter values from previous training. This regularization ensures that previously learned behaviors remain intact while the model incorporates new behaviors.
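To make the EWC regularization concrete, the following sketch estimates a diagonal Fisher information vector as the mean squared per-sample gradient and adds the quadratic penalty to a new-task loss. It assumes the standard EWC form with a factor of one half in front of the penalty, and it takes gradients as plain lists rather than computing them from a network; the function names and numbers are illustrative only.

```python
def diagonal_fisher(per_sample_grads):
    """Diagonal Fisher estimate: mean squared log-likelihood gradient
    per parameter, given one gradient vector per sample."""
    n = len(per_sample_grads)
    dim = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(dim)]

def ewc_loss(new_loss, params, old_params, fisher, lam):
    """New-task loss plus the EWC penalty (assumed form with a 1/2 factor):
    new_loss + (lam / 2) * sum_i fisher_i * (param_i - old_param_i)^2."""
    penalty = sum(
        f * (p - p_old) ** 2
        for f, p, p_old in zip(fisher, params, old_params)
    )
    return new_loss + 0.5 * lam * penalty

# Hypothetical gradients for two parameters over two old-behavior samples.
grads = [[1.0, 0.0], [3.0, 0.0]]
fisher = diagonal_fisher(grads)   # first parameter matters, second does not
total = ewc_loss(new_loss=0.2, params=[1.0, 4.0],
                 old_params=[0.0, 0.0], fisher=fisher, lam=2.0)
```

In this toy case, moving the second parameter costs nothing because its Fisher value is zero, while moving the first parameter is penalized.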

By integrating transfer learning, only a subset of BiLSTM layers is updated during retraining, while earlier layers remain frozen to preserve foundational motion representations. This significantly reduces computational overhead, accelerates adaptation to new behaviors, and minimizes the amount of new labeled data required. Through the combined use of Bayesian parameter updates, Fisher Information Matrix constraints, and selective transfer learning, the system achieves an efficient and scalable incremental learning framework, ensuring continuous model improvement while maintaining stability across evolving datasets.

3.5.3. Dynamic Adaptive Elastic Weight Consolidation (DA-EWC)

To ensure that the model effectively retains past knowledge while learning new behaviors, this study proposes a Dynamic Adaptive Elastic Weight Consolidation (DA-EWC) mechanism. The conventional Elastic Weight Consolidation (EWC) method estimates parameter importance using the Fisher Information Matrix (FIM) and incorporates a regularization term in the loss function to preserve weights critical for the classification of previously learned behaviors. However, EWC has two major limitations: (1) the FIM is highly dependent on data from a single previously learned behavior, leading to a tendency for earlier learned behaviors to be forgotten; and (2) the use of a fixed regularization strength may hinder the model’s adaptability to new behaviors. To address these issues, DA-EWC introduces a Dynamic Fisher Information Matrix (D-FIM) and adaptive regularization, which dynamically adjust the weight update strategy to enhance the robustness and flexibility of incremental learning.

Traditional EWC computes the Fisher Information Matrix based only on the most recently learned behavior, resulting in a gradual forgetting of earlier behaviors. To mitigate this issue, DA-EWC employs a dynamic weighting mechanism that considers the influence of all past behaviors during new behavior acquisition. Specifically, the Fisher information at each learning stage is adjusted based on its temporal weight, ensuring that earlier learned behaviors remain adequately protected rather than being entirely disregarded over time. This mechanism reduces the model’s over-reliance on recently learned behaviors, leading to more stable behavior recognition performance. Equation (4) shows the Dynamic Fisher Information Matrix:

$$I_{\text{dyn}}(\theta) = \sum_{t=1}^{T} \alpha_t\, I_t(\theta) \tag{4}$$

where $I_{\text{dyn}}(\theta)$ is the Dynamic Fisher Information Matrix (D-FIM), $I_t(\theta)$ represents the Fisher information at the $t$-th learning stage, and $\alpha_t$ is a weighting factor that satisfies $\sum_{t=1}^{T} \alpha_t = 1$, where earlier behavioral data are assigned a lower weight but are still retained.
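One way to realize such a temporally weighted Fisher estimate is sketched below. The geometric decay schedule and the normalization of the weights to sum to one are assumptions made for this example, as are the function names; the text only states that earlier stages receive lower but nonzero weight.

```python
def temporal_weights(num_stages, decay=0.8):
    """Weights that grow toward the most recent stage and sum to 1.
    ASSUMPTION: geometric decay; the study's schedule is not specified."""
    raw = [decay ** (num_stages - 1 - t) for t in range(num_stages)]
    total = sum(raw)
    return [w / total for w in raw]

def dynamic_fisher(stage_fishers, weights):
    """Weighted sum of per-stage diagonal Fisher vectors."""
    dim = len(stage_fishers[0])
    return [
        sum(w * f[i] for w, f in zip(weights, stage_fishers))
        for i in range(dim)
    ]

# Hypothetical diagonal Fisher vectors from three learning stages.
stages = [[4.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
alphas = temporal_weights(len(stages), decay=0.5)
combined = dynamic_fisher(stages, alphas)
```

Because every stage keeps a nonzero weight, a parameter that mattered only for the earliest behavior (the first entry of the first vector here) still contributes to the combined estimate.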

Moreover, conventional EWC applies a fixed regularization coefficient ($\lambda$) to constrain parameter updates. However, a fixed $\lambda$ can lead to two extreme scenarios: (1) when the new behavior is highly similar to past behaviors, the model should allow more flexible parameter updates, yet a fixed $\lambda$ may overly restrict updates, impeding the learning of new behaviors; and (2) when the new behavior significantly differs from past behaviors, the model should strengthen protection for previously learned behaviors, but a fixed $\lambda$ may result in catastrophic forgetting. To address this limitation, DA-EWC introduces an adaptive regularization mechanism, enabling $\lambda$ to be dynamically adjusted based on the similarity between new and past behaviors. When the similarity is high, the system reduces the regularization strength, allowing the model to adapt more effectively. Conversely, when the behavior difference is substantial, the system increases protection for previous knowledge to mitigate catastrophic forgetting. Equation (5) defines this adjustment in terms of $\lambda_{\text{dyn}}$, the dynamically adjusted regularization coefficient; $\beta$, an adjustment factor that controls sensitivity to behavioral similarity; and $d$, the parameter distance between new and previous behaviors (e.g., Euclidean distance or KL divergence).

When the new behavior is similar to a previous behavior (i.e., $d$ is small), $\lambda_{\text{dyn}}$ decreases, allowing more flexibility in updates. Conversely, when the new behavior significantly differs from the previous behavior, $\lambda_{\text{dyn}}$ increases to strengthen the protection of past knowledge.
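The qualitative behavior described above (weaker regularization for similar behaviors, stronger for dissimilar ones) can be illustrated with a simple rule. The sketch below assumes a linear form, `base_lambda * (1 + beta * distance)`, with Euclidean parameter distance; this form is chosen only because it is monotonically increasing in the distance and should not be read as the study's Equation (5). All names and values are hypothetical.

```python
import math

def parameter_distance(new_params, old_params):
    """Euclidean distance between two parameter vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(new_params, old_params)))

def adaptive_lambda(base_lambda, distance, beta=1.0):
    """ASSUMED linear rule: regularization grows with parameter distance,
    so similar behaviors (small distance) are regularized less."""
    if distance < 0 or beta < 0:
        raise ValueError("distance and beta must be non-negative")
    return base_lambda * (1.0 + beta * distance)

# Hypothetical parameter vectors for a similar and a dissimilar new behavior.
old = [0.0, 0.0]
lam_similar = adaptive_lambda(10.0, parameter_distance([0.3, 0.4], old), beta=0.5)
lam_different = adaptive_lambda(10.0, parameter_distance([3.0, 4.0], old), beta=0.5)
```

Here `beta` plays the role of the sensitivity factor: larger values make the regularization strength react more sharply to behavioral dissimilarity.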

By incorporating this dynamic and adaptive mechanism, DA-EWC enables the system to retain previously learned behavior recognition capabilities while flexibly adapting to new behaviors. This approach leads to more stable behavior recognition and prevents the model from forgetting earlier behaviors as it continues learning new ones, ultimately improving recognition accuracy and reliability.

4. Results and Discussion

4.1. Experimental Environment

Table 1 outlines the hardware specifications and software versions used to train the neural network and develop the dolphin skeleton detection and behavior analysis system in this study. The computational setup was deployed at Farglory Ocean Park, utilizing an Nvidia RTX 3060 GPU for accelerated model training. The proposed model was implemented using TensorFlow, DeepLabCut, and other open-source deep learning frameworks. Training and testing were conducted on Windows and the Google Colab platform, leveraging cloud-based resources to enhance computational efficiency and facilitate model optimization.

4.2. Experiment Using DeepLabCut

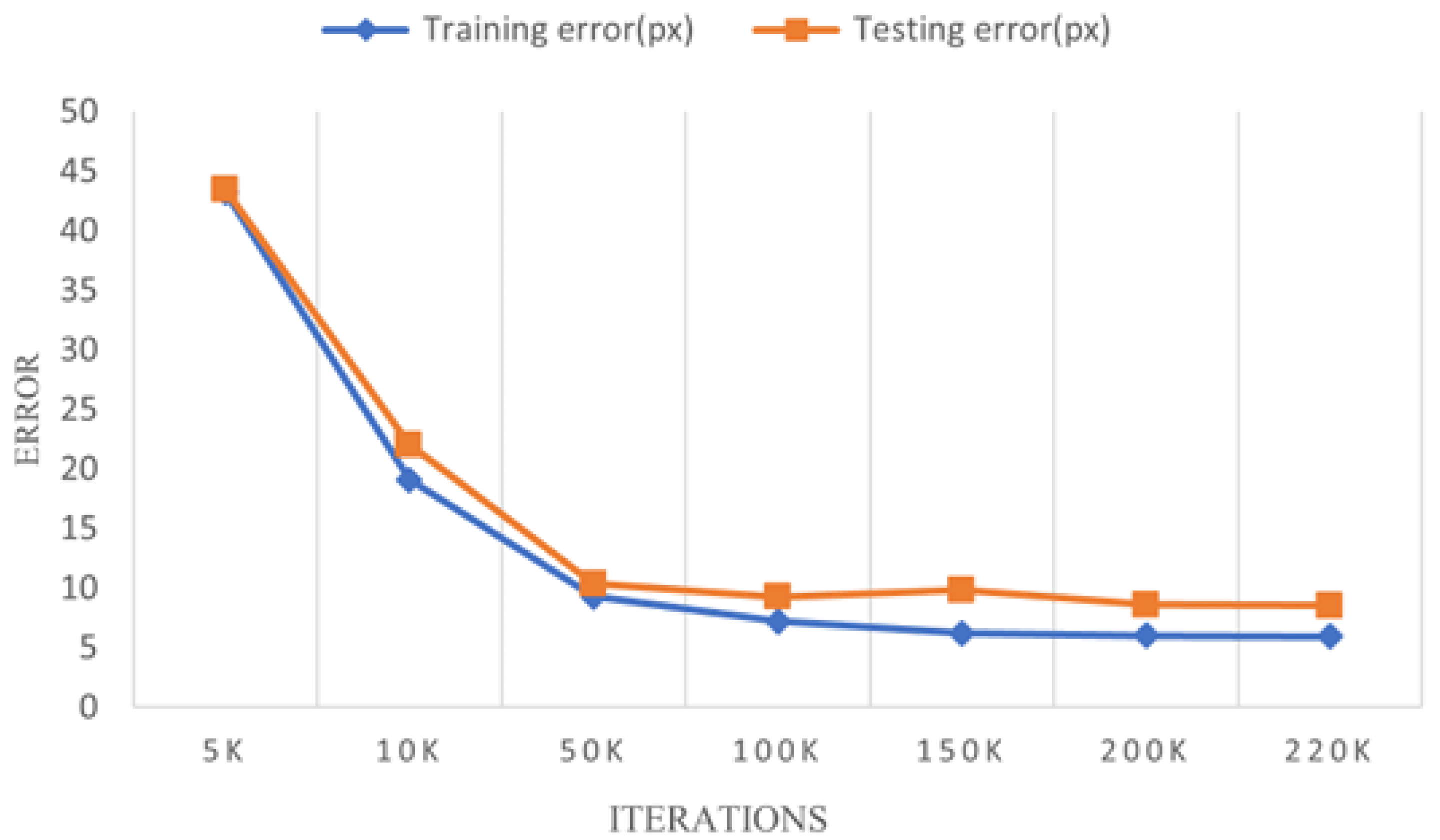

In this experiment, we employed DeepLabCut (DLC) for pose estimation, aiming to accurately detect eleven keypoints on the dolphin’s body. The network was trained with a batch size of 8, and the learning rate schedule was carefully tuned over multiple stages to balance convergence speed and model stability, as shown in Table 2.

Figure 11 shows the training and testing error (in terms of the root mean square error, RMSE) as a function of the training iterations. During the initial phase (0–7500 iterations), the model rapidly converged under a higher learning rate, while subsequent steps gradually reduced the learning rate to refine keypoint localization without overfitting.

After convergence at around 220,000 iterations, we evaluated the final model on a testing set to measure the Root Mean Square Error (RMSE) for each keypoint.

Table 3 illustrates the RMSE values across the eleven anatomical landmarks, including the rostrum, melon, dorsal fin, pectoral fins, belly, and tail fin. Most keypoints achieved RMSE values below 6 pixels, indicating a high level of precision in dolphin pose estimation.

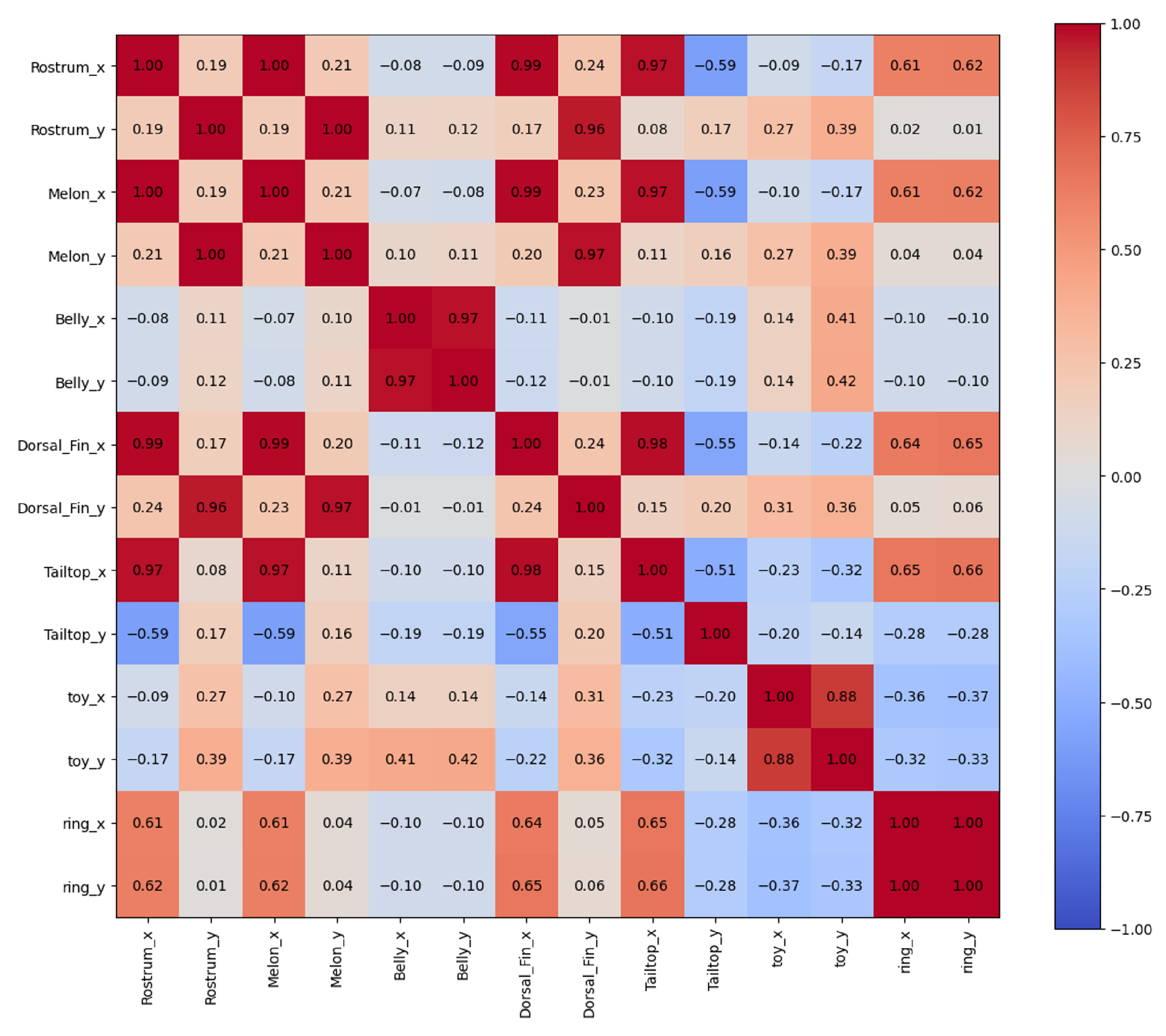

Additionally, we present a heatmap in Figure 12 that visualizes the correlation or importance distribution among these keypoints. Each cell indicates how strongly two keypoints moved together or affected model confidence. High correlation values typically appeared between physically adjacent landmarks (e.g., rostrum–melon), suggesting that DLC effectively captured structural relationships in the dolphin’s body. Lower or negative correlations often involved distant or flexibly moving body parts (e.g., tail and rostrum), where relative motions differed significantly.

4.3. Experiment Using BiLSTM

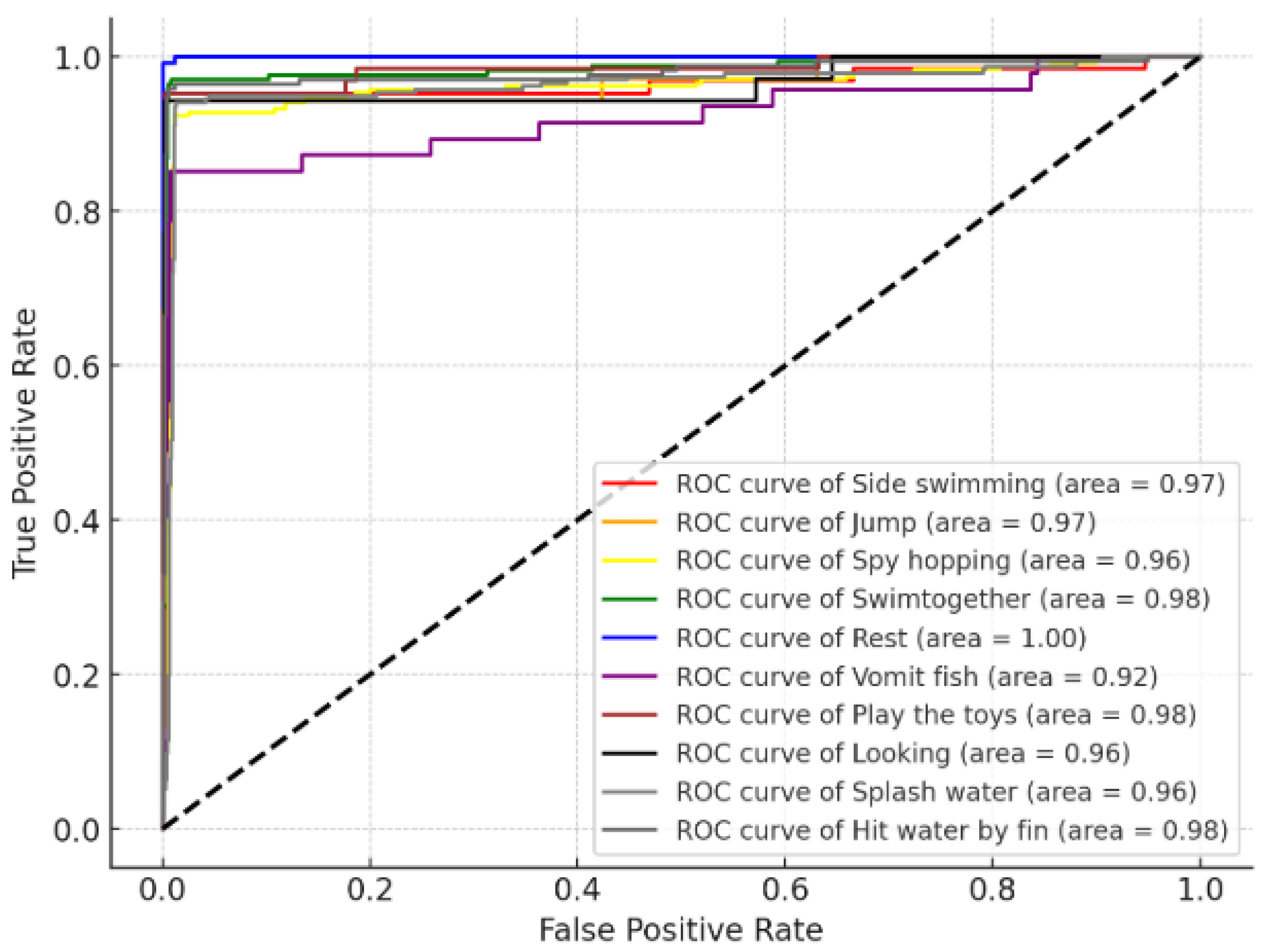

Building upon the pose estimation results from DeepLabCut, a BiLSTM (Bidirectional Long Short-Term Memory) model was employed to classify 10 dolphin behaviors by leveraging time-series keypoint data.

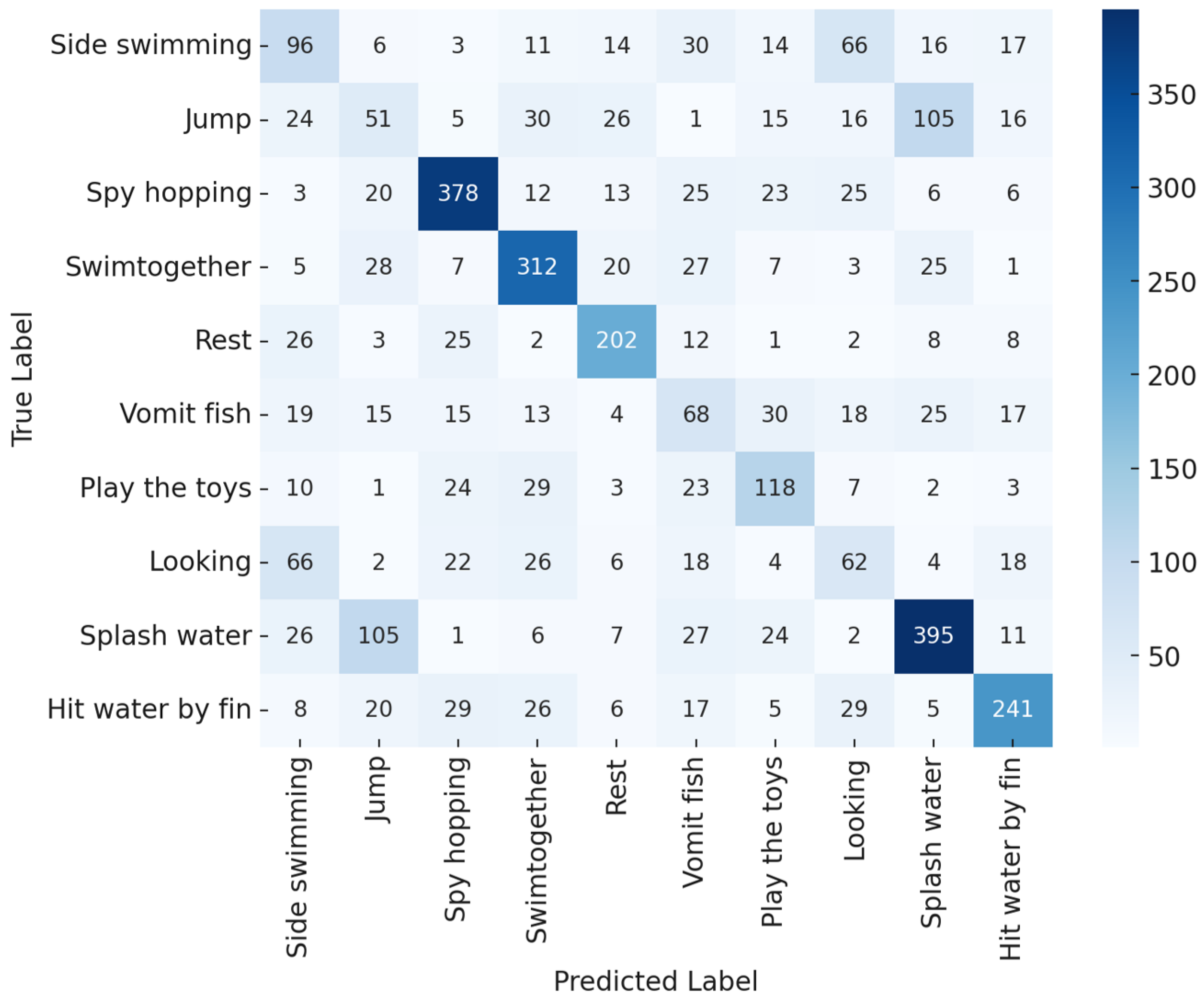

Figure 13 illustrates the multi-class ROC curves, each representing a distinct behavior. The average AUC was approximately 0.97, indicating that the BiLSTM model effectively captured temporal dependencies for most behaviors. Certain categories, such as “rest” (AUC = 1.00) and “swim together” (AUC = 0.98), demonstrated particularly strong separability.
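For readers unfamiliar with multi-class ROC analysis, the sketch below shows one common way to obtain per-class and average AUC values: each behavior is scored one-vs-rest, and AUC is computed as the probability that a positive sample is ranked above a negative one. The function names and the toy probabilities are hypothetical, and the text does not state which averaging scheme was used in the study.

```python
def binary_auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly;
    tied scores count as half a correct pair."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

def one_vs_rest_auc(prob_rows, true_labels, classes):
    """Per-class one-vs-rest AUC and their unweighted (macro) average."""
    aucs = {}
    for k, cls in enumerate(classes):
        scores = [row[k] for row in prob_rows]
        labels = [1 if y == cls else 0 for y in true_labels]
        aucs[cls] = binary_auc(scores, labels)
    return aucs, sum(aucs.values()) / len(aucs)

# Toy predicted probabilities for two behaviors (not results from the study).
probs = [[0.8, 0.2], [0.3, 0.7], [0.6, 0.4], [0.1, 0.9]]
truth = ["rest", "jump", "rest", "jump"]
per_class, macro = one_vs_rest_auc(probs, truth, ["rest", "jump"])
```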

Figure 14 shows a 10 × 10 confusion matrix, highlighting the diagonal cells with high correct classifications (e.g., 378 for “spy hopping”, 312 for “swim together”, 202 for “rest”, and 395 for “splash water”). Notable misclassifications include “jump” and “splash water”, where similar water movements may lead to confusion, and “side swimming” and “looking”, which occasionally share comparable poses or angles. Overall, the BiLSTM achieved robust multi-class recognition, although behaviors with overlapping motion cues can still pose classification challenges.

4.4. Experiment Using Incremental Learning

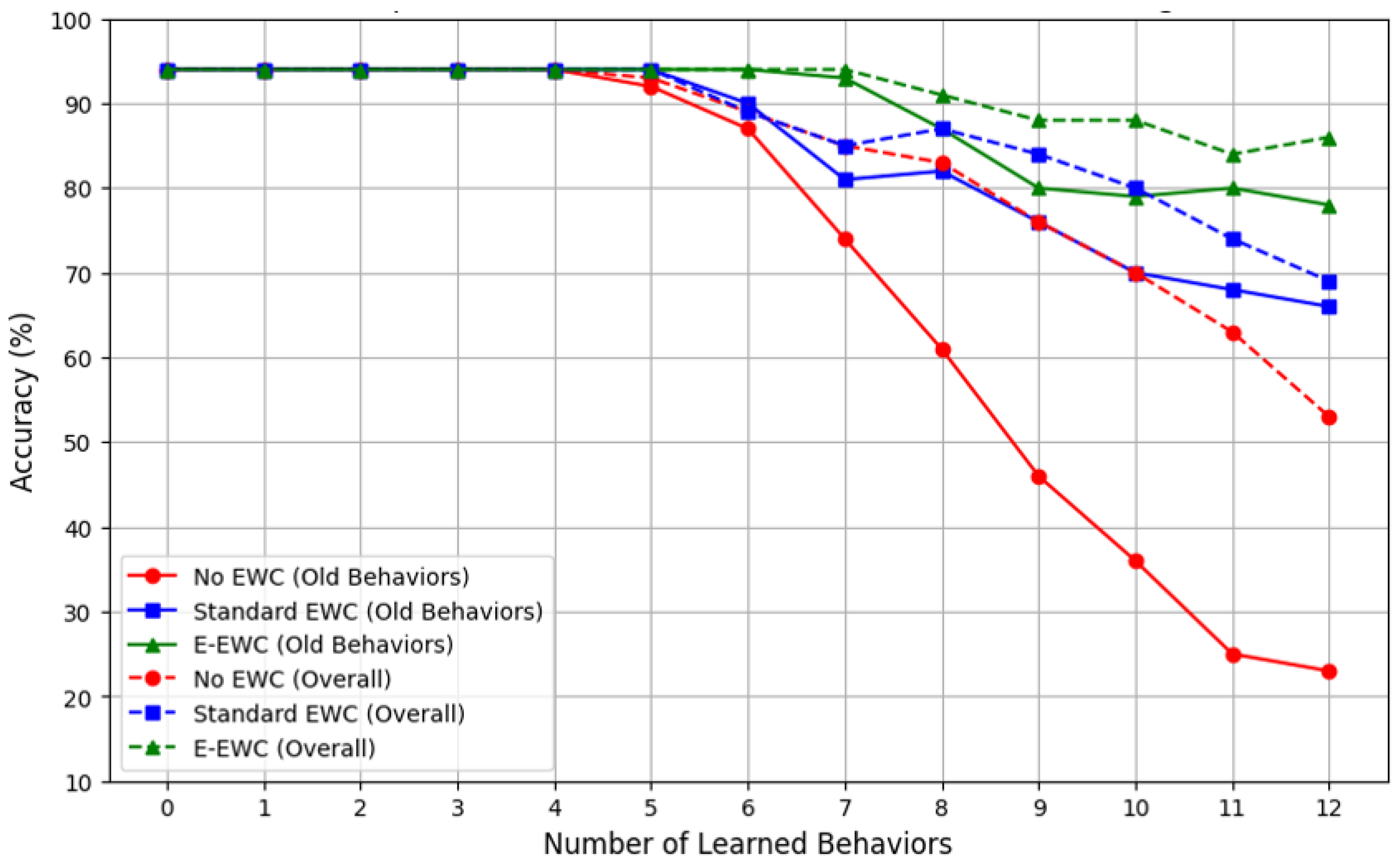

To evaluate the system’s ability to learn new behaviors without forgetting previously acquired ones, we compared three approaches: No EWC, Standard EWC, and Dynamic Adaptive EWC (DA-EWC).

Figure 15 illustrates the accuracy trends for both old and newly introduced behaviors as the total number of learned behaviors increased from 5 to 12. The results of these approaches are summarized as follows:

No EWC (Red lines): The model rapidly lost accuracy on earlier behaviors, indicating a severe form of catastrophic forgetting once new behaviors were introduced.

Standard EWC (Blue lines): By adding regularization based on the Fisher Information Matrix, the model better retained old behaviors compared to No EWC. Nonetheless, performance gradually declined as more behaviors were learned.

DA-EWC (Green lines): Building upon EWC with dynamic adjustments, the model maintained higher accuracy on old behaviors and achieved robust overall performance, even when the behavior set expanded to 12 categories.

These results, shown in Figure 15, highlight the importance of incrementally learning new behaviors while preserving existing knowledge. Although regular EWC mitigated some catastrophic forgetting, DA-EWC offered further improvements in stability, indicating that adaptive regularization and enhanced parameter protection can substantially benefit long-term behavior recognition.

5. Discussion

5.1. Comparison of Experimental Results

Table 4 presents a comparison of various methods for dolphin behavior recognition, including Compressive Tracking, OpenPose, Faster R-CNN, ADD-LSTM, and our proposed system. While ADD-LSTM achieved around 94% accuracy, our approach attained 96.5% accuracy and higher recall, precision, and F-score (93.7%, 94.6%, and 94.4%, respectively). These results suggest that combining DeepLabCut pose estimation, BiLSTM-based temporal analysis, and incremental learning (DA-EWC) effectively captures both spatial and temporal features, surpassing methods designed primarily for human or generic object detection.
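The accuracy, precision, recall, and F-score reported in such comparisons are typically derived from a confusion matrix. The sketch below computes macro-averaged versions of these metrics, assuming rows are true classes and columns are predicted classes; the averaging scheme and the 2 × 2 example matrix are illustrative assumptions, not values or methods confirmed by the study.

```python
def classification_metrics(cm):
    """Accuracy and macro-averaged precision, recall, and F1 from a square
    confusion matrix (rows: true class, columns: predicted class)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    precisions, recalls, f1s = [], [], []
    for i in range(n):
        tp = cm[i][i]
        predicted = sum(cm[r][i] for r in range(n))  # column sum
        actual = sum(cm[i])                          # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }

# Toy two-class confusion matrix (not data from the study).
metrics = classification_metrics([[8, 2], [1, 9]])
```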

5.2. Error Analysis

The confusion matrices in Table 5 and Table 6 reveal notable misclassification patterns between similar behaviors. The highest confusion occurred between jump and splash water, as well as between side swimming and looking.

For jump and splash water, the misclassification likely stems from their sequential nature. Dolphins often jump before landing back in the water, creating a large splash. This overlapping visual pattern makes it difficult for the model to decide whether a segment belongs to the jump itself or to the subsequent splash. The confusion matrix indicates that 105 instances of jump were incorrectly labeled as splash water, and vice versa, suggesting that refining temporal features in behavior segmentation could improve accuracy.

Similarly, side swimming and looking share visual similarities. In both behaviors, the dolphin partially submerges with one side exposed, sometimes surfacing with one eye visible. This resemblance may lead the model to confuse the two, as seen in the confusion matrix, where 66 instances of side swimming were classified as looking, and vice versa. The challenge arises from the model’s reliance on pose estimation, which may not capture subtle facial or posture cues differentiating these behaviors.

To address these issues, future improvements could focus on refining temporal modeling for behaviors that occur in close succession and incorporating additional spatial cues to differentiate subtle head positioning in side swimming and looking.

6. Conclusions

This study aimed to develop a flexible and user-driven marine mammal behavior recognition system capable of addressing the limitations of traditional approaches in behavior monitoring and classification. The proposed system combines pose estimation using DeepLabCut and behavior classification using BiLSTM models, enabling precise recognition of animal behaviors across diverse environments.

A key innovation of the system lies in its incremental learning framework, which allows users to autonomously add new behaviors without requiring extensive retraining or large annotated datasets. This feature significantly reduces the cost and complexity of expanding the system’s capabilities, making it more adaptable to diverse research and monitoring scenarios. The experimental results demonstrate that the system achieves high classification accuracy while maintaining computational efficiency. By seamlessly integrating new behaviors, the system facilitates real-time monitoring and long-term behavior analysis, offering valuable insights into animal welfare, ecological research, and conservation efforts.

Through its innovative design and robust performance, the system addresses critical challenges in the field of animal behavior recognition, providing a flexible, high-performance solution suitable for various controlled environments.

This study makes significant contributions to the field of animal behavior recognition by addressing critical limitations in traditional methods and proposing innovative solutions. The system introduces a user-driven behavior expansion feature, enabling users to autonomously add new behaviors by uploading and annotating a small amount of video data. This eliminates the need for extensive retraining of the model, drastically reducing both time and cost. This innovation enhances the system’s flexibility, making it adaptable to a wide range of applications.

In addition, the integration of DeepLabCut for pose estimation with BiLSTM models allows the system to achieve high accuracy in classifying complex animal behaviors. This combination improves the system’s adaptability across species and environments, offering a reliable and efficient approach to behavior monitoring. Another key innovation is the implementation of an incremental learning framework, which ensures the seamless integration of new behaviors while preventing the loss of previously learned behaviors. This ensures stable and robust system performance during continuous updates.

The practical implications of this study are also noteworthy. By designing a system that is both intuitive and efficient, it addresses the needs of non-expert users, making it accessible to a broader audience. Its application spans various domains, including animal welfare monitoring, ecological research, and conservation efforts. Overall, this research overcomes the limitations of traditional behavior recognition systems, sets a new standard for flexibility and scalability, and lays a solid foundation for future developments in this field.

Although this study has made significant progress in animal behavior recognition, there remain numerous areas for future exploration to further enhance the system’s performance and expand its application scope. First, while the incremental learning mechanism effectively supports the seamless integration of new behaviors, its stability and efficiency need to be validated in larger-scale behavior categories and more diverse environments. Future research could explore advanced learning methods to improve the model’s generalization capabilities. Second, although the current system focuses on pose estimation and behavior classification, incorporating multi-modal data, such as audio signals, physiological data (e.g., heart rate), or environmental factors, could provide a more comprehensive analysis of animal behavior. This would be particularly beneficial for studying complex interactions, such as group dynamics or responses to environmental stressors.

Finally, the system’s application range could be extended to include broader animal species and field scenarios, such as behavior monitoring in wild environments. By integrating edge computing technologies, the system could be deployed on resource-constrained field devices to achieve real-time behavior recognition and data transmission, further enhancing its practicality and impact.