1. Introduction

Underwater target recognition technology has evolved alongside advancements in sonar technology, signal detection theory, and computer technology [1,2]. Subsequent progress in information theory, adaptive signal processing, and modern spectral estimation has driven further developments in this field. This technology holds significant importance across multiple domains [3]. In marine resource exploration, it enables the effective identification of underwater mineral deposits and biological resources to optimize resource utilization efficiency [4]. For military defense applications, underwater target recognition is critical for detecting and classifying submarines, unmanned underwater vehicles (UUVs), and other strategic threats, thereby enhancing national maritime defense capabilities [5]. In environmental conservation, the technology facilitates the monitoring of marine ecosystems and supports the timely identification and mitigation of environmental pollution issues [6].

Underwater target signals across various physical fields are inherently unstable due to the complex marine environment. These signals exhibit dynamic time–space–frequency variations, nonlinear and non-Gaussian characteristics, multipath effects, and interference from reverberation and environmental noise. A single sensor can only capture partial target information, leading to uncertainties in data acquisition and resulting in imprecise, incomplete, and unreliable observations [7]. Consequently, underwater target identification based solely on a single sensor suffers from low recognition accuracy, poor robustness, and limited reliability. To achieve a comprehensive and accurate perception of underwater targets, multi-sensor information fusion is essential.

Information fusion theory originated in underwater signal processing, and the marine domain has remained a primary area of focus for fusion research. In military applications, maritime information fusion serves as a core enabling technology for naval intelligence systems. In civilian applications, the demand for oceanic information fusion continues to grow in areas such as maritime safety monitoring, emergency response to maritime incidents, search and rescue operations, marine environmental protection, resource exploration, and disaster prevention [8,9]. Traditional methods in underwater target fusion perception include Fisher discriminant analysis, principal component analysis, rough set theory, approximate grid filtering, wavelet hierarchical image fusion, particle filtering, hidden Markov models, double Markov chain models, entropy-based approaches, and joint sparsity models (JSMs).

Several studies have contributed to the development of underwater target fusion recognition. J. Yong employed a voting-based fusion approach incorporating Dempster–Shafer (D-S) theory for local decision fusion, demonstrating enhanced robustness in low signal-to-noise-ratio (SNR) and adversarial environments [10]. J. Xu explored feature-level fusion of ship acoustic and magnetic field characteristics, utilizing correlation-based processing and M-S diagrams and achieving over 80% classification accuracy in sea trials [11]. X. Pan introduced an adaptive, multi-feature fusion network for underwater target recognition, incorporating data preprocessing, multi-dimensional feature extraction, and adaptive feature fusion modules to improve classification accuracy [12].

With the increasing demand for intelligent and rapid underwater target perception, research has shifted towards machine learning-based approaches. Neural network-based sensor information fusion offers a unified internal knowledge representation, enabling automatic knowledge acquisition and parallel associative reasoning. This has emerged as a key research focus in recent years [13]. X. Han proposed a one-dimensional convolutional neural network (1D-CNN) combined with a long short-term memory (LSTM) network, effectively leveraging the temporal characteristics of ship noise signals to enhance classification accuracy [14]. Q. Zhang developed a 2D-CNN-based approach to underwater target signal recognition, utilizing frequency-domain information. The proposed ensemble network, consisting of three distinct 2D-CNNs trained on different spectral representations, demonstrated improved recognition performance [15]. S. Zhang introduced both a feature-level fusion model based on multi-category feature subsets and a decision-level fusion model using D-S evidence theory, achieving superior classification performance compared to single-feature methods [16].

Deep learning-based methods have also been applied to underwater target perception. X. Cao designed a stacked sparse autoencoder (SSAE) model trained on a dataset comprising underwater acoustic signals from different ocean depths, achieving a 5% improvement in classification accuracy with joint feature inputs [17]. Y. Dong examined networked underwater target detection scenarios, demonstrating the efficiency of information fusion through case studies [18]. T. Fei integrated an ensemble learning scheme within the Dempster–Shafer framework for object classification, considering classifier reliability and hypothesis support, and evaluated its performance with synthetic aperture sonar images [19]. L. Hu investigated target detection and localization using a sensor network comprising active sources, multiple distributed passive sensors, and a fusion center [20]. Addressing shared information among sensors, P. Braca proposed two diffusion schemes based on contact data from local detection and tracked data through local tracking [21]. Lin et al. explored an underwater target classification approach that utilizes chaotic characteristics of the flow field, integrating chaos theory and power spectrum density analysis with a two-step SVM for enhanced obstacle recognition [22]. J. Yan implemented a two-step approach combining local decision-making and external fusion, where a fuzzy membership function assigned weights based on signal reliability, followed by hybrid Bayesian fusion [23]. X. Zhou developed a deep learning-based data compression and multi-hydrophone fusion (DCMF) model, utilizing a stacked sparse autoencoder and multi-input fusion network to efficiently extract joint frequency–depth features [24]. K. Song proposed an improved deep regularized canonical correlation analysis (CCA) fusion method for noisy multi-source underwater sensor data, demonstrating enhanced classification efficiency and accuracy [25]. Xu et al. proposed an underwater acoustic target recognition model that integrates 3D Mel-frequency cepstral coefficients (MFCCs) and 3D Mel features with a multi-scale depthwise separable convolutional network and a multi-scale channel attention mechanism, demonstrating strong classification performance [26].

Despite these advancements, underwater target recognition remains highly challenging due to the increasing maneuverability, stealth, and automation of targets, as well as the complexity of the underwater environment. Among the many factors that affect recognition performance, data imbalance has become one of the key constraints. Two types of data imbalance arise in multi-physical-field sensing scenarios. First, the number of valid data samples collected by different physical field sensing systems varies greatly because of differences in sensing capabilities and environmental conditions, resulting in inter-field data imbalance. Second, the number of available samples also differs significantly between target categories, leading to inter-class data imbalance. The coexistence of these two types of imbalance severely limits the effective training and feature learning of classification models such as neural networks, which can easily bias the model and degrade recognition accuracy and robustness. Therefore, designing an adaptive recognition scheme for different physical fields and constructing a more robust neural network model when multi-physical-field signal samples are generally imbalanced has become a critical issue.

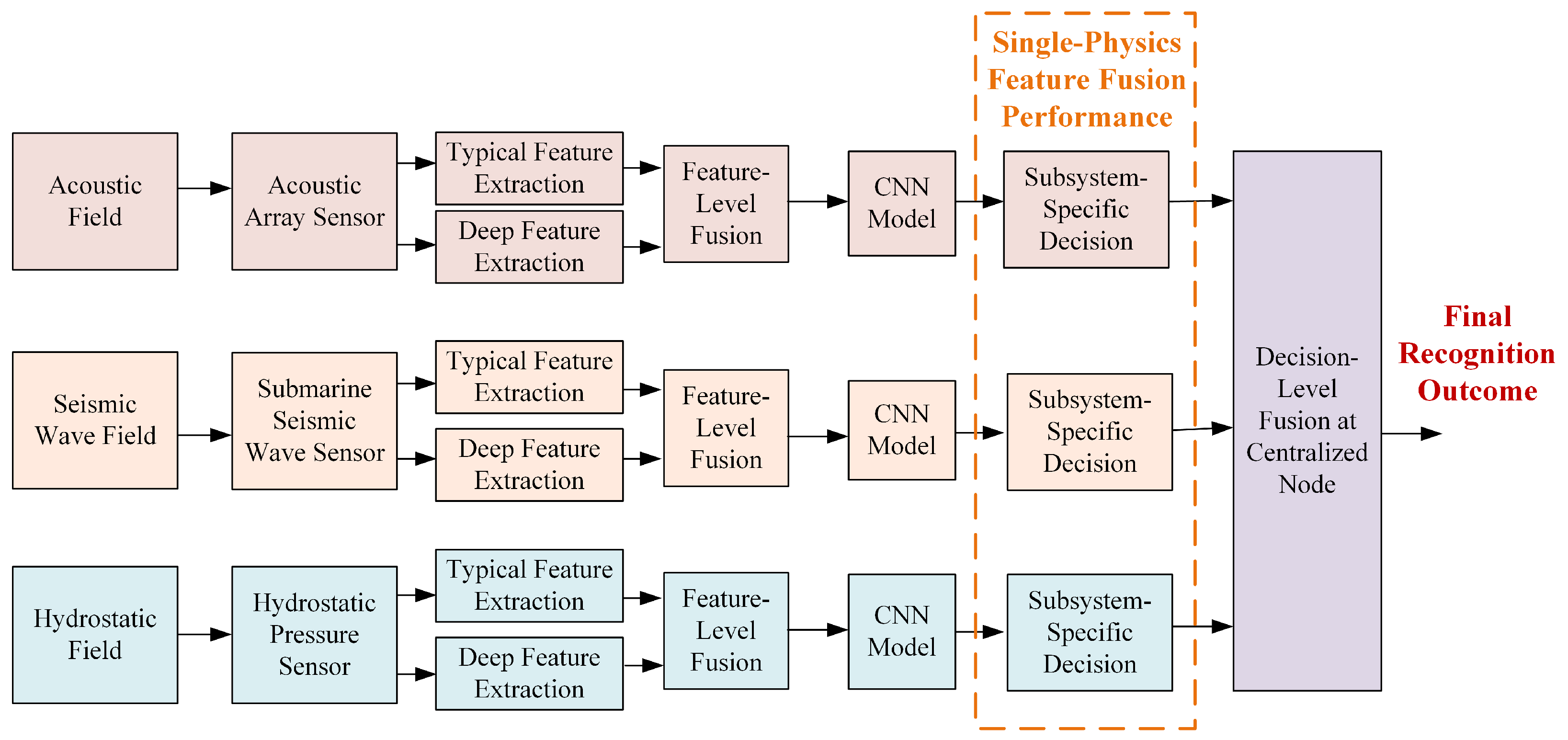

To tackle these challenges, this paper integrates deep learning and information fusion theory to propose a multi-level fusion perception method for underwater targets. We design an intelligent network fusion framework for underwater target recognition that incorporates both feature-level and decision-level fusion across multiple physical fields, including acoustic, pressure, and seismic fields. An autoencoder-based network is employed to extract deep features of underwater targets, which are then fused with conventional typical features to form a multi-dimensional heterogeneous feature set. Furthermore, neural network-based classification models are developed for each physical field subsystem. To address class imbalance and varying sample difficulty, we introduce a C-Focal Loss function tailored to the three underwater target categories. Finally, we propose an evidence-theoretic network fusion framework and a neural network-based D-S evidence fusion algorithm to achieve deep fusion perception of underwater targets.
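The C-Focal Loss itself is not defined in this section, so the following is only a minimal sketch of the standard class-weighted focal loss that such a function presumably builds on. The names `focal_loss`, `inverse_frequency_weights`, `alpha`, and `gamma` are illustrative assumptions, as is the inverse-frequency weighting; none of them is taken from the paper.

```python
import numpy as np

def focal_loss(probs, labels, alpha, gamma=2.0, eps=1e-12):
    """Standard class-weighted focal loss, averaged over a batch.

    probs  : (N, C) array of softmax outputs
    labels : (N,) integer class ids
    alpha  : (C,) per-class weights (assumed here; larger for rare classes)
    gamma  : focusing parameter; gamma = 0 gives weighted cross-entropy
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=int)
    alpha = np.asarray(alpha, dtype=float)
    # Probability assigned to the true class of each sample.
    p_t = np.clip(probs[np.arange(len(labels)), labels], eps, 1.0)
    # (1 - p_t)^gamma down-weights samples that are already easy.
    loss = -alpha[labels] * (1.0 - p_t) ** gamma * np.log(p_t)
    return float(loss.mean())

def inverse_frequency_weights(labels, num_classes):
    """One common (assumed) choice of alpha: inverse class frequency."""
    counts = np.bincount(np.asarray(labels, dtype=int), minlength=num_classes)
    counts = np.maximum(counts.astype(float), 1.0)  # avoid division by zero
    return counts.sum() / (num_classes * counts)

# Hypothetical three-class batch in which class 1 is under-represented.
labels = np.array([0, 0, 0, 1, 2, 2])
probs = np.array([
    [0.8, 0.1, 0.1],
    [0.7, 0.2, 0.1],
    [0.9, 0.05, 0.05],
    [0.4, 0.3, 0.3],   # hard sample from the rare class
    [0.1, 0.2, 0.7],
    [0.2, 0.2, 0.6],
])
alpha = inverse_frequency_weights(labels, num_classes=3)
print(focal_loss(probs, labels, alpha, gamma=2.0))
```

With `gamma = 0` and uniform `alpha`, the expression reduces to ordinary cross-entropy, which makes it easy to check how much the focusing term changes the contribution of hard samples.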

The remainder of this paper is structured as follows.

Section 2 investigates underwater target recognition using multi-physical-field sensing and introduces an intelligent fusion recognition framework based on multiple sensor modalities.

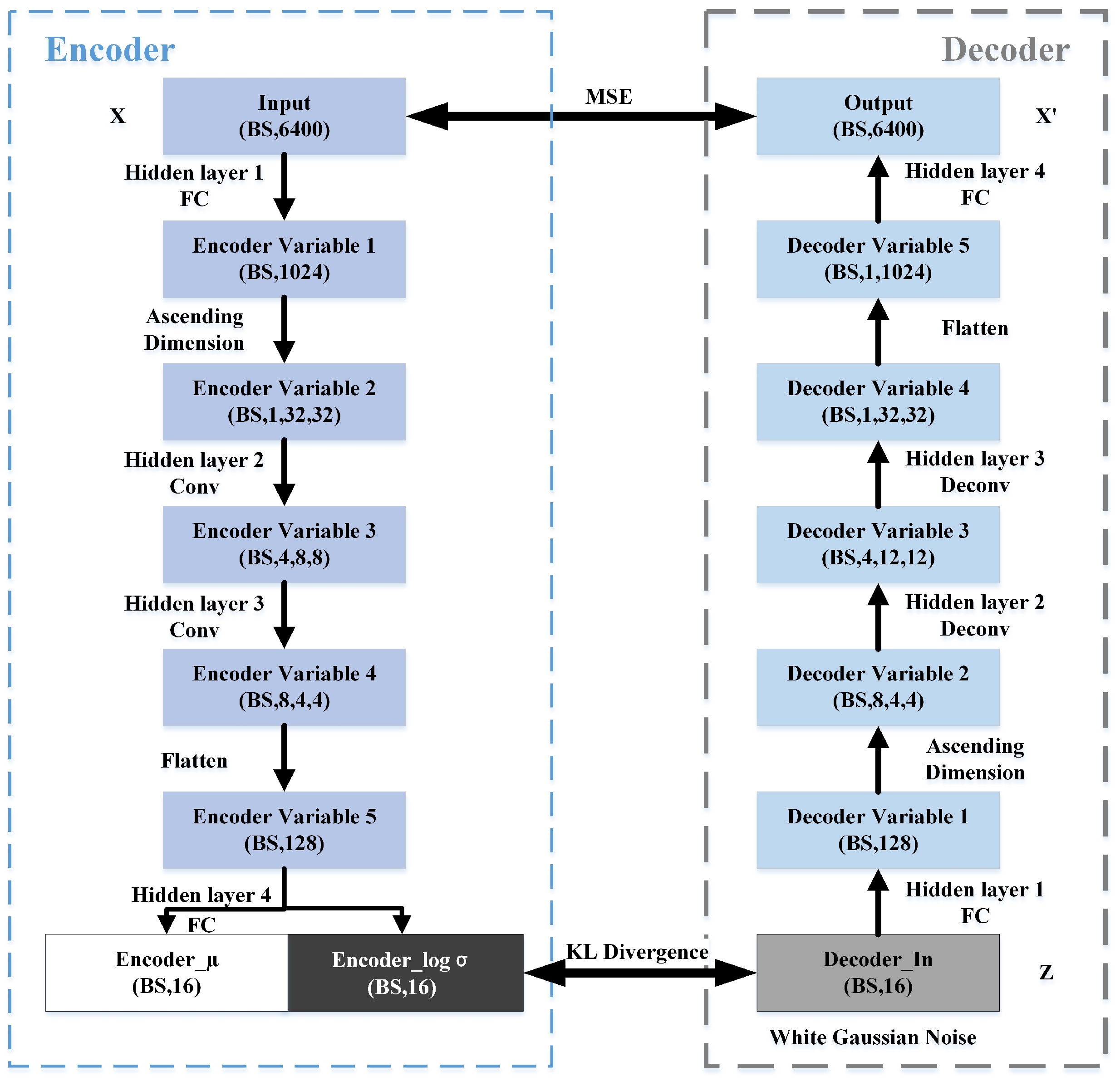

Section 2.1 presents a single-physical-field feature-level fusion approach, which extracts multi-dimensional typical features, employs a variational autoencoder network to extract deep features, and forms a comprehensive fusion feature set for subsequent classification.

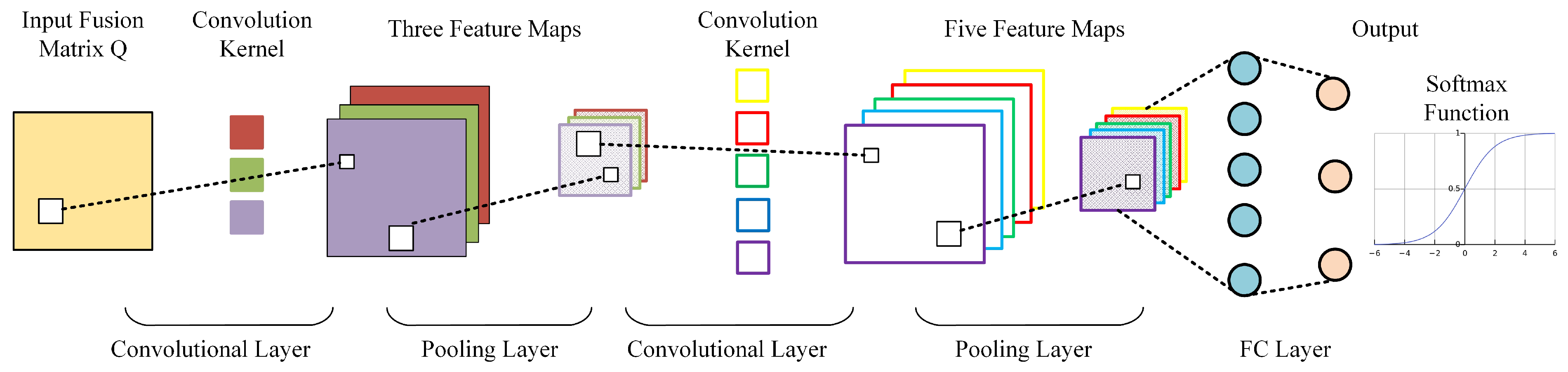

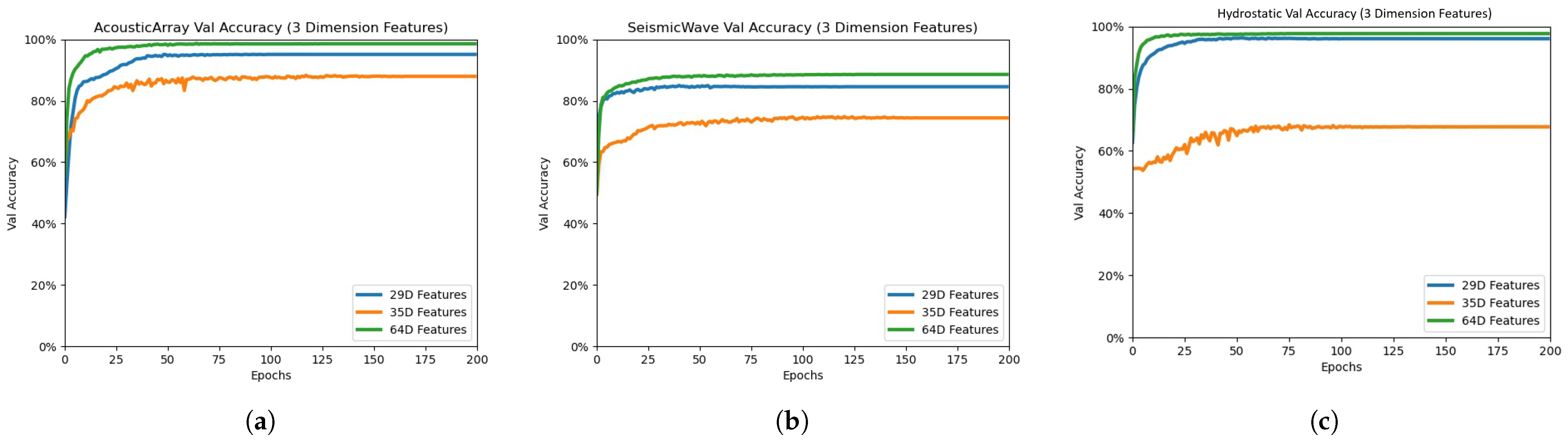

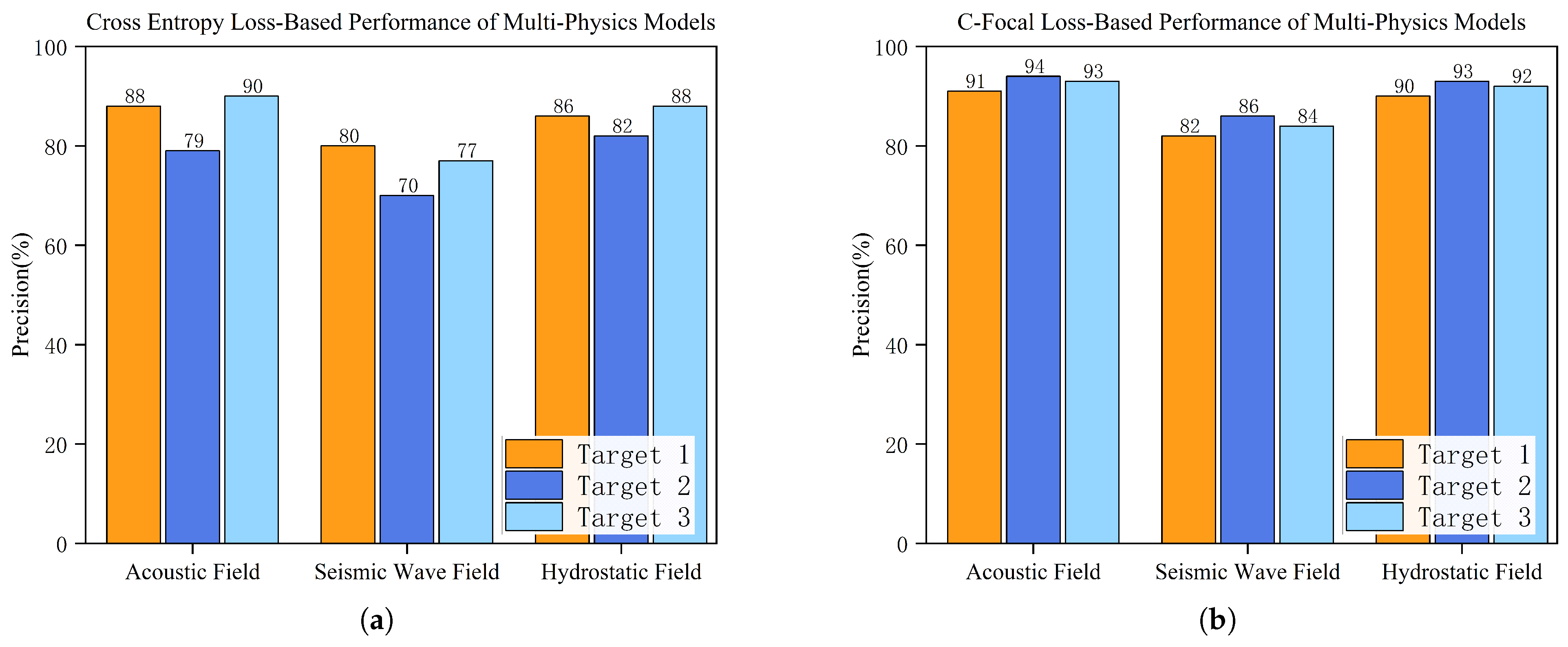

Section 2.2 develops neural network models for each physical field subsystem and introduces the C-Focal Loss function to address sample imbalance among the three target categories.

Section 2.3 explores multi-physical-field decision fusion using evidence theory and proposes an evidence-theoretic network fusion architecture and algorithm.

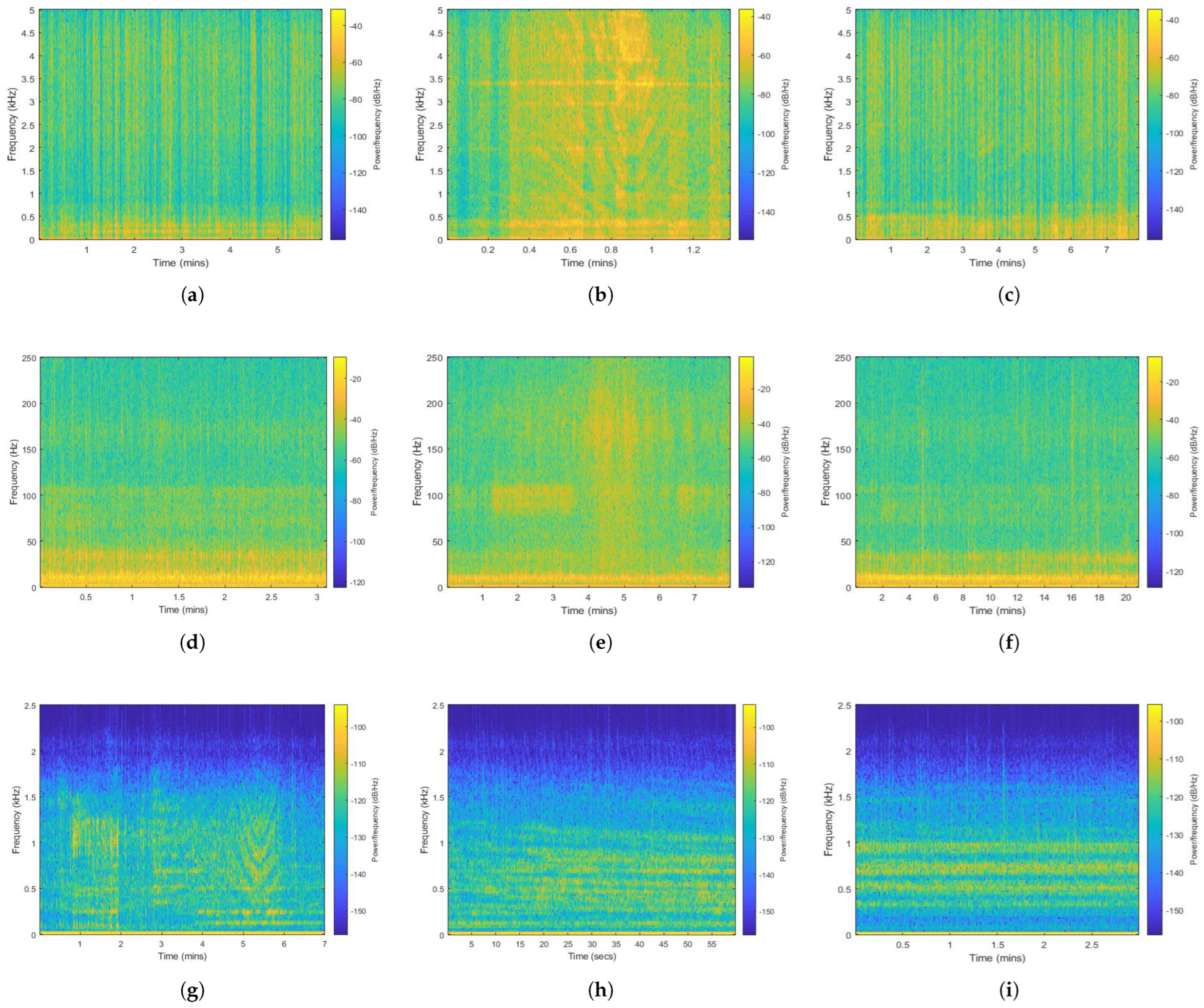

Section 3 presents experimental validation using real-world signals, including the construction of a multi-physical-field signal acquisition system, comparative classification results of individual subsystems, and decision-level fusion at the fusion center. Performance analysis across different physical fields is conducted to verify the effectiveness of the proposed approach.
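As a rough illustration of the feature-level fusion outlined for Section 2.1, the sketch below standardizes a block of typical features and a block of deep features separately and then concatenates them. The function name `fuse_features`, the z-score normalization, and the feature dimensions are assumptions made for the example; the paper's actual feature set and preprocessing are described in Section 2.1, not here.

```python
import numpy as np

def fuse_features(typical, deep, eps=1e-8):
    """Z-score each feature block column-wise, then concatenate per sample.

    typical : (N, D1) hand-crafted features for N samples
    deep    : (N, D2) deep features (e.g. an autoencoder latent code)
    returns : (N, D1 + D2) fused feature matrix
    """
    typical = np.asarray(typical, dtype=float)
    deep = np.asarray(deep, dtype=float)
    if typical.shape[0] != deep.shape[0]:
        raise ValueError("both blocks must describe the same samples")

    def zscore(x):
        # Put every column on a comparable scale before fusion.
        return (x - x.mean(axis=0)) / (x.std(axis=0) + eps)

    return np.concatenate([zscore(typical), zscore(deep)], axis=1)

# Hypothetical data: 8 samples, 3 typical features, 2 deep features.
rng = np.random.default_rng(0)
typical = rng.normal(loc=5.0, scale=3.0, size=(8, 3))
deep = rng.normal(size=(8, 2))
fused = fuse_features(typical, deep)
print(fused.shape)
```

In a real pipeline the mean and standard deviation would be estimated on the training split only and reused for validation and test data; the example computes them on the whole batch purely for brevity.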

4. Discussion

The multi-physical-field deep fusion perception method proposed in this paper accounts for imbalanced sample distributions across underwater target signal acquisitions by letting each physical field subsystem make its own decision. Each physical field is handled by an independent recognition subsystem, which reduces cross-physical-field signal interference. Within each subsystem’s neural network classifier, typical signal characteristics are fused with VAE-generated deep features, so that multi-dimensional heterogeneous characteristics are extracted from each sample to enhance classification capability. A dedicated C-Focal Loss function is designed to address inter-class imbalance in practical underwater target sample collections, significantly improving the recognition accuracy of the individual subsystems. Finally, decision-level fusion via the NNDS algorithm further improves the performance of the multi-physical-field recognition system. This hierarchical fusion architecture, spanning sample processing, feature extraction, and decision integration, achieves 97.15% accuracy in multi-physical-field underwater target recognition, which supports the effectiveness of the framework.
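The decision-level step can be made concrete with the classical Dempster rule of combination from D-S evidence theory. The sketch below implements only that textbook rule; it is not the NNDS algorithm, whose details are not given in this section. The class labels, the mass values, and the function names `dempster_combine` and `fuse_all` are hypothetical.

```python
from functools import reduce
from itertools import product

def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps a frozenset of hypotheses to its mass;
    the masses of one function are assumed to sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            # Mass assigned to contradictory hypotheses.
            conflict += mass_a * mass_b
    if conflict >= 1.0 - 1e-12:
        raise ValueError("total conflict: Dempster's rule is undefined")
    # Renormalize by the non-conflicting mass.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}

def fuse_all(mass_functions):
    """Fuse any number of subsystem outputs by repeated pairwise combination."""
    return reduce(dempster_combine, mass_functions)

# Hypothetical frame of discernment with three target classes.
THETA = frozenset({"class_1", "class_2", "class_3"})
acoustic = {frozenset({"class_1"}): 0.6, frozenset({"class_2"}): 0.1, THETA: 0.3}
pressure = {frozenset({"class_1"}): 0.5, frozenset({"class_3"}): 0.2, THETA: 0.3}
seismic = {frozenset({"class_1"}): 0.4, frozenset({"class_2"}): 0.3, THETA: 0.3}

fused = fuse_all([acoustic, pressure, seismic])
best = max(fused, key=fused.get)
print(sorted(best), round(fused[best], 4))
```

The mass left on the full frame `THETA` represents each subsystem's residual uncertainty, which is what lets a confident subsystem outweigh an unsure one during combination.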

However, this study has certain limitations. From a multi-physics perspective, it focused on acoustic, seismic, and hydrostatic pressure fields because of limitations in experimental equipment and data availability. Future work should incorporate underwater magnetic and electric fields to capture the multi-physics signatures of submerged targets more comprehensively. Subsequent research priorities include developing rapid multi-physics fusion-recognition techniques to achieve more efficient and accurate underwater target perception across broader physical domains.

From a practical deployment perspective, underwater surveillance primarily involves detecting uncharacterized targets, particularly non-cooperative platforms such as UUVs, for which pre-collected training data are unavailable. Future research should prioritize few-shot and zero-shot intelligent perception paradigms, leveraging meta-learning architectures or cross-domain transfer mechanisms to enable the precise classification of unidentified non-cooperative underwater targets with limited supervision and to improve generalization under extreme data scarcity.