AI-Enhanced Real-Time Monitoring of Marine Pollution: Part 2—A Spectral Analysis Approach

Abstract

1. Introduction

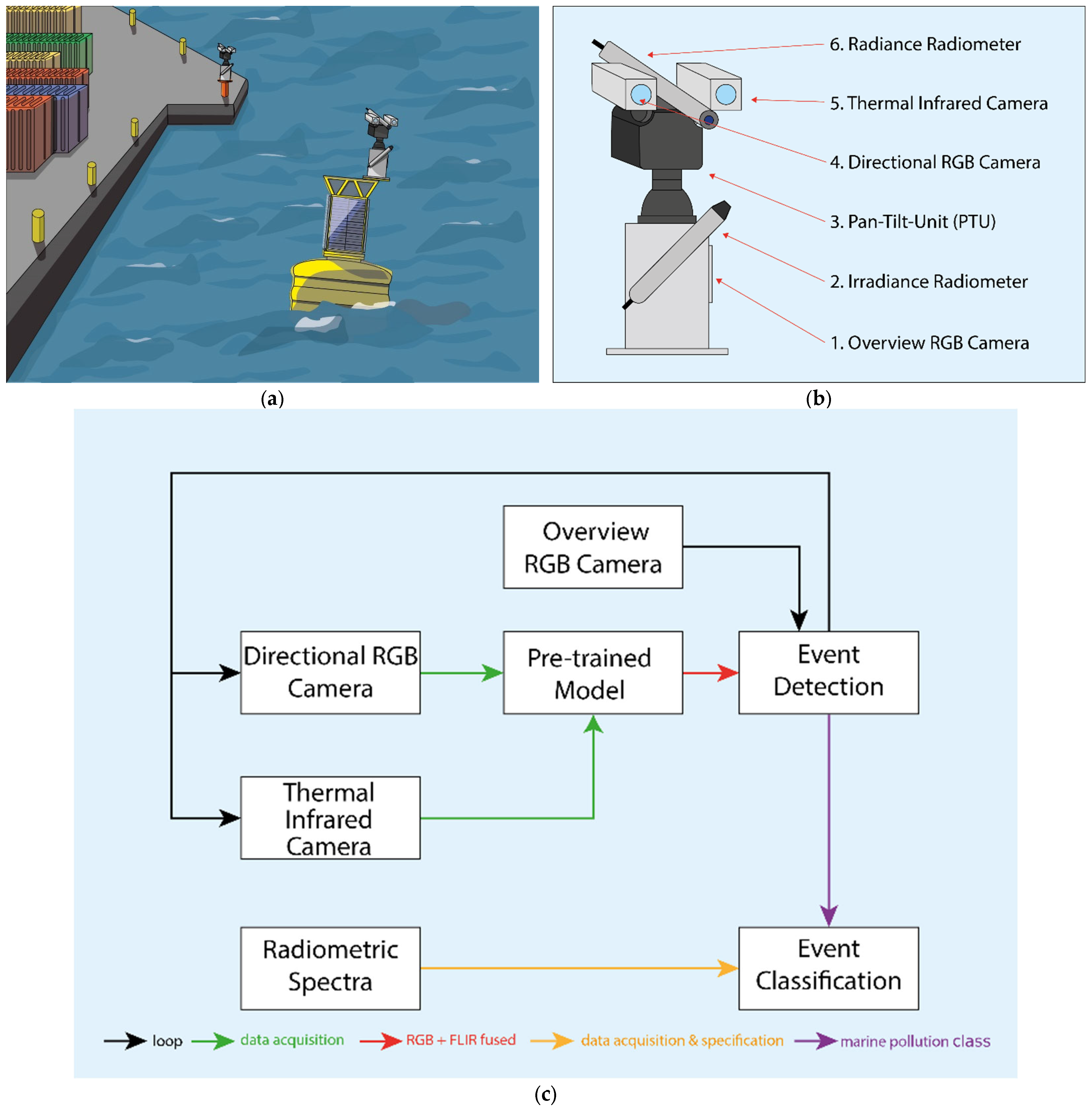

2. Design of Spectral Sensor Model for Marine Pollution Monitoring

2.1. An Overview Unit

2.2. A Directional Unit

3. Validation Through Synthetic Marine Pollution Dataset

3.1. Campaign 1: Static Litter Data

3.2. Campaign 2: Floating Basin Litter Data

3.3. Campaign 3: Grouped Floating-Litter Data

4. AI-Based Synthetic Litter Analysis

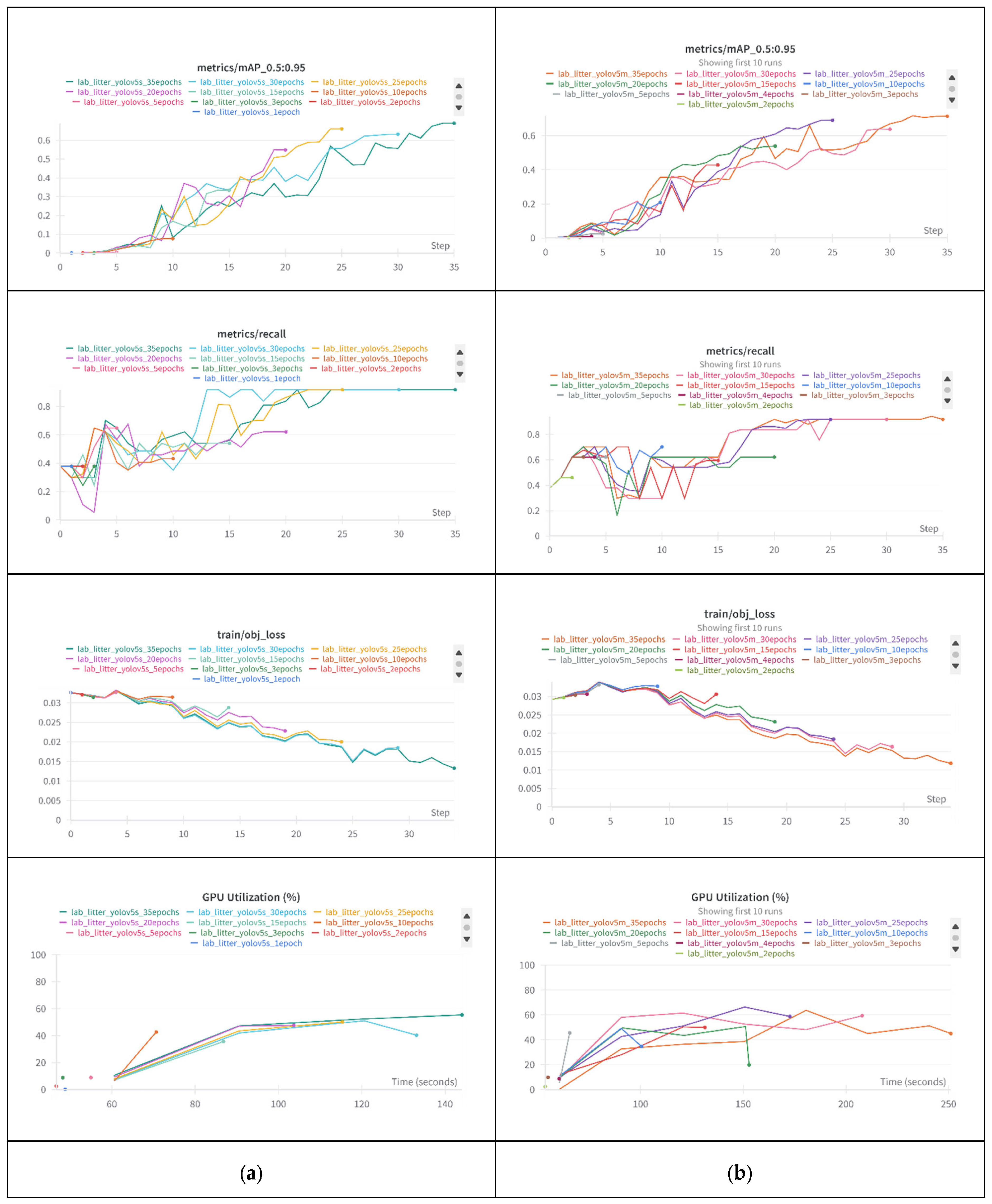

4.1. Campaign 1 and YOLOv5

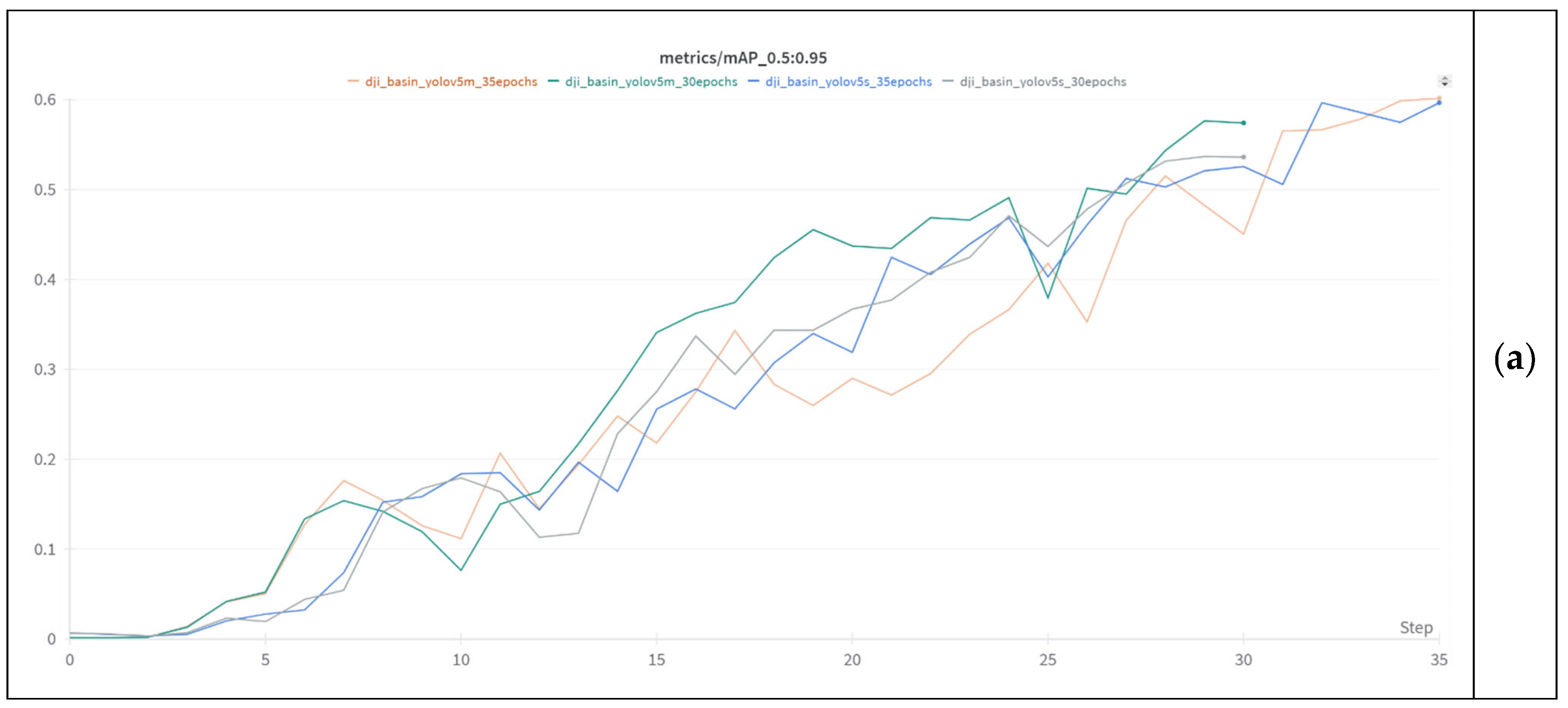

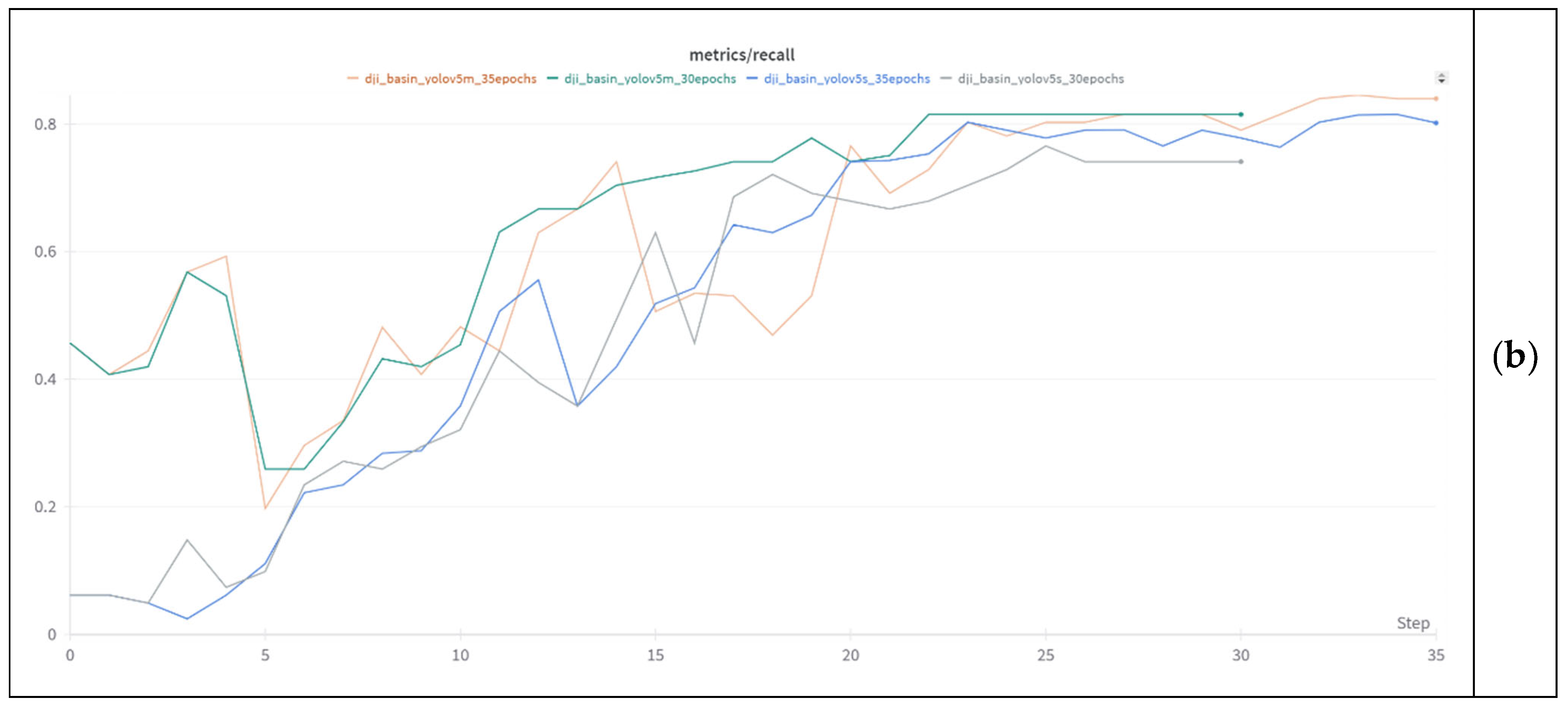

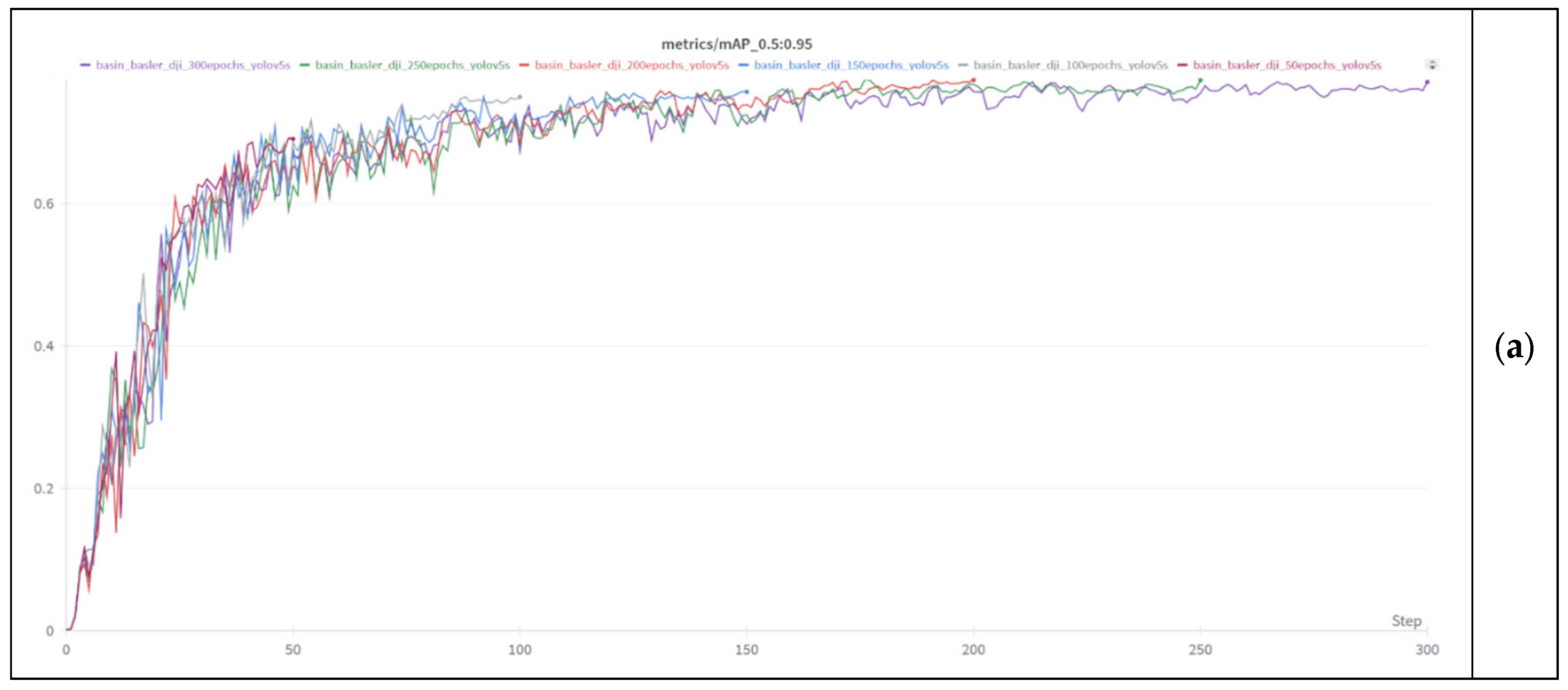

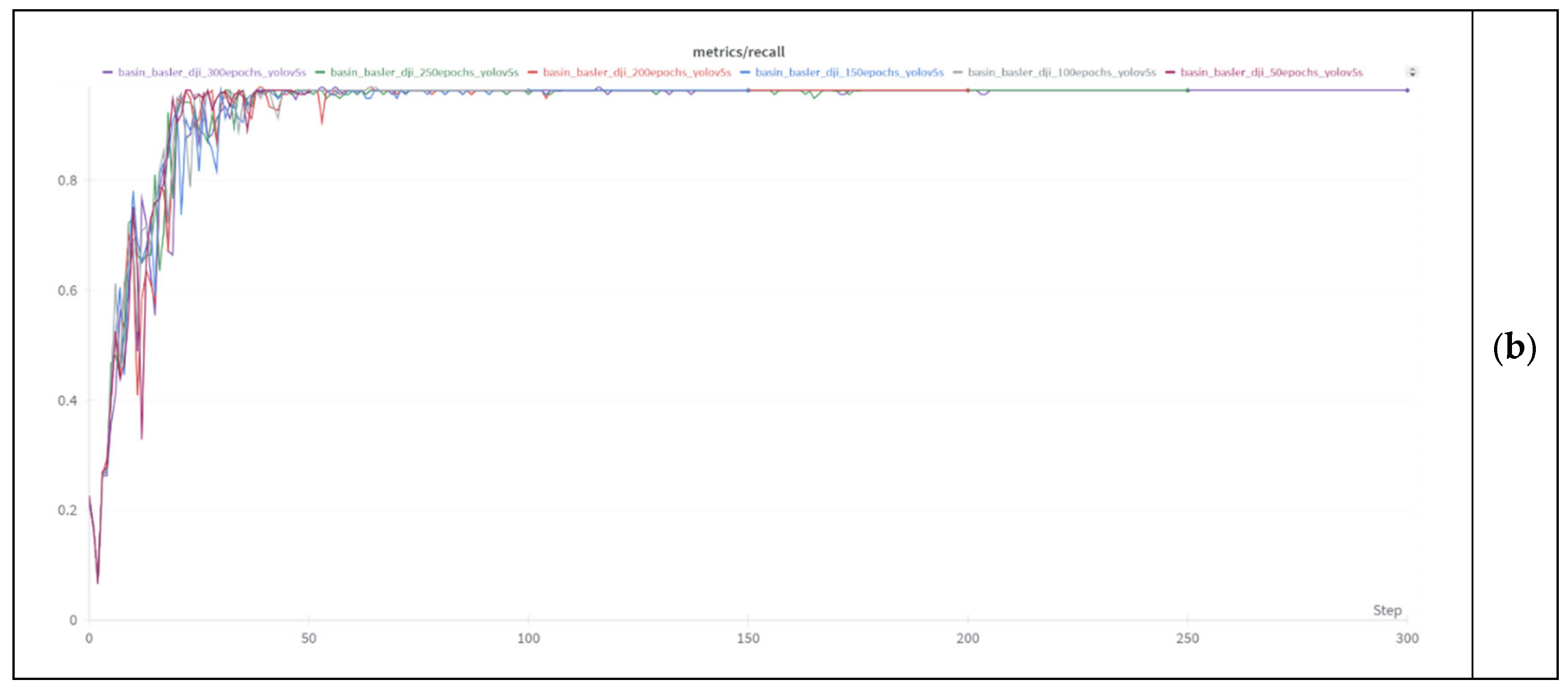

4.2. Campaign 2 and YOLOv5

4.3. Campaign 3 and YOLOv5

4.4. All Campaigns and YOLOv5

4.5. Impact of Synthetic Litter Image Quality on YOLOv5

5. Discussion

5.1. Performance Evaluation: Detection and Classification

5.2. Multispectral Fusion for Enhanced Detection

5.3. Environmental Factors Affecting Performance

5.4. Comparative Analysis Involving Existing Studies

5.5. Impact of Synthetic Litter Image Quality on YOLOv5 Performance

- Campaign 1 (lab-litter dataset): MSE = 19,617.5237, SNR = 1.8389, PSNR = 5.2044 dB;

- Campaign 2 (basin-litter dataset): MSE = 9004.2796, SNR = 4.7582, PSNR = 8.5863 dB;

- Campaign 3 (buoy-litter dataset): MSE = 7418.2185, SNR = 2.4230, PSNR = 9.4278 dB.
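The PSNR figures listed above are consistent with the standard definition PSNR = 10·log10(MAX² / MSE), expressed in decibels, with a peak pixel value of MAX = 255 (8-bit images). The peak value is an assumption, since the list does not state it, and the helper name `psnr_from_mse` is purely illustrative. A minimal Python sketch of that relation, under those assumptions:

```python
import math


def psnr_from_mse(mse: float, max_value: float = 255.0) -> float:
    """Return PSNR in dB for a given mean squared error.

    Assumes pixel intensities lie in [0, max_value]; 255 (8-bit) is an
    assumption, not something stated in the article text.
    """
    if mse <= 0:
        raise ValueError("mse must be positive (PSNR is unbounded at mse = 0)")
    return 10.0 * math.log10(max_value ** 2 / mse)


# MSE and PSNR values as listed for the three campaigns.
reported = {
    "Campaign 1 (lab-litter)": (19617.5237, 5.2044),
    "Campaign 2 (basin-litter)": (9004.2796, 8.5863),
    "Campaign 3 (buoy-litter)": (7418.2185, 9.4278),
}

for name, (mse, listed_psnr) in reported.items():
    computed = psnr_from_mse(mse)
    print(f"{name}: computed PSNR = {computed:.4f} dB, listed = {listed_psnr}")
```

The SNR values are not reproduced here, because SNR depends on the signal power of the reference image as well as on the error, and that cannot be recovered from the MSE alone.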

5.6. Real-World Implications

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- NOAA. Where Does Marine Debris Come from? US Department of Commerce, NOAA, National Ocean Service. 2022. Available online: https://marinedebris.noaa.gov/discover-marine-debris (accessed on 4 April 2024).

- COAPS. Center for Ocean-Atmospheric Prediction Studies. Global Model for Monitoring Marine Litter. 2022. Available online: https://www.coaps.fsu.edu/our-expertise/global-model-for-marine-litter (accessed on 4 April 2024).

- OECD. Organisation for Economic Cooperation and Development. Global Plastics Outlook, The Ocean. 2022. Available online: https://www.oecd.org/environment/plastics/ (accessed on 4 April 2024).

- UNEP. Global Environment Outlook—GEO-6: Healthy Planet, Healthy People. United Nations Environment Programme. 2022. Available online: https://www.unep.org/resources/global-environment-outlook-6 (accessed on 4 April 2024).

- MSFD. Marine Strategy Framework Directive 2008/56/EC. European Parliament and Council. 2008. Available online: https://eur-lex.europa.eu/legal-content/en/ALL/?uri=CELEX%3A32008L0056 (accessed on 4 April 2024).

- EU. The EU Blue Economy Report 2019. Directorate-General for Maritime Affairs and Fisheries, European Commission, Publications Office of the European Union. 2019. Available online: https://op.europa.eu/en/publication-detail/-/publication/676bbd4a-7dd9-11e9-9f05-01aa75ed71a1/language-en/ (accessed on 4 April 2024).

- UN. Sustainable Development Goals Report. United Nations. 2022. Available online: https://unstats.un.org/sdgs/report/2022/ (accessed on 4 April 2024).

- WHO. World Health Organization Report on Water Sanitation and Health. 2022. Available online: https://www.who.int/publications/i/item/9789240076297 (accessed on 4 April 2024).

- OSPAR Commission. CEMP Guidelines for Marine Monitoring and Assessment of Beach Litter; OSPAR Agreement 2020-02; OSPAR Commission: London, UK, 2020.

- Prakash, N.; Stahl, F.; Mueller, C.L.; Ferdinand, O.; Zielinski, O. Intelligent Marine Pollution Analysis on Spectral Data. In Proceedings of the OCEANS 2021: San Diego—Porto, San Diego, CA, USA, 20–23 September 2021; pp. 1–6.

- Armitage, S.; Awty-Carroll, K.; Clewley, D.; Martinez-Vicente, V. Detection and Classification of Floating Plastic Litter Using a Vessel-Mounted Video Camera and Deep Learning. Remote Sens. 2022, 14, 3425.

- Zielinski, O.; Busch, J.A.; Cembella, A.D.; Daly, K.L.; Engelbrektsson, J.; Hannides, A.K.; Schmidt, H. Detecting Marine Hazardous Substances and Organisms: Sensors for Pollutants, Toxins and Pathogens. Ocean Sci. 2009, 5, 329–349.

- Bagheri, M.; Farshforoush, N.; Bagheri, K.; Shemirani, A.I. Applications of Artificial Intelligence Technologies in Water Environments: From Basic Techniques to Novel Tiny Machine Learning Systems. Process Saf. Environ. Prot. 2023, 180, 10–22.

- Herruzo-Ruiz, A.M.; Peralbo-Nolina, A.; López, C.M.; Michán, C.; Alhama, J.; Chicano-Gálvez, E. Mass Spectrometry Imaging in Environmental Monitoring: From a Scarce Existing Past to a Promising Future. Trends Environ. Anal. Chem. 2024, 42, e00228.

- Hyspex. Hyperspectral Imaging for Plastic Recycling: Classifying Mixed Plastic Waste. HySpex by Neo. 2024. Available online: https://www.hyspex.com/media/qpfh4inu/hyspex_plastics.pdf (accessed on 4 April 2024).

- Ben-Shoushan, R.; Brook, A. Fused Thermal and RGB Imagery for Robust Detection and Classification of Dynamic Objects in Mixed Datasets via Pre-Trained High-Level CNN. Remote Sens. 2023, 15, 723.

- Bustos, N.; Mashhadi, M.; Lai-Yuen, S.K.; Sarkar, S.; Das, T.K. A Systematic Literature Review on Object Detection using Near Infrared and Thermal Images. Neurocomputing 2023, 560, 126804.

- Zhao, T.; Yuan, M.; Jiang, F.; Wang, N.; Wei, X. Removal and Selection: Improving RGB-Infrared Object Detection via Coarse-to-Fine Fusion. arXiv 2024.

- Ultralytics. YOLOv5: A State-of-the-Art Real-Time Object Detection System. 2021. Available online: https://docs.ultralytics.com/yolov5/ (accessed on 4 April 2024).

- Ma, J.; Ma, R.; Pan, Q.; Liang, X.; Wang, J.; Ni, X. A Global Review of Progress in Remote Sensing and Monitoring of Marine Pollution. Water 2023, 15, 3491.

- Goddijn-Murphy, L.; Martínez-Vicente, V.; Dierssen, H.M.; Raimondi, V.; Gandini, E.; Foster, R.; Chirayath, V. Emerging Technologies for Remote Sensing of Floating and Submerged Plastic Litter. Remote Sens. 2024, 16, 1770.

- Taneepanichskul, N.; Hailes, H.C.; Miodownik, M. Using Hyperspectral Imaging to Identify and Classify Large Microplastic Contamination in Industrial Composting Processes. Front. Sustain. 2024, 5, 1332163.

- Vansteenwegen, D.; Ruddick, K.; Cattrijsse, A.; Vanhellemont, Q.; Beck, M. The Pan-and-Tilt Hyperspectral Radiometer System (PANTHYR) for Autonomous Satellite Validation Measurements—Prototype Design and Testing. Remote Sens. 2019, 11, 1360.

- Ade, C.; Hestir, E.L.; Avouris, D.M.; Burmistrova, J.; Nickles, C.; Lopez, A.M.; Barreto, B.L.; Vellanoweth, J.; Smalldon, R.; Lee, C.M. SHIFT: Ramses Trios Radiometer Above Water Measurements, Santa Barbara Sea, CA; ORNL DAAC: Oak Ridge, TN, USA, 2023.

- Vabson, V.; Ansko, I.; Duong, K.; Vendt, R.; Kuusk, J.; Ruddick, K.; Bialek, A.; Tilstone, G.H.; Gossn, J.I.; Kwiatkowska, E. Complete characterisation of ocean color radiometers. Front. Remote Sens. 2024, 5, 1320454.

- SURF. Sea Surface Facility. 2023. Available online: https://uol.de/icbm/prozesse-und-sensorik-mariner-grenzflaechen/equipment-and-infrastructure/surf (accessed on 4 April 2024).

- Tzutalin. LabelImg. 2015. Available online: https://github.com/tzutalin/labelImg (accessed on 4 April 2024).

- WandB. Weights and Biases. 2025. Available online: https://wandb.ai/site (accessed on 4 April 2024).

- Moorton, Z.; Kurt, Z.; Woo, W.L. Is the Use of Deep Learning an Appropriate Means to Locate Debris in the Ocean Without Harming Aquatic Wildlife? Mar. Pollut. Bull. 2022, 181, 113853.

| Study | Method | F1-Score | mAP | Key Limitations |
|---|---|---|---|---|
| Armitage et al. (2022) [11] | Vessel-mounted RGB Camera + Deep Learning | 0.75 | 0.78 | Limited detection range, affected by lighting |
| Ben-Shoushan and Brook (2023) [16] | Thermal + RGB CNN Model | 0.81 | 0.83 | Limited dataset, no hyperspectral integration |
| This Study | Synthetic Litter Data (RGB) + YOLOv5 | 1.0 | 0.92 | Environmental factors still impact accuracy |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Prakash, N.; Zielinski, O. AI-Enhanced Real-Time Monitoring of Marine Pollution: Part 2—A Spectral Analysis Approach. J. Mar. Sci. Eng. 2025, 13, 636. https://doi.org/10.3390/jmse13040636
