Intelligent Detection of Oceanic Front in Offshore China Using EEFD-Net with Remote Sensing Data

Abstract

1. Introduction

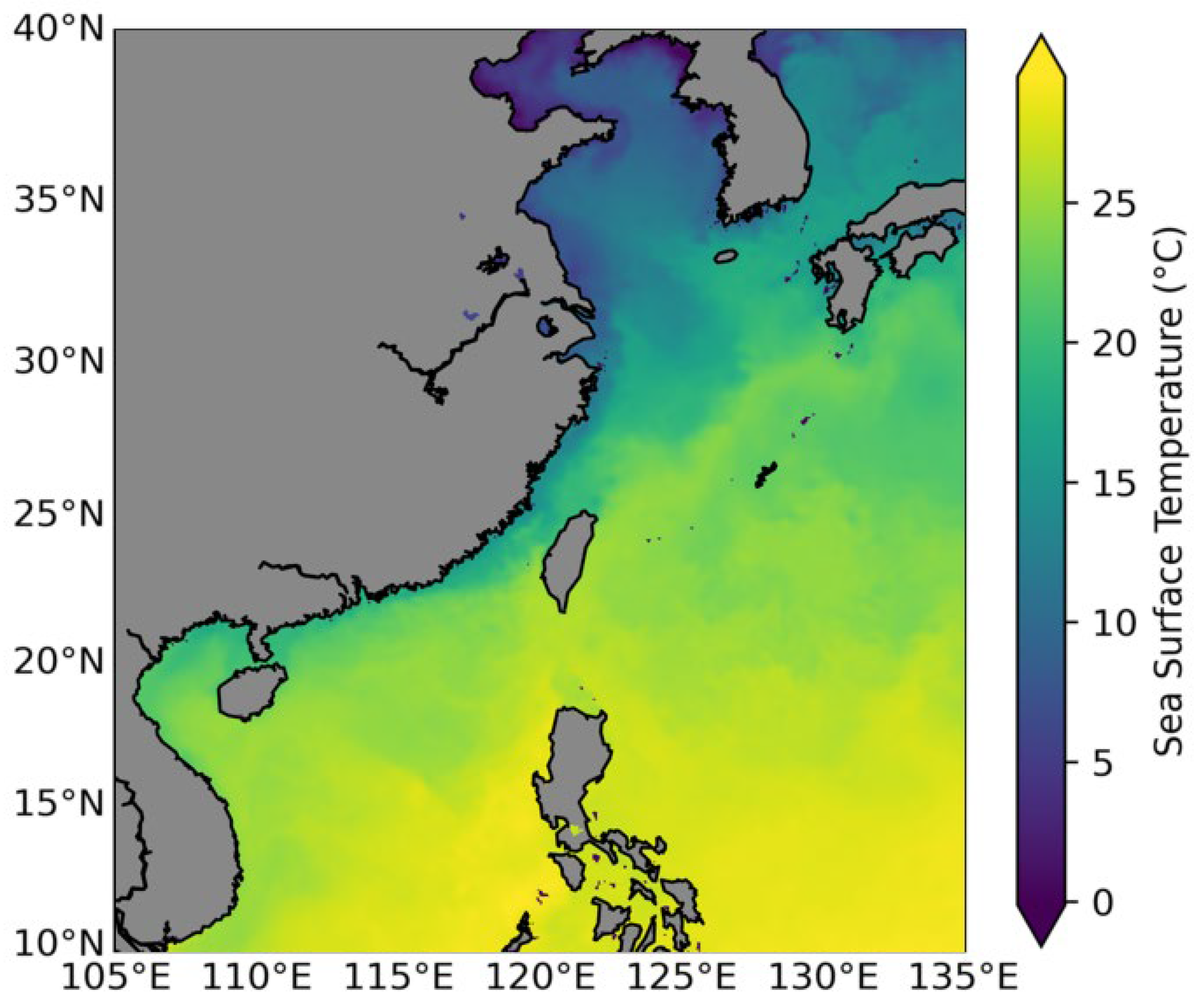

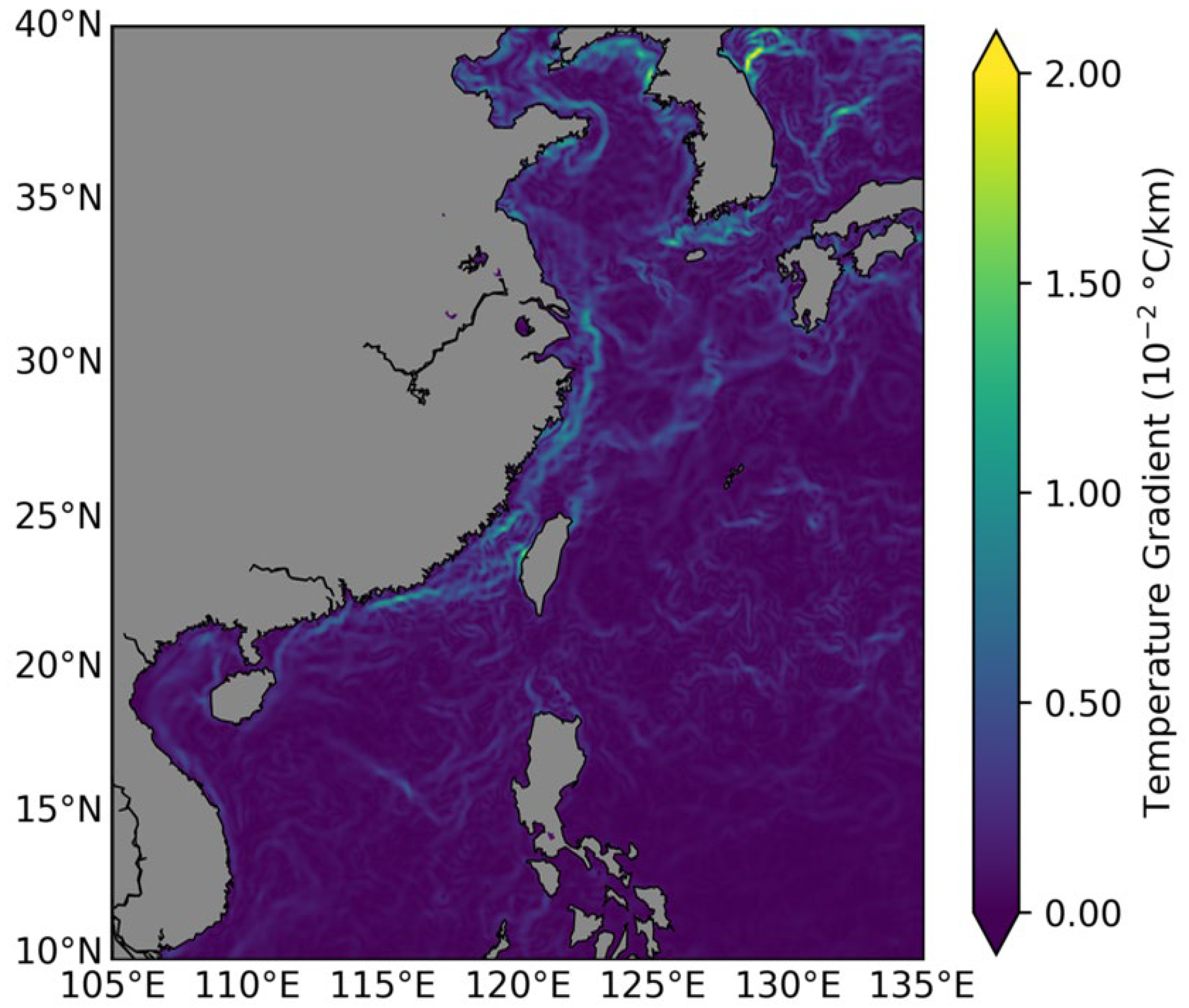

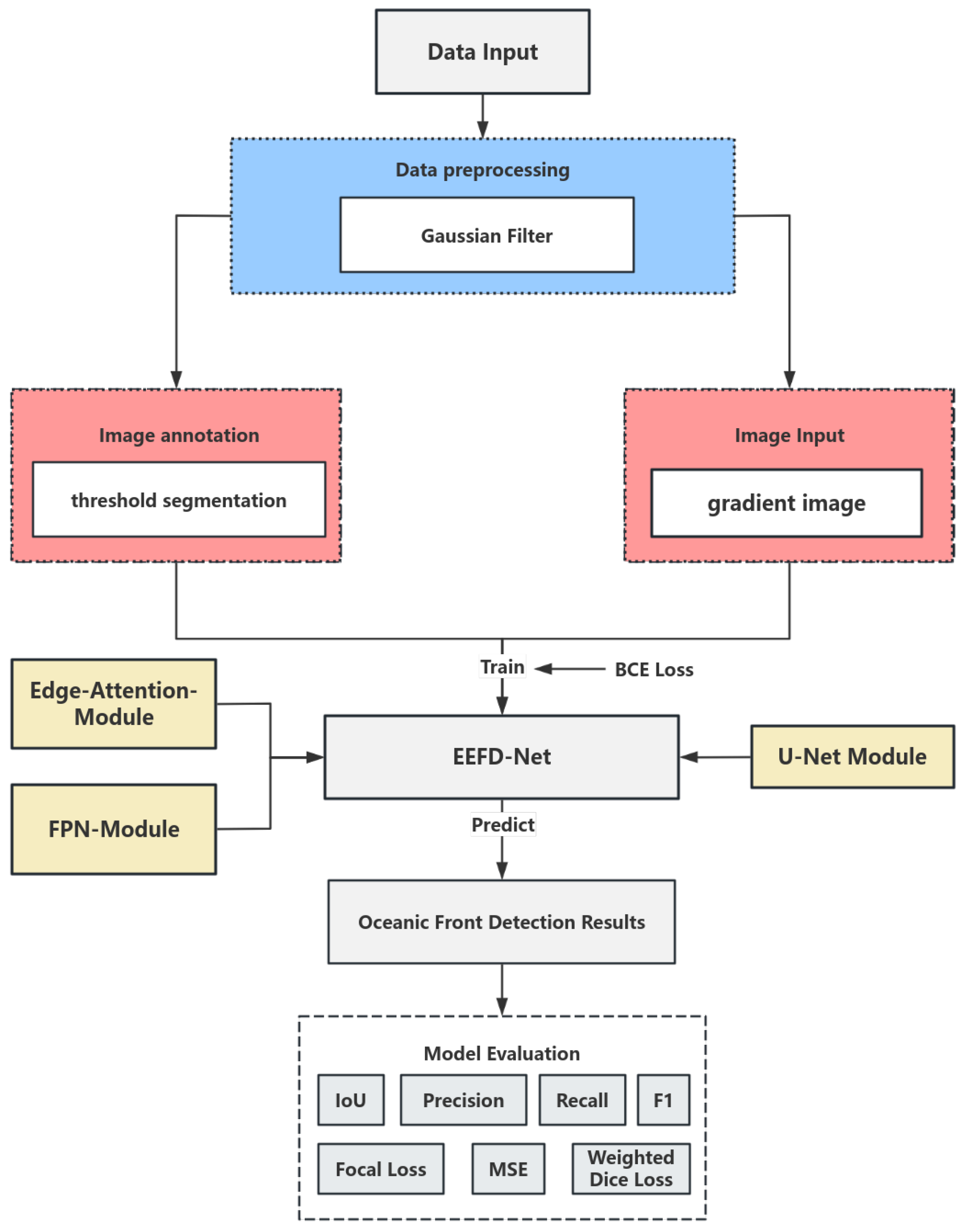

2. Datasets and Data Processing

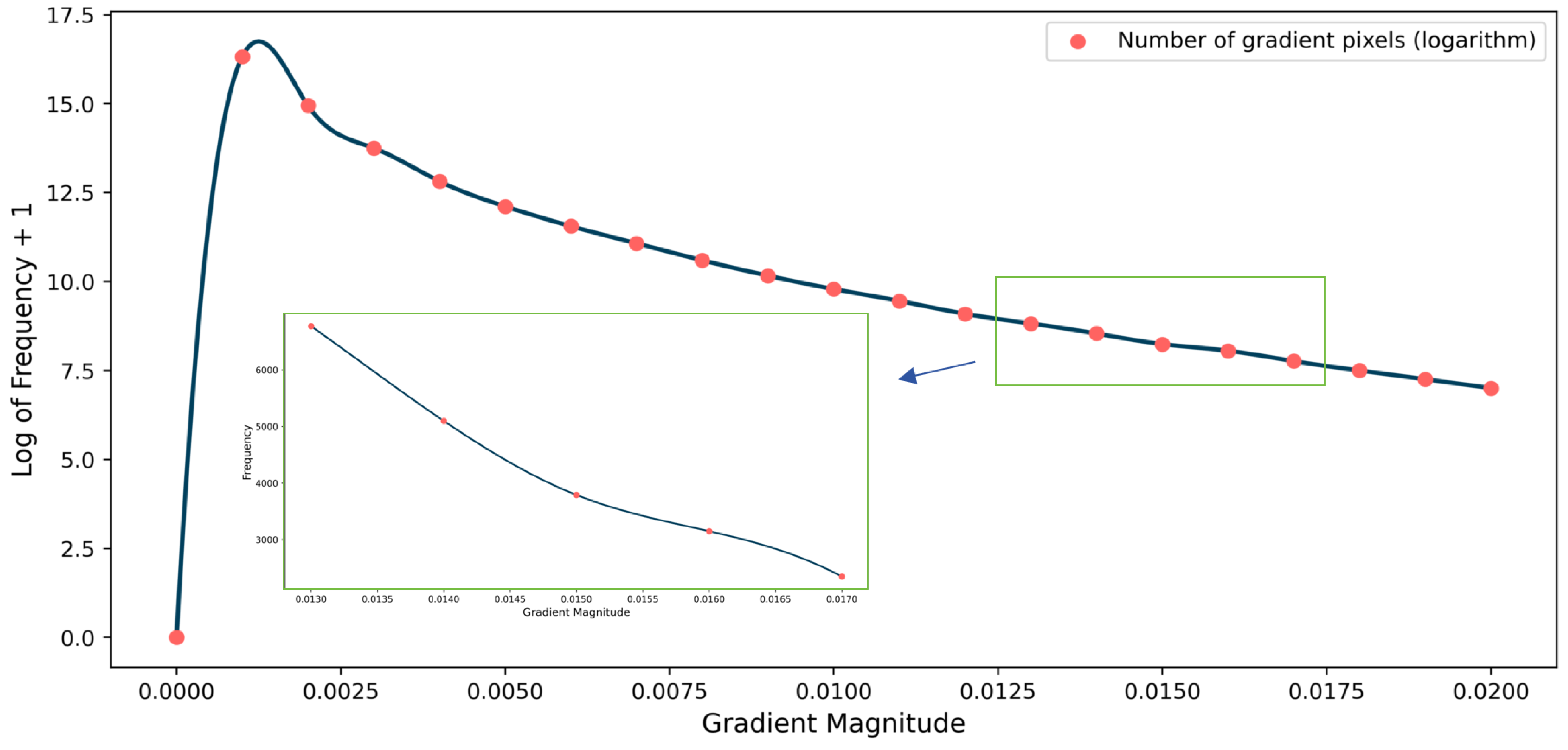

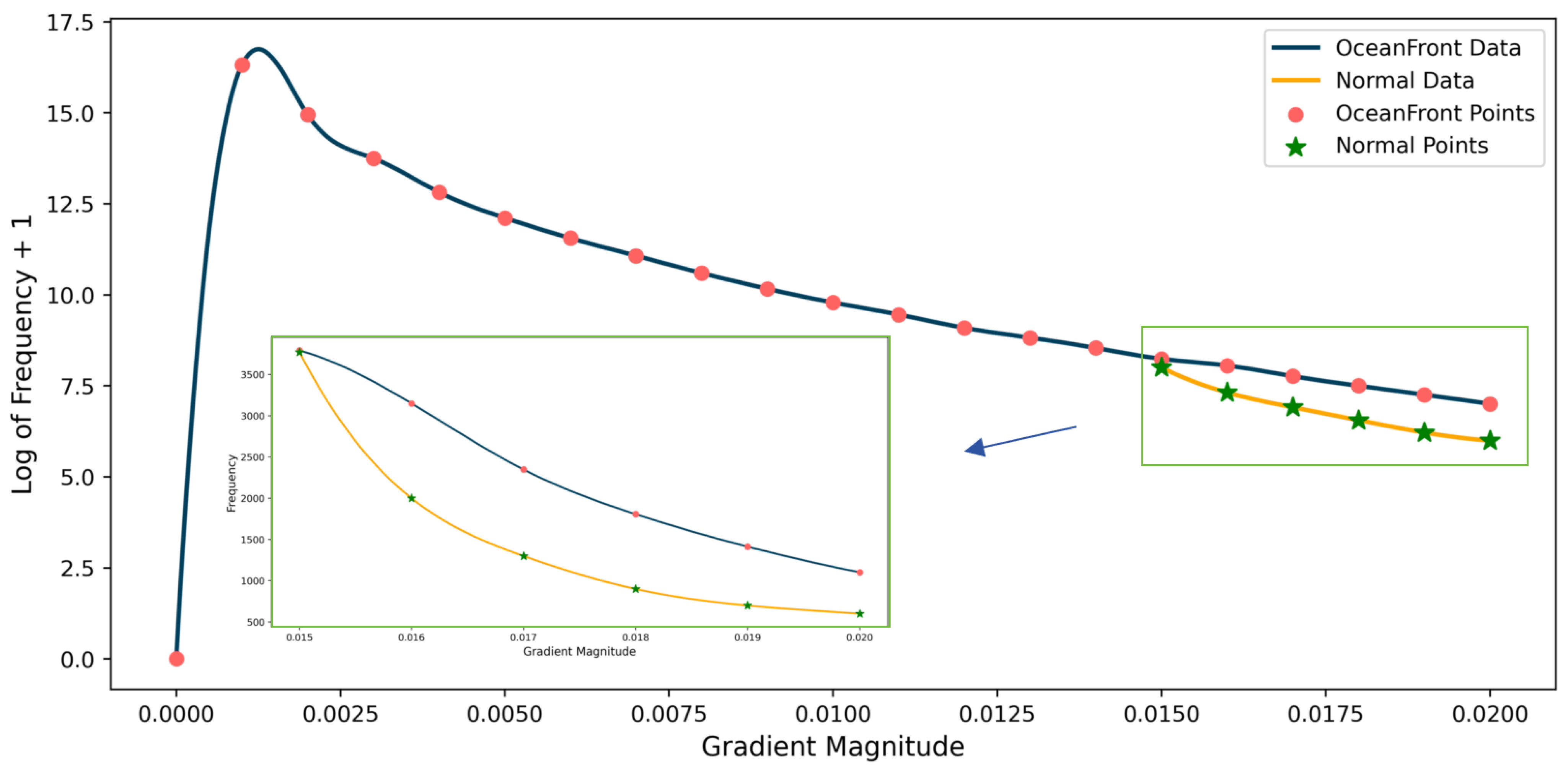

2.1. Dataset Introduction

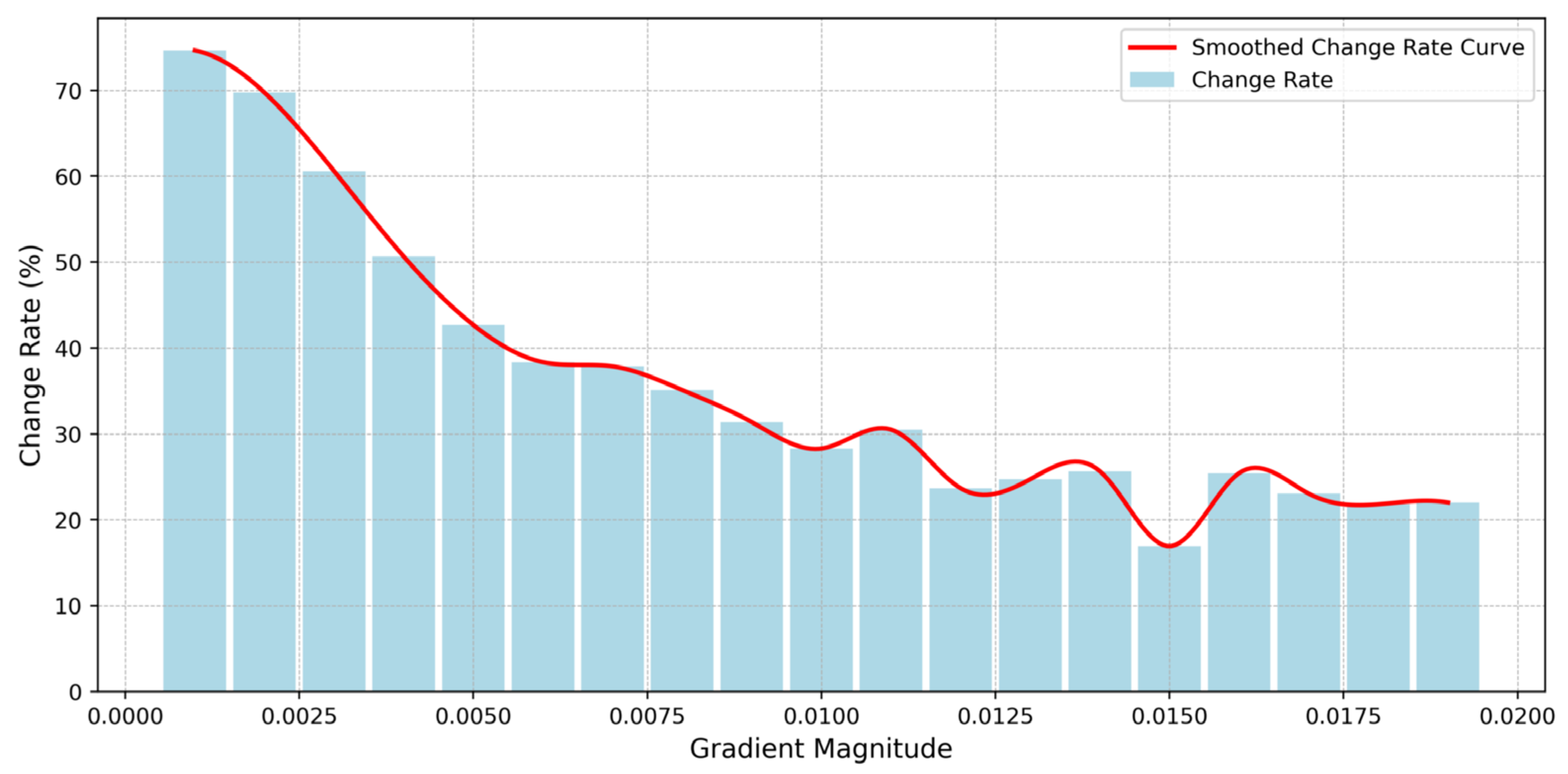

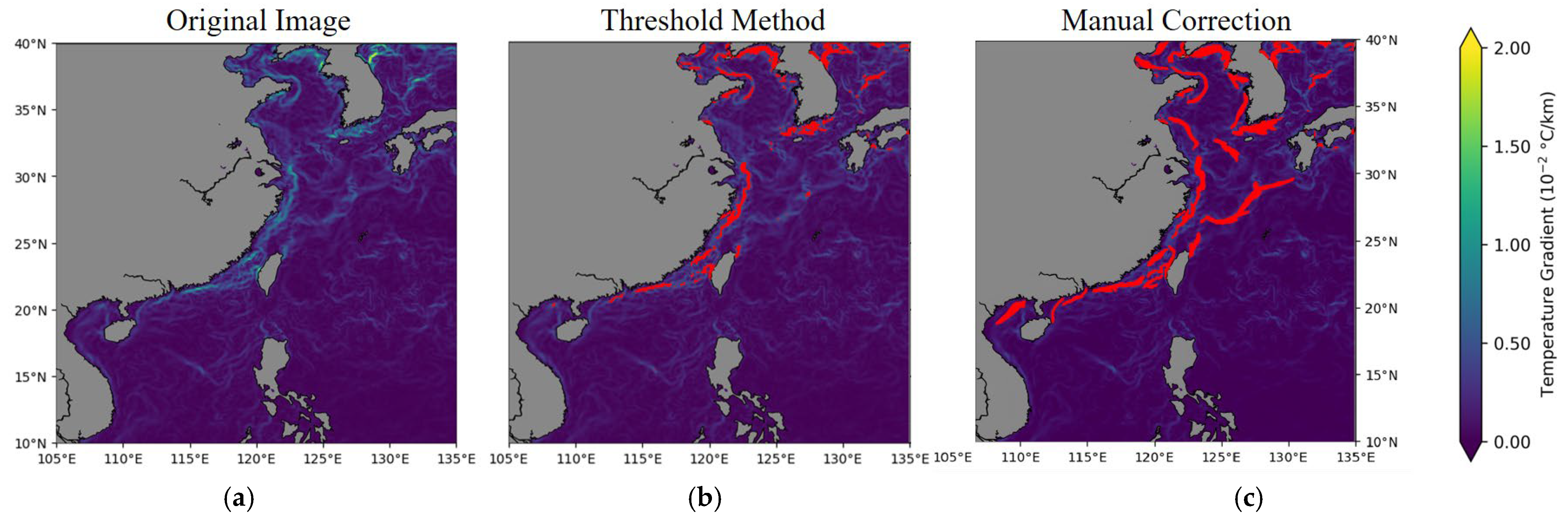

2.2. Dataset Annotation

3. Model Description

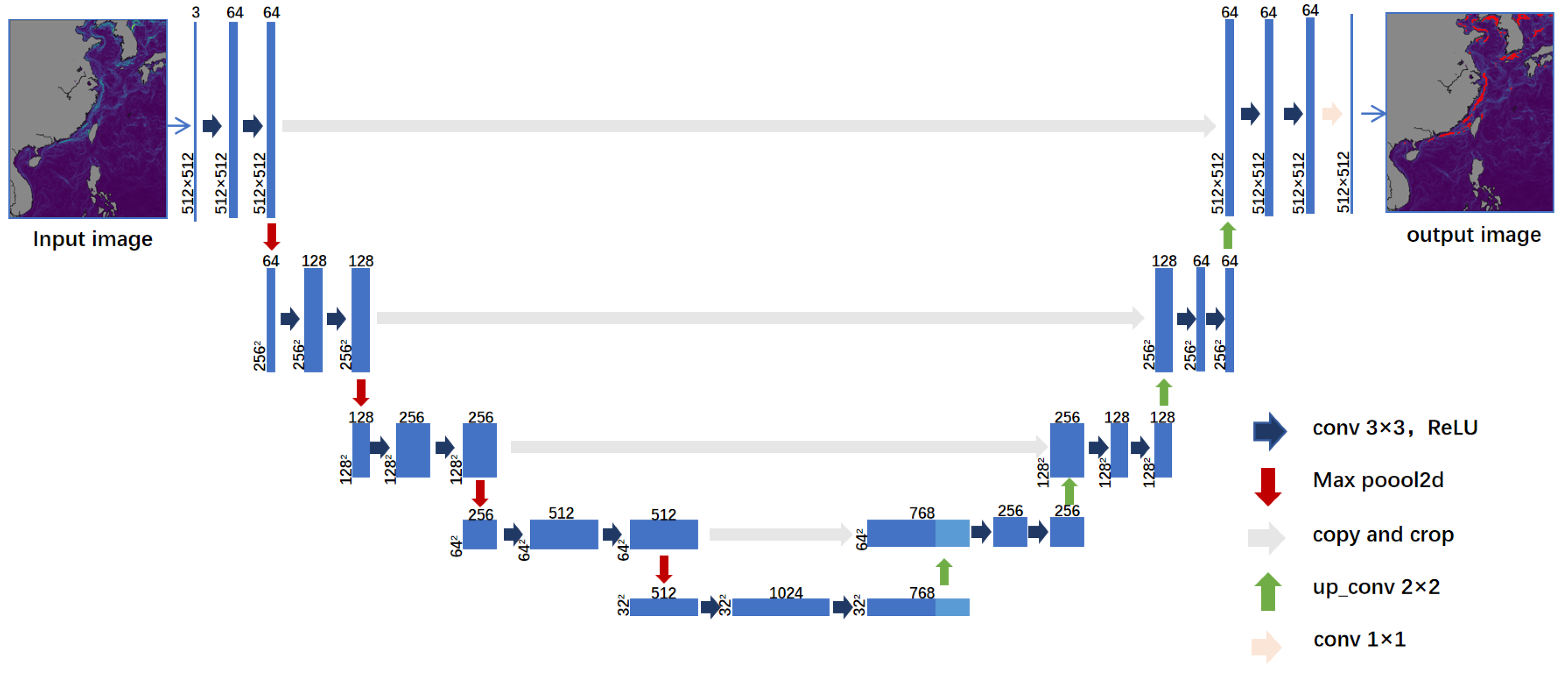

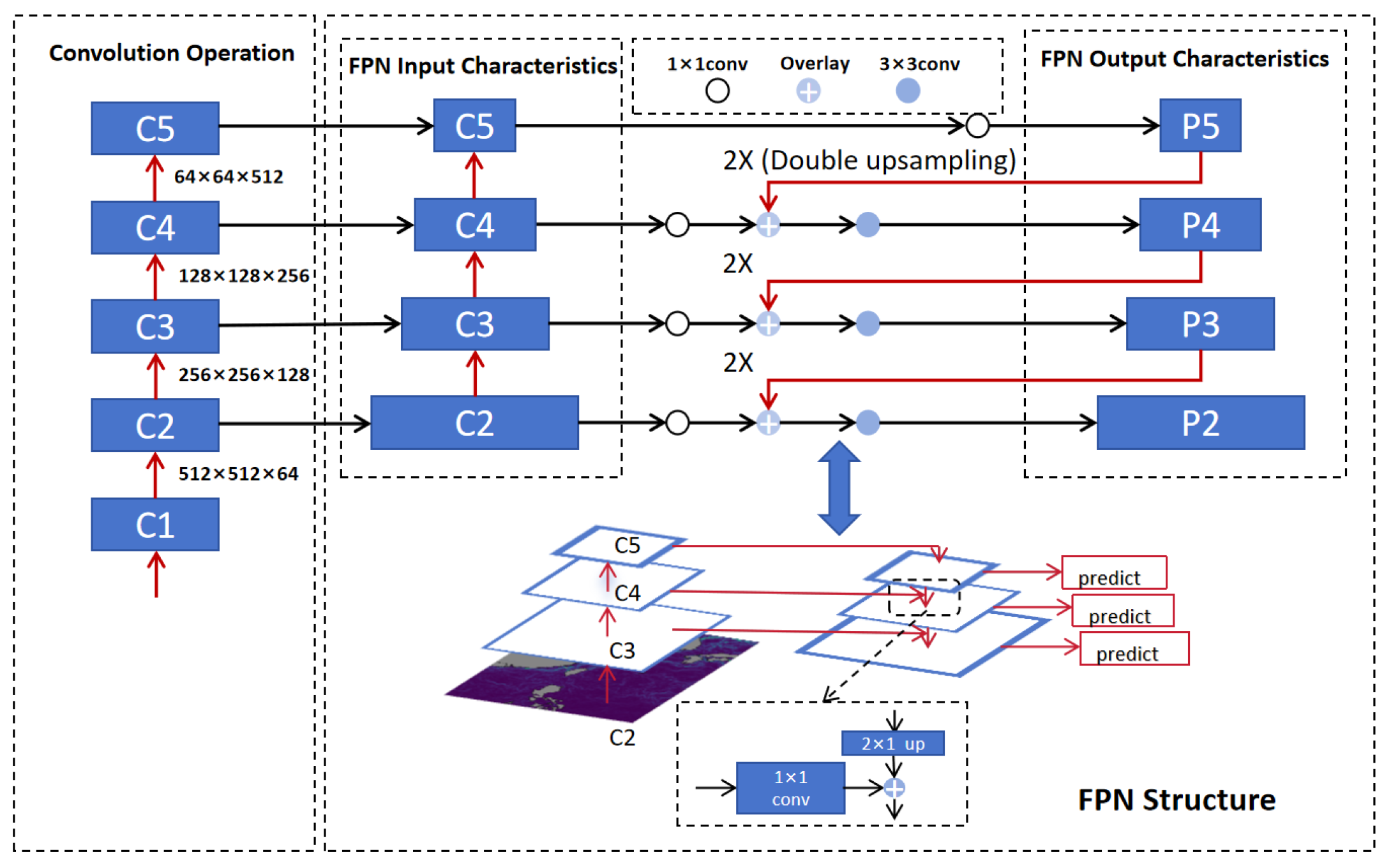

3.1. Existing Algorithms

3.1.1. Basic Principles of U-Net

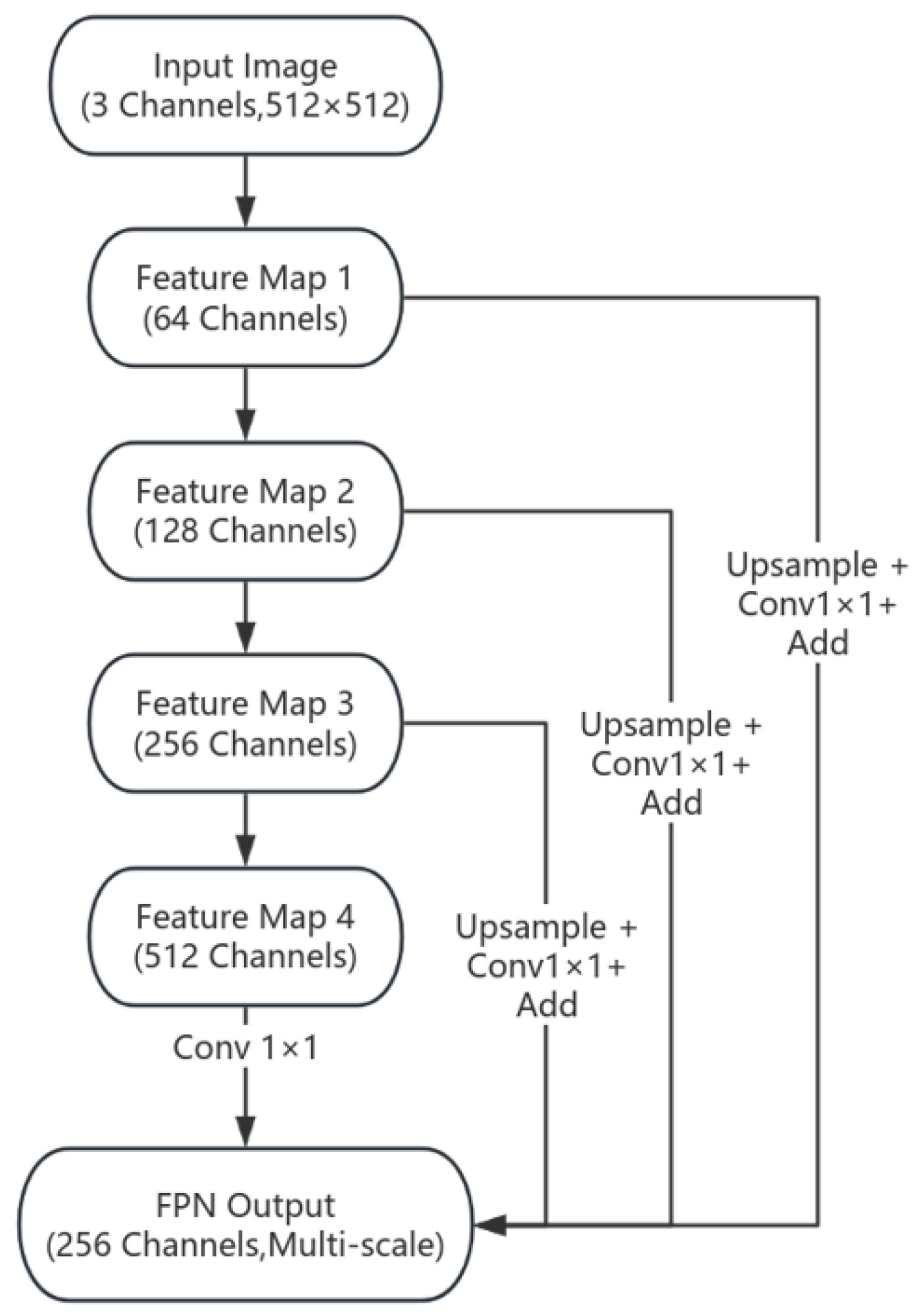

3.1.2. Basic Principles of FPN

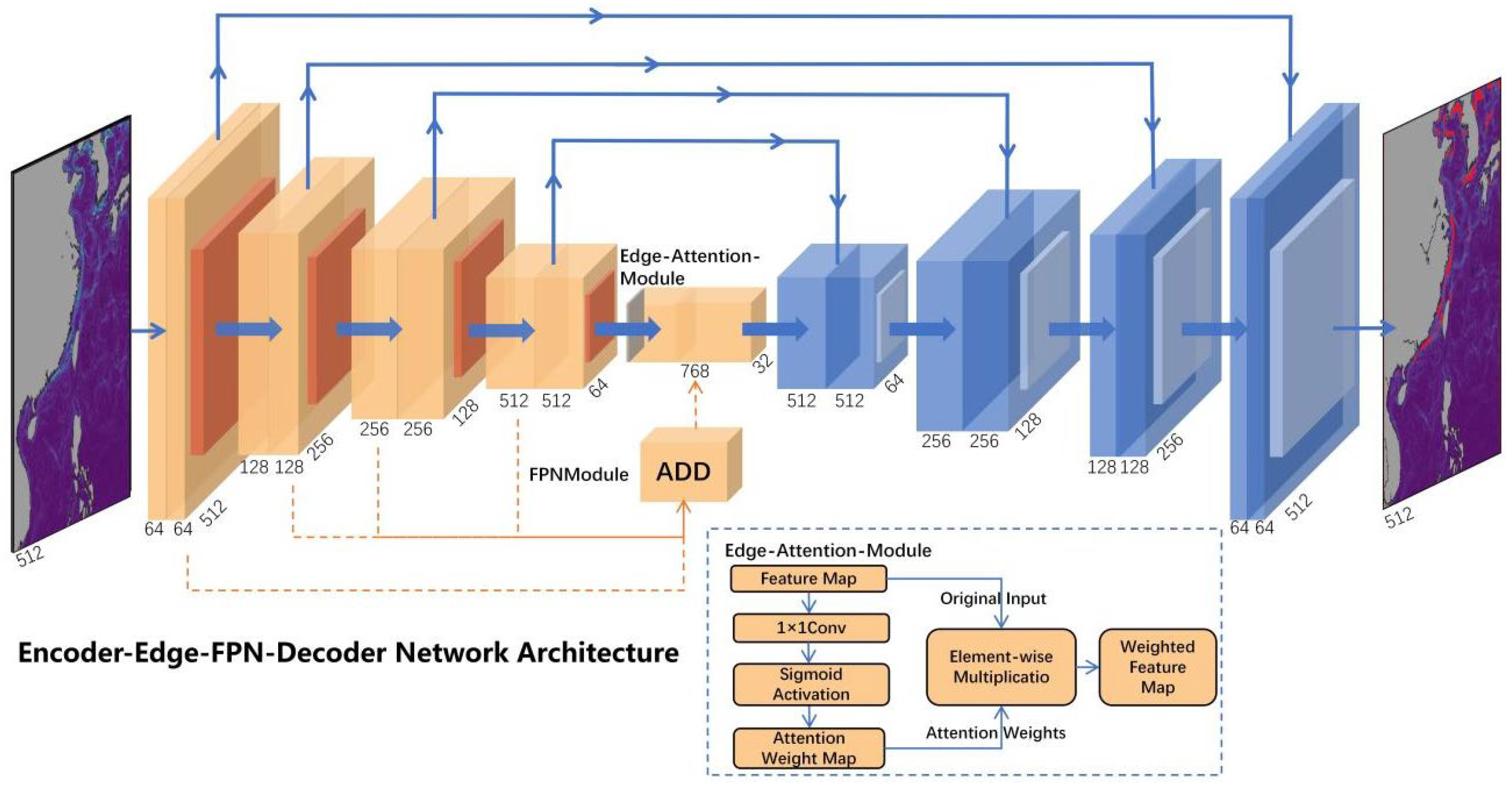

3.2. Improved Algorithm (EEFD-Net)

4. Experimental Environment and Results

4.1. Experimental Preparation

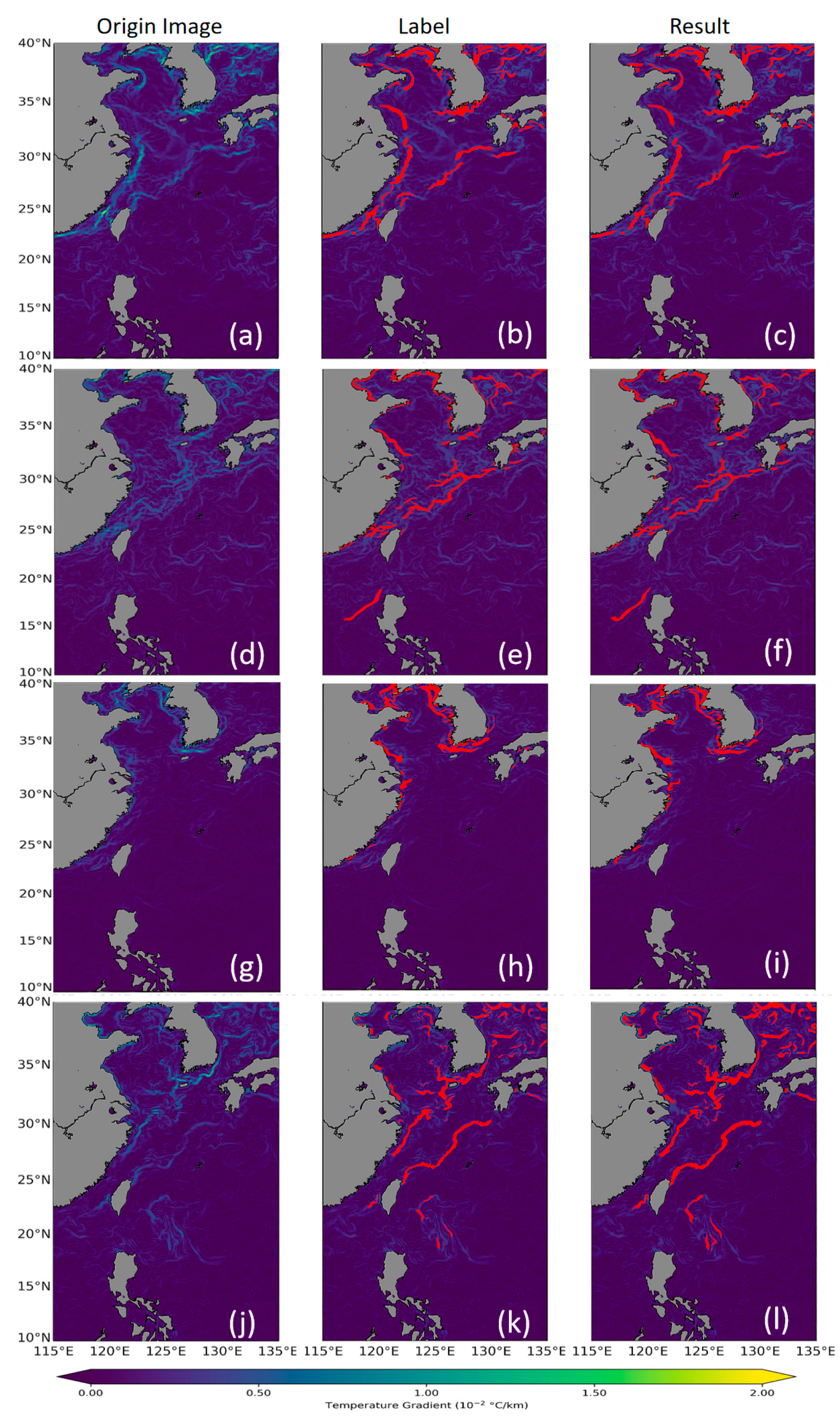

4.2. Results and Analysis

4.3. Model Validation

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Actual Condition | Predicted Positive | Predicted Negative |
|---|---|---|
| Positive | TP (True Positive) | FN (False Negative) |
| Negative | FP (False Positive) | TN (True Negative) |
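
The four confusion-matrix counts are enough to derive the pixel-level metrics reported in the tables that follow. The sketch below is a minimal illustration rather than the authors' evaluation code: it assumes binary labels (1 for a front pixel, 0 for background), the function names `confusion_counts` and `segmentation_metrics` are hypothetical, and it computes the plain Dice coefficient because the weighting used for "Weighted Dice" is not specified in this text.

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[int, int, int, int]:
    """Count (TP, FP, FN, TN) for binary labels: 1 = front pixel, 0 = background."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == 1 and t == 1:
            tp += 1  # predicted front, actually front
        elif p == 1 and t == 0:
            fp += 1  # predicted front, actually background
        elif p == 0 and t == 1:
            fn += 1  # predicted background, actually front
        else:
            tn += 1  # predicted background, actually background
    return tp, fp, fn, tn


def segmentation_metrics(tp: int, fp: int, fn: int) -> dict[str, float]:
    """Standard definitions; each metric is 0.0 when its denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    dice = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0  # unweighted Dice
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou, "dice": dice}


# Toy flattened masks (assumed data, for illustration only).
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
for name, value in segmentation_metrics(tp, fp, fn).items():
    print(f"{name}: {value:.3f}")
```

TN does not enter any of these five metrics, which is one reason they are preferred over plain accuracy when front pixels are a small fraction of the image.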

| Module | IoU (%) | Weighted Dice (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|---|
| FPN | 95.79 | 93.21 | 95.72 | 96.15 | 95.93 |
| FPN + Edge-Attention | 97.80 | 94.21 | 95.56 | 95.32 | 95.44 |
| U-Net | 96.04 | 95.36 | 95.68 | 96.05 | 95.86 |
| U-Net + Edge-Attention | 97.77 | 95.18 | 94.21 | 96.00 | 95.10 |
| U-Net + FPN | 95.23 | 93.54 | 95.86 | 95.55 | 95.70 |
| EEFD-Net | 98.81 | 95.56 | 96.32 | 96.13 | 96.22 |
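
The F1 column can be cross-checked against the precision and recall columns, since F1 is their harmonic mean: F1 = 2PR / (P + R). The short check below copies the precision, recall, and F1 values from the table above; the helper name `f1_from_percent` is hypothetical, and the tolerance only allows for two-decimal rounding in the reported values.

```python
def f1_from_percent(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    return 2 * precision * recall / (precision + recall)


# (module, precision %, recall %, reported F1 %) copied from the table above.
ROWS = [
    ("FPN", 95.72, 96.15, 95.93),
    ("FPN + Edge-Attention", 95.56, 95.32, 95.44),
    ("U-Net", 95.68, 96.05, 95.86),
    ("U-Net + Edge-Attention", 94.21, 96.00, 95.10),
    ("U-Net + FPN", 95.86, 95.55, 95.70),
    ("EEFD-Net", 96.32, 96.13, 96.22),
]

for name, p, r, reported in ROWS:
    computed = f1_from_percent(p, r)
    # Values agree when they differ by no more than two-decimal rounding error.
    status = "ok" if abs(computed - reported) < 0.006 else "mismatch"
    print(f"{name:<24} computed={computed:.2f} reported={reported:.2f} {status}")
```

All six rows agree with the reported F1 scores to two decimal places.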

| Module | MSE Loss (×10⁻²) | Focal Loss (×10⁻²) | Dice Loss (×10⁻²) |
|---|---|---|---|
| FPN | 0.729 | 9.126 | 6.790 |
| FPN + Edge-Attention | 0.555 | 8.549 | 5.790 |
| U-Net | 0.151 | 2.974 | 4.640 |
| U-Net + Edge-Attention | 0.760 | 2.189 | 4.827 |
| U-Net + FPN | 0.453 | 3.329 | 6.460 |
| EEFD-Net | 0.034 | 1.650 | 4.440 |
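
For readers unfamiliar with the three loss functions compared above, the sketch below gives their common textbook forms for a binary segmentation map. It is an assumption-laden illustration, not the configuration used in the paper: the focal-loss parameters `gamma=2.0` and `alpha=0.25`, the Dice smoothing term `smooth=1.0`, and all function names are hypothetical choices, and inputs are flattened lists of per-pixel front probabilities and 0/1 labels.

```python
import math
from typing import Sequence

EPS = 1e-7  # keeps log() away from zero


def mse_loss(probs: Sequence[float], targets: Sequence[int]) -> float:
    """Mean squared error between predicted probabilities and labels."""
    return sum((p - y) ** 2 for p, y in zip(probs, targets)) / len(probs)


def focal_loss(probs: Sequence[float], targets: Sequence[int],
               gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Binary focal loss: down-weights pixels that are already well classified."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, EPS), 1.0 - EPS)
        p_t = p if y == 1 else 1.0 - p           # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


def dice_loss(probs: Sequence[float], targets: Sequence[int], smooth: float = 1.0) -> float:
    """Soft Dice loss: 1 minus the (smoothed) Dice overlap."""
    intersection = sum(p * y for p, y in zip(probs, targets))
    return 1.0 - (2.0 * intersection + smooth) / (sum(probs) + sum(targets) + smooth)


# Toy predictions (assumed data, for illustration only).
probs = [0.9, 0.1, 0.8, 0.2]
targets = [1, 0, 1, 0]
print(f"MSE:   {mse_loss(probs, targets):.4f}")
print(f"Focal: {focal_loss(probs, targets):.4f}")
print(f"Dice:  {dice_loss(probs, targets):.4f}")
```

MSE and focal loss are averaged per pixel, whereas Dice loss is computed over the whole mask, which makes it less sensitive to the imbalance between front and background pixels.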

| Module | mIoU (%) | mWDSC (%) |
|---|---|---|
| Threshold Method | 76.77 | 69.29 |
| Deeplab v3+ | 89.93 | 87.25 |
| GoogleNet Inception | 95.90 | 93.96 |
| SQNet | 91.63 | 92.84 |
| LSENet | 85.58 | 93.56 |
| BPFANet | 93.99 | 95.40 |
| EEFD-Net | 98.81 | 95.56 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kong, R.; Liu, Z.; Wu, Y.; Fang, Y.; Kong, Y. Intelligent Detection of Oceanic Front in Offshore China Using EEFD-Net with Remote Sensing Data. J. Mar. Sci. Eng. 2025, 13, 618. https://doi.org/10.3390/jmse13030618
