Precise PIV Measurement in Low SNR Environments Using a Multi-Task Convolutional Neural Network

Abstract

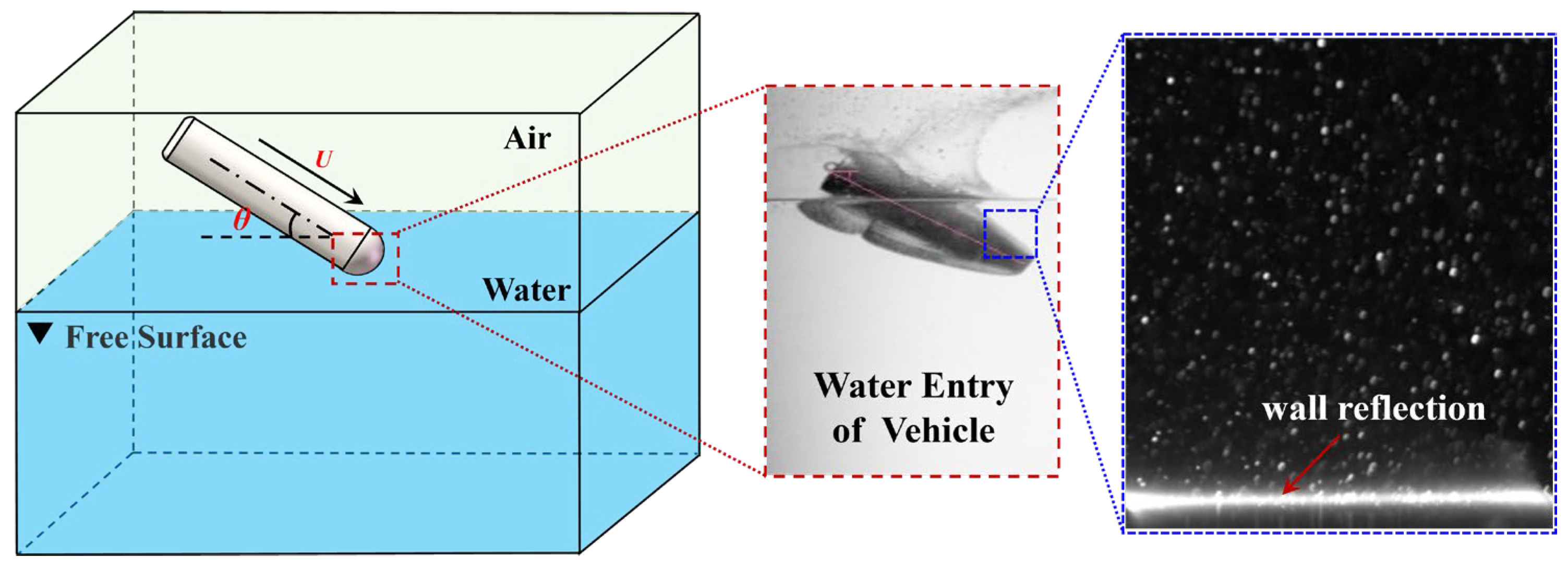

1. Introduction

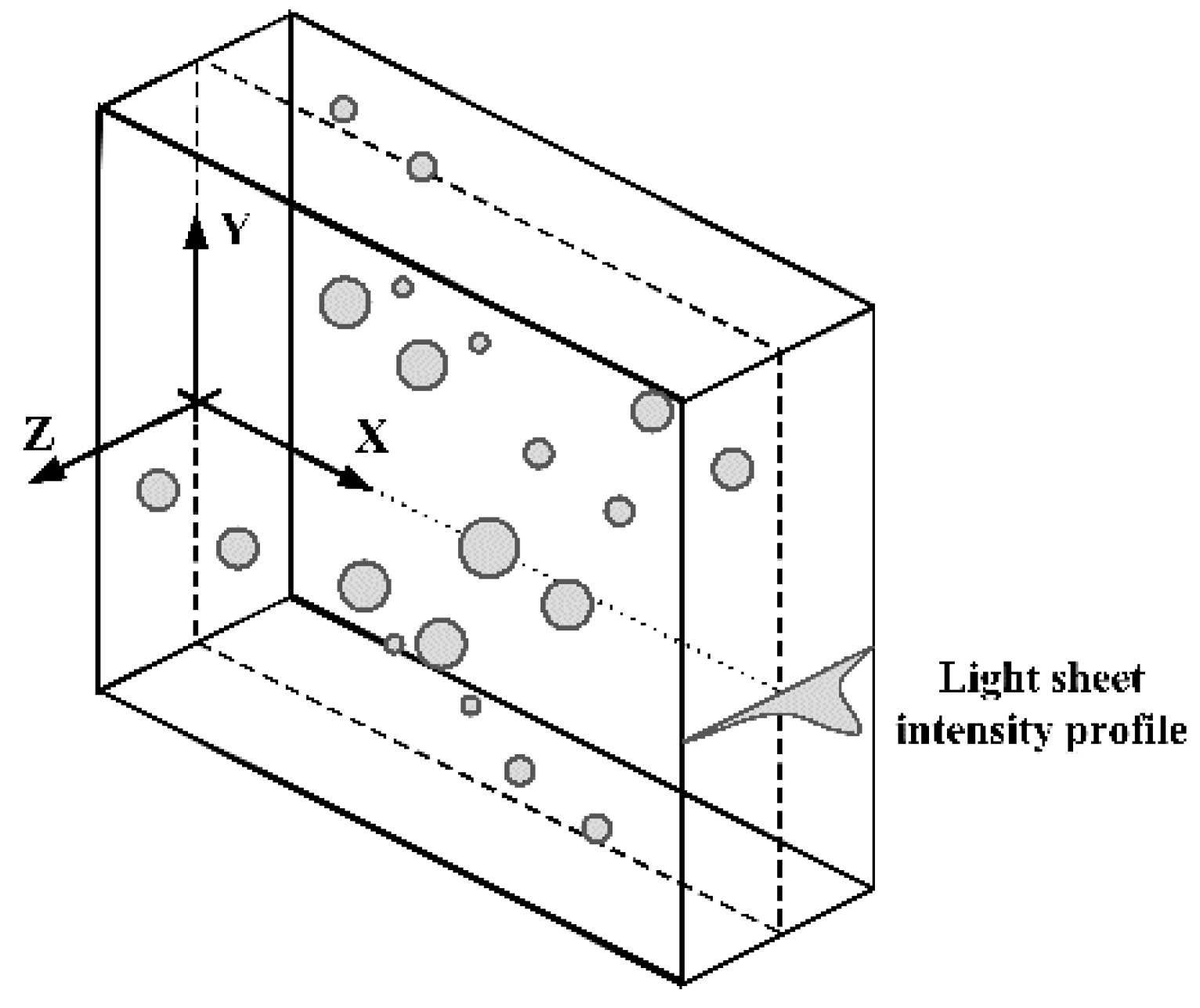

2. Synthetic PIV Datasets

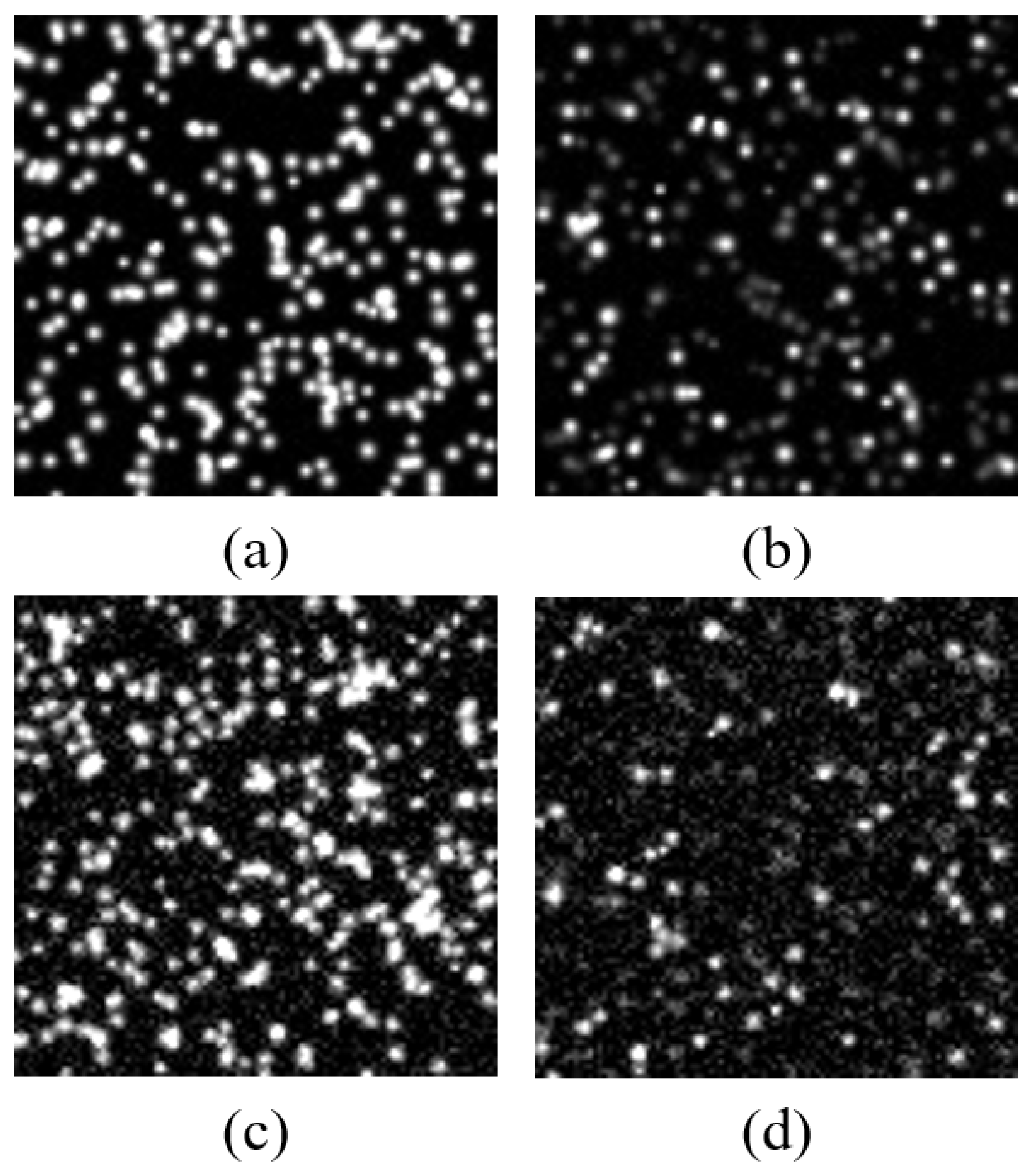

2.1. Synthetic Particle Images

2.2. Training Dataset

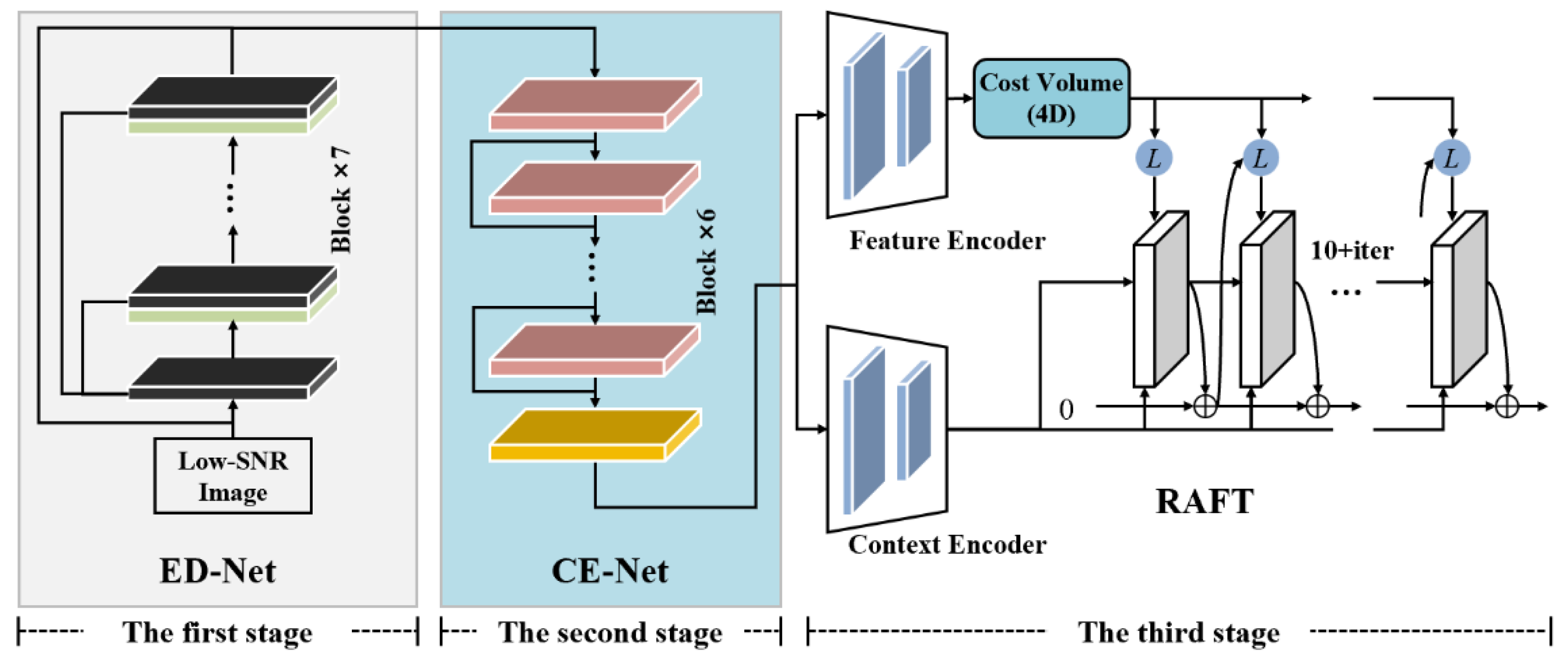

3. PIV-RAFT-EN Algorithm

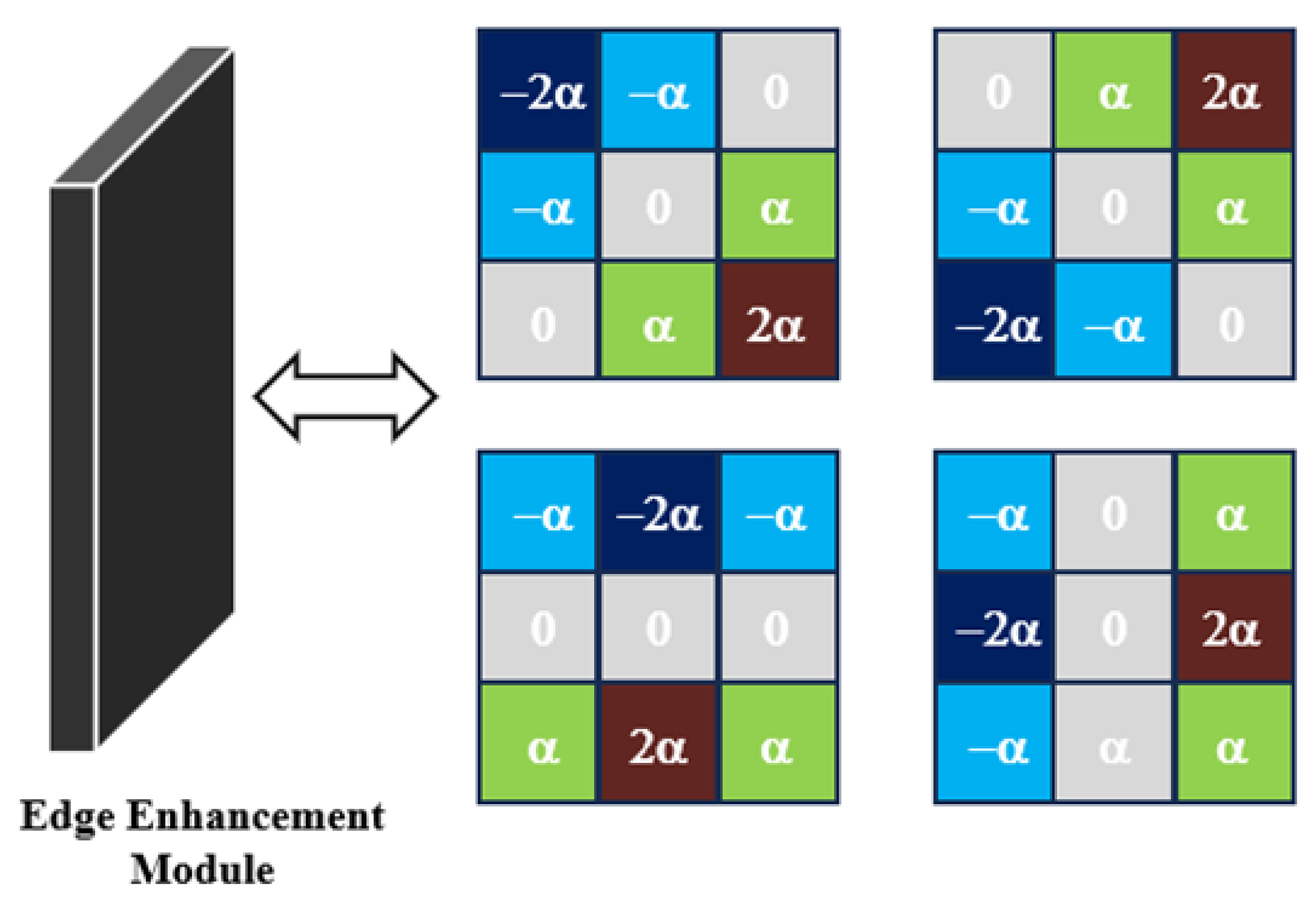

3.1. ED-Net

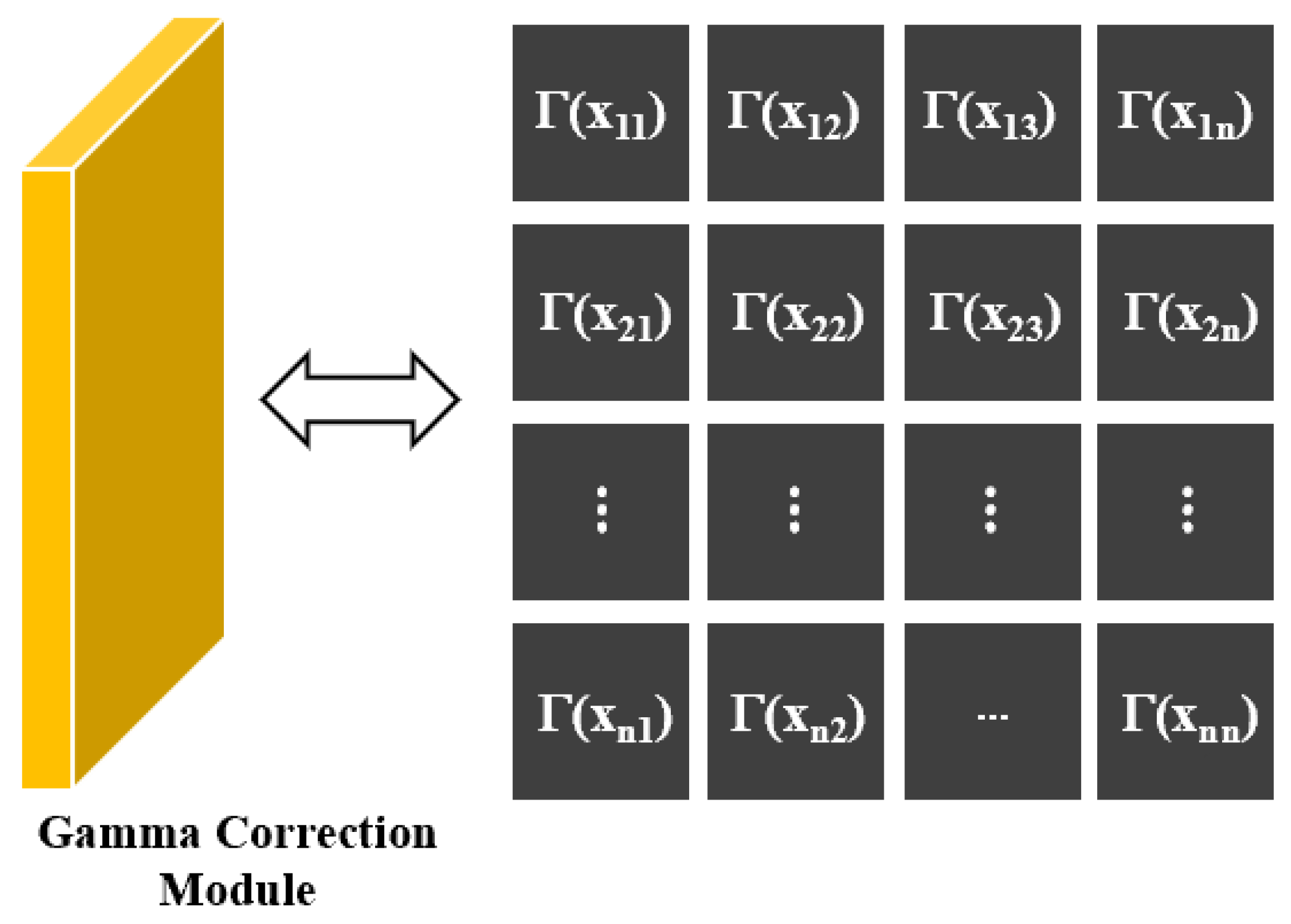

3.2. CE-Net

3.3. RAFT

4. Comparisons

4.1. Systematic Errors

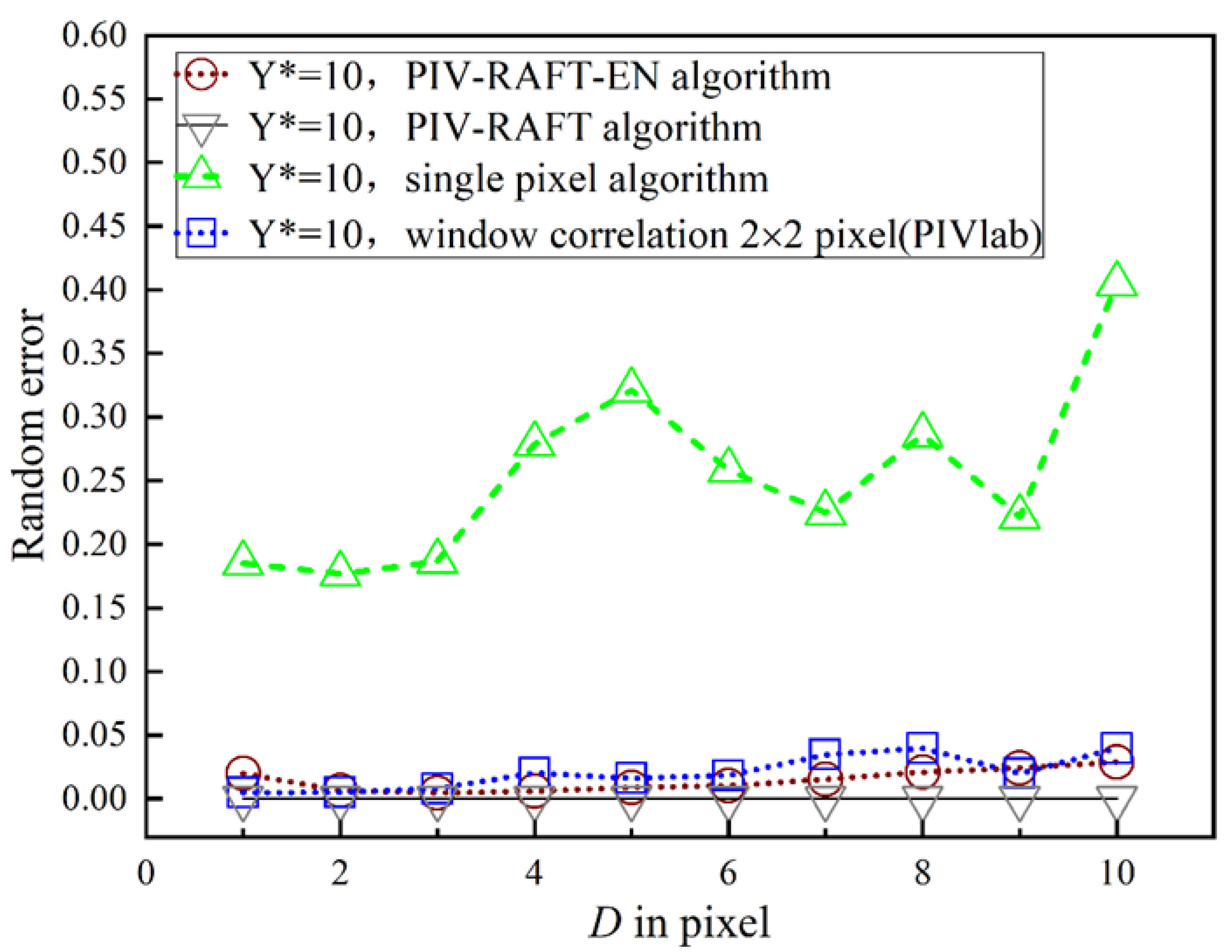

4.2. Random Errors

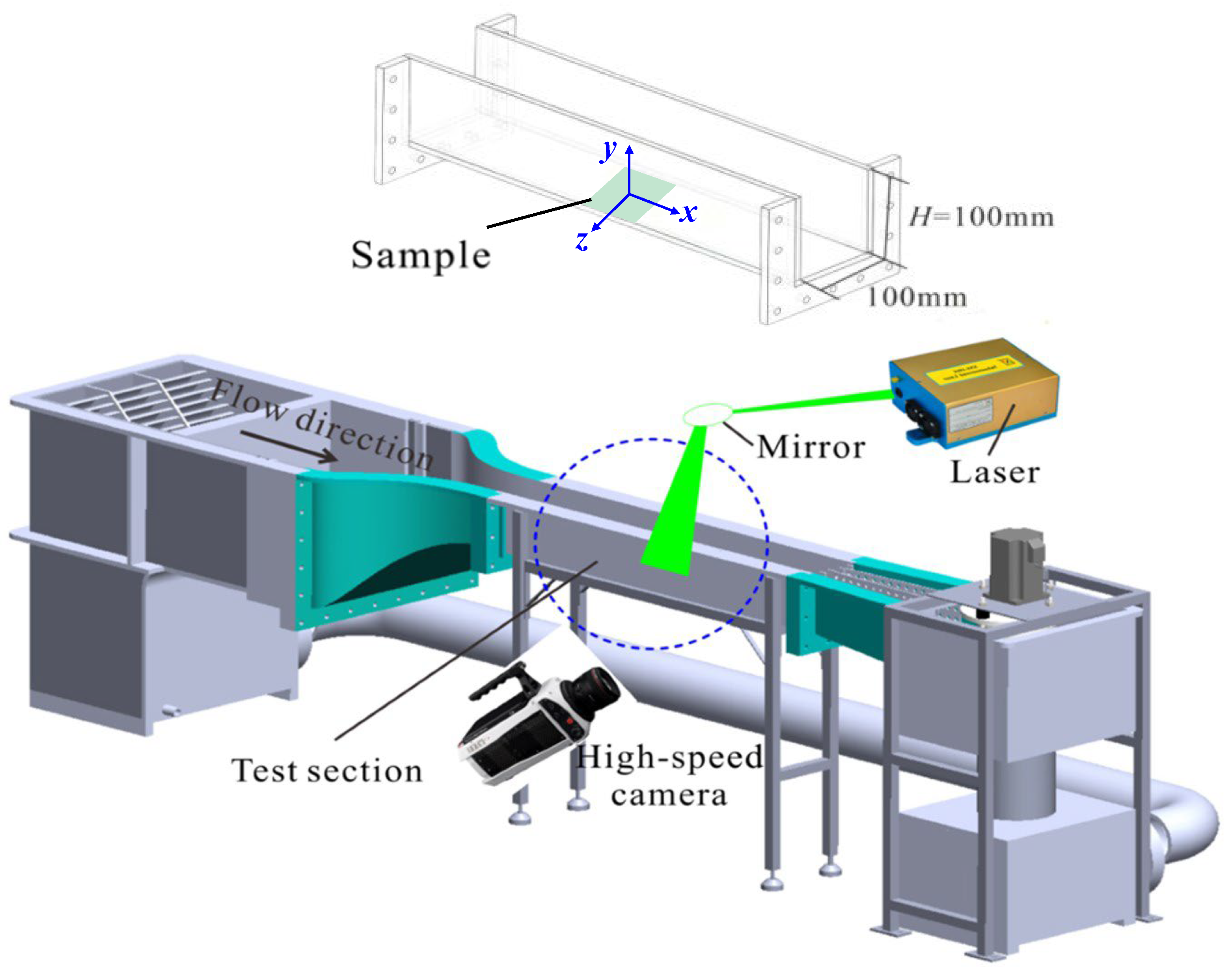

5. Applications

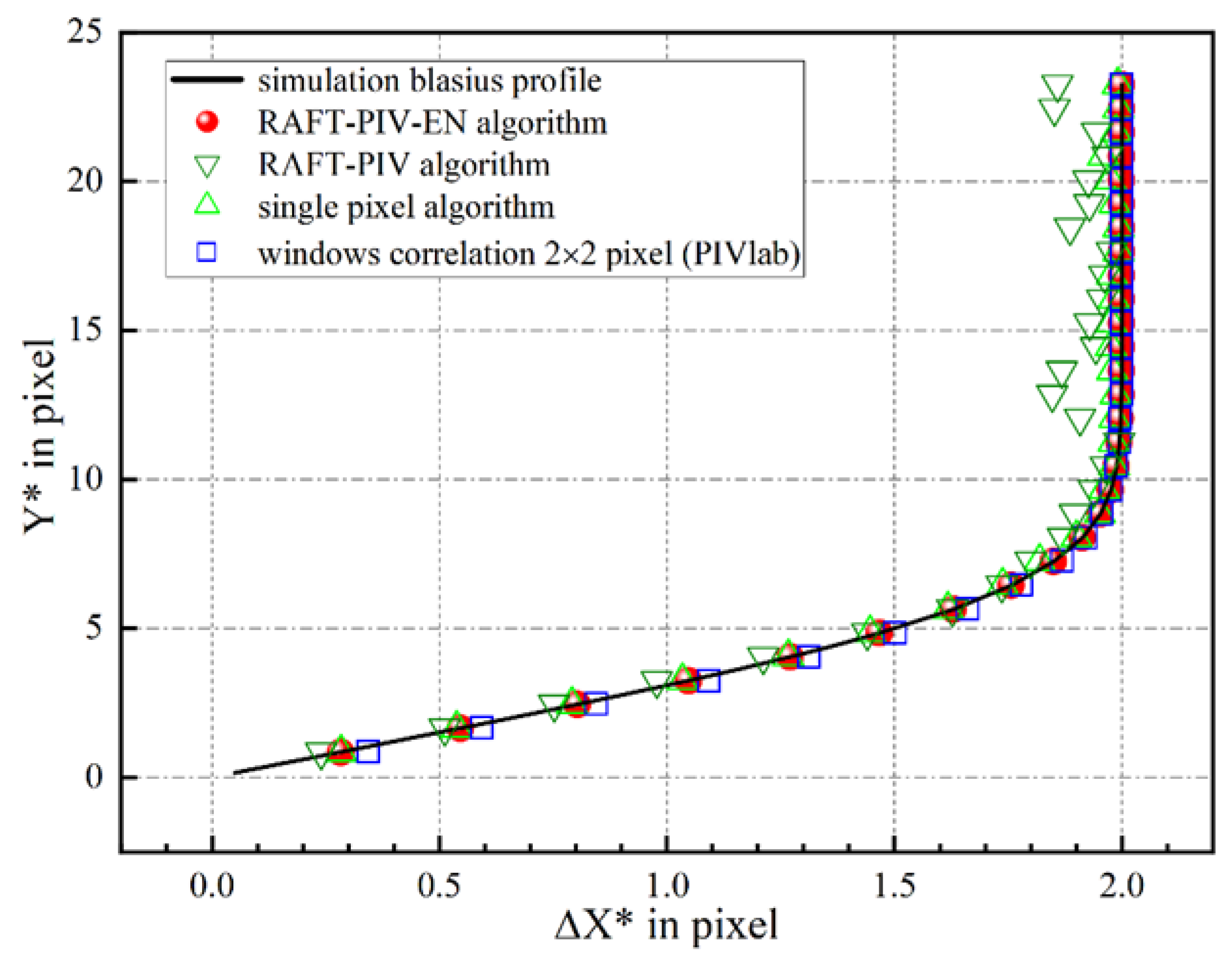

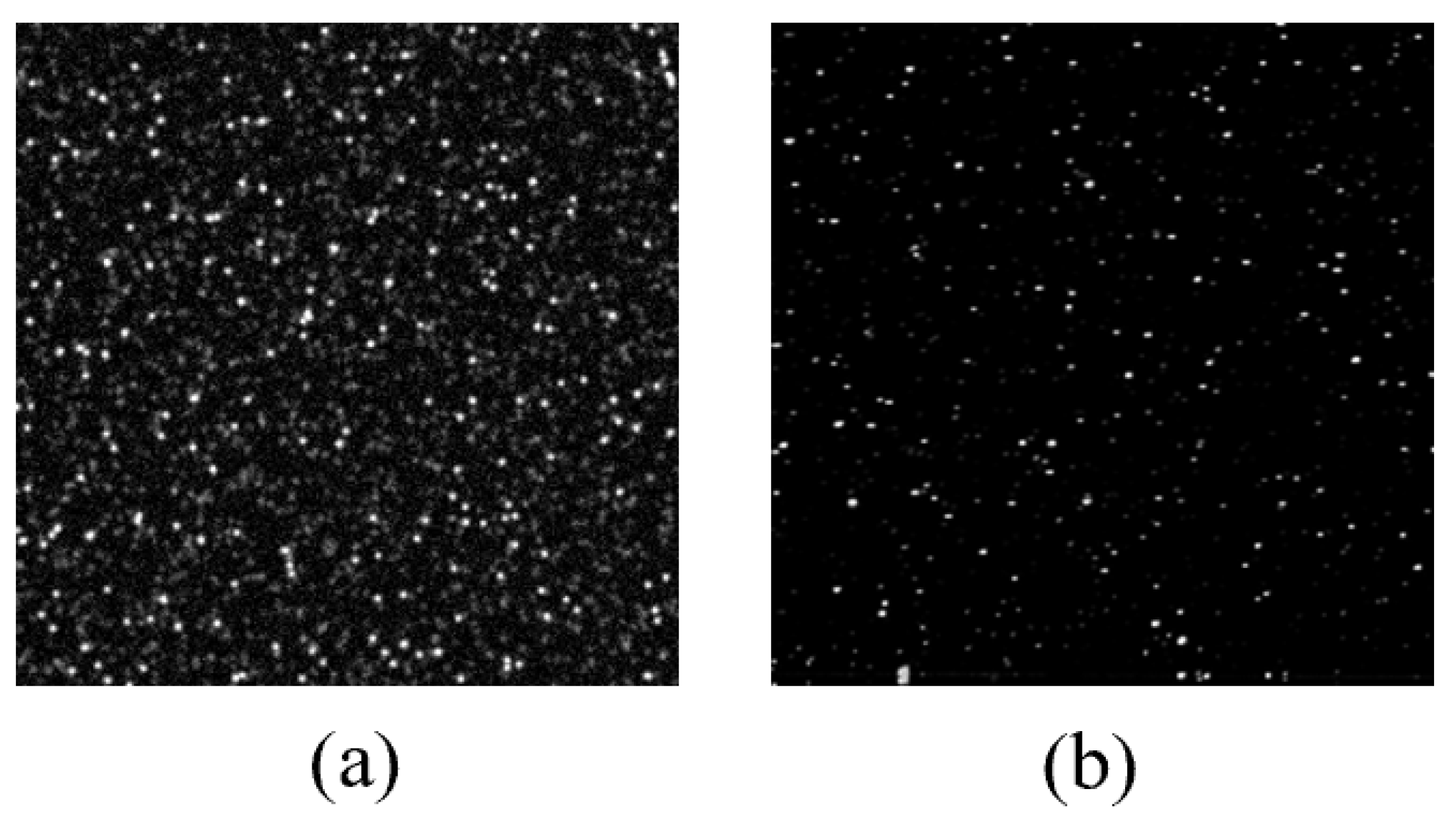

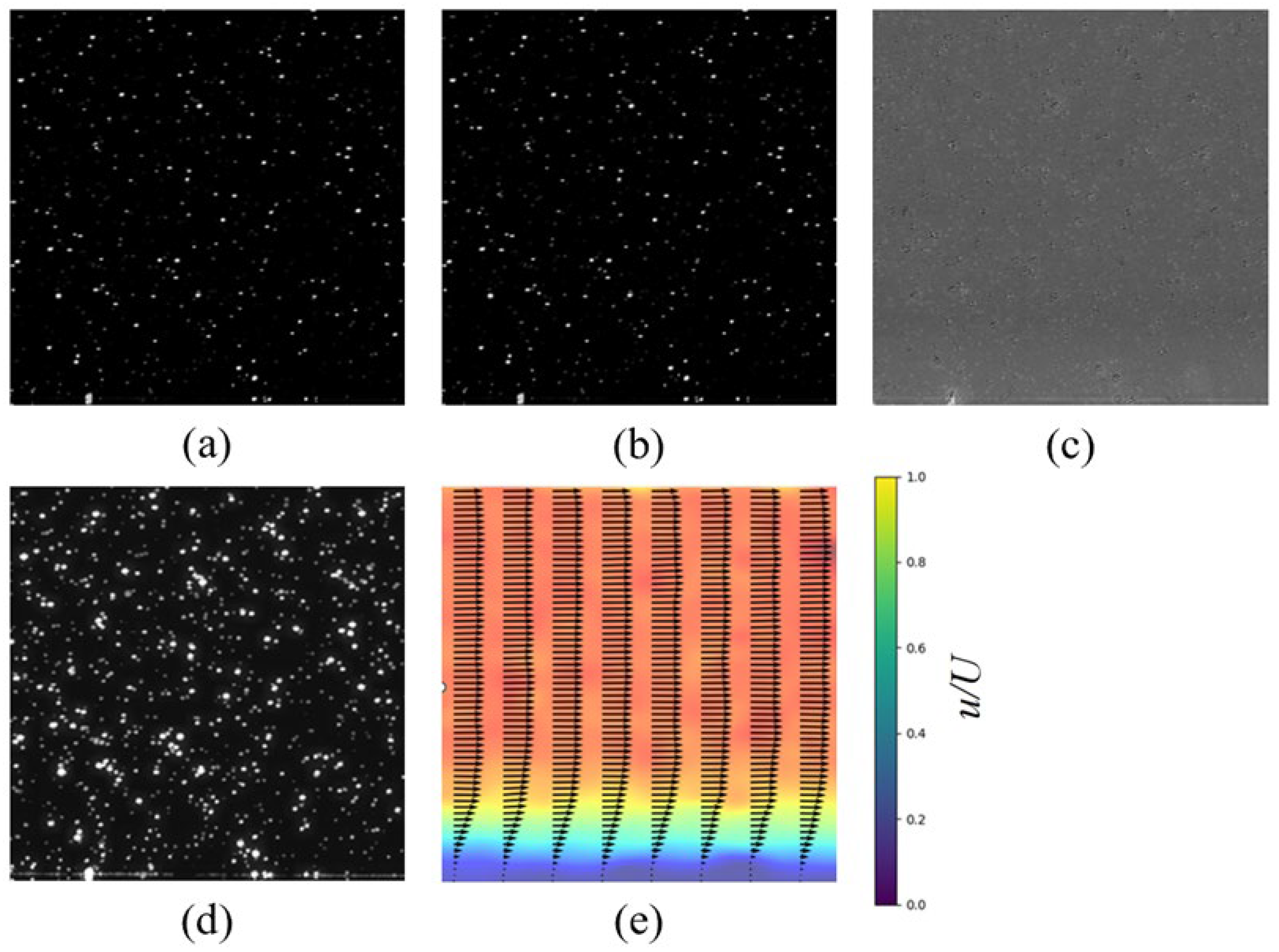

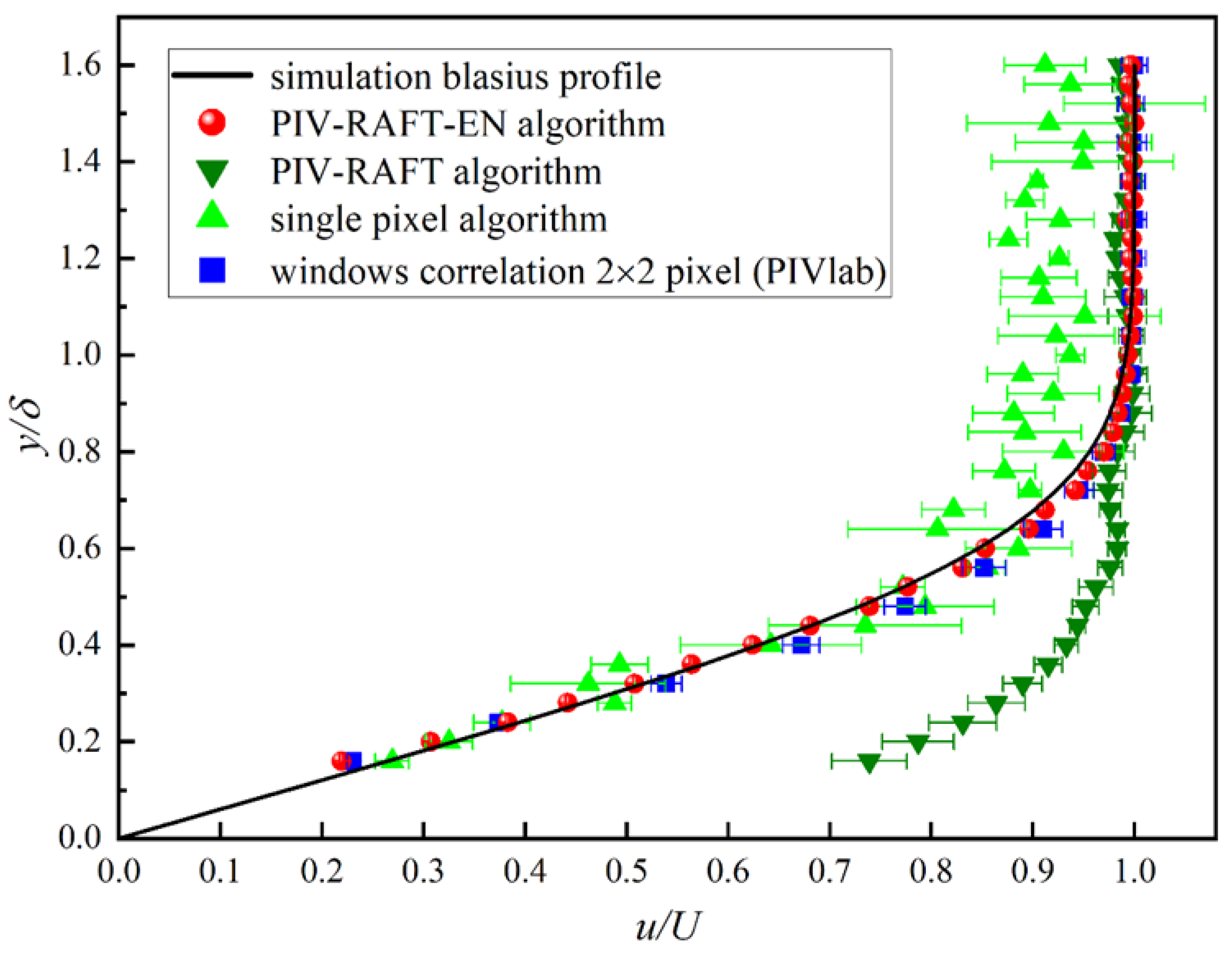

5.1. Accurate Measurement of Laminar Flow

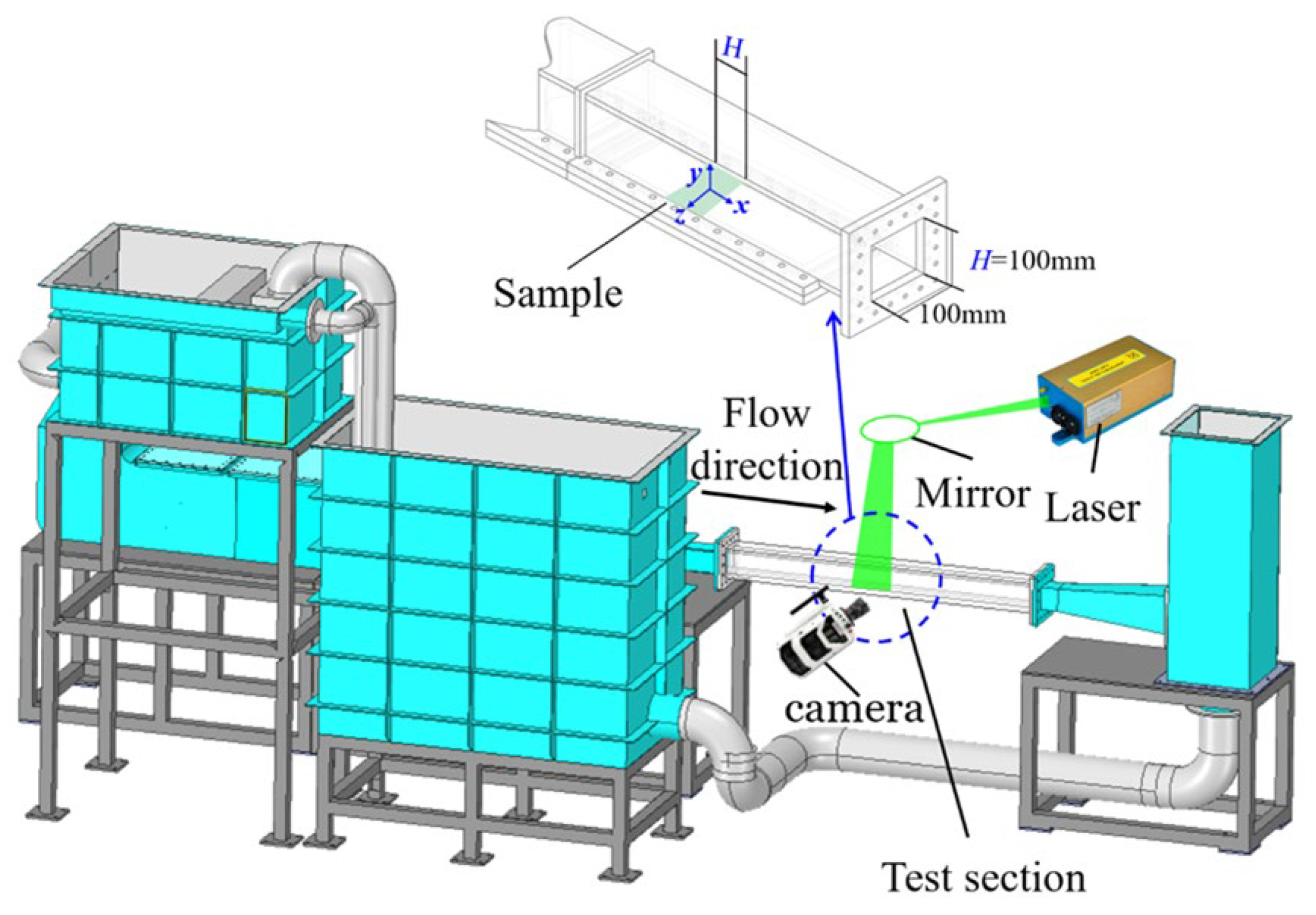

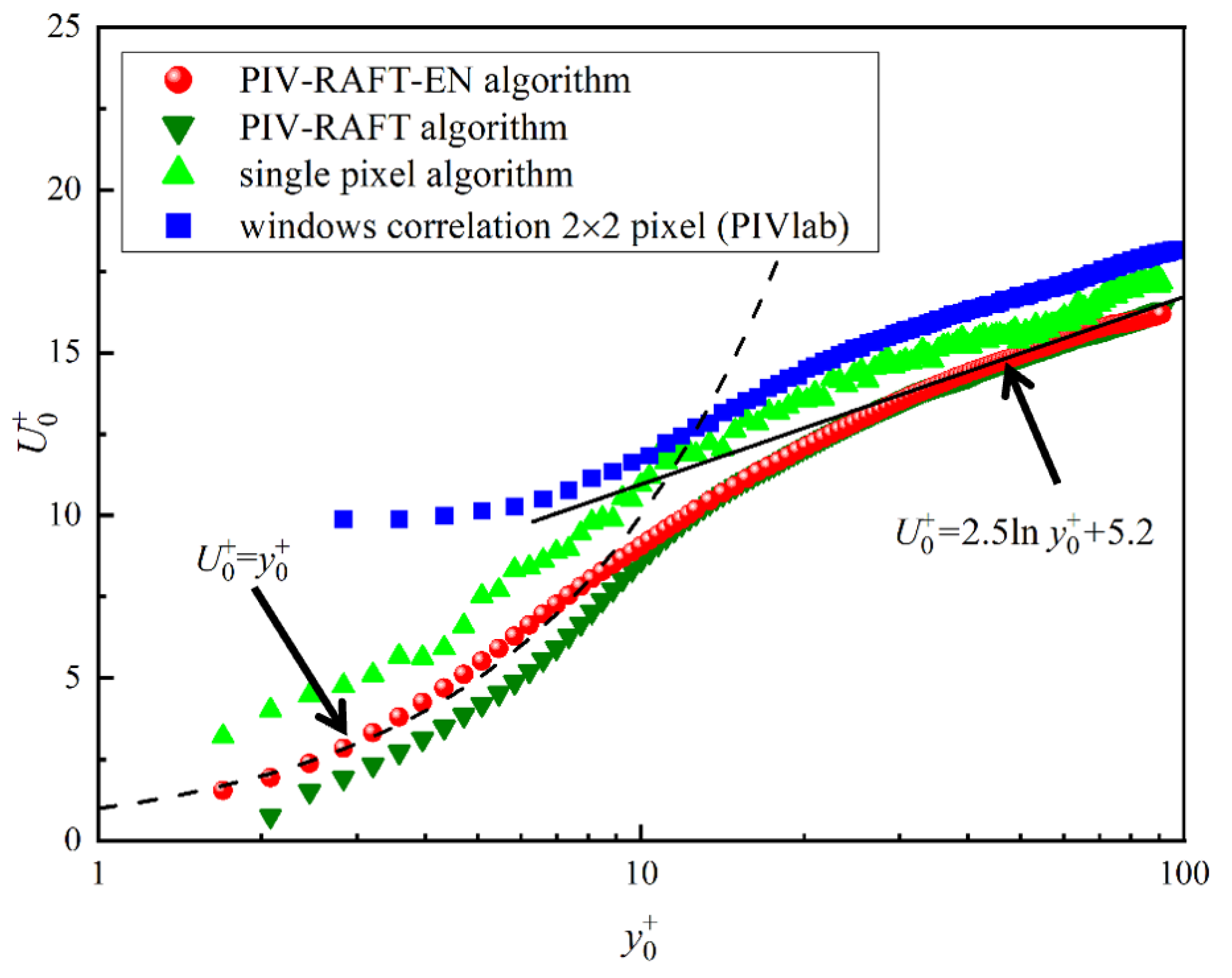

5.2. Accurate Measurement on Turbulent Boundary Layer Flow

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; You, C.; Peng, D.; Lv, P.; Li, H. Precise PIV Measurement in Low SNR Environments Using a Multi-Task Convolutional Neural Network. J. Mar. Sci. Eng. 2025, 13, 613. https://doi.org/10.3390/jmse13030613