Ship Contour: A Novel Ship Instance Segmentation Method Using Deep Snake and Attention Mechanism

Abstract

1. Introduction

2. Literature Review

2.1. Instance Segmentation Algorithms

2.2. Ship Instance Segmentation Algorithms

3. A Proposed Approach

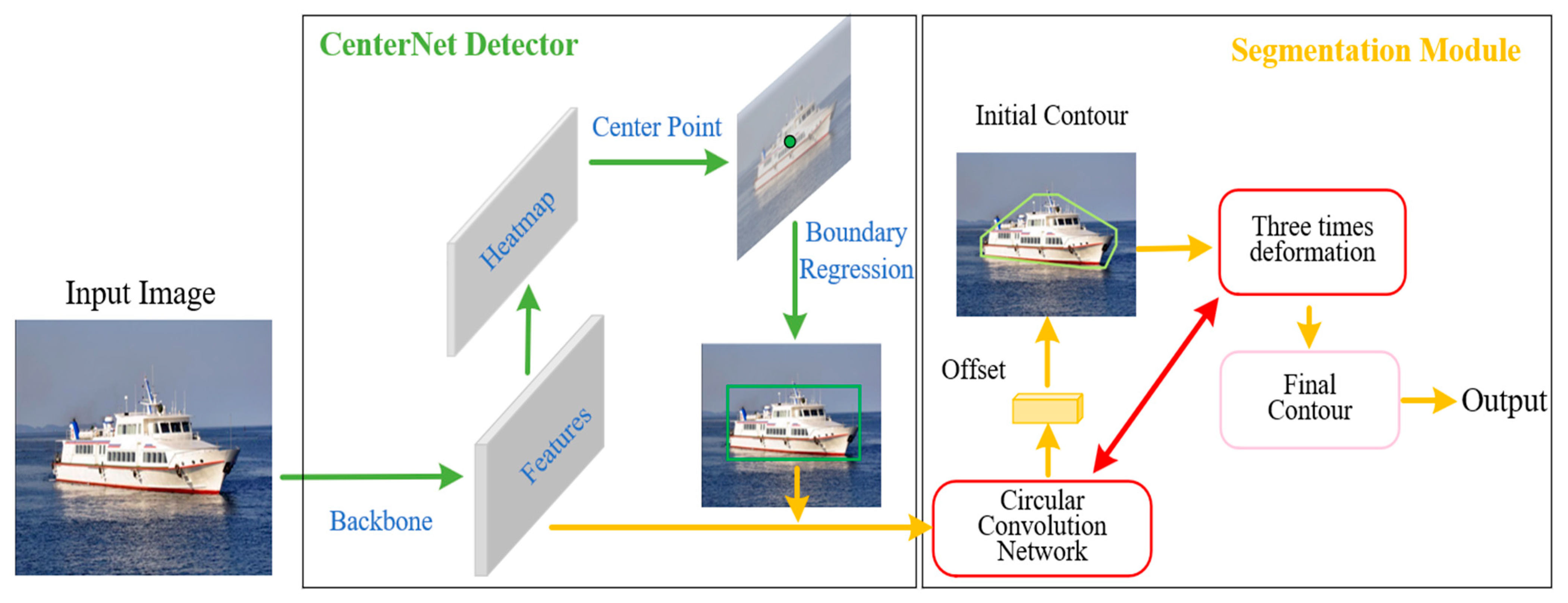

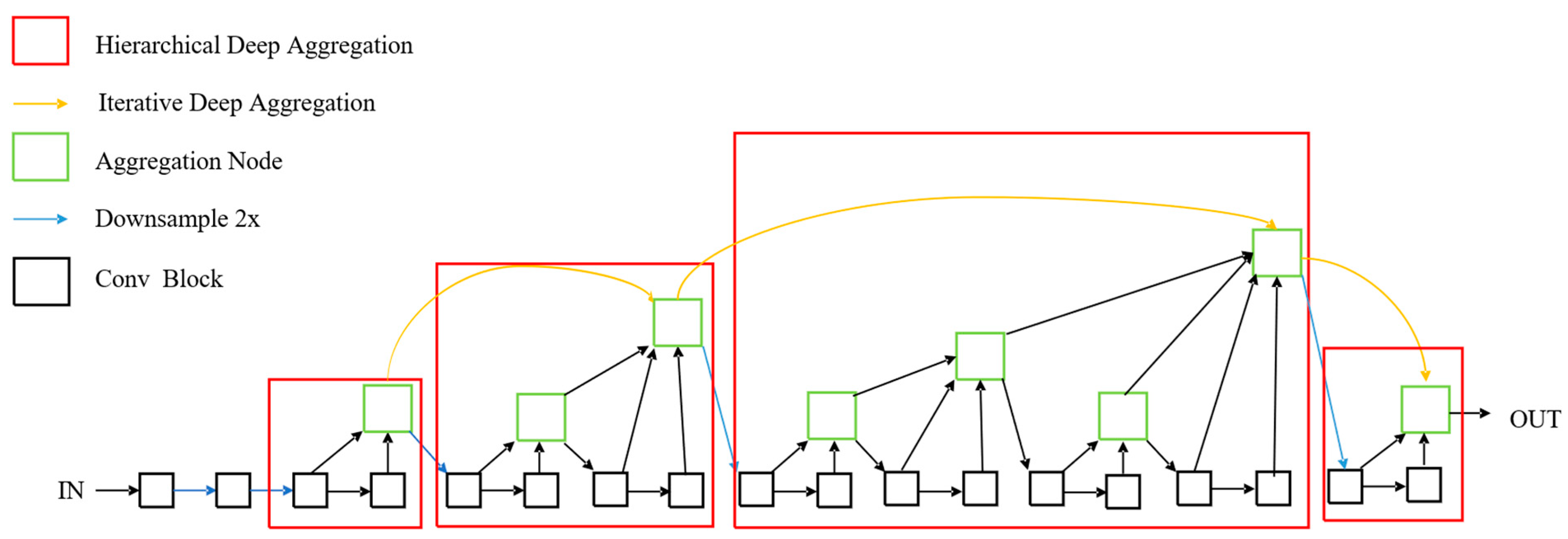

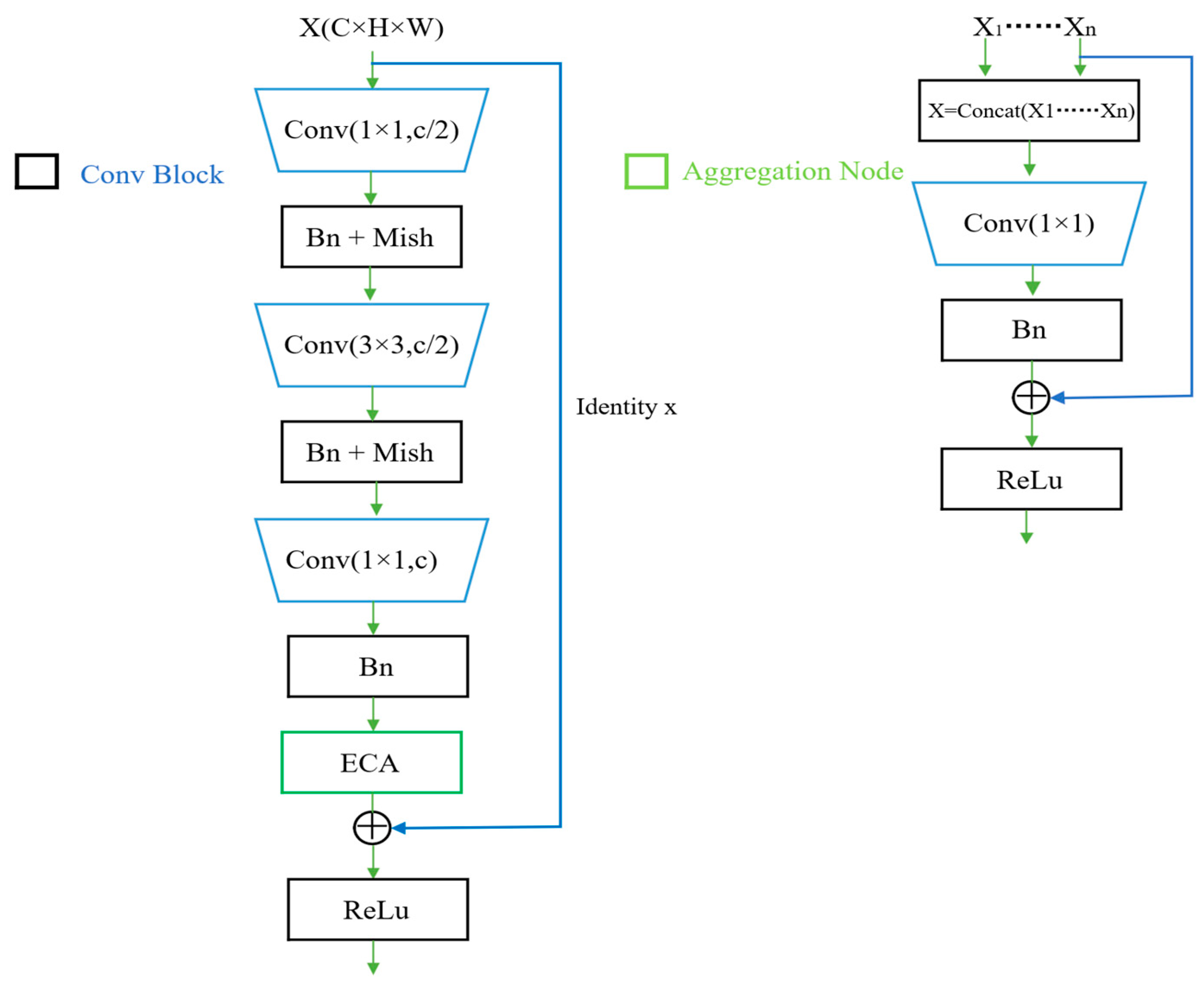

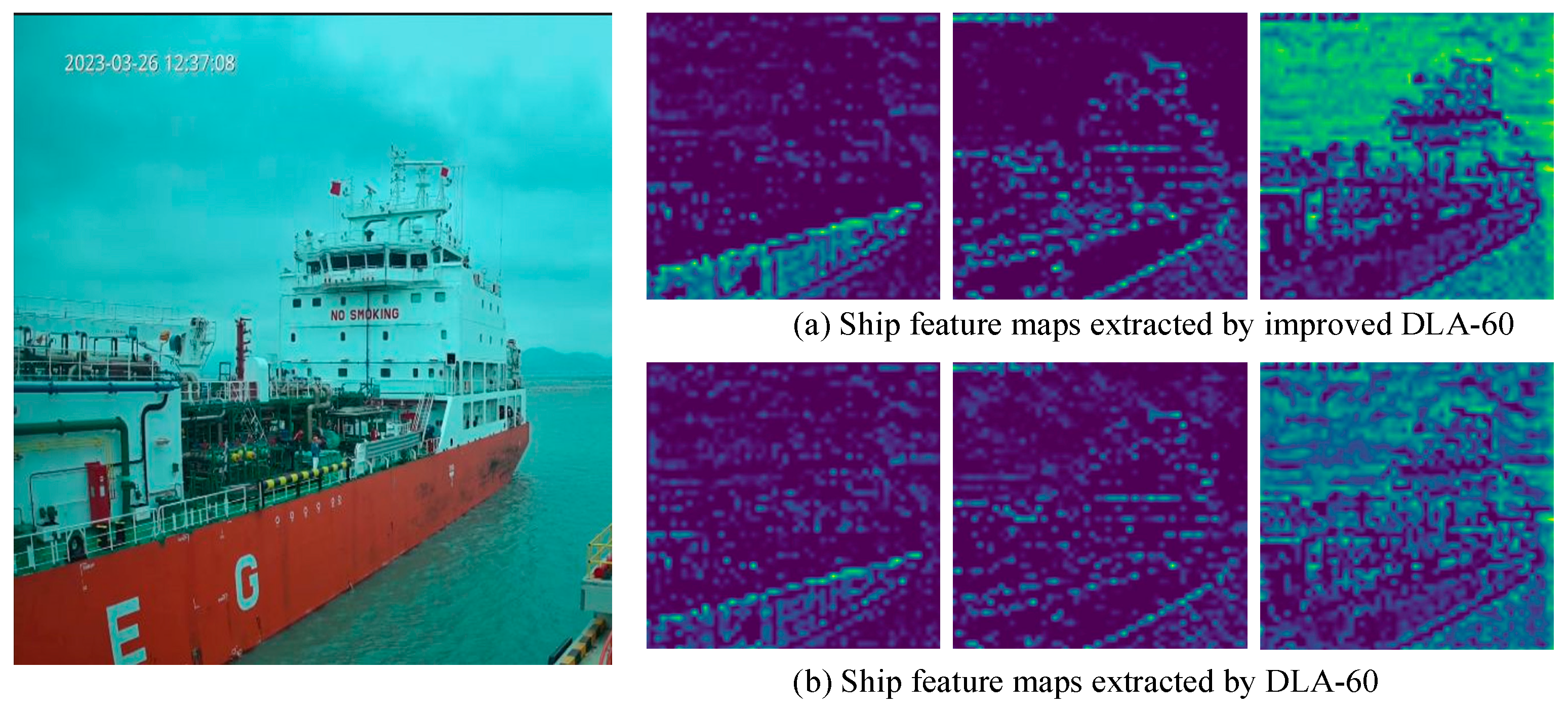

3.1. An Improved CenterNet Detector

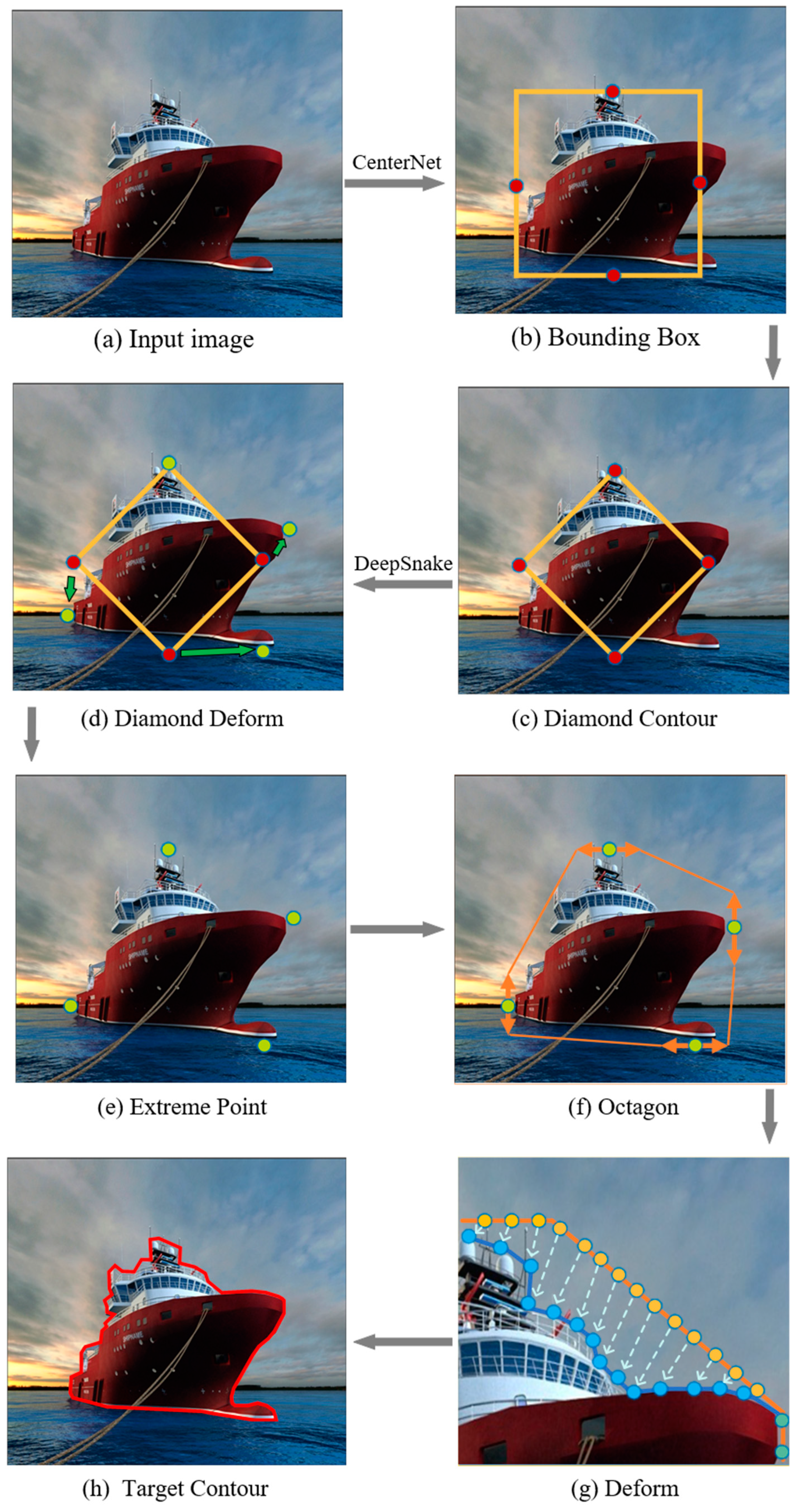

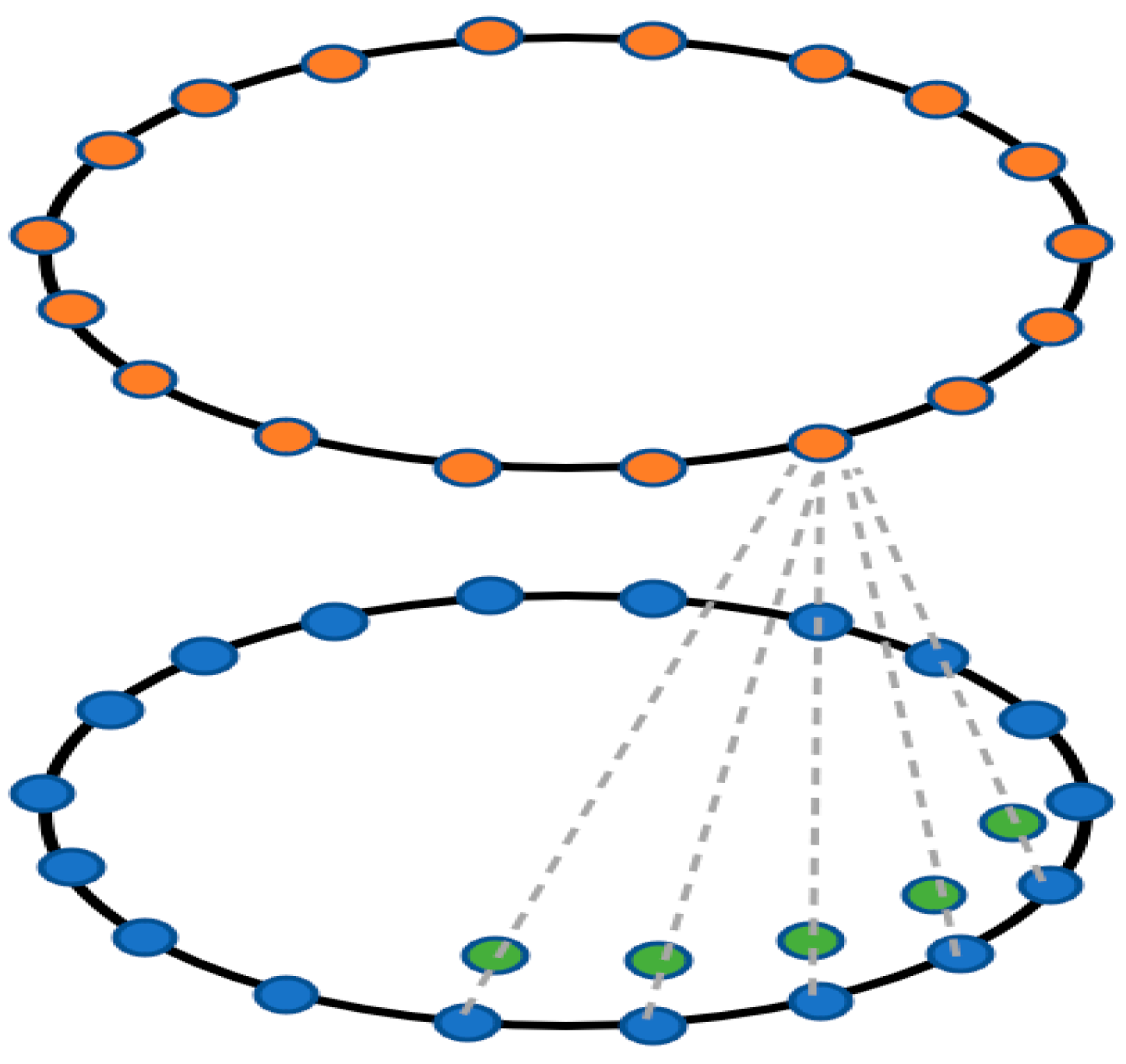

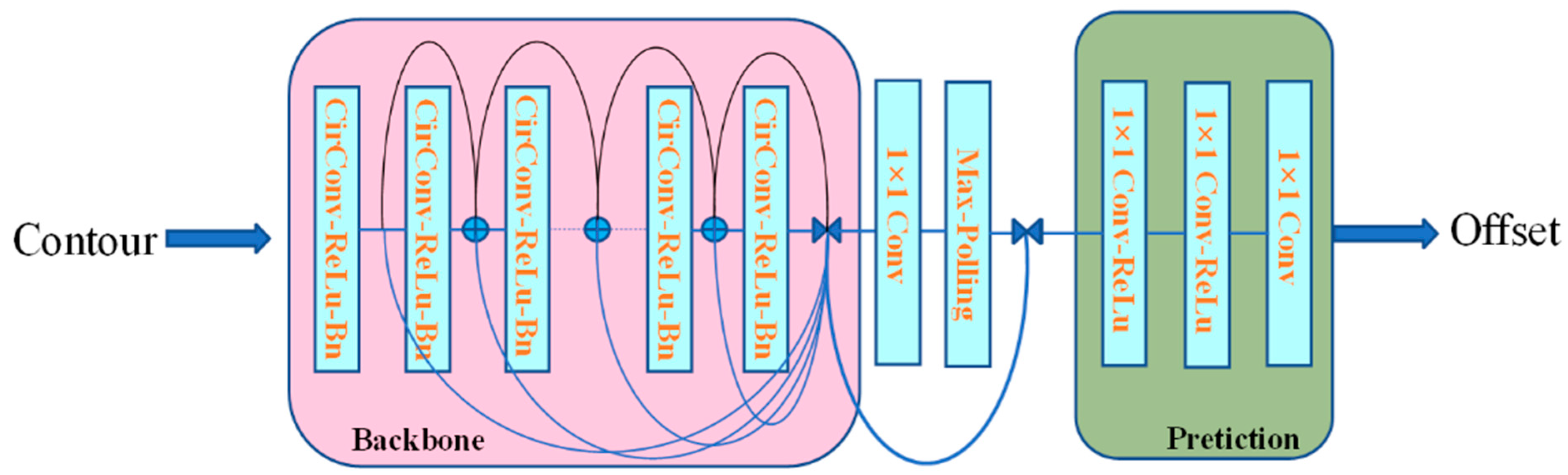

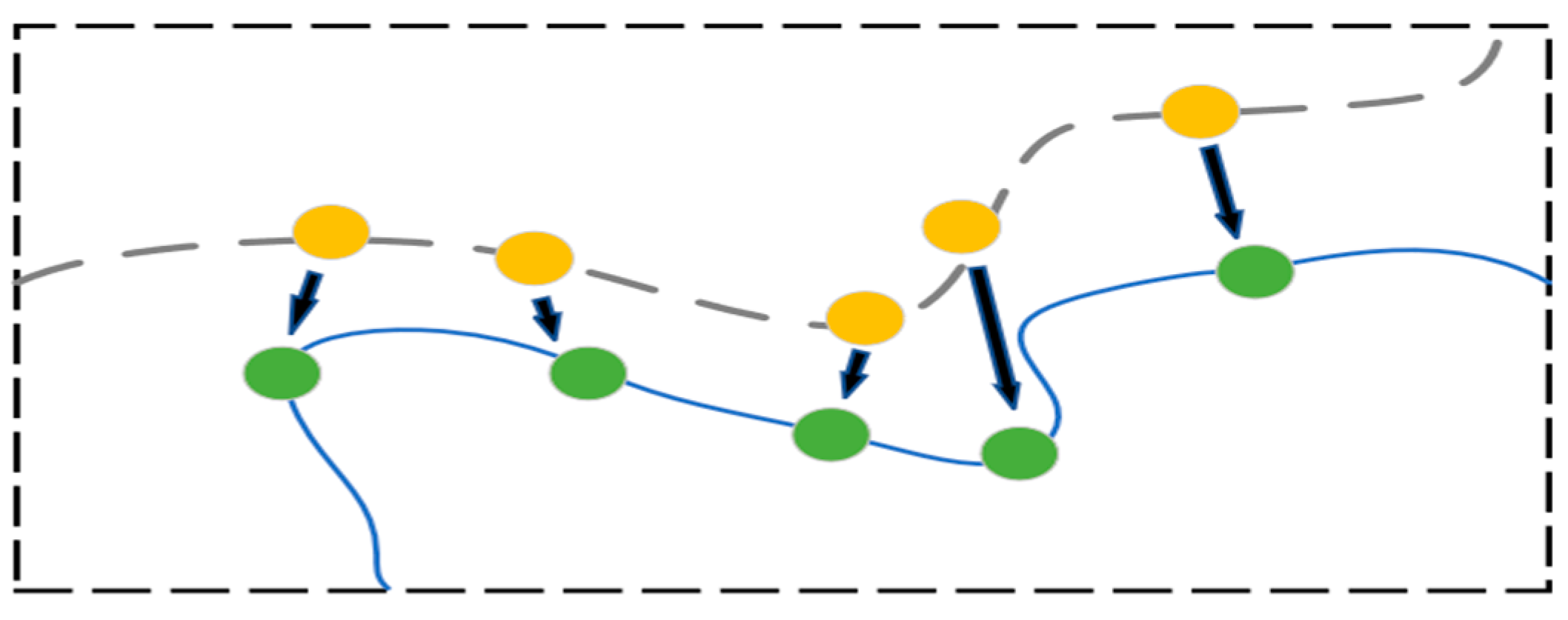

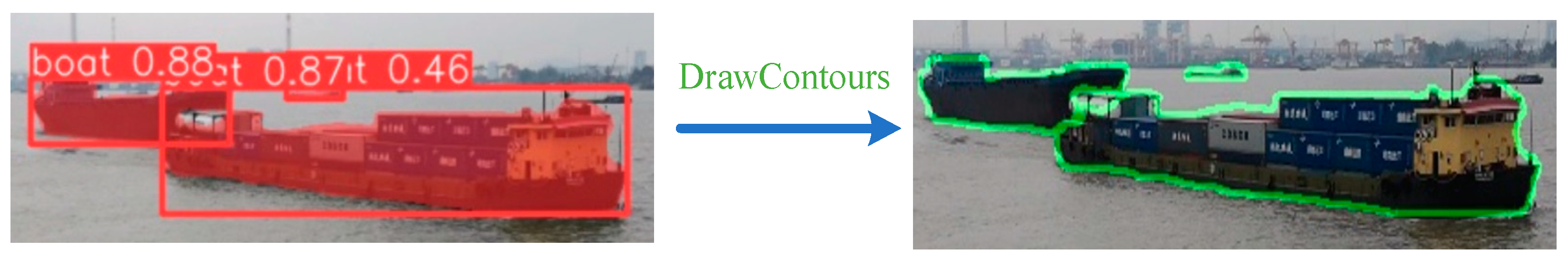

3.2. The Ship Contour for Ship Segmentation

4. A Case Study

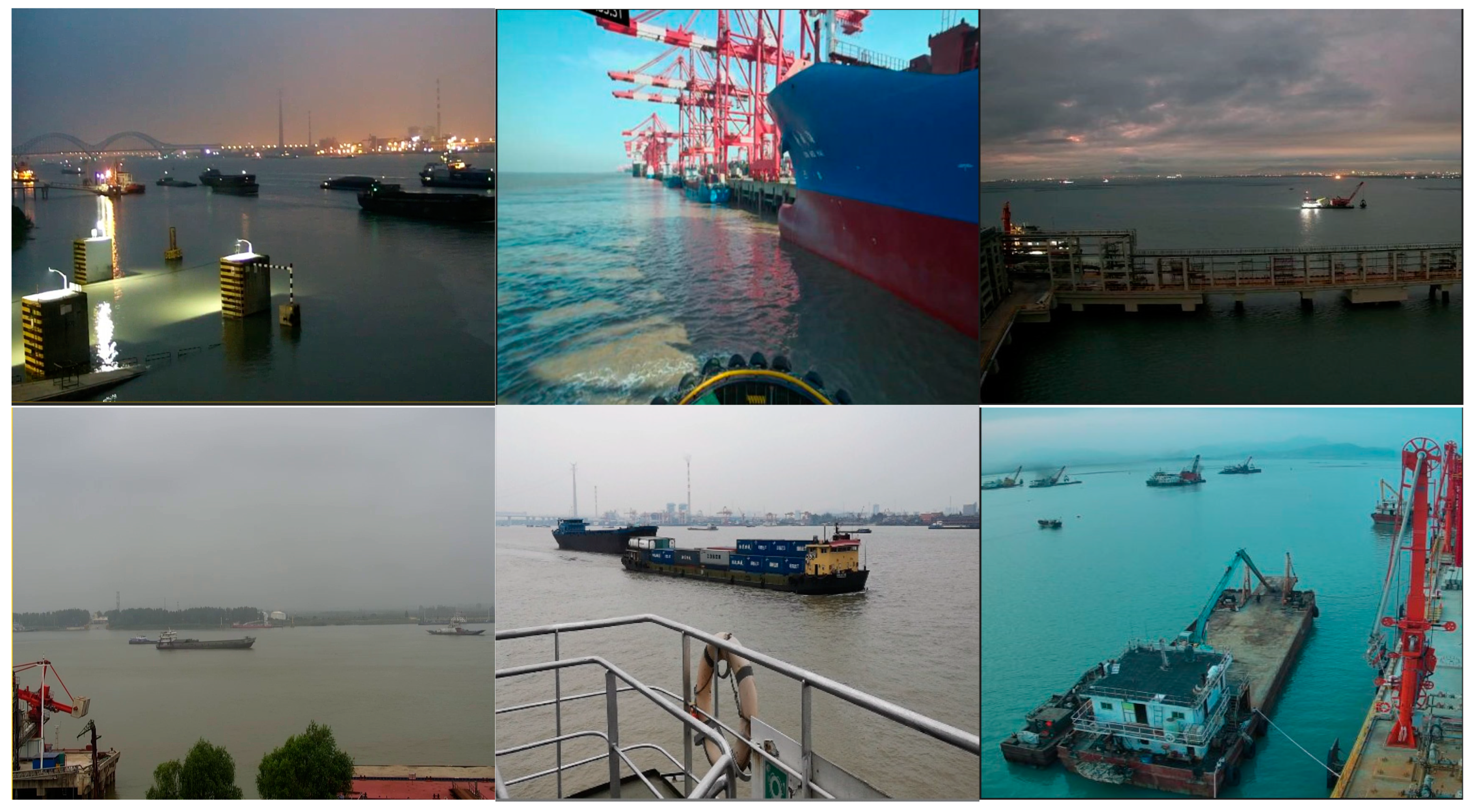

4.1. A Dataset for Ship Instance Segmentation

4.2. An Experimental Platform

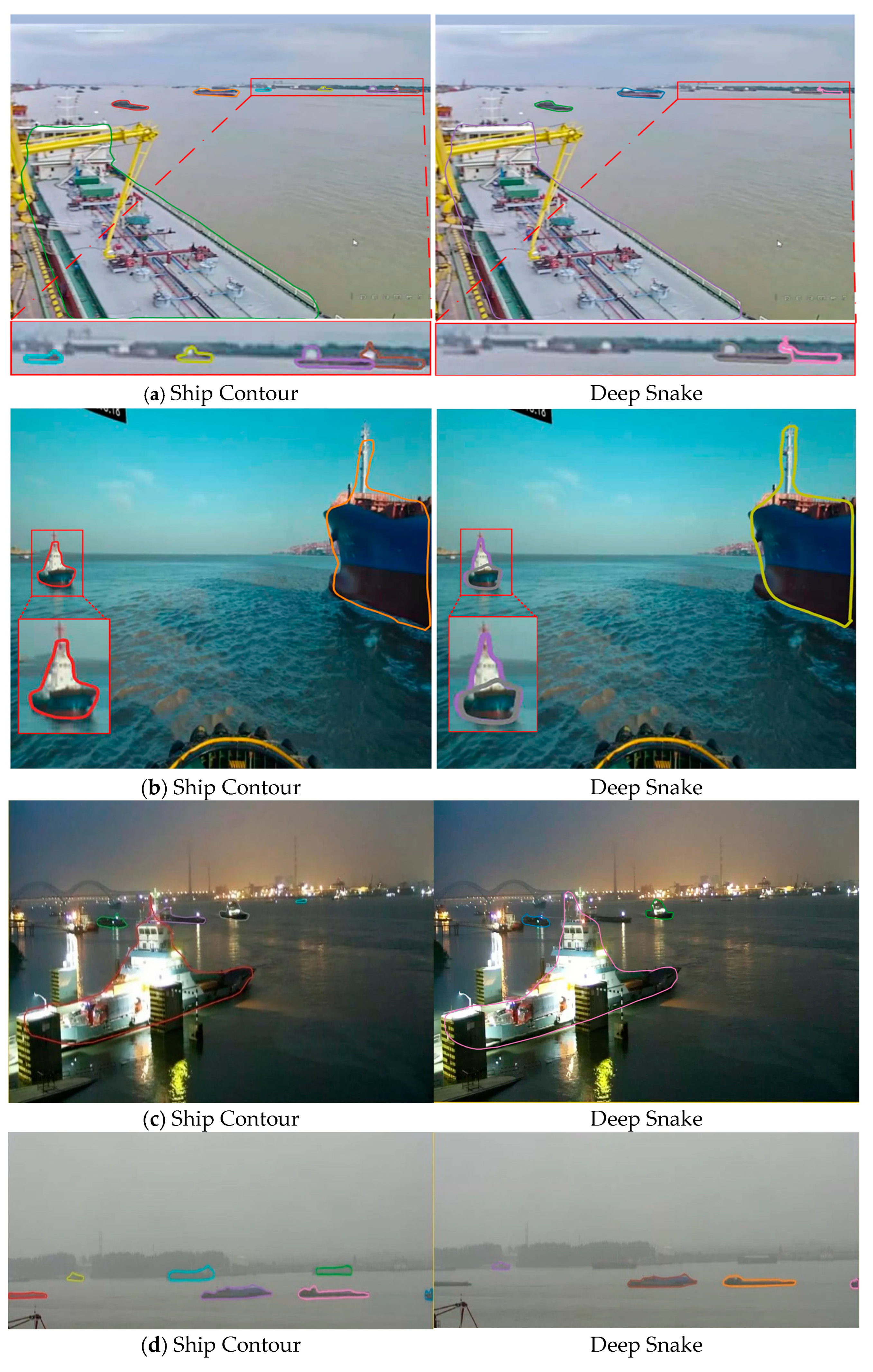

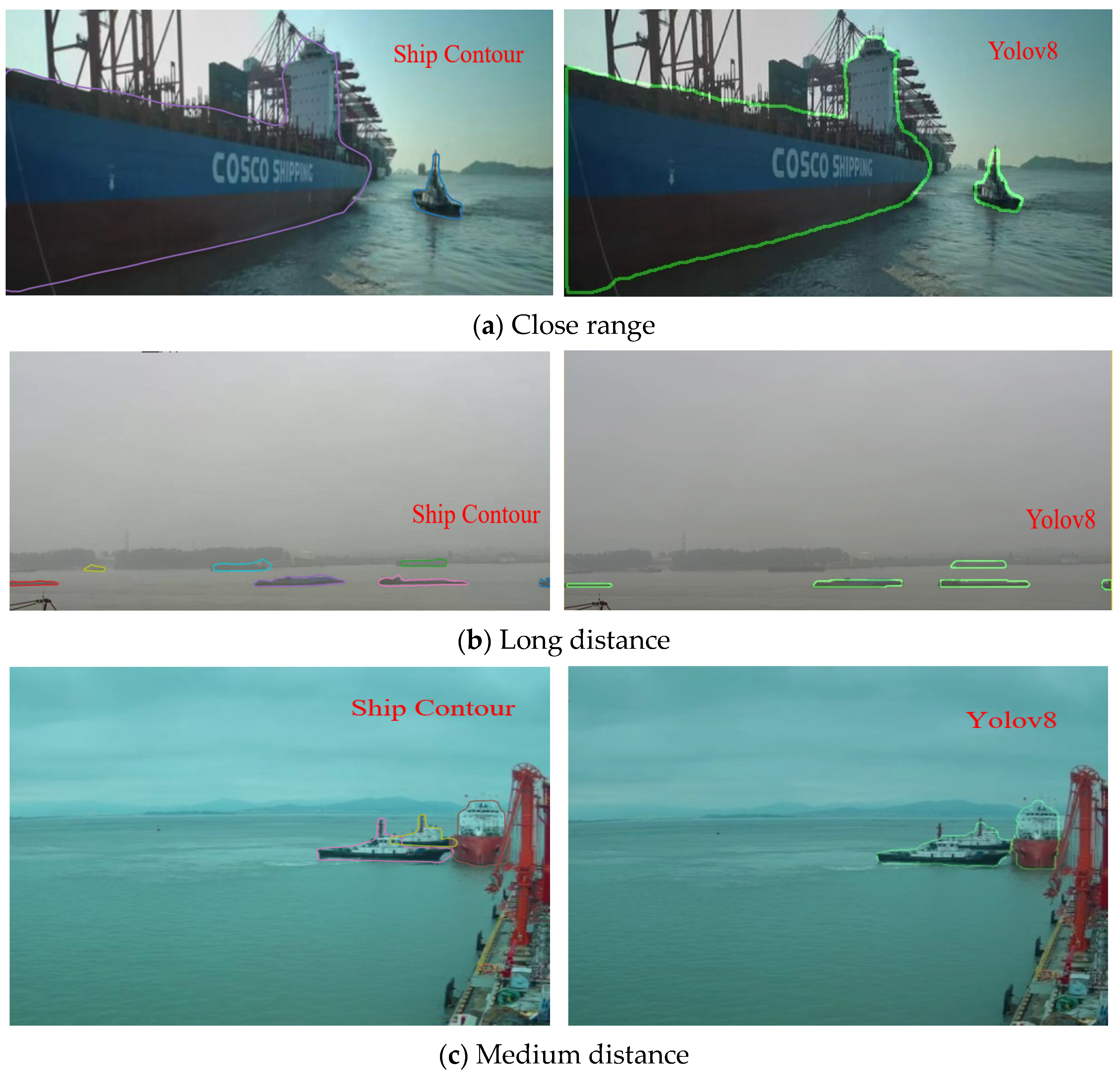

4.3. Comparison with State-of-the-Art Methods

4.4. Ablation Study

4.5. Experimental Results and Analysis Based on Public Datasets

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Dataset | 2023 Ship-seg |
|---|---|
| Images | 2300 |
| Train; Instances | 1610; 4392 |
| Test; Instances | 690; 1867 |
| Number of the boat instances | 6259 |
| Size | 512 |
| Type | Visual images |
| Task | Instance segmentation |
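
The split figures in the dataset table above can be cross-checked with simple arithmetic. The following is a minimal sketch for illustration only: the numbers are copied from the table, while the dictionary keys and the `split_summary` helper are hypothetical names that do not come from the paper.

```python
# Figures copied from the dataset table (2023 Ship-seg).
# Key names are hypothetical; only the numbers come from the source.
dataset = {
    "images": 2300,
    "train_images": 1610,
    "train_instances": 4392,
    "test_images": 690,
    "test_instances": 1867,
    "boat_instances": 6259,
}


def split_summary(d):
    """Check that the train/test figures add up and derive simple ratios."""
    return {
        # Train and test images should sum to the total image count.
        "images_ok": d["train_images"] + d["test_images"] == d["images"],
        # Train and test instances should sum to the total instance count.
        "instances_ok": d["train_instances"] + d["test_instances"]
        == d["boat_instances"],
        # Share of images assigned to the training split.
        "train_ratio": d["train_images"] / d["images"],
        # Average number of annotated boat instances per image.
        "instances_per_image": d["boat_instances"] / d["images"],
    }


summary = split_summary(dataset)
for key, value in summary.items():
    print(f"{key}: {value}")
```

With the table's figures, both sums are consistent, the training split holds 70% of the images, and each image contains roughly 2.7 annotated boat instances on average.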

| Method | Backbone | AP0.5 (%) | AP0.5:0.95 (%) | AR0.5 (%) | AR0.5:0.95 (%) | Gflops | Param |
|---|---|---|---|---|---|---|---|
| Yolov8 | Darknet-53 | 0.934 | 0.617 | 0.956 | 0.653 | 42.7 M | 11.7 |
| Solov2 | Resnet-101 | 0.940 | 0.575 | 0.953 | 0.653 | 62.1 M | 65.0 |
| U-net | Vgg-16 | 0.960 | - | 0.96 | - | 226.1 M | 24.9 |
| Segformer | MiT-B2 | 0.913 | - | 0.931 | - | 113.4 M | 27.3 |
| Yolact++ | Resnet-101 | 0.912 | 0.58 | - | - | 42.1 M | 21.3 |
| Deep Snake | DLA-34 | 0.931 | 0.618 | 0.955 | 0.664 | 25.9 M | 16.3 |
| Ship Contour | DLA-60 | 0.944 | 0.636 | 0.965 | 0.674 | 42.3 M | 23.5 |

| Method | C-Net+ | CDO | AP0.5 (%) | AP0.5:0.95 (%) | AR0.5 (%) | AR0.5:0.95 (%) |
|---|---|---|---|---|---|---|
| M0 | × | × | 0.931 | 0.618 | 0.955 | 0.664 |
| M1 | √ | × | 0.942 | 0.634 | 0.965 | 0.671 |
| M2 | × | √ | 0.941 | 0.624 | 0.964 | 0.665 |
| M3 | √ | √ | 0.944 | 0.636 | 0.965 | 0.674 |
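
The contribution of each component in the ablation table above is easiest to read as a difference from the baseline M0. The sketch below is an illustration under stated assumptions: the metric values are copied from the table and treated as fractions, and the key names (`ap50`, `ap`, `ar50`, `ar`) and the `gains_over_baseline` helper are hypothetical, not taken from the paper.

```python
# Ablation rows copied from the table; key names are hypothetical.
# ap50 = AP0.5, ap = AP0.5:0.95, ar50 = AR0.5, ar = AR0.5:0.95.
ablation = {
    "M0": {"c_net_plus": False, "cdo": False,
           "ap50": 0.931, "ap": 0.618, "ar50": 0.955, "ar": 0.664},
    "M1": {"c_net_plus": True, "cdo": False,
           "ap50": 0.942, "ap": 0.634, "ar50": 0.965, "ar": 0.671},
    "M2": {"c_net_plus": False, "cdo": True,
           "ap50": 0.941, "ap": 0.624, "ar50": 0.964, "ar": 0.665},
    "M3": {"c_net_plus": True, "cdo": True,
           "ap50": 0.944, "ap": 0.636, "ar50": 0.965, "ar": 0.674},
}

METRICS = ("ap50", "ap", "ar50", "ar")


def gains_over_baseline(rows, baseline="M0"):
    """Return per-metric differences of every variant from the baseline row."""
    base = rows[baseline]
    return {
        name: {m: round(row[m] - base[m], 3) for m in METRICS}
        for name, row in rows.items()
        if name != baseline
    }


gains = gains_over_baseline(ablation)
for name, delta in gains.items():
    print(name, delta)
```

Under these figures, the combined variant M3 raises AP0.5:0.95 by 0.018 over M0, while M1 and M2 on their own add 0.016 and 0.006 respectively.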

| Method | Backbone | AP0.5 (%) | AP0.5:0.95 (%) | AR0.5 (%) | AR0.5:0.95 (%) | FPS |
|---|---|---|---|---|---|---|
| Solov2 | Resnet-101 | 0.869 | 0.573 | 0.911 | 0.642 | 26 |
| Deep Snake | DLA-34 | 0.865 | 0.590 | 0.913 | 0.644 | 47 |
| Ship Contour | DLA-60 | 0.877 | 0.613 | 0.917 | 0.665 | 30 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, C.; Hu, S.; Ma, F.; Sun, J.; Lu, T.; Wu, B. Ship Contour: A Novel Ship Instance Segmentation Method Using Deep Snake and Attention Mechanism. J. Mar. Sci. Eng. 2025, 13, 519. https://doi.org/10.3390/jmse13030519
