Band Weight-Optimized BiGRU Model for Large-Area Bathymetry Inversion Using Satellite Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Analysis Area

2.2. Datasets

2.2.1. EnMAP–Hyperspectral Satellite Images

2.2.2. Sentinel-2–Multispectral Satellite Images

2.2.3. Landsat 9–Multispectral Satellite Images

2.2.4. ICESat-2 ATL03 Data

2.3. Methods

2.3.1. Stumpf
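
The Stumpf log-ratio model (Stumpf et al., 2003) appears in the results with band ratio 35/29 for EnMAP and 3/2 for Sentinel-2 and Landsat 9. It estimates depth as Z = m1 · ln(n·R(λi)) / ln(n·R(λj)) − m0. Here R is water-leaving reflectance, n is a fixed constant that keeps both logarithms positive, and m1 and m0 are calibrated against reference depths. The sketch below shows that calibration. It assumes ordinary least squares against corrected ICESat-2 depths and n = 1000. The helpers `log_ratio`, `fit_stumpf`, and `predict_stumpf` are hypothetical, and this is not the authors' code:

```python
import math
from typing import List, Sequence, Tuple


def log_ratio(r_num: float, r_den: float, n: float = 1000.0) -> float:
    """Stumpf pseudo-depth ln(n * R_num) / ln(n * R_den).

    Assumption: n = 1000 is large enough that n * R > 1 for every pixel.
    """
    return math.log(n * r_num) / math.log(n * r_den)


def fit_stumpf(r_num: Sequence[float], r_den: Sequence[float],
               depths: Sequence[float], n: float = 1000.0) -> Tuple[float, float]:
    """Ordinary least-squares fit of Z = m1 * ratio - m0; returns (m1, m0).

    Assumption: plain OLS calibration; the paper's fitting details are not given here.
    """
    x = [log_ratio(a, b, n) for a, b in zip(r_num, r_den)]
    mean_x = sum(x) / len(x)
    mean_z = sum(depths) / len(depths)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxz = sum((xi - mean_x) * (zi - mean_z) for xi, zi in zip(x, depths))
    m1 = sxz / sxx
    m0 = m1 * mean_x - mean_z  # the usual regression intercept equals -m0
    return m1, m0


def predict_stumpf(r_num: Sequence[float], r_den: Sequence[float],
                   m1: float, m0: float, n: float = 1000.0) -> List[float]:
    """Apply the calibrated model pixel by pixel."""
    return [m1 * log_ratio(a, b, n) - m0 for a, b in zip(r_num, r_den)]
```

For the EnMAP ratio 35/29, `r_num` would hold band 35 and `r_den` band 29, assuming the table writes ratios as numerator/denominator.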

2.3.2. BoBiLSTM

2.3.3. BWO_BiGRU Model

- Self-attention mechanism

- BiGRU (Bidirectional Gated Recurrent Unit)

- Band Weight-Optimized Algorithm

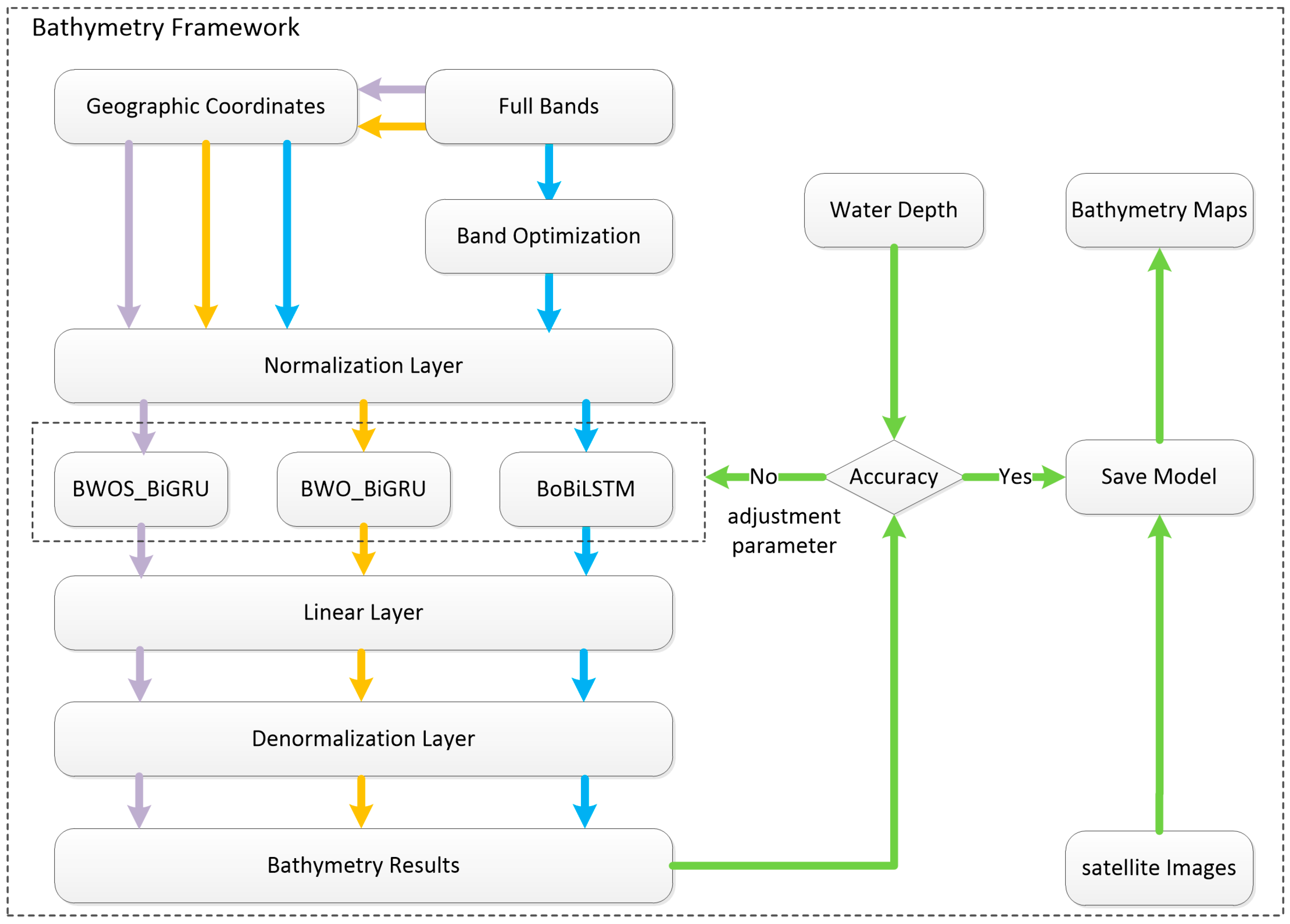

- Bathymetry inversion framework
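
The components of BWO_BiGRU are listed above without formulas. The plain-Python sketch below shows one plausible reading, under three explicit assumptions:

1. The self-attention step is a per-band sigmoid gate w = σ(Ax + c) that rescales each band. This matches the sigmoid activation the parameter table lists for self-attention, but the paper's actual attention formulation may differ.
2. The weighted bands of one pixel are fed to the BiGRU one band per time step.
3. The concatenated final forward and backward hidden states feed a linear depth head.

The weights are random and untrained, and every name (`band_weights`, `GRUCell`, `bigru_features`, `predict_depth`) is hypothetical. The sketch illustrates the data flow only; it is not the authors' model.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def band_weights(x: Vector, a: Matrix, c: Vector) -> Vector:
    """Hypothetical band attention: w = sigmoid(A x + c), one weight in (0, 1) per band."""
    return [sigmoid(s + ci) for s, ci in zip(matvec(a, x), c)]


class GRUCell:
    """Plain GRU cell with update gate z, reset gate r, and candidate state n."""

    def __init__(self, input_size: int, hidden_size: int, rng: random.Random):
        self.hidden_size = hidden_size
        self.params = {
            gate: (rand_matrix(hidden_size, input_size, rng),   # W: input weights
                   rand_matrix(hidden_size, hidden_size, rng),  # U: recurrent weights
                   [0.0] * hidden_size)                         # b: bias
            for gate in ("z", "r", "n")
        }

    def _affine(self, gate: str, x: Vector, h: Vector) -> Vector:
        w, u, b = self.params[gate]
        return [wx + uh + bi for wx, uh, bi in zip(matvec(w, x), matvec(u, h), b)]

    def step(self, x: Vector, h_prev: Vector) -> Vector:
        z = [sigmoid(v) for v in self._affine("z", x, h_prev)]
        r = [sigmoid(v) for v in self._affine("r", x, h_prev)]
        gated = [ri * hi for ri, hi in zip(r, h_prev)]
        n = [math.tanh(v) for v in self._affine("n", x, gated)]
        # h_t = (1 - z) * h_{t-1} + z * n, elementwise
        return [(1.0 - zi) * hi + zi * ni for zi, hi, ni in zip(z, h_prev, n)]

    def run(self, seq: List[Vector]) -> Vector:
        h = [0.0] * self.hidden_size
        for x in seq:
            h = self.step(x, h)
        return h


def bigru_features(spectrum: Vector, fwd: GRUCell, bwd: GRUCell,
                   a: Matrix, c: Vector) -> Vector:
    """Weight the bands, feed them one per time step, concatenate both directions."""
    w = band_weights(spectrum, a, c)
    seq = [[wi * xi] for wi, xi in zip(w, spectrum)]  # assumption: bands as the sequence axis
    return fwd.run(seq) + bwd.run(seq[::-1])


def predict_depth(features: Vector, head_w: Vector, head_b: float) -> float:
    """Hypothetical linear regression head mapping BiGRU features to depth (m)."""
    return sum(wi * fi for wi, fi in zip(head_w, features)) + head_b
```

GRU implementations differ on whether z_t or 1 − z_t multiplies the previous state; the two conventions are equivalent up to relabeling the gate. In practice, a framework layer such as PyTorch's `torch.nn.GRU` with `bidirectional=True` would replace the hand-written cell.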

2.3.4. Evaluation of Model Performance
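
The results tables report R² and RMSE (m) between inverted and reference depths. The sketch below computes both metrics. It assumes R² is the coefficient of determination 1 − SS_res/SS_tot. Some bathymetry studies instead report the squared Pearson correlation, which ignores bias and scale errors and can therefore be higher. Function names are illustrative:

```python
import math
from typing import Sequence


def _check(pred: Sequence[float], ref: Sequence[float]) -> None:
    if len(pred) != len(ref) or not ref:
        raise ValueError("pred and ref must be non-empty and of equal length")


def rmse(pred: Sequence[float], ref: Sequence[float]) -> float:
    """Root-mean-square error in the units of the inputs (m for depths)."""
    _check(pred, ref)
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


def r_squared(pred: Sequence[float], ref: Sequence[float]) -> float:
    """Coefficient of determination 1 - SS_res / SS_tot (assumed definition)."""
    _check(pred, ref)
    mean_ref = sum(ref) / len(ref)
    ss_res = sum((r - p) ** 2 for p, r in zip(pred, ref))
    ss_tot = sum((r - mean_ref) ** 2 for r in ref)
    return 1.0 - ss_res / ss_tot
```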

3. Results

3.1. Bathymetry Inversion Using Different Satellite Images

3.1.1. Correction Results of ICESat-2 Data

3.1.2. Bathymetry Inversion from Different Satellite Images

3.2. Effect Analysis of Bathymetry Inversion

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| EnMAP Band | Wavelength (nm) | Sentinel-2 Band | Wavelength (nm) | Landsat 9 Band | Wavelength (nm) |
|---|---|---|---|---|---|
| 1–3 | 420–432 | | | | |
| 4–7 | 437–452 | 1 | 433–453 | 1 | 433–451 |
| 8–22 | 457–523 | 2 | 458–523 | 2 | 452–512 |
| 23–26 | 528–543 | | | | |
| 27–34 | 548–585 | 3 | 543–578 | 3 | 533–590 |
| 35–43 | 591–638 | | | | |
| 44–49 | 644–676 | 4 | 650–680 | 4 | 636–673 |
| 50–52 | 682–696 | | | 8 (PAN) | 503–676 |
| 53–55 | 703–716 | 5 | 698–713 | | |
| 56–57 | 723–730 | | | | |
| 58–59 | 737–745 | 6 | 733–748 | | |
| 60–63 | 752–774 | | | | |
| 64–65 | 781–789 | 7 | 773–793 | | |
| 66–79 | 796–899 | 8 | 785–900 | | |
| 80–83 | 907–931 | 8A | 865–885 | 5 | 851–879 |
| 84–86 | 939–955 | | | | |
| 87–91 | 963–996 | 9 | 935–955 | | |
| 41–43 (SWIR) | 1359–1383 | 10 | 1360–1390 | 9 | 1363–1384 |
| 54–62 (SWIR) | 1569–1658 | 11 | 1565–1655 | 6 | 1566–1651 |
| 90–113 (SWIR) | 2100–2295 | 12 | 2100–2280 | 7 | 2107–2294 |
| | | | | 10 (TIRS) | 10,600–11,190 |
| | | | | 11 (TIRS) | 11,500–12,510 |

| ATL03 Strip Date | Time (UTC) | Track Used | Geographic Coordinates |
|---|---|---|---|
| 20181231 | 10:41 | GT1L | 114°6′28″ E, 25°50′4″ S–114°10′14″ E, 26°24′54″ S |
| | | GT2L | 114°8′26″ E, 25°50′16″ S–114°11′56″ E, 26°22′24″ S |
| | | GT3L | 114°10′24″ E, 25°50′23″ S–114°13′36″ E, 26°19′40″ S |
| 20200329 | 6:37 | GT1R | 114°2′4″ E, 25°49′41″ S–114°6′1″ E, 26°26′15″ S |
| | | GT2R | 114°4′1″ E, 25°49′43″ S–114°7′51″ E, 26°25′8″ S |
| | | GT3R | 114°5′59″ E, 25°49′48″ S–114°9′35″ E, 26°23′4″ S |
| 20201215 | 5:56 | GT1L | 114°2′35″ E, 26°24′25″ S–114°6′19″ E, 25°50′1″ S |
| | | GT2L | 114°0′34″ E, 26°25′0″ S–114°4′8″ E, 25°52′4″ S |
| | | GT3L | 113°59′50″ E, 26°13′41″ S–114°2′10″ E, 25°52′15″ S |
| 20210113 | 4:32 | GT1L | 114°10′3″ E, 26°27′14″ S–114°14′3″ E, 25°50′19″ S |
| | | GT2L | 114°8′7″ E, 26°26′58″ S–114°12′8″ E, 25°50′1″ S |
| | | GT3L | 114°6′8″ E, 26°27′9″ S–114°9′56″ E, 25°52′16″ S |
| 20210626 | 8:56 | GT1R | 114°5′57″ E, 25°49′45″ S–114°9′38″ E, 26°23′43″ S |
| | | GT2R | 114°7′55″ E, 25°49′48″ S–114°11′29″ E, 26°22′38″ S |
| | | GT3R | 114°9′59″ E, 25°50′51″ S–114°13′22″ E, 26°21′55″ S |
| 20220314 | 8:15 | GT1L | 114°0′47″ E, 26°22′57″ S–114°3′52″ E, 25°54′35″ S |
| | | GT2L | 113°59′50″ E, 26°13′36″ S–114°2′25″ E, 25°49′48″ S |
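
The track endpoints above are given in degrees, minutes, and seconds. Overlaying the tracks on the EnMAP, Sentinel-2, or Landsat 9 scenes usually requires signed decimal degrees. The sketch below performs that conversion. It assumes south and west are negative and accepts either ″ or a doubled prime ′′ as the seconds mark. The names `dms_to_decimal` and `parse_track` are hypothetical:

```python
import re
from typing import Tuple

# Degrees, minutes, seconds, hemisphere; seconds marked with ″ or a doubled prime ′′.
_DMS = re.compile(r"(\d+)°(\d+)′(\d+(?:\.\d+)?)(?:″|′′)\s*([NSEW])")


def dms_to_decimal(text: str) -> float:
    """Convert e.g. '114°6′28″ E' to signed decimal degrees (S and W negative)."""
    match = _DMS.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"unrecognized DMS coordinate: {text!r}")
    deg, minutes, seconds, hemi = match.groups()
    value = int(deg) + int(minutes) / 60 + float(seconds) / 3600
    return -value if hemi in ("S", "W") else value


def parse_track(segment: str) -> Tuple[Tuple[float, float], Tuple[float, float]]:
    """Split 'LON, LAT–LON, LAT' (en dash between endpoints) into ((lon, lat), (lon, lat))."""
    ends = []
    for endpoint in segment.split("–"):
        lon_text, lat_text = endpoint.split(",")
        ends.append((dms_to_decimal(lon_text), dms_to_decimal(lat_text)))
    start, end = ends
    return start, end
```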

| Model | Layers | Neural Network Units | Activation Function | Loss Function | Optimizer | Others |
|---|---|---|---|---|---|---|
| BoBiLSTM | 2 | 128 | tanh | MSE | Adam | Dropout: 0.5 |
| BWO_BiGRU | 2 | 128 | Self-attention: sigmoid; BiGRU: tanh | MSE | Adam | Dropout: 0.5 |
| BWOS_BiGRU | 2 | 128 | Self-attention: sigmoid; BiGRU: tanh | MSE | Adam | Dropout: 0.5 |

| ATL03 Strip | Points | Depth Before Correction, Min (m) | Depth Before Correction, Max (m) | Depth After Correction, Min (m) | Depth After Correction, Max (m) | Elevation Difference, Min (m) | Elevation Difference, Max (m) |
|---|---|---|---|---|---|---|---|
| 20181231GT1L | 7660 | 2.974 | 14.001 | 1.437 | 9.639 | 1.537 | 4.362 |
| 20181231GT2L | 21,947 | 2.999 | 9.999 | 1.436 | 6.655 | 1.563 | 3.344 |
| 20181231GT3L | 10,916 | 1.998 | 10.998 | 0.689 | 7.400 | 1.309 | 3.598 |
| 20200329GT1R | 13,170 | 1.999 | 11.999 | 0.590 | 8.048 | 1.409 | 3.951 |
| 20200329GT2R | 14,046 | 1.999 | 11.999 | 0.590 | 8.047 | 1.409 | 3.952 |
| 20200329GT3R | 17,145 | 1.999 | 14.999 | 0.590 | 10.283 | 1.409 | 4.716 |
| 20201215GT1L | 26,124 | 1.999 | 14.999 | 1.090 | 10.784 | 0.909 | 4.215 |
| 20201215GT2L | 22,985 | 0.999 | 14.999 | 0.344 | 10.784 | 0.655 | 4.215 |
| 20201215GT3L | 15,595 | 2.999 | 13.999 | 1.836 | 10.038 | 1.163 | 3.961 |
| 20210113GT1L | 15,030 | 2.999 | 8.998 | 1.536 | 6.009 | 1.463 | 2.989 |
| 20210113GT2L | 24,199 | 1.999 | 9.999 | 0.790 | 6.756 | 1.209 | 3.243 |
| 20210113GT3L | 25,700 | 2.999 | 10.999 | 1.536 | 7.502 | 1.463 | 3.497 |
| 20210626GT1R | 25,416 | 2.999 | 13.998 | 0.836 | 9.037 | 2.163 | 4.962 |
| 20210626GT2R | 25,919 | 2.999 | 11.999 | 0.836 | 7.546 | 2.163 | 4.453 |
| 20210626GT3R | 16,346 | 2.999 | 9.998 | 0.836 | 6.054 | 2.163 | 3.944 |
| 20220314GT1L | 22,557 | 2.999 | 13.999 | 1.636 | 9.838 | 1.363 | 4.161 |
| 20220314GT2L | 13,859 | 2.999 | 14.999 | 1.636 | 10.583 | 1.363 | 4.416 |
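
In the table above, Elevation Difference equals Depth Before Correction minus Depth After Correction to within rounding. In every strip, the maximum difference is well above the minimum, which is consistent with a correction that scales with depth rather than a constant offset. The sketch below assumes that form: a near-nadir refraction scaling by n_air/n_water, followed by subtraction of the tide height at acquisition time. It uses 1.00029 and 1.34116, which are commonly used values at the 532 nm ICESat-2 wavelength. This section does not give the exact procedure, so the constants, `correct_depth`, and `difference_matches` are assumptions for illustration. The three rows are copied from the table to check the Elevation Difference column:

```python
N_AIR = 1.00029    # assumed refractive index of air at 532 nm
N_WATER = 1.34116  # assumed refractive index of seawater at 532 nm


def correct_depth(raw_depth_m: float, tide_m: float = 0.0) -> float:
    """Assumed near-nadir refraction correction followed by tide removal.

    raw_depth_m: photon depth below the water surface before correction (m).
    tide_m: tide height at acquisition time relative to the depth datum (m).
    """
    return raw_depth_m * N_AIR / N_WATER - tide_m


# (min, max) pairs copied from three rows of the correction table:
# depth before correction, depth after correction, elevation difference (m).
TABLE_ROWS = {
    "20181231GT1L": ((2.974, 14.001), (1.437, 9.639), (1.537, 4.362)),
    "20200329GT3R": ((1.999, 14.999), (0.590, 10.283), (1.409, 4.716)),
    "20210626GT1R": ((2.999, 13.998), (0.836, 9.037), (2.163, 4.962)),
}


def difference_matches(before: float, after: float, diff: float,
                       tol: float = 0.0015) -> bool:
    """True if the tabulated difference equals before - after within rounding."""
    return abs((before - after) - diff) <= tol
```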

| Model | Satellite Sensor | Band | Band Ratio | R² | RMSE (m) |
|---|---|---|---|---|---|
| Stumpf | EnMAP | | 35/29 | 0.89 | 0.79 |
| BoBiLSTM | EnMAP | 1, 2, 3, 4, 6, 18, 29, 35, 37, 38, 39, 42, 43, 44, 45, 46, 47, 52, 53 | 18/38, 35/29 | 0.93 | 0.64 |
| BWO_BiGRU | EnMAP | 1–67 (VNIR) | | 0.93 | 0.64 |
| BWOS_BiGRU | EnMAP | 1–67 (VNIR) | 35/29 | 0.93 | 0.63 |
| Stumpf | Sentinel-2 | | 3/2 | 0.66 | 1.41 |
| BoBiLSTM | Sentinel-2 | 2, 3, 4, 9 | 3/2 | 0.91 | 0.72 |
| BWO_BiGRU | Sentinel-2 | 1–9, 11, 12 | | 0.91 | 0.72 |
| BWOS_BiGRU | Sentinel-2 | 1–9, 11, 12 | 3/2 | 0.91 | 0.70 |
| Stumpf | Landsat 9 | | 3/2 | 0.54 | 1.64 |
| BoBiLSTM | Landsat 9 | 2, 3, 4 | 3/2 | 0.89 | 0.77 |
| BWO_BiGRU | Landsat 9 | 1–7, 10 | | 0.91 | 0.71 |
| BWOS_BiGRU | Landsat 9 | 1–7, 10 | 3/2 | 0.91 | 0.69 |

| Model | Satellite Sensor | RMSE, 0–2 m (m) | RMSE, 2–4 m (m) | RMSE, 4–6 m (m) | RMSE, 6–8 m (m) | RMSE, 8–10 m (m) | RMSE, 10–12 m (m) | RMSE, 0–12 m (m) |
|---|---|---|---|---|---|---|---|---|
| Stumpf | EnMAP | 0.99 | 0.82 | 0.72 | 0.61 | 0.95 | 2.78 | 0.79 |
| BoBiLSTM | EnMAP | 0.84 | 0.69 | 0.58 | 0.46 | 0.70 | 4.66 | 0.64 |
| BWO_BiGRU | EnMAP | 0.81 | 0.70 | 0.59 | 0.45 | 0.70 | 4.82 | 0.64 |
| BWOS_BiGRU | EnMAP | 0.83 | 0.70 | 0.58 | 0.45 | 0.69 | 4.58 | 0.63 |
| Stumpf | Sentinel-2 | 2.01 | 1.39 | 0.94 | 0.93 | 2.11 | 4.07 | 1.41 |
| BoBiLSTM | Sentinel-2 | 0.91 | 0.79 | 0.65 | 0.55 | 0.78 | 4.80 | 0.72 |
| BWO_BiGRU | Sentinel-2 | 0.90 | 0.81 | 0.64 | 0.54 | 0.80 | 4.55 | 0.72 |
| BWOS_BiGRU | Sentinel-2 | 0.88 | 0.79 | 0.63 | 0.53 | 0.76 | 4.78 | 0.70 |
| Stumpf | Landsat 9 | 2.40 | 1.71 | 0.90 | 1.00 | 2.50 | 4.48 | 1.64 |
| BoBiLSTM | Landsat 9 | 0.97 | 0.82 | 0.71 | 0.58 | 0.86 | 4.61 | 0.77 |
| BWO_BiGRU | Landsat 9 | 0.91 | 0.79 | 0.65 | 0.50 | 0.79 | 4.53 | 0.71 |
| BWOS_BiGRU | Landsat 9 | 0.87 | 0.77 | 0.64 | 0.50 | 0.78 | 4.50 | 0.69 |
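
Binning the check points by depth before computing RMSE exposes behavior that the pooled 0–12 m figure hides. For BWOS_BiGRU on EnMAP, the pooled RMSE is 0.63 m, while the 10–12 m interval reaches 4.58 m. In that deepest interval, the Stumpf model has the lowest RMSE for all three sensors. The sketch below implements the binning. It assumes points are bucketed by reference (ICESat-2) depth and that intervals are half-open, [lo, hi); this section states neither choice. `interval_rmse` is a hypothetical name:

```python
import math
from typing import Dict, List, Sequence, Tuple

# Depth intervals used in the table (m); edges assumed half-open [lo, hi).
BINS: List[Tuple[float, float]] = [(0, 2), (2, 4), (4, 6), (6, 8), (8, 10), (10, 12)]


def interval_rmse(ref: Sequence[float], pred: Sequence[float],
                  bins: Sequence[Tuple[float, float]] = BINS
                  ) -> Dict[Tuple[float, float], float]:
    """RMSE per reference-depth interval; NaN for an interval with no points."""
    result = {}
    for lo, hi in bins:
        errors = [p - r for r, p in zip(ref, pred) if lo <= r < hi]
        result[(lo, hi)] = (math.sqrt(sum(e * e for e in errors) / len(errors))
                            if errors else float("nan"))
    return result
```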

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xi, X.; Guo, G.; Gu, J. Band Weight-Optimized BiGRU Model for Large-Area Bathymetry Inversion Using Satellite Images. J. Mar. Sci. Eng. 2025, 13, 246. https://doi.org/10.3390/jmse13020246
