OWTDNet: A Novel CNN-Mamba Fusion Network for Offshore Wind Turbine Detection in High-Resolution Remote Sensing Images

Abstract

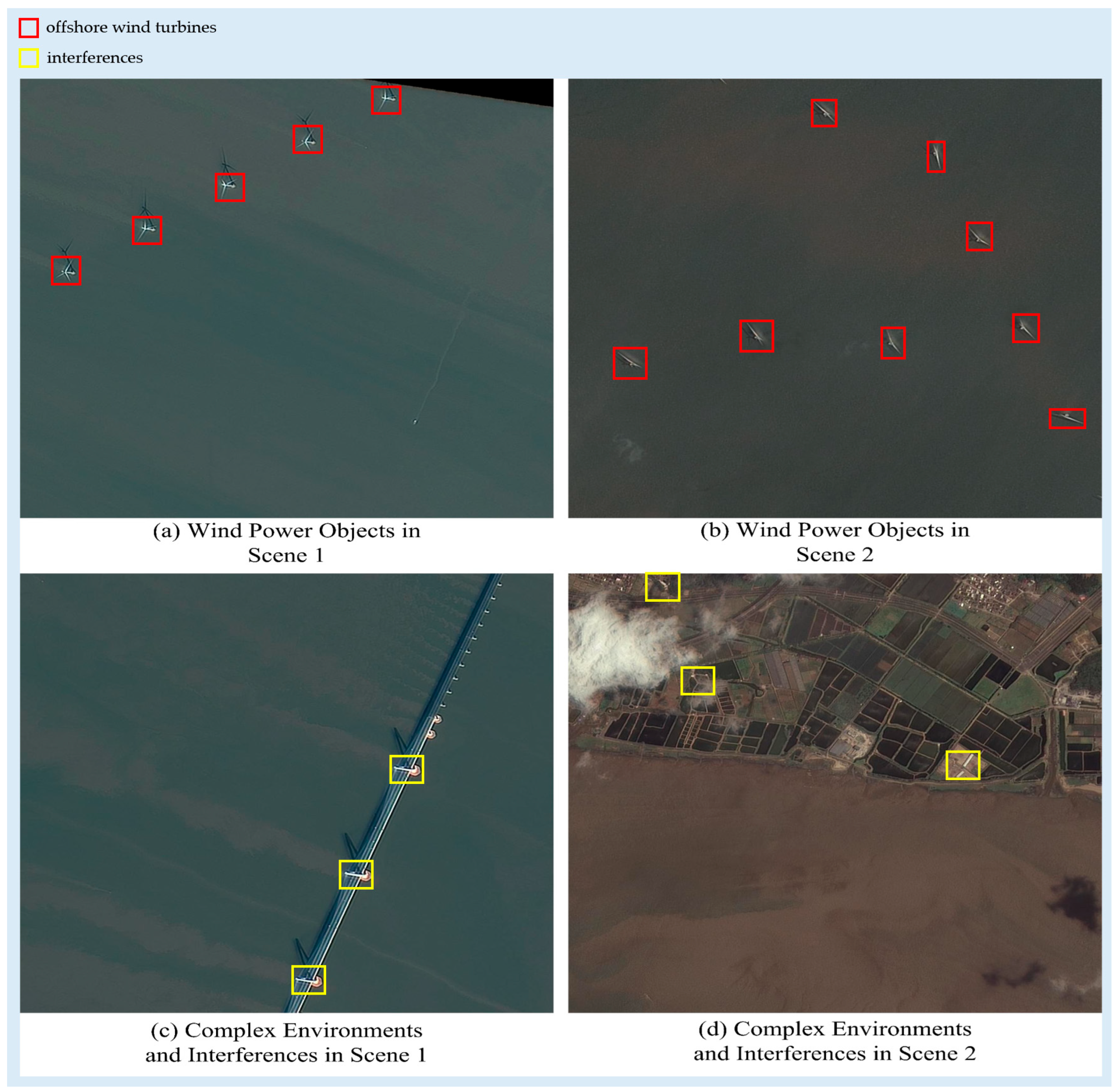

1. Introduction

- (1)

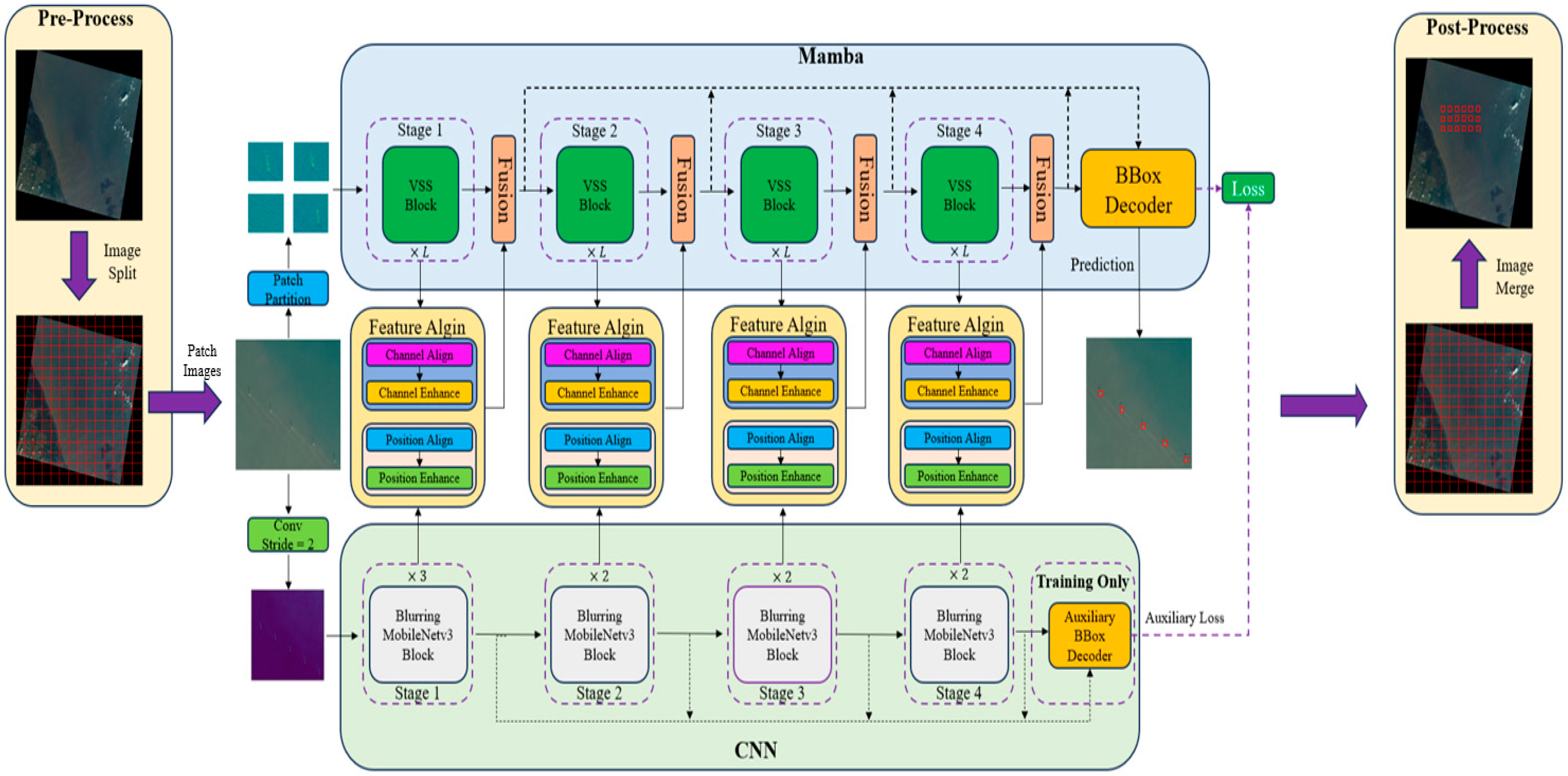

- We propose a novel offshore wind turbine detection network called OWTDNet, which synergistically integrates Mamba and CNNs as feature encoding backbones.

- (2)

- An auxiliary lightweight encoding branch termed B-Mv3 is introduced to enrich the local feature information related to wind turbine targets.

- (3)

- We introduce a feature alignment module to mitigate disparities between Mamba and CNN features, focusing on both channel and position dimensions.

- (4)

- A feature enhancement approach is proposed to amplify the response weights of small-sized OWTs targets against backgrounds and interferences.

- (5)

- Extensive experimental results demonstrate that our OWTDNet achieves an optimal balance between performance and cost, and the proposed algorithm has been successfully applied in a real-world ocean monitoring system.

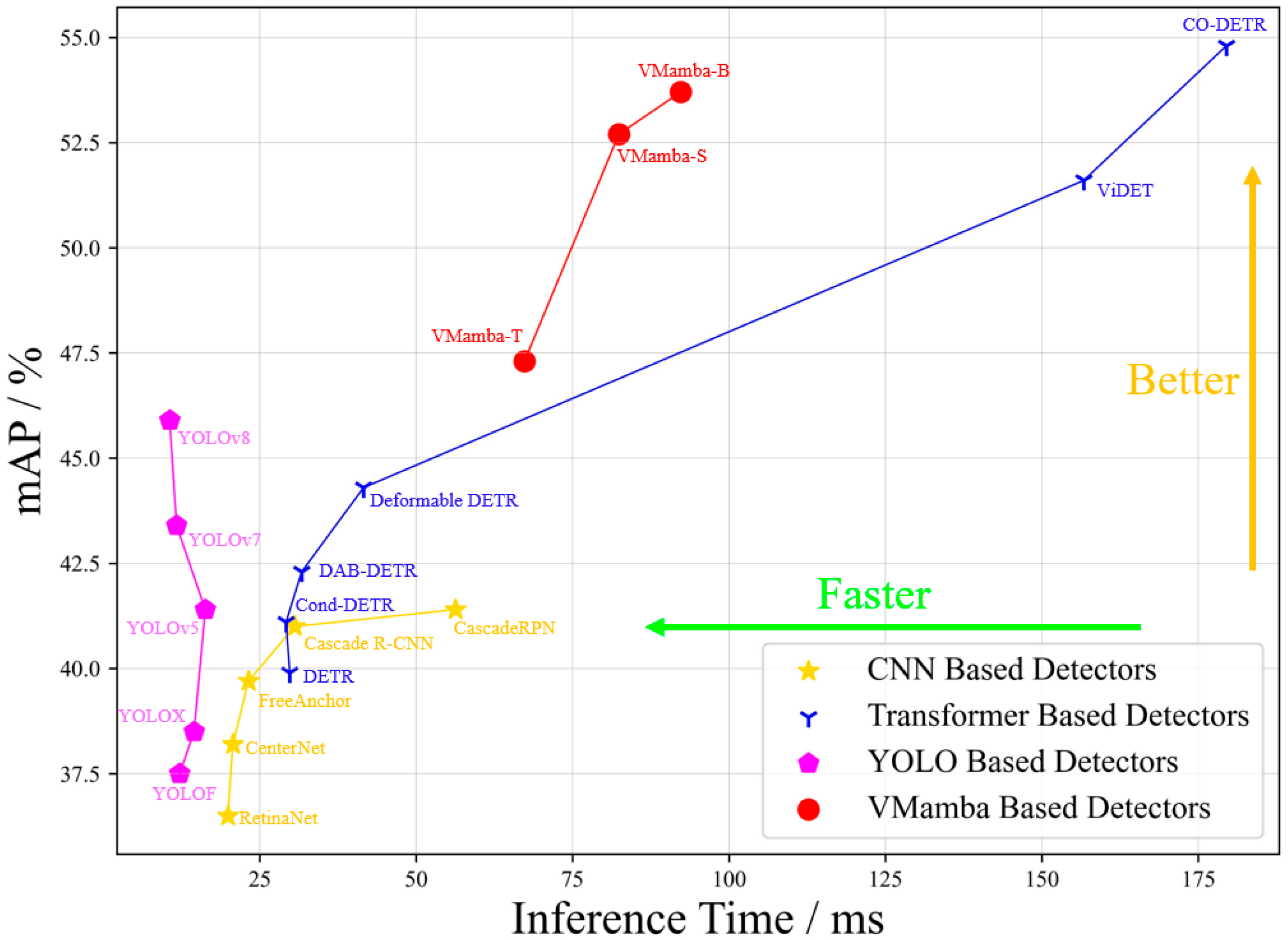

2. Related Works

2.1. CNN-Based Object Detection

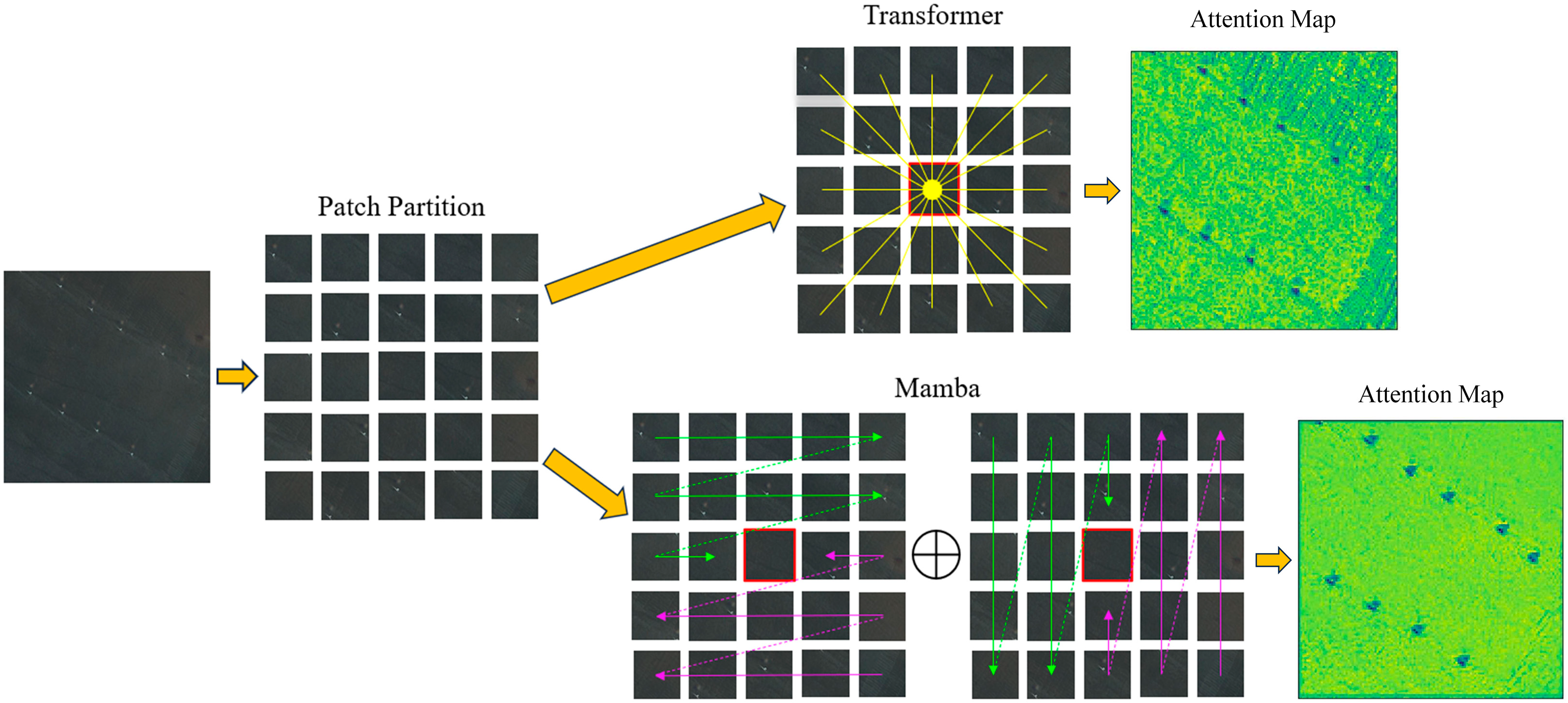

2.2. Transformer-Based Object Detection

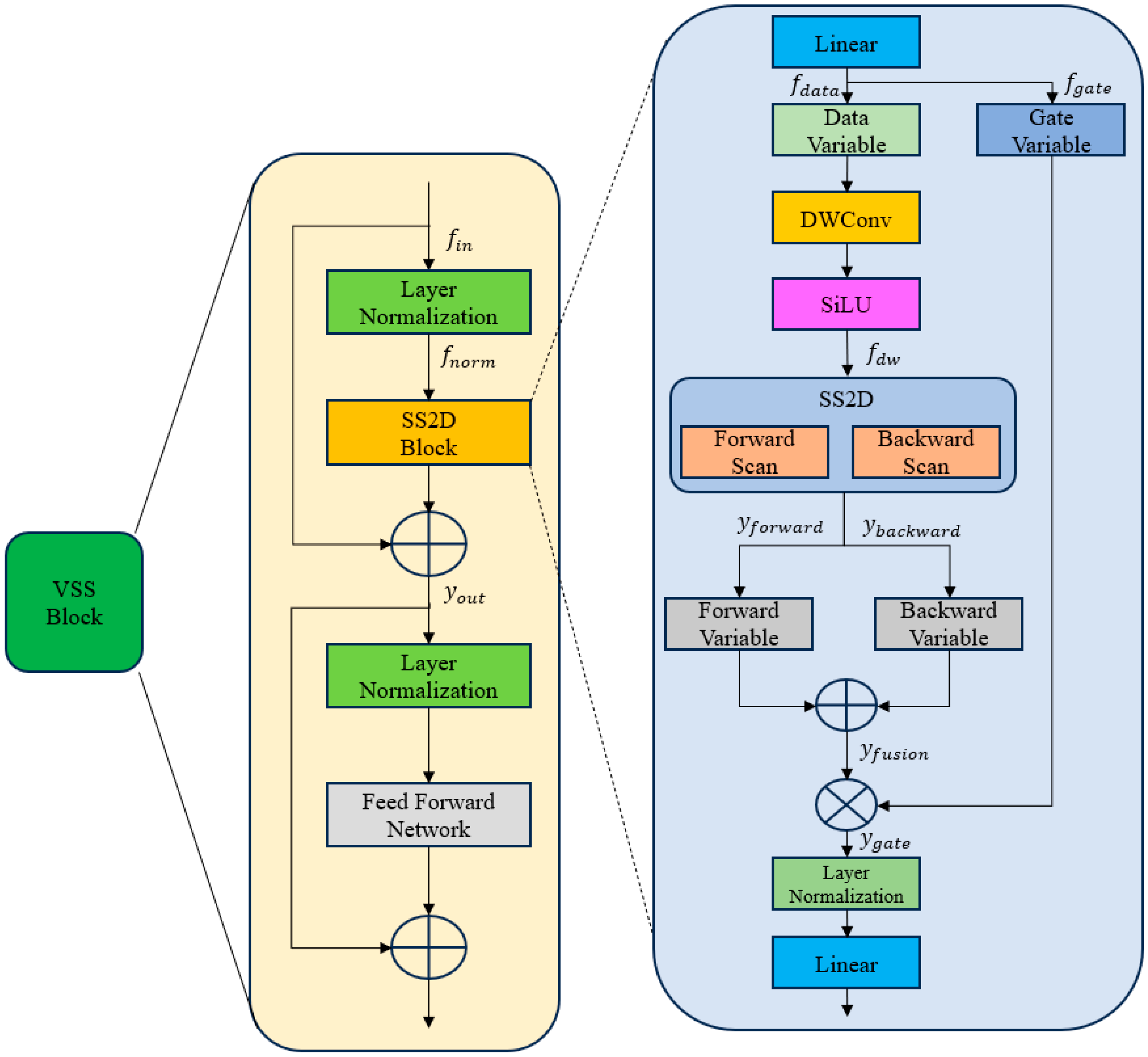

2.3. Mamba-Based Object Detection

3. Materials and Methods

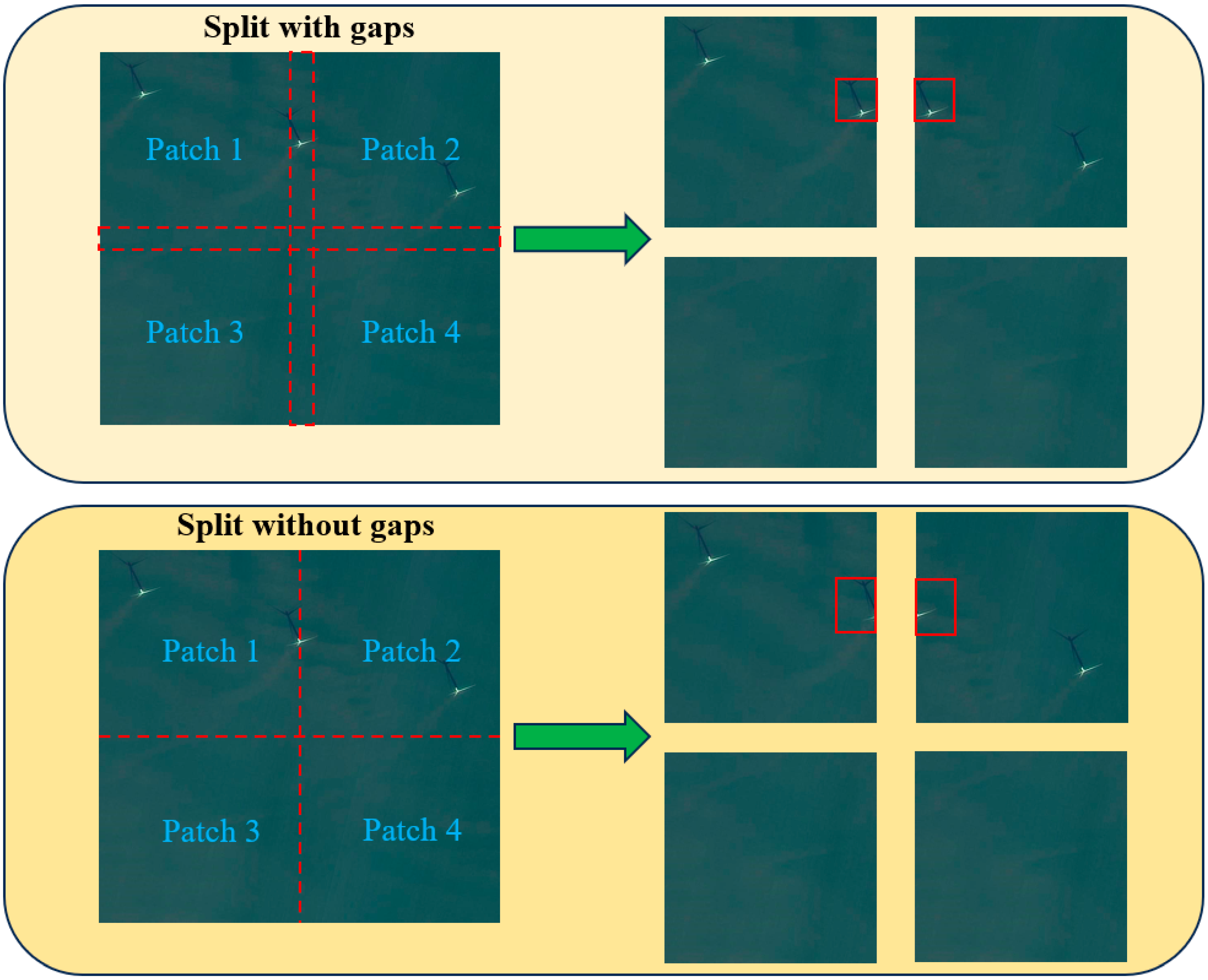

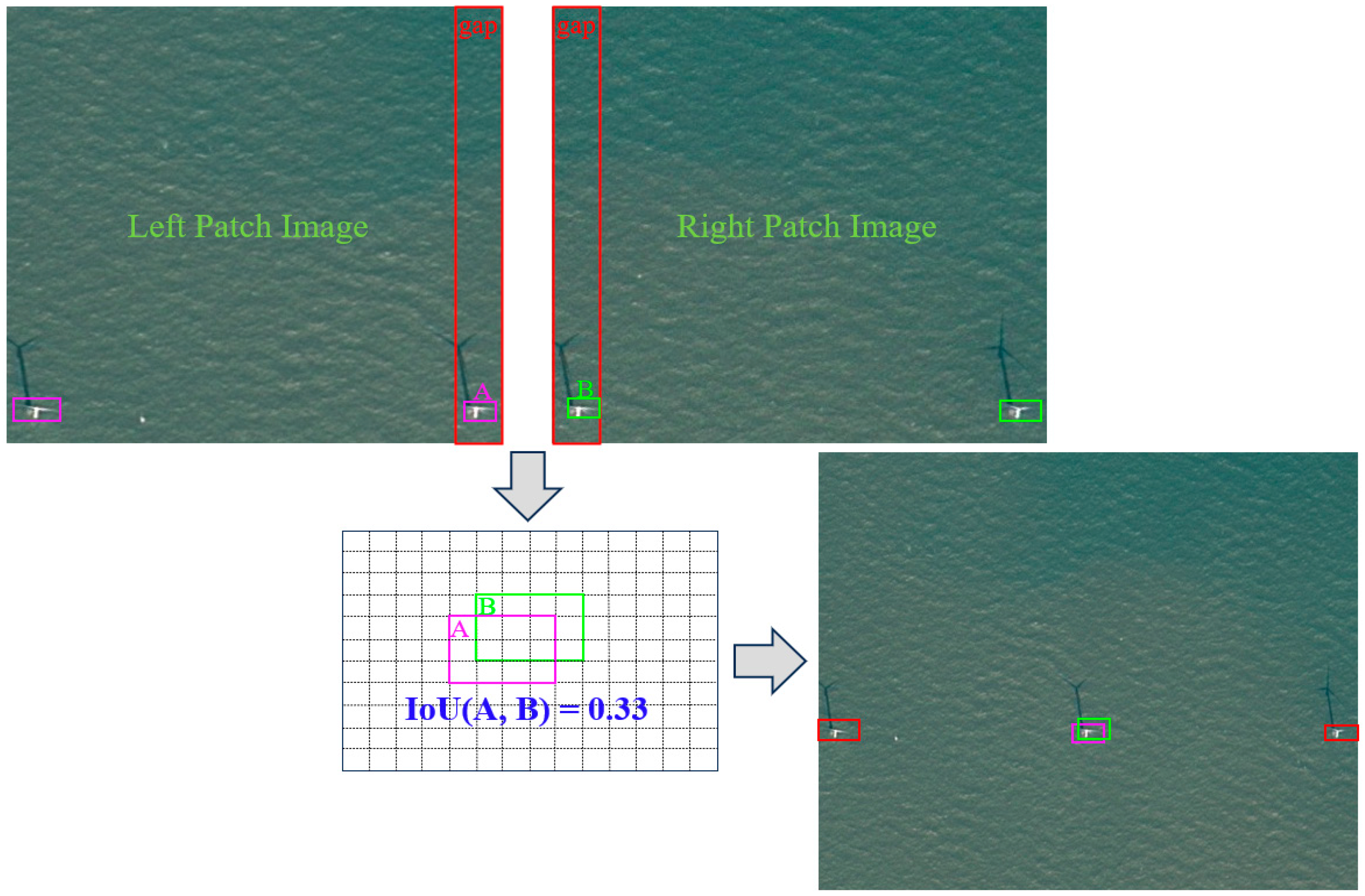

3.1. Pre-Processing of High-Resolution Remote Sensing Images

3.2. Review of Mamba
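
As a reminder of the recurrence that Mamba builds on, the following minimal sketch runs a single-channel, diagonal state space model with zero-order-hold discretization. It is illustrative only: the function name `ssm_scan`, the scalar input sequence, and the fixed step size `delta` are assumptions made here. Mamba itself makes the step size and the B and C projections input-dependent (the selective mechanism) and uses a hardware-aware scan rather than a Python loop.

```python
import math
from typing import List, Sequence


def ssm_scan(x: Sequence[float], a: Sequence[float], b: Sequence[float],
             c: Sequence[float], delta: float) -> List[float]:
    """Run h_t = A_bar * h_{t-1} + B_bar * x_t and y_t = C . h_t.

    `a`, `b`, `c` hold the diagonal of A and the entries of B and C,
    one value per state dimension; every entry of `a` must be non-zero.
    Zero-order hold: A_bar = exp(delta * a), B_bar = (A_bar - 1) / a * b.
    """
    a_bar = [math.exp(delta * ai) for ai in a]
    b_bar = [(abi - 1.0) / ai * bi for abi, ai, bi in zip(a_bar, a, b)]
    h = [0.0] * len(a)  # hidden state starts at zero
    ys = []
    for xt in x:
        # Linear state update followed by a linear read-out.
        h = [ab * hi + bb * xt for ab, hi, bb in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


if __name__ == "__main__":
    # With a < 0 the response to a unit impulse decays geometrically.
    print(ssm_scan([1.0, 0.0, 0.0], a=[-1.0], b=[1.0], c=[1.0], delta=0.5))
```

Because each step only touches the previous state, the cost grows linearly with sequence length, which is the property that motivates pairing such a branch with a CNN on large images.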

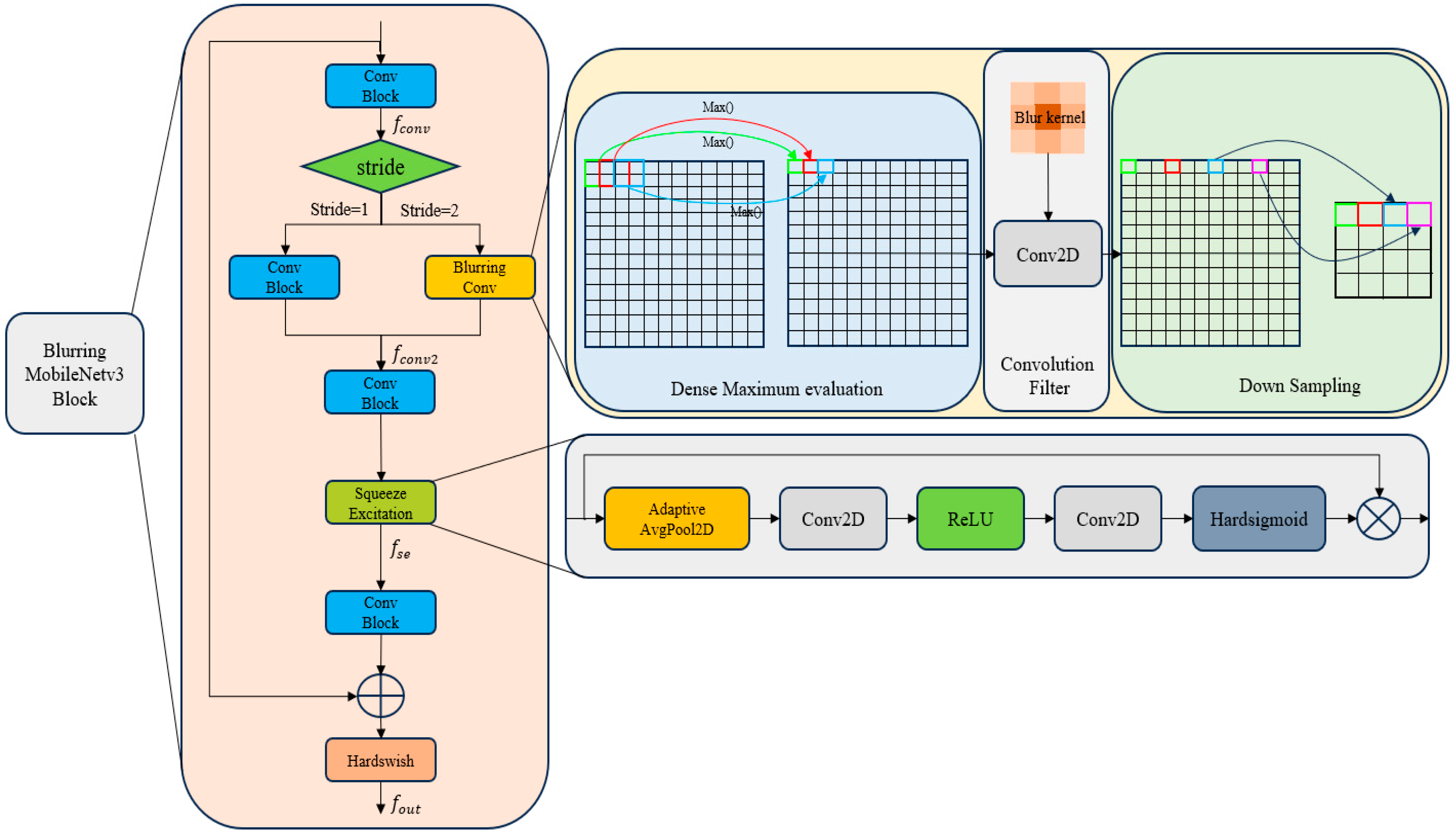

3.3. CNN Encoding Network

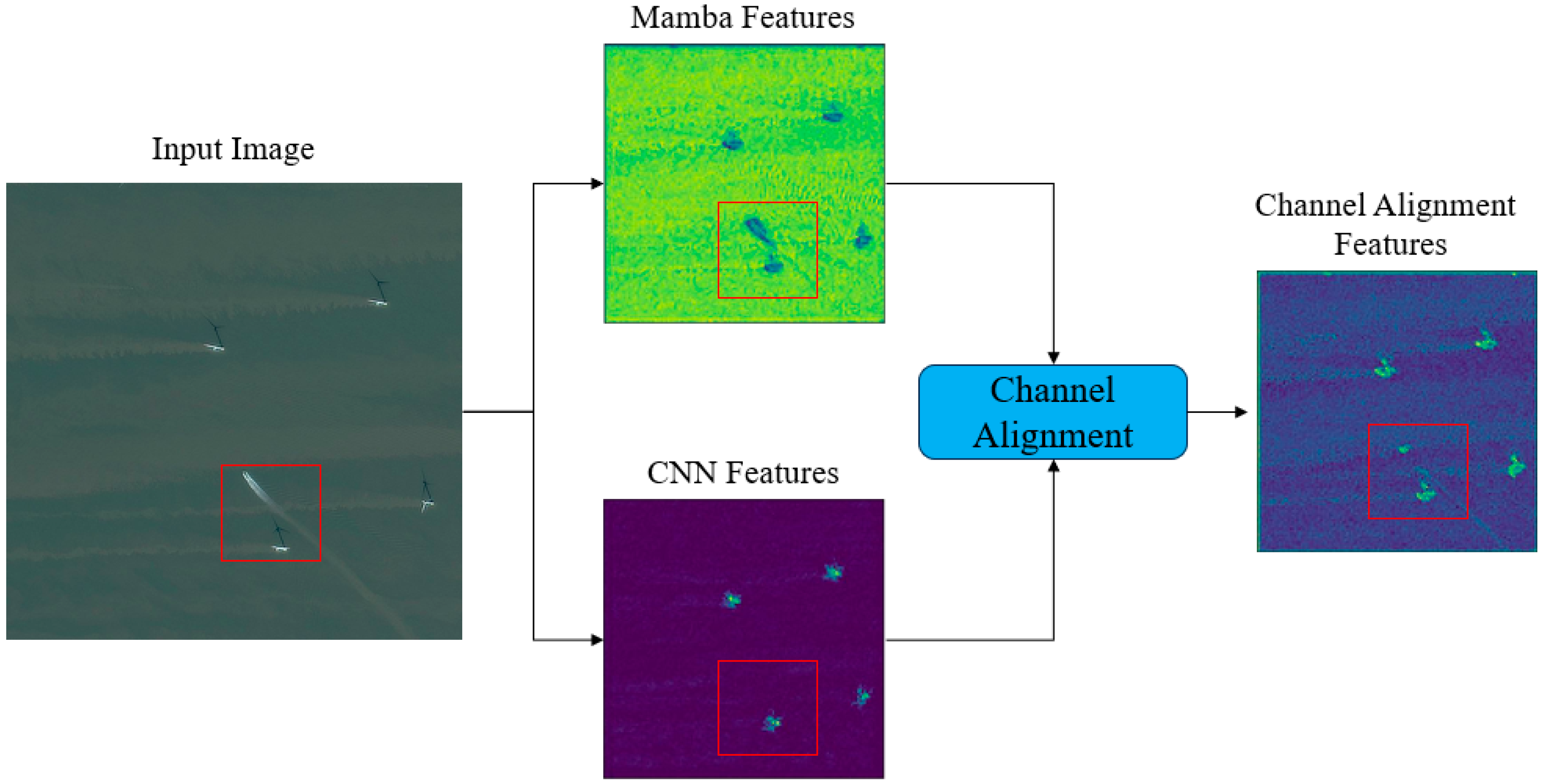

3.4. Feature Alignment Module

3.4.1. Channel Alignment Process

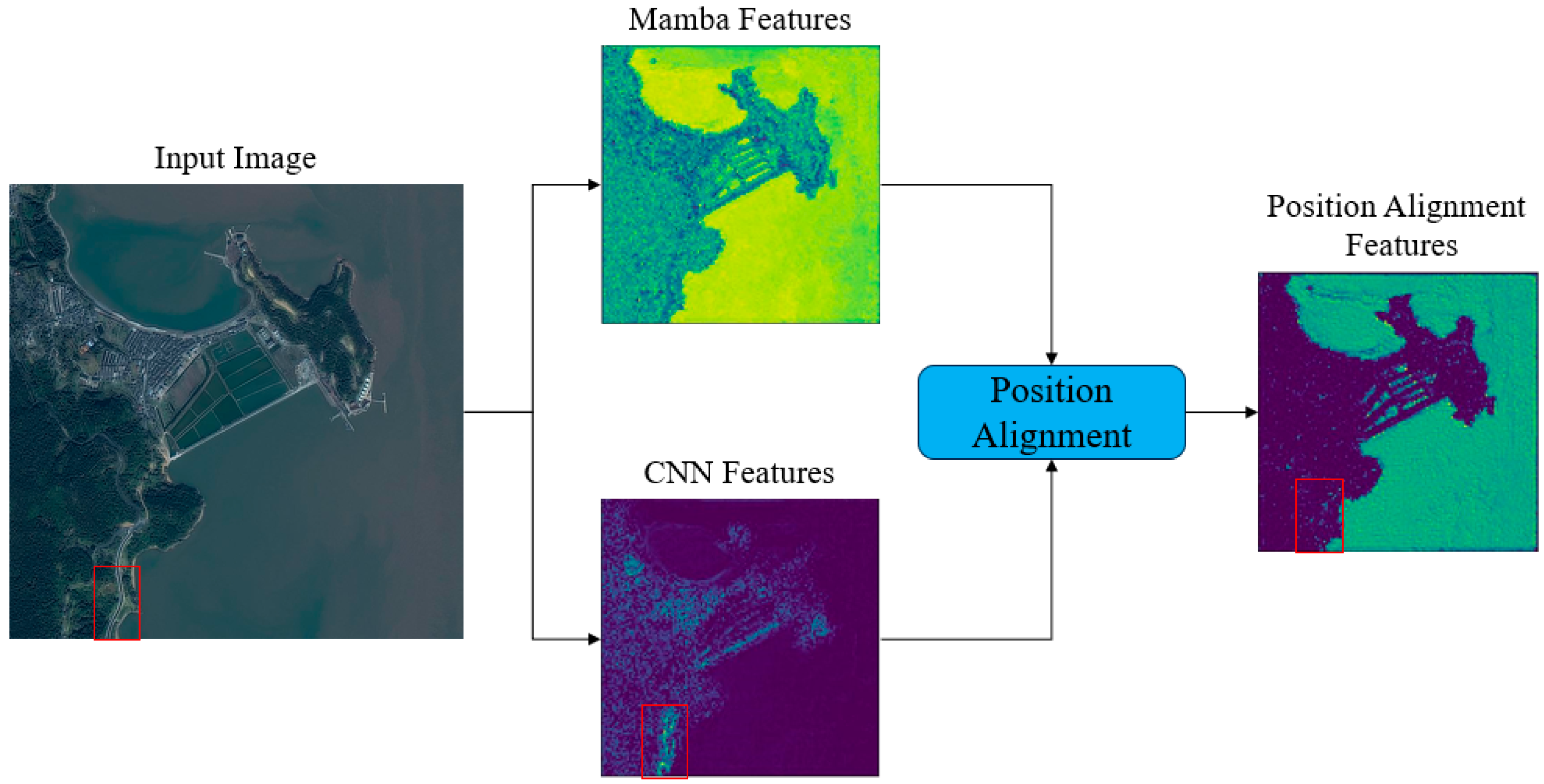

3.4.2. Positional Alignment Process

3.5. Loss Functions
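
The ablation in Section 4.4.5 compares IoU, GIoU, DIoU and CIoU regression losses. The sketch below computes these four quantities for a single pair of axis-aligned boxes, following the standard definitions from the GIoU and Distance-IoU papers in the reference list. The function name `box_regression_losses` and the `(x1, y1, x2, y2)` box layout are assumptions made for illustration; this is not the training code used for OWTDNet.

```python
import math
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2, y1 < y2


def box_regression_losses(pred: Box, gt: Box) -> Dict[str, float]:
    """Return 1 - IoU, 1 - GIoU, 1 - DIoU and 1 - CIoU for two boxes."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt

    # Intersection and union areas.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = (px2 - px1) * (py2 - py1) + (gx2 - gx1) * (gy2 - gy1) - inter
    iou = inter / union

    # Smallest axis-aligned box enclosing both boxes (GIoU penalty).
    enc_w = max(px2, gx2) - min(px1, gx1)
    enc_h = max(py2, gy2) - min(py1, gy1)
    enc_area = enc_w * enc_h
    giou = iou - (enc_area - union) / enc_area

    # Squared center distance over squared enclosing diagonal (DIoU penalty).
    dx = (px1 + px2 - gx1 - gx2) / 2.0
    dy = (py1 + py2 - gy1 - gy2) / 2.0
    diou = iou - (dx * dx + dy * dy) / (enc_w ** 2 + enc_h ** 2)

    # Aspect-ratio consistency term (CIoU penalty).
    v = (4.0 / math.pi ** 2) * (
        math.atan((gx2 - gx1) / (gy2 - gy1)) - math.atan((px2 - px1) / (py2 - py1))
    ) ** 2
    alpha = v / ((1.0 - iou) + v) if v > 0.0 else 0.0
    ciou = diou - alpha * v

    return {"IoU": 1.0 - iou, "GIoU": 1.0 - giou,
            "DIoU": 1.0 - diou, "CIoU": 1.0 - ciou}


if __name__ == "__main__":
    print(box_regression_losses((0, 0, 2, 2), (1, 1, 3, 3)))
```

For two boxes with the same aspect ratio the CIoU term vanishes, so CIoU and DIoU coincide; the variants differ mainly in how they penalize poorly overlapping or misaligned predictions.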

4. Results

4.1. Satellite Datasets

4.2. Experimental Setups

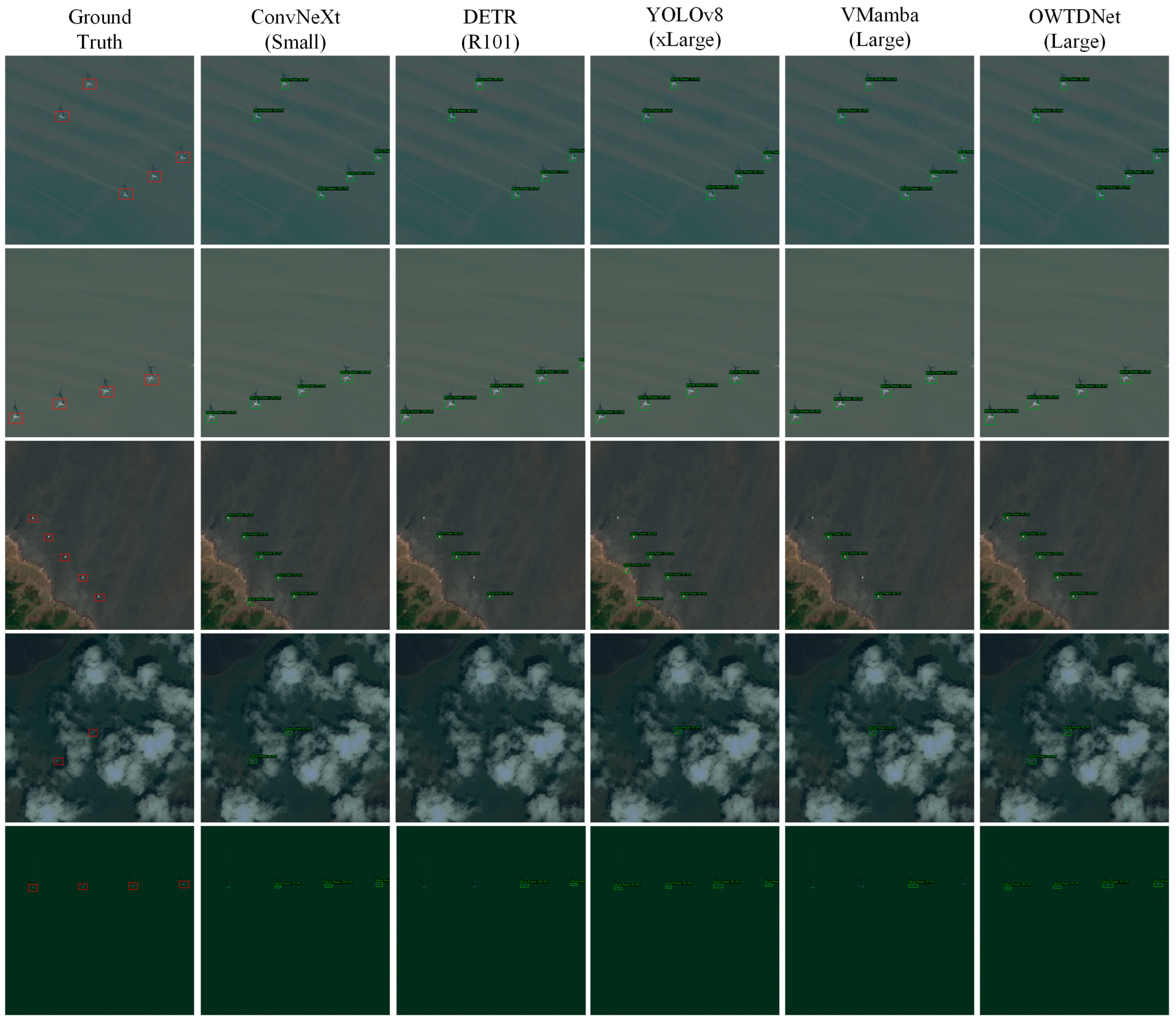

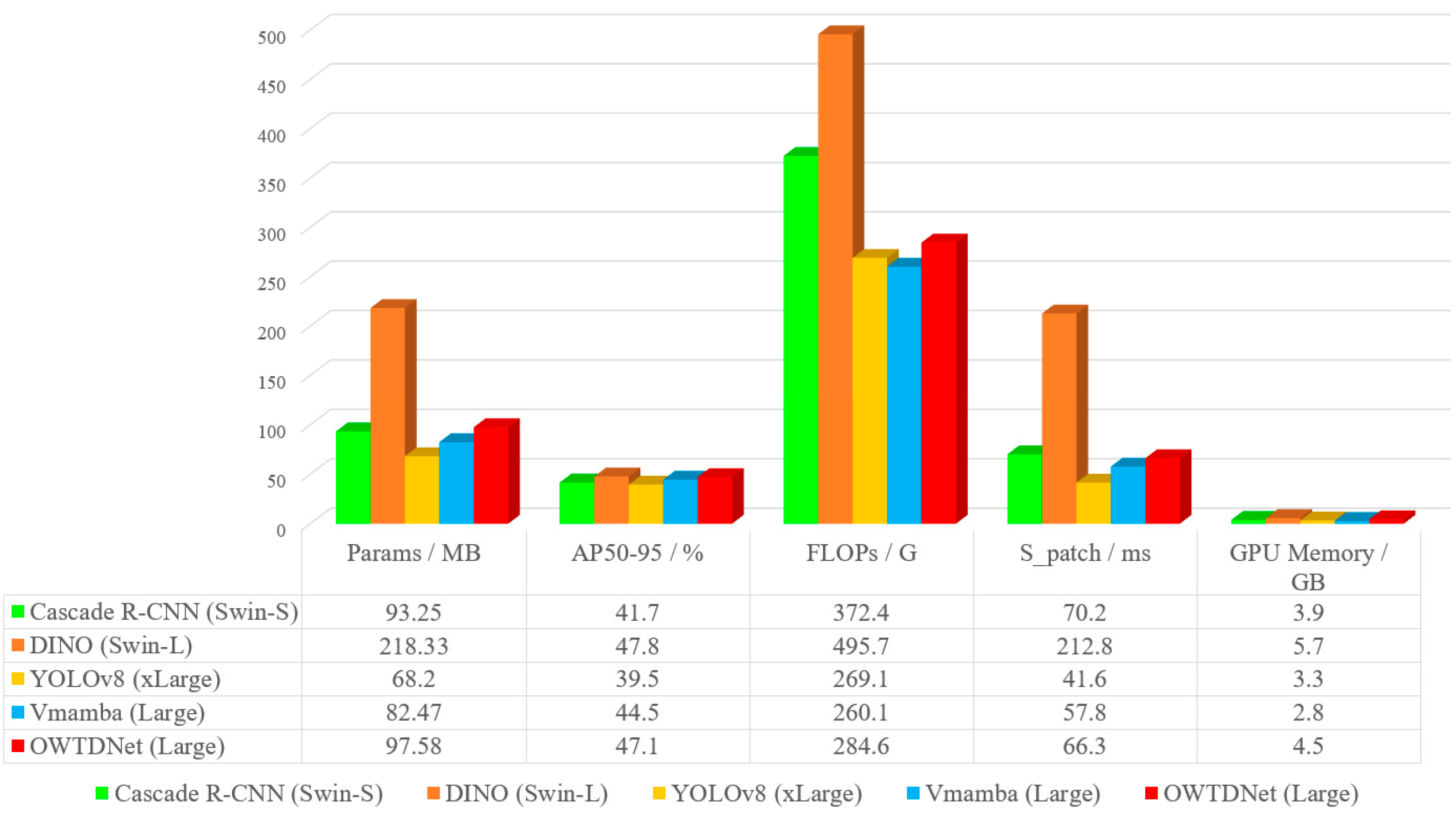

4.3. Main Results

4.4. Ablation Study

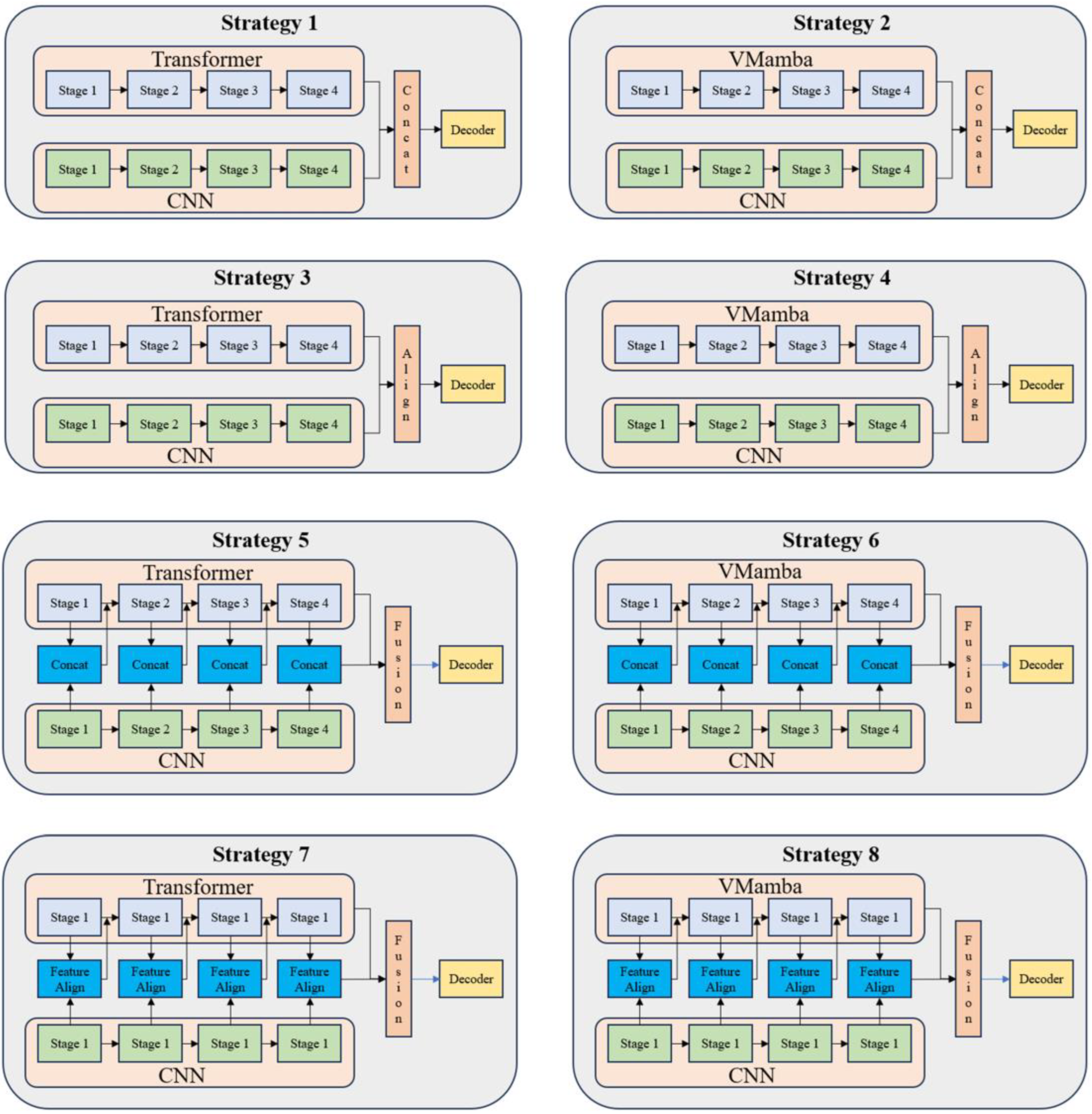

4.4.1. Discussion of Splitting Strategy

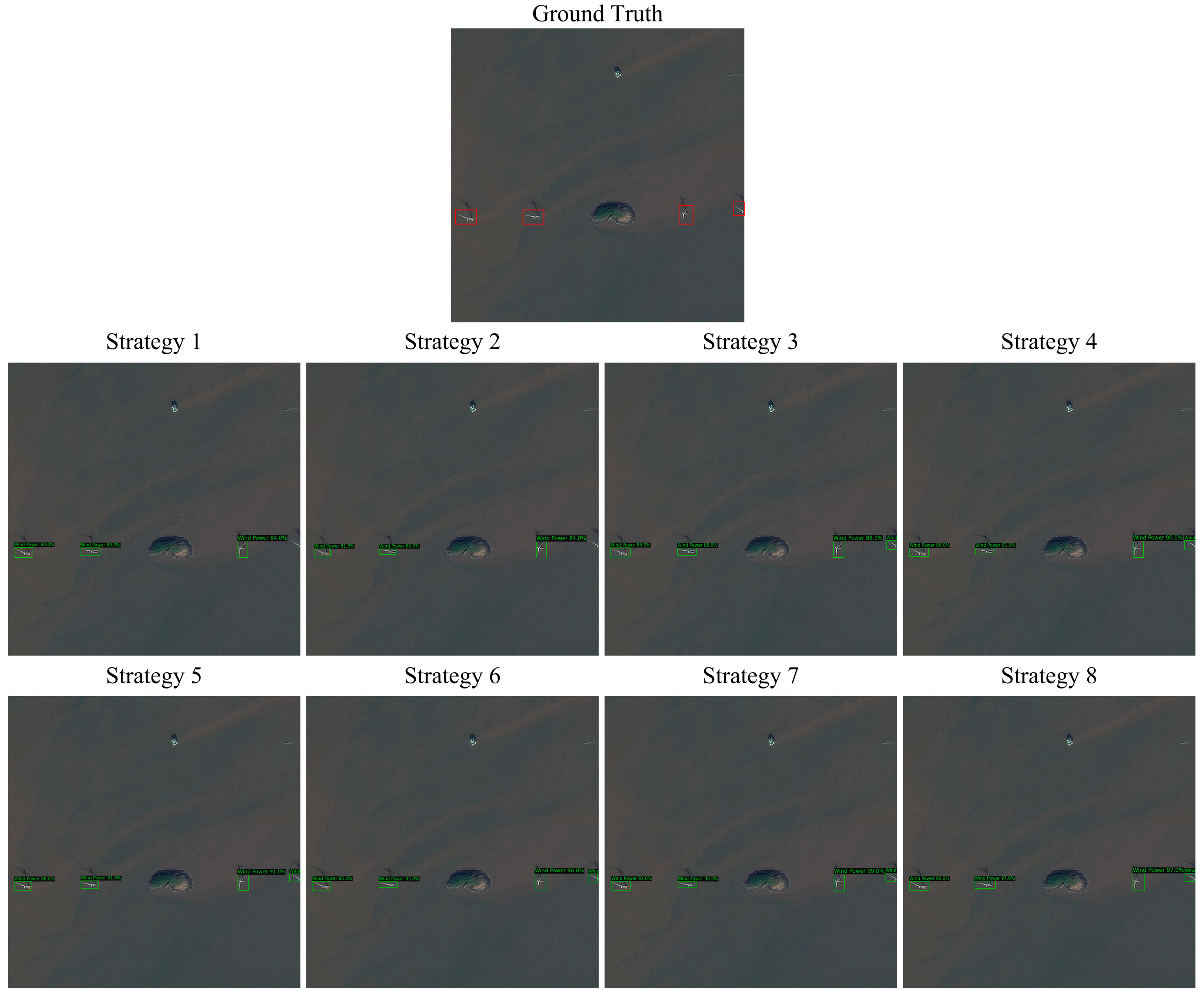

4.4.2. Discussion of Feature Alignment

4.4.3. Discussion of Blurring Convolution
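
The text extracted here does not give the exact form of the blurring convolution. Assuming it follows the blur-then-subsample idea of the anti-aliasing work in the reference list (Zhang, 2019), the one-dimensional sketch below shows why low-pass filtering before striding makes the output less sensitive to a one-sample shift. The binomial kernel `[1, 2, 1] / 4`, the edge-replication padding, and the function names are illustrative assumptions.

```python
from typing import List, Sequence


def subsample(x: Sequence[float], stride: int = 2) -> List[float]:
    """Plain strided downsampling: keep every `stride`-th sample."""
    return list(x[::stride])


def blur(x: Sequence[float]) -> List[float]:
    """Low-pass filter with the kernel [1, 2, 1] / 4, replicating the edges."""
    n = len(x)
    out = []
    for i in range(n):
        left = x[max(i - 1, 0)]
        right = x[min(i + 1, n - 1)]
        out.append(0.25 * left + 0.5 * x[i] + 0.25 * right)
    return out


def blur_pool(x: Sequence[float], stride: int = 2) -> List[float]:
    """Anti-aliased downsampling: blur first, then subsample."""
    return subsample(blur(x), stride)


if __name__ == "__main__":
    signal = [0.0, 1.0] * 4                 # highest-frequency pattern
    shifted = signal[1:] + signal[:1]       # same pattern, shifted by one sample
    print(subsample(signal), subsample(shifted))
    print(blur_pool(signal), blur_pool(shifted))
```

Plain subsampling maps the two inputs to completely different outputs, whereas the blurred versions agree everywhere except at the boundary sample, which is affected by the padding.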

4.4.4. Discussion of Feature Enhancement in Alignment Module

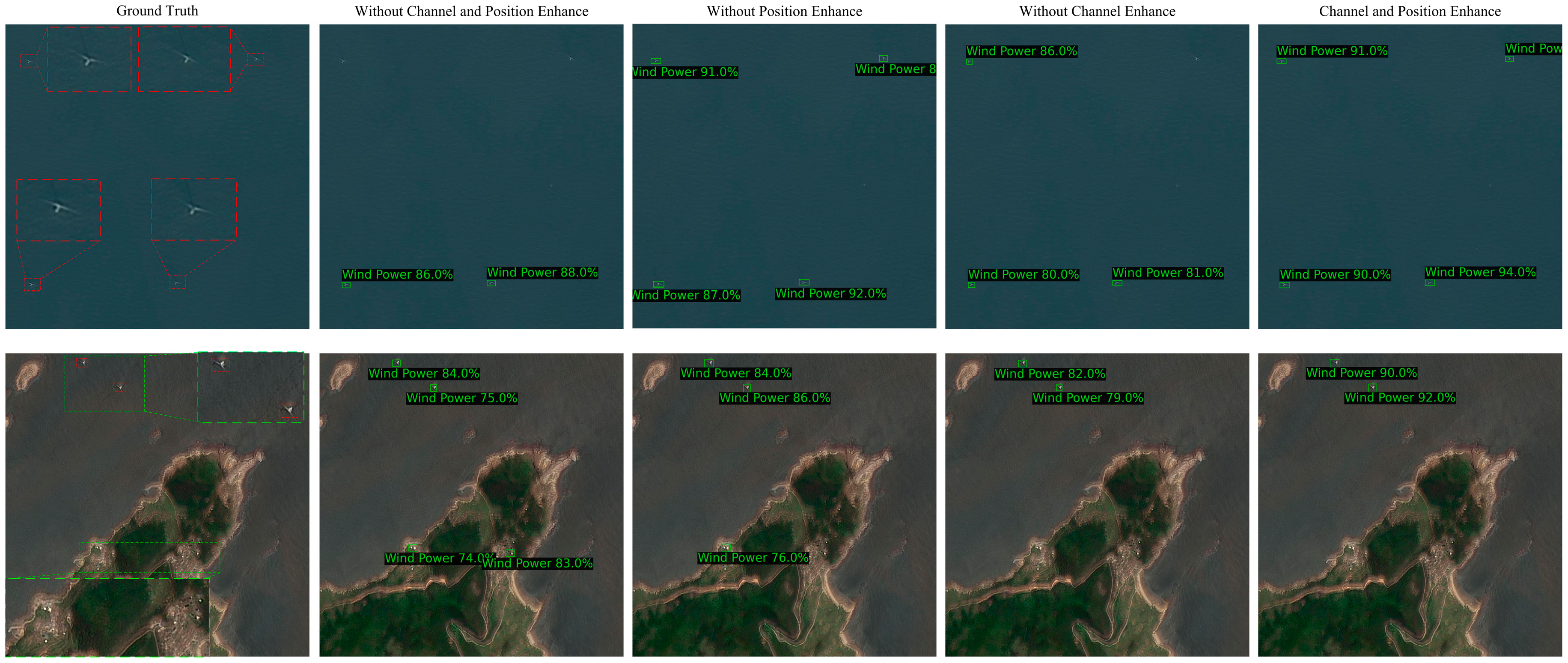

4.4.5. Discussion of Loss Functions

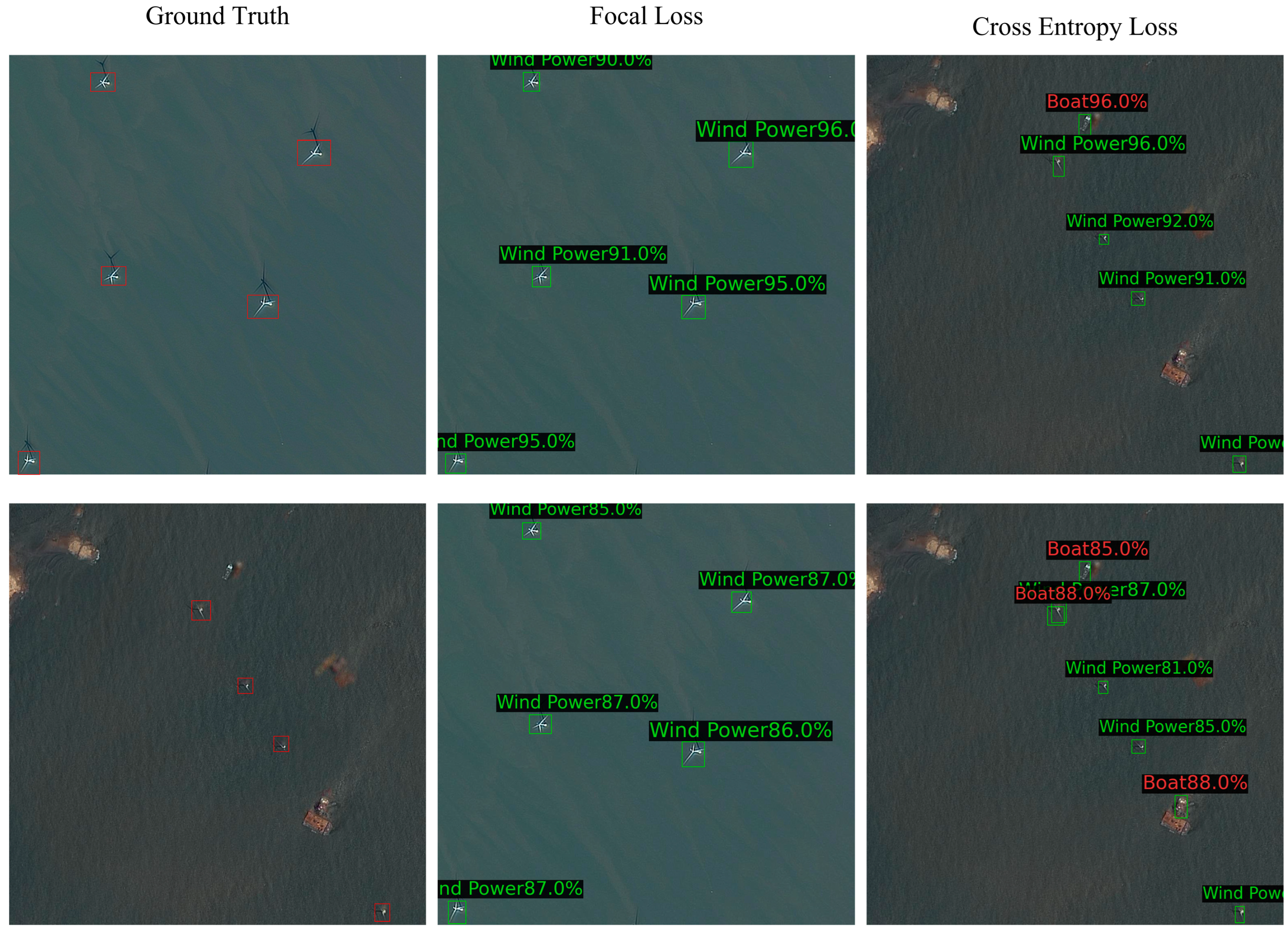

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, J.; Jia, X.; Hu, J.; Tan, K. Moving vehicle detection for remote sensing video surveillance with nonstationary satellite platform. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5185–5198.

- Melillos, G.; Themistocleous, K.; Danezis, C.; Michaelides, S.; Hadjimitsis, D.G.; Jacobsen, S.; Tings, B. The use of remote sensing for maritime surveillance for security and safety in Cyprus. In Detection and Sensing of Mines, Explosive Objects, and Obscured Targets XXV; SPIE: Washington, DC, USA, 2020; Volume 11418, pp. 141–152.

- Zhou, H.; Yuan, X.; Zhou, H.; Shen, H.; Ma, L.; Sun, L.; Sun, H. Surveillance of pine wilt disease by high resolution satellite. J. For. Res. 2022, 33, 1401–1408.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Wang, Y.; Bashir, S.M.A.; Khan, M.; Ullah, Q.; Wang, R.; Song, Y.; Niu, Y. Remote sensing image super-resolution and object detection: Benchmark and state of the art. Expert Syst. Appl. 2022, 197, 116793.

- Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. Reppoints: Point set representation for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9657–9666.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 10–17 October 2021; pp. 3520–3529.

- Li, W.; Chen, Y.; Hu, K.; Zhu, J. Oriented reppoints for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 1829–1838.

- Li, X.; Deng, J.; Fang, Y. Few-shot object detection on remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5601614.

- Lu, X.; Ji, J.; Xing, Z.; Miao, Q. Attention and feature fusion SSD for remote sensing object detection. IEEE Trans. Instrum. Meas. 2021, 70, 1010309.

- Shivappriya, S.N.; Priyadarsini, M.J.P.; Stateczny, A.; Puttamadappa, C.; Parameshachari, B.D. Cascade object detection and remote sensing object detection method based on trainable activation function. Remote Sens. 2021, 13, 200.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Kaur, R.; Singh, S. A comprehensive review of object detection with deep learning. Digit. Signal Process. 2023, 132, 103812.

- Amjoud, A.B.; Amrouch, M. Object detection using deep learning, CNNs and vision Transformers: A review. IEEE Access 2023, 11, 35479–35516.

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, ICCV, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 11976–11986.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Xie, T.; Fang, J.; Michael, K.; Lorna; Abhiram, V.; et al. ultralytics/yolov5: v6.1—TensorRT, TensorFlow Edge TPU and OpenVINO Export and Inference; Zenodo: Geneva, Switzerland, 2022.

- Chen, Q.; Wang, Y.; Yang, T.; Zhang, X.; Cheng, J.; Sun, J. You only look one-level feature. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 13039–13048.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

- Wu, T.; Dong, Y. YOLO-SE: Improved YOLOv8 for remote sensing object detection and recognition. Appl. Sci. 2023, 13, 12977.

- Park, M.H.; Choi, J.H.; Lee, W.J. Object detection for various types of vessels using the YOLO algorithm. J. Adv. Mar. Eng. Technol. 2024, 48, 81–88.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding box regression loss with dynamic focusing mechanism. arXiv 2023, arXiv:2301.10051.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Meng, D.; Chen, X.; Fan, Z.; Zeng, G.; Li, H.; Yuan, Y.; Wang, J. Conditional detr for fast training convergence. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 10–17 October 2021; pp. 3651–3660.

- Liu, S.; Li, F.; Zhang, H.; Yang, X.; Qi, X.; Su, H.; Zhang, L. Dab-detr: Dynamic anchor boxes are better queries for detr. arXiv 2022, arXiv:2201.12329.

- Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Shum, H.Y. Dino: Detr with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

- Wang, Z.; Li, C.; Xu, H.; Zhu, X. Mamba YOLO: SSMs-Based YOLO For Object Detection. arXiv 2024, arXiv:2406.05835.

- Zhou, W.; Kamata, S.I.; Wang, H.; Wong, M.S. Mamba-in-Mamba: Centralized Mamba-Cross-Scan in Tokenized Mamba Model for Hyperspectral Image Classification. arXiv 2024, arXiv:2405.12003.

- Dong, W.; Zhu, H.; Lin, S.; Luo, X.; Shen, Y.; Liu, X.; Zhang, B. Fusion-mamba for cross-modality object detection. arXiv 2024, arXiv:2404.09146.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

- Xie, J.; Tian, T.; Hu, R.; Yang, X.; Xu, Y.; Zan, L. A Novel Detector for Wind Turbines in Wide-Ranging, Multi-Scene Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 17725–17738.

- Zhao, S.; Chen, H.; Zhang, X.; Xiao, P.; Bai, L.; Ouyang, W. Rs-mamba for large remote sensing image dense prediction. arXiv 2024, arXiv:2404.02668.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Volume 18, pp. 234–241.

- Liu, M.; Dan, J.; Lu, Z.; Yu, Y.; Li, Y.; Li, X. CM-UNet: Hybrid CNN-Mamba UNet for Remote Sensing Image Semantic Segmentation. arXiv 2024, arXiv:2405.10530.

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. Vmamba: Visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

- Zhang, R. Making convolutional networks shift-invariant again. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 7324–7334.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Adam, H. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Zheng, K.; Chen, Y.; Wang, J.; Liu, Z.; Bao, S.; Zhan, J.; Shen, N. Enhancing Remote Sensing Semantic Segmentation Accuracy and Efficiency Through Transformer and Knowledge Distillation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 4074–4092.

- Wang, Y.; Zhang, T.; Zhao, L.; Hu, L.; Wang, Z.; Niu, Z.; Cheng, P.; Chen, K.; Zeng, X.; Wang, Z. Ringmo-lite: A remote sensing lightweight network with cnn-transformer hybrid framework. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5608420.

| Splitting Strategies | Advantages | Disadvantages |
|---|---|---|
| 1 | | |
| 2 | | |

| Satellite Name | Spatial Resolution | Longitude Range | Latitude Range |
|---|---|---|---|
| GF1 | Full color with 2 m | | |
| GF2 | Full color with 1 m | | |
| GF7 | Full color with 0.8 m | | |
| ZY1 | Full color with 5 m | | |
| ZY3 | Full color with 2.1 m | | |

| Platform | Name | Description |
|---|---|---|
| Train Hardware | CPU | Intel Xeon 6330 2.0 GHz |
| Train Hardware | GPU | NVIDIA RTX4090 48 GB × 4 |
| Train Hardware | Memory | DDR5 6800 MHz 64 GB |
| Deploy Hardware | CPU | Intel 12700KF 3.6 GHz |
| Deploy Hardware | GPU | NVIDIA RTX3090 24 GB × 1 |
| Deploy Hardware | Memory | DDR4 3200 MHz 64 GB |
| Software | Anaconda | Version 4.12.0 |
| Software | Python | Version 3.8.5 |
| Software | CUDA | Version 12.1 |
| Software | cuDNN | Version 8.9.3 |
| Software | PyTorch | Version 2.1.0 |
| Software | MMCV | Version 2.1.0 |
| Software | ultralytics | Version 8.0.6 |
| Software | VMamba | Version 20240525 |
| Software | MMDetection | Version 3.3.0 |

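| Method Category | Method Name | Proposed Year | Learning Rate | Train Epochs | Batch Size | Augmentations |
|---|---|---|---|---|---|---|
| CNN | Faster R-CNN | 2015 | 0.001 | 30 | 32 | RandomResize, RandomCrop, RandomFlip |
| CNN | RetinaNet | 2017 | 0.005 | 30 | 32 | RandomResize, RandomCrop, RandomFlip |
| CNN | Cascade R-CNN [53] | 2018 | 0.002 | 30 | 32 | RandomResize, RandomCrop, RandomFlip |
| CNN | CenterNet | 2019 | 0.001 | 30 | 32 | RandomResize, RandomCrop, RandomFlip |
| CNN | ConvNeXt | 2022 | 0.0001 | 30 | 32 | RandomResize, RandomCrop, RandomFlip |
| Transformer | DETR | 2020 | 0.0001 | 45 | 8 | RandomResize, RandomCrop, RandomFlip |
| Transformer | Deformable DETR | 2021 | 0.0001 | 45 | 8 | RandomResize, RandomCrop, RandomFlip |
| Transformer | Conditional DETR | 2021 | 0.0001 | 45 | 8 | RandomResize, RandomCrop, RandomFlip |
| Transformer | DAB-DETR | 2022 | 0.0001 | 45 | 8 | RandomResize, RandomCrop, RandomFlip |
| Transformer | DINO | 2023 | 0.0001 | 45 | 8 | RandomResize, RandomCrop, RandomFlip |
| YOLO | YOLOv3 | 2018 | 0.001 | 50 | 64 | RandomResize, RandomCrop, RandomFlip |
| YOLO | YOLOF | 2021 | 0.001 | 50 | 64 | RandomResize, RandomCrop, RandomFlip |
| YOLO | YOLOX | 2021 | 0.001 | 50 | 64 | RandomResize, RandomCrop, RandomFlip |
| YOLO | YOLOv8 | 2023 | 0.001 | 50 | 64 | RandomResize, RandomCrop, RandomFlip |
| Mamba | VMamba | 2024 | 0.0001 | 35 | 12 | RandomResize, RandomCrop, RandomFlip |
| Mamba | Mamba-YOLO | 2025 | 0.01 | 35 | 12 | RandomResize, RandomCrop, RandomFlip |
| OWTDNet | CNN+Mamba | | 0.0001 | 35 | 12 | RandomResize, RandomCrop, RandomFlip |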

| Method Name | Backbone | Params /MB | /% | /% | /% | FLOPs /T | /ms | /s | GPU /GB |
|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | R50 | 80.29 | 28.3 ± 0.2 | 42.3 ± 0.27 | 34.9 ± 0.21 | 0.35 | 51.43 | 99.4 | 4.8 |
| Faster R-CNN | R101 | 99.28 | 32.7 ± 0.17 | 50.9 ± 0.22 | 36.8 ± 0.19 | 0.43 | 55.6 | 107.3 | 5.3 |
| RetinaNet | R50 | 37.96 | 31.3 ± 0.26 | 45.7 ± 0.27 | 34.2 ± 0.23 | 0.26 | 22.7 | 43.8 | 2.8 |
| RetinaNet | R101 | 56.96 | 33.6 ± 0.22 | 51.3 ± 0.24 | 37.5 ± 0.22 | 0.32 | 29.5 | 57.1 | 3.8 |
| RetinaNet | Swin-T | 38.47 | 36.3 ± 0.23 | 55.1 ± 0.26 | 42.0 ± 0.27 | 0.31 | 48.3 | 92.8 | 4.4 |
| RetinaNet | Swin-S | 59.77 | 39.7 ± 0.19 | 59.4 ± 0.22 | 46.5 ± 0.24 | 0.44 | 68.9 | 132.9 | 4.7 |
| Cascade R-CNN | R50 | 69.39 | 33.2 ± 0.18 | 50.7 ± 0.22 | 36.3 ± 0.21 | 0.24 | 35.3 | 64.2 | 3.3 |
| Cascade R-CNN | R101 | 88.39 | 34.7 ± 0.15 | 52.8 ± 0.21 | 38.4 ± 0.19 | 0.32 | 38.9 | 75.7 | 4.3 |
| Cascade R-CNN | Swin-T | 71.93 | 37.5 ± 0.21 | 56.4 ± 0.26 | 40.7 ± 0.24 | 0.29 | 52.6 | 101.6 | 3.6 |
| Cascade R-CNN | Swin-S | 93.25 | 41.7 ± 0.16 | 62.2 ± 0.19 | 48.3 ± 0.16 | 0.37 | 70.2 | 135.7 | 3.9 |
| CenterNet | R50 | 32.29 | 30.4 ± 0.27 | 47.4 ± 0.27 | 33.6 ± 0.29 | 0.21 | 21.3 | 41.1 | 2.9 |
| CenterNet | R101 | 51.28 | 35.1 ± 0.22 | 53.5 ± 0.25 | 39.2 ± 0.23 | 0.29 | 26.4 | 50.9 | 3.9 |
| ConvNeXt | Tiny | 48.09 | 37.7 ± 0.21 | 57.3 ± 0.21 | 41.7 ± 0.21 | 0.27 | 29.7 | 57.3 | 4.6 |
| ConvNeXt | Small | 69.73 | 38.6 ± 0.15 | 58.1 ± 0.19 | 42.1 ± 0.22 | 0.36 | 38.1 | 73.5 | 5.3 |
| DETR | R50 | 41.57 | 36.4 ± 0.19 | 52.0 ± 0.22 | 38.1 ± 0.18 | 0.09 | 26.2 | 50.5 | 3.9 |
| DETR | R101 | 60.56 | 39.8 ± 0.17 | 56.7 ± 0.21 | 43.2 ± 0.19 | 0.18 | 35.1 | 67.8 | 4.3 |
| Deformable DETR | R50 | 40.11 | 35.8 ± 0.24 | 53.9 ± 0.23 | 40.4 ± 0.22 | 0.2 | 37.2 | 71.8 | 4.5 |
| Conditional DETR | R50 | 43.47 | 36.9 ± 0.22 | 56.1 ± 0.22 | 39.4 ± 0.24 | 0.11 | 35.3 | 68.1 | 4.1 |
| DAB-DETR | R50 | 43.72 | 37.2 ± 0.25 | 55.7 ± 0.24 | 41.3 ± 0.26 | 0.11 | 38.4 | 74.2 | 4.2 |
| DINO | R50 | 47.71 | 38.4 ± 0.21 | 56.2 ± 0.27 | 40.9 ± 0.18 | 0.28 | 51.3 | 99.6 | 4.6 |
| DINO | Swin-L | 218.33 | 47.8 ± 0.14 | 67.8 ± 0.16 | 53.2 ± 0.12 | 0.49 | 212.8 | 411.3 | 5.7 |
| YOLOv3 | DarkNet-53 | 61.95 | 29.7 ± 0.35 | 43.5 ± 0.42 | 34.3 ± 0.34 | 0.2 | 14.1 | 27.2 | 4.1 |
| YOLOF | R50 | 44.16 | 33.7 ± 0.27 | 51.7 ± 0.35 | 36.6 ± 0.29 | 0.11 | 15.4 | 29.7 | 2.8 |
| YOLOX | Tiny | 5.06 | 31.2 ± 0.31 | 49.6 ± 0.32 | 35.2 ± 0.27 | 0.02 | 11.2 | 21.6 | 2.5 |
| YOLOX | Small | 8.97 | 33.1 ± 0.29 | 51.8 ± 0.33 | 35.6 ± 0.28 | 0.03 | 13.1 | 25.3 | 2.8 |
| YOLOX | Large | 54.21 | 34.8 ± 0.31 | 53.3 ± 0.26 | 38.7 ± 0.24 | 0.19 | 19.8 | 38.2 | 5.4 |
| YOLOX | xLarge | 99.07 | 39.2 ± 0.25 | 58.2 ± 0.22 | 43.1 ± 0.22 | 0.36 | 26.7 | 51.5 | 7.5 |
| YOLOv8 | Small | 11.2 | 31.9 ± 0.31 | 47.1 ± 0.29 | 37.6 ± 0.26 | 0.03 | 8.7 | 16.8 | 1.6 |
| YOLOv8 | Medium | 25.9 | 35.3 ± 0.33 | 54.2 ± 0.26 | 39.2 ± 0.25 | 0.08 | 16.9 | 32.6 | 1.8 |
| YOLOv8 | Large | 43.7 | 37.7 ± 0.29 | 56.5 ± 0.22 | 41.4 ± 0.29 | 0.17 | 26.3 | 50.7 | 2.1 |
| YOLOv8 | xLarge | 68.2 | 39.5 ± 0.27 | 59.1 ± 0.24 | 45.3 ± 0.23 | 0.26 | 41.6 | 80.3 | 3.3 |
| VMamba | Tiny | 53.86 | 38.3 ± 0.22 | 57.6 ± 0.23 | 42.3 ± 0.22 | 0.16 | 35.2 | 68.2 | 2.2 |
| VMamba | Small | 68.18 | 43.3 ± 0.17 | 62.6 ± 0.24 | 48.7 ± 0.17 | 0.19 | 41.3 | 79.8 | 2.4 |
| VMamba | Large | 82.47 | 44.5 ± 0.16 | 66.4 ± 0.19 | 51.6 ± 0.14 | 0.21 | 57.8 | 110.4 | 2.8 |
| OWTDNet | Tiny | 66.23 | 40.7 ± 0.18 | 61.5 ± 0.23 | 46.4 ± 0.19 | 0.22 | 46.3 | 89.4 | 3.7 |
| OWTDNet | Small | 82.68 | 45.6 ± 0.15 | 68.7 ± 0.24 | 50.7 ± 0.16 | 0.25 | 50.6 | 97.6 | 4.2 |
| OWTDNet | Large | 97.58 | 47.1 ± 0.16 | 67.1 ± 0.18 | 52.4 ± 0.12 | 0.28 | 66.3 | 127.7 | 4.5 |
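
To make the accuracy versus cost trade-off in the table above concrete, the short sketch below compares OWTDNet (Large) with two rows of the same table. The numbers are copied from the table; the key names `acc` (first percentage column) and `ms` (the column given in milliseconds) are labels chosen here because the original column symbols did not survive extraction, and the helper `compare` is hypothetical.

```python
from typing import Dict

# Values copied from the results table: first "/%" column, "/ms" column, Params /MB.
ROWS: Dict[str, Dict[str, float]] = {
    "DINO (Swin-L)": {"acc": 47.8, "ms": 212.8, "params_mb": 218.33},
    "VMamba (Large)": {"acc": 44.5, "ms": 57.8, "params_mb": 82.47},
    "OWTDNet (Large)": {"acc": 47.1, "ms": 66.3, "params_mb": 97.58},
}


def compare(model: str, baseline: str) -> Dict[str, float]:
    """Accuracy gap in points and time ratio (baseline time / model time)."""
    m, b = ROWS[model], ROWS[baseline]
    return {
        "acc_gap": round(m["acc"] - b["acc"], 2),
        "speedup": round(b["ms"] / m["ms"], 2),
        "param_ratio": round(m["params_mb"] / b["params_mb"], 2),
    }


if __name__ == "__main__":
    for base in ("DINO (Swin-L)", "VMamba (Large)"):
        print(base, compare("OWTDNet (Large)", base))
```

Read this way, OWTDNet (Large) trails DINO (Swin-L) by 0.7 points in the first percentage column while needing roughly a third of the time per image, and it gains 2.6 points over VMamba (Large) at a somewhat higher time cost.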

| Method Name | Backbone | Split | /% | /% | Pre-Process Time /s | Post-Process Time /s | /s |
|---|---|---|---|---|---|---|---|
| Cascade R-CNN | Swin-S | 1 | 41.4 | 0.74 | 5.1 | 3.8 | 144.6 |
| Cascade R-CNN | Swin-S | 2 | 41.7 | 0.71 | 4.5 | 0 | 140.0 |
| DINO | Swin-L | 1 | 48.1 | 0.86 | 5.1 | 4.3 | 420.7 |
| DINO | Swin-L | 2 | 47.8 | 0.82 | 4.5 | 0 | 415.8 |
| YOLOv8 | xLarge | 1 | 38.2 | 0.71 | 5.1 | 4.6 | 89.2 |
| YOLOv8 | xLarge | 2 | 39.5 | 0.65 | 4.5 | 0 | 84.8 |
| VMamba | Large | 1 | 43.9 | 0.77 | 5.1 | 3.8 | 119.3 |
| VMamba | Large | 2 | 44.5 | 0.74 | 4.5 | 0 | 114.9 |
| OWTDNet | Large | 1 | 46.8 | 0.79 | 5.1 | 3.7 | 136.5 |
| OWTDNet | Large | 2 | 47.1 | 0.76 | 4.5 | 0 | 132.2 |

| Strategy | Upper Branch | Lower Branch | /% | /ms |
|---|---|---|---|---|
| 1 | Swin Transformer [53] | R50 | 44.8 | 89.6 |
| 1 | Swin Transformer [53] | B-Mv3 | 44.3 | 83.2 |
| 2 | VMamba | R50 | 45.2 | 60.7 |
| 2 | VMamba | B-Mv3 | 44.9 | 52.1 |
| 3 | Swin Transformer | R50 | 45.2 | 96.7 |
| 3 | Swin Transformer | B-Mv3 | 45.4 | 88.2 |
| 4 | VMamba | R50 | 45.3 | 63.2 |
| 4 | VMamba | B-Mv3 | 45.2 | 55.3 |
| 5 | Swin Transformer | R50 | 45.8 | 101.6 |
| 5 | Swin Transformer | B-Mv3 | 45.9 | 91.2 |
| 6 | VMamba | R50 | 45.5 | 68.4 |
| 6 | VMamba | B-Mv3 | 45.7 | 57.1 |
| 7 | Swin Transformer | R50 | 46.9 | 114.9 |
| 7 | Swin Transformer | B-Mv3 | 46.5 | 101.7 |
| 8 | VMamba | R50 | 47.5 | 77.9 |
| 8 | VMamba | B-Mv3 | 47.1 | 66.3 |

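| Method Name | Backbone | Blurring Convolution | Data Augmentation | /% | /ms |
|---|---|---|---|---|---|
| Cascade R-CNN | R50 | yes | random shift | 35.1 | 39.3 |
| Cascade R-CNN | R50 | yes | none | 34.6 | 39.3 |
| Cascade R-CNN | R50 | no | random shift | 33.2 | 35.3 |
| Cascade R-CNN | R50 | no | none | 31.6 | 35.3 |
| Cascade R-CNN | Mv3 | yes | random shift | 34.3 | 26.1 |
| Cascade R-CNN | Mv3 | yes | none | 33.8 | 26.1 |
| Cascade R-CNN | Mv3 | no | random shift | 29.5 | 22.4 |
| Cascade R-CNN | Mv3 | no | none | 28.7 | 22.4 |
| DINO | R50 | yes | random shift | 38.6 | 55.7 |
| DINO | R50 | yes | none | 38.3 | 55.7 |
| DINO | R50 | no | random shift | 38.4 | 51.3 |
| DINO | R50 | no | none | 38.2 | 51.3 |
| DINO | Mv3 | yes | random shift | 37.7 | 44.2 |
| DINO | Mv3 | yes | none | 37.5 | 44.2 |
| DINO | Mv3 | no | random shift | 37.4 | 41.1 |
| DINO | Mv3 | no | none | 37.1 | 41.1 |
| OWTDNet | R50 | yes | random shift | 47.9 | 82.3 |
| OWTDNet | R50 | yes | none | 48.1 | 82.3 |
| OWTDNet | R50 | no | random shift | 47.5 | 77.9 |
| OWTDNet | R50 | no | none | 46.8 | 77.9 |
| OWTDNet | Mv3 | yes | random shift | 47.1 | 66.3 |
| OWTDNet | Mv3 | yes | none | 46.9 | 66.3 |
| OWTDNet | Mv3 | no | random shift | 46.7 | 61.2 |
| OWTDNet | Mv3 | no | none | 46.3 | 61.2 |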

| Backbone | Channel Enhance | Position Enhance | /% | /% | /% |
|---|---|---|---|---|---|
| Tiny | | | 39.1 | 59.1 | 44.6 |
| Tiny | | | 40.2 | 61.1 | 45.9 |
| Tiny | | | 39.8 | 60.3 | 45.2 |
| Tiny | | | 40.7 | 61.5 | 46.4 |
| Small | | | 44.2 | 65.9 | 48.7 |
| Small | | | 45.4 | 67.5 | 50.2 |
| Small | | | 44.8 | 66.5 | 49.3 |
| Small | | | 45.6 | 68.7 | 50.7 |
| Large | | | 45.7 | 66.5 | 51.8 |
| Large | | | 46.8 | 66.9 | 49.8 |
| Large | | | 46.2 | 66.2 | 52.1 |
| Large | | | 47.1 | 67.1 | 52.4 |

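| Method Name | Backbone | Class Loss | Reg Loss | /% |
|---|---|---|---|---|
| Cascade R-CNN | Swin-S | Cross Entropy | Smooth L1 | 37.4 |
| Cascade R-CNN | Swin-S | Cross Entropy | IoU | 39.1 |
| Cascade R-CNN | Swin-S | Cross Entropy | GIoU | 39.7 |
| Cascade R-CNN | Swin-S | Cross Entropy | DIoU | 39.5 |
| Cascade R-CNN | Swin-S | Cross Entropy | CIoU | 39.6 |
| Cascade R-CNN | Swin-S | Focal | Smooth L1 | 40.6 |
| Cascade R-CNN | Swin-S | Focal | IoU | 41.4 |
| Cascade R-CNN | Swin-S | Focal | GIoU | 41.5 |
| Cascade R-CNN | Swin-S | Focal | DIoU | 41.5 |
| Cascade R-CNN | Swin-S | Focal | CIoU | 41.7 |
| DINO | Swin-L | Cross Entropy | Smooth L1 | 45.8 |
| DINO | Swin-L | Cross Entropy | IoU | 46.6 |
| DINO | Swin-L | Cross Entropy | GIoU | 46.8 |
| DINO | Swin-L | Cross Entropy | DIoU | 46.9 |
| DINO | Swin-L | Cross Entropy | CIoU | 47.1 |
| DINO | Swin-L | Focal | Smooth L1 | 45.7 |
| DINO | Swin-L | Focal | IoU | 46.9 |
| DINO | Swin-L | Focal | GIoU | 47.4 |
| DINO | Swin-L | Focal | DIoU | 47.5 |
| DINO | Swin-L | Focal | CIoU | 47.8 |
| VMamba | Large | Cross Entropy | Smooth L1 | 42.2 |
| VMamba | Large | Cross Entropy | IoU | 43.5 |
| VMamba | Large | Cross Entropy | GIoU | 43.8 |
| VMamba | Large | Cross Entropy | DIoU | 44.2 |
| VMamba | Large | Cross Entropy | CIoU | 44.1 |
| VMamba | Large | Focal | Smooth L1 | 42.9 |
| VMamba | Large | Focal | IoU | 43.7 |
| VMamba | Large | Focal | GIoU | 44.1 |
| VMamba | Large | Focal | DIoU | 44.4 |
| VMamba | Large | Focal | CIoU | 44.5 |
| OWTDNet | Large | Cross Entropy | Smooth L1 | 44.2 |
| OWTDNet | Large | Cross Entropy | IoU | 45.1 |
| OWTDNet | Large | Cross Entropy | GIoU | 45.8 |
| OWTDNet | Large | Cross Entropy | DIoU | 46.2 |
| OWTDNet | Large | Cross Entropy | CIoU | 46.4 |
| OWTDNet | Large | Focal | Smooth L1 | 45.2 |
| OWTDNet | Large | Focal | IoU | 46.3 |
| OWTDNet | Large | Focal | GIoU | 46.9 |
| OWTDNet | Large | Focal | DIoU | 47.0 |
| OWTDNet | Large | Focal | CIoU | 47.1 |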
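
The table above contrasts cross entropy with focal loss for classification. As a reminder of how the two relate, the sketch below implements the binary focal loss of Lin et al. from the reference list for a single prediction. The defaults `gamma=2.0` and `alpha=0.25` are the values recommended in that paper and are assumptions here, since this section does not state the settings used in the experiments; the function names are illustrative.

```python
import math


def binary_cross_entropy(p: float, y: int) -> float:
    """Cross entropy for one prediction p = P(class 1) and label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def focal_loss(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Focal loss: -alpha_t * (1 - p_t)^gamma * log(p_t).

    The factor (1 - p_t)^gamma shrinks the loss of well-classified samples,
    so the many easy background locations contribute less to the gradient.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


if __name__ == "__main__":
    for p in (0.9, 0.5, 0.1):  # easy, ambiguous and hard positive samples
        print(p, binary_cross_entropy(p, 1), focal_loss(p, 1))
```

Setting `gamma=0` and `alpha=1` for a positive sample recovers plain cross entropy, which makes the down-weighting of easy samples the only difference between the two classification losses.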

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sha, P.; Lu, S.; Xu, Z.; Yu, J.; Li, L.; Zou, Y.; Zhao, L. OWTDNet: A Novel CNN-Mamba Fusion Network for Offshore Wind Turbine Detection in High-Resolution Remote Sensing Images. J. Mar. Sci. Eng. 2025, 13, 2124. https://doi.org/10.3390/jmse13112124
