UWS-YOLO: Advancing Underwater Sonar Object Detection via Transfer Learning and Orthogonal-Snake Convolution Mechanisms

Abstract

1. Introduction

1.1. Research Gap and Motivation

- Most existing methods are directly adapted from optical image detection frameworks without exploiting sonar-specific spatial–spectral characteristics, resulting in suboptimal feature representation.

- The scarcity of large-scale annotated sonar datasets hinders effective training from scratch, leading to overfitting and poor cross-domain generalization.

- Standard convolution operators struggle to accurately model elongated, low-contrast targets such as pipelines, cables, or debris.

- Cross-modal transfer learning between optical and acoustic imagery remains underexplored, leaving untapped potential for leveraging abundant optical underwater datasets.

1.2. Contributions

- We propose UWS-YOLO, a sonar-specific object detection framework that addresses the dual challenges of detecting small, blurred, and elongated targets and maintaining real-time performance under limited annotated sonar datasets.

- We introduce the C2F-Ortho module in the backbone to enhance fine-grained feature representation by integrating orthogonal channel attention, improving sensitivity to low-contrast and small-scale targets.

- We design the DySnConv module in the detection head, which leverages Dynamic Snake Convolution to adaptively capture elongated and contour-aligned structures such as underwater pipelines and cables.

- We propose a cross-modal transfer learning strategy that pre-trains the network on large-scale optical underwater imagery and fine-tunes it on sonar data, effectively mitigating overfitting and bridging the modality gap.

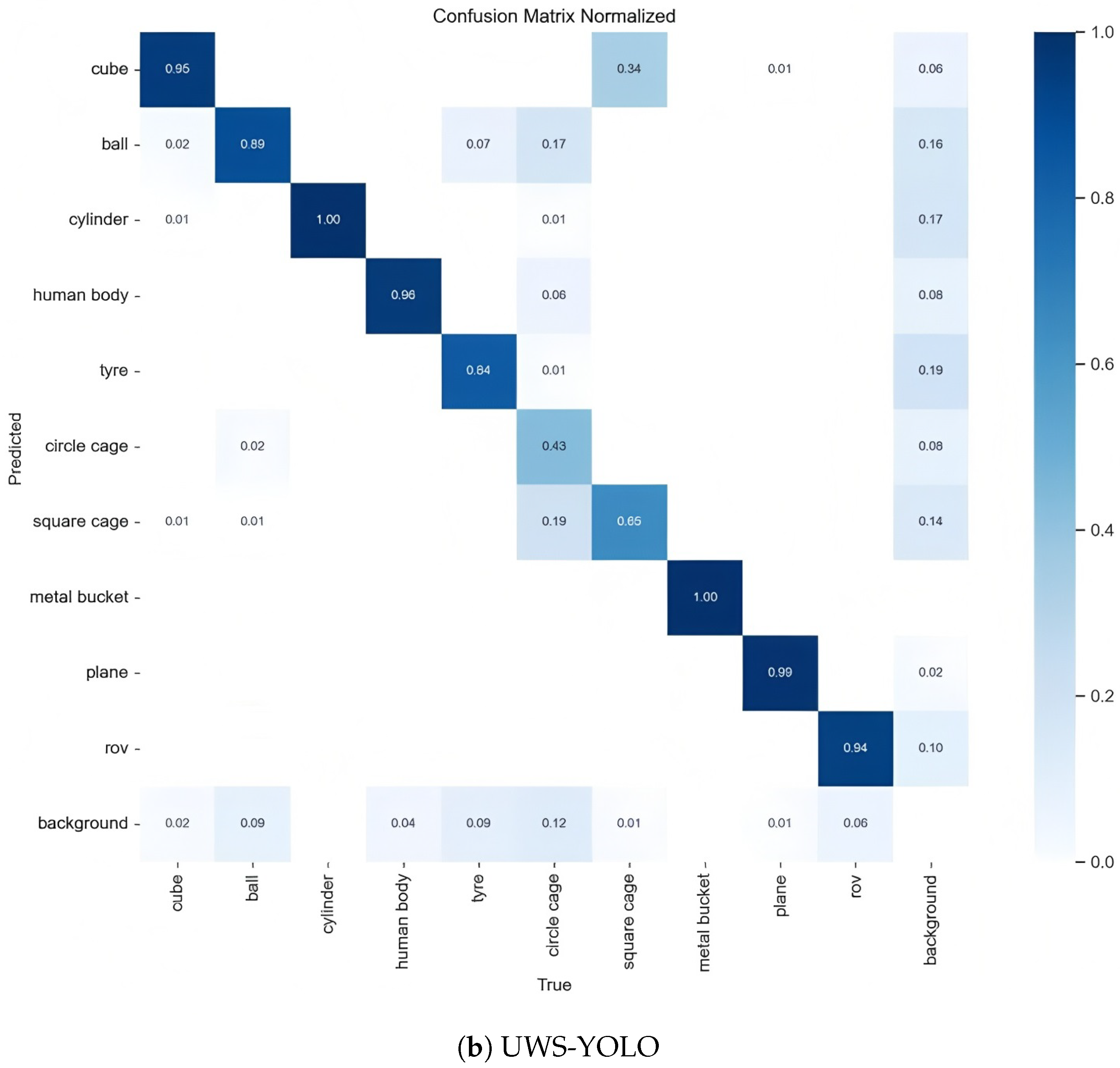

- Extensive experiments on the public UATD sonar dataset show that UWS-YOLO improves mAP@0.5 by 3.5 percentage points over the YOLOv8n baseline (83.6% to 87.1%) and outperforms seven state-of-the-art detectors in both mAP@0.5 and recall, while retaining real-time performance (158 FPS) at low computational cost (8.8 GFLOPs).

1.3. Impact Statement

2. Related Work

2.1. Object Detection

2.2. Underwater Object Detection in Sonar Imagery

2.3. Current Challenges

3. Methods

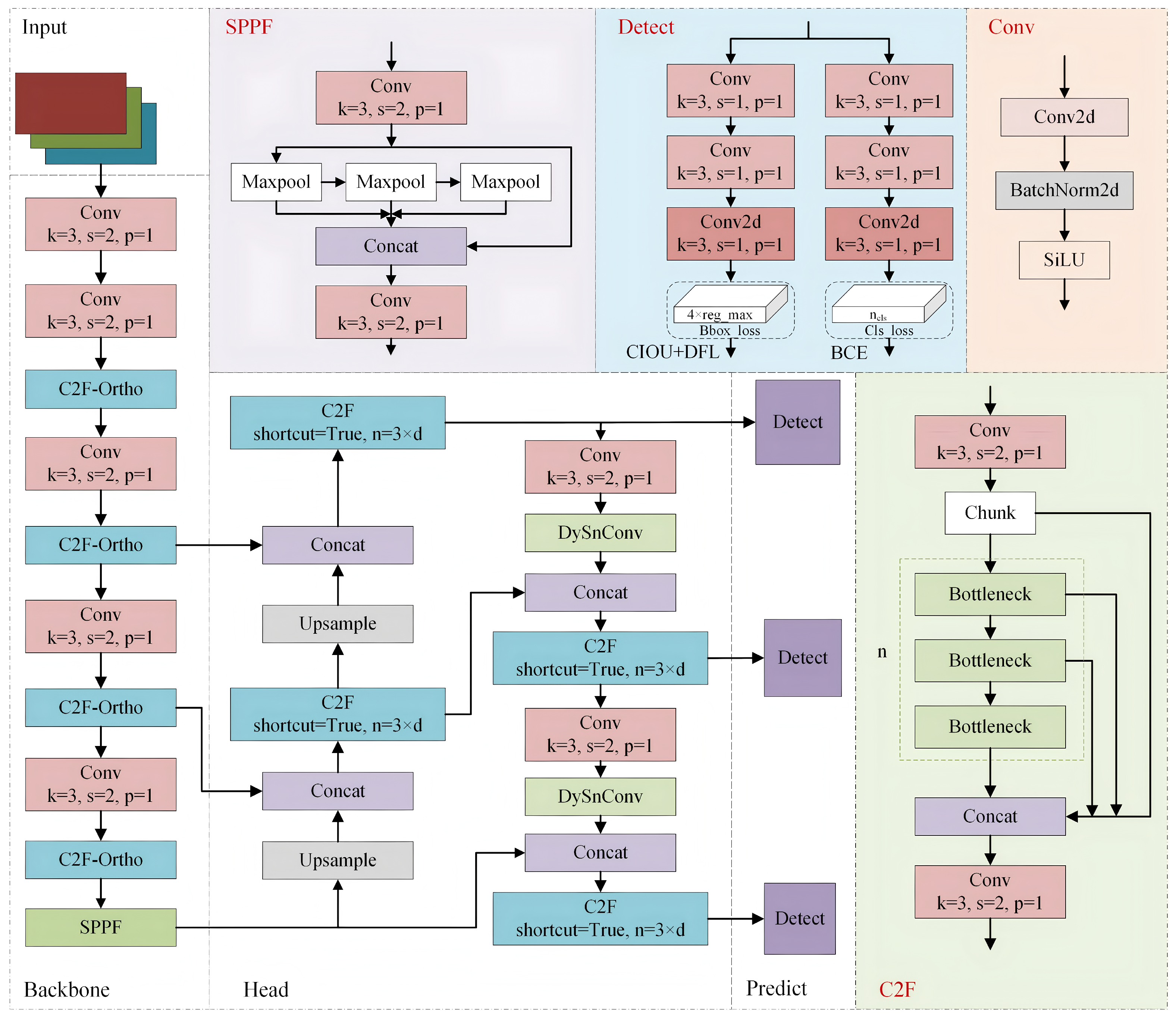

3.1. Overall Architecture of UWS-YOLO

3.1.1. Input Module

3.1.2. Backbone Network

3.1.3. Head Network

3.1.4. Prediction Module

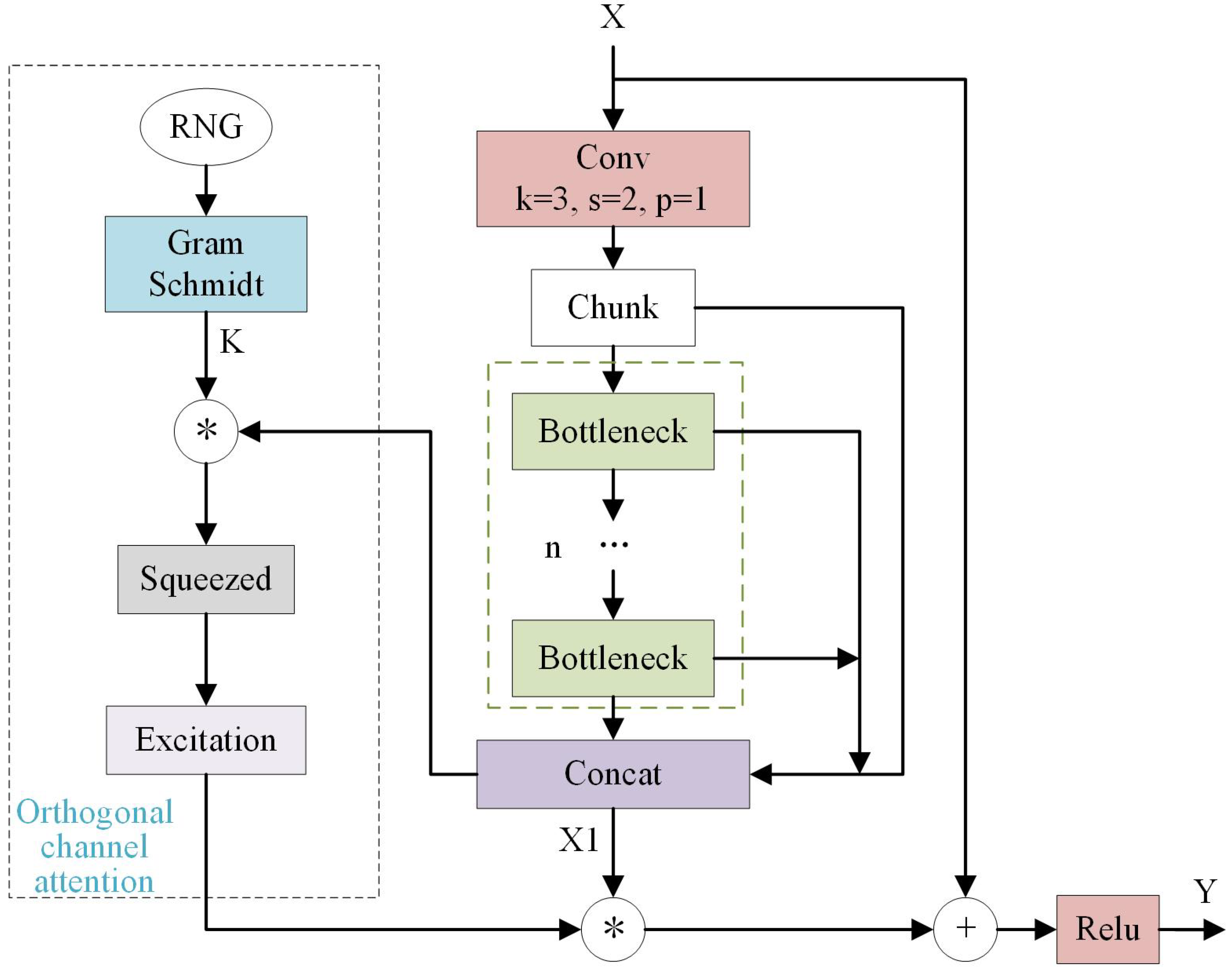

3.2. C2F-Ortho Module

3.2.1. Orthogonal Projection

3.2.2. Orthogonality Regularization

Algorithm 1. Orthogonal Channel Attention (OCA) Filter Initialization

Require: Input feature dimensions

Ensure: Orthogonal projection matrix
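The steps of Algorithm 1 are not recoverable from this section, so the following is only a minimal sketch of one common way to obtain an orthogonal projection matrix: Gram-Schmidt orthonormalization of random Gaussian rows. It is not necessarily the authors' procedure, and the names `init_orthogonal_filters`, `gram_matrix`, `num_filters`, and `dim` are hypothetical.

```python
import math
import random


def init_orthogonal_filters(num_filters, dim, seed=0):
    """Return num_filters orthonormal row vectors of length dim.

    Assumed sketch: Gram-Schmidt on random Gaussian rows, not the paper's
    exact Algorithm 1.
    """
    if num_filters > dim:
        raise ValueError("cannot build more orthonormal rows than dimensions")
    rng = random.Random(seed)
    rows = []
    while len(rows) < num_filters:
        # Draw a random candidate row.
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        # Remove its components along every row accepted so far.
        for u in rows:
            dot = sum(a * b for a, b in zip(v, u))
            v = [a - dot * b for a, b in zip(v, u)]
        norm = math.sqrt(sum(a * a for a in v))
        if norm < 1e-8:
            continue  # degenerate draw, resample
        rows.append([a / norm for a in v])
    return rows


def gram_matrix(rows):
    """Compute W W^T, which is the identity when the rows are orthonormal."""
    return [[sum(a * b for a, b in zip(r1, r2)) for r2 in rows] for r1 in rows]


W = init_orthogonal_filters(num_filters=4, dim=16)
G = gram_matrix(W)
```

Checking that `G` is close to the identity is a quick way to confirm that the filters are mutually orthogonal and have unit norm.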

3.2.3. Excitation Step

3.2.4. Theoretical Advantages

- Maximized Feature Diversity: The orthogonal rows of the projection matrix form an orthonormal basis, minimizing redundancy and keeping the resulting descriptors decorrelated.

- Improved Gradient Flow: Orthogonal matrices are norm-preserving, which stabilizes gradients and mitigates vanishing and exploding gradients.

- Noise Robustness: Decorrelated descriptors suppress noise co-adaptation, reducing spurious activations in cluttered sonar data.
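The norm-preservation argument above can be made explicit. As a sketch, write $W \in \mathbb{R}^{k \times n}$ with $k \le n$ for a projection matrix with orthonormal rows; the symbol $W$ and the dimensions $k$ and $n$ are notation assumed here, since the original symbols are not recoverable from this section.

```latex
% Orthonormal rows: W W^T = I_k.
% For the forward map z = W x, the gradient with respect to x is W^T g,
% where g is the gradient with respect to z. Its norm is preserved:
\[
  \lVert W^{\top} g \rVert_2^2
  = g^{\top} W W^{\top} g
  = g^{\top} g
  = \lVert g \rVert_2^2
  \qquad \text{for all } g \in \mathbb{R}^{k}.
\]
% The forward projection never inflates the norm:
\[
  \lVert W x \rVert_2 \le \lVert x \rVert_2
  \qquad \text{for all } x \in \mathbb{R}^{n},
  \text{ with equality when } k = n.
\]
```

Under these assumptions the gradient passed back through the projection is neither amplified nor attenuated, which is the sense in which orthogonality stabilizes training.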

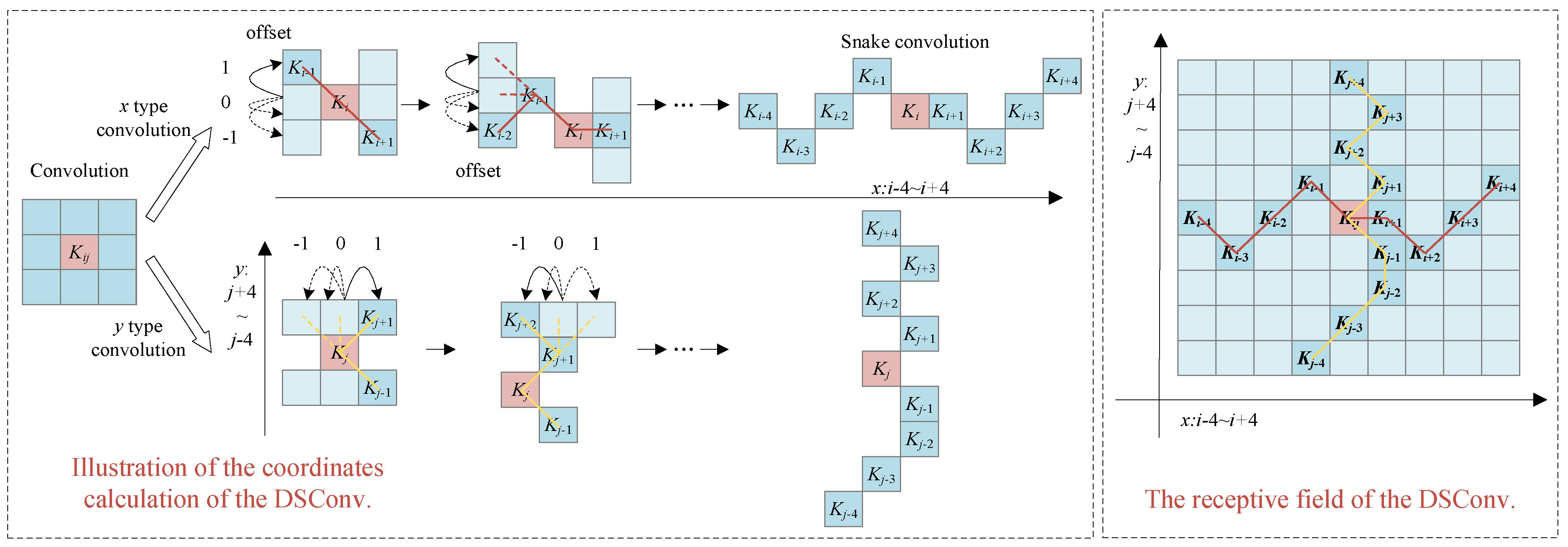

3.3. DySnConv Module

3.3.1. Module Architecture

3.3.2. Dynamic Snake Convolution Principle

3.3.3. Offset Learning and Coordinate Transformation

3.3.4. Advantages

- Geometric Adaptability: Learns spatially flexible sampling patterns that conform to elongated or curved target contours, ensuring precise structural alignment.

- Multi-Scale Feature Fusion: Combines standard convolution and DSConv outputs to capture both contextual information and fine structural cues.

- Enhanced Tubular Target Detection: Demonstrates superior accuracy for slender objects (e.g., pipelines) where fixed-grid kernels often underperform.

- Computational Efficiency: Achieves high geometric adaptivity with minimal additional computational overhead, making it viable for real-time sonar applications.
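The geometric adaptability described above can be illustrated with a simplified one-dimensional sketch of snake-style sampling. It assumes the commonly published formulation in which a kernel laid out along the x-axis accumulates bounded vertical offsets step by step from its center, with fractional positions read by linear interpolation. In the actual module the offsets would be predicted by a learned layer; here they are passed in by hand, and all function names are hypothetical.

```python
import math


def _clamp(value, low=-1.0, high=1.0):
    return max(low, min(high, value))


def snake_coordinates(center_x, center_y, offsets_left, offsets_right):
    """Sampling positions of a 1-D kernel that bends away from a straight row.

    Each step moves one pixel in x and adds a y-offset clamped to [-1, 1] to
    the running total, so neighbouring taps stay connected.
    """
    coords = [(center_x, float(center_y))]
    y = float(center_y)
    for step, dy in enumerate(offsets_right, start=1):
        y += _clamp(dy)
        coords.append((center_x + step, y))
    y = float(center_y)
    for step, dy in enumerate(offsets_left, start=1):
        y += _clamp(dy)
        coords.insert(0, (center_x - step, y))
    return coords


def sample_rows(image, x, y):
    """Linear interpolation between the two rows around a fractional y.

    The caller is responsible for keeping the integer column x inside the image.
    """
    last_row = len(image) - 1
    y = _clamp(y, 0.0, float(last_row))
    y0 = int(math.floor(y))
    y1 = min(y0 + 1, last_row)
    frac = y - y0
    return (1.0 - frac) * image[y0][x] + frac * image[y1][x]


def snake_response(image, center_x, center_y, weights, offsets_left, offsets_right):
    """Weighted sum of the image values sampled along the bent kernel."""
    coords = snake_coordinates(center_x, center_y, offsets_left, offsets_right)
    return sum(w * sample_rows(image, x, y) for w, (x, y) in zip(weights, coords))


# Toy target: a one-pixel-wide diagonal line in a 5x5 image.
diagonal = [[1.0 if row == col else 0.0 for col in range(5)] for row in range(5)]
weights = [1.0] * 5
straight = snake_response(diagonal, 2, 2, weights, [0.0, 0.0], [0.0, 0.0])
bent = snake_response(diagonal, 2, 2, weights, [-1.0, -1.0], [1.0, 1.0])
```

On this toy diagonal the straight kernel overlaps a single target pixel (`straight` is 1.0), while the bent kernel follows all five (`bent` is 5.0), which is the behaviour a fixed-grid kernel cannot reproduce.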

3.4. Transfer Learning

3.4.1. Method Overview

- Source-domain pre-training: UWS-YOLO is first trained on a large-scale underwater optical image dataset. This stage enables the model to learn generic, modality-independent visual representations, including shape primitives, boundary structures, and semantic context.

- Target-domain fine-tuning: The pre-trained weights are then used to initialize the model for sonar imagery. Fine-tuning adapts these generic representations to sonar-specific signal patterns, accommodating domain characteristics such as high-intensity backscatter, granular texture, and acoustic shadow geometry.
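The two stages above hinge on how pre-trained weights are handed to the sonar model. A minimal, framework-free sketch follows. It assumes weights are stored by parameter name and that layers whose shapes differ between domains (for example a classification layer with a different number of classes) keep their fresh initialization. The parameter names, shapes, and class counts are hypothetical, and this is not the authors' training code.

```python
def shape_of(tensor):
    """Shape of a nested list treated as a dense tensor."""
    shape = []
    while isinstance(tensor, list) and tensor:
        shape.append(len(tensor))
        tensor = tensor[0]
    return tuple(shape)


def transfer_weights(pretrained, target):
    """Initialise target weights from pretrained ones where name and shape agree.

    Returns the merged weights and the names that kept their original values.
    """
    merged, skipped = {}, []
    for name, value in target.items():
        source = pretrained.get(name)
        if source is not None and shape_of(source) == shape_of(value):
            merged[name] = source  # reuse source-domain (optical) weights
        else:
            merged[name] = value  # keep the target model's own initialization
            skipped.append(name)
    return merged, skipped


# Hypothetical weights: the backbone layer matches, the class layer does not.
optical_pretrained = {
    "backbone.conv1": [[0.5, -0.2], [0.1, 0.3]],
    "head.cls": [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]],  # 3 source classes
}
sonar_model = {
    "backbone.conv1": [[0.0, 0.0], [0.0, 0.0]],
    "head.cls": [[0.0, 0.0], [0.0, 0.0]],  # 2 target classes
}
initialised, skipped = transfer_weights(optical_pretrained, sonar_model)
```

Fine-tuning on sonar data would then start from `initialised`, with every layer listed in `skipped` learned from the sonar annotations alone.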

3.4.2. Domain Gap Analysis

- Evidence of domain adaptation: Models trained from scratch exhibit entangled feature spaces with poor inter-class separation. In contrast, cross-modal transfer produces more compact, well-separated clusters in the embedding space, as reflected by higher silhouette scores and lower within-class variance. This indicates that pre-training suppresses modality-specific noise while structuring features according to semantic similarity.

- Invariance of transferred knowledge: Consistent gains in both discrimination and generalization suggest that the transferred knowledge encodes modality-invariant object properties—e.g., cylindrical curvature of pipes, axial symmetry of divers, or composite geometry of ROVs. Pre-training instills a strong morphological prior that is subsequently adapted to acoustic signal characteristics, enabling accurate mapping from sonar imagery to abstract object representations.
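The silhouette score used above as evidence of cluster separation can be computed as in the following sketch. It uses plain Euclidean distance on toy two-dimensional embeddings; the data points are illustrative only and are not taken from the paper's experiments.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    def dist(p, q):
        return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("need at least two clusters")

    scores = []
    for i, lab in enumerate(labels):
        own = [j for j in clusters[lab] if j != i]
        if not own:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster.
        a = sum(dist(points[i], points[j]) for j in own) / len(own)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(dist(points[i], points[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


labels = [0, 0, 1, 1]
# Compact, well-separated classes versus classes that interleave.
well_separated = silhouette_score([(0, 0), (0, 1), (10, 0), (10, 1)], labels)
entangled = silhouette_score([(0, 0), (10, 0), (0, 1), (10, 1)], labels)
```

Values near 1 indicate compact, well-separated classes; values near or below 0 indicate entangled ones. In this toy case `well_separated` is about 0.90 and `entangled` is about -0.45.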

3.4.3. Rationale and Benefits

- Improved generalization: Rich source-domain supervision alleviates overfitting on small sonar datasets.

- Feature alignment: Fine-tuning adapts generic visual priors to sonar-specific noise and texture distributions, bridging the perceptual gap between modalities.

- Accelerated convergence: Initialization from pre-trained weights reduces training time relative to random initialization.

- Enhanced detection performance: Morphological priors improve object localization and classification, particularly under complex underwater clutter and occlusion.

4. Experiments

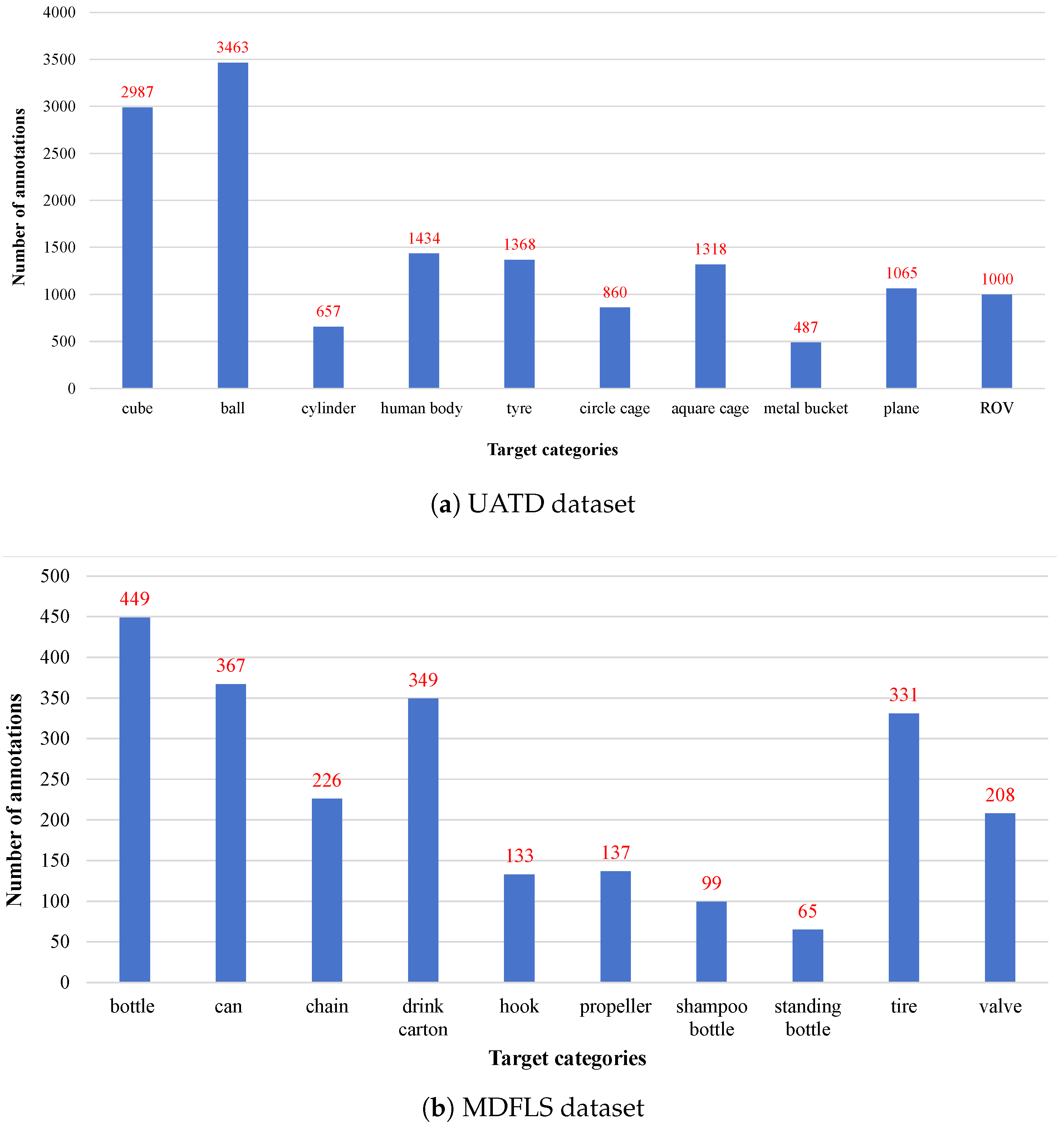

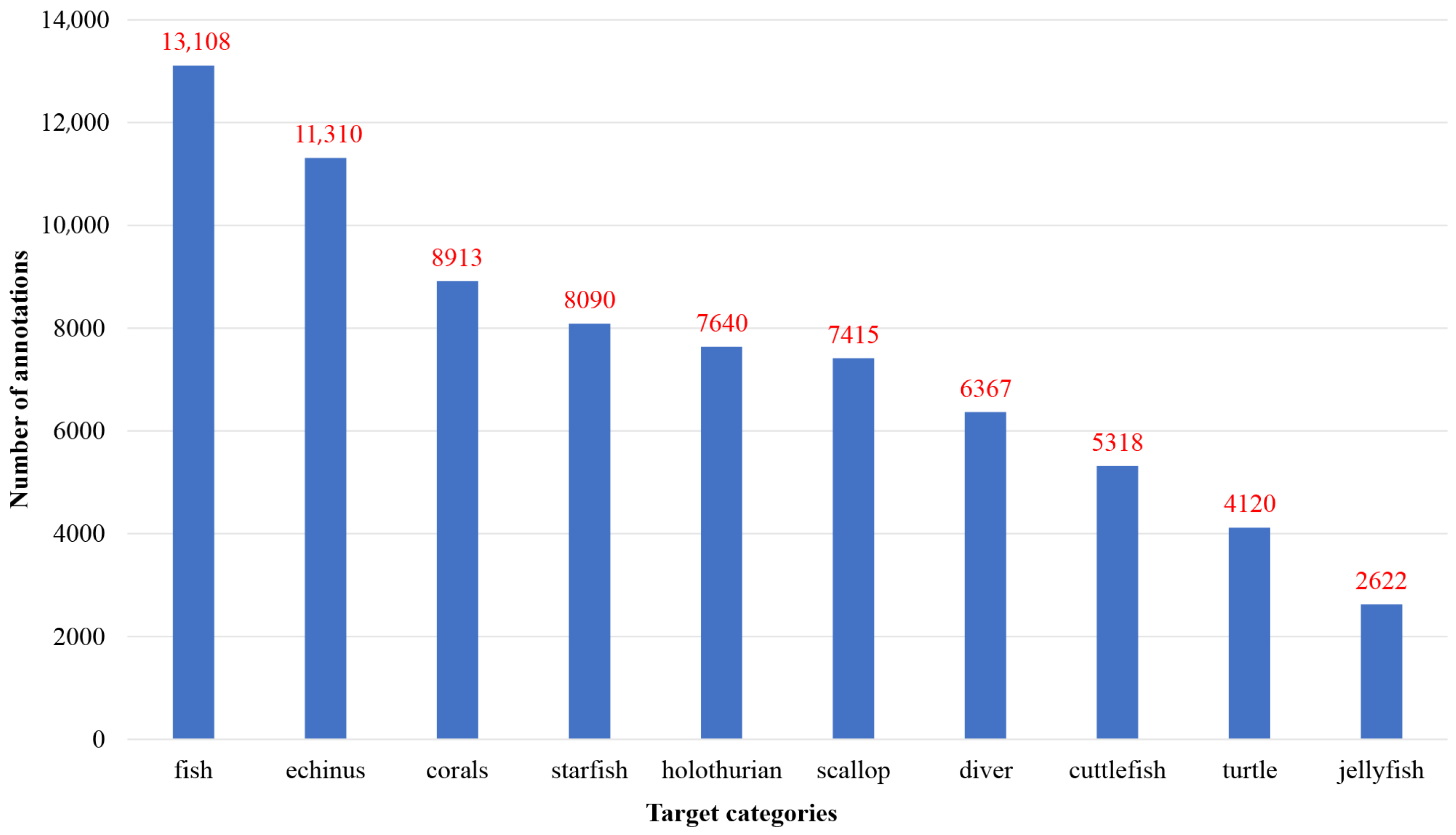

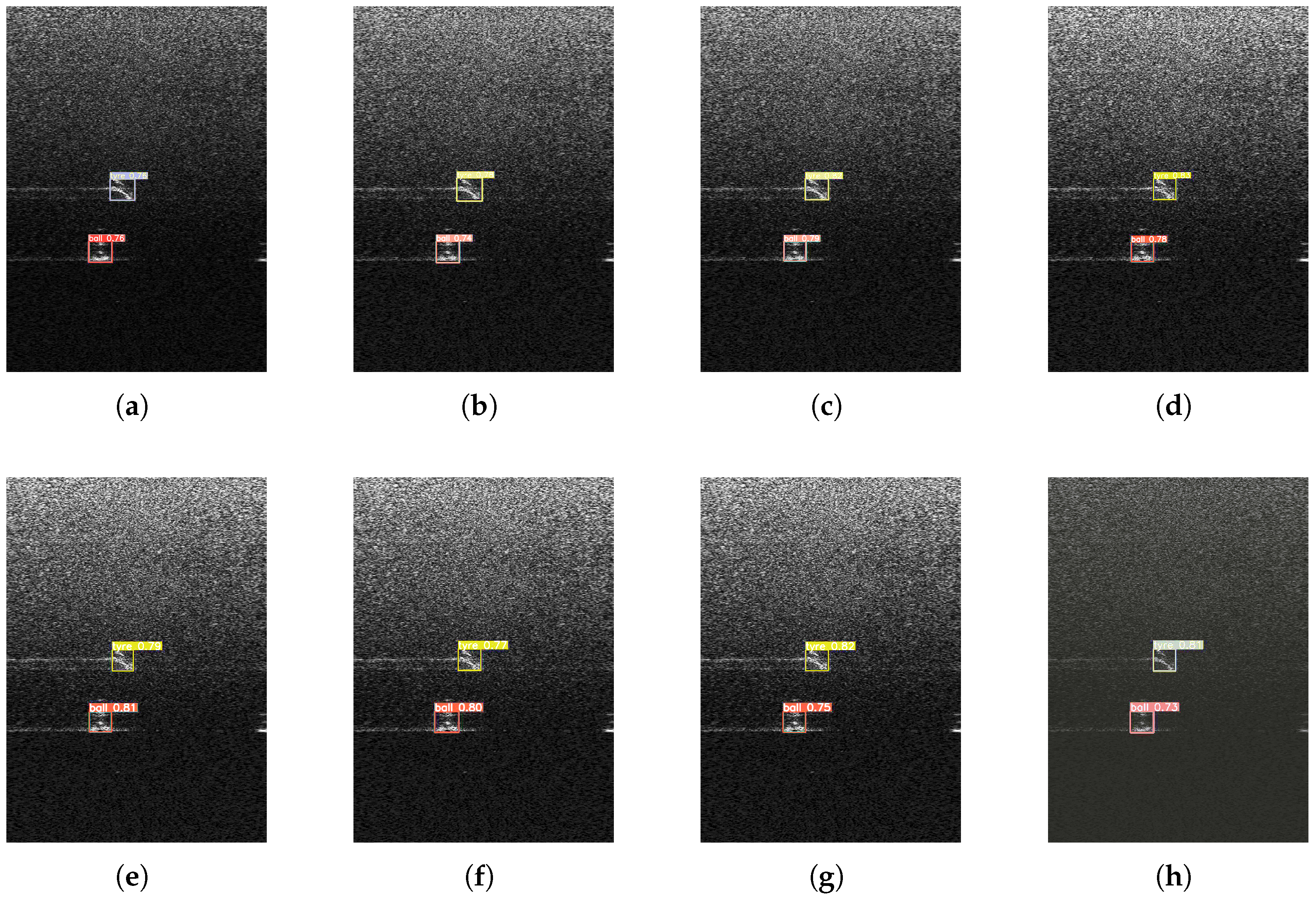

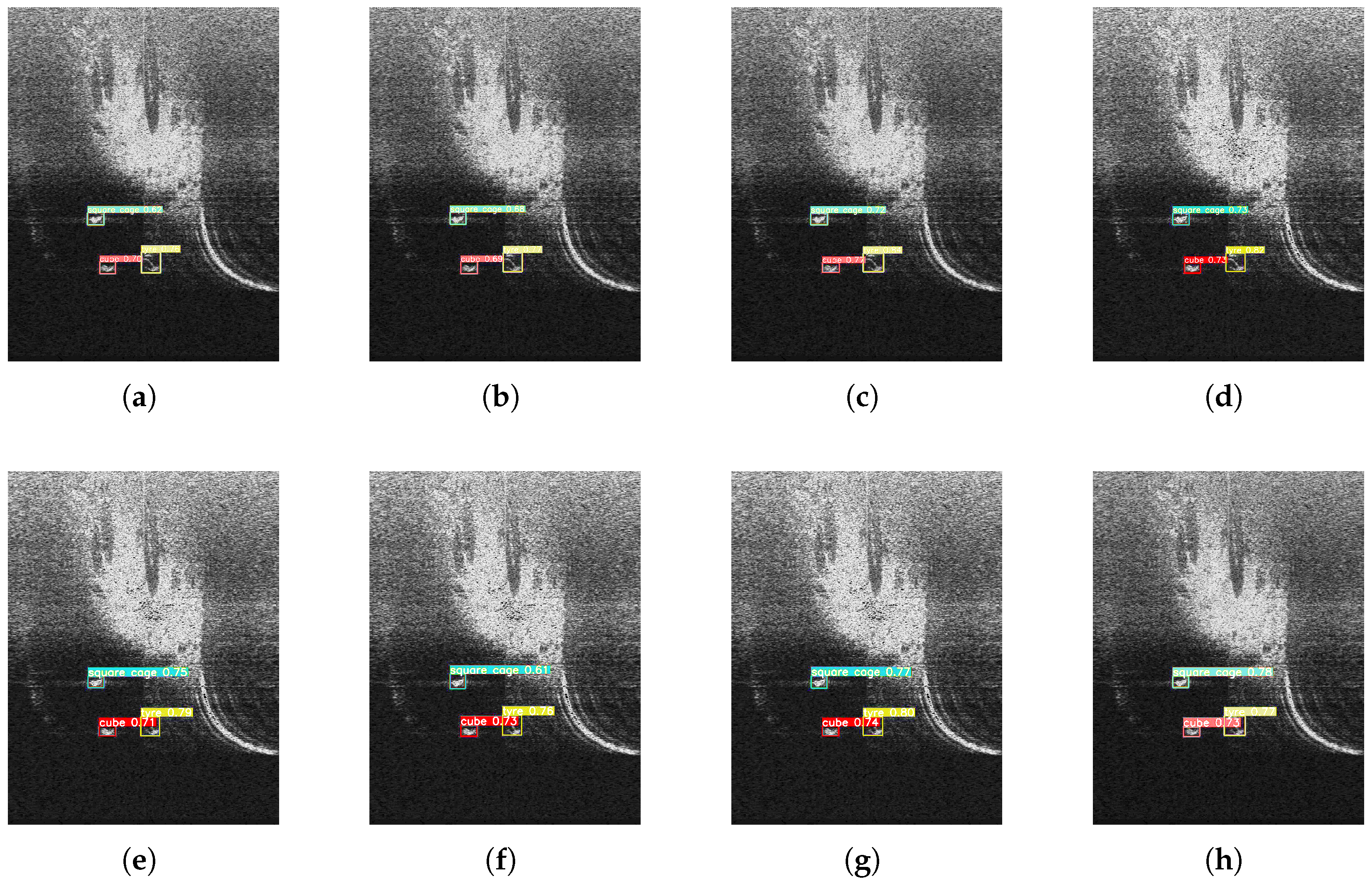

4.1. Datasets

4.1.1. UATD Dataset

4.1.2. MDFLS Dataset

4.1.3. RUOD Dataset

4.2. Experimental Setup

4.3. Evaluation Metrics

- $TP$: true positives, i.e., correctly predicted positive instances;

- $FP$: false positives, i.e., incorrectly predicted positive instances;

- $FN$: false negatives, i.e., actual positives missed by the model;

- $P_i(r)$: precision at recall $r$ for class $i$;

- $C$: total number of target classes.
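The quantities defined above combine into precision, recall, F1, per-class AP, and mAP. The sketch below uses the standard definitions and assumes that AP is the area under the precision-recall curve with all-point interpolation; the interpolation scheme is an assumption, because the section's formulas are not recoverable here. Function names are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def average_precision(is_true_positive, num_ground_truth):
    """Area under the precision-recall curve for one class.

    is_true_positive lists detections sorted by descending confidence, with
    True when the detection matches a ground-truth box at the chosen IoU
    threshold. num_ground_truth is assumed to be positive.
    """
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing from right to left (all-point interpolation).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    return sum(
        (recalls[k] - recalls[k - 1]) * precisions[k]
        for k in range(1, len(recalls))
    )


def mean_average_precision(ap_per_class):
    """mAP: the mean of the per-class AP values over C classes."""
    return sum(ap_per_class) / len(ap_per_class)


p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
ap = average_precision([True, False, True], num_ground_truth=2)
```

mAP@0.5 applies this procedure with an IoU matching threshold of 0.5, while mAP@0.5:0.95 averages the result over thresholds from 0.5 to 0.95.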

4.4. Ablation Studies

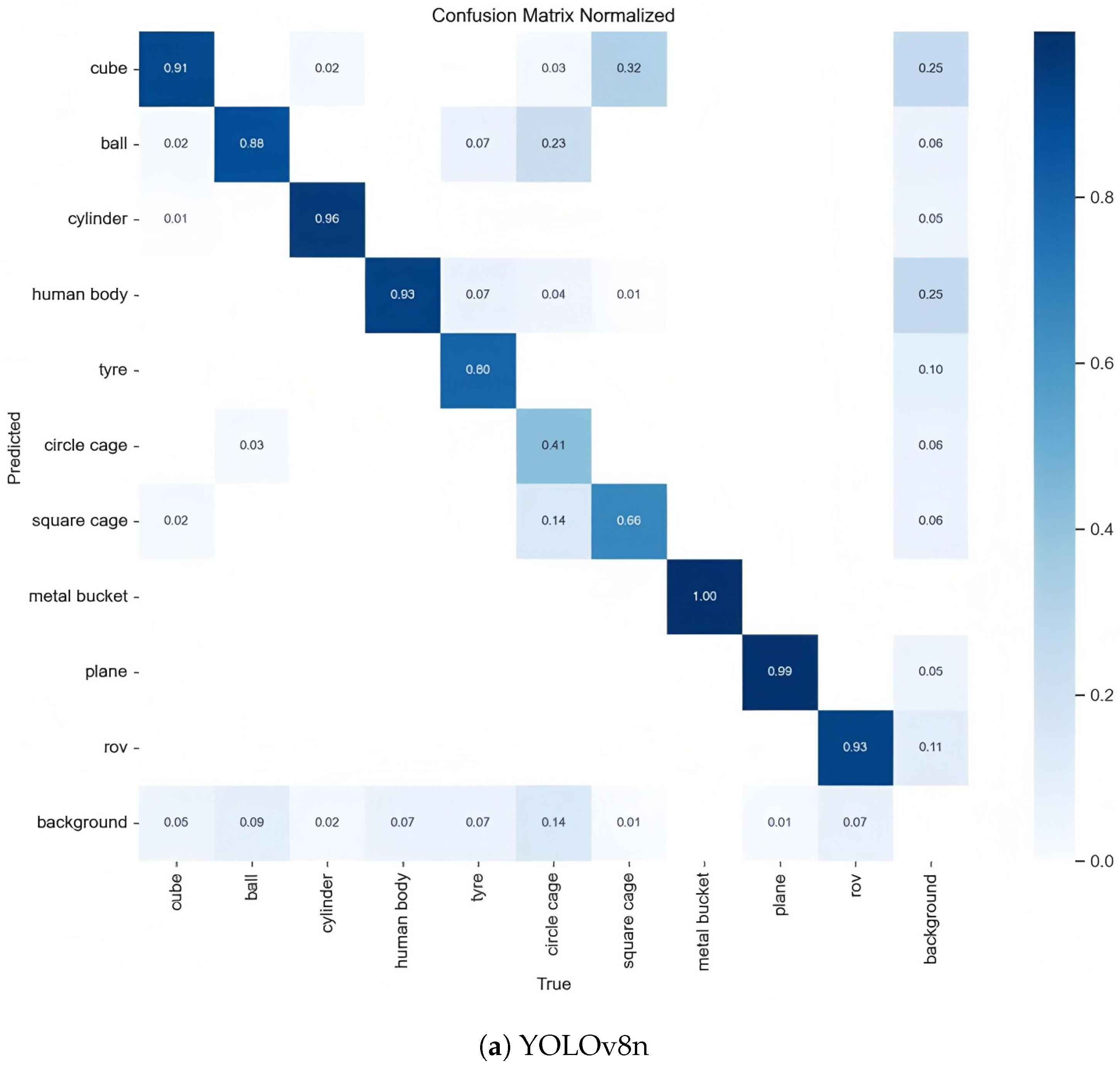

4.4.1. Module-Wise Ablation Analysis

4.4.2. Hyperparameter Sensitivity Analysis

4.5. Comparative Experiments with Other Cutting-Edge Approaches

4.6. Generalization and Robustness Analysis

4.7. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Existing Approach/Trend | Identified Limitation (Gap) | Our Proposed Solution |
|---|---|---|
| Handcrafted feature-based methods (e.g., SIFT, HOG) for sonar target detection | Sensitive to scale, rotation, and noise; poor performance in cluttered environments | Replaces handcrafted features with deep CNN-based hierarchical feature learning tailored to sonar characteristics (OrthoNet) |
| Two-stage deep detectors (e.g., Faster R-CNN) applied to sonar imagery | High computational cost; difficulty meeting real-time AUV constraints | Adopts a lightweight YOLO-based single-stage architecture optimized for low-latency sonar detection |
| Single-stage detectors (YOLO, SSD) directly adapted from optical imagery | Lack of sonar-specific feature enhancement; limited robustness to acoustic noise and clutter | Integrates orthogonal channel attention to selectively amplify discriminative features while suppressing irrelevant noise |
| Training with limited labeled sonar datasets | Overfitting; poor cross-domain generalization | Employs cross-modal transfer learning from large-scale optical underwater datasets with domain adaptation for sonar |
| Standard convolution operators in detection heads | Limited ability to model elongated, low-contrast structures (e.g., cables, pipelines) | Introduces Dynamic Snake Convolution (DySnConv) to adapt receptive fields to target geometry |
| Modality-specific research (FLS, SSS, SAS) | Lack of unified frameworks; innovations remain siloed | Develops a flexible detection framework applicable across sonar modalities via shared architecture components |

| Environment Type | Configuration |
|---|---|
| CPU | Intel(R) Xeon(R) Silver 4214 @ 2.20 GHz |
| GPU | NVIDIA GeForce RTX 2080 Ti |
| RAM | 512 GB |
| Operating System | Windows 10 |
| Deep Learning Frameworks | PyTorch 1.9.0, CUDA 10.2, cuDNN 10.2 |
| Programming Language | Python 3.9 |

| Hyperparameter | Value |
|---|---|
| Epochs | 100 |
| Batch Size | 32 |
| Image Resolution | |
| Momentum | 0.937 |
| Initial Learning Rate | 0.001 |
| Final Learning Rate | 0.01 |
| Optimizer | Adam |

| Group | Components | mAP@0.5 | mAP@0.5:0.95 | F1 | Params (M) | GFLOPs | FPS | mAP-S | mAP-T | mAP-C |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Baseline (YOLOv8n) | 83.6 | 54.2 | 0.845 | 3.01 | 8.1 | 212 | 70.1 | 75.3 | 88.5 |
| 2 | +C2F-Ortho | 84.0 | 55.0 | 0.839 | 3.05 | 8.1 | 185 | 72.5 | 76.0 | 88.2 |
| 3 | +DySnConv | 84.6 | 55.8 | 0.832 | 3.45 | 8.7 | 172 | 71.2 | 79.8 | 87.9 |
| 4 | +Both Modules | 86.1 | 57.9 | 0.859 | 3.49 | 8.8 | 166 | 73.8 | 81.1 | 90.1 |
| 5 | +Transfer Learning | 87.1 | 59.5 | 0.863 | 3.49 | 8.8 | 158 | 75.9 | 82.6 | 91.5 |
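The trade-off in the ablation table is easier to read as differences from the baseline. The short sketch below recomputes the mAP@0.5 gain (in percentage points) and the relative FPS change for each configuration, using only the values reported in the table; the helper name `deltas_vs_baseline` is hypothetical.

```python
# (configuration, mAP@0.5 in %, FPS), copied from the ablation table.
ablation = [
    ("Baseline (YOLOv8n)", 83.6, 212),
    ("+C2F-Ortho", 84.0, 185),
    ("+DySnConv", 84.6, 172),
    ("+Both Modules", 86.1, 166),
    ("+Transfer Learning", 87.1, 158),
]


def deltas_vs_baseline(rows):
    """Return (name, mAP gain in points, FPS change in %) for each non-baseline row."""
    _, base_map, base_fps = rows[0]
    return [
        (
            name,
            round(map50 - base_map, 1),
            round(100.0 * (fps - base_fps) / base_fps, 1),
        )
        for name, map50, fps in rows[1:]
    ]


gains = deltas_vs_baseline(ablation)
for name, map_gain, fps_change in gains:
    print(f"{name}: {map_gain:+.1f} mAP@0.5 points, {fps_change:+.1f}% FPS")
```

The final row reproduces the 3.5-point mAP@0.5 gain, obtained at roughly a quarter lower frame rate than the baseline.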

| Model | P (%) | R (%) | mAP@0.5 (%) | Parameters | GFLOPs | FPS |
|---|---|---|---|---|---|---|
| YOLOv5n | 84.2 | 74.2 | 81.0 | 1,772,695 | 4.2 | 270 |
| YOLOv5s | 83.5 | 77.2 | 82.8 | 7,037,095 | 15.8 | 227 |
| YOLOv7 | 87.8 | 83.6 | 82.5 | 36,490,443 | 103.2 | 81 |
| YOLOv7-CHS | 87.0 | 82.1 | 81.9 | 33,585,246 | 40.3 | 111 |
| YOLOv8n | 86.8 | 82.5 | 83.6 | 3,007,598 | 8.1 | 212 |
| YOLOv8s | 87.4 | 80.5 | 86.0 | 11,129,454 | 28.6 | 285 |
| YOLOv8m | 86.0 | 82.2 | 83.0 | 25,845,550 | 78.7 | 123 |
| UWS-YOLO | 87.3 | 84.1 | 87.1 | 3,491,638 | 8.8 | 158 |

| Scenario | Model | mAP@0.5 (%) | FN Rate | FP Rate |
|---|---|---|---|---|
| Scenario 1 | YOLOv8n | 82.1 | 0.18 | 0.12 |
| Scenario 1 | UWS-YOLO | 86.3 | 0.09 | 0.08 |
| Scenario 2 | YOLOv8n | 80.5 | 0.22 | 0.15 |
| Scenario 2 | UWS-YOLO | 84.7 | 0.11 | 0.10 |

| Model | mAP@0.5 (MDFLS) | mAP@0.5 Under Noise | FPS (Jetson) | Power (W) |
|---|---|---|---|---|
| YOLOv5n | 68.9 | 71.5 | 58 | 18.2 |
| YOLOv8n | 72.1 | 71.5 | 49 | 20.1 |
| YOLOv8s | 75.3 | 75.8 | 32 | 23.8 |
| UWS-YOLO | 76.4 | 78.4 | 37 | 22.5 |
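For embedded deployment, the FPS and power columns can be combined into an energy cost per processed frame. The sketch below derives that figure from the table values. It assumes the reported power is the average draw while running at the reported frame rate, which the table does not state explicitly; the energy values are therefore derived estimates, not results reported by the authors.

```python
# model: (FPS on Jetson, power in watts), copied from the table above.
edge_results = {
    "YOLOv5n": (58, 18.2),
    "YOLOv8n": (49, 20.1),
    "YOLOv8s": (32, 23.8),
    "UWS-YOLO": (37, 22.5),
}


def energy_per_frame(fps, power_watts):
    """Joules per frame, assuming power_watts is the average draw at this FPS."""
    return power_watts / fps


joules = {
    model: round(energy_per_frame(fps, power), 3)
    for model, (fps, power) in edge_results.items()
}
for model, value in joules.items():
    print(f"{model}: {value} J/frame")
```

Under this assumption UWS-YOLO costs about 0.61 J per frame, between YOLOv8n (about 0.41 J) and YOLOv8s (about 0.74 J).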


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, L.; Ren, X.; Fu, L.; Yun, Q.; Yang, J. UWS-YOLO: Advancing Underwater Sonar Object Detection via Transfer Learning and Orthogonal-Snake Convolution Mechanisms. J. Mar. Sci. Eng. 2025, 13, 1847. https://doi.org/10.3390/jmse13101847