Multi-Module Fusion Model for Submarine Pipeline Identification Based on YOLOv5

Abstract

1. Introduction

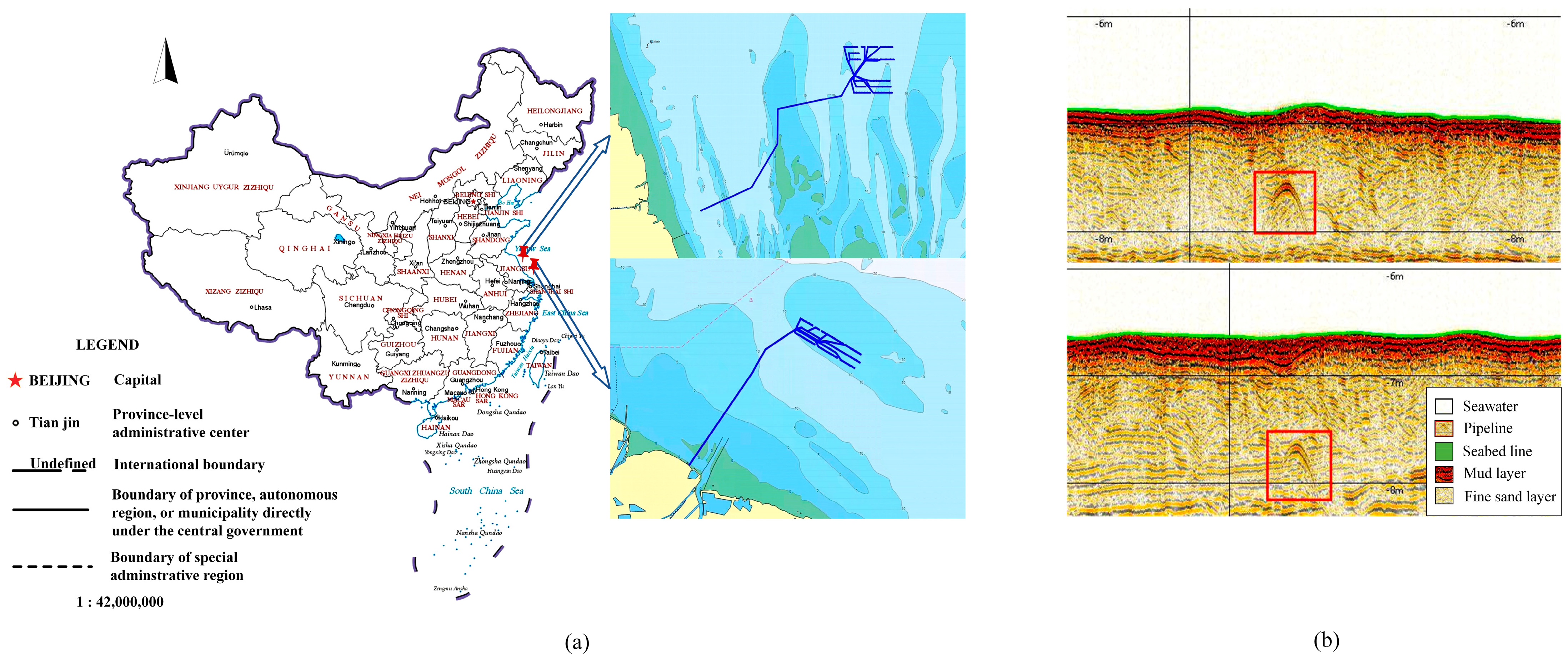

2. Experimental Data and Model

2.1. Experimental Background

2.2. Experimental Equipment

2.3. Data Preprocessing

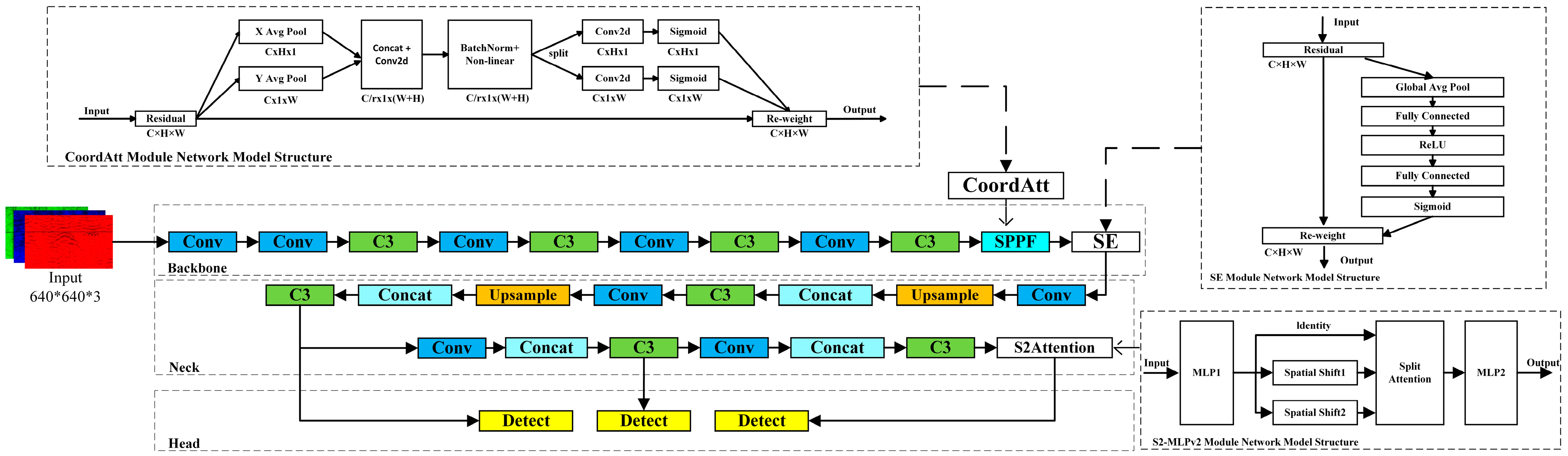

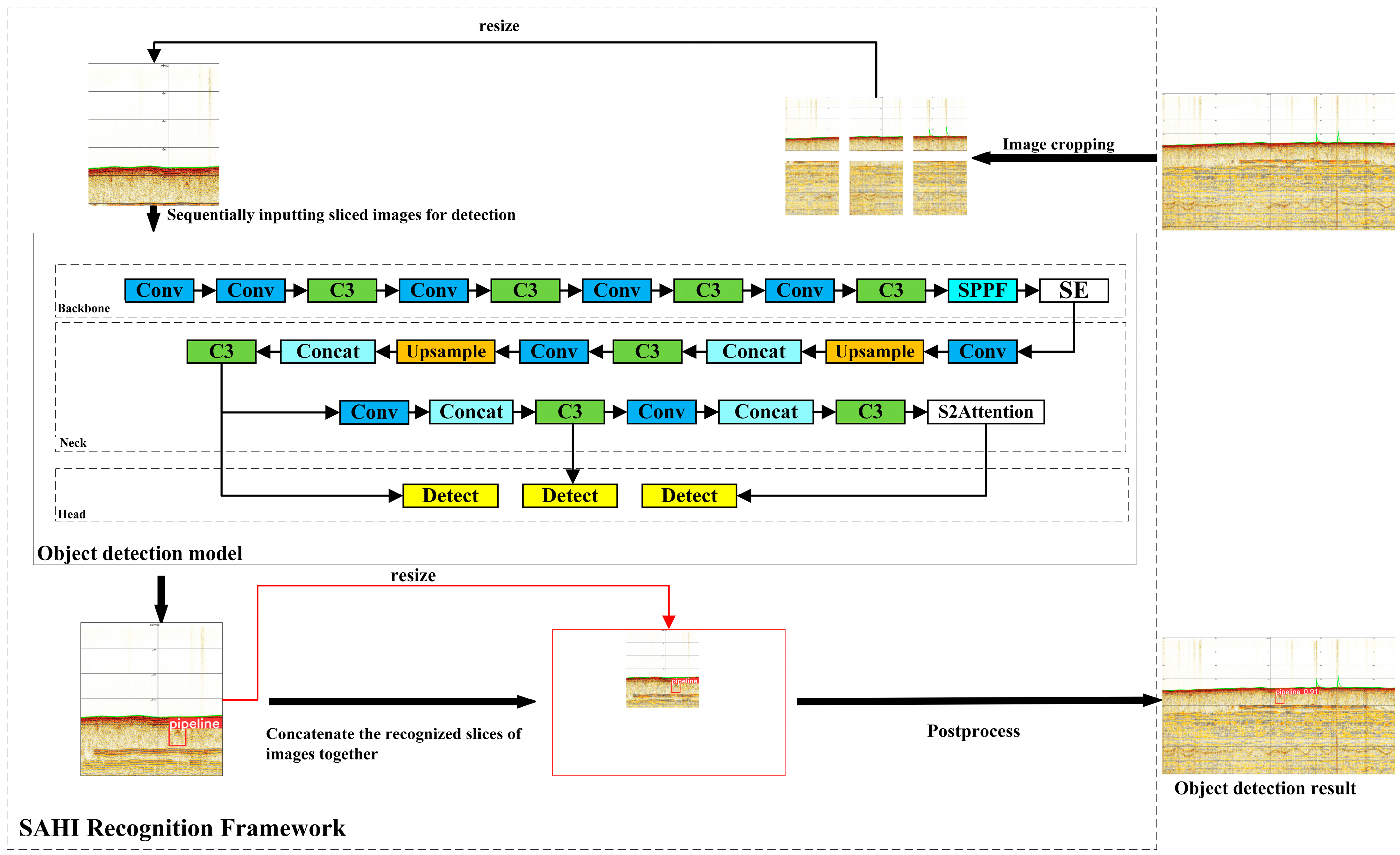

2.4. Experimental Model

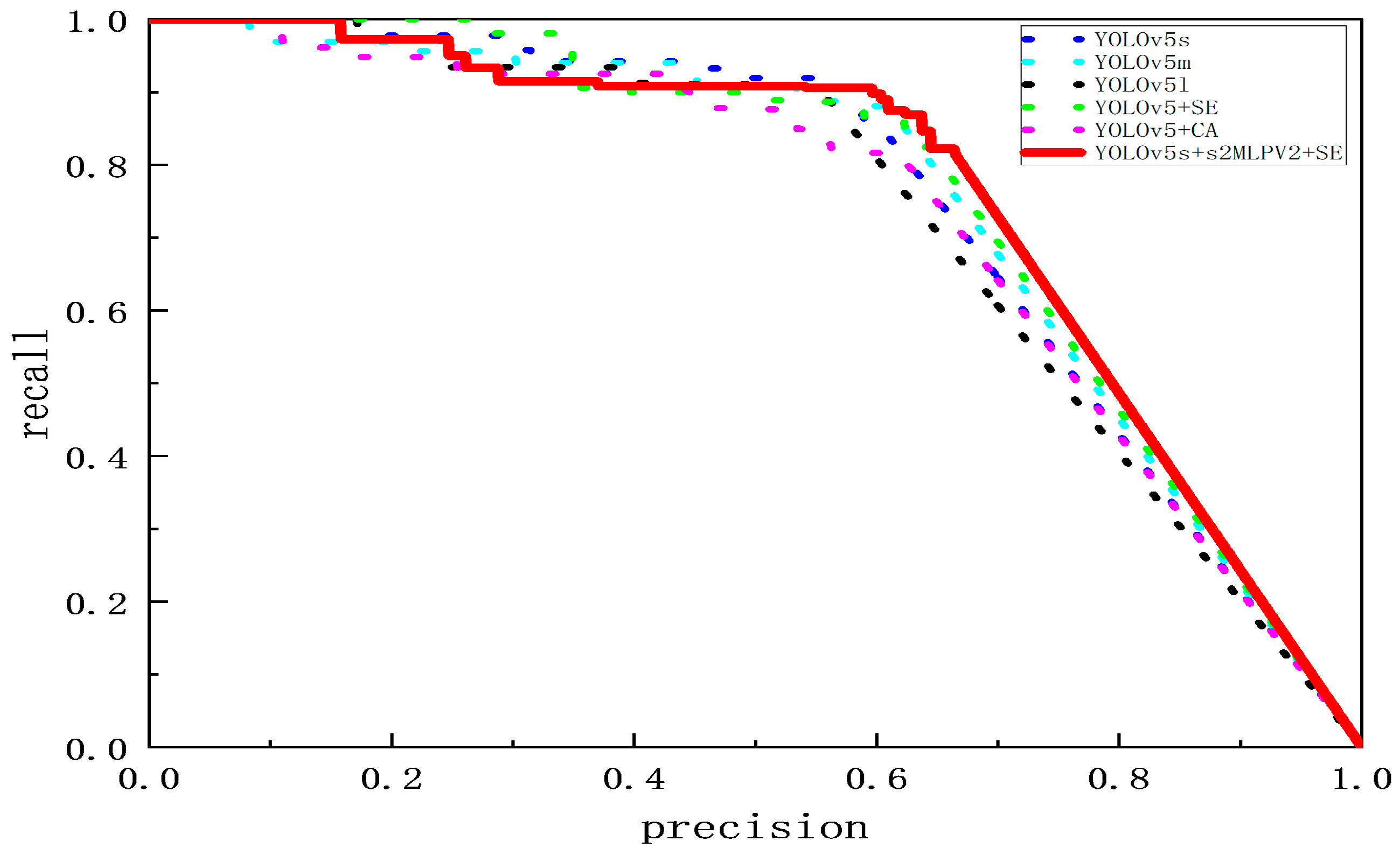

3. Experimental Results and Analysis

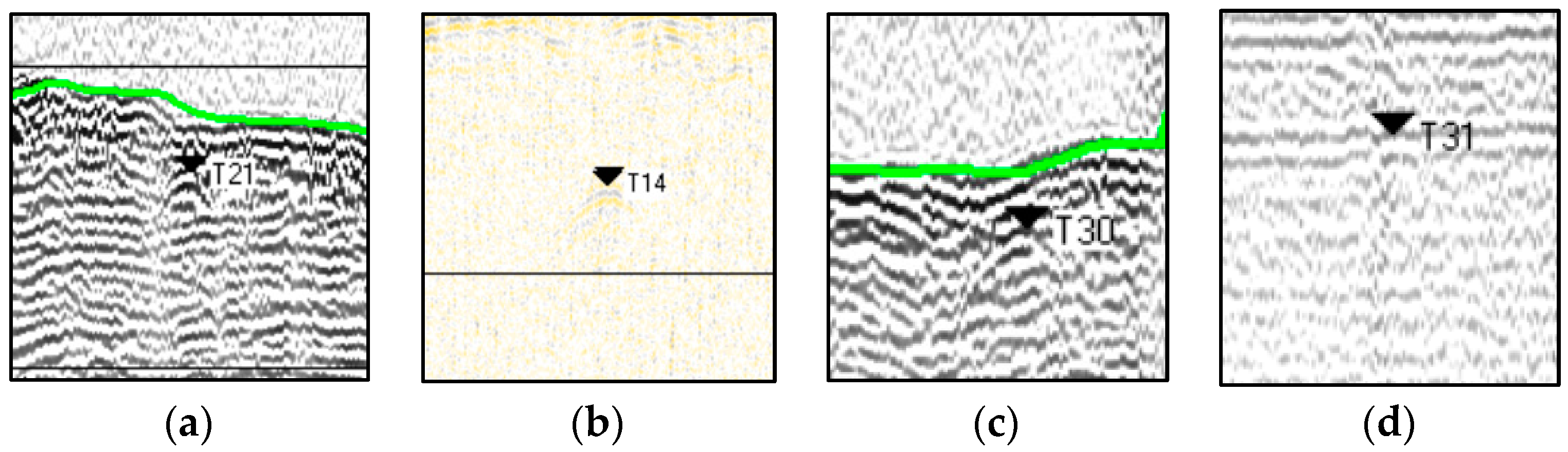

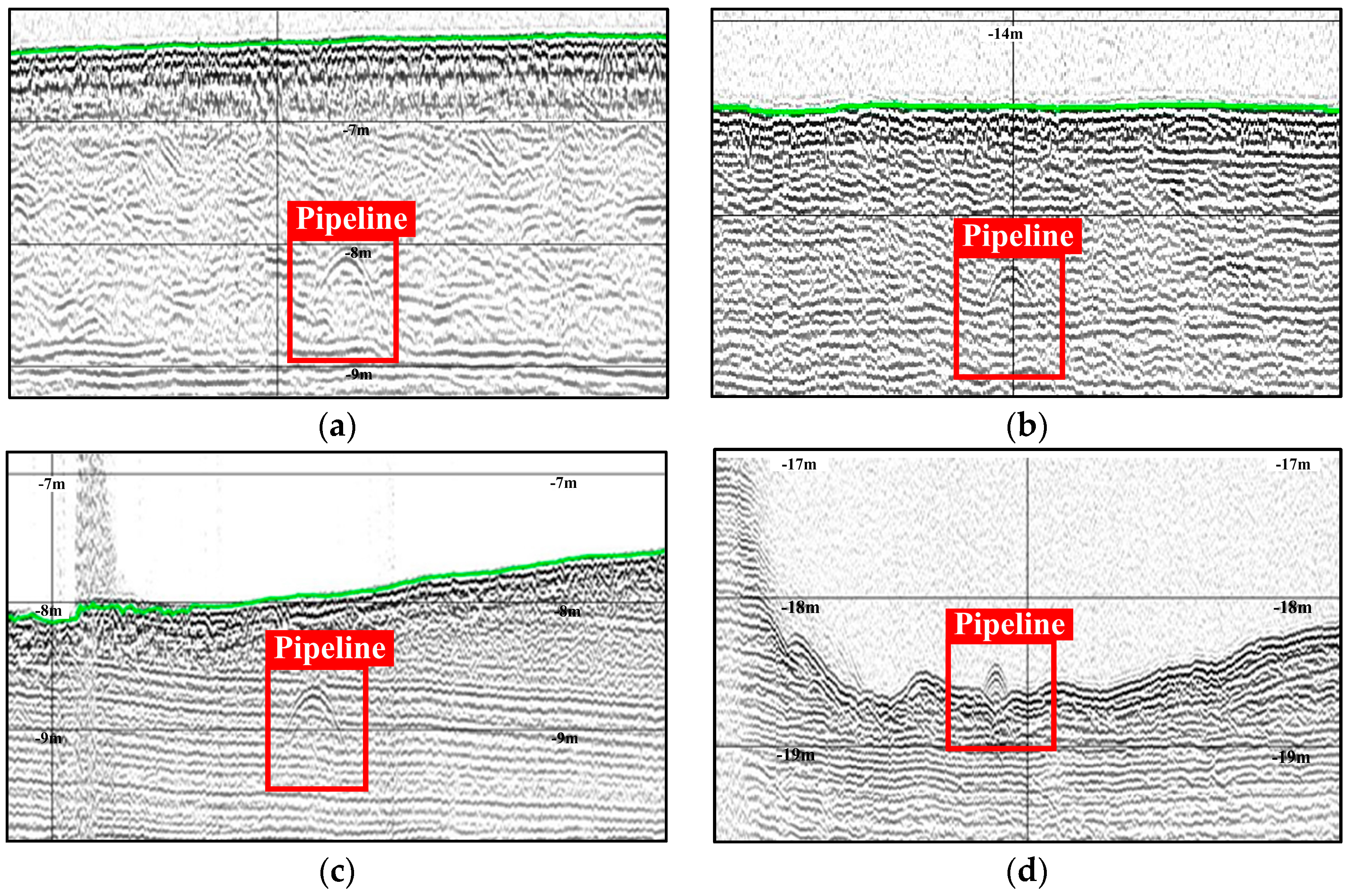

- Noise impact: Within the bandwidth constraints of the system, extraneous acoustic signals can introduce interference into the sonar image. When a pipeline lies close to the water surface, the effective beam aperture of the sonar narrows, which reduces the apparent scale of the pipeline in the imagery and makes it difficult to distinguish the pipeline from other reflecting objects. Figure 6a shows the impact of noise on pipeline imaging.

- Substrate influence: Different depths and substrates require different detection frequencies. Hard seabeds such as sand, rock, coral reefs, and shells severely limit acoustic penetration. This restricts the depth the instrument can explore and prevents the sub-bottom profiler (SBP) from effectively receiving echo signals. Figure 6b depicts the influence of the substrate on pipeline mapping.

- Ship swing: During measurement operations, fluctuations in the ship's speed and heading can cause the vessel to oscillate. This motion changes the distance between the survey equipment and the pipeline, distorting the pipeline's shape in the captured image. Figure 6c shows distortions in pipeline shape caused by ship swing.

- Air bubble effect: When a large volume of air bubbles surrounds the transducer, the vibration generated by the transducer cannot be transmitted efficiently into the water as an acoustic pulse. As a result, the SBP does not effectively receive echo signals and pipeline image information is lost. Figure 6d shows the loss of pipeline image information caused by the air bubble effect.
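To illustrate how vessel motion distorts the recorded pipeline shape, the sketch below models only vertical heave of the transducer as a sinusoid. This is a simplifying assumption: real ship swing also involves roll and pitch, and the text does not say whether motion-sensor data were recorded. The function names (`heave_series`, `apparent_depths`, `heave_compensate`) and the 12 m depth and 0.5 m amplitude in the usage note are hypothetical, chosen only for illustration.

```python
import math
from typing import List


def heave_series(n_pings: int, amplitude_m: float, period_pings: int) -> List[float]:
    """Hypothetical sinusoidal vertical displacement of the transducer (m, positive = up)."""
    return [amplitude_m * math.sin(2 * math.pi * i / period_pings) for i in range(n_pings)]


def apparent_depths(true_depths: List[float], heave: List[float]) -> List[float]:
    """Range from transducer to target as recorded on each ping.

    If the transducer rises by h metres, the range to a fixed target grows by h,
    so a straight pipeline is drawn with the heave pattern superimposed on it.
    """
    return [d + h for d, h in zip(true_depths, heave)]


def heave_compensate(recorded: List[float], heave: List[float]) -> List[float]:
    """Subtract a measured heave signal (assumed available) from the recorded ranges."""
    return [r - h for r, h in zip(recorded, heave)]
```

For a flat pipeline at 12.0 m and 0.5 m of heave, `apparent_depths([12.0] * 40, heave_series(40, 0.5, 20))` swings between about 11.5 m and 12.5 m, so the pipeline appears wavy; subtracting the same heave series restores the flat 12.0 m profile.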

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Configuration |
|---|---|
| Operating system | Windows 11 |
| Deep learning framework | PyTorch 1.9.1+cu111 |
| Programming language | Python 3.9 |
| GPU-accelerated environment | CUDA 11.1 |
| GPU | NVIDIA GeForce RTX 4060 Laptop |
| CPU | 12th Gen Intel(R) Core(TM) i7-12650H |
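A quick way to compare a local setup with the configuration above is to query the interpreter and, if it is installed, PyTorch. The helper name `describe_environment` is hypothetical; it uses only the standard `platform` and `importlib` modules plus PyTorch's `torch.__version__`, `torch.version.cuda`, and `torch.cuda` device queries, and it degrades gracefully when PyTorch or a GPU is absent.

```python
import importlib.util
import platform


def describe_environment() -> dict:
    """Collect the fields listed in the configuration table (hypothetical helper)."""
    info = {"python": platform.python_version(), "os": platform.system(),
            "torch": None, "cuda": None, "gpu": None}
    if importlib.util.find_spec("torch") is None:
        return info  # PyTorch not installed: leave framework fields empty
    import torch
    info["torch"] = torch.__version__      # e.g. a "+cu111" suffix for CUDA 11.1 builds
    info["cuda"] = torch.version.cuda      # None for CPU-only builds
    if torch.cuda.is_available():
        info["gpu"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key:7s}: {value}")
```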

| Models | P | R | mAP@0.5 |
|---|---|---|---|
| YOLOv5s | 0.852 | 0.438 | 0.681 |
| YOLOv5m | 0.861 | 0.610 | 0.749 |
| YOLOv5l | 0.840 | 0.555 | 0.729 |
| YOLOv5s+SE | 0.845 | 0.637 | 0.754 |
| YOLOv5s+CA | 0.812 | 0.623 | 0.727 |
| YOLOv5s+S2-MLPv2+SE | 0.848 | 0.651 | 0.760 |
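The mAP@0.5 column is the mean, over classes, of the average precision (AP) computed when a detection counts as correct only if its intersection over union (IoU) with a ground-truth box is at least 0.5. Assuming a single "pipeline" class, mAP@0.5 reduces to one AP value. The minimal sketch below uses VOC-style all-point interpolation; YOLOv5's own evaluation code interpolates the precision-recall curve somewhat differently, so its numbers may differ slightly. The box format `(x1, y1, x2, y2)` and the function names are assumptions for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed pixel coordinates


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections: List[Tuple[str, float, Box]],
                      ground_truth: Dict[str, List[Box]],
                      iou_thr: float = 0.5) -> float:
    """Single-class AP at a fixed IoU threshold (all-point interpolation)."""
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    precisions, recalls, tp = [], [], 0
    # Visit detections from most to least confident.
    ranked = sorted(detections, key=lambda d: -d[1])
    for rank, (img, _score, box) in enumerate(ranked, start=1):
        best, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best:
                best, best_j = overlap, j
        # True positive only if the best-overlapping ground truth is still unmatched;
        # duplicate detections of the same pipeline count as false positives.
        if best >= iou_thr and not used[img][best_j]:
            used[img][best_j] = True
            tp += 1
        precisions.append(tp / rank)
        recalls.append(tp / n_gt)
    # Pad the curve, take the precision envelope, and integrate over recall.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i]
               for i in range(1, len(mrec)) if mrec[i] != mrec[i - 1])
```

With two ground-truth pipelines in one image and detections ranked true positive, false positive, true positive, the precision envelope is 1.0 up to recall 0.5 and 2/3 up to recall 1.0, giving AP = 0.5 + 0.5 × 2/3 ≈ 0.833.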

| Models | P | R | F1 |
|---|---|---|---|
| YOLOv5s | 0.885 | 0.576 | 0.698 |
| YOLOv5m | 0.796 | 0.872 | 0.832 |
| YOLOv5l | 0.870 | 0.856 | 0.863 |
| YOLOv5s+SE | 0.808 | 0.840 | 0.823 |
| YOLOv5s+CA | 0.824 | 0.872 | 0.818 |
| YOLOv5s+S2-MLPv2+SE | 0.825 | 0.992 | 0.900 |
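F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R), so each row of the table above can be checked directly. The short sketch below recomputes it from the rounded P and R values; the dictionary simply copies the table. Under this formula the listed F1 is reproduced to within about 0.001 for every row except YOLOv5s+CA, where 2PR/(P + R) gives approximately 0.847 rather than 0.818.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R, listed F1) copied from the table above.
ROWS = {
    "YOLOv5s": (0.885, 0.576, 0.698),
    "YOLOv5m": (0.796, 0.872, 0.832),
    "YOLOv5l": (0.870, 0.856, 0.863),
    "YOLOv5s+SE": (0.808, 0.840, 0.823),
    "YOLOv5s+CA": (0.824, 0.872, 0.818),
    "YOLOv5s+S2-MLPv2+SE": (0.825, 0.992, 0.900),
}

if __name__ == "__main__":
    for model, (p, r, listed) in ROWS.items():
        print(f"{model:20s} listed={listed:.3f} recomputed={f1_score(p, r):.3f}")
```

Because F1 penalizes the weaker of the two metrics, the high recall (0.992) of YOLOv5s+S2-MLPv2+SE lifts its F1 above YOLOv5l even though its precision is lower.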

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Duan, B.; Wang, S.; Luo, C.; Chen, Z. Multi-Module Fusion Model for Submarine Pipeline Identification Based on YOLOv5. J. Mar. Sci. Eng. 2024, 12, 451. https://doi.org/10.3390/jmse12030451
