GT-YOLO: Nearshore Infrared Ship Detection Based on Infrared Images

Abstract

1. Introduction

- (1) Because of the physical characteristics of infrared imaging, infrared images have lower resolution than visible-light images. Small target ships therefore carry limited information, and crucial details are easily lost during feature extraction, which degrades detection.

- (2) In maritime scenes, ships of the same category appear at different scales in the image, so small targets are easily missed. Detection algorithms therefore need a stronger capability for multi-scale target detection.

- (3) Coastal areas and ports often hold a large number of ships. Mutual occlusion between ships makes accurate localization and classification in nearshore environments significantly harder.

- (1) To capture long-range contextual information about the targets, this paper introduces a feature fusion module based on a fused attention mechanism. The module enhances feature fusion while suppressing the noise introduced by shallow feature layers.

- (2) To mitigate the loss of detail caused by low resolution and its impact on small-object detection, the SPD-Conv module is introduced to improve the detection accuracy of small objects.

- (3) To address dense occlusion, which is common near the coast, this paper introduces Soft-NMS so that the detection model maintains strong performance in densely occluded scenes.

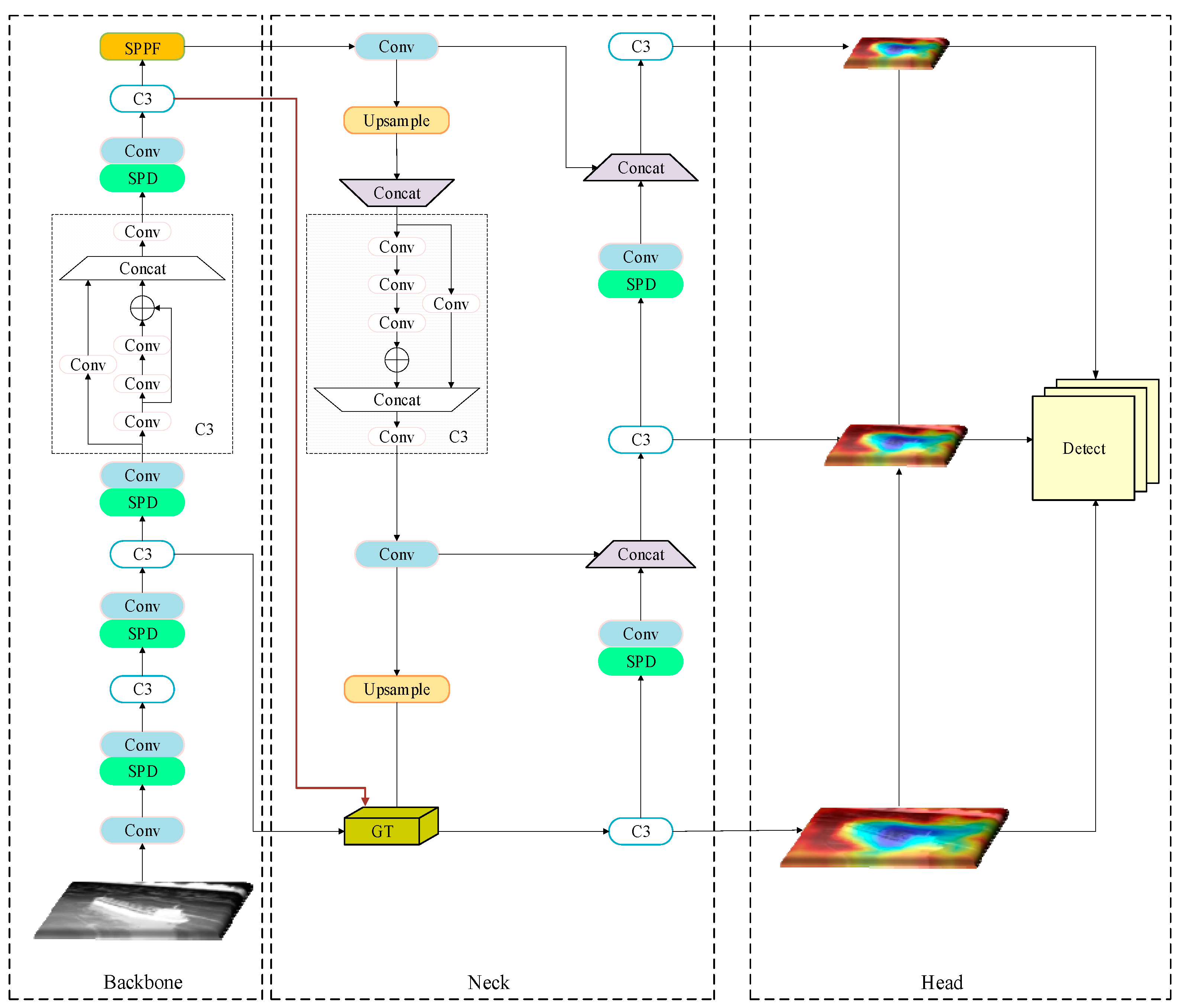

2. GT-YOLO

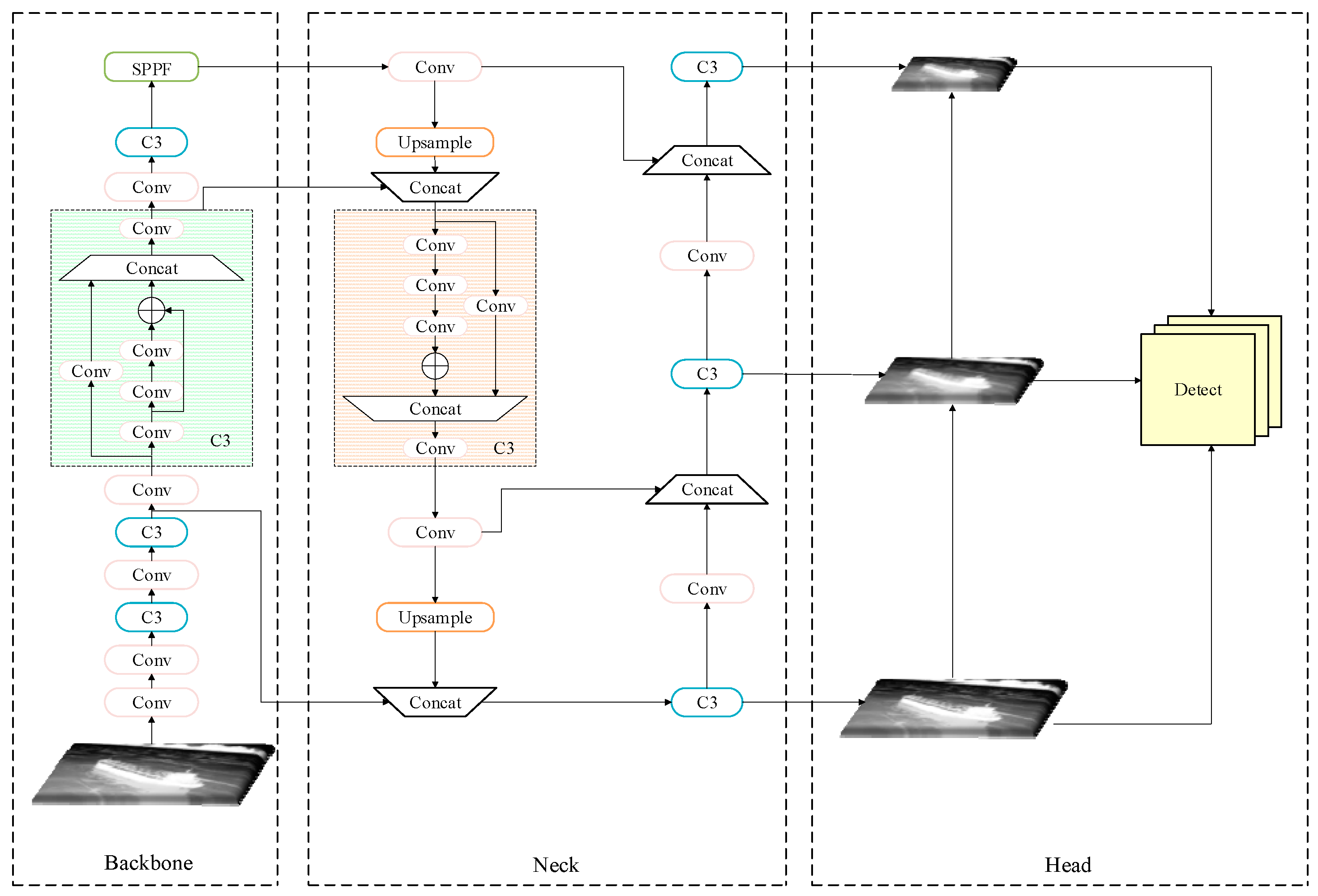

2.1. YOLOv5

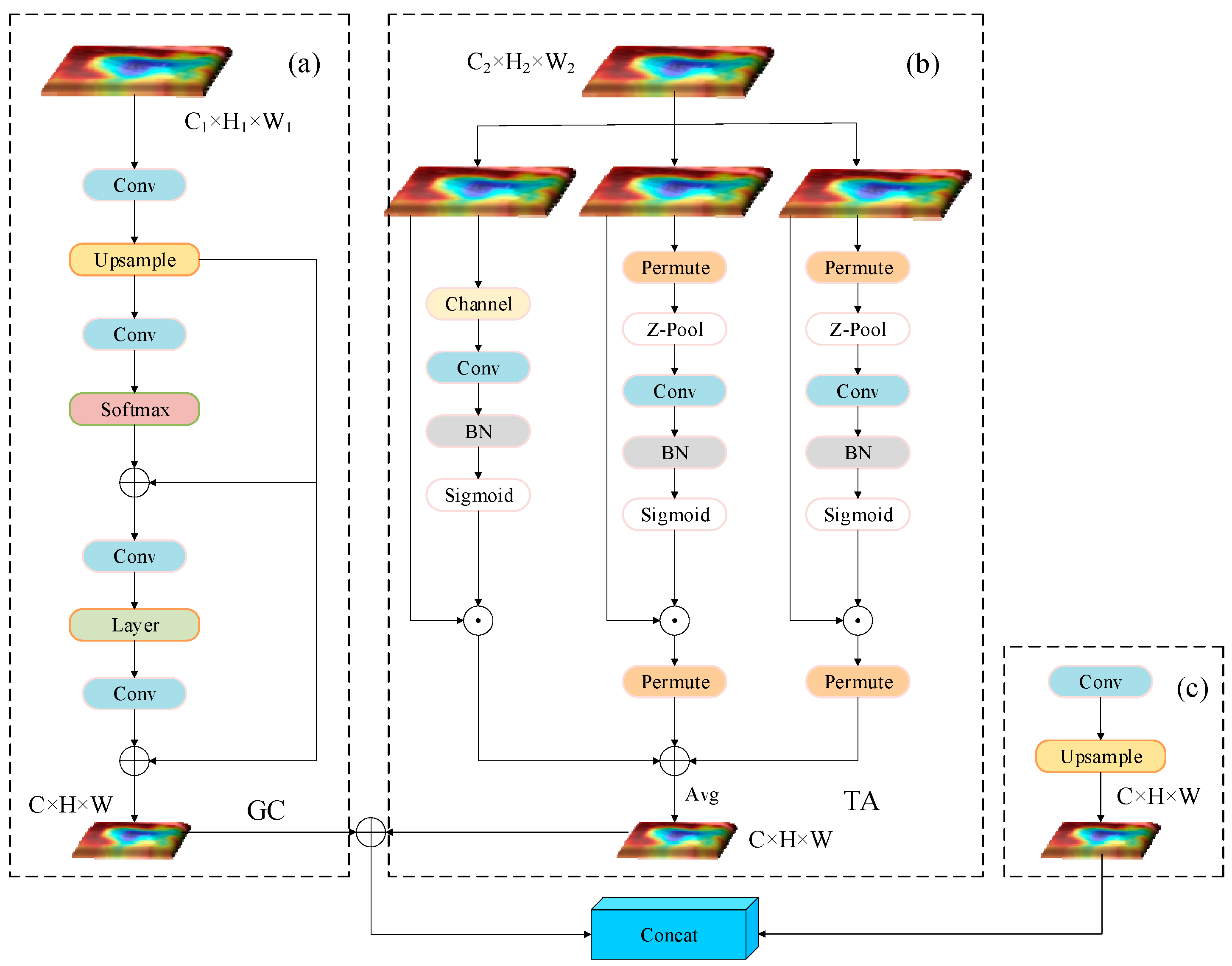

2.2. Feature Fusion Enhancement

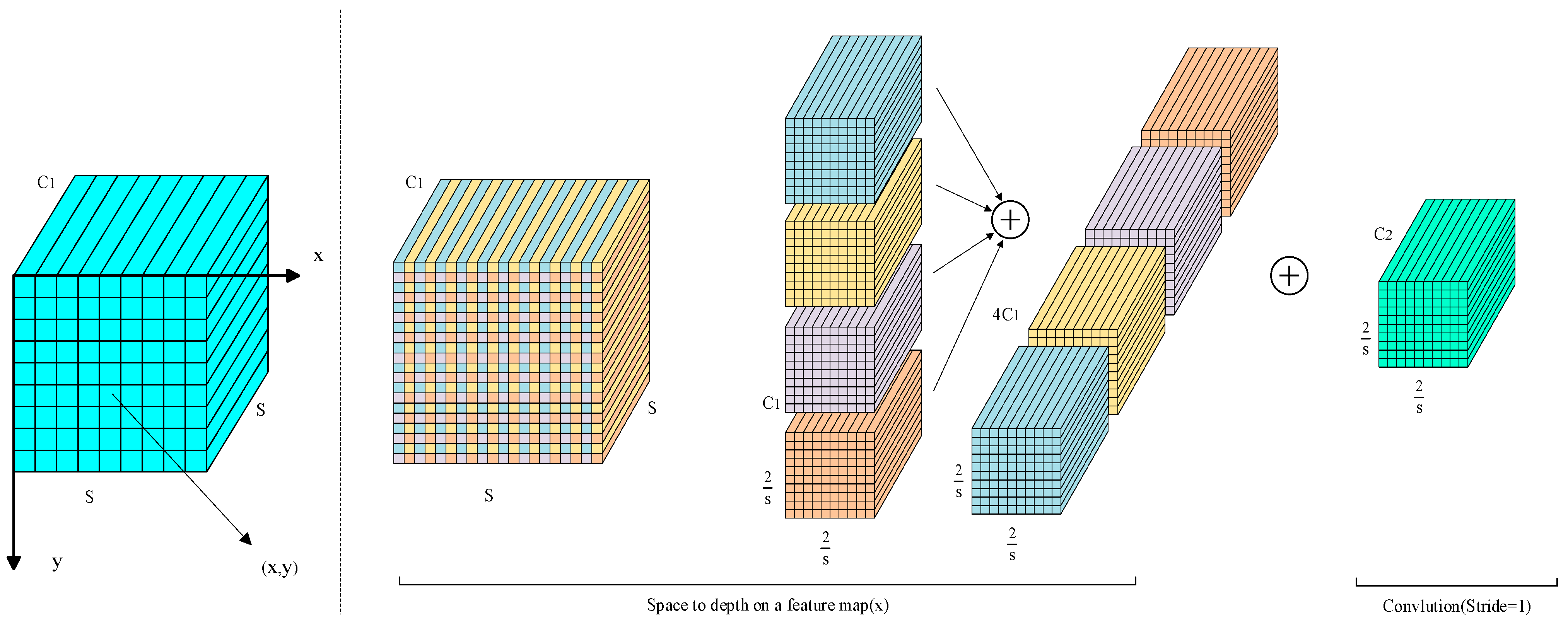

2.3. SPD-Conv
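
As a rough illustration of the SPD-Conv building block named in the contributions (a space-to-depth rearrangement followed by a non-strided convolution, per Sunkara and Luo in the references), the sketch below downsamples a feature map without discarding any pixel. It is not the authors' implementation: the function names `space_to_depth` and `pointwise_conv`, the nested-list `[C][H][W]` layout, the channel ordering, and the use of a 1x1 kernel for the stride-1 convolution are all assumptions made for brevity.

```python
def space_to_depth(feature_map, scale=2):
    """Rearrange a [C][H][W] map into [C * scale**2][H // scale][W // scale].

    Every pixel is kept; spatial resolution is traded for channel depth.
    """
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    if height % scale or width % scale:
        raise ValueError("height and width must be divisible by scale")
    sub_maps = []
    for dx in range(scale):        # column offset of the sub-sampled grid
        for dy in range(scale):    # row offset of the sub-sampled grid
            for channel in feature_map:
                sub_maps.append([row[dx::scale] for row in channel[dy::scale]])
    return sub_maps


def pointwise_conv(feature_map, weights):
    """Stride-1 1x1 convolution (assumed kernel size, chosen for brevity).

    weights[o][i] is the contribution of input channel i to output channel o.
    """
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    return [
        [
            [sum(w * feature_map[i][y][x] for i, w in enumerate(out_weights))
             for x in range(width)]
            for y in range(height)
        ]
        for out_weights in weights
    ]


# Hypothetical 1-channel 4x4 input with values 0..15.
x = [[[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]]
stacked = space_to_depth(x)                           # 4 channels of size 2x2
mixed = pointwise_conv(stacked, [[0.25, 0.25, 0.25, 0.25]])
```

Unlike a stride-2 convolution or pooling layer, the rearrangement keeps all 16 input values available to the following convolution, which is the property that motivates using it for low-resolution infrared targets.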

2.4. Soft-NMS
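
The following is a minimal sketch of Soft-NMS as proposed by Bodla et al. (see the references), included to show why it helps with mutually occluded ships: overlapping detections have their scores decayed rather than being deleted outright. The Gaussian penalty is one of the two variants in that work, and the source does not state which one GT-YOLO uses; the names `iou` and `soft_nms`, the `(x1, y1, x2, y2)` box format, and the defaults `sigma=0.5` and `score_threshold=0.001` are assumptions for illustration.

```python
import math


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian Soft-NMS. Returns (box_index, final_score) in selection order."""
    candidates = [(score, index) for index, score in enumerate(scores)]
    kept = []
    while candidates:
        # Select the remaining detection with the highest current score.
        best = max(range(len(candidates)), key=lambda k: candidates[k][0])
        best_score, best_index = candidates.pop(best)
        if best_score < score_threshold:
            break
        kept.append((best_index, best_score))
        # Decay the rest by exp(-IoU^2 / sigma) instead of removing them.
        decayed = []
        for score, index in candidates:
            overlap = iou(boxes[best_index], boxes[index])
            new_score = score * math.exp(-(overlap ** 2) / sigma)
            if new_score >= score_threshold:
                decayed.append((new_score, index))
        candidates = decayed
    return kept


# Hypothetical detections: two heavily overlapping ships and one isolated ship.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
result = soft_nms(boxes, scores)
```

In this example the second box overlaps the first with an IoU of about 0.68. Hard NMS with a typical 0.5 threshold would discard it, whereas the sketch keeps it with a reduced score, so a partially hidden ship can still be reported.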

3. Experiments

3.1. Experimental Settings

3.2. Infrared Ship Dataset

3.3. Evaluation Metrics

3.4. Results and Discussion

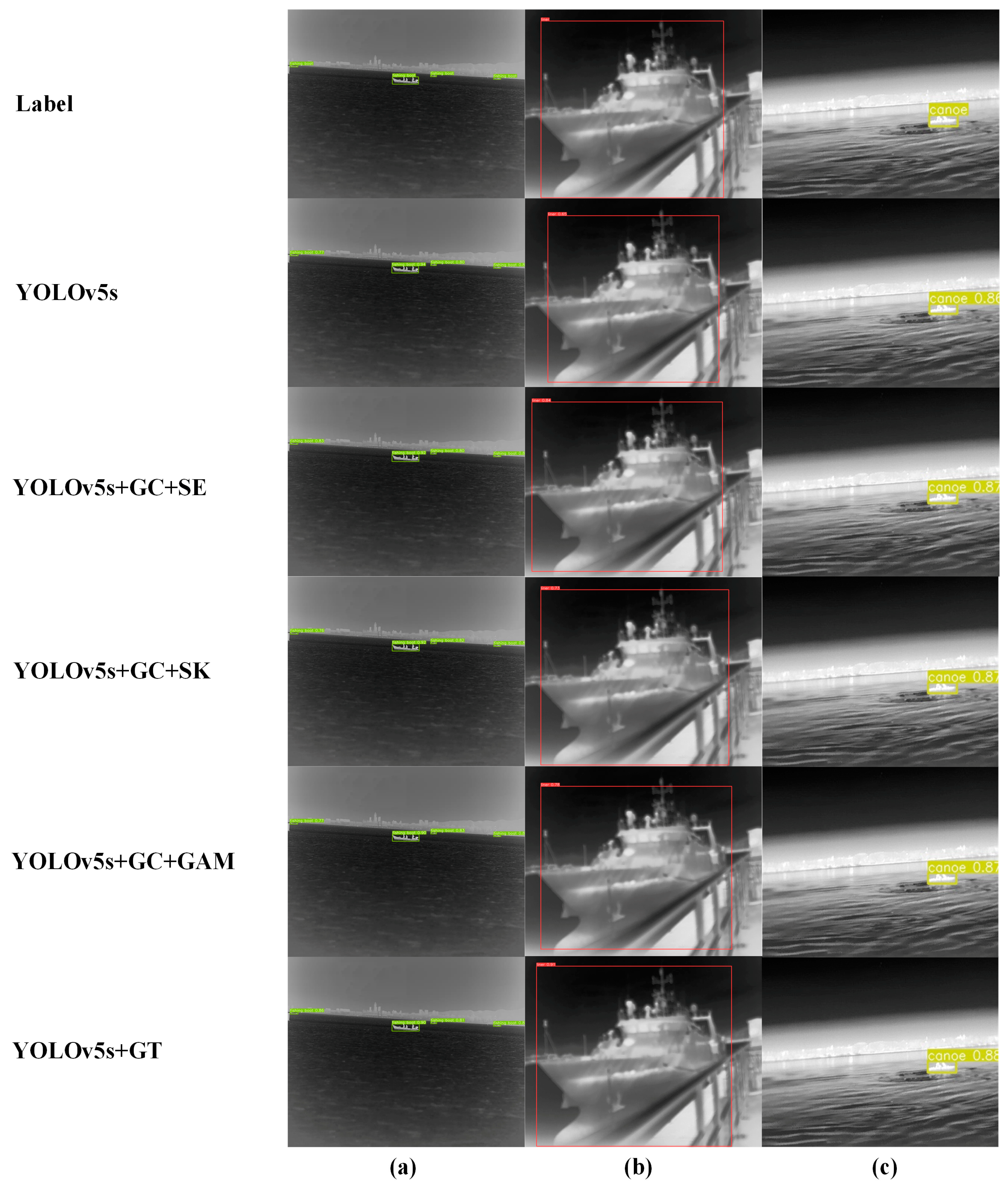

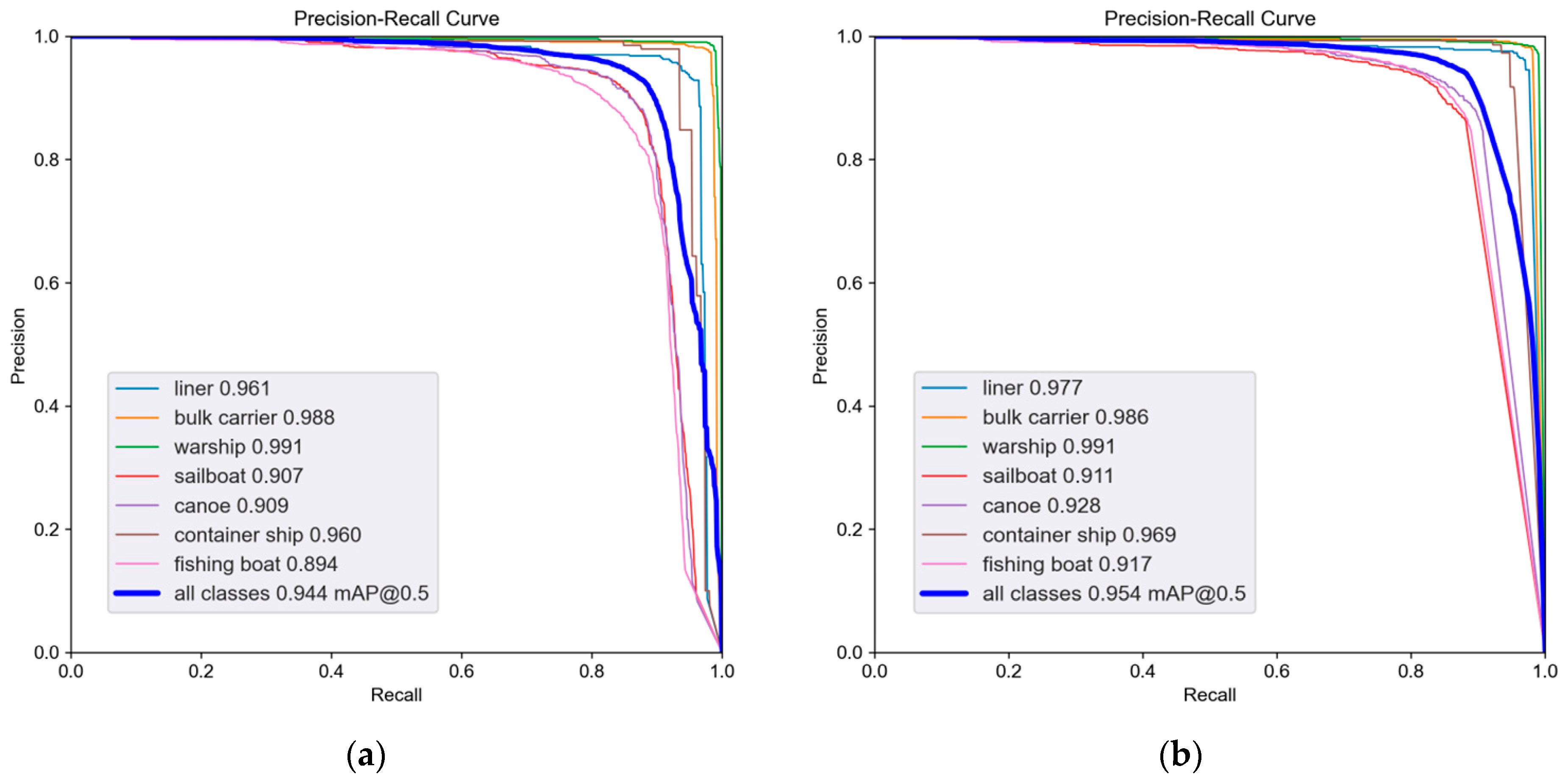

3.4.1. The Impact of the Feature Fusion Module

3.4.2. Ablation Experiment

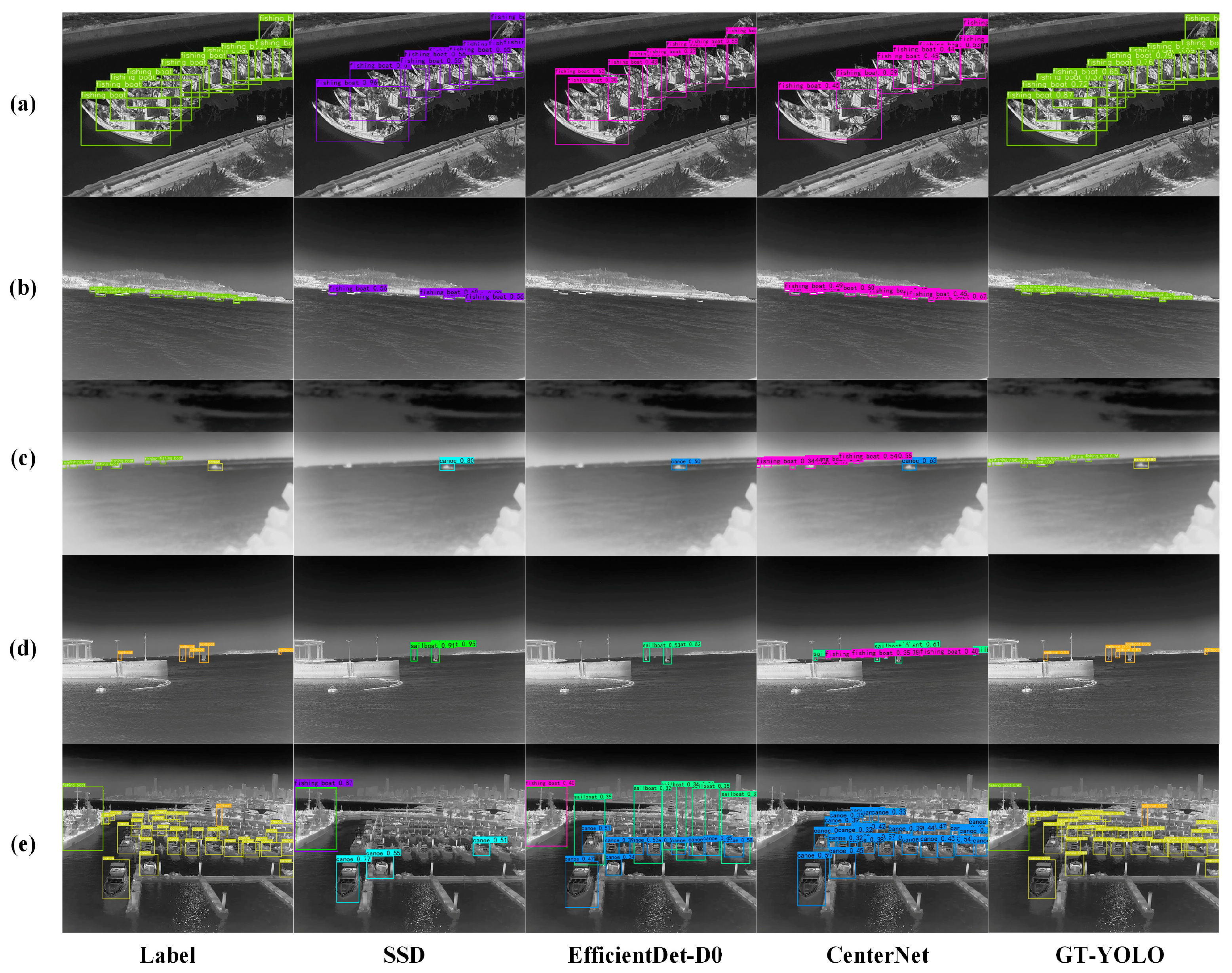

3.4.3. Comparison with Other Algorithms

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhu, J.; Yang, Y.; Cheng, Y. A Millimeter-Wave Radar-Aided Vision Detection Method for Water Surface Small Object Detection. J. Mar. Sci. Eng. 2023, 11, 1794.

- Li, Y.; Wang, R.; Gao, D.; Liu, Z. A Floating-Waste-Detection Method for Unmanned Surface Vehicle Based on Feature Fusion and Enhancement. J. Mar. Sci. Eng. 2023, 11, 2234.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Part I; Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: A simple and strong anchor-free object detector. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1922–1933.

- Shi, Q.; Zhang, C.; Chen, Z.; Lu, F.; Ge, L.; Wei, S. An infrared small target detection method using coordinate attention and feature fusion. Infrared Phys. Technol. 2023, 131, 104614.

- Ye, J.; Yuan, Z.; Qian, C.; Li, X. CAA-YOLO: Combined-attention-augmented YOLO for infrared ocean ships detection. Sensors 2022, 22, 3782.

- Si, J.; Song, B.; Wu, J.; Lin, W.; Huang, W.; Chen, S. Maritime Ship Detection Method for Satellite Images Based on Multiscale Feature Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 6642–6655.

- Guo, H.; Gu, D. Closely arranged inshore ship detection using a bi-directional attention feature pyramid network. Int. J. Remote Sens. 2023, 44, 7106–7125.

- Wang, J.; Pan, Q.; Lu, D.; Zhang, Y. An Efficient Ship-Detection Algorithm Based on the Improved YOLOv5. Electronics 2023, 12, 3600.

- Shi, T.; Ding, Y.; Zhu, W. YOLOv5s_2E: Improved YOLOv5s for Aerial Small Target Detection. IEEE Access 2023, 11, 80479–80490.

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019; pp. 1971–1980.

- Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to attend: Convolutional triplet attention module. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3139–3148.

- Kim, Y.; Kang, B.N.; Kim, D. SAN: Learning relationship between convolutional features for multi-scale object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 316–331.

- Sunkara, R.; Luo, T. No more strided convolutions or pooling: A new CNN building block for low-resolution images and small objects. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer Nature: Cham, Switzerland, 2022; pp. 443–459.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS: Improving object detection with one line of code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5561–5569.

- InfiRay Dataset [OL]. Available online: http://openai.iraytek.com/apply/Sea_shipping.html/ (accessed on 15 March 2023).

- Konovalenko, I.; Maruschak, P.; Kozbur, H.; Brezinová, J.; Brezina, J.; Nazarevich, B.; Shkira, Y. Influence of uneven lighting on quantitative indicators of surface defects. Machines 2022, 10, 194.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

- Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 510–519.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

| Hyperparameter | Value |
|---|---|
| Learning rate | 0.01 |
| Momentum | 0.937 |
| Data augmentation | Mosaic |
| Epochs | 150 |
| Batch size | 8 |

| Model | mAP0.5 (%) | mAP0.75 (%) | mAP0.5:0.95 (%) | GFLOPS | Parameters (M) | FPS |
|---|---|---|---|---|---|---|
| YOLOv5s | 94.4 | 77.7 | 69.5 | 15.8 | 7.0 | 244 |
| 5s + GC + SE | 94.7 | 77.4 | 69.5 | 17.0 | 7.2 | 243 |
| 5s + GC + GAM | 94.8 | 77.9 | 69.9 | 18.4 | 7.3 | 217 |
| 5s + GC + SK | 94.7 | 77.6 | 69.8 | 21.4 | 7.5 | 210 |
| 5s + GT | 94.8 | 78.3 | 70.4 | 17.1 | 7.2 | 238 |

| Model | mAP0.75 (%) | mAP0.5:0.95 (%) | GFLOPS | Parameters (M) |
|---|---|---|---|---|
| YOLOv5s | 77.7 | 69.5 | 15.8 | 7.0 |
| YOLOv5s + GT | 78.3 | 70.4 | 17.1 | 7.2 |
| YOLOv5s + GT + SPD | 79.2 | 71.3 | 34.4 | 8.7 |
| YOLOv5s + GT + SPD + Soft-NMS | 83.4 | 74.5 | 34.4 | 8.7 |
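
To make the contribution of each component in the ablation table above easier to read, the short sketch below computes the gain added at each step in percentage points. The numbers are copied from the table; the list `ablation`, the helper `stepwise_gains`, and the shortened row labels are illustrative names, not part of the original work.

```python
# (configuration, mAP0.75 %, mAP0.5:0.95 %) copied from the ablation table.
ablation = [
    ("YOLOv5s", 77.7, 69.5),
    ("+ GT", 78.3, 70.4),
    ("+ SPD", 79.2, 71.3),
    ("+ Soft-NMS", 83.4, 74.5),
]


def stepwise_gains(rows):
    """Return (step, gain in mAP0.75, gain in mAP0.5:0.95) for each added module."""
    gains = []
    for (_, prev_75, prev_all), (name, cur_75, cur_all) in zip(rows, rows[1:]):
        # Round to one decimal to match the table's precision.
        gains.append((name, round(cur_75 - prev_75, 1), round(cur_all - prev_all, 1)))
    return gains


gains = stepwise_gains(ablation)
total_gain = (
    round(ablation[-1][1] - ablation[0][1], 1),
    round(ablation[-1][2] - ablation[0][2], 1),
)

for name, gain_75, gain_all in gains:
    print(f"{name}: +{gain_75} mAP0.75, +{gain_all} mAP0.5:0.95")
print(f"total: +{total_gain[0]} mAP0.75, +{total_gain[1]} mAP0.5:0.95")
```

Read this way, Soft-NMS accounts for the largest single step in both metrics, while SPD-Conv accounts for the increase in GFLOPS from 17.1 to 34.4.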

| Class | YOLOv5s AP0.75 (%) | YOLOv5s AP0.5:0.95 (%) | GT-YOLO AP0.75 (%) | GT-YOLO AP0.5:0.95 (%) |
|---|---|---|---|---|
| Liner | 78.3 | 68.0 | 85.8 | 74.4 |
| Bulk carrier | 94.9 | 82.0 | 96.4 | 85.7 |
| Warship | 95.7 | 85.2 | 96.9 | 87.5 |
| Sailboat | 59.8 | 56.7 | 69.0 | 62.6 |
| Canoe | 67.3 | 59.9 | 75.8 | 65.4 |
| Container ship | 91.6 | 80.7 | 93.1 | 84.6 |
| Fishing boat | 56.5 | 54.3 | 66.6 | 60.8 |

| Model | mAP0.5 (%) | mAP0.5:0.95 (%) | GFLOPS | Parameters (M) |
|---|---|---|---|---|
| YOLOv5s | 94.4 | 69.5 | 15.8 | 7.0 |
| YOLOv5m | 95.2 | 71.1 | 47.9 | 20.9 |
| YOLOv7-Tiny [25] | 92.9 | 65.6 | 13.2 | 6.0 |
| YOLOv8s | 93.2 | 71.0 | 28.5 | 11.1 |
| GT-YOLO | 95.4 | 74.5 | 34.4 | 8.7 |

| Model | mAP0.5 (%) | mAP0.5:0.95 (%) | GFLOPS | Parameters (M) | FPS |
|---|---|---|---|---|---|
| SSD | 78.0 | 46.6 | 39.0 | 12.50 | 149 |
| EfficientDet-D0 | 62.2 | 39.5 | 4.8 | 3.83 | 53 |
| CenterNet | 78.5 | 48.6 | 70.0 | 32.00 | 125 |
| GT-YOLO | 95.4 | 74.5 | 34.4 | 8.70 | 152 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Wang, B.; Huo, L.; Fan, Y. GT-YOLO: Nearshore Infrared Ship Detection Based on Infrared Images. J. Mar. Sci. Eng. 2024, 12, 213. https://doi.org/10.3390/jmse12020213
