Underwater Refractive Stereo Vision Measurement and Simulation Imaging Model Based on Optical Path

Abstract

1. Introduction

2. Methods

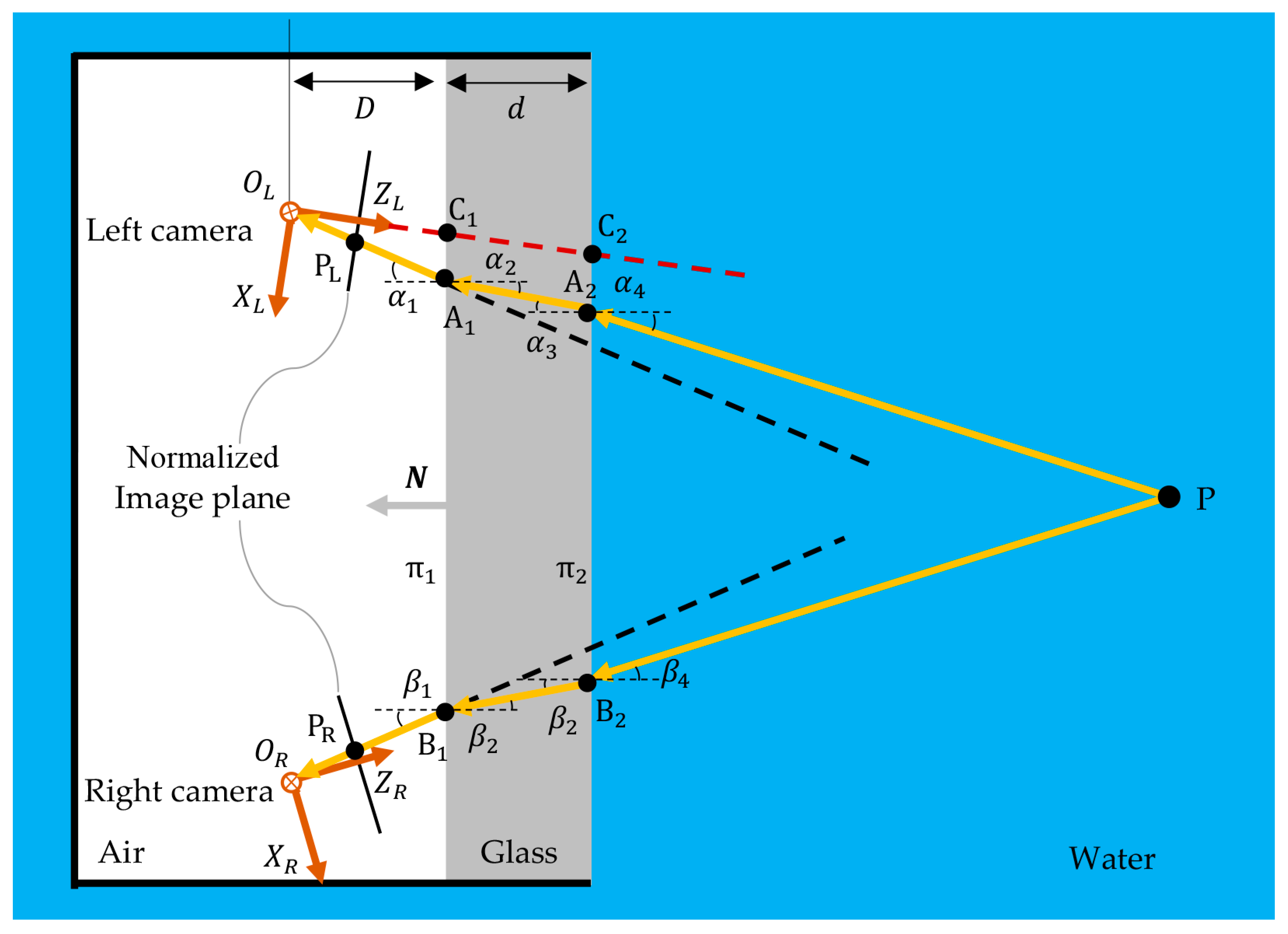

2.1. Stereo Measurement Model with Two Flat Interfaces

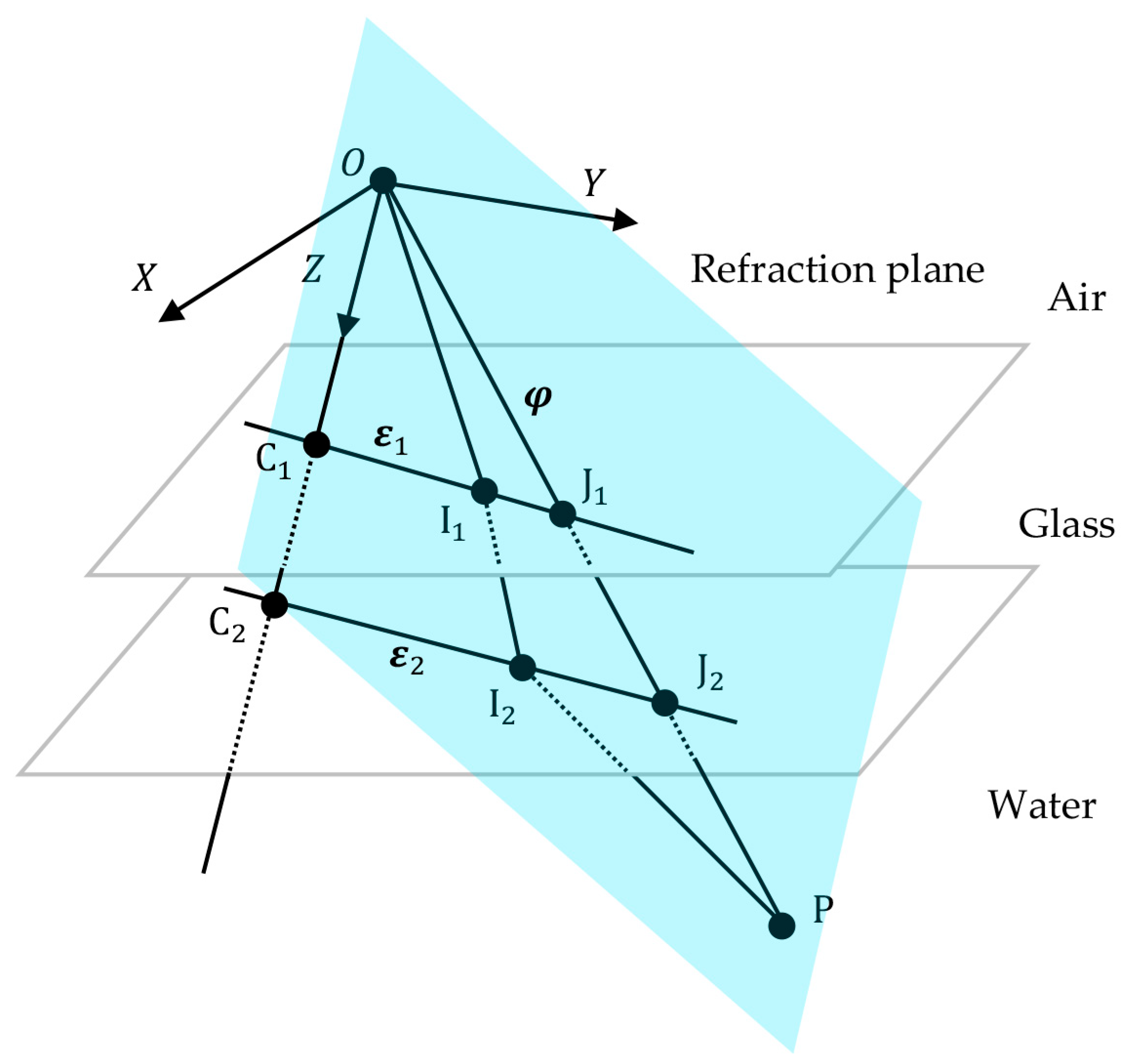
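The following is a minimal Python sketch of the back-projection step in a two-flat-interface model. It traces one pixel ray from the camera centre through air, a glass port and into water using the vector form of Snell's law. Several things are assumed for illustration only. The camera centre is the origin, and both interfaces are parallel planes with a common unit normal. The first millimetre value in the parameter table (100 mm) is taken as the camera-to-port distance and the second (5 mm) as the glass thickness. The values 1, 1.54 and 1.33 are taken as the refractive indices of air, glass and water. All function names are hypothetical, and this is not necessarily the authors' formulation.

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def refract(d, n, n_in, n_out):
    """Vector Snell's law. d: unit incident direction; n: unit interface
    normal pointing in the direction of travel (dot(d, n) > 0).
    Returns the unit refracted direction, or None on total internal reflection."""
    mu = n_in / n_out
    cos_i = dot(d, n)
    sin2_t = mu * mu * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return add(scale(d, mu), scale(n, cos_t - mu * cos_i))


def intersect_plane(origin, direction, normal, offset):
    """Point where origin + s * direction meets the plane dot(normal, X) = offset."""
    s = (offset - dot(normal, origin)) / dot(normal, direction)
    return add(origin, scale(direction, s))


def trace_into_water(ray_dir, normal=(0.0, 0.0, 1.0), d=100.0, t=5.0,
                     n1=1.0, n2=1.54, n3=1.33):
    """Trace a back-projected pixel ray from the camera centre (the origin)
    through air -> glass -> water. Assumed geometry: inner interface at
    distance d along the unit normal, outer interface at d + t.
    Returns (point on the outer interface, unit direction in water)."""
    v1 = normalize(ray_dir)
    p1 = intersect_plane((0.0, 0.0, 0.0), v1, normal, d)   # air/glass point
    v2 = refract(v1, normal, n1, n2)
    if v2 is None:
        raise ValueError("total internal reflection at the inner interface")
    p2 = intersect_plane(p1, v2, normal, d + t)             # glass/water point
    v3 = refract(v2, normal, n2, n3)
    if v3 is None:
        raise ValueError("total internal reflection at the outer interface")
    return p2, v3


# Example: an oblique pixel ray (units: mm).
point, direction = trace_into_water((0.3, 0.0, 1.0))
```

Because the two interfaces are parallel, the tangential part of the direction obeys n1 sin θ1 = n3 sin θ3, so the glass only shifts the ray sideways. In the example, the returned point lies on the outer interface at z = 105 mm, and the x-component of the water-side direction equals the normalised air-side x-component divided by 1.33.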

2.2. Simulation Imaging Model with Two Flat Interfaces
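Once refraction is involved, the forward (simulation) direction, from a known underwater point to its image, no longer has a closed-form pinhole projection. One simple way to sketch it is a one-dimensional bisection on the air-side slope tan θ1. This assumes the port normal coincides with the optical axis, so the whole light path stays in the plane containing the axis and the point. The other assumptions are illustrative: 100 mm is taken as the camera-to-port distance, 5 mm as the glass thickness, 1, 1.54 and 1.33 as the indices of air, glass and water, and n1 is assumed not to exceed n2 or n3. The output is normalised image coordinates rather than pixels, because the camera intrinsics are not given here. This is a generic numerical sketch with hypothetical function names, not the paper's simulation model.

```python
import math


def radial_offset(u, Z, d, t, n1, n2, n3):
    """Distance from the optical axis, at depth Z, of the ray whose air-side
    slope is u = tan(theta1), after refraction at two parallel flat interfaces."""
    s1 = u / math.sqrt(1.0 + u * u)   # sin(theta1) in air
    s2 = n1 * s1 / n2                 # sin(theta2) in glass (Snell's law)
    s3 = n1 * s1 / n3                 # sin(theta3) in water (n2 cancels out)
    tan2 = s2 / math.sqrt(1.0 - s2 * s2)
    tan3 = s3 / math.sqrt(1.0 - s3 * s3)
    return d * u + t * tan2 + (Z - d - t) * tan3


def project_refractive(P, d=100.0, t=5.0, n1=1.0, n2=1.54, n3=1.33, iters=100):
    """Normalised image coordinates (x, y) of an underwater point P = (X, Y, Z),
    assuming the interface normal is the optical (z) axis and n1 <= n2, n3."""
    X, Y, Z = P
    if Z <= d + t:
        raise ValueError("P must lie beyond the outer interface")
    R = math.hypot(X, Y)
    if R == 0.0:
        return 0.0, 0.0               # on-axis points are not displaced
    # Rays bend towards the normal, so d*u <= offset(u) <= Z*u; hence the
    # solution u = tan(theta1) lies in [R/Z, R/d].
    lo, hi = R / Z, R / d
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if radial_offset(mid, Z, d, t, n1, n2, n3) < R:
            lo = mid
        else:
            hi = mid
    u = 0.5 * (lo + hi)
    # The light path lies in the plane through the axis and P, so (x, y)
    # has the same azimuth as (X, Y).
    return u * X / R, u * Y / R
```

Setting n1 = n2 = n3 = 1 reduces the result to the ordinary pinhole projection (X/Z, Y/Z), which is a convenient sanity check. Bisection is used because the radial offset increases monotonically with tan θ1, so the bracket above always contains exactly one root.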

3. Results

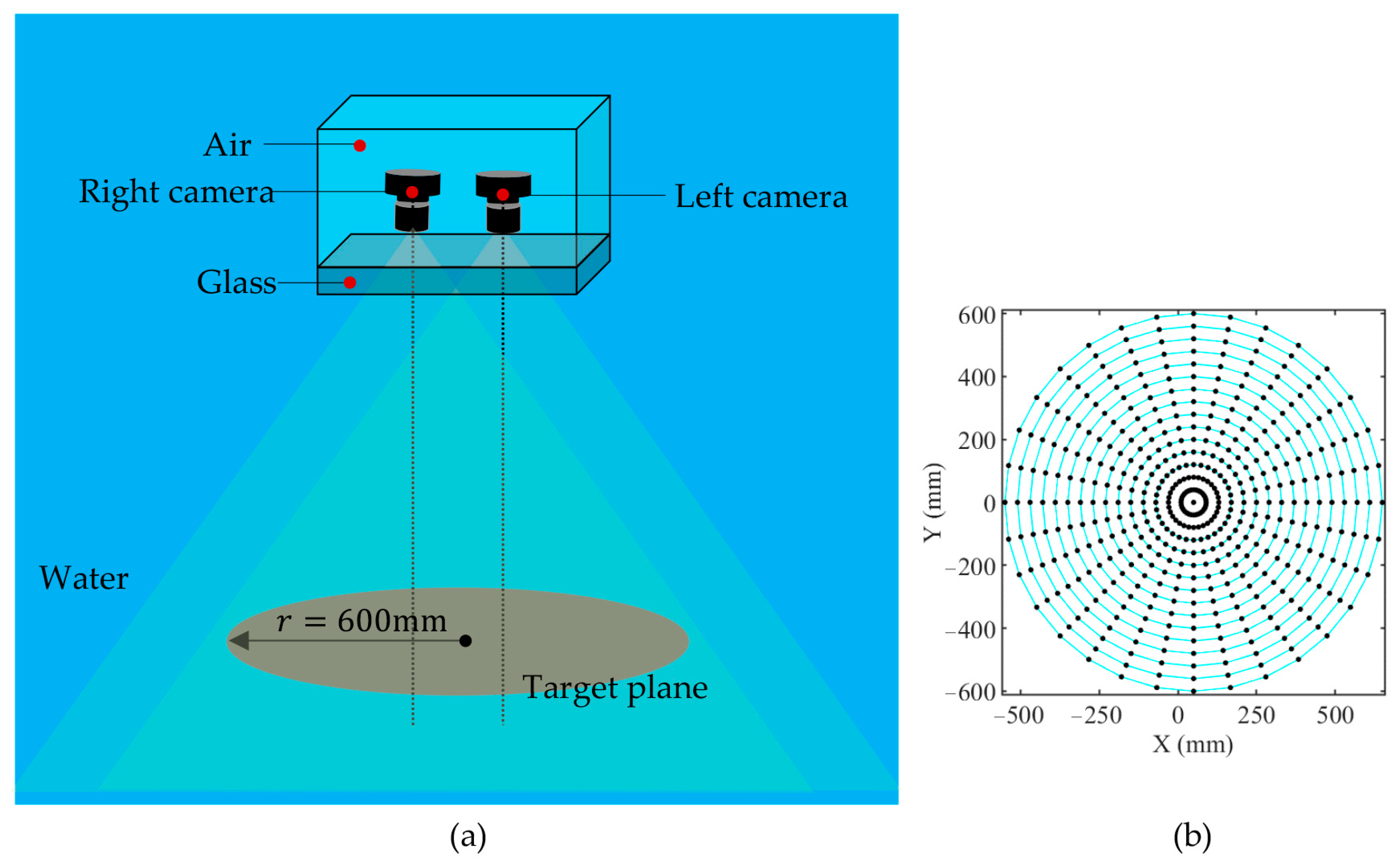

3.1. Experimental Design

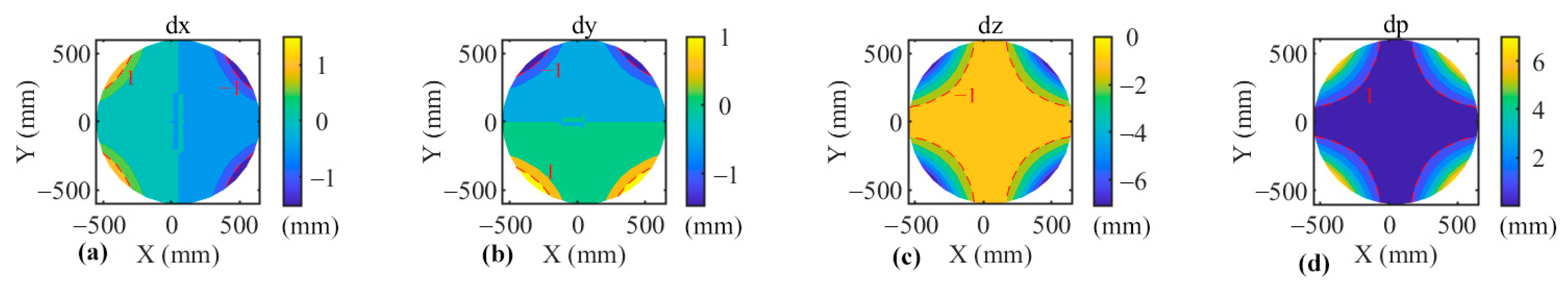

3.2. Intersection of Refracted Light from the Left and Right Cameras
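After each camera's pixel ray has been traced into the water, a 3D point can be estimated from the left and right refracted rays. Measured pixels contain noise, so the two rays are generally skew. A common choice, assumed here because this section does not state the paper's exact criterion, is the midpoint of their shortest connecting segment. The sketch assumes both rays are already expressed in one common frame (for example, the left camera frame after applying the stereo extrinsics). The function name `closest_point_between_rays` is hypothetical.

```python
import math


def _dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def closest_point_between_rays(o1, d1, o2, d2, eps=1e-12):
    """Midpoint of the shortest segment between the lines o1 + s*d1 and
    o2 + t*d2 (s and t are not clamped), plus the length of that segment."""
    w0 = tuple(a - b for a, b in zip(o1, o2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps * a * c:
        raise ValueError("rays are (nearly) parallel")
    # Solve the two normal equations that make the connecting segment
    # perpendicular to both directions.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(o + s * k for o, k in zip(o1, d1))
    p2 = tuple(o + t * k for o, k in zip(o2, d2))
    mid = tuple(0.5 * (u + v) for u, v in zip(p1, p2))
    return mid, math.dist(p1, p2)
```

The returned gap is a useful diagnostic. With error-free pixel coordinates and correct refractive indices, the two refracted rays meet and the gap is essentially zero. Pixel noise or a mis-specified water index n3 typically makes the gap grow.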

3.3. Performance Evaluation of Stereo Measurement Model

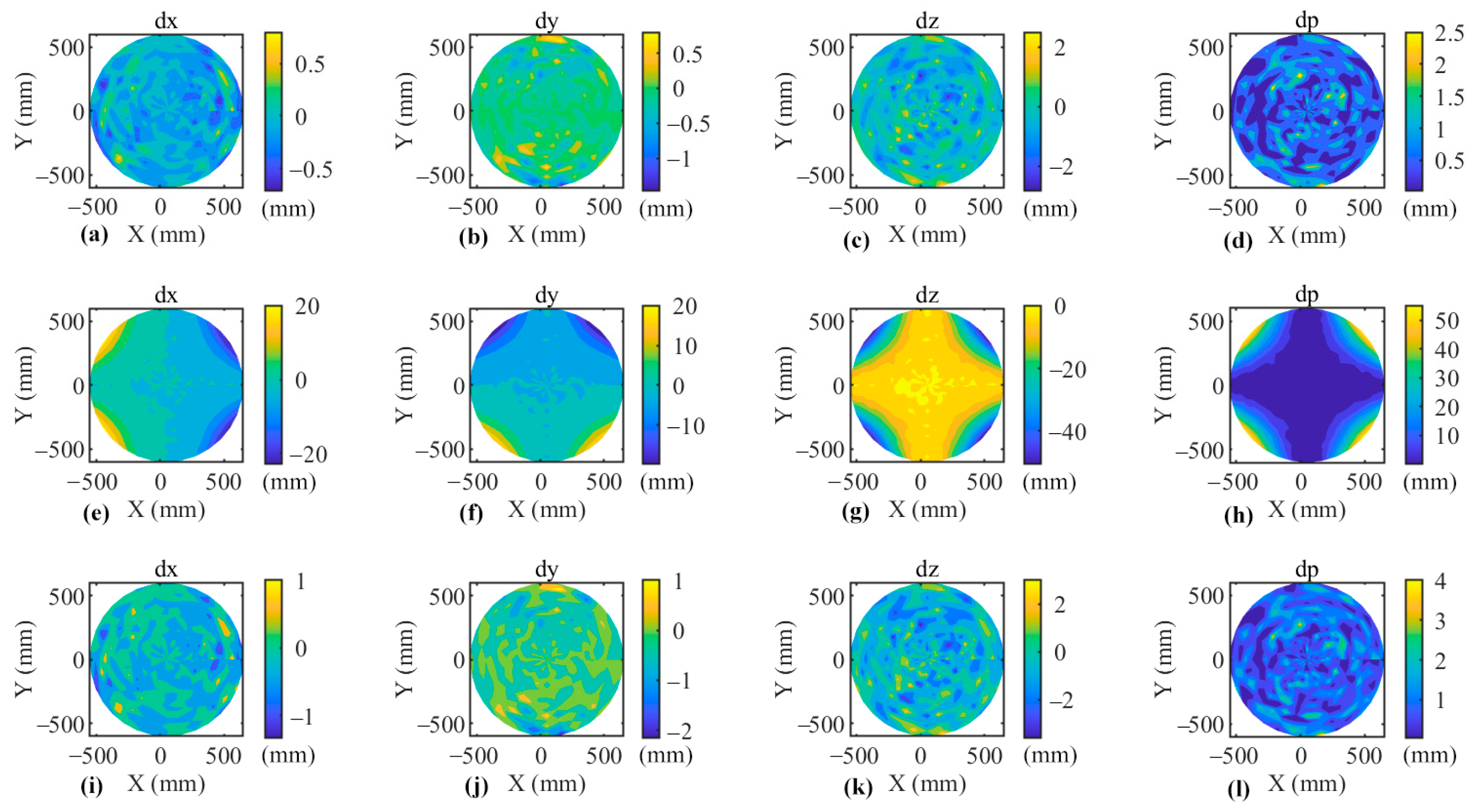

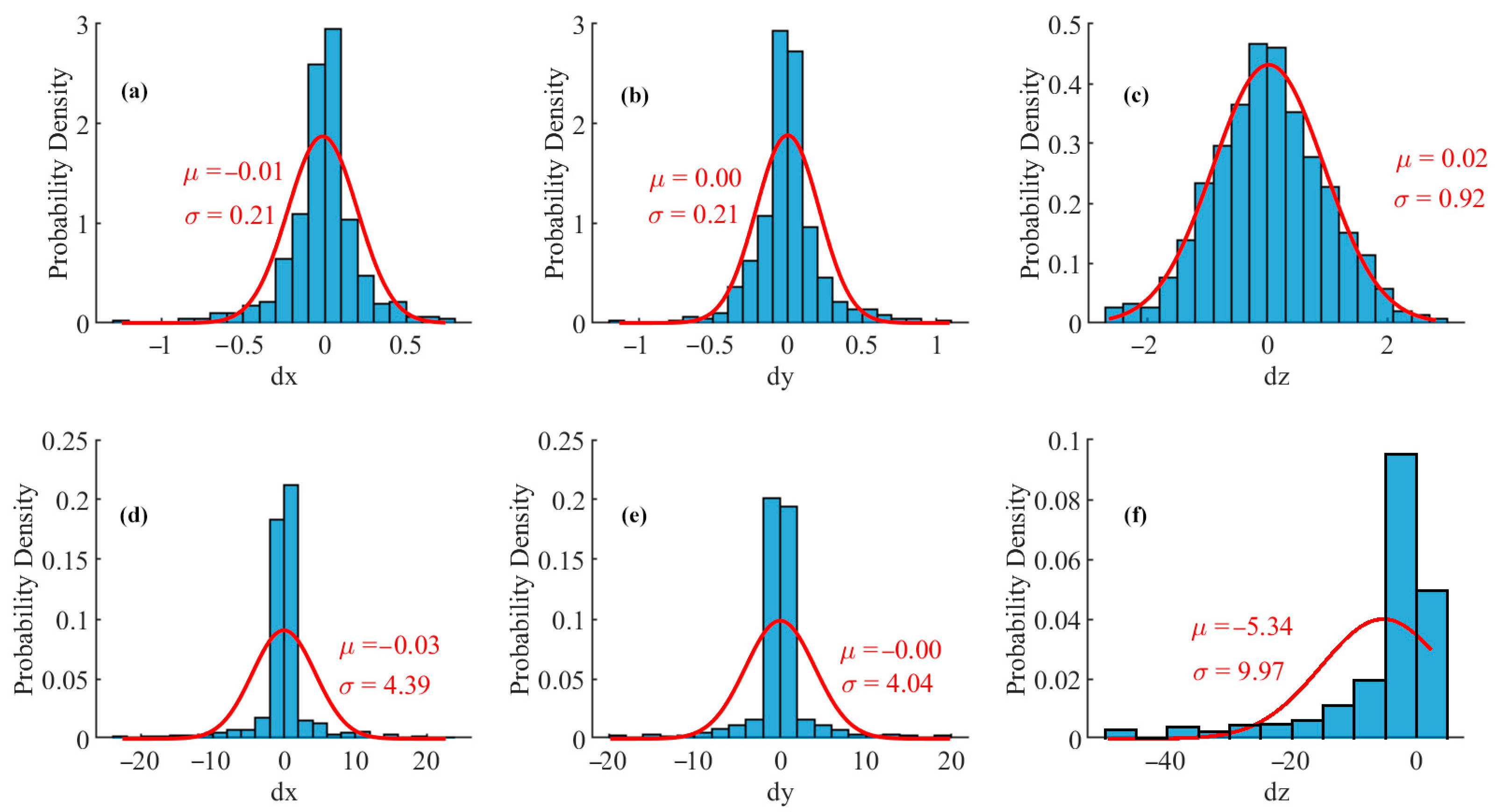

3.3.1. Ideal Conditions

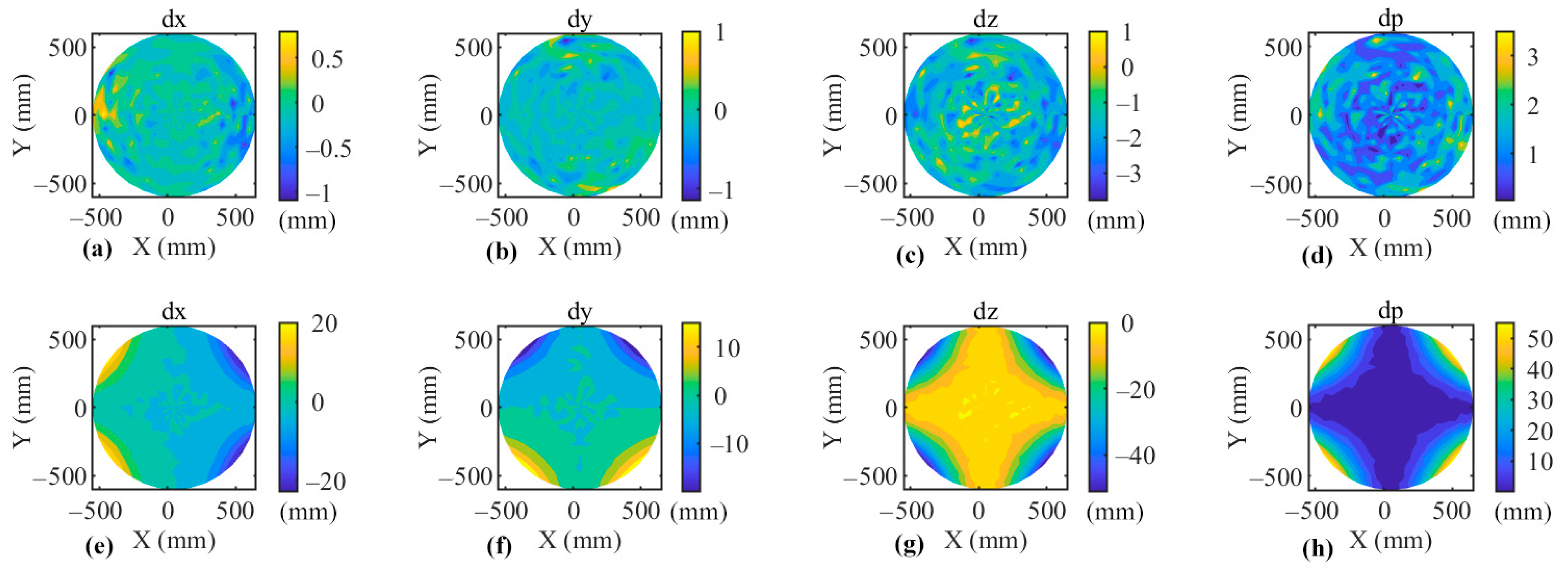

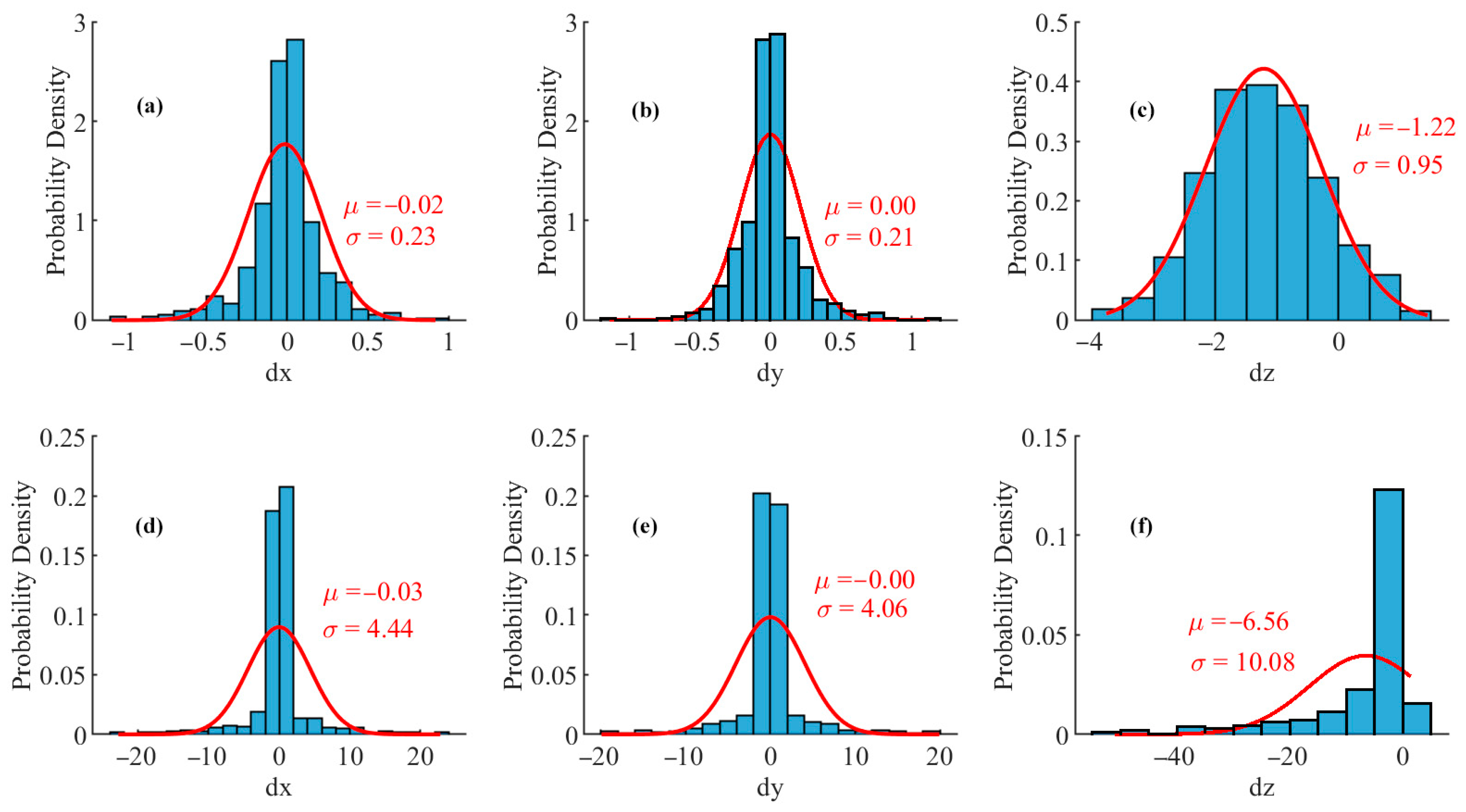

3.3.2. Simulated Pixel Coordinates Containing Errors

3.3.3. Simulated Pixel Coordinates with Errors and n3 with Deviation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| (mm) | (mm) | n1 | n2 | n3 |
|---|---|---|---|---|
| 100 | 5 | 1 | 1.54 | 1.33 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, G.; Huang, S.; Yin, Z.; Li, J.; Zhang, K. Underwater Refractive Stereo Vision Measurement and Simulation Imaging Model Based on Optical Path. J. Mar. Sci. Eng. 2024, 12, 1955. https://doi.org/10.3390/jmse12111955


