Salmon Salar Optimization: A Novel Natural Inspired Metaheuristic Method for Deep-Sea Probe Design for Unconventional Subsea Oil Wells

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

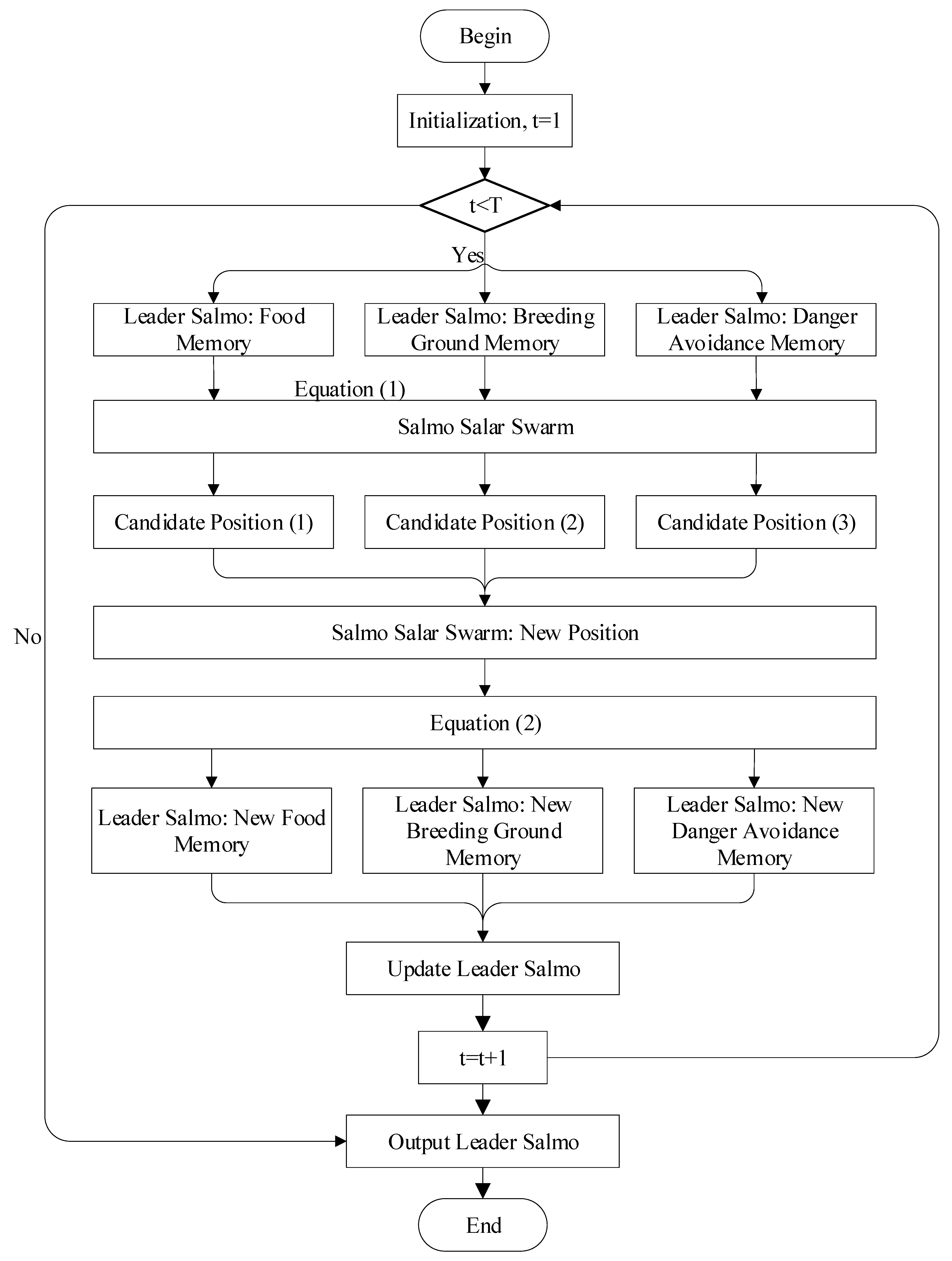

3.1. Salmon Salar Optimization

3.2. The Multi-Purpose Fusion Search Strategy

Algorithm 1. Pseudo-code of SSO.

4. Results

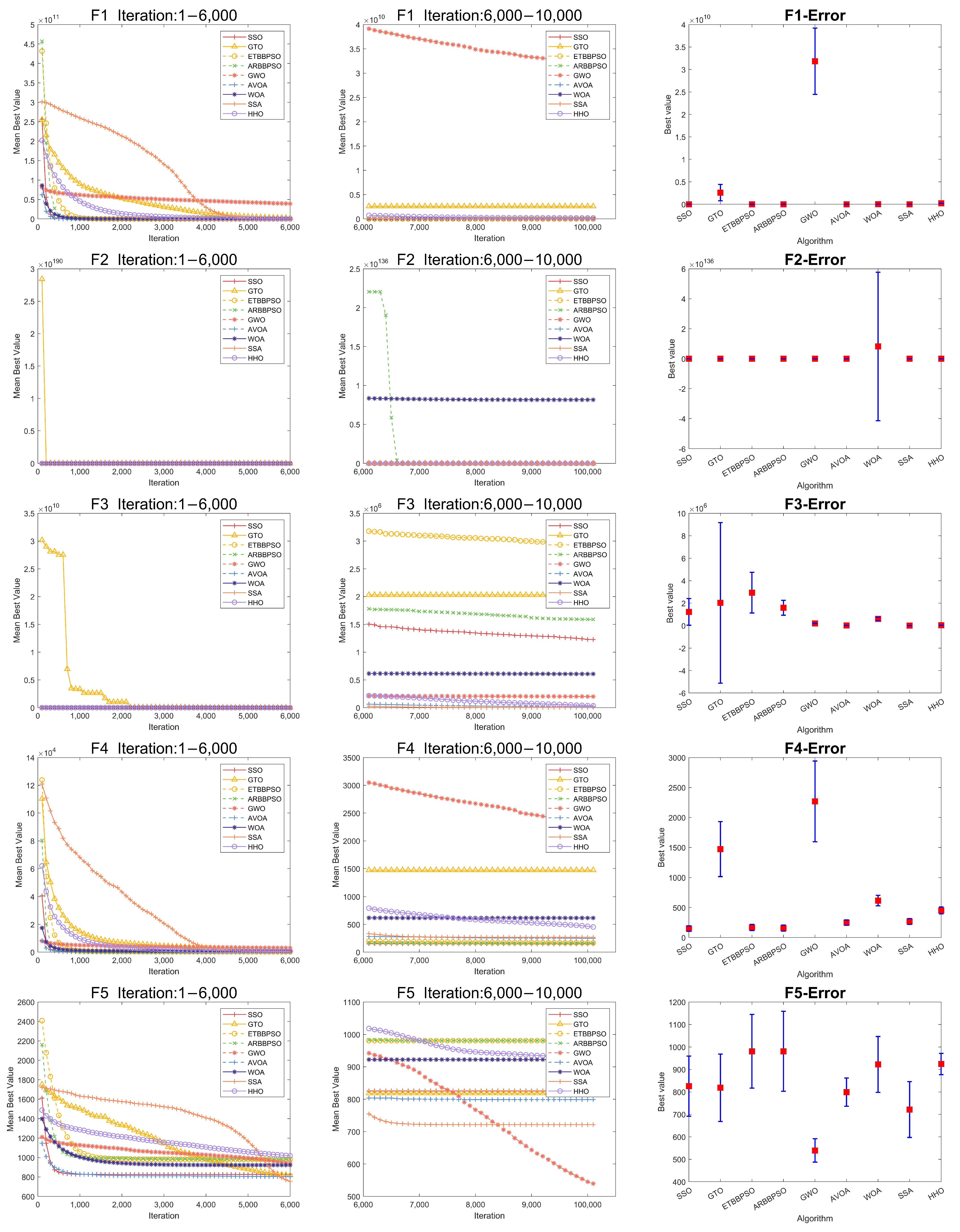

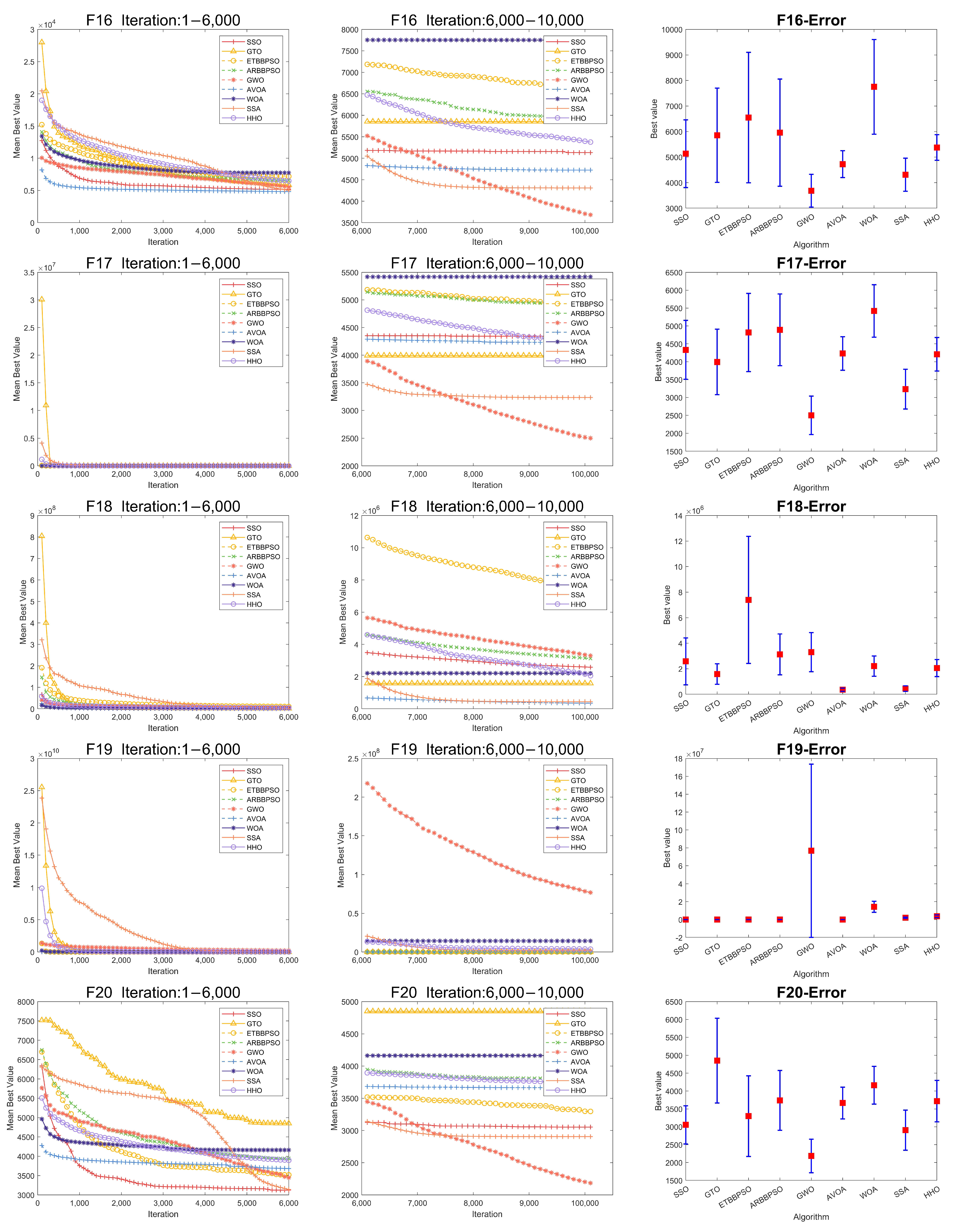

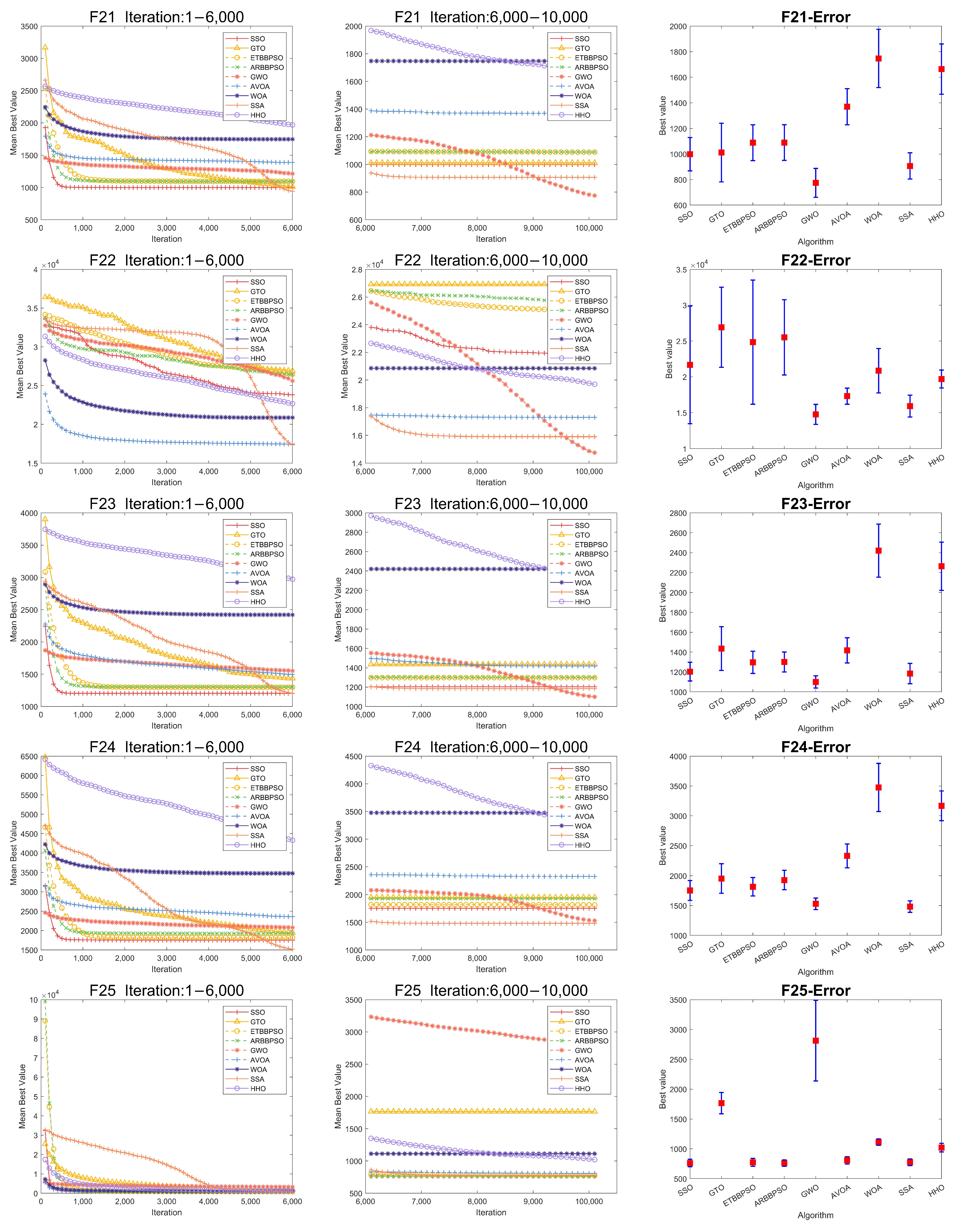

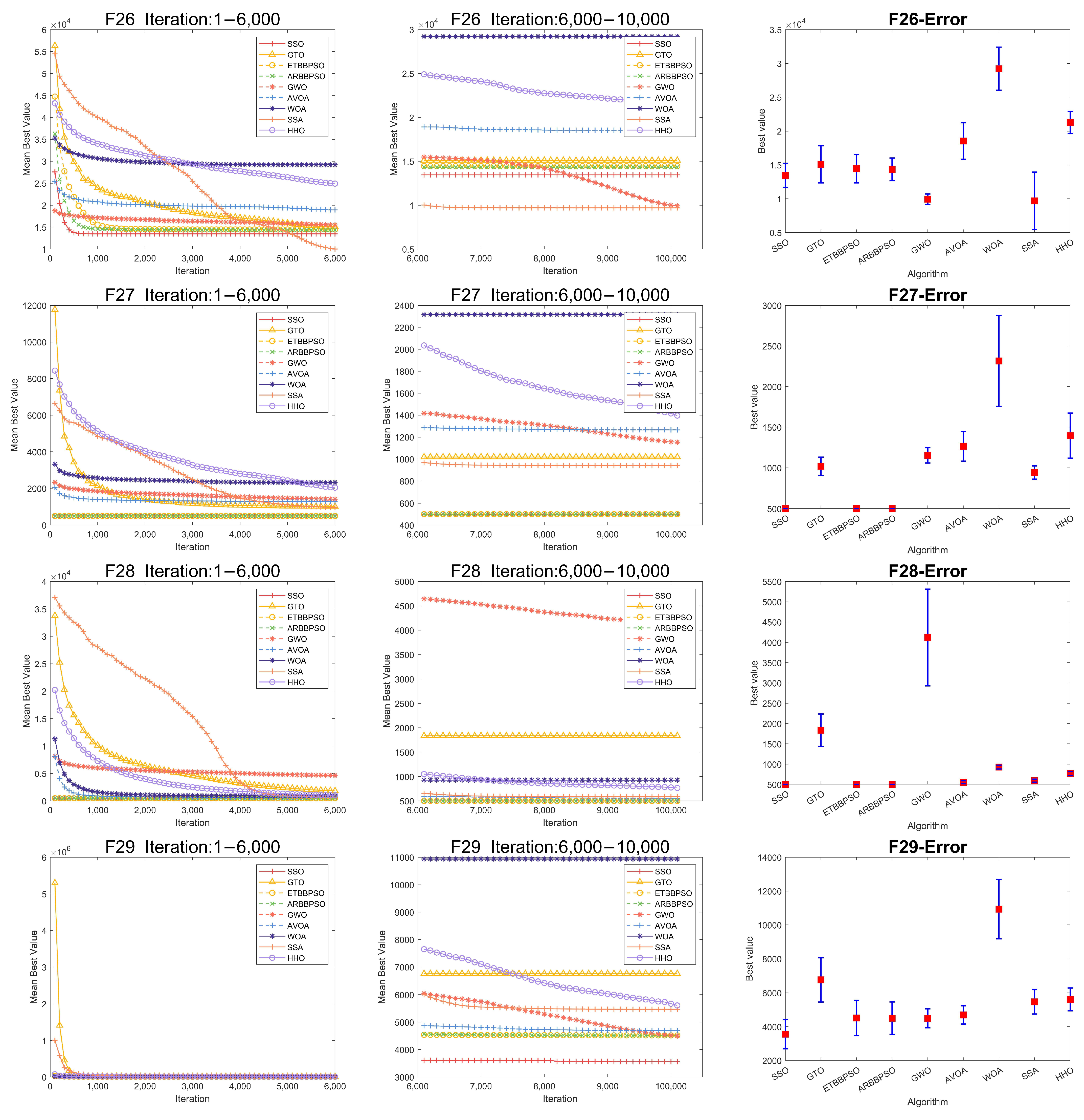

4.1. Numerical Experiments with CEC2017

- Unimodal Functions, ;

- Simple Multimodal Functions, ;

- Hybrid Functions, ;

- Composition Functions, .

- Population size for all algorithms, 100;

- Maximum number of iterations, 1000;

- Dimension, 100;

- Independent runs, 37;

- Search range, [−100, +100].
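
The settings above describe a standard repeated-run protocol, summarized in the result tables by the Mean, Std, Best and Worst of each algorithm's final values. The sketch below shows that protocol under stated assumptions: `sphere` is a hypothetical stand-in objective (not a CEC2017 function), `random_search` is a placeholder optimizer and not SSO, and the use of the sample standard deviation is an assumption, since the section does not say which variant was used.

```python
import random
import statistics
from typing import Callable, Dict, List, Sequence

# Settings from the experimental setup list (CEC2017, D = 100).
POP_SIZE = 100
MAX_ITER = 1000
DIM = 100
RUNS = 37
LOWER, UPPER = -100.0, 100.0

Objective = Callable[[Sequence[float]], float]


def sphere(x: Sequence[float]) -> float:
    """Hypothetical stand-in objective (not a CEC2017 function)."""
    return sum(v * v for v in x)


def random_search(f: Objective, dim: int, pop_size: int, max_iter: int,
                  lower: float, upper: float, rng: random.Random) -> float:
    """Placeholder optimizer, NOT SSO: samples pop_size uniform points per
    iteration and returns the best objective value found."""
    best = float("inf")
    for _ in range(max_iter):
        for _ in range(pop_size):
            x = [rng.uniform(lower, upper) for _ in range(dim)]
            best = min(best, f(x))
    return best


def benchmark(optimizer, f: Objective, runs: int = RUNS, dim: int = DIM,
              pop_size: int = POP_SIZE, max_iter: int = MAX_ITER,
              lower: float = LOWER, upper: float = UPPER,
              seed: int = 0) -> Dict[str, float]:
    """Repeats independent runs and summarizes the final values with the
    Mean/Std/Best/Worst statistics reported in the result tables."""
    finals: List[float] = []
    for run in range(runs):
        rng = random.Random(seed + run)  # separate, reproducible seed per run
        finals.append(optimizer(f, dim, pop_size, max_iter, lower, upper, rng))
    return {
        "Mean": statistics.mean(finals),
        "Std": statistics.stdev(finals),  # sample std; the paper's choice is not stated
        "Best": min(finals),
        "Worst": max(finals),
    }


if __name__ == "__main__":
    # Reduced settings: the full protocol (37 runs x 1000 iterations x 100
    # individuals in 100 dimensions) is far too slow for pure-Python random search.
    print(benchmark(random_search, sphere, runs=5, dim=10, pop_size=20, max_iter=50))
```

Passing the optimizer as a callable keeps the protocol identical for every compared method; only the function handed to `benchmark` changes.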

Numerical Analysis

- In F1, the rank of SSO is 3; the first-ranked method is AVOA, and the difference between their mean values is 76.174%;

- In F2, the rank of SSO is 3; the first-ranked method is AVOA, and the difference between their mean values is 100%;

- In F3, the rank of SSO is 6; the first-ranked method is AVOA, and the difference between their mean values is 98.343%;

- In F4, the rank of SSO is 1; the second-ranked method is ARBBPSO, and the difference between their mean values is 5.42%;

- In F5, the rank of SSO is 4; the first-ranked method is GWO, and the difference between their mean values is 34.647%;

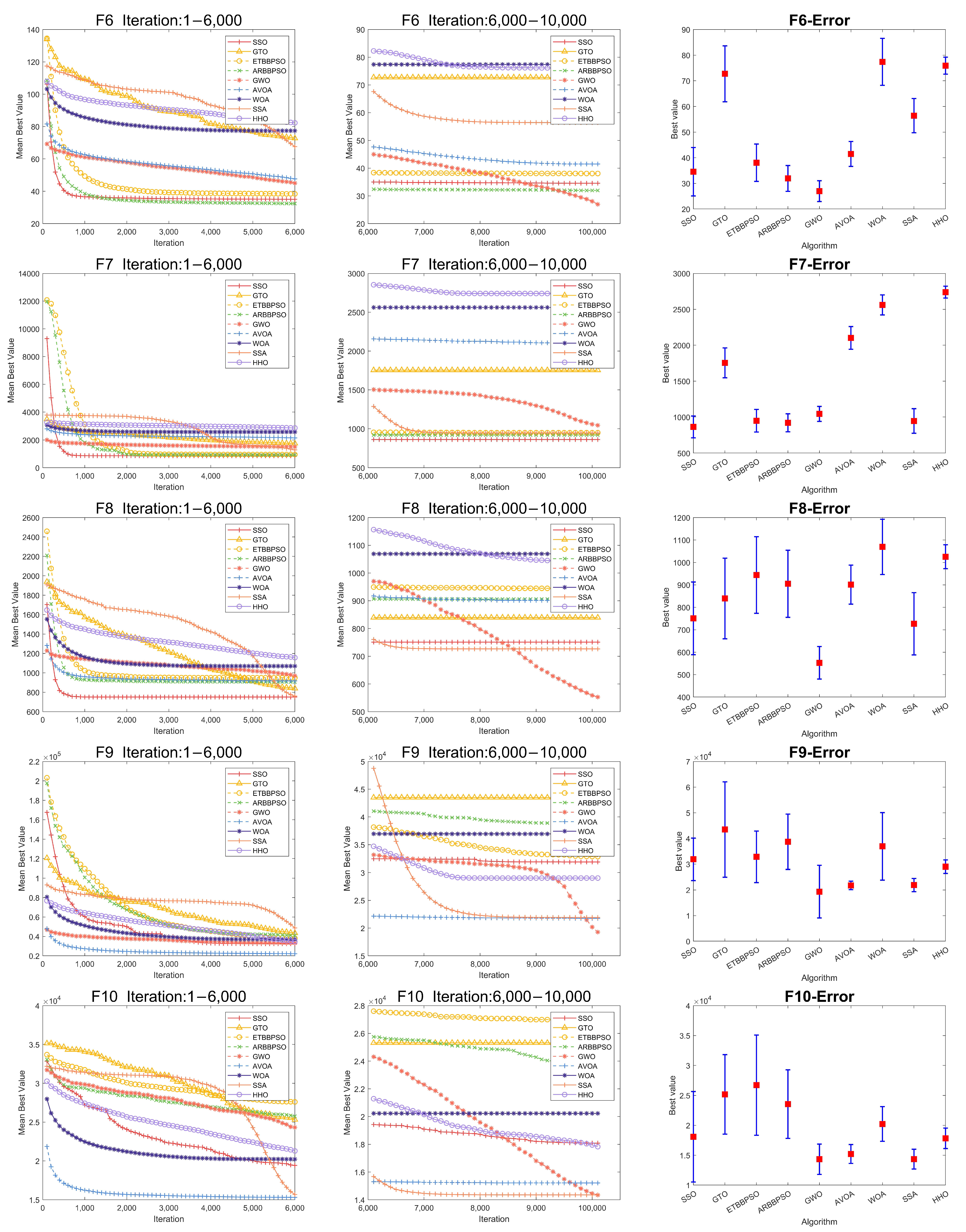

- In F6, the rank of SSO is 3; the first-ranked method is GWO, and the difference between their mean values is 21.938%;

- In F7, the rank of SSO is 1; the second-ranked method is ARBBPSO, and the difference between their mean values is 6.178%;

- In F8, the rank of SSO is 2; the first-ranked method is GWO, and the difference between their mean values is 26.383%;

- In F9, the rank of SSO is 4; the first-ranked method is GWO, and the difference between their mean values is 39.515%;

- In F10, the rank of SSO is 5; the first-ranked method is GWO, and the difference between their mean values is 20.694%;

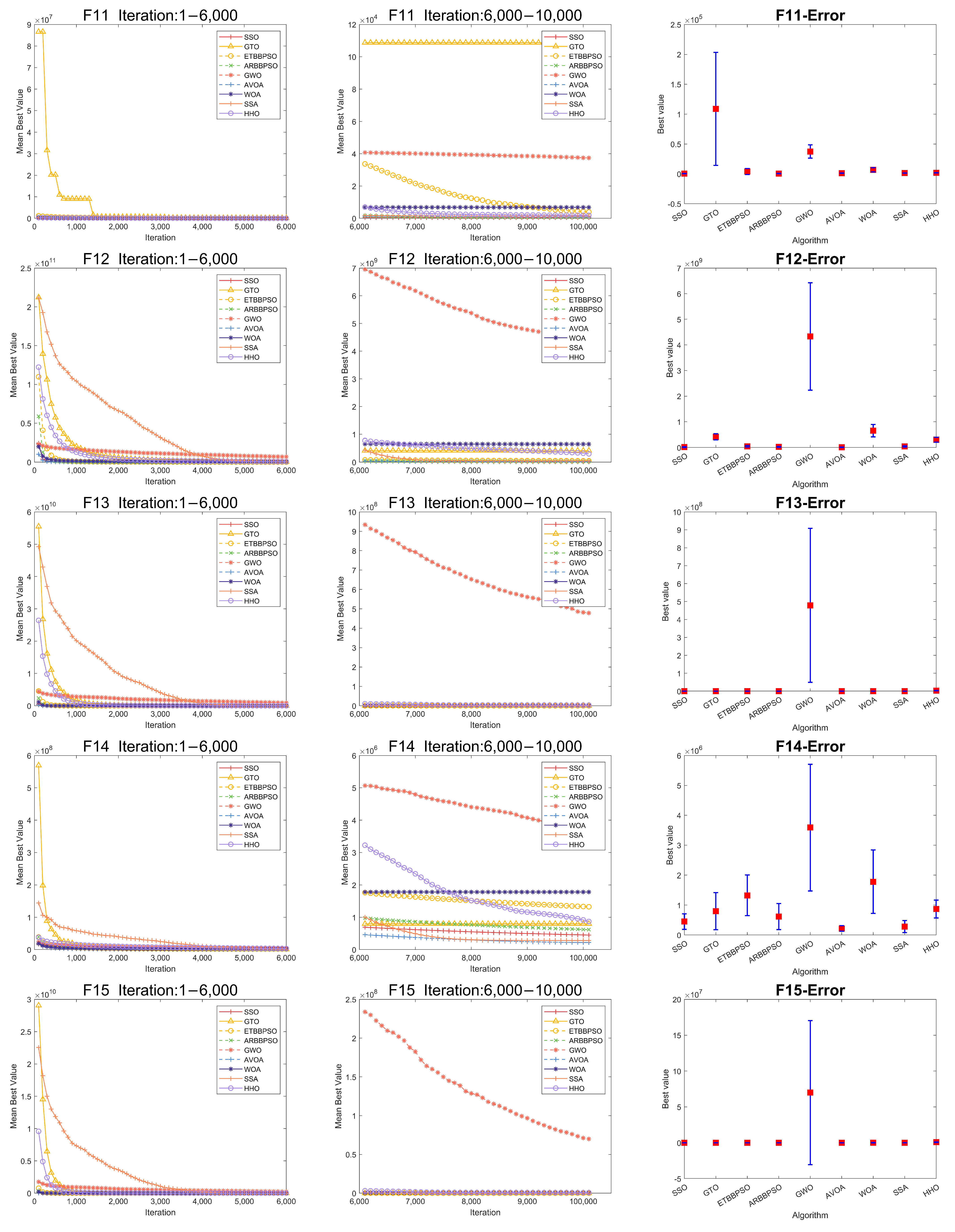

- In F11, the rank of SSO is 1; the second-ranked method is ARBBPSO, and the difference between their mean values is 14.329%;

- In F12, the rank of SSO is 2; the first-ranked method is AVOA, and the difference between their mean values is 34.981%;

- In F13, the rank of SSO is 3; the first-ranked method is ETBBPSO, and the difference between their mean values is 18.873%;

- In F14, the rank of SSO is 2; the first-ranked method is AVOA, and the difference between their mean values is 51.522%;

- In F15, the rank of SSO is 1; the second-ranked method is ETBBPSO, and the difference between their mean values is 14.277%;

- In F16, the rank of SSO is 3; the first-ranked method is GWO, and the difference between their mean values is 28.221%;

- In F17, the rank of SSO is 5; the first-ranked method is GWO, and the difference between their mean values is 42.281%;

- In F18, the rank of SSO is 5; the first-ranked method is AVOA, and the difference between their mean values is 86.234%;

- In F19, the rank of SSO is 1; the second-ranked method is ARBBPSO, and the difference between their mean values is 27.192%;

- In F20, the rank of SSO is 2; the first-ranked method is GWO, and the difference between their mean values is 28.455%;

- In F21, the rank of SSO is 2; the first-ranked method is GWO, and the difference between their mean values is 22.436%;

- In F22, the rank of SSO is 6; the first-ranked method is GWO, and the difference between their mean values is 31.869%;

- In F23, the rank of SSO is 2; the first-ranked method is GWO, and the difference between their mean values is 8.565%;

- In F24, the rank of SSO is 2; the first-ranked method is GWO, and the difference between their mean values is 12.753%;

- In F25, the rank of SSO is 2; the first-ranked method is ARBBPSO, and the difference between their mean values is 0.131%;

- In F26, the rank of SSO is 2; the first-ranked method is GWO, and the difference between their mean values is 26.263%;

- In F27, the rank of SSO is 1; the second-ranked method is ARBBPSO, and the difference between their mean values is 0%;

- In F28, the rank of SSO is 1; the second-ranked method is ARBBPSO, and the difference between their mean values is 0%;

- In F29, the rank of SSO is 1; the second-ranked method is GWO, and the difference between their mean values is 20.934%.
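
The percentage differences listed above are not defined in this section. Recomputing them from the Mean rows of the CEC2017 table suggests they are the gap between the two means relative to the larger (worse) one, i.e. |m_SSO − m_other| / max(m_SSO, m_other) × 100. For F1 this gives (2.808 × 10⁴ − 6.690 × 10³) / (2.808 × 10⁴) ≈ 76.175%, matching the listed 76.174% up to the rounding of the tabulated means. The sketch below encodes this inferred formula; it is a reconstruction from the data, not a definition stated by the authors, and `relative_gap` is an illustrative name.

```python
def relative_gap(mean_a: float, mean_b: float) -> float:
    """Percentage gap between two mean values, relative to the larger (worse) one.

    Inferred from the tabulated results, not a definition stated by the authors.
    """
    worse = max(mean_a, mean_b)
    if worse == 0:
        return 0.0  # both means are zero: no gap
    return abs(mean_a - mean_b) / worse * 100.0


# (SSO mean, competing method's mean, percentage reported in the list above)
EXAMPLES = {
    "F1, AVOA ranked first": (2.808e4, 6.690e3, 76.174),
    "F5, GWO ranked first": (8.255e2, 5.395e2, 34.647),
    "F11, ARBBPSO ranked second": (4.957e2, 5.786e2, 14.329),
}

if __name__ == "__main__":
    for label, (sso, other, reported) in EXAMPLES.items():
        print(f"{label}: recomputed {relative_gap(sso, other):.3f}%, reported {reported}%")
```

Dividing by the worse of the two means keeps the value in [0, 100] whether SSO is ahead or behind, which is consistent with entries such as 100% for F2, where the means differ by many orders of magnitude.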

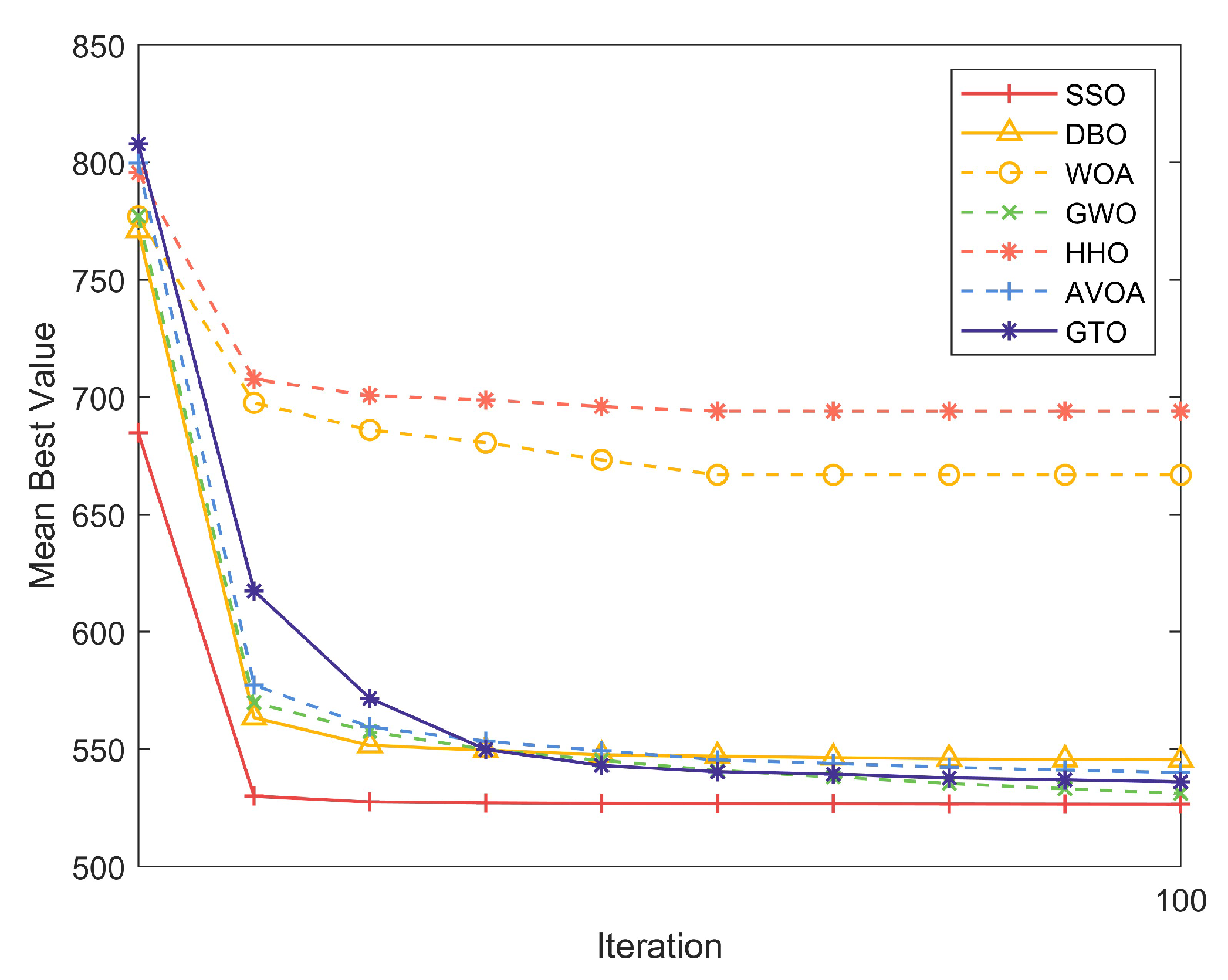

4.2. Numerical Experiments with CEC2022

4.3. Optimization Problem Formulation for Deep-Sea Probe Design

- : Wall thickness of the cylindrical section,

- : Wall thickness of the end caps,

- r: Radius of the probe,

- l: Length of the probe,

- : Material density,

- : Maximum internal pressure capacity,

- : Battery energy storage,

- : Sensor diameter.
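
The objective function and constraints of the probe-design problem are not given in this section, and the symbols for most of the quantities above were lost. As a generic illustration only, the sketch below shows a static-penalty wrapper, one common way to let an unconstrained population-based optimizer handle design constraints; whether SSO uses this scheme is not stated here. The names `penalized`, `toy_objective` and `toy_min_thickness`, the penalty weight of 10⁶, the convention g(x) ≤ 0 for a satisfied constraint, and the two-variable toy problem (a wall thickness and a radius) are all hypothetical.

```python
from typing import Callable, List, Sequence

Objective = Callable[[Sequence[float]], float]
# Convention assumed here: a constraint g is satisfied when g(x) <= 0.
Constraint = Callable[[Sequence[float]], float]


def penalized(objective: Objective, constraints: List[Constraint],
              weight: float = 1e6) -> Objective:
    """Hypothetical static-penalty wrapper: objective + weight * sum of squared violations."""
    def wrapped(x: Sequence[float]) -> float:
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + weight * violation
    return wrapped


# Toy two-variable problem on x = (wall_thickness, radius); NOT the paper's model.
def toy_objective(x: Sequence[float]) -> float:
    thickness, radius = x
    return thickness * radius  # placeholder proxy for material use


def toy_min_thickness(x: Sequence[float]) -> float:
    thickness, radius = x
    return 0.01 * radius - thickness  # placeholder: thickness >= 1% of radius


fitness = penalized(toy_objective, [toy_min_thickness])

if __name__ == "__main__":
    print(fitness([0.02, 1.0]))   # feasible point: objective value only
    print(fitness([0.005, 1.0]))  # infeasible point: large penalty added
```

Squaring the violation makes the penalty grow smoothly with the distance from the feasible region, so infeasible candidates are still ordered by how far they are from feasibility.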

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Petrovic, A.; Damaševičius, R.; Jovanovic, L.; Toskovic, A.; Simic, V.; Bacanin, N.; Zivkovic, M.; Spalević, P. Marine Vessel Classification and Multivariate Trajectories Forecasting Using Metaheuristics-Optimized eXtreme Gradient Boosting and Recurrent Neural Networks. Appl. Sci. 2023, 13, 9181.

- Yaseen, Z.M.; Melini Wan Mohtar, W.H.; Homod, R.Z.; Alawi, O.A.; Abba, S.I.; Oudah, A.Y.; Togun, H.; Goliatt, L.; Ul Hassan Kazmi, S.S.; Tao, H. Heavy metals prediction in coastal marine sediments using hybridized machine learning models with metaheuristic optimization algorithm. Chemosphere 2024, 352, 141329.

- Zhang, Y.H.; Wang, X.J.; Zhang, X.Z.; Saad, M.; Zhao, R.J. Numerical Investigation of the Impacts of Large Particles on the Turbulent Flow and Surface Wear in Series-Connected Bends. J. Mar. Sci. Eng. 2024, 12, 164.

- Nguyen, T.H.H.; Hou, T.H.; Pham, H.A.; Tsai, C.C. Oil Spill Sensitivity Analysis of the Coastal Waters of Taiwan Using an Integrated Modelling Approach. J. Mar. Sci. Eng. 2024, 12, 155.

- Xing, R.; Zhang, Y.; Feng, Y.; Ji, F. Performance Analysis of a WPCN-Based Underwater Acoustic Communication System. J. Mar. Sci. Eng. 2023, 12, 43.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Fister, I.; Yang, X.S.; Brest, J. A comprehensive review of firefly algorithms. Swarm Evol. Comput. 2013, 13, 34–46.

- Zhou, X.G.; Zhang, G.J. Differential evolution with underestimation-based multimutation strategy. IEEE Trans. Cybern. 2019, 49, 1353–1364.

- Chen, L.; Liu, Y.; Gao, Y.; Wang, J. Carbon Emission Trading Policy and Carbon Emission Efficiency: An Empirical Analysis of China’s Prefecture-Level Cities. Front. Energy Res. 2021, 9, 793601.

- Zhang, X.; Zou, D.; Shen, X. A novel simple particle swarm optimization algorithm for global optimization. Mathematics 2018, 6, 287.

- Qiao, J.; Zhou, H.; Yang, C. Bare-Bones Multiobjective Particle Swarm Optimization Based on Parallel Cell Balanceable Fitness Estimation. IEEE Access 2018, 6, 32493–32506.

- Zhang, X.; Melbourne, S.; Sarkar, C.; Chiaradia, A.; Webster, C. Effects of green space on walking: Does size, shape and density matter? Urban Stud. 2020, 57, 3402–3420.

- Singh, G.; Singh, A. A hybrid algorithm using particle swarm optimization for solving transportation problem. Neural Comput. Appl. 2020, 32, 11699–11716.

- Meng, X.; Li, J.; Dai, X.; Dou, J. Variable Neighborhood Search for a Colored Traveling Salesman Problem. IEEE Trans. Intell. Transp. Syst. 2018, 19, 1018–1026.

- Meng, X.; Li, J.; Zhou, M.; Dai, X.; Dou, J. Population-Based Incremental Learning Algorithm for a Serial Colored Traveling Salesman Problem. IEEE Trans. Syst. Man, Cybern. Syst. 2018, 48, 277–288.

- Al-Andoli, M.; Tan, S.C.; Cheah, W.P. Parallel stacked autoencoder with particle swarm optimization for community detection in complex networks. Appl. Intell. 2022, 52, 3366–3386.

- Ahandani, M.A.; Abbasfam, J.; Kharrati, H. Parameter identification of permanent magnet synchronous motors using quasi-opposition-based particle swarm optimization and hybrid chaotic particle swarm optimization algorithms. Appl. Intell. 2022, 52, 13082–13096.

- Zhang, J.; Zhao, X.; Jin, S.; Greaves, D. Phase-resolved real-time ocean wave prediction with quantified uncertainty based on variational Bayesian machine learning. Appl. Energy 2022, 324, 119711.

- Hu, P.; Pan, J.S.; Chu, S.C.; Sun, C. Multi-surrogate assisted binary particle swarm optimization algorithm and its application for feature selection. Appl. Soft Comput. 2022, 121, 108736.

- Wang, H.; Zhang, Z. Forecasting Chinese provincial carbon emissions using a novel grey prediction model considering spatial correlation. Expert Syst. Appl. 2022, 209, 118261.

- Lu, B.; Zhou, C. Particle Swarm Algorithm and Its Application in Tourism Route Design and Optimization. Comput. Intell. Neurosci. 2022, 2022, 6467086.

- Pan, J.; Bardhan, R. Evaluating the risk of accessing green spaces in COVID-19 pandemic: A model for public urban green spaces (PUGS) in London. Urban For. Urban Green. 2022, 74, 127648.

- Gao, Y.; Du, W.; Yan, G. Selectively-informed particle swarm optimization. Sci. Rep. 2015, 5, 9295.

- Liang, X.; Li, W.; Zhang, Y.; Zhou, M. An adaptive particle swarm optimization method based on clustering. Soft Comput. 2015, 19, 431–448.

- Li, J.; Zhang, J.; Jiang, C.; Zhou, M. Composite Particle Swarm Optimizer with Historical Memory for Function Optimization. IEEE Trans. Cybern. 2015, 45, 2350–2363.

- Pornsing, C.; Sodhi, M.S.; Lamond, B.F. Novel self-adaptive particle swarm optimization methods. Soft Comput. 2016, 20, 3579–3593.

- Guo, J.; Sato, Y. A pair-wise bare bones particle swarm optimization algorithm. In Proceedings of the 2017 IEEE/ACIS 16th International Conference on Computer and Information Science (ICIS), Wuhan, China, 24–26 May 2017; pp. 353–358.

- Guo, J.; Sato, Y. A Bare Bones Particle Swarm Optimization Algorithm with Dynamic Local Search. In Advances in Swarm Intelligence: 8th International Conference, ICSI 2017, Fukuoka, Japan, 27 July–1 August 2017, Proceedings, Part I; Tan, Y., Takagi, H., Shi, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 158–165.

- Guo, J.; Sato, Y. A Hierarchical Bare Bones Particle Swarm Optimization Algorithm. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1936–1941.

- Kennedy, J. Bare bones particle swarms. In Proceedings of the 2003 IEEE Swarm Intelligence Symposium, SIS’03 (Cat. No.03EX706), Indianapolis, IN, USA, 26 April 2003; pp. 80–87.

- Xu, X.; Rong, H.; Trovati, M.; Liptrott, M.; Bessis, N. CS-PSO: Chaotic particle swarm optimization algorithm for solving combinatorial optimization problems. Soft Comput. 2018, 22, 783–795.

- Tian, D.; Shi, Z. MPSO: Modified particle swarm optimization and its applications. Swarm Evol. Comput. 2018, 41, 49–68.

- Ghasemi, M.; Akbari, E.; Rahimnejad, A.; Razavi, S.E.; Ghavidel, S.; Li, L. Phasor particle swarm optimization: A simple and efficient variant of PSO. Soft Comput. 2019, 23, 9701–9718.

- Guo, J.; Sato, Y. A fission-fusion hybrid bare bones particle swarm optimization algorithm for single-objective optimization problems. Appl. Intell. 2019, 49, 3641–3651.

- Xu, G.; Cui, Q.; Shi, X.; Ge, H.; Zhan, Z.H.; Lee, H.P.; Liang, Y.; Tai, R.; Wu, C. Particle swarm optimization based on dimensional learning strategy. Swarm Evol. Comput. 2019, 45, 33–51.

- Xu, Y.; Pi, D. A reinforcement learning-based communication topology in particle swarm optimization. Neural Comput. Appl. 2020, 32, 10007–10032.

- Yamanaka, Y.; Yoshida, K. Simple gravitational particle swarm algorithm for multimodal optimization problems. PLoS ONE 2021, 16, e0248470.

- Liu, J.; Jin, B.; Yang, J.; Xu, L. Sea surface temperature prediction using a cubic B-spline interpolation and spatiotemporal attention mechanism. Remote Sens. Lett. 2021, 12, 478–487.

- Wang, Z.J.; Zhan, Z.H.; Kwong, S.; Jin, H.; Zhang, J. Adaptive Granularity Learning Distributed Particle Swarm Optimization for Large-Scale Optimization. IEEE Trans. Cybern. 2021, 51, 1175–1188.

- Li, X.; Wang, Z.; Ying, Y.; Xiao, F. Multipopulation Particle Swarm Optimization Algorithm with Neighborhood Learning. Sci. Program. 2022, 2022, 8312450.

- Tian, H.; Guo, J.; Xiao, H.; Yan, K.; Sato, Y. An electronic transition-based bare bones particle swarm optimization algorithm for high dimensional optimization problems. PLoS ONE 2022, 17, e0271925.

- Guo, Q.; Su, Z.; Chiao, C. Carbon emissions trading policy, carbon finance, and carbon emissions reduction: Evidence from a quasi-natural experiment in China. Econ. Chang. Restruct. 2022, 55, 1445–1480.

- Zhou, G.; Guo, J.; Yan, K.; Zhou, G.; Li, B. An Atomic Retrospective Learning Bare Bone Particle Swarm Optimization. In Advances in Swarm Intelligence. ICSI 2023; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 13968, pp. 168–179.

- Xue, J.; Shen, B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J. Supercomput. 2023, 79, 7305–7336.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 2021, 158, 107408.

- Abdollahzadeh, B.; Soleimanian Gharehchopogh, F.; Mirjalili, S. Artificial gorilla troops optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems. Int. J. Intell. Syst. 2021, 36, 22535.

| F | Metric | SSO | DBO | WOA | GWO | HHO | AVOA | GTO | ARBBPSO | ETBBPSO |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Mean | 2.808 × 10⁴ | 5.748 × 10⁷ | 3.515 × 10⁷ | 3.184 × 10¹⁰ | 2.097 × 10⁸ | 6.690 × 10³ | 2.604 × 10⁹ | 2.912 × 10⁴ | 1.656 × 10⁴ |
| | Std | 2.760 × 10⁴ | 3.705 × 10⁷ | 1.173 × 10⁷ | 7.385 × 10⁹ | 2.181 × 10⁷ | 9.224 × 10³ | 1.822 × 10⁹ | 3.091 × 10⁴ | 2.186 × 10⁴ |
| | Best | 1.851 × 10¹ | 1.490 × 10⁶ | 1.560 × 10⁷ | 2.009 × 10¹⁰ | 1.621 × 10⁸ | 9.860 × 10⁰ | 7.170 × 10⁸ | 1.369 × 10² | 4.990 × 10⁰ |
| | Worst | 8.870 × 10⁴ | 1.431 × 10⁸ | 7.225 × 10⁷ | 5.204 × 10¹⁰ | 2.517 × 10⁸ | 3.450 × 10⁴ | 8.877 × 10⁹ | 1.281 × 10⁵ | 1.120 × 10⁵ |
| | Rank | 3 | 6 | 5 | 9 | 7 | 1 | 8 | 4 | 2 |
| 2 | Mean | 1.909 × 10⁸⁹ | 1.845 × 10¹³⁴ | 8.159 × 10¹³⁵ | 6.547 × 10¹²⁵ | 1.117 × 10⁷³ | 1.326 × 10³⁰ | 3.553 × 10¹¹⁹ | 9.078 × 10¹²⁵ | 7.782 × 10¹¹⁴ |
| | Std | 7.879 × 10⁸⁹ | 1.122 × 10¹³⁵ | 4.955 × 10¹³⁶ | 3.976 × 10¹²⁶ | 5.615 × 10⁷³ | 5.600 × 10³⁰ | 1.423 × 10¹²⁰ | 5.522 × 10¹²⁶ | 3.073 × 10¹¹⁵ |
| | Best | 1.679 × 10⁶⁸ | 2.434 × 10⁹⁸ | 2.676 × 10¹¹⁰ | 5.418 × 10⁹⁷ | 6.231 × 10⁵⁹ | 3.731 × 10¹³ | 9.303 × 10⁹⁹ | 6.574 × 10⁸⁴ | 2.233 × 10⁸⁸ |
| | Worst | 4.579 × 10⁹⁰ | 6.822 × 10¹³⁵ | 3.014 × 10¹³⁷ | 2.418 × 10¹²⁷ | 3.375 × 10⁷⁴ | 2.681 × 10³¹ | 7.773 × 10¹²⁰ | 3.359 × 10¹²⁷ | 1.718 × 10¹¹⁶ |
| | Rank | 3 | 8 | 9 | 6 | 2 | 1 | 5 | 7 | 4 |
| 3 | Mean | 1.227 × 10⁶ | 3.241 × 10⁵ | 6.068 × 10⁵ | 2.008 × 10⁵ | 3.406 × 10⁴ | 2.034 × 10⁴ | 2.030 × 10⁶ | 1.589 × 10⁶ | 2.934 × 10⁶ |
| | Std | 1.186 × 10⁶ | 1.856 × 10⁴ | 1.497 × 10⁵ | 1.767 × 10⁴ | 8.364 × 10³ | 6.828 × 10³ | 7.150 × 10⁶ | 6.647 × 10⁵ | 1.808 × 10⁶ |
| | Best | 4.535 × 10⁵ | 2.578 × 10⁵ | 3.368 × 10⁵ | 1.638 × 10⁵ | 1.796 × 10⁴ | 8.612 × 10³ | 2.438 × 10⁵ | 6.560 × 10⁵ | 1.059 × 10⁶ |
| | Worst | 4.888 × 10⁶ | 3.516 × 10⁵ | 8.400 × 10⁵ | 2.421 × 10⁵ | 5.648 × 10⁴ | 3.839 × 10⁴ | 4.276 × 10⁷ | 3.261 × 10⁶ | 8.476 × 10⁶ |
| | Rank | 6 | 4 | 5 | 3 | 2 | 1 | 8 | 7 | 9 |
| 4 | Mean | 1.493 × 10² | 4.300 × 10² | 6.164 × 10² | 2.268 × 10³ | 4.530 × 10² | 2.495 × 10² | 1.473 × 10³ | 1.578 × 10² | 1.683 × 10² |
| | Std | 4.601 × 10¹ | 7.513 × 10¹ | 8.827 × 10¹ | 6.743 × 10² | 5.750 × 10¹ | 4.472 × 10¹ | 4.581 × 10² | 5.156 × 10¹ | 5.145 × 10¹ |
| | Best | 8.963 × 10¹ | 2.765 × 10² | 4.498 × 10² | 1.096 × 10³ | 3.622 × 10² | 1.930 × 10² | 7.983 × 10² | 7.623 × 10¹ | 7.982 × 10¹ |
| | Worst | 2.934 × 10² | 5.789 × 10² | 8.222 × 10² | 4.021 × 10³ | 5.927 × 10² | 3.663 × 10² | 2.757 × 10³ | 3.117 × 10² | 2.893 × 10² |
| | Rank | 1 | 5 | 7 | 9 | 6 | 4 | 8 | 2 | 3 |
| 5 | Mean | 8.255 × 10² | 1.089 × 10³ | 9.223 × 10² | 5.395 × 10² | 9.242 × 10² | 7.989 × 10² | 8.184 × 10² | 9.809 × 10² | 9.808 × 10² |
| | Std | 1.336 × 10² | 1.545 × 10² | 1.244 × 10² | 5.188 × 10¹ | 4.758 × 10¹ | 6.290 × 10¹ | 1.499 × 10² | 1.781 × 10² | 1.639 × 10² |
| | Best | 5.462 × 10² | 8.141 × 10² | 7.312 × 10² | 4.092 × 10² | 8.221 × 10² | 6.398 × 10² | 6.021 × 10² | 6.248 × 10² | 6.706 × 10² |
| | Worst | 1.081 × 10³ | 1.361 × 10³ | 1.250 × 10³ | 6.650 × 10² | 1.038 × 10³ | 9.522 × 10² | 1.393 × 10³ | 1.369 × 10³ | 1.270 × 10³ |
| | Rank | 4 | 9 | 5 | 1 | 6 | 2 | 3 | 8 | 7 |
| 6 | Mean | 3.455 × 10¹ | 7.188 × 10¹ | 7.737 × 10¹ | 2.697 × 10¹ | 7.590 × 10¹ | 4.148 × 10¹ | 7.272 × 10¹ | 3.194 × 10¹ | 3.806 × 10¹ |
| | Std | 9.455 × 10⁰ | 9.560 × 10⁰ | 9.166 × 10⁰ | 4.047 × 10⁰ | 3.312 × 10⁰ | 4.854 × 10⁰ | 1.090 × 10¹ | 5.052 × 10⁰ | 7.284 × 10⁰ |
| | Best | 1.108 × 10¹ | 5.496 × 10¹ | 6.168 × 10¹ | 1.967 × 10¹ | 6.720 × 10¹ | 3.192 × 10¹ | 4.958 × 10¹ | 1.972 × 10¹ | 2.120 × 10¹ |
| | Worst | 5.381 × 10¹ | 9.361 × 10¹ | 1.048 × 10² | 3.501 × 10¹ | 8.179 × 10¹ | 5.283 × 10¹ | 9.305 × 10¹ | 4.202 × 10¹ | 5.119 × 10¹ |
| | Rank | 3 | 6 | 9 | 1 | 8 | 5 | 7 | 2 | 4 |
| 7 | Mean | 8.635 × 10² | 1.507 × 10³ | 2.561 × 10³ | 1.044 × 10³ | 2.740 × 10³ | 2.101 × 10³ | 1.754 × 10³ | 9.204 × 10² | 9.485 × 10² |
| | Std | 1.510 × 10² | 3.905 × 10² | 1.393 × 10² | 1.037 × 10² | 8.270 × 10¹ | 1.582 × 10² | 2.081 × 10² | 1.256 × 10² | 1.575 × 10² |
| | Best | 6.102 × 10² | 1.036 × 10³ | 2.212 × 10³ | 8.211 × 10² | 2.560 × 10³ | 1.773 × 10³ | 1.337 × 10³ | 6.931 × 10² | 6.999 × 10² |
| | Worst | 1.147 × 10³ | 3.145 × 10³ | 2.894 × 10³ | 1.309 × 10³ | 2.932 × 10³ | 2.442 × 10³ | 2.239 × 10³ | 1.212 × 10³ | 1.326 × 10³ |
| | Rank | 1 | 5 | 8 | 4 | 9 | 7 | 6 | 2 | 3 |
| 8 | Mean | 7.509 × 10² | 1.143 × 10³ | 1.069 × 10³ | 5.528 × 10² | 1.026 × 10³ | 9.013 × 10² | 8.395 × 10² | 9.049 × 10² | 9.439 × 10² |
| | Std | 1.620 × 10² | 1.525 × 10² | 1.231 × 10² | 7.252 × 10¹ | 5.340 × 10¹ | 8.687 × 10¹ | 1.798 × 10² | 1.495 × 10² | 1.705 × 10² |
| | Best | 5.174 × 10² | 7.631 × 10² | 8.041 × 10² | 4.036 × 10² | 8.413 × 10² | 7.323 × 10² | 5.891 × 10² | 5.801 × 10² | 5.612 × 10² |
| | Worst | 1.132 × 10³ | 1.405 × 10³ | 1.476 × 10³ | 7.012 × 10² | 1.108 × 10³ | 1.102 × 10³ | 1.533 × 10³ | 1.296 × 10³ | 1.223 × 10³ |
| | Rank | 2 | 9 | 8 | 1 | 7 | 4 | 3 | 5 | 6 |
| 9 | Mean | 3.189 × 10⁴ | 3.533 × 10⁴ | 3.694 × 10⁴ | 1.929 × 10⁴ | 2.902 × 10⁴ | 2.176 × 10⁴ | 4.350 × 10⁴ | 3.872 × 10⁴ | 3.285 × 10⁴ |
| | Std | 8.257 × 10³ | 9.423 × 10³ | 1.313 × 10⁴ | 1.023 × 10⁴ | 2.590 × 10³ | 1.672 × 10³ | 1.855 × 10⁴ | 1.076 × 10⁴ | 1.002 × 10⁴ |
| | Best | 1.398 × 10⁴ | 1.751 × 10⁴ | 2.303 × 10⁴ | 9.616 × 10³ | 2.394 × 10⁴ | 1.901 × 10⁴ | 2.294 × 10⁴ | 1.777 × 10⁴ | 1.762 × 10⁴ |
| | Worst | 4.255 × 10⁴ | 4.880 × 10⁴ | 8.197 × 10⁴ | 4.220 × 10⁴ | 3.367 × 10⁴ | 2.774 × 10⁴ | 7.876 × 10⁴ | 8.490 × 10⁴ | 4.980 × 10⁴ |
| | Rank | 4 | 6 | 7 | 1 | 3 | 2 | 9 | 8 | 5 |
| 10 | Mean | 1.808 × 10⁴ | 1.735 × 10⁴ | 2.023 × 10⁴ | 1.434 × 10⁴ | 1.783 × 10⁴ | 1.521 × 10⁴ | 2.516 × 10⁴ | 2.355 × 10⁴ | 2.671 × 10⁴ |
| | Std | 7.565 × 10³ | 1.543 × 10³ | 2.902 × 10³ | 2.535 × 10³ | 1.718 × 10³ | 1.573 × 10³ | 6.647 × 10³ | 5.728 × 10³ | 8.382 × 10³ |
| | Best | 1.069 × 10⁴ | 1.446 × 10⁴ | 1.545 × 10⁴ | 1.057 × 10⁴ | 1.334 × 10⁴ | 1.213 × 10⁴ | 1.270 × 10⁴ | 1.233 × 10⁴ | 9.839 × 10³ |
| | Worst | 3.297 × 10⁴ | 2.025 × 10⁴ | 2.601 × 10⁴ | 2.693 × 10⁴ | 2.084 × 10⁴ | 1.979 × 10⁴ | 3.375 × 10⁴ | 2.947 × 10⁴ | 3.364 × 10⁴ |
| | Rank | 5 | 3 | 6 | 1 | 4 | 2 | 8 | 7 | 9 |
| 11 | Mean | 4.957 × 10² | 1.867 × 10⁴ | 6.803 × 10³ | 3.741 × 10⁴ | 1.861 × 10³ | 1.202 × 10³ | 1.087 × 10⁵ | 5.786 × 10² | 3.974 × 10³ |
| | Std | 1.357 × 10² | 3.081 × 10⁴ | 3.448 × 10³ | 1.107 × 10⁴ | 1.889 × 10² | 2.255 × 10² | 9.458 × 10⁴ | 2.244 × 10² | 5.112 × 10³ |
| | Best | 2.573 × 10² | 2.700 × 10³ | 4.322 × 10³ | 1.755 × 10⁴ | 1.512 × 10³ | 7.107 × 10² | 2.676 × 10⁴ | 2.266 × 10² | 3.565 × 10² |
| | Worst | 7.958 × 10² | 1.483 × 10⁵ | 2.567 × 10⁴ | 6.831 × 10⁴ | 2.247 × 10³ | 1.602 × 10³ | 5.289 × 10⁵ | 1.058 × 10³ | 2.318 × 10⁴ |
| | Rank | 1 | 7 | 6 | 8 | 4 | 3 | 9 | 2 | 5 |
| 12 | Mean | 1.830 × 10⁷ | 4.235 × 10⁸ | 6.533 × 10⁸ | 4.330 × 10⁹ | 3.029 × 10⁸ | 1.190 × 10⁷ | 4.165 × 10⁸ | 2.783 × 10⁷ | 4.958 × 10⁷ |
| | Std | 1.196 × 10⁷ | 2.298 × 10⁸ | 2.408 × 10⁸ | 2.095 × 10⁹ | 9.060 × 10⁷ | 6.478 × 10⁶ | 1.147 × 10⁸ | 1.477 × 10⁷ | 2.681 × 10⁷ |
| | Best | 6.615 × 10⁶ | 7.719 × 10⁷ | 2.185 × 10⁸ | 1.473 × 10⁹ | 1.635 × 10⁸ | 3.719 × 10⁶ | 2.026 × 10⁸ | 7.105 × 10⁶ | 5.747 × 10⁶ |
| | Worst | 5.860 × 10⁷ | 9.782 × 10⁸ | 1.079 × 10⁹ | 9.595 × 10⁹ | 5.550 × 10⁸ | 2.737 × 10⁷ | 7.120 × 10⁸ | 8.331 × 10⁷ | 1.144 × 10⁸ |
| | Rank | 2 | 7 | 8 | 9 | 5 | 1 | 6 | 3 | 4 |
| 13 | Mean | 9.419 × 10³ | 9.405 × 10⁶ | 9.002 × 10⁴ | 4.787 × 10⁸ | 3.105 × 10⁶ | 3.811 × 10⁴ | 3.234 × 10⁴ | 9.378 × 10³ | 7.642 × 10³ |
| | Std | 1.186 × 10⁴ | 1.835 × 10⁷ | 3.417 × 10⁴ | 4.293 × 10⁸ | 5.306 × 10⁵ | 1.059 × 10⁴ | 1.306 × 10⁴ | 1.530 × 10⁴ | 1.233 × 10⁴ |
| | Best | 6.528 × 10² | 1.196 × 10⁵ | 4.051 × 10⁴ | 9.201 × 10⁴ | 2.014 × 10⁶ | 2.153 × 10⁴ | 1.419 × 10⁴ | 2.912 × 10² | 2.868 × 10² |
| | Worst | 3.599 × 10⁴ | 9.361 × 10⁷ | 1.596 × 10⁵ | 1.853 × 10⁹ | 4.130 × 10⁶ | 6.888 × 10⁴ | 6.511 × 10⁴ | 8.090 × 10⁴ | 4.816 × 10⁴ |
| | Rank | 3 | 8 | 6 | 9 | 7 | 5 | 4 | 2 | 1 |
| 14 | Mean | 4.505 × 10⁵ | 3.598 × 10⁶ | 1.782 × 10⁶ | 3.591 × 10⁶ | 8.700 × 10⁵ | 2.184 × 10⁵ | 7.977 × 10⁵ | 6.176 × 10⁵ | 1.325 × 10⁶ |
| | Std | 2.583 × 10⁵ | 3.441 × 10⁶ | 1.059 × 10⁶ | 2.115 × 10⁶ | 3.002 × 10⁵ | 9.406 × 10⁴ | 6.201 × 10⁵ | 4.363 × 10⁵ | 6.751 × 10⁵ |
| | Best | 1.425 × 10⁵ | 1.266 × 10⁵ | 4.800 × 10⁵ | 6.837 × 10⁵ | 3.137 × 10⁵ | 5.758 × 10⁴ | 9.775 × 10⁴ | 1.427 × 10⁵ | 4.959 × 10⁵ |
| | Worst | 1.131 × 10⁶ | 1.302 × 10⁷ | 4.792 × 10⁶ | 9.880 × 10⁶ | 1.634 × 10⁶ | 4.261 × 10⁵ | 3.816 × 10⁶ | 2.290 × 10⁶ | 2.803 × 10⁶ |
| | Rank | 2 | 9 | 7 | 8 | 5 | 1 | 4 | 3 | 6 |
| 15 | Mean | 6.377 × 10³ | 7.555 × 10⁵ | 1.308 × 10⁵ | 7.000 × 10⁷ | 7.655 × 10⁵ | 2.200 × 10⁴ | 7.456 × 10³ | 7.748 × 10³ | 7.439 × 10³ |
| | Std | 5.773 × 10³ | 1.481 × 10⁶ | 2.144 × 10⁵ | 1.005 × 10⁸ | 3.090 × 10⁵ | 7.872 × 10³ | 4.781 × 10³ | 1.019 × 10⁴ | 1.312 × 10⁴ |
| | Best | 1.849 × 10² | 4.700 × 10⁴ | 2.620 × 10⁴ | 4.352 × 10⁵ | 1.394 × 10⁵ | 6.418 × 10³ | 3.032 × 10³ | 1.612 × 10² | 1.635 × 10² |
| | Worst | 2.138 × 10⁴ | 7.265 × 10⁶ | 1.054 × 10⁶ | 3.816 × 10⁸ | 1.849 × 10⁶ | 4.234 × 10⁴ | 2.806 × 10⁴ | 4.044 × 10⁴ | 7.599 × 10⁴ |
| | Rank | 1 | 7 | 6 | 9 | 8 | 5 | 3 | 4 | 2 |
| 16 | Mean | 5.132 × 10³ | 5.921 × 10³ | 7.751 × 10³ | 3.684 × 10³ | 5.377 × 10³ | 4.724 × 10³ | 5.854 × 10³ | 5.957 × 10³ | 6.546 × 10³ |
| | Std | 1.326 × 10³ | 9.500 × 10² | 1.853 × 10³ | 6.423 × 10² | 5.029 × 10² | 5.282 × 10² | 1.846 × 10³ | 2.099 × 10³ | 2.552 × 10³ |
| | Best | 3.655 × 10³ | 3.863 × 10³ | 4.193 × 10³ | 2.205 × 10³ | 4.483 × 10³ | 3.733 × 10³ | 3.794 × 10³ | 3.357 × 10³ | 3.885 × 10³ |
| | Worst | 9.912 × 10³ | 7.955 × 10³ | 1.340 × 10⁴ | 4.936 × 10³ | 6.998 × 10³ | 6.212 × 10³ | 9.924 × 10³ | 1.031 × 10⁴ | 1.209 × 10⁴ |
| | Rank | 3 | 6 | 9 | 1 | 4 | 2 | 5 | 7 | 8 |
| 17 | Mean | 4.331 × 10³ | 5.316 × 10³ | 5.418 × 10³ | 2.500 × 10³ | 4.207 × 10³ | 4.230 × 10³ | 3.994 × 10³ | 4.892 × 10³ | 4.818 × 10³ |
| | Std | 8.247 × 10² | 1.054 × 10³ | 7.340 × 10² | 5.376 × 10² | 4.684 × 10² | 4.697 × 10² | 9.162 × 10² | 1.001 × 10³ | 1.093 × 10³ |
| | Best | 2.686 × 10³ | 3.639 × 10³ | 4.328 × 10³ | 1.546 × 10³ | 3.531 × 10³ | 3.003 × 10³ | 2.678 × 10³ | 2.963 × 10³ | 3.252 × 10³ |
| | Worst | 6.121 × 10³ | 8.138 × 10³ | 7.694 × 10³ | 4.048 × 10³ | 5.525 × 10³ | 4.990 × 10³ | 6.177 × 10³ | 7.715 × 10³ | 7.388 × 10³ |
| | Rank | 5 | 8 | 9 | 1 | 3 | 4 | 2 | 7 | 6 |
| 18 | Mean | 2.582 × 10⁶ | 5.795 × 10⁶ | 2.207 × 10⁶ | 3.302 × 10⁶ | 2.056 × 10⁶ | 3.554 × 10⁵ | 1.581 × 10⁶ | 3.123 × 10⁶ | 7.391 × 10⁶ |
| | Std | 1.838 × 10⁶ | 5.146 × 10⁶ | 7.891 × 10⁵ | 1.528 × 10⁶ | 6.659 × 10⁵ | 1.237 × 10⁵ | 8.044 × 10⁵ | 1.601 × 10⁶ | 4.976 × 10⁶ |
| | Best | 7.702 × 10⁵ | 4.827 × 10⁵ | 7.334 × 10⁵ | 7.652 × 10⁵ | 8.964 × 10⁵ | 1.826 × 10⁵ | 4.847 × 10⁵ | 7.828 × 10⁵ | 1.886 × 10⁶ |
| | Worst | 7.819 × 10⁶ | 2.227 × 10⁷ | 4.676 × 10⁶ | 6.225 × 10⁶ | 3.805 × 10⁶ | 6.416 × 10⁵ | 4.410 × 10⁶ | 8.323 × 10⁶ | 2.448 × 10⁷ |
| | Rank | 5 | 8 | 4 | 7 | 3 | 1 | 2 | 6 | 9 |
| 19 | Mean | 5.543 × 10³ | 1.876 × 10⁶ | 1.434 × 10⁷ | 7.688 × 10⁷ | 3.721 × 10⁶ | 1.077 × 10⁴ | 2.169 × 10⁴ | 7.613 × 10³ | 1.652 × 10⁴ |
| | Std | 7.741 × 10³ | 2.501 × 10⁶ | 6.244 × 10⁶ | 9.678 × 10⁷ | 1.493 × 10⁶ | 8.269 × 10³ | 2.103 × 10⁴ | 1.145 × 10⁴ | 1.577 × 10⁴ |
| | Best | 1.186 × 10² | 4.900 × 10⁴ | 2.480 × 10⁶ | 5.702 × 10⁶ | 1.125 × 10⁶ | 2.071 × 10³ | 1.116 × 10³ | 1.396 × 10² | 1.491 × 10² |
| | Worst | 4.335 × 10⁴ | 1.205 × 10⁷ | 2.536 × 10⁷ | 4.576 × 10⁸ | 6.880 × 10⁶ | 4.351 × 10⁴ | 7.993 × 10⁴ | 4.363 × 10⁴ | 5.512 × 10⁴ |
| | Rank | 1 | 6 | 8 | 9 | 7 | 3 | 5 | 2 | 4 |
| 20 | Mean | 3.053 × 10³ | 3.737 × 10³ | 4.162 × 10³ | 2.184 × 10³ | 3.717 × 10³ | 3.664 × 10³ | 4.850 × 10³ | 3.737 × 10³ | 3.296 × 10³ |
| | Std | 5.343 × 10² | 6.889 × 10² | 5.279 × 10² | 4.699 × 10² | 5.790 × 10² | 4.433 × 10² | 1.186 × 10³ | 8.359 × 10² | 1.128 × 10³ |
| | Best | 1.318 × 10³ | 2.061 × 10³ | 3.318 × 10³ | 1.209 × 10³ | 2.461 × 10³ | 2.420 × 10³ | 2.189 × 10³ | 2.255 × 10³ | 1.451 × 10³ |
| | Worst | 3.761 × 10³ | 4.976 × 10³ | 5.401 × 10³ | 3.215 × 10³ | 4.876 × 10³ | 4.418 × 10³ | 7.470 × 10³ | 5.557 × 10³ | 6.233 × 10³ |
| | Rank | 2 | 7 | 8 | 1 | 5 | 4 | 9 | 6 | 3 |
| 21 | Mean | 9.983 × 10² | 1.432 × 10³ | 1.747 × 10³ | 7.743 × 10² | 1.664 × 10³ | 1.370 × 10³ | 1.011 × 10³ | 1.090 × 10³ | 1.088 × 10³ |
| | Std | 1.302 × 10² | 1.335 × 10² | 2.281 × 10² | 1.130 × 10² | 1.967 × 10² | 1.409 × 10² | 2.289 × 10² | 1.394 × 10² | 1.405 × 10² |
| | Best | 8.102 × 10² | 1.145 × 10³ | 1.290 × 10³ | 6.484 × 10² | 1.267 × 10³ | 1.049 × 10³ | 7.903 × 10² | 8.929 × 10² | 8.240 × 10² |
| | Worst | 1.282 × 10³ | 1.724 × 10³ | 2.225 × 10³ | 1.326 × 10³ | 2.080 × 10³ | 1.761 × 10³ | 1.600 × 10³ | 1.577 × 10³ | 1.443 × 10³ |
| | Rank | 2 | 7 | 9 | 1 | 8 | 6 | 3 | 5 | 4 |
| 22 | Mean | 2.166 × 10⁴ | 1.800 × 10⁴ | 2.085 × 10⁴ | 1.476 × 10⁴ | 1.969 × 10⁴ | 1.730 × 10⁴ | 2.692 × 10⁴ | 2.551 × 10⁴ | 2.484 × 10⁴ |
| | Std | 8.214 × 10³ | 2.021 × 10³ | 3.096 × 10³ | 1.398 × 10³ | 1.251 × 10³ | 1.118 × 10³ | 5.593 × 10³ | 5.254 × 10³ | 8.665 × 10³ |
| | Best | 1.341 × 10⁴ | 1.397 × 10⁴ | 1.660 × 10⁴ | 1.157 × 10⁴ | 1.537 × 10⁴ | 1.492 × 10⁴ | 1.829 × 10⁴ | 1.133 × 10⁴ | 1.276 × 10⁴ |
| | Worst | 3.406 × 10⁴ | 2.250 × 10⁴ | 2.691 × 10⁴ | 1.859 × 10⁴ | 2.211 × 10⁴ | 2.052 × 10⁴ | 3.415 × 10⁴ | 2.974 × 10⁴ | 3.452 × 10⁴ |
| | Rank | 6 | 3 | 5 | 1 | 4 | 2 | 9 | 8 | 7 |
| 23 | Mean | 1.203 × 10³ | 1.789 × 10³ | 2.420 × 10³ | 1.100 × 10³ | 2.264 × 10³ | 1.418 × 10³ | 1.436 × 10³ | 1.301 × 10³ | 1.298 × 10³ |
| | Std | 9.380 × 10¹ | 1.926 × 10² | 2.668 × 10² | 6.199 × 10¹ | 2.421 × 10² | 1.267 × 10² | 2.203 × 10² | 1.001 × 10² | 1.120 × 10² |
| | Best | 1.051 × 10³ | 1.370 × 10³ | 1.855 × 10³ | 9.835 × 10² | 1.804 × 10³ | 1.134 × 10³ | 1.169 × 10³ | 1.116 × 10³ | 1.046 × 10³ |
| | Worst | 1.376 × 10³ | 2.222 × 10³ | 3.034 × 10³ | 1.235 × 10³ | 2.739 × 10³ | 1.726 × 10³ | 1.949 × 10³ | 1.479 × 10³ | 1.508 × 10³ |
| | Rank | 2 | 7 | 9 | 1 | 8 | 5 | 6 | 4 | 3 |
| 24 | Mean | 1.753 × 10³ | 2.492 × 10³ | 3.476 × 10³ | 1.530 × 10³ | 3.168 × 10³ | 2.330 × 10³ | 1.952 × 10³ | 1.927 × 10³ | 1.815 × 10³ |
| | Std | 1.663 × 10² | 2.807 × 10² | 4.040 × 10² | 9.677 × 10¹ | 2.494 × 10² | 1.994 × 10² | 2.455 × 10² | 1.607 × 10² | 1.550 × 10² |
| | Best | 1.462 × 10³ | 1.758 × 10³ | 2.537 × 10³ | 1.297 × 10³ | 2.619 × 10³ | 1.899 × 10³ | 1.587 × 10³ | 1.629 × 10³ | 1.484 × 10³ |
| | Worst | 2.172 × 10³ | 3.261 × 10³ | 4.256 × 10³ | 1.706 × 10³ | 3.718 × 10³ | 2.763 × 10³ | 2.540 × 10³ | 2.250 × 10³ | 2.172 × 10³ |
| | Rank | 2 | 7 | 9 | 1 | 8 | 6 | 5 | 4 | 3 |
| 25 | Mean | 7.624 × 10² | 2.310 × 10³ | 1.113 × 10³ | 2.813 × 10³ | 1.021 × 10³ | 8.076 × 10² | 1.766 × 10³ | 7.614 × 10² | 7.745 × 10² |
| | Std | 6.221 × 10¹ | 3.353 × 10³ | 5.379 × 10¹ | 6.758 × 10² | 7.246 × 10¹ | 6.130 × 10¹ | 1.783 × 10² | 5.359 × 10¹ | 6.691 × 10¹ |
| | Best | 6.506 × 10² | 7.359 × 10² | 1.010 × 10³ | 1.868 × 10³ | 8.443 × 10² | 6.832 × 10² | 1.467 × 10³ | 6.328 × 10² | 6.448 × 10² |
| | Worst | 9.385 × 10² | 1.559 × 10⁴ | 1.203 × 10³ | 4.875 × 10³ | 1.140 × 10³ | 9.031 × 10² | 2.123 × 10³ | 8.624 × 10² | 8.997 × 10² |
| | Rank | 2 | 8 | 6 | 9 | 5 | 4 | 7 | 1 | 3 |
| 26 | Mean | 1.346 × 10⁴ | 1.788 × 10⁴ | 2.922 × 10⁴ | 9.924 × 10³ | 2.127 × 10⁴ | 1.854 × 10⁴ | 1.510 × 10⁴ | 1.433 × 10⁴ | 1.445 × 10⁴ |
| | Std | 1.774 × 10³ | 3.869 × 10³ | 3.190 × 10³ | 7.893 × 10² | 1.640 × 10³ | 2.705 × 10³ | 2.739 × 10³ | 1.675 × 10³ | 2.077 × 10³ |
| | Best | 1.043 × 10⁴ | 1.018 × 10⁴ | 2.117 × 10⁴ | 8.657 × 10³ | 1.867 × 10⁴ | 1.417 × 10⁴ | 1.026 × 10⁴ | 1.085 × 10⁴ | 1.021 × 10⁴ |
| | Worst | 1.875 × 10⁴ | 2.475 × 10⁴ | 3.657 × 10⁴ | 1.159 × 10⁴ | 2.513 × 10⁴ | 2.298 × 10⁴ | 2.191 × 10⁴ | 1.800 × 10⁴ | 1.965 × 10⁴ |
| | Rank | 2 | 6 | 9 | 1 | 8 | 7 | 5 | 3 | 4 |
| 27 | Mean | 5.000 × 10² | 1.206 × 10³ | 2.317 × 10³ | 1.153 × 10³ | 1.396 × 10³ | 1.265 × 10³ | 1.018 × 10³ | 5.000 × 10² | 5.000 × 10² |
| | Std | 3.785 × 10⁻⁴ | 2.531 × 10² | 5.599 × 10² | 9.433 × 10¹ | 2.785 × 10² | 1.821 × 10² | 1.125 × 10² | 5.206 × 10⁻⁴ | 5.264 × 10⁻⁴ |
| | Best | 5.000 × 10² | 7.054 × 10² | 1.422 × 10³ | 9.829 × 10² | 1.056 × 10³ | 9.671 × 10² | 8.444 × 10² | 5.000 × 10² | 5.000 × 10² |
| | Worst | 5.000 × 10² | 1.959 × 10³ | 3.740 × 10³ | 1.351 × 10³ | 2.277 × 10³ | 1.732 × 10³ | 1.268 × 10³ | 5.000 × 10² | 5.000 × 10² |
| | Rank | 1 | 6 | 9 | 5 | 8 | 7 | 4 | 2 | 3 |
| 28 | Mean | 5.000 × 10² | 1.200 × 10⁴ | 9.275 × 10² | 4.120 × 10³ | 7.670 × 10² | 5.559 × 10² | 1.835 × 10³ | 5.000 × 10² | 5.000 × 10² |
| | Std | 4.422 × 10⁻⁴ | 7.865 × 10³ | 4.799 × 10¹ | 1.190 × 10³ | 4.914 × 10¹ | 3.666 × 10¹ | 3.997 × 10² | 4.211 × 10⁻⁴ | 5.674 × 10⁻⁴ |
| | Best | 5.000 × 10² | 6.750 × 10² | 8.462 × 10² | 1.866 × 10³ | 6.391 × 10² | 5.000 × 10² | 1.188 × 10³ | 5.000 × 10² | 5.000 × 10² |
| | Worst | 5.000 × 10² | 2.256 × 10⁴ | 1.051 × 10³ | 8.132 × 10³ | 8.968 × 10² | 6.575 × 10² | 2.845 × 10³ | 5.000 × 10² | 5.000 × 10² |
| | Rank | 1 | 9 | 6 | 8 | 5 | 4 | 7 | 2 | 3 |
| 29 | Mean | 3.553 × 10³ | 6.307 × 10³ | 1.093 × 10⁴ | 4.494 × 10³ | 5.608 × 10³ | 4.691 × 10³ | 6.756 × 10³ | 4.502 × 10³ | 4.514 × 10³ |
| | Std | 8.641 × 10² | 1.198 × 10³ | 1.755 × 10³ | 5.604 × 10² | 6.617 × 10² | 5.392 × 10² | 1.300 × 10³ | 9.586 × 10² | 1.046 × 10³ |
| | Best | 1.911 × 10³ | 3.721 × 10³ | 8.172 × 10³ | 3.257 × 10³ | 4.514 × 10³ | 3.146 × 10³ | 4.721 × 10³ | 2.849 × 10³ | 3.025 × 10³ |
| | Worst | 5.768 × 10³ | 9.492 × 10³ | 1.531 × 10⁴ | 6.276 × 10³ | 6.787 × 10³ | 5.560 × 10³ | 9.779 × 10³ | 7.920 × 10³ | 8.239 × 10³ |
| | Rank | 1 | 7 | 9 | 2 | 6 | 5 | 8 | 3 | 4 |
| Average rank | | 2.6207 | 6.6897 | 7.2759 | 4.3793 | 5.6897 | 3.5862 | 5.7931 | 4.3448 | 4.6207 |

| F | Type | SSO | DBO | WOA | GWO | HHO | AVOA | GTO |
|---|---|---|---|---|---|---|---|---|
| 1 | Mean | 8.450 × 10⁻¹⁴ | 9.707 × 10⁰ | 1.223 × 10¹ | 5.130 × 10³ | 1.502 × 10⁰ | 2.827 × 10⁻¹³ | 5.531 × 10⁴ |
| | Std | 3.934 × 10⁻¹⁴ | 2.158 × 10¹ | 1.619 × 10¹ | 3.105 × 10³ | 6.404 × 10⁻¹ | 1.164 × 10⁻¹³ | 2.432 × 10⁵ |
| | Best | 5.684 × 10⁻¹⁴ | 5.684 × 10⁻¹⁴ | 6.837 × 10⁻¹ | 8.533 × 10² | 3.373 × 10⁻¹ | 1.137 × 10⁻¹³ | 8.955 × 10² |
| | Worst | 1.705 × 10⁻¹³ | 8.868 × 10¹ | 7.809 × 10¹ | 1.232 × 10⁴ | 2.876 × 10⁰ | 6.253 × 10⁻¹³ | 1.487 × 10⁶ |
| | Rank | 1 | 4 | 5 | 6 | 3 | 2 | 7 |
| 2 | Mean | 2.039 × 10⁰ | 4.625 × 10¹ | 5.740 × 10¹ | 6.977 × 10¹ | 5.700 × 10¹ | 3.560 × 10¹ | 5.656 × 10¹ |
| | Std | 1.824 × 10⁰ | 2.145 × 10¹ | 1.793 × 10¹ | 2.433 × 10¹ | 2.279 × 10¹ | 2.364 × 10¹ | 1.401 × 10¹ |
| | Best | 1.986 × 10⁻¹ | 6.377 × 10⁰ | 6.135 × 10⁰ | 4.497 × 10¹ | 4.462 × 10⁰ | 9.160 × 10⁻⁴ | 9.619 × 10⁰ |
| | Worst | 6.481 × 10⁰ | 1.207 × 10² | 9.476 × 10¹ | 1.759 × 10² | 1.454 × 10² | 6.775 × 10¹ | 7.504 × 10¹ |
| | Rank | 1 | 3 | 6 | 7 | 5 | 2 | 4 |
| 3 | Mean | 7.457 × 10⁻³ | 1.299 × 10¹ | 5.617 × 10¹ | 1.395 × 10⁰ | 3.816 × 10¹ | 1.102 × 10¹ | 1.680 × 10¹ |
| | Std | 2.705 × 10⁻² | 6.720 × 10⁰ | 1.235 × 10¹ | 1.513 × 10⁰ | 1.011 × 10¹ | 6.929 × 10⁰ | 9.457 × 10⁰ |
| | Best | 1.137 × 10⁻¹³ | 1.712 × 10⁰ | 3.042 × 10¹ | 3.802 × 10⁻² | 2.057 × 10¹ | 6.057 × 10⁻¹ | 2.112 × 10⁰ |
| | Worst | 1.232 × 10⁻¹ | 2.645 × 10¹ | 8.445 × 10¹ | 6.357 × 10⁰ | 6.284 × 10¹ | 2.599 × 10¹ | 5.112 × 10¹ |
| | Rank | 1 | 4 | 7 | 2 | 6 | 3 | 5 |
| 4 | Mean | 5.623 × 10¹ | 8.186 × 10¹ | 1.172 × 10² | 3.746 × 10¹ | 8.384 × 10¹ | 8.659 × 10¹ | 7.928 × 10¹ |
| | Std | 2.091 × 10¹ | 2.469 × 10¹ | 3.671 × 10¹ | 1.430 × 10¹ | 1.422 × 10¹ | 2.900 × 10¹ | 2.119 × 10¹ |
| | Best | 2.288 × 10¹ | 3.285 × 10¹ | 4.975 × 10¹ | 1.431 × 10¹ | 5.699 × 10¹ | 2.885 × 10¹ | 4.020 × 10¹ |
| | Worst | 1.224 × 10² | 1.304 × 10² | 2.060 × 10² | 9.085 × 10¹ | 1.219 × 10² | 1.662 × 10² | 1.598 × 10² |
| | Rank | 2 | 4 | 7 | 1 | 5 | 6 | 3 |
| 5 | Mean | 3.504 × 10¹ | 3.804 × 10² | 2.158 × 10³ | 7.464 × 10¹ | 1.415 × 10³ | 1.552 × 10³ | 8.221 × 10² |
| | Std | 7.383 × 10¹ | 3.077 × 10² | 1.139 × 10³ | 5.327 × 10¹ | 2.363 × 10² | 4.757 × 10² | 8.107 × 10² |
| | Best | 1.791 × 10⁻¹ | 4.877 × 10⁰ | 6.416 × 10² | 1.225 × 10⁰ | 9.214 × 10² | 2.568 × 10² | 4.134 × 10¹ |
| | Worst | 4.034 × 10² | 1.065 × 10³ | 5.899 × 10³ | 2.777 × 10² | 1.843 × 10³ | 2.986 × 10³ | 2.835 × 10³ |
| | Rank | 1 | 3 | 7 | 2 | 5 | 6 | 4 |
| 6 | Mean | 5.617 × 10³ | 1.832 × 10⁴ | 4.887 × 10³ | 9.515 × 10⁵ | 7.657 × 10³ | 3.968 × 10³ | 5.974 × 10³ |
| | Std | 6.412 × 10³ | 4.973 × 10⁴ | 5.665 × 10³ | 3.539 × 10⁶ | 6.873 × 10³ | 4.852 × 10³ | 9.473 × 10³ |
| | Best | 6.222 × 10¹ | 2.311 × 10² | 1.899 × 10² | 2.544 × 10² | 3.994 × 10² | 1.379 × 10² | 1.454 × 10² |
| | Worst | 2.102 × 10⁴ | 3.079 × 10⁵ | 1.867 × 10⁴ | 1.773 × 10⁷ | 2.848 × 10⁴ | 1.861 × 10⁴ | 5.192 × 10⁴ |
| | Rank | 3 | 6 | 2 | 7 | 5 | 1 | 4 |
| 7 | Mean | 3.543 × 10¹ | 8.382 × 10¹ | 1.520 × 10² | 4.246 × 10¹ | 1.010 × 10² | 7.208 × 10¹ | 1.589 × 10² |
| | Std | 1.194 × 10¹ | 3.448 × 10¹ | 5.237 × 10¹ | 1.361 × 10¹ | 3.128 × 10¹ | 3.763 × 10¹ | 8.407 × 10¹ |
| | Best | 2.171 × 10¹ | 3.203 × 10¹ | 5.223 × 10¹ | 2.271 × 10¹ | 5.024 × 10¹ | 3.137 × 10¹ | 6.022 × 10¹ |
| | Worst | 6.577 × 10¹ | 1.829 × 10² | 2.940 × 10² | 8.749 × 10¹ | 1.558 × 10² | 1.919 × 10² | 4.697 × 10² |
| | Rank | 1 | 4 | 6 | 2 | 5 | 3 | 7 |
| 8 | Mean | 2.105 × 10¹ | 4.558 × 10¹ | 4.910 × 10¹ | 3.523 × 10¹ | 3.935 × 10¹ | 2.643 × 10¹ | 1.045 × 10² |
| | Std | 4.800 × 10⁻¹ | 3.979 × 10¹ | 3.129 × 10¹ | 3.342 × 10¹ | 2.003 × 10¹ | 7.248 × 10⁰ | 7.315 × 10¹ |
| | Best | 2.003 × 10¹ | 2.186 × 10¹ | 2.824 × 10¹ | 2.164 × 10¹ | 2.816 × 10¹ | 2.108 × 10¹ | 3.293 × 10¹ |
| | Worst | 2.244 × 10¹ | 1.649 × 10² | 1.717 × 10² | 1.471 × 10² | 1.492 × 10² | 4.390 × 10¹ | 3.282 × 10² |
| | Rank | 1 | 5 | 6 | 3 | 4 | 2 | 7 |
| 9 | Mean | 1.653 × 10² | 1.809 × 10² | 1.813 × 10² | 1.921 × 10² | 1.813 × 10² | 1.808 × 10² | 1.809 × 10² |
| | Std | 3.039 × 10⁻¹³ | 5.324 × 10⁻² | 5.481 × 10⁻¹ | 1.362 × 10¹ | 3.341 × 10⁻¹ | 9.752 × 10⁻⁹ | 2.468 × 10⁻¹ |
| | Best | 1.653 × 10² | 1.808 × 10² | 1.808 × 10² | 1.808 × 10² | 1.808 × 10² | 1.808 × 10² | 1.808 × 10² |
| | Worst | 1.653 × 10² | 1.809 × 10² | 1.828 × 10² | 2.329 × 10² | 1.821 × 10² | 1.808 × 10² | 1.823 × 10² |
| | Rank | 1 | 4 | 5 | 7 | 6 | 2 | 3 |
| 10 | Mean | 1.272 × 10² | 1.206 × 10² | 1.431 × 10³ | 4.844 × 10² | 3.147 × 10² | 2.645 × 10² | 1.016 × 10² |
| | Std | 9.533 × 10¹ | 9.312 × 10¹ | 1.078 × 10³ | 5.006 × 10² | 2.695 × 10² | 2.349 × 10² | 5.654 × 10⁻¹ |
| | Best | 1.525 × 10¹ | 1.003 × 10² | 1.008 × 10² | 1.003 × 10² | 5.298 × 10¹ | 4.508 × 10¹ | 1.007 × 10² |
| | Worst | 4.689 × 10² | 6.317 × 10² | 3.247 × 10³ | 1.850 × 10³ | 9.701 × 10² | 8.968 × 10² | 1.032 × 10² |
| | Rank | 3 | 2 | 7 | 6 | 5 | 4 | 1 |
| 11 | Mean | 3.216 × 10² | 3.426 × 10² | 2.985 × 10² | 6.291 × 10² | 3.496 × 10² | 3.270 × 10² | 3.152 × 10² |
| | Std | 4.173 × 10¹ | 1.483 × 10² | 9.834 × 10¹ | 2.079 × 10² | 6.459 × 10¹ | 4.502 × 10¹ | 1.122 × 10² |
| | Best | 3.000 × 10² | 4.547 × 10⁻¹³ | 6.195 × 10⁻¹ | 3.006 × 10² | 2.661 × 10¹ | 3.000 × 10² | 3.556 × 10⁻³ |
| | Worst | 4.000 × 10² | 7.009 × 10² | 4.001 × 10² | 1.171 × 10³ | 4.050 × 10² | 4.000 × 10² | 7.605 × 10² |
| | Rank | 3 | 5 | 1 | 7 | 6 | 4 | 2 |
| 12 | Mean | 2.000 × 10² | 2.754 × 10² | 3.029 × 10² | 2.534 × 10² | 3.098 × 10² | 2.616 × 10² | 2.486 × 10² |
| | Std | 2.000 × 10⁻⁴ | 2.589 × 10¹ | 4.893 × 10¹ | 1.284 × 10¹ | 5.648 × 10¹ | 2.173 × 10¹ | 8.500 × 10⁰ |
| | Best | 2.000 × 10² | 2.459 × 10² | 2.446 × 10² | 2.351 × 10² | 2.517 × 10² | 2.392 × 10² | 2.406 × 10² |
| | Worst | 2.000 × 10² | 3.662 × 10² | 4.432 × 10² | 2.884 × 10² | 5.061 × 10² | 3.453 × 10² | 2.864 × 10² |
| | Rank | 1 | 5 | 6 | 3 | 7 | 4 | 2 |
| Average Rank | | 1.58 | 4.08 | 5.42 | 4.42 | 5.17 | 3.25 | 4.08 |
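
The Average Rank row can be reproduced from the twelve Rank rows above it: each entry is the plain arithmetic mean of an algorithm's per-function ranks (for SSO, 19/12 ≈ 1.58). The Python sketch below recomputes the row. The `RANKS` dictionary and the `average_ranks` helper are illustrative names rather than code from the paper, and the rank values are transcribed by hand from the table.

```python
# Per-function ranks transcribed from the Rank rows of the twelve-function
# table (F1 to F12, left to right). Names are illustrative, not from the paper.
RANKS = {
    "SSO":  [1, 1, 1, 2, 1, 3, 1, 1, 1, 3, 3, 1],
    "DBO":  [4, 3, 4, 4, 3, 6, 4, 5, 4, 2, 5, 5],
    "WOA":  [5, 6, 7, 7, 7, 2, 6, 6, 5, 7, 1, 6],
    "GWO":  [6, 7, 2, 1, 2, 7, 2, 3, 7, 6, 7, 3],
    "HHO":  [3, 5, 6, 5, 5, 5, 5, 4, 6, 5, 6, 7],
    "AVOA": [2, 2, 3, 6, 6, 1, 3, 2, 2, 4, 4, 4],
    "GTO":  [7, 4, 5, 3, 4, 4, 7, 7, 3, 1, 2, 2],
}


def average_ranks(ranks):
    """Return the mean rank per algorithm; lower means better overall placement."""
    n_functions = len(next(iter(ranks.values())))
    # Every algorithm must have been ranked on the same set of functions.
    assert all(len(r) == n_functions for r in ranks.values())
    return {alg: sum(r) / n_functions for alg, r in ranks.items()}


if __name__ == "__main__":
    for alg, avg in average_ranks(RANKS).items():
        print(f"{alg}: {avg:.2f}")
```

Running it prints one line per algorithm (SSO: 1.58, DBO: 4.08, WOA: 5.42, GWO: 4.42, HHO: 5.17, AVOA: 3.25, GTO: 4.08), matching the Average Rank row.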

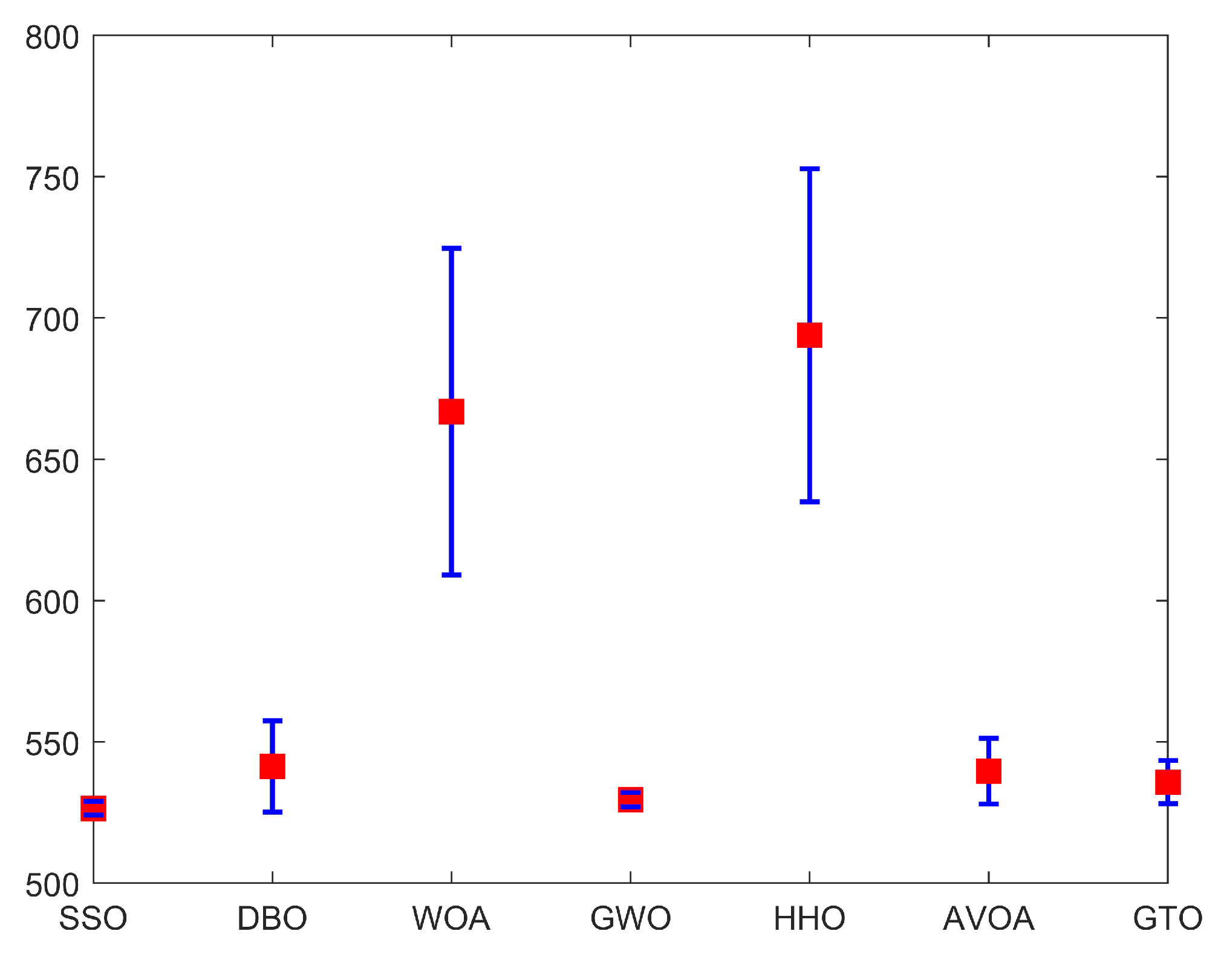

| Type | SSO | DBO | WOA | GWO | HHO | AVOA | GTO |
|---|---|---|---|---|---|---|---|
| Mean | 5.265 × 10² | 5.414 × 10² | 6.669 × 10² | 5.295 × 10² | 6.939 × 10² | 5.396 × 10² | 5.357 × 10² |
| Std | 2.496 × 10⁰ | 1.613 × 10¹ | 5.779 × 10¹ | 2.538 × 10⁰ | 5.889 × 10¹ | 1.169 × 10¹ | 7.616 × 10⁰ |
| Best | 5.248 × 10² | 5.248 × 10² | 5.532 × 10² | 5.251 × 10² | 5.908 × 10² | 5.270 × 10² | 5.264 × 10² |
| Worst | 5.327 × 10² | 5.909 × 10² | 7.907 × 10² | 5.324 × 10² | 8.123 × 10² | 5.918 × 10² | 5.563 × 10² |
| Rank | 1 | 5 | 6 | 2 | 7 | 4 | 3 |
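
The Rank row of this last table is consistent with ordering the algorithms by their Mean value, smallest first, since the objective is minimized. A minimal Python sketch under that assumption follows; `MEANS` and `rank_by_mean` are hypothetical names, the values are the rounded means printed in the table, and the authors' tie-breaking rule is not stated.

```python
# Hypothetical helper: rank algorithms by mean objective value (lower is better).
# Means are the rounded values printed in the Mean row of the final table.
MEANS = {
    "SSO": 526.5, "DBO": 541.4, "WOA": 666.9, "GWO": 529.5,
    "HHO": 693.9, "AVOA": 539.6, "GTO": 535.7,
}


def rank_by_mean(means):
    """Return {algorithm: rank}, with rank 1 for the smallest mean.

    sorted() is stable, so exact ties keep dictionary insertion order;
    this tie rule is an assumption, not something the paper specifies.
    """
    order = sorted(means, key=means.get)
    return {alg: position for position, alg in enumerate(order, start=1)}


if __name__ == "__main__":
    print(rank_by_mean(MEANS))
```

This prints `{'SSO': 1, 'GWO': 2, 'GTO': 3, 'AVOA': 4, 'DBO': 5, 'WOA': 6, 'HHO': 7}`, the same ordering as the Rank row. Ranking on the Best row instead would tie SSO and DBO at 5.248 × 10². Because the printed means are rounded to four significant figures, recomputed ranks can also tie where the unrounded values did not, as with DBO and GTO on F9 of the twelve-function table (both 1.809 × 10²).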

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, J.; Yan, Z.; Sato, Y.; Zuo, Q. Salmon Salar Optimization: A Novel Natural Inspired Metaheuristic Method for Deep-Sea Probe Design for Unconventional Subsea Oil Wells. J. Mar. Sci. Eng. 2024, 12, 1802. https://doi.org/10.3390/jmse12101802
