Identification of Large Yellow Croaker under Variable Conditions Based on the Cycle Generative Adversarial Network and Transfer Learning

Abstract

1. Introduction

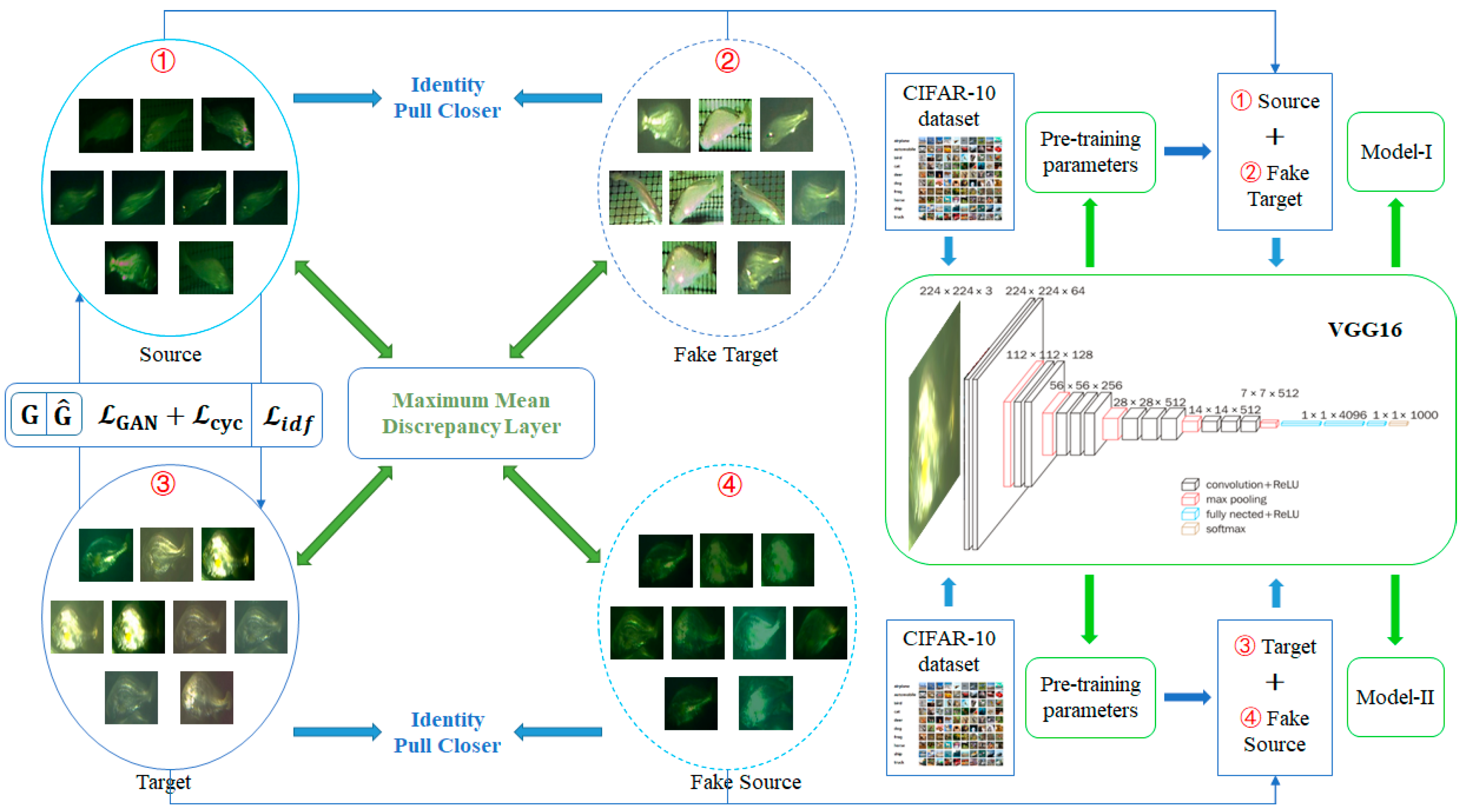

2. Proposed Method

2.1. Method Overview

2.2. CycleGAN-Based Translation

2.3. Identity Foreground Loss

2.4. Maximum Mean Discrepancy
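
As a general illustration of the quantity this section is named after, the following is a minimal sketch of a biased estimate of the squared maximum mean discrepancy (MMD) between two sets of feature vectors. The Gaussian kernel, the `bandwidth` value, the function names, and the toy feature vectors are all assumptions made for illustration; they are not taken from the paper, whose exact formulation may differ.

```python
import math


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased estimate of squared MMD between samples xs and ys."""

    def mean_kernel(a, b):
        # Average kernel value over all pairs drawn from a and b.
        total = sum(gaussian_kernel(u, v, bandwidth) for u in a for v in b)
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


# Hypothetical 2-D features standing in for source- and target-domain embeddings.
source = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2]]
shifted = [[2.0, 2.1], [2.2, 2.0], [2.1, 2.2]]

print(mmd_squared(source, source))   # identical samples give zero
print(mmd_squared(source, shifted))  # well-separated samples give a clearly positive value
```

The estimate is zero when both samples coincide and grows as the two feature distributions move apart, which is why a term of this kind can serve as a penalty on the gap between domains.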

2.5. Full Objective Function

2.6. Transfer Learning

3. Experiments

3.1. Datasets and Evaluation Protocol

3.2. Implementation Details

4. Evaluation

4.1. Performance of Direct Transfer

4.2. Effectiveness of the CycleGAN

4.3. Necessity of Identity Foreground Loss

4.4. Importance of Maximum Mean Discrepancy

4.5. Practicability of Our Method

4.6. Parameter Sensitivity

4.7. Comparison with State-of-the-Art Methods

4.8. Effectiveness of Transfer Learning

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wu, Y.; Yu, X.; Suo, N.; Bai, H.; Ke, Q.; Chen, J.; Pan, Y.; Zheng, W.; Xu, P. Thermal tolerance, safety margins and acclimation capacity assessments reveal the climate vulnerability of large yellow croaker aquaculture. Aquaculture 2022, 561, 738665.

- Bai, Y.; Wang, J.; Zhao, J.; Ke, Q.; Qu, A.; Deng, Y.; Zeng, J.; Gong, J.; Chen, J.; Pan, Y.; et al. Genomic selection for visceral white-nodules diseases resistance in large yellow croaker. Aquaculture 2022, 559, 738421.

- Sandford, M.; Castillo, G.; Hung, T.C. A review of fish identification methods applied on small fish. Rev. Aquac. 2020, 12, 542–554.

- Alaba, S.Y.; Nabi, M.M.; Shah, C.; Prior, J.; Campbell, M.D.; Wallace, F.; Ball, E.B.; Moorhead, R. Class-aware fish species recognition using deep learning for an imbalanced dataset. Sensors 2022, 22, 8268.

- Chang, C.C.; Ubina, N.A.; Cheng, S.C.; Lan, H.Y.; Chen, K.C.; Huang, C.C. A Two-Mode Underwater Smart Sensor Object for Precision Aquaculture Based on AIoT Technology. Sensors 2022, 22, 7603.

- Hsiao, Y.H.; Chen, C.C.; Lin, S.I.; Lin, F.P. Real-world underwater fish recognition and identification, using sparse representation. Ecol. Inform. 2014, 23, 13–21.

- Zhang, Z.; Du, X.; Jin, L.; Wang, S.; Wang, L.; Liu, X. Large-scale underwater fish recognition via deep adversarial learning. Knowl. Inf. Syst. 2022, 64, 353–379.

- Liang, J.M.; Mishra, S.; Cheng, Y.L. Applying Image Recognition and Tracking Methods for Fish Physiology Detection Based on a Visual Sensor. Sensors 2022, 22, 5545.

- Zhang, S.; Liu, W.; Zhu, Y.; Han, W.; Huang, Y.; Li, J. Research on fish identification in tropical waters under unconstrained environment based on transfer learning. Earth Sci. Inform. 2022, 15, 1155–1166.

- Xu, X.; Li, W.; Duan, Q. Transfer learning and SE-ResNet152 networks-based for small-scale unbalanced fish species identification. Comput. Electron. Agric. 2021, 180, 105878.

- Saghafi, M.A.; Hussain, A.; Zaman, H.B.; Saad, M.H.M. Review of person re-identification techniques. IET Comput. Vis. 2014, 8, 455–474.

- Huang, N.; Liu, J.; Miao, Y.; Zhang, Q.; Han, J. Deep learning for visible-infrared cross-modality person re-identification: A comprehensive review. Inf. Fusion 2022, 91, 396–411.

- Shruthi, U.; Nagaveni, V.; Raghavendra, B.K. A review on machine learning classification techniques for plant disease detection. In Proceedings of the 2019 5th International Conference on Advanced Computing & Communication Systems (ICACCS), Coimbatore, India, 15–16 March 2019; pp. 281–284.

- Mahmud, M.S.; Zahid, A.; Das, A.K.; Muzammil, M.; Khan, M.U. A systematic literature review on deep learning applications for precision cattle farming. Comput. Electron. Agric. 2021, 187, 106313.

- Duong, H.T.; Hoang, V.T. Dimensionality reduction based on feature selection for rice varieties recognition. In Proceedings of the 2019 4th International Conference on Information Technology (InCIT), Bangkok, Thailand, 24–25 October 2019; pp. 199–284.

- Chen, J.; Chen, W.; Zeb, A.; Yang, S.; Zhang, D. Lightweight inception networks for the recognition and detection of rice plant diseases. IEEE Sens. J. 2022, 22, 14628–14638.

- Zhang, C.; Zhang, H.; Tian, F.; Zhou, Y.; Zhao, S.; Du, X. Research on sheep face recognition algorithm based on improved AlexNet model. Neural Comput. Appl. 2023, 35, 1–9.

- Peng, Y.; Kondo, N.; Fujiura, T.; Suzuki, T.; Ouma, S.; Yoshioka, H.; Itoyama, E. Dam behavior patterns in Japanese black beef cattle prior to calving: Automated detection using LSTM-RNN. Comput. Electron. Agric. 2020, 169, 105178.

- Barbedo, J. A Review on the Use of Computer Vision and Artificial Intelligence for Fish Recognition, Monitoring, and Management. Fishes 2022, 7, 335.

- Yuan, P.; Song, J.; Xu, H. Fish Image Recognition Based on Residual Network and Few-shot Learning. Trans. Chin. Soc. Agric. Mach. 2022, 53, 282–290.

- Wang, J.; Zhu, X.; Gong, S.; Li, W. Transferable joint attribute-identity deep learning for unsupervised person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2275–2284.

- Deng, W.; Zheng, L.; Ye, Q.; Kang, G.; Yang, Y.; Jiao, J. Image-image domain adaptation with preserved self-similarity and domain-dissimilarity for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 994–1003.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Ye, M.; Lan, X.; Yuen, P.C. Robust anchor embedding for unsupervised video person re-identification in the wild. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 170–186.

- Ye, M.; Li, J.; Ma, A.J.; Zheng, L.; Yuen, P.C. Dynamic graph co-matching for unsupervised video-based person re-identification. IEEE Trans. Image Process. 2019, 28, 2976–2990.

- Tang, Y.; Yang, X.; Wang, N.; Song, B.; Gao, X. CGAN-TM: A novel domain-to-domain transferring method for person re-identification. IEEE Trans. Image Process. 2020, 29, 5641–5651.

- Wei, L.; Zhang, S.; Gao, W.; Tian, Q. Person transfer gan to bridge domain gap for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 79–88.

- Zhong, Z.; Zheng, L.; Zheng, Z.; Li, S.; Yang, Y. Camera style adaptation for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5157–5166.

- Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8789–8797.

| Method | Target to Source Recall | Target to Source Specificity | Target to Source mAP | Source to Target Recall | Source to Target Specificity | Source to Target mAP |
|---|---|---|---|---|---|---|
| No Transfer | 52 | 86 | 56.2 | 24.2 | 90.9 | 57.6 |
| Direct Transfer | 47.5 | 100 | 73.8 | 30.3 | 100 | 65.2 |
| CycleGAN | 57.5 | 100 | 78.8 | 18.2 | 97.5 | 61.7 |
| CycleGAN + IDF | 60 | 100 | 80 | 12.1 | 97.5 | 58.9 |
| CycleGAN + MMD | 65 | 95 | 81 | 24.3 | 100 | 65.8 |
| CycleGAN + IDF + MMD | 77.5 | 100 | 88.7 | 69.5 | 97.5 | 85 |
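
To make the Recall and Specificity columns concrete, the following minimal sketch computes both from binary labels using their standard definitions, recall = TP / (TP + FN) and specificity = TN / (TN + FP), expressed in percent. It assumes the table uses these standard definitions; the function name and the toy labels are hypothetical and are not data from the paper.

```python
def recall_and_specificity(y_true, y_pred):
    """Return (recall, specificity) in percent for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # positives found
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # positives missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # negatives rejected
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # negatives accepted
    recall = 100.0 * tp / (tp + fn) if (tp + fn) else 0.0
    specificity = 100.0 * tn / (tn + fp) if (tn + fp) else 0.0
    return recall, specificity


# Hypothetical labels: four true matches and four non-matches.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0]

recall, specificity = recall_and_specificity(y_true, y_pred)
print(recall, specificity)  # 75.0 100.0
```

A model that never accepts a non-match reaches 100 specificity even when it misses some true matches, which is the pattern visible in several rows of the table.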

| IDF loss weight | Target to Source Recall | Target to Source mAP | Source to Target Recall | Source to Target mAP |
|---|---|---|---|---|
| 0 | 57.5 | 78.75 | 18.2 | 61.65 |
| 2.5 | 58.5 | 79.5 | 12 | 58.8 |
| 5 | 60 | 80 | 12.1 | 58.9 |
| 7.5 | 58.75 | 78.3 | 11.6 | 58.3 |
| 10 | 58 | 70.75 | 11.2 | 58.1 |

| MMD loss weight | Target to Source Recall | Target to Source mAP | Source to Target Recall | Source to Target mAP |
|---|---|---|---|---|
| 0 | 60 | 80 | 12.1 | 58.9 |
| 0.2 | 65.3 | 84 | 30.3 | 65.2 |
| 0.4 | 70.5 | 85.5 | 54.2 | 77.3 |
| 0.6 | 77.5 | 88.7 | 60.1 | 80.9 |
| 0.8 | 77 | 88.4 | 69.5 | 85 |
| 1 | 76 | 88.1 | 69.2 | 84.8 |

| Methods | Target to Source Recall | Target to Source mAP | Source to Target Recall | Source to Target mAP |
|---|---|---|---|---|
| PTGAN [27] | 60 | 80 | 12.1 | 58.9 |
| CamStyle [28] | 71.79 | 80.3 | 33.12 | 70.62 |
| StarGAN [29] | 82.05 | 88.39 | 24.03 | 66.95 |
| Our Method | 77.5 | 88.7 | 69.5 | 85 |

| Training Data | Recall | mAP |
|---|---|---|
| Source | 80.3 | 89.6 |
| F (Target) | 36.4 | 44.3 |
| Source + F (Target) | 96.5 | 96.9 |
| Target | 79.2 | 85 |
| F (Source) | 31.8 | 70.3 |
| Target + F (Source) | 87 | 94 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, S.; Qian, C.; Tu, X.; Zheng, H.; Zhu, L.; Liu, H.; Chen, J. Identification of Large Yellow Croaker under Variable Conditions Based on the Cycle Generative Adversarial Network and Transfer Learning. J. Mar. Sci. Eng. 2023, 11, 1461. https://doi.org/10.3390/jmse11071461
