A Lightweight Detection Algorithm for Unmanned Surface Vehicles Based on Multi-Scale Feature Fusion

Abstract

1. Introduction

- (1)

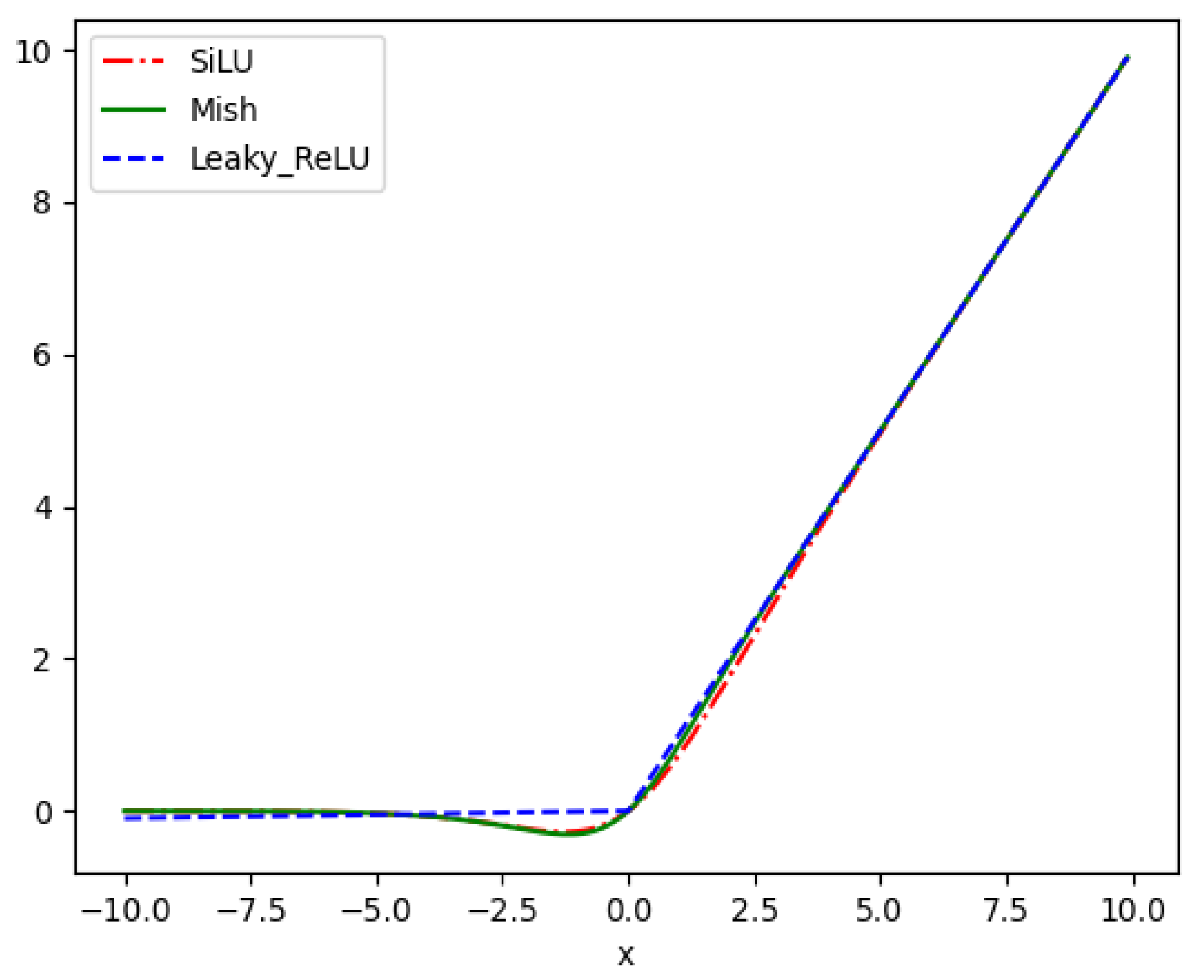

- A multi-scale feature extraction module is designed to enhance the network’s ability to extract target features. Meanwhile, this paper uses the Mish and SiLU activation functions to replace the original activation functions and improve the learning ability of the network.

- (2)

- In this paper, coordinate convolution is used in path aggregation networks to improve the fusion of information from multi-scale feature maps in the up-sampling step. Finally, the dynamic head is used in the prediction process to effectively combine spatial information, multi-scale features, and task awareness.

- (3)

- For USVs, a target detection technique with fewer parameters and reduced computing costs was suggested; it outperforms top lightweight algorithms in a variety of complicated scenarios on water and fully satisfies the timeliness requirements. In addition, a number of model improvement comparison experiments are designated to serve as references for the investigation of techniques for water surface target detection.

2. Related Works

3. Materials and Methods

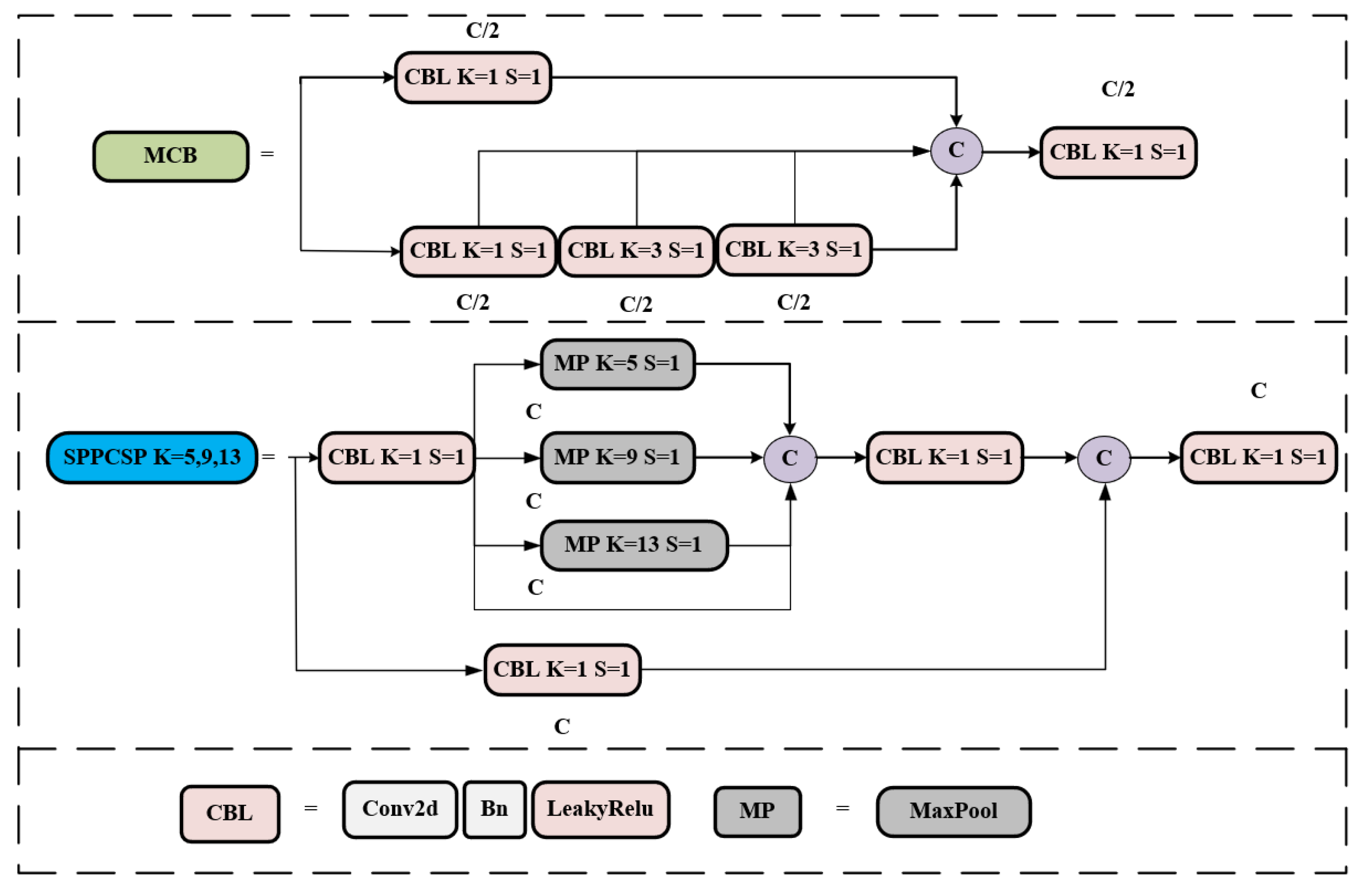

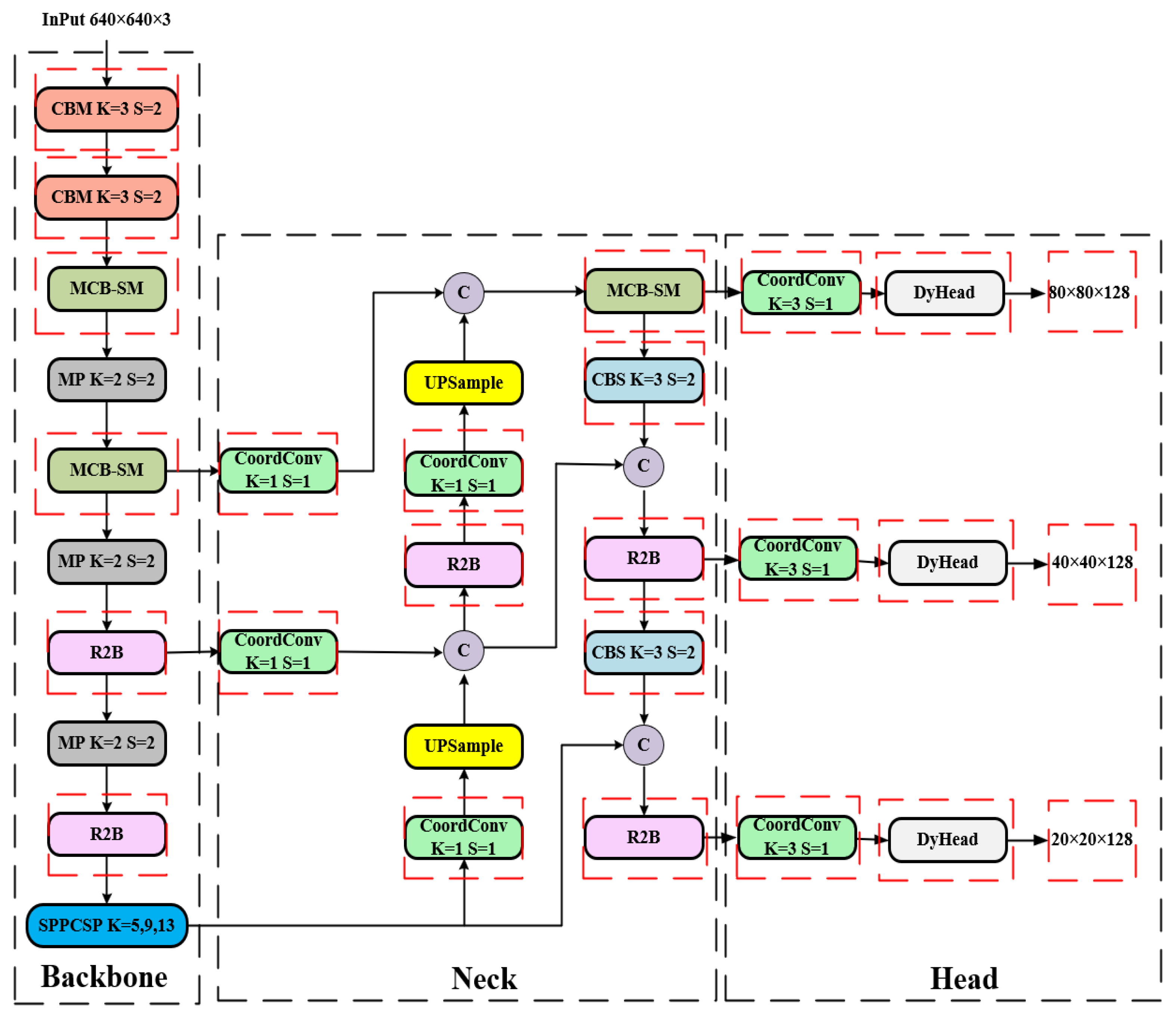

3.1. The YOLOv7-Tiny Detection Framework

3.1.1. Backbone Network

3.1.2. Head Network

3.1.3. Prediction Network

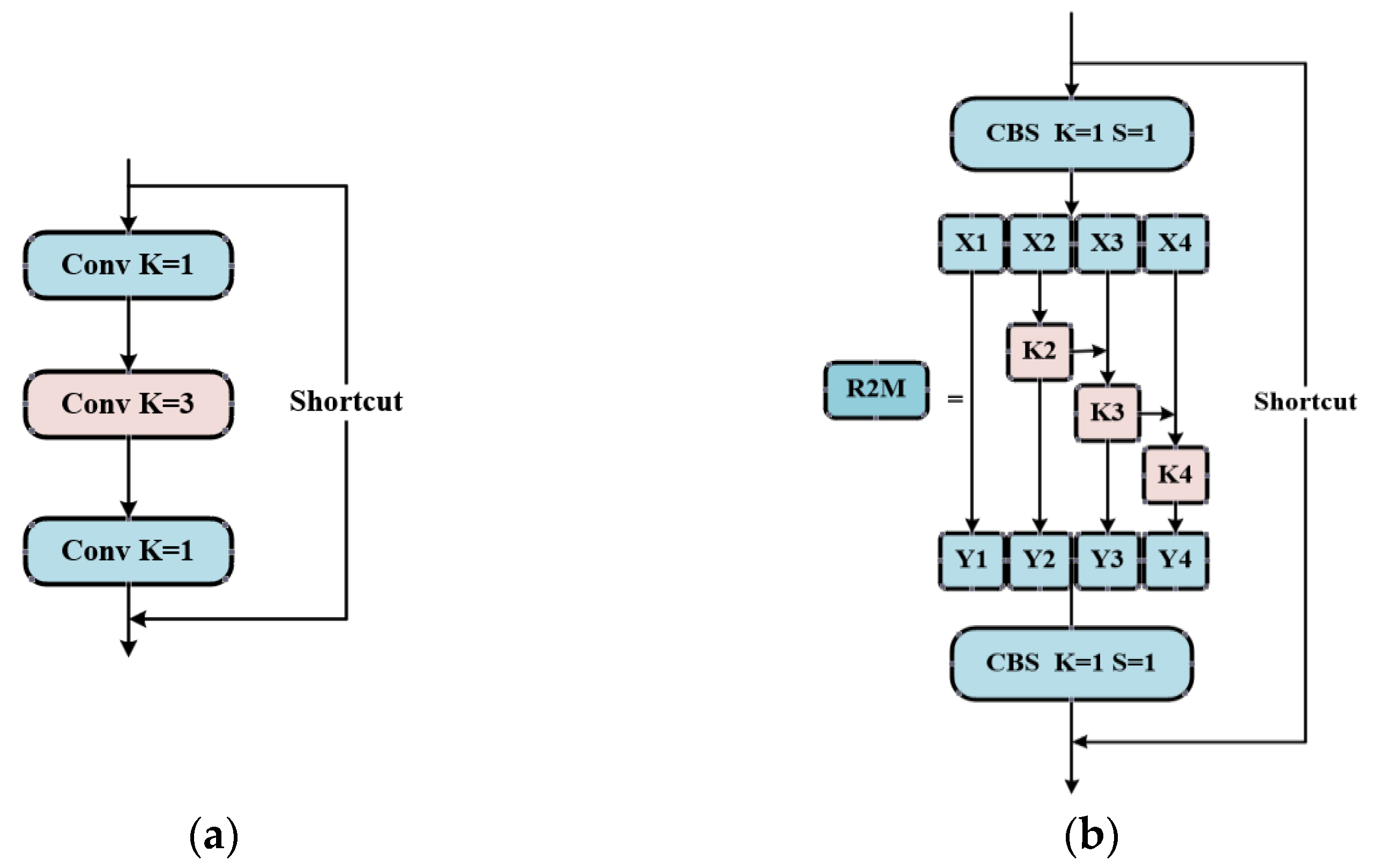

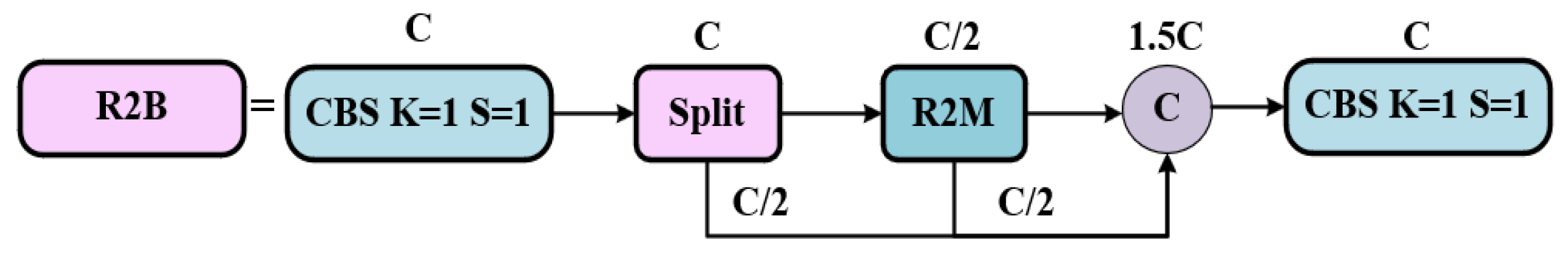

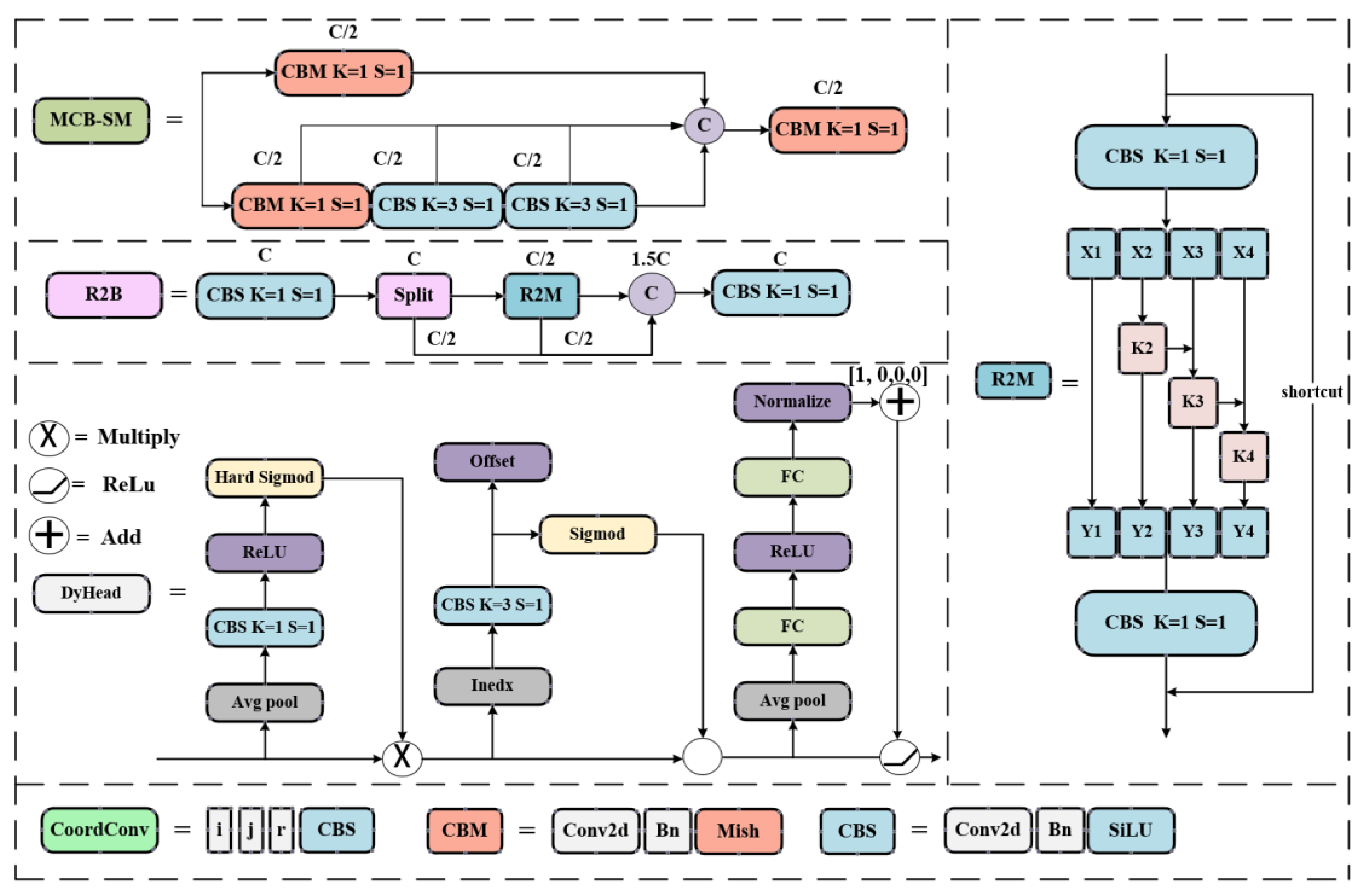

3.2. The New Proposals

3.2.1. The Mish and SiLU Activation Function
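The introduction states that Mish and SiLU replace the original activation functions. As a minimal scalar sketch of the two functions themselves (it does not show which layers receive which function; that split is not stated in the text available here), Mish is x·tanh(softplus(x)) and SiLU is x·sigmoid(x). The helper names below are illustrative, and softplus and sigmoid are written in numerically stable forms.

```python
import math

def softplus(x: float) -> float:
    # Stable ln(1 + e^x): max(x, 0) + ln(1 + e^-|x|) never overflows.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def sigmoid(x: float) -> float:
    # Stable logistic function for both signs of x.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def mish(x: float) -> float:
    # Mish(x) = x * tanh(softplus(x)), smooth and non-monotonic (Misra).
    return x * math.tanh(softplus(x))

def silu(x: float) -> float:
    # SiLU / Swish-1: x * sigmoid(x) (Ramachandran et al.).
    return x * sigmoid(x)

if __name__ == "__main__":
    for v in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print(f"x={v:+.1f}  mish={mish(v):+.4f}  silu={silu(v):+.4f}")
```

Both functions pass small negative values instead of clipping them to zero, and both approach the identity for large positive inputs.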

3.2.2. Design of Res2Block

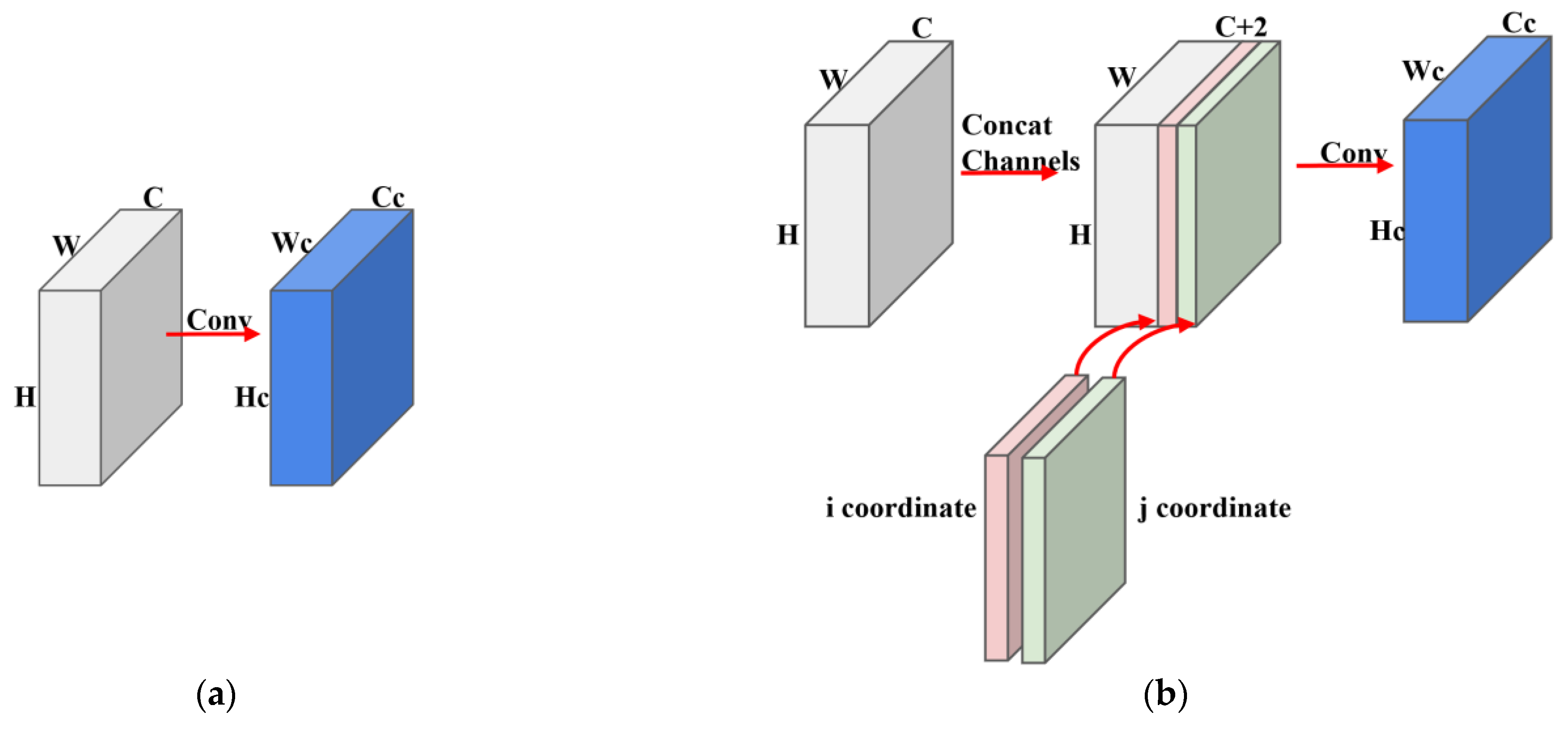
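The block's name suggests it follows the hierarchical residual split of Res2Net (Gao et al.); the exact Res2Block layout is only shown in the figure above. A minimal sketch of that split, assuming a toy representation in which each channel is a 1-D list of floats and the per-split convolution is replaced by a hypothetical fixed 3-tap filter (`conv3`), looks like this:

```python
from typing import Callable, List, Optional

Channel = List[float]   # one channel of a toy 1-D feature map (stand-in for H x W)
Group = List[Channel]   # a slice of channels

def conv3(ch: Channel, w=(0.25, 0.5, 0.25)) -> Channel:
    """Hypothetical stand-in for a 3x3 convolution: a fixed 3-tap 1-D filter
    with zero padding, applied to one channel."""
    p = [0.0] + ch + [0.0]
    return [w[0] * p[i] + w[1] * p[i + 1] + w[2] * p[i + 2] for i in range(len(ch))]

def add(a: Group, b: Group) -> Group:
    # Element-wise sum of two channel groups of equal shape.
    return [[u + v for u, v in zip(ca, cb)] for ca, cb in zip(a, b)]

def res2_split(x: Group, scales: int, op: Callable[[Channel], Channel] = conv3) -> Group:
    """Res2Net-style hierarchical split:
    y1 = x1, y2 = K2(x2), yi = Ki(xi + y_{i-1}) for i > 2, then concatenate.
    For brevity every Ki shares `op`; in Res2Net each Ki has its own weights."""
    if scales < 1 or len(x) % scales:
        raise ValueError("channel count must be a positive multiple of scales")
    w = len(x) // scales
    splits = [x[i * w:(i + 1) * w] for i in range(scales)]
    outputs: List[Group] = [splits[0]]            # first split passes through unchanged
    prev: Optional[Group] = None
    for s in splits[1:]:
        inp = s if prev is None else add(s, prev)  # feed the previous split's output forward
        prev = [op(ch) for ch in inp]
        outputs.append(prev)
    return [ch for grp in outputs for ch in grp]   # concatenate along the channel axis
```

The i-th split passes through up to i − 1 filters, so its effective receptive field grows with i; concatenating all splits yields features at several scales from a single block.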

3.2.3. Neck Combined with CoordConv
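According to the introduction, coordinate convolution is used in the path aggregation network to improve multi-scale fusion during up-sampling. The core of CoordConv (Liu et al.) is to append channels holding each pixel's row and column position before an ordinary convolution. The sketch below shows only that coordinate-channel step on a nested-list C × H × W map (the function name is illustrative); where exactly the authors place it in the neck is not recoverable from this text.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # C x H x W

def add_coord_channels(x: FeatureMap) -> FeatureMap:
    """Append two channels with each pixel's row and column index scaled to
    [-1, 1]. A regular convolution applied afterwards can then condition on
    absolute position, which plain convolutions cannot observe."""
    if not x or not x[0] or not x[0][0]:
        raise ValueError("empty feature map")
    h, w = len(x[0]), len(x[0][0])

    def scale(i: int, n: int) -> float:
        # Map index 0..n-1 linearly onto [-1, 1]; a single row/column maps to 0.
        return 0.0 if n == 1 else 2.0 * i / (n - 1) - 1.0

    rows = [[scale(i, h) for _ in range(w)] for i in range(h)]
    cols = [[scale(j, w) for j in range(w)] for _ in range(h)]
    return x + [rows, cols]  # new list; the input channels are reused, not copied
```

A C-channel input therefore becomes C + 2 channels, and the following convolution needs two extra input channels, which adds only a small number of parameters.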

3.2.4. Head Combined with Dynamic Head

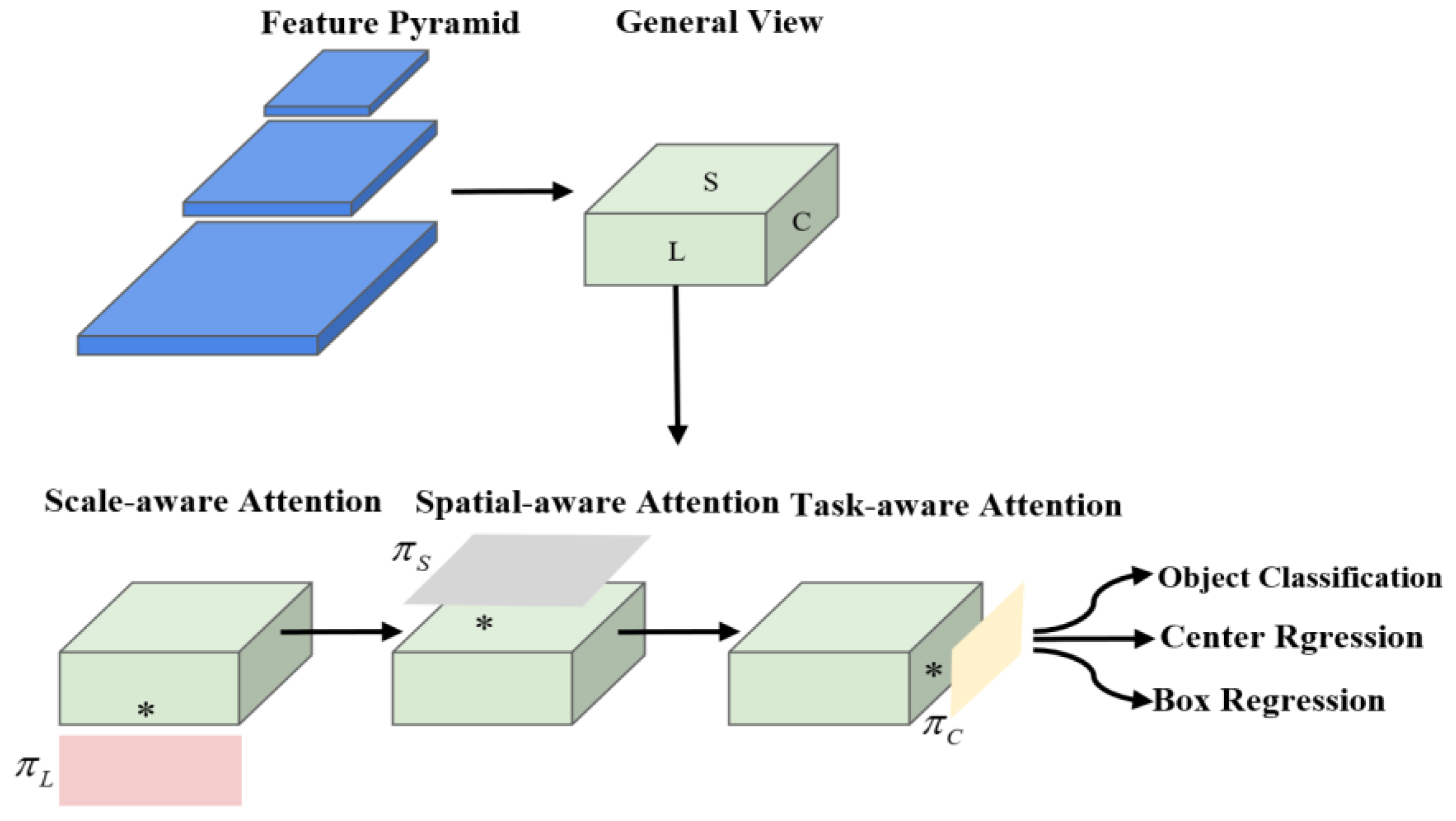

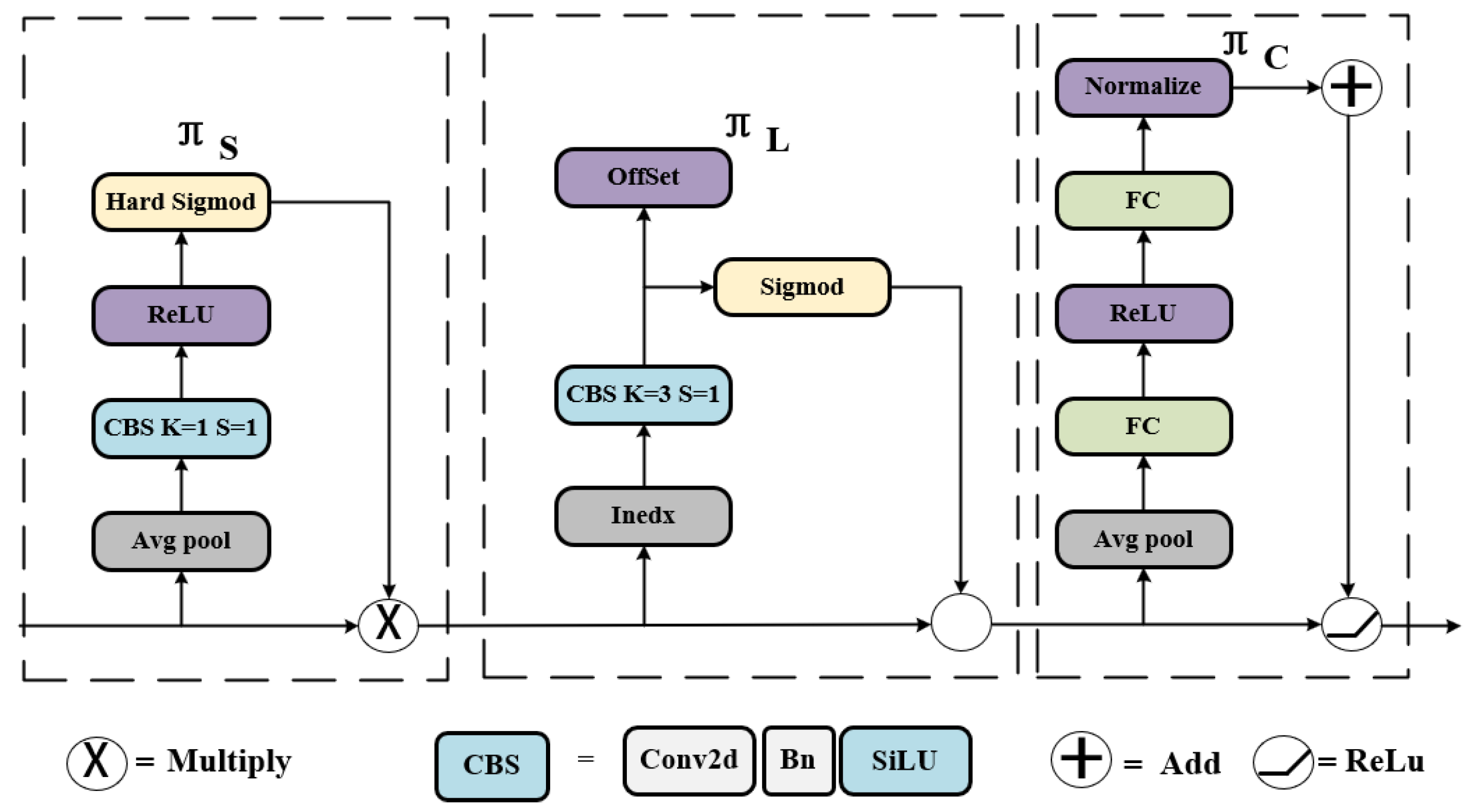
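The dynamic head (Dai et al.) applies scale-aware, spatial-aware, and task-aware attention in sequence. As a minimal sketch of only the first of these, the scale-aware attention π_L(F)·F multiplies each pyramid level by hard_sigmoid(f(mean of F over space and channels)). Here f is a hypothetical scalar affine map (`weight`, `bias`) standing in for the learned 1 × 1 convolution, and the spatial-aware (deformable convolution) and task-aware (dynamic ReLU) parts are omitted.

```python
from typing import List

Level = List[List[float]]  # one pyramid level: S spatial positions x C channels

def hard_sigmoid(x: float) -> float:
    # sigma(x) = max(0, min(1, (x + 1) / 2)), the hard sigmoid used in Dynamic Head.
    return max(0.0, min(1.0, (x + 1.0) / 2.0))

def scale_aware_attention(levels: List[Level], weight: float = 1.0, bias: float = 0.0) -> List[Level]:
    """Reweight each level by hard_sigmoid(f(mean of the level over S and C)).
    `weight` and `bias` are a hypothetical stand-in for the learned f."""
    out: List[Level] = []
    for lvl in levels:
        values = [v for pos in lvl for v in pos]
        mean = sum(values) / len(values)
        a = hard_sigmoid(weight * mean + bias)   # one scalar attention weight per level
        out.append([[a * v for v in pos] for pos in lvl])
    return out
```

Because one scalar is computed per level, the head can emphasize or suppress whole pyramid levels depending on their content, which is how it adapts to objects of different sizes.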

3.2.5. The Proposed Model

4. Experiments

4.1. Experimental Environment and Parameter Setting

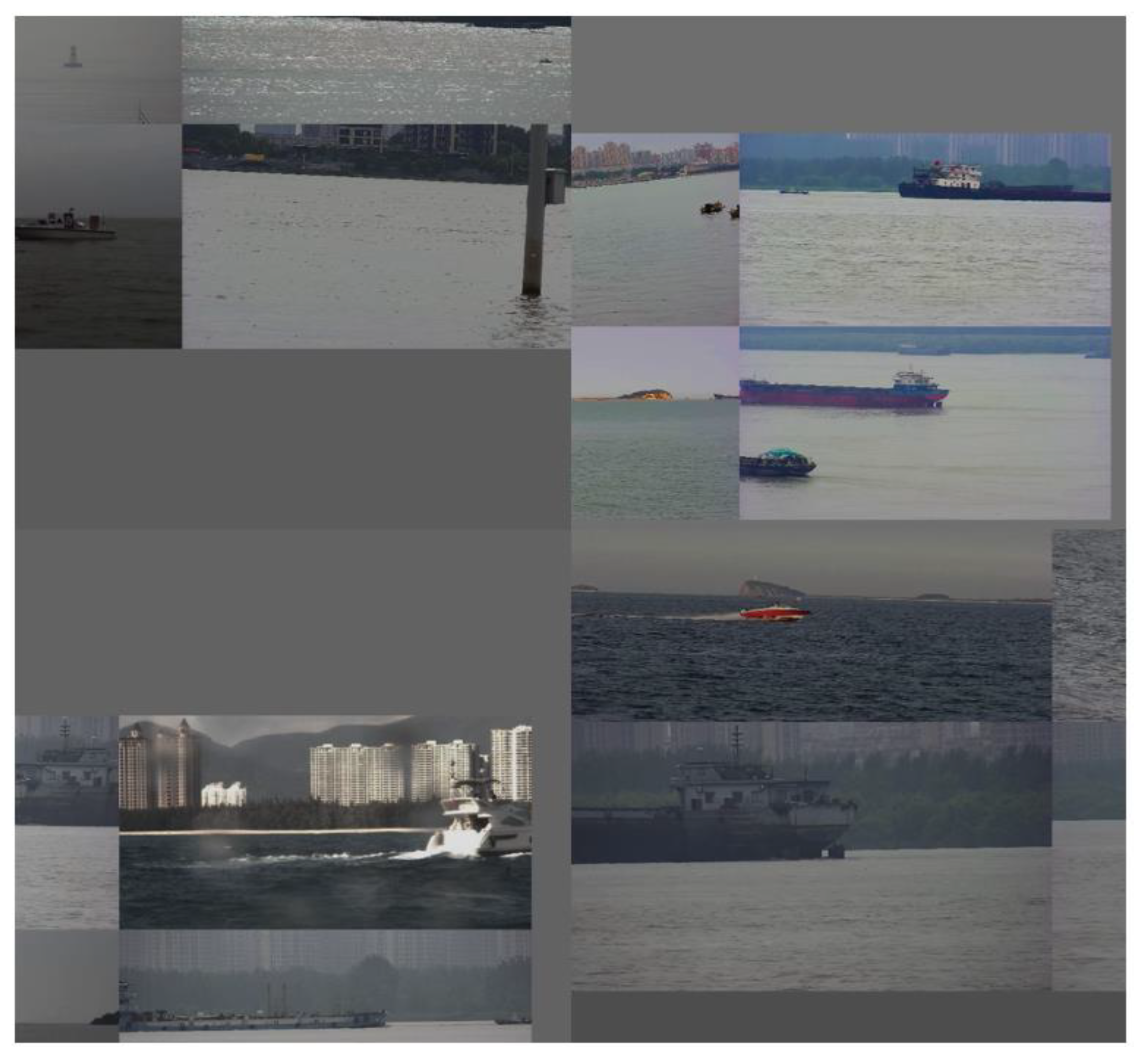

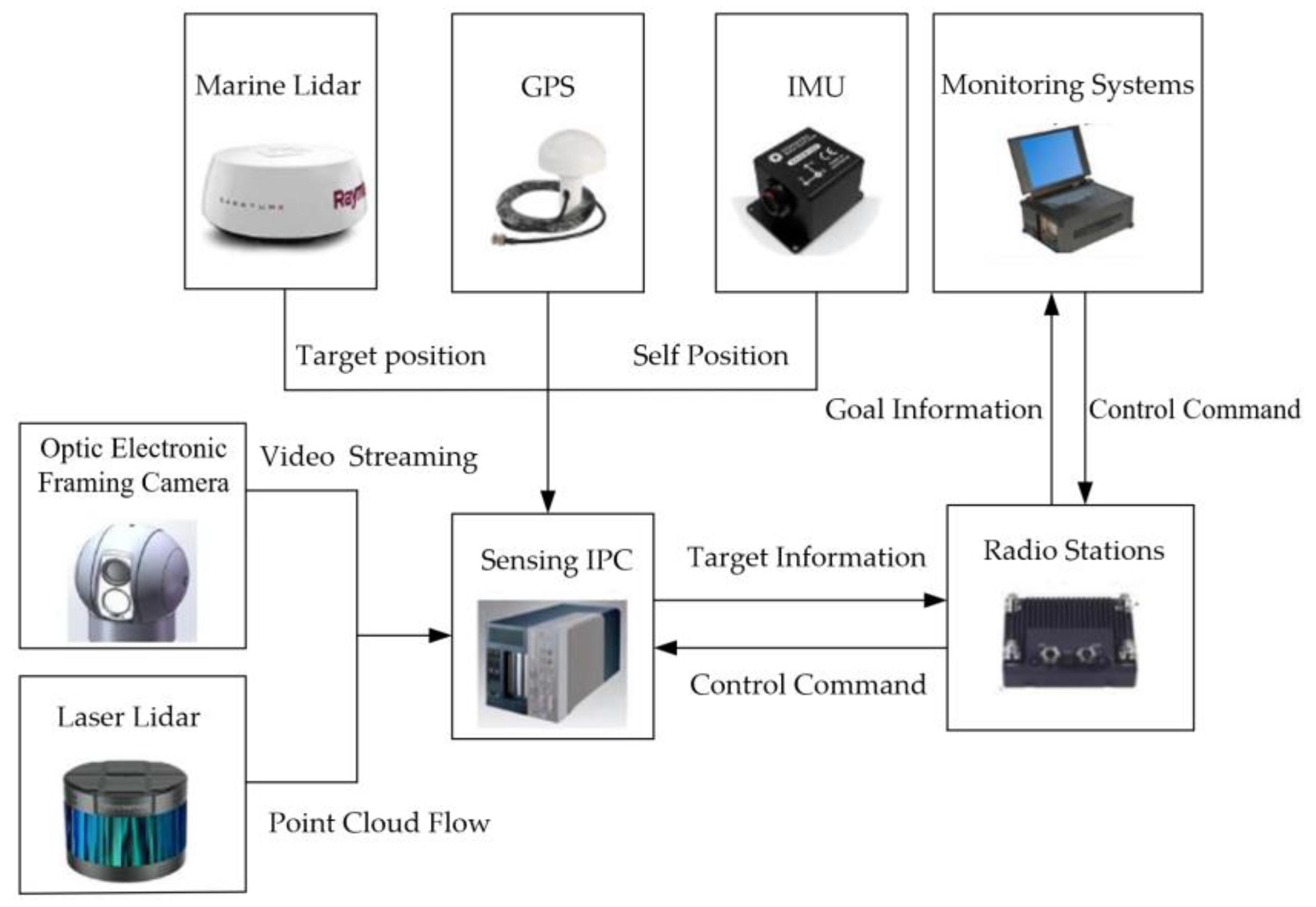

4.2. Introduction to USV and Datasets

4.3. Evaluation Metrics

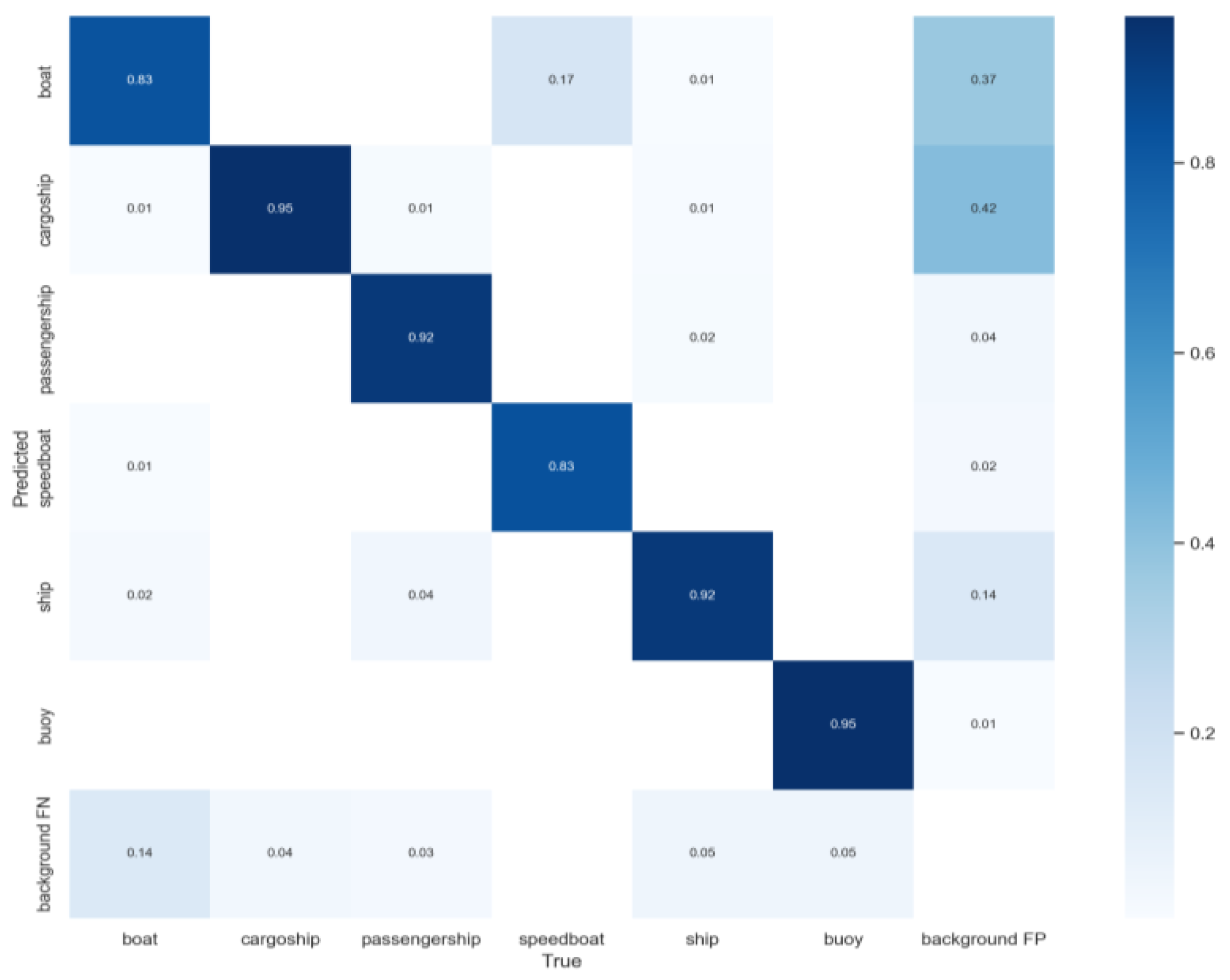

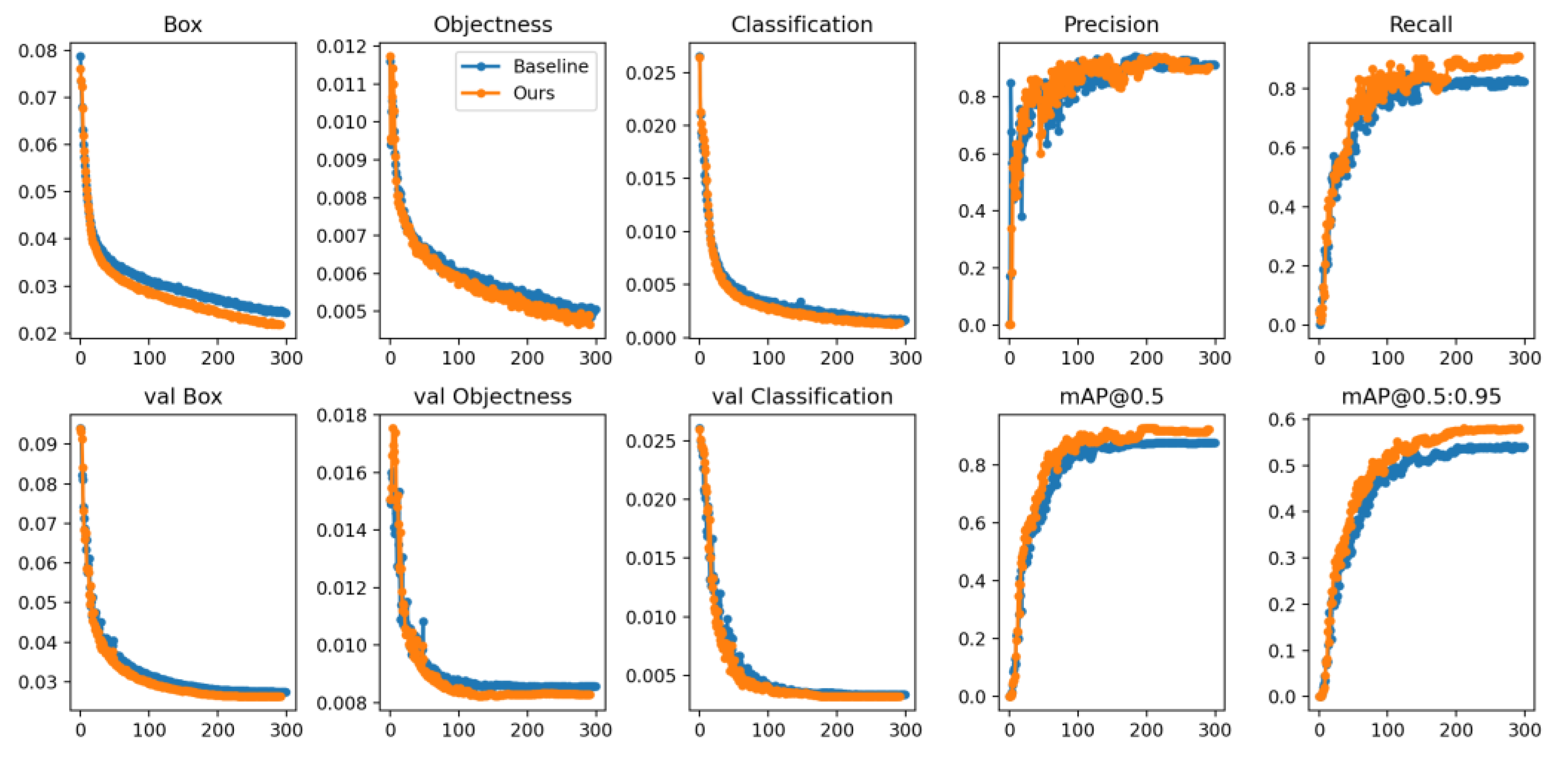
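The result tables report precision, recall, mAP@0.5, and mAP@0.5:0.95. The sketch below shows one common way to compute them, assuming greedy confidence-ordered matching and all-point interpolated AP; precision is TP/(TP + FP), recall is TP/(TP + FN), mAP is the mean of per-class AP, and mAP@0.5:0.95 averages AP over ten IoU thresholds. The function names are illustrative, and some YOLO code bases use 101-point interpolation instead, so small numeric differences from the paper's evaluation are possible.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

# mAP@0.5:0.95 averages AP over these ten IoU thresholds (COCO convention).
IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match_detections(dets: List[Tuple[float, Box]], gts: List[Box], thr: float) -> List[Tuple[float, bool]]:
    """Greedy matching for one class in one image: detections are visited in
    descending confidence and each claims the best still-unmatched ground truth
    with IoU >= thr. Returns (confidence, is_true_positive) pairs."""
    used = [False] * len(gts)
    out = []
    for conf, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best, best_iou = -1, thr
        for k, g in enumerate(gts):
            v = iou(box, g)
            if not used[k] and v >= best_iou:
                best, best_iou = k, v
        if best >= 0:
            used[best] = True
        out.append((conf, best >= 0))
    return out

def average_precision(scored: Sequence[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP: area under the monotone precision envelope.
    `scored` may pool (confidence, is_tp) pairs from many images."""
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    rec, prec = [], []
    for _, hit in sorted(scored, key=lambda s: s[0], reverse=True):
        tp += int(hit)
        fp += int(not hit)
        rec.append(tp / num_gt)
        prec.append(tp / (tp + fp))
    mrec = [0.0] + rec + [1.0]
    mpre = [0.0] + prec + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])   # make precision non-increasing in recall
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
```

For a whole dataset, the (confidence, is_tp) pairs from every image are pooled per class before calling `average_precision`; mAP@0.5 averages the per-class results at threshold 0.5, and mAP@0.5:0.95 repeats this for every entry of `IOU_THRESHOLDS` and averages again.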

4.4. Experimental Results and Analysis

4.5. Comparison with Other Popular Models

4.6. Ablation Experiments

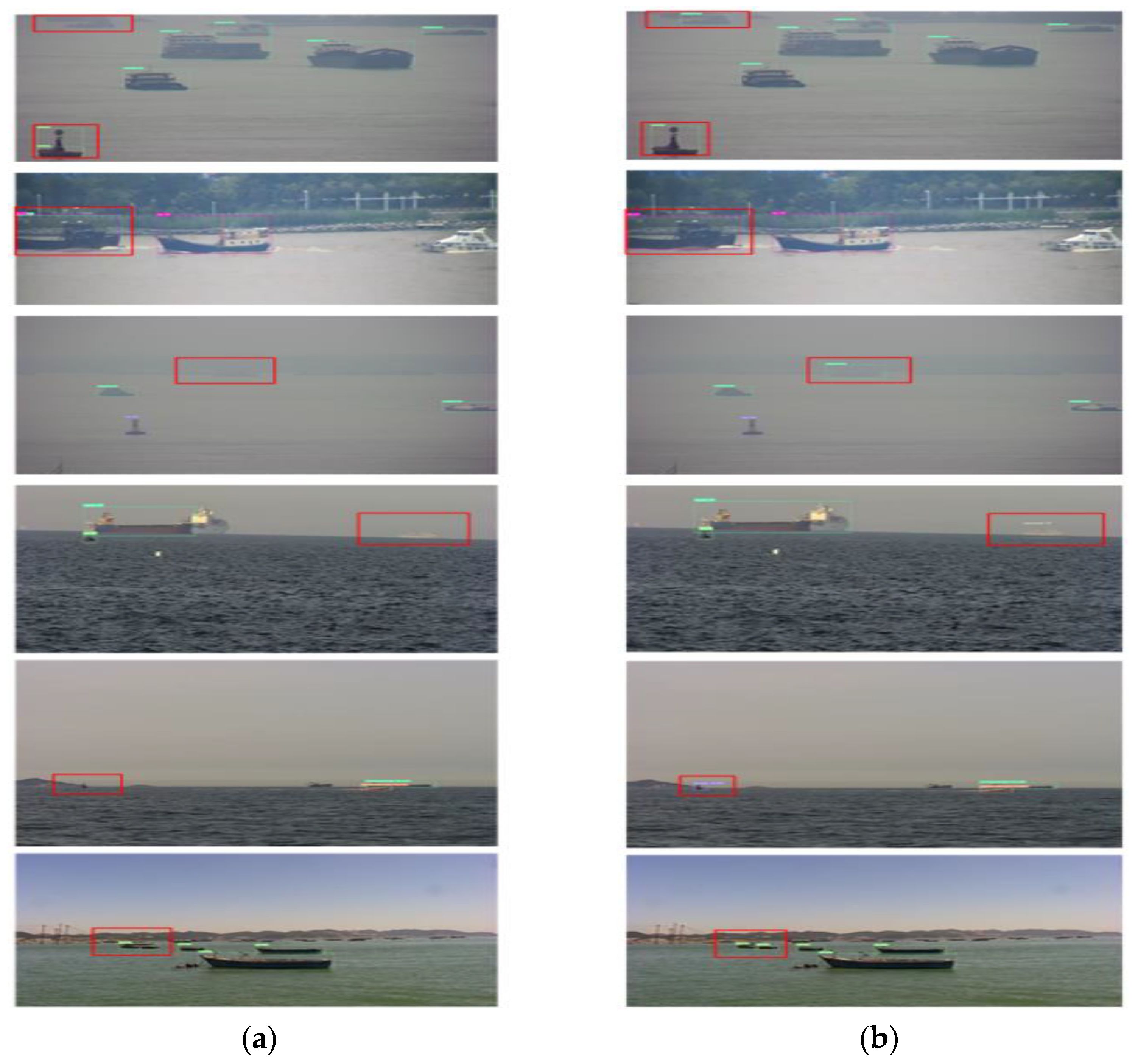

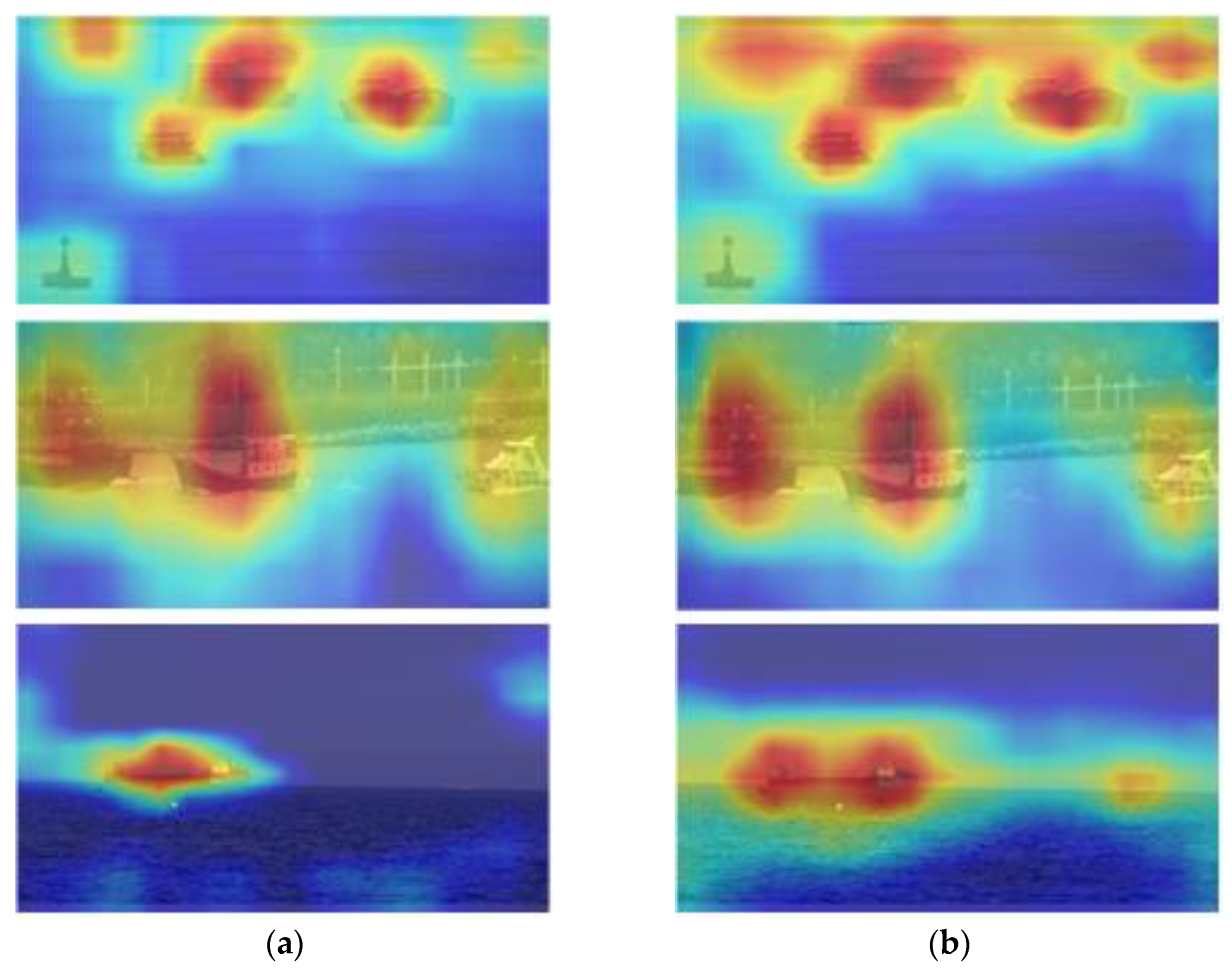

4.7. Comparative Analysis of Visualization Results

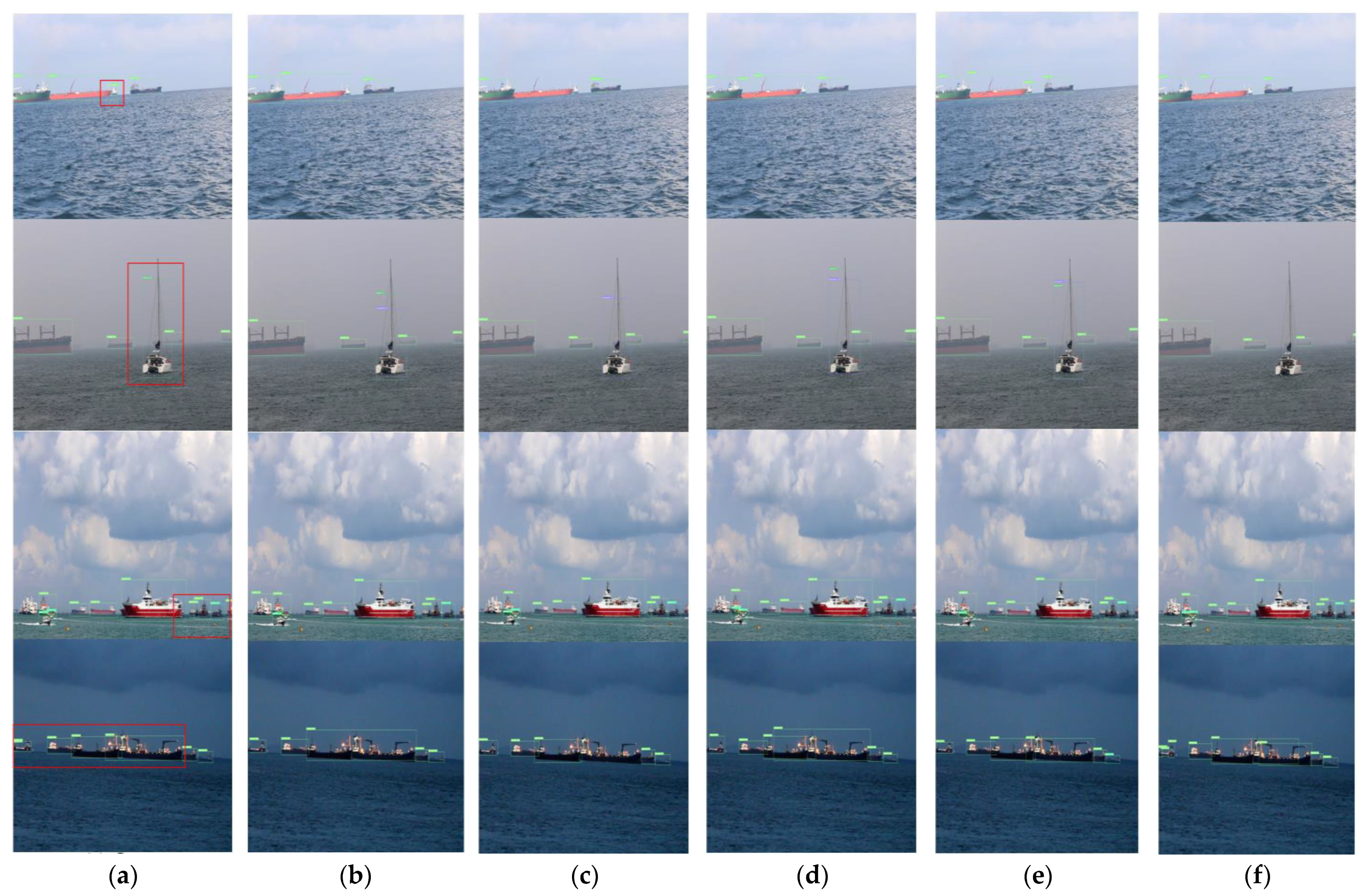

4.8. Experiments in Generalization Ability

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item | Configuration |
|---|---|
| Operating system | Ubuntu 22.04 |
| Programming language | Python 3.9 |
| Deep learning framework | PyTorch 2.0, CUDA 11.8, cuDNN 8.8 |
| CPU | Intel Core i5-9600K, 3.7 GHz, 6 cores |
| GPU | NVIDIA GeForce RTX 2080, 8 GB |

| Parameter | Configuration |
|---|---|
| Learning rate | 0.01 |
| Momentum | 0.937 |
| Weight decay | 0.0005 |
| Batch size | 32 |
| Optimizer | SGD |
| Image size | 640 × 640 |
| Epochs | 300 |
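To make the optimizer settings concrete, the sketch below applies one SGD step with classical momentum and L2 weight decay using the learning rate, momentum, and weight decay from the table, following the update form used by common frameworks (decay added to the gradient, zero dampening). It is an assumption-laden illustration: the table does not say whether Nesterov momentum, a warm-up, or a learning-rate schedule was used, or whether biases and normalization parameters were excluded from decay, so none of these are modeled.

```python
from typing import List, Tuple

def sgd_momentum_step(
    params: List[float],
    grads: List[float],
    bufs: List[float],
    lr: float = 0.01,
    momentum: float = 0.937,
    weight_decay: float = 0.0005,
) -> Tuple[List[float], List[float]]:
    """One SGD step with classical momentum and L2 weight decay (dampening 0)."""
    new_params, new_bufs = [], []
    for p, g, b in zip(params, grads, bufs):
        g = g + weight_decay * p       # weight decay folded into the gradient
        b = momentum * b + g           # momentum buffer accumulates past gradients
        new_params.append(p - lr * b)  # parameter update
        new_bufs.append(b)
    return new_params, new_bufs
```

With momentum 0.937, a constant gradient is amplified toward roughly 1 / (1 − 0.937) ≈ 15.9 times its value in the buffer, which is why the base learning rate can stay as low as 0.01.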

| Class | mAP@0.5 (%), Ours/Baseline | mAP@0.5:0.95 (%), Ours/Baseline |
|---|---|---|
| all | 92.85/86.67 | 58.7/54.3 |
| boat | 81.8/77.7 | 42.6/38.2 |
| cargo ship | 98.4/94.1 | 72.1/68.6 |
| passenger ship | 94.9/91.1 | 70.8/67.9 |
| speed boat | 95.2/83.1 | 48.5/41.2 |
| ship | 96.3/92.1 | 63.3/60.8 |
| buoy | 90.5/81.9 | 54.9/49.1 |
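Since mAP is the unweighted mean of the per-class AP values, the "all" row of the per-class table can be checked directly from the six class rows. The short script below reproduces it and also finds the class with the largest mAP@0.5 gain over the baseline; the values are copied from the table, and the variable names are illustrative.

```python
from statistics import mean

# (mAP@0.5 ours, mAP@0.5 baseline, mAP@0.5:0.95 ours, mAP@0.5:0.95 baseline), in %
per_class = {
    "boat": (81.8, 77.7, 42.6, 38.2),
    "cargo ship": (98.4, 94.1, 72.1, 68.6),
    "passenger ship": (94.9, 91.1, 70.8, 67.9),
    "speed boat": (95.2, 83.1, 48.5, 41.2),
    "ship": (96.3, 92.1, 63.3, 60.8),
    "buoy": (90.5, 81.9, 54.9, 49.1),
}

# mAP over classes = unweighted mean of each column.
columns = list(zip(*per_class.values()))
map_summary = [mean(col) for col in columns]

# Class with the largest mAP@0.5 improvement (ours minus baseline).
best_gain_class = max(per_class, key=lambda c: per_class[c][0] - per_class[c][1])

print([round(v, 2) for v in map_summary])
print(best_gain_class)
```

The column means match the "all" row (92.85/86.67 and 58.7/54.3), and the largest gain is for speed boats (95.2 vs. 83.1, +12.1 points), followed by buoys (+8.6 points).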

| Model | Params (M) | GFLOPs | Precision (%) | Recall (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | FPS |
|---|---|---|---|---|---|---|---|
| SSD | 41.1 | 387.0 | 80.46 | 71.37 | 77.46 | 47.93 | 24.84 |
| CenterNet | 32.67 | 70.22 | 87.26 | 76 | 85.8 | 51.1 | 32.12 |
| Faster R-CNN | 137.1 | 370.21 | 84.19 | 79.66 | 81.83 | 50.68 | 10.23 |
| RetinaNet | 38.0 | 170.1 | 88.6 | 81.32 | 87.25 | 53.3 | 19.51 |
| YOLOv4-Tiny | 6.05 | 13.7 | 77.23 | 69.26 | 70.35 | 39.66 | 75.64 |
| YOLOv5s | 7.06 | 15.9 | 88.12 | 83.31 | 87.66 | 53.92 | 55.52 |
| YOLOX-Tiny | 5.10 | 6.51 | 83.23 | 81.62 | 81.56 | 50.23 | 75.97 |
| YOLOv8-Tiny | 3.01 | 8.1 | 85.3 | 81.1 | 85.46 | 52.69 | 72.64 |
| YOLOv7-Tiny-ShuffleNetv2 | 5.66 | 9.2 | 85.8 | 78.24 | 84.71 | 50.24 | 63.44 |
| YOLOv7-Tiny-PicoDet | 4.76 | 29.4 | 79.33 | 84.41 | 86.41 | 52.88 | 60.11 |
| YOLOv7-Tiny-GhostNetv2 | 5.84 | 5.31 | 89.51 | 75.4 | 84.92 | 48.01 | 66.82 |
| YOLOv7-Tiny-MobileNetv3 | 4.86 | 7.52 | 80.2 | 79.4 | 84.2 | 51.1 | 65.69 |
| YOLOv7-Tiny-FasterNet | 3.61 | 7.3 | 85.9 | 82.8 | 87.34 | 52.33 | 70.24 |
| YOLOv7-Tiny | 6.02 | 13.2 | 90.42 | 82.8 | 86.73 | 54.47 | 68.79 |
| Ours | 4.69 | 11.2 | 91.57 | 90.23 | 92.85 | 58.7 | 56.87 |
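The claim of fewer parameters and lower computing cost can be quantified from the YOLOv7-Tiny and "Ours" rows of the comparison table. The script below computes the relative changes and converts FPS into per-frame latency; the FPS values are taken as reported (presumably measured on the hardware listed in the environment table, which the available text does not state explicitly).

```python
# (params M, GFLOPs, mAP@0.5 %, mAP@0.5:0.95 %, FPS), copied from the comparison table
rows = {
    "YOLOv7-Tiny": (6.02, 13.2, 86.73, 54.47, 68.79),
    "Ours": (4.69, 11.2, 92.85, 58.7, 56.87),
}

def pct_change(new: float, old: float) -> float:
    # Relative change in percent; negative means a reduction.
    return 100.0 * (new - old) / old

def summarize(base: str, ours: str) -> dict:
    b, o = rows[base], rows[ours]
    return {
        "params_change_pct": pct_change(o[0], b[0]),
        "gflops_change_pct": pct_change(o[1], b[1]),
        "map50_gain_pts": o[2] - b[2],
        "map50_95_gain_pts": o[3] - b[3],
        "fps_change_pct": pct_change(o[4], b[4]),
        "ms_per_frame_base": 1000.0 / b[4],
        "ms_per_frame_ours": 1000.0 / o[4],
    }

for key, value in summarize("YOLOv7-Tiny", "Ours").items():
    print(f"{key}: {value:.2f}")
```

Relative to the baseline, the proposed model has about 22.1% fewer parameters and 15.2% fewer GFLOPs, and gains 6.12 points mAP@0.5 and 4.23 points mAP@0.5:0.95, at the cost of about 17.3% lower FPS (roughly 17.6 ms versus 14.5 ms per frame).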

| Model | Params (M) | GFLOPs | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|
| baseline | 6.02 | 13.2 | 86.73 | 54.47 |
| baseline + Mish | 6.02 | 13.2 | 87.33 | 54.93 |
| baseline + Mish + SiLU | 6.02 | 13.2 | 88.56 | 55.82 |
| baseline + Mish + SiLU + R2B | 4.8 | 11.2 | 90.29 | 57.43 |
| baseline + Mish + SiLU + CoordConv | 4.8 | 11.2 | 91.09 | 57.91 |
| baseline + R2B + SiLU + Mish + CoordConv + DyHead | 4.69 | 11.2 | 92.83 | 58.71 |
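The ablation rows can be read as gains over the baseline. Each row is compared with the first row rather than with its predecessor, because the CoordConv row's label does not list R2B even though its parameter count matches the R2B row, so the table alone does not establish that the steps are cumulative. Note also that the last row (92.83/58.71) differs by a few hundredths from the "Ours" row of the comparison table (92.85/58.7); the available text does not explain the difference. Row labels and values below are copied from the table.

```python
# (row label, mAP@0.5 %, mAP@0.5:0.95 %), copied from the ablation table
ablation = [
    ("baseline", 86.73, 54.47),
    ("baseline + Mish", 87.33, 54.93),
    ("baseline + Mish + SiLU", 88.56, 55.82),
    ("baseline + Mish + SiLU + R2B", 90.29, 57.43),
    ("baseline + Mish + SiLU + CoordConv", 91.09, 57.91),
    ("baseline + R2B + SiLU + Mish + CoordConv + DyHead", 92.83, 58.71),
]

def gains_vs_baseline(rows):
    """Percentage-point gain of every row over the first (baseline) row."""
    _, base50, base5095 = rows[0]
    return [(name, round(m50 - base50, 2), round(m5095 - base5095, 2))
            for name, m50, m5095 in rows[1:]]

for name, d50, d5095 in gains_vs_baseline(ablation):
    print(f"{name}: +{d50:.2f} mAP@0.5, +{d5095:.2f} mAP@0.5:0.95")
```

The gains in mAP@0.5 rise from +0.60 (Mish only) to +6.10 points for the full model, and the largest single jump in parameter count comes with R2B (6.02 M to 4.8 M).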

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, L.; Du, X.; Zhang, R.; Zhang, J. A Lightweight Detection Algorithm for Unmanned Surface Vehicles Based on Multi-Scale Feature Fusion. J. Mar. Sci. Eng. 2023, 11, 1392. https://doi.org/10.3390/jmse11071392

Zhang L, Du X, Zhang R, Zhang J. A Lightweight Detection Algorithm for Unmanned Surface Vehicles Based on Multi-Scale Feature Fusion. Journal of Marine Science and Engineering. 2023; 11(7):1392. https://doi.org/10.3390/jmse11071392

Chicago/Turabian Style: Zhang, Lei, Xiang Du, Renran Zhang, and Jian Zhang. 2023. "A Lightweight Detection Algorithm for Unmanned Surface Vehicles Based on Multi-Scale Feature Fusion" Journal of Marine Science and Engineering 11, no. 7: 1392. https://doi.org/10.3390/jmse11071392

APA Style: Zhang, L., Du, X., Zhang, R., & Zhang, J. (2023). A Lightweight Detection Algorithm for Unmanned Surface Vehicles Based on Multi-Scale Feature Fusion. Journal of Marine Science and Engineering, 11(7), 1392. https://doi.org/10.3390/jmse11071392