A Hybrid Excitation Model Based Lightweight Siamese Network for Underwater Vehicle Object Tracking Missions

Abstract

1. Introduction

- (1) To counter the growing complexity of deep learning models as their performance improves, a lightweight network is introduced. Lightweight neural networks extract the same number of features as regular convolutions with fewer parameters, which reduces the parameter size and computational complexity of the network. This approach maintains accuracy while improving time efficiency and lowering the computational cost of the model.

- (2)

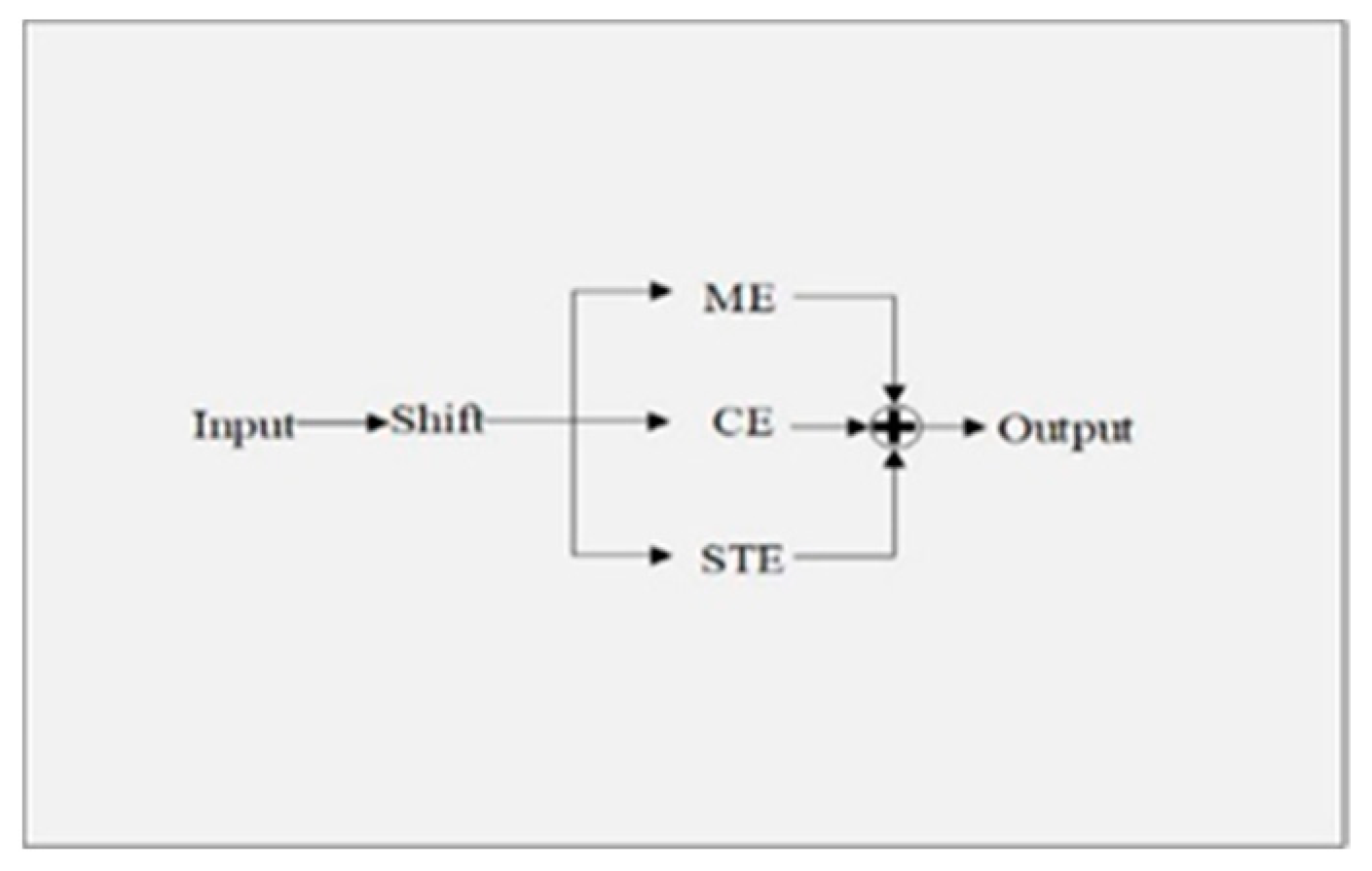

- To enhance the learning and understanding capabilities of the algorithm for the target, we have introduced a mixed excitation model. The mixed excitation model consists of three components: the spatial excitation method for extracting the temporal and spatial relationships of the target, the channel excitation method for extracting weights between different channels, and the motion excitation method for extracting the trajectory relationship of the target between adjacent frames. These pieces of information are combined and applied to the feature extraction network. Multidimensional and comprehensive extraction of target features improves the performance of the algorithm.

- (3) To address the frequent occlusion of targets in underwater environments, an adaptive training strategy is designed: while training accuracy is preserved, complex positive samples are added to make the training more targeted. Because of water resistance, underwater targets move much more slowly than aerial targets. Based on this difference, a new tracking strategy is proposed that narrows the tracking range and improves the robustness of the algorithm. This tracking strategy, built on underwater environmental characteristics, improves the algorithm’s success rate underwater and maintains good tracking performance even with limited visibility and turbid water.
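The narrowed tracking range in contribution (3) can be illustrated with a small sketch. It assumes the previous target box is given as center and size in pixels, and that the target center moves at most a hypothetical `max_speed_px` pixels per frame. The `Box` type, the function name, and the margin rule are illustrative assumptions, not the authors' implementation.

```python
from dataclasses import dataclass


@dataclass
class Box:
    cx: float  # center x in pixels
    cy: float  # center y in pixels
    w: float   # width in pixels
    h: float   # height in pixels


def search_region(prev: Box, max_speed_px: float, img_w: int, img_h: int):
    """Return (x0, y0, x1, y1) of a search window around the previous box.

    Assumption: the target center moves at most `max_speed_px` pixels per
    frame, so the window only needs the previous box plus that margin.
    """
    half_w = prev.w / 2 + max_speed_px
    half_h = prev.h / 2 + max_speed_px
    # Clip the window to the image borders.
    x0 = max(0.0, prev.cx - half_w)
    y0 = max(0.0, prev.cy - half_h)
    x1 = min(float(img_w), prev.cx + half_w)
    y1 = min(float(img_h), prev.cy + half_h)
    return x0, y0, x1, y1
```

A slower assumed target speed gives a smaller window, which leaves fewer background distractors for the tracker to reject.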

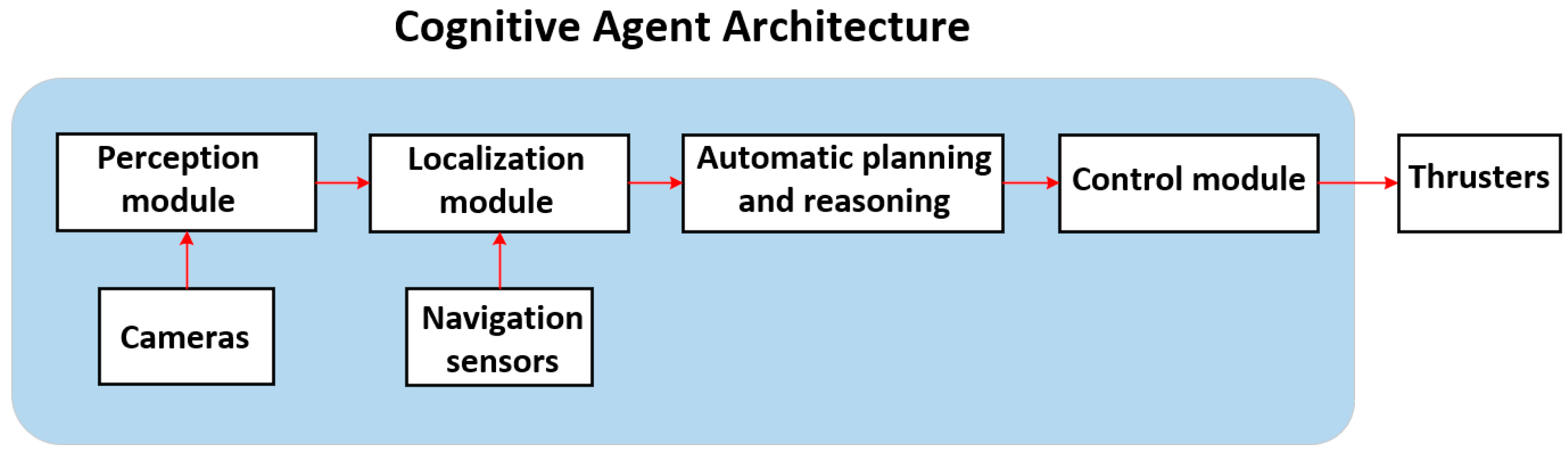

2. Autonomous Control and Target Tracking Architecture for Underwater Vehicles

3. Backbone Network Structure

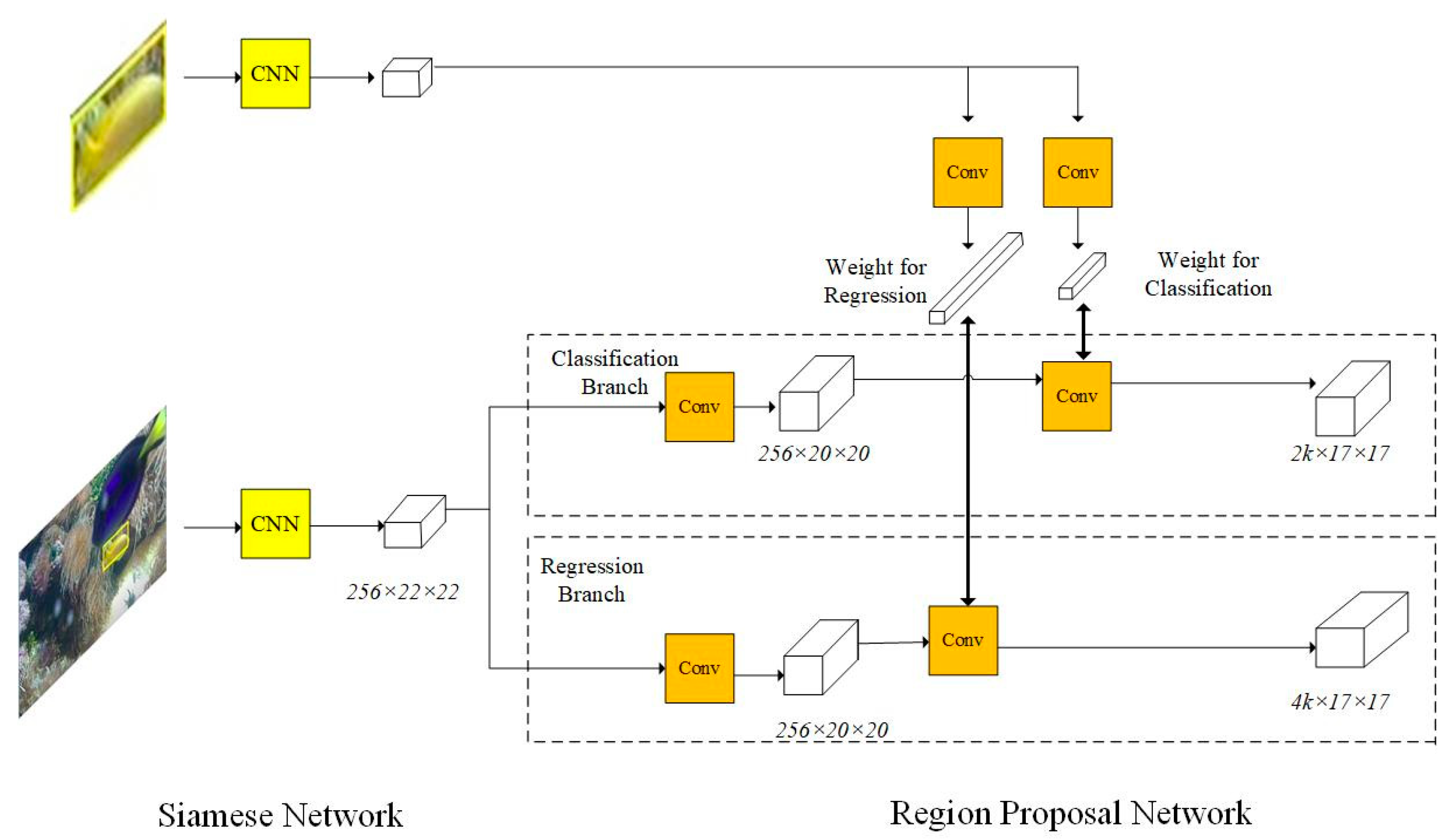

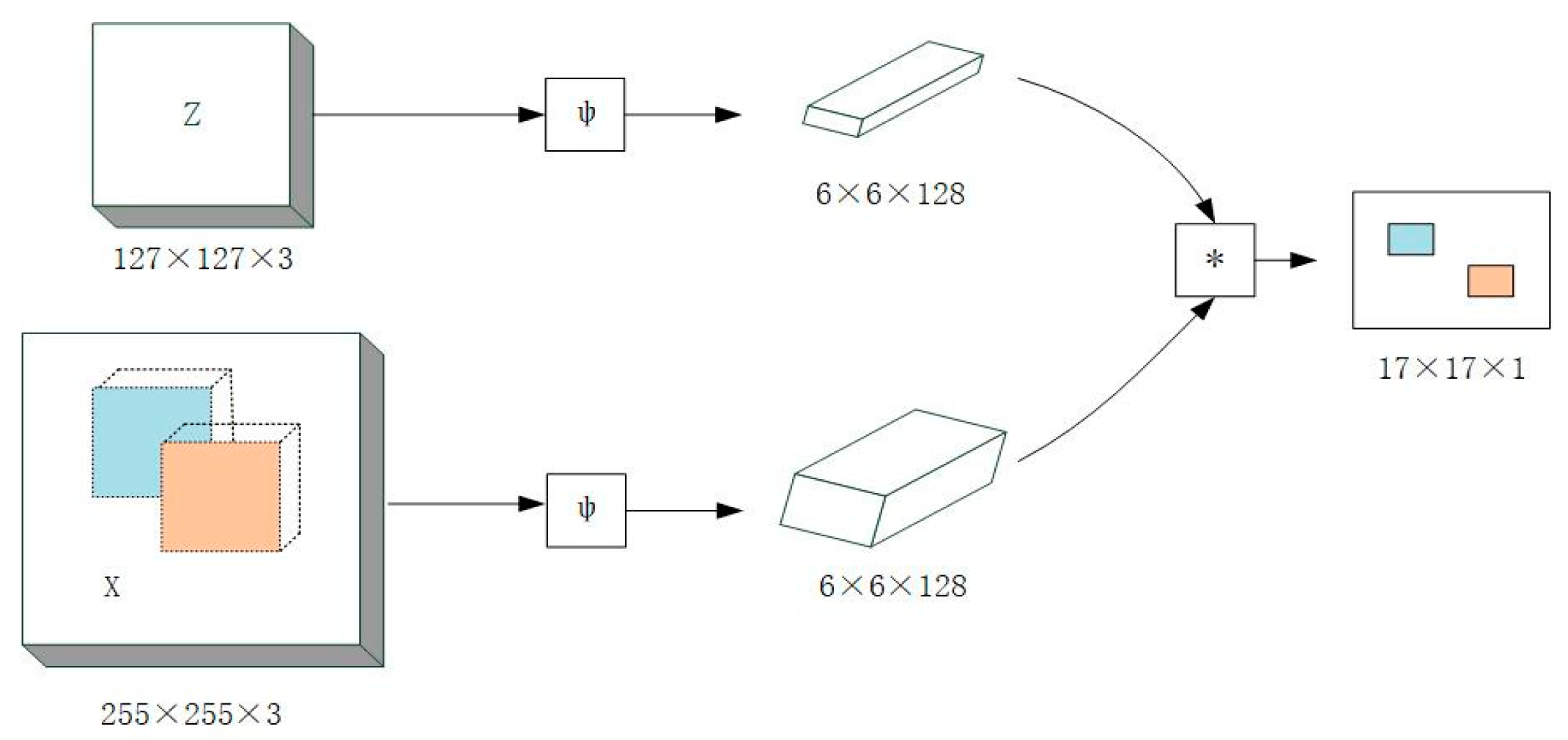

3.1. SiamRPN

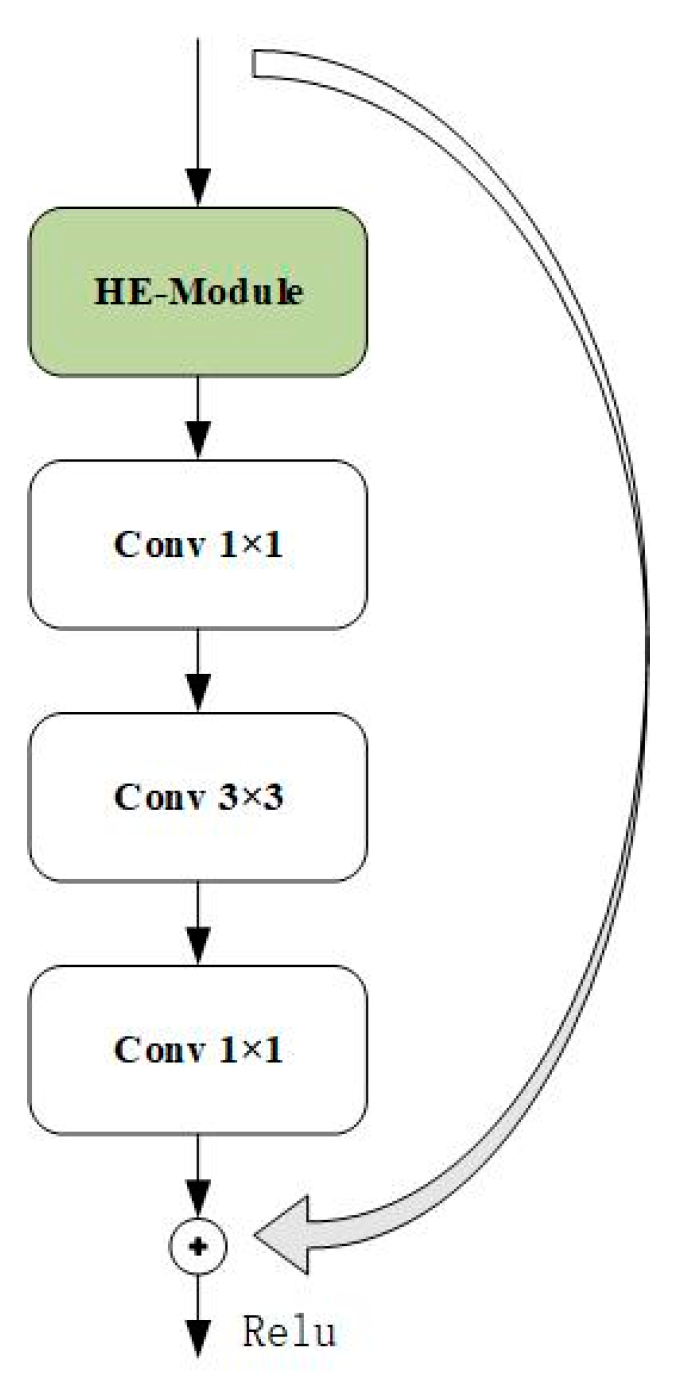

3.2. Residual Network Structure

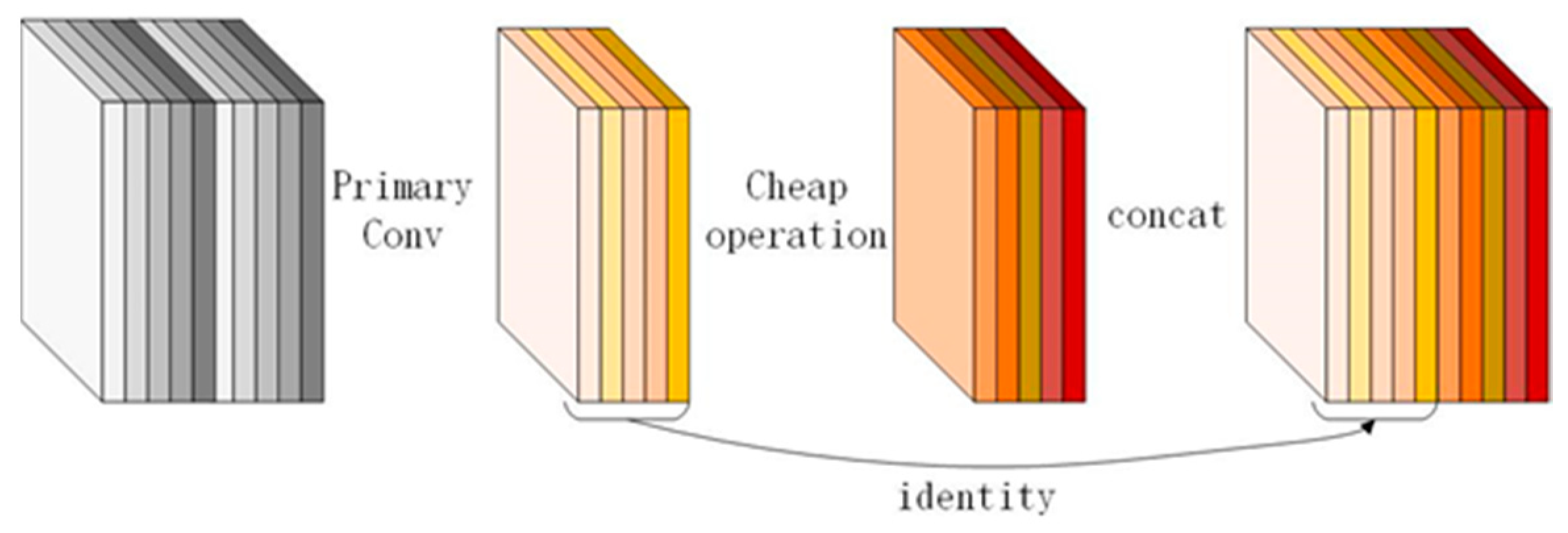

3.3. Lightweight Neural Network Model
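The introduction states that lightweight networks produce the same number of features as regular convolutions with fewer parameters. The arithmetic below shows how such a saving can arise. It uses depthwise separable convolution purely as a well-known stand-in; the specific lightweight structure used in this paper may differ, and the layer sizes are arbitrary example values.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias omitted)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    return c_in * k * k + c_in * c_out


# Example layer: 256 input channels, 256 output channels, 3 x 3 kernel.
standard = conv_params(256, 256, 3)
light = depthwise_separable_params(256, 256, 3)
ratio = light / standard  # fraction of the standard layer's weights

print(standard, light, round(ratio, 3))
```

Both layers output 256 feature channels, but in this example the separable version needs about 11.5% of the weights of the standard one.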

3.4. Mixed Excitation Model

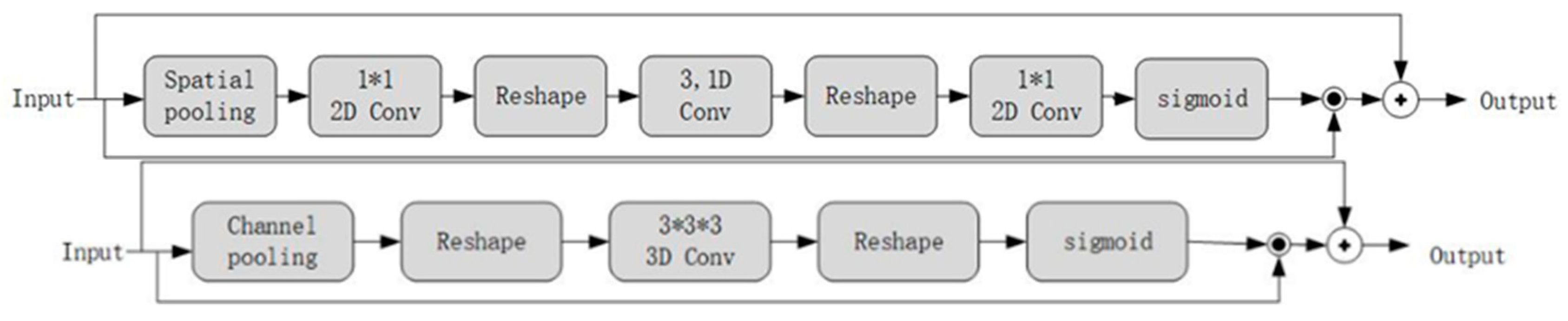

3.4.1. Spatial Excitation Method

3.4.2. Channel Excitation Method
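The introduction describes the channel excitation method as extracting weights between different channels. A minimal pure-Python sketch of that idea follows, written in the style of a squeeze-and-excitation gate. The weight matrices `w1` and `w2`, the bottleneck layout, and the function name are assumptions for illustration and are not taken from the paper.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def channel_excitation(
    x: FeatureMap, w1: List[List[float]], w2: List[List[float]]
) -> FeatureMap:
    """Reweight channels with a squeeze-and-excitation style gate.

    w1 has shape [hidden][channels], w2 has shape [channels][hidden].
    Both are illustrative placeholders for learned weights.
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    # Excite: bottleneck layer with ReLU, then a sigmoid gate per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every value in a channel by that channel's gate.
    return [[[v * g for v in r] for r in ch] for ch, g in zip(x, gates)]
```

With all-zero weights every gate equals 0.5, so each channel is simply halved; learned weights would instead emphasize the channels most useful for the target.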

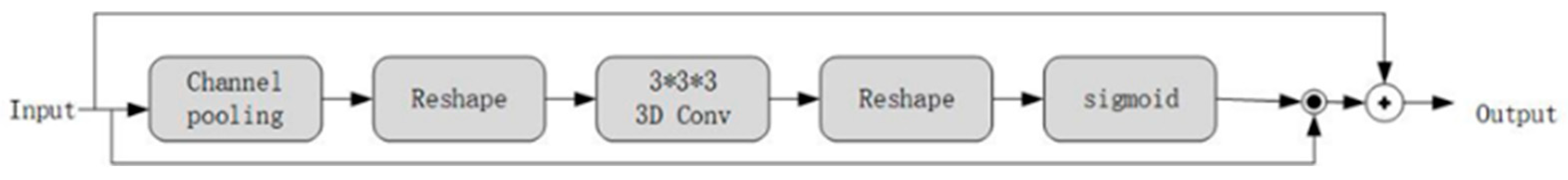

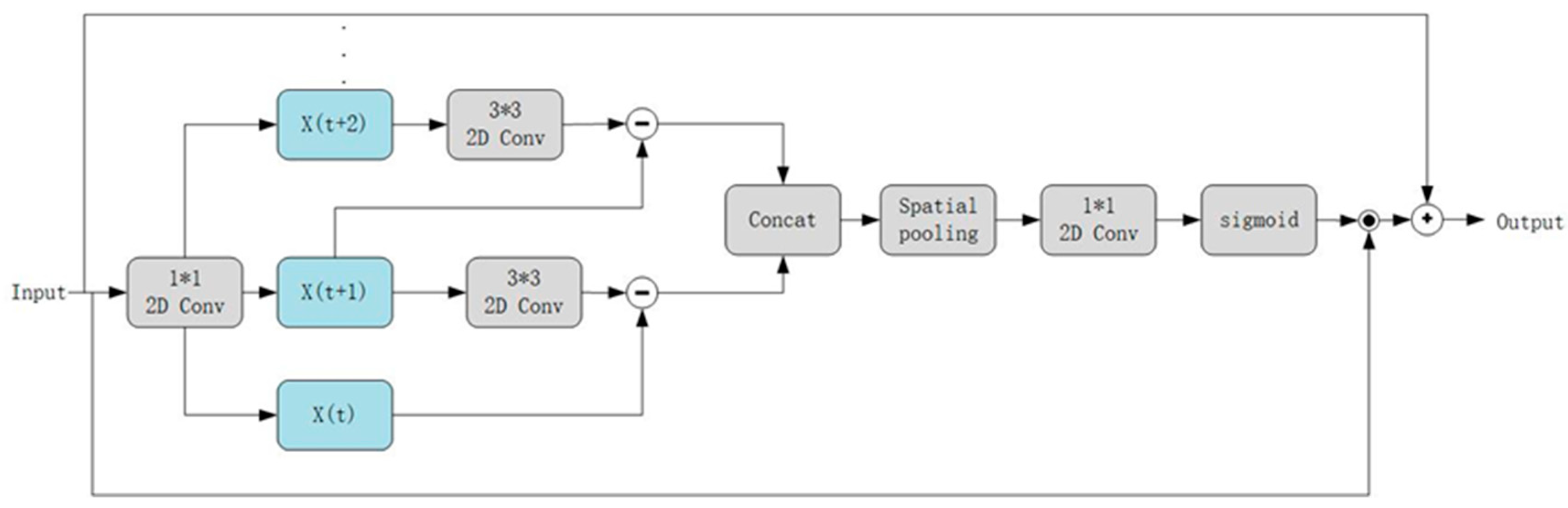

3.4.3. Motion Excitation Method
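The introduction describes the motion excitation method as extracting the trajectory relationship of the target between adjacent frames. The sketch below is a heavily simplified illustration of gating by frame difference. It assumes feature maps from two adjacent frames, uses the absolute per-channel mean difference as the motion cue, and omits any learned convolution. All names and these design choices are assumptions, not the authors' formulation.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def channel_mean(ch: List[List[float]]) -> float:
    """Spatial average of one channel."""
    return sum(map(sum, ch)) / (len(ch) * len(ch[0]))


def motion_excitation(prev: FeatureMap, curr: FeatureMap) -> FeatureMap:
    """Emphasize channels of `curr` whose response changed since `prev`."""
    out = []
    for p_ch, c_ch in zip(prev, curr):
        # Motion cue: average feature difference between adjacent frames.
        diff = channel_mean(c_ch) - channel_mean(p_ch)
        # Assumed gate: sigmoid of the absolute change, so it lies in [0.5, 1).
        gate = 1.0 / (1.0 + math.exp(-abs(diff)))
        # Residual form keeps the original features and adds the gated part.
        out.append([[v + v * gate for v in row] for row in c_ch])
    return out
```

Channels that change more between frames receive a larger gate, while the residual term keeps static appearance information from being suppressed.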

3.4.4. HE-Module Network Model

4. SL-HENet Underwater Vehicle Object Tracking Algorithm

4.1. Siamese Network Tracking Framework

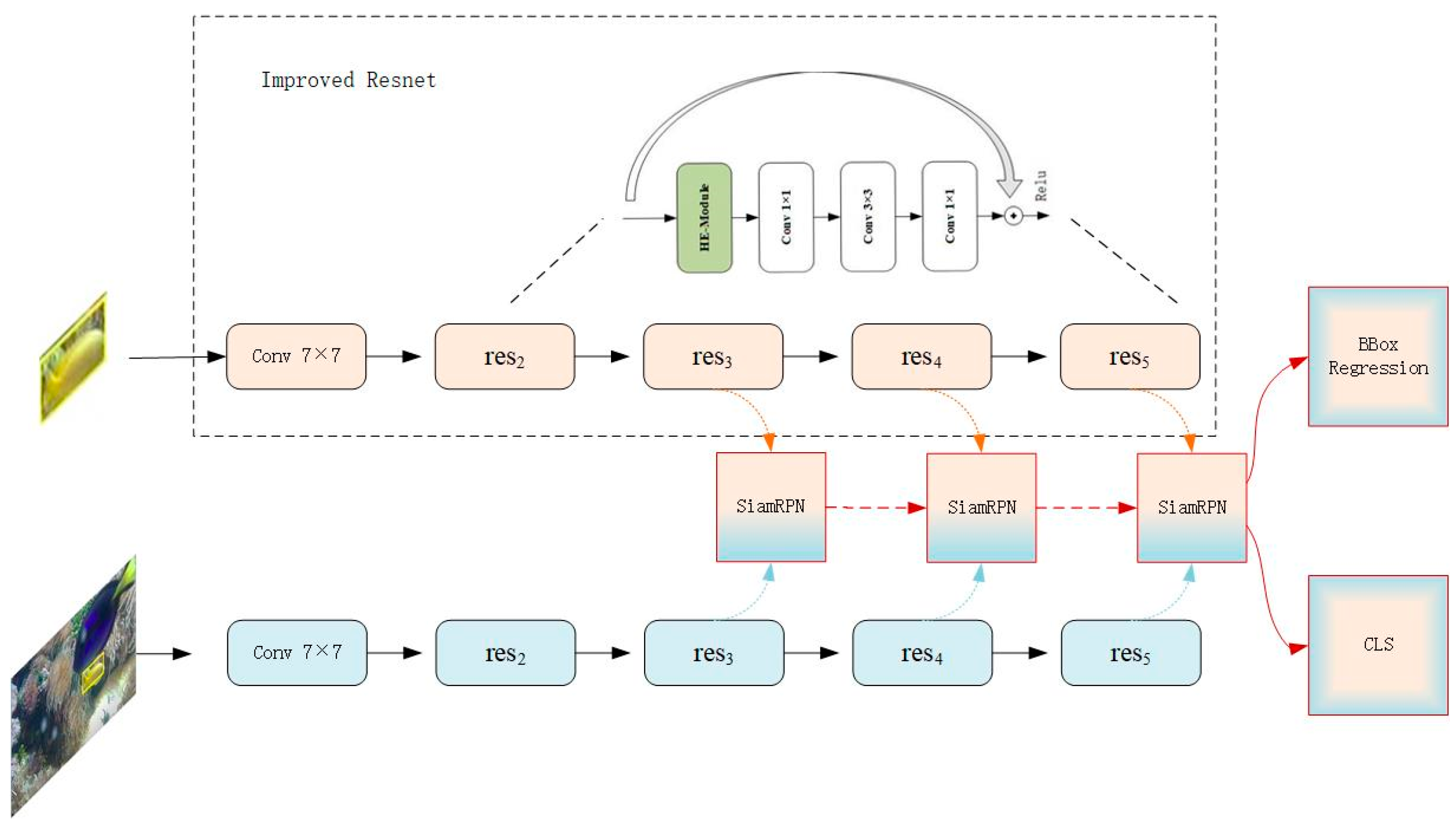

4.2. SL-HENet Framework

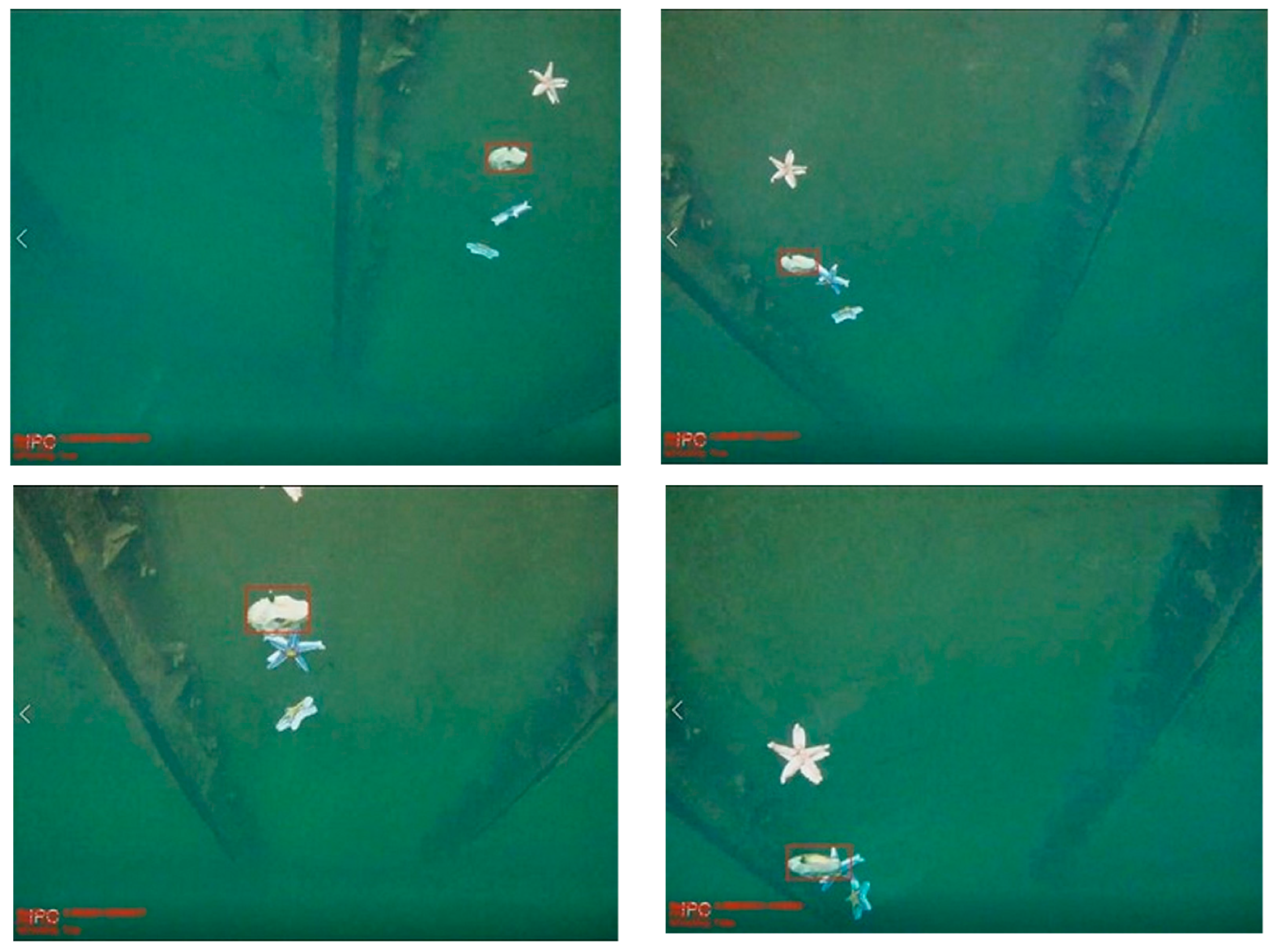

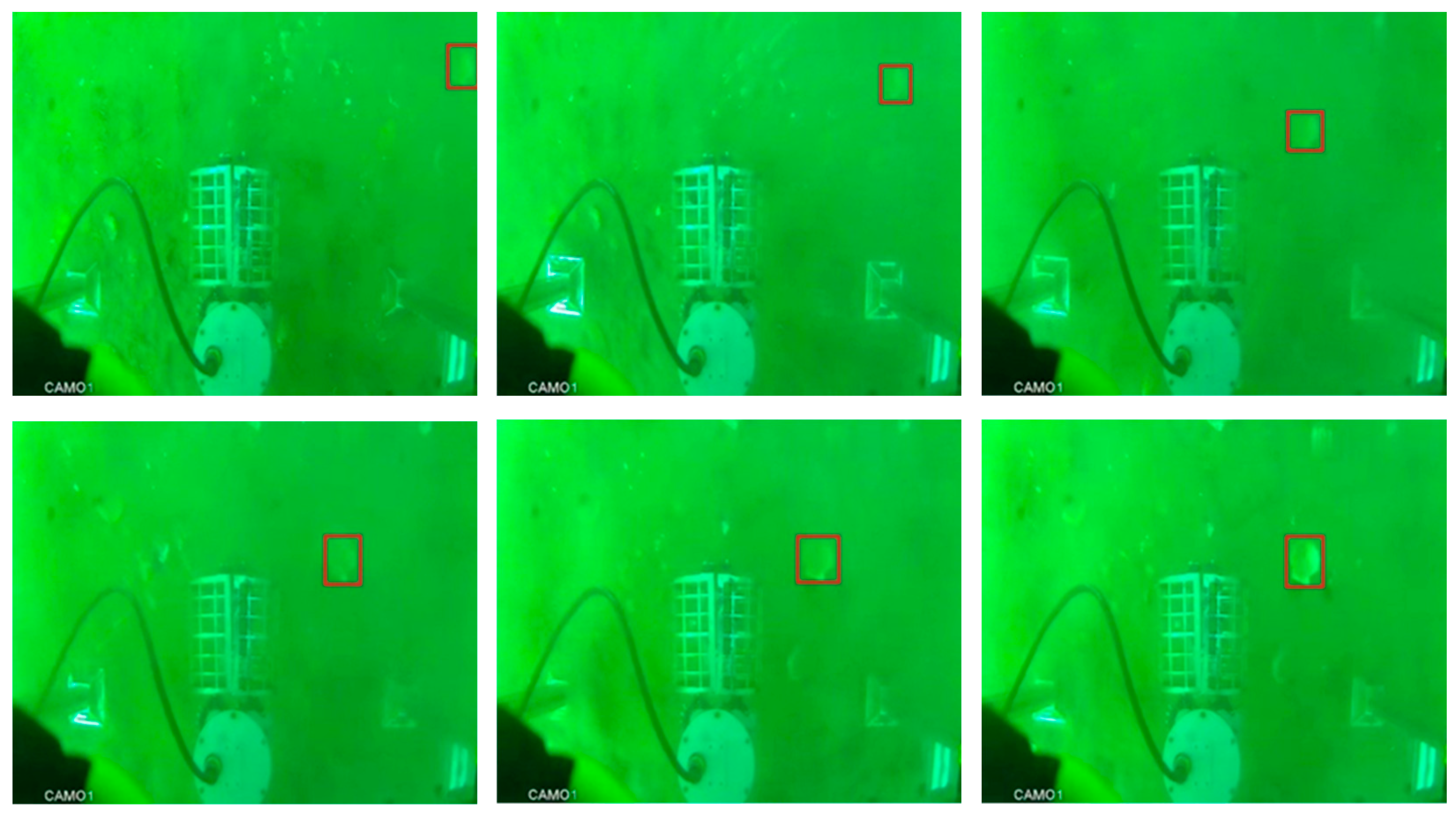

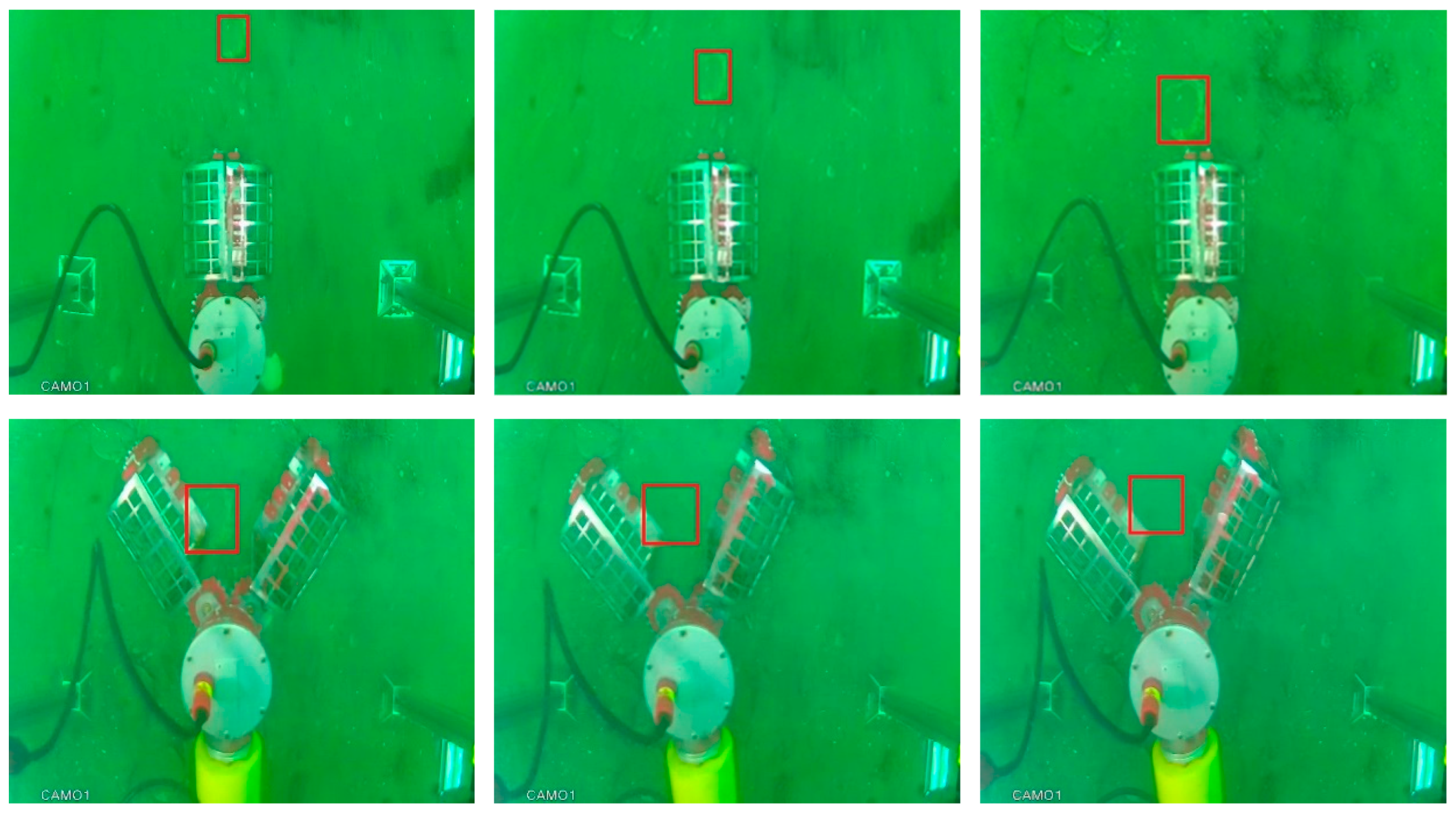

4.3. Tracking Strategy Based on the Influence of Sea Currents

4.4. The Strategy of Adaptive Training

5. Experiments

5.1. Details of Algorithm Implementation

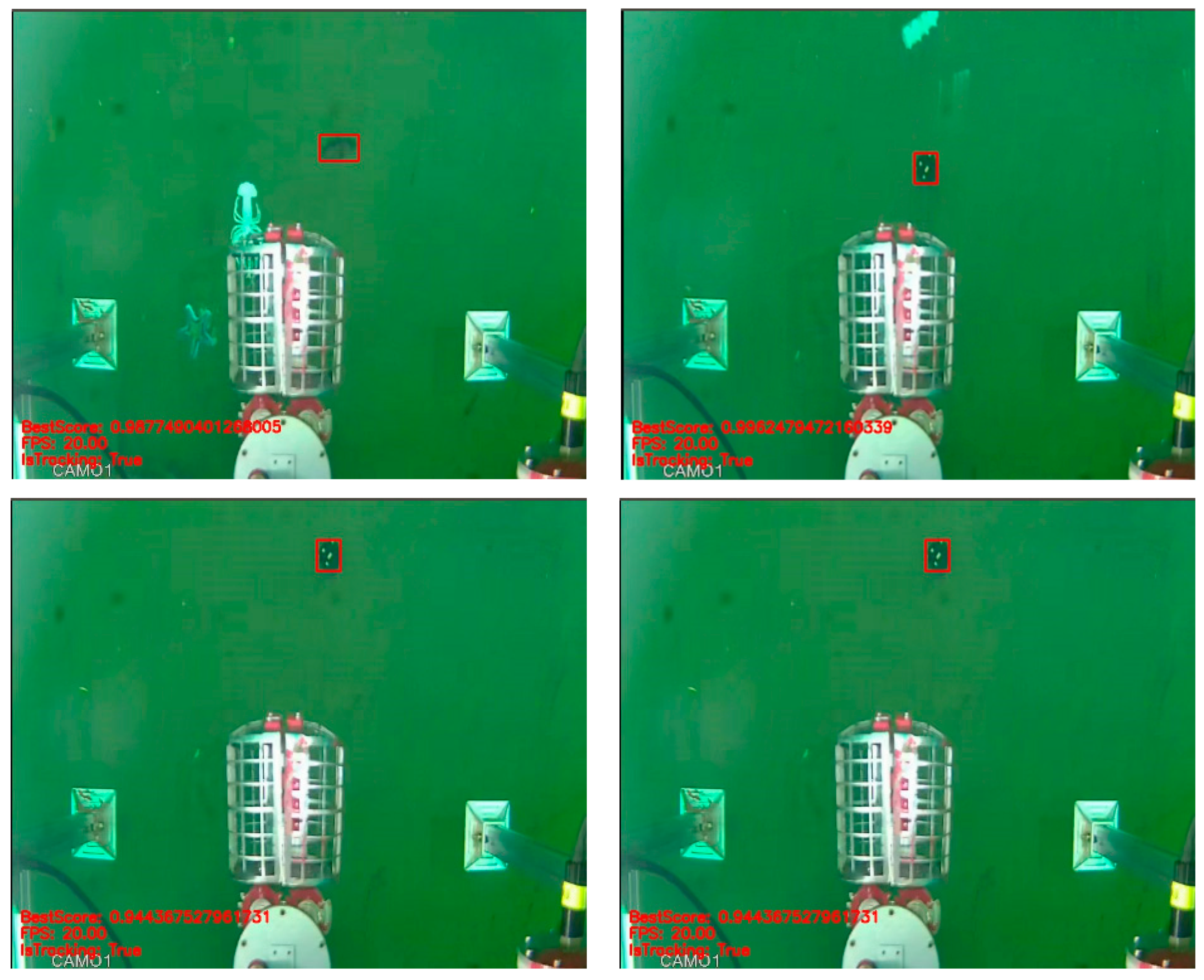

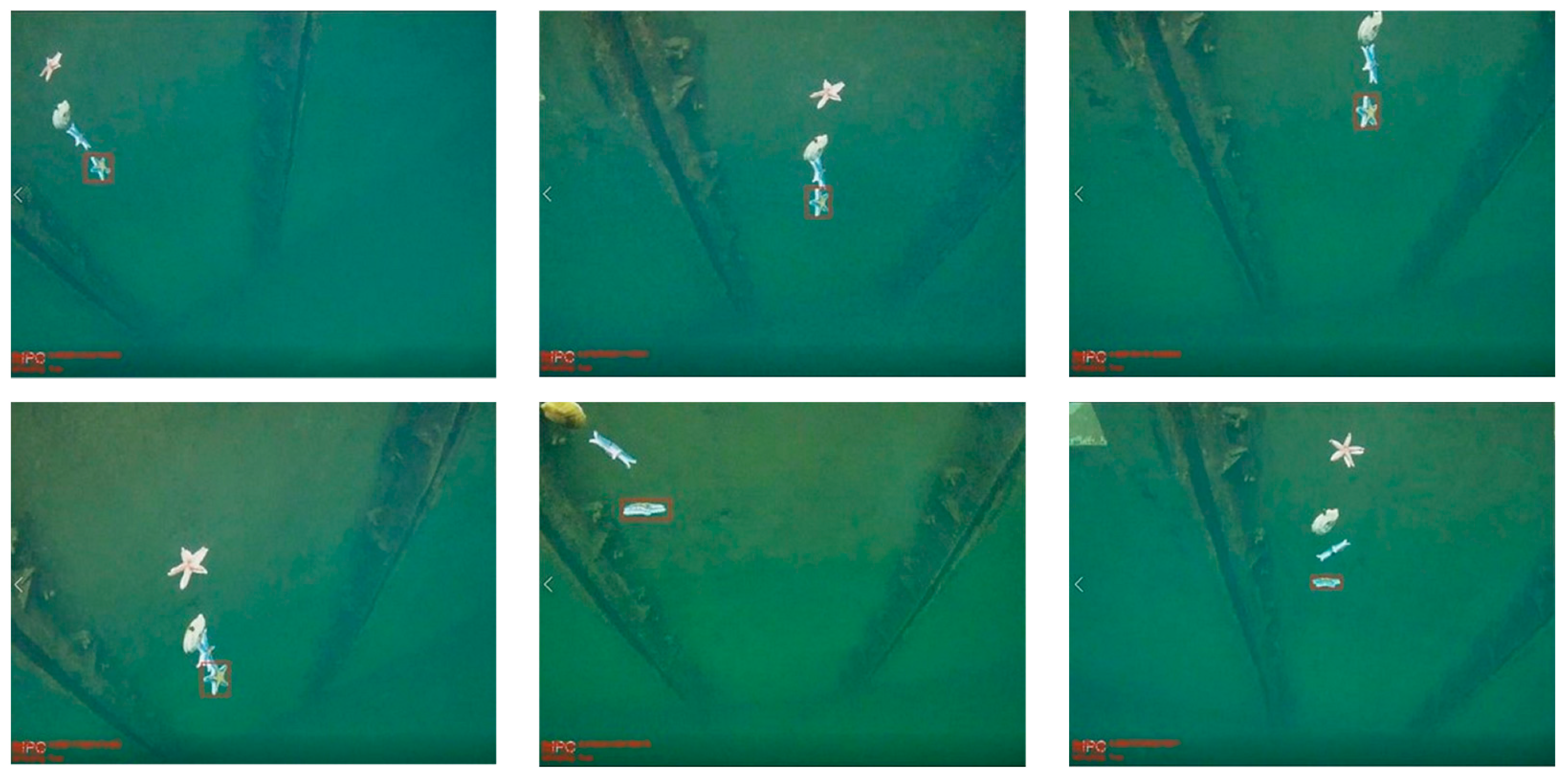

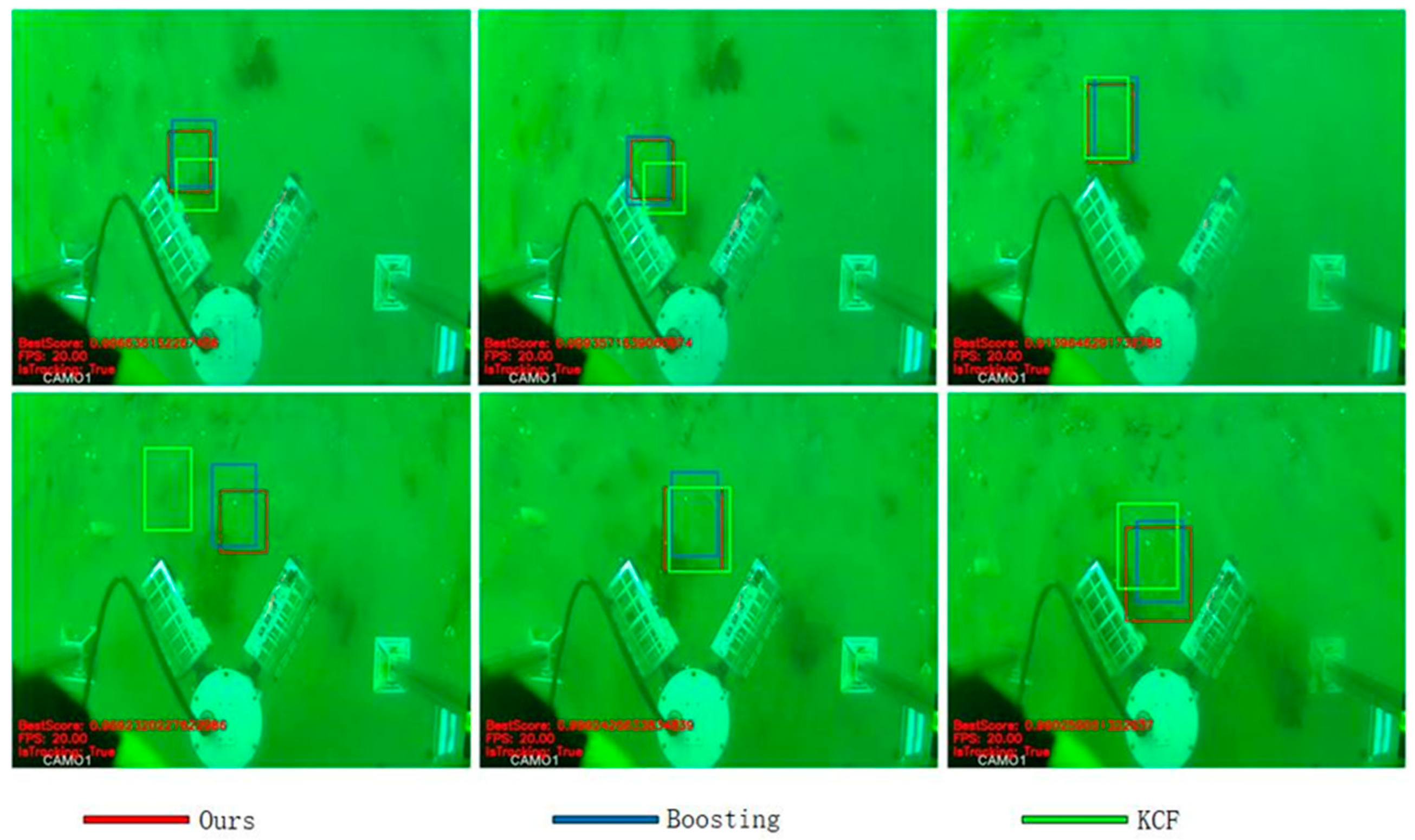

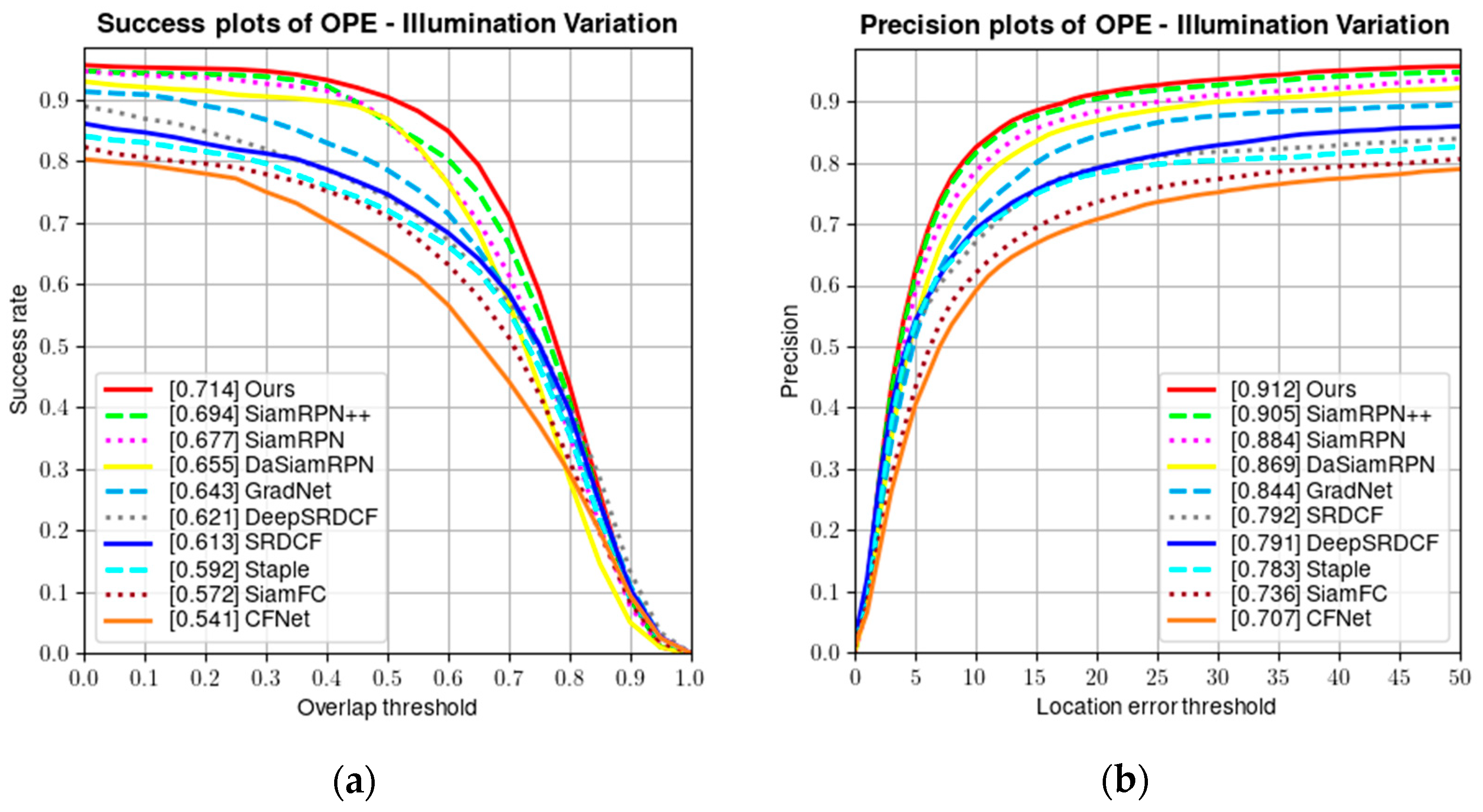

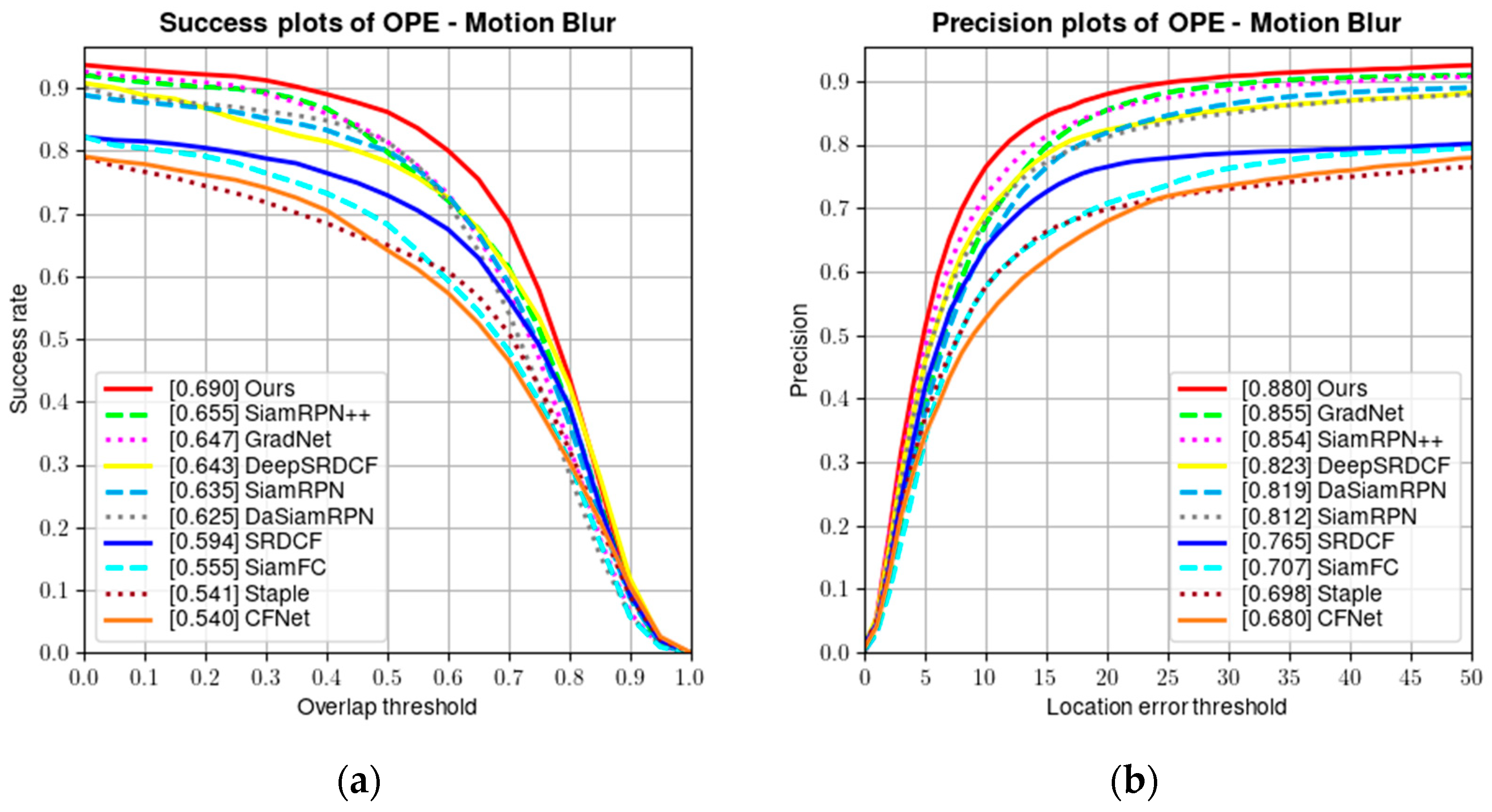

5.2. Analysis of Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | FLOPs | Weights |
|---|---|---|
| ResNet-50 | 6.51 G | 33.74 M |
| Versatile-ResNet-50 | 6.03 G | 30.92 M |
| Thinet-ResNet-50 | 5.79 G | 25.65 M |
| Ours | 4.46 G | 24.97 M |
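The relative savings implied by the table above can be computed directly. The short script below copies the table values and reports the percentage reduction of each model against ResNet-50; the function name and the rounding to one decimal place are illustrative choices.

```python
# FLOPs (G) and weights (M) copied from the table above.
models = {
    "ResNet-50": (6.51, 33.74),
    "Versatile-ResNet-50": (6.03, 30.92),
    "Thinet-ResNet-50": (5.79, 25.65),
    "Ours": (4.46, 24.97),
}


def reduction_vs_baseline(name: str, baseline: str = "ResNet-50"):
    """Return percentage reduction in (FLOPs, weights) relative to baseline."""
    flops, weights = models[name]
    base_flops, base_weights = models[baseline]
    return (
        round(100 * (1 - flops / base_flops), 1),
        round(100 * (1 - weights / base_weights), 1),
    )


for name in models:
    print(name, reduction_vs_baseline(name))
```

For the proposed model this gives roughly 31.5% fewer FLOPs and 26.0% fewer weights than ResNet-50.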

| Tracker Name | Success Rate | Accuracy |
|---|---|---|
| Siam | 65.40% | 87.40% |
| SiamLW | 66.40% | 87.90% |
| SL-HENet | 69.10% | 90.80% |

| Tracker Name | Success Rate |
|---|---|
| Boosting | 70.7% |
| KCF | 65.9% |
| Ours | 75.8% |

| Tracker Name | Accuracy | Robustness | Lost Number | EAO |
|---|---|---|---|---|
| Ours | 0.600 | 0.246 | 52.0 | 0.385 |
| SiamRPN++ | 0.597 | 0.272 | 58.0 | 0.372 |
| DeepCSRDCF | 0.489 | 0.276 | 59.0 | 0.293 |
| CFCF | 0.511 | 0.286 | 61.0 | 0.280 |
| DSiam | 0.513 | 0.654 | 138.0 | 0.196 |
| SiamFC | 0.503 | 0.585 | 125.0 | 0.187 |
| Staple | 0.530 | 0.688 | 147.0 | 0.169 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, X.; Han, X.; Zhang, Z.; Wu, H.; Yang, X.; Huang, H. A Hybrid Excitation Model Based Lightweight Siamese Network for Underwater Vehicle Object Tracking Missions. J. Mar. Sci. Eng. 2023, 11, 1127. https://doi.org/10.3390/jmse11061127
