1. Introduction

Fluid machinery, such as hydraulic turbines, pumps, torque converters, wind turbines, and compressors, is widely used in many critical sectors of the national economy, including aerospace, agricultural engineering, the petrochemical industry, water conservancy projects, and medical equipment. However, in engineering applications, fluid machinery often suffers from low efficiency, unstable operation, and poor matching with the system in which it operates [1]. Consequently, developing more efficient and stable fluid machinery has become an important area of research. Optimization design is a central part of fluid machinery design, and it must address the complex, nonlinear relationship between performance and geometric parameters. To tackle this problem, researchers have proposed applying machine learning to the design optimization of fluid machinery.

Owing to the rapid development of data science, processing units, neural-network-based technology, and sensor technology, machine learning has become an essential research method for development and innovation in many fields [2]. Its ability to handle nonlinear problems makes it a powerful, intelligent data-processing framework for the optimal design of fluid machinery, where it is typically used for numerical simulation and structural optimization tasks. In recent years, machine learning has achieved significant success in the field of fluid machinery, moving beyond traditional research methods and broadening the industrial application of fluid machinery. Conversely, the development of fluid machinery has brought new challenges and opportunities to machine learning. Convolutional neural networks [3], genetic algorithms, back propagation (BP) algorithms, deep learning, and other algorithms have produced notable results in fluid machinery research. With the abundance of data, growing computing power, and improved accuracy of data analysis, machine learning technology is expected to play an increasingly important role in future social development.

Machine learning is a branch of artificial intelligence that primarily simulates human learning. Through continued training, it updates a model, analyzes and classifies the characteristics of sample data, and then predicts new cases or issues commands. Machine learning is usually classified into three categories: supervised, unsupervised, and semi-supervised learning algorithms. Supervised learning algorithms learn from a large number of labeled samples and can, for example, distinguish normal from abnormal data automatically and effectively. Unsupervised learning algorithms are trained on samples without labels, which reduces labeling costs and can help the model generalize. Semi-supervised learning builds models from a small number of labeled samples and a large number of unlabeled samples and then uses them to make predictions. With the growth of machine learning and data-driven technology in the design and flow control fields, the active development of machine learning methods offers a new way to handle the nonlinear relationships between parameters in optimization design.

In short, the application of machine learning to the optimization design of fluid machinery has significant theoretical and engineering value. This paper first summarizes the research status of fluid machinery optimization design and of machine learning theory. It then reviews the current applications of machine learning in fluid machinery optimization design. Finally, it discusses the future prospects of machine learning in the optimal design of fluid machinery.

2. Review Methodology

Nowadays, the most efficient method of conducting research is by utilizing the internet and databases. Nevertheless, there is an overwhelming abundance of information available, varying in its authenticity, reliability, and usefulness. It can be challenging to distinguish between the effective and non-effective, authenticated and non-authenticated, as well as reliable and non-reliable sources of information. Therefore, it is crucial to exercise caution and discretion when selecting sources to ensure that the information obtained is beneficial for the research.

Google Scholar was used to search for high-quality research papers, initially with the keyword “machine learning”. However, many of the papers retrieved this way were unrelated to the optimal design of fluid machinery. To refine the search, the keywords “fluid machinery” and “optimal design” were used, and the relevant papers were downloaded. Further papers were included through cross-references and because of their important role in the development of machine-learning-based optimal design of fluid machinery. Each paper was assigned to the most suitable category according to whether the search terms appeared in its title, abstract, or body. The reviewed literature included review papers, conceptual papers, descriptive papers, and research papers. The primary databases searched were those of the Taylor & Francis, Elsevier, IEEE, and Springer publishing groups.

This paper provides an overview of the various definitions proposed by different researchers. To conduct this study, we reviewed a total of 89 research papers to gather a comprehensive understanding of the research contributions in this field.

3. Research Status of Optimal Design of Fluid Machinery

Fluid machinery is a class of energy conversion machinery that uses a fluid as its working medium to pressurize or transport that fluid. The traditional research process for fluid machinery comprises modeling, performance testing and analysis, and optimization design. The quality of the 3D model produced during modeling typically depends on the designer’s experience. In performance testing, building physical models can be time-consuming and expensive, and manufacturing and measurement errors add further time and effort. Optimization design has therefore become a critical aspect of fluid machinery research. Because the flow phenomena are complex and closely coupled with the structural parameters (e.g., secondary flow caused by rotation and curvature, stratified flow, and boundary layer flow), building the corresponding model is particularly challenging. In response, long-term research has focused on improving the internal flow field and optimizing geometric parameters. Commonly used optimization design methods include the inverse design method, multidisciplinary coupling optimization, the genetic algorithm, and neural networks.

In the optimization design of fluid machinery, improving the internal flow field is a challenging problem. However, few studies have simultaneously considered the mechanical problems associated with the optimized structures, especially when turbulence, compressibility, or multiple physics are involved [4]. To explore how the internal flow field of fluid machinery affects its performance, some scholars have applied the Inverse Design Method (IDM) to the optimization design of fluid machinery. Rather than adjusting the geometry directly, IDM computes the geometry that produces a prescribed target, such as a blade loading distribution. Compared with traditional optimization design methods, IDM-based optimization offers significant advantages in time cost and universality. It is usually combined with turbulence simulation and mathematical optimization algorithms to improve the hydrodynamic performance of fluid machinery, and it has been studied widely in China and abroad. For example, Yang et al. [5] applied IDM to the optimization design of fluid machinery, suppressing secondary flow and cavitation by controlling the blade loading parameters and stacking conditions and thereby improving the internal flow field. Moghadassian et al. [6] used IDM to calculate the geometry of wind turbine blades, solving the optimization problem with an iterative inverse algorithm and improving the performance of single-rotor and double-rotor wind turbines. However, in topology optimization of fluid flow, gray (intermediate-density) regions in the results can make the contour of the fluid domain inaccurate, a problem that often occurs in the design of double channels and elbows. To avoid gray regions and obtain clear boundaries, Souza et al. [7] applied Topology Optimization of Binary Structures (TOBS) to fluid flow design. Within the density-based approach to fluid topology optimization, their method preserves the material distribution characteristics, eliminates the gray regions, and yields a clear boundary between fluid and solid. Approaching the boundary problem from a different angle, Wildey et al. [8] exploited the uncertainty in the location of the discontinuity and generated robust boundaries from estimates of specific probability quantities of samples.
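To make the notion of gray regions concrete, the sketch below computes a commonly used measure of non-discreteness for density-based topology optimization: the average of 4ρ(1 − ρ) over element densities ρ in [0, 1], which is 0 for a crisp 0/1 design and 1 when every element sits at 0.5. The function names, the density values, and the 1 = solid / 0 = fluid convention are illustrative assumptions. The naive thresholding shown here is not the TOBS method, which keeps the design variables binary throughout the optimization instead of rounding afterwards.

```python
def grayness(densities):
    """Measure of non-discreteness: mean of 4*rho*(1 - rho).
    0 for a fully black-and-white (0/1) design, 1 if every element is at 0.5."""
    if not densities:
        raise ValueError("need at least one element density")
    return sum(4.0 * rho * (1.0 - rho) for rho in densities) / len(densities)


def binarize(densities, threshold=0.5):
    """Naive post-processing: round each density to solid (1) or fluid (0).
    Unlike a binary method such as TOBS, this can violate the design constraints."""
    return [1 if rho >= threshold else 0 for rho in densities]


# Hypothetical element densities from a density-based fluid topology optimization.
density_field = [0.0, 0.1, 0.5, 0.9, 1.0, 0.45]
print(round(grayness(density_field), 3))   # -> 0.452
print(grayness(binarize(density_field)))   # -> 0.0
```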

For fluid machinery, optimizing the overall structural parameters is essential. These parameters not only determine whether the impeller can generate the required pressure but also have a global impact on the flow in the impeller passage. Because the interface between the impeller and the fluid largely determines the performance of fluid machinery, blade shape selection is a crucial aspect of impeller design [9]. Blade design is a complex multidisciplinary optimization problem, for which several innovative solutions have been proposed. For instance, Meng et al. [10] developed a multidisciplinary optimization strategy that uses surrogate models to evaluate objectives such as adiabatic efficiency, equivalent stress, and total pressure ratio, improving the reliability, safety, and performance of impellers. Munk et al. [11] proposed a multidisciplinary coupled framework that incorporates both fluid and structure into topology optimization. By coupling a finite element solver with the lattice Boltzmann method, they showed that adjusting the degree of coupling can significantly improve computational efficiency and reduce the influence of fluid–structure coupling on the final optimized design. Moreover, Ghosh et al. [12] adopted a Probabilistic Machine Learning (PMI) framework to overcome the challenges of the ill-posed inverse design problem for turbine blades. The framework addresses the sparsity of the data available for training such models and produces explicit inverse designs.

When optimizing the design of fluid machinery, the interaction between performance and geometric parameters must be considered. To explore this relationship, Yu et al. [13] used computational fluid dynamics (CFD) and neural networks to optimize the design of a blood pump, analyzing the influence of each parameter on performance and completing the parameter optimization. Their results indicate that this method can be applied effectively to the design of complex, high-precision, multi-parameter, multi-objective axial spiral vane pumps. Yu et al. [14] also studied how the structural factors of splitter blades affect the performance and flow field of axial flow pumps, analyzing and optimizing both with the orthogonal array method. Shi et al. [15] adopted a multidisciplinary optimization design method based on an approximate model, taking blade mass and efficiency as objective functions and head, efficiency, maximum stress, and maximum deformation as constraints at low flow rates. Because it accounts for the interaction between hydraulic and structural design, this method improves the overall performance of axial flow pump impellers. Lastly, Xu et al. [16] proposed a global optimization method for annular jet pump design that combines CFD simulation, Kriging approximation models, and experimental data; their experiments show that the method improves the efficiency of annular jet pumps.
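Kriging surrogates such as the one used by Xu et al. [16] interpolate a few expensive CFD samples with a correlation function, so that an optimizer can query a cheap approximation instead of the solver. The sketch below is a minimal simple-kriging predictor, assuming a single normalized design variable, a Gaussian correlation with a fixed, hand-picked θ, and the sample mean as a known trend; practical tools usually estimate the trend and θ from the data (e.g., ordinary kriging with maximum likelihood). The class and function names and the sample values are hypothetical.

```python
import math


def gaussian_corr(x1, x2, theta):
    """Gaussian correlation between two design points (theta is assumed, not fitted)."""
    return math.exp(-theta * (x1 - x2) ** 2)


def solve(matrix, rhs):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


class SimpleKriging:
    """Simple kriging around the sample mean: y(x) = mean + r(x)^T R^-1 (y - mean)."""

    def __init__(self, xs, ys, theta=10.0, nugget=1e-10):
        self.xs = list(xs)
        self.theta = theta
        self.mean = sum(ys) / len(ys)
        n = len(self.xs)
        corr = [[gaussian_corr(self.xs[i], self.xs[j], theta) + (nugget if i == j else 0.0)
                 for j in range(n)] for i in range(n)]
        self.weights = solve(corr, [y - self.mean for y in ys])

    def predict(self, x):
        return self.mean + sum(w * gaussian_corr(x, xi, self.theta)
                               for w, xi in zip(self.weights, self.xs))


# Hypothetical samples: normalized design variable -> efficiency from CFD runs.
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [0.60, 0.68, 0.72, 0.70, 0.63]
model = SimpleKriging(xs, ys)
grid = [i / 100 for i in range(101)]
best_x = max(grid, key=model.predict)   # cheap search on the surrogate
print(f"surrogate optimum near x = {best_x:.2f}, predicted efficiency {model.predict(best_x):.3f}")
```

Because the surrogate interpolates the samples, each CFD point is reproduced (up to the tiny nugget), and the grid search then costs only surrogate evaluations.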

In recent years, the genetic algorithm has driven significant progress in the optimal design of fluid machinery [17], where it is widely used to search for design parameters that optimize performance. In a typical genetic-algorithm workflow, the impeller performance is taken as the objective function, the flow-related design parameters that influence it are identified, and CFD calculations are used to evaluate the objective function. Related surrogate-based approaches are also common: Kim et al. [18] combined a commercial CFD program with the response surface method to optimize a mixed-flow pump impeller, analyzing how the design variables of the impeller inlet affect performance and obtaining an optimal shape that improved the suction performance and efficiency of the pump. Peng et al. [19] applied a multi-objective genetic algorithm (MOGA) to an optimal response surface model and showed that this approach significantly improved the efficiency of multiphase pumps at large mass flow rates. Overall, the genetic algorithm is a popular and effective optimization method in fluid machinery design. The research status of the optimal design of fluid machinery with respect to the internal flow field and structural parameters is summarized in Table 1.
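Multi-objective optimizers such as MOGA return a set of trade-off designs rather than a single optimum. The core test behind that set is Pareto dominance, sketched below under the assumption that every objective is minimized. The design names and objective values are hypothetical, and this filter is only the selection criterion, not a full MOGA.

```python
def dominates(a, b):
    """True if objective vector a is no worse than b everywhere and strictly better
    somewhere (all objectives are minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(designs):
    """Return the names of the non-dominated designs in a {name: objectives} dict."""
    return sorted(name for name, obj in designs.items()
                  if not any(dominates(other, obj) for other in designs.values()))


# Hypothetical candidates: (1 - efficiency, normalized power consumption), both minimized.
designs = {
    "A": (0.20, 3.1),
    "B": (0.18, 3.5),
    "C": (0.22, 3.0),
    "D": (0.21, 3.6),
    "E": (0.18, 3.4),
}
print(pareto_front(designs))  # -> ['A', 'C', 'E']
```

Here B is dominated by E and D by A, so the remaining designs form the trade-off front from which an engineer would pick one.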

4. Machine Learning Algorithm

With the rapid development of computing technology, machine learning has become an important research tool in many fields. It grew out of in-depth research on artificial intelligence. Machine learning mimics the human brain and behavior, selecting appropriate algorithms to analyze data according to the type of phenomenon under study. Through repeated training, it learns the characteristics and internal laws of the data and can then make judgments and predictions about the phenomenon. Its intelligence and automation make machine learning a powerful tool for simplifying data analysis problems in many fields. The learning problem can be described as estimating the associations between the inputs, outputs, and parameters of a system from a limited number of observations.

Machine learning can be categorized into three types based on different focuses: supervised learning, unsupervised learning, and semi-supervised learning. Supervised learning involves predicting samples through algorithm training models based on the classification information of known data. Unsupervised learning involves building models directly without the classification information of data. Semi-supervised learning involves using a small number of existing data with categorical information and most data without categorical information to build models. At present, machine learning research mainly focuses on algorithm development. Strengthening research on machine learning algorithms can significantly improve machine learning efficiency. Commonly used machine learning algorithms include support vector machine (SVM), random forest (RF), naive Bayesian (NB), back propagation (BP), K-means clustering algorithm, generative adversarial networks (GAN), artificial neural networks (ANN), decision tree, autoencoder (AE), deterministic policy gradient (DPG), and others [

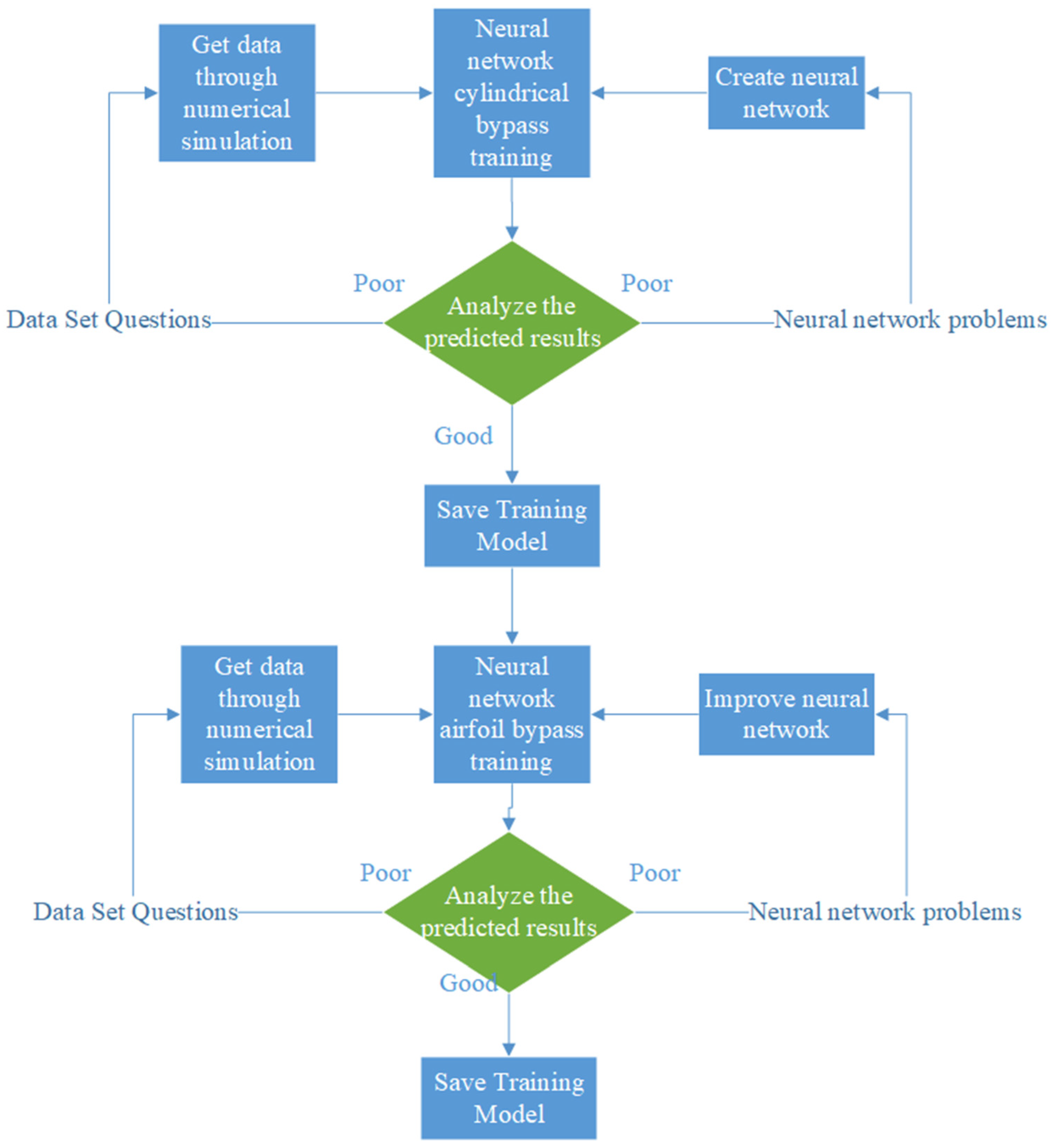

20]. The method flow is shown in

Figure 1.

4.1. Supervised Learning

In recent years, supervised learning has become an increasing research focus in machine learning. Supervised learning uses part of the known sample data to train a model, which can then predict or classify unknown data. This process is suitable not only for simple sample judgments; by testing and refining the model on another part of the sample data, a more accurate mathematical model can be developed [22].

4.1.1. Regression and Classification Algorithm

In supervised learning, the computer first takes labeled sample data as input, then builds a model from these data, and finally uses the model to make predictions. When the output (target) variable is continuous, the task is called a regression problem; when it is discrete, the task is called a classification problem. Commonly used algorithms for such problems include neural networks, support vector machine (SVM), random forest (RF), and naive Bayes (NB), and they have been widely used in industry. Al Shahrani et al. [23] applied an elaborative stepwise stacked artificial neural network to automate industrial processes, significantly improving the control and monitoring of industrial environments. Nassif et al. [24] conducted a comprehensive study of deep learning in speech recognition, demonstrating that machine learning can achieve better performance in speech recognition than in other fields. Zhang et al. [25] applied machine learning to a cloud-based medical diagnostic platform, using a secure support vector machine (SVM) model to ensure safety and effectiveness in clinical diagnosis and highlighting the practicality of machine learning in medical diagnosis.
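The regression/classification distinction depends only on the type of the output. The sketch below fits an ordinary least-squares line to a continuous target and a nearest-centroid classifier to a discrete target; both models and all data values are illustrative choices, not methods from the cited studies.

```python
def fit_line(xs, ys):
    """Regression (continuous target): ordinary least squares for y = a * x + b."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_nearest_centroid(xs, labels):
    """Classification (discrete target): store the mean feature value of each class."""
    groups = {}
    for x, label in zip(xs, labels):
        groups.setdefault(label, []).append(x)
    return {label: sum(v) / len(v) for label, v in groups.items()}


def predict_class(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


# Hypothetical data. Regression: flow rate -> pressure drop (continuous output).
slope, intercept = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
# Classification: vibration amplitude -> pump state (discrete output).
centroids = fit_nearest_centroid([0.1, 0.2, 0.15, 0.9, 1.1, 1.0],
                                 ["normal", "normal", "normal", "fault", "fault", "fault"])
print(slope, intercept, predict_class(centroids, 0.3), predict_class(centroids, 0.8))
# -> 2.0 1.0 normal fault
```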

Research on supervised learning algorithms focuses on improving their performance and efficiency. Jafari-Marandi et al. [26] studied the assumptions made to enable efficient pattern recognition and gave a theoretical explanation of why supervised learning can lack effectiveness. They showed that the assumptions inherent in building classification models strongly affect the credibility of the models and of their predictions. Hu et al. [27] proposed a spike optimization mechanism and demonstrated that it improves the learning accuracy of multi-layer Spiking Neural Networks (SNN) and shortens the algorithm's running time.

The support vector machine (SVM) is a popular supervised learning algorithm used in many fields. It is stable in nonlinear classification and is often employed for classification tasks. Wang et al. [28] discussed the use of SVM to process fMRI data and showed that supervised model training can link brain imaging and stimulation. The study compared linear SVM, ridge regression, and Lasso regression models on multivariate classification, and the results showed that SVM is more efficient for small-scale data classification. For fault diagnosis and defect prediction, Zhou et al. [29] proposed the Mean-ReSMOTE algorithm, based on the traditional Synthetic Minority Oversampling Technique (SMOTE), to oversample the dataset and reduce over-generalization of the machine learning model. They then used the Hybrid-RFE+ algorithm to perform feature selection on the resampled data and obtain an optimal feature subset. The supervised defect prediction model they built with SVM addresses class imbalance and feature redundancy in current cross-domain defect prediction. Xie [30] established a fault diagnosis model using SVM that performed well in accuracy, computation time, and memory consumption and accurately classified existing faults. Bordoloi et al. [31] diagnosed faults of centrifugal pumps using SVM, analyzing the conditions under which the vibration signals indicated faults and classifying their time-domain features. Such supervised models are often combined with other machine learning algorithms to address specific problems. For example, Zhou et al. [32] designed a supervised learning algorithm based on a multi-scale convolutional neural network (CNN) and combined it with the unsupervised Multiple-Objective Generative Adversarial Active Learning (MO-GAAL) algorithm. This reduced the large loss function values on the test set and provided a further solution for fault diagnosis in different scenarios.
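As a minimal illustration of how an SVM is trained, the sketch below fits a linear SVM with Pegasos-style stochastic subgradient descent on the regularized hinge loss. This is a swapped-in, simplified technique: the cited studies typically use kernel SVMs solved with dedicated libraries, and the bias here is handled by appending a constant feature (so it is also regularized). The data and parameter values are hypothetical.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Pegasos-style stochastic subgradient descent on the regularized hinge loss
    lam/2 * ||w||^2 + mean_i max(0, 1 - y_i * w.x_i), with labels y_i in {+1, -1}.
    A constant 1 is appended to each sample so the last weight acts as the bias."""
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    order = list(range(len(data)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)                     # decreasing step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrink (regularization step)
            if margin < 1.0:                          # hinge loss active: move toward x_i
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def predict(w, x):
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical 2-feature vibration data: +1 = faulty, -1 = normal.
X = [(2, 2), (3, 2), (2, 3), (3, 3), (-2, -2), (-3, -2), (-2, -3), (-3, -3)]
y = [1, 1, 1, 1, -1, -1, -1, -1]
w = train_linear_svm(X, y)
print([predict(w, x) for x in X], predict(w, (1, 1)), predict(w, (-1, -1)))
```

The penalty (here the regularization weight `lam`) plays the role that the penalty parameter C plays in the usual SVM formulation; a kernel SVM would additionally expose kernel parameters such as γ.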

The performance of SVM depends on the choice of the penalty and kernel parameters, and researchers have proposed various methods for this multi-parameter optimization problem. For example, Yang et al. [33] proposed the Cultural Emperor Penguin Optimizer (CEPO), which integrates the Cultural Algorithm (CA) and the Emperor Penguin Optimizer (EPO), for SVM parameter optimization. Experiments showed that CEPO improves the classification accuracy, convergence speed, stability, robustness, and operating efficiency of SVM. Additionally, Hu et al. [34] developed a Fractional-order-PCA-SVM coupling algorithm for digital image recognition, which proved effective for digital medical images. Tharwat et al. [35] proposed a Chaotic Antlion Optimization (CALO) algorithm to optimize the kernel and penalty parameters of the SVM classifier, reducing the classification error. Lastly, Tan [36] used an improved Particle Swarm Optimization (PSO) algorithm to optimize the penalty factor and kernel function parameters of the SVM model; according to the experimental results, the resulting PSO-SVM algorithm significantly improved the accuracy of an electric load forecasting model.
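The PSO-based tuning described above searches the (C, γ) plane for the lowest validation error. The sketch below is a standard global-best PSO with an inertia weight, not the improved variant of Tan [36]. To keep it self-contained, the SVM cross-validation error is replaced by a hypothetical smooth function `validation_error` over (log10 C, log10 γ) whose minimum is placed at (1, −1); in practice each evaluation would train and validate an SVM.

```python
import random


def validation_error(log_c, log_gamma):
    """Hypothetical stand-in for a cross-validated SVM error surface over
    (log10 C, log10 gamma); its minimum is placed at (1, -1) for illustration."""
    return 0.05 + 0.02 * (log_c - 1.0) ** 2 + 0.03 * (log_gamma + 1.0) ** 2


def pso(objective, bounds, n_particles=20, iters=100, w=0.7, c1=1.5, c2=1.5, seed=1):
    """Global-best PSO with an inertia weight; positions are clipped to the bounds."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                      # each particle's best position
    pbest_val = [objective(*p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]     # swarm's best position
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # cognitive pull
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # social pull
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(*pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


best, best_err = pso(validation_error, bounds=[(-2.0, 3.0), (-4.0, 1.0)])
print(f"C = {10 ** best[0]:.3g}, gamma = {10 ** best[1]:.3g}, error = {best_err:.4f}")
```

Searching in log10 space is a common design choice because C and γ typically matter over several orders of magnitude.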

To improve the classification accuracy of SVM classifiers in various fields, scholars have proposed several approaches. Wang et al. [37] used Raman spectroscopy and an improved SVM to screen for thyroid dysfunction, introducing a Genetic Particle Swarm Optimization algorithm based on partial least squares that improves the classification accuracy of the SVM model. Xie et al. [38] proposed a cancer classification algorithm based on the Dragonfly Algorithm and SVM, which optimizes the parameters of the SVM classifier and yields better classification accuracy. Li et al. [39] developed a new Differential Evolution Algorithm for SVM parameter selection that achieves faster convergence and higher classification accuracy. Lastly, Ding et al. [40] proposed an Improved Sparrow Search Algorithm (ISSA) for SVM-based fault diagnosis and established an ISSA-SVM model for dissolved gas analysis. The results demonstrated that the model can accurately determine the current operating state of a transformer.

Random forest is an ensemble learning method that combines multiple decision trees into a forest and uses them together to predict the final outcome. The random forest (RF) algorithm introduces random attribute selection during the training of each decision tree and has been widely used for tasks such as classification and regression. However, theoretical research on random forests lags far behind their practical applications, and although parallel random forest algorithms have been studied for a long time, they still suffer from long execution times and low parallelism. To address these problems, Wang et al. [41] proposed a parallel random forest (PRF) optimization algorithm based on distance weights, which performed better and more efficiently than previous PRF algorithms. Wang et al. [42] proposed a Post-Selection Boosting Random Forest (PBRF) algorithm that combines RF with Lasso regression and was verified to improve model performance. To further enhance RF, Wang et al. [43] used the Spark platform to compute feature weights that distinguish strongly from weakly correlated features and obtained feature subspaces through hierarchical sampling, improving both the classification accuracy and the computational efficiency of RF. However, these studies did not fully consider data imbalance. To make RF more widely applicable, Sun et al. [44] proposed a Banzhaf Random Forest (BRF) algorithm based on cooperative game theory and proved that it is consistent, narrowing the gap between random forest theory and practice. Furthermore, to overcome low learning efficiency and convergence to local optima, Wang et al. [45] proposed an epistasis detection algorithm based on an Artificial Fish Swarm Optimizing Bayesian Network (AFSBN), which outperformed other methods in epistasis detection accuracy across various datasets.
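The two sources of randomness in a random forest, bootstrap resampling of the training set and random attribute selection, can be shown in a compact sketch. To stay short, each tree here is a single split (a decision stump) chosen by Gini impurity, which is a simplification of the full-depth trees normally used; all names and data are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a non-empty list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def fit_stump(X, y, features):
    """Best single split (feature, threshold) by weighted Gini impurity, searched only
    over the given feature subset. Returns (feature, threshold, left_label, right_label)."""
    best = None
    for f in features:
        for thr in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= thr]
            right = [lab for row, lab in zip(X, y) if row[f] > thr]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, thr, Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    if best is None:  # no valid split: always predict the majority label
        majority = Counter(y).most_common(1)[0][0]
        return (features[0], float("inf"), majority, majority)
    return best[1:]


def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]        # bootstrap sample
        feats = rng.sample(range(d), max_features)        # random attribute subset
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest


def predict_forest(forest, x):
    """Majority vote over all trees."""
    votes = [left if x[f] <= thr else right for f, thr, left, right in forest]
    return Counter(votes).most_common(1)[0][0]


X = [[0.1, 0.2], [0.2, 0.1], [0.15, 0.25], [0.3, 0.2],
     [0.9, 0.8], [0.8, 0.95], [0.85, 0.9], [0.95, 0.7]]
y = ["ok"] * 4 + ["fault"] * 4
forest = fit_forest(X, y)
print(predict_forest(forest, [0.1, 0.1]), predict_forest(forest, [0.9, 0.9]))
```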

4.1.2. Evolutionary Algorithm

Evolutionary algorithms, of which the genetic algorithm (GA) is the best-known example, build models by simulating chromosome crossover and mutation in biological evolution and search for optimal solutions through this process. Genetic algorithms have excellent global search capability and are widely used in many fields, but applying them in different scenarios presents several challenges, for which scholars have proposed various solutions. Maionchi et al. [46] trained a neural network on a dataset with the obstacle diameter and offset as inputs and the mixing percentage and pressure drop as outputs, in order to find the optimal geometry of circular obstacles in a micromixer channel. A genetic algorithm was then used to find the geometry giving the maximum mixing percentage and minimum pressure drop, demonstrating the effectiveness of combining neural networks with genetic algorithms in optimization problems. However, when the dataset is small, training the network directly on it may lead to overfitting, so the existing samples must be expanded with data augmentation techniques. To address this issue, Huang et al. [47] proposed a generalized regression neural network optimized by a genetic algorithm. Additionally, Chui et al. [48] proposed a general deep multi-kernel learning support vector machine model optimized with a multi-objective genetic algorithm. The results show that the algorithm not only achieves higher accuracy but also addresses typical problems of datasets in simulated environments, such as unreliable cross-validation and input signals. A summary of supervised learning is provided in Table 2.
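The surrogate-plus-GA workflow described above can be sketched with a real-coded genetic algorithm: tournament selection, arithmetic crossover, Gaussian mutation, and elitism. The trained neural network is replaced by a hypothetical closed-form `surrogate(diameter, offset)` that folds mixing and pressure drop into a single score peaking at (0.6, 0.2); a two-objective study would instead keep a Pareto set. Bounds, rates, and population sizes are illustrative assumptions.

```python
import random


def surrogate(diameter, offset):
    """Hypothetical stand-in for a trained neural-network surrogate: a smooth score
    (higher is better) trading mixing against pressure drop, peaking at (0.6, 0.2)."""
    return 1.0 - (diameter - 0.6) ** 2 - 2.0 * (offset - 0.2) ** 2


def clip(value, lo, hi):
    return min(max(value, lo), hi)


def genetic_algorithm(fitness, bounds, pop_size=30, generations=60,
                      crossover_rate=0.9, mutation_rate=0.2, sigma=0.05, seed=3):
    """Real-coded GA: tournament selection, arithmetic crossover, Gaussian mutation, elitism."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]

    def tournament():
        # Pick the fittest of three randomly chosen individuals in the current population.
        return max(rng.sample(pop, 3), key=lambda ind: fitness(*ind))

    for _ in range(generations):
        next_pop = [max(pop, key=lambda ind: fitness(*ind))[:]]   # elitism
        while len(next_pop) < pop_size:
            p1, p2 = tournament(), tournament()
            if rng.random() < crossover_rate:
                a = rng.random()                                  # arithmetic crossover
                child = [a * x + (1 - a) * y for x, y in zip(p1, p2)]
            else:
                child = p1[:]
            # Gaussian mutation, clipped to the design bounds.
            child = [clip(g + rng.gauss(0.0, sigma), lo, hi) if rng.random() < mutation_rate else g
                     for g, (lo, hi) in zip(child, bounds)]
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=lambda ind: fitness(*ind))


best = genetic_algorithm(surrogate, bounds=[(0.2, 1.0), (0.0, 0.5)])
print(f"diameter={best[0]:.3f}, offset={best[1]:.3f}, score={surrogate(*best):.4f}")
```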

4.2. Unsupervised Learning

Unsupervised learning is a machine learning method that explores the inherent laws and characteristics of sample data without labeled information. In order to achieve correct classification, the feature vector needs to contain sufficient category information, but it is often difficult to determine if the feature contains enough information. Unsupervised learning can effectively address this issue by selecting the best features for classifier training and automatically classifying all samples into different categories. This approach can be used to solve various problems in pattern recognition where class information is not available.

Stoudenmire et al. [49] used an unsupervised learning algorithm to compute most of the layers of a layered tree tensor network and then optimized only the top layer for supervised classification on the MNIST dataset. They demonstrated that combining a prior guess for the supervised weights with the unsupervised representation maintains good performance. Li et al. [50] applied Particle Swarm Optimization (PSO) to predict the biological self-organization and thermodynamic properties of living systems and showed that the model requires relatively little prior sample knowledge while maintaining analytical accuracy. To further improve prediction accuracy, Liu et al. [51] combined Kernel Principal Component Analysis (KPCA), Linear Discriminant Analysis (LDA), and Extreme Gradient Boosting (XGBoost) into a supervised learning model, KPCA-LDA-XGB. Hamadeh et al. [52] optimized a multivariate statistical model by combining PCA and LDA, improving the ability of machine learning algorithms to process and analyze complex images.

4.2.1. Dimensionality Reduction

In high-dimensional settings, sparse data samples and difficult distance calculations make dimensionality reduction the first step in extracting predictive features from complex data. Ge et al. [53] proposed a domain adversarial neural network model that learns a reduced-dimensional representation of single-cell RNA sequencing data, allowing the model to focus on the characteristic features of the data. Similarly, Deng et al. [54] proposed a Tensor Envelope Mixture Model (TEMM) for tensor data clustering and multidimensional dimensionality reduction, which reduces the number of free parameters and the estimation variability. They also developed an expectation-maximization algorithm to obtain maximum likelihood estimates of the cluster means and covariances.
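For a concrete picture of dimensionality reduction, the sketch below implements the simplest linear case, principal component analysis (PCA, mentioned earlier in this section), rather than the neural or tensor models of [53] and [54]. It finds the leading principal direction by power iteration on the sample covariance matrix and projects each sample onto it; the data are hypothetical.

```python
import math


def first_principal_component(data, iters=200):
    """Return the leading principal direction (unit vector) and each sample's
    1-D projection, using power iteration on the sample covariance matrix."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    v = [1.0] * d  # starting vector; assumed not orthogonal to the leading eigenvector
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    scores = [sum(r[j] * v[j] for j in range(d)) for r in centered]
    return v, scores


# Hypothetical 2-D samples lying close to the line y = 2x.
samples = [[0.0, 0.1], [1.0, 1.9], [2.0, 4.05], [3.0, 6.0], [4.0, 7.95]]
direction, projected = first_principal_component(samples)
print([round(c, 3) for c in direction], [round(s, 2) for s in projected])
```

Each 2-D sample is reduced to a single coordinate along the direction of greatest variance, which here lies close to (1, 2)/√5.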

Moreover, despite extensive research on feature extraction methods, little attention has been paid to reducing the complexity of data. As a solution, Charte et al. [55] proposed an autoencoder-based approach that reduces complexity by improving the shape and distribution of the different classes while preserving most of the class information in the encoded features. Experiments show that the proposed class-informed autoencoders outperform traditional unsupervised feature extraction techniques on classification tasks. Additionally, Li et al. [56] proposed a new unsupervised robust discriminative manifold embedding method that addresses the poor performance of existing methods on noisy data.

4.2.2. Clustering

Clustering groups similar data together and is often applied in computer vision and pattern recognition. Based on different learning strategies, researchers have designed various clustering algorithms, such as K-means and DBSCAN, and embedding techniques such as t-SNE are often used alongside them. Gan et al. [57] proposed a general deep clustering framework that, for the first time, integrated representation learning and clustering into a single pipeline; it showed superior performance on pattern recognition benchmark datasets and has received widespread attention. Clustering algorithms also play an important role in digital image segmentation. Basar et al. [58] proposed an adaptive initialization method that determines the optimal initialization parameters of the traditional K-means clustering technique, improving segmentation quality and reducing classification error. t-distributed Stochastic Neighbor Embedding (t-SNE) is commonly used for dimensionality reduction and data visualization. Kimura et al. [59] extended t-SNE within the framework of information geometry; with a carefully selected set of parameters, the generalized t-SNE outperforms the original. In unsupervised learning, density-based algorithms can find clusters of arbitrary shape without the number of clusters being specified in advance and can label isolated points as noise, which can improve the accuracy of clustering results. Density-Based Spatial Clustering of Applications with Noise (DBSCAN) is one of the most widely used density-based clustering methods. For variable-density clustering of high-dimensional data, Unver et al. [60] introduced fuzzy core points, which allow DBSCAN to handle two different density levels in a single run, and proposed the DBSCAN extension IFDBSCAN. A summary of unsupervised learning methods is provided in Table 3.
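The core-point and expansion logic of density-based clustering can be made concrete with a minimal pure-Python sketch of standard DBSCAN. This is the textbook algorithm, not IFDBSCAN or any other cited variant; the function names, `eps`, `min_pts`, and the toy points are illustrative assumptions, and the brute-force neighbor search is O(n²), so it suits only small datasets.

```python
import math
from collections import deque

NOISE = -1

def region_query(points, i, eps):
    """Indices of all points within distance eps of points[i], including i itself."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster id (0, 1, ...) or NOISE (-1).

    A point is a core point if at least min_pts points (itself included) lie
    within eps; clusters grow outward from core points, and points reachable
    from a core point but not core themselves become border points.
    """
    labels = [None] * len(points)          # None means "not yet visited"
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE              # may later be relabeled as a border point
            continue
        labels[i] = cluster_id             # i is a core point: start a new cluster
        queue = deque(neighbors)
        while queue:
            j = queue.popleft()
            if labels[j] == NOISE:
                labels[j] = cluster_id     # border point: joins the cluster, not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also a core point: keep expanding
        cluster_id += 1
    return labels

# Two dense groups and one isolated point (synthetic 2-D feature vectors).
pts = [(0, 0), (0, 0.1), (0.1, 0), (5, 5), (5, 5.1), (5.1, 5), (10, 0)]
labels = dbscan(pts, eps=0.5, min_pts=3)
print(labels)  # [0, 0, 0, 1, 1, 1, -1]
```

Unlike K-means, the number of clusters is not an input here; it emerges from `eps` and `min_pts`, and the isolated point is reported as noise rather than forced into a cluster.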

4.3. Semi-Supervised Learning

4.3.1. Regression, Dimension Reduction, and Clustering

Semi-supervised learning builds a model from a small amount of labeled data together with a large amount of unlabeled data and uses it to predict unseen data. It has been applied to regression, dimensionality reduction, and clustering, among other tasks. Despite significant progress in semi-supervised regression, systematic and comprehensive research in this area is still lacking. In semi-supervised clustering, unlabeled data are used to obtain more precise clusters and improve the performance of clustering methods. Semi-supervised classification improves classifier performance by using unlabeled data to supplement the labeled training data; because such classifiers can exploit more data than those trained on labeled data alone, they often generalize better. In this review, neural networks, reinforcement learning, and deep learning are discussed under this heading, with deep learning being the most widely used and researched.

A neural network is a model composed of layers of parameterized functions that map input parameters to output results. During training, a loss function measures the prediction error, and the chain rule is used to propagate this error backward through the layers to correct the network weights. This process is repeated until the network fits the data well. Neural networks can learn from instances whose attributes are continuous-valued, discrete-valued, or vector-valued, and they are used for a wide range of tasks, such as image and speech recognition, natural language processing, and game playing [61].
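The training loop just described (loss, chain rule, backward error propagation, weight correction) can be sketched in NumPy for a network with one hidden tanh layer and a mean-squared-error loss. The architecture, learning rate, and synthetic data are assumptions chosen for illustration; the sketch also checks one backpropagated derivative against a finite-difference estimate, a common way to validate a hand-written backward pass.

```python
import numpy as np

def forward(params, X):
    """One hidden tanh layer followed by a linear output layer."""
    W1, b1, W2, b2 = params
    H = np.tanh(X @ W1 + b1)
    return H, H @ W2 + b2

def loss_and_grads(params, X, y):
    """Mean-squared-error loss and its gradients, obtained by backpropagation."""
    W1, b1, W2, b2 = params
    H, y_hat = forward(params, X)
    err = y_hat - y
    loss = np.mean(err ** 2)
    d_out = 2.0 * err / err.size                  # dL/dy_hat
    dW2 = H.T @ d_out                             # output-layer gradients
    db2 = d_out.sum(axis=0)
    d_hidden = (d_out @ W2.T) * (1.0 - H ** 2)    # chain rule back through tanh
    dW1 = X.T @ d_hidden                          # hidden-layer gradients
    db1 = d_hidden.sum(axis=0)
    return loss, [dW1, db1, dW2, db2]

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(32, 2))
y = np.sin(np.pi * X[:, :1]) * X[:, 1:]           # synthetic nonlinear target, shape (32, 1)
params = [rng.normal(0, 0.5, (2, 8)), np.zeros(8), rng.normal(0, 0.5, (8, 1)), np.zeros(1)]

# Check one backpropagated derivative against a central finite difference.
loss0, grads = loss_and_grads(params, X, y)
h = 1e-6
plus = [p.copy() for p in params]
minus = [p.copy() for p in params]
plus[0][0, 0] += h
minus[0][0, 0] -= h
numeric = (loss_and_grads(plus, X, y)[0] - loss_and_grads(minus, X, y)[0]) / (2 * h)
grad_error = abs(numeric - grads[0][0, 0])

# Gradient descent: repeat forward pass, backpropagation, and weight update.
for _ in range(500):
    loss, grads = loss_and_grads(params, X, y)
    params = [p - 0.1 * g for p, g in zip(params, grads)]
final_loss = loss_and_grads(params, X, y)[0]
```

Deep-learning frameworks automate the backward pass, but the arithmetic they perform for this network is the same as in `loss_and_grads`.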

A back propagation (BP) neural network is a multilayer perceptron (MLP), that is, a feedforward neural network (FNN) with one or more hidden layers, trained with the backpropagation algorithm. Compared with a single-layer network, it can learn more complex nonlinear functions, and its ability to fit complex functions generally grows as layers are added. Zhao et al. [62] designed an intelligent fault identification system for rotating fluid machinery that combines a genetic algorithm with a set of BP neural networks. Experiments showed that the system improved the fault recognition rate and accuracy, effectively diagnosed the fault types of rotating machinery, and generalized well. Ling [63] used a multilayer feedforward neural network to reproduce flow fields and calculate the aerodynamic characteristics of airfoils. A model that accurately predicted the flow around a cylinder was used to predict the flow around the airfoil, with the velocity vector field predicted by independent neural networks; formulating the two-dimensional airfoil flow field as a regression problem enabled rapid prediction. Lu et al. [64] proposed a semi-supervised extreme learning machine (SSELM) method based on an improved SMOTE (Synthetic Minority Over-sampling Technique) to address the scarcity of labeled samples during model construction. They showed that a stacked denoising autoencoder preserves information and yields better features, and that ELM speeds up model training and gives better generalization performance.
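For readers unfamiliar with extreme learning machines, the following NumPy sketch shows the basic (supervised) ELM idea that makes training fast: hidden-layer weights are drawn at random and kept fixed, and only the output weights are computed, in closed form, by least squares. The semi-supervised extension (SSELM) and the SMOTE and autoencoder components used by Lu et al. [64] are not reproduced here; the function names, the tanh activation, and the hidden-layer size are illustrative assumptions.

```python
import numpy as np

def elm_fit(X, y, n_hidden=50, seed=0):
    """Basic ELM: random, fixed hidden layer; output weights solved by least squares."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_hidden))    # input weights: random, never trained
    b = rng.normal(size=n_hidden)                  # hidden biases: random, never trained
    H = np.tanh(X @ W + b)                         # hidden-layer output matrix
    beta, *_ = np.linalg.lstsq(H, y, rcond=None)   # the only fitted parameters
    return W, b, beta

def elm_predict(model, X):
    W, b, beta = model
    return np.tanh(X @ W + b) @ beta

X_train = np.linspace(-3, 3, 200).reshape(-1, 1)
y_train = np.sin(X_train).ravel()
model = elm_fit(X_train, y_train)
train_mse = np.mean((elm_predict(model, X_train) - y_train) ** 2)
```

Because training reduces to a single linear least-squares solve, there is no iterative weight update, which is the source of the speed advantage mentioned above.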

4.3.2. Deep Reinforcement Learning

Reinforcement learning (RL) is a method in which an agent learns, by interacting with an environment, a behavior that maximizes long-term cumulative reward. It has found widespread application in fields including physics, chemistry, and biology [65]. However, existing parallel reinforcement learning methods have two shortcomings: the number of algorithm runs required cannot be reduced, and the algorithm is not guaranteed to converge to the optimal solution. To address these problems, Ding et al. [66] developed a new asynchronous reinforcement learning algorithm, APSO-BQSA, which combines the backward Q-learning-based Sarsa algorithm with Asynchronous Particle Swarm Optimization (APSO) and effectively searches for the optimal solution. In another study, Kumar et al. [67] compared multiple linear regression, multiple nonlinear regression, and artificial neural networks (ANN) for predicting the optimal configuration parameters of jet aerators and found that the ANN outperformed both regression techniques. Dalca et al. [68] established a connection between classical image registration and learning-based methods. They proposed a probabilistic generative model and derived an inference algorithm based on unsupervised learning; by combining classical registration methods with convolutional neural networks (CNN), the algorithm improves accuracy and substantially reduces runtime.
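The Sarsa update that APSO-BQSA builds on can be illustrated with a minimal tabular sketch. This is plain Sarsa on a hypothetical six-cell corridor environment (the environment, rewards, and hyperparameters are assumptions for illustration); it does not include the backward Q-learning or particle swarm components of [66].

```python
import random

N_STATES = 6          # hypothetical corridor cells 0..5; cell 5 is the terminal goal
MOVES = (-1, +1)      # action 0 = move left, action 1 = move right

def env_step(state, action):
    """Hypothetical environment: +1 on reaching the goal, -0.01 for every other step."""
    nxt = min(max(state + MOVES[action], 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else -0.01), done

def epsilon_greedy(Q, s, eps, rng):
    """Explore with probability eps, otherwise pick the action with the highest Q value."""
    if rng.random() < eps:
        return rng.randrange(len(MOVES))
    return max(range(len(MOVES)), key=lambda a: Q[s][a])

def sarsa(episodes=500, alpha=0.1, gamma=0.95, eps=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0] * len(MOVES) for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        a = epsilon_greedy(Q, s, eps, rng)
        while not done:
            s2, r, done = env_step(s, a)
            a2 = epsilon_greedy(Q, s2, eps, rng)
            # On-policy TD target: uses the action a2 actually chosen next (Sarsa);
            # Q-learning would use max over Q[s2] instead. Terminal value is 0.
            target = r if done else r + gamma * Q[s2][a2]
            Q[s][a] += alpha * (target - Q[s][a])
            s, a = s2, a2
    return Q

Q = sarsa()
greedy = [max(range(len(MOVES)), key=lambda a: Q[s][a]) for s in range(N_STATES - 1)]
```

The learned greedy policy is read off the Q table; the small per-step penalty encourages the agent to reach the goal quickly.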

Deep learning (DL) has developed rapidly in supervised learning, and its combination with reinforcement learning has given rise to deep reinforcement learning (DRL). As one of the most recent advances in machine learning, DRL has been applied widely, and DRL research has produced many classic algorithms and typical applications. DRL can achieve high-level control in intelligent manufacturing, making it a technology with great potential [69]. Li et al. [70] proposed a deep learning model based on statistical features for the vibration measurement of rotating machinery; they used it to classify faults from feature sets in three domains and demonstrated its efficiency for fault classification. In structural optimization design, Viquerat et al. [71] applied DRL to direct shape optimization for the first time. They demonstrated that an artificial neural network trained with DRL can generate optimal shapes on its own in a finite amount of time without any prior knowledge, illustrating the potential of reinforcement learning for hydrodynamic shape optimization. Young et al. [72] introduced HyperSpace, an open-source, distributed Bayesian model-based optimization algorithm, and showed that it consistently outperformed standard hyperparameter optimization techniques when tuning three DRL algorithms. A summary of semi-supervised learning methods is provided in Table 4.

5. Application of Machine Learning in Optimal Design of Fluid Machinery

In applying machine learning to fluid machinery, two complementary techniques can be identified: dimensionality reduction and reduced-order modeling. Dimensionality reduction extracts essential features and dominant patterns from flow data so that the flow can be described compactly and efficiently in reduced coordinates. Reduced-order modeling, in turn, develops a parametrized dynamical system that captures the spatiotemporal evolution of the flow; it may also involve creating a statistical map that relates the model parameters to averaged quantities.

5.1. Flow Feature Extraction

Machine learning (ML) is highly regarded for its strengths in pattern recognition and data mining, which make it a valuable tool for analyzing spatiotemporal fluid data, and many techniques developed by the ML community can be applied directly in this field. This subsection discusses linear and nonlinear dimensionality reduction and clustering and classification techniques, followed by approaches for accelerating measurement and computation and for processing experimental flow field data.

5.1.1. Dimensionality Reduction

Dimensionality reduction reduces the dimensionality of data while preserving most of the information in the original data, and it can be used in preprocessing to remove redundant information and noise. One of the most widely used dimensionality reduction algorithms, particularly in fluid and structural mechanics, is Proper Orthogonal Decomposition (POD). POD performs a singular value decomposition (SVD) of the high-dimensional data (snapshot) matrix and keeps only the first r modes, those with the largest singular values. These modes capture the most important features, or dominant coherent structures, of the data, so the original dataset can be approximately reconstructed from them with little loss. Similarly, principal component analysis (PCA) is used in machine learning for dimensionality reduction: it generates a set of orthogonal principal components from the covariance matrix of the dataset, and the leading components capture most of the variance in the original data. For mean-subtracted data, PCA and POD are mathematically equivalent.
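The POD procedure described above (mean subtraction, SVD, truncation to r modes) can be sketched in a few lines of NumPy. The snapshot layout (one column per time instant), the synthetic traveling-wave data, and the function name `pod` are assumptions for illustration.

```python
import numpy as np

def pod(snapshots, r):
    """POD of a snapshot matrix (one column per snapshot) via the thin SVD.

    Returns the r leading spatial modes, their temporal coefficients,
    the mean field, and the fraction of fluctuation energy they capture.
    """
    mean = snapshots.mean(axis=1, keepdims=True)
    fluct = snapshots - mean                         # POD is applied to fluctuations here
    U, s, Vt = np.linalg.svd(fluct, full_matrices=False)
    modes = U[:, :r]                                 # orthonormal spatial modes
    coeffs = s[:r, None] * Vt[:r, :]                 # temporal coefficients a_k(t)
    energy = np.sum(s[:r] ** 2) / np.sum(s ** 2)     # captured fraction of variance
    return modes, coeffs, mean, energy

# Synthetic 1-D "flow": two traveling waves plus weak noise, 128 points x 60 snapshots.
x = np.linspace(0, 2 * np.pi, 128)
t = np.linspace(0, 10, 60)
X, T = np.meshgrid(x, t, indexing="ij")
rng = np.random.default_rng(1)
data = np.sin(X - T) + 0.3 * np.sin(3 * X + 2 * T) + 0.01 * rng.normal(size=X.shape)

modes, coeffs, mean, energy = pod(data, r=4)
recon = mean + modes @ coeffs                        # rank-4 approximation, not exact recovery
rel_error = np.linalg.norm(data - recon) / np.linalg.norm(data)
```

Each traveling wave is a rank-2 structure, so four modes capture almost all of the fluctuation energy; the residual is essentially the added noise.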

Mendez et al. [73] emphasized the differences and connections between autoencoders and manifold learning methods. They investigated how nonlinear techniques such as kernel principal component analysis, isometric feature mapping (Isomap), and locally linear embedding perform in filtering, oscillation pattern recognition, and data compression.

5.1.2. Clustering and Classification

In fluid machinery, flow field data describe the state of fluid motion in a given region, including parameters such as velocity, pressure, and density. Clustering and classifying flow field data obtained under different physical or boundary conditions can aid understanding of the fluid's characteristics and the underlying physics, and can facilitate flow control or optimal design. Machine learning offers several approaches. K-means divides the data points into K clusters, which allows different fluid elements moving in the flow field to be identified. The algorithm iteratively updates the cluster centroids to minimize the within-cluster sum of squared distances, and each cluster can be interpreted through its centroid. Mi et al. [74] applied K-means clustering to density-log and P-wave velocity data from three wells and used the density-log equation to calculate the porosity of each cluster. The main lithologies, pore fluids, and fluid contacts were identified from the centroid of each cluster. However, K-means may give poor clustering results for clusters with complex, for example non-convex, shapes.
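A minimal NumPy sketch of the K-means procedure described above (Lloyd's algorithm) follows. The function name, the random initialization, and the synthetic two-cluster data are assumptions; in practice, features with different units (for example, density and P-wave velocity) would be standardized before clustering.

```python
import numpy as np

def kmeans(points, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update."""
    rng = np.random.default_rng(seed)
    centroids = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        # Assignment step: squared Euclidean distance from every point to every centroid.
        d2 = ((points[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Update step: move each centroid to the mean of its points (keep it if empty).
        new = np.array([points[labels == j].mean(axis=0) if np.any(labels == j)
                        else centroids[j] for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    # Final assignment and within-cluster sum of squares (the K-means objective).
    d2 = ((points[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1), centroids, d2.min(axis=1).sum()

rng = np.random.default_rng(42)
pts = np.vstack([rng.normal((0.0, 0.0), 0.3, size=(50, 2)),   # synthetic cluster A
                 rng.normal((3.0, 3.0), 0.3, size=(50, 2))])  # synthetic cluster B
labels, centroids, inertia = kmeans(pts, k=2)
```

The returned centroids are what the interpretation step above relies on: each cluster is summarized by the mean of its members.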

Alternatively, the Gaussian Mixture Model (GMM) is suitable for more complex cluster structures. GMM is a probabilistic clustering method that models the data density as a weighted sum of Gaussian components; in multiphase flows, the data points may be tracked particles such as droplets or bubbles. Because each component has its own mean and covariance and each point receives a soft (probabilistic) cluster assignment, GMM can fit ellipsoidal clusters of different sizes and orientations and is less prone to misclassifying points that lie between clusters. Zeng et al. [75] used a deep autoencoder (DAE) and a GMM to cluster aircraft trajectories and mine the main traffic flow patterns in terminal airspace: the feature representations extracted by the DAE from high-dimensional historical trajectory data were used as the input to the GMM for clustering.
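The soft-assignment idea behind GMM clustering can be illustrated with a minimal expectation-maximization (EM) sketch for a one-dimensional, two-component mixture. This is a generic textbook EM loop on synthetic data, assumed here for illustration; it is not the DAE-GMM pipeline of [75], where the GMM would operate on multidimensional autoencoder features with full covariance matrices.

```python
import numpy as np

def normal_pdf(x, mu, var):
    return np.exp(-0.5 * (x - mu) ** 2 / var) / np.sqrt(2 * np.pi * var)

def gmm_em_1d(x, k=2, n_iter=200, seed=0):
    """Fit a 1-D Gaussian mixture by expectation-maximization."""
    rng = np.random.default_rng(seed)
    mu = rng.choice(x, size=k, replace=False)       # initial means drawn from the data
    var = np.full(k, x.var())
    w = np.full(k, 1.0 / k)                          # mixing weights
    for _ in range(n_iter):
        # E-step: responsibility of each component for each point (soft assignment).
        dens = w * normal_pdf(x[:, None], mu, var)   # shape (n, k)
        resp = dens / dens.sum(axis=1, keepdims=True)
        # M-step: re-estimate weights, means, and variances from the responsibilities.
        nk = resp.sum(axis=0)
        w = nk / len(x)
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk + 1e-6  # variance floor
    return w, mu, var, resp

rng = np.random.default_rng(3)
x = np.concatenate([rng.normal(-2.0, 0.5, 300), rng.normal(3.0, 1.0, 200)])
w, mu, var, resp = gmm_em_1d(x)
order = np.argsort(mu)                               # component order is arbitrary
```

Each row of `resp` gives a point's membership probabilities; a hard clustering, if needed, is `resp.argmax(axis=1)`.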

Convolutional neural networks (CNNs) are commonly used for classification and can automatically extract features from input data. In fluid mechanics, CNNs can detect fluid elements and track them across multiple image frames. CNNs can also be trained in a semi-supervised manner, which enables unlabeled data points to be grouped into different categories. He et al. [76] used a CNN to extract features from multidimensional data and a Long Short-Term Memory (LSTM) network to capture the relationships between different time steps, reducing the method's dependence on high-quality features.

5.1.3. Sparse and Randomized Methods

Sparse methods reduce the feature dimension by selecting a small subset of the most representative features from the original features. This can improve the accuracy and interpretability of model predictions and is suitable when only some features affect the prediction results.

Randomized methods, by contrast, are better suited to data whose feature dimension is high but that are sparse and noisy. Techniques such as randomized low-rank decomposition and random projection map high-dimensional data to a low-dimensional space, thereby speeding up calculations and reducing noise. Randomized variants of Proper Orthogonal Decomposition (POD) and Dynamic Mode Decomposition (DMD) are commonly used.
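As an illustration of the randomized approach, the sketch below computes a truncated SVD (the core of POD) by random projection followed by a small deterministic SVD, following the widely used randomized range-finder scheme. The oversampling and power-iteration counts are assumed values, and the data are synthetic; production code would typically call a library routine instead.

```python
import numpy as np

def randomized_svd(A, rank, oversample=10, n_power=2, seed=0):
    """Approximate truncated SVD using a Gaussian random projection (randomized range finder)."""
    rng = np.random.default_rng(seed)
    omega = rng.normal(size=(A.shape[1], rank + oversample))  # random test matrix
    Y = A @ omega                                              # random sample of A's column space
    for _ in range(n_power):                                   # power iterations sharpen the spectrum
        Q = np.linalg.qr(Y)[0]                                 # re-orthonormalize for stability
        Y = A @ (A.T @ Q)
    Q = np.linalg.qr(Y)[0]                                     # orthonormal basis for the sampled range
    B = Q.T @ A                                                # small (rank + oversample) x n matrix
    Ub, s, Vt = np.linalg.svd(B, full_matrices=False)          # cheap deterministic SVD
    return (Q @ Ub)[:, :rank], s[:rank], Vt[:rank]

rng = np.random.default_rng(5)
# Synthetic 2000 x 300 snapshot matrix: exact rank 5 plus tiny noise.
A = rng.normal(size=(2000, 5)) @ rng.normal(size=(5, 300)) + 1e-6 * rng.normal(size=(2000, 300))
U, s, Vt = randomized_svd(A, rank=5)
s_exact = np.linalg.svd(A, compute_uv=False)[:5]
rel_err = np.linalg.norm(A - (U * s) @ Vt) / np.linalg.norm(A)
```

The expensive operations are products with `A`; the SVD itself is applied only to the small projected matrix `B`, which is where the speed-up comes from.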

Krah et al. [77] used biorthogonal wavelets to compress data, extracting wavelet features and reducing the amount of data to be analyzed; the result is a sparse representation with controlled compression error. DMD, in turn, analyzes time series of snapshot data to extract spatial modes, each associated with a frequency and a growth or decay rate, that capture the system dynamics. These features can serve as inputs to machine learning models and are valuable for problems such as time series forecasting and control.
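To show how DMD turns snapshot data into modes with associated dynamics, the following NumPy sketch implements the standard exact DMD algorithm and applies it to synthetic data generated by a known linear system, so the recovered eigenvalues can be checked. The truncation rank, the synthetic system, and the function name are assumptions; HDMD and the regridding step of [78] are not included.

```python
import numpy as np

def dmd(snapshots, r):
    """Exact DMD: best-fit linear map x_{k+1} ~ A x_k from consecutive snapshot pairs.

    Returns the discrete-time DMD eigenvalues and the corresponding modes.
    """
    X, Y = snapshots[:, :-1], snapshots[:, 1:]
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U, s, V = U[:, :r], s[:r], Vh[:r].conj().T           # rank-r truncation
    A_tilde = U.conj().T @ Y @ V / s                     # r x r operator: U* Y V S^-1
    eigvals, W = np.linalg.eig(A_tilde)
    modes = Y @ V / s @ W                                # exact DMD modes: Y V S^-1 W
    return eigvals, modes

# Synthetic data: a decaying rotation (eigenvalues rho * exp(+/- i theta)) lifted to 50 "sensors".
theta, rho = 0.3, 0.95
M = rho * np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
rng = np.random.default_rng(7)
P = rng.normal(size=(50, 2))                             # random spatial structures
z = np.array([1.0, 0.0])
cols = []
for _ in range(40):
    cols.append(P @ z)
    z = M @ z
data = np.column_stack(cols)
eigvals, modes = dmd(data, r=2)
```

With a sampling interval Δt, `np.log(eigvals) / dt` gives the continuous-time growth rates (real part) and angular frequencies (imaginary part) associated with each mode.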

In the optimal design of fluid machinery, these methods are often combined with machine learning to improve the efficiency and accuracy of data analysis. For example, DMD can decompose a high-dimensional dynamic system, and machine learning algorithms can then use the extracted features for tasks such as classification or prediction. Naderi et al. [78] used Hybrid Dynamic Mode Decomposition (HDMD) to analyze unsteady flow around a moving structure. At each time step, the K-Nearest Neighbors (KNN) algorithm interpolates the numerical data from the moving grid onto a single stationary grid, providing the fixed spatial domain that DMD requires.
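The regridding step can be sketched as inverse-distance-weighted KNN interpolation: for each fixed-grid point, the k nearest moving-mesh nodes are found and their values are averaged with weights proportional to inverse distance. The weighting scheme, k = 4, and the synthetic mesh are assumptions for illustration; the exact interpolation used in [78] may differ. A brute-force distance matrix is used for clarity; a k-d tree would be preferable for large meshes.

```python
import numpy as np

def knn_interpolate(src_pts, src_vals, query_pts, k=4, eps=1e-12):
    """Inverse-distance-weighted k-nearest-neighbor interpolation.

    Maps values stored at one set of points (e.g. a moving mesh at one time step)
    onto another set of points (e.g. a fixed reference grid).
    """
    d = np.linalg.norm(query_pts[:, None, :] - src_pts[None, :, :], axis=2)  # (n_query, n_src)
    idx = np.argsort(d, axis=1)[:, :k]                 # k nearest source points per query
    dk = np.take_along_axis(d, idx, axis=1)
    w = 1.0 / (dk + eps)                               # closer neighbors get larger weights
    w /= w.sum(axis=1, keepdims=True)
    return (w * src_vals[idx]).sum(axis=1)

rng = np.random.default_rng(0)
g = np.linspace(0, 1, 11)
fixed = np.stack(np.meshgrid(g, g), axis=-1).reshape(-1, 2)      # stationary 11 x 11 grid
moving = fixed + 0.02 * rng.normal(size=fixed.shape)             # hypothetical deformed mesh
u_moving = np.sin(np.pi * moving[:, 0]) * np.cos(np.pi * moving[:, 1])  # field on the moving mesh
u_fixed = knn_interpolate(moving, u_moving, fixed)               # same field on the fixed grid
```

Repeating this at every time step produces snapshots on a common grid, which is the form of input that standard DMD expects.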

5.1.4. Super-Resolution and Flow Cleansing

Super-resolution refers to using computer algorithms to increase the resolution of images, videos, or audio signals. It uses mathematical models, statistical knowledge, and machine learning methods to reconstruct missing pixels or signal content and produce high-quality, sharp results. In computer vision and image processing, super-resolution is widely used to improve image or video quality, restore blurred or low-quality images, and enlarge small images. Similarly, in fluid mechanics, super-resolution techniques can help to visualize flow fields in greater detail, leading to a better understanding of flow processes.

Jackson et al. [79] developed a machine-learning-based super-resolution technique, a three-dimensional (3D) Enhanced Deep Super-Resolution (EDSR) convolutional neural network. The network enhances low-resolution images of large samples to generate high-resolution images, which helps to reduce the micro-CT hardware limitations and reconstruction defects often seen in high-resolution imaging. EDSR could help to identify flow phenomena such as streamlines and vortices, improving the efficiency of hydrodynamic analysis and visualization.

Flow cleansing refers to processing raw flow data, for example by using algorithms or models for dimensionality reduction that retain the relatively important features. Missing-value handling, outlier detection, and noise filtering are usually applied step by step to clean the data, which reduces computational complexity and improves algorithm accuracy. Montes et al. [80] applied the Evolutionary Polynomial Regression-Multi-Objective Genetic Algorithm (EPR-MOGA) to data collected from different steady flows and derived three new self-cleansing models based on their optimization strategies, each using a different set of candidate input parameters to describe the modified Froude number. A summary of flow feature extraction methods is provided in Table 5.
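As one concrete example of the outlier-detection and noise-filtering step mentioned above, the following NumPy sketch implements a Hampel filter, a standard sliding-window median/MAD outlier detector, and applies it to a hypothetical velocity probe signal with an injected spike. The window size and threshold are assumed values, and this is not the EPR-MOGA procedure of [80].

```python
import numpy as np

def hampel_filter(x, half_window=3, n_sigmas=3.0):
    """Flag and replace outliers using a sliding-window median and the MAD.

    A sample is an outlier if it deviates from the local median by more than
    n_sigmas robust standard deviations (1.4826 * MAD for Gaussian data).
    """
    x = np.asarray(x, dtype=float)
    cleaned = x.copy()
    outliers = np.zeros(len(x), dtype=bool)
    for i in range(len(x)):
        lo, hi = max(0, i - half_window), min(len(x), i + half_window + 1)
        window = x[lo:hi]
        med = np.median(window)
        sigma = 1.4826 * np.median(np.abs(window - med))
        if abs(x[i] - med) > n_sigmas * sigma:
            outliers[i] = True
            cleaned[i] = med              # replace the outlier with the local median
    return cleaned, outliers

t = np.linspace(0, 1, 200)
velocity = 2.0 + 0.1 * np.sin(2 * np.pi * 5 * t)   # hypothetical velocity probe signal
velocity[50] = 8.0                                   # injected spurious spike
cleaned, flagged = hampel_filter(velocity)
```

Because the median and MAD are insensitive to a single extreme value, the spike is detected without the smooth parts of the signal being altered.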

5.2. Optimal Design of Fluid Machinery

Optimal design of fluid machinery requires consideration of many interconnected factors. The relationship between performance and geometric parameters is highly sensitive, complex, and strongly nonlinear. Some researchers have therefore proposed using machine learning to model this relationship and to better understand the underlying flow behavior and mechanisms, exploiting the information contained in the data to make optimization more practical and efficient. Specifically, in the optimization design of fluid machinery, machine learning can be used to construct surrogate or reduced-order models that capture the correlations among design variables or the relationship between design variables and performance.

5.2.1. Surrogate Model

A surrogate model approximates the relationship between the inputs and outputs of an expensive process. In fluid machinery, surrogate models are typically trained on experimental or simulation data that map design parameters to performance, using methods such as Gaussian process regression, radial basis function (RBF) networks, and multilayer perceptrons (MLP). Once trained, they can quickly predict objective functions from design parameters, partially or completely replacing CFD analysis.
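To make the surrogate idea concrete, the sketch below fits a Gaussian radial basis function interpolant to a small set of sampled designs and then searches the cheap surrogate instead of the expensive model. The analytic `expensive_objective` is a hypothetical stand-in for a CFD evaluation, and the length scale, regularization, and sample size are assumptions; a practical workflow would also validate the surrogate on held-out designs.

```python
import numpy as np

def fit_rbf(X, y, length_scale=0.3, reg=1e-8):
    """Gaussian RBF interpolant f(x) = sum_i w_i * exp(-|x - x_i|^2 / (2 * length_scale^2))."""
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    Phi = np.exp(-d2 / (2 * length_scale ** 2))
    w = np.linalg.solve(Phi + reg * np.eye(len(X)), y)   # small ridge term for conditioning
    return X, w, length_scale

def predict_rbf(model, Xq):
    """Evaluate the surrogate at query points Xq (one design per row)."""
    X, w, length_scale = model
    d2 = ((Xq[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2 * length_scale ** 2)) @ w

def expensive_objective(X):
    """Hypothetical stand-in for a CFD evaluation of two normalized design variables."""
    return 0.9 - (X[:, 0] - 0.4) ** 2 - 0.5 * (X[:, 1] - 0.6) ** 2

rng = np.random.default_rng(0)
X_train = rng.uniform(0, 1, size=(40, 2))          # 40 sampled designs
y_train = expensive_objective(X_train)             # the only "expensive" evaluations
model = fit_rbf(X_train, y_train)
train_residual = np.max(np.abs(predict_rbf(model, X_train) - y_train))

# Search a dense grid of designs using the cheap surrogate instead of the solver.
g = np.linspace(0, 1, 51)
grid = np.array([[a, b] for a in g for b in g])
best_design = grid[np.argmax(predict_rbf(model, grid))]
```

The grid search evaluates the surrogate 2,601 times at negligible cost, whereas the expensive model was called only 40 times.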

Si et al. [81] optimized a pump impeller using an orthogonal experimental design and the multi-island genetic algorithm (MIGA). They obtained the best impeller geometry with the response surface method and analyzed the design variables of the inlet section of the mixed-flow pump impeller and the resulting performance variations to obtain the optimal shape. Zhu et al. [82] applied the Artificial Bee Colony (ABC) algorithm to find the optimal parameters of a traditional Support Vector Regression (SVR) model. They established an improved SVR model, the Improved Support Vector Regression Model (ISRM), and used the Multiple Population Genetic Algorithm (MPGA) to solve the resulting optimization model. By increasing modeling accuracy and optimization efficiency, this approach improved the reliability of optimization design for complex structures, particularly turbine blades. Bouhlel et al. [83] used a gradient-enhanced artificial neural network to model airfoils under different flight conditions. By incorporating gradient information, they modeled the aerodynamic coefficients of subsonic and transonic airfoils, allowing airfoil design optimization within seconds. Compared with traditional CFD-based optimization, this approach greatly reduces computation time while producing similar results.

5.2.2. Reduced-Order Model

A reduced-order model uses machine learning methods to approximate the Navier–Stokes (N–S) equations with a much lower-dimensional model for flow optimization; the resulting streamlined model can extract flow features at far lower cost and guide optimization design.

Yao et al. [84] used Extreme Gradient Boosting (XGBoost) and Light Gradient Boosting Machine (LightGBM) to model the filtered density function of the mixture fraction in a turbulent evaporating spray, and their ensemble model achieved high accuracy. Aversano et al. [85] combined PCA with Kriging to build a reduced-order model. They used PCA to identify the system invariants and separate them from coefficients related to the characteristic operating conditions, and then used Kriging to construct a response surface for these coefficients.

5.2.3. Deep Learning

In recent years, machine learning methods, especially deep learning, have developed remarkably. Compared with traditional machine learning, deep learning uses more complex network structures and improved training procedures, which greatly improves its generalization ability. Deep learning models can automatically select relevant features and discard useless ones from a large pool of candidates, and can perform regression and classification tasks. Reinforcement learning, in particular, offers an interactive learning approach that can be used for design optimization.

Renganathan et al. [86] used machine learning to create a predictive model of wind turbine wakes from radar-based measurements. They employed probabilistic machine learning, specifically Gaussian process modeling, to learn the mapping between the parameter space and the latent space and to account for both epistemic and aleatoric (statistical) uncertainty, which provided an accurate approximation of the wind turbine wake field. Maulik et al. [87] used a Probabilistic Neural Network (PNN) to develop a machine-learning-based surrogate model for fluid flow that also quantifies the model's uncertainty, enhancing its reliability. Li et al. [88] employed a deep reinforcement learning algorithm to reduce the aerodynamic drag of a supercritical airfoil. They pre-trained the initial reinforcement learning policy through imitation learning and then trained it in a surrogate-model-based environment, which effectively improved the mean drag reduction across 200 airfoils. Zheng et al. [89] combined Bayesian optimization with a specified control action and used a Gaussian process regression surrogate model to predict the amplitude of vortex-induced vibration. They also used the soft actor–critic deep reinforcement learning algorithm to build a real-time control system, offering a new approach to typical flow control problems. A summary of machine learning techniques for the optimal design of fluid machinery is provided in Table 6.
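Since several of the works above rely on Gaussian process regression (GPR) for surrogates with uncertainty estimates, a minimal NumPy sketch of GPR with a squared-exponential kernel follows. The kernel hyperparameters are fixed by assumption rather than learned by maximizing the marginal likelihood, and the training data are synthetic; the sketch shows only how the posterior mean and the predictive uncertainty are obtained.

```python
import numpy as np

def rbf_kernel(a, b, length=0.5, variance=1.0):
    """Squared-exponential covariance between 1-D input arrays a and b."""
    return variance * np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length ** 2)

def gp_posterior(x_train, y_train, x_test, noise=1e-4, **kern):
    """Posterior mean and standard deviation of a zero-mean GP (noise is a variance)."""
    K = rbf_kernel(x_train, x_train, **kern) + noise * np.eye(len(x_train))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))   # K^{-1} y via Cholesky
    K_s = rbf_kernel(x_train, x_test, **kern)                    # shape (n_train, n_test)
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(rbf_kernel(x_test, x_test, **kern)) - np.sum(v ** 2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0.0))

x_train = np.array([0.0, 0.4, 1.0, 1.5, 2.5, 3.0])
y_train = np.sin(2 * x_train)                  # hypothetical measured response
x_test = np.array([0.4, 2.0, 5.0])             # a training point, a gap, and an extrapolation
mean, std = gp_posterior(x_train, y_train, x_test)
```

The predictive standard deviation is near zero at the training input and grows toward the prior value far from the data; this is the uncertainty information that probabilistic surrogates pass on to the optimizer or controller.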

6. Outlook

After reviewing recent research on the application of machine learning methods in optimizing fluid machinery, this paper concludes that there are still many areas in this field that warrant further exploration.

Firstly, as science and technology advance, more accurate turbulence models are increasingly needed. Although machine learning offers new possibilities for developing such models, its industrial application is still in its infancy. Current methods, such as using statistical models and data to correct prediction discrepancies, often suffer from poor convergence, slow learning, and reduced accuracy caused by the introduction of human knowledge. Further research is therefore needed to make machine learning more effective in optimizing fluid machinery.

Secondly, design sample data in fluid machinery are often sparse, and accurately quantifying the underlying physical mechanisms is crucial for analysis. Probabilistic machine learning has significant potential for data-driven prediction, flow control, shape optimization, reconstruction, and model reduction in fluid mechanics. Current research shows that it can be used to build surrogate models for turbulent and compressible flows, making it a promising research direction.

Finally, when selecting a machine learning algorithm, it is essential to consider factors such as the quality and quantity of the data, the expected inputs and outputs, the cost function to be optimized, whether the task involves interpolation or extrapolation, and the interpretability of the model. Failure to account for these factors may result in overfitting or failure of the model to converge. It is therefore vital to establish an appropriate cross-validation scheme and to tune the algorithm to improve the accuracy and effectiveness of machine learning in optimizing fluid machinery.
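As a small illustration of the cross-validation recommended above, the following sketch estimates out-of-sample error with k-fold cross-validation and uses it to compare model complexities. The helper names, the polynomial models, and the synthetic data are assumptions for illustration only.

```python
import numpy as np

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k nearly equal validation folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), k)

def cross_val_mse(X, y, fit, predict, k=5, seed=0):
    """Average validation MSE over k folds; each fold is held out exactly once."""
    errors = []
    for val_idx in k_fold_indices(len(X), k, seed):
        train_mask = np.ones(len(X), dtype=bool)
        train_mask[val_idx] = False
        model = fit(X[train_mask], y[train_mask])
        errors.append(np.mean((predict(model, X[val_idx]) - y[val_idx]) ** 2))
    return float(np.mean(errors))

rng = np.random.default_rng(1)
x = np.sort(rng.uniform(-1, 1, 40))
y = 1.0 + 2.0 * x - 1.5 * x ** 2 + 0.1 * rng.normal(size=x.size)   # hypothetical noisy data
scores = {deg: cross_val_mse(x, y,
                             fit=lambda xs, ys, d=deg: np.polyfit(xs, ys, d),
                             predict=np.polyval)
          for deg in (1, 2, 9)}
```

Comparing `scores` across degrees shows how cross-validation exposes both underfitting (too simple a model) and overfitting (too flexible a model) using only the available data.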

Author Contributions

Conceptualization, B.X. and J.D.; methodology, B.X.; software, J.D.; validation, J.D. and X.L.; formal analysis, J.D.; investigation, X.L.; resources, A.C.; data curation, J.C.; writing—original draft preparation, J.D.; writing—review and editing, B.X.; visualization, J.D.; supervision, B.X. and D.Z.; project administration, B.X. and D.Z.; funding acquisition, B.X. and D.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. U2106225), Natural Science Foundation of Jiangsu Province (No. 21KJA470002), Jiangsu Province Engineering Research Center of High-Level Energy and Power Equipment (No. JSNYDL-202204), Senior Talent Foundation of Jiangsu University (No. 18JDG034), and Postdoctoral Science Foundation of Jiangsu Province (No. 2018K102C).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the editors and the anonymous reviewers for their helpful and constructive comments. Their time is appreciated and the relevance of the comments on our manuscript indicated that they dedicated a significant portion of it to helping us to improve our work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Luo, X.; Ye, W.; Song, X.; Geng, C. Future fluid machinery supporting “double-carbon” targets. J. Tsinghua Univ. Sci. Technol. 2022, 62, 678–692. [Google Scholar]

- San, O.; Pawar, S.; Rasheed, A. Prospects of federated machine learning in fluid dynamics. Aip Adv. 2022, 12, 095212. [Google Scholar] [CrossRef]

- Li, Y.; Chang, J.; Kong, C.; Bao, W. Recent progress of machine learning in flow modeling and active flow control. Chin. J. Aeronaut. 2022, 35, 14–44. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, M.; Zhu, Y.; Cheng, R.; Wang, L.; Li, X. Topology optimization of planar heat sinks considering out-of-plane design-dependent deformation problems. Meccanica 2021, 56, 1693–1706. [Google Scholar] [CrossRef]

- Yang, W.; Liu, B.; Xiao, R. Three-dimensional inverse design method for hydraulic machinery. Energies 2019, 12, 3210. [Google Scholar] [CrossRef]

- Moghadassian, B.; Sharma, A. Designing wind turbine rotor blades to enhance energy capture in turbine arrays. Renew. Energy 2020, 148, 651–664. [Google Scholar] [CrossRef]

- Souza, B.C.; Yamabe, P.V.M.; Sa, L.F.N.; Ranjbarzadeh, S.; Picelli, R.; Silva, E.C.N. Topology optimization of fluid flow by using Integer Linear Programming. Struct. Multidiscip. Optim. 2021, 64, 1221–1240. [Google Scholar] [CrossRef]

- Wildey, T.; Gorodetsky, A.A.; Belme, A.C.; Shadid, J.N. Robust uncertainty quantification using response surface approximations of discontinuous functions. Int. J. Uncertain. Quantif. 2019, 9, 415–437. [Google Scholar] [CrossRef]

- Chelabi, M.A.; Saga, M.; Kuric, I.; Basova, Y.; Dobrotvorskiy, S.; Ivanov, V.; Pavlenko, I. The effect of blade angle deviation on mixed inflow turbine performances. Appl. Sci. 2022, 12, 3781. [Google Scholar] [CrossRef]

- Meng, D.; Liu, M.; Yang, S.; Zhang, H.; Ding, R. A fluid-structure analysis approach and its application in the uncertainty-based multidisciplinary design and optimization for blades. Adv. Mech. Eng. 2018, 10, 1687814018783410. [Google Scholar] [CrossRef]

- Munk, D.J.; Kipouros, T.; Vio, G.A.; Parks, G.T.; Steven, G.P. On the effect of fluid-structure interactions and choice of algorithm in multi-physics topology optimisation. Finite Elem. Anal. Des. 2018, 145, 32–54. [Google Scholar] [CrossRef]

- Ghosh, S.; Padmanabha, G.A.; Peng, C.; Andreoli, V.; Atkinson, S.; Pandita, P.; Vandeputte, T.; Zabaras, N.; Wang, L. Inverse aerodynamic design of gas turbine blades using probabilistic machine learning. J. Mech. Des. 2022, 144. [Google Scholar] [CrossRef]

- Yu, Z.; Tan, J.; Wang, S.; Guo, B. Multiple parameters and target optimization of splitter blades for axial spiral blade blood pump using computational fluid mechanics, neural networks, and particle image velocimetry experiment. Sci. Prog. 2021, 104, 00368504211039363. [Google Scholar] [CrossRef] [PubMed]

- Yu, Z.; Tan, J.; Wang, S. Multi-parameter analysis of the effects on hydraulic performance and hemolysis of blood pump splitter blades. Adv. Mech. Eng. 2020, 12, 1687814020921299. [Google Scholar] [CrossRef]

- Shi, L.; Zhu, J.; Tang, F.; Wang, C. Multi-disciplinary optimization design of axial-flow pump impellers based on the approximation model. Energies 2020, 13, 779. [Google Scholar] [CrossRef]

- Xu, K.; Wang, G.; Wang, L.; Yun, F.; Sun, W.; Wang, X.; Chen, X. Parameter analysis and optimization of annular jet pump based on kriging model. Appl. Sci. 2020, 10, 7860. [Google Scholar] [CrossRef]

- Kavuri, C.; Kokjohn, S.L. Exploring the potential of machine learning in reducing the computational time/expense and improving the reliability of engine optimization studies. Int. J. Engine Res. 2020, 21, 1251–1270. [Google Scholar] [CrossRef]

- Kim, S.; Kim, Y.-I.; Kim, J.-H.; Choi, Y.-S. Three-objective optimization of a mixed-flow pump impeller for improved suction performance and efficiency. Adv. Mech. Eng. 2019, 11, 1687814019898969. [Google Scholar] [CrossRef]

- Peng, C.; Zhang, X.; Gao, Z.; Wu, J.; Gong, Y. Research on cooperative optimization of multiphase pump impeller and diffuser based on adaptive refined response surface method. Adv. Mech. Eng. 2022, 14, 16878140211072944. [Google Scholar] [CrossRef]

- Wang, Y.R.; Zhao, W.J.; Liang, L.G.; Wu, Q.; Jin, H.H.; Wang, C.X. Fluid mechanical pneumatic optimization based on machine learning method Research Status and Prospect of Design. Chin. J. Turbomach. 2020, 62, 77–90. [Google Scholar] [CrossRef]

- Brunton, S.L.; Noack, B.R.; Koumoutsakos, P. Machine learning for fluid mechanics. In Annual Review Of Fluid Mechanics; Davis, S.H., Moin, P., Eds.; Annual Reviews: San Mateo, CA, USA, 2020; Volume 52, pp. 477–508. [Google Scholar]

- Liu, F.N.; Shi, J.X.; Wang, W.J.; Zhao, R. Overview of machine learning algorithms in material science. New Chmical Mater. 2022, 50, 42–46+52. [Google Scholar] [CrossRef]

- Al Shahrani, A.M.M.; Alomar, M.A.; Alqahtani, K.N.N.; Basingab, M.S.; Sharma, B.; Rizwan, A. Machine learning-enabled smart industrial automation systems using internet of things. Sensors 2023, 23, 324. [Google Scholar] [CrossRef] [PubMed]

- Nassif, A.B.; Shahin, I.; Attili, I.; Azzeh, M.; Shaalan, K. Speech recognition using deep neural networks: A systematic review. IEEE Access 2019, 7, 19143–19165. [Google Scholar] [CrossRef]

- Zhang, M.; Song, W.; Zhang, J. A secure clinical diagnosis with privacy-preserving multiclass support vector machine in clouds. IEEE Syst. J. 2022, 16, 67–78. [Google Scholar] [CrossRef]

- Jafari-Marandi, R. Supervised or unsupervised learning? Investigating the role of pattern recognition assumptions in the success of binary predictive prescriptions. Neurocomputing 2021, 434, 165–193. [Google Scholar] [CrossRef]

- Hu, T.; Lin, X.; Wang, X.; Du, P. Supervised learning algorithm based on spike optimization mechanism for multilayer spiking neural networks. Int. J. Mach. Learn. Cybern. 2022, 13, 1981–1995. [Google Scholar] [CrossRef]

- Wang, Y.Y.; Yu, H.F.; Li, B.; Lu, X.M. How to analyze fmri data with open source tools: An introduction to supervised machine learning algorithm for multi-voxel patterns analysis. J. Psychol. Sci. 2022, 45, 718–724. [Google Scholar] [CrossRef]

- Chao, Z.; Zheng, W.; Futong, Q.; Yi, L. An enhanced supervised cross-domain protocol defect prediction algorithm. Comput. Eng. Appl. 2022, 1–7.

- Xun, Y.Y. Research on Fault Identification of Rolling Bearing in Rotating Fluid Machinery. Master’s Thesis, Zhejiang Sci-Tech University, Hangzhou, China, 2020.

- Bordoloi, D.J.; Tiwari, R. Identification of suction flow blockages and casing cavitations in centrifugal pumps by optimal support vector machine techniques. J. Braz. Soc. Mech. Sci. Eng. 2017, 39, 2957–2968.

- Feng, Z.; Yi, Y.; Xin, L.; Yaguang, J.; Rufang, L. Equipment fault diagnosis technology based on supervised and unsupervised learning algorithms and investigation on algorithm fusion. Ind. Technol. Innov. 2022, 9, 30–38.

- Yang, J.; Gao, H. Cultural emperor penguin optimizer and its application for face recognition. Math. Probl. Eng. 2020, 2020, 9579538.

- Hu, L.; Cui, J. Digital image recognition based on Fractional-order-PCA-SVM coupling algorithm. Measurement 2019, 145, 150–159.

- Tharwat, A.; Hassanien, A.E. Chaotic antlion algorithm for parameter optimization of support vector machine. Appl. Intell. 2018, 48, 670–686.

- Tan, X.; Yu, F.; Zhao, X. Support vector machine algorithm for artificial intelligence optimization. Clust. Comput. J. Netw. Softw. Tools Appl. 2019, 22, 15015–15021.

- Wang, D.; Jiang, J.; Mo, J.; Tang, J.; Lv, X. Rapid screening of thyroid dysfunction using Raman spectroscopy combined with an improved support vector machine. Appl. Spectrosc. 2020, 74, 674–683.

- Xie, T.; Yao, J.; Zhou, Z. DA-based parameter optimization of combined kernel support vector machine for cancer diagnosis. Processes 2019, 7, 263.

- Li, J.; Fang, G. A novel differential evolution algorithm integrating opposition-based learning and adjacent two generations hybrid competition for parameter selection of SVM. Evol. Syst. 2021, 12, 207–215.

- Ding, C.; Ding, Q.; Wang, Z.; Zhou, Y. Fault diagnosis of oil-immersed transformers based on the improved sparrow search algorithm optimised support vector machine. IET Electr. Power Appl. 2022, 16, 985–995.

- Wang, Q.; Chen, H.H. Optimization of parallel random forest algorithm based on distance weight. J. Intell. Fuzzy Syst. 2020, 39, 1951–1963.

- Wang, H.; Wang, G.Z. Improving random forest algorithm by Lasso method. J. Stat. Comput. Simul. 2021, 91, 353–367.

- Wang, S.Z.; Zhang, Z.F.; Geng, S.S.; Pang, C.Y. Research on optimization of random forest algorithm based on Spark. CMC Comput. Mater. Contin. 2022, 71, 3721–3731.

- Sun, J.; Zhong, G.; Huang, K.; Dong, J. Banzhaf random forests: Cooperative game theory based random forests with consistency. Neural Netw. 2018, 106, 20–29.

- Wang, L.; Wang, Y.; Fu, Y.; Gao, Y.; Du, J.; Yang, C.; Liu, J. AFSBN: A method of artificial fish swarm optimizing Bayesian network for epistasis detection. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 18, 1369–1383.

- Maionchi, D.D.; Ainstein, L.; dos Santos, F.P.; de Souza, M.B. Computational fluid dynamics and machine learning as tools for optimization of micromixers geometry. Int. J. Heat Mass Transf. 2022, 194, 655–661.

- Huang, H.-B.; Xie, Z.-H. Generalized regression neural network optimized by genetic algorithm for solving out-of-sample extension problem in supervised manifold learning. Neural Process. Lett. 2019, 50, 2567–2593.

- Chui, K.T.; Lytras, M.D.; Liu, R.W. A generic design of driver drowsiness and stress recognition using MOGA optimized deep MKL-SVM. Sensors 2020, 20, 1474.

- Stoudenmire, E.M. Learning relevant features of data with multi-scale tensor networks. Quantum Sci. Technol. 2018, 3, 034003.

- Li, J.; Xie, F. Self-organized criticality of molecular biology and thermodynamic analysis of life system based on optimized particle swarm algorithm. Cell. Mol. Biol. 2020, 66, 177–192.

- Liu, Y.; Wang, H.; Fei, Y.; Liu, Y.; Shen, L.; Zhuang, Z.; Zhang, X. Research on the prediction of green plum acidity based on improved XGBoost. Sensors 2021, 21, 930.

- Hamadeh, L.; Imran, S.; Bencsik, M.; Sharpe, G.R.; Johnson, M.A.; Fairhurst, D.J. Machine learning analysis for quantitative discrimination of dried blood droplets. Sci. Rep. 2020, 10, 3313.

- Ge, S.; Wang, H.; Alavi, A.; Xing, E.; Bar-Joseph, Z. Supervised adversarial alignment of single-cell RNA-seq data. J. Comput. Biol. 2021, 28, 501–513.

- Deng, K.; Zhang, X. Tensor envelope mixture model for simultaneous clustering and multiway dimension reduction. Biometrics 2022, 78, 1067–1079.

- Charte, D.; Charte, F.; Herrera, F. Reducing data complexity using autoencoders with class-informed loss functions. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 9549–9560.

- Li, J. Unsupervised robust discriminative manifold embedding with self-expressiveness. Neural Netw. 2019, 113, 102–115.

- Gan, Y.; Dong, X.; Zhou, H.; Gao, F.; Dong, J. Learning the precise feature for cluster assignment. IEEE Trans. Cybern. 2022, 52, 8587–8600.

- Basar, S.; Ali, M.; Ochoa-Ruiz, G.; Zareei, M.; Waheed, A.; Adnan, A. Unsupervised color image segmentation: A case of RGB histogram based k-means clustering initialization. PLoS ONE 2020, 15, e0240015.

- Kimura, M. Generalized t-SNE through the lens of information geometry. IEEE Access 2021, 9, 129619–129625.

- Unver, M.; Erginel, N. Clustering applications of IFDBSCAN algorithm with comparative analysis. J. Intell. Fuzzy Syst. 2020, 39, 6099–6108.

- Huiwei, X. Research on Network Traffic Analysis and Prediction Based on Decision Tree Integration and Width Forest. Master’s Thesis, Nanjing University of Posts and Telecommunications, Nanjing, China, 2020.

- Peng, Z.; Zhaolong, C.; Zhili, C. Design of intelligent fault diagnosis system for fluid machinery. Petrochem. Equip. Technol. 2022, 43, 52–58, 62+56–57.

- Ling, Z. High-Precision Numerical Simulation of Airfoil and Prediction of Airfoil Flow Field Based on Machine Learning Method; Lanzhou University of Technology: Lanzhou, China, 2021.

- Zihao, L.; Xiaoyuan, J. Defect prediction of semi-supervised limit learning machine based on improved SMOTE. Comput. Technol. Dev. 2021, 31, 21–25.

- Martin-Guerrero, J.D.; Lamata, L. Reinforcement learning and physics. Appl. Sci. 2021, 11, 8589.

- Ding, S.; Du, W.; Zhao, X.; Wang, L.; Jia, W. A new asynchronous reinforcement learning algorithm based on improved parallel PSO. Appl. Intell. 2019, 49, 4211–4222.

- Kumar, M.; Ranjan, S.; Tiwari, N.K. Oxygen transfer study and modeling of plunging hollow jets. Appl. Water Sci. 2018, 8, 121.

- Dalca, A.V.; Balakrishnan, G.; Guttag, J.; Sabuncu, M.R. Unsupervised learning of probabilistic diffeomorphic registration for images and surfaces. Med. Image Anal. 2019, 57, 226–236.

- Kong, S.; Liu, C.; Shi, Y.; Xie, Y.; Wang, K. Review of application prospect of deep reinforcement learning in intelligent manufacturing. Comput. Eng. Appl. 2021, 57, 49–59.

- Li, C.; Sanchez, R.V.; Zurita, G.; Cerrada, M.; Cabrera, D. Fault diagnosis for rotating machinery using vibration measurement deep statistical feature learning. Sensors 2016, 16, 895.

- Viquerat, J.; Rabault, J.; Kuhnle, A.; Ghraieb, H.; Larcher, A.; Hachem, E. Direct shape optimization through deep reinforcement learning. J. Comput. Phys. 2021, 428, 110080.

- Young, M.T.; Hinkle, J.D.; Kannan, R.; Ramanathan, A. Distributed Bayesian optimization of deep reinforcement learning algorithms. J. Parallel Distrib. Comput. 2020, 139, 43–52.

- Mendez, M.A. Linear and nonlinear dimensionality reduction from fluid mechanics to machine learning. Meas. Sci. Technol. 2023, 34, 042001.

- Mi, A.; Chen, S.-C. Characterization of well logs using K-mean cluster analysis. J. Pet. Explor. Prod. Technol. 2020, 10, 2245–2256.

- Zeng, W.; Xu, Z.; Cai, Z.; Chu, X.; Lu, X. Aircraft trajectory clustering in terminal airspace based on deep autoencoder and Gaussian mixture model. Aerospace 2021, 8, 266.

- He, W.; Li, J.; Tang, Z.; Wu, B.; Luan, H.; Chen, C.; Liang, H. A novel hybrid CNN-LSTM scheme for nitrogen oxide emission prediction in FCC unit. Math. Probl. Eng. 2020, 2020, 8071810.

- Krah, P.; Engels, T.; Schneider, K.; Reiss, J. Wavelet adaptive proper orthogonal decomposition for large-scale flow data. Adv. Comput. Math. 2022, 48, 10.

- Naderi, M.H.; Eivazi, H.; Esfahanian, V. New method for dynamic mode decomposition of flows over moving structures based on machine learning (hybrid dynamic mode decomposition). Phys. Fluids 2019, 31, 127102.

- Jackson, S.J.; Niu, Y.; Manoorkar, S.; Mostaghimi, P.; Armstrong, R.T. Deep learning of multiresolution X-ray micro-computed-tomography images for multiscale modeling. Phys. Rev. Appl. 2022, 17, 054046.

- Montes, C.; Berardi, L.; Kapelan, Z.; Saldarriaga, J. Predicting bedload sediment transport of non-cohesive material in sewer pipes using evolutionary polynomial regression—Multi-objective genetic algorithm strategy. Urban Water J. 2020, 17, 154–162.

- Si, Q.; Lu, R.; Shen, C.; Xia, S.; Sheng, G.; Yuan, J. An intelligent CFD-based optimization system for fluid machinery: Automotive electronic pump case application. Appl. Sci. 2020, 10, 366.

- Zhu, Z.-Z.; Feng, Y.-W.; Lu, C.; Fei, C.-W. Reliability optimization of structural deformation with improved support vector regression model. Adv. Mater. Sci. Eng. 2020, 2020, 3982450.

- Bouhlel, M.A.; He, S.; Martins, J.R.R.A. Scalable gradient-enhanced artificial neural networks for airfoil shape design in the subsonic and transonic regimes. Struct. Multidiscip. Optim. 2020, 61, 1363–1376.

- Yao, S.; Kronenburg, A.; Stein, O.T. Efficient modeling of the filtered density function in turbulent sprays using ensemble learning. Combust. Flame 2022, 237, 111722.

- Aversano, G.; Bellemans, A.; Li, Z.; Coussement, A.; Gicquel, O.; Parente, A. Application of reduced-order models based on PCA & Kriging for the development of digital twins of reacting flow applications. Comput. Chem. Eng. 2019, 121, 422–441.

- Renganathan, S.A.; Maulik, R.; Letizia, S.; Iungo, G.V. Data-driven wind turbine wake modeling via probabilistic machine learning. Neural Comput. Appl. 2022, 34, 6171–6186.

- Maulik, R.; Fukami, K.; Ramachandra, N.; Fukagata, K.; Taira, K. Probabilistic neural networks for fluid flow surrogate modeling and data recovery. Phys. Rev. Fluids 2020, 5, 104401.

- Li, R.; Zhang, Y.; Chen, H. Learning the aerodynamic design of supercritical airfoils through deep reinforcement learning. AIAA J. 2021, 59, 3988–4001.

- Zheng, C.; Ji, T.; Xie, F.; Zhang, X.; Zheng, H.; Zheng, Y. From active learning to deep reinforcement learning: Intelligent active flow control in suppressing vortex-induced vibration. Phys. Fluids 2021, 33, 063607.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).