Area Contrast Distribution Loss for Underwater Image Enhancement

Abstract

1. Introduction

2. Related Works

3. Method

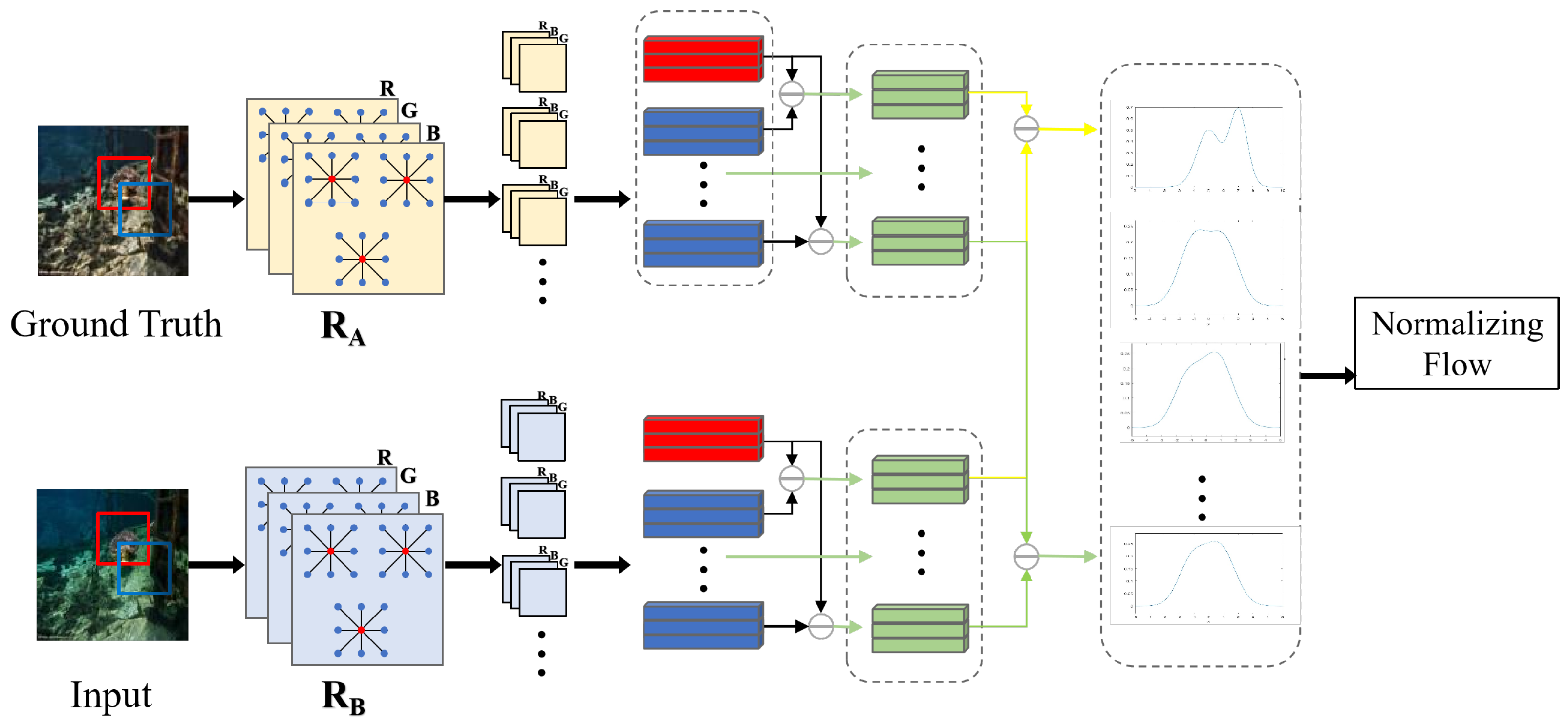

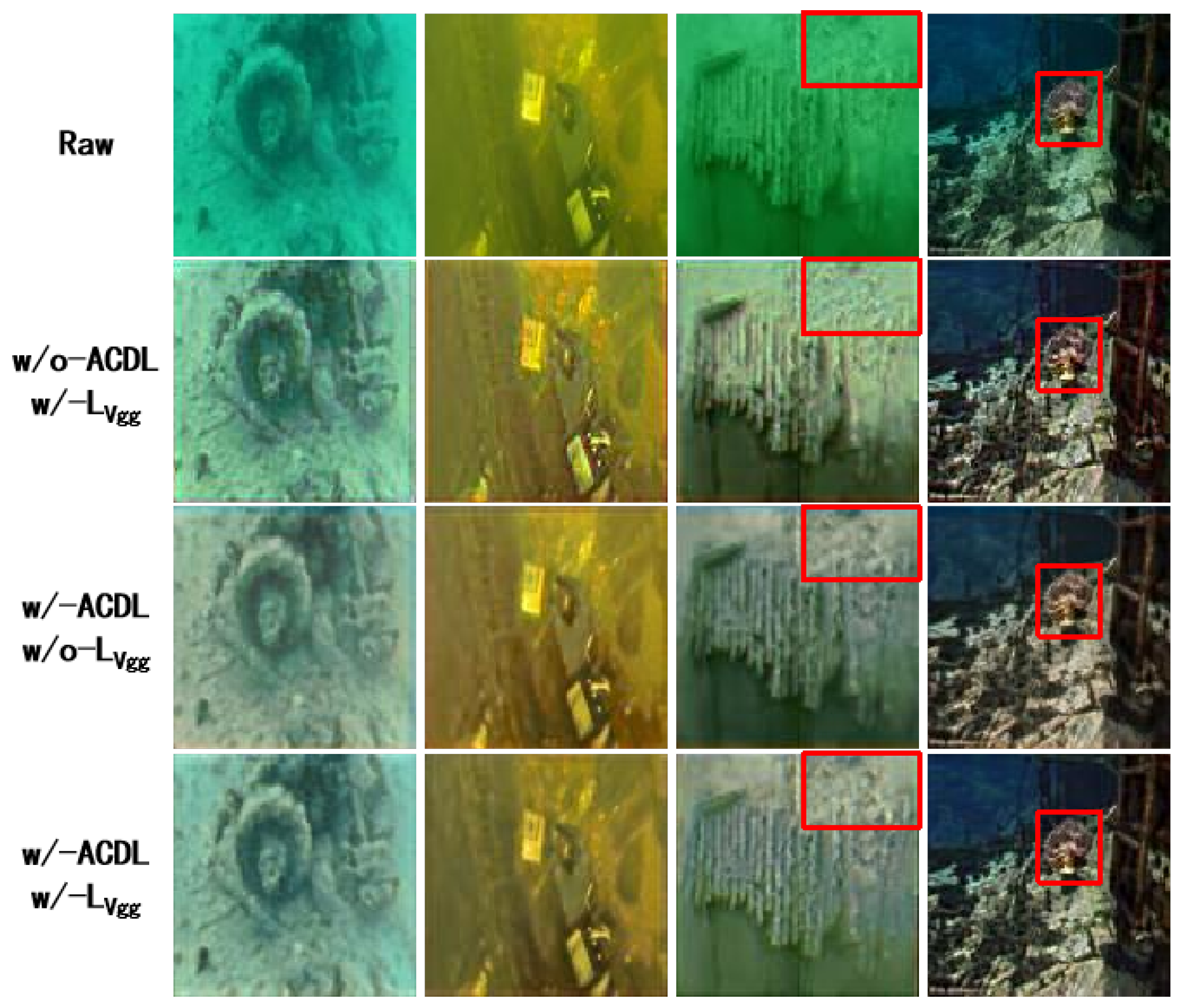

3.1. Area Contrast Distribution Loss (ACDL)

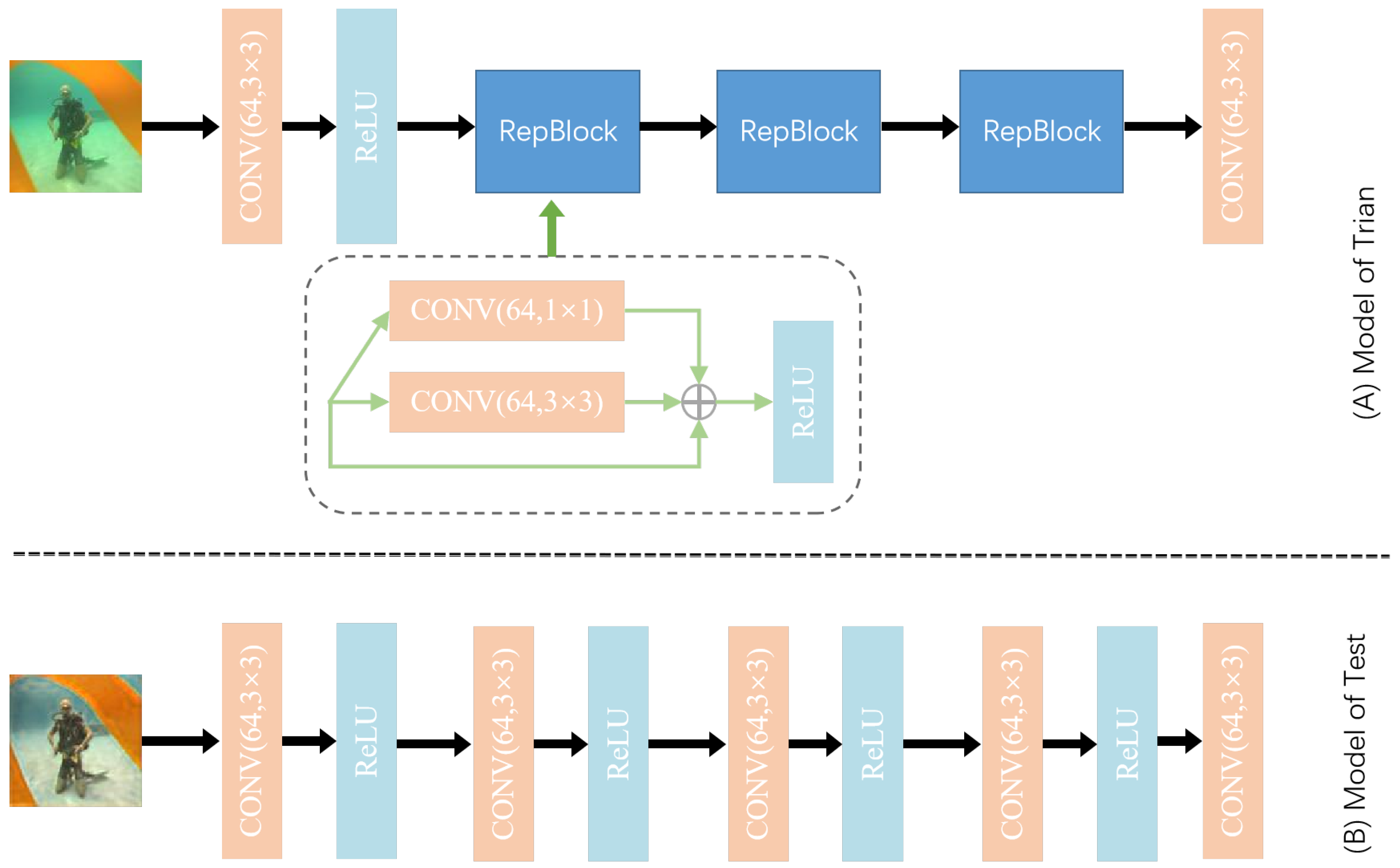

3.2. Reparameterization Underwater Image Enhancement

3.3. Loss Function

4. Experiments

4.1. Implementation Details

4.2. Test Datasets

4.3. Metrics

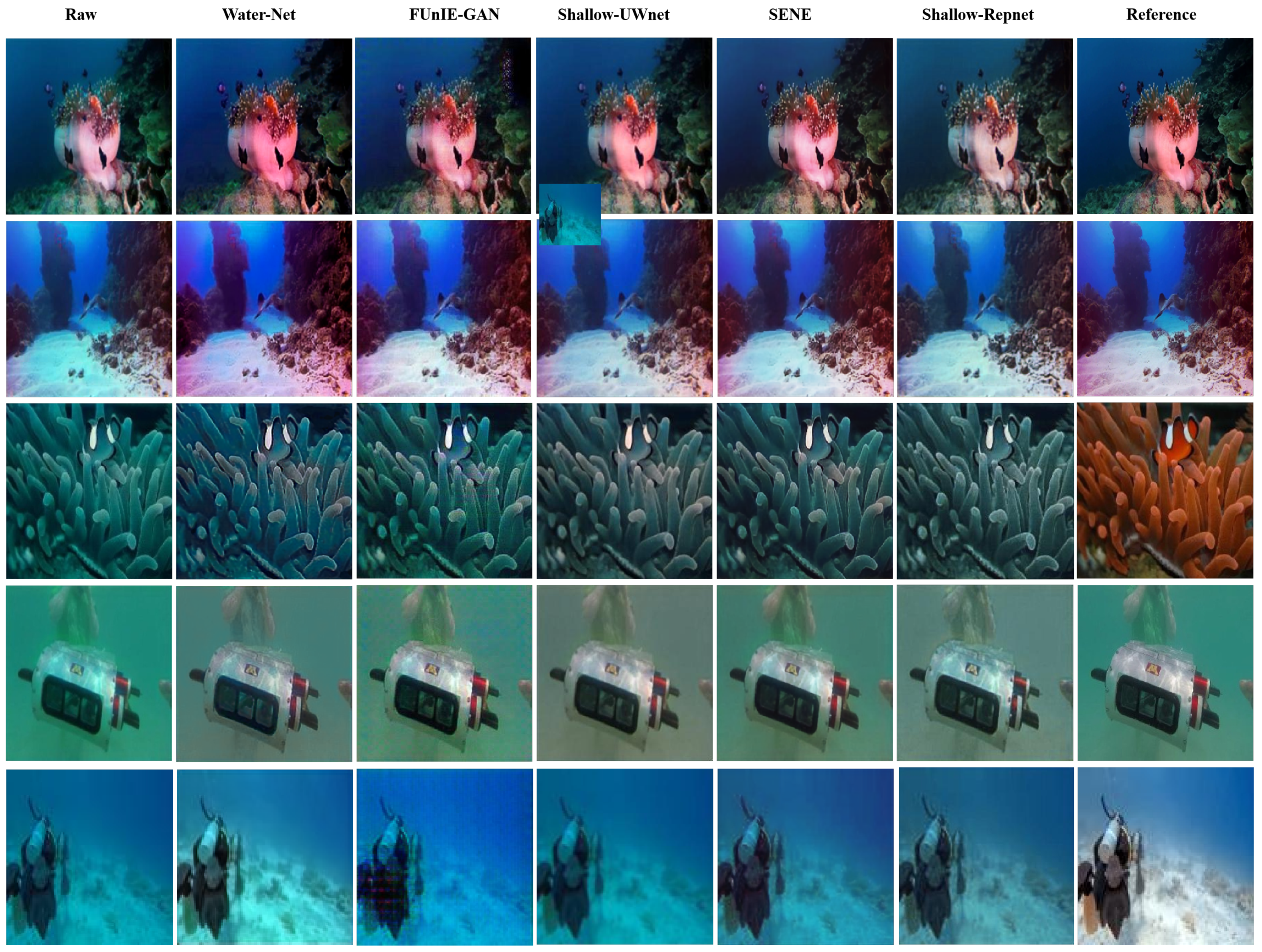

4.4. Comparison with Former Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 2017, 3, 387–394.

- Islam, M.J.; Ho, M.; Sattar, J. Understanding human motion and gestures for underwater human–robot collaboration. J. Field Robot. 2019, 36, 851–873.

- Du, X.; Hu, X.; Hu, J.; Sun, Z. An adaptive interactive multi-model navigation method based on UUV. Ocean Eng. 2022, 267, 113217.

- Schettini, R.; Corchs, S. Underwater image processing: State of the art of restoration and image enhancement methods. EURASIP J. Adv. Signal Process. 2010, 2010, 746052.

- Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88.

- Ghani, A.S.A.; Isa, N.A.M. Underwater image quality enhancement through integrated color model with Rayleigh distribution. Appl. Soft Comput. 2015, 27, 219–230.

- Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 7159–7165.

- Shortis, M.; Abdo, E.H.D. A review of underwater stereo-image measurement for marine biology and ecology applications. In Oceanography and Marine Biology; CRC Press: Boca Raton, FL, USA, 2016; pp. 269–304.

- Johnson, J.; Alahi, A.; Li, F. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II 14. Springer International Publishing: Cham, Switzerland, 2016; pp. 694–711.

- Mahendran, A.; Vedaldi, A. Understanding deep image representations by inverting them. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5188–5196.

- Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv 2013, arXiv:1312.6034.

- Yosinski, J.; Clune, J.; Nguyen, A.; Fuchs, T.; Lipson, H. Understanding neural networks through deep visualization. arXiv 2015, arXiv:1506.06579.

- Wu, Z.; Zhu, Z.; Du, J.; Bai, X. CCPL: Contrastive Coherence Preserving Loss for Versatile Style Transfer. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part XVI. Springer Nature: Cham, Switzerland, 2022; pp. 189–206.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets great again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742.

- Naik, A.; Swarnakar, A.; Mittal, K. Shallow-UWnet: Compressed model for underwater image enhancement (student abstract). Proc. AAAI Conf. Artif. Intell. 2021, 35, 15853–15854.

- Hou, M.; Liu, R.; Fan, X.; Luo, Z. Joint residual learning for underwater image enhancement. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4043–4047.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Campos, M.F.M. Underwater depth estimation and image restoration based on single images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.

- Li, C.; Guo, J.; Guo, C. Emerging from water: Underwater image color correction based on weakly supervised color transfer. IEEE Signal Process. Lett. 2018, 25, 323–327.

- Liu, C.; Wu, Q.; Wang, R.; Yang, W. Underwater Image Enhancement using a Residual Network. IEEE Trans. Image Process. 2018, 28, 6076–6087.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Liu, S.; Fan, H.; Lin, S.; Wang, Q.; Ding, N.; Tang, Y. Adaptive learning attention network for underwater image enhancement. IEEE Robot. Autom. Lett. 2022, 7, 5326–5333.

- Zhou, Y.; Yan, K.; Li, X. Underwater image enhancement via physical-feedback adversarial transfer learning. IEEE J. Ocean. Eng. 2021, 47, 76–87.

- Verma, G.; Kumar, M.; Raikwar, S. FCNN: Fusion-based underwater image enhancement using multilayer convolution neural network. J. Electron. Imaging 2022, 31, 063039.

- Liu, X.; Gao, Z.; Chen, B.M. MLFcGAN: Multilevel feature fusion-based conditional GAN for underwater image color correction. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1488–1492.

- Papamakarios, G.; Nalisnick, E.; Rezende, D.J.; Mohamed, S.; Lakshminarayanan, B. Normalizing flows for probabilistic modeling and inference. J. Mach. Learn. Res. 2021, 22, 2617–2680.

- Lugmayr, A.; Danelljan, M.; Van Gool, L.; Timofte, R. SRFlow: Learning the super-resolution space with normalizing flow. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part V 16. Springer International Publishing: Cham, Switzerland, 2020; pp. 715–732.

- Liang, J.; Lugmayr, A.; Zhang, K.; Danelljan, M.; Van Gool, L.; Timofte, R. Hierarchical conditional flow: A unified framework for image super-resolution and image rescaling. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4076–4085.

- Wang, Y.; Wan, R.; Yang, W.; Li, H.; Chau, L.P.; Kot, A. Low-light image enhancement with normalizing flow. Proc. AAAI Conf. Artif. Intell. 2022, 36, 2604–2612.

- Li, J.; Bian, S.; Zeng, A.; Wang, C.; Pang, B.; Liu, W.; Lu, C. Human pose regression with residual log-likelihood estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 11025–11034.

- Wu, L.; Tian, F.; Xia, Y.; Fan, Y.; Qin, T.; Jian-Huang, L.; Liu, T.Y. Learning to teach with dynamic loss functions. In Advances in Neural Information Processing Systems; Cornell University: Ithaca, NY, USA, 2018; Volume 31.

- Kalman, R.E.; Bucy, R.S. New results in linear filtering and prediction theory. J. Basic Eng. 1961, 83, 95–108.

- Cameron, K.W.; Sun, X.H. Quantifying locality effect in data access delay: Memory logP. In Proceedings of the International Parallel and Distributed Processing Symposium, Nice, France, 22–26 April 2003; 8p.

- Lam, M.D.; Rothberg, E.E.; Wolf, M.E. The cache performance and optimizations of blocked algorithms. ACM SIGOPS Oper. Syst. Rev. 1991, 25, 63–74.

- Wang, Z.; Liu, X.; Yang, J.; Michailidis, T.; Swanson, S.; Zhao, J. Characterizing and modeling non-volatile memory systems. In Proceedings of the 2020 53rd Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Athens, Greece, 17–21 October 2020; pp. 496–508.

- Sakr, M.F.; Levitan, S.P.; Chiarulli, D.M.; Horne, B.G.; Giles, C.L. Predicting multiprocessor memory access patterns with learning models. ICML 1997, 97, 305–312.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Islam, M.J.; Luo, P.; Sattar, J. Simultaneous enhancement and super-resolution of underwater imagery for improved visual perception. arXiv 2020, arXiv:2002.01155.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Korhonen, J.; You, J. Peak signal-to-noise ratio revisited: Is simple beautiful? In Proceedings of the 2012 Fourth International Workshop on Quality of Multimedia Experience, Melbourne, VIC, Australia, 5–7 July 2012; pp. 37–38.

- Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Cai, J.; Zhang, W.; Lin, W.; Liu, S.; Wang, Y. Deep self-example-based super-resolution for 489 underwater images. IEEE Trans. Image Process. 2019, 29, 3599–3612.

- He, Y.; Lin, J.; Liu, Z.; Wang, H.; Li, L.J.; Han, S. AMC: AutoML for model compression and acceleration on mobile devices. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 784–800.

| Metric | Dataset | Water-Net [21] | FUnIE-GAN [22] | Deep SESR [45] | Shallow-UWnet [15] | Shallow-RepNet |
|---|---|---|---|---|---|---|
| PSNR | EUVP-Dark | 24.43 ± 4.64 | 26.19 ± 2.87 | 25.30 ± 2.63 | 27.39 ± 2.70 | 24.49 ± 2.45 |
| PSNR | UFO-120 | 23.12 ± 3.31 | 24.72 ± 2.57 | 26.46 ± 3.13 | 25.20 ± 2.88 | 22.32 ± 2.42 |
| PSNR | UIEBD | 19.11 ± 3.68 | 19.13 ± 3.91 | 19.26 ± 3.56 | 18.99 ± 3.60 | 19.80 ± 2.76 |
| SSIM | EUVP-Dark | 0.82 ± 0.08 | 0.82 ± 0.08 | 0.81 ± 0.07 | 0.83 ± 0.07 | 0.79 ± 0.06 |
| SSIM | UFO-120 | 0.73 ± 0.07 | 0.74 ± 0.06 | 0.78 ± 0.07 | 0.78 ± 0.07 | 0.72 ± 0.07 |
| SSIM | UIEBD | 0.79 ± 0.09 | 0.73 ± 0.11 | 0.73 ± 0.11 | 0.67 ± 0.13 | 0.77 ± 0.08 |
| UIQM | EUVP-Dark | 2.97 ± 0.32 | 2.84 ± 0.46 | 2.95 ± 0.32 | 2.98 ± 0.38 | 2.82 ± 0.29 |
| UIQM | UFO-120 | 2.94 ± 0.38 | 2.88 ± 0.41 | 2.98 ± 0.37 | 2.85 ± 0.37 | 2.98 ± 0.33 |
| UIQM | UIEBD | 3.02 ± 0.34 | 2.99 ± 0.39 | 2.95 ± 0.39 | 2.77 ± 0.43 | 2.79 ± 0.32 |
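
The entries in the comparison table are given as mean ± standard deviation over a test set. The following is a minimal sketch of how one such PSNR entry could be computed. It assumes 8-bit images (peak value 255) and the population standard deviation; neither choice is confirmed by the source, and the function names `psnr` and `format_entry` are hypothetical.

```python
import numpy as np


def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images.

    max_value=255 assumes 8-bit images (an assumption, not from the source).
    """
    reference = np.asarray(reference, dtype=np.float64)
    enhanced = np.asarray(enhanced, dtype=np.float64)
    mse = np.mean((reference - enhanced) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


def format_entry(per_image_scores):
    """Format per-image scores as 'mean ± std' (population std assumed)."""
    scores = np.asarray(per_image_scores, dtype=np.float64)
    return f"{scores.mean():.2f} ± {scores.std():.2f}"


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-ins for (reference, enhanced) image pairs.
    pairs = []
    for _ in range(5):
        ref = rng.integers(0, 256, size=(32, 32, 3))
        noisy = np.clip(ref + rng.normal(0, 10, size=ref.shape), 0, 255)
        pairs.append((ref, noisy))
    print(format_entry([psnr(r, e) for r, e in pairs]))
```

Images with zero error yield an infinite PSNR, so a real evaluation script would need to decide how to treat such cases before averaging.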

| Method | SSIM (↑) | PSNR (↑) |
|---|---|---|
| Shallow-UWnet | 0.65 ± 0.09 | 15.81 ± 2.79 |
| Shallow-RepNet+BN | 0.74 ± 0.09 | 18.05 ± 2.73 |
| Shallow-RepNet+BN+ | 0.76 ± 0.07 | 19.80 ± 2.93 |

| Model | #Parameters | Compression Ratio | Testing Time per Image (s) | Speed-Up |
|---|---|---|---|---|
| Shallow-RepNet (Train) | 127,555 | - | - | - |
| Shallow-RepNet (Test) | 114,307 | 0.9 | 0.0039 | 0.7 |
| Shallow-UWnet | 219,840 | 1 | 0.02 | 1 |
| Water-Net | 1,090,668 | 3.96 | 0.50 | 24 |
| Deep SESR | 2,454,023 | 10.17 | 0.16 | 7 |
| FUnIE-GAN | 4,212,707 | 18.17 | 0.18 | 8 |
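
The separate train-time and test-time parameter counts for Shallow-RepNet are what one would expect from structural reparameterization in the style of RepVGG [14], where parallel branches used during training are merged into a single convolution for inference. The sketch below illustrates that general idea only; it is not taken from the paper. It assumes a block with a 3×3 branch, a 1×1 branch, and an identity branch, no batch normalization, stride 1, and equal input and output channel counts. The names `conv2d_same` and `merge_branches` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_same(x, w):
    """Stride-1, zero-padded cross-correlation.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    # windows has shape (C_in, H, W, k, k)
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))
    return np.einsum("ocuv,chwuv->ohw", w, windows)


def merge_branches(w3, w1):
    """Fold a 1x1 branch and an identity branch into one 3x3 kernel.

    Assumes C_in == C_out so that the identity branch is well defined.
    """
    c_out, c_in = w3.shape[:2]
    assert c_out == c_in, "identity branch needs matching channel counts"
    merged = w3.copy()
    merged[:, :, 1, 1] += w1[:, :, 0, 0]      # 1x1 kernel sits at the centre tap
    idx = np.arange(c_out)
    merged[idx, idx, 1, 1] += 1.0              # identity = centre tap of 1 per channel
    return merged


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 8, 8))
    w3 = rng.normal(size=(4, 4, 3, 3))
    w1 = rng.normal(size=(4, 4, 1, 1))

    train_out = conv2d_same(x, w3) + conv2d_same(x, w1) + x   # three branches
    test_out = conv2d_same(x, merge_branches(w3, w1))         # one merged conv

    print(np.allclose(train_out, test_out))
    print(w3.size + w1.size, "->", merge_branches(w3, w1).size)
```

Because convolution is linear, the merged kernel reproduces the sum of the three branches while storing fewer weights. How the paper's own blocks are structured, and whether batch normalization is folded in the same step, is not recoverable from the text here.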

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, J.; Zhuang, J.; Zheng, Y.; Li, J. Area Contrast Distribution Loss for Underwater Image Enhancement. J. Mar. Sci. Eng. 2023, 11, 909. https://doi.org/10.3390/jmse11050909
