Fusion2Fusion: An Infrared–Visible Image Fusion Algorithm for Surface Water Environments

Abstract

1. Introduction

2. Related Work

3. Proposed Fusion Method

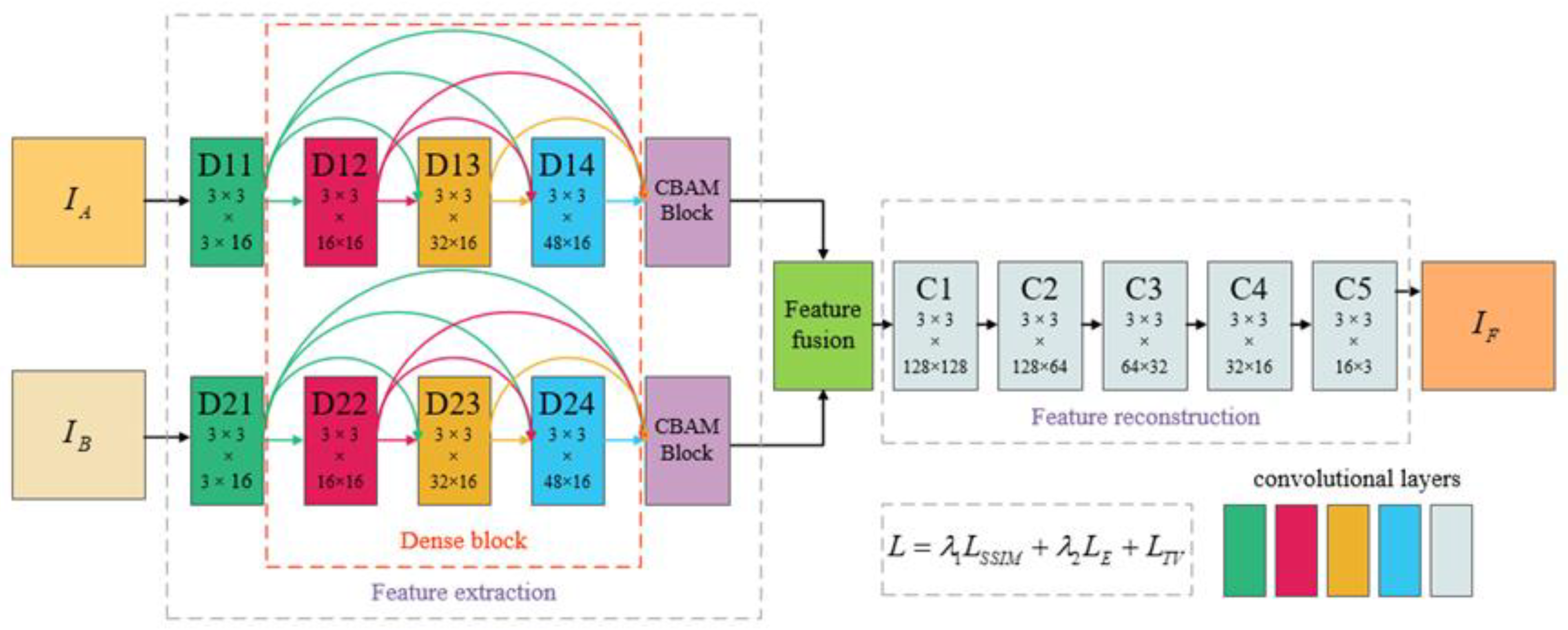

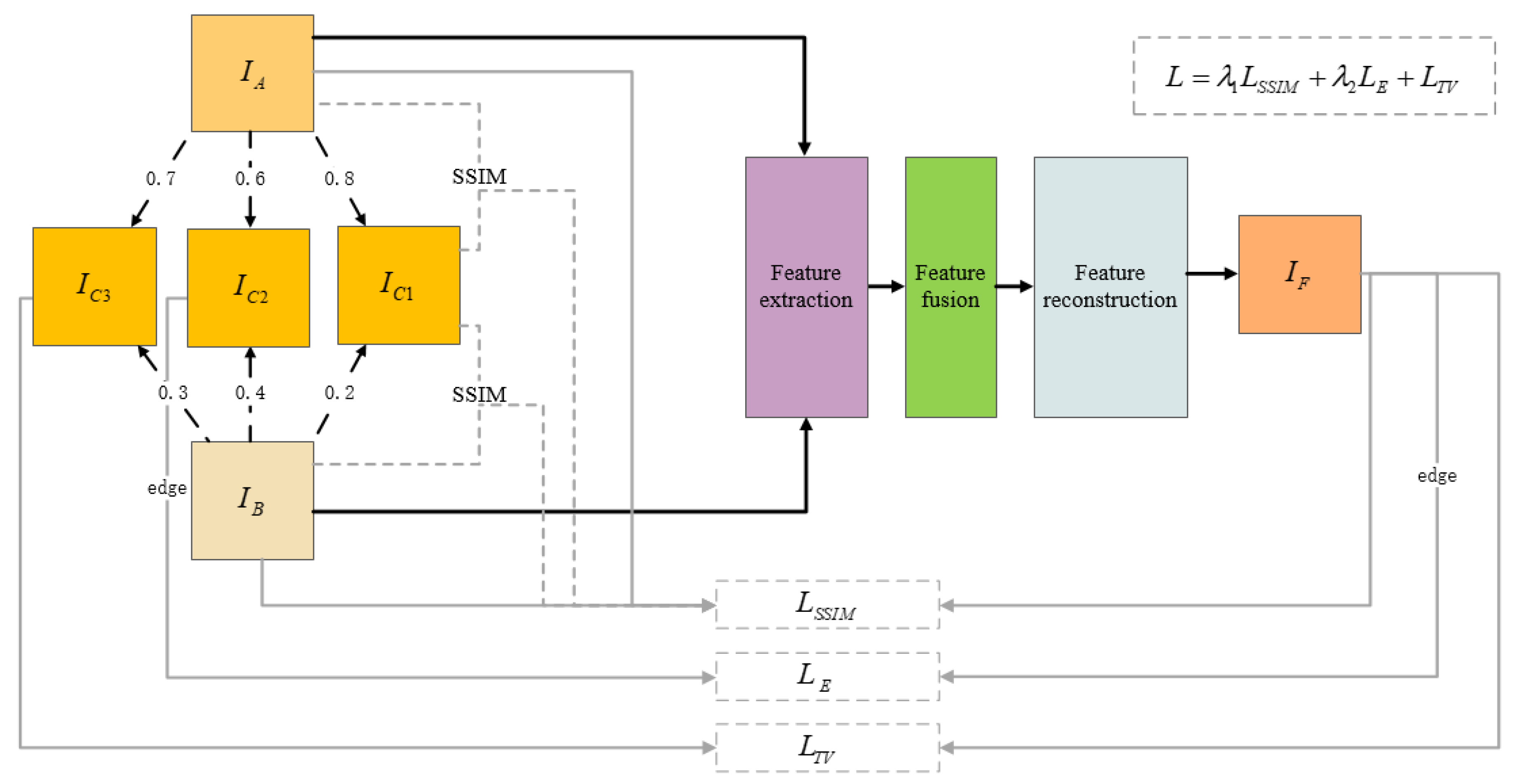

3.1. Network Architecture

3.2. Loss Function

3.3. Training

4. Experimental Results and Analysis

4.1. Experimental Setting

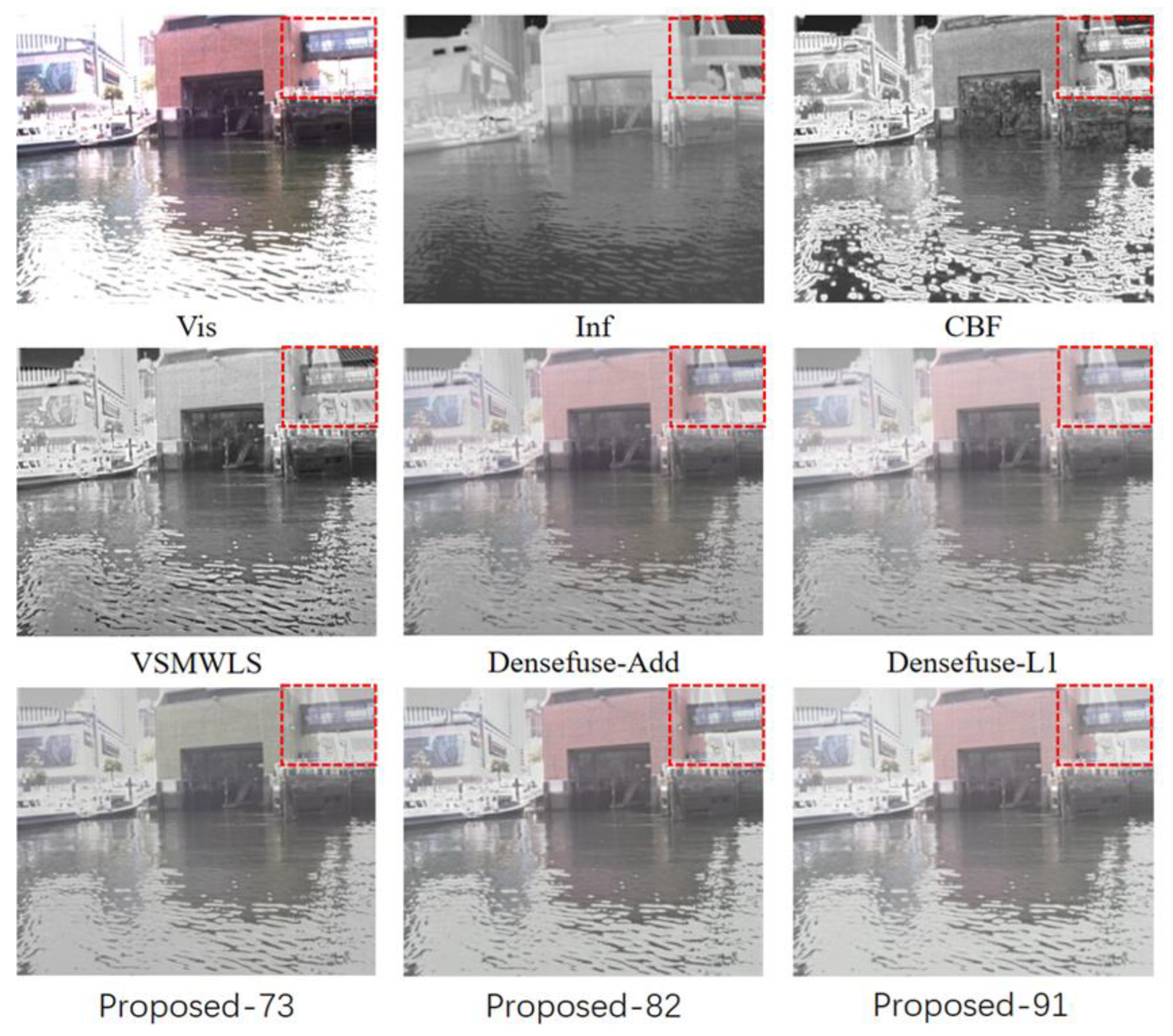

4.2. Fusion Method Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Methods | Q^AB/F | SCD | SSIM | MS-SSIM | EN | Q_NCIE |
|---|---|---|---|---|---|---|
| CBF | 0.361383 | 0.6890 | 0.591628 | 0.744972 | 7.626591 | 0.810328431 |
| VSMWLS | 0.361481 | 1.4520 | 0.683922 | 0.831989 | 7.366922 | 0.810889691 |
| DenseFuse-Add | 0.373791 | 1.2658 | 0.729981 | 0.845292 | 6.965533 | 0.811113586 |
| DenseFuse-L1 | 0.371051 | 1.0967 | 0.715435 | 0.841639 | 6.847064 | 0.811168666 |
| Ours-91 | 0.397623 | 1.2879 | 0.712871 | 0.855669 | 6.790240 | 0.818237040 |
| Ours-82 | 0.400324 | 1.2267 | 0.714010 | 0.868377 | 6.848320 | 0.817008603 |
| Ours-73 | 0.369366 | 1.2059 | 0.715949 | 0.846516 | 6.827703 | 0.817501251 |
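
The EN column of the table is commonly read as the Shannon entropy of the fused image's grey-level histogram, in bits. The exact formula used for this table is not stated here, so the sketch below is an assumption and not the authors' evaluation code. The function name `image_entropy` and the nested-list image layout are illustrative choices.

```python
import math
from collections import Counter
from typing import Sequence


def image_entropy(image: Sequence[Sequence[int]]) -> float:
    """Shannon entropy (bits) of the grey-level histogram of a 2D image.

    `image` is a hypothetical row-major grid of integer grey levels,
    for example values in 0..255 for an 8-bit fused image.
    """
    # Histogram: how often each grey level occurs.
    counts = Counter(pixel for row in image for pixel in row)
    total = sum(counts.values())
    if total == 0:
        return 0.0  # an empty image carries no information
    # Only grey levels that occur are in `counts`, so log2 never sees zero.
    entropy = -sum((n / total) * math.log2(n / total) for n in counts.values())
    return entropy + 0.0  # normalise -0.0 to 0.0 for constant images


# A constant image has zero entropy; an image that uses all 256 grey
# levels equally often reaches the 8-bit maximum.
flat = [[128] * 16 for _ in range(16)]
ramp = [[16 * r + c for c in range(16)] for r in range(16)]
print(image_entropy(flat), image_entropy(ramp))
```

Under this reading, a higher EN value means a more spread-out histogram. That alone does not distinguish useful detail from noise, which is one reason EN is reported alongside the structural metrics in the table.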

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lu, C.; Qin, H.; Deng, Z.; Zhu, Z. Fusion2Fusion: An Infrared–Visible Image Fusion Algorithm for Surface Water Environments. J. Mar. Sci. Eng. 2023, 11, 902. https://doi.org/10.3390/jmse11050902
