An Intelligent Algorithm for USVs Collision Avoidance Based on Deep Reinforcement Learning Approach with Navigation Characteristics

Abstract

1. Introduction

- (1) In many studies, the training environment is fixed in every episode, which limits practical significance regardless of how complex the environment is.

- (2) Most researchers do not test with multiple random seeds to verify the superiority and reliability of their algorithms.

- (3) Some researchers do not adequately consider the maneuverability characteristics of USVs.

- (1) This paper considers the restriction of maneuverability and the International Regulations for Preventing Collisions at Sea (COLREGs) in the training process. A suitable training environment with stochasticity and complexity is designed based on the deep reinforcement learning approach. Additionally, a detailed reward signal that covers the factors involved in the collision avoidance process is constructed for USV training, which makes training more practical.

- (2) The double Q learning method is used to reduce overestimation, the dueling neural network architecture to improve the training effect, and prioritized experience replay to optimize sampling. The results of the various improvements are compared analytically in a rich training environment using multiple random seeds.

- (3) To address the hard-exploration problem caused by the strong randomness of the training environment, the noisy network method is introduced, which enhances the exploration capability. The best way of adding noise in USV collision avoidance training is confirmed experimentally. Considering the characteristics of the USV collision avoidance problem, a dynamic area restriction is introduced in training to reduce calculation, and the neural network state input is clipped to improve the training effect.
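The noisy network idea in contribution (3) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the class name `NoisyLinear`, the independent Gaussian noise scheme, the initialization, and the default `sigma_init` are all assumptions made for illustration. The layer adds a noise term with its own scale to every weight and bias, so exploration comes from the perturbed action values.

```python
import numpy as np


class NoisyLinear:
    """Linear layer whose weights and biases are perturbed by Gaussian noise.

    Hypothetical illustration:
        y = (mu_w + sigma_w * eps_w) @ x + (mu_b + sigma_b * eps_b)
    with independent standard-normal noise eps that is resampled on request.
    """

    def __init__(self, in_features, out_features, sigma_init=0.1, seed=0):
        self.rng = np.random.default_rng(seed)
        bound = 1.0 / np.sqrt(in_features)
        # Means (mu) and noise scales (sigma); training would update both.
        self.mu_w = self.rng.uniform(-bound, bound, (out_features, in_features))
        self.mu_b = self.rng.uniform(-bound, bound, out_features)
        self.sigma_w = np.full((out_features, in_features), sigma_init)
        self.sigma_b = np.full(out_features, sigma_init)
        self.resample_noise()

    def resample_noise(self):
        """Draw fresh noise for the weights and biases."""
        self.eps_w = self.rng.standard_normal(self.mu_w.shape)
        self.eps_b = self.rng.standard_normal(self.mu_b.shape)

    def forward(self, x, noisy=True):
        """Apply the layer to a state vector x, with or without the noise term."""
        w, b = self.mu_w, self.mu_b
        if noisy:
            w = w + self.sigma_w * self.eps_w
            b = b + self.sigma_b * self.eps_b
        return w @ x + b


layer = NoisyLinear(in_features=4, out_features=3)
state = np.array([0.5, -0.2, 0.1, 0.9])      # made-up state vector
q_noisy = layer.forward(state)               # perturbed action values
q_mean = layer.forward(state, noisy=False)   # deterministic action values
```

Calling `forward(..., noisy=False)` gives the noise-free output, which is one common choice at evaluation time; whether the paper evaluates with or without noise is not stated here.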

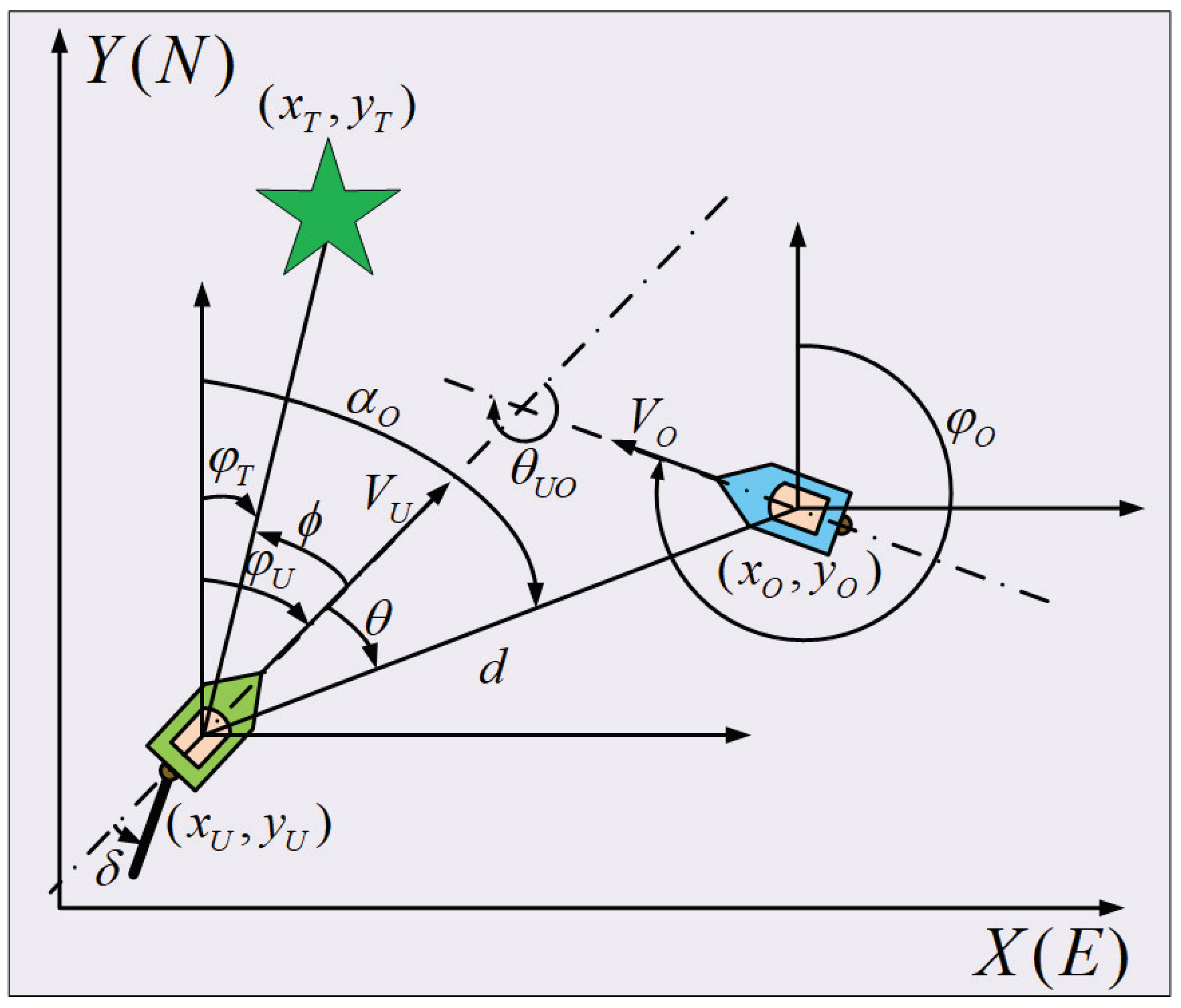

2. USV Collision Avoidance Parameters

2.1. USV Collision Avoidance Characteristics

- (1) As shown in Figure 2a, when the relative azimuth between the obstacle USV and our own USV falls in the head-on sector, it is the head-on encounter situation. According to the COLREGs, when there is a risk of collision, both USVs must give way: each should turn to starboard so that they pass port side to port side.

- (2) As shown in Figure 2b, when the relative azimuth falls in the corresponding sector and there is a risk of collision, it is the crossing-stand-on encounter situation. Our own USV should stand on its course, and the obstacle USV should turn to starboard.

- (3) As shown in Figure 2c, when the relative azimuth falls in the corresponding sector and there is a risk of collision, it is the crossing-give-way encounter situation. Our own USV should turn to starboard, and the obstacle USV should stand on its course.

- (4) As shown in Figure 2d, when the relative azimuth falls in the corresponding sector and there is a risk of collision, it is the overtaking encounter situation. The overtaking USV should avoid the collision, and a turn to either starboard or port is allowed.

- (5) As shown in Figure 2e, when the relative azimuth falls in the corresponding sector and there is a risk of collision, it is the overtaking encounter situation. The USV being overtaken should stand on its course.

- (6) Additionally, when the obstacle USV is in breach of the rules, our own USV should avoid it proactively.
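The encounter situations above are distinguished by relative azimuth. The sketch below shows one way to compute that angle and test it against a sector. The function names, the coordinate convention (x east, y north, heading clockwise from north), and the sector limits in the usage lines are assumptions for illustration only; the sector limits are placeholders, not the values used in the paper.

```python
import math


def relative_azimuth(own_x, own_y, own_heading_deg, obs_x, obs_y):
    """Bearing of the obstacle relative to the own USV's bow, in [0, 360).

    Assumes x points east, y points north, and headings are measured
    clockwise from north (a hypothetical convention for this sketch).
    """
    true_bearing = math.degrees(math.atan2(obs_x - own_x, obs_y - own_y))
    return (true_bearing - own_heading_deg) % 360.0


def in_sector(angle_deg, start_deg, end_deg):
    """True if the angle lies in the clockwise sector [start, end].

    Sectors that wrap past 0 degrees (for example 340 to 20) are supported.
    """
    angle = angle_deg % 360.0
    start = start_deg % 360.0
    end = end_deg % 360.0
    if start <= end:
        return start <= angle <= end
    return angle >= start or angle <= end


# Own USV at the origin heading north; obstacle due east (starboard beam).
azimuth = relative_azimuth(0.0, 0.0, 0.0, 100.0, 0.0)

# Placeholder sector limits, chosen only to exercise the wrap-around case.
ahead = in_sector(azimuth, 340.0, 20.0)
starboard_side = in_sector(azimuth, 20.0, 120.0)
```

An encounter classifier would call `in_sector` once per COLREGs situation with the sector limits defined in the paper, combined with a separate collision-risk check.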

2.2. USV Collision Avoidance Characteristics

3. Deep Reinforcement Learning

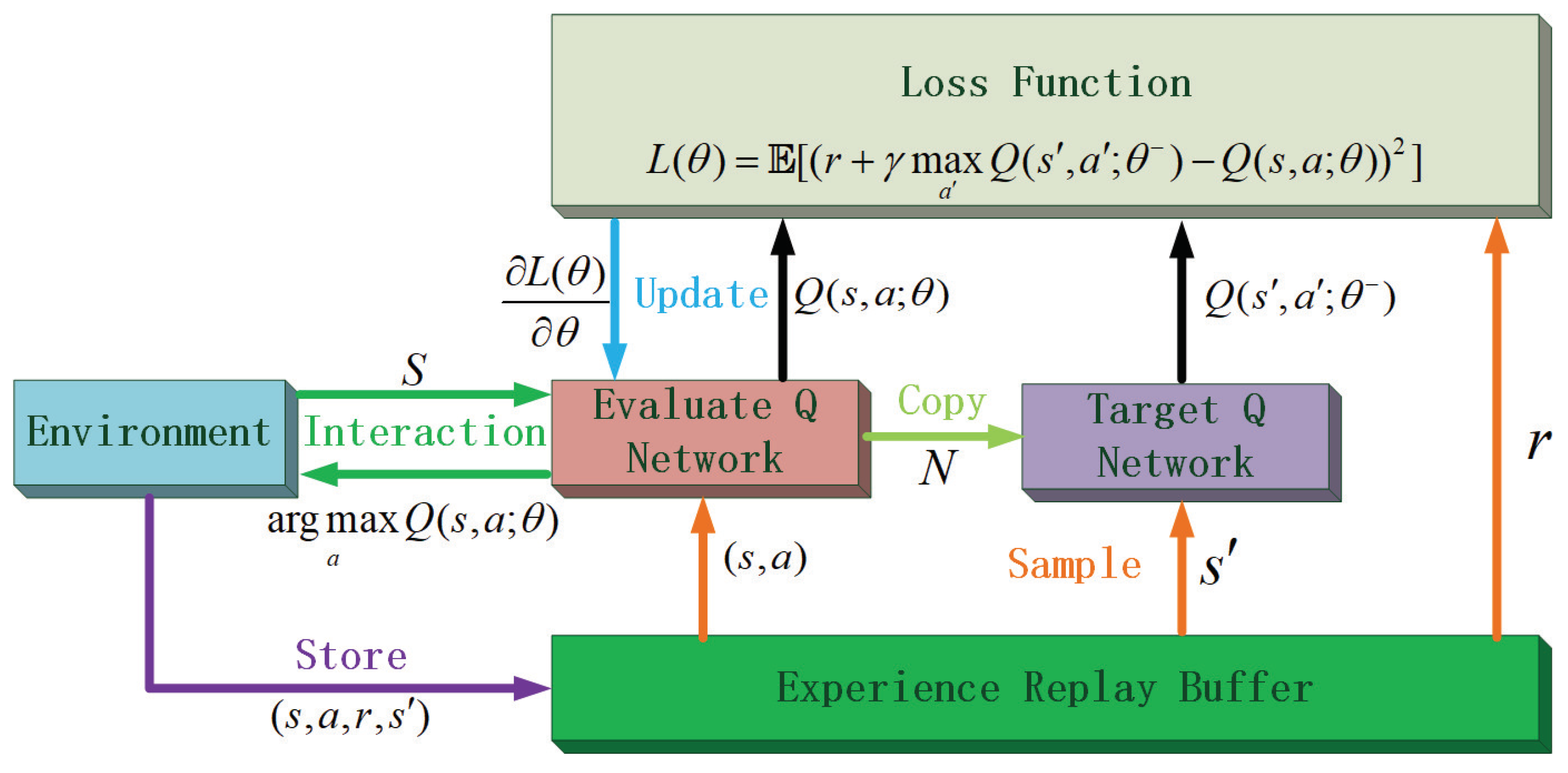

3.1. Deep Q Learning

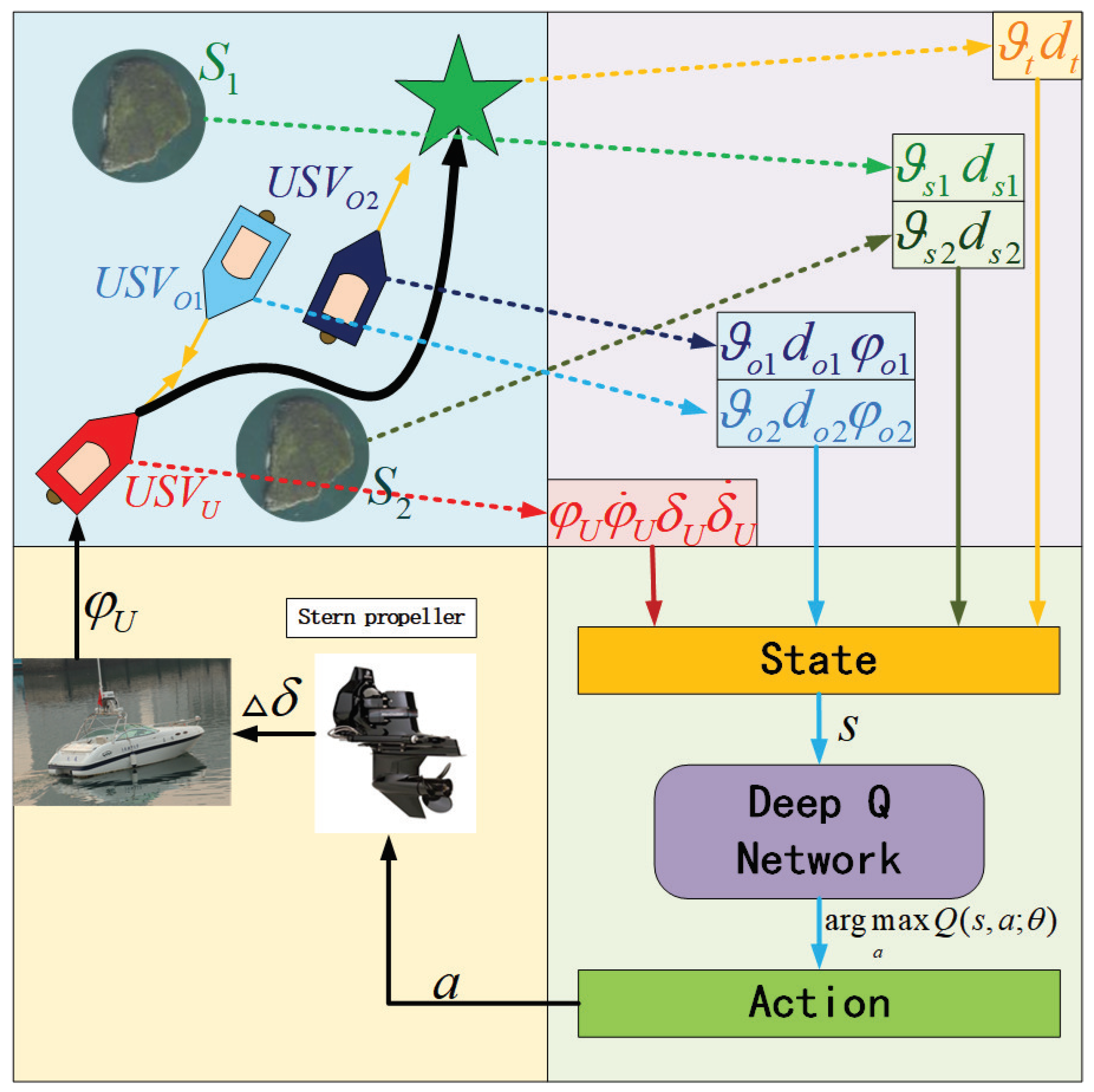

3.2. Collision Avoidance Algorithm for USV

- (1) The reward for goal

  - (a) Terminal reward: The terminal is the end point of USV navigation in each training episode. The terminal reward encourages reaching it and affects the whole training process through bootstrapping. When the arrival condition is met, the USV is considered to have reached the terminal and receives the terminal reward.

  - (b) Collision reward: Avoiding obstacles is another important goal in training. Punishing collisions teaches the trained USV to keep a safe distance from obstacles. When either of the two obstacle-USV collision conditions is met, our USV is considered to have collided with the obstacle USV and receives the corresponding collision reward. When the static-obstacle collision condition is met, our USV is considered to have collided with the static obstacle and receives the corresponding collision reward.

  - (c) COLREGs reward: The COLREGs constrain USV behavior. Integrating them into the training process as a reward signal gives the trained USV agent rule-compliant avoidance behavior. When our USV breaks the COLREGs, a penalty is obtained; the more dangerous the moment of the breach, the higher the penalty. Under the conditions in which our USV may go straight or turn left or right, the designed reward signal is 0.

  - (d) Seamanship reward: When there are no obstacles or no duty to give way, our USV should keep straight as far as possible. The seamanship reward is therefore designed to restrain the navigation behavior of the USV under these conditions.

- (2) Guiding reward: The guiding reward enriches the reward signal in the training environment and avoids the training difficulty caused by sparse rewards.

  - (a) Course reward: A course that points more toward the terminal is considered a better state, and the course reward signal is designed accordingly, with a critical value that separates positive from negative reward.

  - (b) Course better reward: The agent's behavior is positive if an action makes the course point more toward the terminal, and the course better reward signal is designed accordingly.

The complete reward signal function combines the reward components above. After designing the state space, action space, and reward signal, the training system is complete. Figure 5 shows the complete training architecture. At each step, the state information is input into the neural network, which outputs the value of every action based on the current network parameters and state. The selected action is then obtained and executed, which updates the environment.
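The step from state to action values to selected action can be sketched as follows. This is an illustrative NumPy example rather than the paper's network: the layer sizes, the random weights, and the function names are assumptions, and the output head uses the dueling aggregation named in Section 4.2, where a state-value stream and an advantage stream are combined into one value per action.

```python
import numpy as np


def dueling_q_values(features, w_value, b_value, w_adv, b_adv):
    """Combine a state-value stream and an advantage stream into Q-values.

    Q(s, a) = V(s) + A(s, a) - mean_a A(s, a)
    """
    value = features @ w_value + b_value       # shape (1,)
    advantage = features @ w_adv + b_adv       # shape (n_actions,)
    return value + advantage - advantage.mean()


def select_action(q_values):
    """Greedy choice: index of the action with the largest estimated value."""
    return int(np.argmax(q_values))


rng = np.random.default_rng(0)
n_features, n_actions = 8, 5                   # hypothetical sizes
features = rng.standard_normal(n_features)     # stand-in for hidden-layer output
w_value = rng.standard_normal((n_features, 1))
b_value = np.zeros(1)
w_adv = rng.standard_normal((n_features, n_actions))
b_adv = np.zeros(n_actions)

q = dueling_q_values(features, w_value, b_value, w_adv, b_adv)
action = select_action(q)                      # index of the rudder command to execute
```

Subtracting the mean advantage makes the decomposition identifiable: the mean of the resulting Q-values equals the state value, so the two streams cannot trade a constant offset between them.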

4. Improvement for USV Collision Avoidance Algorithm

4.1. Double DQN

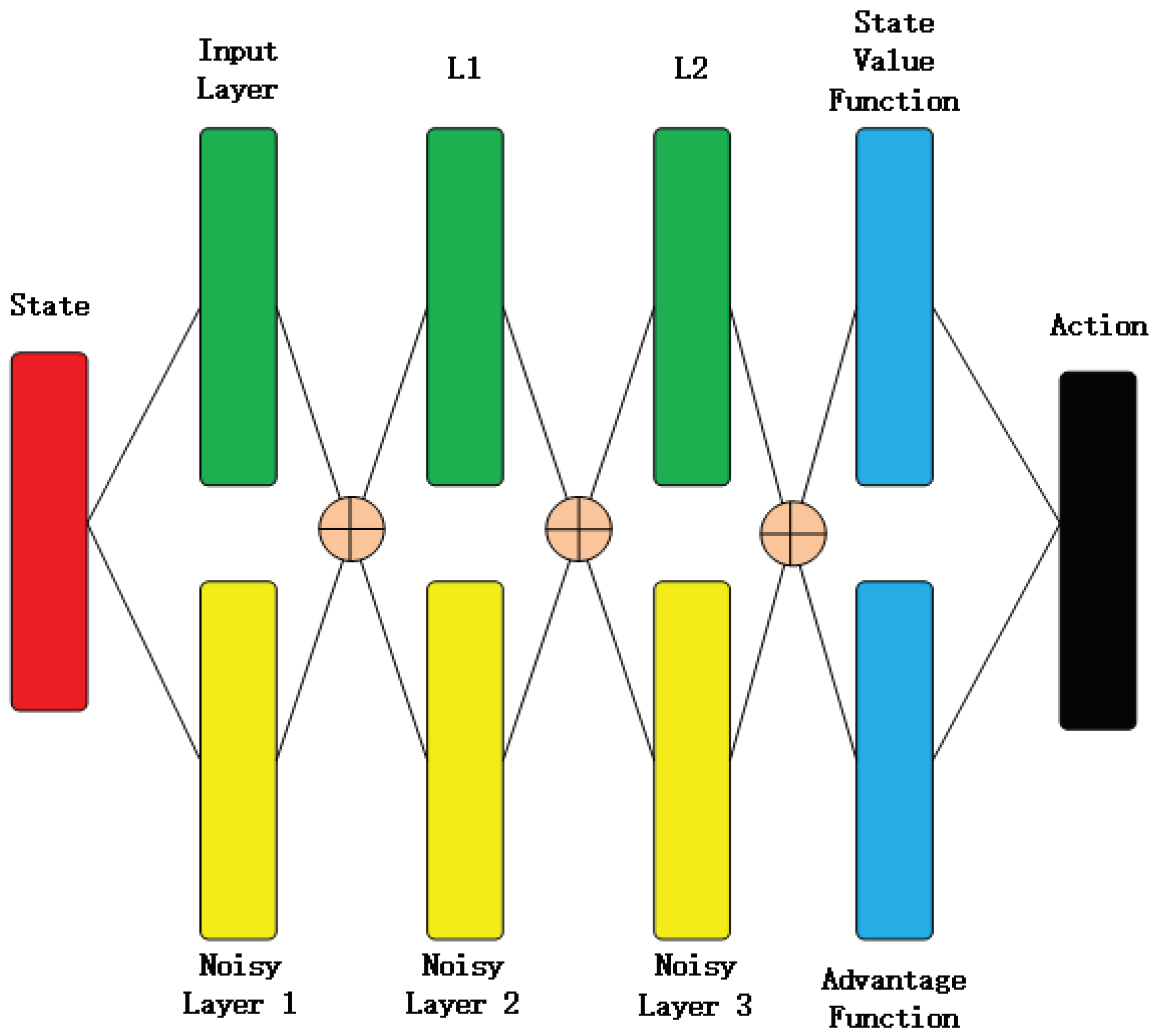

4.2. Dueling DQN

4.3. Prioritized Experience Replay

4.4. Noisy Network

4.5. Improvements with USV Characteristics

Algorithm 1 DRLCA algorithm code

- Initialize the USV training environment
- Initialize experience replay buffer H with capacity C
- Initialize the evaluation neural network
- Initialize the target network
- Initialize the variance of the noise in k
- For episode = 1, n do
  - Initialize the initial states of each USV and the static obstacles
  - Initialize the speed of our USV and obtain the ship domain size r in the current episode
  - While true
    - Update the USV collision avoidance training environment
    - Generate and get the noise parameters
    - Select the action
    - Change the rudder angle by executing the action in the environment, and obtain the next state
    - Obtain the collision avoidance reward signal
    - Store the current transition in experience replay buffer H
    - Assign the current transition the highest priority
    - Sample a random minibatch of transitions from H for learning, according to the priority of each transition
    - Calculate the importance sampling weight (ISW) for each transition in the minibatch
    - Calculate the TD-error and obtain the new priority
    - Using gradient descent with the ISW, update the evaluation network parameters
    - Update the priority for all samples
    - If it is a target network updating step
      - Update the target network weights
    - End if
    - Increase the step counter by 1
  - End while
- End for
- Return the weights of the target network
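The sampling and TD-error steps of Algorithm 1 can be sketched in NumPy as below. This is a minimal illustration under stated assumptions, not the authors' code: it uses proportional prioritization and the Double DQN target as described in the cited literature, the function names are hypothetical, the exponents `alpha` and `beta` are placeholder values, and the Q-value arrays are random stand-ins for network outputs.

```python
import numpy as np


def per_sample(priorities, batch_size, alpha, beta, rng):
    """Proportional prioritized sampling with importance-sampling weights.

    P(i) = p_i^alpha / sum_k p_k^alpha
    w_i  = (N * P(i))^(-beta), normalized by the largest weight in the batch
    """
    scaled = priorities ** alpha
    probs = scaled / scaled.sum()
    idx = rng.choice(len(priorities), size=batch_size, p=probs)
    weights = (len(priorities) * probs[idx]) ** (-beta)
    return idx, weights / weights.max()


def double_dqn_td_error(q_eval, q_eval_next, q_target_next,
                        actions, rewards, dones, gamma):
    """TD-error with the Double DQN target.

    The evaluation network picks the next action; the target network scores it.
    """
    rows = np.arange(len(actions))
    best_next = q_eval_next.argmax(axis=1)
    bootstrap = q_target_next[rows, best_next]
    target = rewards + gamma * (1.0 - dones) * bootstrap
    return target - q_eval[rows, actions]


rng = np.random.default_rng(0)
n_stored, n_actions, batch = 32, 3, 8          # hypothetical sizes
priorities = np.ones(n_stored)
priorities[-1] = 10.0                          # newest transition: highest priority

idx, isw = per_sample(priorities, batch, alpha=0.6, beta=0.4, rng=rng)

# Random stand-ins for the network outputs on the sampled minibatch.
q_eval = rng.standard_normal((batch, n_actions))
q_eval_next = rng.standard_normal((batch, n_actions))
q_target_next = rng.standard_normal((batch, n_actions))
actions = rng.integers(0, n_actions, size=batch)
rewards = rng.standard_normal(batch)
dones = np.zeros(batch)

td = double_dqn_td_error(q_eval, q_eval_next, q_target_next,
                         actions, rewards, dones, gamma=0.99)
loss = np.mean(isw * td ** 2)                  # ISW-weighted squared TD-error
priorities[idx] = np.abs(td) + 1e-6            # refresh priorities of sampled items
```

The small constant added to the new priorities keeps every transition sampleable even when its TD-error is zero.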

5. Experiments

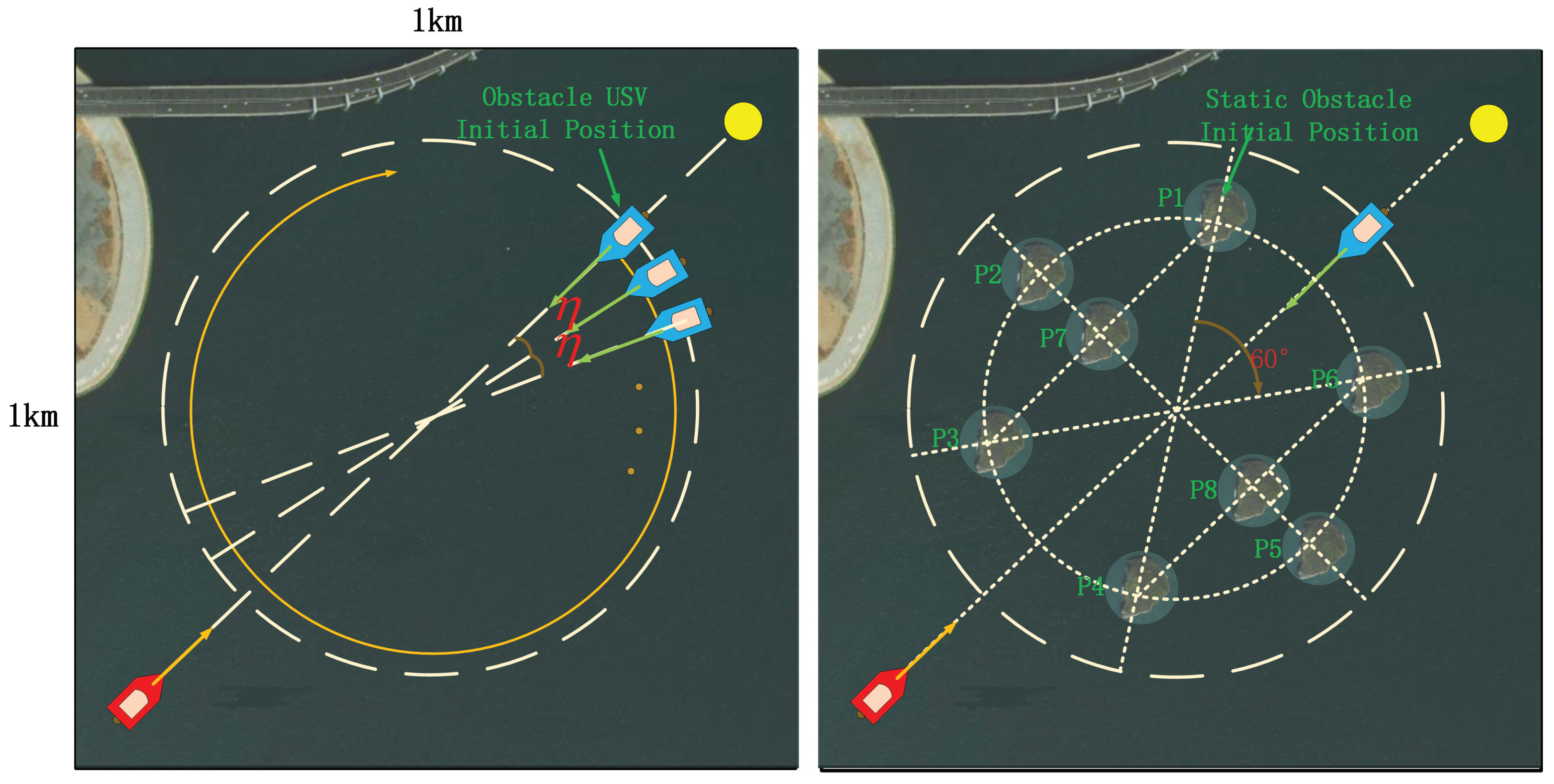

5.1. Training Environment

5.2. Framework for Training

5.3. Training

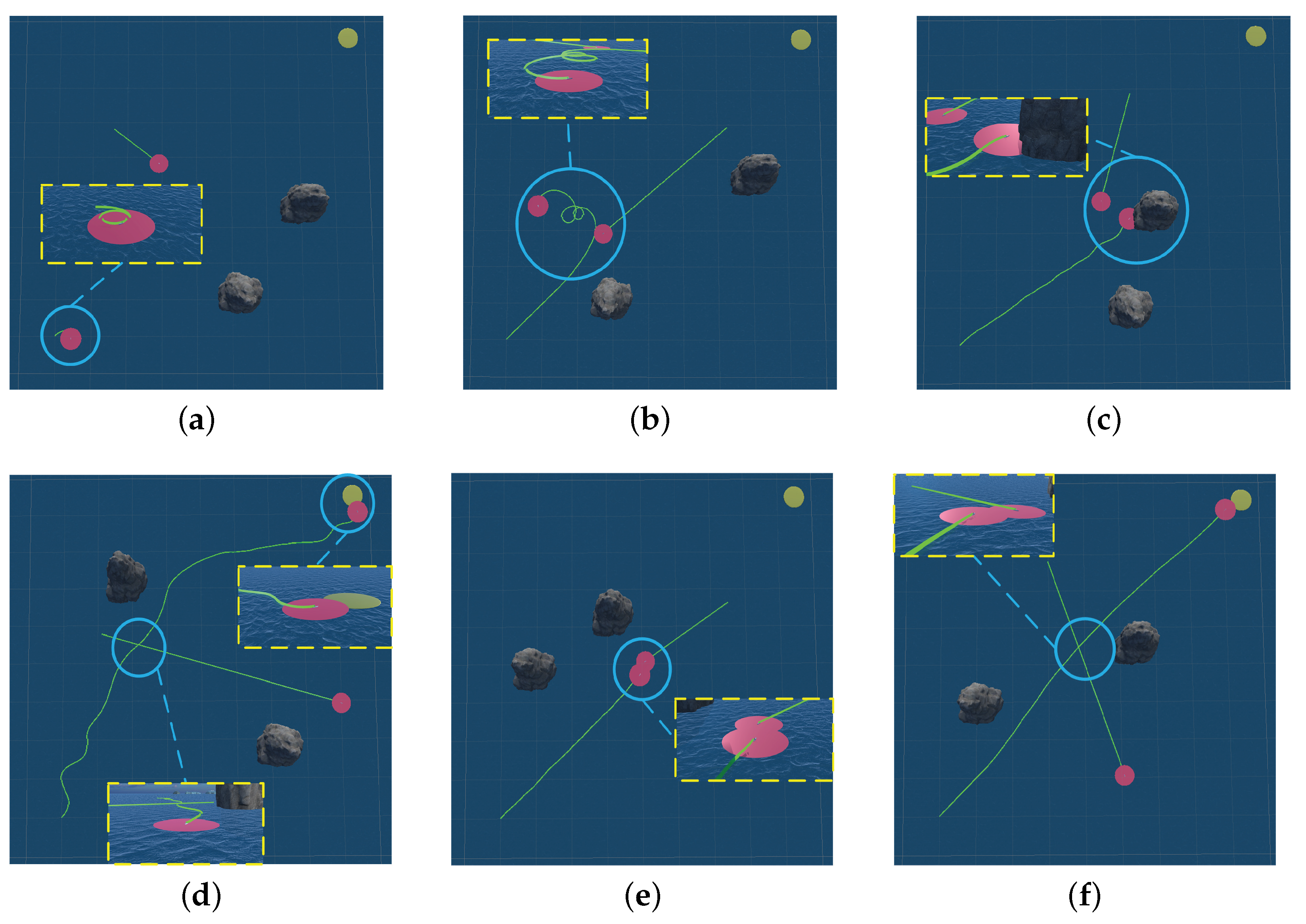

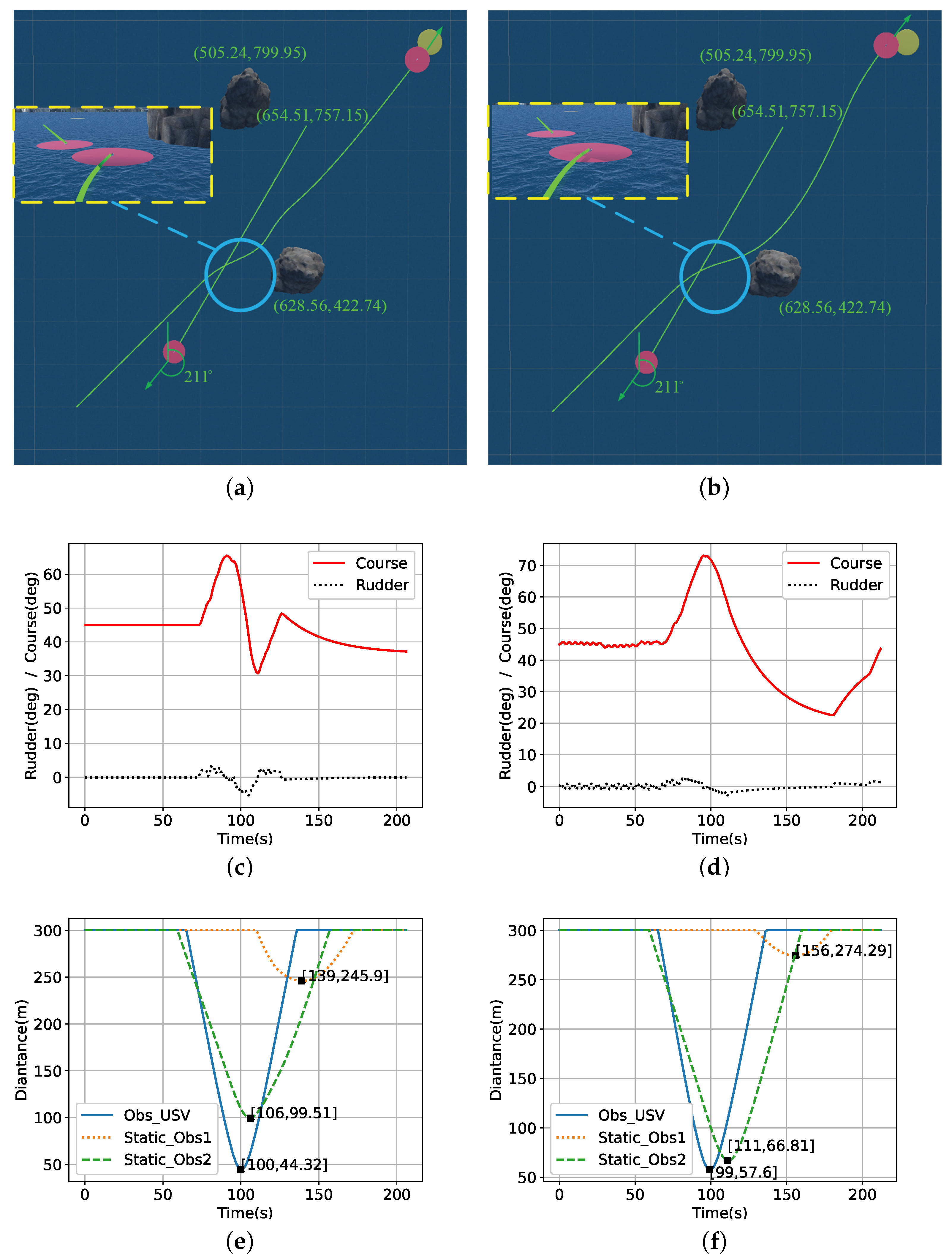

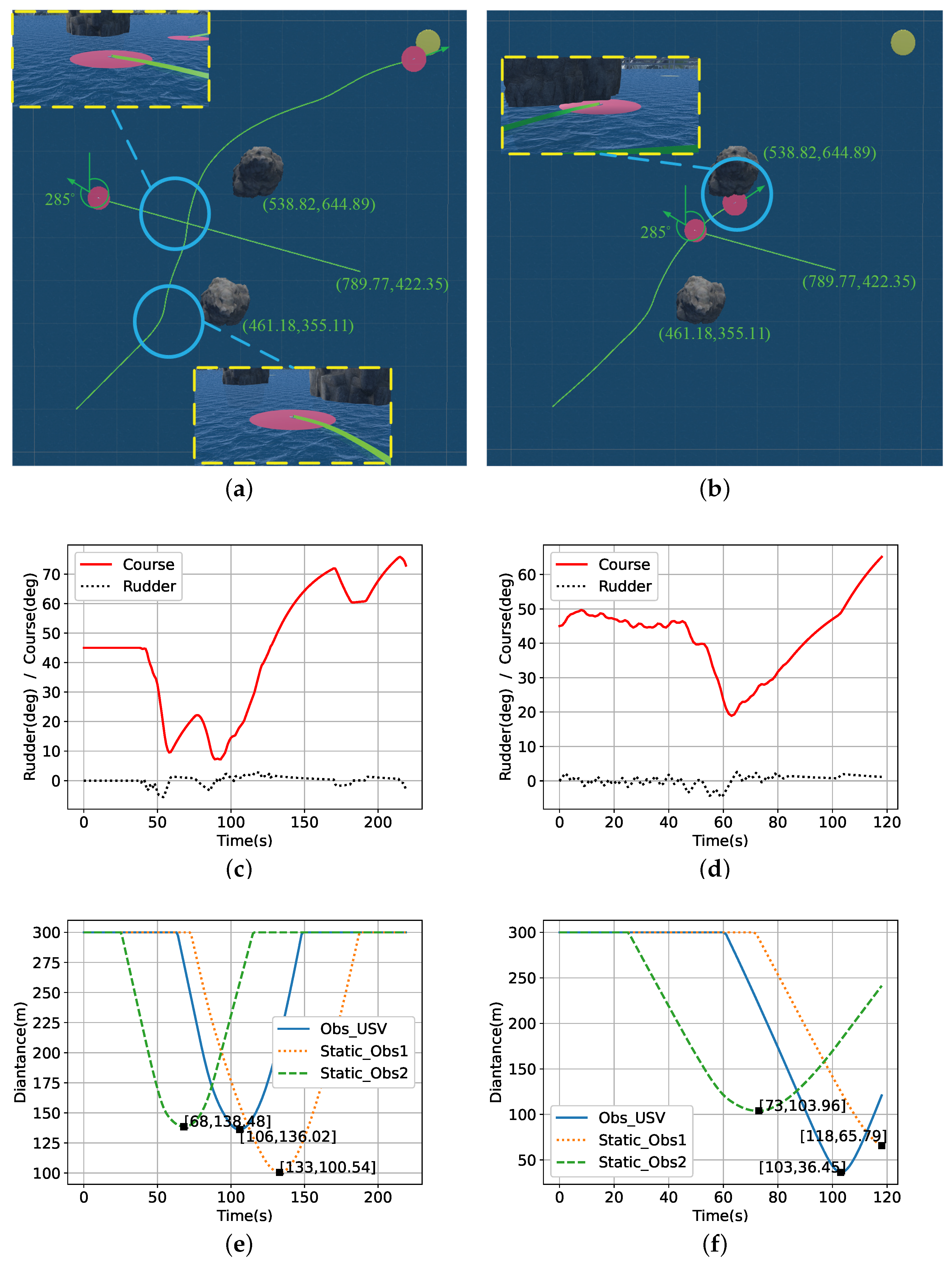

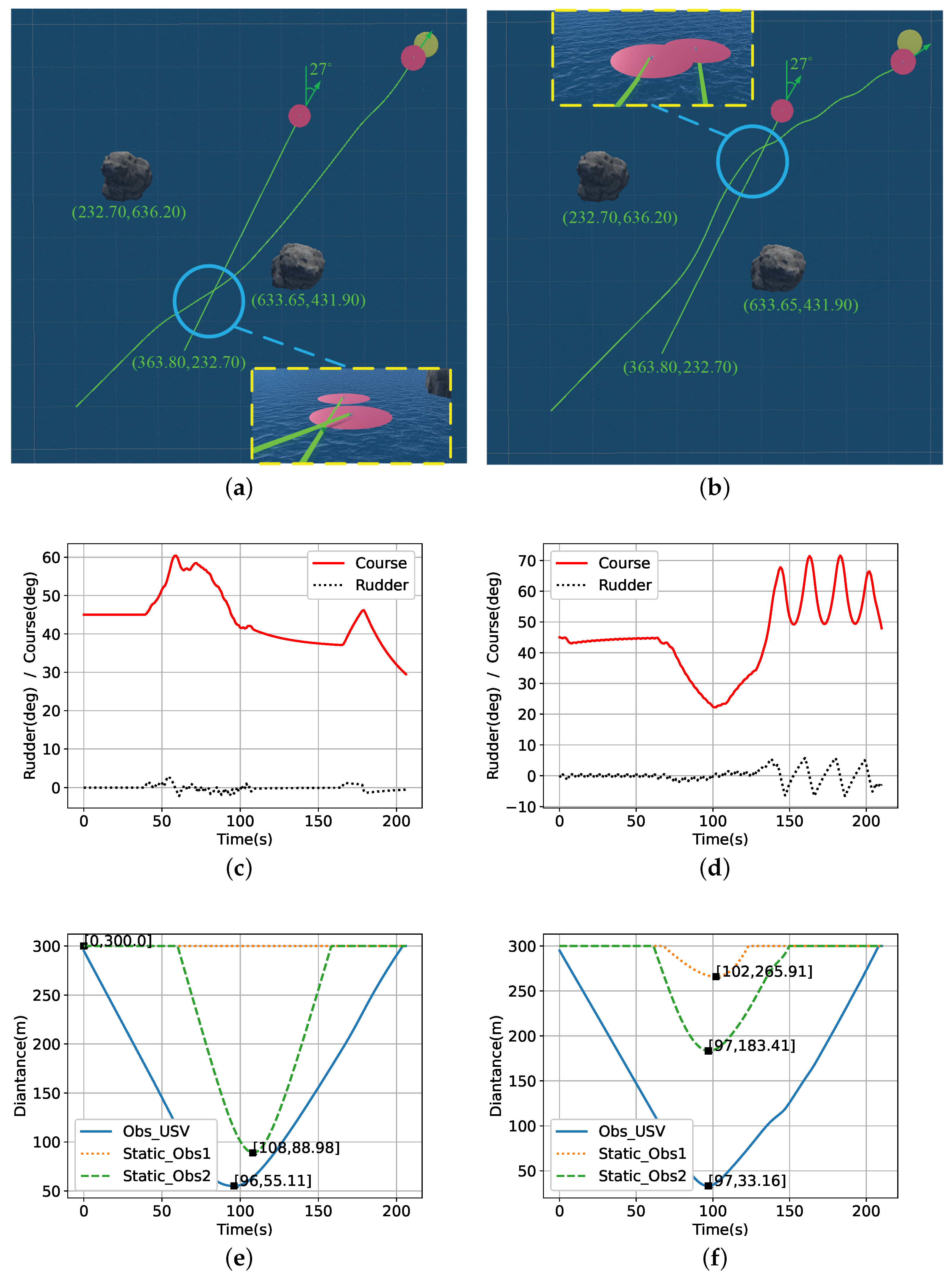

5.4. Test

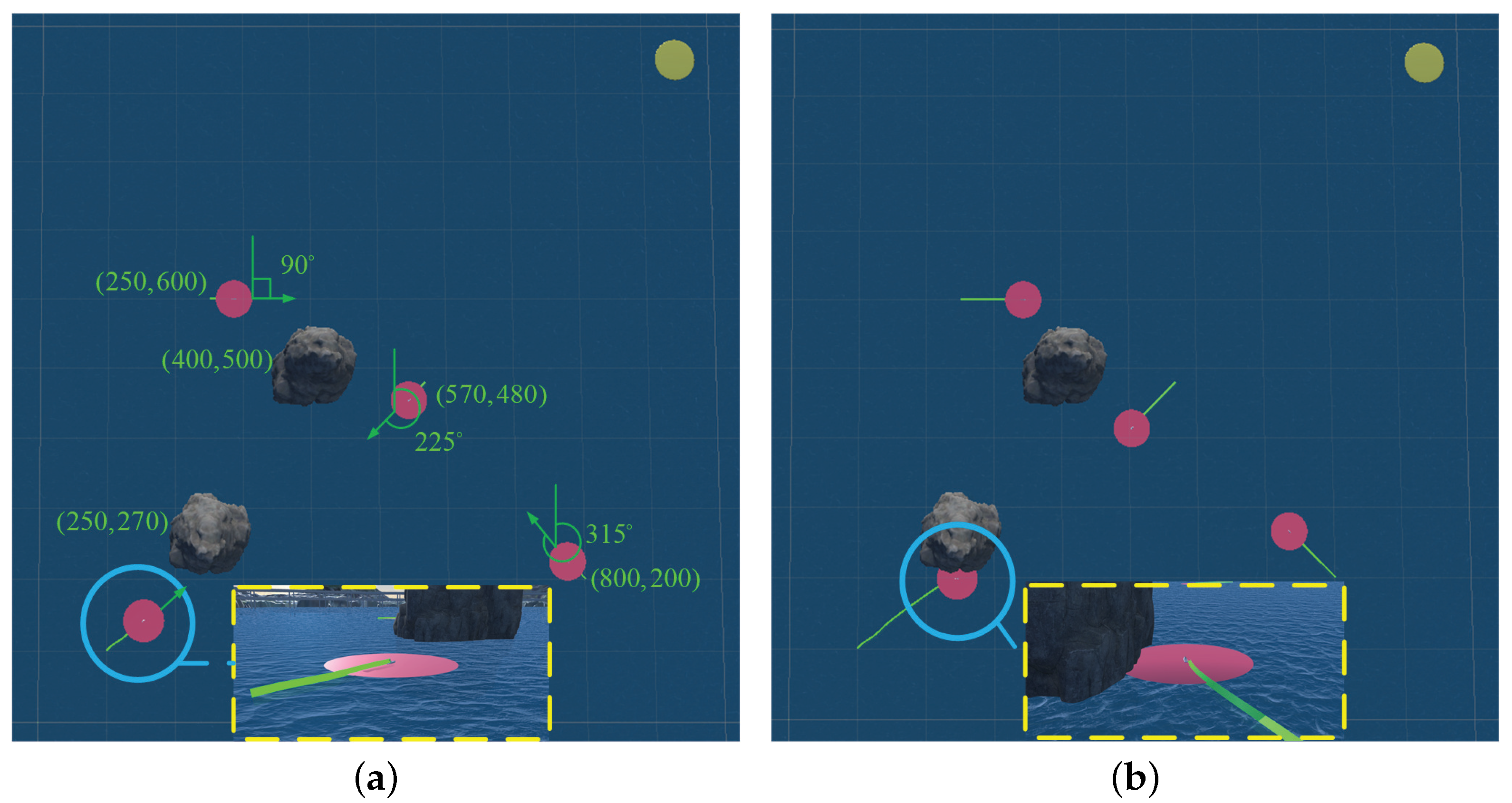

5.5. Multi-Obstacle USV Collision Avoidance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| USV | unmanned surface vehicle |
| DQN | deep Q network |
| DRL | deep reinforcement learning |
| COLREGs | International Regulations for Preventing Collisions at Sea |
| DRLCA | deep reinforcement learning collision avoidance |
| DDPG | deep deterministic policy gradient |
| LSTM | long short-term memory |
| SD | ship domain |
| DA | dynamic area |
| CRI | collision risk index |
| DCPA | distance at the closest point of approach |
| TCPA | time to the closest point of approach |


| Hyperparameter | Value |
|---|---|
| Learning Rate | 0.0001 |
| Discount Factor | 0.99 |
| Target Network Update Frequency | 4096 |
| Replay Memory Size | 1,000,000 |
| Batch Size | 600 |
| Noise Variance | 0.1 |
| Noise Mean | 0.0 |
| Greedy Value | 1.0 |
| Importance Sampling | 0.5 |
| Linear Annealing of Importance Sampling | |
| Prioritized Experience Replay | 0.4 |
| Replay Start Size | 2000 |
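For use in code, the hyperparameters above could be gathered into a single immutable configuration object. The sketch below only transcribes the table; the class name and field names are hypothetical, and the linear-annealing row is left unset because the table gives no value for it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TrainingConfig:
    """Values transcribed from the hyperparameter table; field names are hypothetical."""

    learning_rate: float = 1e-4
    discount_factor: float = 0.99
    target_update_frequency: int = 4096
    replay_memory_size: int = 1_000_000
    batch_size: int = 600
    noise_variance: float = 0.1
    noise_mean: float = 0.0
    greedy_value: float = 1.0
    importance_sampling: float = 0.5
    # The table lists no value for the linear annealing entry, so it stays unset.
    importance_sampling_anneal: Optional[float] = None
    priority_experience_replay: float = 0.4
    replay_start_size: int = 2000


config = TrainingConfig()
```

Freezing the dataclass prevents accidental changes during a run, which helps when the same settings must be repeated across several random seeds.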

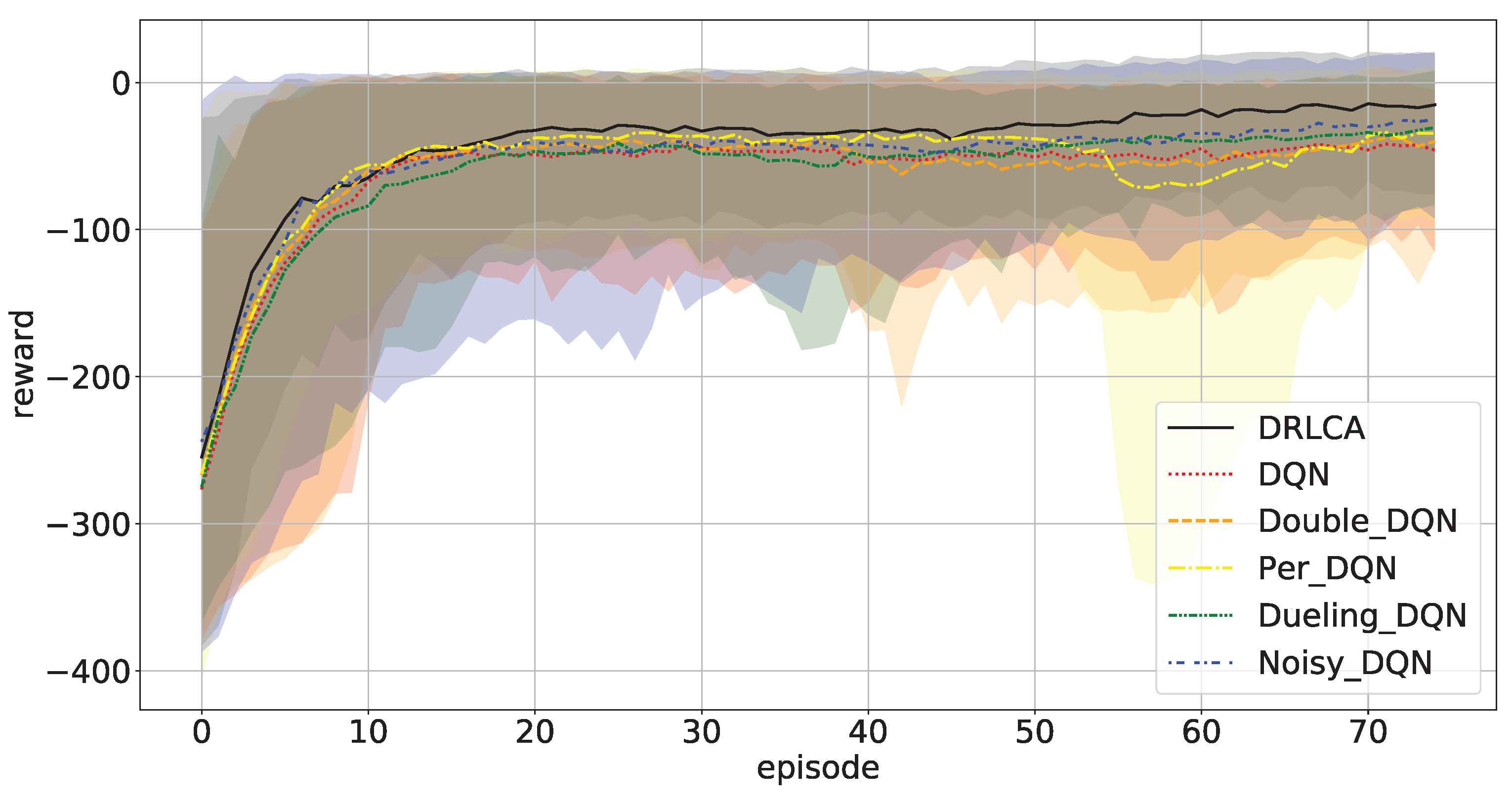

| Algorithm | First Third of Training | Middle Third of Training | Last Third of Training |
|---|---|---|---|
| DRLCA | −75.86 | −32.61 | −20.83 |
| DQN | −91.05 | −48.37 | −47.26 |
| Double DQN | −85.33 | −47.62 | −49.55 |
| Per DQN | −81.92 | −37.46 | −51.17 |
| Dueling DRLCA | −96.42 | −48.82 | −38.18 |
| Noisy DQN | −81.97 | −43.11 | −34.09 |

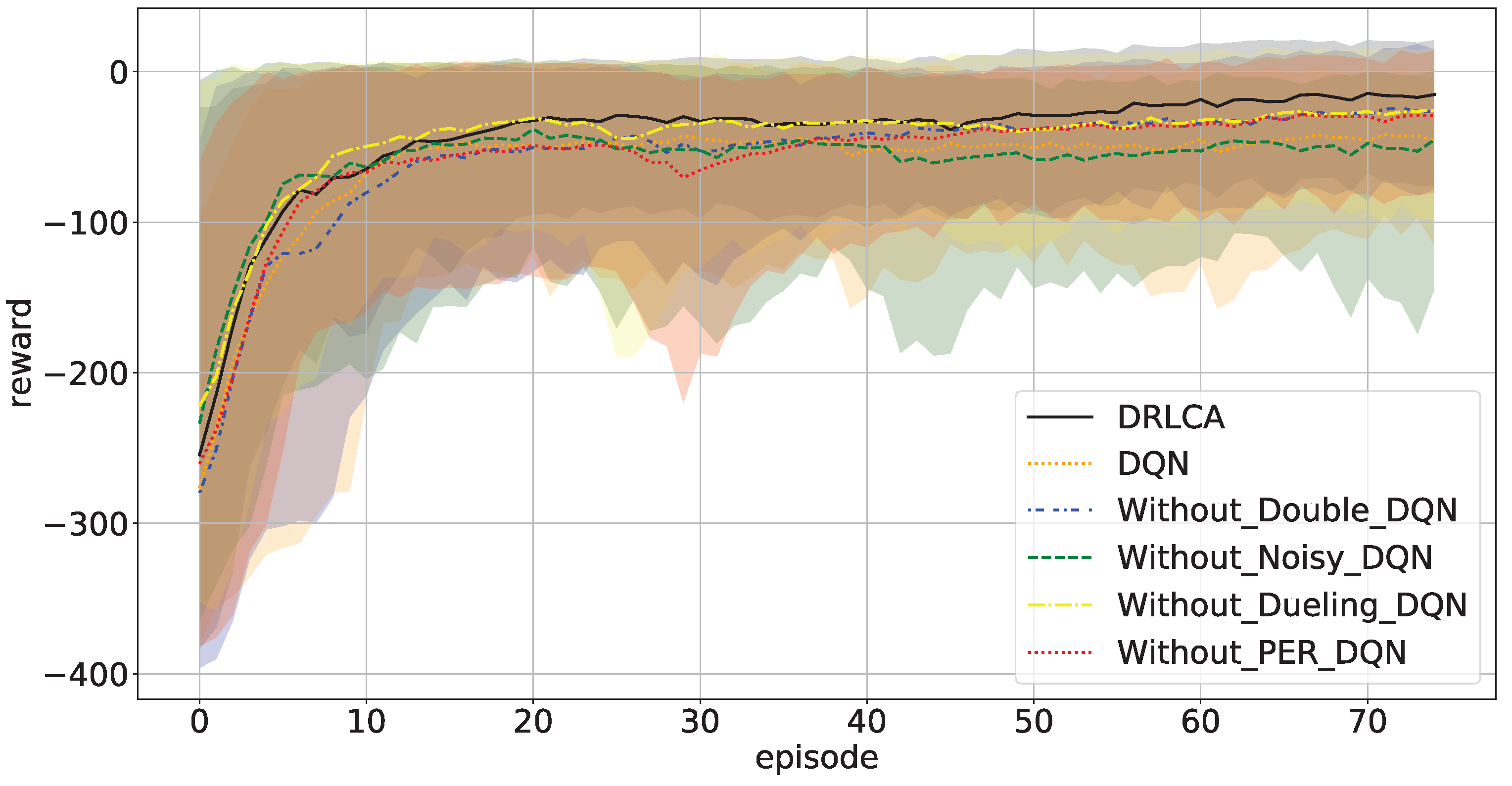

| Algorithm | First Third of Training | Middle Third of Training | Last Third of Training |
|---|---|---|---|
| DRLCA | −75.86 | −32.61 | −20.83 |
| DQN | −91.05 | −48.37 | −47.26 |
| Without Double DQN | −97.36 | −43.94 | −31.61 |
| Without Per DQN | −88.91 | −49.68 | −33.53 |
| Without Dueling DRLCA | −69.30 | −35.92 | −31.44 |
| Without Noisy DQN | −73.84 | −52.57 | −52.04 |

| Experiment | Result | Course Deviation |
|---|---|---|
| Test environment 1, DRLCA | arrival | 1730.28 |
| Test environment 1, DQN | arrival | 3781.80 |
| Test environment 2, DRLCA | arrival | 707.51 |
| Test environment 2, DQN | arrival | 2357.59 |
| Test environment 3, DRLCA | arrival | 3371.51 |
| Test environment 3, DQN | collision | / |
| Test environment 4, DRLCA | arrival | 909.19 |
| Test environment 4, DQN | arrival | 2074.92 |

| Algorithm | Successful Arrival | Out of Bound | Collision | Average Accumulated Deviation of Course | Average Time |
|---|---|---|---|---|---|
| DRLCA | 97 | 3 | 0 | 2150.02 | 211.875 s |
| DQN | 56 | 30 | 14 | 2929.36 | 214.304 s |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, Z.; Fan, Y.; Wang, G. An Intelligent Algorithm for USVs Collision Avoidance Based on Deep Reinforcement Learning Approach with Navigation Characteristics. J. Mar. Sci. Eng. 2023, 11, 812. https://doi.org/10.3390/jmse11040812
