Abstract

Tidal energy is a rapidly developing area of the marine renewable energy sector that requires converters to be placed within areas of fast current speeds to be commercially viable. Tidal environments are also utilised by marine fauna (marine mammals, seabirds and fish) for foraging purposes, with usage patterns observed at fine spatiotemporal scales (seconds and metres). An overlap between tidal developments and fauna creates uncertainty regarding the environmental impact of converters. Due to the limited number of tidal energy converters in operation, there is inadequate knowledge of marine megafaunal usage of tidal stream environments, especially the collection of fine-scale empirical evidence required to inform on and predict potential environmental effects. This review details the suitability of using multirotor unmanned aerial vehicles within tidal stream environments as a tool for capturing fine-scale biophysical interactions. This includes presenting the advantages and disadvantages of use, highlighting complementary image processing and automation techniques, and showcasing the limited current examples of usage within tidal stream environments. These considerations help to demonstrate the appropriateness of unmanned aerial vehicles, alongside applicable image processing, for use as a survey tool to further quantify the potential environmental impacts of marine renewable energy developments.

1. Introduction

In 2019, the UK legislated to reach net zero carbon emissions by 2050, with all electricity to come from low-carbon sources by 2035 [1]. To achieve these targets, there has been significant investment and development in marine renewable energy (MRE) technologies [2]. Tidal energy is a growing area of the MRE sector that, in its most general form, uses subsurface turbines to generate electricity from tidally driven currents within high-flow areas (hereafter, tidal stream environments) where current speeds often exceed 2 m/s [3]. Horizontal-axis turbines are the most common design, accounting for over 70% of current global research and development efforts, but less conventional designs, such as tidal kites, are also being trialled [4,5].

The estimated energy potential from tidal stream energy could exceed 120 GW, with countries including Canada, China, Argentina, France, Russia and South Korea developing technologies and the UK having the greatest capacity at 10 GW [6]. Interest in exploiting the UK’s strong tidal energy potential dates back to the mid-1980s [7]. It is currently estimated that a practical resource of 34 TWh/yr, or approximately 11% of the UK’s annual electricity demand, is available [8]. This has led to major investment and development to push the UK to the forefront of the global tidal energy field, with 18 MW of capacity currently installed [8]. At the time of writing, there are 31 tidal stream lease sites in the UK, with the largest fully consented example being the MeyGen project within the Pentland Firth, Scotland [9,10].

While the tidal energy sector has witnessed rapid development and practical implementation in recent years, there are several existing constraints (engineering, environmental, economic and social) that limit commercially viable upscaling [5]. Environmental concerns are of particular importance, as in order to give consent, regulators require satisfactory data collection of baseline conditions, evidence-based findings detailing minimal impact on sensitive receptors and evidence of mitigation efforts to offset unavoidable impacts [11]. Within an emerging industry in which environmental impact assessments (EIAs) and post-consent monitoring protocols are still in development, compared to other sectors such as oil and gas, there is still a significant lack of data to understand the environmental implications of new developments [11]. This is necessary information to identify key receptors (e.g., fish, crustaceans, invertebrates and algae), accurately inform decision makers and allow the continued sustainable development of tidal energy converters [12].

Due to their status in conservation legislation in the UK, the potential to interact with tidal energy converters (TECs) and the public’s heightened perception of them, marine top predators (seabirds, marine mammals and sharks) are of particular importance with regard to environmental monitoring [11]. Coastal shelf seas support many top predator species in the northern hemisphere due to high productivity, maintaining spatiotemporally predictable concentrations of prey (fish) [13]. Within the European Union, protection under law is afforded to marine mammals and seabirds through Directive 2009/147/EC, which states that species “must be afforded protection within Special Protection Areas (SPAs)” [14]. In the UK, this protection is now afforded through the Conservation of Habitats and Species (Amendment) (EU Exit) Regulations 2019 [15]. However, many SPAs are in proximity to marine renewable developments, creating a potential conflict between energy extraction and species conservation [16]. It is, therefore, crucial to understand top predator usage patterns to inform on the level of overlap, understand variation at a species level and determine sensitivity to anthropogenic developments [17].

Assessment of current evidence has highlighted a lack of understanding regarding top predator usage patterns of tidal stream environments, particularly at fine spatiotemporal scales (<1 m and <1 min), and a deficit of appropriate methods and instruments to capture in situ measurements [18]. Fine-resolution information is required to accurately predict and inform the full extent of TEC impacts (e.g., collision risk and habitat displacement) with an increased degree of statistical power [12].

Fine-scale characterisation of turbulent features and top-predator associations with them can provide novel insight into the physical cues underlying habitat usage [19,20,21]. For seabirds, for example, this level of detail would allow increasingly accurate predictions to be made of sensitivity to habitat displacement and the likelihood of direct collisions with tidal converters [22]. However, the resolution required to capture habitat characteristics and seabird associations at fine spatiotemporal scales can be challenging to achieve with traditional survey methods [23,24]. Novel methodology will, therefore, have to be developed that can capture and quantify fine-scale habitat characteristics and resultant biophysical interactions. Without this, empirical evidence crucial to determining animal sensitivity towards tidal energy developments cannot be collated. This paper examines the use of unmanned aerial vehicles (UAVs) and concurrent image processing techniques as methodologies to collect relevant empirical data. It describes the advantages and limitations of multirotor UAVs, briefly details complementary image processing and automated techniques and presents current examples of UAV usage within tidal stream environments. Search terms for Section 2 included “multirotor UAVs” within “environmental studies”; for Section 3, the literature included relates specifically to “image processing techniques” utilised to process “aerial imagery”; and finally, Section 4 literature focusses on “multirotor UAV” research within “tidal stream environments”.

2. Advantages and Limitations of Multirotor Unmanned Aerial Vehicle Usage

2.1. Background

Over the past decade, UAVs have experienced a rapid evolution from solely military usage to full commercialisation, with many designs, sizes and capabilities now in existence [25]. Multirotor UAVs are capable of hovering and vertical take-off and landing, and they are cost-effective and highly manoeuvrable [26]. These features make multirotor UAVs a suitable tool for carrying out accurate photogrammetric assessments, as well as vertical profiling and spatial surveys [26,27]. As this technology has developed, so have relevant remote sensing instrumentation and image processing techniques [25,28]. Due to the diversity of the range of platforms, sensor availability and operational capabilities, UAVs have been successfully used in a range of wildlife applications (Table S1) [29].

Within the field of marine ecology, uses range from the monitoring of previously inaccessible populations and locations to more novel applications like the noninvasive collection of DNA and microbiota samples [30,31]. In many cases, the ability to access restricted areas helps to provide a cost-effective alternative to existing data collection methods both financially and in effort expenditure [32]. These capabilities make UAVs a suitable tool for use within tidal stream environments, where fast current velocities and turbulence provide a challenging environment to survey within and fine-scale biophysical interactions require novel methods to capture [19]. However, as with all methodologies, it is key to assess the advantages and limitations of use to understand their potential.

2.2. Advantages

Multirotor UAVs can hover, which gives increased stability and positional control and provides the accuracy needed for species identification and individual assessment using either high-resolution video or imagery [33]. Because UAV imagery is captured from a downward-facing camera angle, targets within a frame are less likely to be obscured by other individuals or by objects within the environment, such as waves, than in shore-based vantage point surveys [34]. Images can also be broken down into multiple subsections to increase the accuracy of manual processing [34]. These highly accurate visual datasets can provide a novel way in which to approach existing research questions related to aspects of marine top predators (life history, behaviour, abundance and distribution) as well as a permanent record for users to return to and review [35,36].

UAV systems are also appropriate for use in marine environments that may be logistically challenging to monitor [37,38,39,40]. Within any ecological survey, maximising and optimising effort is important to collect representative data with sufficient statistical power. UAVs have the potential to allow for this by offering a safer, more cost-effective research tool compared with existing low-altitude aerial survey techniques [41]. Flights can be operated safely within a broader range of environmental and logistical conditions, such as areas of shallow water or challenging coastline, which may be difficult to access through manned aerial or vessel surveys [42]. In many cases, this allows UAVs to bridge the gap between satellite observations and ground-based measurements and to provide supplementary data to abundance studies by working in conjunction with existing survey techniques [43,44]. Sweeney et al. [38] emphasised this by using a UAV during annual pinniped abundance surveys within the Aleutian Islands chain in Alaska to allow full coverage of the 23 survey sites where, in some cases, vessel or aircraft usage was not possible. In this instance, the highly portable nature of UAVs meant that the cost and effort of mobilisation for surveys were significantly reduced [38,45]. This helps to highlight the potential of multirotor UAVs to increase survey frequency, with a resultant increase in overall monitoring coverage [32]. This is highly advantageous when research questions in tidal stream environments are focussed on fine-scale associations, with a requirement for multiple surveys of localised areas across diel and tidal cycles.

The ability to collect fine-scale spatial and temporal data to examine relevant ecological variables is also an important benefit of using multirotor UAVs [26]. This is often at finer spatiotemporal resolutions than conventional aerial imagery (taken from an aircraft) and places it within a niche that sits between that and ground-based measurements [32]. Within tidal stream environments, multirotor UAVs allow for surveys of marine megafauna to be conducted at a range of differing altitudes, from 10–120 m, dependent on survey requirements and species [23,31,42,45,46,47,48,49]. Data collection in this manner has generally been at the spatiotemporal scales of <100 m and <10 min and has allowed for examinations into potentially undescribed drivers of habitat use [23].

Digital imagery collected at these altitudes also increases the accuracy of object localisation and detection, thus allowing for increased precision in abundance and distribution modelling [50]. UAV surveys within tidal stream environments have the potential to provide detailed insights into faunal spatial and temporal habitat usage patterns, which could begin to direct more relevant data collection when assessing species associations with localised habitat features. However, there is still a need for further quantifiable investigations into potential UAV disturbance to separate any impacts of noise and visual cues in eliciting behavioural responses [41]. This is becoming increasingly important to examine to fully inform future legislation and regulations pertaining to UAV usage within scientific research [28]. With increased UAV usage within environmental research, suggested codes of best practice and standardised methodological recommendations have become increasingly prevalent and robust [29,51]. This has created a visible shift as UAV usage becomes increasingly “main-stream”, from novel drone operation and methodology development towards normalised scientific inference-based work [29].

A final benefit of UAV usage within tidal stream environments is its adaptability as a survey tool, which highlights its potential to meet current and future research requirements. In terms of marine megafauna, there are relevant examples of novel usage that differ from the previously described fine-scale abundance and distribution studies. This can involve deriving new information from current data (e.g., imagery or other sensory modalities) or creating a novel methodology to capture additional information [43]. An investigation into reef shark (Carcharhinus melanopterus) swimming and shoaling behaviour at two differing microhabitats off the coast of Moorea Island, French Polynesia, provides an example [47]. Findings from the resultant imagery indicated significant differences in shark alignment (p < 0.05), dependent on microhabitat [47]. This study provides insights into animal behaviour that were not previously possible to collect and highlights adaptability in the usage of UAV-derived data.

However, adaptability also extends to the physical modification of equipment. One example is a UAV technique, adapted from a pre-existing method, for characterising viruses within free-ranging whale species [52,53]. Whale blow samples collected from 19 individuals using a sterile petri dish mounted on the underside of a UAV revealed 42 classified viral families and led to the identification of six new virus species [53]. These studies help to highlight the adaptability of multirotor UAVs for current uses and their potential to be used with emerging methodologies, which are likely to be driven by ecological knowledge gaps or questions.

2.3. Limitations

Examination of methodological limitations is especially pertinent when examining emerging techniques, as it will drive technological advancement, relevant legislation and the development of scientifically robust best practices [41].

Potential disturbance from both visual and noise sources is the primary limitation of using multirotor UAVs within an ecological context. Multirotor UAV functional noise is present in both harmonic and subharmonic frequency bands and may impact animals at the water surface and within the air depending on proximity or altitude [54]. This is particularly significant to examine for studies of coastal-associating marine megafauna, such as seabirds [31,55]. While many studies often deem disturbance to be negligible, quantifiable justification for these statements is lacking, and the limited code of best practices has been a long-standing issue [51,56]. Within the umbrella of UAV disturbance, there are many factors to consider, including UAV type, ambient environmental noise, species-level responses and individual-level responses dependent on age, sex and biological state [31,55]. Consideration also needs to be given to distinguishing between visual and auditory cues [41]. The need for a standardised disturbance criterion is now evident as the field continues to expand [29,51,57].

It is also important to consider the limitations of the technology itself. While the utility of multirotor UAVs within ecological and tidal stream research continues to grow, the primary technological limitation is battery capacity, which allows for flight times of approximately 20–30 min depending on weight, payload type and environmental conditions [45,57,58,59]. This can impact surveys requiring extended temporal coverage and often limits UAV data collection to being supplementary to a larger collection effort [38]. It is thus advisable to take a precautionary, battery-saving approach to flight times and survey design and to assess whether the multirotor design is suitable on a use-by-use basis [33].

However, as technological advances continue, specific limitations may become less significant. Battery capacity has improved at an annual rate of around 3% over the past 60 years, and it is predicted that by 2030, multirotor UAV flight times may average 40 min [60,61]. Battery technologies are also diversifying, with exploration beginning into the use of hydrogen fuel, lithium–sulphur and lithium–air types to replace existing commercially available lithium-ion batteries [61]. Novel solutions are also being trialled in relation to charging and battery swapping to maximise existing resources. One solution puts forward a concept design for “an autonomous battery swapping system” that would enable battery swaps to take place automatically at a ground charging station without the UAV system ever having to be powered down [62]. This would minimise device downtime and increase useful flight time. Until technological advances unlock further potential and negate existing technical limitations, novel solutions such as this, in combination with existing battery-saving strategies, are likely to be the key to maximising multirotor UAV capabilities.

Environmental conditions that may impact the device and its outputs but are not a direct result of the technology itself should also be considered when examining methodological limitations. Adverse weather conditions (heavy wind/rain) can negatively impact UAV devices in multiple ways, including limiting flight capability, damaging electronic components and increasing battery drain [63]. This can potentially cause limitations in survey coverage, as flights should only be undertaken during periods that do not exceed regulatory or manufacturer operational guidelines [64]. Methodologies incorporating UAVs need to factor potential disruptions due to unfavourable environmental conditions into survey design in order to mitigate this and capture appropriate levels of data.

UAV data outputs can also be restricted by environmental conditions. Conventional aerial surveys are usually limited to wind speeds of <7 knots and Beaufort sea states of 3 (characterised as large wavelets, breaking crests and scattered whitecaps) or less and can incur costs in keeping aircraft and crew on standby for acceptable survey conditions [65]. While the operational costs of UAVs will be far less than conventional aircraft, the image datasets, particularly within highly turbulent environments, such as tidal streams, will still be similarly impacted by increased sea state and turbidity levels, causing low levels of detection. Sun glare, causing a glinting effect on the water’s surface, is another environmental factor to consider. Sun glare can cause blurring and degradation in photogrammetric outputs and limit the detectability of objects of interest [39]. Image processing techniques can counter these issues by using brightness or contrast adjustments, but consideration should also be given during the flight planning and data collection phases to mitigate the impact of environmental conditions (sea state, turbidity and sun glare) on UAV imagery [66].

3. Complementary Image Processing and Automation Techniques

3.1. Background

UAVs can collect hundreds of images and large volumes of video footage within a single survey, making them highly suitable for the continuous capture of fine-scale data [67]. It is thus important to consider the methods used to process imagery to extract accurate data that is collected within feasible time and monetary constraints [36]. Manual review and analysis of large imagery datasets is time-consuming and could negate any efficiency gained through using UAVs as a data collection method [68]. However, image processing is a rapidly advancing field, with many semiautomated and automated approaches available for integration.

3.2. Image Segmentation and Thresholding

The simplest and most widely used form of image processing is image segmentation, which uses a technique called thresholding to separate an object from its background (Table 1) [69]. Objects of interest are selected by examining levels of spectral reflectance and setting a global lower limit; pixels below this limit are discounted, producing a binary image (Figure 1) [70]. This technique can be applied to greyscale images, in which the threshold is dictated by brightness values, or to colour images, in which a different threshold value can be applied to each colour band. The most common issue arises when other elements within the image fall within the same spectral range as the target object, leading to false positive detections [68]. However, by implementing stringent filtering and sieving techniques, such as applying secondary thresholds and manually assessing any remaining questionable pixels, the reliability of this method can be significantly increased [71,72]. Applications of image segmentation have included medical image analysis, autonomous vehicles, video surveillance and augmented reality [73].
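As an illustration of the global thresholding described above, the following minimal sketch applies a single lower limit to a synthetic greyscale image and a per-band limit to a colour image. The function names, the threshold value of 128 and the synthetic pixel values are assumptions chosen for demonstration and are not taken from the cited studies.

```python
import numpy as np


def global_threshold(grey, lower_limit):
    """Binary mask: True where brightness is at or above the global lower limit."""
    grey = np.asarray(grey, dtype=float)
    return grey >= lower_limit


def threshold_colour(rgb, limits):
    """Apply a separate lower limit to each colour band; keep pixels passing all bands."""
    rgb = np.asarray(rgb, dtype=float)
    limits = np.asarray(limits, dtype=float)
    return np.all(rgb >= limits, axis=-1)


# Synthetic 5 x 5 greyscale scene: dark water with two bright target pixels
# (all values are illustrative assumptions).
image = np.full((5, 5), 40.0)
image[1, 2] = 220.0
image[3, 3] = 200.0

mask = global_threshold(image, lower_limit=128)
print(int(mask.sum()))  # 2
```

In practice, any bright non-target pixels (for example, wave crests) would pass the same limit, which is the false positive issue noted above.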

Table 1. Literature examined in relation to relevant image segmentation and thresholding techniques detailing origin, purpose, image processing techniques and summary of work.

Figure 1. Schematic detailing the workflow of image segmentation using thresholding.

Advancements in image segmentation include adaptive thresholding, which applies individual threshold values to each pixel rather than an entire image [74]. While this can compensate for spatial and temporal variations in illumination and can be implemented in real time, it is restricted by the need to process images twice [75]. Although both global and adaptive thresholding methods can be automated, image segmentation still requires significant user input to continually fine-tune thresholds, apply appropriate image enhancements and assess potential misclassifications in which inappropriate pixel segmentation has occurred [70]. This level of tuning can mean that manual processing times may still be high compared with other less user-intensive methods.
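The difference between global and adaptive thresholding can be sketched as follows. This example assumes a simple local-mean rule (a pixel is kept if it exceeds the mean of its surrounding window by a fixed offset), computed in two passes over the image using a summed-area table; the window size, offset and synthetic illumination gradient are illustrative assumptions rather than the specific methods of the cited works.

```python
import numpy as np


def adaptive_threshold(grey, window=3, offset=10.0):
    """Keep pixels brighter than their local window mean by more than `offset`.

    `window` must be odd. Edges are handled by repeating border pixels.
    """
    grey = np.asarray(grey, dtype=float)
    pad = window // 2
    padded = np.pad(grey, pad, mode="edge")
    # First pass: summed-area table with a leading row and column of zeros.
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = grey.shape
    # Second pass: sum of each window from four table look-ups.
    total = (integral[window:window + h, window:window + w]
             - integral[:h, window:window + w]
             - integral[window:window + h, :w]
             + integral[:h, :w])
    local_mean = total / (window * window)
    return grey > local_mean + offset


# Synthetic scene with illumination increasing from left to right and one
# target slightly brighter than its dark surroundings (assumed values).
image = np.tile(np.linspace(20.0, 200.0, 9), (9, 1))
image[4, 1] += 60.0

global_mask = image >= 128
adaptive_mask = adaptive_threshold(image, window=3, offset=20.0)
print(int(global_mask.sum()), int(adaptive_mask.sum()))  # 36 1
```

Here the global limit flags the entire brightly lit side of the scene and misses the target, whereas the per-pixel threshold isolates it.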

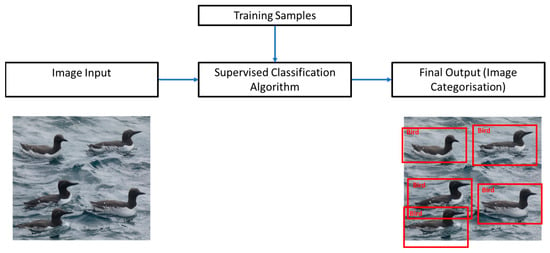

3.3. Supervised and Unsupervised Classification

Another common image processing technique is supervised classification, in which a user manually classifies known targets into different categories to assemble a training dataset for an image processing algorithm (Table 2) [72]. The supervised classification algorithm then uses the mean and variance values of the labelled targets within the training dataset to assign classifications (Figure 2). However, this depends on human interpretation to decide whether an object is a target and can be highly iterative, requiring multiple classification attempts [77]. Reflectance values can be used to inform the process, although this can lead to misidentification and an increased number of false positive results [76]. Image differentiation, the comparison of imagery collected at different times, has been found to perform better in this regard but is highly specific in its application because it requires a previous image to refer to [76]. As with image segmentation, automation is only possible up to a point: manual inputs are still required to initially assign classifications, which can introduce user bias [78].
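One way in which class means and variances from a labelled training dataset can be used to assign classifications is a Gaussian maximum-likelihood rule, sketched below. This is a simplified assumption (independent colour bands, two hypothetical classes and invented pixel values), not a reproduction of any specific classifier from the cited literature.

```python
import numpy as np


def train(samples_by_class):
    """Estimate the per-band mean and variance of each manually labelled class."""
    stats = {}
    for label, samples in samples_by_class.items():
        samples = np.asarray(samples, dtype=float)
        # A small constant avoids division by zero for constant bands.
        stats[label] = (samples.mean(axis=0), samples.var(axis=0) + 1e-6)
    return stats


def classify(pixels, stats):
    """Assign each pixel to the class with the highest Gaussian log-likelihood."""
    pixels = np.asarray(pixels, dtype=float)
    labels = list(stats)
    scores = []
    for label in labels:
        mean, var = stats[label]
        log_like = -0.5 * (np.log(2 * np.pi * var)
                           + (pixels - mean) ** 2 / var).sum(axis=-1)
        scores.append(log_like)
    best = np.argmax(np.stack(scores, axis=-1), axis=-1)
    return np.array(labels)[best]


# Hypothetical training pixels (RGB) labelled by a user.
training = {
    "water": [[20, 60, 120], [25, 65, 130], [18, 58, 125]],
    "bird": [[230, 230, 225], [240, 235, 230], [220, 225, 228]],
}
stats = train(training)
result = classify([[22, 61, 124], [235, 232, 229]], stats)
print(list(result))  # ['water', 'bird']
```

The quality of the output depends entirely on the labelled samples, which is where the user bias discussed above can enter.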

Table 2. Literature examined in relation to relevant supervised and unsupervised classification techniques detailing origin, purpose, image processing techniques and summary of work.

Figure 2. Schematic detailing supervised classification workflow.

Unsupervised classification builds upon supervised classification by using statistical algorithms to group pixels based on spectral data and make predictions with minimal user input (Figure 3) [72]. As a result, unsupervised classification is most often used to identify specific features on the landscape. While it can have a higher probability of target detection, there is also an increased likelihood of false positive results, and external information and manual input are needed to correct these [79]. This, in turn, creates an increased requirement for manual interpretation to maintain higher levels of accuracy.
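The grouping of pixels by spectral similarity without labelled training data can be illustrated with k-means clustering, one commonly used statistical algorithm for this purpose. The sketch below is an assumption for demonstration: the choice of k-means, the deterministic farthest-point initialisation and the pixel values are not drawn from the cited studies.

```python
import numpy as np


def kmeans(pixels, k, iterations=20):
    """Group pixels into k spectral clusters; returns (labels, centroids)."""
    pixels = np.asarray(pixels, dtype=float)
    # Deterministic initialisation: start from the first pixel, then repeatedly
    # add the pixel farthest from all centroids chosen so far.
    centroids = [pixels[0]]
    for _ in range(1, k):
        dist = np.min([np.linalg.norm(pixels - c, axis=1) for c in centroids], axis=0)
        centroids.append(pixels[np.argmax(dist)])
    centroids = np.array(centroids)
    for _ in range(iterations):
        dists = np.linalg.norm(pixels[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        updated = np.array([
            pixels[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return labels, centroids


# Hypothetical RGB pixels: four dark water pixels and two bright pixels.
pixels = [[20, 60, 120], [22, 62, 125], [19, 59, 118], [21, 61, 122],
          [230, 228, 225], [235, 232, 230]]
labels, centroids = kmeans(pixels, k=2)
print(labels.tolist())  # [0, 0, 0, 0, 1, 1]
```

The clusters carry no meaning of their own: a user must still decide which cluster, if any, corresponds to the target, which reflects the manual interpretation requirement noted above.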

Figure 3. Schematic detailing unsupervised classification workflow.

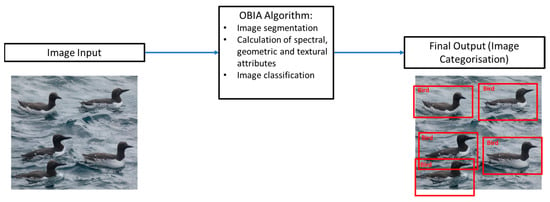

3.4. Object-Based Image Analysis

While the above pixel-based methods can be accurate at high spatial resolutions, with varying levels of automation applicable, all require some degree of manual input on an image-by-image basis (Table 3) [68]. An alternative method called object-based image analysis (OBIA) can improve upon pixel-based techniques by reducing spectral overlap between objects of interest and increasing the accuracy of target classification [72]. This is achieved by combining geometric, textural and spatial information with the spectral data of objects within the image to identify them as groups of similar pixels (Figure 4) [80,81]. Within ecological research, OBIA-based target detection has made the identification of individual animals and species viable and, in doing so, can provide relevant context regarding spatial and temporal relationships [82].
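The object-based idea can be sketched in a simplified form: pixels are first grouped into connected objects, and each object is then described by geometric and spectral features before a rule is applied. The features (area, bounding-box elongation and mean brightness), the rule limits and the synthetic scene below are all assumptions for illustration and are far simpler than the OBIA software used in the cited studies.

```python
from collections import deque

import numpy as np


def label_objects(mask):
    """Group True pixels into 4-connected objects (lists of (row, col) tuples)."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros_like(mask)
    objects = []
    for start in zip(*np.nonzero(mask)):
        if seen[start]:
            continue
        queue, pixels = deque([start]), []
        seen[start] = True
        while queue:
            r, c = queue.popleft()
            pixels.append((r, c))
            for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                inside = 0 <= nr < mask.shape[0] and 0 <= nc < mask.shape[1]
                if inside and mask[nr, nc] and not seen[nr, nc]:
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        objects.append(pixels)
    return objects


def describe(pixels, grey):
    """Geometric and spectral features of one object."""
    rows, cols = zip(*pixels)
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    return {
        "area": len(pixels),
        "elongation": max(height, width) / min(height, width),
        "mean_brightness": float(np.mean([grey[p] for p in pixels])),
    }


def classify(features, max_area=6, max_elongation=2.0):
    """Hypothetical rule: compact, small objects are treated as animal candidates."""
    if features["area"] <= max_area and features["elongation"] <= max_elongation:
        return "animal candidate"
    return "background feature"


# Synthetic scene: a compact bright blob and a long bright streak (assumed
# to stand in for a bird and a wave crest of similar brightness).
grey = np.full((8, 8), 40.0)
grey[1:3, 1:3] = 220.0
grey[6, 1:7] = 210.0

objects = label_objects(grey > 128)
classes = [classify(describe(obj, grey)) for obj in objects]
print(classes)  # ['animal candidate', 'background feature']
```

Both objects would pass a brightness threshold alone; it is the added shape information that separates them.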

Table 3. Literature examined in relation to relevant object-based image analysis techniques detailing origin, purpose, image processing techniques and summary of work.

Figure 4. A schematic detailing the workflow of an OBIA algorithm.

However, Groom et al. [83] demonstrated that while automation of OBIA methods gave a high detection accuracy of >99% when estimating lesser flamingo (Phoeniconaias minor) abundance at Kamfers Dam in South Africa, there was a significant underestimation of individuals when compared to visual counts. This was believed to be due to the method’s decreased success rate in distinguishing targets on the land, where there was a lower contrast compared to when individuals were on or above the water surface [83]. The presence of immature, less brightly coloured birds was also believed to be a potentially limiting factor. While this method outperforms previously described pixel-based supervised and unsupervised classification approaches, both computationally and performance-wise, it highlights the need for further development of detection approaches that also consider biological and environmental variables. Expensive software and high computational requirements, which demand specific user knowledge to operate, are also significant limitations that may impact decisions to utilise OBIA methods [72].

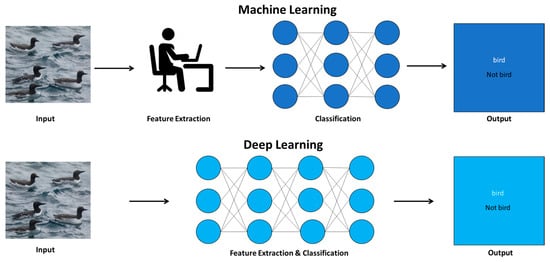

3.5. Machine Learning and Deep Learning

Machine learning techniques build on the image feature-based algorithms described above through the incorporation of artificial intelligence (Table 4). Machine learning algorithms are suited to object detection tasks because they can tackle complex and often hidden patterns within data [84]. Deep learning is the most developed form of machine learning, as it can perform tasks without human interaction, and it has shown great promise in the field of image processing (Figure 5) [85]. Deep learning architectures are based on neural networks, which consist of multiple interconnected layers that process and extract information from an input [86]. Convolutional neural networks (CNNs) are the most common form of neural network used within deep learning models and allow for the detection and classification of objects of interest within a dataset [87]. This ability to detect and categorise objects is achieved by training CNNs on predefined labelled datasets that are often specific to the use case. This training process allows a deep learning model to autonomously adjust its behaviour to obtain the desired output, with this capability increasing as more data is fed into the learning phase. Iterative learning allows a deep learning model to acquire new parameters and adjust existing ones as it goes along, which traditional classification techniques are unable to do. In turn, this makes deep learning models a powerful tool for object detection tasks, with current iterations able to run at near real-time frame rates [88].
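To make the notion of layers that process and extract information more concrete, the sketch below runs a single convolution, activation and pooling step on a small synthetic image. The kernel here is set by hand purely for illustration; in a real CNN, kernel values are parameters learned during training, and many such layers are stacked. All names and values are assumptions.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' sliding-window filter (the cross-correlation used in CNN layers)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Activation: negative responses are set to zero."""
    return np.maximum(x, 0.0)


def max_pool(x, size=2):
    """Downsample by keeping the strongest response in each size x size block."""
    h = x.shape[0] // size * size
    w = x.shape[1] // size * size
    return x[:h, :w].reshape(h // size, size, w // size, size).max(axis=(1, 3))


# Synthetic 6 x 6 image with a single bright pixel (assumed target).
image = np.zeros((6, 6))
image[2, 3] = 1.0

# Hand-set centre-surround kernel that responds to isolated bright spots.
kernel = np.array([[-1, -1, -1],
                   [-1, 8, -1],
                   [-1, -1, -1]]) / 8.0

feature_map = relu(conv2d(image, kernel))
pooled = max_pool(feature_map)
print(pooled.tolist())  # [[0.0, 1.0], [0.0, 0.0]]
```

The pooled output retains the approximate location of the bright spot at a coarser resolution, which is the kind of intermediate representation that later layers would combine to detect and classify objects.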

Table 4. Literature examined in relation to relevant machine and deep learning techniques detailing origin, purpose, image processing techniques and summary of work.

Figure 5. A schematic detailing the workflow of machine and deep learning algorithms used for object detection. Light and dark blue circles represent convolutional neural network structures.

From an ecological standpoint, deep learning models may be capable of handling imagery containing animal heterogeneity and external considerations, including motion blur, background contrast and differences in illumination [89]. Boudaoud et al. [89] used a deep learning object detection model on aerial images of seabirds and found a classification rate of 98% on real images, an increase of 3% over that achieved on training datasets. However, this approach was not without its limitations, as environmental conditions, including wave crests and sun glare, were still found to lower classification accuracy. The approach is also heavily reliant on computational power and can be very time-consuming when applied to real-world datasets containing large proportions of images with complex backgrounds [90].

While automated and semiautomated image processing approaches are becoming increasingly prevalent in ecological studies, advancements require the ability for greater distinction between animals and their background [72]. An overarching theme is the need to better overcome biological factors and environmental conditions that may either inhibit detection or create false positives. As advancements in digital camera technology, image analysis software and computer processing capabilities continue, this is increasingly becoming a reality [68]. Although CNNs appear the most capable of dealing with more representative data examples, there is a significant effort required for their initial development, and manual validation is still needed for accuracy purposes [36].

Consideration should be given to the appropriateness of any image processing technique on a case-by-case basis, because some methods have a high knowledge threshold and extensive time costs for setup. While UAVs offer an advantageous tool for use within tidal stream environments, complementary postprocessing methods must be used in a balanced manner that enhances the tool rather than creating further barriers to its use. Automated methods can nonetheless reduce manual effort by narrowing the search area for user validation. To keep a balance between efficiency and accuracy, it is crucial to consider the facets of image processing that are best suited for automation while keeping manual control over parts requiring increased precision.

4. Current Examples and Future Recommendations of Multirotor UAV Usage within Tidal Stream Environments

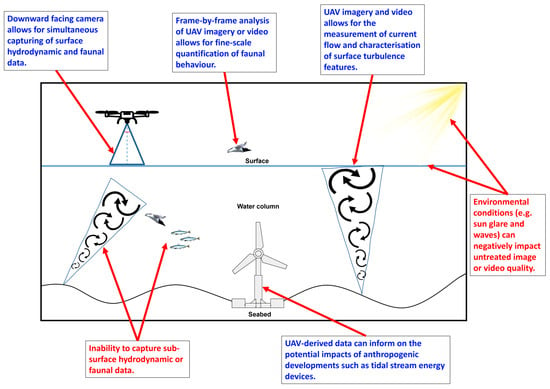

With the relevant UAV advantages, limitations and complementary image processing techniques described, it is also important to detail the current examples of usage within tidal stream environments (Table S2). UAV usage within tidal stream environments at present is limited and covers three primary areas: measurements of current flow, characterisation of surface turbulence habitat features and the exploration of fine-scale (metres and seconds) animal habitat use (Figure 6).

Figure 6. A visualisation of UAV advantages (blue text) and limitations (red text) within a tidal stream environment, highlighting the capability of simultaneously capturing hydrodynamic (represented by the blue lines and black arrows) and ecological (represented by the seabirds and fish) video or imagery data in relation to anthropogenic developments (represented by the grey tidal turbine). The blue triangle indicates the field of view and area coverage of the UAV camera.

4.1. Current Examples

UAVs can provide measurements of flow conditions that are a valuable addition to tidal stream characterisation and allow MRE developers to carry out initial site selection, device micrositing and flow structure analysis [91]. This has been achieved using image processing methods incorporating particle tracking (dense optical flow and particle image velocimetry (PIV), e.g., PIVlab version 2.63.0.0, software with an inbuilt graphical user interface for PIV data extraction [92]) and has been evaluated at multiple tidal stream sites, providing complementary coverage, resolution and accuracy compared with acoustic Doppler current profiler (ADCP) techniques [91,93]. Short stationary hover flights (at an altitude of 120 m) were undertaken, with UAVs orientated so that video was recorded parallel to the prevailing current flow direction. Image preprocessing (greyscale conversion and contrast limited adaptive histogram equalisation (CLAHE)) was then applied to image stills extracted from the video recordings to improve PIVlab’s measurement capabilities. Although flow measurement inaccuracies were detected due to an inability to incorporate turbulent features, UAVs demonstrated a low-cost, low-risk technique to collect measurements of surface currents within tidal stream sites [93].
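The principle behind PIV can be illustrated with a deliberately simplified sketch: the surface texture inside a small interrogation window of one frame is searched for in the next frame, and the shift giving the highest cross-correlation is converted to a velocity. The code below is not PIVlab's algorithm and omits refinements such as sub-pixel peak fitting; it works on a synthetic image pair, and the ground sampling distance and frame interval are hypothetical values chosen for illustration only.

```python
import random


def window_values(frame, top, left, size):
    """Mean-subtracted pixel values of one square window, as a flat list."""
    values = [frame[r][c] for r in range(top, top + size)
              for c in range(left, left + size)]
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def best_shift(frame_a, frame_b, top, left, size, max_shift):
    """Integer (dy, dx) maximising normalised cross-correlation for one window."""
    ref = window_values(frame_a, top, left, size)
    ref_energy = sum(v * v for v in ref)
    best, best_score = (0, 0), float("-inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            cand = window_values(frame_b, top + dy, left + dx, size)
            energy = ref_energy * sum(v * v for v in cand)
            if energy == 0:
                continue  # featureless window, correlation undefined
            score = sum(a * b for a, b in zip(ref, cand)) / energy ** 0.5
            if score > best_score:
                best, best_score = (dy, dx), score
    return best


# Synthetic pair: frame_b is frame_a with its texture moved 3 px right and
# 2 px up (image rows increase downwards, so "up" is a negative dy).
rng = random.Random(0)
SIZE = 32
frame_a = [[rng.random() for _ in range(SIZE)] for _ in range(SIZE)]
true_dy, true_dx = -2, 3
frame_b = [[frame_a[(r - true_dy) % SIZE][(c - true_dx) % SIZE]
            for c in range(SIZE)] for r in range(SIZE)]

dy, dx = best_shift(frame_a, frame_b, top=10, left=10, size=12, max_shift=4)

GSD_M_PER_PX = 0.05     # hypothetical ground sampling distance (m per pixel)
FRAME_INTERVAL_S = 0.5  # hypothetical time between the two stills (s)
u = dx * GSD_M_PER_PX / FRAME_INTERVAL_S  # velocity along image columns (m/s)
v = dy * GSD_M_PER_PX / FRAME_INTERVAL_S  # velocity along image rows (m/s)
print(f"shift=({dy}, {dx}) px, u={u:.2f} m/s, v={v:.2f} m/s")
```

Repeating the search over a grid of windows yields a velocity field. The method depends on visible surface texture, so contrast-enhancing preprocessing can strengthen the correlation peak in low-contrast imagery.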

UAV imagery has also allowed for the characterisation of individual turbulent flow structures. Empirical measurements of turbulent features, such as kolk-boils, are important for informing turbine power output, design and micrositing while also providing key metrics to help assess animal distribution and habitat usage patterns. It was demonstrated, within the Pentland Firth (UK), that UAV imagery could capture and map kolk-boils at the surface throughout a tidal stream environment [94]. This was achieved through UAV surveys conducted against the prevailing current flow at 70 m altitude, collecting imagery data of the sea’s surface. Images were then manually processed within a user interface that allowed for specific measurements (distribution, size and classification) of kolk-boils to be taken. The study also highlighted environmental drivers, such as tidal phase and current velocity, of kolk-boil presence and distribution, allowing for increased predictability of what has often been described as an “ephemeral” occurrence [94].
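Measuring the size of a surface feature from imagery requires converting pixels to metres. The sketch below uses the standard geometric relationship for a downward-facing (nadir) camera over a flat surface, ignoring lens distortion. The 70 m altitude is taken from the survey described above, whereas the field of view, image width and feature size in pixels are hypothetical and do not describe the camera used in [94].

```python
import math


def ground_footprint_m(altitude_m, fov_deg):
    """Width of sea surface covered by a nadir-pointing camera (flat surface assumed)."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def ground_sampling_distance_m(altitude_m, fov_deg, image_width_px):
    """Metres of sea surface represented by one pixel across the image width."""
    return ground_footprint_m(altitude_m, fov_deg) / image_width_px


ALTITUDE_M = 70.0       # survey altitude reported in the text
HFOV_DEG = 70.0         # hypothetical horizontal field of view
IMAGE_WIDTH_PX = 4000   # hypothetical image width

footprint = ground_footprint_m(ALTITUDE_M, HFOV_DEG)
gsd = ground_sampling_distance_m(ALTITUDE_M, HFOV_DEG, IMAGE_WIDTH_PX)
feature_length_m = 400 * gsd  # a feature spanning 400 px in the image

print(f"footprint={footprint:.1f} m, GSD={gsd * 100:.2f} cm/px, "
      f"feature={feature_length_m:.1f} m")
```

Under these assumed camera values, the image covers roughly 98 m of sea surface at about 2.5 cm per pixel. Flying higher widens the coverage but coarsens the resolution in proportion.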

Turbulent features are hypothesised to create fine spatiotemporal foraging hotspots through the overturning or displacement of prey (fish) species [19]. UAVs provide a tool that can take direct measurements of these associations, which are often unobtainable through traditional methods. Studies to date have focussed on seabird species and highlighted that foraging strategy dictates the nature of this relationship. UAV stationary hover and transiting surveys (at altitudes >70 m) incorporating both manual and machine learning image processing techniques allowed seabirds to be tracked and measured on a frame-by-frame basis in relation to human-made and naturally occurring turbulent features [23,95]. This ability to track fine-scale seabird behaviour highlighted that surface foraging species displayed an attraction towards features because prey were moved towards the surface and were easier to hunt [23]. Conversely, diving seabirds were found to be attracted to general areas of turbulence but avoided individual features, potentially due to the energy expenditure of swimming through them [95]. These novel findings of biophysical interactions within tidal stream environments, at a species level of identification, would not have been possible without the use of standardised UAV imagery and video footage.
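A frame-by-frame association between a tracked bird and nearby turbulent features can be expressed as a simple distance calculation. The sketch below is illustrative only and does not reproduce the analyses in [23,95]. It assumes that bird positions and feature centroids have already been extracted and converted to metres in a common coordinate system, and all names and values are hypothetical.

```python
import math


def nearest_feature_distance(bird_xy, features_xy):
    """Distance (m) from one bird position to the closest feature centroid."""
    return min(math.hypot(bird_xy[0] - fx, bird_xy[1] - fy)
               for fx, fy in features_xy)


def track_distances(track, features_by_frame):
    """Map frame number to bird-to-nearest-feature distance where features exist."""
    distances = {}
    for frame, bird_xy in track.items():
        features = features_by_frame.get(frame)
        if features:  # skip frames with no mapped features
            distances[frame] = nearest_feature_distance(bird_xy, features)
    return distances


# Hypothetical data: one bird tracked over three frames, coordinates in metres.
track = {0: (0.0, 0.0), 1: (3.0, 4.0), 2: (10.0, 10.0)}
features_by_frame = {
    0: [(3.0, 4.0)],
    1: [(3.0, 4.0), (20.0, 20.0)],
}

distances = track_distances(track, features_by_frame)
print(distances)
```

Treating each feature as a single centroid is a simplification; a feature with a measured extent would call for distance to its boundary instead.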

4.2. Future Recommendations

For continued usage within tidal stream environments, UAV standardisation should retain some flexibility, especially when considering potential ecological disturbance. This is highlighted by Rush et al. [31], who concluded that while UAVs offered great potential for the accurate surveying of colony-nesting lesser black-backed gulls (Larus fuscus), with minimal disturbance noted, future surveys on different bird species would require appropriate levels of behavioural assessment on a species-by-species basis. Such work would ideally reflect all relevant ethical considerations, adhere to civil aviation laws, accurately detail the methodologies used and design surveys with appropriate precautions, in line with other guidelines detailing good research practice [51]. Increased collection of species-specific data to quantify potential disturbance relative to traditional techniques is a definite requirement as the use of multirotor UAVs increases within ecological surveys [36]. Ideally, this should be performed in tandem with applications of the technology and not as a retrospective measure to validate findings.

UAVs provide high-definition image and video quality that can allow for the successful quantification of fine-scale biophysical interactions occurring within a tidal stream environment [57]. However, UAV-derived data are limited to what is visible at the surface, with survey duration heavily determined by the flight time capacity of the device [96]. This means that UAV usage is unable to provide a complete picture of biophysical interactions, as many faunal species (marine mammals, sharks and pelagic or benthic foraging seabirds) interact with the habitat both at and below the water’s surface. Understanding the impact of anthropogenic devices, such as tidal turbines, therefore requires prey, predator and hydrodynamic information collected throughout the entirety of the water column [24]. Active and passive acoustic monitoring devices offer the ability to quantify fine-scale subsurface hydrodynamic and faunal interactions within tidal stream environments [24,97,98]. While UAVs can be used as a singular ecological survey tool to provide unique and novel datasets, it is recommended that they be used as part of a wider array. A multifaceted approach utilising UAV- and acoustic-derived data would allow for assessments to span multiple trophic levels throughout the entirety of the water column and more accurately inform on the potential environmental impacts of anthropogenic developments within tidal stream environments.

It is also important to focus on the development of UAVs and image processing so that they continue to be a viable environmental survey tool. Regarding the technology itself, while battery limitations have not hampered data collection efforts in tidal stream environments (described in Section 4.1), advancements in battery technology will increase flight durations, which, in turn, will improve survey efficiency and effort [61]. Advancements in image processing techniques will also help to mitigate potentially data-degrading environmental factors within tidal stream environments, such as sun glare and waves. In particular, the focus should be on the incorporation of machine learning techniques, such as deep learning, which have been found to be the most suited to dealing with complex problems and busy environments [99]. These could be applied through image preprocessing, to allow objects of interest to be observed more clearly, or to increase the automation of data processing, allowing quicker turnaround times for results. However, as with any multifaceted methodology, computationally taxing image processing techniques should be added on a case-by-case basis that considers the trade-offs between surveying efficiency, accuracy and methodological complexity.

5. Conclusions

This article showcases the use of multirotor UAVs as a scientific survey tool for fine-scale data collection, discusses relevant image processing techniques and highlights current examples of UAV usage within tidal stream environments.

Multirotor UAVs can collect positionally accurate digital imagery that provides fine-scale spatiotemporal data in environments that are often considered challenging to survey. While limitations exist in the form of battery life and bottlenecks in the manual postprocessing of imagery, the adaptability of UAVs allows these challenges to be managed and makes standardised usage appropriate within tidal stream environments. Complementary image processing techniques offer a means of reducing manual postprocessing bottlenecks, with many variations available depending on user requirements. In particular, deep learning, a form of machine learning, offers the greatest potential for UAV-based object detection tasks within tidal stream environments.

UAVs have already been used in a range of different applications within tidal stream sites. Survey work includes the mapping of surface currents, the characterisation of surface turbulent features and the quantification of fine-scale animal habitat usage. These tasks are achievable because UAVs are low cost and low risk within what is often described as a “challenging survey environment” and because they capture data at spatiotemporal scales appropriate for highlighting these biophysical interactions.

It is clear from this paper that UAVs and relevant image processing techniques offer an advantageous tool for use within tidal stream environments. This can provide complementary and novel insights to go alongside outputs from conventional survey methods. However, the appropriateness of the tool itself and relevant postprocessing methods must be evaluated on a case-by-case basis. Within tidal stream environments, this must specifically consider potential environmental disturbance, the technological limitations of devices themselves and the trade-off between image processing complexity and survey efficiency.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jmse11122298/s1, Table S1: literature examined in relation to multirotor UAV advantages and limitations of use detailing origin, purpose and summary of work, Table S2: literature examined in relation to UAV usage within tidal stream environments detailing origin, purpose, UAV specifications and methodology, image processing techniques and summary of work.

Author Contributions

Conceptualisation, J.S., B.E.S., L.K., J.M., J.W. and B.J.W.; methodology, J.S., B.E.S., L.K., J.M., J.W. and B.J.W.; investigation, J.S.; writing—original draft preparation, J.S.; writing—review and editing, J.S., B.E.S., L.K., J.M., J.W. and B.J.W.; supervision, B.E.S., L.K., J.M., J.W. and B.J.W.; project administration, B.J.W.; funding acquisition, B.E.S., L.K., J.M., J.W. and B.J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Bryden Centre project, supported by the European Union’s INTERREG VA Programme and managed by the Special EU Programmes Body (SEUPB). The views and opinions expressed in this paper do not necessarily reflect those of the European Commission or the Special EU Programmes Body (SEUPB). Aspects of this research were also funded by a Royal Society Research Grant (RSG\R1\180430), the NERC VertIBase project (NE/N01765X/1), the UK Department for Business, Energy, and Industrial Strategy’s offshore energy Strategic Environmental Assessment programme and EPSRC Supergen ORE Hub (EP/S000747/1).

Data Availability Statement

The data underlying this article will be shared on reasonable request by the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- BEIS. Net Zero Strategy: Build Back Greener; HM Government: London, UK, 2021.

- Lewis, M.; Neill, S.P.; Robins, P.E.; Hashemi, M.R. Resource Assessment for Future Generations of Tidal-Stream Energy Arrays. Energy 2015, 83, 403–415.

- Scherelis, C.; Penesis, I.; Hemer, M.A.; Cossu, R.; Wright, J.T.; Guihen, D. Investigating Biophysical Linkages at Tidal Energy Candidate Sites: A Case Study for Combining Environmental Assessment and Resource Characterisation. Renew. Energy 2020, 159, 399–413.

- Zambrano, C. Lessons Learned from Subsea Tidal Kite Quarter Scale Ocean Trials. In Proceedings of the WTE16—Second Workshop on Wave and Tidal Energy, Valdivia, Chile, 16–18 November 2016.

- Magagna, D.; Uihlein, A. Ocean Energy Development in Europe: Current Status and Future Perspectives. Int. J. Mar. Energy 2015, 11, 84–104.

- Jha, R.; Singh, V.P.; Singh, V.; Roy, L.B.; Thendiyath, R. River and Coastal Engineering; Springer: Berlin/Heidelberg, Germany, 2022; Volume 117.

- Burrows, R.; Yates, N.C.; Hedges, T.S.; Li, M.; Zhou, J.G.; Chen, D.Y.; Walkington, I.A.; Wolf, J.; Holt, J.; Proctor, R. Tidal Energy Potential in UK Waters. Proc. Inst. Civ. Eng. Marit. Eng. 2009, 162, 155–164.

- Coles, D.; Angeloudis, A.; Greaves, D.; Hastie, G.; Lewis, M.; MacKie, L.; McNaughton, J.; Miles, J.; Neill, S.; Piggott, M.; et al. A Review of the UK and British Channel Islands Practical Tidal Stream Energy Resource. Proc. R. Soc. A Math. Phys. Eng. Sci. 2021, 477, 20210469.

- Isaksson, N.; Masden, E.A.; Williamson, B.J.; Costagliola-Ray, M.M.; Slingsby, J.; Houghton, J.D.R.; Wilson, J. Assessing the Effects of Tidal Stream Marine Renewable Energy on Seabirds: A Conceptual Framework. Mar. Pollut. Bull. 2020, 157, 111314.

- Rajgor, G. Tidal Developments Power Forward. Renew. Energy Focus 2016, 17, 147–149.

- Fox, C.J.; Benjamins, S.; Masden, E.A.; Miller, R. Challenges and Opportunities in Monitoring the Impacts of Tidal-Stream Energy Devices on Marine Vertebrates. Renew. Sustain. Energy Rev. 2018, 81, 1926–1938.

- Copping, A.; Battey, H.; Brown-Saracino, J.; Massaua, M.; Smith, C. An International Assessment of the Environmental Effects of Marine Energy Development. Ocean. Coast Manag. 2014, 99, 3–13.

- Hunt, G.L.; Russell, R.W.; Coyle, K.O.; Weingartner, T. Comparative Foraging Ecology of Planktivorous Auklets in Relation to Ocean Physics and Prey Availability. Mar. Ecol. Prog. Ser. 1998, 167, 241–259.

- European Parliament. Directive 2009/147/EC of the European Parliament and of the Council. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32009L0147 (accessed on 2 June 2022).

- Department for Environment Food & Rural Affairs. The Conservation of Habitats and Species (Amendment) (EU Exit) Regulations 2019. Available online: https://www.legislation.gov.uk/ukdsi/2019/9780111176573 (accessed on 14 November 2023).

- Frid, C.; Andonegi, E.; Depestele, J.; Judd, A.; Rihan, D.; Rogers, S.I.; Kenchington, E. The Environmental Interactions of Tidal and Wave Energy Generation Devices. Environ. Impact Assess. Rev. 2012, 32, 133–139.

- Furness, R.W.; Wade, H.M.; Robbins, A.M.C.; Masden, E.A. Assessing the Sensitivity of Seabird Populations to Adverse Effects from Tidal Stream Turbines and Wave Energy Devices. ICES J. Mar. Sci. 2012, 69, 1466–1479.

- Hutchison, I. Wave and Tidal Stream Critical Evidence Needs. Available online: https://tethys.pnnl.gov/publications/wave-tidal-stream-critical-evidence-needs (accessed on 29 January 2021).

- Lieber, L.; Nimmo-Smith, W.A.M.; Waggitt, J.J.; Kregting, L. Localised Anthropogenic Wake Generates a Predictable Foraging Hotspot for Top Predators. Commun. Biol. 2019, 2, 123.

- Waggitt, J.J.; Cazenave, P.W.; Torres, R.; Williamson, B.J.; Scott, B.E. Predictable Hydrodynamic Conditions Explain Temporal Variations in the Density of Benthic Foraging Seabirds in a Tidal Stream Environment. ICES J. Mar. Sci. 2016, 73, 2677–2686.

- Waggitt, J.J.; Cazenave, P.W.; Torres, R.; Williamson, B.J.; Scott, B.E. Quantifying Pursuit-Diving Seabirds’ Associations with Fine-Scale Physical Features in Tidal Stream Environments. J. Appl. Ecol. 2016, 53, 1653–1666.

- Johnston, D.T.; Furness, R.W.; Robbins, A.M.C.; Tyler, G.; Taggart, M.A.; Masden, E.A. Black Guillemot Ecology in Relation to Tidal Stream Energy Generation: An Evaluation of Current Knowledge and Information Gaps. Mar. Environ. Res. 2018, 134, 121–129.

- Lieber, L.; Langrock, R.; Nimmo-Smith, A. A Bird’s Eye View on Turbulence: Seabird Foraging Associations with Evolving Surface Flow Features. Proc. R. Soc. B 2021, 288, 20210592.

- Couto, A.; Williamson, B.J.; Cornulier, T.; Fernandes, P.G.; Fraser, S.; Chapman, J.D.; Davies, I.M.; Scott, B.E. Tidal Streams, Fish, and Seabirds: Understanding the Linkages between Mobile Predators, Prey, and Hydrodynamics. Ecosphere 2022, 13, e4080.

- Chabot, D.; Bird, D.M. Wildlife Research and Management Methods in the 21st Century: Where Do Unmanned Aircraft Fit In? J. Unmanned Veh. Syst. 2015, 3, 137–155.

- Anderson, K.; Gaston, K.J. Lightweight Unmanned Aerial Vehicles Will Revolutionize Spatial Ecology. Front. Ecol. Environ. 2013, 11, 138–146.

- Hendrickx, M.; Gheyle, W.; Bonne, J.; Bourgeois, J.; de Wulf, A.; Goossens, R. The Use of Stereoscopic Images Taken from a Microdrone for the Documentation of Heritage—An Example from the Tuekta Burial Mounds in the Russian Altay. J. Archaeol. Sci. 2011, 38, 2968–2978.

- Christie, K.S.; Gilbert, S.L.; Brown, C.L.; Hatfield, M.; Hanson, L. Unmanned Aircraft Systems in Wildlife Research: Current and Future Applications of a Transformative Technology. Front. Ecol. Environ. 2016, 14, 241–251.

- Barnas, A.F.; Chabot, D.; Hodgson, A.J.; Johnston, D.W.; Bird, D.M.; Ellis-Felege, S.N. A Standardized Protocol for Reporting Methods When Using Drones for Wildlife Research. J. Unmanned Veh. Syst. 2020, 8, 89–98.

- Wolinsky, H. Biology Goes in the Air. EMBO Rep. 2017, 18, 1284–1289.

- Rush, G.P.; Clarke, L.E.; Stone, M.; Wood, M.J. Can Drones Count Gulls? Minimal Disturbance and Semiautomated Image Processing with an Unmanned Aerial Vehicle for Colony-Nesting Seabirds. Ecol. Evol. 2018, 8, 12322–12334.

- Waite, C.E.; van der Heijden, G.M.F.; Field, R.; Boyd, D.S. A View from above: Unmanned Aerial Vehicles (UAVs) Provide a New Tool for Assessing Liana Infestation in Tropical Forest Canopies. J. Appl. Ecol. 2019, 56, 902–912.

- Colefax, A.P.; Butcher, P.A.; Kelaher, B.P. The Potential for Unmanned Aerial Vehicles (UAVs) to Conduct Marine Fauna Surveys in Place of Manned Aircraft. ICES J. Mar. Sci. 2018, 75, 1–8.

- Hodgson, J.C.; Baylis, S.M.; Mott, R.; Herrod, A.; Clarke, R.H. Precision Wildlife Monitoring Using Unmanned Aerial Vehicles. Sci. Rep. 2016, 6, 22574.

- Yaney-Keller, A.; San Martin, R.; Reina, R.D. Comparison of UAV and Boat Surveys for Detecting Changes in Breeding Population Dynamics of Sea Turtles. Remote Sens. 2021, 13, 2857.

- Edney, A.J.; Wood, M.J. Applications of Digital Imaging and Analysis in Seabird Monitoring and Research. Ibis 2020, 163, 317–337.

- Kiszka, J.J.; Mourier, J.; Gastrich, K.; Heithaus, M.R. Using Unmanned Aerial Vehicles (UAVs) to Investigate Shark and Ray Densities in a Shallow Coral Lagoon. Mar. Ecol. Prog. Ser. 2016, 560, 237–242.

- Sweeney, K.L.; Helker, V.T.; Perryman, W.L.; LeRoi, D.J.; Fritz, L.W.; Gelatt, T.S.; Angliss, R.P. Flying beneath the Clouds at the Edge of the World: Using a Hexacopter to Supplement Abundance Surveys of Steller Sea Lions (Eumetopias Jubatus) in Alaska. J. Unmanned Veh. Syst. 2016, 4, 70–81.

- Casella, E.; Collin, A.; Harris, D.; Ferse, S.; Bejarano, S.; Parravicini, V.; Hench, J.L.; Rovere, A. Mapping Coral Reefs Using Consumer-Grade Drones and Structure from Motion Photogrammetry Techniques. Coral Reefs 2017, 36, 269–275.

- Li, T.; Zhang, B.; Cheng, X.; Hui, F.; Li, Y. Leveraging the UAV to Support Chinese Antarctic Expeditions: A New Perspective. Adv. Polar. Sci. 2021, 32, 67–74.

- Smith, C.E.; Sykora-Bodie, S.T.; Bloodworth, B.; Pack, S.M.; Spradlin, T.R.; LeBoeuf, N.R. Assessment of Known Impacts of Unmanned Aerial Systems (UAS) on Marine Mammals: Data Gaps and Recommendations for Researchers in the United States. J. Unmanned Veh. Syst. 2016, 4, 31–44.

- Goebel, M.E.; Perryman, W.L.; Hinke, J.T.; Krause, D.J.; Hann, N.A.; Gardner, S.; LeRoi, D.J. A Small Unmanned Aerial System for Estimating Abundance and Size of Antarctic Predators. Polar. Biol. 2015, 38, 619–630.

- Yang, Z.; Yu, X.; Dedman, S.; Rosso, M.; Zhu, J.; Yang, J.; Xia, Y.; Tian, Y.; Zhang, G.; Wang, J. UAV Remote Sensing Applications in Marine Monitoring: Knowledge Visualization and Review. Sci. Total Environ. 2022, 838, 155939.

- Durban, J.W.; Michael, S.A.; Moore, J.; Gloria, S.A.; Bahamonde, P.-L.A.; Perryman, W.L.; Donald, S.A.; Leroi, J. Photogrammetry of Blue Whales with an Unmanned Hexacopter. Notes Mar. Mammal Sci. 2016, 32, 1510–1515.

- Durban, J.; Fearnbach, H.; Barrett-Lennard, L.; Perryman, W.; Leroi, D. Photogrammetry of Killer Whales Using a Small Hexacopter Launched at Sea. J. Unmanned Veh. Syst. 2015, 3, 131–135.

- Sardà-Palomera, F.; Bota, G.; Viñolo, C.; Pallarés, O.; Sazatornil, V.; Brotons, L.; Gomáriz, S.; Sardà, F. Fine-Scale Bird Monitoring from Light Unmanned Aircraft Systems. Ibis 2012, 154, 177–183.

- Rieucau, G.; Kiszka, J.J.; Castillo, J.C.; Mourier, J.; Boswell, K.M.; Heithaus, M.R. Using Unmanned Aerial Vehicle (UAV) Surveys and Image Analysis in the Study of Large Surface-Associated Marine Species: A Case Study on Reef Sharks Carcharhinus Melanopterus Shoaling Behaviour. J. Fish Biol. 2018, 93, 119–127.

- Borowicz, A.; McDowall, P.; Youngflesh, C.; Sayre-Mccord, T.; Clucas, G.; Herman, R.; Forrest, S.; Rider, M.; Schwaller, M.; Hart, T.; et al. Multi-Modal Survey of Adélie Penguin Mega-Colonies Reveals the Danger Islands as a Seabird Hotspot. Sci. Rep. 2018, 8, 3926.

- Gooday, O.J.; Key, N.; Goldstien, S.; Zawar-Reza, P. An Assessment of Thermal-Image Acquisition with an Unmanned Aerial Vehicle (UAV) for Direct Counts of Coastal Marine Mammals Ashore. J. Unmanned Veh. Syst. 2018, 6, 100–108.

- Žydelis, R.; Dorsch, M.; Heinänen, S.; Nehls, G.; Weiss, F. Comparison of Digital Video Surveys with Visual Aerial Surveys for Bird Monitoring at Sea. J. Ornithol. 2019, 160, 567–580.

- Hodgson, J.C.; Koh, L.P. Best Practice for Minimising Unmanned Aerial Vehicle Disturbance to Wildlife in Biological Field Research. Curr. Biol. 2016, 26, R404–R405.

- Acevedo-Whitehouse, K.; Rocha-Gosselin, A.; Gendron, D. A Novel Non-Invasive Tool for Disease Surveillance of Free-Ranging Whales and Its Relevance to Conservation Programs. Anim. Conserv. 2010, 13, 217–225.

- Geoghegan, J.L.; Pirotta, V.; Harvey, E.; Smith, A.; Buchmann, J.P.; Ostrowski, M.; Eden, J.S.; Harcourt, R.; Holmes, E.C. Virological Sampling of Inaccessible Wildlife with Drones. Viruses 2018, 10, 300.

- Christiansen, F.; Rojano-Doñate, L.; Madsen, P.T.; Bejder, L. Noise Levels of Multi-Rotor Unmanned Aerial Vehicles with Implications for Potential Underwater Impacts on Marine Mammals. Front. Mar. Sci. 2016, 3, 277.

- Pomeroy, P.; O’Connor, L.; Davies, P. Assessing Use of and Reaction to Unmanned Aerial Systems in Gray and Harbor Seals during Breeding and Molt in the UK. J. Unmanned Veh. Syst. 2015, 3, 102–113.

- Zink, R.; Kmetova-Biro, E.; Agnezy, S.; Klisurov, I.; Margalida, A. Assessing the Potential Disturbance Effects on the Use of Unmanned Aircraft Systems (UASs) for European Vultures Research: A Review and Conservation Recommendations. Bird Conserv. Int. 2023, 33, e45.

- Verfuss, U.K.; Aniceto, A.S.; Harris, D.V.; Gillespie, D.; Fielding, S.; Jiménez, G.; Johnston, P.; Sinclair, R.R.; Sivertsen, A.; Solbø, S.A.; et al. A Review of Unmanned Vehicles for the Detection and Monitoring of Marine Fauna. Mar. Pollut. Bull. 2019, 140, 17–29.

- Fiori, L.; Doshi, A.; Martinez, E.; Orams, M.B.; Bollard-Breen, B. The Use of Unmanned Aerial Systems in Marine Mammal Research. Remote Sens. 2017, 9, 543.

- Rees, A.; Avens, L.; Ballorain, K.; Bevan, E.; Broderick, A.C.; Carthy, R.R.; Christianen, M.J.A.; Duclos, G.; Heithaus, M.R.; Johnston, D.W.; et al. The Potential of Unmanned Aerial Systems for Sea Turtle Research and Conservation: A Review and Future Directions. Endanger. Species Res. 2018, 35, 81–100.

- Zu, C.X.; Li, H. Thermodynamic Analysis on Energy Densities of Batteries. Energy Environ. Sci. 2011, 4, 2614–2624.

- Galkin, B.; Kibilda, J.; DaSilva, L.A. UAVs as Mobile Infrastructure: Addressing Battery Lifetime. IEEE Commun. Mag. 2019, 57, 132–137.

- Lee, D.; Zhou, J.; Lin, W.T. Autonomous Battery Swapping System for Quadcopter. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems, ICUAS, Denver, CO, USA, 9–12 June 2015; pp. 118–124.

- Mangewa, L.J.; Ndakidemi, P.A.; Munishi, L.K. Integrating UAV Technology in an Ecological Monitoring System for Community Wildlife Management Areas in Tanzania. Sustainability 2019, 11, 6116.

- Hardin, P.; Jensen, R. Small-Scale Unmanned Aerial Vehicles in Environmental Remote Sensing: Challenges and Opportunities. GIScience Remote Sens. 2011, 48, 99–111.

- Hodgson, A.; Kelly, N.; Peel, D. Unmanned Aerial Vehicles (UAVs) for Surveying Marine Fauna: A Dugong Case Study. PLoS ONE 2013, 8, e79556.

- Ortega-Terol, D.; Hernandez-Lopez, D.; Ballesteros, R.; Gonzalez-Aguilera, D. Automatic Hotspot and Sun Glint Detection in UAV Multispectral Images. Sensors 2017, 17, 2352.

- Drever, M.C.; Chabot, D.; O’hara, P.D.; Thomas, J.D.; Breault, A.; Millikin, R.L. Evaluation of an Unmanned Rotorcraft to Monitor Wintering Waterbirds and Coastal Habitats in British Columbia, Canada. J. Unmanned Veh. Syst. 2015, 3, 259–267.

- Chabot, D.; Francis, C.M. Computer-Automated Bird Detection and Counts in High-Resolution Aerial Images: A Review. J. Field Ornithol. 2016, 87, 343–359.

- Glasbey, C.A.; Horgan, G.W.; Darbyshire, J.F. Image Analysis and Three-dimensional Modelling of Pores in Soil Aggregates. J. Soil Sci. 1991, 42, 479–486.

- Laliberte, S.; Ripple, W.J. Automated Wildlife Counts from Remotely Sensed Imagery. Wildl. Soc. Bull. 2003, 31, 362–371.

- Trathan, P.N. Image Analysis of Color Aerial Photography to Estimate Penguin Population Size. Wildl. Soc. Bull. 2004, 32, 332–343.

- Hollings, T.; Burgman, M.; van Andel, M.; Gilbert, M.; Robinson, T.; Robinson, A. How Do You Find the Green Sheep? A Critical Review of the Use of Remotely Sensed Imagery to Detect and Count Animals. Methods Ecol. Evol. 2018, 9, 881–892.

- Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 3523–3542.

- Wellner, P.D. Adaptive Thresholding for the DigitalDesk; Rank Xerox Ltd.: Cambridge, UK, 1993.

- Bradley, D.; Roth, G. Adaptive Thresholding Using the Integral Image. J. Graph. Tools 2007, 12, 13–21.

- LaRue, M.A.; Stapleton, S.; Porter, C.; Atkinson, S.; Atwood, T.; Dyck, M.; Lecomte, N. Testing Methods for Using High-Resolution Satellite Imagery to Monitor Polar Bear Abundance and Distribution. Wildl. Soc. Bull. 2015, 39, 772–779.

- Fretwell, P.T.; LaRue, M.A.; Morin, P.; Kooyman, G.L.; Wienecke, B.; Ratcliffe, N.; Fox, A.J.; Fleming, A.H.; Porter, C.; Trathan, P.N. An Emperor Penguin Population Estimate: The First Global, Synoptic Survey of a Species from Space. PLoS ONE 2012, 7, e33751.

- Fretwell, P.T.; Staniland, I.J.; Forcada, J. Whales from Space: Counting Southern Right Whales by Satellite. PLoS ONE 2014, 9, e88655.

- Terletzky, P.A.; Ramsey, R.D. Comparison of Three Techniques to Identify and Count Individual Animals in Aerial Imagery. J. Signal Inf. Process. 2016, 7, 123–135.

- Chrétien, L.P.; Théau, J.; Ménard, P. Visible and Thermal Infrared Remote Sensing for the Detection of White-Tailed Deer Using an Unmanned Aerial System. Wildl. Soc. Bull. 2016, 40, 181–191. [Google Scholar] [CrossRef]

- Blaschke, T. Object Based Image Analysis for Remote Sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16. [Google Scholar] [CrossRef]

- Guirado, E.; Tabik, S.; Alcaraz-Segura, D.; Cabello, J.; Herrera, F. Deep-Learning Versus OBIA for Scattered Shrub Detection with Google Earth Imagery: Ziziphus Lotus as Case Study. Remote Sens. 2017, 9, 1220. [Google Scholar] [CrossRef]

- Groom, G.; Petersen, I.K.; Anderson, M.D.; Fox, A.D. Using Object-Based Analysis of Image Data to Count Birds: Mapping of Lesser Flamingos at Kamfers Dam, Northern Cape, South Africa. Int. J. Remote Sens. 2011, 32, 4611–4639. [Google Scholar] [CrossRef]

- Zhong, S.; Zhang, K.; Bagheri, M.; Burken, J.G.; Gu, A.; Li, B.; Ma, X.; Marrone, B.L.; Ren, Z.J.; Schrier, J.; et al. Machine Learning: New Ideas and Tools in Environmental Science and Engineering. Environ. Sci. Technol. 2021, 55, 12741–12754. [Google Scholar] [CrossRef]

- Zhao, R.; Li, C.; Ye, S.; Fang, X. Butterfly Recognition Based on Faster R-CNN. Proc. J. Phys. Conf. Ser. 2019, 1176, 032048. [Google Scholar] [CrossRef]

- Lürig, M.D.; Donoughe, S.; Svensson, E.I.; Porto, A.; Tsuboi, M. Computer Vision, Machine Learning, and the Promise of Phenomics in Ecology and Evolutionary Biology. Front. Ecol. Evol. 2021, 9, 148.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Ben Boudaoud, L.; Maussang, F.; Garello, R.; Chevallier, A. Marine Bird Detection Based on Deep Learning Using High-Resolution Aerial Images. In Proceedings of the OCEANS 2019-Marseille, Marseille, France, 17–20 June 2019; pp. 1–7.

- Maganathan, T.; Senthilkumar, S.; Balakrishnan, V. Machine Learning and Data Analytics for Environmental Science: A Review, Prospects and Challenges. In Proceedings of the IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2020; Volume 955, p. 012107.

- McIlvenny, J.; Williamson, B.J.; Fairley, I.A.; Lewis, M.; Neill, S.; Masters, I.; Reeve, D.E. Comparison of Dense Optical Flow and PIV Techniques for Mapping Surface Current Flow in Tidal Stream Energy Sites. Int. J. Energy Environ. Eng. 2022, 14, 273–285.

- Thielicke, W.; Sonntag, R. Particle Image Velocimetry for MATLAB: Accuracy and Enhanced Algorithms in PIVlab. J. Open Res. Softw. 2021, 9, 12.

- Fairley, I.; Williamson, B.J.; McIlvenny, J.; King, N.; Masters, I.; Lewis, M.; Neill, S.; Glasby, D.; Coles, D.; Powell, B.; et al. Drone-Based Large-Scale Particle Image Velocimetry Applied to Tidal Stream Energy Resource Assessment. Renew. Energy 2022, 196, 839–855.

- Slingsby, J.; Scott, B.E.; Kregting, L.; McIlvenny, J.; Wilson, J.; Couto, A.; Roos, D.; Yanez, M.; Williamson, B.J. Surface Characterisation of Kolk-Boils within Tidal Stream Environments Using UAV Imagery. J. Mar. Sci. Eng. 2021, 9, 484.

- Slingsby, J.; Scott, B.E.; Kregting, L.; McIlvenny, J.; Wilson, J.; Yanez, M.; Langlois, S.; Williamson, B.J. Using Unmanned Aerial Vehicle (UAV) Imagery to Characterise Pursuit-Diving Seabird Association with Tidal Stream Hydrodynamic Habitat Features. Front. Mar. Sci. 2022, 9, 820722.

- Faye, E.; Rebaudo, F.; Yánez-Cajo, D.; Cauvy-Fraunié, S.; Dangles, O. A Toolbox for Studying Thermal Heterogeneity across Spatial Scales: From Unmanned Aerial Vehicle Imagery to Landscape Metrics. Methods Ecol. Evol. 2016, 7, 437–446.

- Williamson, B.J.; Blondel, P.; Williamson, L.D.; Scott, B.E. Application of a Multibeam Echosounder to Document Changes in Animal Movement and Behaviour around a Tidal Turbine Structure. ICES J. Mar. Sci. 2021, 78, 1253–1266.

- Benjamins, S.; Dale, A.; Van Geel, N.; Wilson, B. Riding the Tide: Use of a Moving Tidal-Stream Habitat by Harbour Porpoises. Mar. Ecol. Prog. Ser. 2016, 549, 275–288.

- Albahli, S.; Nawaz, M.; Javed, A.; Irtaza, A. An Improved Faster-RCNN Model for Handwritten Character Recognition. Arab. J. Sci. Eng. 2021, 46, 8509–8523.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).