Abstract

Monitoring offshore infrastructure is challenging owing to the harsh ocean environment. To reduce human involvement in this task, this study proposes an autonomous surface vehicle (ASV)-based structural monitoring system for inspecting power cable lines under the ocean surface. The proposed ASV was equipped with multimodal sonar sensors, including a multibeam echosounder (MBES) and a side-scan sonar (SSS), for mapping the seafloor, combined with a precisely estimated vehicle pose from navigation sensors. In particular, a globally consistent map was built using the orthometric height, estimated from the geoid height received from the GPS, as the vertical reference. The MBES and SSS generate maps of the target objects in the form of point clouds and sonar images, respectively. Dedicated outlier removal methods for MBES data were proposed to preserve sparse inlier points, and SSS image pixels were projected so as to reflect the geometry of the seafloor. A field test was conducted in an ocean environment using real offshore cable lines to verify the effectiveness of the proposed monitoring system.

1. Introduction

The ocean is a huge source of renewable energy. Accordingly, offshore power plants, such as wind and wave farms, have become increasingly important emerging technologies owing to the global demand for zero carbon emissions. Therefore, a proper structural monitoring system is essential during both the construction and the operation of such infrastructure. In addition, recent advances in robotics are gradually making field monitoring tasks safer and more efficient, even in challenging environments such as the ocean [1,2].

Autonomous surface vehicles (ASVs) are being developed for various missions, such as hydrographic surveys [3,4,5], environmental monitoring [6,7], defense [8,9], and first responder support [10,11,12]. The installed exteroceptive and proprioceptive sensors allow an ASV to accurately obtain its position and attitude above the ocean surface. This information can be used for autonomous navigation and mapping.

Compared to the traditional ship-based, airborne or satellite remote sensing systems [13], the ASV mapping system has advantages in its agility and real-time monitoring capabilities. Our ASV can be deployed immediately when the survey is required, and operators can remotely monitor the mapping procedures in real-time at the base station.

The ASV can be equipped with several payload sensors depending on its mission. Sonar is the de facto standard payload sensor for monitoring underwater environments. Because light is strongly absorbed in water, the range of optical cameras or LiDARs [14,15] used for underwater mapping is limited. Sonars, in contrast, have longer sensing ranges (up to several kilometers) and do not depend on lighting conditions. A multibeam echosounder (MBES) provides an array of sonar range and bearing measurements of the seafloor, which can be converted into a point cloud. A side-scan sonar (SSS), on the other hand, obtains a series of sonar intensities from fan-shaped beams emitted by the sonar head; usually, two sonar heads are installed on opposite sides. These intensities can be used to generate sonar images of the seafloor. Significant research has been conducted on ocean mapping using MBES and SSS mounted on ships or autonomous underwater vehicles (AUVs) [16,17,18,19,20]. However, ASVs have rarely been used in offshore environments, and only a few applications have been developed, in inland and shallow waters.

In this study, we developed an ASV for inspecting the offshore power cables that connect wave power plants and offshore electrical substations built in the middle of the ocean. The ASV was equipped with multimodal sonar sensors to acquire the position and shape of the power lines lying on the seafloor. The vehicle performs the inspection task autonomously, and the users only remotely monitor the real-time mapping procedure.

The remainder of this paper is organized as follows. In Section 2, the system design and overall control architecture of the developed ASV are described. In Section 3, the precise motion estimation and consistent mapping are proposed, followed by the experimental results of the field test in Section 4. Finally, Section 5 concludes this study.

2. ASV Development

2.1. Hardware System

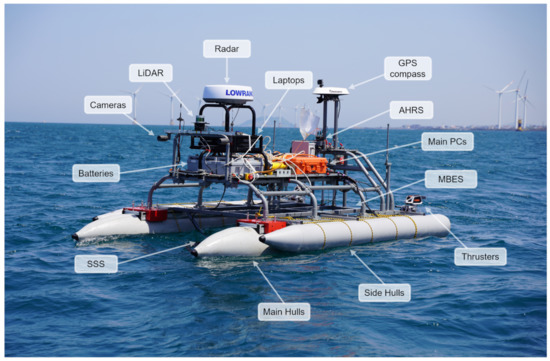

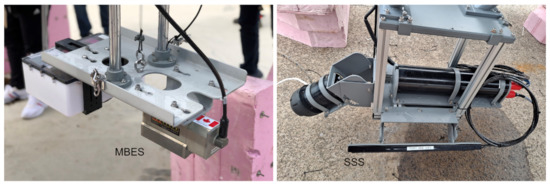

The base platform of the ASV was designed as a catamaran to enable differential thrust. The various component systems, including power, electronic sensors and devices, onboard computers, and wireless communication systems, were integrated above the base, as shown in Figure 1. The detailed configuration of the installed sonar sensors is shown in Figure 2. The MBES and SSS were installed between the main hulls on the stern and bow sides, respectively. Because the two sonar sensors operate at different frequencies that are not integer multiples of each other, no interference between them was observed.

Figure 1.

Developed ASV for offshore cable line inspection.

Figure 2.

Equipped multi-modal sonar sensors. MBES for point cloud generation and SSS for sonar imaging.

For the propulsion system, twin electric outboard thrusters were mounted on the stern of each hull. Three high-performance lithium batteries were installed for electrical power, each with its own battery management system. The battery capacity was chosen to support one-day survey missions. Moreover, a GPS receiver and an attitude and heading reference system (AHRS) were installed to acquire real-time motion information of the vehicle. A situational awareness system (e.g., optical cameras) was installed to inform users of objects floating on the water. Wireless local area networks using Wi-Fi and radio-frequency devices were deployed to exchange operating commands and the current state of the vehicle. Furthermore, wireless remote stops and onboard kill switches were configured to handle emergencies. Table 1 summarizes the specifications of the developed ASV, including the payload sensors for navigation and mapping.

Table 1.

ASV Specifications.

2.2. Control Architecture

Autonomous navigation approaches for tracking pre-assigned waypoints are fundamentally required to achieve various tasks autonomously. Thus, a closed-loop controller was applied to control the course and direct the vehicle towards the waypoint.

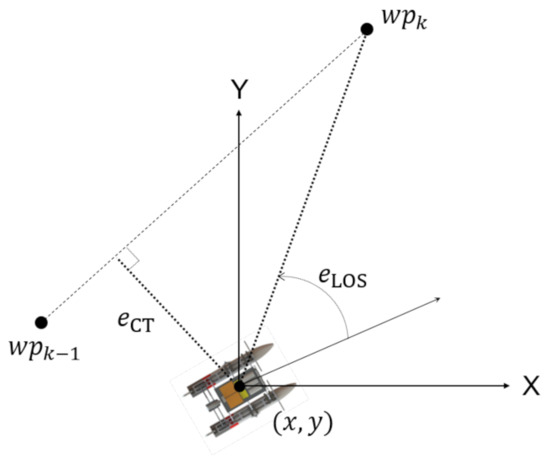

Waypoints were assigned based on the required tasks. Because the vehicle is a nonholonomic system, its motion model for guidance and control was simplified to a three-degree-of-freedom (3-DOF) kinematic model. A guidance approach that minimizes both line-of-sight (LOS) and cross-track (CT) errors was employed, and a conventional proportional-derivative (PD) controller was designed and implemented to steer the vehicle toward the waypoints. During task execution, the control input $u$ of each thruster, determined by the LOS and CT guidance, can be expressed as follows:

where $K_{p}^{\mathrm{LOS}}$ and $K_{d}^{\mathrm{LOS}}$ are the proportional and derivative gains for the LOS guidance, respectively, and $K_{p}^{\mathrm{CT}}$ and $K_{d}^{\mathrm{CT}}$ are the proportional and derivative gains for the CT guidance, respectively. The variables $e_{\psi}$ and $\dot{e}_{\psi}$ denote the heading angle error of the vehicle and its time derivative, and $e_{\mathrm{CT}}$ and $\dot{e}_{\mathrm{CT}}$ denote the CT error and its time derivative, respectively. In addition, $\rho$ is defined as the ratio of the distance from the vehicle to the current goal waypoint to the distance between the previous and current waypoints; if $\rho$ exceeds 1, it is set to 1. The relative weighting of the two guidance terms is adjusted using $\rho$. The definitions of these tracking performance indices are presented in Figure 3.

Figure 3.

Illustration of waypoint tracking performance indices.
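The following Python sketch illustrates how the tracking quantities defined above could be combined into differential thrust commands. It is a minimal sketch under stated assumptions, not the authors' controller: the blending rule `(1 - rho) * LOS + rho * CT` (with `rho` the capped distance ratio defined above), the sign conventions (ENU frame, yaw measured counterclockwise from east, positive cross-track error to the left of the path), the nominal surge command `u_surge`, and all function and class names are hypothetical.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def tracking_errors(pos, heading, wp_prev, wp_goal):
    """Return (heading error, signed cross-track error, rho) for one path segment.

    Assumed conventions: ENU coordinates, heading measured counterclockwise
    from east, cross-track error positive when the vehicle is left of the path.
    """
    (px, py), (x0, y0), (x1, y1) = pos, wp_prev, wp_goal
    seg_len = math.hypot(x1 - x0, y1 - y0)
    # Signed perpendicular distance from the line through the two waypoints.
    e_ct = ((x1 - x0) * (py - y0) - (y1 - y0) * (px - x0)) / seg_len
    # LOS heading error: bearing to the goal waypoint minus current heading.
    e_psi = wrap_angle(math.atan2(y1 - py, x1 - px) - heading)
    # rho: remaining distance to the goal over the segment length, capped at 1.
    rho = min(math.hypot(x1 - px, y1 - py) / seg_len, 1.0)
    return e_psi, e_ct, rho


class BlendedPDController:
    """Hypothetical PD steering that blends LOS and CT terms with rho."""

    def __init__(self, kp_los, kd_los, kp_ct, kd_ct, u_surge, dt):
        self.kp_los, self.kd_los = kp_los, kd_los
        self.kp_ct, self.kd_ct = kp_ct, kd_ct
        self.u_surge = u_surge  # hypothetical nominal forward command in [-1, 1]
        self.dt = dt
        self.prev = None  # previous (e_psi, e_ct) for finite differences

    def step(self, pos, heading, wp_prev, wp_goal):
        e_psi, e_ct, rho = tracking_errors(pos, heading, wp_prev, wp_goal)
        if self.prev is None:
            de_psi = de_ct = 0.0
        else:
            de_psi = wrap_angle(e_psi - self.prev[0]) / self.dt
            de_ct = (e_ct - self.prev[1]) / self.dt
        self.prev = (e_psi, e_ct)
        los = self.kp_los * e_psi + self.kd_los * de_psi
        ct = -(self.kp_ct * e_ct + self.kd_ct * de_ct)  # steer back toward the path
        steer = (1.0 - rho) * los + rho * ct  # assumed blending rule
        # Differential thrust: positive steer turns the vehicle counterclockwise.
        left = max(-1.0, min(1.0, self.u_surge - steer))
        right = max(-1.0, min(1.0, self.u_surge + steer))
        return left, right
```

With this choice, the CT term dominates just after leaving a waypoint (`rho` near 1) and the LOS term takes over as the goal approaches (`rho` near 0); the description alone does not rule out the opposite weighting.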

3. Consistent Mapping

To generate a globally consistent map of the target object from a sensor data stream, the following three steps must be executed: (1) precise estimation of vehicle motion, (2) MBES point cloud generation with outlier rejection and filtering, and (3) proper projection of sonar image pixels onto the baseline for SSS map building.

3.1. Motion Estimation

In an offshore environment, the ASV is subject to large disturbances from wind, waves, and currents. This makes precise position and attitude estimation crucial for consistent mapping; otherwise, consecutive scenes captured by the mapping sensors will not overlap correctly, making it difficult to recognize the target objects in the resulting map.

For this purpose, we first define the coordinate systems used to describe the vehicle motion. We assume that the effect of the Earth’s rotation on the vehicle motion is negligible; thus, any Earth-fixed frame, such as Earth-centered Earth-fixed (ECEF) or East-North-Up (ENU), can be employed as the inertial reference frame {I}. In this study, we used ENU as the inertial reference frame. We also define the vehicle’s body-fixed frame {B} with its origin at the center of the inertial measurement unit (IMU), where the specific forces and angular velocities are measured.

Subsequently, we define the vehicle’s navigation states, comprising the position $\mathbf{p}$, linear velocity $\mathbf{v}$, and orientation $\boldsymbol{\Theta}$ (roll, pitch, and yaw) of {B} with respect to {I}.

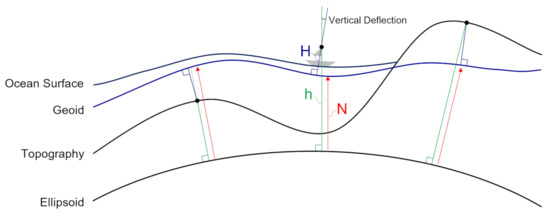

Next, we must define a vertical datum for the height of the target objects. The most commonly used vertical datum is the geoid, and the height above the geoid is the orthometric height H. The relation between H and the other height quantities is described as follows:

$$H \approx h - N$$

where N and h are the geoid height and the ellipsoidal height, respectively; both can be obtained from the NMEA 0183 sentences output by the GPS receiver. This relationship is depicted in Figure 4. Note that the vertical deflection (VD) is the angle between the true vertical (plumb line) and the ellipsoid normal. The VD over the field test area was less than 5″ (arcseconds), implying a horizontal deviation of at most 2.5 mm over a 100 m range measurement. Therefore, we can neglect the VD for the local survey and let $H = h - N$.

Figure 4.

Height definition.
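The height relation and the VD bound above can be checked numerically. This short Python sketch assumes the VD is an angle in arcseconds and models the deviation as the horizontal offset of a 100 m range measured along a direction tilted by the VD; the function names are illustrative.

```python
import math


def orthometric_height(h_ellipsoid, n_geoid):
    """Orthometric height H = h - N, with the vertical deflection neglected."""
    return h_ellipsoid - n_geoid


def vd_horizontal_deviation(vd_arcsec, range_m):
    """Horizontal offset of a range measured along a direction tilted by the VD."""
    vd_rad = math.radians(vd_arcsec / 3600.0)
    return range_m * math.sin(vd_rad)


dev = vd_horizontal_deviation(5.0, 100.0)
print(f"horizontal deviation: {dev * 1e3:.2f} mm")  # horizontal deviation: 2.42 mm
```

The result of about 2.42 mm is consistent with the bound of at most 2.5 mm given in the text.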

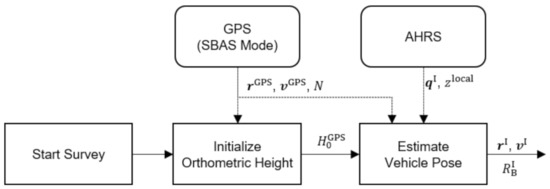

Based on these representations, the overall procedure for vehicle state estimation is shown in Figure 5. The vehicle state estimation combines measurements from the GPS and the attitude and heading reference system (AHRS). In particular, the GPS provides the position $(\varphi, \lambda, h)$, where $\varphi$, $\lambda$, and h are the geodetic latitude, longitude, and ellipsoidal height with respect to a reference ellipsoid (e.g., WGS84). Owing to the geometry of the satellite constellation, GPS usually has a higher dilution of precision (DOP) in the vertical direction than in the horizontal direction, and thus a larger uncertainty in the vertical estimate. The typical position accuracy of the GPS in satellite-based augmentation system (SBAS) mode is 0.3 m horizontally and 0.6 m vertically. Therefore, additional altitude information from a more accurate sensor is required. For this purpose, we employed a survey-grade AHRS for both heave and orientation estimation.

Figure 5.

Pose estimation flow chart.

The position expressed in geodetic coordinates is then transformed into the ENU frame. Assuming that the reference position (e.g., the starting point of the vehicle) is close to the survey area, the transformation can be approximated using a Taylor series expansion as follows:

where $R_N$ is the Earth’s prime vertical radius of curvature, $R_M$ is the Earth’s meridional radius of curvature, $a$ is the equatorial radius, and e is the first eccentricity of the ellipsoid. The converted GPS position measurements were used to update the vehicle pose estimate. The ellipsoidal height h is used only to initialize the orthometric height of the GPS antenna at the start of the survey. After initialization, the height of the vehicle’s body-fixed frame is estimated using the heave measurement from the AHRS, as follows:

Because a highly accurate AHRS was used, the vehicle orientation from the AHRS was directly employed in the final state estimate.
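The conversion and height update described above can be sketched in Python. The ENU expressions below are the standard small-offset approximation using $R_N$ and $R_M$ on the WGS84 ellipsoid; whether the reference height is included in the radius terms is an assumption here, and the body-height update (`body_height`, with an upward-positive heave relative to initialization and a known antenna lever arm) is a hypothetical illustration rather than the authors' equation.

```python
import math

# WGS84 defining constants.
WGS84_A = 6378137.0                   # equatorial radius a [m]
WGS84_F = 1.0 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared, e^2


def radii_of_curvature(lat_rad):
    """Prime vertical (R_N) and meridional (R_M) radii of curvature [m]."""
    w = math.sqrt(1.0 - WGS84_E2 * math.sin(lat_rad) ** 2)
    r_n = WGS84_A / w
    r_m = WGS84_A * (1.0 - WGS84_E2) / w ** 3
    return r_n, r_m


def geodetic_to_enu_approx(lat, lon, h, lat0, lon0, h0):
    """Small-offset geodetic-to-ENU conversion around (lat0, lon0, h0); angles in degrees."""
    r_n, r_m = radii_of_curvature(math.radians(lat0))
    east = (r_n + h0) * math.cos(math.radians(lat0)) * math.radians(lon - lon0)
    north = (r_m + h0) * math.radians(lat - lat0)
    up = h - h0
    return east, north, up


def body_height(h_antenna0, n_geoid, antenna_above_body, heave):
    """Hypothetical: orthometric height of {B} from the initial GPS fix plus AHRS heave.

    Assumes heave is positive upward and measured relative to the initialization epoch.
    """
    body_h0 = (h_antenna0 - n_geoid) - antenna_above_body
    return body_h0 + heave
```

Because the approximation is linearized around the reference point, its error grows with distance from that point, which is why the reference is placed near the survey area.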

3.2. MBES Mapping

The procedure for terrain mapping includes the raw measurement of bathymetry using sonar beams, reconstruction of 3D point clouds of underwater terrains with the vehicle’s pose information, and outlier rejection.

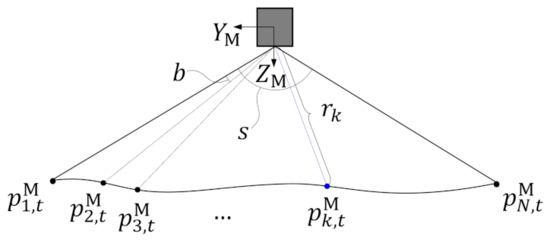

Bathymetry measurements using the MBES are shown in Figure 6. Given the sonar scan width s and beam spacing b, the sonar ranges can be transformed into sonar point clouds represented in the MBES sensor-fixed frame {M} as follows:

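where $r_{k,t}$ is the range measured by the kth sonar beam at time step t. The resulting point $\mathbf{p}^{M}_{k,t}$ can be represented in {I} as follows:

$$\mathbf{p}^{I}_{k,t} = \mathbf{p}_{t} + \mathbf{R}^{I}_{B,t}\left(\mathbf{R}^{B}_{M}\,\mathbf{p}^{M}_{k,t} + \mathbf{t}^{B}_{M}\right)$$

where $\mathbf{p}_{t}$ is the vehicle position at time step t, $\mathbf{R}^{I}_{B,t}$ is the rotation matrix describing the transformation from {B} to {I}, $\mathbf{R}^{B}_{M}$ is the rotation matrix from {M} to {B}, and $\mathbf{t}^{B}_{M}$ is the translation vector describing the sensor position relative to the origin of {B}.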

Figure 6.

Seafloor point cloud generation with an MBES.
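The two transformations can be sketched in Python as follows. The beam-angle convention, the axis directions of {M}, the handling of missing returns, and the helper names are assumptions for illustration; only the structure of the rigid-body transform follows the definitions in the text.

```python
import math


def ping_to_sensor_frame(ranges, scan_width, beam_spacing):
    """Convert one MBES ping to points in {M}.

    Assumed {M} convention: x forward, y to port, z up, beams fanned in the
    y-z plane; beam k is steered at theta_k = -scan_width / 2 + k * beam_spacing.
    Beams without a bottom return (None) are skipped.
    """
    points = []
    for k, r in enumerate(ranges):
        if r is None:
            continue
        theta = -0.5 * scan_width + k * beam_spacing
        points.append((0.0, r * math.sin(theta), -r * math.cos(theta)))
    return points


def mat_vec(R, v):
    """Multiply a 3x3 matrix (tuple of rows) by a 3-vector."""
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))


def rot_z(yaw):
    """Rotation about the z axis by `yaw` radians."""
    c, s = math.cos(yaw), math.sin(yaw)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def sensor_to_inertial(p_m, R_ib, R_bm, t_bm, p_b):
    """p^I = p_B + R_B^I (R_M^B p^M + t_M^B)."""
    in_body = tuple(a + b for a, b in zip(mat_vec(R_bm, p_m), t_bm))
    return tuple(a + b for a, b in zip(mat_vec(R_ib, in_body), p_b))
```

The initial terrain map is then the collection of these transformed points over all beams and time steps.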

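The initial terrain map comprises the reconstructed 3D terrain points as follows:

$$\mathcal{M} = \left\{ \mathbf{p}^{I}_{k,t} \;\middle|\; k = 1, \dots, N_{b},\; t = 1, \dots, T \right\}$$

where $\mathbf{p}^{I}_{k,t}$ is the point from the kth beam at time step t expressed in {I}, $N_{b}$ is the number of sonar beams in a single scan, and T is the total number of time steps of the sonar mapping.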

This map assumes ideal sonar ranging. In practice, however, sonar ranging suffers from multipath and scattering effects, which generally arise from the water surface, the vehicle itself, or a rough seafloor and cause missing data and outliers. The outliers must be removed from the initial sonar point cloud for consistent mapping.

Therefore, we generated sub-maps by aggregating consecutive sonar scans and applied the statistical outlier removal (SOR) method [21] to each sub-map. A single sonar scan contains only a stripe of seafloor information; thus, it is better to aggregate consecutive scans before applying the filter. We also modified the traditional SOR filter into a variant, v-SOR, that computes statistics on the vertical heights, because the seafloor point clouds are 2.5D. This approach has two advantages over the conventional method. First, it preserves the sparse sonar points generated during heading changes or abrupt roll motions of the vehicle. The conventional SOR filter uses the Euclidean distance to neighboring points as the criterion for identifying outliers and can therefore remove inlier sonar points that are sparse in the XY plane. Our approach instead checks height differences and preserves sonar points that lie within a statistical bound in the Z direction. Second, because the height of each point is used directly to measure discrepancies, no k-d tree needs to be built for distance computation, which improves computational efficiency. The effect of the v-SOR filter on field data is discussed in Section 4.3.
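The sub-mapping step and the two filters can be contrasted with a small Python sketch. The conventional SOR follows the usual criterion (mean distance to the k nearest neighbors compared against the sub-map mean plus a multiple of the standard deviation), implemented here with a brute-force search instead of a k-d tree. The `v_sor_filter` function is only one plausible reading of the height-based statistics described above, computed over the whole sub-map; the parameters `k`, `alpha`, and `scans_per_submap` and the synthetic points are illustrative assumptions.

```python
import math
import statistics


def submaps(scans, scans_per_submap):
    """Aggregate consecutive scans (lists of (x, y, z) points) into sub-maps."""
    for i in range(0, len(scans), scans_per_submap):
        yield [p for scan in scans[i:i + scans_per_submap] for p in scan]


def sor_filter(points, k=3, alpha=1.0):
    """Conventional SOR: drop points whose mean distance to their k nearest
    neighbours exceeds mean + alpha * std over the sub-map (brute-force search)."""
    if len(points) <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        d = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(d[:k]) / k)
    mu = statistics.mean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= mu + alpha * sigma]


def v_sor_filter(points, alpha=1.0):
    """Height-only variant (one possible reading of v-SOR): drop points whose z
    deviates from the sub-map mean height by more than alpha * std.
    No neighbour search, and hence no k-d tree, is required."""
    if len(points) < 2:
        return list(points)
    zs = [p[2] for p in points]
    mu = statistics.mean(zs)
    sigma = statistics.pstdev(zs)
    return [p for p in points if abs(p[2] - mu) <= alpha * sigma]


# Illustrative sub-map: a dense, nearly flat patch, one sparse inlier, one vertical outlier.
grid = [(float(x), float(y), -20.0 + 0.1 * ((x + y) % 2)) for x in range(5) for y in range(5)]
sparse_inlier = (30.0, 30.0, -20.0)
vertical_outlier = (2.5, 2.5, -5.0)
cloud = grid + [sparse_inlier, vertical_outlier]

kept_sor = sor_filter(cloud, k=3, alpha=1.0)
kept_vsor = v_sor_filter(cloud, alpha=1.0)
print(sparse_inlier in kept_sor, sparse_inlier in kept_vsor)  # False True
```

On this synthetic sub-map, the tight SOR threshold removes both the vertical outlier and the sparse inlier, whereas the height-based filter removes only the outlier, which qualitatively matches the behavior described for the field data. A height-only criterion over a whole sub-map assumes a roughly level seafloor within the sub-map; on steep slopes, a per-cell or detrended variant would likely be needed.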

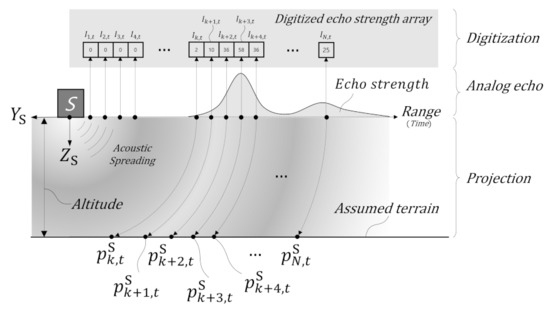

3.3. SSS Mapping

To generate a sonar image, the image surface must first be defined. Generally, an SSS image is created on a plane by linearly projecting the received array of sonar echo intensities onto that plane. However, this approach distorts pixel positions, because the actual sonar projection is not linear: each sample corresponds to a slant range rather than a horizontal distance. Therefore, we assumed a virtual terrain surface below the sonar head and radially projected the sonar echo values onto this surface to reduce the distortion of the sonar image. The virtual surface was created using the altitude of the vehicle above the underwater terrain, estimated from the measured SSS echo strength. Furthermore, the height of the virtual surface varied according to the altitude and attitude of the vehicle. The sonar images were then generated by aggregating the SSS pixels from the port and starboard sides, as shown in Figure 7.

Figure 7.

SSS image generation with 3D projection of the intensity pixels onto the assumed underwater terrain.
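For the simplest case of a flat virtual surface, radial projection reduces to the classic slant-range-to-ground-range correction. The Python sketch below assumes a flat surface at a known altitude, ignores the attitude-dependent surface height described above, and uses hypothetical names and a hypothetical port/starboard sign convention.

```python
import math


def project_sss_ping(intensities, range_resolution, altitude, side):
    """Project one SSS ping onto a flat virtual seafloor `altitude` metres below the head.

    Sample i is assumed to lie at slant range i * range_resolution. Samples whose
    slant range does not exceed the altitude (water column) are dropped. Returns
    (across_track_m, intensity) pairs; port is +y and starboard is -y (assumed).
    """
    sign = 1.0 if side == "port" else -1.0
    projected = []
    for i, value in enumerate(intensities):
        slant = i * range_resolution
        if slant <= altitude:
            continue
        ground = math.sqrt(slant ** 2 - altitude ** 2)  # radial projection onto the plane
        projected.append((sign * ground, value))
    return projected


ping = project_sss_ping([1, 2, 3, 4, 5, 6], range_resolution=1.0, altitude=3.0, side="starboard")
print(ping)
```

A linear projection would place each sample at its slant range. With a 3 m altitude, that error is about 1.35 m at a 4 m slant range but 1.0 m at 5 m: the discrepancy is largest just beyond the first bottom return and shrinks with range.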

4. Experiments

A field test was conducted to validate the proposed ASV-based mapping system. During the field test, we remotely monitored the waypoint tracking and sensor acquisition status of the vehicle and verified that the automatic logging system saved all sensor data into a series of split files. In addition, the proposed mapping algorithms were evaluated offline using the recorded data.

4.1. Experimental Setup

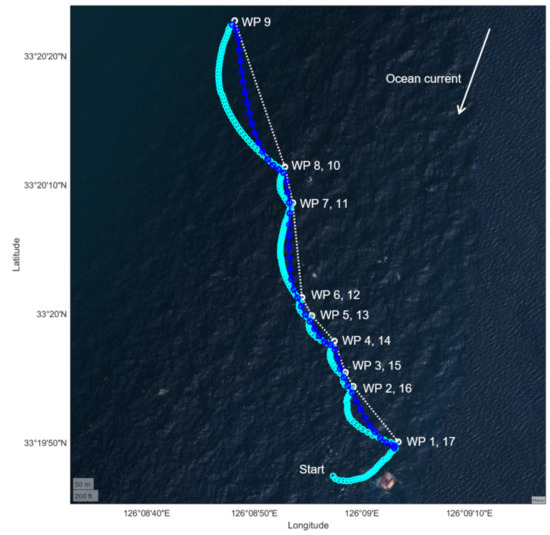

The target survey area was the wave power plant test site built by the Korea Research Institute of Ships and Ocean Engineering (KRISO), located on the south-west coast of Jeju Island. The vehicle path used to survey the cable line is illustrated in Figure 8. The waypoints were selected based on the draft route of the power cable lines provided by the relevant authorities. The line consisted of several segments, and waypoints were placed at the joints between segments as well as at the start and end points. The vehicle travelled back and forth along the same waypoints.

Figure 8.

Waypoint tracking performance under the harsh ocean environment. The desired waypoints and survey paths are shown as white circles and dotted lines, respectively. Actual vehicle trajectories are shown as solid lines with cyan and blue circles.

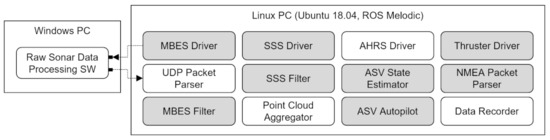

Low-level communication for thrust commands, feedback, and battery management was handled by a microcontroller. Sensor acquisition and processing were implemented on a Linux PC running the Robot Operating System (ROS) [22], except for the raw sonar data processing software, which ran on a Windows PC. The developed software components are shown in Figure 9. All sensor data were automatically saved as split files during the survey mission. The total size of the collected data was 47.5 GB for the survey path. The vehicle ran for approximately one and a half hours to complete this survey, and the total travel distance was 2.56 km.

Figure 9.

ASV software components. Manufacturer-provided and in-house software blocks are shown in white and gray boxes, respectively.

The driver and data acquisition PCs were time-synchronized via a Network Time Protocol (NTP) server before the survey began. The heading angle of the AHRS was calibrated using an additional dual-antenna GPS system connected to the AHRS. The initial extrinsic calibration parameters between the GPS antenna, AHRS, MBES, and SSS were obtained from a computer-aided design (CAD) drawing of the vehicle body frame.

4.2. Waypoint Tracking Results

The pre-planned waypoints and the actual vehicle trajectories are shown in Figure 8. The ocean current velocity during the survey was approximately 2.1 m/s, and its direction was opposite to the vehicle course when waypoint tracking started (the current forecast was obtained from buoy observations). As a result, the vehicle moved slowly until it reached the large turning point at waypoint 9 and then quickly returned to the starting point (waypoint 17). The average speed over ground (SOG) of the vehicle between waypoints 1 and 9 was 1.5 m/s, whereas the average SOG during the homing leg from waypoint 9 was 5.8 m/s. This indicates that the vehicle was strongly influenced by the current, which is also evident in Figure 8, where the density of ASV positions is much higher before the vehicle changes its heading at waypoint 9.
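The two reported SOG values are consistent with the quoted current speed. Assuming a constant speed through the water and a current aligned with the course on both legs (a simplification of the actual geometry), the current and the vehicle's speed through the water follow from the half-difference and half-sum of the two SOGs:

```python
# Average speeds over ground reported in the text [m/s].
sog_against_current = 1.5  # waypoints 1 -> 9
sog_with_current = 5.8     # homing leg from waypoint 9

# Assumed model: sog_against = v_water - c and sog_with = v_water + c.
current = (sog_with_current - sog_against_current) / 2.0
v_water = (sog_with_current + sog_against_current) / 2.0
print(f"current ~ {current:.2f} m/s, speed through water ~ {v_water:.2f} m/s")
# current ~ 2.15 m/s, speed through water ~ 3.65 m/s
```

The estimate of roughly 2.15 m/s agrees with the current of approximately 2.1 m/s reported for the survey.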

Another difficulty in waypoint tracking was reducing the CT errors. Because the current pushed the vehicle laterally from the northeast, it was difficult for the vehicle to overcome the CT errors with its limited thrust and maneuverability, especially when following north-heading paths. Moreover, the CT error was larger when the path segment was nearly perpendicular to the current direction, for example, on paths WP 1–2, WP 3–4, and WP 8–9. Consequently, the ASV could not observe part of the power lines in the WP 8–9 segment with either the MBES or the SSS.

In future studies, a controller dedicated to overcoming strong ocean currents may be designed and validated through additional field tests.

4.3. MBES Mapping Results

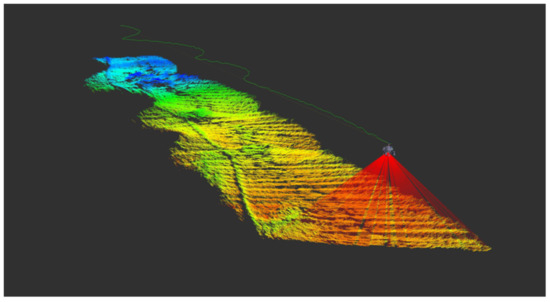

The real-time sonar point cloud generation in RViz (the ROS 3D visualization tool) is shown in Figure 10. Because the seafloor near Jeju Island is mainly composed of basalt, the sonar beams suffer from random reflections, and in some cases no return data were obtained for several sonar beams.

Figure 10.

Real-time MBES sonar point cloud generation.

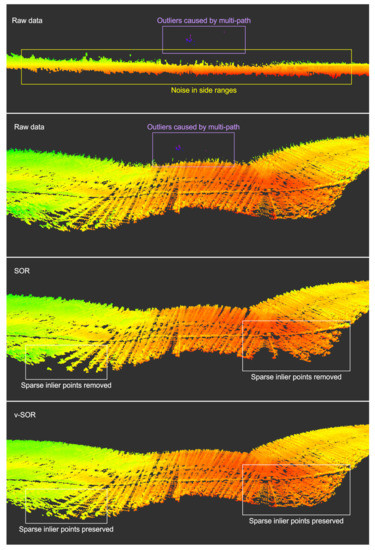

Figure 11 shows the effect of the SOR filter on the sonar point cloud. The raw sonar point cloud contains outliers caused by the multipath effect and by noise that increases toward the sides of the swath. These can be removed by applying a conventional SOR with a tight rejection threshold; however, doing so also removes sparse sonar points that should be preserved. In contrast, the proposed v-SOR filters out the vertical outliers and side noise while preserving the sparse inlier points.

Figure 11.

Comparison between the conventional SOR and the proposed v-SOR. SOR removes the sparse inlier sonar points, whereas v-SOR preserves them while removing vertical outliers and side noise.

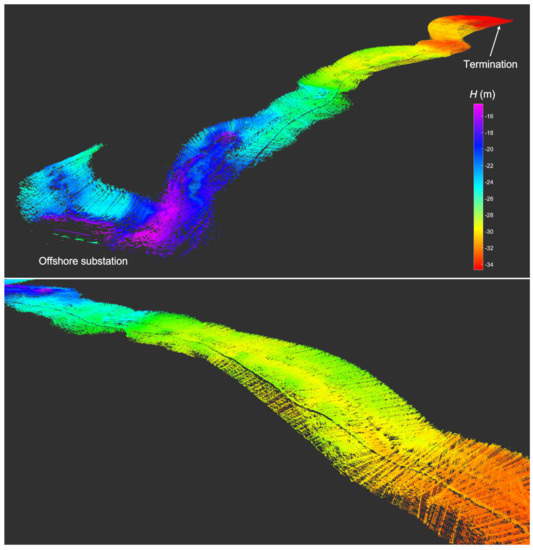

The final aggregated sonar point cloud is shown in Figure 12. The entire segment of the power cable lines can be observed, from the offshore electrical substation (areas colored in magenta) to the termination point (areas colored in red). Owing to the consistent height estimation, the shape of the cable lines in the generated point clouds remains consistent, even where survey paths overlap. The quality of the point cloud at this step also matters for post-processing: it can be used directly for mesh interpolation without additional outlier removal and yields sharper seafloor maps. Furthermore, because all point clouds were georeferenced to a vertical datum, we expect the resulting map to serve as a reference for future work such as inspection and maintenance. This also applies to the generated SSS image maps.

Figure 12.

MBES cable line mapping results.

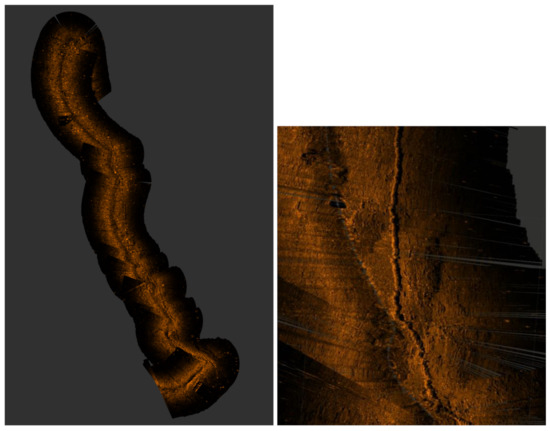

4.4. SSS Mapping Results

Figure 13 shows the sonar images generated from the SSS. The sonar image is patchy because of the large roll and pitch motions of the ASV caused by ocean waves, and because new pixels are drawn on top of old ones. Nevertheless, the offshore cable line laid on the seafloor can be clearly observed in Figure 13. In particular, the stone bags placed on top of the cables to anchor them can be identified in the SSS maps through the acoustic shadows they create, whereas they cannot be seen in the MBES-generated maps. This illustrates the advantage of using multimodal sonar sensors for offshore surveys.

Figure 13.

SSS mapping results.

Future studies may be directed toward projecting SSS image pixels onto the actual MBES 3D points rather than onto the hypothetical baseline. This requires dedicated synchronization between the MBES and SSS measurements, along with accurate estimation of the extrinsic parameters for the transformation between the MBES and SSS.

5. Conclusions

In this study, an autonomous mapping system using an ASV was proposed for inspecting offshore cable lines. The proposed multimodal sonar mapping system can inspect the seafloor, including power cables, using the MBES and SSS simultaneously. For MBES mapping, dedicated point cloud filtering methods combined with sub-mapping remove outliers in real time. For SSS mapping, sonar pixel intensities are projected onto the baseline to reduce the distortion of sonar images. Field tests conducted in a challenging ocean environment validated the performance of the developed online ASV inspection system. In future work, a heterogeneous autonomous system with surface and underwater vehicles could be used to survey larger offshore areas. To this end, more advanced technologies such as mission planning, task allocation, and robust control schemes should be developed and validated. Satellite communication is also desirable for long-range monitoring.

Author Contributions

Conceptualization, J.J., Y.L., J.P. and T.-K.Y.; methodology, J.J., J.P. and Y.L.; software, J.J., J.P. and Y.L.; validation, J.J., J.P. and Y.L.; formal analysis, J.J., J.P. and Y.L.; investigation, J.J., J.P., Y.L. and T.-K.Y.; resources, J.P., J.J. and Y.L.; data curation, Y.L.; writing—original draft preparation, J.J., Y.L. and J.P.; writing—review and editing, J.J.; visualization, J.J. and Y.L.; supervision, T.-K.Y.; project administration, T.-K.Y.; funding acquisition, T.-K.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by a grant from the Endowment Project (Development of Core Technologies for Operation of Marine Robots based on Cyber-Physical System) funded by the Korea Research Institute of Ships and Ocean Engineering under Grant PES4420, and in part by a grant from the Endowment Project (Development of Modeling/Simulation and Estimation/Inference Technology for Digital Twin Ship) funded by the Korea Research Institute of Ships and Ocean Engineering under Grant PES4430.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors thank Jong-Su Choi, Tae-Kyeong Ko, Jeong-Ki Lee, Jongboo Han and Jinwoo Choi from KRISO for their support during field tests.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Schiaretti, M.; Chen, L.; Negenborn, R.R. Survey on Autonomous Surface Vessels: Part I-A New Detailed Definition of Autonomy Levels. In Computational Logistics; Bektaş, T., Coniglio, S., Martinez-Sykora, A., Voß, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; pp. 219–233.

- Schiaretti, M.; Chen, L.; Negenborn, R.R. Survey on Autonomous Surface Vessels: Part II-Categorization of 60 Prototypes and Future Applications. In Computational Logistics; Bektaş, T., Coniglio, S., Martinez-Sykora, A., Voß, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; pp. 234–252.

- Kum, B.C.; Shin, D.H.; Lee, J.H.; Moh, T.; Jang, S.; Lee, S.Y.; Cho, J.H. Monitoring Applications for Multifunctional Unmanned Surface Vehicles in Marine Coastal Environments. J. Coast. Res. 2018, 85, 1381–1385.

- Cost Reduction in E&P, IMR, and Survey Operations Using Unmanned Surface Vehicles. In OTC Offshore Technology Conference; 2018. Available online: https://onepetro.org/OTCONF/proceedings-pdf/18OTC/4-18OTC/D041S054R004/1193771/otc-28707-ms.pdf (accessed on 16 January 2022).

- Carlson, D.F.; Fürsterling, A.; Vesterled, L.; Skovby, M.; Pedersen, S.S.; Melvad, C.; Rysgaard, S. An affordable and portable autonomous surface vehicle with obstacle avoidance for coastal ocean monitoring. HardwareX 2019, 5, e00059.

- Nicholson, D.P.; Michel, A.P.M.; Wankel, S.D.; Manganini, K.; Sugrue, R.A.; Sandwith, Z.O.; Monk, S.A. Rapid Mapping of Dissolved Methane and Carbon Dioxide in Coastal Ecosystems Using the ChemYak Autonomous Surface Vehicle. Environ. Sci. Technol. 2018, 52, 13314–13324.

- Karapetyan, N.; Moulton, J.; Rekleitis, I. Dynamic Autonomous Surface Vehicle Control and Applications in Environmental Monitoring. In Proceedings of the OCEANS 2019 MTS/IEEE SEATTLE, Seattle, WA, USA, 27–31 October 2019; pp. 1–6.

- Yan, R.; Pang, S.; Sun, H.; Pang, Y. Development and missions of unmanned surface vehicle. J. Mar. Sci. Appl. 2010, 9, 451–457.

- Han, J.; Cho, Y.; Kim, J. Coastal SLAM With Marine Radar for USV Operation in GPS-Restricted Situations. IEEE J. Ocean. Eng. 2019, 44, 300–309.

- Xiao, X.; Dufek, J.; Woodbury, T.; Murphy, R. UAV assisted USV visual navigation for marine mass casualty incident response. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 6105–6110.

- Jorge, V.A.M.; Granada, R.; Maidana, R.G.; Jurak, D.A.; Heck, G.; Negreiros, A.P.F.; dos Santos, D.H.; Gonçalves, L.M.G.; Amory, A.M. A Survey on Unmanned Surface Vehicles for Disaster Robotics: Main Challenges and Directions. Sensors 2019, 19, 702.

- Pu, H.; Liu, Y.; Luo, J.; Xie, S.; Peng, Y.; Yang, Y.; Yang, Y.; Li, X.; Su, Z.; Gao, S.; et al. Development of an Unmanned Surface Vehicle for the Emergency Response Mission of the ‘Sanchi’ Oil Tanker Collision and Explosion Accident. Appl. Sci. 2020, 10, 2704.

- Capperucci, R.; Kubicki, A.; Holler, P.; Bartholomä, A. Sidescan sonar meets airborne and satellite remote sensing: Challenges of a multi-device seafloor classification in extreme shallow water intertidal environments. Geo-Mar. Lett. 2020, 40, 117–133.

- Kampmeier, M.; van der Lee, E.M.; Wichert, U.; Greinert, J. Exploration of the munition dumpsite Kolberger Heide in Kiel Bay, Germany: Example for a standardised hydroacoustic and optic monitoring approach. Cont. Shelf Res. 2020, 198, 104108.

- Pydyn, A.; Popek, M.; Kubacka, M.; Janowski, Ł. Exploration and reconstruction of a medieval harbour using hydroacoustics, 3-D shallow seismic and underwater photogrammetry: A case study from Puck, southern Baltic Sea. Archaeol. Prospect. 2021, 28, 527–542.

- Petillot, Y.; Reed, S.; Bell, J. Real time AUV pipeline detection and tracking using side scan sonar and multi-beam echo-sounder. In Proceedings of the OCEANS ’02 MTS/IEEE, Biloxi, MS, USA, 29–31 October 2002; pp. 217–222.

- DeKeyzer, R.; Byrne, J.; Case, J.; Clifford, B.; Simmons, W. A comparison of acoustic imagery of sea floor features using a towed side scan sonar and a multibeam echo sounder. In Proceedings of the OCEANS ’02 MTS/IEEE, Biloxi, MS, USA, 29–31 October 2002; pp. 1203–1211.

- Barkby, S.; Williams, S.; Pizarro, O.; Jakuba, M. An efficient approach to bathymetric SLAM. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 219–224.

- Thompson, D.; Caress, D.; Paull, C.; Clague, D.; Thomas, H.; Conlin, D. MBARI mapping AUV operations: In the Gulf of California. In Proceedings of the 2012 Oceans, Hampton Roads, VA, USA, 14–19 October 2012; pp. 1–5.

- Fezzani, R.; Zerr, B.; Mansour, A.; Legris, M.; Vrignaud, C. Fusion of Swath Bathymetric Data: Application to AUV Rapid Environment Assessment. IEEE J. Ocean. Eng. 2019, 44, 111–120.

- Rusu, R.B.; Marton, Z.C.; Blodow, N.; Dolha, M.; Beetz, M. Towards 3D Point cloud based object maps for household environments. Robot. Auton. Syst. 2008, 56, 927–941.

- Quigley, M.; Gerkey, B.; Conley, K.; Faust, J.; Foote, T.; Leibs, J.; Berger, E.; Wheeler, R.; Ng, A. ROS: An open-source Robot Operating System. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Kobe, Japan, 12–17 May 2009.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).