Author Contributions

Conceptualization, L.L. and S.S.; methodology, L.L. and S.S.; software, L.L. and S.S.; validation, L.L., S.S. and X.F.; resources, S.S.; data curation, L.L. and S.S.; writing—original draft preparation, L.L. and S.S.; writing—review and editing, L.L., S.S. and X.F.; supervision, S.S. and X.F.; project administration, S.S.; funding acquisition, S.S. All authors have read and agreed to the published version of the manuscript.

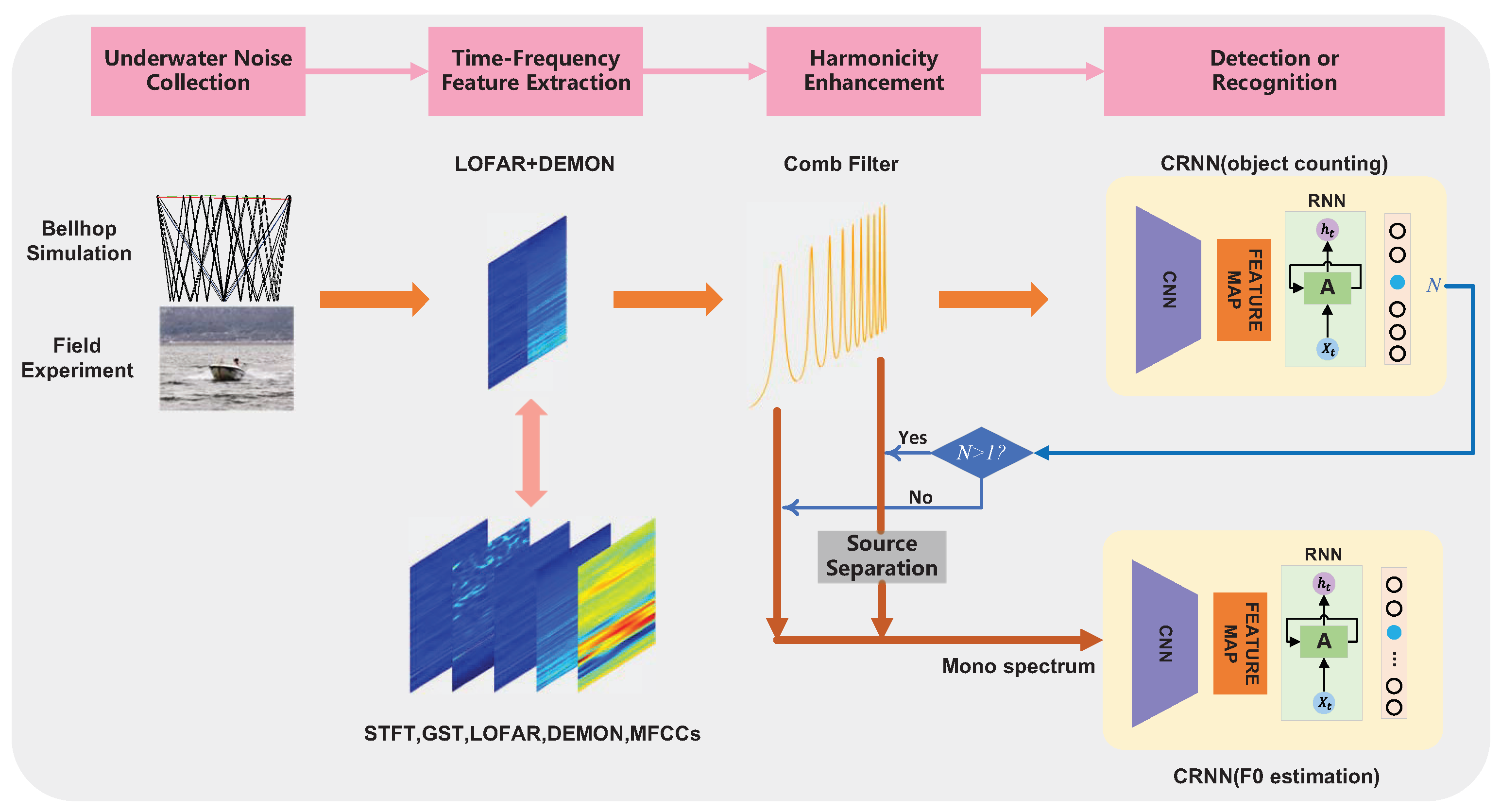

Figure 1.

The pipeline of our proposed algorithm for underwater acoustic object counting and F0 estimation. When more than one target is present, the mixed spectra must be separated into single-target spectra before being fed into the CRNN; the related work will be presented in a future report and is beyond the scope of this paper.

Figure 2.

An example of the LOFAR and DEMON spectra in low-speed rotation (“Hailangdao” ship, 600 r·min−1), with LOFAR in the upper panel and DEMON in the lower panel. Letter annotations illustrate the harmonic relationship between spectral lines. The vertical axis represents time and the horizontal axis represents frequency.

Figure 3.

An example of the LOFAR and DEMON spectra in high-speed rotation (“Hailangdao” ship, 1200 r·min−1), with LOFAR in the upper panel and DEMON in the lower panel. Letter and numeric annotations illustrate the harmonic relationship between spectral lines. The vertical axis represents time and the horizontal axis represents frequency.

Figure 4.

The enhanced version of the combined LOFAR and DEMON spectrum in Figure 3 with comb filtering, where the frequency (horizontal) axis is shown logarithmically for better visualization.

Figure 5.

Structure of our CRNN.

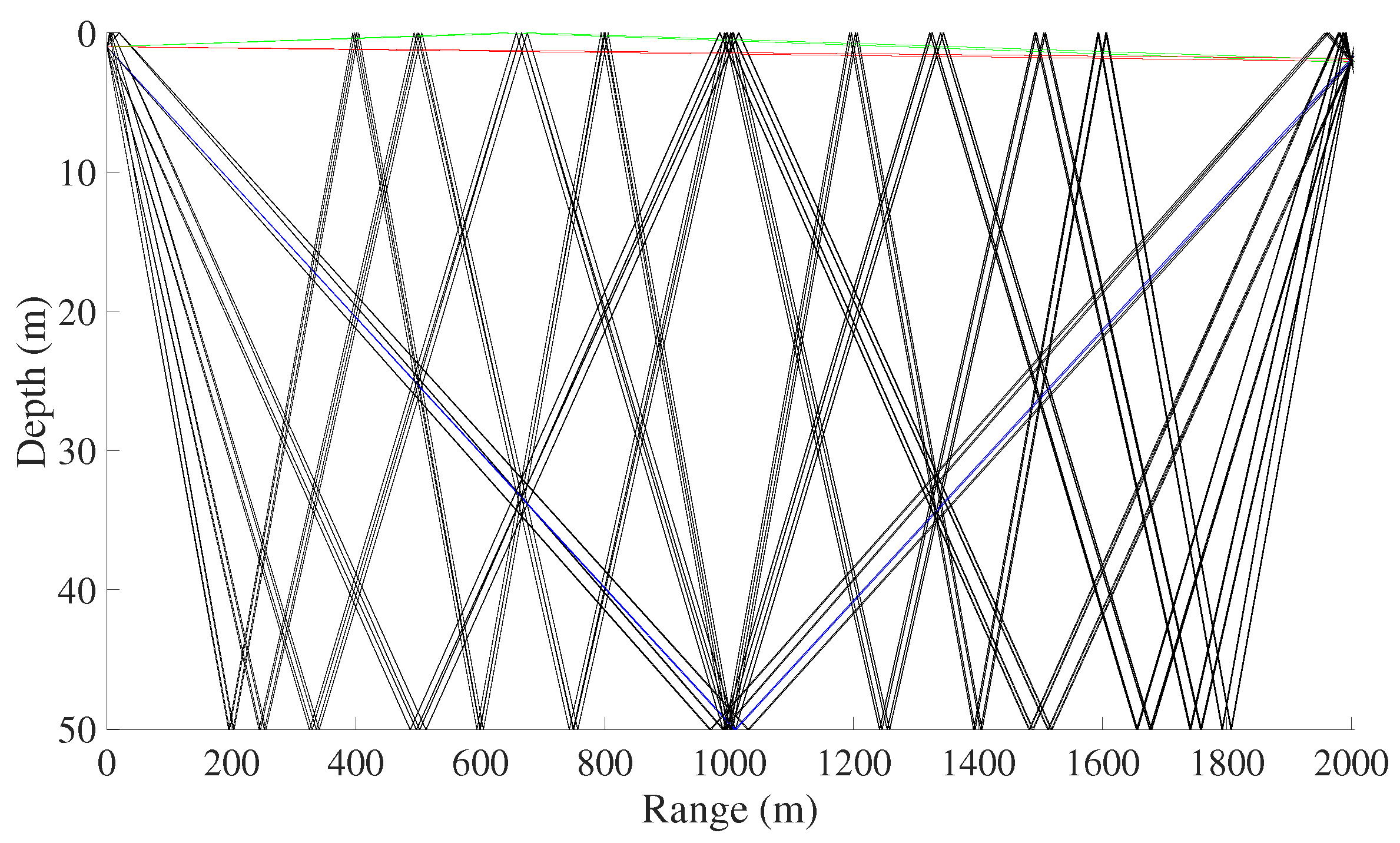

Figure 6.

Eigenray trace of sound propagation during simulation with the Bellhop toolkit. Rays are colored according to the boundaries they hit: red for direct paths, green for the surface only, blue for the bottom only, and black for both. The multipath effect is clearly visible.

Figure 7.

Spectrum of 1 s radiated noise before and after the Bellhop simulation.

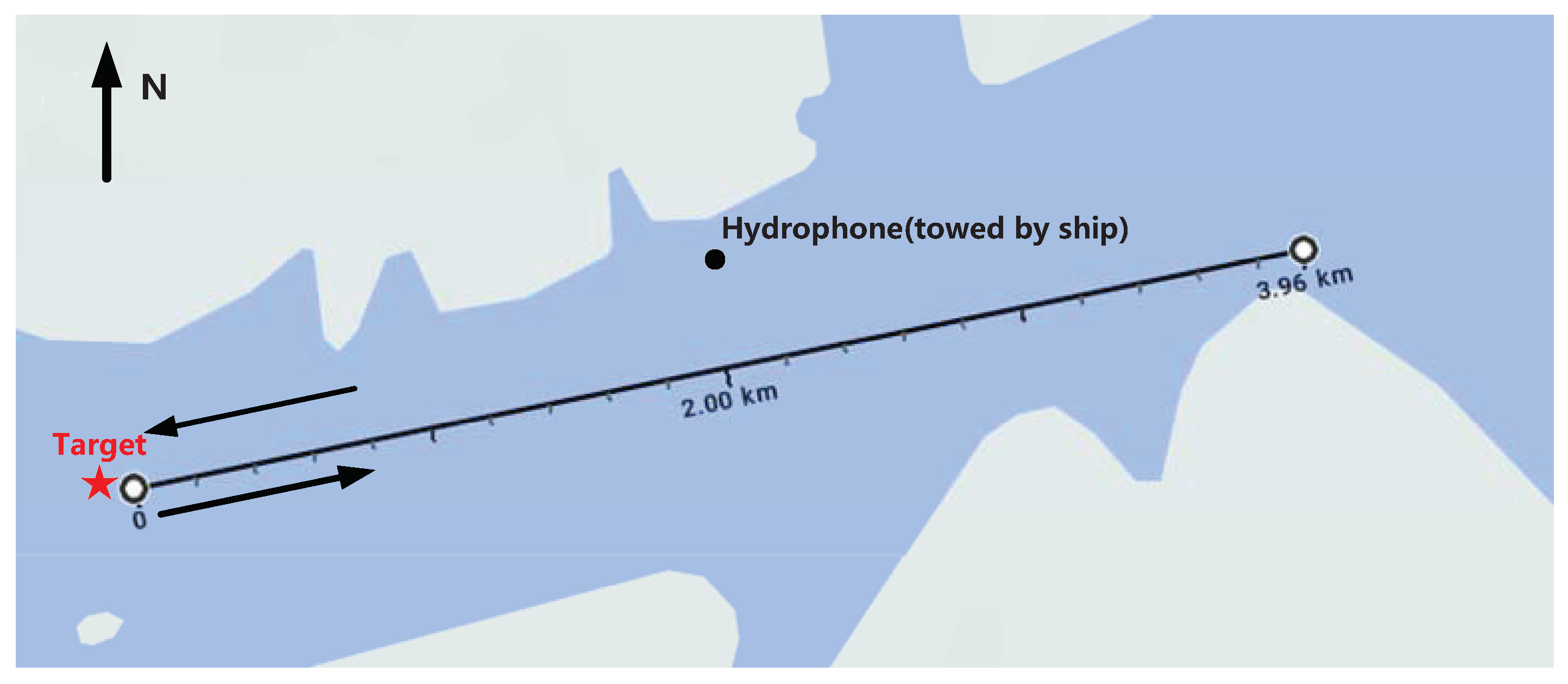

Figure 8.

Environment and settings for the lake trial; see the text for details.

Figure 9.

Training process with different features on the simulation dataset for the F0 estimation task; L + D is short for LOFAR + DEMON. The upper panel shows the loss and accuracy on the training dataset, and the lower panel shows them on the validation dataset.

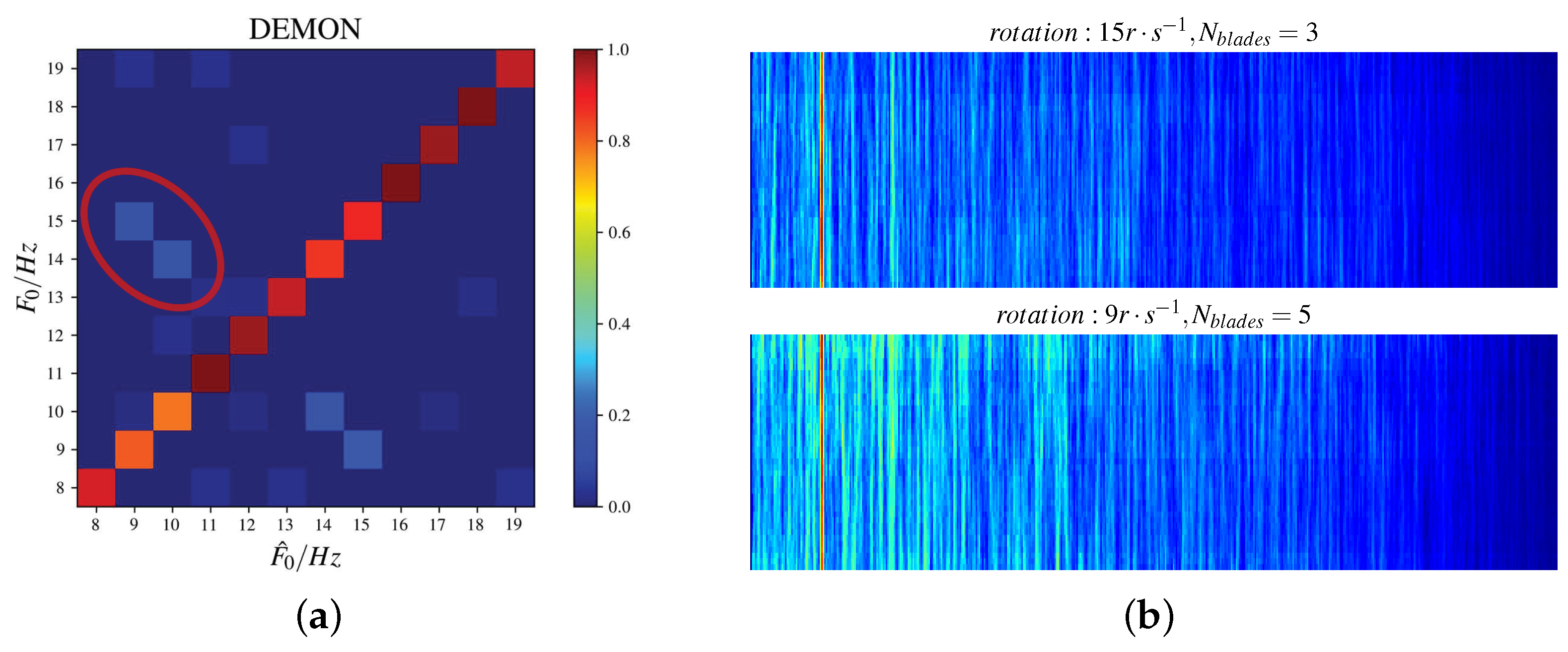

Figure 10.

Feature spectrum of noises with the same blade frequency leading to classification errors. The confusion matrix for all fundamental frequencies is presented in (a). The red circle marks the largest classification errors, and the corresponding DEMON spectrum of one category is displayed in (b).

Figure 11.

Training process with different features; L + D + COMB is an abbreviation for LOFAR + DEMON + COMB. The upper panel shows the loss and accuracy on the training dataset, and the lower panel shows them on the validation dataset.

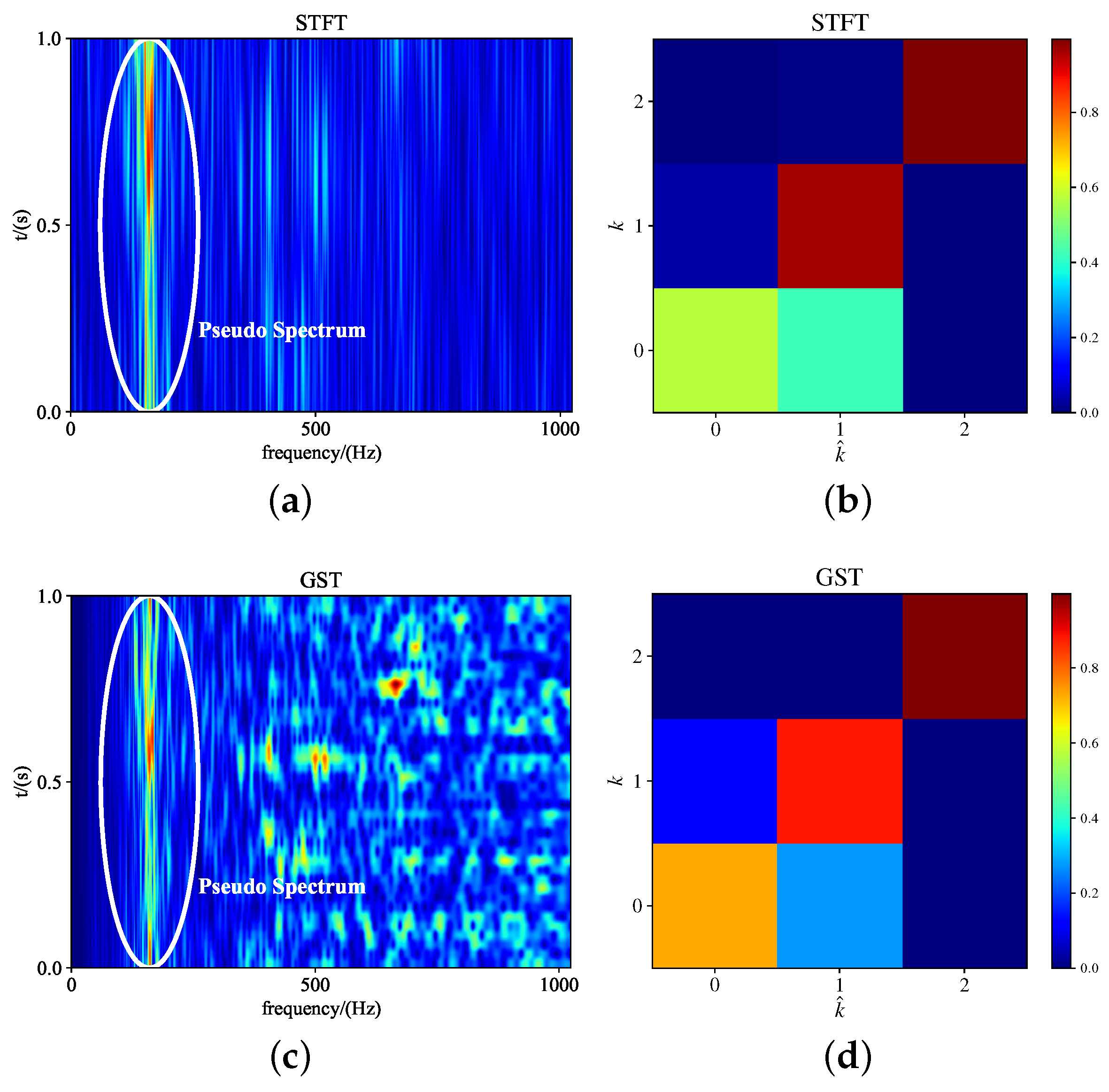

Figure 12.

Confusion between spectral lines and pseudo-lines induced by electrical noise. (a,c) correspond to the STFT and GST features of background noise (target absent); (b,d) give the confusion matrices of STFT and GST.

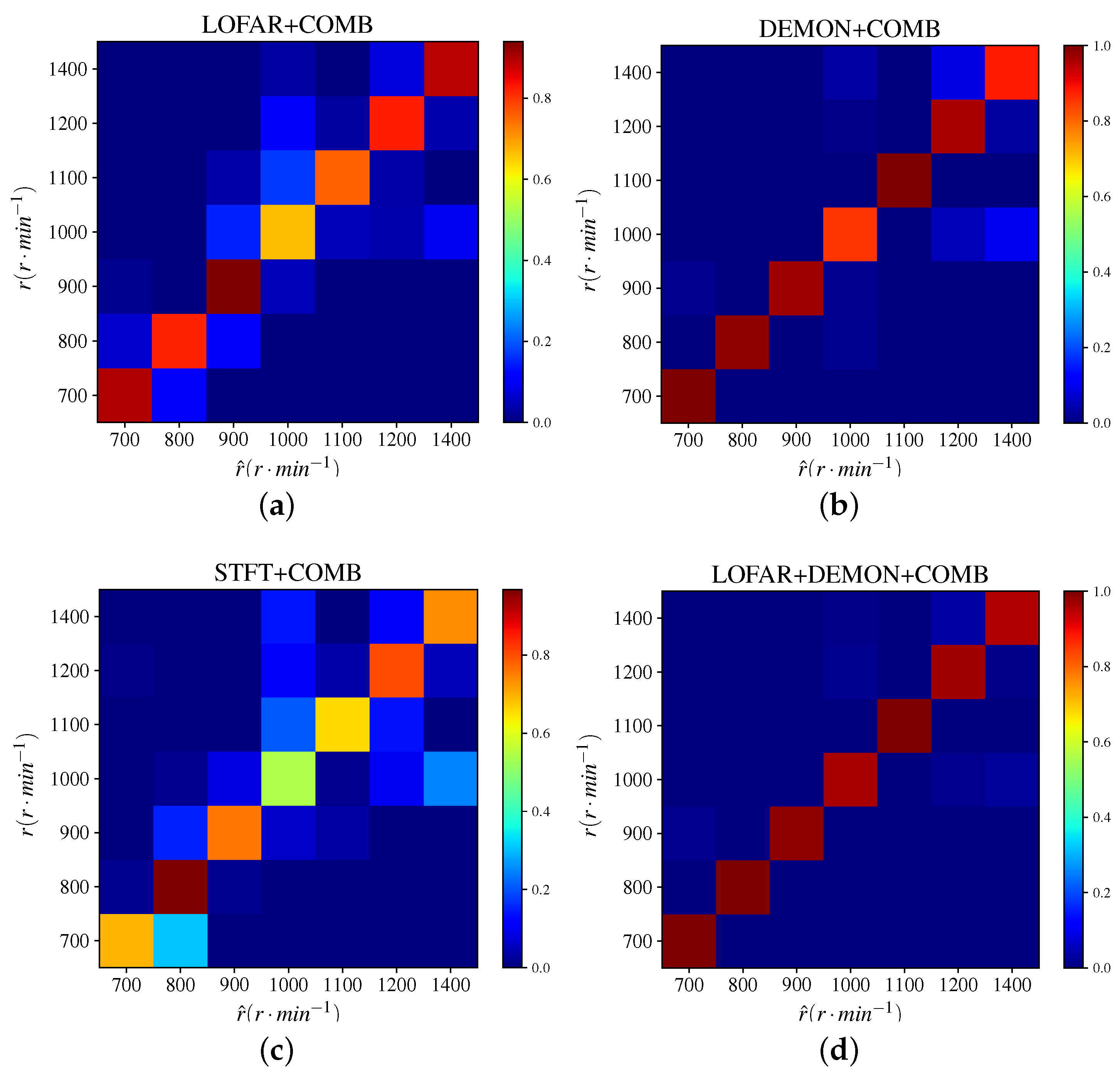

Figure 13.

The confusion matrices of different features for F0 estimation on the lake trial dataset (COMB included). GST is omitted since it has the same overall accuracy as STFT (c). (a,b,d) show the confusion matrices when LOFAR, DEMON, and LOFAR + DEMON are employed for classification, respectively.

Table 1.

CRNN layers and hyperparameters.

| Layer | Filters | Size | Input | Output |
|---|---|---|---|---|
| 0 conv | 16 | | | |
| 1 batchnorm | | | | |
| 2 maxpool | | | | |
| 3 conv | 1 | | | |
| 4 batchnorm | | | | |
| 6 fc | 1 | | 160 | c |

| Layer | Input | Hidden Size | Output |
|---|---|---|---|
| 5 lstm | | 8 | 160 |

Table 2.

Information of ships in the lake trial.

| Notation | Tonnage (t) | | Rotation (r·min−1) |
|---|---|---|---|
| Wanbang | 81 | 4 | |
| Hailangdao | 39 | 4 | |
| Tuolun | 21 | 4 | |
| Motorboat | <1 | 3 | |

Table 3.

Performance of each feature when fed into the CRNN for object counting on the simulation dataset (COMB excluded).

| Features | Accuracy |
|---|---|
| MFCCs | 37% |
| LOFAR | 79% |
| DEMON | 80% |
| GST | 84% |
| STFT | 87% |
| LOFAR + DEMON (Ours) | 87% |

Table 4.

Performance of each feature when fed into the CRNN for F0 estimation on the simulation dataset (COMB excluded).

| Features | Accuracy |
|---|---|
| MFCCs | 10% |
| DEMON | 92% |
| GST | 98% |
| STFT | 99% |
| LOFAR | 99% |
| LOFAR + DEMON (Ours) | 100% |

Table 5.

Performance of each feature when fed into the CRNN for object counting on the simulation dataset (COMB included).

| Features | Accuracy |
|---|---|
| LOFAR + COMB | 65% |
| DEMON + COMB | 68% |
| STFT + COMB | 70% |
| GST + COMB | 77% |
| LOFAR + DEMON + COMB (Ours) | 72% |

Table 6.

Prediction accuracy and convergence speed of each feature with and without comb filtering, i.e., before and after enhancement; L + D abbreviates LOFAR + DEMON. Changes in accuracy and convergence speed are highlighted in bold.

| Features | Accuracy | Epochs to Converge |
|---|---|---|
| DEMON | 92% | 80 |
| DEMON + COMB | 92% | 60 |
| GST | 98% | 140 |
| GST + COMB | 99% | 20 |
| LOFAR | 99% | 10 |
| LOFAR + COMB | 96% | 10 |
| STFT | 99% | 15 |
| STFT + COMB | 99% | 15 |
| L + D (Ours) | 100% | 40 |
| L + D + COMB (Ours) | 100% | 20 |

Table 7.

Evaluation metrics for LOFAR + COMB when fed into the CRNN for F0 estimation on the simulation dataset; two pairs of rotations related by an integer multiple are highlighted in bold.

| Rotation (r·s−1) | Precision | Recall | F1 Score |
|---|---|---|---|
| 08 | 95% | 92% | 94% |
| 09 | 92% | 89% | 91% |
| 10 | 100% | 96% | 98% |
| 11 | 100% | 100% | 99% |
| 12 | 100% | 92% | 96% |
| 13 | 100% | 100% | 100% |
| 14 | 100% | 100% | 100% |
| 15 | 94% | 100% | 97% |
| 16 | 94% | 98% | 96% |
| 17 | 100% | 97% | 99% |
| 18 | 88% | 91% | 89% |
| 19 | 95% | 100% | 97% |
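
As a reading aid for Table 7, the sketch below shows how an F1 score follows from precision and recall, assuming the F1 column uses the standard harmonic-mean definition (the table itself does not state the formula). The function name `f1_score` is hypothetical and is not taken from the authors' code. Because the tabulated precision and recall are rounded to whole percentages, recomputing F1 from them can differ from the tabulated F1 by about one point in some rows.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition, assumed here)."""
    if precision + recall == 0:
        # Convention: F1 is taken as 0 when both precision and recall are 0.
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Row for 18 r/s in Table 7: precision 88%, recall 91%.
f1_18 = f1_score(0.88, 0.91)
print(f"F1 at 18 r/s: {f1_18:.3f}")
```

For this row the recomputed value rounds to the 89% listed in the table.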

Table 8.

Performance of the algorithm based on different features on the lake trial dataset in the object counting task (COMB excluded).

| Features | Accuracy |
|---|---|
| STFT | 87% |
| GST | 89% |
| LOFAR | 96% |
| DEMON | 97% |
| LOFAR + DEMON (Ours) | 100% |

Table 9.

Performance of the algorithm based on different features on the lake trial dataset in the F0 estimation task (COMB included).

| Features | Accuracy |
|---|---|
| GST + COMB | 74% |
| STFT + COMB | 74% |
| LOFAR + COMB | 83% |
| DEMON + COMB | 94% |
| LOFAR + DEMON + COMB (Ours) | 98% |