An Efficient Method for Counting Large-Scale Plantings of Transplanted Crops in UAV Remote Sensing Images

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

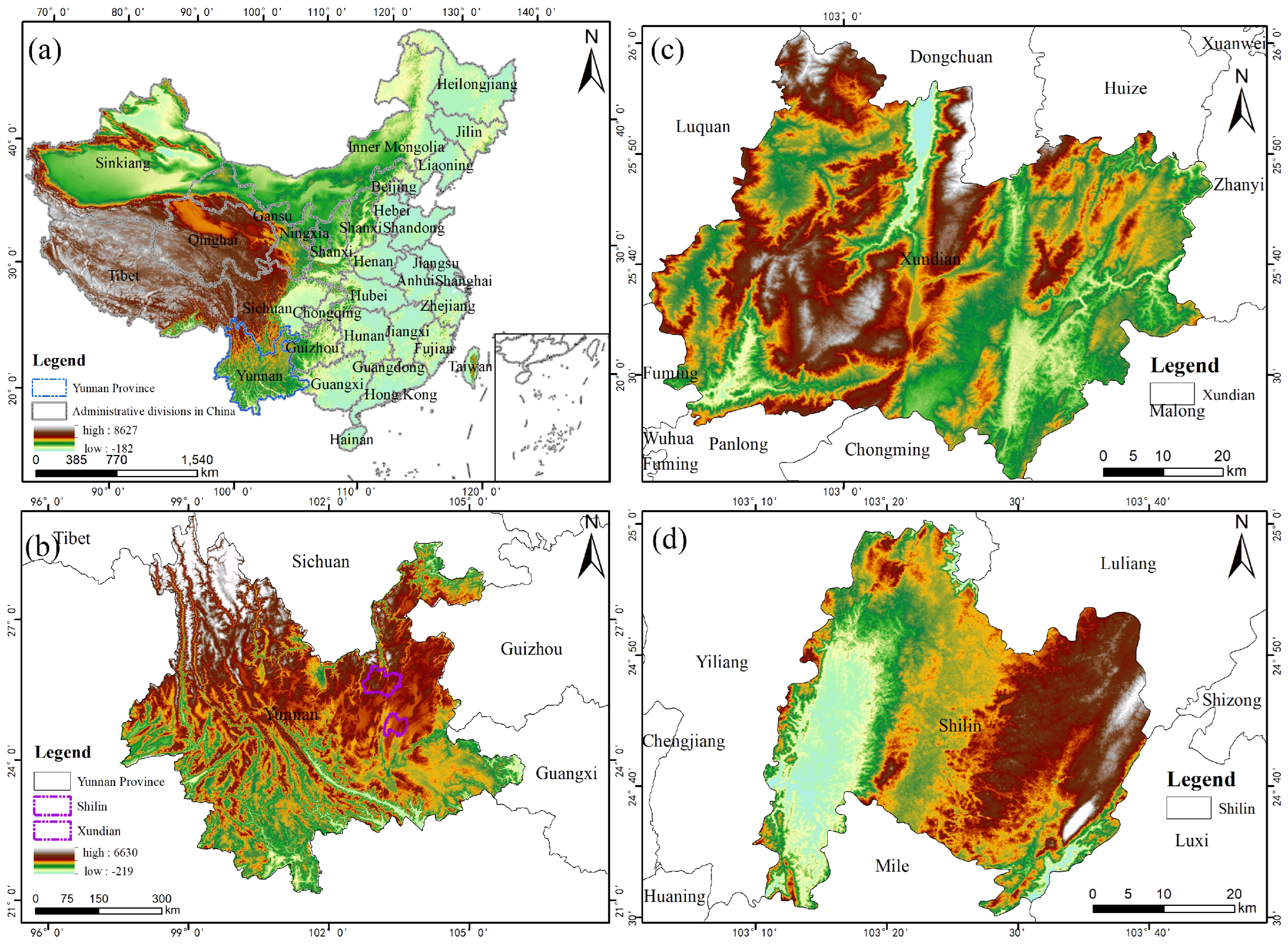

3.1. Study Area and Data

3.1.1. Study Area Introduction

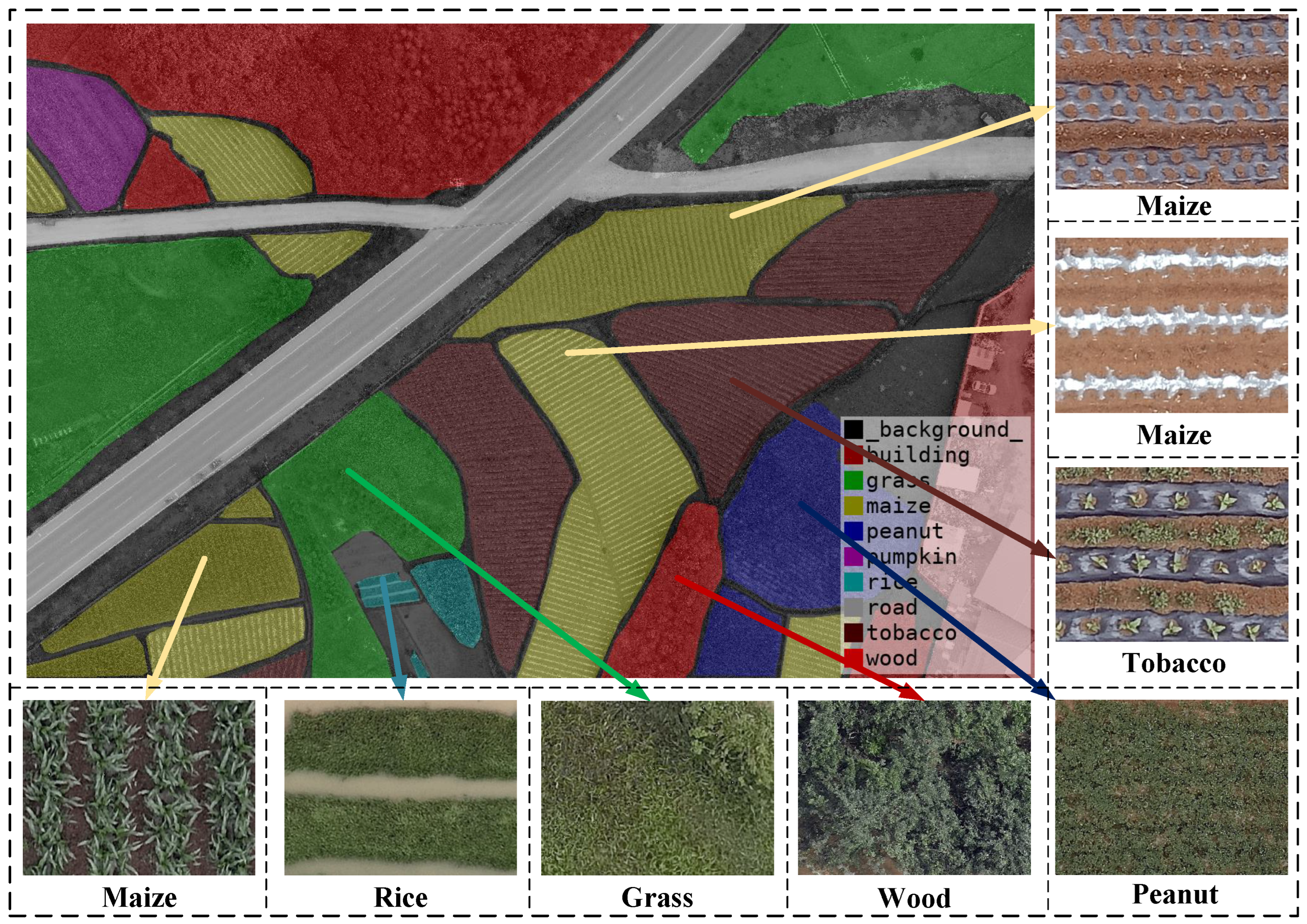

3.1.2. Dataset Construction

3.2. Farmland Segmentation Model

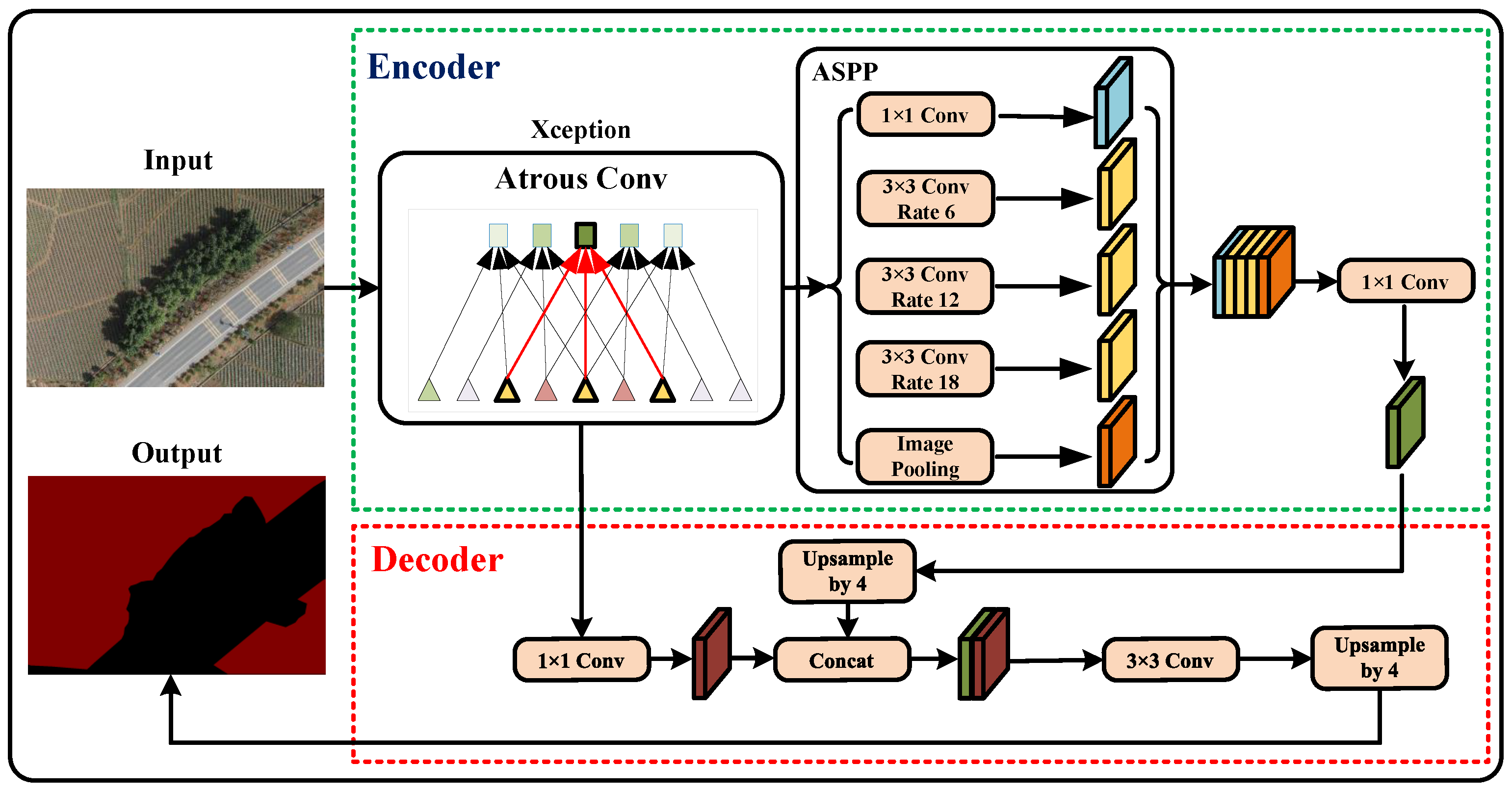

3.2.1. DeepLabV3+

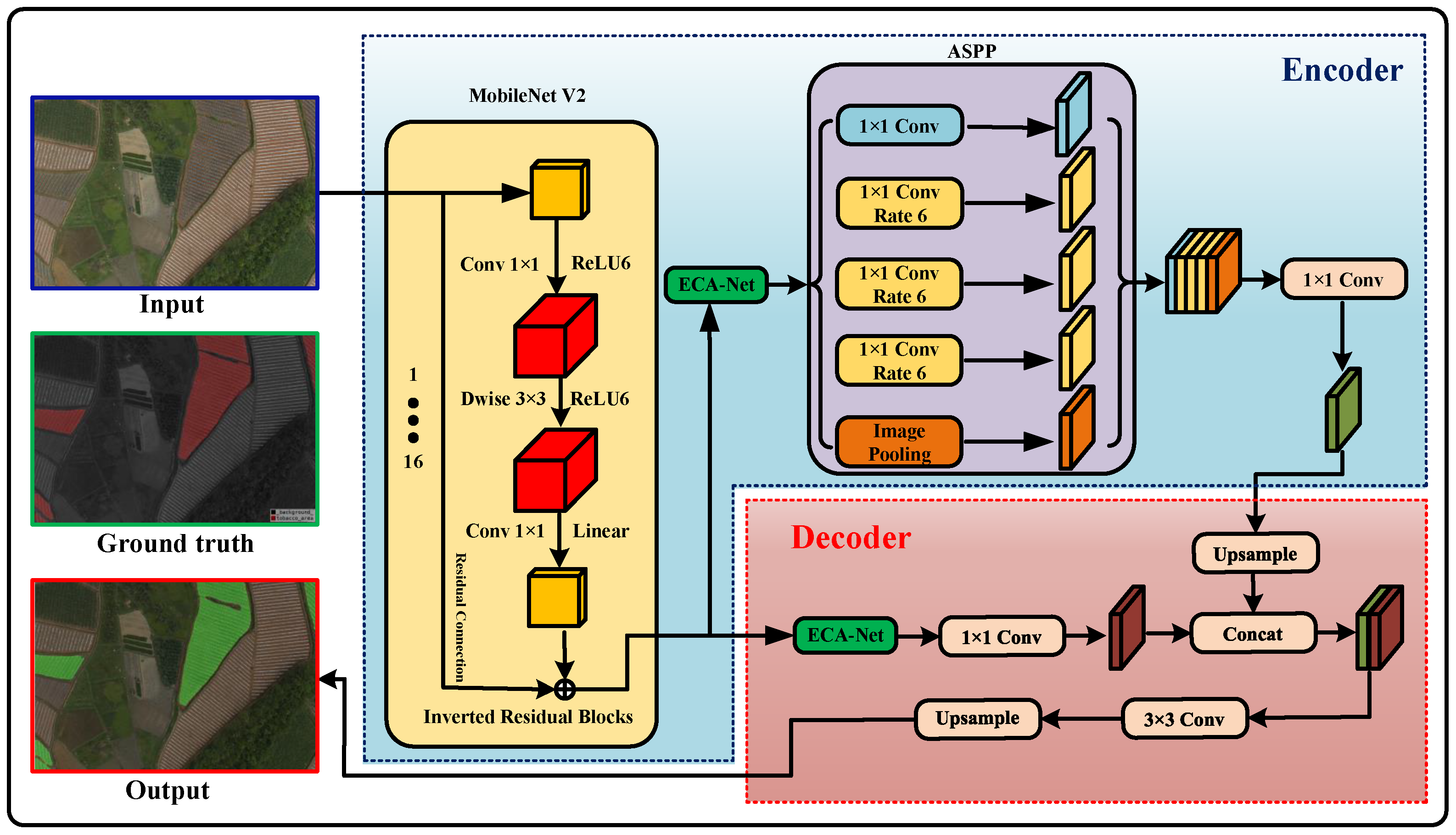

3.2.2. MED-Net Construction

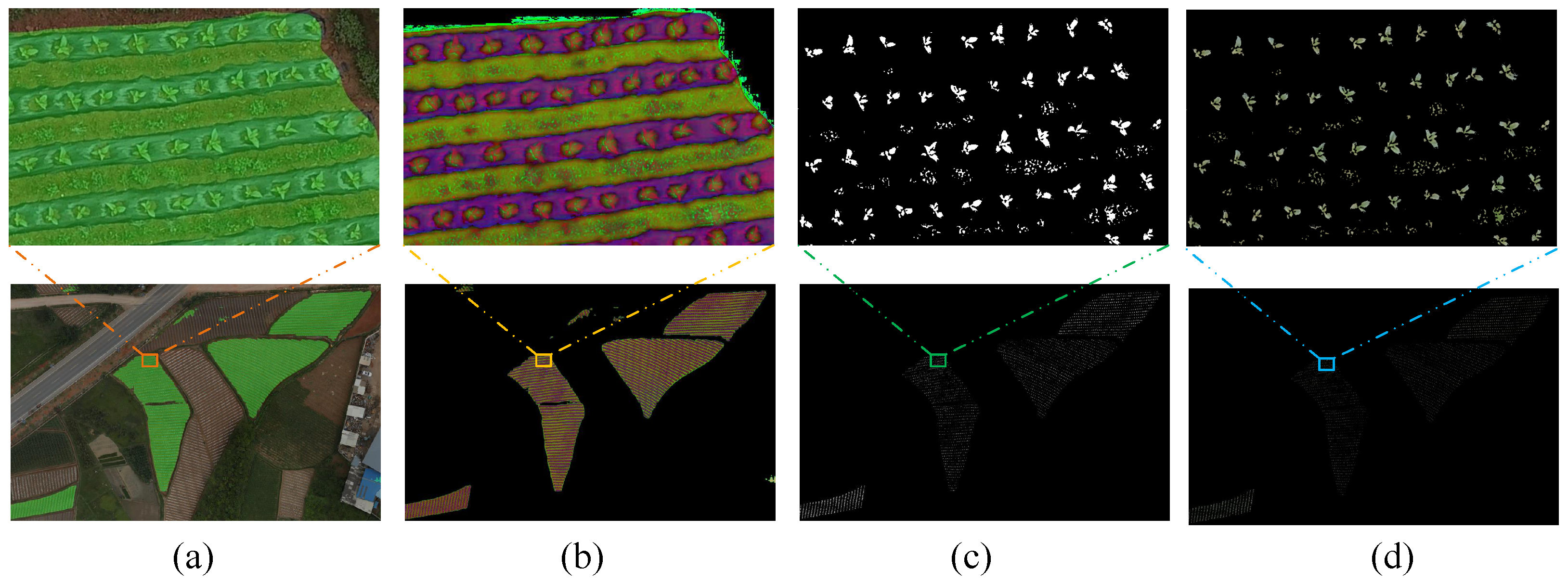

3.2.3. Establishing Background Color Masking Image for Transplanted Crops

3.3. Methods for Transplanted Crops Counting

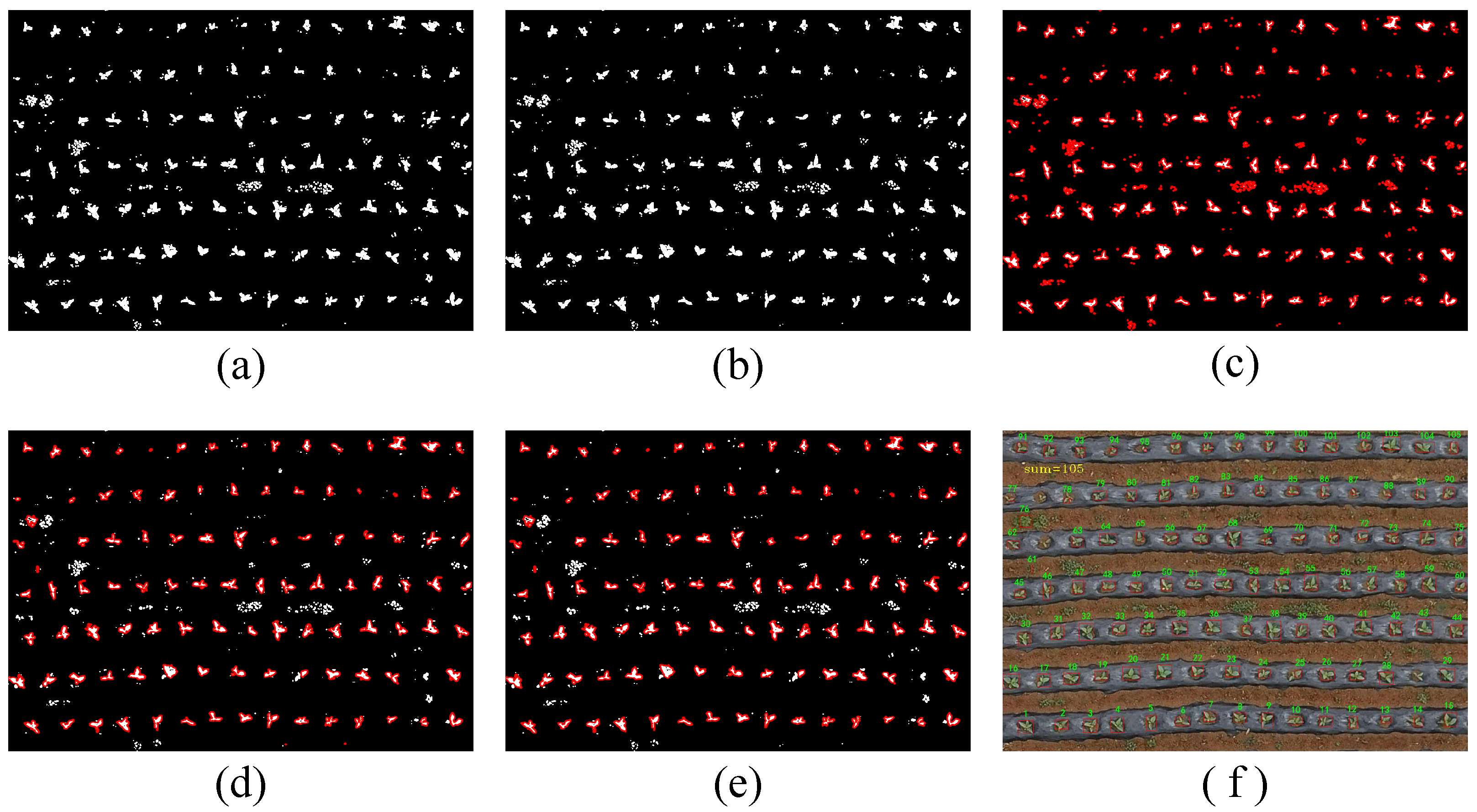

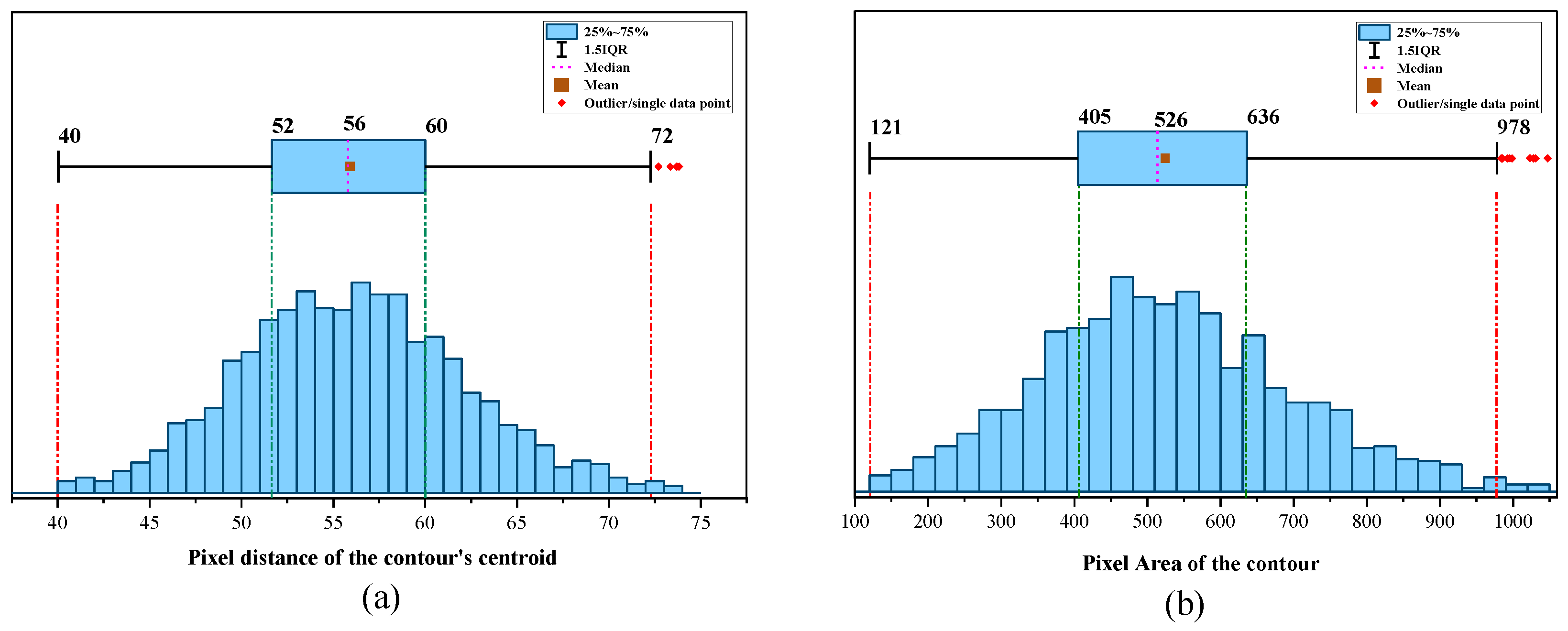

Algorithm 1: Transplanted Crop Contour Filtering Algorithm

4. Results and Discussion

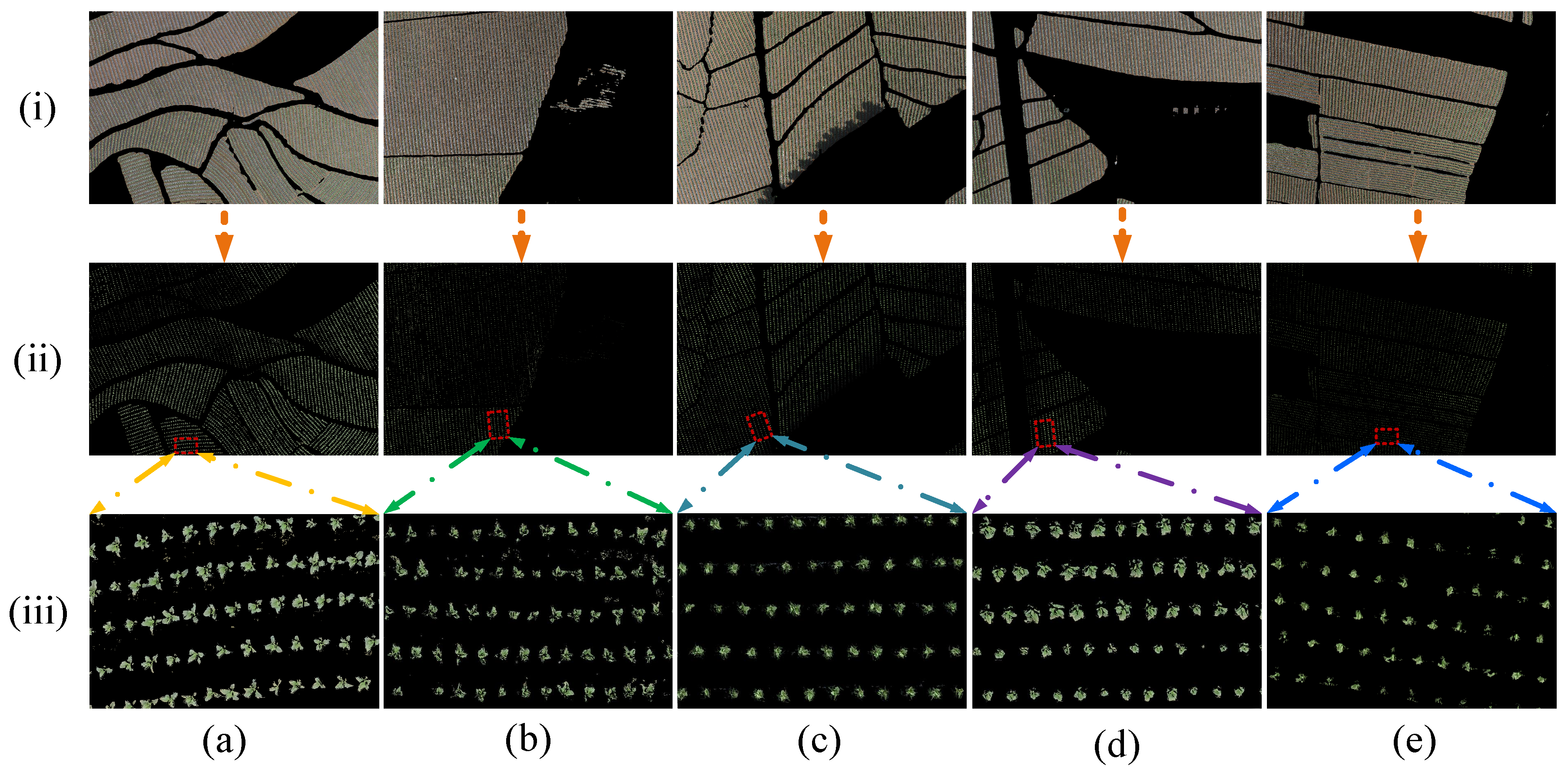

4.1. Background Removal of Tobacco Plants

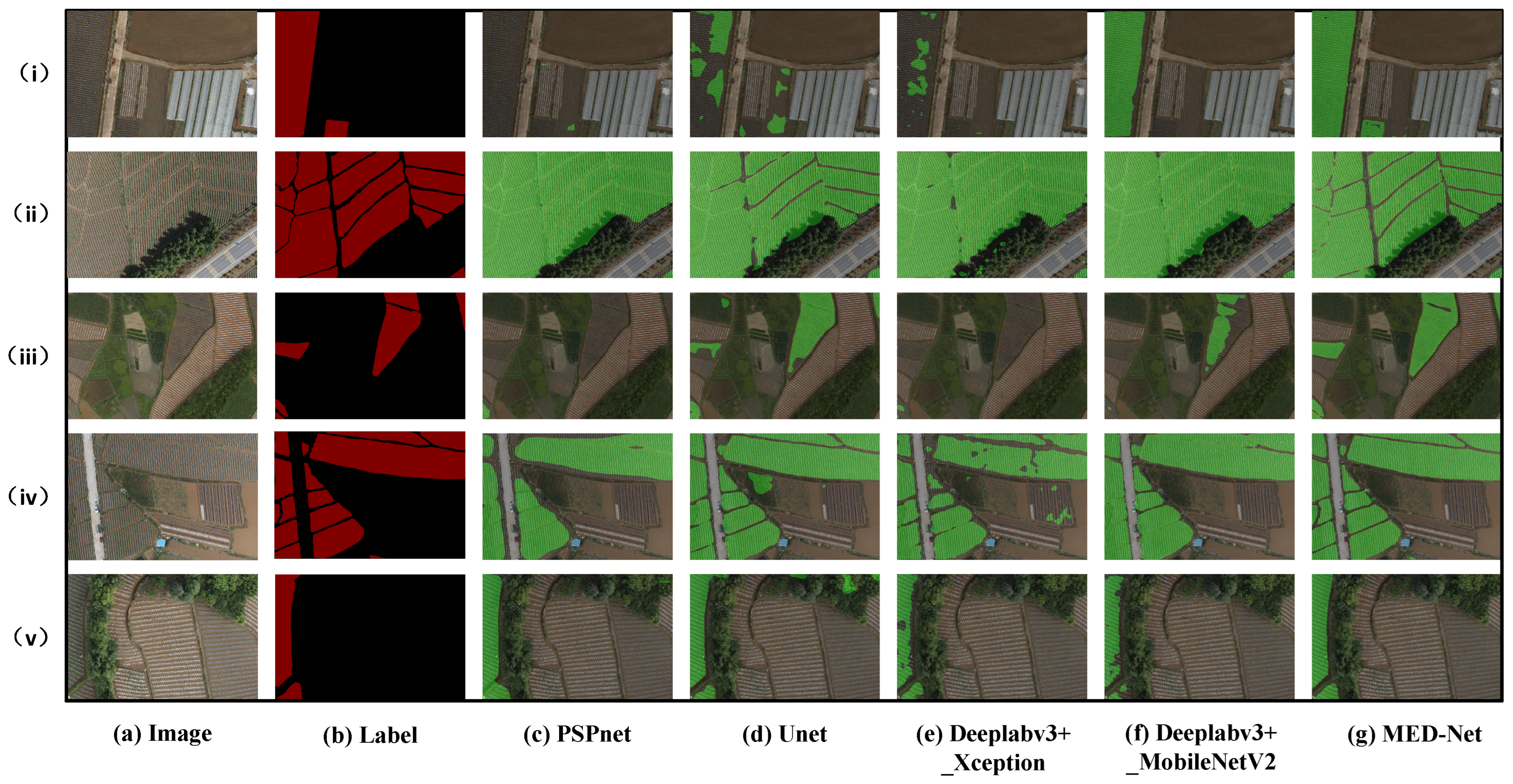

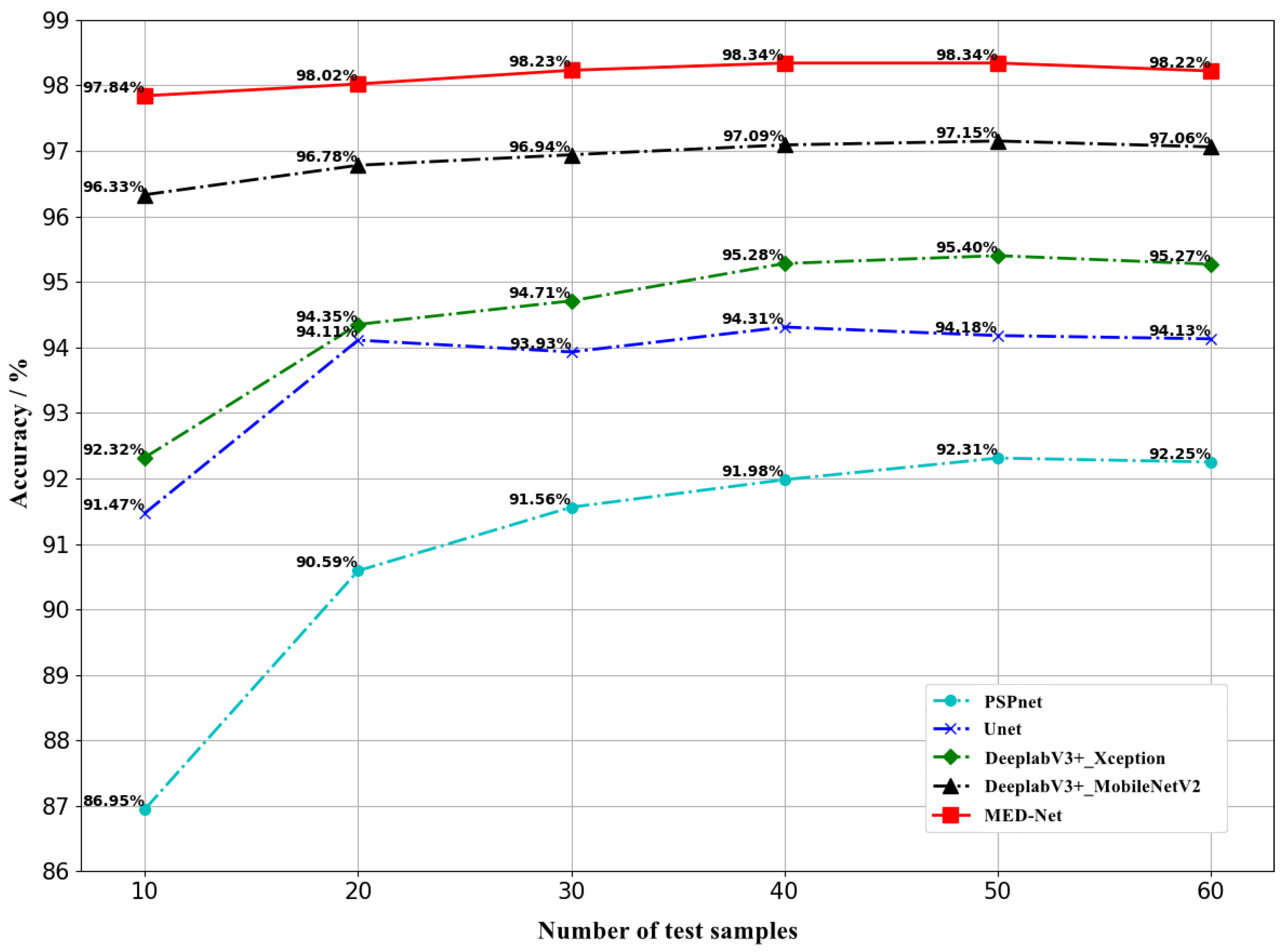

4.1.1. MED-Net Based Tobacco Field Segmentation Experiments

4.1.2. HSV-Based Background Removal Experiments

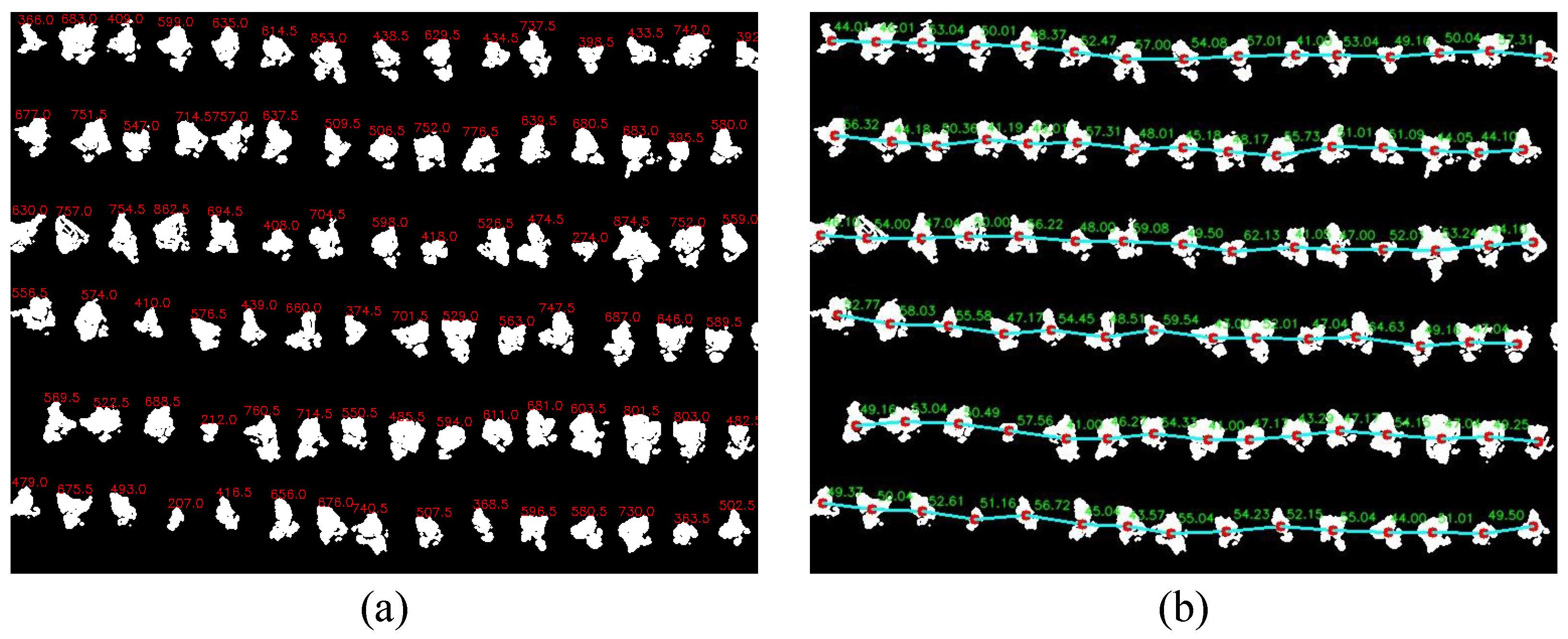

4.2. Tobacco Plant Counting

5. Conclusions

6. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameters | Options | Values |
|---|---|---|
| Acquisition | Flight altitude | 40 m, 50 m, 80 m, 100 m |
| | Camera lens | ZENMUSE P1, 3:2 (8192 × 5460) |
| | Ground sampling distance (GSD) | 0.5 cm/pixel, 0.625 cm/pixel, 1 cm/pixel, 1.25 cm/pixel |
| | Flight parameters | Flight speed: 15 m/s; overlap: 70% (along-track), 60% (across-track) |
| Stitching | Software | DJI Terra |
| | Central meridian longitude (CML) | Kunming (102°42′ E, 25°03′ N) |
| | Geodetic coordinate system | CGCS2000 |
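The GSD values above follow the standard nadir-imaging relation GSD = flight altitude × sensor pixel pitch / focal length, which is why they scale linearly with altitude (0.0125 cm/pixel per metre). The sketch below reproduces them under assumptions the table does not state: a 35.9 mm-wide full-frame sensor and a 35 mm lens on the ZENMUSE P1. The constant and function names are illustrative.

```python
# Sketch: ground sampling distance (GSD) for a nadir-looking frame camera.
# ASSUMPTIONS (not given in the table): 35.9 mm sensor width, 35 mm lens.
SENSOR_WIDTH_MM = 35.9   # assumed full-frame sensor width
IMAGE_WIDTH_PX = 8192    # image width from the table (3:2, 8192 x 5460)
FOCAL_LENGTH_MM = 35.0   # assumed focal length


def gsd_cm_per_px(altitude_m: float) -> float:
    """Return GSD in cm/pixel: altitude * pixel pitch / focal length."""
    pixel_pitch_mm = SENSOR_WIDTH_MM / IMAGE_WIDTH_PX
    gsd_m = altitude_m * pixel_pitch_mm / FOCAL_LENGTH_MM  # mm/mm cancels, leaving metres
    return gsd_m * 100.0


for altitude in (40, 50, 80, 100):
    print(f"{altitude:>3} m -> {gsd_cm_per_px(altitude):.3f} cm/pixel")
```

Under these assumptions the computed values (about 0.501, 0.626, 1.002 and 1.252 cm/pixel) agree with the tabulated ones to within rounding.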

| Stage | Flight altitude / Method | Region / Operation | Number of images |
|---|---|---|---|
| Original data | 40 m | Shilin, Xundian | 194 |
| | 50 m | Shilin, Xundian | 303 |
| | 80 m | Shilin, Xundian | 180 |
| | 100 m | Xundian | 87 |
| Filtered data | | | 208 |
| Augmented data | Augmentor | rotate | 20 |
| | | flip | 40 |
| | | skew | 40 |
| | | scale | 40 |
| | | random distortion | 20 |
| | | shear | 20 |
| | | crop | 40 |
| | Mosaic | | 172 |
| Dataset | Training set | | 540 |
| | Test set | | 60 |
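The counts in the dataset table are mutually consistent if the final dataset consists of the 208 retained images plus the Augmentor and Mosaic outputs, split 90/10 into training and test sets. This reading is inferred from the numbers rather than stated in the table; a quick tally:

```python
# Tally of the image counts in the dataset-construction table.
# ASSUMPTION: final dataset = filtered images + Augmentor outputs + Mosaic outputs.
original = {"40 m": 194, "50 m": 303, "80 m": 180, "100 m": 87}
filtered = 208
augmentor = {"rotate": 20, "flip": 40, "skew": 40, "scale": 40,
             "random distortion": 20, "shear": 20, "crop": 40}
mosaic = 172

total = filtered + sum(augmentor.values()) + mosaic  # 208 + 220 + 172
train = round(total * 0.9)                           # 90% training split
test = total - train                                 # remaining 10% for testing
print(sum(original.values()), total, train, test)
```

The tally gives 764 original images, 600 images in total, and a 540/60 split, matching the training-configuration table.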

| Parameters | Values |
|---|---|
| Total data | 600 |
| Training set (90%) | 540 |
| Testing set (10%) | 60 |
| Batch size | 4 |
| Learning rate | |
| Epochs | 112 |
| Momentum | 0.9 |
| Weight decay | |
| Optimizer | SGD |
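The configuration specifies SGD with momentum 0.9, while the learning-rate and weight-decay values are blank. A minimal sketch of one momentum-SGD update, written in the same form as PyTorch's `torch.optim.SGD` with zero dampening and no Nesterov term (the training framework is not stated, so this is an assumption), shows how the three hyperparameters interact. The function name is hypothetical, and learning rate and weight decay are required arguments because their values are not given.

```python
def sgd_momentum_step(param: float, grad: float, velocity: float, *,
                      lr: float, weight_decay: float, momentum: float = 0.9):
    """One SGD update with heavy-ball momentum and L2 weight decay (hypothetical helper)."""
    grad = grad + weight_decay * param     # fold the L2 penalty into the gradient
    velocity = momentum * velocity + grad  # exponentially accumulated update direction
    param = param - lr * velocity          # step against the accumulated direction
    return param, velocity


# Illustration only: lr and weight_decay here are placeholders, not the paper's values.
w, v = 1.0, 0.0
for _ in range(2):
    w, v = sgd_momentum_step(w, 0.5, v, lr=0.1, weight_decay=0.0)
    print(f"param={w:.4f} velocity={v:.4f}")
```

With a constant gradient the velocity grows toward grad / (1 − momentum), so momentum 0.9 amplifies the effective step size up to tenfold.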

| Model | DeepLabV3+_Xception | DeepLabV3+_MobileNetV2 | MED-Net |
|---|---|---|---|
| Model size (MB) | 209 | 22.4 | 22.3 |
| Number of test images | 60 | 60 | 60 |
| Test execution time (s) | 10.910 | 9.186 | 9.849 |
| Inference speed (img/s) | 5.50 | 6.53 | 6.09 |
| mIoU (%) | 91.45 | 94.79 | 96.49 |
| mPA (%) | 95.52 | 97.32 | 98.2 |
| Accuracy (%) | 95.56 | 97.34 | 98.36 |
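Inference speed in the comparison above is the number of test images divided by the total test execution time. The following check reproduces the reported values for all three models:

```python
NUM_TEST_IMAGES = 60
test_time_s = {
    "DeepLabV3+_Xception": 10.910,
    "DeepLabV3+_MobileNetV2": 9.186,
    "MED-Net": 9.849,
}

# Throughput in images per second = images processed / wall-clock seconds.
speeds = {model: NUM_TEST_IMAGES / t for model, t in test_time_s.items()}
for model, speed in speeds.items():
    print(f"{model}: {speed:.2f} img/s")
```

This yields 5.50, 6.53 and 6.09 img/s, the tabulated values.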

| Model | mIoU/% (class 1) | mIoU/% (class 2) | mIoU/% (mean) | mAP/% (class 1) | mAP/% (class 2) | mAP/% (mean) | mPrecision/% (class 1) | mPrecision/% (class 2) | mPrecision/% (mean) |
|---|---|---|---|---|---|---|---|---|---|
| PSPNet | 86.46 | 84.64 | 85.55 | 91.51 | 93.05 | 92.28 | 93.31 | 91.01 | 92.16 |
| U-Net | 89.49 | 88.27 | 88.88 | 92.5 | 96.0 | 94.25 | 96.06 | 92.04 | 94.05 |
| DeepLabV3+_Xception | 91.62 | 90.21 | 90.91 | 96.0 | 94.01 | 95.21 | 95.19 | 95.35 | 95.27 |
| DeepLabV3+_MobileNetV2 | 94.71 | 93.79 | 94.25 | 96.0 | 98.02 | 97.0 | 97.09 | 97.07 | 97.08 |
| MED-Net | 96.76 | 96.21 | 96.46 | 98.4 | 98.0 | 98.2 | 98.14 | 98.32 | 98.23 |
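In most rows of the per-class comparison, the third value of each metric group is the arithmetic mean of the first two (for example, PSPNet: (86.46 + 84.64) / 2 = 85.55). The metric definitions are not spelled out here; the sketch below assumes the usual confusion-matrix definitions of IoU, per-class pixel accuracy and precision, and treats the table's mAP as a mean of per-class pixel accuracies, which is an assumption. The function name is illustrative.

```python
from typing import Dict, List


def segmentation_metrics(cm: List[List[int]]) -> Dict[str, object]:
    """Per-class and mean metrics; cm[i][j] = pixels of true class i predicted as class j."""
    k = len(cm)
    iou, pa, precision = [], [], []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # true class c, predicted as another class
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted c, truly another class
        iou.append(tp / (tp + fp + fn))
        pa.append(tp / (tp + fn))                  # per-class pixel accuracy (recall)
        precision.append(tp / (tp + fp))

    def mean(xs: List[float]) -> float:
        return sum(xs) / len(xs)

    return {"IoU": iou, "mIoU": mean(iou), "PA": pa, "mPA": mean(pa),
            "Precision": precision, "mPrecision": mean(precision)}


# Toy two-class confusion matrix (made-up counts, for illustration only).
metrics = segmentation_metrics([[90, 10], [5, 95]])
print(f"mIoU={metrics['mIoU']:.4f} mPA={metrics['mPA']:.4f} mPrecision={metrics['mPrecision']:.4f}")

# The table's mean columns: e.g. PSPNet IoU values for the two classes.
pspnet_iou = (86.46, 84.64)
print(round(sum(pspnet_iou) / 2, 2))
```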

| Field | Contours | | | Predicted Value | False Detection | Predicted True Value | Missed Detection | Actual Value | Missed Detection Rate | Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|
| a | 10,691 | 4420 | 119 | 6152 | 18 | 6134 | 189 | 6323 | 2.99% | 97.01% |
| b | 9893 | 5823 | 337 | 3733 | 86 | 3647 | 168 | 3815 | 4.40% | 95.60% |
| c | 19,564 | 14,691 | 68 | 4805 | 19 | 4786 | 202 | 4988 | 4.05% | 95.95% |
| d | 7258 | 3774 | 44 | 3440 | 0 | 3440 | 57 | 3497 | 1.63% | 98.37% |
| e | 11,360 | 5384 | 78 | 5898 | 26 | 5872 | 114 | 5986 | 1.90% | 98.10% |
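The counting columns are linked by identities that hold for all five fields: predicted true value = predicted value − false detections; actual value = predicted true value + missed detections; missed detection rate = missed detections / actual value; and accuracy = 1 − missed detection rate. Accuracy in this table therefore reflects only missed plants, not false detections. The sketch below rebuilds the derived columns from the predicted, false and missed counts; the class name is illustrative.

```python
from dataclasses import dataclass


@dataclass
class FieldCount:
    predicted: int          # plants reported by the counting pipeline
    false_detections: int   # reported objects that are not plants
    missed_detections: int  # plants the pipeline failed to report

    @property
    def predicted_true(self) -> int:
        return self.predicted - self.false_detections

    @property
    def actual(self) -> int:
        return self.predicted_true + self.missed_detections

    @property
    def missed_rate(self) -> float:
        return self.missed_detections / self.actual

    @property
    def accuracy(self) -> float:
        return 1.0 - self.missed_rate


fields = {
    "a": FieldCount(6152, 18, 189),
    "b": FieldCount(3733, 86, 168),
    "c": FieldCount(4805, 19, 202),
    "d": FieldCount(3440, 0, 57),
    "e": FieldCount(5898, 26, 114),
}
for name, f in fields.items():
    print(f"{name}: actual={f.actual}, missed rate={f.missed_rate:.2%}, accuracy={f.accuracy:.2%}")
```

The output reproduces the Actual Value, Missed Detection Rate and Accuracy columns for fields a to e.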

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, H.; Zhang, Y.; Li, Z.; Li, M.; Wu, H.; Jia, Y.; Yang, J.; Bi, S. An Efficient Method for Counting Large-Scale Plantings of Transplanted Crops in UAV Remote Sensing Images. Agriculture 2025, 15, 511. https://doi.org/10.3390/agriculture15050511

Wang H, Zhang Y, Li Z, Li M, Wu H, Jia Y, Yang J, Bi S. An Efficient Method for Counting Large-Scale Plantings of Transplanted Crops in UAV Remote Sensing Images. Agriculture. 2025; 15(5):511. https://doi.org/10.3390/agriculture15050511

Chicago/Turabian Style: Wang, Huihua, Yuhang Zhang, Zhengfang Li, Mofei Li, Haiwen Wu, Youdong Jia, Jiankun Yang, and Shun Bi. 2025. "An Efficient Method for Counting Large-Scale Plantings of Transplanted Crops in UAV Remote Sensing Images" Agriculture 15, no. 5: 511. https://doi.org/10.3390/agriculture15050511

APA Style: Wang, H., Zhang, Y., Li, Z., Li, M., Wu, H., Jia, Y., Yang, J., & Bi, S. (2025). An Efficient Method for Counting Large-Scale Plantings of Transplanted Crops in UAV Remote Sensing Images. Agriculture, 15(5), 511. https://doi.org/10.3390/agriculture15050511