Unmanned Aerial Vehicle Remote Sensing for Monitoring Fractional Vegetation Cover in Creeping Plants: A Case Study of Thymus mongolicus Ronniger

Abstract

1. Introduction

2. Materials and Methods

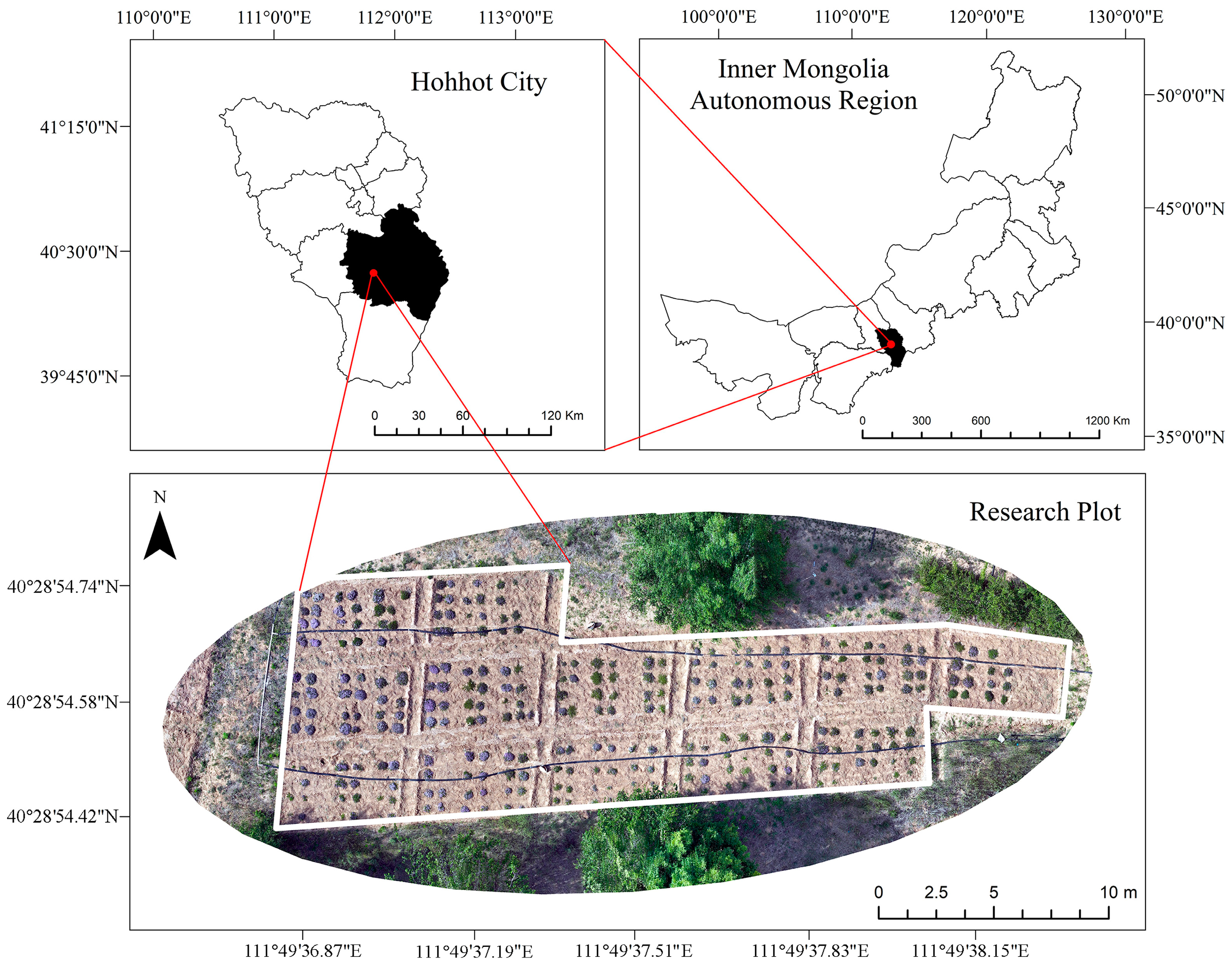

2.1. Research Plot

2.2. UAV Imagery Collection and Preprocessing

2.3. Research Methodology

2.3.1. Ground Truth Values

2.3.2. Vegetation Index-Based FVC Extraction

2.3.3. Construction and Accuracy Assessment of FVC Inversion Models

3. Results

3.1. Determination of Ground Truth Values and Optimization of Model Parameters

3.1.1. FVC Ground Truth at Different Phenological Stages

3.1.2. Parameter Sensitivity Analysis Results

- The RF model performed best during the peak flowering stage (R² = 0.93, max_depth = 5) and relatively poorly during the fruiting stage (R² = 0.77, n_estimators = 50). Its sensitivity to key parameters varied by stage, with n_estimators values ranging from 50 to 500 across stages, and max_depth values between 5 and 10 generally yielded better performance.

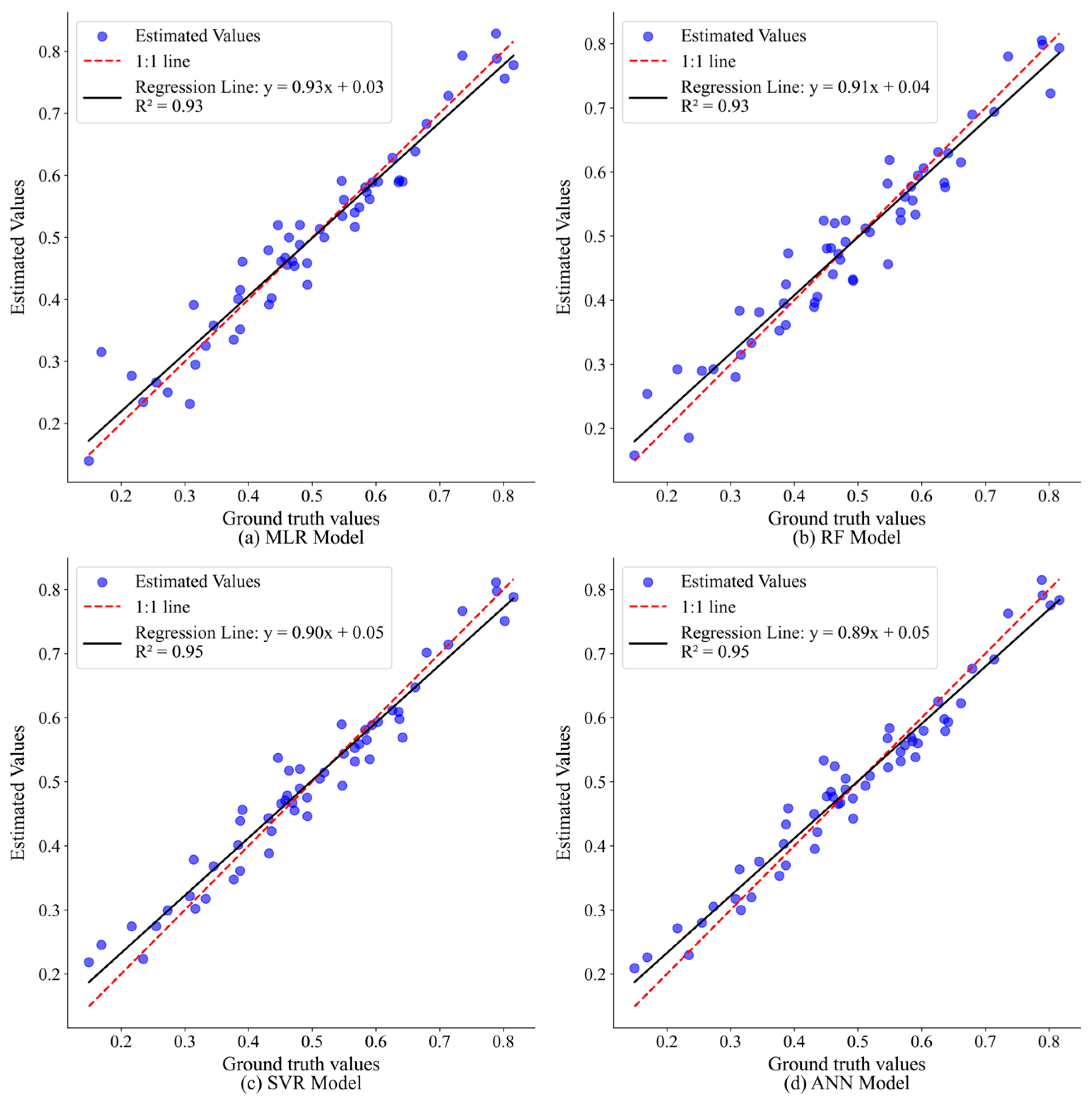

- The SVR model achieved high prediction accuracy during the peak flowering stage (R² = 0.95, gamma = 0.1) and remained strong during the early flowering stage (R² = 0.92, C = 5.0). The regularization parameter C considerably influenced model performance, with optimal values ranging from 1.0 to 10.0 across stages, whereas an epsilon of 0.1 was optimal at every phenological stage.

- The ANN model achieved its highest accuracy during the peak flowering (R² = 0.95, dropout = 0.1) and budding (R² = 0.93, dropout = 0.1) stages. Lower dropout rates (0.1–0.2) generally yielded better results across most phenological stages, whereas higher rates degraded performance. During the fruiting stage, the best performance (R² = 0.77) was obtained with a slightly higher dropout rate of 0.2.
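The stage-wise parameter tuning summarized above can be sketched with scikit-learn's `GridSearchCV`. This is a minimal sketch under stated assumptions, not the authors' code: the synthetic data, the 3-fold cross-validation, and the exact grid points (picked from the ranges reported above) are all assumptions. The ANN dropout search is omitted because scikit-learn's `MLPRegressor` has no dropout parameter; reproducing it would require a deep-learning framework.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVR


def tune_stage_models(X, y, cv=3, seed=0):
    """Grid-search RF and SVR hyperparameters for one phenological stage.

    Returns {model_name: (best_params, mean cross-validated R^2)}.
    """
    searches = {
        # RF: n_estimators spans the reported 50-500 range; max_depth 5-10.
        "RF": GridSearchCV(
            RandomForestRegressor(random_state=seed),
            param_grid={"n_estimators": [50, 100, 500], "max_depth": [5, 10]},
            scoring="r2",
            cv=cv,
        ),
        # SVR (RBF kernel): C spans the reported 1.0-10.0 range; epsilon fixed at 0.1.
        "SVR": GridSearchCV(
            SVR(kernel="rbf"),
            param_grid={"C": [1.0, 5.0, 10.0], "gamma": [0.1, 1.0], "epsilon": [0.1]},
            scoring="r2",
            cv=cv,
        ),
    }
    results = {}
    for name, search in searches.items():
        search.fit(X, y)
        results[name] = (search.best_params_, search.best_score_)
    return results


# Hypothetical stand-in data: 60 plots x 4 vegetation-index features, FVC in [0, 1].
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(60, 4))
y = np.clip(0.6 * X[:, 0] + 0.3 * X[:, 1] + rng.normal(0.0, 0.03, size=60), 0.0, 1.0)

results = tune_stage_models(X, y)
for name, (params, score) in results.items():
    print(f"{name}: best params {params}, CV R^2 = {score:.3f}")
```

Calling `tune_stage_models` once per stage dataset mirrors the stage-specific optima described above. With real reflectance-derived features, SVR inputs would usually be standardized first (for example, a `StandardScaler` inside a `Pipeline`); the synthetic features here already lie in [0, 1].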

3.2. Establishment and Validation of Best Models for Different Phenological Stages

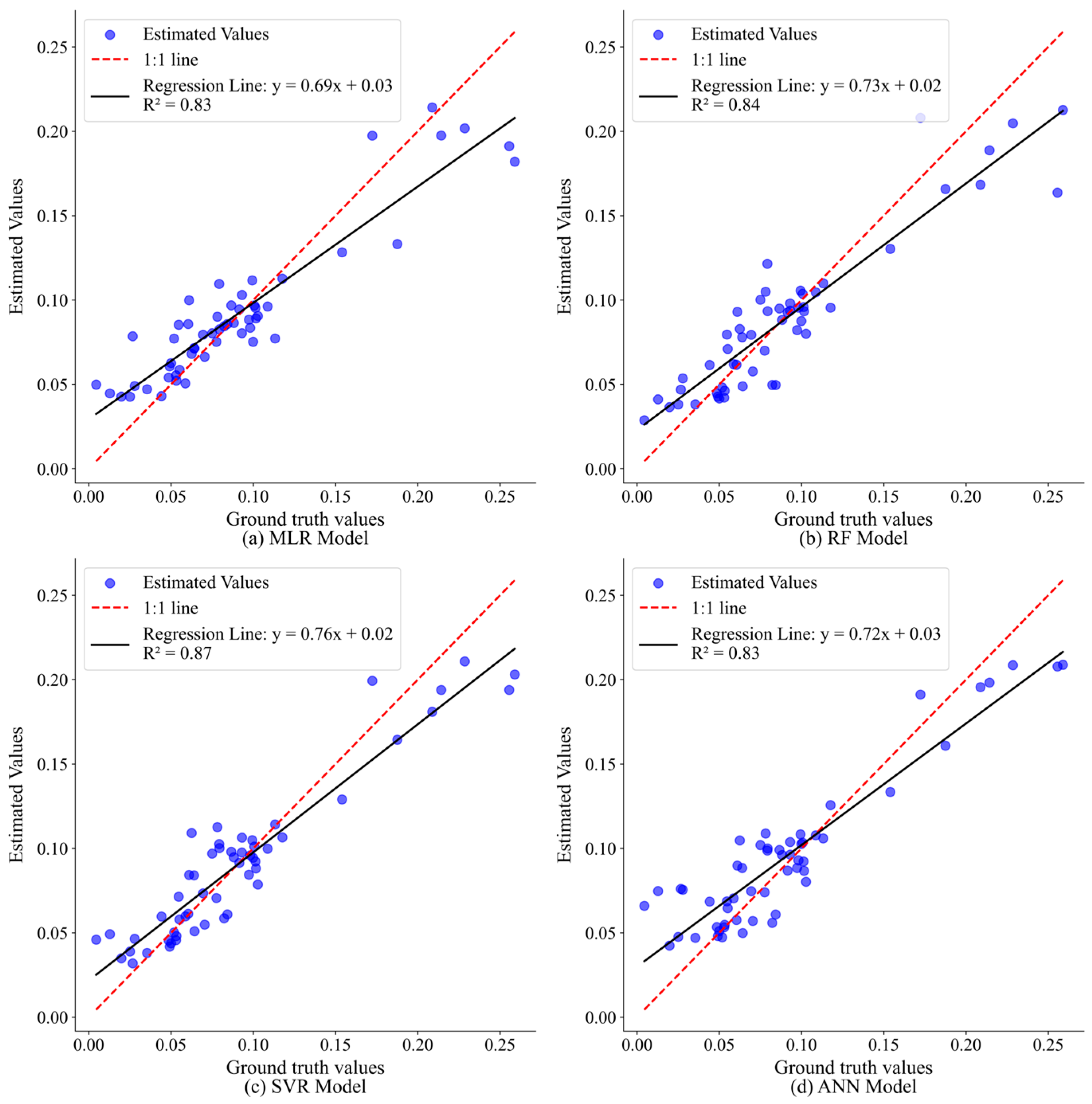

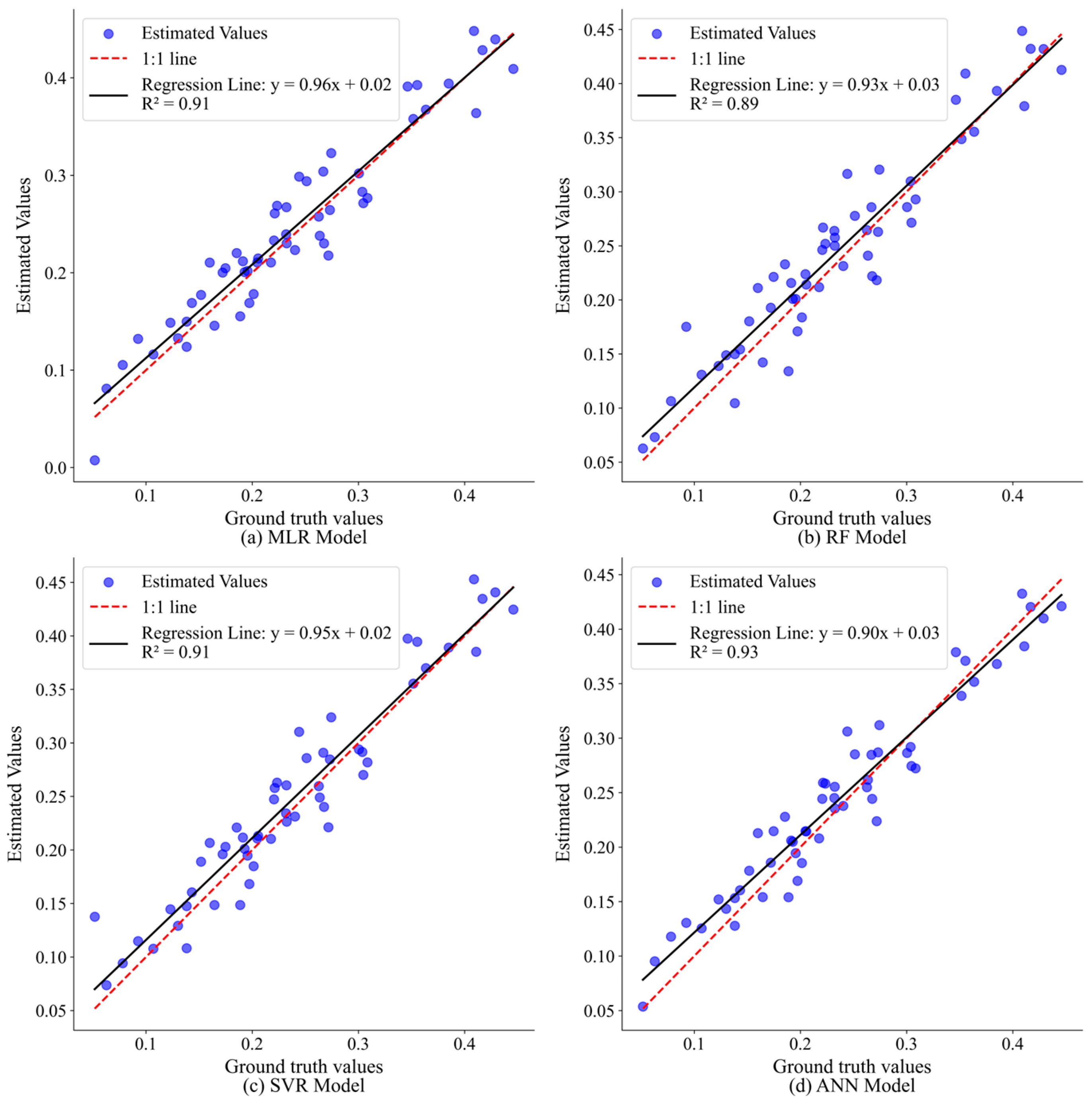

3.2.1. Validation Set Results for Different Models Across Phenological Stages

3.2.2. Best Model Results Validation

3.3. Dynamic Changes in T. mongolicus Across Phenological Stages

4. Discussion

4.1. FVC Changes Across Key Phenological Stages of T. mongolicus

4.2. Best Models for Different Phenological Stages

4.3. Error Source Analysis Across FVC Ranges

5. Conclusions

- (1)

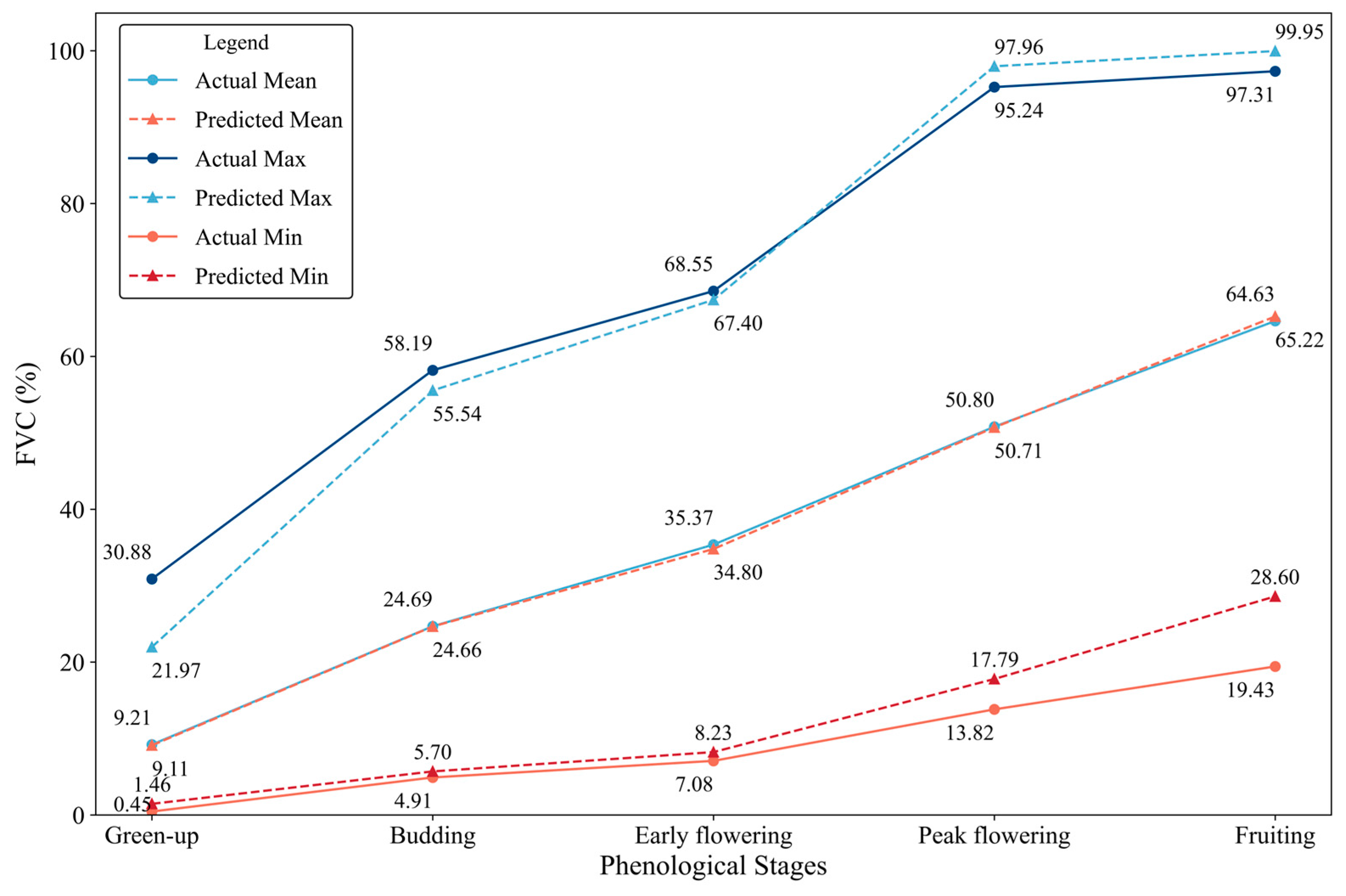

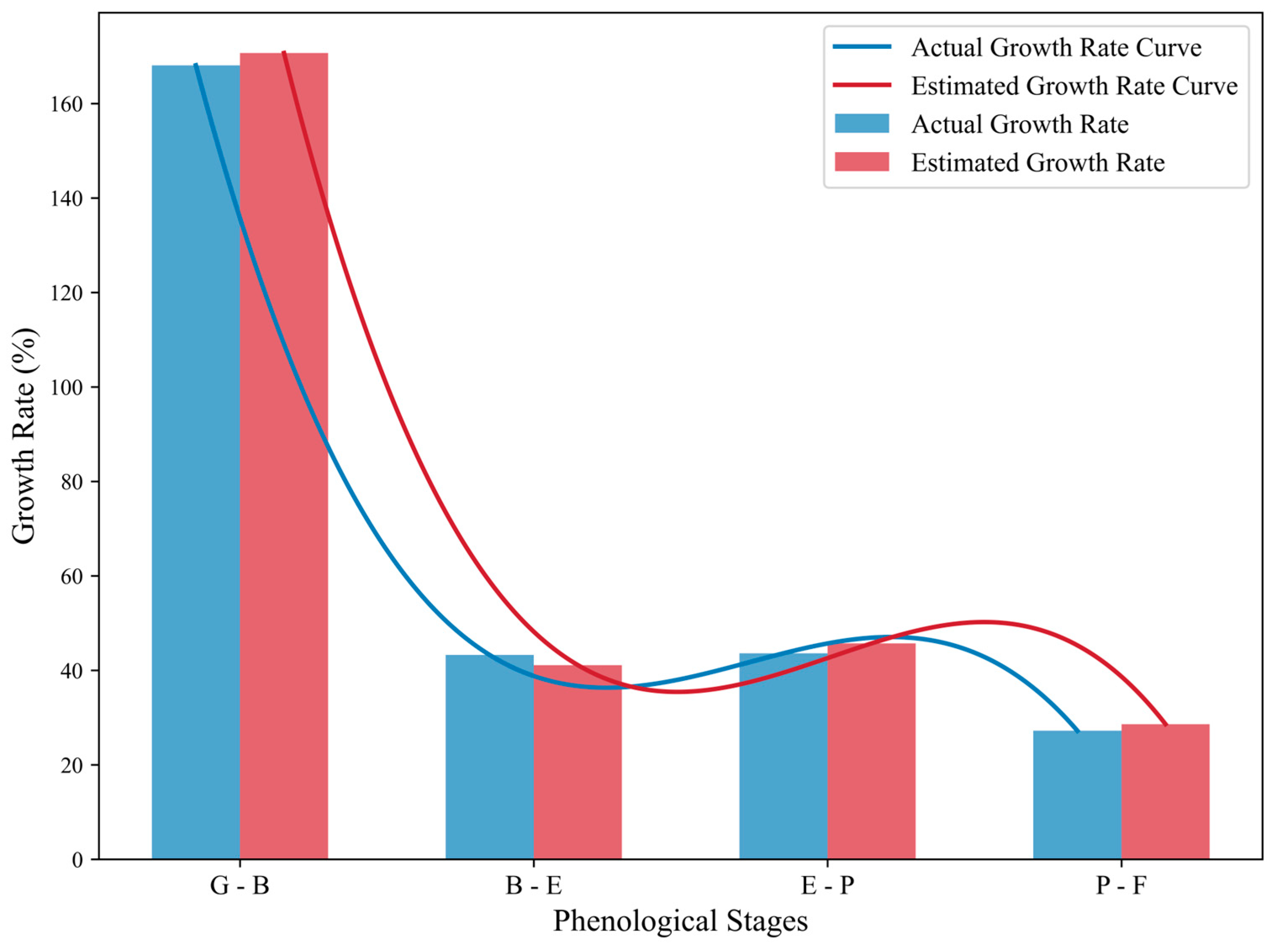

- The mean FVC of T. mongolicus exhibited notable dynamic changes across phenological stages. Particularly, the mean FVC increased from 9.21% during the green-up stage to 64.63% at the fruiting stage. The growth rate peaked at 168.08% during the transition from green-up to budding and then gradually decreased rates in subsequent stages, reflecting distinct temporal patterns in vegetation development.

- (2) The SVR model outperformed the other models during the green-up (R² = 0.87) and early flowering (R² = 0.91) stages, whereas the ANN model performed best during the budding (R² = 0.93), peak flowering (R² = 0.95), and fruiting (R² = 0.77) stages. These findings indicate that the most effective model differs across the phenological stages of T. mongolicus.

- (3) Across all phenological stages, the models effectively captured the dynamic changes in FVC, and the estimated values were consistent with ground truth measurements. The predicted growth rates closely matched the measured ones, indicating that the models are reliable for dynamically monitoring FVC changes.
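The growth rates referred to in conclusions (1) and (3) can be recomputed from the stage means in the table of ground truth and estimated values. This is a minimal sketch assuming that "growth rate" means the relative change in mean FVC between consecutive stages; that definition is inferred, not stated, but it reproduces the reported 168.08% for green-up to budding.

```python
STAGES = ["green-up", "budding", "early flowering", "peak flowering", "fruiting"]
GT_MEAN_FVC = [9.21, 24.69, 35.37, 50.80, 64.63]   # ground truth mean FVC (%)
EST_MEAN_FVC = [9.11, 24.66, 34.80, 50.71, 65.22]  # estimated mean FVC (%)


def stage_growth_rates(means):
    """Relative change (%) in mean FVC between consecutive stages, rounded to 0.01."""
    return [round((curr - prev) / prev * 100, 2) for prev, curr in zip(means, means[1:])]


gt_rates = stage_growth_rates(GT_MEAN_FVC)
est_rates = stage_growth_rates(EST_MEAN_FVC)
for prev, curr, g, e in zip(STAGES, STAGES[1:], gt_rates, est_rates):
    print(f"{prev} -> {curr}: measured {g:.2f}%, estimated {e:.2f}%")
```

With these inputs the measured rates are 168.08%, 43.26%, 43.62%, and 27.22%, and the estimated rates are 170.69%, 41.12%, 45.72%, and 28.61%, so the steep early rise followed by much slower growth is preserved in the model estimates.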

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Gitelson, A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

2. Kooch, Y.; Kartalaei, Z.M.; Amiri, M.; Zarafshar, M.; Shabani, S.; Mohammady, M. Soil health reduction following the conversion of primary vegetation covers in a semi-arid environment. Sci. Total Environ. 2024, 921, 171113.

3. Kaufmann, R.K.; Zhou, L.; Myneni, R.B.; Tucker, C.J.; Slayback, D.; Shabanov, N.V.; Pinzon, J. The effect of vegetation on surface temperature: A statistical analysis of NDVI and climate data. Geophys. Res. Lett. 2003, 30, 1234–1237.

4. Cao, W.; Xu, H.; Zhang, Z. Vegetation growth dynamic and sensitivity to changing climate in a watershed in northern China. Remote Sens. 2022, 14, 4198.

5. Marsett, R.C.; Qi, J.; Heilman, P.; Biedenbender, S.H.; Watson, M.C.; Amer, S.; Weltz, M.; Goodrich, D.; Marsett, R. Remote sensing for grassland management in the arid southwest. Rangel. Ecol. Manag. 2006, 59, 530–540.

6. Zhao, X.; Zhou, D.; Fang, J. Satellite-based studies on large-scale vegetation changes in China. J. Integr. Plant Biol. 2012, 54, 713–728.

7. Wang, Z.; Ma, Y.; Zhang, Y.; Shang, J. Review of remote sensing applications in grassland monitoring. Remote Sens. 2022, 14, 2903.

8. Robinson, J.M.; Harrison, P.A.; Mavoa, S.; Breed, M.F. Existing and emerging uses of drones in restoration ecology. Methods Ecol. Evol. 2022, 13, 1899–1911.

9. Rahman, D.A.; Sitorus, A.B.Y.; Condro, A.A. From coastal to montane forest ecosystems, using drones for multi-species research in the tropics. Drones 2022, 6, 6.

10. Tait, L.W.; Orchard, S.; Schiel, D.R. Missing the forest and the trees: Utility, limits and caveats for drone imaging of coastal marine ecosystems. Remote Sens. 2021, 13, 3136.

11. Chen, R.; Han, L.; Zhao, Y.; Zhao, Z.; Liu, Z.; Li, R.; Xia, L.; Zhai, Y. Extraction and monitoring of vegetation coverage based on uncrewed aerial vehicle visible image in a post gold mining area. Front. Ecol. Evol. 2023, 11, 1171358.

12. Zhai, L.; Yang, W.; Li, C.; Ma, C.; Wu, X.; Zhang, R. Extraction of maize canopy temperature and variation factor analysis based on multi-source unmanned aerial vehicle remote sensing data. Earth Sci. Inform. 2024, 17, 5079–5094.

13. Sato, Y.; Tsuji, T.; Matsuoka, M. Estimation of rice plant coverage using Sentinel-2 based on UAV-observed data. Remote Sens. 2024, 16, 1628.

14. Yang, S.; Li, S.; Zhang, B.; Yu, R.; Li, C.; Hu, J.; Liu, S.; Cheng, E.; Lou, Z.; Peng, D. Accurate estimation of fractional vegetation cover for winter wheat by integrated unmanned aerial systems and satellite images. Front. Plant Sci. 2023, 14, 1220137.

15. Weigel, M.M.; Andert, S.; Gerowitt, B. Monitoring patch expansion amends to evaluate the effects of non-chemical control on the creeping perennial Cirsium arvense (L.) Scop. in a spring wheat crop. Agronomy 2023, 13, 1474.

16. Editorial Committee of the Flora of China, Chinese Academy of Sciences. Flora of China; Science Press: Beijing, China, 1977; pp. 258–259.

17. Talovskaya, E.B.; Cheryomushkina, V.; Astashenkov, A.; Gordeeva, N.I. State of coenopopulations of Thymus mongolicus (Lamiaceae) depending on environmental conditions. Bot. Zhurnal 2023, 108, 3–12.

18. Liu, M.; Luo, F.; Qing, Z.; Yang, H.; Liu, X.; Yang, Z.; Zeng, J. Chemical composition and bioactivity of essential oil of ten labiatae species. Molecules 2020, 25, 4862.

19. Li, X.; He, T.; Wang, X.; Shen, M.; Yan, X.; Fan, S.; Wang, L.; Wang, X.; Xu, X.; Sui, H.; et al. Traditional uses, chemical constituents and biological activities of plants from the genus Thymus. Chem. Biodivers. 2019, 16, e1900254.

20. Yang, M. Research advances on Thymus mongolicus. Hortic. Seed. 2018, 11, 68–70.

21. Jiapaer, G.; Chen, X.; Bao, A. A comparison of methods for estimating fractional vegetation cover in arid regions. Agric. For. Meteorol. 2011, 151, 1698–1710.

22. Yue, J.; Guo, W.; Yang, G.; Zhou, C.; Feng, H.; Qiao, H. Method for accurate multi-growth-stage estimation of fractional vegetation cover using unmanned aerial vehicle remote sensing. Plant Methods 2021, 17, 51.

23. Li, X.; Liu, Y.; Wang, L. Change in fractional vegetation cover and its prediction during the growing season based on machine learning in Southwest China. Remote Sens. 2024, 16, 3623.

24. Yang, L.; Jia, K.; Liang, S.; Wei, X.; Yao, Y.; Zhang, X. A robust algorithm for estimating surface fractional vegetation cover from Landsat data. Remote Sens. 2017, 9, 857.

25. Saini, R. Integrating vegetation indices and spectral features for vegetation mapping from multispectral satellite imagery using AdaBoost and random forest machine learning classifiers. Geomat. Environ. Eng. 2022, 17, 57–74.

26. Ma, X.; Ding, J.; Wang, T.; Lu, L.; Sun, H.; Zhang, F. A pixel dichotomy coupled linear Kernel-Driven model for estimating fractional vegetation cover in arid areas from high-spatial-resolution images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

27. Andini, S.W.; Prasetyo, Y.; Sukmono, A. Analysis of vegetation distribution using Sentinel satellite imagery with NDVI and segmentation methods. J. Geod. Undip 2017, 7, 14–24.

28. Zhao, J.; Li, J.; Liu, Q.; Zhang, Z.; Dong, Y. Comparative study of fractional vegetation cover estimation methods based on fine spatial resolution images for three vegetation types. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

29. Fern, R.R.; Foxley, E.A.; Bruno, A.; Morrison, M.L. Suitability of NDVI and OSAVI as estimators of green biomass and coverage in a semi-arid rangeland. Ecol. Indic. 2018, 94, 16–21.

30. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

31. Nguyen, H.H. Comparison of various spectral indices for estimating mangrove covers using PlanetScope data: A case study in Xuan Thuy National Park, Nam Dinh Province. J. For. Sci. Technol. 2017, 5, 74–83.

32. Yin, L.; Zhou, Z.; Li, S.; Huang, D. Research on vegetation extraction and fractional vegetation cover of Karst area based on visible light image of UAV. Acta Agrestia Sin. 2020, 28, 1664–1672.

33. Li, B.; Liu, R.; Liu, S.; Liu, Q. Monitoring vegetation coverage variation of winter wheat by low-altitude UAV remote sensing system. Trans. Chin. Soc. Agric. Eng. 2012, 28, 160–165.

34. Liu, J.; Pattey, E. Retrieval of leaf area index from top-of-canopy digital photography over agricultural crops. Agric. For. Meteorol. 2010, 150, 1485–1490.

35. Rouse, J.; Haas, R.H.; Schell, J.A.; Deering, D. Monitoring vegetation systems in the great plains with ERTS. In Third ERTS Symposium; NASA Scientific and Technical Information: Washington, DC, USA, 1974; pp. 309–317.

36. Daughtry, C.S.T.; Gallo, K.P.; Goward, S.N.; Prince, S.D.; Kustas, W.P. Spectral estimates of absorbed radiation and phytomass production in corn and soybean canopies. Remote Sens. Environ. 1992, 39, 141–152.

37. Pearson, R.; Miller, L.D. Remote mapping of standing crop biomass for estimation of the productivity of the short-grass prairie. Remote Sens. Environ. 1972, 2, 1357–1381.

38. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

39. Tanphiphat, K.; Appleby, A.P. Growth and development of Bulbous oatgrass (Arrhenatherum elatius var. bulbosum). Weed Technol. 1990, 4, 843–848.

40. Maurice, I.; Gastal, F.; Durand, J.-L. Generation of form and associated mass deposition during leaf development in grasses: A kinematic approach for non-steady growth. Ann. Bot. 1997, 80, 673–683.

41. Kawagishi, Y.; Miura, Y. Growth characteristics and effect of nitrogen and potassium topdressing on thickening growth of bulbs in spring-planted edible lily (Lilium leichtlinii var. maximowiczii Baker). Jpn. J. Crop Sci. 1996, 65, 51–57.

42. Pitelka, L.F. Energy allocations in annual and perennial lupines (Lupinus: Leguminosae). Ecology 1977, 58, 1055–1065.

43. Zohner, C.M.; Renner, S.S.; Sebald, V.; Crowther, T.W. How changes in spring and autumn phenology translate into growth-experimental evidence of asymmetric effects. J. Ecol. 2021, 109, 2717–2728.

44. Curran, P.J. The estimation of the surface moisture of a vegetated soil using aerial infrared photography. Remote Sens. 1981, 2, 369–378.

45. Fernandes, A.M.; Fortini, E.A.; Müller, L.A.d.C.; Batista, D.S.; Vieira, L.M.; Silva, P.O.; Amaral, C.H.d.; Poethig, R.S.; Otoni, W.C. Leaf development stages and ontogenetic changes in passionfruit (Passiflora edulis Sims.) are detected by narrowband spectral signal. J. Photochem. Photobiol. B Biol. 2020, 209, 111931.

46. Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354.

47. Si, W.; Amari, S.I. Conformal transformation of kernel functions: A data-dependent way to improve support vector machine classifiers. Neural Process. Lett. 2002, 15, 59–67.

48. Zhou, S. Sparse SVM for sufficient data reduction. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5560–5571.

49. Xie, Q.; Dash, J.; Huang, W.; Peng, D.; Qin, Q.; Mortimer, H. Vegetation indices combining the red and red-edge spectral information for leaf area index retrieval. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1482–1493.

50. Zhang, Y.; Zheng, L.; Li, M.; Deng, X.; Ji, R. Predicting apple sugar content based on spectral characteristics of apple tree leaf in different phenological phases. Comput. Electron. Agric. 2015, 112, 20–27.

51. Gardner, M.; Dorling, S. Artificial neural networks (the multilayer perceptron): A review of applications in the atmospheric sciences. Atmos. Environ. 1998, 32, 2627–2636.

52. Sheehy, J.E. Microclimate, canopy structure, and photosynthesis in canopies of three contrasting temperate forage grasses: III. canopy photosynthesis, individual leaf photosynthesis and the distribution of current assimilate. Ann. Bot. 1977, 41, 593–604.

53. Gao, L.; Wang, X.; Johnson, B.A.; Tian, Q.; Wang, Y.; Verrelst, J.; Mu, X.; Gu, X. Remote sensing algorithms for estimation of fractional vegetation cover using pure vegetation index values: A review. ISPRS J. Photogramm. Remote Sens. 2020, 159, 364–377.

54. Gu, Y.; Wylie, B.K.; Howard, D.M.; Phuyal, K.P.; Ji, L. NDVI saturation adjustment: A new approach for improving cropland performance estimates in the Greater Platte River Basin, USA. Ecol. Indic. 2013, 30, 1–6.

55. Yuan, H.; Yang, G.; Li, C.; Wang, Y.; Liu, J.; Yu, H.; Feng, H.; Xu, B.; Zhao, X.; Yang, X. Retrieving soybean leaf area index from unmanned aerial vehicle hyperspectral remote sensing: Analysis of RF, ANN, and SVM regression models. Remote Sens. 2017, 9, 309.

56. de Souza, A.V.; Bonini Neto, A.; Cabrera Piazentin, J.; Dainese Junior, B.J.; Perin Gomes, E.; dos Santos Batista Bonini, C.; Ferrari Putti, F. Artificial neural network modelling in the prediction of bananas’ harvest. Sci. Hortic. 2019, 257, 108724.

57. Ramezan, C.A.; Warner, T.A.; Maxwell, A.E.; Price, B.S. Effects of training set size on supervised machine-learning land-cover classification of large-area high-resolution remotely sensed data. Remote Sens. 2021, 13, 368.

58. Cao, J.; Zhang, Z.; Tao, F.; Zhang, L.; Luo, Y.; Han, J.; Li, Z. Identifying the contributions of multi-source data for winter wheat yield prediction in China. Remote Sens. 2020, 12, 750.

59. Kamir, E.; Waldner, F.; Hochman, Z. Estimating wheat yields in Australia using climate records, satellite image time series and machine learning methods. ISPRS J. Photogramm. Remote Sens. 2020, 160, 124–135.

| Parameter | Description | Specifications |
|---|---|---|
| UAV | Takeoff weight | 1050 g |
| | Flight speed | 1 m/s |
| | RTK positioning accuracy | Horizontal: 1 cm + 1 ppm; Vertical: 1.5 cm + 1 ppm |
| Lens | Visible light imaging | RGB composite |
| | Multispectral imaging | Green (G): 560 nm ± 16 nm; Red (R): 650 nm ± 16 nm; Red edge (RE): 730 nm ± 16 nm; Near infrared (NIR): 860 nm ± 26 nm |
| | Maximum resolution | 20 megapixels |
| | Photo format | JPEG; TIFF |
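The RTK positioning accuracy in the table above follows the usual "constant + ppm" convention: the ppm term grows with the distance between the rover and the RTK base station, and 1 ppm corresponds to 1 mm of additional error per km of baseline. The small sketch below evaluates that rule; the baseline distance is a hypothetical input, since it is not reported here.

```python
def rtk_error_cm(constant_cm: float, baseline_km: float, ppm: float = 1.0) -> float:
    """Nominal RTK error in cm for a 'constant + ppm' specification.

    1 ppm of a 1 km baseline is 1 mm = 0.1 cm, so the distance-dependent
    term is ppm * baseline_km * 0.1 cm.
    """
    return constant_cm + ppm * baseline_km * 0.1


# Hypothetical 5 km baseline, applied to the horizontal and vertical specs.
horizontal = rtk_error_cm(1.0, 5.0)
vertical = rtk_error_cm(1.5, 5.0)
print(f"horizontal ~{horizontal:.1f} cm, vertical ~{vertical:.1f} cm")
```

At a 5 km baseline this gives about 1.5 cm horizontally and 2.0 cm vertically, which is far finer than typical plot dimensions, so positioning error is unlikely to dominate plot-level FVC comparisons.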

| VI | Vegetation index | Equation | Reference |
|---|---|---|---|
| NDVI | Normalized difference vegetation index | (NIR − R)/(NIR + R) | [35] |
| GNDVI | Green normalized difference vegetation index | (NIR − G)/(NIR + G) | [36] |
| RVI | Ratio vegetation index | NIR/R | [37] |
| DVI | Difference vegetation index | NIR − R | [38] |
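The four indices in the table are simple band arithmetic on the G, R, and NIR reflectances listed in the sensor specifications. The sketch below computes them with NumPy and, purely as an illustration, converts an index to FVC with the pixel dichotomy model, FVC = (VI − VI_soil)/(VI_veg − VI_soil). This section does not state whether the authors used this model or which soil and vegetation endmembers they chose; the 5th/95th percentile endmembers are an assumption.

```python
import numpy as np


def vegetation_indices(green, red, nir):
    """NDVI, GNDVI, RVI and DVI from reflectance arrays (values in 0-1)."""
    green, red, nir = (np.asarray(b, dtype=float) for b in (green, red, nir))
    return {
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (nir - green) / (nir + green),
        "RVI": nir / red,
        "DVI": nir - red,
    }


def fvc_pixel_dichotomy(vi, soil_pct=5, veg_pct=95):
    """Pixel dichotomy FVC, clipped to [0, 1].

    VI_soil and VI_veg are taken as low/high percentiles of the image;
    the percentile choice is an assumption, not a reported setting.
    """
    vi = np.asarray(vi, dtype=float)
    vi_soil, vi_veg = np.percentile(vi, [soil_pct, veg_pct])
    return np.clip((vi - vi_soil) / (vi_veg - vi_soil), 0.0, 1.0)


# Hypothetical reflectances for two pixels (not measured values).
vis = vegetation_indices(green=[0.08, 0.06], red=[0.10, 0.04], nir=[0.50, 0.45])
fvc = fvc_pixel_dichotomy(np.linspace(0.0, 1.0, 101))
print({name: np.round(values, 3).tolist() for name, values in vis.items()})
```

Percentile endmembers keep a few extreme pixels from defining pure soil or pure vegetation; values outside the endmember range are clipped to 0 or 1.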

| Parameters | Metrics | Green-Up | Budding | Early Flowering | Peak Flowering | Fruiting |
|---|---|---|---|---|---|---|
| Ground truth values | Mean | 9.21% | 24.69% | 35.37% | 50.80% | 64.63% |
| | Min | 0.45% | 4.91% | 7.08% | 13.82% | 19.43% |
| | Max | 30.88% | 58.19% | 68.55% | 95.24% | 97.31% |
| | SD | 0.05 | 0.09 | 0.11 | 0.15 | 0.17 |
| Estimated values | Mean | 9.11% | 24.66% | 34.80% | 50.71% | 65.22% |
| | Min | 1.46% | 5.70% | 8.23% | 17.79% | 28.60% |
| | Max | 21.97% | 55.54% | 67.40% | 97.96% | 99.95% |
| | SD | 0.04 | 0.09 | 0.11 | 0.14 | 0.15 |
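The agreement between the ground truth and estimated rows of the table above can be summarized numerically. The sketch below is an illustration, not the authors' evaluation code: it computes the per-stage difference in mean FVC (estimated minus ground truth, in percentage points) from the table, and defines R² under the usual 1 − SS_res/SS_tot convention for sample-level comparisons. The per-plot inputs used to exercise `r_squared` are hypothetical.

```python
STAGES = ["Green-up", "Budding", "Early flowering", "Peak flowering", "Fruiting"]
GT_MEAN = [9.21, 24.69, 35.37, 50.80, 64.63]   # ground truth mean FVC (%)
EST_MEAN = [9.11, 24.66, 34.80, 50.71, 65.22]  # estimated mean FVC (%)


def mean_bias(ground_truth, estimated):
    """Estimated minus ground truth per stage, rounded to 0.01 percentage points."""
    return [round(e - g, 2) for g, e in zip(ground_truth, estimated)]


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


bias = mean_bias(GT_MEAN, EST_MEAN)
for stage, b in zip(STAGES, bias):
    print(f"{stage}: {b:+.2f} percentage points")

# Hypothetical per-plot FVC fractions, only to exercise r_squared.
print(r_squared([0.10, 0.30, 0.50, 0.70], [0.12, 0.30, 0.50, 0.70]))
```

For the table values, the largest absolute difference in stage means is 0.59 percentage points, at the fruiting stage. Agreement of means does not by itself imply a high R², which depends on plot-by-plot errors.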


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, H.; Mi, W.; Cao, K.; Ren, W.; Chi, Y.; Yuan, F.; Liu, Y. Unmanned Aerial Vehicle Remote Sensing for Monitoring Fractional Vegetation Cover in Creeping Plants: A Case Study of Thymus mongolicus Ronniger. Agriculture 2025, 15, 502. https://doi.org/10.3390/agriculture15050502


Chicago/Turabian Style: Zheng, Hao, Wentao Mi, Kaiyan Cao, Weibo Ren, Yuan Chi, Feng Yuan, and Yaling Liu. 2025. "Unmanned Aerial Vehicle Remote Sensing for Monitoring Fractional Vegetation Cover in Creeping Plants: A Case Study of Thymus mongolicus Ronniger" Agriculture 15, no. 5: 502. https://doi.org/10.3390/agriculture15050502
