1. Introduction

In commercial livestock production, animals frequently encounter diverse stressors throughout their lives, including handling, transport, social competition, and specific housing conditions. An animal’s ability to effectively cope with these challenges profoundly influences its health, welfare, and productive efficiency [1,2]. Within pig populations, discernible individual differences in stress responsiveness, often characterised as proactive versus reactive coping styles, are known to impact neuroendocrine function, behavioural patterns, and immune competence [3]. Importantly, these inherent traits are not only shaped by genetic predisposition but also significantly modulated by early life and prenatal environmental exposures [4].

Early life stress in pigs can trigger enduring ‘developmental programming’ effects, which are thought to be mediated through epigenetic mechanisms [5]. Such effects are often attributed to elevations in maternal glucocorticoid levels during critical windows of foetal development, which, alongside other physiological changes, can disrupt normal functioning of the hypothalamic–pituitary–adrenal (HPA) axis and various neurotransmitter systems. Such changes can lead to a variety of biological impairments, for example, to cognition and immune function, as well as to aspects of behaviour such as pain or fear responses, social interactions, and maternal behaviour [6,7,8,9,10]. Beyond specific stress exposures, there is evidence that naturally occurring variation (e.g., in maternal temperament [11] or social status [12]) can also alter progeny phenotypes.

However, the translation of early life research findings into commercial livestock production has been limited by difficulties in identifying affected animals and in relating negative outcomes in production animals back to stressor exposures that may have occurred before they were born. In a research context, methodologies for assessing stress and emotional states in livestock typically combine physiological and behavioural metrics. Commonly, glucocorticoid hormones, or their metabolites, are measured in various sample types (blood, saliva, faeces, and hair), but interpretation is complicated by individual variability, sampling invasiveness, and limited cross-context reliability [13,14]. Behavioural tests such as the novel object, open field, and startle response tests are also widely used in pigs, but these often suffer from low sensitivity and poor standardisation [15,16,17].

In both cases, application outside of research in real-world environments is limited, and effective detection of welfare issues in industry remains a challenge. This challenge also exists in laboratory animal populations, particularly in relation to painful experiences. In this context clear advances have been made following the introduction of the mouse grimace scale in 2010 [18], which introduced the concept of using facial expression analysis to gain insight into animal experiences. Since then, applications of facial assessments have shown substantial promise as a non-invasive approach to interpreting animal emotions in various species [19] including pigs [20,21,22].

Building on these advances, recent studies have successfully applied computer vision and machine learning techniques to detect stress and other affective states in pigs using facial imagery [23,24], demonstrating the feasibility of real-time, scalable welfare monitoring in commercial settings.

Our study aimed to address the novel question of whether putatively stress-related features of mothers are associated with similar features in their progeny. Addressing this question has implications for precision livestock farming, where stress assessments could benefit animal health (for instance, in the context of antimicrobial resistance (AMR), where stress is recognised as a key driver of diseases [2,25]), animal welfare, and production performance.

This study presents and validates a novel deep learning framework that leverages facial imagery from pregnant sows to predict putative stress phenotypes in their daughters. We investigated the ability of modern neural network architectures to detect stress-related facial features in pigs. Five state-of-the-art models, ConvNeXt [26], EfficientNet_V2 [27], MobileNet_V3 [28], RegNet [29], and the Vision Transformer (ViT) [30], were selected for evaluation. Facial images were collected from sows previously classified as low (LR) or high (HR) stress responders through behavioural testing and salivary cortisol analysis. Independent validation was performed on a separate cohort of 53 daughters to ensure that the observed predictive patterns reflected stress-associated features rather than familial resemblance.

2. Materials and Methods

2.1. Experimental Design and Data Acquisition

Data collection was conducted at Scotland’s Rural College (SRUC) Pig Research Centre between August 2022 and November 2024. All procedures were approved by both SRUC’s and UWE’s Animal Welfare Ethical Review Bodies (PIG-AE 14-2022 and R209, respectively) and complied with established ethical standards.

Imaging sessions were performed on gestation days 70 (D70) and 90 (D90) during an induced food competition scenario conducted in the home pen environment. Facial imagery was collected first from the sows and later from their daughters, who were also subjects of this study, once they had entered the breeding herd as gilts (i.e., female pigs bearing their first litter). Baseline images of sow faces were collected as the sows accessed their individual feeding stalls. After a one-hour acclimation period to habituate the animals to the equipment, the test commenced according to the procedure described by Ison et al. [31]. Briefly, a line of feed was introduced on the pen floor, triggering spontaneous competition over the resource. The competition phase lasted approximately 10 min, during which video footage was continuously recorded to capture dynamic facial and social interactions. SRUC researchers collected behavioural and physiological measurements from the sows, including salivary cortisol levels and behavioural responses during the food competition tests.

To determine salivary cortisol levels, samples were collected from the sows (and later from their daughters at the same stage of gestation) 15 min before and after the food competition test. Samples were obtained by allowing the pigs to chew on a large cotton swab (Millpledge Veterinary, DX09396) until saturated, typically for approximately 20 s. Collected swabs were placed in Salivette® tubes (Sarstedt AG & Co., Nümbrecht, Germany) and stored in a Styrofoam box with ice blocks at approximately 8 °C. Thirty minutes after collection, swabs were centrifuged at 3000 rpm for 5 min at approximately 4 °C. Samples were refrigerated for transport to the laboratory and subsequently frozen at −80 °C (approximately 4 to 5 h after collection) before being analysed by biomarker laboratory specialists at a later date.

During the food competition test, direct observations of aggression received by each sow were recorded (see [31] for a full description of behaviours observed). These responses were used to assign dominance ranks within the home pen group and contributed to the development of a behavioural and physiological profile for each sow. This profile allowed researchers to combine behavioural data with salivary cortisol levels to classify sows as ‘low responders’ (LR) or ‘high responders’ (HR) for the purposes of facial image analysis and stress-related investigations.

The camera system used for capturing facial imagery was engineered to withstand the demanding conditions of a commercial pig facility. A custom wooden mount supported multiple GoPro HERO11 Black cameras (Model CHDHX-111, GoPro Inc., San Mateo, CA, USA) [32], with each unit assigned to a specific feeding stall. Each camera was independently powered using an Anker 337 Power Bank (PowerCore 26K, Model A1277, Anker Innovations Ltd., Changsha, China) [33], as shown in Figure 1 (left). Continuous recording was maintained for 10.5 h per session using 512 GB SD cards, with footage captured at a resolution of 640 × 480 and a frame rate of 30 frames per second. To ensure animal safety and minimise interference, cameras were mounted approximately 20 cm from the animals. Although this reduced pig interaction with the setup, it resulted in a broader field of view and introduced challenges for motion detection. To mitigate this, custom motion detection masks were implemented using the GoPro API. These effectively filtered out empty-stall footage and focused data capture on periods of sow activity.

2.2. Image Processing and Quality Control

Following the extraction of raw video data from the GoPro camera SD cards, a custom Python (v3.10.11) program was developed to address challenges posed by the wide field of view and obstructive metal bars present in the housing environment. This code facilitated the removal of such visual obstructions, significantly enhancing the clarity of the footage for subsequent processing, as illustrated in Figure 1 (middle). A central objective was to efficiently extract relevant pig facial images from hundreds of hours of video using a semi-automated approach. The resulting pipeline integrated automated detection with rigorous quality control, ensuring that only high-quality, relevant facial images were retained for model training. The workflow consisted of the following sequential stages:

2.2.1. Automated Face Segmentation

Initial face segmentation was performed manually using the Segment Anything Model (SAM) [34] by Meta AI, which enables high-precision, interactive segmentation. A total of 100 sow facial images were manually segmented and labelled to generate a training dataset for a YOLOv8 segmentation model [35]. This custom YOLOv8 model was subsequently deployed to automatically detect and segment pig faces within the full video frames. The model produced bounding boxes for candidate facial regions, which were further refined using predefined spatial constraints (Figure 1, right). To eliminate false detections, an area-based filter was applied, retaining only face bounding boxes within the range of 2000 to 2,600,000 pixels.
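The area-based filter can be illustrated with a minimal sketch. Only the two area limits come from the text; the `(x_min, y_min, x_max, y_max)` box layout and the function names are assumptions made for illustration and do not reproduce the authors' code.

```python
from typing import List, Tuple

# Area limits in pixels, as stated in the text above.
MIN_AREA = 2_000
MAX_AREA = 2_600_000

# Assumed box layout (hypothetical): corner coordinates in pixels.
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_area(box: Box) -> float:
    """Return the box area in pixels; degenerate boxes have area 0."""
    x_min, y_min, x_max, y_max = box
    return max(0.0, x_max - x_min) * max(0.0, y_max - y_min)


def filter_by_area(
    boxes: List[Box],
    min_area: float = MIN_AREA,
    max_area: float = MAX_AREA,
) -> List[Box]:
    """Keep only boxes whose area lies within [min_area, max_area]."""
    return [b for b in boxes if min_area <= box_area(b) <= max_area]
```

In this sketch a detection that is too small to be a usable face, or so large that it is unlikely to be a single face, is simply dropped before any further validation.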

2.2.2. Eye-Based Image Validation

Each segmented facial region was subjected to an additional validation step using a pre-trained YOLOv3-Tiny model [36] designed for pig eye detection. This anatomical verification ensured that only images containing at least one visible eye were retained, thereby enhancing consistency and reducing the likelihood of false positives.
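The retention rule (keep a face crop only if at least one eye is detected) can be sketched independently of the detector. Here `detect_eyes` is a hypothetical callable standing in for the eye-detection model; its name and return type are assumptions, not part of any library API.

```python
from typing import Callable, Iterable, List, Sequence, TypeVar

Image = TypeVar("Image")


def keep_faces_with_eyes(
    face_crops: Iterable[Image],
    detect_eyes: Callable[[Image], Sequence],  # hypothetical: returns one entry per detected eye
    min_eyes: int = 1,
) -> List[Image]:
    """Retain only the crops in which the detector reports at least `min_eyes` eyes."""
    return [crop for crop in face_crops if len(detect_eyes(crop)) >= min_eyes]
```

Passing the detector in as a function keeps the rule itself testable without loading a model.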

2.2.3. Redundancy Reduction and Dataset Optimisation

To minimise dataset redundancy and improve representativeness, we extracted deep features from each validated image using a pre-trained VGG16 model [37] with its final classification layers removed. Feature vectors were then clustered using the K-Means algorithm [38], and one representative image was randomly selected from each cluster. This strategy preserved dataset diversity while eliminating duplicate or near-identical sequential frames. The full data processing pipeline is schematically summarised in Figure 2.
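The clustering and sampling step can be illustrated with a small, dependency-free sketch. It uses a plain implementation of Lloyd's K-Means on short toy vectors in place of VGG16 features; the number of clusters, the seeds, and all function names are assumptions, since the text does not report these settings.

```python
import random
from typing import Dict, List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_labels(features: List[Vector], k: int, iters: int = 50, seed: int = 0) -> List[int]:
    """Plain Lloyd's algorithm; returns one cluster index per vector (requires k <= len(features))."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(features, k)]
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: each vector goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(v, centroids[c]))
            for v in features
        ]
        if new_labels == labels:  # assignments stable, so the algorithm has converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [features[i] for i, lab in enumerate(labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def pick_representatives(labels: List[int], seed: int = 0) -> List[int]:
    """Randomly pick the index of one item from each non-empty cluster."""
    rng = random.Random(seed)
    clusters: Dict[int, List[int]] = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    return sorted(rng.choice(members) for members in clusters.values())
```

With near-identical consecutive frames falling into the same cluster, keeping one index per cluster removes most of the redundancy while still sampling every visually distinct group.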

2.3. Deep Learning Model Selection and Configuration

To classify sow facial images into the low-responder (LR) and high-responder (HR) categories, we selected five state-of-the-art deep learning architectures: ConvNeXt [26], EfficientNet_V2 [27], MobileNet_V3 [28], RegNet [29], and the Vision Transformer (ViT) [30]. Each model was initialised with weights pre-trained on the ImageNet-1K dataset [39] and fine-tuned on our sow stress dataset using a transfer learning approach [40]. A standardised preprocessing pipeline was applied across all models, including image resizing, normalisation, and data augmentation techniques such as random flipping and rotation. Training was performed using the Adam optimiser with a learning rate of

and cross-entropy loss. Early stopping based on validation loss was employed to mitigate overfitting. Model robustness was further ensured through a cross-validation scheme.
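The early stopping rule can be sketched without reference to any particular training framework. The `patience` and `min_delta` parameters are assumptions introduced for illustration; the text states only that stopping was based on validation loss.

```python
from typing import Iterable, Tuple


class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0) -> None:
        self.patience = patience    # assumed value, not reported in the text
        self.min_delta = min_delta  # minimum decrease that counts as an improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def simulate(val_losses: Iterable[float], patience: int = 3) -> Tuple[int, int]:
    """Replay a sequence of validation losses; return (best_epoch, last_epoch_run)."""
    stopper = EarlyStopping(patience=patience)
    last_epoch = -1
    for epoch, loss in enumerate(val_losses):
        last_epoch = epoch
        if stopper.step(epoch, loss):
            break
    return stopper.best_epoch, last_epoch
```

In a real training loop, the weights saved at `best_epoch` would typically be the ones restored for evaluation.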

2.4. Cross-Generational Validation Strategy

To structure the dataset for biologically meaningful validation, animals were grouped into six distinct batches, each comprising a set of gestating sows tested under similar conditions. Each sow’s daughters were assigned to the same batch as their mother, so that batch membership defined both the training (sows) and evaluation (daughters) sets. This design enabled a leave-one-batch-out (LOBO) cross-validation strategy, which was well-suited to the batch-wise structure of our dataset and accounted for biological variability within the population [41,42]. Under this strategy, models were evaluated on daughters from entirely unseen sow cohorts, preserving biological independence between the training and test sets. The training dataset comprised six distinct batches of 18 gestating sows. Within each batch, sows were classified as low (LR) or high (HR) responders based on their behavioural responses in the food competition tests and on the salivary cortisol analyses conducted by SRUC research staff, as described above.

A central aim of this study was to determine whether there are associations that are detectable through automated analysis between mothers and their progeny in terms of facial expression of stress responses. Critically, the goal was not to exclude all associated facial traits from the learning process but rather to ensure that the models were identifying generalisable, putatively stress-linked phenotypes rather than simply memorising individual identity or superficial familial resemblance. To this end, all models were trained exclusively on facial images from the maternal sow cohort and evaluated on an entirely independent set of 53 daughters. The batch-based partitioning was designed to ensure that the models learned putatively stress-related facial patterns rather than batch-specific or environmental artefacts. Both sows and their daughters were classified using the same standardised behavioural and physiological criteria, ensuring consistency in phenotype labelling across generations. To prevent overfitting to identity-specific visual features, no model was ever evaluated on the daughter of a sow included in its training data. This strict lineage-exclusion protocol ensured that the models were assessing associated stress-related facial features and not exploiting visual similarities between biologically related individuals. The details of the sow–daughter associations are provided in Table 1.

A core innovation and key strength of this study lies in its unique cross-generation validation design. Deep learning models were exclusively trained on face images of pregnant sows and subsequently evaluated on their daughters. This novel approach enabled a direct investigation into whether facial markers of maternal responses to stress, which are detectable by artificial intelligence, are associated with similar changes in their progeny.

All 53 daughters were recorded using the same test protocol used for their mothers, including assessments on days 70 and 90 of pregnancy. These contexts were specifically chosen to induce naturalistic facial expressions without introducing external stressors, thereby mirroring the conditions under which maternal data were collected. Our cross-validation framework rigorously enforced lineage separation, ensuring that no familial overlap occurred between the training and test sets. This strategy eliminates potential confounding factors from facial similarity between mothers and daughters. A detailed overview of the cross-generational leave-one-batch-out validation protocol is presented in Table 2.
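The lineage-exclusion logic of the leave-one-batch-out protocol can be expressed as a short sketch. The two dictionaries (`sow_batch`, mapping each sow to her batch, and `daughter_mother`, mapping each daughter to her mother) are hypothetical data structures chosen for illustration, as are the animal identifiers in the usage example.

```python
from typing import Dict, Iterator, List, Tuple


def lobo_splits(
    sow_batch: Dict[str, int],
    daughter_mother: Dict[str, str],
) -> Iterator[Tuple[int, List[str], List[str]]]:
    """Yield (held_out_batch, training_sows, test_daughters) for each batch.

    Training uses sows from every batch except the held-out one; testing uses
    only daughters whose mothers belong to the held-out batch, so no daughter
    is ever evaluated by a model trained on her own mother.
    """
    for held_out in sorted(set(sow_batch.values())):
        train_sows = sorted(s for s, b in sow_batch.items() if b != held_out)
        test_daughters = sorted(
            d for d, m in daughter_mother.items() if sow_batch[m] == held_out
        )
        yield held_out, train_sows, test_daughters


# Toy usage with made-up identifiers (not the study's animals).
toy_sows = {"S1": 1, "S2": 1, "S3": 2, "S4": 3}
toy_daughters = {"D1": "S1", "D2": "S3", "D3": "S4", "D4": "S4"}
for batch, train, test in lobo_splits(toy_sows, toy_daughters):
    # The mother of every test daughter must be absent from the training set.
    assert all(toy_daughters[d] not in train for d in test)
```

Because daughters inherit their mother's batch, holding out a batch removes each tested daughter's mother from training by construction.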

2.5. Evaluation Metrics and Explainability Analysis

2.5.1. Performance Metrics

Model performance was assessed using a suite of standard classification metrics that together provide a robust picture of predictive behaviour [43]. These included accuracy, precision, recall, F1-scores, and class-specific confusion matrices. To ensure biological and statistical robustness, all metrics were first computed separately for each validation batch and then averaged across the six cross-generational folds. In addition, per-class precision and recall were examined to highlight potential asymmetries in model sensitivity between low-responder (LR) and high-responder (HR) animals.
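The order of operations described above (metrics per batch first, then an unweighted mean across folds) can be sketched as follows. The nested-dictionary confusion matrix layout and the function names are assumptions made for illustration, and the counts in any example are toy values rather than study results.

```python
from statistics import mean
from typing import Dict, List

# Assumed layout: confusion[true_label][predicted_label] = count.
Confusion = Dict[str, Dict[str, int]]
CLASSES = ("LR", "HR")


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def fold_metrics(cm: Confusion) -> Dict[str, float]:
    """Accuracy plus per-class and macro precision, recall, and F1 for one fold."""
    total = sum(cm[t][p] for t in CLASSES for p in CLASSES)
    out = {"accuracy": safe_div(sum(cm[c][c] for c in CLASSES), total)}
    for c in CLASSES:
        tp = cm[c][c]
        predicted_c = sum(cm[t][c] for t in CLASSES)  # everything predicted as c
        actual_c = sum(cm[c][p] for p in CLASSES)     # everything truly c
        precision = safe_div(tp, predicted_c)
        recall = safe_div(tp, actual_c)
        out[f"{c}_precision"] = precision
        out[f"{c}_recall"] = recall
        out[f"{c}_f1"] = safe_div(2 * precision * recall, precision + recall)
    for name in ("precision", "recall", "f1"):
        out[f"macro_{name}"] = mean([out[f"{c}_{name}"] for c in CLASSES])
    return out


def average_over_folds(folds: List[Confusion]) -> Dict[str, float]:
    """Compute metrics per fold first, then take the unweighted mean across folds."""
    per_fold = [fold_metrics(cm) for cm in folds]
    return {key: mean([m[key] for m in per_fold]) for key in per_fold[0]}
```

Averaging per-fold metrics gives each batch equal weight regardless of how many images it contributes, which differs from pooling all predictions into a single confusion matrix.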

2.5.2. Model Explainability with Grad-CAM

To gain insight into the facial features underpinning classification decisions, we employed Gradient-weighted Class Activation Mapping (Grad-CAM) [44]. This technique generates saliency maps by leveraging gradient information to localise image regions most influential to the model’s prediction, thereby offering an interpretable link between decision outputs and biologically relevant features. Implementation was carried out using the torchcam library [45], allowing automated extraction and overlay of heatmaps on the corresponding input images.

Grad-CAM visualisation was applied systematically across all test batches. For each model, semantically informative intermediate layers were selected (e.g., features.7 for ConvNeXt and conv_proj for the ViT) to maximise spatial resolution and interpretability. Class-specific activation maps were generated for each prediction and superimposed on the original facial images to highlight stress-related regions. These heatmaps were archived and organised by stress category (LR vs. HR), enabling both within-class and cross-class comparisons and supporting qualitative validation of the quantitative results.
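The arithmetic behind a Grad-CAM heatmap can be illustrated on tiny hand-written arrays. This sketch is not the torchcam implementation used in the study: it assumes that the layer activations and the gradients of the class score with respect to them have already been extracted, and it represents them as nested lists (channels × height × width).

```python
from typing import List

Map2D = List[List[float]]


def grad_cam(activations: List[Map2D], gradients: List[Map2D]) -> Map2D:
    """Compute a Grad-CAM map from K activation maps and their K gradient maps.

    Each channel weight is the spatial mean of that channel's gradients; the
    map is the ReLU of the weighted sum of activations, scaled to [0, 1].
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global average pooling of the gradients.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    # Weighted sum over channels, followed by ReLU.
    cam = [
        [
            max(0.0, sum(w * a[i][j] for w, a in zip(weights, activations)))
            for j in range(width)
        ]
        for i in range(height)
    ]
    # Normalise so that the strongest location equals 1 (skipped if all zero).
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam
```

The resulting low-resolution map would then be upsampled to the input image size and overlaid as a heatmap; channels with negative weights suppress, rather than highlight, the regions where they are active.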

3. Results

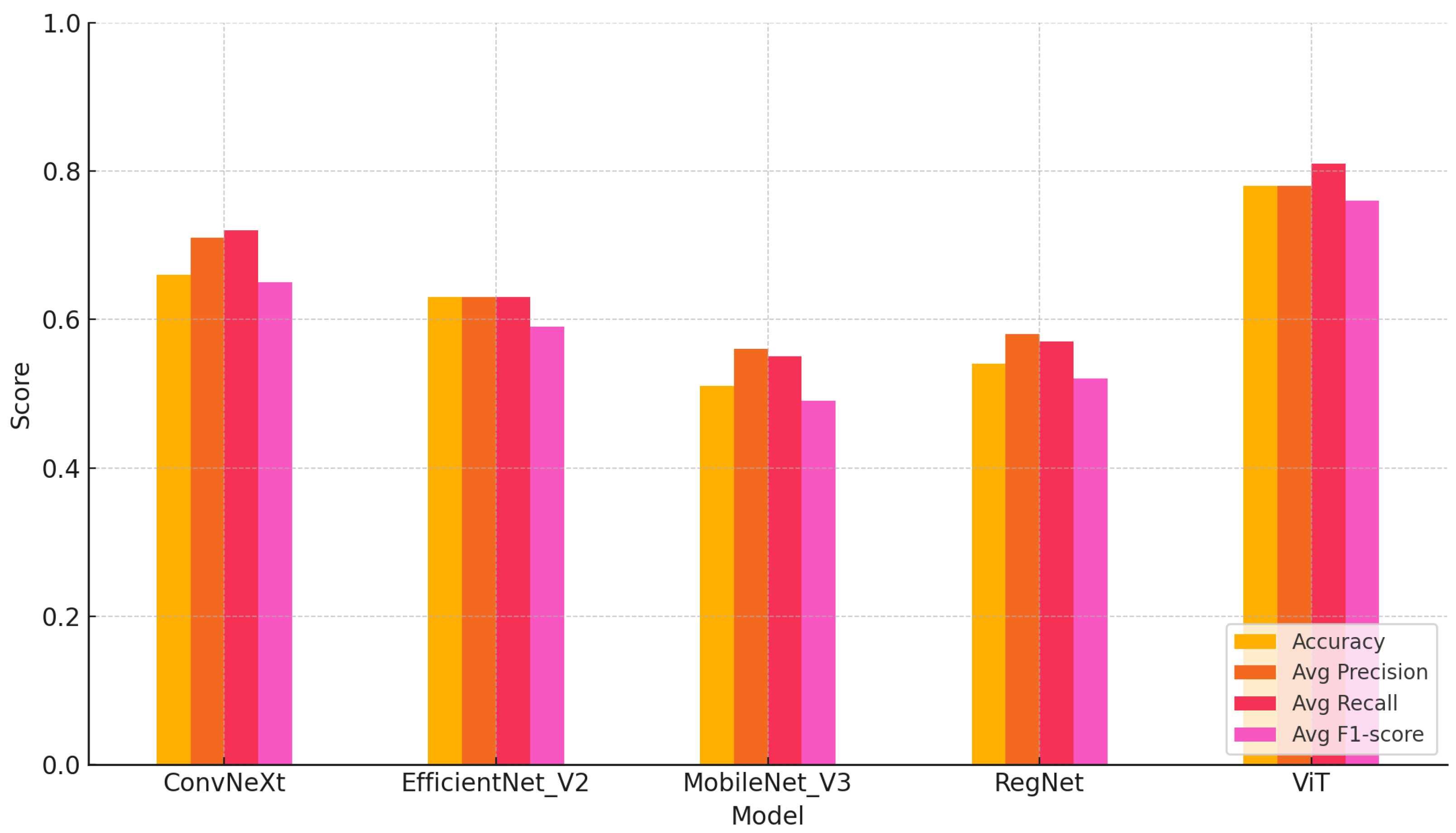

Across all six cross-generational daughter batches, the Vision Transformer (ViT) consistently outperformed all other models in terms of accuracy, class balance, and generalisation. As shown in the mean cross-batch rows of Table 3, the ViT achieved the highest overall accuracy of 0.78. For low-responder (LR) daughters, it reached 0.88 precision, 0.70 recall, and a 0.76 F1-score; for high-responder (HR) daughters, it attained 0.69 precision, 0.92 recall, and a 0.76 F1-score. These results reflect strong overall classification capability and robust generalisation across biologically independent LR and HR cohorts, demonstrating the ViT’s ability to capture phenotypic features that transfer effectively from mothers to daughters. ConvNeXt ranked second with a mean accuracy of 0.66, followed by EfficientNet_V2 at 0.63, RegNet at 0.54, and MobileNet_V3 at 0.51. The lightweight models, MobileNet_V3 and RegNet, exhibited greater variability and weaker discrimination, particularly in the more biologically complex batches.

Building on the overall performance trends, the mean metrics across all six daughter batches provide a clear comparison of model behaviour. A key differentiator among the models lies in the average precision, recall, and F1-score values reported in the last three columns of Table 3. The ViT achieved the highest mean averages, with 0.78 precision, 0.81 recall, and a 0.76 F1-score, reflecting its ability to classify LR and HR daughters consistently with minimal bias. ConvNeXt followed with 0.71 precision, 0.72 recall, and a 0.65 F1-score, while EfficientNet_V2 scored 0.63 for both precision and recall, with an F1-score of 0.59. RegNet and MobileNet_V3 recorded the lowest performance across all three indicators, reinforcing their limited discriminative capacity in cross-generational evaluations.

A summary comparison across models (average accuracy, macro-precision, macro-recall, and macro-F1 over six daughter batches) is shown in Figure 3, with full per-batch metrics provided in Table 3. The ViT maintained superior accuracy and balanced recall across the LR and HR classes, whereas the CNN-based models showed greater sensitivity to batch shifts and occasional bias toward HR predictions. Collectively, these results position the ViT as the most reliable and generalisable architecture for automated cross-generational stress phenotype classification in precision livestock systems.

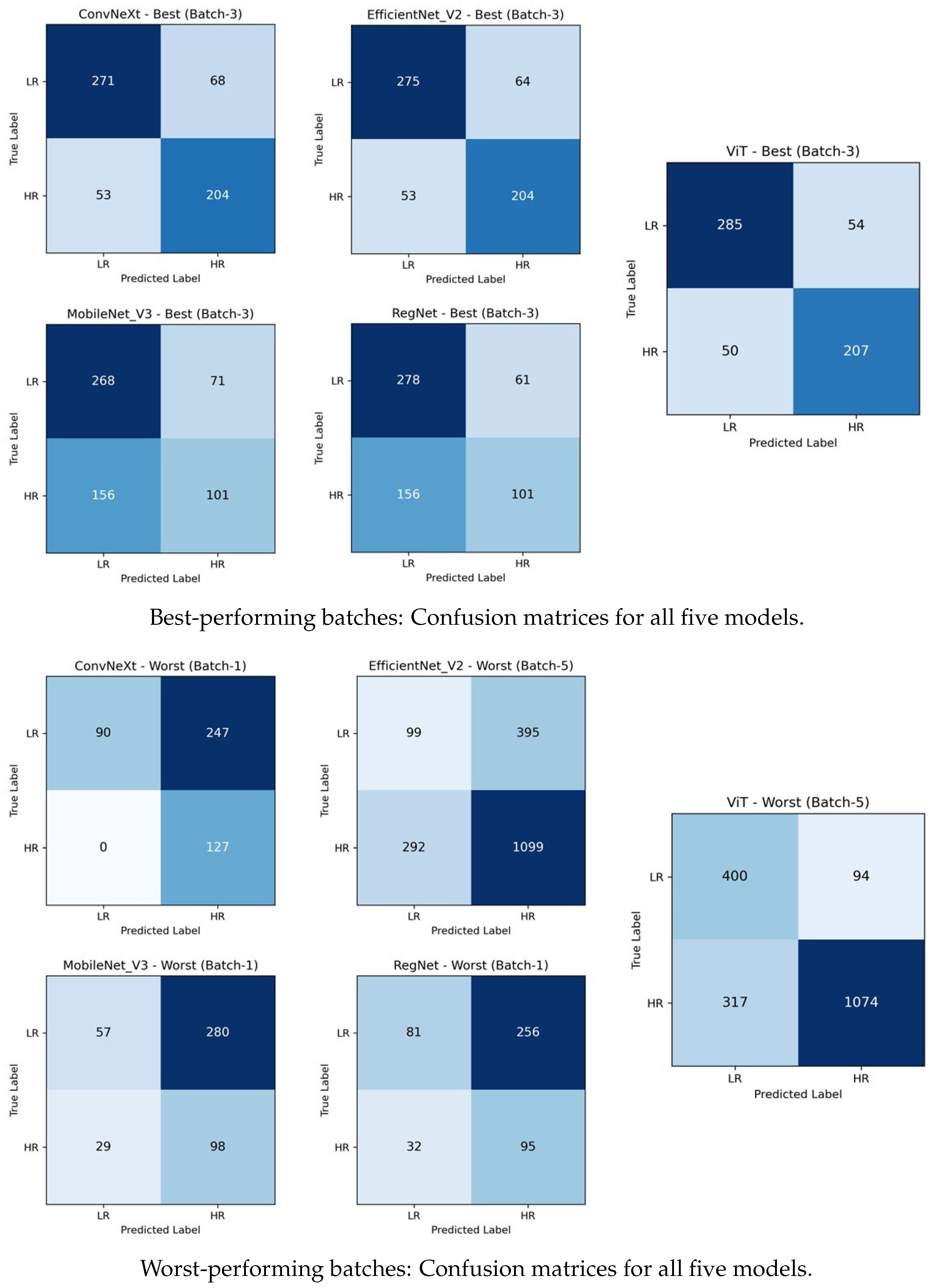

Building on this overall trend, the batch-wise analysis provides insight into how performance varied across daughter cohorts, as shown in Table 3. Batch 1 produced the lowest overall results, revealing that daughter data posed a significant generalisation challenge for CNN-based models. The ViT achieved 0.75 accuracy and a 0.74 average F1-score, clearly outperforming all other models. ConvNeXt, MobileNet_V3, and RegNet struggled to detect LR samples, with accuracies of 0.47, 0.33, and 0.38, respectively. Confusion matrices (Figure 4, worst-performing batches) show that large numbers of LR samples were misclassified as HR in batch 1. ConvNeXt misclassified 247 LR samples, MobileNet_V3 misclassified 280, and RegNet misclassified 256, resulting in LR recalls of 0.27, 0.17, and 0.24, respectively. These results suggest that the stress phenotype captured from mothers did not fully generalise to daughters in this batch, particularly for LR samples.

Batch 2 showed moderate improvement, with model accuracies ranging from 0.47 to 0.71 and average F1-scores from 0.47 to 0.70 (Table 3). Batch 3 marked a substantial performance increase for all models, indicating that the phenotypic expressions in daughters more closely aligned with the parent-trained models. Confusion matrices in Figure 4 illustrate that misclassifications of low-responder (LR) samples decreased substantially in the best-performing case (batch 3) across all five models. The ViT achieved 0.83 accuracy and 0.83 average F1, correctly classifying 285 LR and 207 HR samples and reflecting its superior generalisation capability. ConvNeXt and EfficientNet_V2 both reached 0.80 accuracy, correctly identifying 271 LR and 204 HR samples and 275 LR and 204 HR samples, respectively. MobileNet_V3 and RegNet improved to 0.62 and 0.64 in terms of accuracy, with average F1-scores of 0.59 and 0.60. MobileNet_V3 achieved 0.79 LR recall, correctly classifying 268 LR and 101 HR samples, while RegNet achieved a slightly higher LR recall of 0.82, correctly identifying 278 LR and 101 HR samples with more balanced predictions.

Batch 4 represented another high-performance stage, with the ViT peaking at 0.85 accuracy and a 0.85 average F1-score, demonstrating strong cross-generational generalisation (Table 3). Batch 5 introduced greater variability and a decline in LR recognition for some models. The ViT and ConvNeXt remained comparatively robust (0.78 and 0.70 accuracy scores, respectively), but EfficientNet_V2’s LR detection capability deteriorated, yielding 0.64 accuracy and 0.49 average F1, as evident in its worst-performing confusion matrix (batch 5) in Figure 4. The ViT’s confusion matrix also showed a rise in LR-to-HR misclassifications, though it retained the batch lead. MobileNet_V3 reached its minimum performance (0.43 accuracy and 0.41 average F1), while RegNet (0.58 accuracy and 0.54 average F1) was slightly stronger but still limited in terms of class separation. These results suggest that the cross-generational LR feature transfer was weakest in this batch. Batch 6 showed a recovery for CNN-based models, with all architectures reaching their highest or near-highest accuracies, as shown in Table 3.

4. Discussion

The evaluation of parent-trained models on daughter cohorts highlights the challenges of cross-generational stress phenotype recognition. While the Vision Transformer (ViT) consistently achieved superior performance, CNN-based models exhibited moderate accuracy with greater sensitivity to class imbalances and cohort variability, and lightweight models (MobileNet_V3 and RegNet) were least robust overall. These differences suggest that attention-based models are better suited to capture complex and spatially distributed stress cues, whereas CNNs and lightweight architectures face trade-offs between efficiency and generalisation. These observations align with previous work showing that deep learning and facial expression analysis can reveal stress- or emotion-related traits in pigs and other livestock species [21,23,24,45].

As summarised in Table 3, the ViT not only achieved the highest mean accuracy but also the most balanced precision–recall performance across low-responder (LR) and high-responder (HR) daughters. This supports the potential of transformer models for precision livestock applications where phenotypic variability is expected. Importantly, variation across daughter cohorts may reflect biologically meaningful differences, including subtle morphological variation, environmental exposure, and stress adaptation mechanisms, rather than purely technical noise.

To further interpret these model behaviours, Grad-CAM visualisations (Table 4) provide insights into the regions of attention that drive predictions for both LR and HR daughters. The ViT consistently focused on biologically informative facial regions such as the eyes, snout, and ears, which are known to reflect subtle expressions of stress. This pattern of region-specific attention is consistent with earlier studies identifying the same facial areas as informative indicators of emotional or stress states in pigs [19,20,21], and it likely contributed to the ViT’s robust LR detection performance and balanced HR recognition across batches. In contrast, ConvNeXt and EfficientNet_V2 often exhibited broader or diffuse activation patterns, sometimes emphasising background regions or non-informative facial areas, which may explain their higher rates of LR-to-HR misclassification. MobileNet_V3 and RegNet occasionally highlighted inconsistent regions across the face, reflecting their limited capacity to capture stable phenotypic cues.

These observations suggest that the reliability of stress classification in daughter sows is tied to the model’s ability to focus on biologically relevant regions that reflect stress phenotypes. Facial regions such as the eyes, snout, and ears are known to exhibit subtle changes in tension, shape, and thermal profile during stress, reflecting autonomic and muscular responses associated with negative affect. When the model’s attention aligns with these biologically informative areas, it is more likely to capture genuine stress-related variation rather than visual noise. Attention-based architectures such as the ViT not only achieve higher quantitative performance but also provide more interpretable activation maps that align with expected facial stress indicators. This alignment is critical for practical deployment in precision livestock systems, where explainable predictions are essential for trust and adoption.

The use of batch-wise evaluation offered valuable insight into the variability of stress response phenotypes across lineages. Differences in model performance between daughter batches suggest that stress traits may manifest more strongly in some cohorts than others, possibly due to environmental influences or underlying genetic variability. This highlights the importance of evaluating generalisation across biologically diverse groups when developing predictive tools for livestock systems.

Overall, the cross-generational evaluation indicates that attention-based models are better suited to recognising subtle facial patterns associated with stress and to detecting how these patterns are associated between mothers and offspring. CNN-based models may benefit from feature refinement, domain adaptation, or hybrid attention mechanisms to mitigate batch sensitivity and improve their robustness in biologically diverse herds. Taken together, the results provide evidence that the model is detecting stress-related phenotypes that are genuinely associated between generations rather than incidental. Notably, under the cross-generational validation protocol, where no daughter was evaluated using a model trained on her own mother, the Vision Transformer (ViT) achieved a mean accuracy of 0.78 and an average F1-score of 0.76 across six daughter batches. These findings indicate that the model is not merely recognising visual resemblance or identity but instead capturing stable, generalisable facial cues associated with stress susceptibility.

For practical implementation in commercial settings, the proposed model could be integrated into existing farm camera systems, provided that consistent front-facing image capture is maintained. Stable lighting and minimal obstructions are important for accurate face segmentation. Lightweight transformer architectures or edge-optimised models could enable on-farm inference using embedded processors or cloud-connected systems. Integration with existing precision-livestock data streams (e.g., feeding or activity records) could enhance early detection of stress-prone animals, providing farmers with actionable welfare indicators in real time.

5. Conclusions

This study evaluated the cross-generational performance of deep learning models trained on parent sows and tested on their daughters for automated stress response phenotype classification. The results show that models trained on maternal facial images can predict stress responses in daughters, even when the daughters’ mothers were not included in training. This indicates that the models are detecting facial markers associated with stress responses rather than simply relying on familial facial resemblance. Some variability across daughter batches was observed, which may reflect biological diversity or environmental factors affecting stress expression.

Among the evaluated architectures, the Vision Transformer (ViT) consistently achieved the highest accuracy and F1-scores across all six daughter batches, demonstrating superior generalisation, balanced class detection, and alignment with biologically meaningful facial regions, as supported by Grad-CAM visualisations. ConvNeXt and EfficientNet_V2 formed a stable middle tier, showing moderate performance and improved accuracy in later batches, while lightweight models such as MobileNet_V3 and RegNet exhibited the greatest variability and the lowest discriminative capability.

These findings highlight two key insights: First, attention-based architectures are more effective for capturing subtle facial stress cues that are associated between parents and offspring, reducing bias toward high-stress classifications and improving interpretability. Second, batch-wise variability underscores the importance of accounting for generational and environmental differences when designing and deploying precision livestock monitoring systems. For practical use, this framework could be integrated into existing camera systems in feeding or inspection areas, provided that lighting and facial visibility are consistent. Lightweight transformer variants could run on local processors or cloud platforms to allow near-real-time welfare monitoring. Combining these outputs with farm management data such as feeding or activity records would support early detection of stress-prone animals and inform welfare-focused breeding decisions.

Importantly, the ability to detect stress phenotypes has direct implications for antimicrobial use (AMU) and antimicrobial resistance (AMR). Chronic stress is a key driver of disease susceptibility, which often leads to increased AMU in intensive farming. Early identification of stress-prone lineages through automated facial analysis could enable proactive welfare interventions and targeted breeding strategies, ultimately reducing the need for antimicrobial treatments and contributing to AMR mitigation.

Future work should explore strategies to enhance cross-cohort generalisation, including domain adaptation, temporal modelling of stress indicators, and hybrid CNN–transformer architectures. By integrating these approaches, automated stress recognition pipelines can become more robust, interpretable, and scalable for real-world livestock management, supporting welfare-driven and AMR-conscious farming practices.

Author Contributions

Conceptualisation, S.U.Y., A.S., E.M.B., M.F.H., M.L.S. and L.N.S.; methodology, S.U.Y. and A.S.; software, S.U.Y., A.S. and M.F.H.; validation, S.U.Y., A.S., E.M.B. and M.F.H.; formal analysis, S.U.Y., A.S., E.M.B. and M.F.H.; investigation, S.U.Y. and A.S.; resources, S.U.Y. and E.M.B.; data curation, S.U.Y., E.M.B. and K.M.D.R.; writing—original draft preparation, S.U.Y.; writing—review and editing, S.U.Y., A.S., E.M.B., K.M.D.R., M.F.H., M.L.S. and L.N.S.; visualisation, S.U.Y., A.S., E.M.B. and M.F.H.; supervision, S.U.Y., A.S. and M.F.H.; project administration, S.U.Y., A.S., E.M.B., M.F.H. and M.L.S.; funding acquisition, M.L.S. and L.N.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Joint Programming Initiative on Antimicrobial Resistance (JPIAMR) under the FARM-CARE project, ‘FARM interventions to Control Antimicrobial ResistancE–Full Stage’ (Project ID: 7429446), and by the Medical Research Council (MRC), UK (Grant Number: MR/W031264/1).

Institutional Review Board Statement

Ethical approval for this collaborative research was obtained from the Animal Welfare and Ethical Review Bodies at both SRUC (Ref: PIG AE14-2022, approved on 1 March 2022) and UWE Bristol (Ref: R209, approved on 23 March 2022).

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

The authors gratefully acknowledge the support of the Joint Programming Initiative on Antimicrobial Resistance (JPIAMR) and the Medical Research Council (MRC), UK. We also thank the collaborative partners of the FARM-CARE project: the University of the West of England, Scotland’s Rural College, the University of Copenhagen, Teagasc, University Hospital Bonn, Statens Serum Institut (SSI), and the Porkcolombia Association. We are particularly grateful to the farm and technical staff at SRUC’s Pig Research Centre for their invaluable assistance with data collection. During the preparation of this manuscript, the authors used ChatGPT-4 for language editing and polishing (grammar, style, and readability). The authors have reviewed and edited the content generated by the tool and take full responsibility for the final version of this publication.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Martínez-Miró, S.; Tecles, F.; Ramón, M.; Escribano, D.; Hernández, F.; Madrid, J.; Orengo, J.; Martínez-Subiela, S.; Manteca, X.; Cerón, J. Causes, Consequences and Biomarkers of Stress in Swine: An Update. BMC Vet. Res. 2016, 12, 171. [Google Scholar] [CrossRef]

- Albernaz-Gonçalves, R.; Olmos Antillón, G.; Hötzel, M. Linking Animal Welfare and Antibiotic Use in Pig Farming—A Review. Animals 2022, 12, 216. [Google Scholar] [CrossRef]

- Koolhaas, J. Coping Style and Immunity in Animals: Making Sense of Individual Variation. Brain Behav. Immun. 2008, 22, 662–667. [Google Scholar] [CrossRef] [PubMed]

- Kanitz, E.; Tuchscherer, M.; Otten, W.; Tuchscherer, A.; Zebunke, M.; Puppe, B. Coping Style of Pigs Is Associated with Different Behavioral, Neurobiological and Immune Responses to Stressful Challenges. Front. Behav. Neurosci. 2019, 13, 173. [Google Scholar] [CrossRef] [PubMed]

- Sinclair, K.D.; Rutherford, K.M.D.; Wallace, J.M.; Brameld, J.M.; Stöger, R.; Alberio, R.; Sweetman, D.; Gardner, D.S.; Perry, V.E.A.; Adam, C.L.; et al. Epigenetics and developmental programming of welfare and production traits in farm animals. Reprod. Fertil. Dev. 2016, 28, 1443–1478. [Google Scholar] [CrossRef] [PubMed]

- Merlot, E.; Couret, D.; Otten, W. Prenatal Stress, Fetal Imprinting and Immunity. Brain Behav. Immun. 2008, 22, 42–51. [Google Scholar] [CrossRef]

- Couret, D.; Jamin, A.; Simon, G.; Prunier, A.; Merlot, E. Maternal Stress During Late Gestation Has Moderate but Long-Lasting Effects on the Immune System of the Piglets. Vet. Immunol. Immunopathol. 2009, 131, 17–24. [Google Scholar] [CrossRef]

- Rutherford, K.; Robson, S.; Donald, R.; Jarvis, S.; Sandercock, D.; Scott, E.; Nolan, A.; Lawrence, A. Prenatal Stress Amplifies the Immediate Behavioural Responses to Acute Pain in Piglets. Biol. Lett. 2009, 5, 452–454. [Google Scholar] [CrossRef]

- Jarvis, S.; Moinard, C.; Robson, S.; Baxter, E.; Ormandy, E.; Douglas, A.; Seckl, J.; Russell, J.; Lawrence, A. Programming the Offspring of the Pig by Prenatal Social Stress: Neuroendocrine Activity and Behaviour. Horm. Behav. 2006, 49, 68–80. [Google Scholar] [CrossRef]

- Rutherford, K.M.D.; Piastowska, A.; Donald, R.D.; Robson, S.K.; Ison, S.H.; Jarvis, S.; Brunton, P.; Russell, J.A.; Lawrence, A.B. Prenatal Stress Produces Anxiety-Prone Female Offspring and Impaired Maternal Behaviour in the Domestic Pig. Physiol. Behav. 2014, 129, 255–264. [Google Scholar] [CrossRef]

- Rooney, H.; Schmitt, O.; Courty, A.; Lawlor, P.; O’Driscoll, K. Like Mother Like Child: Do Fearful Sows Have Fearful Piglets? Animals 2021, 11, 1232. [Google Scholar] [CrossRef]

- Kranendonk, G.; Van der Mheen, H.; Fillerup, M.; Hopster, H. Social rank of pregnant sows affects their body weight gain and behavior and performance of the offspring. J. Anim. Sci. 2007, 85, 420–429. [Google Scholar] [CrossRef]

- Palme, R. Non-Invasive Measurement of Glucocorticoids: Advances and Problems. Physiol. Behav. 2019, 199, 229–243. [Google Scholar] [CrossRef] [PubMed]

- Plut, J.; Snoj, T.; Golinar Oven, I.; Štukelj, M. The Combination of Serum and Oral Fluid Cortisol Levels and Welfare Quality Protocol® for Assessment of Pig Welfare on Intensive Farms. Agriculture 2023, 13, 351. [Google Scholar] [CrossRef]

- Murphy, E.; Nordquist, R.; van der Staay, F. A Review of Behavioural Methods to Study Emotion and Mood in Pigs, Sus scrofa. Appl. Anim. Behav. Sci. 2014, 159, 9–28. [Google Scholar] [CrossRef]

- Murphy, E.; Melotti, L.; Mendl, M. Assessing Emotions in Pigs: Determining Negative and Positive Mental States. In Understanding the Behaviour and Improving the Welfare of Pigs; Burleigh Dodds Science Publishing: Cambridge, UK, 2021; pp. 455–496. [Google Scholar]

- Zebunke, M.; Puppe, B.; Langbein, J. Effects of Cognitive Enrichment on Behavioural and Physiological Reactions of Pigs. Physiol. Behav. 2013, 118, 70–79. [Google Scholar] [CrossRef] [PubMed]

- Langford, D.J.; Bailey, A.L.; Chanda, M.L.; Clarke, S.E.; Drummond, T.E.; Echols, S.; Glick, S.; Ingrao, J.; Klassen-Ross, T.; LaCroix-Fralish, M.L.; et al. Coding of facial expressions of pain in the laboratory mouse. Nat. Methods 2010, 7, 447–449. [Google Scholar] [CrossRef]

- Descovich, K.A.; Wathan, J.; Leach, M.C.; Buchanan-Smith, H.M.; Flecknell, P.; Farningham, D.; Vick, S. Facial Expression: An Under-Utilised Tool for the Assessment of Welfare in Mammals. ALTEX 2017, 34, 409–429. [Google Scholar] [CrossRef] [PubMed]

- Viscardi, A.; D’Eath, R.; Dalla Costa, E.; Contiero, B.; Cozzi, M.; Minero, M.; Canali, E.; Edwards, S. Development of a Piglet Grimace Scale to Evaluate Piglet Pain Using Facial Expressions Following Castration and Tail Docking: A Pilot Study. Front. Vet. Sci. 2017, 4, 51. [Google Scholar] [CrossRef]

- Camerlink, I.; Coulange, E.; Farish, M.; Baxter, E.M.; Turner, S.P. Facial Expression as a Potential Measure of Both Intent and Emotion in Pigs. Sci. Rep. 2018, 8, 17602. [Google Scholar] [CrossRef]

- Di Giminiani, P.; Brierley, V.; Scollo, A.; Gottardo, F.; Malcolm, E.; Edwards, S.; Leach, M.C. The Assessment of Facial Expressions in Piglets Undergoing Tail Docking and Castration: Toward the Development of the Piglet Grimace Scale. Front. Vet. Sci. 2016, 3, 100. [Google Scholar] [CrossRef]

- Hansen, M.; Baxter, E.; Rutherford, K.; Futro, A.; Smith, M.; Smith, L. Towards Facial Expression Recognition for On-Farm Welfare Assessment in Pigs. Agriculture 2021, 11, 847. [Google Scholar] [CrossRef]

- Nie, L.; Li, B.; Du, Y.; Jiao, F.; Song, X.; Liu, Z. Deep Learning Strategies with CReToNeXt-YOLOv5 for Advanced Pig Face Emotion Detection. Sci. Rep. 2024, 14, 1679. [Google Scholar] [CrossRef] [PubMed]

- Caneschi, A.; Bardhi, A.; Barbarossa, A.; Zaghini, A. The Use of Antibiotics and Antimicrobial Resistance in Veterinary Medicine, a Complex Phenomenon: A Narrative Review. Antibiotics 2023, 12, 487. [Google Scholar] [CrossRef] [PubMed]

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. arXiv 2022, arXiv:2201.03545. [Google Scholar] [CrossRef]

- Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. arXiv 2021, arXiv:2104.00298. [Google Scholar] [CrossRef]

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324. [Google Scholar] [CrossRef]

- Radosavovic, I.; Kosaraju, R.P.; Girshick, R.; He, K.; Dollár, P. Designing Network Design Spaces. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10428–10436. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929. [Google Scholar] [CrossRef]

- Ison, S.; D’Eath, R.; Robson, S.; Baxter, E.; Ormandy, E.; Douglas, A.; Russell, J.; Lawrence, A.; Jarvis, S. ‘Subordination style’ in pigs? The response of pregnant sows to mixing stress affects their offspring’s behaviour and stress reactivity. Appl. Anim. Behav. Sci. 2010, 124, 16–27. [Google Scholar] [CrossRef]

- GoPro Inc. GoPro HERO11 Black-Technical Specifications; GoPro Inc.: San Mateo, CA, USA, 2023; Available online: https://gopro.com/en/gb/shop/cameras/hero11-black/CHDHX-111-master.html (accessed on 29 July 2025).

- Anker Innovations Ltd. Anker PowerCore 26K Model A1277; Anker Innovations Ltd.: Changsha, China, 2023; Available online: https://www.anker.com/uk/products/a1277 (accessed on 29 July 2025).

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment Anything. arXiv 2023, arXiv:2304.02643. [Google Scholar] [CrossRef]

- Ultralytics. YOLOv8-Ultralytics YOLO Object Detection. 2025. Available online: https://github.com/ultralytics/ultralytics (accessed on 29 July 2025).

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar] [CrossRef]

- Ahmed, M.; Seraj, R.; Islam, S. The K-Means Algorithm: A Comprehensive Survey and Performance Evaluation. Electronics 2020, 9, 1295. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates: Red Hook, NY, USA, 2012; Volume 25, pp. 1097–1105. Available online: https://papers.nips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf (accessed on 29 July 2025).

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A Comprehensive Survey on Transfer Learning. Proc. IEEE 2021, 109, 43–76. [Google Scholar] [CrossRef]

- Arlot, S.; Celisse, A. A Survey of Cross-Validation Procedures for Model Selection. Stat. Surv. 2010, 4, 40–79. [Google Scholar] [CrossRef]

- Berrar, D. Cross-Validation. In Encyclopedia of Bioinformatics and Computational Biology; Academic Press: Cambridge, MA, USA, 2019; pp. 542–545. [Google Scholar] [CrossRef]

- Powers, D.M.W. Evaluation: From Precision, Recall and F-Measure to ROC, Informedness, Markedness and Correlation. arXiv 2020, arXiv:2010.16061. [Google Scholar] [CrossRef]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar] [CrossRef]

- Fernandez, F. TorchCAM: Class Activation Explorer for PyTorch. 2025. Available online: https://github.com/frgfm/torch-cam (accessed on 29 July 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).