A Crawling Review of Fruit Tree Image Segmentation

Abstract

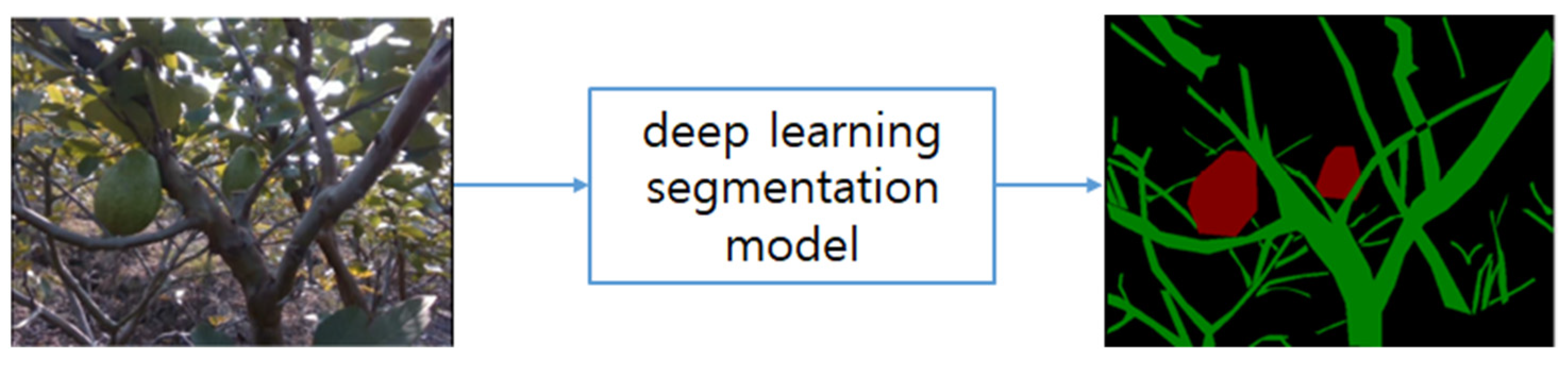

1. Introduction

- Focus on frontal-view image segmentation in orchard environments: Unlike most existing reviews, which concentrate on top-view images captured by UAVs or satellites, this review specifically targets frontal-view images of fruit trees.

- Coverage of recent literature: The review includes an extensive collection of papers published up to the end of 2025, ensuring that the most recent advancements are captured.

- Introduction of a new review methodology: A review approach termed crawling review is proposed and applied to make the literature search more comprehensive and systematic.

- Proposal of a four-tier taxonomy: A hierarchical taxonomy is developed and used to classify the reviewed studies across four levels—methodology, image type, agricultural task, and fruit type—providing a structured and insightful overview of the field.
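The four tiers named above can be pictured as four labels attached to each reviewed study. The following is a minimal sketch, assuming a flat record per paper; the class names, the `count_by_tiers` helper, and the three placeholder entries are hypothetical and are not taken from the review's tables. The enum values mirror the section headings of this paper (rule-based versus DL; RGB, RGB-D, point cloud, others).

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Methodology(Enum):
    RULE_BASED = "rule-based"
    DEEP_LEARNING = "deep learning"


class ImageType(Enum):
    RGB = "RGB"
    RGB_D = "RGB-D"
    POINT_CLOUD = "point cloud"
    OTHERS = "others"


@dataclass(frozen=True)
class ReviewedStudy:
    """One reviewed paper, labeled along the four taxonomy tiers."""
    label: str                 # hypothetical free-text identifier
    methodology: Methodology   # tier 1
    image_type: ImageType      # tier 2
    task: str                  # tier 3, e.g. "pruning"
    fruit: str                 # tier 4, e.g. "apple"


def count_by_tiers(studies):
    """Count studies per (methodology, image type, task) cell."""
    return Counter((s.methodology, s.image_type, s.task) for s in studies)


# Placeholder entries for illustration only; not real entries from the review.
studies = [
    ReviewedStudy("study A", Methodology.RULE_BASED, ImageType.RGB, "harvesting", "apple"),
    ReviewedStudy("study B", Methodology.DEEP_LEARNING, ImageType.RGB_D, "pruning", "grape"),
    ReviewedStudy("study C", Methodology.DEEP_LEARNING, ImageType.RGB_D, "pruning", "apple"),
]
counts = count_by_tiers(studies)
```

Grouping on the first three tiers reproduces the kind of cell that a subsection such as "4.2.4. Pruning" represents, with the fruit type left as the finest-grained label inside each cell.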

2. Review Scope, Method, and Statistics

2.1. Review Scope

2.2. Literature Search and Taxonomy

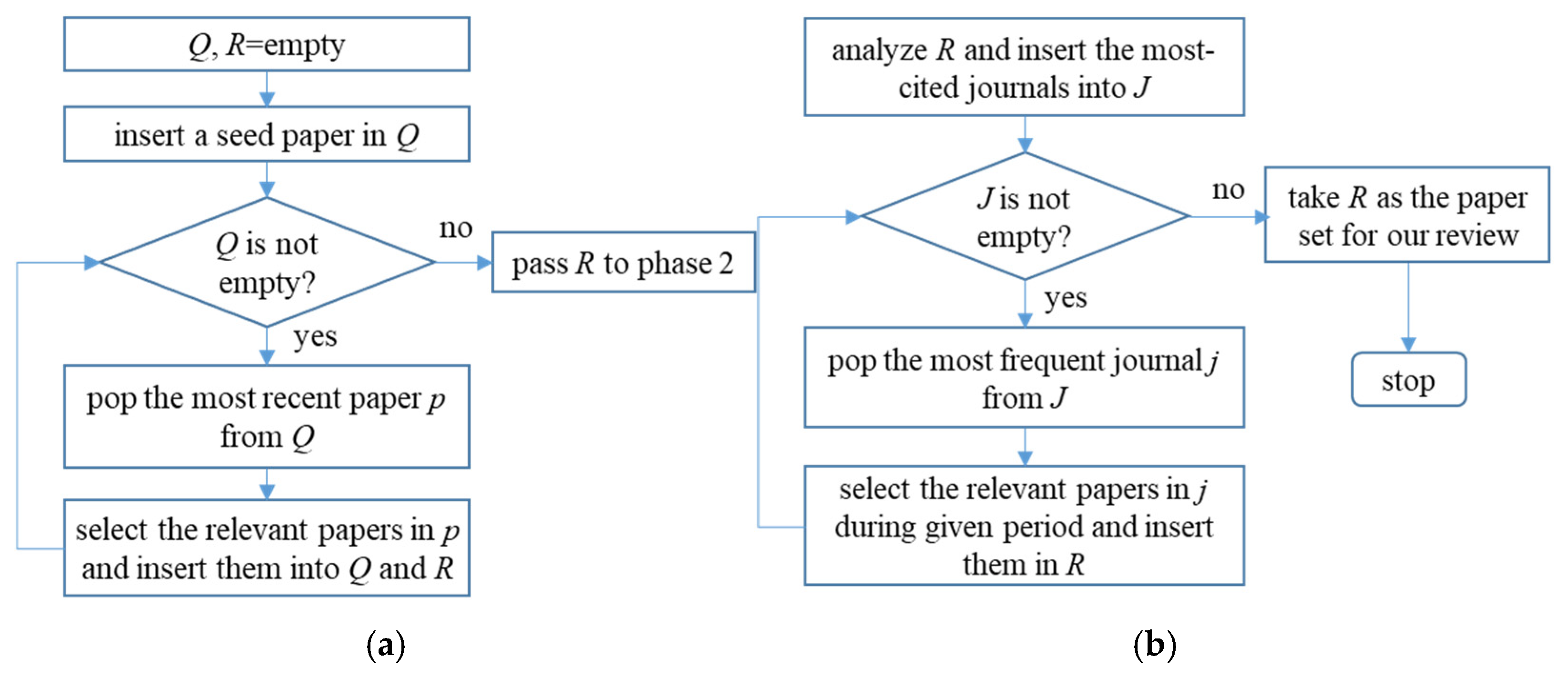

2.2.1. Crawling Review

2.2.2. Four-Tier Taxonomy

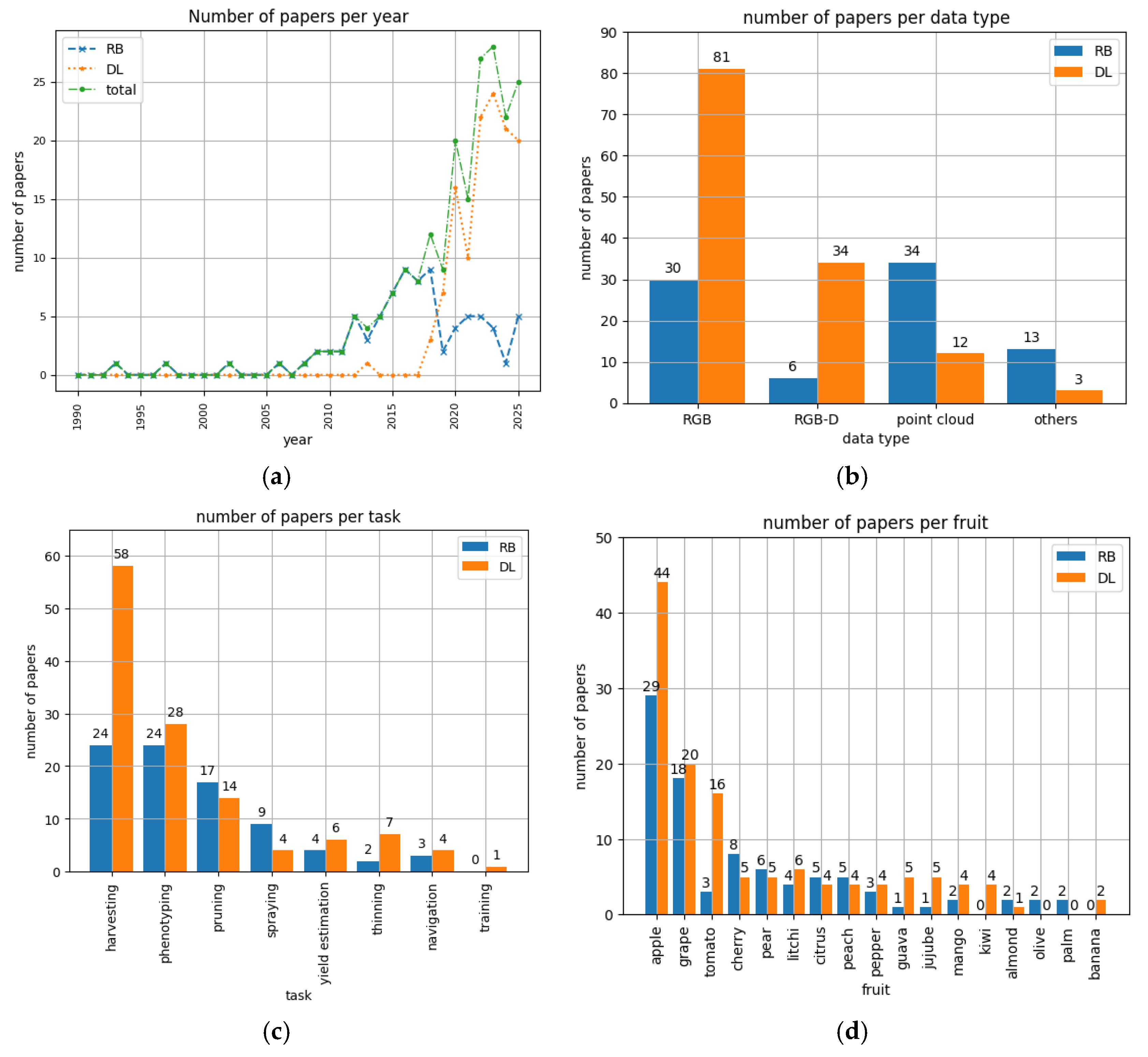

2.3. Statistics

3. Rule-Based Algorithms for Tree Image Segmentation

3.1. RGB

3.1.1. Phenotyping

3.1.2. Harvesting

3.1.3. Spraying

3.1.4. Pruning

3.1.5. Yield Estimation

3.1.6. Navigation

3.1.7. Thinning

3.2. RGB-D

3.2.1. Phenotyping

3.2.2. Harvesting

3.2.3. Spraying

3.2.4. Navigation

3.3. Point Cloud

3.3.1. Phenotyping

3.3.2. Harvesting

3.3.3. Spraying

3.3.4. Pruning

3.3.5. Yield Estimation

3.3.6. Navigation

3.3.7. Thinning

3.4. Others

3.4.1. Phenotyping

3.4.2. Harvesting

3.4.3. Pruning

4. DL Algorithms for Tree Image Segmentation

4.1. RGB

4.1.1. Phenotyping

4.1.2. Harvesting

4.1.3. Spraying

4.1.4. Pruning

4.1.5. Yield Estimation

4.1.6. Navigation

4.1.7. Thinning

4.2. RGB-D

4.2.1. Phenotyping

4.2.2. Harvesting

4.2.3. Spraying

4.2.4. Pruning

4.2.5. Training

4.2.6. Navigation

4.2.7. Thinning

4.3. Point Cloud

4.3.1. Phenotyping

4.3.2. Harvesting

4.3.3. Pruning

4.4. Others

4.4.1. Phenotyping

4.4.2. Yield Estimation

5. Discussion and Future Work

5.1. Sensors

5.2. Methods

5.3. Datasets

5.4. Generalizability

5.5. Future Work

- Table A2 in Appendix A.3 presents 11 public datasets related to tree image segmentation. Except for the last dataset, each one is highly specific to a given task. Constructing large, high-quality, versatile datasets is therefore a critical element of future tree segmentation research. Such datasets can serve as de facto standards that enable the objective comparison of newly developed segmentation models, and they can also motivate challenge competitions.

- If a foundation model is developed for agriculture, it could be applied to a wide range of tasks and conditions, either directly or with minimal fine-tuning [226]. Fine-tuning such a model would enhance its adaptability across diverse agricultural scenarios, including variations in season, soil type, and tree growth patterns. Domain-specific constraints can be exploited when developing a foundation model for tree segmentation; for example, the objects in the image are confined to tree components such as the fruit, flowers, branches, leaves, and trunk. These constraints should make development easier than for unconstrained natural scenes.

- Fusing a CNN and a transformer has been shown to extract more robust features [227]. Medical imaging is actively adopting fused models to identify thin vessels and small tissues [228]. Agricultural tasks could benefit from fused models to improve segmentation accuracy, especially for small fruits, thin branches, and highly occluded fruits or flowers.

- Monocular depth estimation infers a depth map from a single RGB image or video. For natural images, with applications in augmented reality and autonomous driving, many excellent methods are available [229]. However, few papers address the agriculture domain. Cui et al. produced a vineyard depth map from an RGB video using U-Net, which is a good starting point for research in the agriculture domain [230]. The resulting maps could be used for tasks such as robot path planning and visual servoing.

- The 3D reconstruction of a row of trees could be implemented by combining DL techniques. For example, combining panoramic imaging with monocular depth estimation from an RGB video is expected to generate an accurate 3D model of a tree row.

- In the agriculture domain, the adoption of few-shot learning and self-supervised learning is still at an early stage [104,231,232]. These strategies are expected to be effective in overcoming the obstacles caused by the inherently large variations in agricultural tasks and environments, and to promote more versatile systems. It is highly recommended that a model be pre-trained on a large, general agricultural dataset and then fine-tuned with few-shot learning to handle the downstream segmentation problems of specific tasks.
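To illustrate how an estimated depth map could feed 3D reconstruction or path planning, as suggested in the items on monocular depth estimation and tree-row reconstruction, the sketch below back-projects a depth map into a point cloud with the standard pinhole camera model. It assumes metric depth and known intrinsics (`fx`, `fy`, `cx`, `cy`); both are assumptions, since monocular methods often produce only relative depth, and the function name `backproject_depth` is hypothetical rather than taken from any cited work.

```python
import numpy as np


def backproject_depth(depth, fx, fy, cx, cy):
    """Convert an (H, W) depth map in meters to an (N, 3) point cloud.

    Uses the pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    Pixels with non-positive or non-finite depth are dropped.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # column and row indices
    valid = np.isfinite(depth) & (depth > 0)
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


# Toy depth map: a flat surface 2 m away, with one invalid pixel.
depth = np.full((4, 6), 2.0)
depth[0, 0] = 0.0
points = backproject_depth(depth, fx=500.0, fy=500.0, cx=2.5, cy=1.5)
```

Point clouds obtained this way from successive video frames would still need to be registered to one another before they form a model of a whole tree row; that step is outside this sketch.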

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Basics of Image Segmentation and Performance Metrics

| Aspect | Rule-Based Methods | Deep Learning Methods |
|---|---|---|
| Feature | Hand-crafted features (e.g., color thresholds, edge detection, texture analysis, and clustering) and predefined rules based on agricultural domain knowledge | Automatic hierarchical feature learning from agricultural data through deep neural networks such as CNN and transformer |
| Training | No training phase; rules defined by agricultural experts | Training a deep neural network using large labeled datasets |
| Robustness | Poor in varying lighting, occlusions, and complex backgrounds; struggles with overlapping trees, shadows, and clutter | Strong; handles variations in lighting, scale, occlusions, and complex spatial relationships |
| Generalization | Poor; specific to designed agricultural scenarios and conditions | Excellent; transfers well across different agricultural tasks with fine-tuning |
| Advantage | Fast, interpretable, no training data required, works well in controlled settings | High accuracy, robust to variations, handles complex patterns, scalable, semantic |
| Limitation | Sensitive to parameter tuning; fails in unforeseen conditions; not semantic | Requires substantial computational resources; needs large datasets |
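As a concrete instance of the rule-based column, the sketch below segments vegetation with a single hand-tuned color rule, the excess-green index computed on normalized RGB, and scores the result with intersection over union (IoU), a commonly used segmentation metric. The threshold value, the toy image, and the function names are assumptions chosen for illustration; this is not a method taken from any reviewed paper.

```python
import numpy as np


def excess_green_mask(rgb, threshold=0.1):
    """Mark a pixel as vegetation when its excess-green index exceeds a threshold.

    rgb: (H, W, 3) uint8 array. threshold is a hand-tuned value (an assumption).
    """
    rgb = rgb.astype(np.float64)
    total = rgb.sum(axis=2)
    total[total == 0] = 1.0  # avoid division by zero on black pixels
    r, g, b = (rgb[..., i] / total for i in range(3))  # chromatic coordinates
    exg = 2.0 * g - r - b
    return exg > threshold


def iou(pred, target):
    """Intersection over union of two boolean masks."""
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks empty: treated here as a perfect match
    return np.logical_and(pred, target).sum() / union


# Toy 2 x 3 image: a row of green foliage-like pixels over brownish soil-like pixels.
image = np.zeros((2, 3, 3), dtype=np.uint8)
image[0, :] = (40, 180, 50)
image[1, :] = (120, 110, 100)
mask = excess_green_mask(image)

# Hypothetical ground truth in which one soil-colored pixel is actually tree.
truth = np.array([[True, True, True], [False, False, True]])
score = iou(mask, truth)
```

The missed pixel shows the limitation listed in the table: a fixed color rule has no notion of what the object is, so a tree part whose color falls outside the rule is lost regardless of context.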

Appendix A.1.1. Image Type

Appendix A.1.2. Rule-Based Methods

Appendix A.1.3. Deep Learning Methods

Appendix A.2. Agricultural Tasks Supported by Tree Segmentation

Appendix A.2.1. Agricultural Environments

Appendix A.2.2. Agricultural Tasks

Appendix A.3. Public Datasets

Appendix A.3.1. Apple

Appendix A.3.2. Grape

Appendix A.3.3. Tomato

Appendix A.3.4. Avocado

Appendix A.3.5. Capsicum Annuum (Pepper)

Appendix A.3.6. Street Trees

| Dataset | Fruit | Image Type | URL |
|---|---|---|---|
| WACV [94] | apple | depth | https://engineering.purdue.edu/RVL/WACV_Dataset (accessed on 23 October 2025) |
| Fuji-SfM [268] | apple | RGB | http://www.grap.udl.cat/en/publications/datasets.html (accessed on 23 October 2025) |
| NIHHS-JBNU [8] | apple | RGB | http://data.mendeley.com/datasets/t7jk2mspcy/1 (accessed on 23 October 2025) |
| Fruit Flower [15] | apple, peach, pear | RGB | https://doi.org/10.15482/USDA.ADC/1423466 (accessed on 23 October 2025) |
| Grapes-and-Leaves [117] | grape | RGB | https://github.com/humain-lab/Grapes-and-Leaves-dataset (accessed on 23 October 2025) |
| Stem [118] | grape | RGB | https://github.com/humain-lab/stem-dataset (accessed on 23 October 2025) |
| 3D2cut Single Guyot [156] | grape | RGB | https://www.idiap.ch/en/scientific-research/data/3d2cut (accessed on 23 October 2025) |
| Pheno4D [204] | tomato and maize | point cloud | https://www.ipb.uni-bonn.de/data/pheno4d/ (accessed on 23 October 2025) |
| Avocado [270] | avocado | point cloud | https://data.mendeley.com/datasets/d6k5v2rmyx/1 (accessed on 23 October 2025) |
| Capsicum Annuum Image (synthetic) [172] | Capsicum annuum | RGB-D | https://data.4tu.nl/articles/_/12706703/1 (accessed on 23 October 2025) |
| Urban Street Tree [271] | street trees (50 species) | RGB | https://ytt917251944.github.io/dataset_jekyll/ (accessed on 23 October 2025) |

References

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep Learning in Agriculture: A Survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Thakur, A.; Venu, S.; Gurusamy, M. An Extensive Review on Agricultural Robots with a Focus on Their Perception Systems. Comput. Electron. Agric. 2023, 212, 108146.

- Hua, W.; Zhang, Z.; Zhang, W.; Liu, X.; Hu, C.; He, Y.; Mhamed, M.; Li, X.; Dong, H.; Saha, C.K.; et al. Key Technologies in Apple Harvesting Robot for Standardized Orchards: A Comprehensive Review of Innovations, Challenges, and Future Directions. Comput. Electron. Agric. 2025, 235, 110343.

- Dhanya, V.G.; Subeesh, A.; Kushwaha, N.L.; Vishwakarma, D.K.; Kumar, T.N.; Ritika, G.; Singh, A.N. Deep Learning-Based Computer Vision Approaches for Smart Agricultural Applications. Artif. Intell. Agric. 2022, 6, 211–229.

- Luo, J.; Li, B.; Leung, C. A Survey of Computer Vision Technologies in Urban and Controlled-Environment Agriculture. ACM Comput. Surv. 2023, 56, 118.

- Zhang, A.; Lipton, Z.C.; Li, M.; Smola, A.J. Dive into Deep Learning. 2023. Available online: https://d2l.ai/ (accessed on 12 October 2025).

- Chehreh, B.; Moutinho, A.; Viegas, C. Latest Trends on Tree Classification and Segmentation Using UAV Data—A Review of Agroforestry Applications. Remote Sens. 2023, 15, 2263.

- La, Y.-J.; Seo, D.; Kang, J.; Kim, M.; Yoo, T.-W.; Oh, I.-S. Deep Learning-Based Segmentation of Intertwined Fruit Trees for Agricultural Tasks. Agriculture 2023, 13, 2097.

- Cheng, Z.; Qi, L.; Cheng, Y.; Wu, Y.; Zhang, H. Interlacing Orchard Canopy Separation and Assessment Using UAV Images. Remote Sens. 2020, 12, 767.

- Xiao, F.; Wang, H.; Xu, Y.; Zhang, R. Fruit Detection and Recognition Based on Deep Learning for Automatic Harvesting: An Overview and Review. Agronomy 2023, 13, 1625.

- Wan, H.; Zeng, X.; Fan, Z.; Zhang, S.; Kang, M. U2ESPNet—A Lightweight and High-Accuracy Convolutional Neural Network for Real-Time Semantic Segmentation of Visible Branches. Comput. Electron. Agric. 2023, 204, 107542.

- Borrenpohl, D.; Karkee, M. Automated Pruning Decisions in Dormant Sweet Cherry Canopies Using Instance Segmentation. Comput. Electron. Agric. 2023, 207, 107716.

- Hussain, M.; He, L.; Schupp, J.; Lyons, D.; Heinemann, P. Green Fruit Segmentation and Orientation Estimation for Robotic Green Fruit Thinning of Apples. Comput. Electron. Agric. 2023, 207, 107734.

- Zheng, C.; Chen, P.; Pang, J.; Yang, X.; Chen, C.; Tu, S.; Xue, Y. A Mango Picking Vision Algorithm on Instance Segmentation and Key Point Detection from RGB Images in an Open Orchard. Biosyst. Eng. 2021, 206, 32–54.

- Dias, P.A.; Tabb, A.; Medeiros, H. Multispecies Fruit Flower Detection Using a Refined Semantic Segmentation Network. IEEE Robot. Autom. Lett. 2018, 3, 3003–3010.

- Snyder, H. Literature Review as a Research Methodology: An Overview and Guidelines. J. Bus. Res. 2019, 104, 333–339.

- Tabb, A.; Medeiros, H. A Robotic Vision System to Measure Tree Traits. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 6005–6012.

- Tabb, A.; Medeiros, H. Automatic Segmentation of Trees in Dynamic Outdoor Environments. Comput. Ind. 2018, 98, 90–99.

- Svensson, J. Assessment of Grapevine Vigour Using Image Processing. Master’s Thesis, Linköping University, Linköping, Sweden, 2002.

- Chen, Y.; Xiao, K.; Gao, G.; Zhang, F. High-Fidelity 3D Reconstruction of Peach Orchards Using a 3DGS-Ag Model. Comput. Electron. Agric. 2025, 234, 110225.

- Ji, W.; Qian, Z.; Xu, B.; Tao, Y.; Zhao, D.; Ding, S. Apple Tree Branch Segmentation from Images with Small Gray-Level Difference for Agricultural Harvesting Robot. Optik 2016, 127, 11173–11182.

- Ji, W.; Meng, X.; Tao, Y.; Xu, B.; Zhao, D. Fast Segmentation of Colour Apple Image under All-Weather Natural Conditions for Vision Recognition of Picking Robot. Int. J. Adv. Robot. Syst. 2017, 13, 24.

- Silwal, A.; Davidson, J.R.; Karkee, M.; Mo, C.; Zhang, Q.; Lewis, K. Design, Integration, and Field Evaluation of a Robotic Apple Harvester. J. Field Robot. 2017, 34, 1140–1159.

- Xiang, R. Image Segmentation for Whole Tomato Plant Recognition at Night. Comput. Electron. Agric. 2018, 154, 434–442.

- Deng, J.; Li, J.; Zou, X. Extraction of Litchi Stem Based on Computer Vision under Natural Scene. In Proceedings of the International Conference on Computer Distributed Control and Intelligent Environmental Monitoring (CDCIEM), Changsha, China, 19–20 February 2011; pp. 832–835.

- Zhuang, J.; Hou, C.; Tang, Y.; He, Y.; Guo, Q.; Zhong, Z.; Luo, S. Computer Vision-Based Localization of Picking Points for Automatic Litchi Harvesting Applications towards Natural Scenarios. Biosyst. Eng. 2019, 187, 1–20.

- Xiong, J.; Lin, R.; Liu, Z.; He, Z.; Tang, L.; Yang, Z.; Zou, X. The Recognition of Litchi Clusters and the Calculation of Picking Point in a Nocturnal Natural Environment. Biosyst. Eng. 2018, 166, 44–57.

- Xiong, J.; He, Z.; Lin, R.; Liu, Z.; Bu, R.; Yang, Z.; Peng, H.; Zou, X. Visual Positioning Technology of Picking Robots for Dynamic Litchi Clusters with Disturbance. Comput. Electron. Agric. 2018, 151, 226–237.

- Pla, F.; Juste, F.; Ferri, F.; Vicens, M. Colour Segmentation Based on a Light Reflection Model to Locate Citrus Fruits for Robotic Harvesting. Comput. Electron. Agric. 1993, 9, 53–70.

- Lü, Q.; Cai, J.; Liu, B.; Deng, L.; Zhang, Y. Identification of Fruit and Branch in Natural Scenes for Citrus Harvesting Robot Using Machine Vision and Support Vector Machine. Int. J. Agric. Biol. Eng. 2014, 7, 115–121.

- Liu, T.-H.; Ehsani, R.; Toudeshki, A.; Zou, X.-J.; Wang, H.-J. Detection of Citrus Fruit and Tree Trunks in Natural Environments Using a Multi-Elliptical Boundary Model. Comput. Ind. 2018, 99, 9–16.

- Amatya, S.; Karkee, M.; Gongal, A.; Zhang, Q.; Whiting, M.D. Detection of Cherry Tree Branches with Full Foliage in Planar Architecture for Automated Sweet-Cherry Harvesting. Biosyst. Eng. 2016, 146, 3–15.

- Amatya, S.; Karkee, M.; Zhang, Q.; Whiting, M.D. Automated Detection of Branch Shaking Locations for Robotic Cherry Harvesting Using Machine Vision. Robotics 2017, 6, 31.

- Mohammadi, P.; Massah, J.; Vakilian, K.A. Robotic Date Fruit Harvesting Using Machine Vision and a 5-DOF Manipulator. J. Field Robot. 2023, 40, 1408–1423.

- He, L.; Du, X.; Qiu, G.; Wu, C. 3D Reconstruction of Chinese Hickory Trees for Mechanical Harvest. In Proceedings of the American Society of Agricultural and Biological Engineers (ASABE) Annual Meeting, Dallas, TX, USA, 29 July–1 August 2012. Paper No. 121340678.

- Wu, C.; He, L.; Du, X.; Chen, S. 3D Reconstruction of Chinese Hickory Tree for Dynamics Analysis. Biosyst. Eng. 2014, 119, 69–79.

- Hocevar, M.; Sirok, B.; Jejcic, V.; Godesa, T.; Lesnik, M.; Stajnko, D. Design and Testing of an Automated System for Targeted Spraying in Orchards. J. Plant Dis. Prot. 2010, 117, 71–79.

- Berenstein, R.; Ben-Shahar, O.; Shapiro, A.; Edan, Y. Grape Clusters and Foliage Detection Algorithms for Autonomous Selective Vineyard Sprayer. Intell. Serv. Robot. 2010, 3, 233–243.

- Asaei, H.; Jafari, A.; Loghavi, M. Site-Specific Orchard Sprayer Equipped with Machine Vision for Chemical Usage Management. Comput. Electron. Agric. 2019, 162, 431–439.

- Cheng, Z.; Qi, L.; Cheng, Y. Cherry Tree Crown Extraction from Natural Orchard Images with Complex Backgrounds. Agriculture 2021, 11, 431.

- McFarlane, N.J.B.; Tisseyre, B.; Sinfort, C.; Tillett, R.D.; Sevila, F. Image Analysis for Pruning of Long Wood Grape Vines. J. Agric. Eng. Res. 1997, 66, 111–119.

- Gao, M.; Lu, T.-F. Image Processing and Analysis for Autonomous Grapevine Pruning. In Proceedings of the IEEE International Conference on Mechatronics and Automation (ICMA), Luoyang, China, 25–29 June 2006; pp. 922–927.

- Roy, P.; Dong, W.; Isler, V. Registering Reconstruction of the Two Sides of Fruit Tree Rows. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–9.

- Dunkan, B.; Bulanon, D.M.; Bulanon, J.I.; Nelson, J. Development of a Cross-Platform Mobile Application for Fruit Yield Estimation. AgriEngineering 2024, 6, 1807–1826.

- Juman, M.A.; Wong, Y.W.; Rajkumar, R.K.; Goh, L.J. A Novel Tree Trunk Detection Method for Oil-Palm Plantation Navigation. Comput. Electron. Agric. 2016, 128, 172–180.

- Zhang, C.; Mouton, C.; Valente, J.; Kooistra, L.; van Ooteghem, R.; de Hoog, D.; van Dalfsen, P.; de Jong, P.F. Automatic Flower Cluster Estimation in Apple Orchards Using Aerial and Ground-Based Point Clouds. Biosyst. Eng. 2022, 221, 164–180.

- Xue, J.; Fan, B.; Yan, J.; Dong, S.; Ding, Q. Trunk Detection Based on Laser Radar and Vision Data Fusion. Int. J. Agric. Biol. Eng. 2018, 11, 20–26.

- Lin, G.; Tang, Y.; Zou, X.; Xiong, J.; Fang, Y. Color-, Depth-, and Shape-Based 3D Fruit Detection. Precis. Agric. 2020, 21, 1–17.

- Xiao, K.; Ma, Y.; Gao, G. An Intelligent Precision Orchard Pesticide Spray Technique Based on the Depth-of-Field Extraction Algorithm. Comput. Electron. Agric. 2017, 133, 30–36.

- Gao, G.; Xiao, K.; Ma, Y. A Leaf-Wall-to-Spray-Device Distance and Leaf-Wall-Density-Based Automatic Route-Planning Spray Algorithm for Vineyards. Crop Prot. 2018, 111, 33–41.

- Gao, G.; Xiao, K.; Jia, Y. A Spraying Path Planning Algorithm Based on Colour-Depth Fusion Segmentation in Peach Orchards. Comput. Electron. Agric. 2020, 173, 105412.

- Gimenez, J.; Sansoni, S.; Tosetti, S.; Capraro, F.; Carelli, R. Trunk Detection in Tree Crops Using RGB-D Images for Structure-Based ICM-SLAM. Comput. Electron. Agric. 2022, 199, 107099.

- Rosell Polo, J.R.; Sanz, R.; Llorens, J.; Arnó, J.; Escolà, A.; Ribes-Dasi, M.; Masip, J.; Camp, F.; Gràcia, F.; Solanelles, F.; et al. A Tractor-Mounted Scanning LiDAR for the Non-Destructive Measurement of Vegetative Volume and Surface Area of Tree-Row Plantations: A Comparison with Conventional Destructive Measurements. Biosyst. Eng. 2009, 102, 128–134.

- Rosell, J.R.; Llorens, J.; Sanz, R.; Arnó, J.; Ribes-Dasi, M.; Masip, J.; Escolà, A.; Camp, F.; Solanelles, F.; Gràcia, F.; et al. Obtaining the Three-Dimensional Structure of Tree Orchards from Remote 2D Terrestrial LiDAR Scanning. Agric. For. Meteorol. 2009, 149, 1505–1515.

- Méndez, V.; Rosell-Polo, J.R.; Sanz, R.; Escolà, A.; Catalán, H. Deciduous Tree Reconstruction Algorithm Based on Cylinder Fitting from Mobile Terrestrial Laser Scanned Point Clouds. Biosyst. Eng. 2014, 124, 78–88.

- Das, J.; Cross, G.; Qu, C.; Makineni, A.; Tokekar, P.; Mulgaonkar, Y.; Kumar, V. Devices, Systems, and Methods for Automated Monitoring Enabling Precision Agriculture. In Proceedings of the IEEE International Conference on Automation Science and Engineering (CASE), Gothenburg, Sweden, 24–28 August 2015; pp. 462–469.

- Peng, C.; Roy, P.; Luby, J.; Isler, V. Semantic Mapping of Orchards. IFAC-Pap. OnLine 2016, 49, 85–89.

- Zhang, C.; Yang, G.; Jiang, Y.; Xu, B.; Li, X.; Zhu, Y.; Lei, L.; Chen, R.; Dong, Z.; Yang, H. Apple Tree Branch Information Extraction from Terrestrial Laser Scanning and Backpack-LiDAR. Remote Sens. 2020, 12, 3592.

- Wahabzada, M.; Paulus, S.; Kersting, K.; Mahlein, A.-K. Automated Interpretation of 3D Laser-Scanned Point Clouds for Plant Organ Segmentation. BMC Bioinform. 2015, 16, 248.

- Scholer, F.; Steinhage, V. Automated 3D Reconstruction of Grape Cluster Architecture from Sensor Data for Efficient Phenotyping. Comput. Electron. Agric. 2015, 114, 163–177.

- Mack, J.; Lenz, C.; Teutrine, J.; Steinhage, V. High-Precision 3D Detection and Reconstruction of Grapes from Laser Range Data for Efficient Phenotyping Based on Supervised Learning. Comput. Electron. Agric. 2017, 135, 300–311.

- Zhu, T.; Ma, X.; Guan, H.; Wu, X.; Wang, F.; Yang, C.; Jiang, Q. A Calculation Method of Phenotypic Traits Based on Three-Dimensional Reconstruction of Tomato Canopy. Comput. Electron. Agric. 2023, 204, 107515.

- Zhu, T.; Ma, X.; Guan, H.; Wu, X.; Wang, F.; Yang, C.; Jiang, Q. A Method for Detecting Tomato Canopies’ Phenotypic Traits Based on Improved Skeleton Extraction Algorithm. Comput. Electron. Agric. 2023, 214, 108285.

- Auat Cheein, F.A.; Guivant, J.; Sanz, R.; Escolà, A.; Yandún, F.; Torres-Torriti, M.; Rosell-Polo, J.R. Real-Time Approaches for Characterization of Fully and Partially Scanned Canopies in Groves. Comput. Electron. Agric. 2015, 118, 361–371.

- Nielsen, M.; Slaughter, D.C.; Gliever, C.; Upadhyaya, S. Orchard and Tree Mapping and Description Using Stereo Vision and LiDAR. In Proceedings of the International Conference of Agricultural Engineering (AgEng), CIGR–EurAgEng, Valencia, Spain, 8 July 2012.

- Underwood, J.P.; Jagbrant, G.; Nieto, J.I.; Sukkarieh, S. LiDAR-Based Tree Recognition and Platform Localization in Orchards. J. Field Robot. 2015, 32, 1056–1074.

- Bargoti, S.; Underwood, J.P.; Nieto, J.I.; Sukkarieh, S. A Pipeline for Trunk Detection in Trellis Structured Apple Orchards. J. Field Robot. 2015, 32, 1075–1094.

- Underwood, J.P.; Hung, C.; Whelan, B.; Sukkarieh, S. Mapping Almond Orchard Canopy Volume, Flowers, Fruits and Yield Using LiDAR and Vision Sensors. Comput. Electron. Agric. 2016, 130, 83–96.

- Westling, F.; Underwood, J.; Bryson, M. Graph-Based Methods for Analyzing Orchard Tree Structure Using Noisy Point Cloud Data. Comput. Electron. Agric. 2021, 187, 106270.

- Li, L.; Fu, W.; Zhang, B.; Yang, Y.; Ge, Y.; Shen, C. Branch Segmentation and Phenotype Extraction of Apple Trees Based on Improved Laplace Algorithm. Comput. Electron. Agric. 2025, 232, 109998.

- Tao, Y.; Zhou, J. Automatic Apple Recognition Based on the Fusion of Color and 3D Feature for Robotic Fruit Picking. Comput. Electron. Agric. 2017, 142, 388–396.

- Mahmud, M.S.; Zahid, A.; He, L.; Choi, D.; Krawczyk, G.; Zhu, H.; Heinemann, P. Development of a LiDAR-Guided Section-Based Tree Canopy Density Measurement System for Precision Spray Applications. Comput. Electron. Agric. 2021, 182, 106053.

- Guo, N.; Xu, N.; Kang, J.; Zhang, G.; Meng, Q.; Niu, M.; Wu, W.; Zhang, X. A Study on Canopy Volume Measurement Model for Fruit Tree Application Based on LiDAR Point Cloud. Agriculture 2025, 15, 130.

- Karkee, M.; Adhikari, B.; Amatya, S.; Zhang, Q. Identification of Pruning Branches in Tall Spindle Apple Trees for Automated Pruning. Comput. Electron. Agric. 2014, 103, 127–135.

- Elfiky, N.M.; Akbar, S.A.; Sun, J.; Park, J.; Kak, A. Automation of Dormant Pruning in Specialty Crop Production: An Adaptive Framework for Automatic Reconstruction and Modeling of Apple Trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 65–73.

- Zeng, L.; Feng, J.; He, L. Semantic Segmentation of Sparse 3D Point Cloud Based on Geometrical Features for Trellis-Structured Apple Orchard. Biosyst. Eng. 2020, 196, 46–55.

- You, A.; Grimm, C.; Silwal, A.; Davidson, J.R. Semantics-Guided Skeletonization of Upright Fruiting Offshoot Trees for Robotic Pruning. Comput. Electron. Agric. 2022, 192, 106622.

- You, A.; Parayil, N.; Krishna, J.G.; Bhattarai, U.; Sapkota, R.; Ahmed, D.; Whiting, M.; Karkee, M.; Grimm, C.M.; Davidson, J.R. An Autonomous Robot for Pruning Modern, Planar Fruit Trees. arXiv 2022, arXiv:2206.07201v1.

- Li, Y.; Zhang, Z.; Wang, X.; Fu, W.; Li, J. Automatic Reconstruction and Modeling of Dormant Jujube Trees Using Three-View Image Constraints for Intelligent Pruning Applications. Comput. Electron. Agric. 2023, 212, 108149.

- Westling, F.; Underwood, J.; Bryson, M. A Procedure for Automated Tree Pruning Suggestion Using LiDAR Scans of Fruit Trees. Comput. Electron. Agric. 2021, 187, 106274.

- Cao, Y.; Wang, N.; Wu, B.; Zhang, X.; Wang, Y.; Xu, S.; Zhang, M.; Miao, Y.; Kang, F. A Novel Adaptive Cuboid Regional Growth Algorithm for Trunk–Branch Segmentation of Point Clouds from Two Fruit Tree Species. Agriculture 2025, 15, 1463.

- Churuvija, M.; Sapkota, R.; Ahmed, D.; Karkee, M. A Pose-Versatile Imaging System for Comprehensive 3D Modeling of Planar-Canopy Fruit Trees for Automated Orchard Operations. Comput. Electron. Agric. 2025, 230, 109899.

- Zine-El-Abidine, M.; Dutagaci, H.; Galopin, G.; Rousseau, D. Assigning Apples to Individual Trees in Dense Orchards Using 3D Colour Point Clouds. Biosyst. Eng. 2021, 209, 30–52.

- Dey, D.; Mummert, L.; Sukthankar, R. Classification of Plant Structures from Uncalibrated Image Sequences. In Proceedings of the IEEE Workshop on the Applications of Computer Vision (WACV), Breckenridge, CO, USA, 9–11 January 2012; pp. 329–336.

- Zhang, J.; Chambers, A.; Maeta, S.; Bergerman, M.; Singh, S. 3D Perception for Accurate Row Following: Methodology and Results. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–7 November 2013; pp. 5306–5313.

- Nielsen, M.; Slaughter, D.C.; Gliever, C. Vision-Based 3D Peach Tree Reconstruction for Automated Blossom Thinning. IEEE Trans. Ind. Inform. 2012, 8, 188–196.

- Chéné, Y.; Rousseau, D.; Lucidarme, P.; Bertheloot, J.; Caffier, V.; Morel, P.; Belin, É.; Chapeau-Blondeau, F. On the Use of Depth Camera for 3D Phenotyping of Entire Plants. Comput. Electron. Agric. 2012, 82, 122–127.

- Jin, Y.; Yu, C.; Yin, J.; Yang, S.X. Detection Method for Table Grape Ears and Stems Based on a Far–Close-Range Combined Vision System and Hand–Eye-Coordinated Picking Test. Comput. Electron. Agric. 2022, 202, 107364.

- Lu, Q.; Tang, M.; Cai, J. Obstacle Recognition Using Multi-Spectral Imaging for Citrus Picking Robot. In Proceedings of the Pacific Asia Conference on Circuits, Communications and System (PACCS), Wuhan, China, 17–18 July 2011; pp. 1–5.

- Tanigaki, K.; Fujiura, T.; Akase, A.; Imagawa, J. Cherry-Harvesting Robot. Comput. Electron. Agric. 2008, 63, 65–72.

- Bac, C.W.; Hemming, J.; van Henten, E.J. Robust Pixel-Based Classification of Obstacles for Robotic Harvesting of Sweet-Pepper. Comput. Electron. Agric. 2013, 96, 148–162.

- Bac, C.W.; Hemming, J.; van Henten, E.J. Stem Localization of Sweet-Pepper Plants Using the Support Wire as Visual Cue. Comput. Electron. Agric. 2014, 105, 111–120.

- Colmenero-Martinez, J.T.; Blanco-Roldán, G.L.; Bayano-Tejero, S.; Castillo-Ruiz, F.J.; Sola-Guirado, R.R.; Gil-Ribes, J.A. An Automatic Trunk-Detection System for Intensive Olive Harvesting with Trunk Shaker. Biosyst. Eng. 2018, 172, 92–101.

- Chattopadhyay, S.; Akbar, S.A.; Elfiky, N.M.; Medeiros, H.; Kak, A. Measuring and Modeling Apple Trees Using Time-of-Flight Data for Automation of Dormant Pruning Applications. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–9.

- Akbar, S.A.; Elfiky, N.M.; Kak, A. A Novel Framework for Modeling Dormant Apple Trees Using a Single Depth Image for Robotic Pruning Application. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 5136–5142.

- Medeiros, H.; Kim, D.; Sun, J.; Seshadri, H.; Akbar, S.A.; Elfiky, N.M.; Park, J. Modeling Dormant Fruit Trees for Agricultural Automation. J. Field Robot. 2017, 34, 1203–1224.

- Botterill, T.; Green, R.; Mills, S. Finding a Vine’s Structure by Bottom-Up Parsing of Cane Edges. In Proceedings of the International Conference on Image and Vision Computing New Zealand (IVCNZ), Wellington, New Zealand, 27–29 November 2013; pp. 112–117.

- Botterill, T.; Paulin, S.; Green, R.; Williams, S.; Lin, J.; Saxton, V.; Mills, S.; Chen, X.; Corbett-Davies, S. A Robot System for Pruning Grape Vines. J. Field Robot. 2017, 34, 1100–1122.

- Luo, L.; Tang, Y.; Zou, X.; Ye, M.; Feng, W.; Li, G. Vision-Based Extraction of Spatial Information in Grape Clusters for Harvesting Robots. Biosyst. Eng. 2016, 151, 90–104.

- Gené-Mola, J.; Sanz-Cortiella, R.; Rosell-Polo, J.R.; Morros, J.-R.; Ruiz-Hidalgo, J.; Vilaplana, V.; Gregorio, E. Fruit Detection and 3D Location Using Instance Segmentation Neural Networks and Structure-from-Motion Photogrammetry. Comput. Electron. Agric. 2020, 169, 105165.

- Sun, X.; Fang, W.; Gao, C.; Fu, L.; Majeed, Y.; Liu, X.; Gao, F.; Yang, R.; Li, R. Remote Estimation of Grafted Apple Tree Trunk Diameter in Modern Orchard with RGB and Point Cloud Based on SOLOv2. Comput. Electron. Agric. 2022, 199, 107209.

- Suo, R.; Fu, L.; He, L.; Li, G.; Majeed, Y.; Liu, X.; Zhao, G.; Yang, R.; Li, R. A Novel Labeling Strategy to Improve Apple Seedling Segmentation Using BlendMask for Online Grading. Comput. Electron. Agric. 2022, 201, 107333.

- Zhao, G.; Yang, R.; Jing, X.; Zhang, H.; Wu, Z.; Sun, X.; Jiang, H.; Li, R.; Wei, X.; Fountas, S.; et al. Phenotyping of Individual Apple Tree in Modern Orchard with Novel Smartphone-Based Heterogeneous Binocular Vision and YOLOv5s. Comput. Electron. Agric. 2023, 209, 107814.

- Siddique, A.; Tabb, A.; Medeiros, H. Self-Supervised Learning for Panoptic Segmentation of Multiple Fruit Flower Species. IEEE Robot. Autom. Lett. 2022, 7, 12387–12394.

- Xiong, J.; Liang, J.; Zhuang, Y.; Hong, D.; Zheng, Z.; Liao, S.; Hu, W.; Yang, Z. Real-Time Localization and 3D Semantic Map Reconstruction for Unstructured Citrus Orchards. Comput. Electron. Agric. 2023, 213, 108217.

- Chen, J.; Ji, C.; Zhang, J.; Feng, Q.; Li, Y.; Ma, B. A Method for Multi-Target Segmentation of Bud-Stage Apple Trees Based on Improved YOLOv8. Comput. Electron. Agric. 2024, 220, 108876.

- Xu, W.; Guo, R.; Chen, P.; Li, L.; Gu, M.; Sun, H.; Hu, L.; Wang, Z.; Li, K. Cherry Growth Modeling Based on Prior Distance Embedding Contrastive Learning: Pre-Training, Anomaly Detection, Semantic Segmentation, and Temporal Modeling. Comput. Electron. Agric. 2024, 221, 108973.

- Zhang, J.; Tian, M.; Yang, Z.; Li, J.; Zhao, L. An Improved Target Detection Method Based on YOLOv5 in Natural Orchard Environments. Comput. Electron. Agric. 2024, 219, 108780.

- Abdalla, A.; Zhu, Y.; Qu, F.; Cen, H. Novel Encoding Technique to Evolve Convolutional Neural Network as a Multi-Criteria Problem for Plant Image Segmentation. Comput. Electron. Agric. 2025, 230, 109869.

- Gao, G.; Fang, L.; Zhang, Z.; Li, J. YOLOR-Stem: Gaussian Rotating Bounding Boxes and Probability Similarity Measure for Enhanced Tomato Main Stem Detection. Comput. Electron. Agric. 2025, 233, 110192.

- Jin, T.; Kang, S.M.; Kim, N.; Kim, H.R.; Han, X. Comparative Analysis of CNN-Based Semantic Segmentation for Apple Tree Canopy Size Recognition in Automated Variable-Rate Spraying. Agriculture 2025, 15, 789.

- Metuarea, H.; Laurens, F.; Guerra, W.; Lozano, L.; Patocchi, A.; Van Hoye, S.; Dutagaci, H.; Labrosse, J.; Rasti, P.; Rousseau, D. Individual Segmentation of Intertwined Apple Trees in a Row via Prompt Engineering. Sensors 2025, 25, 4721.

- Kang, H.; Chen, C. Fruit Detection and Segmentation for Apple Harvesting Using Visual Sensor in Orchards. Sensors 2019, 19, 4599. [Google Scholar] [CrossRef]

- Kang, H.; Chen, C. Fruit Detection, Segmentation and 3D Visualization of Environments in Apple Orchards. Comput. Electron. Agric. 2020, 171, 105302. [Google Scholar] [CrossRef]

- Jiang, H.; Sun, X.; Fang, W.; Fu, L.; Li, R.; Auat Cheein, F.; Majeed, Y. Thin Wire Segmentation and Reconstruction Based on a Novel Image Overlap-Partitioning and Stitching Algorithm in Apple Fruiting Wall Architecture for Robotic Picking. Comput. Electron. Agric. 2023, 209, 107840. [Google Scholar] [CrossRef]

- Kok, E.; Wang, X.; Chen, C. Obscured Tree Branches Segmentation and 3D Reconstruction Using Deep Learning and Geometrical Constraints. Comput. Electron. Agric. 2023, 210, 107884. [Google Scholar] [CrossRef]

- Kalampokas, T.; Tziridis, K.; Nikolaou, A.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Semantic Segmentation of Vineyard Images Using Convolutional Neural Networks. In International Neural Networks Society, Proceedings of the International Conference on Engineering Applications of Neural Networks (EANN), Halkidiki, Greece, 5–7 June 2020; Springer: Berlin/Heidelberg, Germany; p. 2. [CrossRef]

- Kalampokas, T.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Grape Stem Detection Using Regression Convolutional Neural Networks. Comput. Electron. Agric. 2021, 186, 106220. [Google Scholar] [CrossRef]

- Qiao, Y.; Hu, Y.; Zheng, Z.; Qu, Z.; Wang, C.; Guo, T.; Hou, J. A Diameter Measurement Method of Red Jujubes Trunk Based on Improved PSPNet. Agriculture 2022, 12, 1140. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, Z.; Luo, L.; Wei, H.; Wang, W.; Chen, M.; Luo, S. DualSeg: Fusing Transformer and CNN Structure for Image Segmentation in Complex Vineyard Environment. Comput. Electron. Agric. 2023, 206, 107682. [Google Scholar] [CrossRef]

- Wu, Z.; Xia, F.; Zhou, S.; Xu, D. A Method for Identifying Grape Stems Using Keypoints. Comput. Electron. Agric. 2023, 209, 107825. [Google Scholar] [CrossRef]

- Kim, J.; Pyo, H.; Jang, I.; Kang, J.; Ju, B.; Ko, K. Tomato Harvesting Robotic System Based on Deep-ToMaToS: Deep Learning Network Using Transformation Loss for 6D Pose Estimation of Maturity Classified Tomatoes with Side-Stem. Comput. Electron. Agric. 2022, 201, 107300. [Google Scholar] [CrossRef]

- Rong, J.; Wang, P.; Wang, T.; Hu, L.; Yuan, T. Fruit Pose Recognition and Directional Orderly Grasping Strategies for Tomato Harvesting Robots. Comput. Electron. Agric. 2022, 202, 107430. [Google Scholar] [CrossRef]

- Rong, Q.; Hu, C.; Hu, X.; Xu, M. Picking Point Recognition for Ripe Tomatoes Using Semantic Segmentation and Morphological Processing. Comput. Electron. Agric. 2023, 210, 107923. [Google Scholar] [CrossRef]

- Kim, T.; Lee, D.-H.; Kim, K.-C.; Kim, Y.-J. 2D Pose Estimation of Multiple Tomato Fruit-Bearing Systems for Robotic Harvesting. Comput. Electron. Agric. 2023, 211, 108004. [Google Scholar] [CrossRef]

- Liang, C.; Xiong, J.; Zheng, Z.; Zhong, Z.; Li, Z.; Chen, S.; Yang, Z. A Visual Detection Method for Nighttime Litchi Fruits and Fruiting Stems. Comput. Electron. Agric. 2020, 169, 105192. [Google Scholar] [CrossRef]

- Chen, M.; Tang, Y.; Zou, X.; Huang, Z.; Zhou, H.; Chen, S. 3D Global Mapping of Large-Scale Unstructured Orchard Integrating Eye-in-Hand Stereo Vision and SLAM. Comput. Electron. Agric. 2021, 187, 106237. [Google Scholar] [CrossRef]

- Zhong, Z.; Xiong, J.; Zheng, Z.; Liu, B.; Liao, S.; Huo, Z.; Yang, Z. A Method for Litchi Picking Points Calculation in Natural Environment Based on Main Fruit Bearing Branch Detection. Comput. Electron. Agric. 2021, 189, 106398. [Google Scholar] [CrossRef]

- Peng, H.; Zhong, J.; Liu, H.; Li, J.; Yao, M.; Zhang, X. ResDense-Focal-DeepLabV3+ Enabled Litchi Branch Semantic Segmentation for Robotic Harvesting. Comput. Electron. Agric. 2023, 206, 107691. [Google Scholar] [CrossRef]

- Lin, G.; Wang, C.; Xu, Y.; Wang, M.; Zhang, Z.; Zhu, L. Real-Time Guava Tree-Part Segmentation Using Fully Convolutional Network with Channel and Spatial Attention. Front. Plant Sci. 2022, 13, 991487. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Deng, X.; Luo, J.; Li, B.; Xiao, S. Cross-Task Feature Enhancement Strategy in Multi-Task Learning for Harvesting Sichuan Pepper. Comput. Electron. Agric. 2023, 207, 107726. [Google Scholar] [CrossRef]

- Zheng, Z.; Hu, Y.; Guo, T.; Qiao, Y.; He, Y.; Zhang, Y.; Huang, Y. AGHRNet: An Attention Ghost-HRNet for Confirmation of Catch-and-Shake Locations in Jujube Fruits Vibration Harvesting. Comput. Electron. Agric. 2023, 210, 107921. [Google Scholar] [CrossRef]

- Williams, H.A.M.; Jones, M.H.; Nejati, M.; Seabright, M.J.; Bell, J.; Penhall, N.D.; Barnett, J.J.; Duke, M.D.; Scarfe, A.J.; Ahn, H.S.; et al. Robotic Kiwifruit Harvesting Using Machine Vision, Convolutional Neural Networks, and Robotic Arms. Biosyst. Eng. 2019, 181, 140–156. [Google Scholar] [CrossRef]

- Williams, H.; Ting, C.; Nejati, M.; Jones, M.H.; Penhall, N.; Lim, J.; Seabright, M.; Bell, J.; Ahn, H.S.; Scarfe, A.; et al. Improvements to and Large-Scale Evaluation of a Robotic Kiwifruit Harvester. J. Field Robot. 2020, 37, 187–201. [Google Scholar] [CrossRef]

- Song, Z.; Zhou, Z.; Wang, W.; Gao, F.; Fu, L.; Li, R.; Cui, Y. Canopy Segmentation and Wire Reconstruction for Kiwifruit Robotic Harvesting. Comput. Electron. Agric. 2021, 181, 105933. [Google Scholar] [CrossRef]

- Fu, L.; Wu, F.; Zou, X.; Jiang, Y.; Lin, J.; Yang, Z.; Duan, J. Fast Detection of Banana Bunches and Stalks in the Natural Environment Based on Deep Learning. Comput. Electron. Agric. 2022, 194, 106800. [Google Scholar] [CrossRef]

- Wan, H.; Fan, Z.; Yu, X.; Kang, M.; Wang, P.; Zeng, X. A Real-Time Branch Detection and Reconstruction Mechanism for Harvesting Robot via Convolutional Neural Network and Image Segmentation. Comput. Electron. Agric. 2022, 192, 106609. [Google Scholar] [CrossRef]

- Li, D.; Sun, X.; Lv, S.; Elkhouchlaa, H.; Jia, Y.; Yao, Z.; Lin, P.; Zhou, H.; Zhou, Z.; Shen, J.; et al. A Novel Approach for the 3D Localization of Branch Picking Points Based on Deep Learning Applied to Longan Harvesting UAVs. Comput. Electron. Agric. 2022, 199, 107191. [Google Scholar] [CrossRef]

- Chen, J.; Ma, A.; Huang, L.; Li, H.; Zhang, H.; Huang, Y.; Zhu, T. Efficient and Lightweight Grape and Picking Point Synchronous Detection Model Based on Key Point Detection. Comput. Electron. Agric. 2024, 217, 108612. [Google Scholar] [CrossRef]

- Du, X.; Meng, Z.; Ma, Z.; Zhao, L.; Lu, W.; Cheng, H.; Wang, Y. Comprehensive Visual Information Acquisition for Tomato Picking Robot Based on Multitask Convolutional Neural Network. Biosyst. Eng. 2024, 238, 51–61. [Google Scholar] [CrossRef]

- Gu, Z.; He, D.; Huang, J.; Chen, J.; Wu, X.; Huang, B.; Dong, T.; Yang, Q.; Li, H. Simultaneous Detection of Fruits and Fruiting Stems in Mango Using Improved YOLOv8 Model Deployed by Edge Device. Comput. Electron. Agric. 2024, 227, 109512. [Google Scholar] [CrossRef]

- Li, H.; Huang, J.; Gu, Z.; He, D.; Huang, J.; Wang, C. Positioning of Mango Picking Point Using an Improved YOLOv8 Architecture with Object Detection and Instance Segmentation. Biosyst. Eng. 2024, 247, 202–220. [Google Scholar] [CrossRef]

- Neupane, C.; Walsh, K.B.; Goulart, R.; Koirala, A. Developing Machine Vision in Tree-Fruit Applications: Fruit Count, Fruit Size and Branch Avoidance in Automated Harvesting. Sensors 2024, 24, 5593. [Google Scholar] [CrossRef]

- Sapkota, R.; Ahmed, D.; Karkee, M. Comparing YOLOv8 and Mask R-CNN for Instance Segmentation in Complex Orchard Environments. Artif. Intell. Agric. 2024, 13, 84–99. [Google Scholar] [CrossRef]

- Wang, J.; Lin, X.; Luo, L.; Chen, M.; Wei, H.; Xu, L.; Luo, S. Cognition of Grape Cluster Picking Point Based on Visual Knowledge Distillation in Complex Vineyard Environment. Comput. Electron. Agric. 2024, 225, 109216. [Google Scholar] [CrossRef]

- Wang, C.; Han, Q.; Zhang, T.; Li, C.; Sun, X. Litchi Picking Points Localization in Natural Environment Based on the Litchi-YOSO Model and Branch Morphology Reconstruction Algorithm. Comput. Electron. Agric. 2024, 226, 109473. [Google Scholar] [CrossRef]

- Li, L.; Li, K.; He, Z.; Li, H.; Cui, Y. Kiwifruit Segmentation and Identification of Picking Point on Its Stem in Orchards. Comput. Electron. Agric. 2025, 229, 109748. [Google Scholar] [CrossRef]

- Li, P.; Chen, J.; Chen, Q.; Huang, L.; Jiang, Z.; Hua, W.; Li, Y. Detection and Picking Point Localization of Grape Bunches and Stems Based on Oriented Bounding Box. Comput. Electron. Agric. 2025, 233, 110168. [Google Scholar] [CrossRef]

- Shen, Q.; Zhang, X.; Shen, M.; Xu, D. Multi-Scale Adaptive YOLO for Instance Segmentation of Grape Pedicels. Comput. Electron. Agric. 2025, 229, 109712. [Google Scholar] [CrossRef]

- Wu, Y.; Yu, X.; Zhang, D.; Yang, Y.; Qiu, Y.; Pang, L.; Wang, H. TinySeg: A Deep Learning Model for Small Target Segmentation of Grape Pedicels with Multi-Attention and Multi-Scale Feature Fusion. Comput. Electron. Agric. 2025, 237, 110726. [Google Scholar] [CrossRef]

- Kim, J.; Seol, J.; Lee, S.; Hong, S.-W.; Son, H.I. An Intelligent Spraying System with Deep Learning-Based Semantic Segmentation of Fruit Trees in Orchards. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3923–3929. [Google Scholar] [CrossRef]

- Seol, J.; Kim, J.; Son, H.I. Field Evaluation of a Deep Learning-Based Intelligent Spraying Robot with Flow Control for Pear Orchards. Precis. Agric. 2022, 23, 712–732. [Google Scholar] [CrossRef]

- Tong, S.; Yue, Y.; Li, W.; Wang, Y.; Kang, F.; Feng, C. Branch Identification and Junction Points Location for Apple Trees Based on Deep Learning. Remote Sens. 2022, 14, 4495. [Google Scholar] [CrossRef]

- Tong, S.; Zhang, J.; Li, W.; Wang, Y.; Kang, F. An Image-Based System for Locating Pruning Points in Apple Trees Using Instance Segmentation and RGB-D Images. Biosyst. Eng. 2023, 236, 277–286. [Google Scholar] [CrossRef]

- Williams, H.; Smith, D.; Shahabi, J.; Gee, T.; Nejati, M.; McGuinness, B.; Black, K.; Tobias, J.; Jangali, R.; Lim, H.; et al. Modelling Wine Grapevines for Autonomous Robotic Cane Pruning. Biosyst. Eng. 2023, 235, 31–49. [Google Scholar] [CrossRef]

- Gentilhomme, T.; Villamizar, M.; Corre, J.; Odobez, J.-M. Towards Smart Pruning: ViNet, a Deep-Learning Approach for Grapevine Structure Estimation. Comput. Electron. Agric. 2023, 207, 107736. [Google Scholar] [CrossRef]

- Liang, X.; Wei, Z.; Chen, K. A Method for Segmentation and Localization of Tomato Lateral Pruning Points in Complex Environments Based on Improved YOLOv5. Comput. Electron. Agric. 2025, 229, 109731. [Google Scholar] [CrossRef]

- Häni, N.; Roy, P.; Isler, V. A Comparative Study of Fruit Detection and Counting Methods for Yield Mapping in Apple Orchards. J. Field Robot. 2020, 37, 263–282. [Google Scholar] [CrossRef]

- Gao, F.; Fang, W.; Sun, X.; Wu, Z.; Zhao, G.; Li, G.; Li, R.; Fu, L.; Zhang, Q. A Novel Apple Fruit Detection and Counting Methodology Based on Deep Learning and Trunk Tracking in Modern Orchard. Comput. Electron. Agric. 2022, 197, 107000. [Google Scholar] [CrossRef]

- Wu, Z.; Sun, X.; Jiang, H.; Gao, F.; Li, R.; Fu, L.; Zhang, D.; Fountas, S. Twice Matched Fruit Counting System: An Automatic Fruit Counting Pipeline in Modern Apple Orchard Using Mutual and Secondary Matches. Biosyst. Eng. 2023, 234, 140–155. [Google Scholar] [CrossRef]

- Palacios, F.; Melo-Pinto, P.; Diago, M.P.; Tardaguila, J. Deep Learning and Computer Vision for Assessing the Number of Actual Berries in Commercial Vineyards. Biosyst. Eng. 2022, 218, 175–188. [Google Scholar] [CrossRef]

- Wen, Y.; Xue, J.; Sun, H.; Song, Y.; Lv, P.; Liu, S.; Chu, Y.; Zhang, T. High-Precision Target Ranging in Complex Orchard Scenes by Utilizing Semantic Segmentation Results and Binocular Vision. Comput. Electron. Agric. 2023, 215, 108440. [Google Scholar] [CrossRef]

- Saha, S.; Noguchi, N. Smart Vineyard Row Navigation: A Machine Vision Approach Leveraging YOLOv8. Comput. Electron. Agric. 2025, 229, 109839. [Google Scholar] [CrossRef]

- Majeed, Y.; Karkee, M.; Zhang, Q.; Fu, L.; Whiting, M.D. Determining Grapevine Cordon Shape for Automated Green Shoot Thinning Using Semantic Segmentation-Based Deep Learning Networks. Comput. Electron. Agric. 2020, 171, 105308. [Google Scholar] [CrossRef]

- Majeed, Y.; Karkee, M.; Zhang, Q. Estimating the Trajectories of Vine Cordons in Full Foliage Canopies for Automated Green Shoot Thinning in Vineyards. Comput. Electron. Agric. 2020, 176, 105671. [Google Scholar] [CrossRef]

- Wu, F.; Duan, J.; Ai, P.; Chen, Z.; Yang, Z.; Zou, X. Rachis Detection and Three-Dimensional Localization of Cut Point for Vision-Based Banana Robot. Comput. Electron. Agric. 2022, 198, 107079. [Google Scholar] [CrossRef]

- Du, W.; Cui, X.; Zhu, Y.; Liu, P. Detection of Table Grape Berries Need to be Removal before Thinning Based on Deep Learning. Comput. Electron. Agric. 2025, 231, 110043. [Google Scholar] [CrossRef]

- Hussain, M.; He, L.; Schupp, J.; Lyons, D.; Heinemann, P. Green Fruit–Stem Pairing and Clustering for Machine Vision System in Robotic Thinning of Apples. J. Field Robot. 2025, 42, 1463–1490. [Google Scholar] [CrossRef]

- Dong, W.; Roy, P.; Isler, V. Semantic Mapping for Orchard Environments by Merging Two-Sides Reconstructions of Tree Rows. J. Field Robot. 2020, 37, 97–121. [Google Scholar] [CrossRef]

- Milella, A.; Marani, R.; Petitti, A.; Reina, G. In-Field High Throughput Grapevine Phenotyping with a Consumer-Grade Depth Camera. Comput. Electron. Agric. 2019, 156, 293–306. [Google Scholar] [CrossRef]

- Digumarti, S.T.; Schmid, L.M.; Rizzi, G.M.; Nieto, J.; Siegwart, R.; Beardsley, P.; Cadena, C. An Approach for Semantic Segmentation of Tree-like Vegetation. In Proceedings of the 2019 IEEE International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 1801–1807. [Google Scholar] [CrossRef]

- Barth, R.; Ijsselmuiden, J.; Hemming, J.; van Henten, E.J. Data Synthesis Methods for Semantic Segmentation in Agriculture: A Capsicum annuum Dataset. Comput. Electron. Agric. 2018, 144, 284–296. [Google Scholar] [CrossRef]

- Barth, R.; Ijsselmuiden, J.; Hemming, J.; van Henten, E.J. Synthetic Bootstrapping of Convolutional Neural Networks for Semantic Plant Part Segmentation. Comput. Electron. Agric. 2019, 161, 291–304. [Google Scholar] [CrossRef]

- Barth, R.; Hemming, J.; van Henten, E.J. Optimizing Realism of Synthetic Images Using Cycle Generative Adversarial Networks for Improved Part Segmentation. Comput. Electron. Agric. 2020, 173, 105378. [Google Scholar] [CrossRef]

- Chen, Z.; Granland, K.; Tang, Y.; Chen, C. HOB-CNNv2: Deep Learning Based Detection of Extremely Occluded Tree Branches and Reference to the Dominant Tree Image. Comput. Electron. Agric. 2024, 218, 108727. [Google Scholar] [CrossRef]

- Qi, Z.; Hua, W.; Zhang, Z.; Deng, X.; Yuan, T.; Zhang, W. A Novel Method for Tomato Stem Diameter Measurement Based on Improved YOLOv8-Seg and RGB-D Data. Comput. Electron. Agric. 2024, 226, 109387. [Google Scholar] [CrossRef]

- Zhang, J.; He, L.; Karkee, M.; Zhang, Q.; Zhang, X.; Gao, Z. Branch Detection for Apple Trees Trained in Fruiting Wall Architecture Using Depth Features and Region-Convolutional Neural Network (R-CNN). Comput. Electron. Agric. 2018, 155, 386–393. [Google Scholar] [CrossRef]

- Zhang, X.; Fu, L.; Karkee, M.; Whiting, M.D.; Zhang, Q. Canopy Segmentation Using ResNet for Mechanical Harvesting of Apples. IFAC-PapersOnLine 2019, 52, 300–305. [Google Scholar] [CrossRef]

- Zhang, X.; Karkee, M.; Zhang, Q.; Whiting, M.D. Computer Vision-Based Tree Trunk and Branch Identification and Shaking Point Detection in Dense-Foliage Canopy for Automated Harvesting of Apples. J. Field Robot. 2021, 38, 476–493. [Google Scholar] [CrossRef]

- Zhang, J.; Karkee, M.; Zhang, Q.; Zhang, X.; Majeed, Y.; Fu, L.; Wang, S. Multi-Class Object Detection Using Faster R-CNN and Estimation of Shaking Locations for Automated Shake-and-Catch Apple Harvesting. Comput. Electron. Agric. 2020, 173, 105384. [Google Scholar] [CrossRef]

- Granland, K.; Newbury, R.; Chen, Z.; Ting, D.; Chen, C. Detecting Occluded Y-Shaped Fruit Tree Segments Using Automated Iterative Training with Minimal Labeling Effort. Comput. Electron. Agric. 2022, 194, 106747. [Google Scholar] [CrossRef]

- Coll-Ribes, G.; Torres-Rodriguez, I.J.; Grau, A.; Guerra, E.; Sanfeliu, A. Accurate Detection and Depth Estimation of Table Grapes and Peduncles for Robot Harvesting, Combining Monocular Depth Estimation and CNN Methods. Comput. Electron. Agric. 2023, 215, 108362. [Google Scholar] [CrossRef]

- Xu, P.; Fang, N.; Liu, N.; Lin, F.; Yang, S.; Ning, J. Visual Recognition of Cherry Tomatoes in Plant Factory Based on Improved Deep Instance Segmentation. Comput. Electron. Agric. 2022, 197, 106991. [Google Scholar] [CrossRef]

- Zhang, F.; Gao, J.; Zhou, H.; Zhang, J.; Zou, K.; Yuan, T. Three-Dimensional Pose Detection Method Based on Keypoints Detection Network for Tomato Bunch. Comput. Electron. Agric. 2022, 195, 106824. [Google Scholar] [CrossRef]

- Zhang, F.; Gao, J.; Song, C.; Zhou, H.; Zou, K.; Xie, J.; Yuan, T.; Zhang, J. TPMv2: An End-to-End Tomato Pose Method Based on 3D Keypoint Detection. Comput. Electron. Agric. 2023, 210, 107878. [Google Scholar] [CrossRef]

- Li, J.; Tang, Y.; Zou, X.; Lin, G.; Wang, H. Detection of Fruit-Bearing Branches and Localization of Litchi Clusters for Vision-Based Harvesting Robots. IEEE Access 2020, 8, 117746–117758. [Google Scholar] [CrossRef]

- Yang, C.H.; Xiong, L.Y.; Wang, Z.; Wang, Y.; Shi, G.; Kuremoto, T.; Zhao, W.H.; Yang, Y. Integrated Detection of Citrus Fruits and Branches Using a Convolutional Neural Network. Comput. Electron. Agric. 2020, 174, 105469. [Google Scholar] [CrossRef]

- Lin, G.; Tang, Y.; Zou, X.; Xiong, J.; Li, J. Guava Detection and Pose Estimation Using a Low-Cost RGB-D Sensor in the Field. Sensors 2019, 19, 428. [Google Scholar] [CrossRef]

- Lin, G.; Tang, Y.; Zou, X.; Wang, C. Three-Dimensional Reconstruction of Guava Fruit and Branches Using Instance Segmentation and Geometry Analysis. Comput. Electron. Agric. 2021, 184, 106107. [Google Scholar] [CrossRef]

- Lin, G.; Zhu, L.; Li, J.; Zou, X.; Tang, Y. Collision-Free Path Planning for a Guava-Harvesting Robot Based on Recurrent Deep Reinforcement Learning. Comput. Electron. Agric. 2021, 188, 106350. [Google Scholar] [CrossRef]

- Yu, T.; Hu, C.; Xie, Y.; Liu, J.; Li, P. Mature Pomegranate Fruit Detection and Location Combining Improved F-PointNet with 3D Point Cloud Clustering in Orchard. Comput. Electron. Agric. 2022, 200, 107233. [Google Scholar] [CrossRef]

- Ci, J.; Wang, X.; Rapado-Rincón, D.; Burusa, A.K.; Kootstra, G. 3D Pose Estimation of Tomato Peduncle Nodes Using Deep Keypoint Detection and Point Cloud. Biosyst. Eng. 2024, 243, 57–69. [Google Scholar] [CrossRef]

- Li, Y.; Feng, Q.; Zhang, Y.; Peng, C.; Ma, Y.; Liu, C.; Ru, M.; Sun, J.; Zhao, C. Peduncle Collision-Free Grasping Based on Deep Reinforcement Learning for Tomato Harvesting Robot. Comput. Electron. Agric. 2024, 216, 108488. [Google Scholar] [CrossRef]

- Dong, L.; Zhu, L.; Zhao, B.; Wang, R.; Ni, J.; Liu, S.; Chen, K.; Cui, X.; Zhou, L. Semantic Segmentation-Based Observation Pose Estimation Method for Tomato Harvesting Robots. Comput. Electron. Agric. 2025, 230, 109895. [Google Scholar] [CrossRef]

- Cong, P.; Zhou, J.; Li, S.; Lv, K.; Feng, H. Citrus Tree Crown Segmentation of Orchard Spraying Robot Based on RGB-D Image and Improved Mask R-CNN. Appl. Sci. 2023, 13, 164. [Google Scholar] [CrossRef]

- Bhattarai, U.; Sapkota, R.; Kshetri, S.; Mo, C.; Whiting, M.D.; Zhang, Q.; Karkee, M. A Vision-Based Robotic System for Precision Pollination of Apples. Comput. Electron. Agric. 2025, 234, 110158. [Google Scholar] [CrossRef]

- Chen, Z.; Ting, D.; Newbury, R.; Chen, C. Semantic Segmentation for Partially Occluded Apple Trees Based on Deep Learning. Comput. Electron. Agric. 2021, 181, 105952. [Google Scholar] [CrossRef]

- Ahmed, D.; Sapkota, R.; Churuvija, M.; Karkee, M. Estimating Optimal Crop-Load for Individual Branches in Apple Tree Canopies Using YOLOv8. Comput. Electron. Agric. 2025, 229, 109697. [Google Scholar] [CrossRef]

- Majeed, Y.; Zhang, J.; Zhang, X.; Fu, L.; Karkee, M.; Zhang, Q.; Whiting, M.D. Deep Learning Based Segmentation for Automated Training of Apple Trees on Trellis Wires. Comput. Electron. Agric. 2020, 170, 105277. [Google Scholar] [CrossRef]

- Brown, J.; Paudel, A.; Biehler, D.; Thompson, A.; Karkee, M.; Grimm, C.; Davidson, J.R. Tree Detection and In-Row Localization for Autonomous Precision Orchard Management. Comput. Electron. Agric. 2024, 227, 109454. [Google Scholar] [CrossRef]

- Xu, S.; Rai, R. Vision-Based Autonomous Navigation Stack for Tractors Operating in Peach Orchards. Comput. Electron. Agric. 2024, 217, 108558. [Google Scholar] [CrossRef]

- Pawikhum, K.; Yang, Y.; He, L.; Heinemann, P. Development of a Machine Vision System for Apple Bud Thinning in Precision Crop Load Management. Comput. Electron. Agric. 2025, 236, 110479. [Google Scholar] [CrossRef]

- Dong, X.; Kim, W.-Y.; Zheng, Y.; Oh, J.-Y.; Ehsani, R.; Lee, K.-H. Three-Dimensional Quantification of Apple Phenotypic Traits Based on Deep Learning Instance Segmentation. Comput. Electron. Agric. 2023, 212, 108156. [Google Scholar] [CrossRef]

- Schunck, D.; Magistri, F.; Rosu, R.A.; Cornelißen, A.; Chebrolu, N.; Paulus, S.; Léon, J.; Behnke, S.; Stachniss, C.; Kuhlmann, H.; et al. Pheno4D: A Spatio-Temporal Dataset of Maize and Tomato Plant Point Clouds for Phenotyping and Advanced Plant Analysis. PLoS ONE 2021, 16, e0256340. [Google Scholar] [CrossRef] [PubMed]

- Liu, Q.; Yang, H.; Wei, J.; Zhang, Y.; Yang, S. FSDNet: A Feature Spreading Network with Density for 3D Segmentation in Agriculture. Comput. Electron. Agric. 2024, 222, 109073. [Google Scholar] [CrossRef]

- Sun, X.; He, L.; Jiang, H.; Li, R.; Mao, W.; Zhang, D.; Majeed, Y.; Andriyanov, N.; Soloviev, V.; Fu, L. Morphological Estimation of Primary Branch Length of Individual Apple Trees during the Deciduous Period in Modern Orchard Based on PointNet++. Comput. Electron. Agric. 2024, 220, 108873. [Google Scholar] [CrossRef]

- Jiang, L.; Li, C.; Fu, L. Apple Tree Architectural Trait Phenotyping with Organ-Level Instance Segmentation from Point Cloud. Comput. Electron. Agric. 2025, 229, 109708. [Google Scholar] [CrossRef]

- Luo, L.; Yin, W.; Ning, Z.; Wang, J.; Wei, H.; Chen, W.; Lu, Q. In-Field Pose Estimation of Grape Clusters with Combined Point Cloud Segmentation and Geometric Analysis. Comput. Electron. Agric. 2022, 200, 107197. [Google Scholar] [CrossRef]

- Ma, B.; Du, J.; Wang, L.; Jiang, H.; Zhou, M. Automatic Branch Detection of Jujube Trees Based on 3D Reconstruction for Dormant Pruning Using a Deep Learning-Based Method. Comput. Electron. Agric. 2021, 190, 106484. [Google Scholar] [CrossRef]

- Zhang, J.; Gu, J.; Hu, T.; Wang, B.; Xia, Z. An Image Segmentation and Point Cloud Registration Combined Scheme for Sensing of Obscured Tree Branches. Comput. Electron. Agric. 2024, 221, 108960. [Google Scholar] [CrossRef]

- Zhao, G.; Wang, D. A Multiple Criteria Decision-Making Method Generated by the Space Colonization Algorithms for Automated Pruning Strategies of Trees. AgriEngineering 2024, 6, 539–554. [Google Scholar] [CrossRef]

- Fernandes, M.; Gamba, J.D.; Pelusi, F.; Bratta, A.; Caldwell, D.; Poni, S.; Gatti, M.; Semini, C. Grapevine Winter Pruning: Merging 2D Segmentation and 3D Point Clouds for Pruning Point Generation. Comput. Electron. Agric. 2025, 237, 110589. [Google Scholar] [CrossRef]

- Shang, L.; Yan, F.; Teng, T.; Pan, J.; Zhou, L.; Xia, C.; Li, C.; Shi, M.; Si, C.; Niu, R. Morphological Estimation of Primary Branch Inclination Angles in Jujube Trees Based on Improved PointNet++. Agriculture 2025, 15, 1193. [Google Scholar] [CrossRef]

- Zhu, W.; Bai, X.; Xu, D.; Li, W. Pruning Branch Recognition and Pruning Point Localization for Walnut (Juglans regia L.) Trees Based on Point Cloud Semantic Segmentation. Agriculture 2025, 15, 817. [Google Scholar] [CrossRef]

- Uryasheva, A.; Kalashnikova, A.; Shadrin, D.; Evteeva, K.; Moskovtsev, E.; Rodichenko, N. Computer Vision-Based Platform for Apple Leaf Segmentation in Field Conditions to Support Digital Phenotyping. Comput. Electron. Agric. 2022, 201, 107269. [Google Scholar] [CrossRef]

- Liu, C.; Feng, Q.; Sun, Y.; Li, Y.; Ru, M.; Xu, L. YOLACTFusion: An Instance Segmentation Method for RGB–NIR Multimodal Image Fusion Based on an Attention Mechanism. Comput. Electron. Agric. 2023, 213, 108186. [Google Scholar] [CrossRef]

- Hung, C.; Nieto, J.; Taylor, Z.; Underwood, J.; Sukkarieh, S. Orchard Fruit Segmentation Using Multispectral Feature Learning. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 5314–5320. [Google Scholar] [CrossRef]

- Droukas, L.; Doulgeri, Z.; Tsakiridis, N.L.; Triantafyllou, D.; Kleitsiotis, I.; Mariolis, I.; Giakoumis, D.; Tzovaras, D.; Kateris, D.; Bochtis, D. A Survey of Robotic Harvesting Systems and Enabling Technologies. J. Intell. Robot. Syst. 2023, 107, 21. [Google Scholar] [CrossRef]

- Westling, F.; Bryson, M.; Underwood, J. SimTreeLS: Simulating Aerial and Terrestrial Laser Scans of Trees. Comput. Electron. Agric. 2021, 187, 106277. [Google Scholar] [CrossRef]

- Scarfe, A.J.; Flemmer, R.C.; Bakker, H.H.; Flemmer, C.L. Development of an Autonomous Kiwifruit Picking Robot. In Proceedings of the 4th International Conference on Autonomous Robots and Agents, Wellington, New Zealand, 10–12 February 2009; pp. 380–384. [Google Scholar] [CrossRef]

- Kayad, A.; Sozzi, M.; Paraforos, D.S.; Rodrigues, F.A.; Cohen, Y.; Fountas, S.; Francisco, M.-J.; Pezzuolo, A.; Grigolato, S.; Marinello, F. How Many Gigabytes per Hectare Are Available in the Digital Agriculture Era? A Digitization Footprint Estimation. Comput. Electron. Agric. 2022, 198, 107080. [Google Scholar] [CrossRef]

- Zhu, X.; Zhu, J.; Li, H.; Wu, X.; Li, H.; Wang, X.; Dai, J. Uni-Perceiver: Pre-Training Unified Architecture for Generic Perception for Zero-Shot and Few-Shot Tasks. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 16783–16794. [Google Scholar] [CrossRef]

- Li, J.; Zhu, G.; Hua, C.; Feng, M.; Bennamoun, B.; Li, P.; Lu, X.; Song, J.; Shen, P.; Xu, X.; et al. A Systematic Collection of Medical Image Datasets for Deep Learning. ACM Comput. Surv. 2023, 56, 1–51. [Google Scholar] [CrossRef]

- Lu, Y.; Young, S. A Survey of Public Datasets for Computer Vision Tasks in Precision Agriculture. Comput. Electron. Agric. 2020, 178, 105760. [Google Scholar] [CrossRef]

- Gui, J.; Chen, T.; Zhang, J.; Cao, Q.; Sun, Z.; Luo, H.; Tao, D. A Survey on Self-Supervised Learning: Algorithms, Applications, and Future Trends. arXiv 2023, arXiv:2301.05712v3. [Google Scholar] [CrossRef] [PubMed]

- Yin, S.; Xi, Y.; Zhang, X.; Sun, C.; Mao, Q. Foundation Models in Agriculture: A Comprehensive Review. Agriculture 2025, 15, 847. [Google Scholar] [CrossRef]

- Khan, A.; Rauf, Z.; Sohail, A.; Khan, A.R.; Asif, H.; Asif, A.; Farooq, U. A Survey of the Vision Transformers and Their CNN–Transformer Based Variants. Artif. Intell. Rev. 2023, 56 (Suppl. 3), 2917–2970. [Google Scholar] [CrossRef]

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306. [Google Scholar] [CrossRef]

- Ming, Y.; Meng, X.; Fan, C.; Yu, H. Deep Learning for Monocular Depth Estimation: A Review. Neurocomputing 2021, 438, 14–33. [Google Scholar] [CrossRef]

- Cui, X.-Z.; Feng, Q.; Wang, S.-Z.; Zhang, J.-H. Monocular Depth Estimation with Self-Supervised Learning for Vineyard Unmanned Agricultural Vehicle. Sensors 2022, 22, 721. [Google Scholar] [CrossRef]

- Yang, J.; Guo, X.; Li, Y.; Marinello, F.; Ercisli, S.; Zhang, Z. A Survey of Few-Shot Learning in Smart Agriculture: Developments, Applications, and Challenges. Plant Methods 2022, 18, 28. [Google Scholar] [CrossRef]

- Guldenring, R.; Nalpantidis, L. Self-Supervised Contrastive Learning on Agricultural Images. Comput. Electron. Agric. 2021, 191, 106510. [Google Scholar] [CrossRef]

- Kirillov, A.; He, K.; Girshick, R.; Rother, C.; Dollár, P. Panoptic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar] [CrossRef]

- Fu, L.; Gao, F.; Wu, J.; Li, R.; Karkee, M.; Zhang, Q. Application of Consumer RGB-D Cameras for Fruit Detection and Localization in Field: A Critical Review. Comput. Electron. Agric. 2020, 177, 105687. [Google Scholar] [CrossRef]

- Szeliski, R. Computer Vision: Algorithms and Applications, 2nd ed.; Springer: Cham, Switzerland, 2022. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 26th International Conference on Neural Information Processing Systems (NeurIPS), Lake Tahoe, NV, USA, 3–8 December 2012. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3523–3542.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2015, arXiv:1506.01497v3.

- Terven, J.; Cordova-Esparza, D. A Comprehensive Review of YOLO: From YOLOv1 to YOLOv8 and Beyond. arXiv 2023, arXiv:2304.00501.

- Jocher, G.; Ultralytics Team. YOLO by Ultralytics. Available online: https://github.com/ultralytics/ultralytics (accessed on 12 October 2025).

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-Time Instance Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9156–9165.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S. Transformers in Vision: A Survey. ACM Comput. Surv. 2022, 54, 200.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. arXiv 2021, arXiv:2111.09883.

- Zhang, M.; Xu, S.; Han, Y.; Li, D.; Yang, S.; Huang, Y. High-Throughput Horticultural Phenomics: The History, Recent Advances and New Prospects. Comput. Electron. Agric. 2023, 213, 108265.

- Huang, Y.; Ren, Z.; Li, D.; Liu, X. Phenotypic Techniques and Applications in Fruit Trees: A Review. Plant Methods 2020, 16, 107.

- Zhang, Z.; Igathinathane, C.; Li, J.; Cen, H.; Lu, Y.; Flores, P. Technology Progress in Mechanical Harvest of Fresh Market Apples. Comput. Electron. Agric. 2020, 175, 105606.

- Mail, M.F.; Maja, J.M.; Marshall, M.; Cutulle, M.; Miller, G.; Barnes, E. Agricultural Harvesting Robot Concept Design and System Components: A Review. AgriEngineering 2023, 5, 777–800.

- Yang, Y.; Han, Y.; Li, S.; Yang, Y.; Zhang, M.; Li, H. Vision-Based Fruit Recognition and Positioning Technology for Harvesting Robots. Comput. Electron. Agric. 2023, 213, 108258.

- Meshram, A.T.; Vanalkar, A.V.; Kalambe, K.B.; Badar, A.M. Pesticide Spraying Robot for Precision Agriculture: A Categorical Literature Review and Future Trends. J. Field Robot. 2022, 39, 153–171.

- Dange, K.M.; Bodile, R.M.; Varma, B.S. A Comprehensive Review on Agriculture-Based Pesticide Spraying Robot. In Proceedings of the International Conference on Sustainable and Innovative Solutions for Current Challenges in Engineering and Technology, Gwalior, India, 20–21 October 2023.

- Zahid, A.; Mahmud, M.S.; He, L.; Heinemann, P.; Choi, D.; Schupp, J. Technological Advancements towards Developing a Robotic Pruner for Apple Trees: A Review. Comput. Electron. Agric. 2021, 189, 106383.

- He, L.; Schupp, J. Sensing and Automation in Pruning of Apple Trees: A Review. Agronomy 2018, 8, 211.

- Zeng, H.; Yang, J.; Yang, N.; Huang, J.; Long, H.; Chen, Y. A Review of the Research Progress of Pruning Robots. In Proceedings of the IEEE International Conference on Data Science and Computer Application (ICDSCA), Dalian, China, 28–30 October 2022; pp. 1069–1073.

- Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep Learning—Method Overview and Review of Use for Fruit Detection and Yield Estimation. Comput. Electron. Agric. 2019, 162, 219–234.

- Maheswari, P.; Raja, P.; Apolo-Apolo, O.E.; Pérez-Ruiz, M. Intelligent Fruit Yield Estimation for Orchards Using Deep Learning-Based Semantic Segmentation Techniques—A Review. Front. Plant Sci. 2021, 12, 684328.

- Farjon, G.; Huijun, L.; Edan, Y. Deep Learning-Based Counting Methods, Datasets, and Applications in Agriculture: A Review. Precis. Agric. 2023, 24, 1683–1711.

- Villacrés, J.; Viscaino, M.; Delpiano, J.; Vougioukas, S.; Auat Cheein, F. Apple Orchard Production Estimation Using Deep Learning Strategies: A Comparison of Tracking-by-Detection Algorithms. Comput. Electron. Agric. 2023, 204, 107513.

- Li, X.; Qiu, Q. Autonomous Navigation for Orchard Mobile Robots: A Rough Review. In Proceedings of the Youth Academic Annual Conference of Chinese Association of Automation (YAC), Nanchang, China, 28–30 May 2021; pp. 552–557.

- Wang, T.; Chen, B.; Zhang, Z.; Li, H.; Zhang, M. Applications of Machine Vision in Agricultural Robot Navigation: A Review. Comput. Electron. Agric. 2022, 198, 107085.

- Lei, X.; Yuan, Q.; Xyu, T.; Qi, Y.; Zeng, J.; Huang, K.; Sun, Y.; Herbst, A.; Lyu, X. Technologies and Equipment of Mechanized Blossom Thinning in Orchards: A Review. Agronomy 2023, 13, 2753.

- Gené-Mola, J.; Sanz-Cortiella, R.; Rosell-Polo, J.R.; Morros, J.R.; Ruiz-Hidalgo, J.; Vilaplana, V.; Gregorio, E. Fuji-SfM Dataset: A Collection of Annotated Images and Point Clouds for Fuji Apple Detection and Location Using Structure-from-Motion Photogrammetry. Data Brief 2020, 30, 105591.

- Dias, P.A.; Medeiros, H. Semantic Segmentation Refinement by Monte Carlo Region Growing of High Confidence Detections. arXiv 2018, arXiv:1802.07789.

- Westling, F. Avocado Tree Point Clouds Before and After Pruning; Mendeley Data: Amsterdam, The Netherlands, 2021; Version 1.

- Yang, T.; Zhou, S.; Huang, Z.; Xu, A.; Ye, J.; Yin, J. Urban Street Tree Dataset for Image Classification and Instance Segmentation. Comput. Electron. Agric. 2023, 209, 107852.

| Image Type | Agricultural Task | Relevant Papers (Year, Fruit Type) |
|---|---|---|
| RGB | Phenotyping | [17] (2017, apple), [18] (2018, apple), [19] (2002, grape), [20] (2025, peach) |
| | Harvesting | [21] (2016, apple), [22] (2017, apple), [23] (2017, apple), [24] (2018, tomato), [25] (2011, litchi), [26] (2019, litchi), [27] (2018, litchi), [28] (2018, litchi), [29] (1993, citrus), [30] (2014, citrus), [31] (2018, citrus), [32] (2016, cherry), [33] (2017, cherry), [34] (2023, palm), [35] (2012, Chinese hickory), [36] (2014, Chinese hickory) |
| | Spraying | [37] (2010, apple), [38] (2010, grape), [39] (2019, olive), [40] (2021, cherry) |
| | Pruning | [41] (1997, grape), [42] (2006, grape) |
| | Yield estimation | [43] (2018, apple), [44] (2024, apple) |
| | Navigation | [45] (2016, palm) |
| | Thinning | [46] (2022, apple) |
| RGB-D | Phenotyping | [47] (2018, pear) |
| | Harvesting | [48] (2020, guava, pepper, eggplant) |
| | Spraying | [49] (2017, grape, peach, apricot), [50] (2018, grape), [51] (2020, peach) |
| | Navigation | [52] (2022, pear) |
| Point cloud | Phenotyping | [53] (2009, apple, pear, grape), [54] (2009, apple, pear, grape, citrus), [55] (2014, apple, pear, grape), [56] (2015, apple, grape), [57] (2016, apple), [58] (2020, apple), [59] (2015, grape), [60] (2015, grape), [61] (2017, grape), [62] (2023, tomato), [63] (2023, tomato), [64] (2015, pear), [65] (2012, peach), [66] (2015, almond), [67] (2015, apple), [68] (2016, almond), [69] (2021, mango, avocado), [70] (2025, apple) |
| | Harvesting | [71] (2017, apple) |
| | Spraying | [72] (2021, apple), [73] (2025, apple) |
| | Pruning | [74] (2014, apple), [75] (2015, apple), [76] (2020, apple), [77] (2022, cherry), [78] (2022, cherry), [79] (2023, jujube), [80] (2021, mango, avocado), [81] (2025, apple, cherry), [82] (2025, cherry) |
| | Yield estimation | [83] (2021, apple), [84] (2012, grape) |
| | Navigation | [85] (2013, Not Available) |
| | Thinning | [86] (2012, peach) |
| Others | Phenotyping | [87] (2012, apple, yucca) |
| | Harvesting | [88] (2022, grape), [89] (2011, citrus), [90] (2008, cherry), [91] (2013, pepper), [92] (2014, pepper), [93] (2018, olive) |
| | Pruning | [94] (2016, apple), [95] (2016, apple), [96] (2017, apple), [97] (2013, grape), [98] (2017, grape), [99] (2016, grape) |

| Image Type | Agricultural Task | Relevant Papers (Year, Fruit Type) |
|---|---|---|
| RGB | Phenotyping | [100] (2020, apple), [101] (2022, apple), [102] (2022, apple), [103] (2023, apple), [15] (2018, apple, peach, pear), [104] (2022, apple, peach, pear), [105] (2023, citrus), [106] (2024, apple), [107] (2024, cherry), [108] (2024, apple), [109] (2025, apple), [110] (2025, tomato), [111] (2025, apple), [112] (2025, apple) |
| | Harvesting | [113] (2019, apple), [114] (2020, apple), [11] (2023, apple), [115] (2023, apple), [116] (2023, apple), [117] (2020, grape), [118] (2021, grape), [119] (2022, jujube), [120] (2023, grape), [121] (2023, grape), [122] (2022, tomato), [123] (2022, tomato), [124] (2023, tomato), [125] (2023, tomato), [126] (2020, litchi), [127] (2021, litchi, passion fruit, citrus, guava, jujube), [128] (2021, litchi), [129] (2023, litchi), [130] (2022, guava), [131] (2023, Sichuan pepper), [132] (2023, jujube), [133] (2019, kiwi), [134] (2020, kiwi), [135] (2021, kiwi), [14] (2021, mango), [136] (2022, banana), [137] (2022, pomegranate), [138] (2022, longan), [139] (2024, grape), [140] (2024, tomato), [141] (2024, mango), [142] (2024, mango), [143] (2024, mango), [144] (2024, apple), [145] (2024, grape), [146] (2024, litchi), [147] (2025, kiwi), [148] (2025, grape), [149] (2025, grape), [150] (2025, grape) |
| | Spraying | [151] (2020, pear), [152] (2022, pear) |
| | Pruning | [153] (2022, apple), [154] (2023, apple), [155] (2023, grape), [156] (2023, grape), [12] (2023, cherry), [157] (2025, tomato) |
| | Yield estimation | [158] (2020, apple), [159] (2022, apple), [160] (2023, apple), [8] (2023, apple), [161] (2022, grape) |
| | Navigation | [162] (2023, pear, peach), [163] (2025, grape) |
| | Thinning | [13] (2023, apple), [164] (2020, grape), [165] (2020, grape), [166] (2022, banana), [167] (2025, grape), [168] (2025, apple) |
| RGB-D | Phenotyping | [169] (2020, apple), [170] (2019, grape), [171] (2019, cherry), [172] (2018, Capsicum annuum (pepper)), [173] (2019, Capsicum annuum (pepper)), [174] (2020, Capsicum annuum (pepper)), [175] (2024, apple), [176] (2024, tomato) |
| | Harvesting | [177] (2018, apple), [178] (2019, apple), [179] (2020, apple), [180] (2020, apple), [181] (2022, apple), [182] (2023, grape), [183] (2022, cherry tomato), [184] (2022, tomato), [185] (2023, tomato), [186] (2020, litchi), [187] (2020, citrus), [188] (2019, guava), [189] (2021, guava), [190] (2021, guava), [191] (2022, pomegranate), [192] (2024, tomato), [193] (2024, tomato), [194] (2025, tomato) |
| | Spraying | [195] (2023, citrus), [196] (2025, apple) |
| | Pruning | [197] (2021, apple), [198] (2025, apple) |
| | Training | [199] (2020, apple) |
| | Navigation | [200] (2024, apple), [201] (2024, peach) |
| | Thinning | [202] (2025, apple) |
| Point cloud | Phenotyping | [203] (2023, apple), [204] (2021, tomato, maize), [205] (2024, apple), [206] (2024, apple), [207] (2025, apple) |
| | Harvesting | [208] (2022, grape) |
| | Pruning | [209] (2021, jujube), [210] (2024, apple), [211] (2024, cherry), [212] (2025, grape), [213] (2025, jujube), [214] (2025, walnut) |
| Others | Phenotyping | [215] (2022, apple), [216] (2023, tomato) |
| | Yield estimation | [217] (2013, almond) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oh, I.-S.; Lee, J.-S. A Crawling Review of Fruit Tree Image Segmentation. Agriculture 2025, 15, 2239. https://doi.org/10.3390/agriculture15212239
