Author Contributions

Writing—review and editing, writing—original draft preparation, software, visualization, methodology, investigation, formal analysis, data curation, and validation, C.-E.C.; writing—review and editing, data curation, visualization, validation, supervision, resources, project administration, methodology, funding acquisition, formal analysis, and conceptualization, W.-Z.L.; writing—review and editing, supervision, data curation, and funding acquisition, J.C.; writing—review and editing, supervision, data curation, and funding acquisition, X.Q.; funding acquisition and supervision, V.T.; data curation, visualization, methodology, and software, Z.Y.; visualization and software, J.O. All authors have read and agreed to the published version of the manuscript.

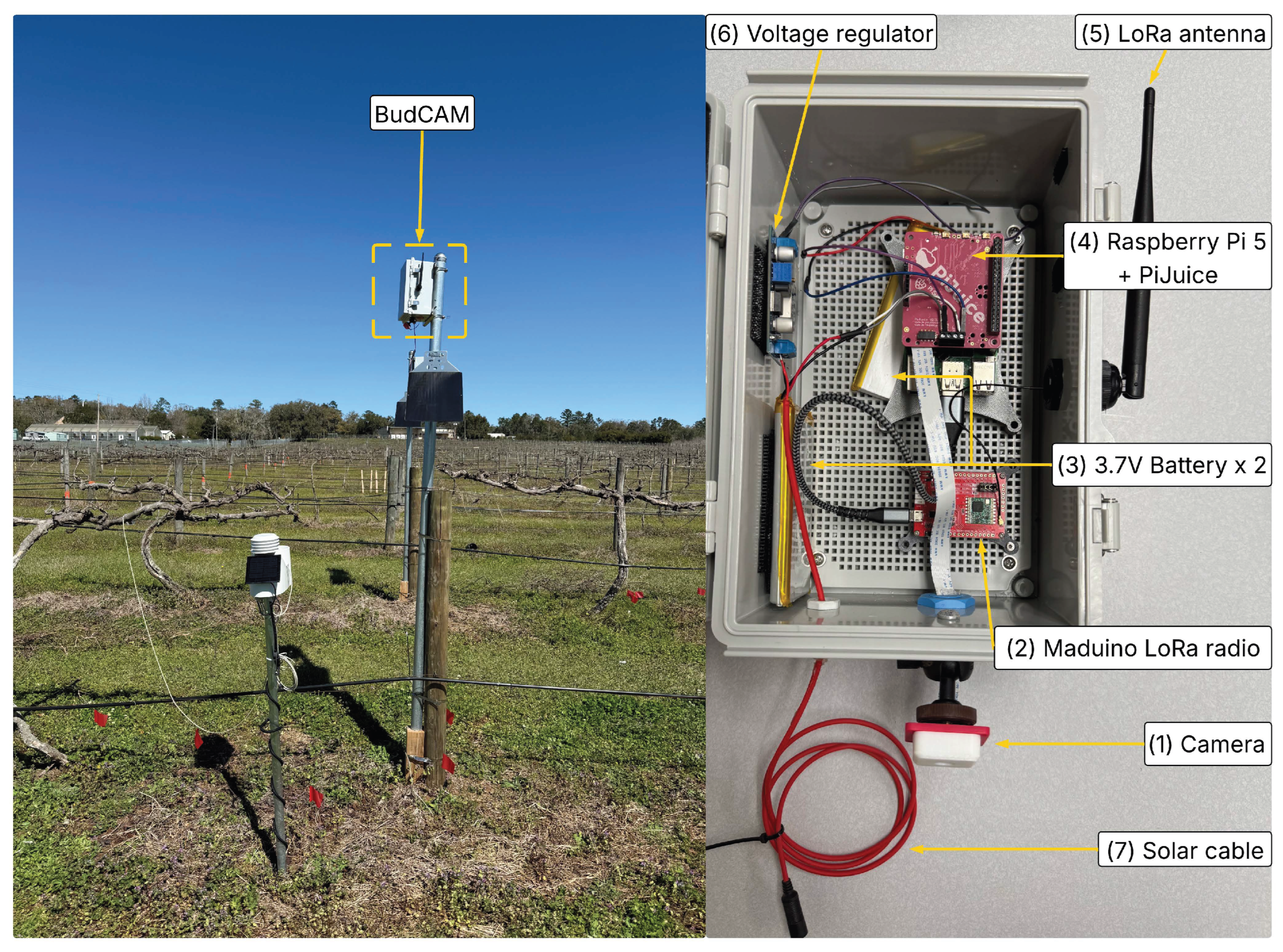

Figure 1.

(Left): Illustration of BudCAM deployed in the research vineyard at the Florida A&M University Center for Viticulture and Small Fruit Research. (Right): Components of BudCAM: (1) ArduCAM 16 MP autofocus camera, (2) Maduino LoRa radio board, (3) two 3.7 V lithium batteries, (4) Raspberry Pi 5 and PiJuice power management board, (5) LoRa antenna, (6) voltage regulator, and (7) solar cable.

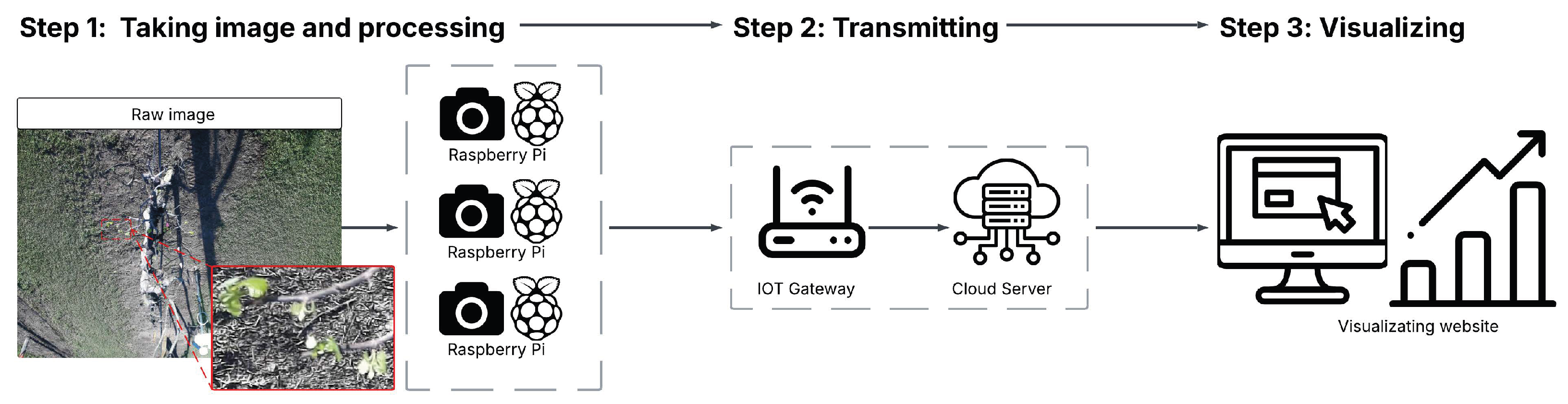

Figure 2.

Overview of the three-phase processing framework developed for BudCAM. The workflow consists of image acquisition, on-device processing, and transmission of bud counts to a cloud platform. A rectangular callout highlights a sample region of the grapevine canopy, with an inset showing a zoomed-in view of the buds within that region.
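Because only the bud count (not the image) leaves the device, the transmission phase can fit into a very small message, which suits a low-bandwidth LoRa link. The sketch below shows one way such a message could be packed, assuming a hypothetical 7-byte layout (unit index, capture time, bud count). The layout and the names `pack_bud_report` and `unpack_bud_report` are illustrative assumptions, not BudCAM's actual message format.

```python
import struct
import time

# Hypothetical payload layout (an assumption, not BudCAM's documented format):
#   node_id   : uint8   index of the BudCAM unit
#   timestamp : uint32  Unix time (seconds) of image capture
#   bud_count : uint16  number of buds detected on the device
# "!" = network (big-endian) byte order with no padding, so 1 + 4 + 2 = 7 bytes.
PAYLOAD_FORMAT = "!BIH"


def pack_bud_report(node_id: int, timestamp: int, bud_count: int) -> bytes:
    """Serialize one on-device result into a compact binary payload."""
    return struct.pack(PAYLOAD_FORMAT, node_id, timestamp, bud_count)


def unpack_bud_report(payload: bytes) -> dict:
    """Inverse of pack_bud_report, e.g. on the gateway or cloud side."""
    node_id, timestamp, bud_count = struct.unpack(PAYLOAD_FORMAT, payload)
    return {"node_id": node_id, "timestamp": timestamp, "bud_count": bud_count}


if __name__ == "__main__":
    msg = pack_bud_report(node_id=3, timestamp=int(time.time()), bud_count=57)
    print(len(msg), unpack_bud_report(msg))
```

A fixed binary layout keeps each report far below typical LoRa payload limits; a text format such as JSON would also work but costs several times more airtime.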

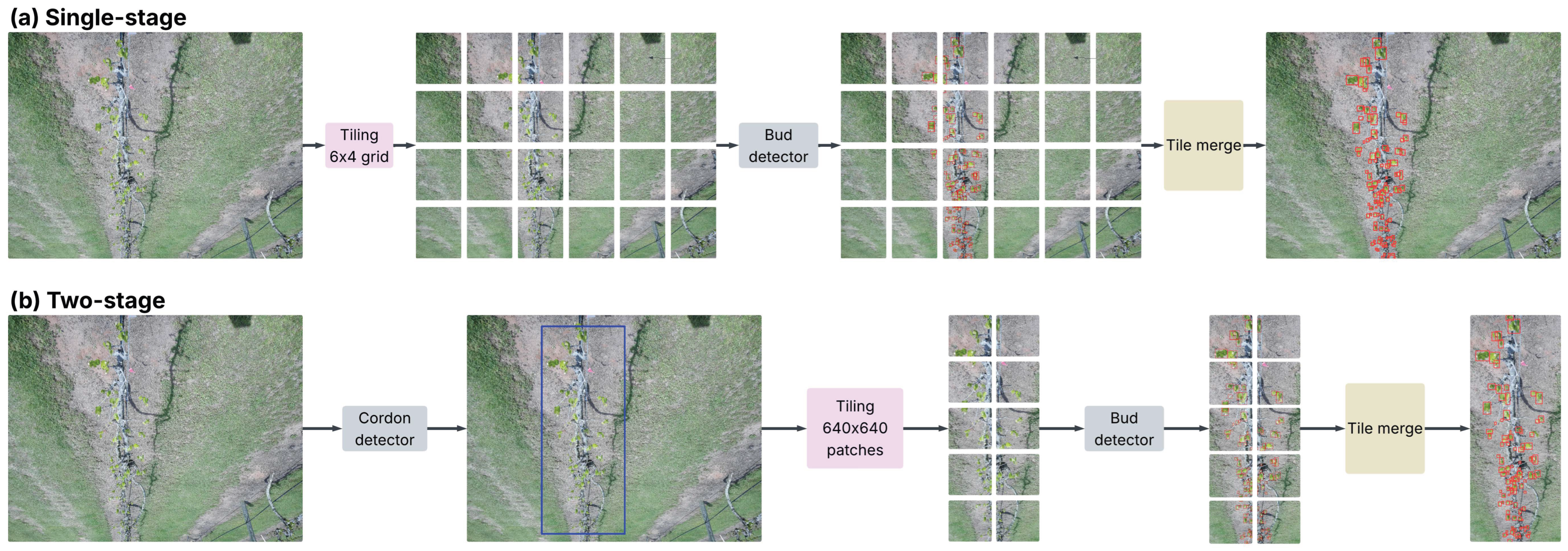

Figure 3.

Overview of the two detection pipelines. (a) Single-stage bud detector: the raw image is divided into a 6 × 4 grid (tiles of 776 × 864 pixels), and each tile is processed by a YOLOv11 bud detector. Tile-level detections are mapped back to the full image and merged to obtain the final bud count. (b) Two-stage pipeline: a YOLOv11 cordon detector first localizes the grapevine cordon, which is then cropped and partitioned into 640 × 640-pixel patches. These patches are processed by a second YOLOv11 model, and the results are merged. In all panels, detected buds are shown in red and the detected cordon is shown in blue.
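The single-stage path in panel (a) can be sketched as follows. This is a minimal NumPy illustration, not the authors' code: it assumes a 4656 × 3456 frame (the size implied by 6 × 776 by 4 × 864 pixels), assumes the detector returns tile-local `[x1, y1, x2, y2]` boxes with confidence scores, and uses greedy non-maximum suppression (NMS) as the merge step, which the caption does not specify. All function names are hypothetical.

```python
import numpy as np


def grid_tiles(width, height, cols=6, rows=4):
    """Return (x0, y0, x1, y1) boxes of a cols x rows grid covering the image.
    Leftover pixels (if the size is not divisible) go to the last column/row."""
    tw, th = width // cols, height // rows
    tiles = []
    for r in range(rows):
        for c in range(cols):
            x0, y0 = c * tw, r * th
            x1 = width if c == cols - 1 else x0 + tw
            y1 = height if r == rows - 1 else y0 + th
            tiles.append((x0, y0, x1, y1))
    return tiles


def to_global(boxes, x0, y0):
    """Shift tile-local [x1, y1, x2, y2] boxes by the tile's top-left corner."""
    out = np.asarray(boxes, dtype=float).reshape(-1, 4).copy()
    out[:, [0, 2]] += x0
    out[:, [1, 3]] += y0
    return out


def iou_one_to_many(box, boxes):
    """IoU between one box and an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def merge_tile_detections(per_tile, iou_thr=0.5):
    """per_tile: list of ((x0, y0), local_boxes, scores), one entry per tile.
    Returns full-image boxes and scores after greedy NMS."""
    boxes, scores = [], []
    for (x0, y0), b, s in per_tile:
        if len(s):
            boxes.append(to_global(b, x0, y0))
            scores.append(np.asarray(s, dtype=float))
    if not boxes:
        return np.zeros((0, 4)), np.zeros(0)
    boxes, scores = np.concatenate(boxes), np.concatenate(scores)
    order = np.argsort(scores)[::-1]  # highest confidence first
    keep = []
    while order.size:
        i = order[0]
        keep.append(i)
        rest = order[1:]
        # Drop remaining boxes that overlap the kept box too strongly.
        order = rest[iou_one_to_many(boxes[i], boxes[rest]) < iou_thr]
    return boxes[keep], scores[keep]
```

The per-image bud count would then be the number of surviving boxes. Because the grid tiles do not overlap, a bud cut by a tile boundary can still appear as two partial boxes that NMS does not merge, which is a common reason to use overlapping slices instead.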

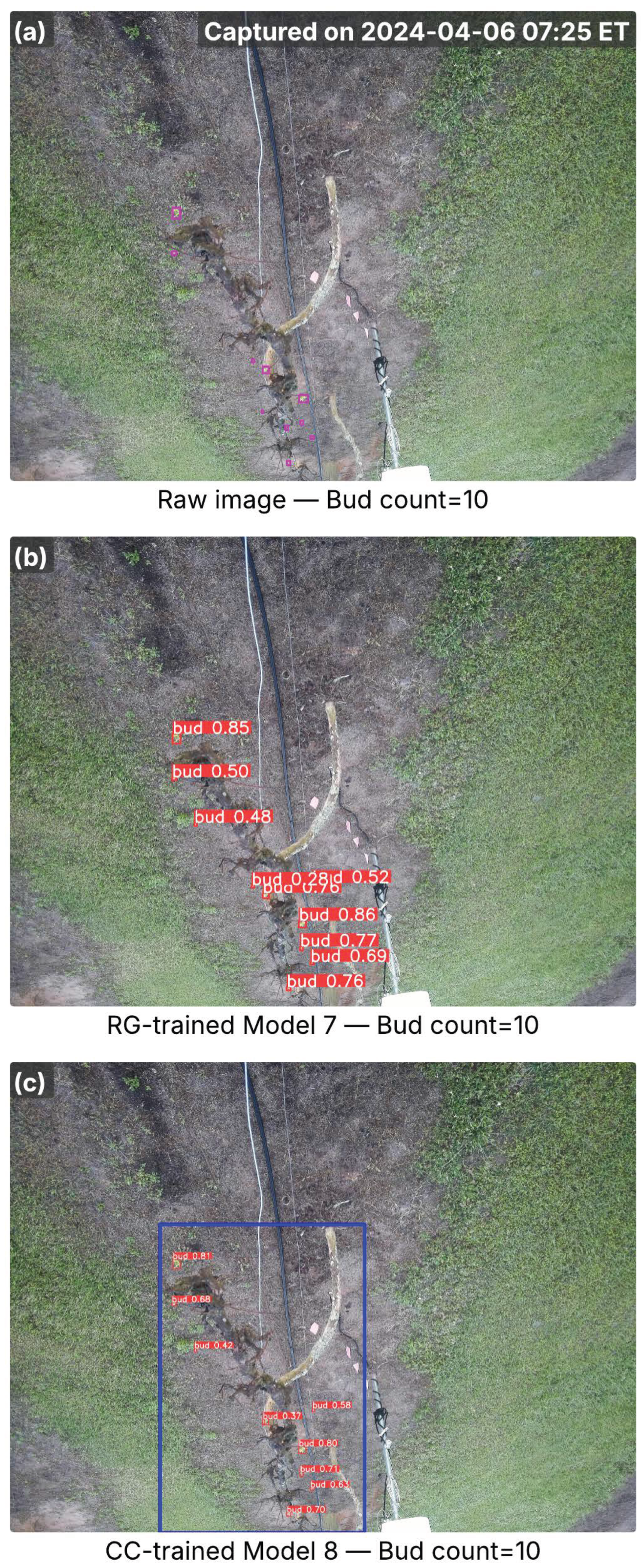

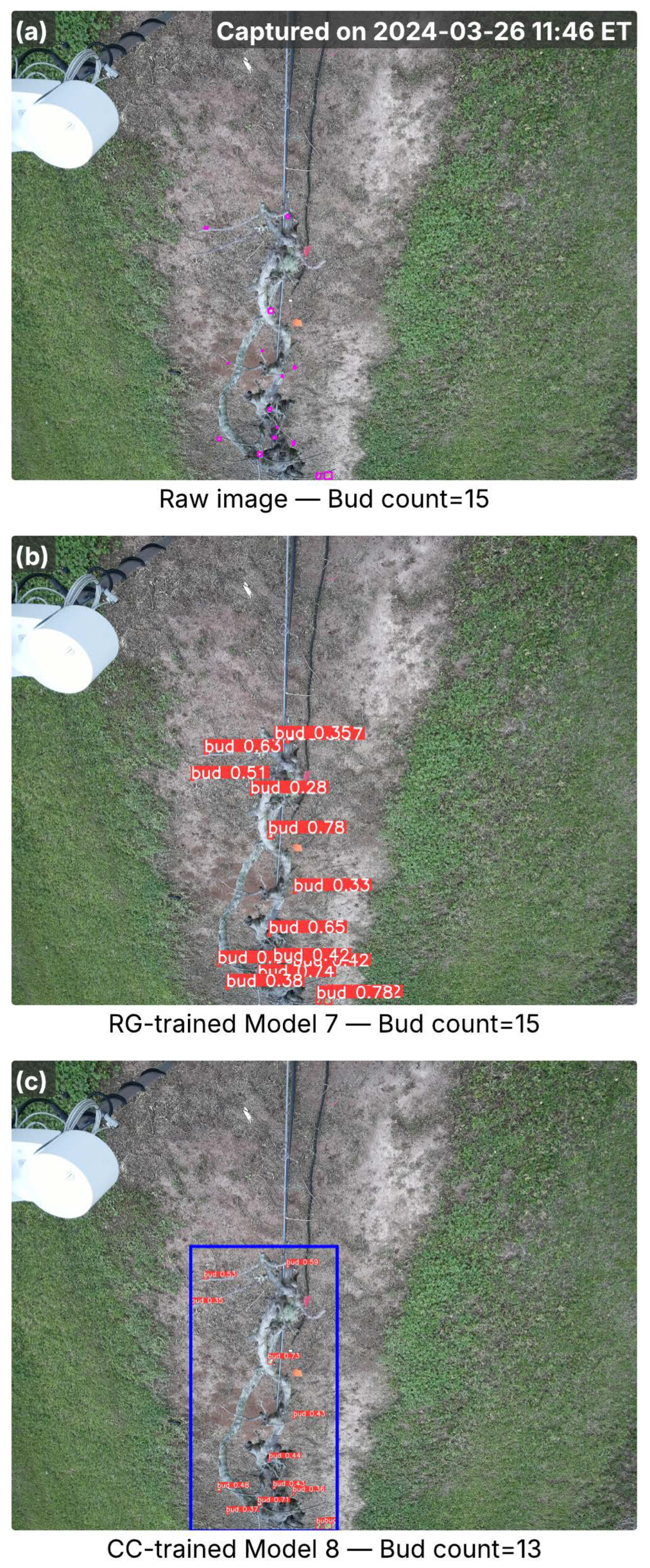

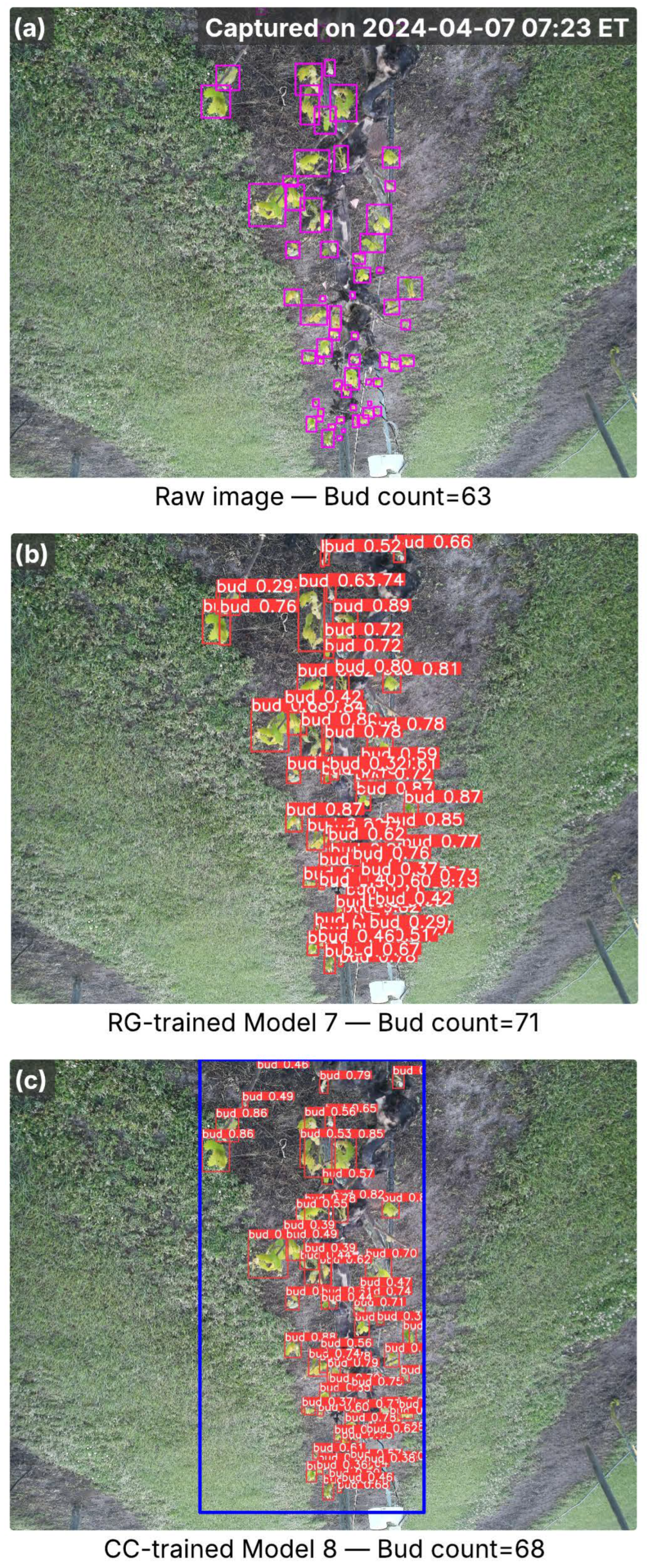

Figure 4.

Images from the A27 cultivar captured in the early bud break season, showing inference results from RG-trained Model 7 and CC-trained Model 8. (a) Raw image with ground-truth buds (pink bounding boxes) and total bud counts; the capture time is marked in the top-right corner. (b) Detection results from Model 7, with red bounding boxes indicating detected buds and associated confidence scores. (c) Detection results from Model 8, where the blue bounding box marks the cordon region identified by the cordon detector, and red bounding boxes denote detected buds with confidence scores. Bud counts in (b,c) represent the total detections per image.

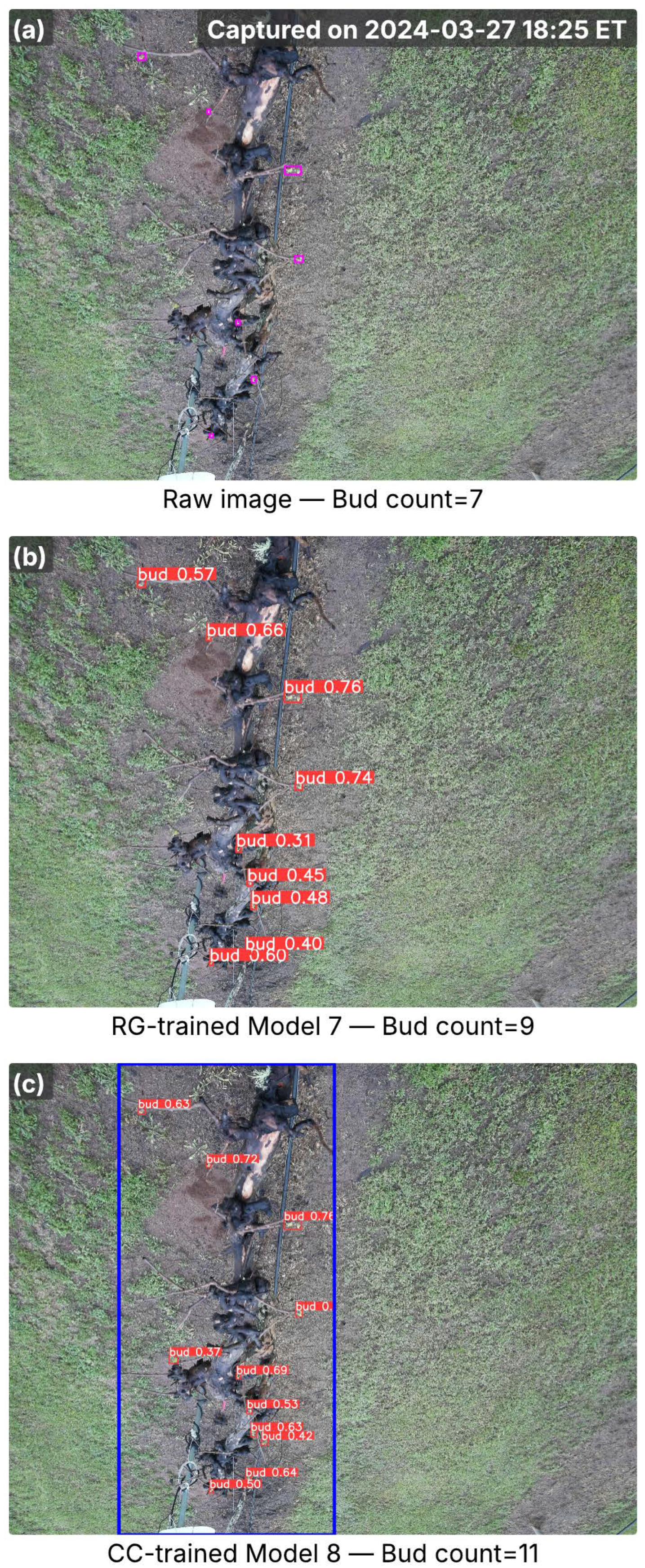

Figure 5.

Images from the Floriana cultivar captured in the early bud break season, showing inference results from RG-trained Model 7 and CC-trained Model 8. (a) Raw image with ground-truth buds (pink bounding boxes) and total bud counts; the capture time is marked in the top-right corner. (b) Detection results from Model 7, with red bounding boxes indicating detected buds and associated confidence scores. (c) Detection results from Model 8, where the blue bounding box marks the cordon region identified by the cordon detector, and red bounding boxes denote detected buds with confidence scores. Bud counts in (b,c) represent the total detections per image.

Figure 6.

Images from the Noble cultivar captured in the early bud break season, showing inference results from RG-trained Model 7 and CC-trained Model 8. (a) Raw image with ground-truth buds (pink bounding boxes) and total bud counts; the capture time is marked in the top-right corner. (b) Detection results from Model 7, with red bounding boxes indicating detected buds and associated confidence scores. (c) Detection results from Model 8, where the blue bounding box marks the cordon region identified by the cordon detector, and red bounding boxes denote detected buds with confidence scores. Bud counts in (b,c) represent the total detections per image.

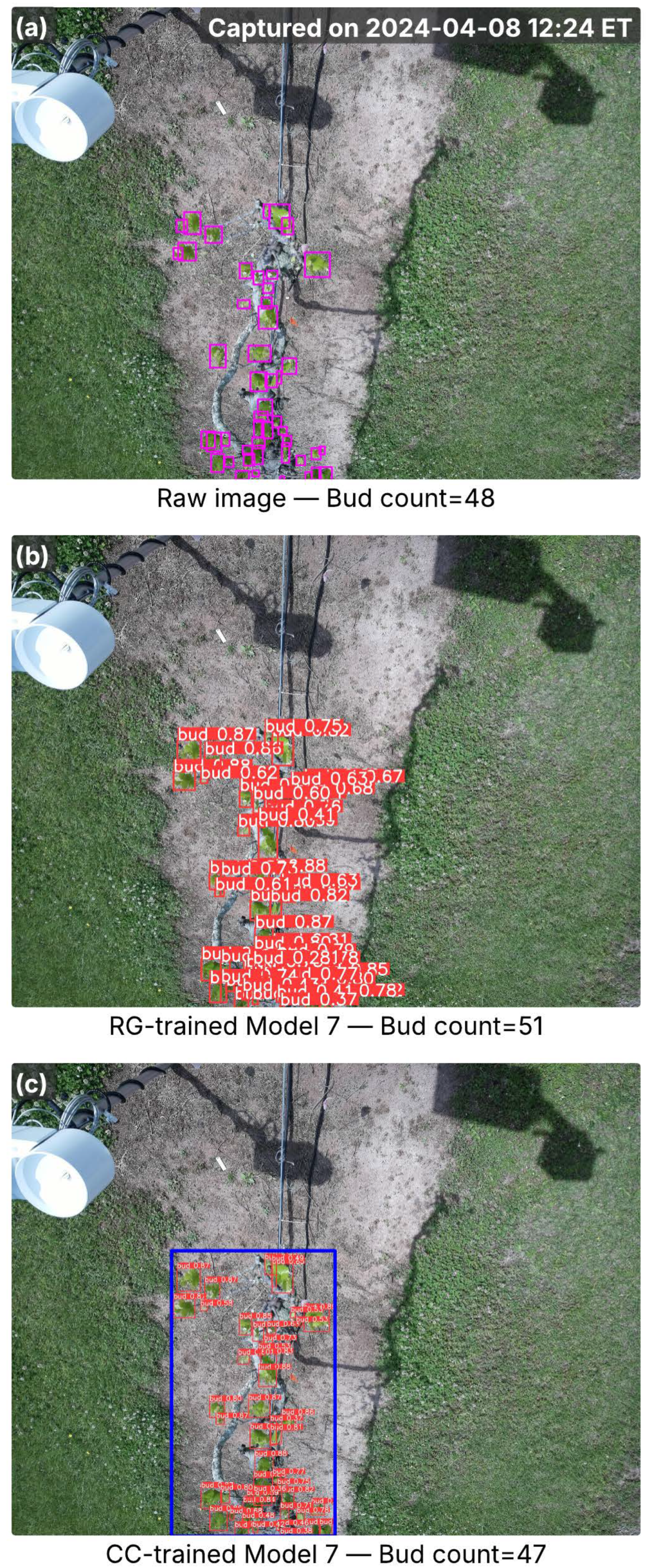

Figure 7.

Images from the A27 cultivar captured in the full bud break season, showing inference results from RG-trained Model 7 and CC-trained Model 8. (a) Raw image with ground-truth buds (pink bounding boxes) and total bud counts; the capture time is marked in the top-right corner. (b) Detection results from Model 7, with red bounding boxes indicating detected buds and associated confidence scores. (c) Detection results from Model 8, where the blue bounding box marks the cordon region identified by the cordon detector, and red bounding boxes denote detected buds with confidence scores. Bud counts in (b,c) represent the total detections per image.

Figure 8.

Images from the Floriana cultivar captured in the full bud break season, showing inference results from RG-trained Model 7 and CC-trained Model 8. (a) Raw image with ground-truth buds (pink bounding boxes) and total bud counts; the capture time is marked in the top-right corner. (b) Detection results from Model 7, with red bounding boxes indicating detected buds and associated confidence scores. (c) Detection results from Model 8, where the blue bounding box marks the cordon region identified by the cordon detector, and red bounding boxes denote detected buds with confidence scores. Bud counts in (b,c) represent the total detections per image.

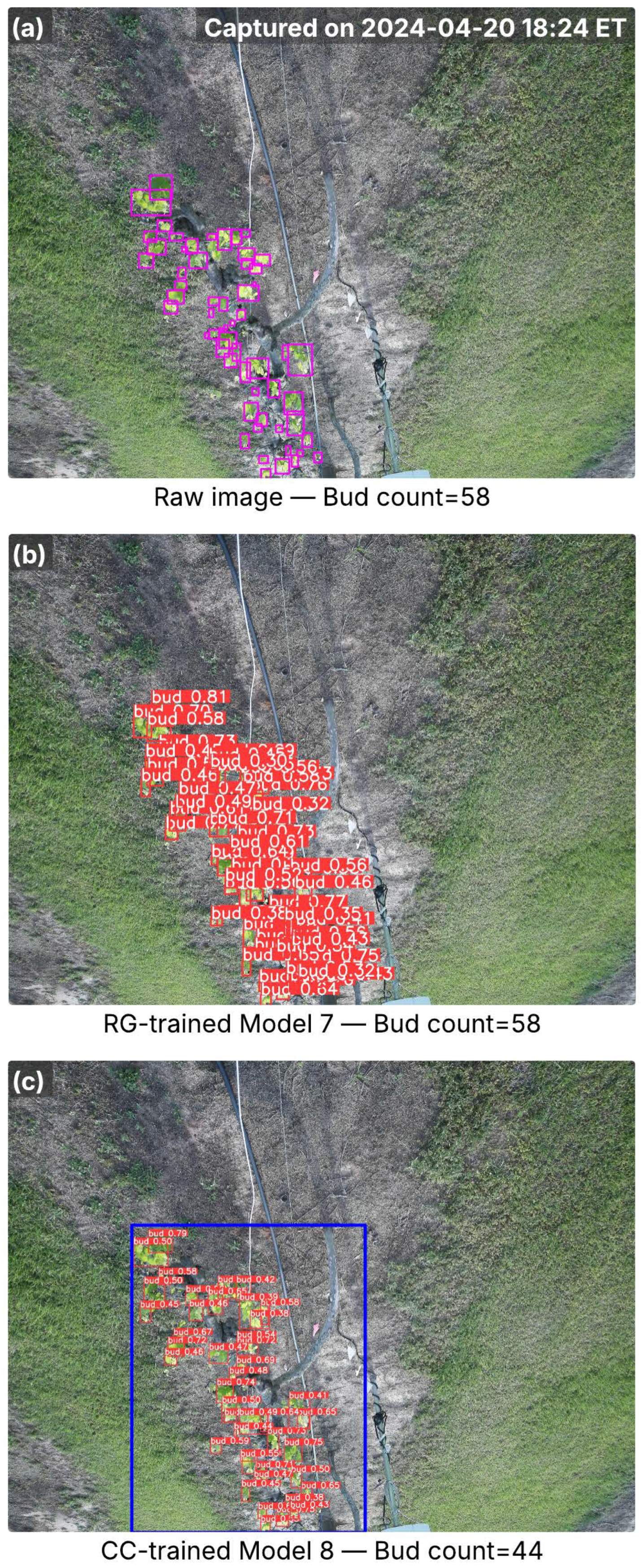

Figure 9.

Images from the Noble cultivar captured in the full bud break season, showing inference results from RG-trained Model 7 and CC-trained Model 8. (a) Raw image with ground-truth buds (pink bounding boxes) and total bud counts; the capture time is marked in the top-right corner. (b) Detection results from Model 7, with red bounding boxes indicating detected buds and associated confidence scores. (c) Detection results from Model 8, where the blue bounding box marks the cordon region identified by the cordon detector, and red bounding boxes denote detected buds with confidence scores. Bud counts in (b,c) represent the total detections per image.

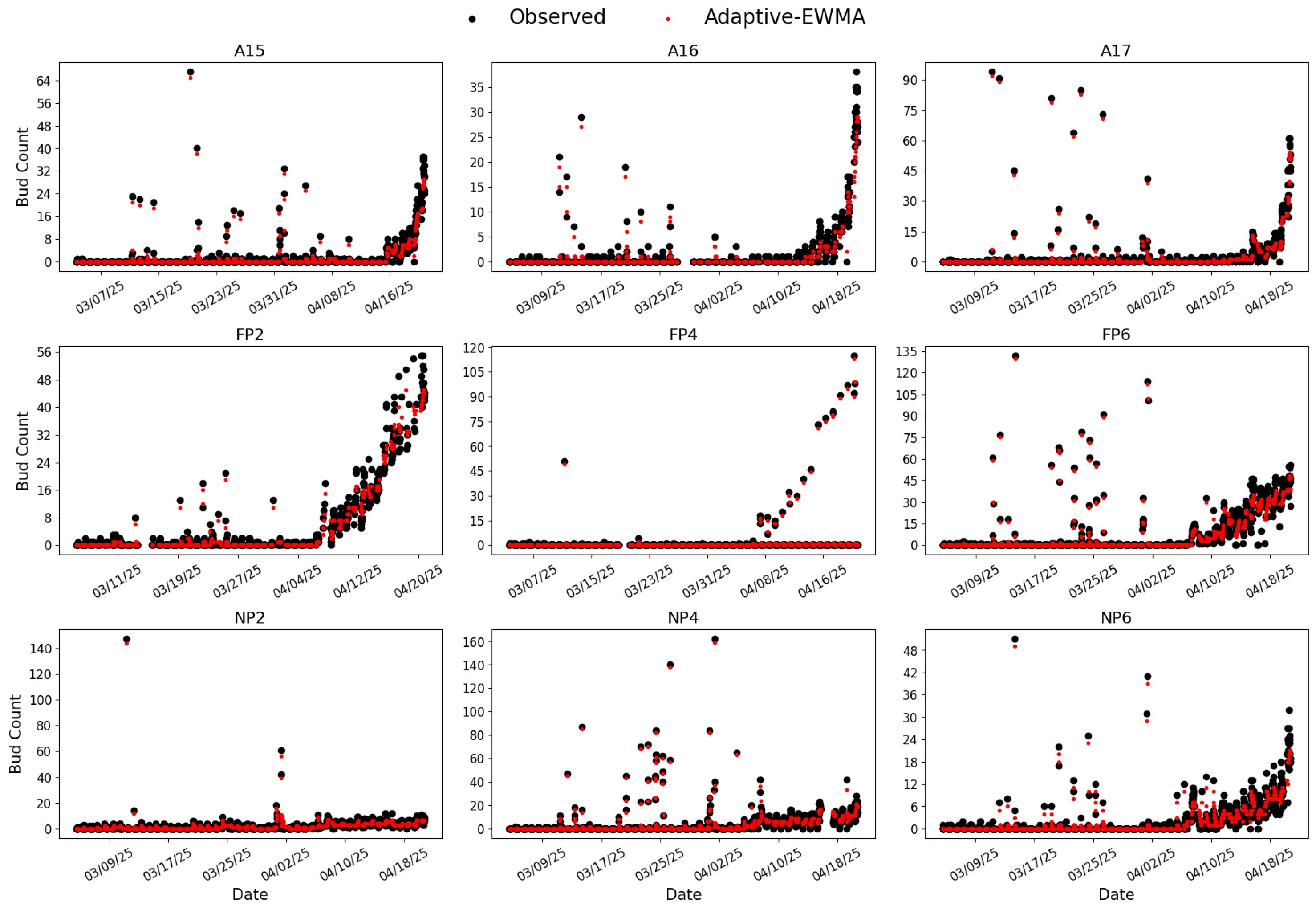

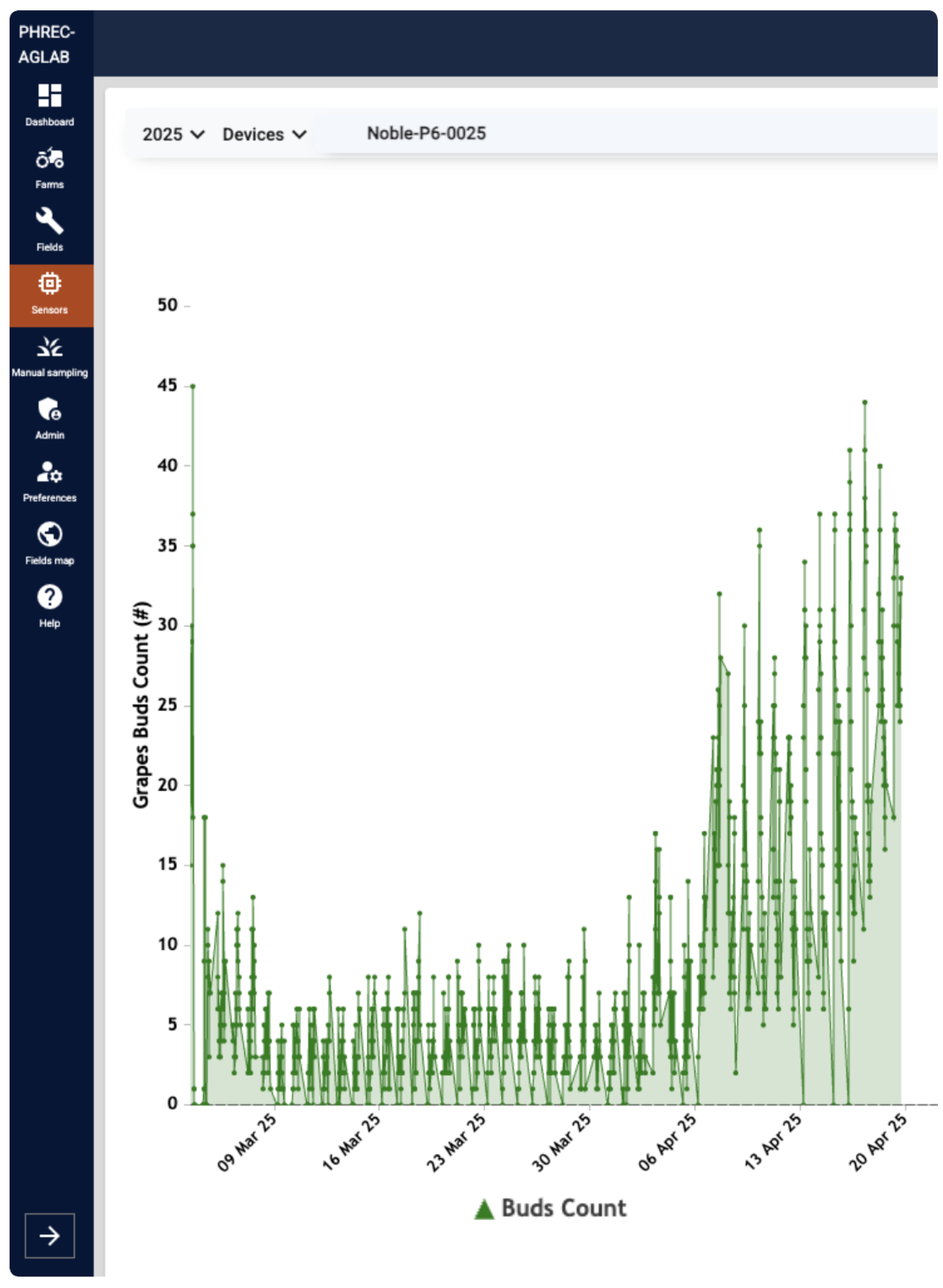

Figure 10.

Inference results from RG-trained Model 7 applied to bud season images collected between 3 March and 20 April 2025, across nine BudCAM units deployed in the vineyard. Black dots represent the raw bud counts and red dots represent the smoothed values obtained by the adaptive Exponentially Weighted Moving Average (adaptive-EWMA) filter.
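The caption names an adaptive EWMA filter but does not give its adaptation rule. The sketch below shows the standard EWMA update `s_t = alpha_t * x_t + (1 - alpha_t) * s_{t-1}` combined with one hypothetical way to adapt `alpha_t`: the weight shrinks when a new count deviates sharply from the running estimate (for example, a fogged frame that yields almost no detections). The exponential rule and the parameters `alpha_min`, `alpha_max`, and `tau` are illustrative assumptions, not the authors' settings.

```python
import math


def adaptive_ewma(values, alpha_min=0.05, alpha_max=0.5, tau=10.0):
    """Smooth a sequence of bud counts with a residual-dependent EWMA.

    Hypothetical adaptation rule (an assumption):
        alpha_t = alpha_min + (alpha_max - alpha_min) * exp(-|x_t - s_{t-1}| / tau)
    Small residuals give alpha near alpha_max (track the trend quickly);
    large residuals give alpha near alpha_min (damp sudden outliers).
    """
    smoothed = []
    s = None
    for x in values:
        if s is None:
            s = float(x)  # initialize with the first observation
        else:
            residual = abs(x - s)
            alpha = alpha_min + (alpha_max - alpha_min) * math.exp(-residual / tau)
            s = alpha * x + (1.0 - alpha) * s
        smoothed.append(s)
    return smoothed


if __name__ == "__main__":
    # A sudden drop to 0 (e.g. a fogged lens) is damped rather than followed.
    print([round(v, 2) for v in adaptive_ewma([10, 11, 12, 0, 13, 14])])
```

Since every update is a convex combination of the previous estimate and the new count, the smoothed values always stay within the range of the raw counts; the trade-off is that a genuine rapid rise in counts is also followed more slowly.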

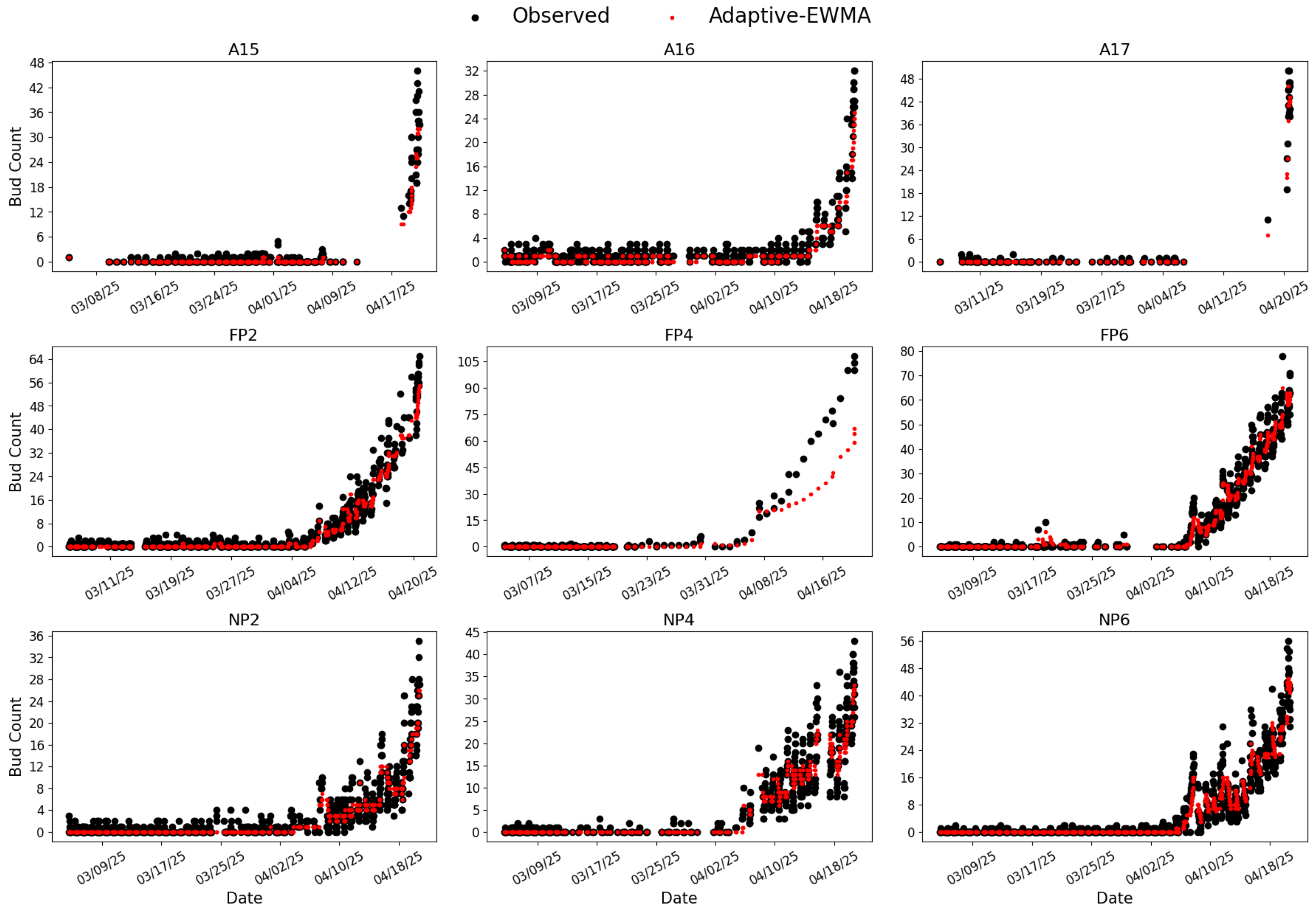

Figure 11.

Inference results from CC-trained Model 8 evaluated on the same vines and time period as those shown in Figure 10. Labeling and color conventions are identical.

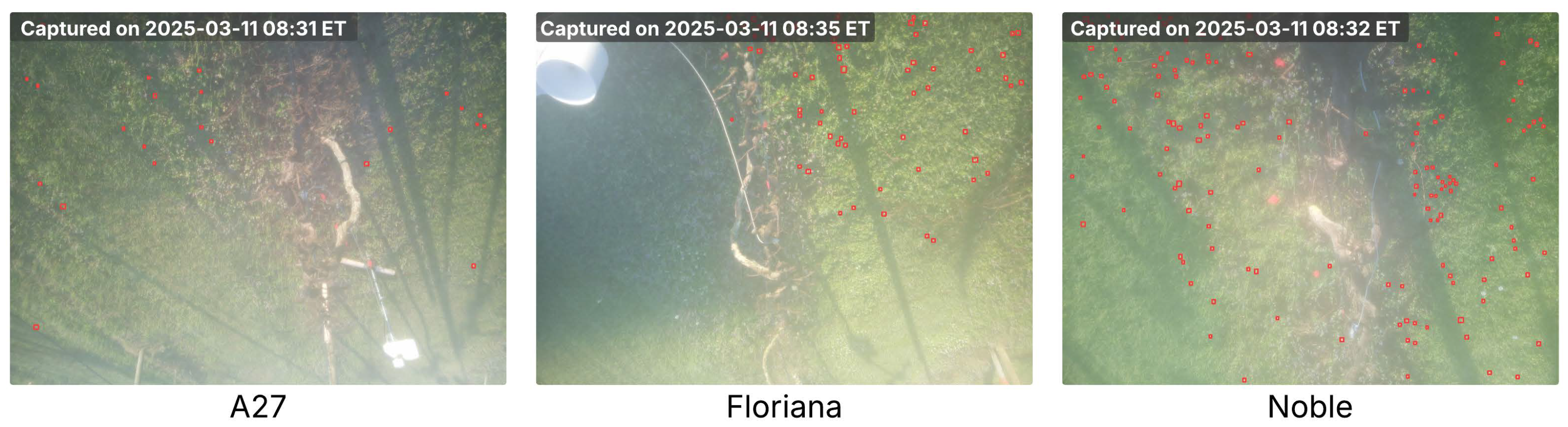

Figure 12.

Example images of three cultivars captured with a fogged lens and processed by RG-trained Model 7. Detected buds are highlighted with red bounding boxes. The capture time is shown in the top-left corner of each image.

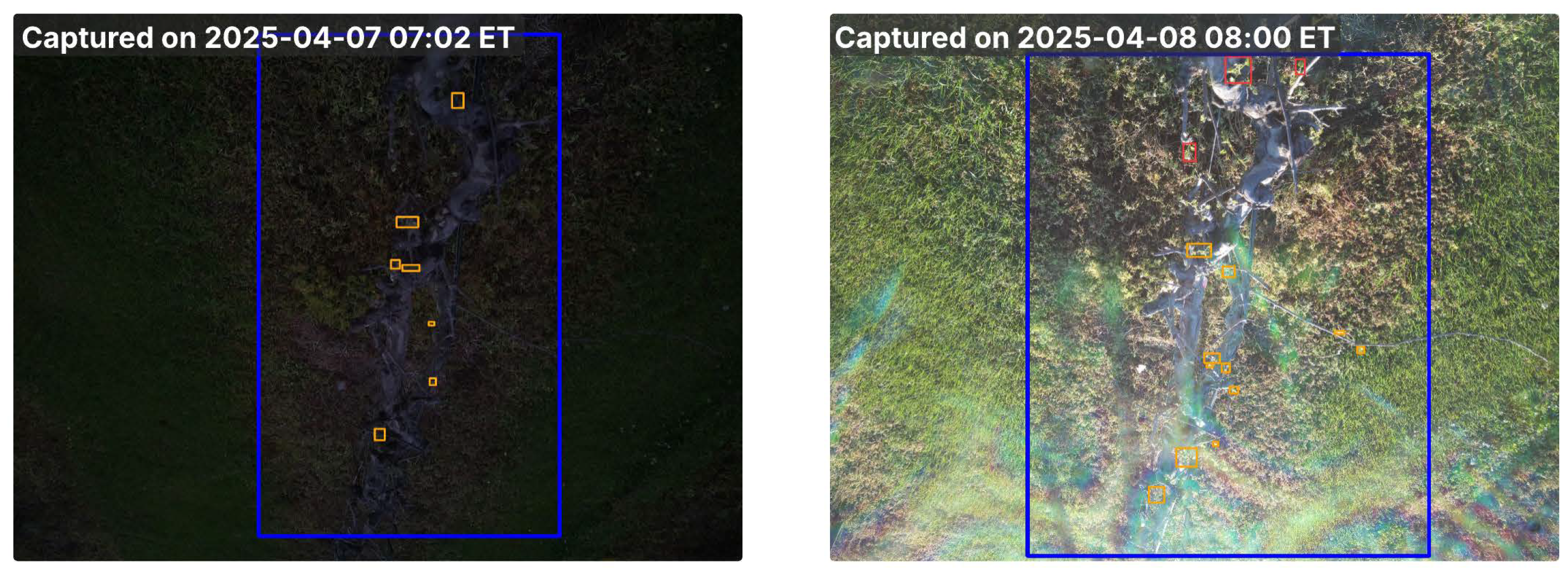

Figure 13.

Examples of images with under-detection by CC-trained Model 8. Detected buds are highlighted with red bounding boxes, missed buds with orange bounding boxes, and the cordon region identified by the cordon detector with a blue bounding box.

Table 1.

BudCAM node information including cultivar, harvest date, and bud break start and end dates.

| Vine ID | Cultivar | Harvest Date | Bud Break Start | Bud Break End |
|---|---|---|---|---|
| FP2 | Floriana | 15 August 2024 | 24 March 2024 | 15 April 2024 |
| FP4 | Floriana | 15 August 2024 | 25 March 2024 | 8 April 2024 |
| FP6 | Floriana | 15 August 2024 | 26 March 2024 | 9 April 2024 |
| NP2 | Noble | 15 August 2024 | 26 March 2024 | 9 April 2024 |
| NP4 | Noble | 15 August 2024 | 26 March 2024 | 13 April 2024 |
| NP6 | Noble | 15 August 2024 | 24 March 2024 | 11 April 2024 |
| A27P15 | A27 | 20 August 2024 | 2 April 2024 | 20 April 2024 |
| A27P16 | A27 | 20 August 2024 | 30 March 2024 | 15 April 2024 |
| A27P17 | A27 | 20 August 2024 | 1 April 2024 | 20 April 2024 |

Table 2.

Distribution of raw images per vine from three muscadine grape cultivars used for training, validation, and testing in model development. A total of 2656 high-resolution images were collected from nine vines and divided into 70% training, 15% validation, and 15% test subsets.

| Vine ID | Cultivar | Training | Validation | Test | Total |
|---|---|---|---|---|---|
| FP2 | Floriana | 168 | 52 | 43 | 263 |
| FP4 | Floriana | 133 | 24 | 30 | 187 |
| FP6 | Floriana | 233 | 51 | 47 | 331 |
| NP2 | Noble | 177 | 33 | 42 | 252 |
| NP4 | Noble | 299 | 80 | 65 | 444 |
| NP6 | Noble | 95 | 19 | 17 | 131 |
| A27P15 | A27 | 321 | 61 | 74 | 456 |
| A27P16 | A27 | 91 | 9 | 10 | 110 |
| A27P17 | A27 | 343 | 69 | 70 | 482 |
| – | Floriana total | 534 | 127 | 120 | 781 |
| – | Noble total | 571 | 132 | 124 | 827 |
| – | A27 total | 755 | 139 | 154 | 1048 |
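The per-vine counts can be cross-checked against the cultivar totals and the stated 70/15/15 split with a few lines of Python. The numbers below are copied from Table 2; the helper names are illustrative. The split holds for the dataset as a whole, while individual vines deviate from it (FP2, for example, has 168 of 263 images, about 64%, in training).

```python
# (cultivar, training, validation, test) image counts per vine, copied from Table 2.
SPLITS = {
    "FP2": ("Floriana", 168, 52, 43),
    "FP4": ("Floriana", 133, 24, 30),
    "FP6": ("Floriana", 233, 51, 47),
    "NP2": ("Noble", 177, 33, 42),
    "NP4": ("Noble", 299, 80, 65),
    "NP6": ("Noble", 95, 19, 17),
    "A27P15": ("A27", 321, 61, 74),
    "A27P16": ("A27", 91, 9, 10),
    "A27P17": ("A27", 343, 69, 70),
}


def cultivar_totals(splits):
    """Sum per-vine counts into [training, validation, test] per cultivar."""
    totals = {}
    for cultivar, *counts in splits.values():
        acc = totals.setdefault(cultivar, [0, 0, 0])
        for k, n in enumerate(counts):
            acc[k] += n
    return totals


def split_fractions(splits):
    """Return the total image count and the overall training/validation/test fractions."""
    tr = sum(v[1] for v in splits.values())
    va = sum(v[2] for v in splits.values())
    te = sum(v[3] for v in splits.values())
    n = tr + va + te
    return n, tr / n, va / n, te / n


if __name__ == "__main__":
    print(cultivar_totals(SPLITS))
    n, ftr, fva, fte = split_fractions(SPLITS)
    print(f"{n} images: {ftr:.1%} / {fva:.1%} / {fte:.1%}")
```

Running it prints `2656 images: 70.0% / 15.0% / 15.0%`, matching the caption.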

Table 3.

Summary of the Raw Grid (RG) and Cordon-Cropped (CC) datasets, including processing strategies, pipeline usage, and the number of samples in each subset after filtering. Both datasets were derived from 2656 high-resolution images collected from nine muscadine vines across three cultivars. The RG dataset was generated by tiling each raw image into a 6 × 4 grid (24 tiles per image), while the CC dataset was generated by cropping the detected cordon region and tiling it into 640 × 640-pixel patches for the two-stage pipeline.

| Dataset | Processing Strategy | Pipeline Usage | Training | Validation | Test | Total |
|---|---|---|---|---|---|---|
| RG | Raw image tiled into 6 × 4 grid (776 × 864 px per tile) | Single-stage detector | 15,872 | 3387 | 3308 | 22,567 |
| CC | Cordon cropped and tiled into 640 × 640 px patches | Two-stage pipeline | 19,666 | 4147 | 4119 | 27,956 |
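For the CC dataset and the second stage of the two-stage pipeline, the cordon box has to be cut into fixed-size patches. The sketch below assumes the cordon detector returns one `[x1, y1, x2, y2]` box in full-image pixels and that edge patches are zero-padded to 640 × 640. The padding rule and the name `cordon_patches` are assumptions; resizing or shifting the last patch would be equally plausible. Each patch keeps its full-image origin so that its detections can be shifted back before merging.

```python
import numpy as np


def cordon_patches(image, cordon_box, patch=640):
    """Crop the cordon box from an (H, W, C) image and cut it into patch x patch tiles.

    Returns a list of ((x0, y0), tile) where (x0, y0) is the tile's top-left
    corner in full-image coordinates. Edge tiles are zero-padded (an assumption).
    """
    h_img, w_img = image.shape[:2]
    x1, y1, x2, y2 = (int(round(v)) for v in cordon_box)
    # Clip the detected box to the image bounds.
    x1, y1 = max(0, x1), max(0, y1)
    x2, y2 = min(w_img, x2), min(h_img, y2)
    crop = image[y1:y2, x1:x2]
    patches = []
    for top in range(0, crop.shape[0], patch):
        for left in range(0, crop.shape[1], patch):
            tile = crop[top:top + patch, left:left + patch]
            padded = np.zeros((patch, patch) + crop.shape[2:], dtype=image.dtype)
            padded[: tile.shape[0], : tile.shape[1]] = tile
            patches.append(((x1 + left, y1 + top), padded))
    return patches


if __name__ == "__main__":
    frame = np.zeros((3456, 4656, 3), dtype=np.uint8)  # hypothetical frame size
    out = cordon_patches(frame, (100, 1500, 4500, 1900))  # hypothetical cordon box
    print(len(out), out[0][0], out[-1][0])
```

For a cordon box of 4400 × 400 pixels this yields seven patches along the cordon, which is why the CC dataset can contain more samples than the RG dataset even though each sample covers a narrower region.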

Table 4.

Parameter settings for the two data augmentation configurations.

| Configuration | Rotation | Translate | Scale | Shear | Perspective | Flip |
|---|---|---|---|---|---|---|
| Config. 1 | 45° | 40% | 30% | 10% | 0 | Yes |
| Config. 2 | 10° | 10% | 0 | 5% | 0.001 | Yes |
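Table 4 maps naturally onto the geometric augmentation arguments of the Ultralytics YOLO trainer (`degrees`, `translate`, `scale`, `shear`, `perspective`, `fliplr`). The dictionary below is a hypothetical transcription under stated assumptions: percentages become fractions, "Flip: Yes" is read as horizontal flipping with probability 0.5 (the Ultralytics default), and because Ultralytics expresses `shear` in degrees, the table's 10% and 5% are read here as 10 and 5 degrees. The check function is illustrative only.

```python
# Hypothetical transcription of Table 4 into Ultralytics-style training arguments.
# Assumptions: percentages -> fractions; "Flip: Yes" -> fliplr=0.5;
# shear "10%" / "5%" read as 10 / 5 degrees (Ultralytics' shear unit is degrees).
AUG_CONFIGS = {
    "Config. 1": {"degrees": 45.0, "translate": 0.40, "scale": 0.30,
                  "shear": 10.0, "perspective": 0.0, "fliplr": 0.5},
    "Config. 2": {"degrees": 10.0, "translate": 0.10, "scale": 0.0,
                  "shear": 5.0, "perspective": 0.001, "fliplr": 0.5},
}


def check_aug(cfg):
    """Basic range checks on the fractional arguments; raises ValueError on failure."""
    for key in ("translate", "scale", "fliplr"):
        if not 0.0 <= cfg[key] <= 1.0:
            raise ValueError(f"{key}={cfg[key]} outside [0, 1]")
    if not 0.0 <= cfg["perspective"] <= 0.001:
        raise ValueError("perspective outside [0, 0.001]")
    return True


# Possible usage (requires the `ultralytics` package; the dataset YAML name is hypothetical):
#   from ultralytics import YOLO
#   YOLO("yolo11m.pt").train(data="buds.yaml", **AUG_CONFIGS["Config. 2"])
```

Config. 2 is the milder of the two settings; keeping the geometric distortion small is one plausible design choice for small objects such as buds, whose appearance changes little between frames of a fixed camera.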

Table 5.

Overview of the eight training configurations with variations in model size, fine-tuning, and data augmentation strategies.

| Model ID | Model Size | Fine-Tuned | Data Augmentation |
|---|---|---|---|
| Model 1 | Medium | No | None |
| Model 2 | Medium | Yes | None |
| Model 3 | Large | No | None |
| Model 4 | Large | Yes | None |
| Model 5 | Medium | No | Config. 1 |
| Model 6 | Medium | Yes | Config. 1 |
| Model 7 | Medium | No | Config. 2 |
| Model 8 | Medium | Yes | Config. 2 |
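The eight runs form two small factorial designs: model size × fine-tuning without augmentation (Models 1 to 4), and augmentation configuration × fine-tuning for the medium model (Models 5 to 8). The sketch below regenerates Table 5 in that order. Reading "Fine-Tuned: Yes" as starting from pretrained Ultralytics weights (`yolo11m.pt`, `yolo11l.pt`) and "No" as building the network from its YAML definition (random initialization) is an assumption, as are the helper names.

```python
from itertools import product

SIZE_LETTER = {"medium": "m", "large": "l"}


def build_runs():
    """Regenerate Table 5 as {model_id: (size, fine_tuned, augmentation)}.

    Models 1-4: size x fine-tuning, no augmentation.
    Models 5-8: augmentation config x fine-tuning, medium model only.
    """
    runs = [(size, ft, None) for size, ft in product(("medium", "large"), (False, True))]
    runs += [("medium", ft, aug) for aug, ft in product(("Config. 1", "Config. 2"), (False, True))]
    return {f"Model {i}": run for i, run in enumerate(runs, start=1)}


def starting_weights(size, fine_tuned):
    """Hypothetical mapping: pretrained checkpoint if fine-tuned, else YAML (random init)."""
    stem = f"yolo11{SIZE_LETTER[size]}"
    return f"{stem}.pt" if fine_tuned else f"{stem}.yaml"


if __name__ == "__main__":
    for model_id, (size, ft, aug) in build_runs().items():
        print(model_id, size, ft, aug or "None", starting_weights(size, ft))
```

Laying the runs out this way makes it explicit that model size is only varied without augmentation, so the effect of size under augmentation is not covered by these eight configurations.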

Table 6.

Performance and final training epochs for all model configurations trained on the Raw Grid (RG) and Cordon-Cropped (CC) datasets. Results were evaluated on test subsets from 2656 images collected from nine muscadine grapevines representing three cultivars with three replicates each.

| Model ID | Final Training Epoch | Test mAP@0.5 (%) |
|---|---|---|
| Trained on Raw Grid (RG) Dataset | | |
| Model 1 | 70 ± 25 | 75.9 ± 2.4 |
| Model 2 | 79 ± 34 | 80.3 ± 1.1 |
| Model 3 | 77 ± 25 | 76.0 ± 1.5 |
| Model 4 | 69 ± 24 | 78.5 ± 1.6 |
| Model 5 | 315 ± 69 | 85.1 ± 0.4 |
| Model 6 | 229 ± 30 | 85.3 ± 0.2 |
| Model 7 | 441 ± 70 | 86.0 ± 0.1 |
| Model 8 | 238 ± 43 | 85.7 ± 0.1 |
| Trained on Cordon-Cropped (CC) Dataset | | |
| Model 1 | 77 ± 4 | 79.1 ± 0.3 |
| Model 2 | 72 ± 10 | 78.3 ± 0.3 |
| Model 3 | 76 ± 4 | 79.2 ± 0.1 |
| Model 4 | 71 ± 9 | 78.7 ± 0.2 |
| Model 5 | 512 ± 60 | 84.4 ± 0.1 |
| Model 6 | 297 ± 103 | 84.4 ± 0.4 |
| Model 7 | 435 ± 27 | 84.7 ± 0.1 |
| Model 8 | 278 ± 45 | 85.0 ± 0.1 |

Table 7.

Performance comparison (mAP@0.5, %, mean ± SD) of the top models under different inference strategies.

| Model | Baseline ᵃ | Resized-CC | Sliced-CC ᵇ |
|---|---|---|---|
| Model 7 (RG) | 86.0 ± 0.1 | 58.2 ± 1.3 | 74.8 ± 0.7 |
| Model 8 (CC) | 85.0 ± 0.1 | 52.6 ± 1.0 | 80.0 ± 0.2 |

Table 8.

Comparison of mAP@0.5 scores (%, mean ± SD) under three slice-overlap ratios for the top-performing models.

| Model | Overlap 0.2 | Overlap 0.5 | Overlap 0.75 |
|---|---|---|---|
| Model 7 (RG) | 74.8 ± 0.7 | 72.0 ± 0.7 | 66.3 ± 0.6 |
| Model 8 (CC) | 80.0 ± 0.2 | 76.4 ± 0.4 | 69.2 ± 0.5 |
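Tables 7 and 8 vary how the image is sliced at inference time. The sketch below shows how a slice-overlap ratio translates into slice positions along one axis, assuming square 640-pixel slices, a stride of `slice_size × (1 − overlap)`, and a final slice aligned to the far edge. This follows the usual slicing-aided inference convention and is not necessarily the authors' exact rule; the function name and the 4400-pixel cordon length in the example are hypothetical.

```python
def slice_starts(length, slice_size, overlap):
    """Start offsets along one axis for slices of `slice_size` pixels.

    Consecutive slices overlap by `overlap` (a fraction of slice_size), and the
    last slice is shifted so that it ends exactly at `length`.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    if length <= slice_size:
        return [0]
    stride = max(1, round(slice_size * (1.0 - overlap)))
    starts = list(range(0, length - slice_size, stride))
    starts.append(length - slice_size)  # align the final slice with the far edge
    return starts


if __name__ == "__main__":
    for ratio in (0.2, 0.5, 0.75):
        print(ratio, len(slice_starts(4400, 640, ratio)))
```

For a 4400-pixel-long crop, the number of slices along its length rises from 9 at an overlap of 0.2 to 13 at 0.5 and 25 at 0.75, so a higher overlap also multiplies the inference cost and the number of duplicate detections that must be merged.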

Table 9.

Comparison of methods related to bud/seedling detection or segmentation.

| Method | Model | Dataset | View/Camera Angle | Platform | Performance |
|---|---|---|---|---|---|
| BudCAM (this work) | YOLOv11-m (RG, M7) ᵃ | 2656 images; px | Nadir, 1–3 m above vines | Raspberry Pi 5 | 86.0 (mAP@0.5) |
| BudCAM (this work) | YOLOv11-m (CC, M8) ᵇ | 2656 images; px | Nadir, 1–3 m above vines | Raspberry Pi 5 | 85.0 (mAP@0.5) |
| Grimm et al. [7] | UNet-style (VGG16 encoder) | 542 images; up to px | Side view, within ∼2 m | – | 79.7 (F1) |
| Rudolph et al. [8] | FCN | 108 images; px | Side view, within ∼1 m | – | 75.2 (F1) |
| Marset et al. [9] | FCN–MobileNet | 698 images; px | – | – | 88.6 (F1) |
| Xia et al. [6] | ResNeSt50 | 31,158 images; up to px | 0.4–0.8 m | – | 92.4 (F1) |
| Li et al. [4] | YOLOv4 | 7723 images; px | – | – | 85.2 (mAP@0.5) |
| Gui et al. [5] | YOLOv5-l | 1000 images; px | – | – | 92.7 (mAP@0.5) |
| Chen et al. [17] | YOLOv8-n | 4058 images; px | UAV top view, ∼3 m AGL | – | 99.4 (mAP@0.5) |
| Pawikhum et al. [20] | YOLOv8-n | 1500 images | Multiple angles, ∼0.5 m | Edge (embedded) | 59.0 (mAP@0.5) |
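Most rows in Table 9 report mAP@0.5; for a single class (buds) this equals the average precision (AP) at an intersection-over-union (IoU) threshold of 0.5, whereas F1 summarizes a single confidence threshold, so the two columns are not directly comparable. The sketch below computes AP@0.5 with greedy, score-ordered matching and all-point interpolation (the PASCAL VOC 2010+ convention). Libraries such as Ultralytics use a different interpolation scheme, so this will not reproduce their numbers exactly; the input format and function names are assumptions.

```python
import numpy as np


def box_iou(box, boxes):
    """IoU between one [x1, y1, x2, y2] box and an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def ap_at_50(preds, gts, iou_thr=0.5):
    """Single-class average precision at IoU >= iou_thr.

    preds: list of (image_id, score, [x1, y1, x2, y2]) over all test images.
    gts:   dict image_id -> list of ground-truth [x1, y1, x2, y2] boxes.
    """
    n_gt = sum(len(v) for v in gts.values())
    gt = {k: np.asarray(v, dtype=float).reshape(-1, 4) for k, v in gts.items()}
    used = {k: np.zeros(len(v), dtype=bool) for k, v in gt.items()}
    preds = sorted(preds, key=lambda p: p[1], reverse=True)
    tp = np.zeros(len(preds))
    for i, (img, _, box) in enumerate(preds):
        g = gt.get(img)
        if g is None or len(g) == 0:
            continue  # no ground truth in this image: counted as a false positive
        ious = box_iou(np.asarray(box, dtype=float), g)
        j = int(np.argmax(ious))
        if ious[j] >= iou_thr and not used[img][j]:
            used[img][j] = True  # each ground-truth box can be matched only once
            tp[i] = 1.0
    ctp, cfp = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = ctp / max(n_gt, 1)
    precision = ctp / np.maximum(ctp + cfp, 1e-12)
    # All-point interpolation: make precision non-increasing in recall, then integrate.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
```

For example, with two ground-truth buds and three predictions ranked true positive, false positive, true positive, the precision envelope is 1.0 up to recall 0.5 and 2/3 up to recall 1.0, giving AP = 0.5 × 1.0 + 0.5 × 2/3 = 5/6.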