1. Introduction

Winter jujubes are popular among consumers for their high nutritional value; they are rich in vitamin C and contain various essential minerals [1]. Yet labor costs and the declining rural workforce make traditional manual harvesting costly, unsustainable, and increasingly inadequate for the industry’s needs [2]. In addition, winter jujubes possess distinct growth traits, such as small fruit dimensions and elevated growing positions, that hinder accurate harvesting by traditional approaches, often resulting in substantial losses in yield and revenue [3]. Thus, improving the precision and efficiency of winter jujube harvesting has become an urgent technical issue.

Notably, learning-based object detection algorithms, such as the YOLO series, have demonstrated strong potential in agricultural fruit tree recognition and localization [4,5]. Combining orchard vision systems and sensors with machine learning models allows for real-time detection and positioning of winter jujubes, providing key technical support for automated harvesting [6].

Specifically, object detection methods that precisely determine both the position and maturity of winter jujubes can markedly lower rates of incorrect or missed picking. Furthermore, incorporating robotic arms and mobile platforms into harvesting systems speeds up picking [7]. Although agricultural object recognition has advanced, detecting winter jujubes is still challenged by occlusion, complex backgrounds, and variable lighting [8]. Thus, practical production demands further refinement of efficient and precise object recognition methods for winter jujubes.

With the continuous optimization of YOLO models, both the detection accuracy and robustness of these models have been significantly improved. For example, Yu et al. proposed a novel YOLO model, MLG-YOLO [4], while Li et al. and Han et al. enhanced the YOLO model’s adaptability in complex environments by incorporating the CBAM attention mechanism and the lightweight ShuffleNetV2 network [9,10]. Ma et al. introduced the STRAW-YOLO model [11], specifically designed for detecting strawberry fruits and their key points in agricultural environments. Li et al. proposed a lightweight target detection method based on an improved YOLOv5 model for detecting flat winter jujubes in the field [12]. Wu et al. developed the DNE-YOLO model to address the low detection accuracy of apple fruits in complex natural environments (such as varying lighting, occlusion, and cluttered backgrounds) [12,13]. In recent years, to meet the real-time detection requirements of agricultural production with limited computational resources, Han et al. and Lyu et al. proposed integrating YOLOv4 and YOLOv5 with edge computing devices [14,15], significantly enhancing real-time performance and computational efficiency. Specifically, the lightweight YOLOv5 model maintains high detection accuracy while enabling efficient computation and inference on mobile devices and in low-resource environments. Liu et al. improved the detection efficiency of YOLOv8 by utilizing PDWConv [16]. Wang et al. further improved YOLOv8n for high-precision real-time detection of apples under complex natural lighting conditions [17]. Peng et al. applied an improved YOLOv5 model and UAV technology for lychee image detection in orchards [18], while Sorbelli et al. used UAV-RGB imagery for real-time monitoring of the brown marmorated stink bug in orchards [19], providing new insights into precision orchard management. Additionally, Li et al. introduced the C2f-WTConv (C2f-Wide Transformer Convolution) module [20], enabling the model to effectively integrate multi-level feature information when handling targets of varying scales and complex backgrounds, thereby enhancing its detection capabilities for large objects, small objects, and occluded targets.

The current mainstream fruit localization methods primarily include laser scanning technology, stereo vision systems, and methods based on RGB-D cameras. Yu et al. deployed SOD-YOLOv5n on Android phones to count winter jujubes in real time [21], further demonstrating the feasibility of mobile applications. Hou et al. proposed a citrus fruit detection and localization method based on an improved YOLOv5s and binocular vision technology [22], which accurately detects and localizes mature citrus fruits in orchards. Fu et al. introduced an RGB-D camera that combines color images and depth information [23], enabling accurate fruit detection and localization under varying lighting conditions and complex backgrounds, while also offering significant advantages in terms of cost-effectiveness, flexibility, and adaptability. Arad et al. developed a sweet pepper harvesting robot system [24], using a combination of RGB-D cameras and LED lights at the robotic arm’s end for the three-dimensional localization of sweet peppers. Shamshiri et al. proposed a method combining depth cameras and RGB-D technology [25], which provides more precise three-dimensional coordinates by acquiring depth information from the target, supporting automated fruit harvesting. Divyanth et al. presented a deep-learning-based depth estimation method using RGB images [26], extracting depth information from RGB images and enabling precise three-dimensional localization of targets in apple orchards. Additionally, Hu et al. implemented apple target detection and three-dimensional localization based on an improved YOLOX and RGB-D images [27], also validating the value of depth information in improving localization accuracy.

To address key challenges in winter jujube harvesting, this study proposes an enhanced YOLOv11 model combined with RGB-D sensing for accurate 3D localization and cross-platform deployment performance evaluation. The main contributions are as follows:

(1) A lightweight detection model named SCC-YOLO was proposed, integrating ShuffleNetV2, CBAM, and a redesigned C2f_WTConv module to improve detection accuracy and efficiency under occlusion, complex backgrounds, and illumination variations.

(2) A compact network architecture was constructed using ShuffleNetV2 as the backbone for real-time deployment, while CBAM adaptively emphasized salient winter jujube features against occlusion and clutter, and C2f_WTConv introduced wavelet-based spatial–frequency modeling to enhance robustness under varying lighting.

(3) The DIoU loss was adopted to replace the original YOLOv11 localization loss, refining bounding-box regression and improving the detection of small and overlapping winter jujubes.

(4) A 3D localization method was developed by combining SCC-YOLO with RGB-D cameras and Eye-to-Hand calibration, and cross-platform deployment was validated on both desktop and mobile devices to ensure real-time capability in practical orchard scenarios.

2. Materials and Methods

2.1. Data Collection and Image Preprocessing

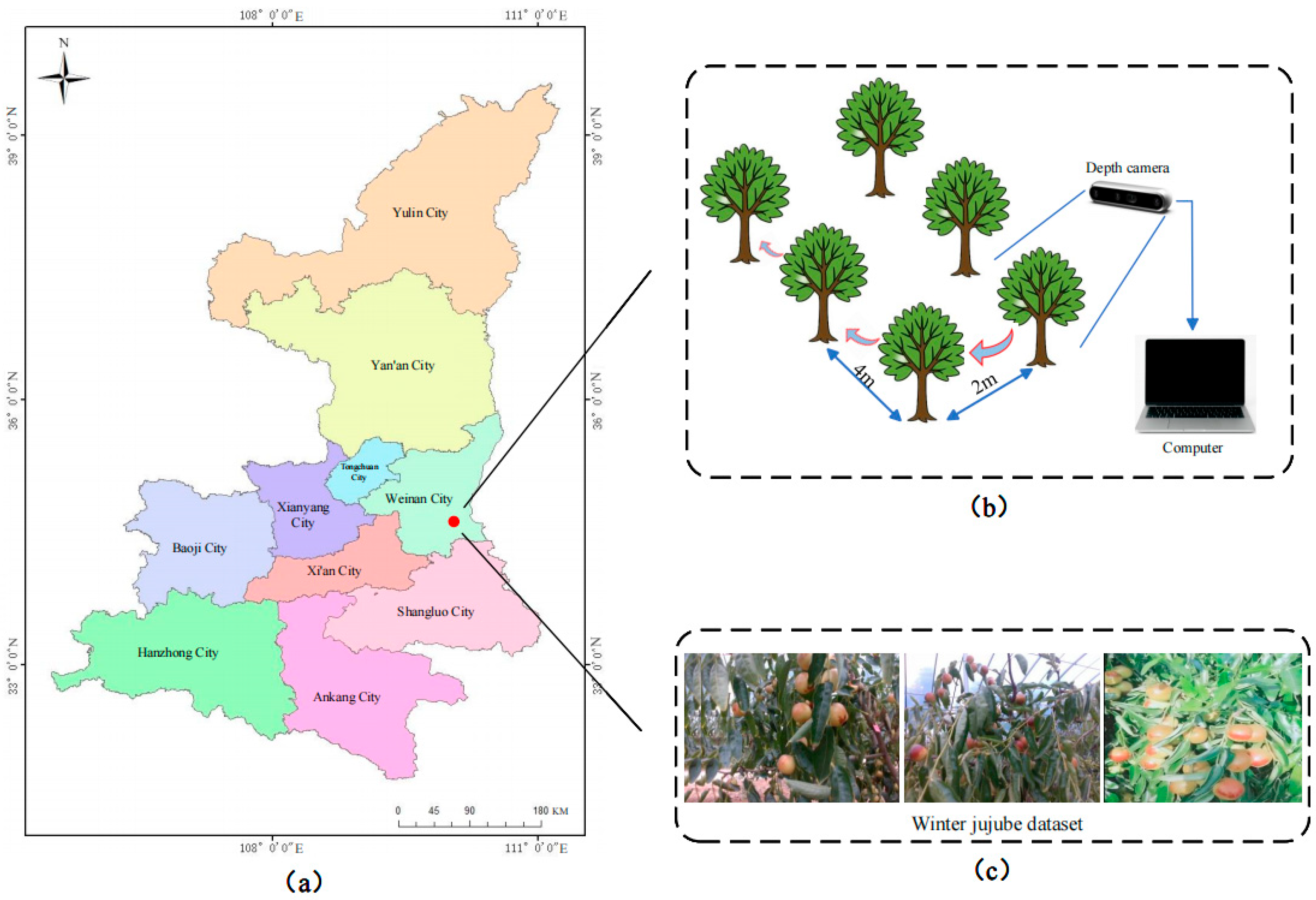

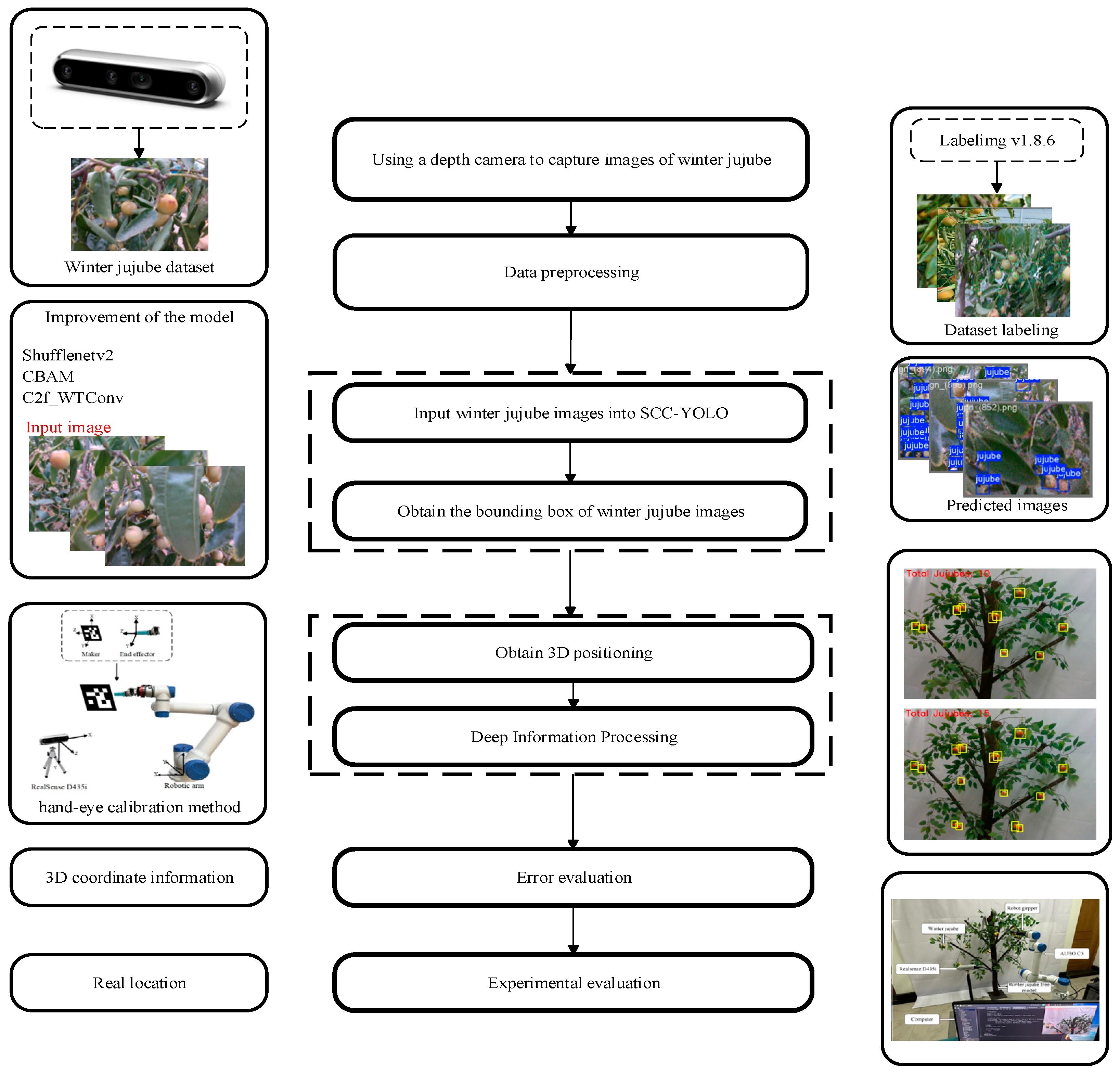

The dataset for this study was acquired at the winter jujube experimental base of Northwest A&F University, in Dali County, Weinan, Shaanxi. The winter jujube planting area at this site follows a planting pattern with a row spacing of 2 m and a column spacing of 4 m. Data acquisition employed an Intel RealSense D435i RGB-D camera (Intel Corporation, Santa Clara, CA, USA), with the resolution set to 640 × 480 pixels. In total, 900 images of winter jujubes were captured, encompassing variations in ripeness, illumination, time of shooting, and occlusion levels to guarantee dataset diversity and representativeness. An illustration of the image collection process is shown in Figure 1.

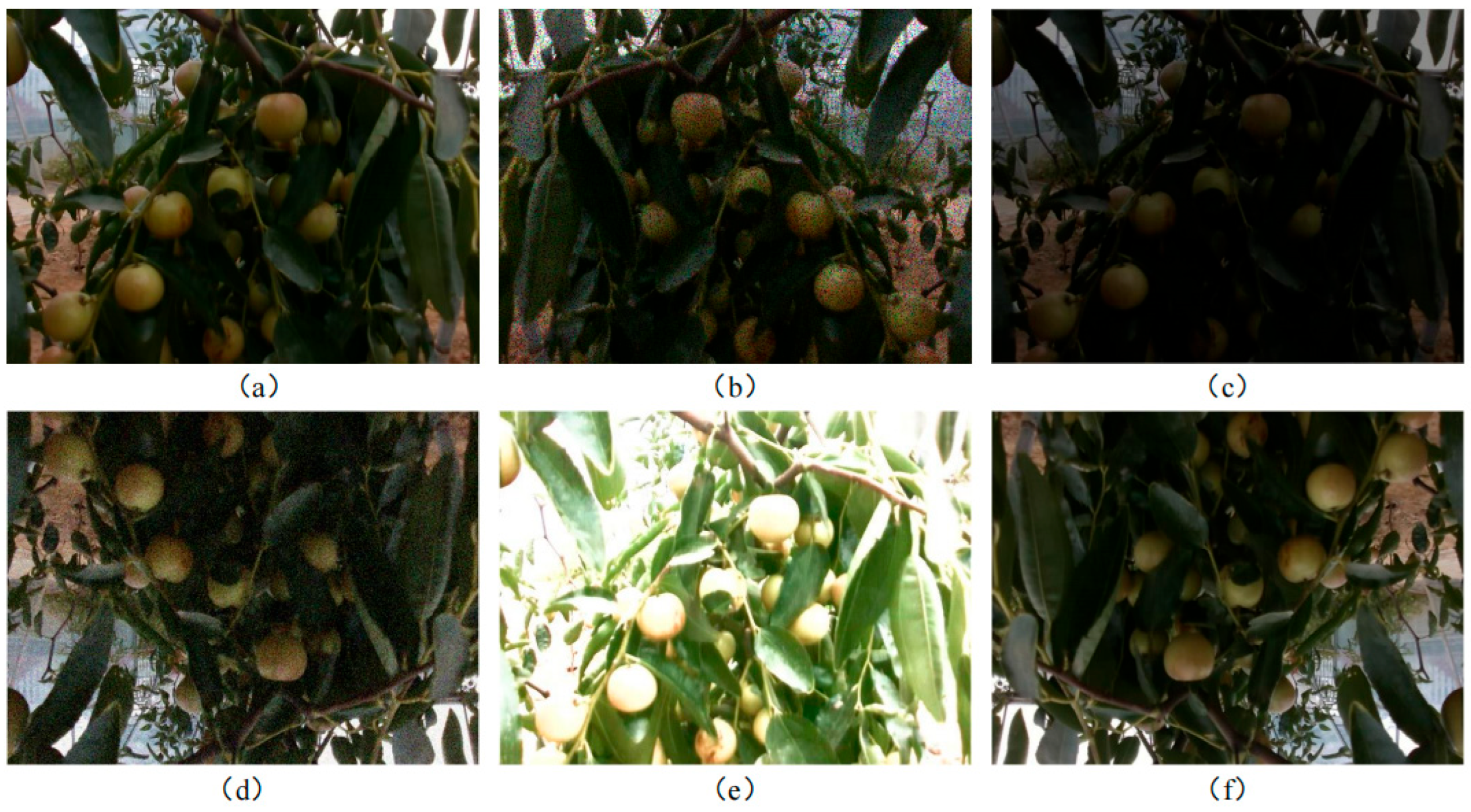

To improve robustness and generalization, we used a two-stage scheme: offline augmentation before training and online augmentation during training. Offline, data diversity was expanded via rotation, mirroring, cropping, and color/brightness/contrast adjustments to simulate varied scenes. Online, stochastic transforms were applied to curb overfitting (Figure 2). This pipeline produced 3680 usable images, substantially enlarging the training set.
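The offline stage can be illustrated with a minimal sketch. It is not the authors' pipeline: images are simplified to grayscale lists of pixel rows, the function names are hypothetical, and the brightness range of 0.7 to 1.3 is an assumed value chosen only for illustration.

```python
import random


def mirror(img):
    """Horizontal flip of an image stored as a list of pixel rows."""
    return [row[::-1] for row in img]


def rotate90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def adjust_brightness(img, factor):
    """Scale intensities by `factor` and clip to the 8-bit range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]


def offline_augment(img, rng):
    """Return the original image plus three augmented variants."""
    factor = rng.uniform(0.7, 1.3)  # assumed brightness range, not from the paper
    return [img, mirror(img), rotate90(img), adjust_brightness(img, factor)]


gray = [[10, 20, 30],
        [40, 50, 60]]
variants = offline_augment(gray, random.Random(0))
```

In a detection setting the bounding-box labels must be transformed together with the pixels (for example, a horizontal flip maps a box centre x to width minus x), which this sketch leaves out.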

In addition, each image was meticulously labeled in LabelImg, ensuring every winter jujube target was correctly boxed and tagged. The dataset was then randomly divided into training and test sets in an 8:2 ratio to ensure balanced distribution and independence, thus laying the foundation for subsequent model training and evaluation.
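A random 8:2 split of this kind can be sketched as follows. The helper name, the fixed seed, and the synthetic image identifiers are assumptions made for the example; the paper does not state how the split was implemented.

```python
import random


def split_dataset(items, train_ratio=0.8, seed=42):
    """Shuffle a copy of `items` and cut it into train and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_train = round(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical identifiers standing in for the 3680 augmented images.
image_ids = [f"img_{i:04d}" for i in range(3680)]
train_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(test_ids))
```

When offline-augmented copies of the same source photograph exist, one common precaution is to split by source image so that variants of one photograph do not end up on both sides of the split; whether that was done here is not stated in the text.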

2.2. SCC-YOLO for Winter Jujube Target Recognition

2.2.1. YOLOv11 Model

Developed by Ultralytics, YOLOv11 retains prior strengths yet advances detection accuracy, throughput, and adaptability. It introduces coordinated updates to architecture, training, and inference, marking a notable step beyond YOLOv8. Building on YOLOv8’s efficient convolutions and residual routes, YOLOv11 reinforces feature extraction for low-resolution and cluttered imagery, delivering more stable detections under challenging conditions.

Across the backbone–neck–head pipeline, YOLOv11 applies unified upgrades. The backbone replaces heavy stems with lightweight, high-throughput blocks and enhanced residual paths, improving gradient flow and preserving details under low resolution, illumination variation, and clutter. The neck performs self-attention-aided multi-scale fusion to enable cross-level context exchange, which retains small/distant instances and alleviates long-tail bias. The head adopts a decoupled, anchor-free design with Transformer-style attention, separating classification from localization and stabilizing performance for dense/overlapping targets and diverse object sizes.

2.2.2. SCC-YOLO Model

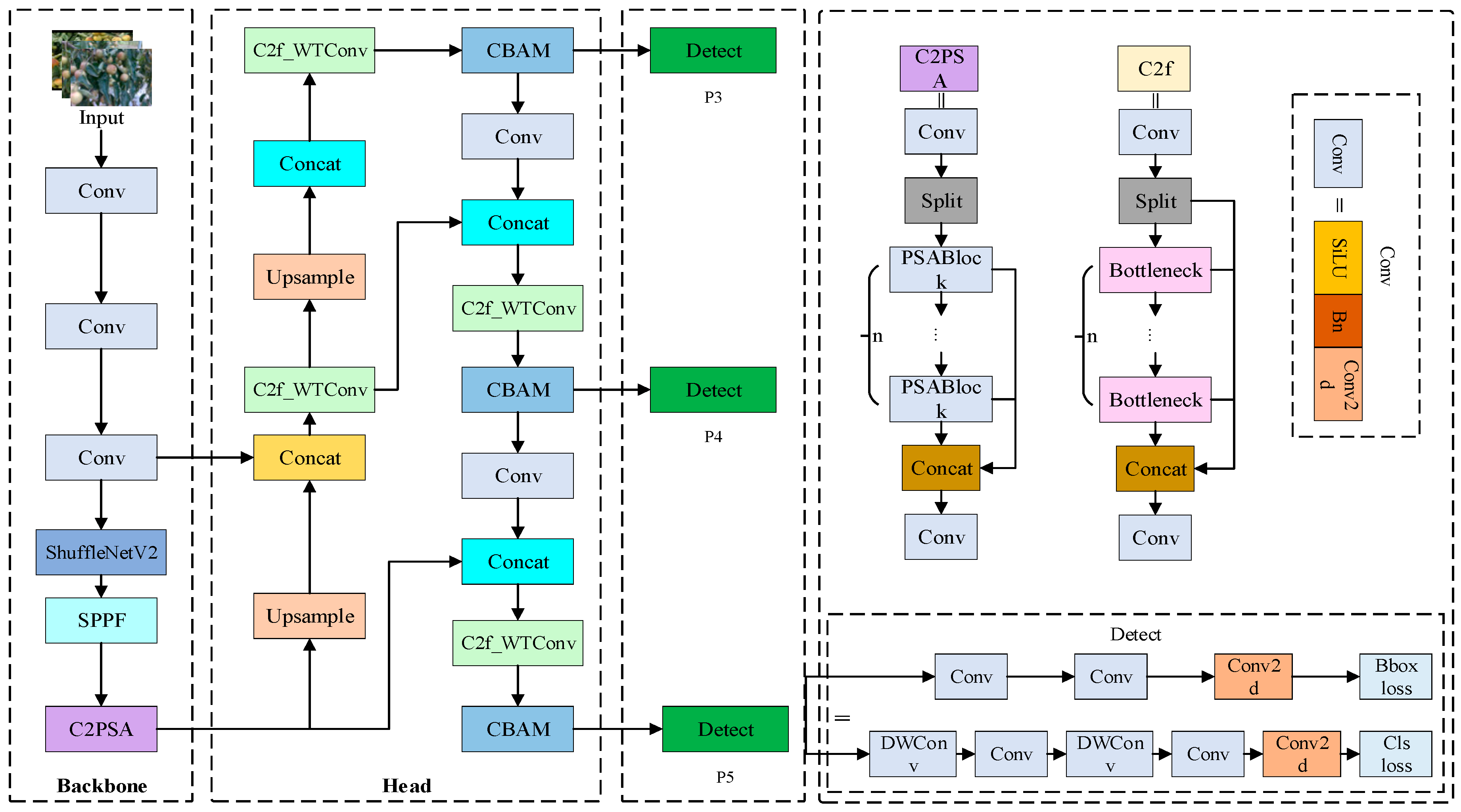

To overcome the low recognition accuracy of winter jujubes in orchard settings with the original YOLOv11 structure, this study introduces an enhanced YOLOv11-based detection model. First, the backbone employs ShuffleNetV2, an efficient and lightweight convolutional network. The use of channel shuffle and depthwise separable convolution in ShuffleNetV2 reduces computation load and speeds up inference. Next, the CBAM (Convolutional Block Attention Module) attention mechanism is introduced in the head section. By combining channel and spatial attention, the module guides the network toward critical feature areas, enhancing detection precision in challenging environments. Finally, C2f_WTConv (C2f-Wide Transformer Convolution) is introduced in the head section of YOLOv11. C2f_WTConv merges convolutional operations with Transformer elements, enabling improved fusion of multi-level features across varying target scales, thus enhancing detection capabilities for large objects, small objects, and targets in complex scenes. The network structure of SCC-YOLO is shown in Figure 3, and we elaborate on the specific improvements of the model in the following sections.

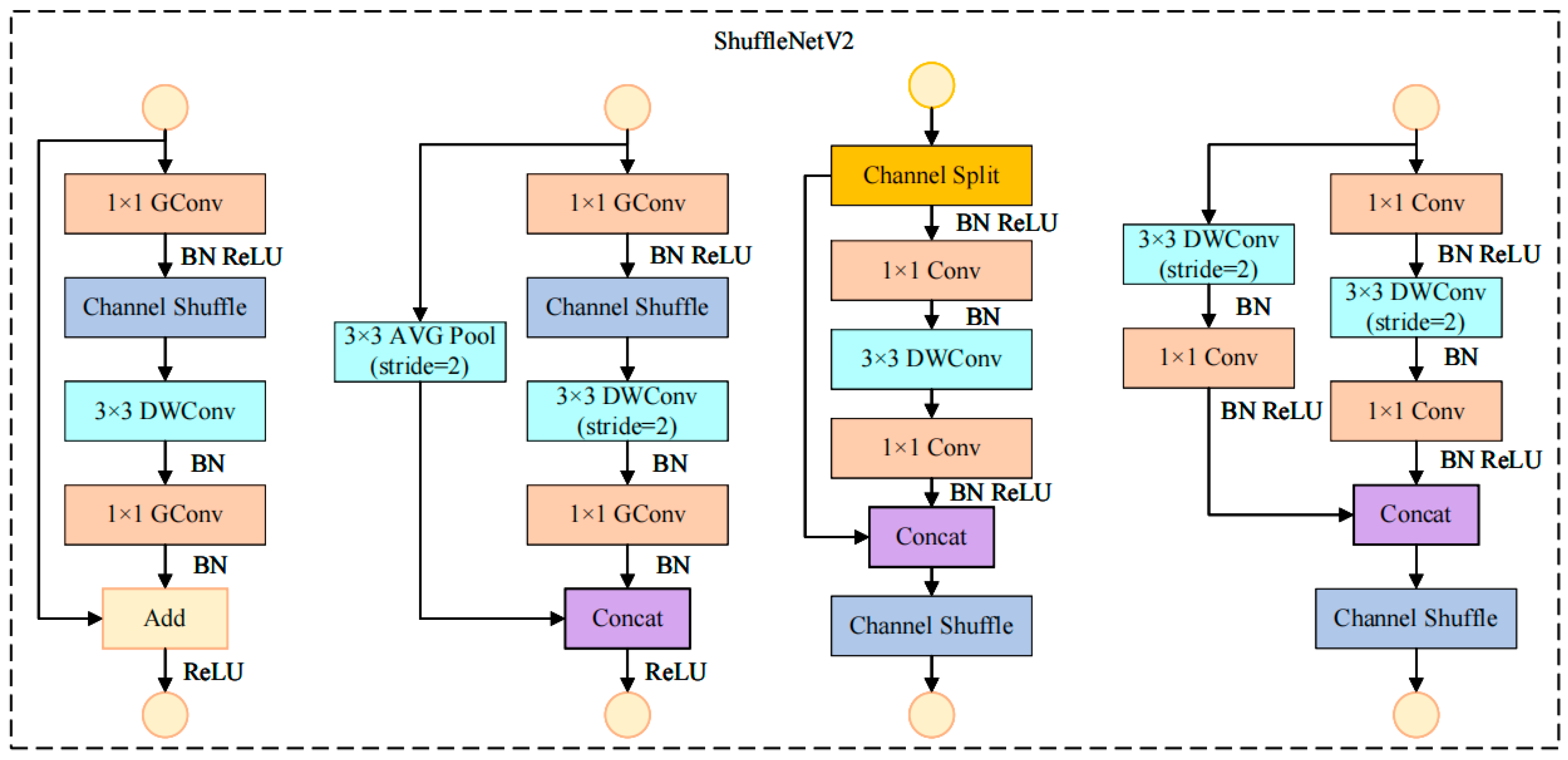

2.2.3. ShuffleNetV2-Based Backbone Redesign

ShuffleNetV2 is an efficient lightweight architecture designed specifically for platforms with limited computing resources, such as mobile and embedded systems. By employing channel shuffle and group convolution techniques, ShuffleNetV2 significantly reduces computational overhead while enhancing information exchange between feature maps. Such a design enables ShuffleNetV2 to maintain high accuracy while markedly reducing both computational demand and memory consumption. Unlike many lightweight networks, it converges quickly without heavy reliance on extensive data augmentation or complex regularization strategies.

Within the YOLOv11 backbone, ShuffleNetV2 serves as the primary network, replacing standard convolutional layers and the C3k2 module. Conventional convolutions require high computation and memory, and although the C3k2 module uses simple convolutions with non-linear activations, its efficiency remains limited, especially on devices with tight resources. The modified YOLOv11 therefore adopts ShuffleNetV2 to boost efficiency and speed up inference. The network structure of ShuffleNetV2 is shown in Figure 4.
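The channel shuffle operation itself is simple to state: the channels are viewed as a grid of groups, the grid is transposed, and the result is flattened again. The sketch below shows only this reordering on a list of channel labels; it is an illustration under that simplification, not the network code used in the study.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups (reshape -> transpose -> flatten)."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    # View the flat channel list as a (groups, per_group) grid ...
    grid = [channels[g * per_group:(g + 1) * per_group] for g in range(groups)]
    # ... then read it column by column, i.e. transpose and flatten.
    return [grid[g][i] for i in range(per_group) for g in range(groups)]


shuffled = channel_shuffle(["a0", "a1", "a2", "b0", "b1", "b2"], groups=2)
print(shuffled)  # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']
```

After the shuffle, each contiguous slice of channels contains members of every original group, so the next grouped convolution sees information from all groups.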

As shown in Figure 4, ShuffleNetV2 combines grouped convolutions with channel shuffle to increase computational efficiency while preserving strong representational capacity. Specifically, given an input tensor $X \in \mathbb{R}^{H \times W \times C}$, where H and W represent the height and width of the image, and C represents the number of input channels, a convolution operation is first applied. Typically, a convolution kernel of size $n \times n$ is used for the computation. The calculation Formula (1) is as follows:

$$X_1 = \mathrm{Conv}_{n \times n}(X) \tag{1}$$

where $X_1 \in \mathbb{R}^{H \times W \times C_{out}}$, $C_{out}$ is the number of output channels, and n is the size of the convolution kernel. Next, ShuffleNetV2 applies a pointwise convolution (1 × 1 convolution) to expand the feature channels to a higher dimension d, producing the output $X_L \in \mathbb{R}^{H \times W \times d}$, where $d > C$ is used to enhance the feature representation capability.

To process global information, $X_L$ is unfolded into multiple independent tiling blocks, each with a size of $P \times d$, where P is the size of the block. The total number of blocks, N, is determined by the following Formulas (2) and (3):

$$P = w \times h \tag{2}$$

$$N = \frac{H \times W}{P} \tag{3}$$

where w and h are the width and height of a block. Then, within each block of size $P \times d$, a channel shuffle operation is applied to rearrange the channels between the blocks. Each block optimizes the information flow through the channel shuffle operation while preserving spatial order. The feature map $X_S$, after the channel shuffle, is folded back to the original size $H \times W \times d$, and a pointwise convolution is applied to transform $X_S$ back to C channels. After concatenation with the input, a new feature map $X_C \in \mathbb{R}^{H \times W \times 2C}$ is obtained. Finally, the concatenated feature map undergoes further fusion through an $n \times n$ convolutional layer to obtain the final feature representation (4):

$$Y = \mathrm{Conv}_{n \times n}(X_C) \tag{4}$$

This series of operations enables ShuffleNetV2 to fuse both local and global information while significantly reducing computational overhead, ensuring that YOLOv11 maintains high detection accuracy and inference speed even in low computational resource environments.

2.2.4. CBAM-Based Channel–Spatial Recalibration

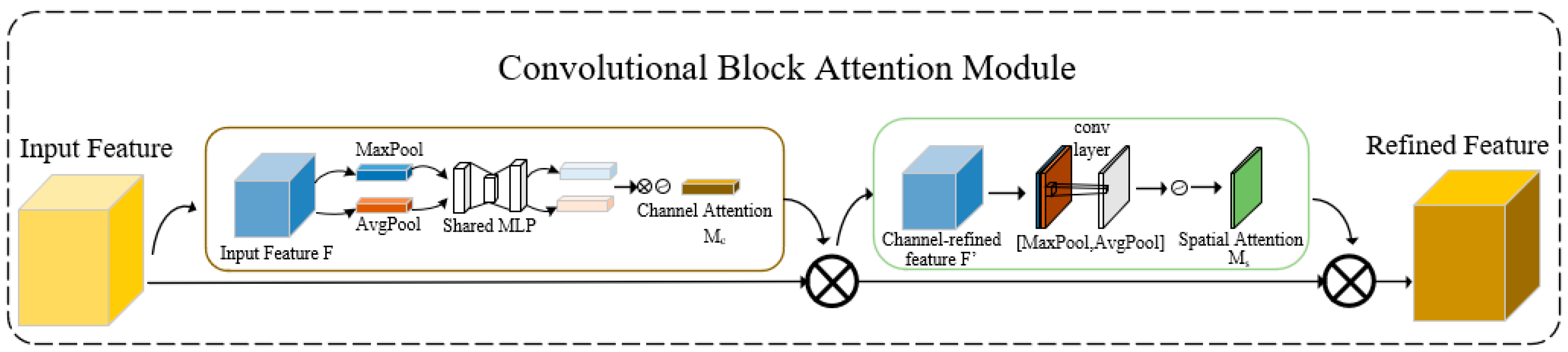

Winter jujube images exhibit substantial variation depending on shooting angles, illumination, and background conditions. Under such complex variations, conventional convolutional neural networks often find it difficult to capture essential features. In this study, the CBAM (Convolutional Block Attention Module) is introduced into the YOLOv11 model. CBAM is a compact attention module that strengthens a model’s capacity to focus on crucial features through the integration of channel and spatial attention. By weighting different image regions, the mechanism guides the network to adaptively attend to the most critical areas for winter jujube identification, thereby preventing the model from ignoring key target information.

The architecture of the CBAM module is shown in Figure 5; it consists of a channel attention module and a spatial attention module.

The CBAM module is applied to the P3, P4, and P5 layers to more accurately enhance the model’s perception of key regions of winter jujube targets at each scale. This significantly improves the detection of multi-scale targets in complex environments and supports both detection accuracy and robustness.

Channel attention works by evaluating the significance of each channel and dynamically adjusting its weight in the feature map. Specifically, the channel attention mechanism first generates two pooled feature maps, representing the global information of the image, by applying average pooling and max pooling to the input feature map Y. Then, these two pooled results are processed through a fully connected (FC) layer, and finally, a Sigmoid activation function is used to generate the channel attention map $M_c(Y)$, as shown in Formula (5):

$$M_c(Y) = \sigma\big(\mathrm{FC}(\mathrm{AvgPool}(Y)) + \mathrm{FC}(\mathrm{MaxPool}(Y))\big) \tag{5}$$

where $\sigma$ denotes the Sigmoid function. Through this mechanism, the network dynamically adjusts the channel weights based on the key features of the winter jujube, thereby enhancing its ability to perceive these features.

Spatial attention highlights the feature responses located at different spatial positions in an image. By evaluating the importance of each spatial location, it helps the network accurately recognize winter jujubes in complex backgrounds or under occlusion. The spatial attention mechanism extracts spatial information through average pooling and max pooling operations, concatenates the two pooled results, and then applies a convolution operation to generate the spatial attention map $M_s(Y)$, as shown in Formula (6):

$$M_s(Y) = \sigma\big(f^{7 \times 7}([\mathrm{AvgPool}(Y); \mathrm{MaxPool}(Y)])\big) \tag{6}$$

here, $[\mathrm{AvgPool}(Y); \mathrm{MaxPool}(Y)]$ represents the concatenation of the results from average pooling and max pooling, and $f^{7 \times 7}$ is a 7 × 7 convolution operation used to generate the spatial attention map. Through this mechanism, the network can focus more on the core areas of the winter jujube, particularly in cases of partial occlusion or complex backgrounds, effectively reducing the influence of background noise and improving detection accuracy. By combining these two attention mechanisms, CBAM effectively enhances YOLOv11’s ability to process winter jujube images, ensuring detection accuracy and reliability, especially under complex environmental conditions.
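The two attention branches can be made concrete with a small sketch. It assumes the standard CBAM formulation, in which both pooled channel descriptors pass through one shared two-layer bottleneck with ReLU; the weights `w1` and `w2` and the tiny feature map are hypothetical values, and the final spatial convolution and Sigmoid are omitted, so only the pooled maps that would be concatenated are computed.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(vec, w1, w2):
    """Two-layer bottleneck with ReLU, shared by both pooled descriptors."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(fmap, w1, w2):
    """fmap: C channels, each an H x W list. Returns C weights in (0, 1)."""
    flat = [[p for row in ch for p in row] for ch in fmap]
    avg = [sum(ch) / len(ch) for ch in flat]  # global average pooling
    mx = [max(ch) for ch in flat]             # global max pooling
    a = shared_mlp(avg, w1, w2)
    m = shared_mlp(mx, w1, w2)
    return [sigmoid(x + y) for x, y in zip(a, m)]


def spatial_descriptors(fmap):
    """Channel-wise mean and max maps, concatenated before the spatial conv."""
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    avg = [[sum(fmap[k][i][j] for k in range(c)) / c for j in range(w)]
           for i in range(h)]
    mx = [[max(fmap[k][i][j] for k in range(c)) for j in range(w)]
          for i in range(h)]
    return avg, mx


# Hypothetical 2-channel, 2 x 2 feature map and bottleneck weights.
fmap = [[[1.0, 3.0], [5.0, 7.0]],
        [[0.0, 0.0], [0.0, 2.0]]]
w1 = [[0.5, 0.5]]      # 2 channels -> 1 hidden unit
w2 = [[1.0], [-1.0]]   # 1 hidden unit -> 2 channels
weights = channel_attention(fmap, w1, w2)
avg_map, max_map = spatial_descriptors(fmap)
```

With these illustrative weights the first channel receives a weight close to 1 and the second a weight close to 0, which is the reweighting behaviour the channel branch is meant to provide.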

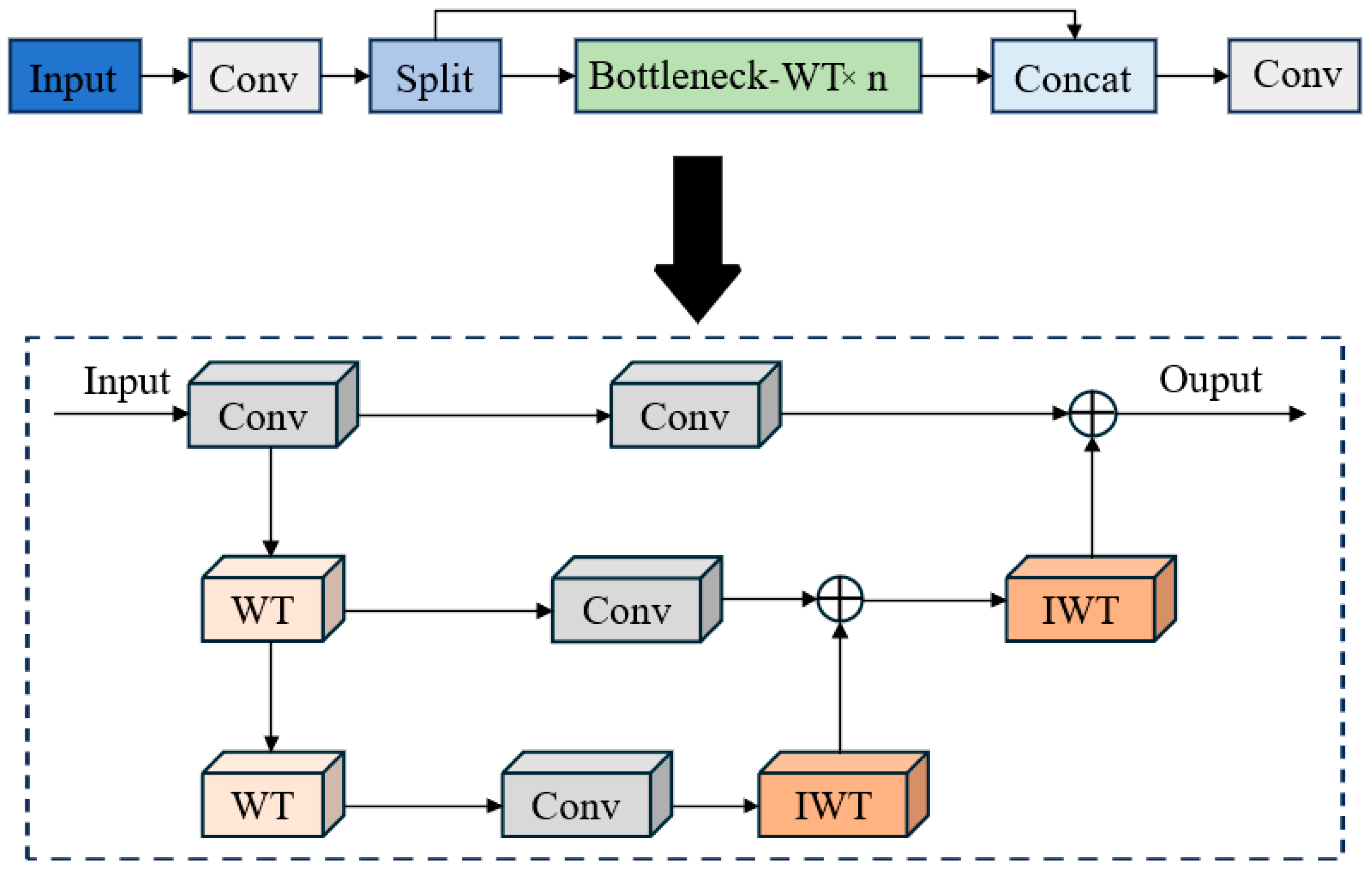

2.2.5. Spatial–Frequency Aware Head via C2f_WTConv

To strengthen YOLOv11’s representation ability and detection accuracy in complex scenes, we design an improved feature-extraction unit based on the C2f block, called C2f_WTConv (Channel-wise Wavelet Transformer Convolution); its structure is shown in Figure 6.

The C2f_WTConv module retains the original C2f block’s multi-branch and fusion design, but introduces essential upgrades to improve joint spatial–frequency representation. In particular, one auxiliary branch integrates the Discrete Wavelet Transform (DWT) and Inverse Wavelet Transform (IWT). We adopt the Haar wavelet as the basis and apply a single-level decomposition, producing four sub-bands (LL, LH, HL, HH). Each sub-band is processed by a 3 × 3 depthwise separable convolution (DSC) followed by batch normalization and SiLU activation. The transformed sub-bands are then restored to the spatial domain by IWT and concatenated with the main C2f branch along the channel dimension. This explicit spatial–frequency fusion allows the model to capture both fine texture details and global structure, thereby improving small-object detection, resilience to occlusion, and performance in cluttered orchard scenes.
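A single-level Haar decomposition and its inverse can be sketched in a few lines. The sketch assumes an orthonormal Haar filter and one common sub-band naming (LH as the top-minus-bottom detail, HL as the left-minus-right detail); naming conventions differ between libraries, and the per-sub-band convolutions of the module are not shown.

```python
def haar_dwt2(img):
    """Single-level 2D Haar transform of an even-sized image (list of rows)."""
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    ll, lh, hl, hh = ([[0.0] * (w // 2) for _ in range(h // 2)]
                      for _ in range(4))
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            r, s = i // 2, j // 2
            ll[r][s] = (a + b + c + d) / 2  # local average (coarse structure)
            lh[r][s] = (a + b - c - d) / 2  # top-minus-bottom detail
            hl[r][s] = (a - b + c - d) / 2  # left-minus-right detail
            hh[r][s] = (a - b - c + d) / 2  # diagonal detail
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: rebuild each 2 x 2 block from its coefficients."""
    h, w = len(ll) * 2, len(ll[0]) * 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h // 2):
        for s in range(w // 2):
            p, q, u, v = ll[r][s], lh[r][s], hl[r][s], hh[r][s]
            out[2 * r][2 * s] = (p + q + u + v) / 2
            out[2 * r][2 * s + 1] = (p + q - u - v) / 2
            out[2 * r + 1][2 * s] = (p - q + u - v) / 2
            out[2 * r + 1][2 * s + 1] = (p - q - u + v) / 2
    return out


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
LL, LH, HL, HH = haar_dwt2(image)
restored = haar_idwt2(LL, LH, HL, HH)
```

Each sub-band has half the spatial resolution of the input, so a 3 × 3 kernel applied to a sub-band covers a larger region of the original image than the same kernel applied in the spatial domain.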

For higher efficiency, the module adopts Depthwise Separable Convolutions (DSC) for all layers, substantially reducing parameters and computation versus standard convolutions. It also employs Channel Shuffle to promote cross-branch and cross-channel information flow, thereby enriching feature diversity and improving discrimination.

To improve localization, YOLOv11’s original CIoU loss was replaced with the DIoU loss, which jointly considers the overlap and the center-point distance between predicted and ground-truth boxes. This modification increases regression accuracy, especially for small or closely clustered targets.
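In its usual form the DIoU loss is $L_{DIoU} = 1 - IoU + \rho^2(b, b^{gt}) / c^2$, where $\rho$ is the distance between the two box centers and $c$ is the diagonal of the smallest box enclosing both. The sketch below evaluates this expression for a single pair of axis-aligned boxes; the corner-based box format is an assumption made for the example.

```python
def diou_loss(box_a, box_b):
    """DIoU loss for boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection over union.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union if union > 0 else 0.0

    # Squared distance between the two box centers.
    rho2 = (((ax1 + ax2) - (bx1 + bx2)) / 2) ** 2 \
         + (((ay1 + ay2) - (by1 + by2)) / 2) ** 2

    # Squared diagonal of the smallest enclosing box.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2

    return 1.0 - iou + (rho2 / c2 if c2 > 0 else 0.0)


same = diou_loss((0, 0, 2, 2), (0, 0, 2, 2))
apart = diou_loss((0, 0, 1, 1), (2, 0, 3, 1))
```

For two non-overlapping boxes the IoU term is zero, yet the distance term still varies with how far apart the centers are, so the loss keeps providing a useful signal where a pure IoU loss would be flat.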

In summary, the C2f_WTConv module is a structural innovation, not merely a combination of existing parts. Through DWT/IWT-based frequency modeling, lightweight convolution, and efficient channel interaction, together with the DIoU loss, the improved YOLOv11 achieves better accuracy and robustness in complex agricultural settings such as jujube orchards, providing a more practical and scalable solution for intelligent fruit detection.

2.3. Harvesting Position Localization

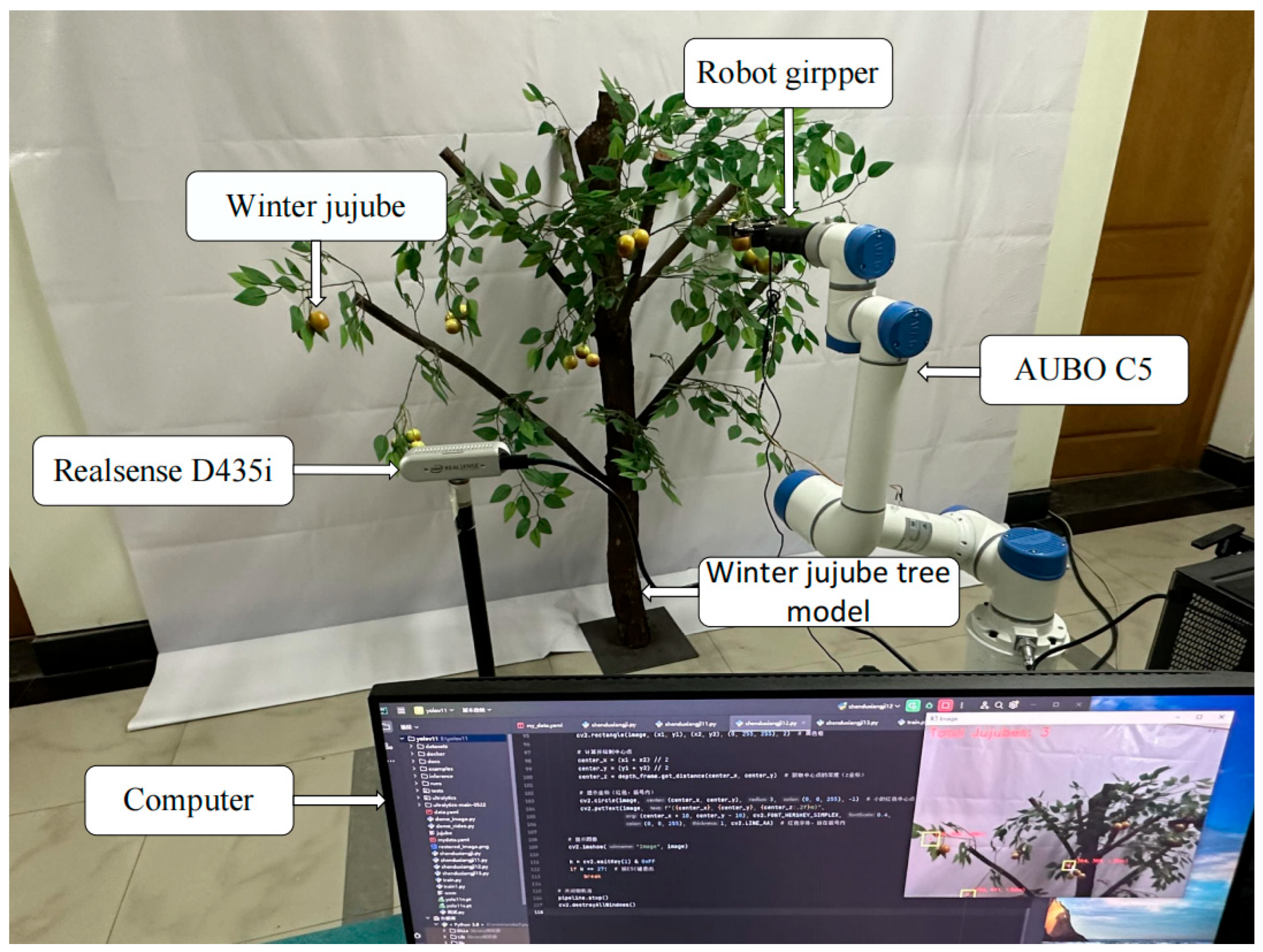

To improve the precision and efficiency of winter jujube harvesting, this study proposes a robotic harvesting system that integrates a depth camera with an improved YOLO object detection model. Table 1 lists the core components of the system.

The system mainly consists of three key parts: an AUBO C5 robotic arm (AUBO Robotics, Beijing, China), a RealSense D435i depth camera (Intel Corporation, Santa Clara, CA, USA), and a computer-based control unit. Unlike conventional systems that depend on a single device, this setup integrates multiple modern technologies to achieve efficient, precise, and stable automation. The system is shown in Figure 7.

The proposed winter jujube harvesting point localization process is divided into four main steps:

(1) Object Detection: First, the system identifies winter jujubes and extracts their 2D positions with the improved YOLO detector. By integrating ShuffleNetV2, CBAM, and C2f_WTConv, detection accuracy and efficiency are significantly increased.

(2) Three-Dimensional Localization and Depth Information Processing: After determining the 2D positions, the RealSense D435i obtains depth information to support 3D positioning. The specific calculation Formulas (7)–(9) are as follows:

$$X = \frac{(u - c_x)\,d}{f_x} \tag{7}$$

$$Y = \frac{(v - c_y)\,d}{f_y} \tag{8}$$

$$Z = d \tag{9}$$

here, $(u, v)$ represents the 2D coordinates of the winter jujube localization, d is the corresponding depth, representing the distance from the camera to the center of the winter jujube, $(X, Y, Z)$ is the 3D coordinates of the winter jujube localization, $f_x$ and $f_y$ represent the camera’s focal lengths in the x- and y-directions, respectively, and $(c_x, c_y)$ is the principal point of the camera.
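The back-projection step can be sketched under the usual pinhole camera model. The principal point `(cx, cy)` and all numeric intrinsics below are hypothetical placeholders, since the actual calibration values of the camera are not given in the text, and lens distortion is ignored.

```python
def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth `depth` into camera coordinates.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    The result has the same length unit as `depth`.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


# Hypothetical intrinsics for a 640 x 480 image, depth in metres.
point = pixel_to_camera(u=380, v=300, depth=0.5,
                        fx=600.0, fy=600.0, cx=320.0, cy=240.0)
```

A pixel at the principal point maps onto the optical axis, so its X and Y coordinates are zero regardless of depth, which is a quick sanity check for any implementation of this step.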

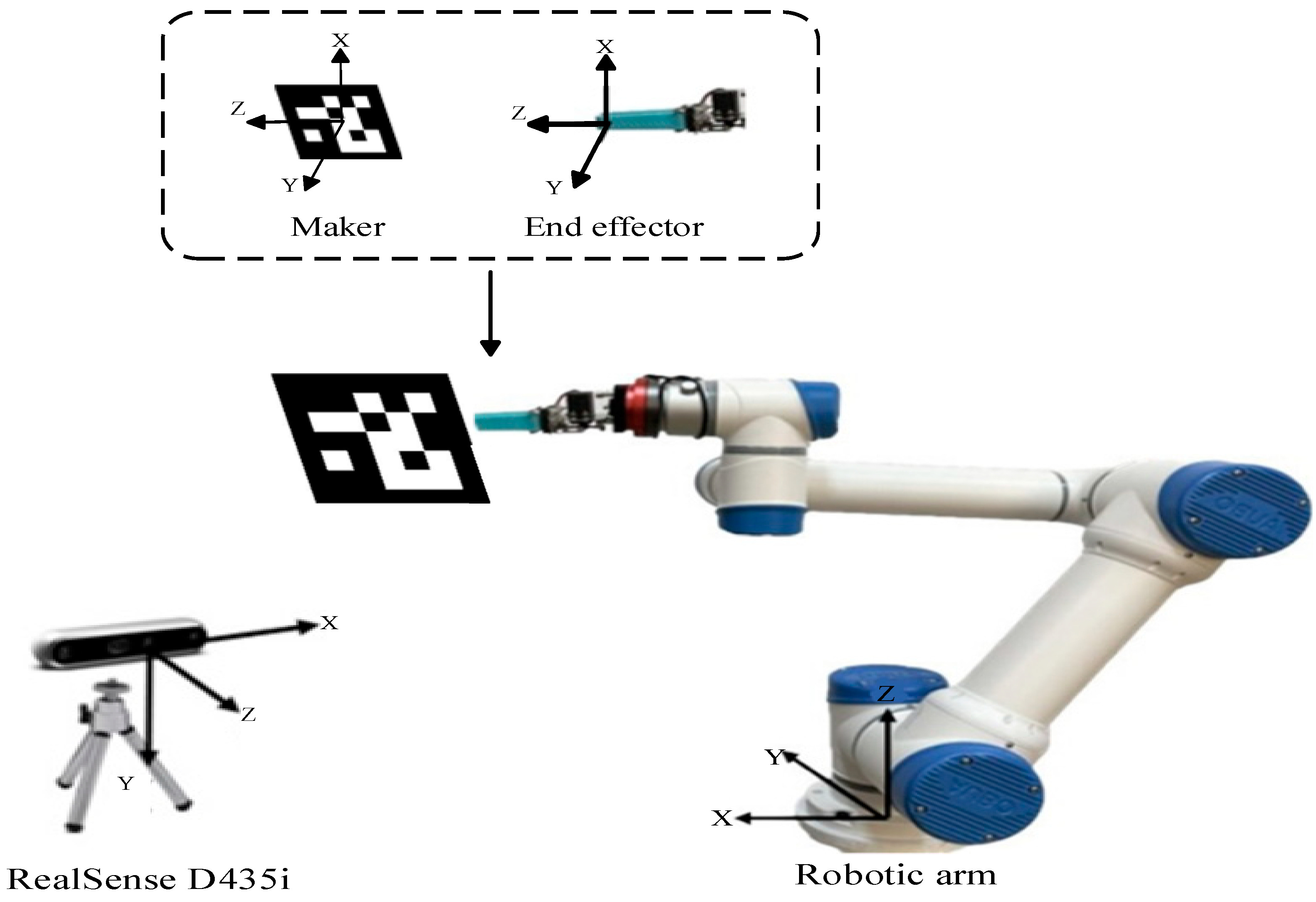

(3) Coordinate Transformation: This study applies a hand–eye calibration method to precisely map the detected 3D coordinates of winter jujubes to the robot’s coordinate frame, as shown in Figure 8. This step uses ArUco markers together with the pose of the robot’s end effector to determine the coordinate transformation. The specific calculation Formula (10) is as follows:

$$P_{robot} = R\,P_{cam} + T \tag{10}$$

here, $P_{cam}$ is the detected 3D position in the camera coordinate system, $P_{robot}$ represents the 3D position of the winter jujube in the robot base coordinate system, R is the rotation matrix, and T is the translation vector.
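Applying the calibrated transformation is a single rigid-body operation, rotation followed by translation. In the sketch below the rotation matrix and translation vector are made-up values (a 90-degree rotation about the z-axis and a small offset) used only to show the arithmetic; real values come from the calibration procedure.

```python
def camera_to_base(p_cam, R, T):
    """Map a camera-frame point into the robot base frame: R @ p_cam + T."""
    return tuple(
        sum(R[i][j] * p_cam[j] for j in range(3)) + T[i]
        for i in range(3)
    )


# Hypothetical calibration result: 90-degree rotation about z plus an offset.
R = [[0.0, -1.0, 0.0],
     [1.0,  0.0, 0.0],
     [0.0,  0.0, 1.0]]
T = (0.5, 0.0, 0.2)

p_base = camera_to_base((0.05, 0.05, 0.5), R, T)
```

Because R is orthonormal, the inverse mapping is obtained with the transpose of R applied to the point minus T, which is convenient for checking a calibration by projecting known robot positions back into the image.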

(4) Precise Robotic Harvesting: Based on the calculated 3D coordinates, the robotic arm performs accurate fruit harvesting. Thanks to optimized coordinate mapping and depth handling, the system remains stable in complex environments while ensuring picking precision and efficiency.

Based on the aforementioned steps, this study achieves efficient and precise winter jujube harvesting through the optimized YOLO object detection model and an accurate coordinate transformation method. The process of detecting and locating winter jujubes with the robotic harvesting system is shown in Figure 9.

2.4. Environment Configuration

To ensure experimental reliability and precision, comprehensive environment configurations were adopted, particularly for YOLOv11 training and deployment. Detailed configuration parameters are listed in Table 2.

2.5. Metric Evaluation

Assessment of deep learning detection models requires the use of multiple performance indicators. SCC-YOLO’s performance was evaluated using six indicators: Precision, Recall, number of parameters, mAP, FPS, and model size. These metrics provide a multi-dimensional evaluation of detection capability in complex orchard conditions. To assess the accuracy of the harvesting point localization, the absolute error and standard error in the x, y, and z directions are used as the main evaluation metrics. The detailed formulas for calculations (11)–(20) are listed below:

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{11}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{12}$$

$$AP = \sum_{k=1}^{n} P(k)\,\Delta R(k) \tag{13}$$

$$mAP = \frac{1}{N_c}\sum_{i=1}^{N_c} AP_i \tag{14}$$

$$E_x = |x_p - x_t| \tag{15}$$

$$E_y = |y_p - y_t| \tag{16}$$

$$E_z = |z_p - z_t| \tag{17}$$

$$S_x = \sqrt{\frac{1}{m-1}\sum_{i=1}^{m}\left(E_{x,i} - \bar{E}_x\right)^2} \tag{18}$$

$$S_y = \sqrt{\frac{1}{m-1}\sum_{i=1}^{m}\left(E_{y,i} - \bar{E}_y\right)^2} \tag{19}$$

$$S_z = \sqrt{\frac{1}{m-1}\sum_{i=1}^{m}\left(E_{z,i} - \bar{E}_z\right)^2} \tag{20}$$

Here, TP represents the number of true positive samples, FP represents the number of false positive samples, and FN represents the number of false negative samples. Precision represents the proportion of correctly identified positives among all predicted positive samples. Recall measures the percentage of actual positive samples correctly identified as positive by the model. mAP (mean Average Precision) represents the average precision across all $N_c$ classes; the higher the mAP score, the better the model’s performance. Since this study involves only one detection class, mAP equals AP (Average Precision). n represents the number of image samples, $P(k)$ denotes the precision when the $k$-th image is identified, and $\Delta R(k)$ represents the change in recall between the $(k-1)$-th and $k$-th samples. $E_x$, $E_y$, and $E_z$ refer to the absolute localization errors in the X, Y, and Z directions, respectively. $(x_p, y_p, z_p)$ represent the predicted coordinates of the winter jujube harvesting points in the X, Y, and Z directions, and $(x_t, y_t, z_t)$ represent the true coordinates of the winter jujube harvesting points in the X, Y, and Z directions. Lastly, $S_x$, $S_y$, and $S_z$ represent the standard errors in the X, Y, and Z directions, respectively, $\bar{E}_x$, $\bar{E}_y$, and $\bar{E}_z$ refer to the mean errors in the X, Y, and Z directions, and m is the number of localization measurements.
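The metrics described above can be checked on toy numbers with a short sketch. It makes several assumptions that the text does not confirm: AP is computed as the plain sum of precision times recall increment over detections sorted by confidence (detection toolkits often use an interpolated variant), and the spread of the localization errors uses the sample form with an n − 1 denominator. All inputs are invented for illustration.

```python
import math


def precision_recall(tp, fp, fn):
    """Precision and recall from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(is_tp, n_gt):
    """AP as the sum of P(k) * delta R(k).

    is_tp: hit flags for detections sorted by descending confidence.
    n_gt: number of ground-truth objects.
    """
    tp, ap, prev_recall = 0, 0.0, 0.0
    for k, hit in enumerate(is_tp, start=1):
        tp += int(hit)
        recall = tp / n_gt
        ap += (tp / k) * (recall - prev_recall)
        prev_recall = recall
    return ap


def error_stats(pred, true):
    """Absolute errors, their mean, and their sample standard deviation."""
    errs = [abs(p - t) for p, t in zip(pred, true)]
    mean = sum(errs) / len(errs)
    spread = math.sqrt(sum((e - mean) ** 2 for e in errs) / (len(errs) - 1))
    return errs, mean, spread


p, r = precision_recall(tp=8, fp=2, fn=2)
ap = average_precision([True, False, True], n_gt=2)
errs, mean_err, spread = error_stats([10.0, 12.0, 11.0], [9.0, 10.0, 11.0])
```

In the AP example, the false positive at rank 2 lowers the precision at which the second true positive is credited, which is why the result is below 1 even though both objects are eventually found.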

2.6. Model Deployment and Testing Methods

To highlight the practicality of edge-side deployment, this study reports the desktop-side results only briefly as an upper-bound performance baseline, while emphasizing mobile-side deployment and empirical testing.

2.6.1. Desktop-Side Deployment and Testing

The desktop-side experiments were conducted on a Windows 11 operating system equipped with an NVIDIA RTX 4070 Ti GPU, CUDA 11.6, and cuDNN 8.2.1. First, the trained SCC-YOLO model was loaded in the PyTorch framework for inference testing. The model was then exported to ONNX format, and inference acceleration was performed using ONNX Runtime (CUDAExecutionProvider). During testing, the input resolution was set to 640 × 640, and the batch size was fixed at 1. Each test image was processed 100 consecutive times, with the average frames per second (FPS), inference latency, and standard deviation recorded. An FPS distribution boxplot and a latency histogram were subsequently generated to comprehensively evaluate the real-time performance and stability of different inference frameworks.
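The repeated-run measurement can be sketched with a small timing harness. The `infer` callable is a stand-in for a real model call, and the warm-up runs are an added assumption that is not described in the text.

```python
import statistics
import time


def benchmark(infer, sample, runs=100, warmup=5):
    """Time `infer(sample)` repeatedly and summarise latency and FPS."""
    for _ in range(warmup):  # assumed warm-up, excluded from the statistics
        infer(sample)
    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    mean_ms = statistics.mean(latencies_ms)
    return {
        "runs": len(latencies_ms),
        "mean_latency_ms": mean_ms,
        "std_latency_ms": statistics.stdev(latencies_ms),
        "fps": 1000.0 / mean_ms,
        "latencies_ms": latencies_ms,  # raw values for a histogram or boxplot
    }


def dummy_infer(image):
    """Placeholder workload standing in for a model forward pass."""
    return sum(image)


stats = benchmark(dummy_infer, list(range(10_000)))
```

When the workload runs on a GPU, kernels are typically launched asynchronously, so the device has to be synchronised before each clock reading for the latencies to be meaningful.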

2.6.2. Mobile-Side Deployment and Testing

The mobile-side deployment was conducted on a Huawei Mate 40 smartphone (Kirin 9000 chipset, 8 GB RAM, Android 13). The deployment environment consisted of Android Studio Koala|2024.1.1, Gradle 8.2.1, NDK r26c, OpenCV 4.8.0, and the NCNN inference framework with Vulkan and FP16 acceleration enabled. The model conversion pipeline was as follows:

(1) Export the trained PyTorch model to ONNX format;

(2) Use the NCNN toolchain to convert the ONNX model into .param and .bin files;

(3) Place the model files in the assets directory of the Android project, and load them via NCNN API calls in the C++ layer for inference;

(4) Enable Vulkan computation and FP16 storage during inference to improve throughput and reduce memory usage.

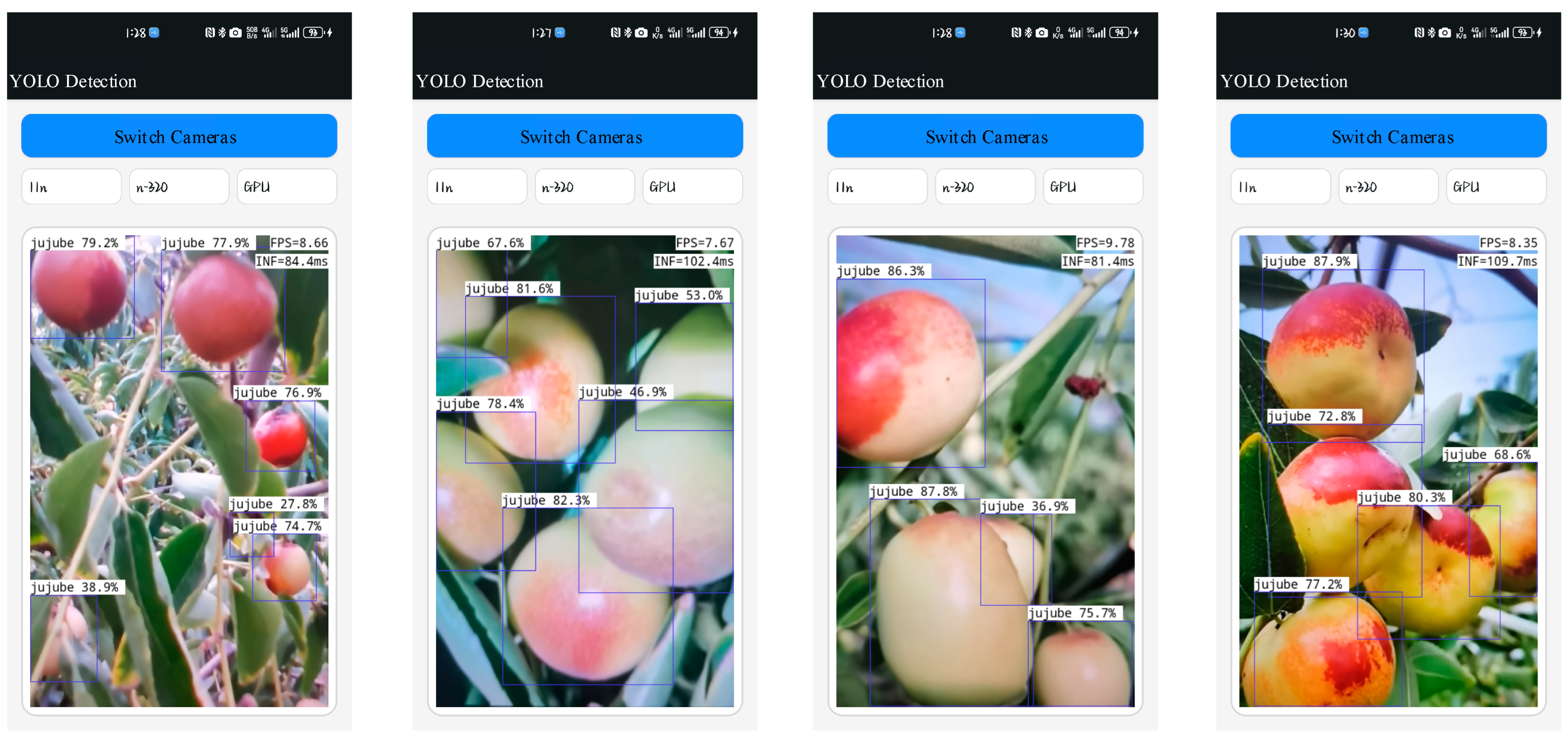

To evaluate the real-time performance and detection capability on the mobile side, real-time camera inference experiments were conducted outdoors under natural lighting conditions, with an input resolution of 640 × 640 and a batch size of 1. In Vulkan+FP16 mode, the frame rate varied between 7 and 13 FPS depending on scene complexity. As shown in Figure 10, even in complex scenarios with multiple occluding objects and background interference, the system maintains a usable frame rate and accurately identifies multiple winter jujubes. Although this meets basic real-time detection requirements, in actual continuous picking tasks the robot must perform not only object detection but also precise positioning and robotic arm execution, and any delay in this chain may affect the overall efficiency of the system. Therefore, although mobile deployment demonstrates initial feasibility for robotic picking, further performance evaluation is still needed to ensure stable operation in complex dynamic environments. We plan to evaluate the system throughout the continuous picking task chain, including latency, real-time performance, power consumption, and efficiency, to better support practical applications.

3. Results and Discussion

3.1. Ablation Study

To examine the effect of modules such as ShuffleNetV2, CBAM, and C2f_WTConv on winter jujube detection, YOLOv11 served as the baseline model, and these components were incrementally integrated for comparison. As shown in Table 3, each module’s contribution to robustness, detection accuracy, and real-time performance was assessed to refine detection and positioning. Specifically, employing ShuffleNetV2 in the backbone enhanced performance while greatly lowering complexity and size, cutting FLOPs by 3.0 G and shrinking model size by 57.65%. With the CBAM module added to the head, Precision rose by 2.0% and Recall by 0.20%, while mAP showed a minor decline. With the addition of the C2f_WTConv module, Precision and Recall reached 91.74% and 86.62%, respectively, while mAP improved to 83.90%. Finally, with the DIoU loss included, the integration of all components achieved a Precision of 92.80%, a Recall of 86.85%, and an mAP of 85.30%, marking the highest accuracy. Furthermore, the overall lightweight advantage of the model was fully realized, significantly reducing computational load and storage requirements and ensuring efficient operation under resource-constrained conditions. As shown in Figure 11, four sample images illustrate the detection results of the improved model.

Detection performance was compared between the baseline model and its improved versions. Every model was trained and evaluated on an identical dataset that included diverse lighting, occlusion, viewing angles, and maturity stages. Additionally, to further enhance robustness, data augmentation methods such as rotation, cropping, and brightness adjustment were applied, enabling better adaptability in complex orchard environments. In scenarios with poor lighting or leaf blockage, the standard YOLOv11 model experienced significant instances of missed detection. Additionally, for winter jujubes that overlapped or were partially occluded, the YOLOv11 model exhibited a high rate of both missed and false detections. This occurs mainly because low resolution for small, densely packed objects makes it hard to differentiate closely spaced or partly overlapping targets. Particularly when the targets were occluded, important feature information was often not extracted, affecting the accuracy of target recognition. SCC-YOLO demonstrated notable performance gains over YOLOv11 when tested in such challenging conditions. This was primarily due to the introduction of advanced modules such as ShuffleNetV2, CBAM, and C2f_WTConv. By integrating these innovative modules, SCC-YOLO displayed stronger robustness in complex environments, more accurately capturing target features. The model performed significantly better, particularly in cases of insufficient lighting or target occlusion.

3.2. Comparative Experiment

For a thorough assessment, SCC-YOLO was compared experimentally against multiple object detection models. Table 4 provides a detailed summary of the performance results of these models across several metrics, including precision, recall, mAP, parameter count, model size, and FLOPs. SCC-YOLO achieved competitive performance on all metrics, with a clear advantage in computational efficiency.

In terms of precision, SCC-YOLO achieved 92.80%, exceeding both YOLOv7-tiny’s 90.03% and YOLOv10n’s 90.41%. For recall, SCC-YOLO achieved 86.85%, slightly lower than YOLOv7-tiny’s 86.91% but still at a high level. Regarding the mAP metric, SCC-YOLO achieved 85.32%. Although this is slightly lower than the mAP of YOLOv7-tiny, SCC-YOLO requires only about one-fifth of the parameters and has a significantly smaller model size. This compactness allows SCC-YOLO to deliver comparable detection accuracy with much lower storage and computational overhead on resource-constrained edge devices. In practical scenarios such as mobile orchard monitoring or embedded harvesting robots, hardware is often limited by storage capacity, processing speed, and power consumption. Under such conditions, lightweight design becomes more critical than pursuing the highest possible mAP. Therefore, SCC-YOLO, while maintaining high accuracy, is more suitable for edge deployment. In terms of model complexity, SCC-YOLO has the smallest parameter count (1.17 M) among all models, with FLOPs of 5.40 G, only slightly higher than YOLOv5n’s 4.50 G and substantially lower than RT-DETR-L’s 110 G. As for model size, SCC-YOLO is the smallest among the compared models at just 2.53 MB, providing a significant advantage for edge deployment. It is worth noting that YOLOv12, the latest version in the YOLO series, achieved an mAP of 85.29%, comparable to SCC-YOLO, but with a larger parameter count (3.01 M) and model size (7.52 MB), further highlighting SCC-YOLO’s lightweight advantage for edge deployment.

Additionally, the detection capability of SCC-YOLO was assessed on winter jujube images with challenging conditions such as variable lighting, leaf coverage, and overlapping fruits, as shown in

Figure 12. Experimental findings indicate that in cases of leaf coverage or smaller fruit size, all models experienced reduced detection accuracy due to weaker feature visibility. However, SCC-YOLO showed greater robustness, with fewer false negatives under severe occlusion or faint features.

Under dim lighting, certain models (e.g., SSD) failed to distinguish winter jujubes from the background, leading to errors. Thanks to its improved architecture, SCC-YOLO identified low-contrast areas more accurately, avoiding such errors.

In summary, SCC-YOLO achieves an excellent balance between detection performance and computational efficiency. As shown in Figure 12 and Table 4, it maintains a very small size and low computation while ensuring high accuracy and recall, which makes it particularly suitable for efficient detection of winter jujube targets in complex orchard environments.

To further reinforce the reliability of the comparative results, we performed 5-fold cross-validation and conducted statistical significance testing. As summarized in Table 5, the YOLO11 baseline achieved 0.827 mAP@[0.5,0.95] (95% CI [0.815, 0.839]), while SCC-YOLO reached 0.851 (95% CI [0.837, 0.865]). The paired difference was Δ = +0.024 (95% CI [+0.010, +0.038]), which was statistically significant under the paired t-test (p = 0.018) and the Wilcoxon test (p = 0.031). For mAP@0.5, SCC-YOLO averaged 0.926 compared with 0.902 for the baseline, with Δ = +0.024 (95% CI [+0.009, +0.039], p < 0.05). These findings confirm that SCC-YOLO consistently outperforms the baseline under cross-validation, providing robust statistical evidence for its advantages.
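As an illustration of the paired analysis described above, the following minimal sketch computes a paired t statistic, a 95% confidence interval, and an exact Wilcoxon signed-rank p-value over five folds. The per-fold mAP values are hypothetical placeholders, not the fold-level results of this study, and all variable names are chosen only for this example. The constant 2.776 is the two-sided 95% critical value of Student’s t distribution with 4 degrees of freedom.

```python
import itertools
import math
import statistics

# Hypothetical per-fold mAP values (placeholders, not the study's data).
baseline = [0.815, 0.822, 0.829, 0.833, 0.836]
improved = [0.836, 0.845, 0.851, 0.859, 0.866]

# Paired differences, one per fold.
diffs = [b - a for a, b in zip(baseline, improved)]
n = len(diffs)

# Paired t statistic and 95% confidence interval for the mean difference.
mean_diff = statistics.mean(diffs)
std_err = statistics.stdev(diffs) / math.sqrt(n)
t_stat = mean_diff / std_err
T_CRIT_95_DF4 = 2.776  # two-sided 95% t quantile for df = n - 1 = 4
ci_95 = (mean_diff - T_CRIT_95_DF4 * std_err,
         mean_diff + T_CRIT_95_DF4 * std_err)


def wilcoxon_exact(d):
    """Exact signed-rank test; assumes no zero differences and no tied |d|."""
    m = len(d)
    # Rank the absolute differences from 1 (smallest) to m (largest).
    order = sorted(range(m), key=lambda i: abs(d[i]))
    rank = [0] * m
    for r, i in enumerate(order, start=1):
        rank[i] = r
    w_plus = sum(rank[i] for i in range(m) if d[i] > 0)
    # Null distribution: every rank is positive or negative with equal odds.
    null = [
        sum(r for r, s in zip(range(1, m + 1), signs) if s)
        for signs in itertools.product((0, 1), repeat=m)
    ]
    p_greater = sum(w >= w_plus for w in null) / len(null)
    p_less = sum(w <= w_plus for w in null) / len(null)
    p_two_sided = min(1.0, 2.0 * min(p_greater, p_less))
    return w_plus, p_greater, p_two_sided


w_plus, p_one_sided, p_two_sided = wilcoxon_exact(diffs)
print(f"mean diff = {mean_diff:.4f}, t = {t_stat:.2f}, "
      f"95% CI = [{ci_95[0]:.4f}, {ci_95[1]:.4f}]")
print(f"W+ = {w_plus}, one-sided p = {p_one_sided:.4f}, "
      f"two-sided p = {p_two_sided:.4f}")
```

With only five folds, the exact signed-rank p-value cannot fall below 1/32 ≈ 0.031 (one-sided) or 2/32 = 0.0625 (two-sided). Both floors are reached when every fold difference has the same sign, as in this example.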

3.3. Three-Dimensional Localization Error Evaluation of Winter Jujube

To assess the real-world performance of the winter jujube harvesting system—combining the optimized YOLO model with an RGB-D camera as described in

Section 2.3—an autonomous robotic harvesting platform was developed (see

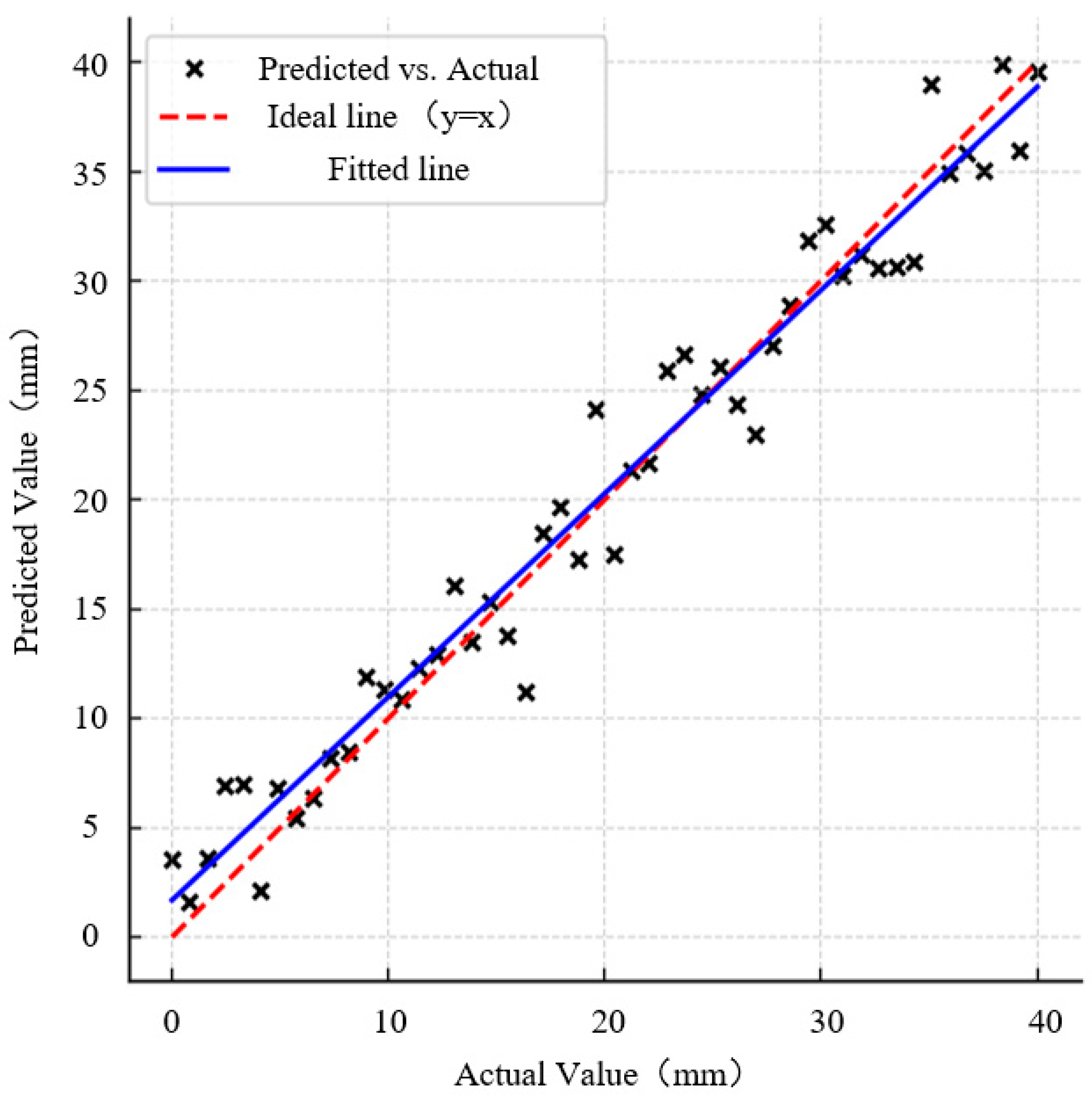

Figure 7). Experiments were carried out with artificial winter jujube trees in a lab setting to systematically assess the robot’s detection and picking abilities. First, the optimized YOLO model obtained real-time 2D image coordinates of the winter jujube targets. Second, the depth data from the RGB-D camera were combined with the 2D coordinates to perform 3D spatial localization, yielding the spatial coordinates of each fruit in the camera frame. Third, these coordinates were mapped into the robot’s coordinate system using the calibrated coordinate transformation. Finally, the robot’s end effector was moved automatically to the computed target harvesting point, and a high-precision laser rangefinder recorded, in real time, the position error between the location actually reached by the end effector and the predetermined target point.
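The localization chain described above (2D detection, depth lookup, transformation into the robot frame) can be sketched as follows. This is a minimal illustration that assumes a standard pinhole camera model and takes the bounding-box center as the target pixel; the intrinsics `FX`, `FY`, `CX`, `CY`, the 4 × 4 matrix `T_BASE_CAM`, the bounding box, and the depth value are all hypothetical, and the formulation actually used in this work may differ.

```python
def box_center(x1, y1, x2, y2):
    """Center pixel (u, v) of a detection box given by its corners."""
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def pixel_to_camera(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth Z (meters) into the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def camera_to_base(p_cam, t_base_cam):
    """Apply a 4x4 homogeneous transform (nested lists) to a 3D point."""
    h = (p_cam[0], p_cam[1], p_cam[2], 1.0)
    return tuple(
        sum(t_base_cam[r][c] * h[c] for c in range(4)) for r in range(3)
    )


# Hypothetical camera intrinsics in pixels (assumed, not from the paper).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# Hypothetical camera-to-base extrinsics from an eye-to-hand calibration:
# camera axes (x right, y down, z forward) map to base axes
# (x forward, y left, z up), with a translation in meters.
T_BASE_CAM = [
    [0.0, 0.0, 1.0, 0.10],
    [-1.0, 0.0, 0.0, 0.00],
    [0.0, -1.0, 0.0, 0.50],
    [0.0, 0.0, 0.0, 1.00],
]

# Hypothetical detection box (pixels) and depth reading (meters).
u, v = box_center(350, 200, 410, 260)
p_cam = pixel_to_camera(u, v, 0.60, FX, FY, CX, CY)
p_base = camera_to_base(p_cam, T_BASE_CAM)
print("camera frame (m):", p_cam)
print("robot base frame (m):", p_base)
```

In practice the depth at a single pixel can be missing or noisy, so a median over a small window around the box center is a common choice; that refinement is omitted here for brevity.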

To ensure the validity of the experimental data, each winter jujube target underwent five localization and harvesting experiments, with the average of the five measurement errors taken as the final result. Ten winter jujube targets positioned at various locations were randomly chosen for testing. Results showed mean localization errors of 3.62 mm, 4.83 mm, and 4.12 mm along the X, Y, and Z axes, respectively, with standard errors of 1.78 mm, 1.90 mm, and 1.64 mm, satisfying precise harvesting standards. To intuitively validate the localization accuracy,

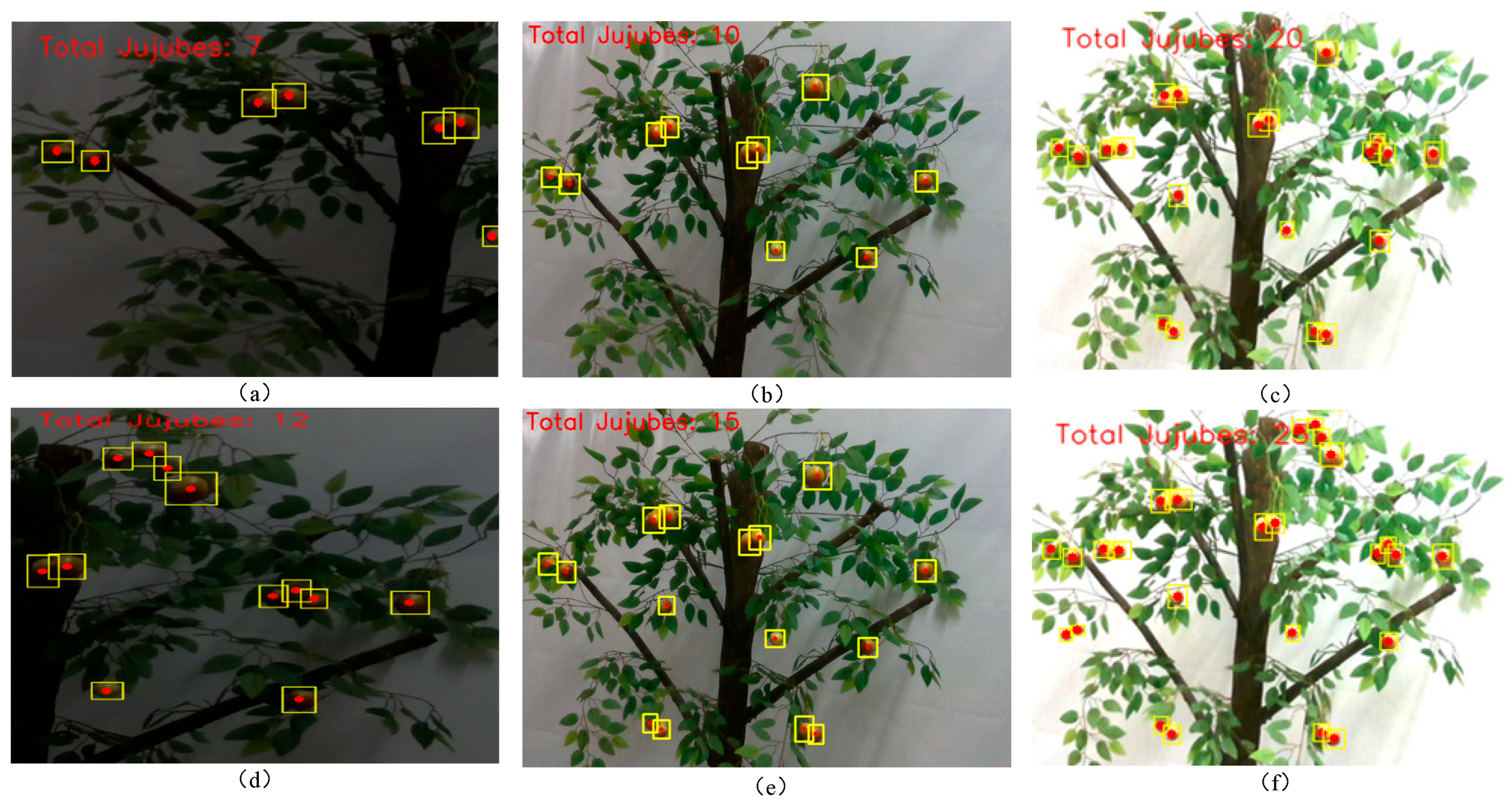

Figure 13 illustrates the relationship between predicted and actual positions of winter jujubes. The scatter points closely follow the fitted line and approach the ideal line, demonstrating that the system achieves high localization precision with minimal deviations. In addition to tests under normal indoor illumination, we also conducted supplementary experiments under dim-light and bright-light conditions to further evaluate the robustness of the system. Finally, to validate the system’s actual harvesting capability, robotic autonomous harvesting experiments were conducted in a real environment.

Figure 14 and

Figure 15 illustrate the detection process and the actual harvesting process of winter jujubes, respectively. Experimental findings confirm that the proposed winter jujube detection and harvesting system delivers strong real-time performance and high accuracy, effectively meeting the demands for automated winter jujube harvesting in practical production.
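The per-axis error statistics used in this section (five trials per target, averaged, then summarized over targets) can be reproduced with a short sketch. The signed errors below are hypothetical values for three targets rather than the ten measured in the experiments, and the spread is reported as the sample standard deviation across targets, which is an assumption about how the dispersion could be summarized.

```python
import statistics

# Hypothetical signed errors in mm, as (dx, dy, dz), five trials per target.
trials = {
    "target_1": [(3, -5, 4), (4, 4, -3), (-2, 6, 5), (5, -4, 4), (1, 6, -4)],
    "target_2": [(2, 3, 2), (-6, 5, -6), (4, -4, 3), (5, 3, -5), (-3, 5, 4)],
    "target_3": [(5, 6, 3), (-4, -5, 5), (6, 7, -2), (-5, 6, 6), (5, -6, 4)],
}


def mean_abs_error(samples):
    """Mean absolute error per axis over the repeated trials of one target."""
    return tuple(
        statistics.mean(abs(s[axis]) for s in samples) for axis in range(3)
    )


# One (x, y, z) mean absolute error per target.
per_target = [mean_abs_error(samples) for samples in trials.values()]

# Summary over targets: mean and sample standard deviation for each axis.
axis_mean = tuple(statistics.mean(t[a] for t in per_target) for a in range(3))
axis_std = tuple(statistics.stdev(t[a] for t in per_target) for a in range(3))

for name, m, s in zip("XYZ", axis_mean, axis_std):
    print(f"{name}: mean error = {m:.2f} mm, std across targets = {s:.2f} mm")
```

Averaging the absolute error within each target first keeps a single unstable target from dominating the summary through its individual trials.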

3.4. Deployment Performance Comparison on Desktop and Mobile

To comprehensively evaluate the inference performance of the proposed SCC-YOLO model across different computing platforms, this section compares the results obtained on the desktop side (Windows + GPU) and the mobile side (Huawei Mate 40), with a focus on FPS, inference latency, and performance stability. The evaluation followed the methodology below:

(1) Desktop side: On a Windows 11 system equipped with an RTX 4070 Ti GPU, inference was performed on a single 640 × 640 image using both the PyTorch framework and ONNX Runtime (CUDAExecutionProvider), with a batch size of 1. Each image was processed 100 consecutive times, and the average was recorded for stability.

(2) Mobile side: On a Huawei Mate 40 smartphone (Kirin 9000, 8 GB RAM, Android 13), the deployment pipeline was PyTorch → ONNX → NCNN. Inference performance was evaluated under both GPU-based Vulkan+FP16 mode and CPU-only mode, following the same test conditions as the desktop side. The Vulkan+FP16 mode exploited the device’s GPU for acceleration and was selected as the primary deployment scheme.
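The repeated-inference protocol above (batch size 1, 100 consecutive runs, mean and spread recorded) can be sketched with a generic timing harness. The function `dummy_infer` is a hypothetical stand-in for a real model call, the nested-list image is a placeholder for a 640 × 640 input, and the warm-up phase is an assumption that is not stated in the methodology.

```python
import statistics
import time


def benchmark(infer, image, runs=100, warmup=10):
    """Time repeated single-image inference; return latency and FPS stats."""
    for _ in range(warmup):  # warm-up runs are excluded from the statistics
        infer(image)
    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(image)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    fps = [1000.0 / t for t in latencies_ms]
    return {
        "latency_ms_mean": statistics.mean(latencies_ms),
        "latency_ms_std": statistics.stdev(latencies_ms),
        "fps_mean": statistics.mean(fps),
        "fps_std": statistics.stdev(fps),
    }


def dummy_infer(image):
    """Hypothetical stand-in workload; replace with a real inference call."""
    return sum(sum(row) for row in image)


# Placeholder 640 x 640 single-channel input.
image = [[0.0] * 640 for _ in range(640)]
stats = benchmark(dummy_infer, image)
print(f"latency: {stats['latency_ms_mean']:.2f} ± {stats['latency_ms_std']:.2f} ms")
print(f"FPS: {stats['fps_mean']:.2f} ± {stats['fps_std']:.2f}")
```

When FPS is averaged per run, as in this sketch, the mean FPS is always at least 1000 divided by the mean latency in milliseconds, so the two reported figures need not be exact reciprocals of each other.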

Table 6 summarizes the inference performance comparison across different platforms and modes. The results indicate that, in the desktop environment, the ONNX Runtime mode, with the support of a high-performance GPU, achieved a substantially higher average FPS than the PyTorch mode (173.36 ± 16.75 vs. 46.28 ± 3.32) and a lower inference latency (5.82 ± 0.59 ms vs. 21.72 ± 1.54 ms), with minimal performance fluctuation. These findings confirm that the optimized ONNX inference engine significantly improves throughput and reduces latency compared to the baseline PyTorch framework.

On the mobile side, the results show that the GPU-accelerated (Vulkan+FP16) mode, by leveraging the parallel computing capabilities of the mobile SoC’s built-in GPU, achieved a notable increase in average FPS compared with the CPU-only mode, along with a substantial reduction in inference latency. Moreover, the performance fluctuations during continuous inference were minimal, enabling stable real-time detection under resource-constrained conditions. In contrast, although the CPU-only mode offers lower hardware power consumption, it failed to meet real-time requirements in terms of both speed and latency.

A comprehensive analysis indicates that, on the desktop side, the combination of a high-performance GPU and an optimized inference engine delivers the highest throughput and the lowest latency, making it well suited for scenarios with abundant computing resources such as batch data processing, high-precision detection, and offline analysis. Although the absolute performance of the mobile GPU-accelerated (Vulkan+FP16) scheme is lower than that of the desktop platform, it fully satisfies the real-time requirements for orchard on-site detection, edge computing, and on-device deployment in unmanned systems, while offering significant advantages in power consumption and deployment cost. Despite the pronounced differences in FPS and latency between desktop and mobile platforms, this gap reflects the model’s capability to achieve maximum performance on resource-rich platforms while maintaining stable real-time detection on resource-constrained devices. These findings verify the proposed SCC-YOLO model’s transferability and engineering applicability across diverse scenarios and platforms, laying a solid foundation for its promotion in practical agricultural production.

Despite achieving 7–13 FPS in complex scenarios on the mobile side, which meets the basic real-time requirements, the system may still encounter latency during continuous harvesting tasks in practical applications.

In addition to FPS, latency, and stability, we also evaluated power consumption, memory footprint, and device heating during mobile inference, as these aspects are critical for battery-powered agricultural robots. In Vulkan+FP16 mode, the average power consumption during continuous inference was approximately 4.7 W, compared to 3.2 W in CPU-only mode. The peak memory usage reached about 618 MB (GPU) versus 412 MB (CPU). Furthermore, the device surface temperature near the SoC region increased by 6.9 °C after 10 min of continuous inference in GPU mode, while the CPU-only mode showed a smaller rise of 3.1 °C. These results suggest that although GPU acceleration introduces higher power and thermal overhead, the values remain within the acceptable range for mobile devices and are offset by the significant gains in inference speed and real-time detection capability. Therefore, future work should not only focus on optimizing model performance but also on thermal management and energy efficiency to ensure stable operation in long-term dynamic environments, particularly for automated harvesting robots.
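One way to relate the power and speed figures is the energy spent per processed frame, which is the average power divided by the frame rate. The sketch below uses the 4.7 W reported for Vulkan+FP16 mode and assumes that the 7–13 FPS range quoted for the mobile side applies to that mode; the function name is hypothetical. Under that assumption the cost is roughly 0.36 to 0.67 J per frame.

```python
def energy_per_frame_j(power_w, fps):
    """Energy per processed frame in joules: watts divided by frames/second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return power_w / fps


GPU_POWER_W = 4.7         # average power in Vulkan+FP16 mode (from the text)
FPS_RANGE = (7.0, 13.0)   # mobile FPS range (assumed to apply to GPU mode)

worst_case_j = energy_per_frame_j(GPU_POWER_W, FPS_RANGE[0])
best_case_j = energy_per_frame_j(GPU_POWER_W, FPS_RANGE[1])
print(f"energy per frame: {best_case_j:.2f} to {worst_case_j:.2f} J")
```

The same calculation for CPU-only mode would require its measured FPS, which is why a lower power draw alone does not establish better energy efficiency per detection.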

3.5. Comparison of Related Studies

To further validate the effectiveness of the SCC-YOLO model proposed in this research for winter jujube detection and localization, this section compares it with other related object detection models proposed in recent studies.

In detection testing, SCC-YOLO reached 92.80% precision, 86.85% recall, and 85.30% mAP. In terms of model lightweighting, SCC-YOLO has 1,170,521 parameters (about 1.17 M) and a model size of 2.53 MB. It also demonstrates high computational efficiency and real-time performance, making it suitable for deployment in resource-constrained environments.

Ma et al. developed the STRAW-YOLO model for identifying strawberries and locating key points. Experimental results show that it achieved a precision of 91.6%, a recall of 91.7%, and an impressive mAP of 96% [11]. Despite its strong performance, the model has not undergone significant optimization in terms of parameter size and computational complexity, which presents challenges for deployment in resource-limited environments. Wu et al. designed the DNE-YOLO model for apple fruit detection in natural environments, achieving a precision of 90.7%, a recall of 88.9%, and an mAP of 94.3% [13]. Although the model showed high accuracy, it contains roughly 10.46 M parameters and requires substantial computation, which restricts its use in resource-limited settings.

Furthermore, in terms of 3D localization, Wang et al. proposed the A3N network, which combines RGB-D sensor information to achieve fruit instance segmentation and grasp pose estimation in complex orchard environments. The center grasp error was about 0.61 cm, with a computation time of 35 ms [28]. Zhang et al. designed an active laser-camera scanning system (ALACS), which achieved localization errors ranging from 6.9 to 11.2 mm under complex lighting conditions, showing stronger environmental adaptability [29]. However, these approaches involve additional hardware costs, with complexity and expenses considerably higher than the RGB-D and YOLO-based detection scheme presented in this work.

Compared with the above methods, and as indicated in Table 4, the SCC-YOLO proposed here holds notable advantages: it achieves substantial model lightweighting while maintaining high detection accuracy, significantly reducing storage and computational requirements and making it more suitable for deployment on mobile devices or in edge computing environments. The model also delivers strong computational efficiency along with real-time processing performance. Moreover, the RGB-D camera combined with the YOLO detection method used in this research has significantly lower cost and deployment complexity than additional hardware systems such as ALACS and the A3N network. In summary, SCC-YOLO offers a practical, efficient, and scalable solution for intelligent fruit harvesting systems, with advantages in detection accuracy, real-time operation, efficiency, and cost-effectiveness, and with mobile deployment and real-time performance verified across platforms. These properties position it as a strong option for large-scale application in practical winter jujube harvesting robots.

3.6. Limitations and Future Work

Although SCC-YOLO demonstrates promising results, several limitations should be acknowledged. From a societal and ecological perspective, robotic harvesting may reduce demand for seasonal labor and pose risks of unintended tree damage, underscoring the need to integrate technological innovation with social responsibility and ecological monitoring to ensure sustainable adoption.

In terms of robustness, while SCC-YOLO achieves high accuracy in both controlled and natural orchard environments, its performance may degrade under extreme weather (e.g., heavy rainfall, dense fog) or rapid fruit/branch movements, potentially leading to false detections or missed targets. Addressing these challenges will require further exploration of domain adaptation, multi-modal sensor fusion, and temporal filtering to ensure stability and reliability across diverse operating conditions. Moreover, since the dataset used in this study was collected from a single site, potential dataset-specific bias may limit the generalization of results. To overcome these limitations, future work will not only include cross-dataset validation with public agricultural benchmarks, but also extend the localization error experiments to real orchard environments with more diverse and complex conditions, thereby providing a more rigorous validation of model generalization.

Looking forward, SCC-YOLO can also be extended to broader applications. Future work may explore transfer learning for multi-crop adaptation, integration of SLAM modules for dynamic perception, and federated learning to enable distributed model improvement without centralized data aggregation. These directions will not only enhance the scalability and versatility of the framework but also ensure its alignment with long-term social and environmental goals.

4. Conclusions

This study presents SCC-YOLO, a compact and accurate detection model tailored for intelligent winter jujube harvesting. By combining an optimized YOLOv11 architecture with RGB-D sensing, the proposed system achieves robust detection and millimeter-level 3D localization in complex orchard environments. In addition, the model demonstrates excellent cross-platform deployment capabilities, supporting both high-performance desktop inference and resource-constrained mobile deployment. The specific conclusions are as follows:

(1) A lightweight SCC-YOLO framework was developed for winter jujube detection, reducing parameters, model size, and computational complexity while maintaining strong accuracy and efficiency.

(2) Architectural and loss-function refinements—including attention-based recalibration and a redesigned C2f_WTConv—enhance spatial–frequency feature learning and improve robustness to illumination changes, occlusion, and cluttered backgrounds.

(3) Coupled with RGB-D sensing and Eye-to-Hand calibration, SCC-YOLO lifts 2D detections to metric 3D coordinates in the robot base frame. Image coordinates are fused with depth and transformed via camera-to-base extrinsics, enabling precise harvest-point localization for automated picking in real orchards.

(4) For engineering validation, SCC-YOLO was deployed on desktop (PyTorch/ONNX Runtime) and mobile (NCNN + Vulkan/FP16). With matched inputs, throughput/latency/stability tests confirmed real-time operation on mobile and sustained high performance on desktop GPUs, demonstrating portability for edge computing.