3.2. Experimental Results

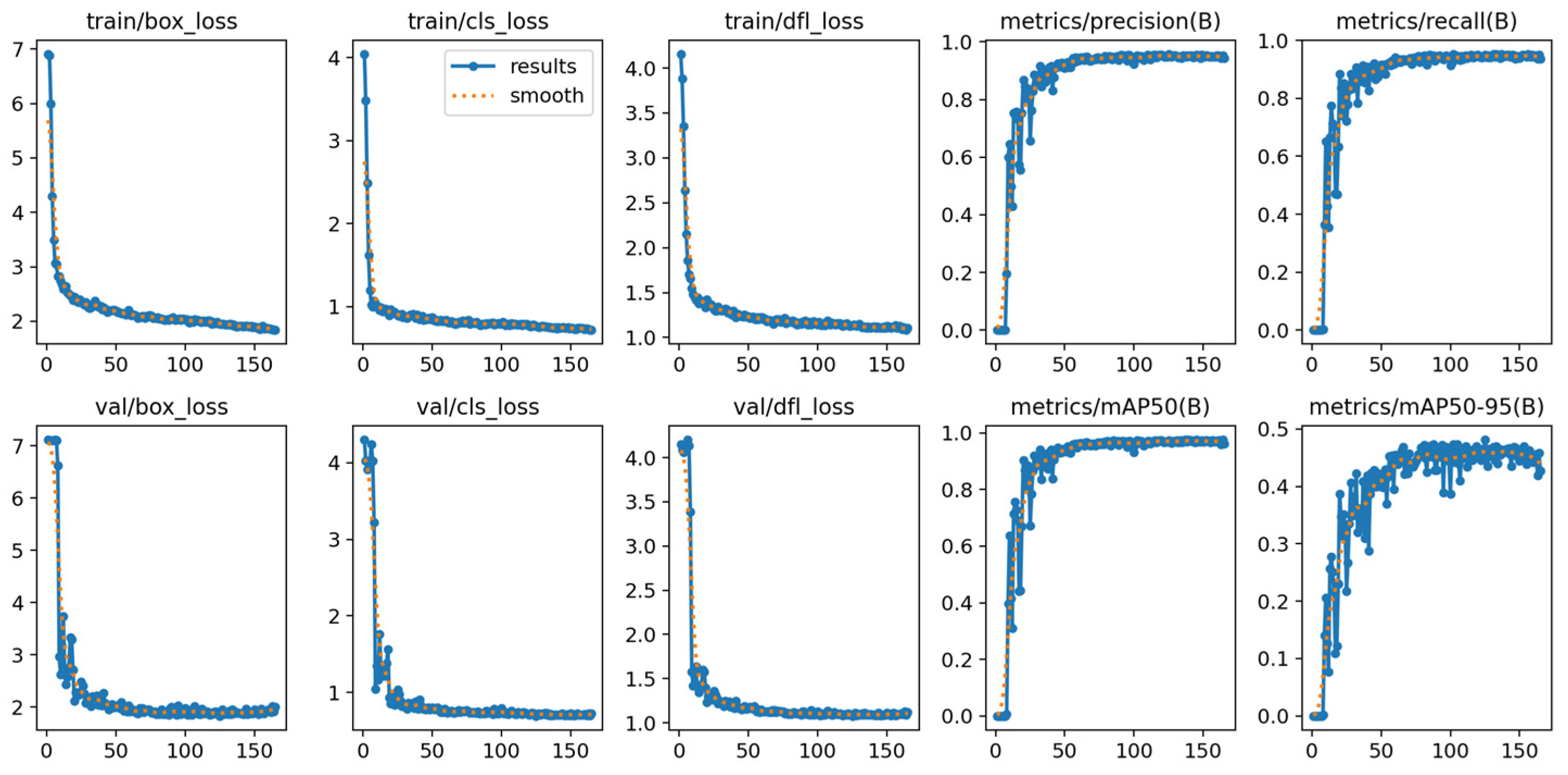

Figure 7 shows the loss curves and the evolution of precision, recall, and mAP during the training of the LF-Yolo model. As the number of training iterations increases, the loss gradually decreases and converges to a stable value, while precision, recall, and mAP show an overall upward trend, indicating that LF-Yolo effectively learns the required features.
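Precision and recall are obtained by matching predicted boxes to ground-truth boxes at an IoU threshold. The sketch below shows the conventional greedy matching at IoU ≥ 0.5 in plain Python. It is a minimal illustration under that assumption, not the evaluation code used for LF-Yolo, and the helper names `iou` and `precision_recall` are hypothetical.

```python
from typing import Sequence, Tuple

# Axis-aligned box as (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: Sequence[Tuple[Box, float]], gts: Sequence[Box],
                     iou_thr: float = 0.5) -> Tuple[float, float]:
    """Greedy matching: higher-confidence predictions claim ground truths first.

    Each ground-truth box is matched at most once. Unmatched predictions are
    false positives; unmatched ground truths are false negatives (missed ears).
    """
    matched = [False] * len(gts)
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


# Two adjacent ears: one is found, one is missed, plus one background box.
gts = [(0, 0, 10, 10), (12, 0, 22, 10)]
preds = [((0, 0, 10, 10), 0.9), ((30, 30, 40, 40), 0.8)]
print(precision_recall(preds, gts))  # (0.5, 0.5)
```

In dense scenes, a single box covering two adjacent ears can match at most one of them, so merged detections lower recall even when precision stays high.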

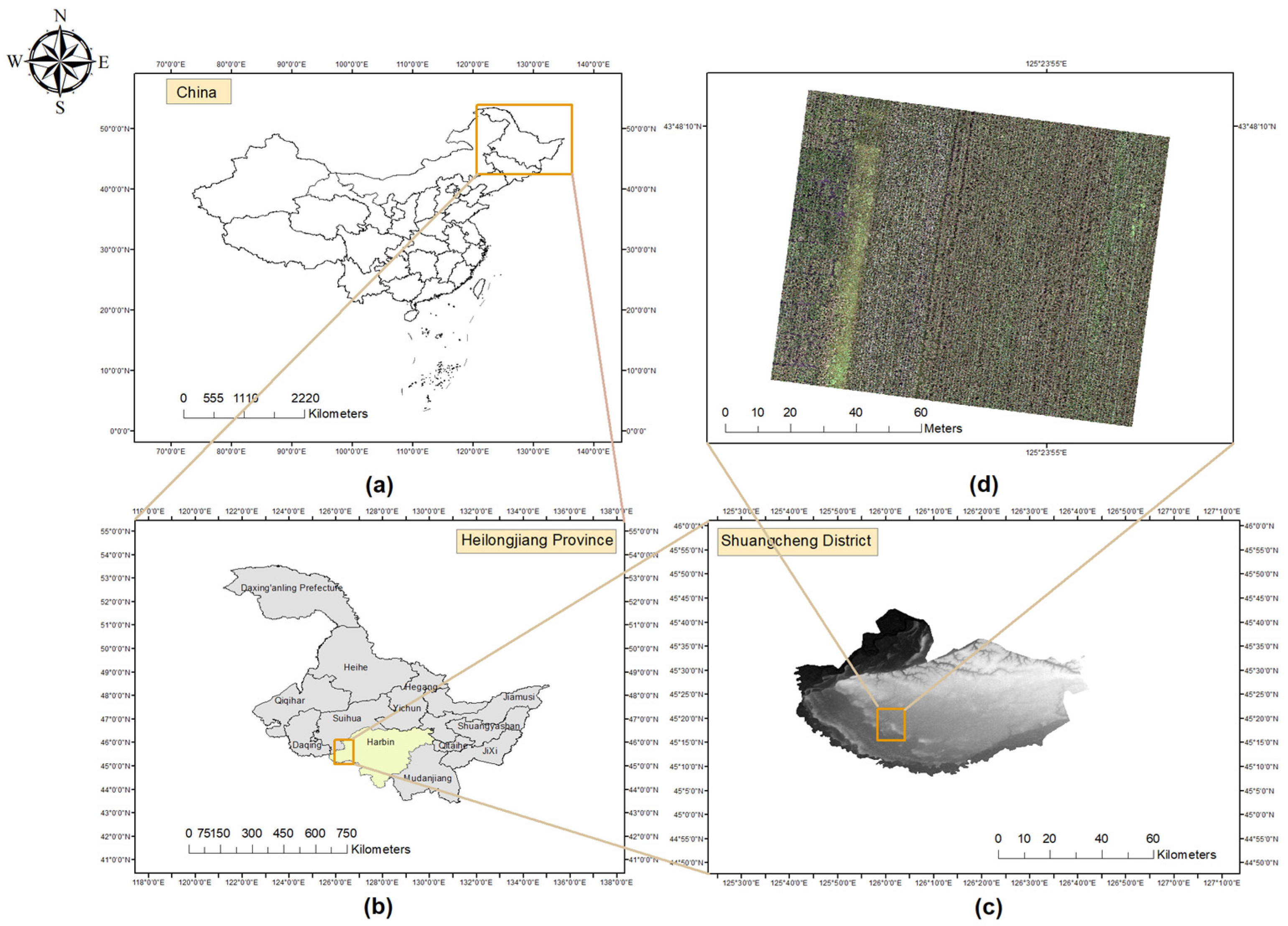

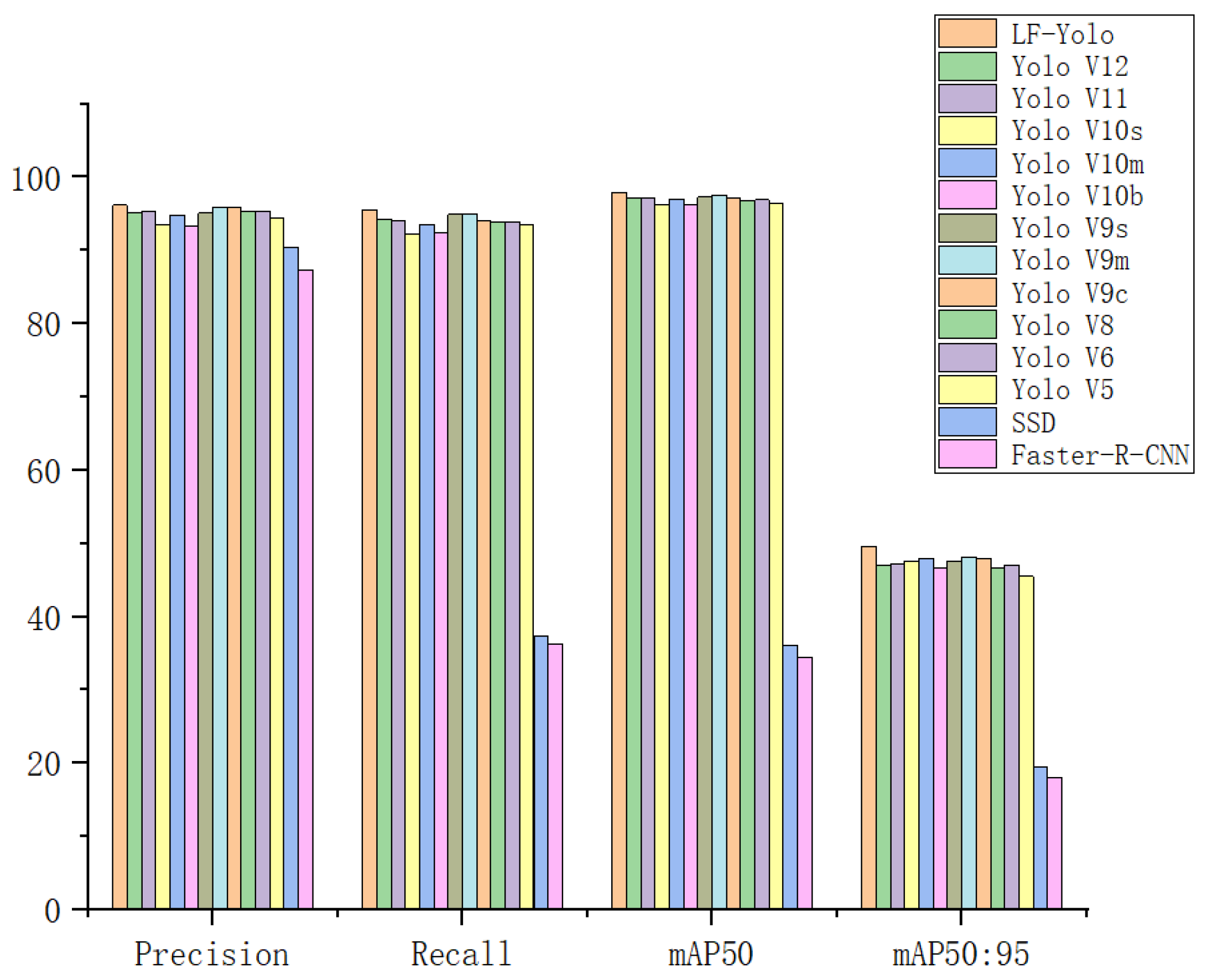

To validate the effectiveness of the proposed model for dense object detection in agricultural settings, we selected several representative and widely used one-stage and two-stage object detection models and compared them with LF-Yolo on the UAVMaize dataset. The results of the comparative experiments are shown in Table 3.

The comparison in Table 3 shows that LF-Yolo achieves consistent improvements on the key metrics over all baseline models. Compared with YoloV8 [11], a model widely used in object detection, LF-Yolo improves Precision by 0.9%, Recall by 1.6%, mAP50 by 1.1%, and mAP50:95 by 2.9% on the UAVMaize dataset, at the cost of a slight increase in computational load and parameter count. Compared with larger models such as YOLOv9c [12], YOLOv9m, and YOLOv10b [13], which require substantially more parameters and computation, LF-Yolo still offers better overall efficiency: it achieves higher precision with fewer parameters.
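mAP50 is the average precision (AP) at an IoU threshold of 0.5, and mAP50:95 averages AP over the IoU thresholds 0.50 to 0.95 in steps of 0.05; for a single-class dataset, mAP reduces to that class's AP. The improvements quoted above are differences between these percentages. A minimal sketch of all-point interpolated AP follows, assuming cumulative precision/recall values are already available. Evaluation toolkits differ in interpolation details (for example, 101-point sampling), so this is illustrative rather than the exact computation behind Table 3.

```python
from typing import Sequence

import numpy as np

# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95 used for mAP50:95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def average_precision(recall: Sequence[float], precision: Sequence[float]) -> float:
    """All-point interpolated AP (area under the precision envelope).

    `recall` and `precision` are cumulative values for detections sorted by
    descending confidence, so `recall` is non-decreasing.
    """
    r = np.concatenate(([0.0], np.asarray(recall, dtype=float), [1.0]))
    p = np.concatenate(([0.0], np.asarray(precision, dtype=float), [0.0]))
    # Envelope: precision at recall r is the best precision at any recall >= r.
    p = np.flip(np.maximum.accumulate(np.flip(p)))
    # Sum rectangles wherever recall increases.
    steps = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


def map50_95(ap_per_threshold: Sequence[float]) -> float:
    """Mean of the AP values computed at each threshold in IOU_THRESHOLDS."""
    if len(ap_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold 0.50:0.05:0.95")
    return float(np.mean(ap_per_threshold))


print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```

Because mAP50:95 also rewards tight boxes at strict IoU thresholds, it typically moves more than mAP50 when localization improves.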

Across all compared models, LF-Yolo is highly competitive: it outperforms the widely used object detection models on all accuracy metrics while keeping computational complexity relatively low, striking a balance between accuracy and efficiency. The comparison results are summarized in the histograms in Figure 8 and Figure 9.

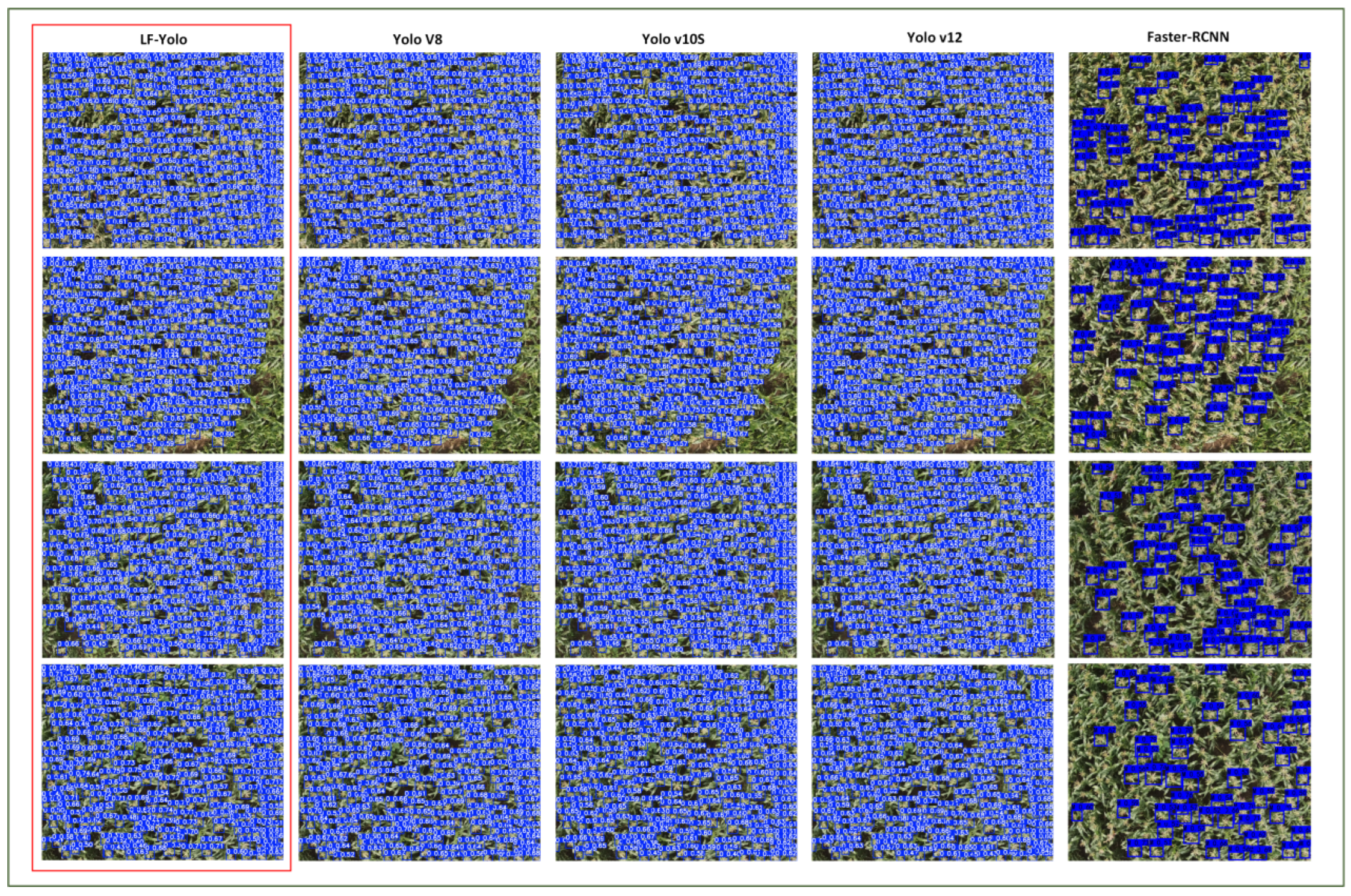

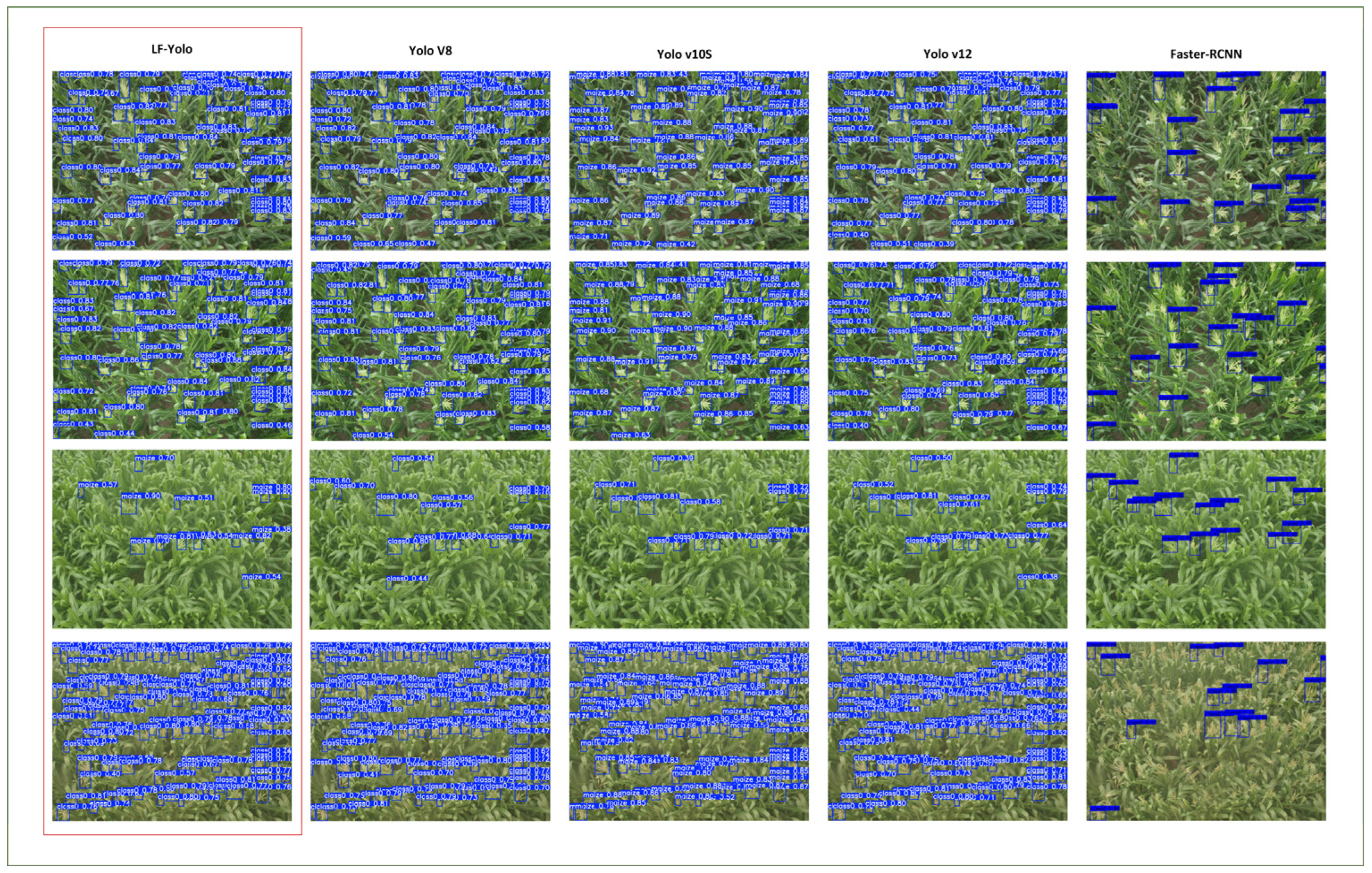

Figure 10 visualizes the prediction results of the comparative experiments on the UAVMaize dataset. For comparison, we selected YoloV8, YoloV10s, YoloV12, and the classical two-stage detector Faster R-CNN. As the figure shows, LF-Yolo achieves higher detection accuracy with fewer missed targets than the other models. Both YoloV8 and YoloV10s produce missed detections and false positives, such as detecting two adjacent ears as a single ear. Although Faster R-CNN is accurate on the targets it does detect, it misses many targets, which makes it unsuitable for dense detection in field environments.

3.3. Generalization Experiment

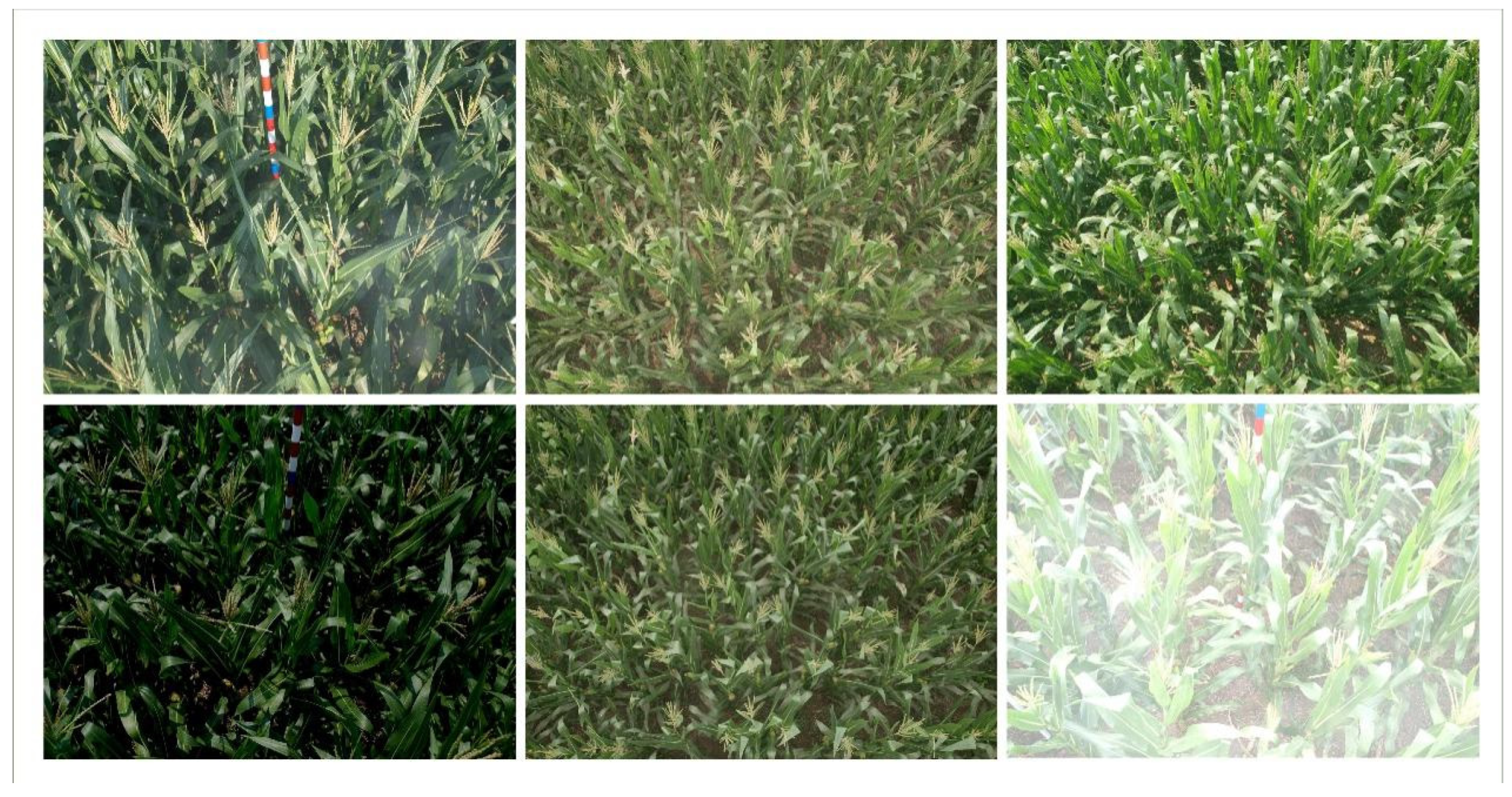

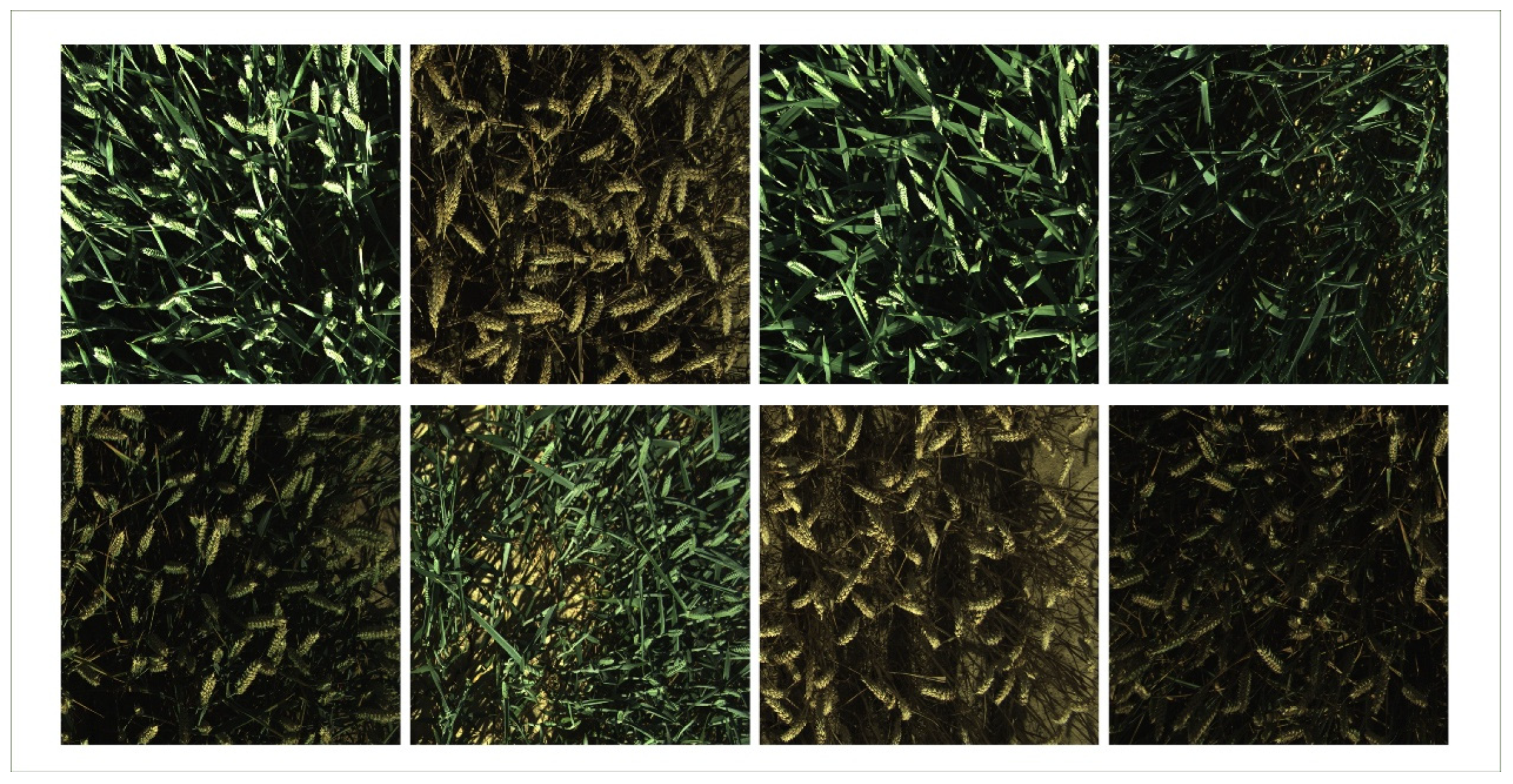

To investigate the generalization capability of the proposed LF-Yolo model on other crops and scenarios, we conducted generalization experiments on a publicly available wheat ear dataset and a maize tassel dataset.

The wheat ear data originate from field trials conducted in the Hesbaye region of Belgium in 2020 [18], covering two winter wheat varieties grown under multiple gradients of nitrogen fertilizer and fungicide treatment. Images were captured with paired industrial RGB cameras (GO-5000C-USB; JAI A/S, Copenhagen, Denmark), each equipped with a 2560 × 2048-pixel CMOS sensor and an LM16HC objective (Kowa GmbH, Düsseldorf, Germany), using adaptive exposure to prevent saturation. The dataset comprises 701 images collected at seven stages from heading to maturity, each with high-quality wheat ear boundary annotations.

The maize tassel dataset combines portions of the Maize Tasseling Detection Dataset (MTDC) [19] and the Multi-Region Maize Tassel Dataset (MrMT) [20]. MTDC was reconstructed from the publicly available MTC dataset [21]. The original MTC resource contains 361 time-series images collected from experimental fields in four regions of China between 2010 and 2015. These images cover the critical growth stages from tasseling to flowering and include high-resolution field imagery (typically 3648 × 2736 to 4272 × 2848 pixels) of six major cultivated maize varieties. They were captured from a height of 4–5 m with cameras tilted at 60°, covering planting scenarios of varying density. The original MTC dataset uses point annotations, which mark only tassel locations and carry no target-scale information, a significant limitation. The dataset was therefore upgraded with a strict center-point alignment strategy that converts the tassel point annotations into pixel-level bounding boxes. The annotations are provided in a unified VOC format and supervise both location and scale. We refer to the combined dataset as the FusionMaize dataset.
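The text does not specify how the center-point alignment strategy determines box sizes. As a hedged illustration of the output format only, the sketch below converts one point annotation into a Pascal VOC `<object>` element, assuming a box of caller-supplied size (the hypothetical parameters `box_w` and `box_h`) centered on the annotated point and clipped to the image bounds. It uses only the standard-library `xml.etree.ElementTree` module.

```python
import xml.etree.ElementTree as ET


def point_to_voc_object(cx: float, cy: float, box_w: float, box_h: float,
                        img_w: int, img_h: int, name: str = "tassel") -> ET.Element:
    """Build a Pascal VOC <object> whose box is centred on an annotated point.

    box_w / box_h are hypothetical inputs, not values from the dataset. The box
    is clipped to the image, so it stays centred only if it fits entirely.
    """
    xmin = max(0, round(cx - box_w / 2))
    ymin = max(0, round(cy - box_h / 2))
    xmax = min(img_w, round(cx + box_w / 2))
    ymax = min(img_h, round(cy + box_h / 2))

    obj = ET.Element("object")
    ET.SubElement(obj, "name").text = name
    ET.SubElement(obj, "difficult").text = "0"
    bndbox = ET.SubElement(obj, "bndbox")
    for tag, value in (("xmin", xmin), ("ymin", ymin), ("xmax", xmax), ("ymax", ymax)):
        ET.SubElement(bndbox, tag).text = str(value)
    return obj


obj = point_to_voc_object(cx=100, cy=200, box_w=80, box_h=60, img_w=3648, img_h=2736)
print(ET.tostring(obj, encoding="unicode"))
```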

The original images and labels of both datasets were divided into training, validation, and test sets at a ratio of 6:3:1. Data augmentation was then applied to the training set, including random flipping, random mirroring, random brightness and saturation transformations, and random Gaussian noise. The final wheat ear dataset comprises 1676 training, 211 validation, and 71 test images, and the final maize tassel dataset comprises 1580 training, 239 validation, and 80 test images. Examples from the FusionMaize dataset are shown in Figure 11, and examples from the Wheat Ear dataset in Figure 12.
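The split and augmentation steps described above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' pipeline: rounding the validation and test sizes up, reading "flipping" as vertical and "mirroring" as horizontal, approximating saturation by blending with the grayscale image, and the jitter and noise magnitudes are all assumptions. With rounding up, 701 images split into 419/211/71, matching the validation and test counts reported for the wheat ear dataset. Flips must also transform the bounding boxes, whereas photometric changes leave them untouched.

```python
import random
from typing import List, Sequence, Tuple

import numpy as np


def split_6_3_1(items: Sequence[str], seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle, then split train:val:test = 6:3:1 (val/test sizes rounded up)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_val, n_test = -(-3 * n // 10), -(-n // 10)  # integer ceiling division
    return items[n_val + n_test:], items[:n_val], items[n_val:n_val + n_test]


def hflip(img: np.ndarray, boxes: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Horizontal mirror of an (H, W, 3) image and its (N, 4) x1, y1, x2, y2 boxes."""
    out = boxes.copy()
    out[:, [0, 2]] = img.shape[1] - boxes[:, [2, 0]]
    return img[:, ::-1].copy(), out


def vflip(img: np.ndarray, boxes: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Vertical flip of the image and its boxes."""
    out = boxes.copy()
    out[:, [1, 3]] = img.shape[0] - boxes[:, [3, 1]]
    return img[::-1].copy(), out


def photometric(img: np.ndarray, rng: np.random.Generator,
                jitter: float = 0.2, noise_std: float = 8.0) -> np.ndarray:
    """Random brightness and saturation scaling plus additive Gaussian noise."""
    x = img.astype(np.float32)
    x *= 1.0 + rng.uniform(-jitter, jitter)                        # brightness
    gray = x.mean(axis=2, keepdims=True)
    x = gray + (1.0 + rng.uniform(-jitter, jitter)) * (x - gray)   # saturation
    x += rng.normal(0.0, noise_std, size=x.shape)                  # Gaussian noise
    return np.clip(x, 0, 255).astype(np.uint8)


# Hypothetical file names standing in for the 701 wheat ear images.
train, val, test = split_6_3_1([f"wheat_{i:03d}.png" for i in range(701)])
print(len(train), len(val), len(test))  # 419 211 71
```

Augmentation is applied only to the training split, so validation and test images remain unmodified originals.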

The experimental parameters for the generalization experiments were identical to those of the previous experiments. Results on the maize tassel dataset are presented in Table 4 and results on the wheat ear dataset in Table 5; the detection results are visualized in Figure 13 and Figure 14. The proposed LF-Yolo model outperforms the other compared models in both detection accuracy and recall for dense target detection in field scenarios. The visualizations further show that LF-Yolo performs robustly in both sparse and dense target scenarios, highlighting its generalization capability and its applicability to object detection in agricultural settings.

3.4. Ablation Experiment

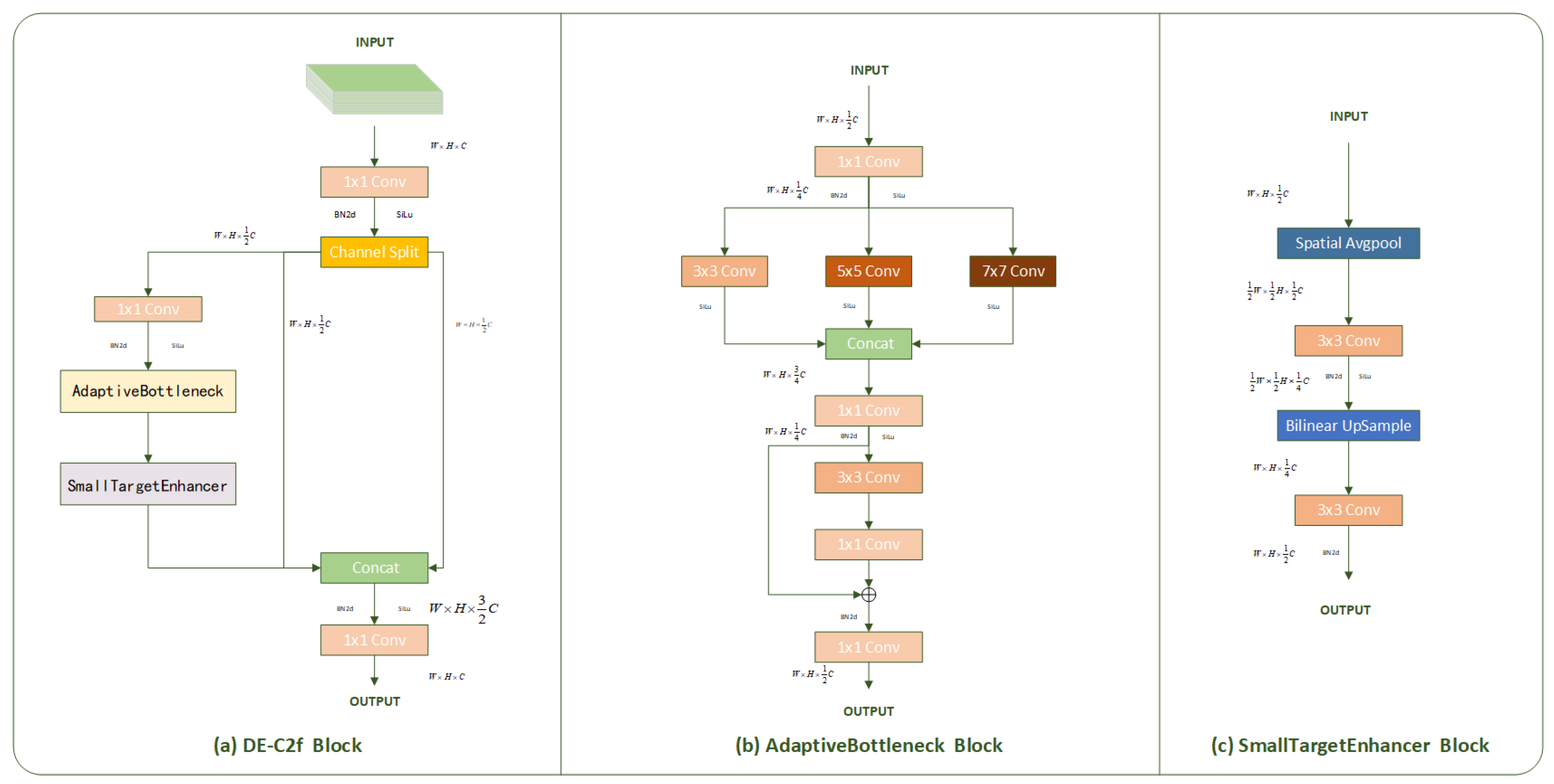

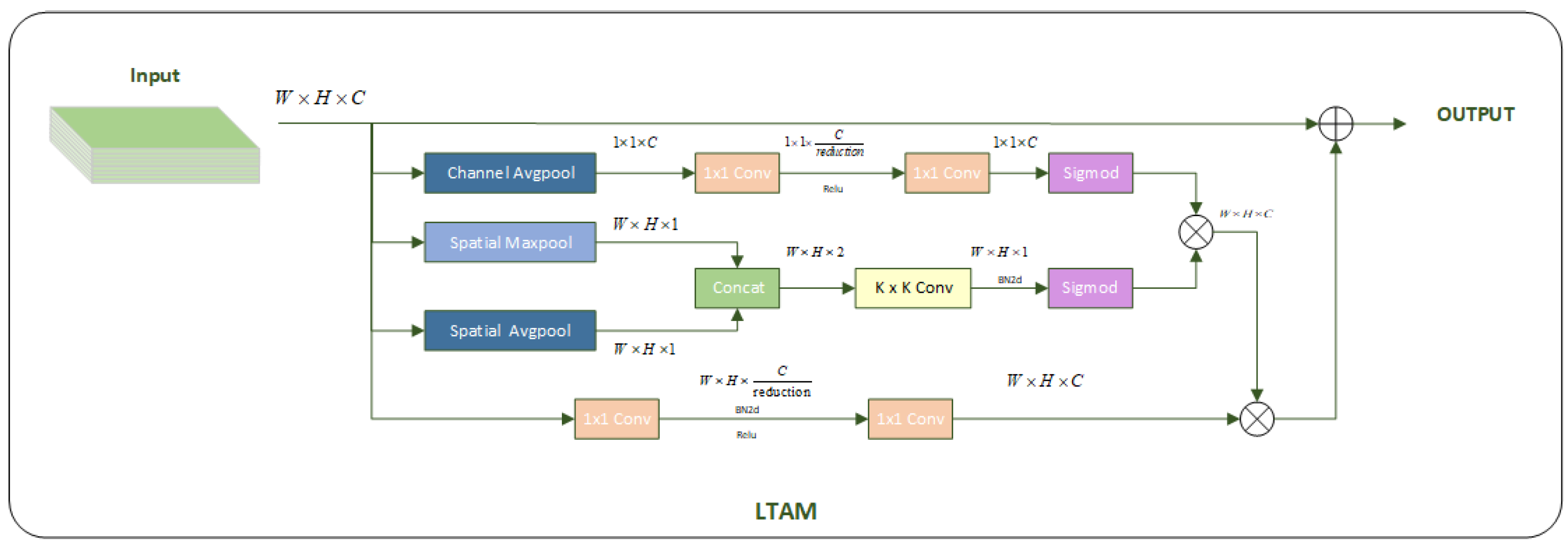

This study uses a systematic, controlled ablation methodology to validate the effectiveness of the proposed DE-C2f, LTAM, and CFFM modules for dense object detection in agricultural fields. With the standard YoloV8 model as the baseline, each module is introduced in turn while all other factors are held fixed. All experiments use identical hyperparameter settings and are evaluated on three datasets to assess each module's generalization capability and the synergy between modules.

Table 6 presents the ablation results on the UAVMaize dataset, Table 7 those on the FusionMaize dataset, and Table 8 those on the Wheat Ear dataset.
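The controlled-variable design amounts to enumerating on/off combinations of the three modules on top of the YoloV8 baseline. The sketch below generates the 2³ = 8 configurations, ordered as baseline, single modules, pairs, and the full model. This ordering is consistent with the experiment numbers stated in the text (Experiments 2 to 5 and 8), but the assignment of Experiments 6 and 7 to the remaining two pairs is an assumption; Experiments 9 and 10 (LTAM replaced by CBAM or SE) are not included.

```python
from itertools import combinations
from typing import List, Tuple

MODULES = ("DE-C2f", "LTAM", "CFFM")


def ablation_grid(modules: Tuple[str, ...] = MODULES) -> List[Tuple[str, ...]]:
    """All 2**k on/off combinations: baseline, singles, pairs, ..., full model."""
    grid: List[Tuple[str, ...]] = []
    for k in range(len(modules) + 1):
        grid.extend(combinations(modules, k))
    return grid


for exp_id, enabled in enumerate(ablation_grid(), start=1):
    label = "YoloV8 + " + " + ".join(enabled) if enabled else "YoloV8 baseline"
    print(f"Experiment {exp_id}: {label}")
```

Holding hyperparameters fixed across all eight runs is what lets differences between rows be attributed to the modules themselves.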

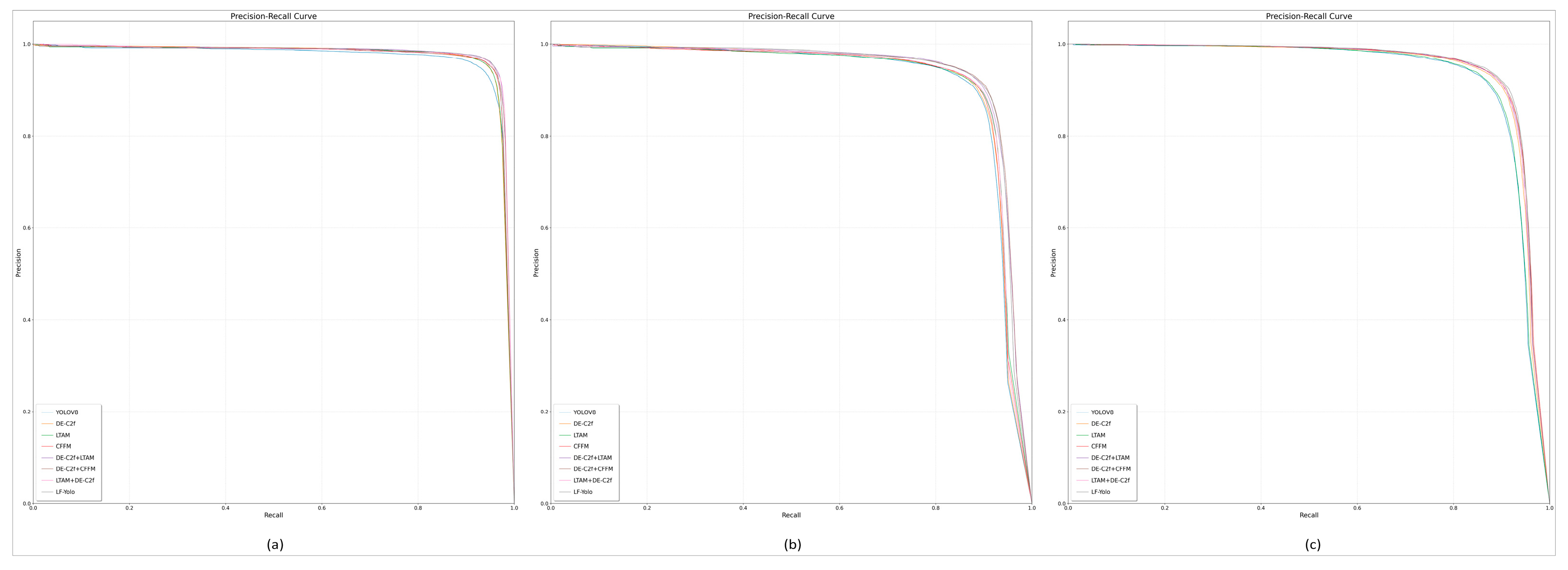

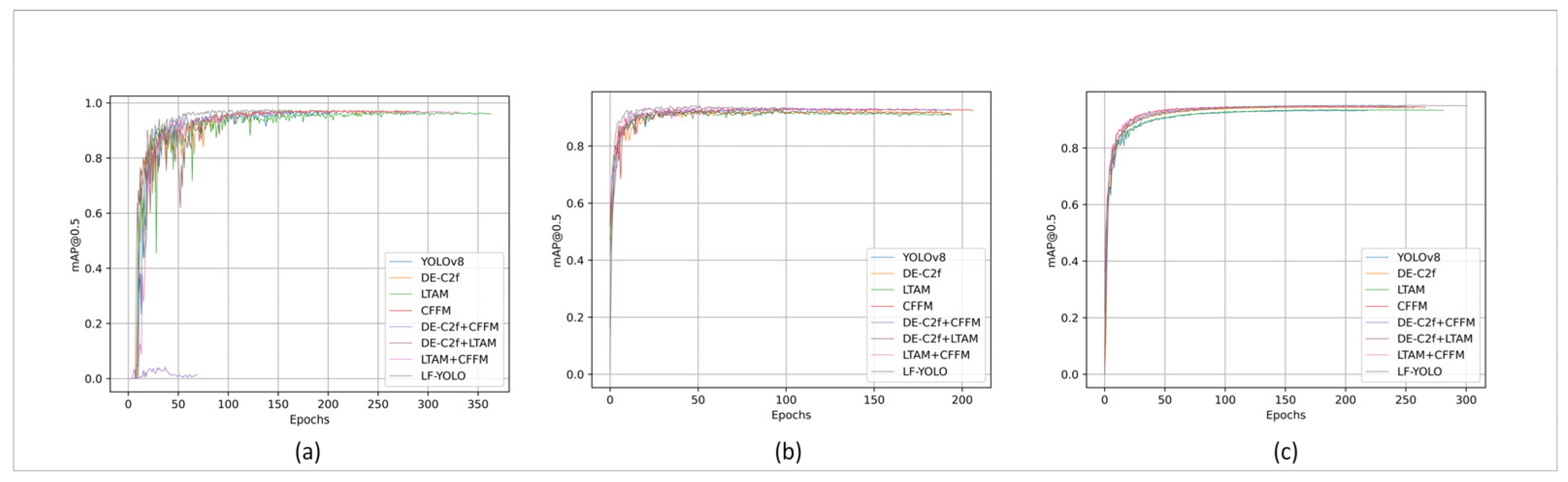

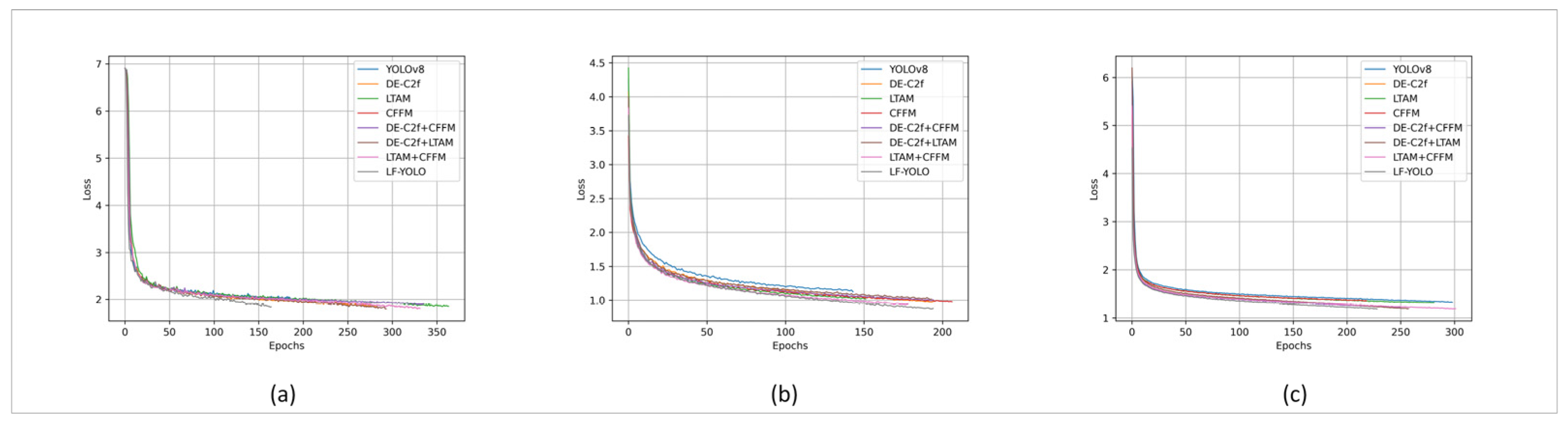

Figure 15, Figure 16, and Figure 17 illustrate the PR curves, mAP50 curves, and loss curves of the ablation experiments.

On the UAVMaize dataset, the DE-C2f module (Experiment 2) achieved a recall of 94.4%, an mAP50 of 97.1%, and an mAP50:95 of 47.4%, improving recall by 0.5% and mAP50:95 by 0.7% over the baseline, which demonstrates its enhanced feature extraction capability. LTAM (Experiment 3) markedly improved overall performance, reaching a precision, recall, mAP50, and mAP50:95 of 95.5%, 95.1%, 97.2%, and 48.2%, respectively, increases of 0.2%, 1.2%, 0.4%, and 1.5% over YoloV8. CFFM (Experiment 4) delivered balanced performance, with 94.2% recall, 96.9% mAP50, and 47.4% mAP50:95. Among the module combinations, the three-module ensemble (Experiment 8) performed best, attaining 97.9% mAP50 and 49.6% mAP50:95.

On the FusionMaize dataset, the DE-C2f module (Experiment 2) maintained high precision while achieving a recall, mAP50, and mAP50:95 of 88.4%, 92.6%, and 52.8%, respectively, improvements of 0.5%, 0.3%, and 0.9% over the baseline model. LTAM (Experiment 3) raised recall to 88.6%, 0.7% above the baseline, and attained 92.5% mAP50 and 52.9% mAP50:95, improvements of 0.2% and 1.0%, respectively. CFFM (Experiment 4) delivered the largest overall improvement among the single modules, achieving 91.7% precision, 89.1% recall, and an mAP50:95 of 54.2%. Directly combining DE-C2f and LTAM (Experiment 5) degraded performance, suggesting that the two modules need better coordination. The three-module integration (Experiment 8) achieved the best results on all metrics, with mAP50:95 rising to 55.6%.

On the Wheat Ear dataset, the individual modules generally improved performance. The DE-C2f module (Experiment 2) achieved a precision and recall of 92.3% and 88.3%, and an mAP50 and mAP50:95 of 94.3% and 58.4%, respectively. Compared with YoloV8, it improved precision and mAP50 by 1.0% each, and recall and mAP50:95 by 1.4% and 2.0%, respectively. LTAM (Experiment 3) achieved a precision and recall of 92.1% and 86.7%, with an mAP50 and mAP50:95 of 93.4% and 57.2%. CFFM (Experiment 4) achieved the best recall and mAP50 among the single modules, 89.4% and 94.7%, improvements of 2.5% and 1.5% over the baseline, along with 92.4% precision and 58.9% mAP50:95, improvements of 1.1% and 2.4%. The three-module integration (Experiment 8) delivered the best overall performance, achieving 93.1% precision, 90.3% recall, 95.7% mAP50, and 59.7% mAP50:95.

The curves show that introducing DE-C2f, LTAM, or CFFM individually yields higher precision and recall on all three datasets. The gain is most visible in the medium-to-high recall range, where the PR curves shift toward the upper-right corner relative to the baseline, indicating robust performance in that range. The mAP50 curves corroborate this finding: each module improves performance on its own, and the three-module combination shows the least fluctuation and the smoothest curve. Finally, the loss curves show that integrating all three modules accelerates convergence and improves boundary regression. This suggests that the module design improves performance while also speeding up convergence during training.

The experimental results show that the proposed modules effectively improve detection performance across datasets. The DE-C2f module builds adaptive receptive fields from multi-scale convolution kernels (3 × 3, 5 × 5, 7 × 7), whose different sizes cover receptive fields of different extents and thus address the large variation in target scale in agricultural scenes. Its small-object enhancement path improves scale invariance through downsampling–upsampling operations, markedly improving small-object detection on all three datasets. LTAM combines three cooperating components: channel attention compresses and re-weights channels, spatial attention enhances boundary features via 7 × 7 convolutions, and a feature enhancement branch strengthens detail representation through a bottleneck structure. This lightweight design keeps the parameter count low while markedly improving target–background discrimination, and it adapts well to complex agricultural environments. CFFM uses a dynamic upsampling mechanism to prevent detail loss, and its multi-domain attention fusion captures long-range dependencies in the channel domain and optimizes weight allocation in the spatial domain. This dual-path design preserves fine details while strengthening channel representation, achieving effective fusion of multi-scale features on all three datasets. The three modules are complementary: DE-C2f expands the receptive field to improve small-object recognition, LTAM amplifies the high-frequency features of small objects while suppressing background noise, and CFFM excels at multi-scale feature fusion. Used together, they act synergistically, and the improved stability across tasks demonstrates the model's strong generalization capability.
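The generic operations named in this paragraph can be illustrated with a small NumPy sketch: a multi-scale depthwise branch with 3 × 3, 5 × 5, and 7 × 7 kernels (as described for DE-C2f), an SE-style channel attention with a bottleneck MLP, and a 7 × 7 spatial attention. This is not the authors' DE-C2f or LTAM: the weights are random, there is no small-object path, feature enhancement branch, or normalization, and the mean/max pooled inputs of the spatial branch follow the CBAM convention only as a stand-in, since the text does not say what LTAM's spatial branch pools.

```python
import numpy as np


def depthwise_conv(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """'Same'-padded depthwise 2-D cross-correlation.

    x: (C, H, W) feature map; kernels: (C, k, k), odd k, one kernel per channel.
    """
    _, h, w = x.shape
    k = kernels.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros(x.shape, dtype=float)
    for i in range(k):
        for j in range(k):
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def multi_scale_branch(x: np.ndarray, rng: np.random.Generator,
                       sizes=(3, 5, 7)) -> np.ndarray:
    """Sum of depthwise convolutions with different kernel sizes (random weights)."""
    c = x.shape[0]
    return sum(depthwise_conv(x, rng.normal(0.0, 0.1, (c, k, k))) for k in sizes)


def channel_attention(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Global average pool -> bottleneck MLP (C -> C/r -> C) -> sigmoid channel weights."""
    s = x.mean(axis=(1, 2))        # (C,)
    z = np.maximum(w1 @ s, 0.0)    # (C/r,)
    return x * sigmoid(w2 @ z)[:, None, None]


def spatial_attention(x: np.ndarray, k7: np.ndarray) -> np.ndarray:
    """Mean/max maps across channels -> 7x7 conv (2 -> 1 channel) -> sigmoid mask."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    logits = depthwise_conv(pooled, k7).sum(axis=0)     # (H, W)
    return x * sigmoid(logits)[None, :, :]


rng = np.random.default_rng(0)
C, H, W, r = 16, 20, 20, 4
x = rng.normal(size=(C, H, W))
y = multi_scale_branch(x, rng)
y = channel_attention(y, rng.normal(0.0, 0.1, (C // r, C)), rng.normal(0.0, 0.1, (C, C // r)))
y = spatial_attention(y, rng.normal(0.0, 0.1, (2, 7, 7)))
print(y.shape)  # (16, 20, 20)
```

Depthwise kernels keep the cost of the larger 5 × 5 and 7 × 7 receptive fields linear in the channel count, which is one reason multi-scale designs of this kind can stay lightweight.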

To validate the effectiveness of our improvements, we replaced the LTAM attention module in LF-Yolo with the widely adopted CBAM and SE attention modules. The results are shown as Experiments 9 and 10 in Table 6, Table 7, and Table 8. On the UAVMaize, FusionMaize, and Wheat Ear datasets, replacing LTAM with CBAM yielded precisions of 94.9%, 91.3%, and 92.1%, respectively, 1.3%, 1.2%, and 1.0% lower than LF-Yolo, and mAP50 values of 96.5%, 93.1%, and 94.9%, 1.4%, 1.5%, and 0.8% lower than LF-Yolo. Replacing LTAM with SE yielded precisions of 95.3%, 91.2%, and 92.4%, 0.9%, 1.3%, and 0.7% lower than LF-Yolo, and mAP50 values of 96.8%, 92.6%, and 94.6%, 1.1%, 2.0%, and 0.8% lower than LF-Yolo. SE, a classic channel attention mechanism, captures inter-channel relationships mainly through global average pooling; because it assigns no spatial attention weights, it struggles with the irregularly distributed small objects in agricultural images. CBAM combines channel and spatial attention, but its serial structure has two limitations in complex agricultural scenes. First, the fully connected layers used for channel attention introduce a large number of parameters. Second, its spatial attention relies on simple max- and average-pooled maps concatenated together, which struggle to capture the fine-grained features of small objects. Consequently, replacing LTAM with CBAM or SE weakens the model's feature extraction capability.
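The parameter argument can be made concrete. SE and CBAM both compute channel weights with a two-layer bottleneck MLP (C → C/r → C, with a reduction ratio r commonly set to 16), so its size grows quadratically with the channel count C. The short sketch below counts those parameters, assuming biases in both layers; it says nothing about LTAM's own parameter count, which the text does not give.

```python
def channel_mlp_params(channels: int, reduction: int = 16, bias: bool = True) -> int:
    """Parameters of the C -> C/r -> C fully connected layers used for channel attention."""
    hidden = max(1, channels // reduction)
    weights = 2 * channels * hidden      # two weight matrices of size C x C/r
    biases = hidden + channels if bias else 0
    return weights + biases


for c in (64, 128, 256, 512):
    print(c, channel_mlp_params(c))
```

Doubling C roughly quadruples the count (580, 2184, 8464, and 33312 parameters for C = 64, 128, 256, and 512), and the cost is paid at every stage where such a block is inserted.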

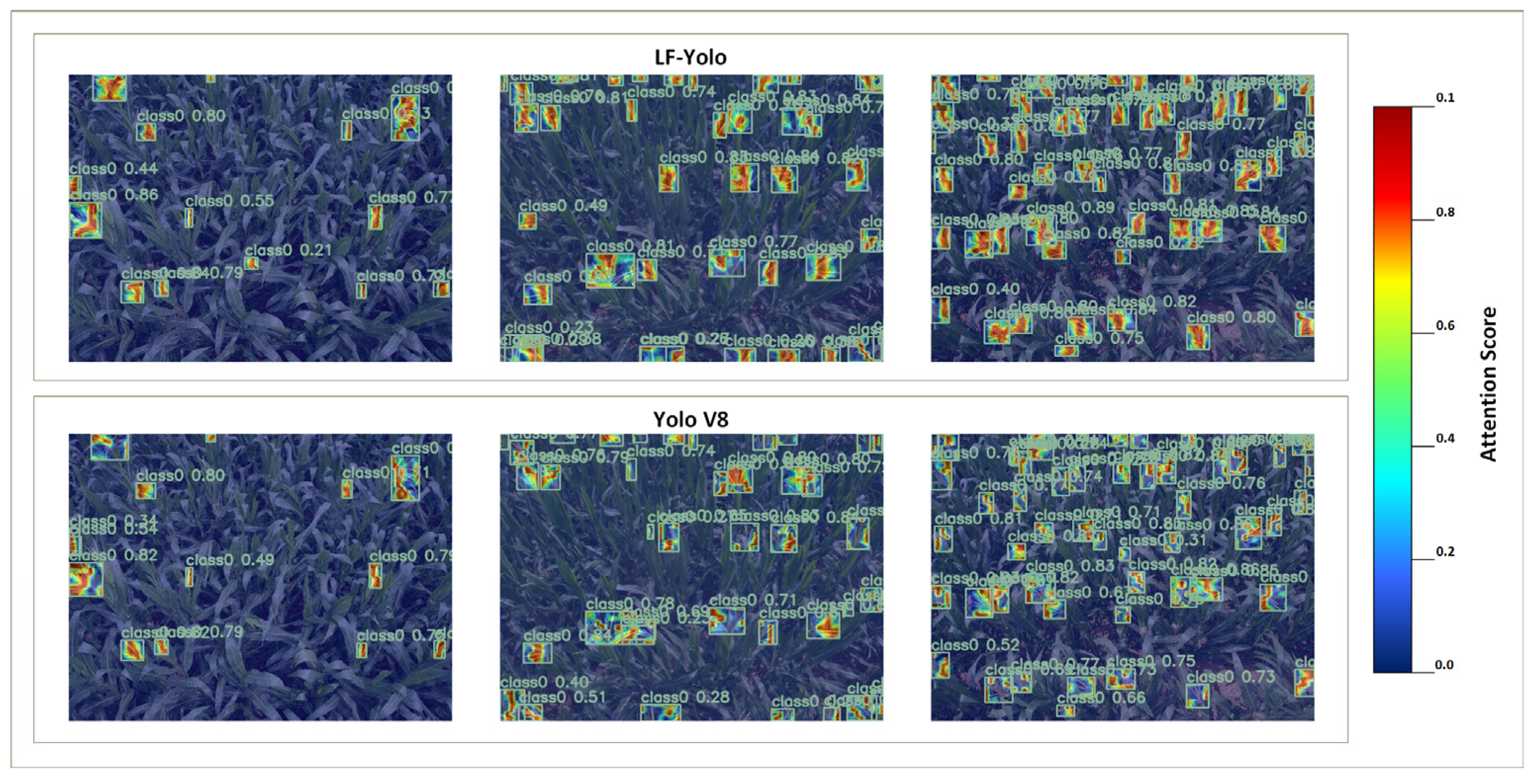

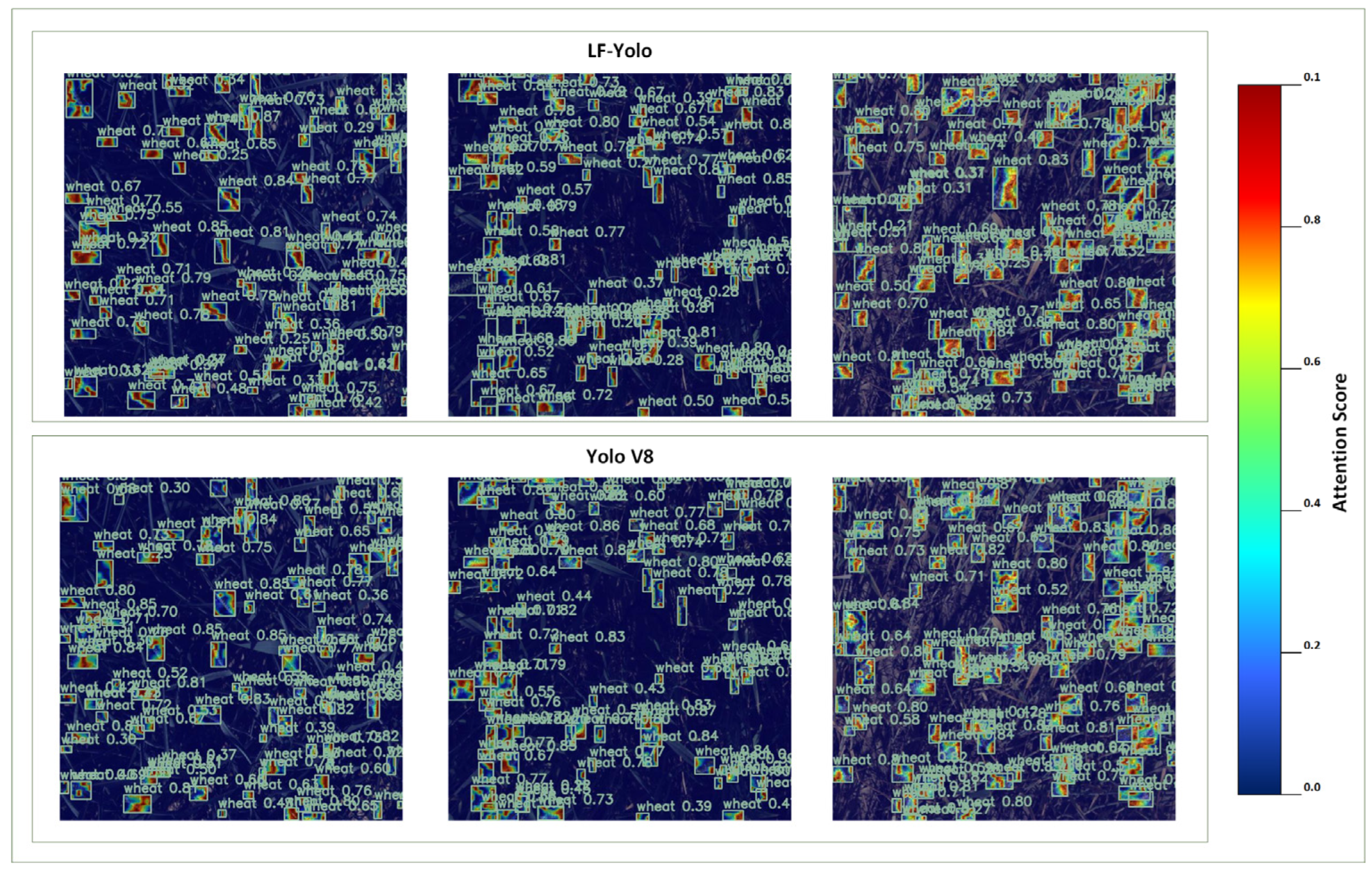

3.5. Heatmap Visualization Analysis

To visually demonstrate the effectiveness of the optimizations in the proposed LF-Yolo model, this section uses Gradient-weighted Class Activation Mapping (Grad-CAM) [22] to visualize the output layer, with the widely used YoloV8 object detection model as the comparison. The heatmaps are shown in Figure 18, Figure 19, and Figure 20: Figure 18 presents the heatmaps on the UAVMaize dataset, Figure 19 those on the FusionMaize dataset, and Figure 20 those on the Wheat Ear dataset. A color bar on the right side of each heatmap indicates attention intensity: the transition from blue to red corresponds to progressively stronger attention, with dark blue marking regions that receive the least attention and dark red those that receive the most.
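Grad-CAM weights each feature map of the chosen layer by the spatial average of the gradient of a target score with respect to that map, sums the weighted maps, and keeps only positive evidence. A minimal NumPy sketch follows, assuming the activations and gradients have already been extracted from the network. For a detector such as LF-Yolo, which output score is back-propagated (for example, the confidence of the detected ear boxes) is a design choice the text does not specify.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map from one layer's activations A and gradients dy/dA.

    Both arrays have shape (K, H, W). alpha_k = mean over (i, j) of dy/dA_k,
    map = ReLU(sum_k alpha_k * A_k), then scaled to [0, 1] for colour mapping
    (0 -> dark blue, 1 -> dark red in the figures).
    """
    alpha = gradients.mean(axis=(1, 2))                              # (K,)
    cam = np.maximum(np.tensordot(alpha, activations, axes=1), 0.0)  # (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


A = np.zeros((2, 4, 4))
A[0, 1, 1] = 2.0   # channel whose gradient supports the target score
A[1, 2, 2] = 1.0   # channel whose gradient opposes it
G = np.stack([np.ones((4, 4)), -np.ones((4, 4))])
cam = grad_cam(A, G)
print(cam[1, 1], cam[2, 2])  # 1.0 0.0
```

The ReLU is what suppresses regions that argue against the target, so scattered low-intensity responses around an ear indicate weak positive evidence rather than negative evidence. The resulting map is usually upsampled to the input resolution before being overlaid on the image.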

When YoloV8 is used to detect densely packed small objects in fields, such as wheat ears and maize tassels, complex backgrounds and mutual occlusion between objects easily distract the model. As the figures show, YoloV8 fails to focus its attention on the main body of the crop ear; instead, its attention is scattered over the areas surrounding the target ear, which helps explain its lower detection accuracy. In contrast, under identical conditions the proposed LF-Yolo produces more concentrated and accurate heat responses: attention converges noticeably on the main body of the ears, with far fewer false responses in the background and peripheral areas. This visualization supports the effectiveness of the model's structural design. The DE-C2f module enhances the representation of small-object edges and textures through multi-scale separable convolutions and dual-path enhancement, suppressing interference from redundant background information. LTAM combines channel and spatial attention with bottleneck enhancement to increase target-region saliency while reducing noise responses. CFFM alleviates semantic loss when aligning deep and shallow features through dynamic upsampling and multi-domain weight fusion, thereby improving localization accuracy. Together, these modules strengthen the model's ability to focus on crop ears and perceive their structural details.