AGRI-YOLO: A Lightweight Model for Corn Weed Detection with Enhanced YOLO v11n

Abstract

1. Introduction

2. Dataset

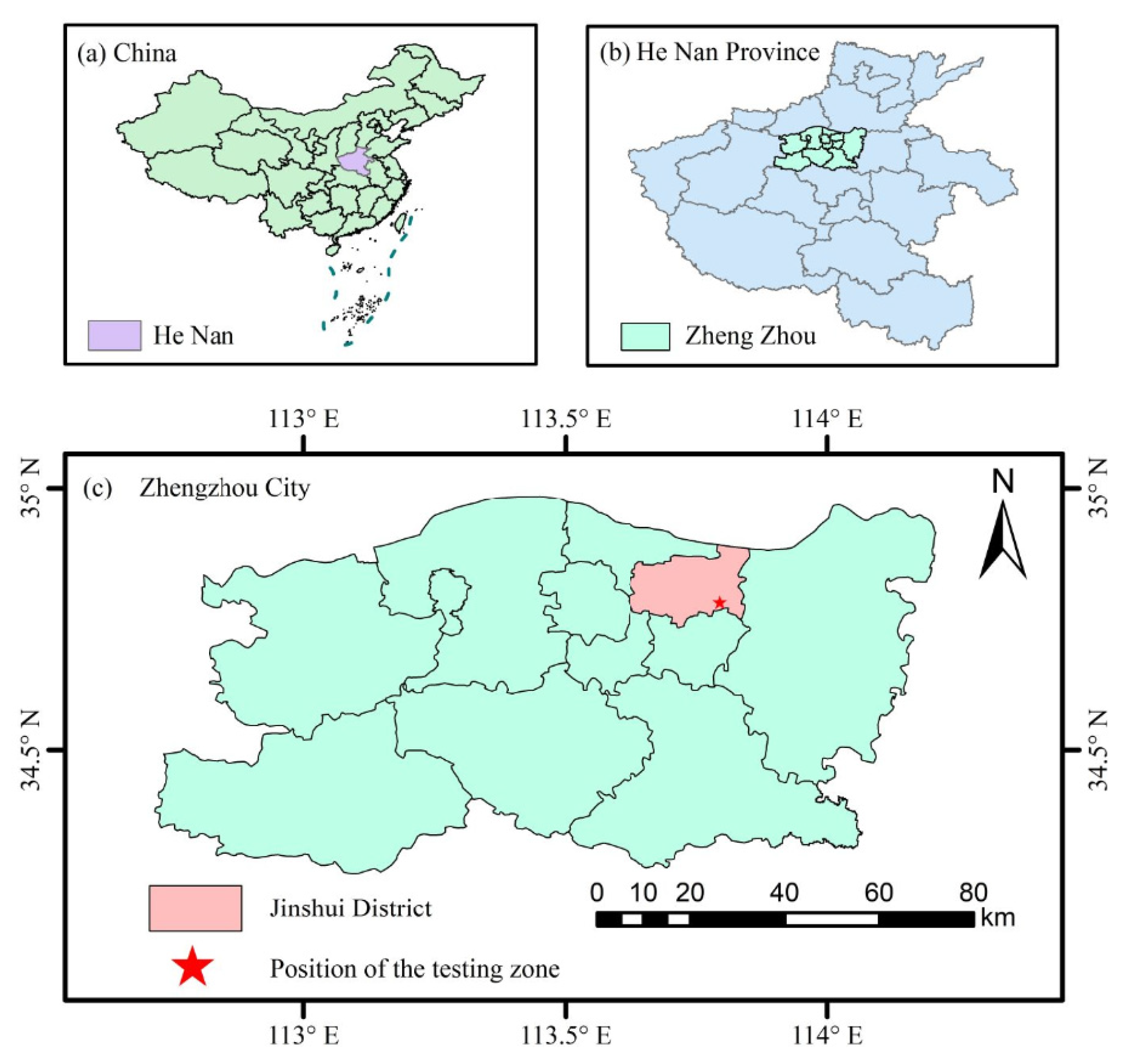

2.1. Data Collection and Dataset Construction

2.2. Dataset Divisions

2.3. Data Enhancement

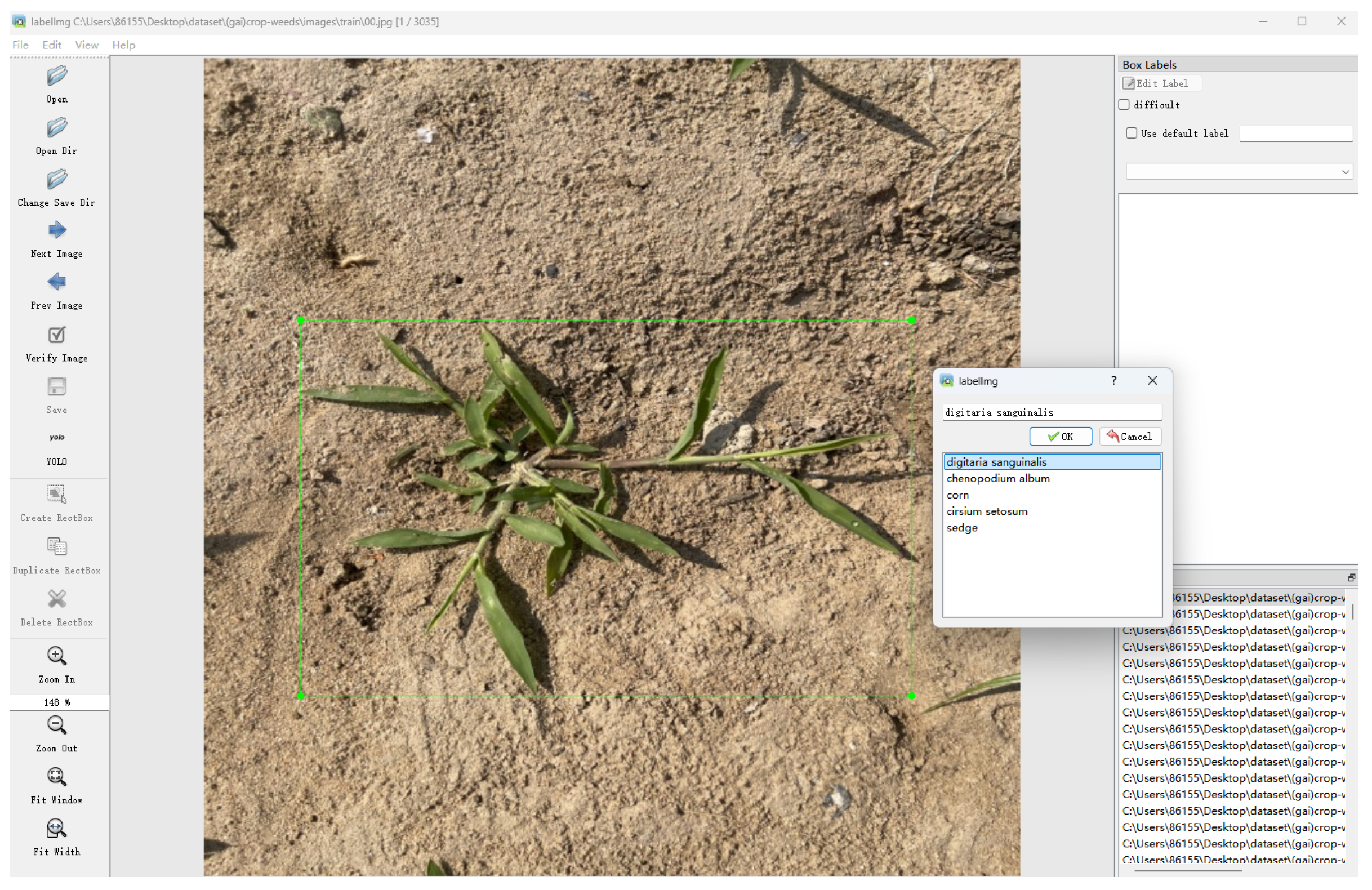

2.4. Dataset Labeling

3. Methods

3.1. Lightweight Model Improved Based on YOLO v11n

3.2. Reconstructing the C3k2 Feature Extraction Module

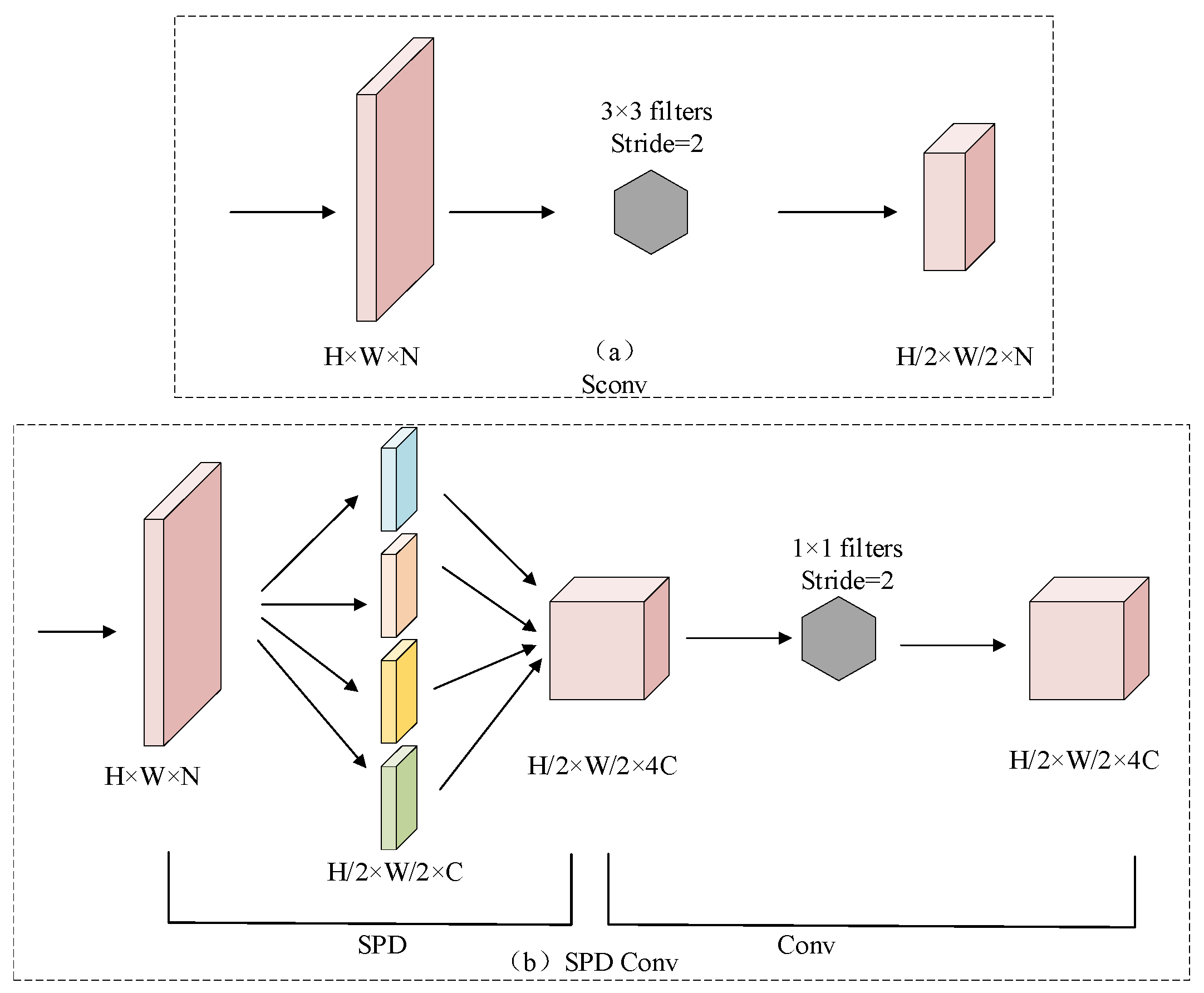

3.3. ADown Downsampling Module

3.4. LADH Lightweight Detection Head Module

3.5. Loss Function

3.6. Model Performance Evaluation

3.7. Experimental Environment and Parameter Settings

4. Experimental Results and Analysis

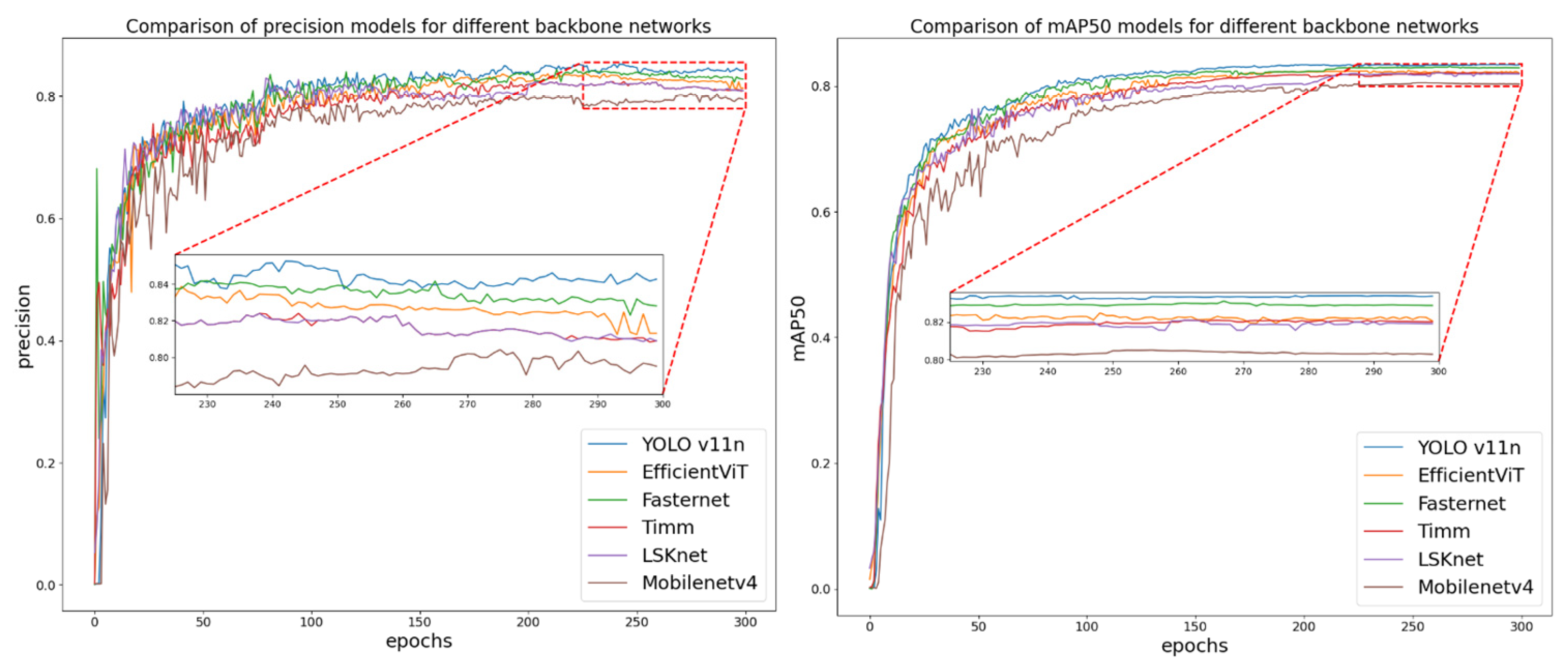

4.1. Comparative Experiments with Different Backbone Networks

4.2. Comparative Experiments with Different Feature Extraction Modules

4.3. Comparative Experiments with Different Sampling Layers

4.4. Comparative Experiments with Different Loss Functions

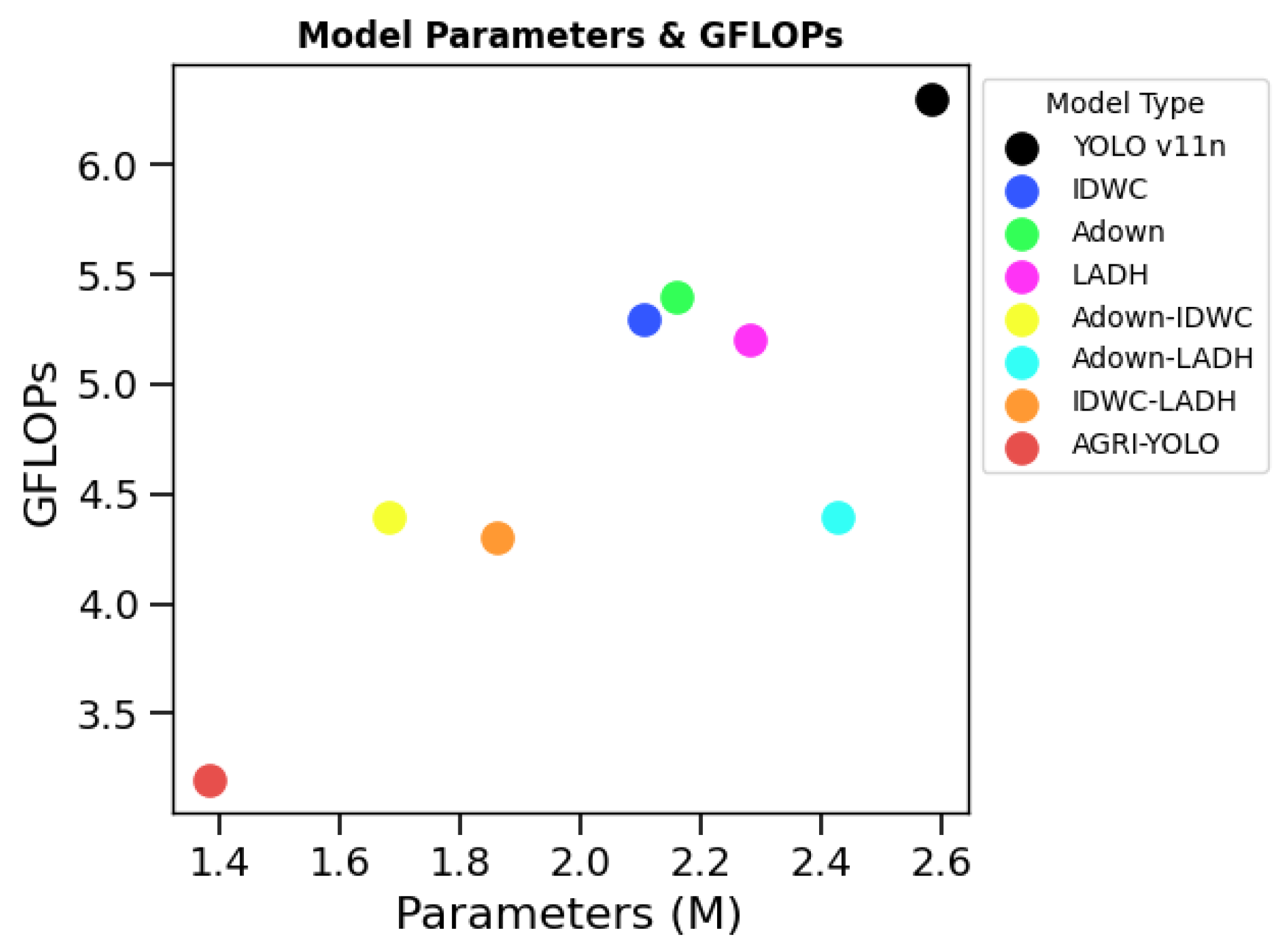

4.5. Ablation Experiment

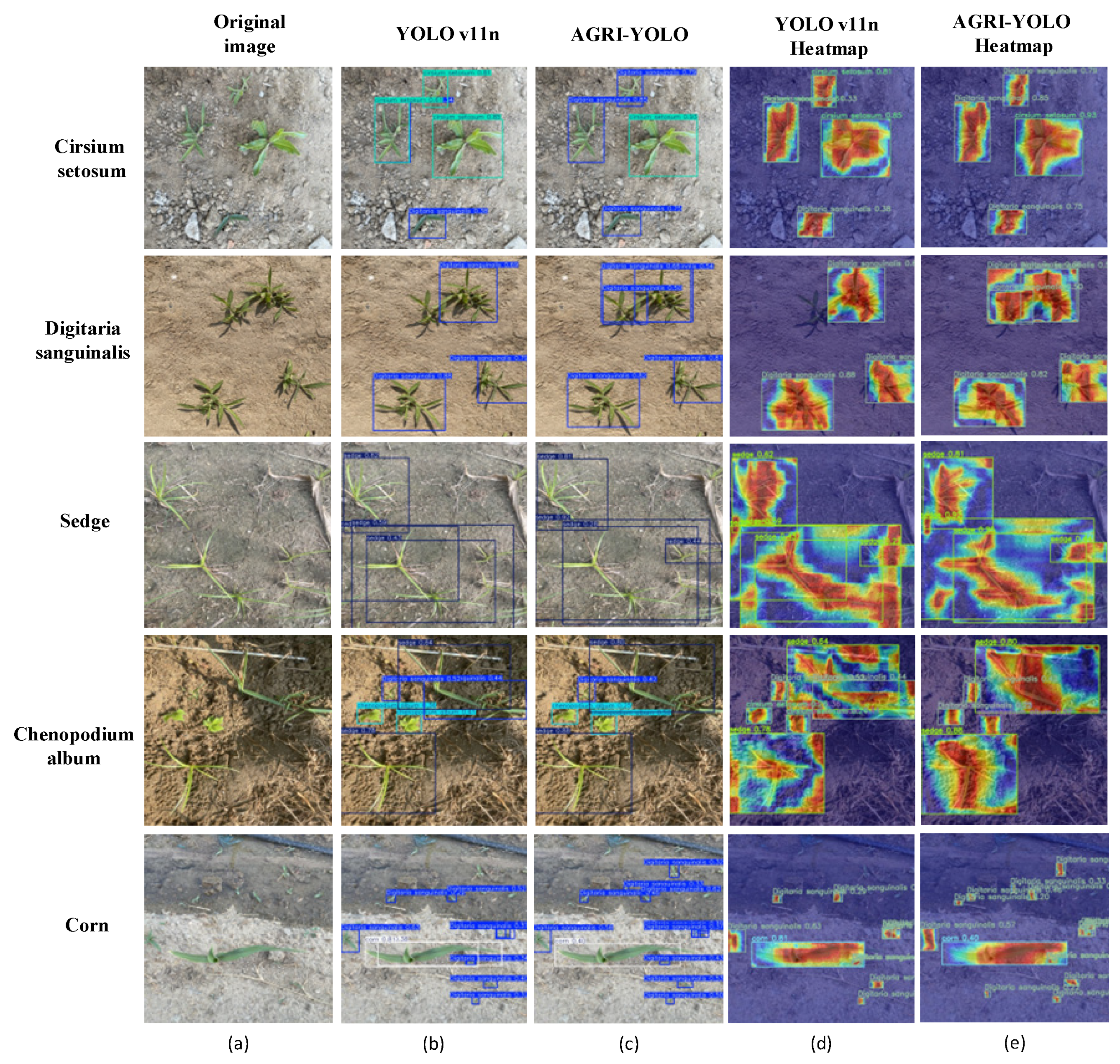

4.6. Comparative Experiments Using Different Models

5. Discussion

5.1. Application of Lightweight Models in Detecting Weeds in Agricultural Fields

5.2. Limitations of This Study and Future Research Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Sun, W.; Xu, M.; Xu, K.; Chen, D.; Wang, J.; Yang, R.; Chen, Q.; Yang, S. CSGD-YOLO: A Corn Seed Germination Status Detection Model Based on YOLOv8n. Agronomy 2025, 15, 128.

- [2] Wang, B.; Yan, Y.; Lan, Y.; Wang, M.; Bian, Z. Accurate Detection and Precision Spraying of Corn and Weeds Using the Improved YOLOv5 Model. IEEE Access 2023, 11, 29868–29882.

- [3] Zhou, P.; Zhu, Y.; Jin, C.; Gu, Y.; Kong, Y.; Ou, Y.; Yin, X.; Hao, S. A new training strategy: Coordinating distillation techniques for training lightweight weed detection model. Crop Prot. 2025, 190, 107124.

- [4] Liu, H.; Hou, Y.; Zhang, J.; Zheng, P.; Hou, S. Research on Weed Reverse Detection Methods Based on Improved You Only Look Once (YOLO) v8: Preliminary Results. Agronomy 2024, 14, 1667.

- [5] Lytridis, C.; Pachidis, T. Recent Advances in Agricultural Robots for Automated Weeding. AgriEngineering 2024, 6, 3279–3296.

- [6] Gonzalez-Gonzalez, M.G.; Blasco, J.; Cubero, S.; Chueca, P. Automated Detection of Tetranychus urticae Koch in Citrus Leaves Based on Colour and VIS/NIR Hyperspectral Imaging. Agronomy 2021, 11, 1002.

- [7] Rahman, M.; Robson, A.; Salgadoe, S.; Walsh, K.; Bristow, M. Exploring the Potential of High Resolution Satellite Imagery for Yield Prediction of Avocado and Mango Crops. Proceedings 2019, 36, 154.

- [8] Daga, A.P.; Garibaldi, L. GA-Adaptive Template Matching for Offline Shape Motion Tracking Based on Edge Detection: IAS Estimation from the SURVISHNO 2019 Challenge Video for Machine Diagnostics Purposes. Algorithms 2020, 13, 33.

- [9] Palumbo, M.; Pace, B.; Cefola, M.; Montesano, F.F.; Serio, F.; Colelli, G.; Attolico, G. Self-Configuring CVS to Discriminate Rocket Leaves According to Cultivation Practices and to Correctly Attribute Visual Quality Level. Agronomy 2021, 11, 1353.

- [10] LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551.

- [11] Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J.; et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci. Rep. 2019, 9, 2058.

- [12] Ahmad, A.; Saraswat, D.; Aggarwal, V.; Etienne, A.; Hancock, B. Performance of deep learning models for classifying and detecting common weeds in corn and soybean production systems. Comput. Electron. Agric. 2021, 184, 106081.

- [13] Herrera, P.J.; Dorado, J.; Ribeiro, A. A novel approach for weed type classification based on shape descriptors and a fuzzy decision-making method. Sensors 2014, 14, 15304–15324.

- [14] Peteinatos, G.G.; Reichel, P.; Karouta, J.; Andújar, D.; Gerhards, R. Weed Identification in Maize, Sunflower, and Potatoes with the Aid of Convolutional Neural Networks. Remote Sens. 2020, 12, 4185.

- [15] Zhang, D.; Lu, R.; Guo, Z.; Yang, Z.; Wang, S.; Hu, X. Algorithm for Locating Apical Meristematic Tissue of Weeds Based on YOLO Instance Segmentation. Agronomy 2024, 14, 2121.

- [16] Zuo, Z.; Gao, S.; Peng, H.; Xue, Y.; Han, L.; Ma, G.; Mao, H. Lightweight Detection of Broccoli Heads in Complex Field Environments Based on LBDC-YOLO. Agronomy 2024, 14, 2359.

- [17] Jia, Z.; Zhang, M.; Yuan, C.; Liu, Q.; Liu, H.; Qiu, X.; Zhao, W.; Shi, J. ADL-YOLOv8: A Field Crop Weed Detection Model Based on Improved YOLOv8. Agronomy 2024, 14, 2355.

- [18] Ma, C.; Chi, G.; Ju, X.; Zhang, J.; Yan, C. YOLO-CWD: A novel model for crop and weed detection based on improved YOLOv8. Crop Prot. 2025, 192, 107169.

- [19] Wang, X.; Wang, Q.; Qiao, Y.; Zhang, X.; Lu, C.; Wang, C. Precision Weed Management for Straw-Mulched Maize Field: Advanced Weed Detection and Targeted Spraying Based on Enhanced YOLO v5s. Agriculture 2024, 14, 2134.

- [20] Tao, T.; Wei, X. STBNA-YOLOv5: An Improved YOLOv5 Network for Weed Detection in Rapeseed Field. Agriculture 2024, 15, 22.

- [21] Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- [22] Zhang, H.; Zhu, Y.; Zheng, H. NAMF: A nonlocal adaptive mean filter for removal of salt-and-pepper noise. Math. Probl. Eng. 2021, 2021, 4127679.

- [23] Cubuk, E.D.; Zoph, B.; Shlens, J.; Le, Q.V. RandAugment: Practical automated data augmentation with a reduced search space. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 702–703.

- [24] Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1–9. Available online: https://proceedings.neurips.cc/paper_files/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf (accessed on 15 October 2024).

- [25] Kandel, I.; Castelli, M.; Manzoni, L. Brightness as an augmentation technique for image classification. Emerg. Sci. J. 2022, 6, 881–892.

- [26] Ultralytics. Ultralytics YOLO11. 2024. Available online: https://docs.ultralytics.com/models/yolo11/ (accessed on 15 October 2024).

- [27] Mallick, S. YOLO-LearnOpenCV. 2024. Available online: https://learnopencv.com/yolo11/ (accessed on 15 October 2024).

- [28] Shi, L.; Wei, Z.; You, H.; Wang, J.; Bai, Z.; Yu, H.; Ji, R.; Bi, C. OMC-YOLO: A Lightweight Grading Detection Method for Oyster Mushrooms. Horticulturae 2024, 10, 742.

- [29] Yu, W.; Zhou, P.; Yan, S.; Wang, X. InceptionNeXt: When Inception Meets ConvNeXt. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 5672–5683.

- [30] Sunkara, R.; Luo, T. No More Strided Convolutions or Pooling: A New CNN Building Block for Low-Resolution Images and Small Objects. In Proceedings of the European Conference on Machine Learning and Knowledge Discovery in Databases (ECML PKDD 2022), Grenoble, France, 19–23 September 2022; pp. 443–459.

- [31] Wu, T.; Miao, Z.; Huang, W.; Han, W.; Guo, Z.; Li, T. SGW-YOLOv8n: An Improved YOLOv8n-Based Model for Apple Detection and Segmentation in Complex Orchard Environments. Agriculture 2024, 14, 1958.

- [32] Wang, C.-Y.; Yeh, I.-H.; Mark Liao, H.-Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the 18th European Conference on Computer Vision (ECCV 2024), Milan, Italy, 29 September–4 October 2024; pp. 1–21.

- [33] Zhang, X.; Yang, W.; Tang, X.; Liu, J. A Fast Learning Method for Accurate and Robust Lane Detection Using Two-Stage Feature Extraction with YOLO v3. Sensors 2018, 18, 4308.

- [34] Liu, X.; Peng, H.; Zheng, N.; Yang, Y.; Hu, H.; Yuan, Y. EfficientViT: Memory Efficient Vision Transformer with Cascaded Group Attention. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14420–14430.

- [35] Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.; Ye, C.; Akin, B. MobileNetV4: Universal models for the mobile ecosystem. In Proceedings of the 18th European Conference on Computer Vision (ECCV 2024), Milan, Italy, 29 September–4 October 2024; pp. 78–96.

- [36] Chen, J.; Kao, S.-H.; He, H.; Zhuo, W.; Wen, S.; Lee, C.-H.; Chan, S.-H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

- [37] Wightman, R.; Touvron, H.; Jégou, H. ResNet strikes back: An improved training procedure in timm. arXiv 2021.

- [38] Li, Y.; Hou, Q.; Zheng, Z.; Cheng, M.M.; Yang, J.; Li, X. Large Selective Kernel Network for Remote Sensing Object Detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 16748–16759.

- [39] Yu, W.; Si, C.; Zhou, P.; Luo, M.; Zhou, Y.; Feng, J.; Yan, S.; Wang, X. MetaFormer Baselines for Vision. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 896–912.

- [40] Dai, T.; Wang, J.; Guo, H.; Li, J.; Wang, J.; Zhu, Z. FreqFormer: Frequency-aware transformer for lightweight image super-resolution. In Proceedings of the 33rd International Joint Conference on Artificial Intelligence, Jeju, Republic of Korea, 3–9 August 2024; pp. 731–739.

- [41] Ding, X.; Zhang, X.; Han, J.; Ding, G. Diverse Branch Block: Building a Convolution as an Inception-like Unit. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10881–10890.

- [42] Zhang, X.; Song, Y.; Song, T.; Yang, D.; Ye, Y.; Zhou, J.; Zhang, L. LDConv: Linear deformable convolution for improving convolutional neural networks. Image Vis. Comput. 2024, 149, 105190.

- [43] Wu, T.; Tang, S.; Zhang, R.; Cao, J.; Zhang, Y. CGNet: A Light-Weight Context Guided Network for Semantic Segmentation. IEEE Trans. Image Process. 2021, 30, 1169–1179.

- [44] Han, K.; Wang, Y.; Guo, J.; Wu, E. ParameterNet: Parameters are All You Need for Large-Scale Visual Pretraining of Mobile Networks. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 15751–15761.

- [45] Zhang, T.; Li, L.; Zhou, Y.; Liu, W.; Qian, C.; Hwang, J.-N.; Ji, X. CAS-ViT: Convolutional additive self-attention vision transformers for efficient mobile applications. arXiv 2024.

- [46] Lu, W.; Chen, S.-B.; Tang, J.; Ding, C.H.Q.; Luo, B. A Robust Feature Downsampling Module for Remote-Sensing Visual Tasks. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4404312.

- [47] Wang, J.; Chen, K.; Xu, R.; Liu, Z.; Loy, C.C.; Lin, D. CARAFE: Content-Aware ReAssembly of FEatures. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3007–3016.

- [48] Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to Upsample by Learning to Sample. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 6004–6014.

- [49] Williams, T.; Li, R. Wavelet pooling for convolutional neural networks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- [50] Fan, X.; Sun, T.; Chai, X.; Zhou, J. YOLO-WDNet: A lightweight and accurate model for weeds detection in cotton field. Comput. Electron. Agric. 2024, 225, 109317.

- [51] Luo, W.; Chen, Q.; Wang, Y.; Fu, D.; Mi, Z.; Wang, Q.; Li, H.; Shi, Y.; Su, B. Real-time identification and spatial distribution mapping of weeds through unmanned aerial vehicle (UAV) remote sensing. Eur. J. Agron. 2025, 169, 127699.

- [52] Zhao, P.; Chen, J.; Li, J.; Ning, J.; Chang, Y.; Yang, S. Design and Testing of an autonomous laser weeding robot for strawberry fields based on DIN-LW-YOLO. Comput. Electron. Agric. 2025, 229, 109808.

| Models | Datasets | Data Augmentation | Precision | mAP50 | Parameters (M) | GFLOPs | Size (MB) |
|---|---|---|---|---|---|---|---|
| F-YOLOv8n-seg-CDA [15] | Calystegia hederacea Wall.; self-collected: 248, public: 5998 | √ (self-collected images augmented to 980) | 81.0% | - | 2.01 | 8.9 | 4.2 |
| LBDC-YOLO [16] | Broccoli heads; self-collected: 839 | √ (augmented to 2013) | 97.65% | 94.44% | 1.93 | 6.5 | 3.5 |
| ADL-YOLOv8 [17] | Crop weeds; self-collected and public: 4398 | √ (augmented to 7966) | 92.13% | 94.71% | - | 7.3 | 5.02 |
| YOLO-CWD [18] | CropAndWeed; public: 4800 | √ | 81.3% | 71.5% | 3.49 | 9.6 | - |
| v5s-FasterNet-CBAM_WioU [19] | Weeds in straw-covered fields; self-collected: 2088 | √ (augmented to 5000) | 90.3% | 91.4% | 6.8 | 7.4 | 6.8 |
| STBNA-YOLOv5 [20] | Rapeseed weeds; self-collected: 5000 | - | 64.4% | 90.8% | - | - | - |

| Categories | Total Samples | Training (70%) | Validation (20%) | Test (10%) |
|---|---|---|---|---|
| Corn | 182 | 127 | 36 | 19 |
| Digitaria sanguinalis | 171 | 120 | 34 | 17 |
| Sedge | 179 | 125 | 36 | 18 |
| Cirsium setosum | 161 | 113 | 32 | 16 |
| Chenopodium album | 174 | 122 | 35 | 17 |
| Total | 867 | 607 | 173 | 87 |
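The per-category counts above can be reproduced by rounding the 70% and 20% shares and assigning whatever remains to the test set. The sketch below assumes that rounding rule and also assumes that each image is filed under a single category, as the per-category totals suggest. `split_sizes`, `stratified_split`, and the fixed seed are hypothetical names and choices for illustration, not the authors' code.

```python
import random
from typing import Dict, List, Tuple

def split_sizes(n: int, train: float = 0.7, val: float = 0.2) -> Tuple[int, int, int]:
    """Round the train and validation shares; the test set takes the remainder (assumed rule)."""
    n_train = round(n * train)
    n_val = round(n * val)
    return n_train, n_val, n - n_train - n_val

def stratified_split(files_by_class: Dict[str, List[str]], seed: int = 0) -> Dict[str, List[str]]:
    """Shuffle each category separately so all three subsets keep the class balance."""
    rng = random.Random(seed)
    splits: Dict[str, List[str]] = {"train": [], "val": [], "test": []}
    for files in files_by_class.values():
        files = sorted(files)  # deterministic order before shuffling
        rng.shuffle(files)
        n_train, n_val, _ = split_sizes(len(files))
        splits["train"] += files[:n_train]
        splits["val"] += files[n_train:n_train + n_val]
        splits["test"] += files[n_train + n_val:]
    return splits

# Category totals copied from the table above
totals = {"Corn": 182, "Digitaria sanguinalis": 171, "Sedge": 179,
          "Cirsium setosum": 161, "Chenopodium album": 174}
for name, n in totals.items():
    print(name, split_sizes(n))
```

With this rule every row of the table is matched exactly, including the column totals of 607, 173, and 87.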

| Categories | Total Samples | Training (70%) | Validation (20%) | Test (10%) |
|---|---|---|---|---|
| Corn | 910 | 635 | 180 | 95 |
| Digitaria sanguinalis | 855 | 600 | 170 | 85 |
| Sedge | 895 | 625 | 180 | 90 |
| Cirsium setosum | 805 | 565 | 160 | 80 |
| Chenopodium album | 870 | 610 | 175 | 85 |
| Total | 4335 | 3035 | 865 | 435 |
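Every cell of the augmented table is exactly five times the corresponding cell of the original split (for example, 182 × 5 = 910 corn images and 127 × 5 = 635 training images). This is consistent with keeping each image and adding four augmented copies within its own subset. The four transforms below (horizontal flip, vertical flip, brightness scaling, salt-and-pepper noise) are assumptions chosen to show how YOLO-format labels must follow geometric changes while photometric changes leave boxes untouched. This text does not list the operations the authors actually used.

```python
import numpy as np

# Labels are YOLO-format tuples: (class_id, x_center, y_center, width, height), normalised to [0, 1]

def hflip(img, labels):
    """Mirror left-right; x centres are reflected."""
    return img[:, ::-1].copy(), [(c, 1.0 - x, y, w, h) for c, x, y, w, h in labels]

def vflip(img, labels):
    """Mirror top-bottom; y centres are reflected."""
    return img[::-1, :].copy(), [(c, x, 1.0 - y, w, h) for c, x, y, w, h in labels]

def brightness(img, labels, factor=1.3):
    """Scale pixel intensities (hypothetical factor); boxes are unchanged."""
    out = np.clip(np.rint(img.astype(np.float32) * factor), 0, 255).astype(np.uint8)
    return out, list(labels)

def salt_and_pepper(img, labels, amount=0.02, seed=0):
    """Set a random fraction of pixels to black or white; boxes are unchanged."""
    rng = np.random.default_rng(seed)
    out = img.copy()
    mask = rng.random(img.shape[:2])
    out[mask < amount / 2] = 0          # pepper
    out[mask > 1 - amount / 2] = 255    # salt
    return out, list(labels)

def expand_five_fold(img, labels):
    """Original sample plus four augmented variants (5x), applied within one subset."""
    samples = [(img, list(labels))]
    for op in (hflip, vflip, brightness, salt_and_pepper):
        samples.append(op(img, labels))
    return samples
```

Applying the expansion after the split, rather than before it, keeps augmented copies of a test image out of the training set.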

| Item | Setting |
|---|---|
| Operating system | Windows |
| RAM | 16 GB |
| CPU | Intel Core i5-12400F |
| GPU | NVIDIA GeForce RTX 3060 (12 GB) |
| Development environment | PyCharm |
| Base model | YOLO v11n |
| Programming language | Python 3.10 |
| Deep learning framework | PyTorch 2.0.1 |
| CUDA version | 11.8 |
| Optimizer | SGD |
| Learning rate | 0.001 |
| Epochs | 300 |
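The settings above map onto a YOLO training run roughly as follows. This is a minimal sketch assuming the Ultralytics package and its `yolo11n.pt` weights. The dataset YAML file name, its directory layout, and the class-index order are hypothetical. Setting `optimizer="SGD"` explicitly matters because the Ultralytics default, `optimizer="auto"`, chooses its own learning rate and ignores `lr0`.

```python
from pathlib import Path

# Class order is an assumption; the paper's label indices are not given here
CLASS_NAMES = ["Corn", "Digitaria sanguinalis", "Sedge",
               "Cirsium setosum", "Chenopodium album"]

TRAIN_ARGS = {
    "epochs": 300,       # from the environment table
    "optimizer": "SGD",  # explicit, so that lr0 below is actually used
    "lr0": 0.001,        # learning rate from the environment table
}

def write_dataset_yaml(root: str, path: str = "corn_weeds.yaml") -> str:
    """Write a hypothetical Ultralytics dataset file with train/val/test image folders."""
    lines = [f"path: {root}", "train: images/train", "val: images/val",
             "test: images/test", "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(CLASS_NAMES)]
    Path(path).write_text("\n".join(lines) + "\n")
    return path

def train(data_yaml: str):
    """Fine-tune YOLO11n with the reported settings (requires `pip install ultralytics`)."""
    from ultralytics import YOLO  # imported lazily so this file loads without ultralytics
    model = YOLO("yolo11n.pt")
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

Once images and labels are in place under the dataset root, `train(write_dataset_yaml("/path/to/dataset"))` would start a run; other arguments such as image size and batch size are left at the library defaults because the table does not state them.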

| Models | Precision | Recall | mAP50 | Parameters | GFLOPs | Size (MB) |
|---|---|---|---|---|---|---|
| EfficientViT [34] | 82.4% | 73.5% | 82.2% | 3738831 | 7.9 | 7.9 |
| MobileNetV4 [35] | 79.3% | 68.4% | 79.4% | 5430447 | 21.0 | 10.7 |
| FasterNet [36] | 82.8% | 73.2% | 82.5% | 3935860 | 9.3 | 7.8 |
| Timm [37] | 81.3% | 74.1% | 81.8% | 13056783 | 33.6 | 25.2 |
| LSKNet [38] | 80.6% | 71.9% | 81.5% | 5624573 | 18.2 | 11.1 |
| YOLO v11n | 84.7% | 72.9% | 83.3% | 2583127 | 6.3 | 5.2 |
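The comparison tables report precision, recall, and mAP50, the mean average precision when a detection counts as correct if its IoU with a not-yet-matched ground-truth box is at least 0.5. A minimal sketch of these definitions for one class, using all-point interpolation of the precision-recall curve, is shown below. Evaluation toolkits differ in interpolation scheme and in the confidence threshold at which they report P and R, so this illustrates the definitions rather than the exact evaluator behind the tables.

```python
from typing import List, Sequence, Tuple

def precision_recall_ap(detections: Sequence[Tuple[float, bool]], n_gt: int) -> Tuple[float, float, float]:
    """Precision, recall and AP for one class.

    detections: (confidence, is_tp) pairs; is_tp means the box matched an unmatched
    ground-truth box with IoU >= 0.5. n_gt: number of ground-truth boxes of this class.
    """
    if n_gt <= 0:
        raise ValueError("n_gt must be positive")
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for _, is_tp in ranked:
        tp += int(is_tp)
        fp += int(not is_tp)
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)
    # Pad the curve, then make precision non-increasing from right to left
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # All-point interpolation: area under the stepwise precision envelope
    ap = sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
    # P and R here are taken at the lowest confidence; toolkits may pick another threshold
    p = precisions[-1] if precisions else 0.0
    r = recalls[-1] if recalls else 0.0
    return p, r, ap

def mean_ap(per_class_ap: Sequence[float]) -> float:
    """mAP50: mean of the per-class AP values computed at IoU 0.5."""
    return sum(per_class_ap) / len(per_class_ap)
```

For three ground-truth boxes and detections ranked TP, FP, TP, the sketch gives P = R = 2/3 and AP = 1/3 × 1 + 1/3 × 2/3 = 5/9.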

| Models | Precision | Recall | mAP50 | Parameters | GFLOPs | Size (MB) |
|---|---|---|---|---|---|---|
| C3k2-ConvFormer [39] | 82.4% | 71.1% | 80.6% | 2429311 | 6.5 | 4.9 |
| C3k2-CTA [40] | 82.7% | 71.2% | 80.5% | 2620969 | 6.7 | 7.1 |
| C3k2-DBB [41] | 82.8% | 73.2% | 81.3% | 2583127 | 6.7 | 7.0 |
| C3k2-AKConv [42] | 80.3% | 73.1% | 81.5% | 2482903 | 6.3 | 5.1 |
| C3k2-ContextGuided [43] | 81.6% | 71.6% | 80.2% | 2181176 | 5.5 | 4.5 |
| C3k2-GhostDynamicConv [44] | 79.1% | 71.6% | 80.3% | 2227607 | 5.4 | 4.6 |
| C3k2-AdditiveBlock [45] | 82.0% | 71.4% | 80.3% | 2626287 | 6.7 | 5.5 |
| C3k2-IDWC | 83.3% | 73.6% | 82.2% | 2160423 | 5.4 | 4.4 |
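C3k2-IDWC is both the lightest and the most accurate of the modules listed above. Assuming IDWC refers to the Inception depthwise convolution introduced with InceptionNeXt [29], its core mixer can be sketched in PyTorch as below: a small fraction of the channels passes through a 3 × 3 depthwise convolution and two orthogonal 1 × 11 and 11 × 1 band convolutions, while the remaining channels are passed through unchanged. How AGRI-YOLO inserts this mixer into the C3k2 block is not described in this text, so only the mixer is shown; the kernel sizes and the 0.125 branch ratio are InceptionNeXt-style defaults used here as assumptions.

```python
import torch
import torch.nn as nn

class InceptionDWConv2d(nn.Module):
    """Inception-style depthwise mixer (after InceptionNeXt); defaults are assumptions."""

    def __init__(self, channels: int, square_k: int = 3, band_k: int = 11, branch_ratio: float = 0.125):
        super().__init__()
        gc = int(channels * branch_ratio)  # channels per convolution branch
        self.dw_hw = nn.Conv2d(gc, gc, square_k, padding=square_k // 2, groups=gc)
        self.dw_w = nn.Conv2d(gc, gc, (1, band_k), padding=(0, band_k // 2), groups=gc)
        self.dw_h = nn.Conv2d(gc, gc, (band_k, 1), padding=(band_k // 2, 0), groups=gc)
        self.split = (channels - 3 * gc, gc, gc, gc)  # identity branch keeps the rest

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x_id, x_hw, x_w, x_h = torch.split(x, self.split, dim=1)
        return torch.cat((x_id, self.dw_hw(x_hw), self.dw_w(x_w), self.dw_h(x_h)), dim=1)

mixer = InceptionDWConv2d(64)
x = torch.randn(1, 64, 40, 40)
print(mixer(x).shape)  # channel count and spatial size are preserved
print(sum(p.numel() for p in mixer.parameters()))
```

For 64 channels the mixer holds 272 parameters, against 36,928 for a dense 64-to-64 3 × 3 convolution with bias, which illustrates why depthwise band kernels can enlarge the receptive field cheaply.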

| Models | Precision | Recall | mAP50 | Parameters | GFLOPs | Size (MB) |
|---|---|---|---|---|---|---|
| SRFD [46] | 81.2% | 72.1% | 80.5% | 2587928 | 7.7 | 5.8 |
| CARAFE [47] | 83.3% | 71.9% | 80.3% | 2756352 | 6.8 | 5.6 |
| DySample [48] | 82.2% | 72.9% | 82.6% | 2628600 | 6.5 | 5.4 |
| WaveletPool [49] | 82.9% | 72.5% | 81.8% | 2201992 | 5.6 | 4.6 |
| SPDConv | 84.1% | 72.6% | 82.9% | 4587607 | 11.3 | 8.8 |
| ADown | 84.0% | 74.1% | 82.9% | 2103895 | 5.3 | 4.3 |
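ADown, the downsampling module introduced with YOLOv9 [32], reaches the highest recall in the sampling-layer table with the fewest parameters. The PyTorch sketch below follows the structure of ADown as it appears in the YOLOv9 and Ultralytics code bases: a 2 × 2 average pool with stride 1, a channel split, a strided 3 × 3 convolution on one half, and a 3 × 3 max pool followed by a 1 × 1 convolution on the other. The `Conv` helper is a simplified stand-in for the YOLO convolution block, and the exact placement of ADown in AGRI-YOLO is not given in this text.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Conv(nn.Module):
    """Simplified YOLO-style block: Conv2d (no bias) + BatchNorm + SiLU."""

    def __init__(self, c1: int, c2: int, k: int = 1, s: int = 1, p: int = 0):
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, p, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.SiLU()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.act(self.bn(self.conv(x)))

class ADown(nn.Module):
    """ADown downsampling (after YOLOv9): halves H and W, maps c1 -> c2 channels (both even)."""

    def __init__(self, c1: int, c2: int):
        super().__init__()
        self.c = c2 // 2
        self.cv1 = Conv(c1 // 2, self.c, 3, 2, 1)  # strided 3x3 conv branch
        self.cv2 = Conv(c1 // 2, self.c, 1, 1, 0)  # max-pool + 1x1 conv branch

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = F.avg_pool2d(x, 2, 1, 0, False, True)  # light smoothing, stride 1
        x1, x2 = x.chunk(2, 1)                      # split channels in half
        x1 = self.cv1(x1)
        x2 = self.cv2(F.max_pool2d(x2, 3, 2, 1))
        return torch.cat((x1, x2), 1)

def n_params(m: nn.Module) -> int:
    return sum(p.numel() for p in m.parameters())

x = torch.randn(1, 64, 80, 80)
print(ADown(64, 64)(x).shape, n_params(ADown(64, 64)), n_params(Conv(64, 64, 3, 2, 1)))
```

For 64 input and output channels, ADown holds 10,368 parameters against 36,992 for a plain stride-2 3 × 3 convolution block, roughly 3.6 times fewer, because each of its convolutions sees only half of the channels.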

| Model | Loss Function | Precision | Recall | mAP50 |
|---|---|---|---|---|
| YOLO v11n | GIoU | 83.0% | 73.9% | 82.1% |
|  | EIoU | 81.9% | 73.5% | 82.1% |
|  | SIoU | 82.8% | 73.3% | 82.2% |
|  | DIoU | 81.8% | 74.8% | 82.6% |
|  | PIoU | 82.1% | 73.9% | 82.2% |
|  | Shape-IoU | 83.6% | 73.6% | 82.5% |
|  | CIoU | 84.7% | 72.9% | 83.3% |
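The CIoU row reproduces the unmodified YOLO v11n results reported in the other tables, consistent with CIoU being the default box-regression term in Ultralytics YOLO. As a minimal sketch of how three of the compared variants differ, the function below follows the standard published definitions of GIoU, DIoU, and CIoU; EIoU, SIoU, PIoU, and Shape-IoU are not shown. The box format `(x1, y1, x2, y2)` and the small `eps` guard are choices made for this illustration. The corresponding loss is 1 minus the metric.

```python
import math
from typing import Sequence, Tuple

Box = Sequence[float]  # (x1, y1, x2, y2)

def iou_family(pred: Box, gt: Box, eps: float = 1e-7) -> Tuple[float, float, float, float]:
    """Return (IoU, GIoU, DIoU, CIoU) for two axis-aligned boxes."""
    w1, h1 = pred[2] - pred[0], pred[3] - pred[1]
    w2, h2 = gt[2] - gt[0], gt[3] - gt[1]
    iw = max(0.0, min(pred[2], gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[3], gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    union = w1 * h1 + w2 * h2 - inter + eps
    iou = inter / union
    # Smallest box enclosing both boxes
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    giou = iou - (cw * ch - union) / (cw * ch + eps)
    # DIoU: penalise the squared centre distance, normalised by the enclosing diagonal
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = ((pred[0] + pred[2] - gt[0] - gt[2]) ** 2 + (pred[1] + pred[3] - gt[1] - gt[3]) ** 2) / 4
    diou = iou - rho2 / c2
    # CIoU: additionally penalise aspect-ratio mismatch
    v = (4 / math.pi ** 2) * (math.atan(w2 / (h2 + eps)) - math.atan(w1 / (h1 + eps))) ** 2
    alpha = v / (v - iou + 1 + eps)
    ciou = diou - alpha * v
    return iou, giou, diou, ciou

def ciou_loss(pred: Box, gt: Box) -> float:
    """Box-regression loss as 1 - CIoU."""
    return 1.0 - iou_family(pred, gt)[3]
```

For boxes `(0, 0, 2, 2)` and `(0, 0, 4, 2)` the IoU is 0.5 and DIoU subtracts the centre-distance term to give 0.45; CIoU lowers this slightly further, to about 0.4468, because the two boxes have different aspect ratios.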

| Experiment Number | ADown | IDWC | LADH | Precision | Recall | mAP50 | Parameters | GFLOPs | Size (MB) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | - | - | - | 84.7% | 72.9% | 83.3% | 2583127 | 6.3 | 5.2 |
| 2 | √ | - | - | 83.9% | 74.0% | 82.9% | 2103895 | 5.3 | 4.3 |
| 3 | - | √ | - | 83.3% | 73.4% | 82.1% | 2160423 | 5.4 | 4.4 |
| 4 | - | - | √ | 82.9% | 74.5% | 83.1% | 2282327 | 5.2 | 4.8 |
| 5 | √ | √ | - | 85.5% | 74.3% | 84.1% | 1681191 | 4.4 | 3.5 |
| 6 | √ | - | √ | 83.8% | 74.9% | 83.5% | 2428631 | 4.4 | 3.8 |
| 7 | - | √ | √ | 82.9% | 72.6% | 81.2% | 1859623 | 4.3 | 3.9 |
| 8 | √ | √ | √ | 84.7% | 73.0% | 82.8% | 1380391 | 3.2 | 3.0 |
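The savings of the full model (experiment 8) over the baseline (experiment 1) follow directly from the ablation table. The short calculation below uses only values copied from those two rows; `reduction_pct` is a hypothetical helper name.

```python
# Values copied from experiments 1 (baseline YOLO v11n) and 8 (AGRI-YOLO) of the ablation table
baseline = {"parameters": 2583127, "GFLOPs": 6.3, "size_MB": 5.2, "mAP50": 83.3}
agri_yolo = {"parameters": 1380391, "GFLOPs": 3.2, "size_MB": 3.0, "mAP50": 82.8}

def reduction_pct(before: float, after: float) -> float:
    """Relative reduction in percent."""
    return 100.0 * (before - after) / before

for key in ("parameters", "GFLOPs", "size_MB"):
    print(f"{key}: {reduction_pct(baseline[key], agri_yolo[key]):.1f}% lower")
print(f"mAP50 change: {agri_yolo['mAP50'] - baseline['mAP50']:+.1f} points")
```

This gives about 46.6% fewer parameters, 49.2% fewer GFLOPs, and 42.3% smaller weights, at a cost of 0.5 points of mAP50.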

| Models | Precision | Recall | mAP50 | Parameters | GFLOPs | Size (MB) |
|---|---|---|---|---|---|---|
| YOLO v5 | 84.4% | 73.1% | 83.0% | 2503919 | 7.1 | 5.0 |
| YOLO v8 | 84.2% | 74.0% | 84.2% | 3006623 | 8.1 | 6.0 |
| YOLO v9 | 84.5% | 75.3% | 84.3% | 1971759 | 7.6 | 4.4 |
| YOLO v10 | 77.5% | 71.1% | 79.4% | 2266143 | 6.5 | 5.5 |
| YOLO v11n | 84.7% | 72.9% | 83.3% | 2583127 | 6.3 | 5.2 |
| AGRI-YOLO | 84.7% | 73.0% | 82.8% | 1380391 | 3.2 | 3.0 |

| Models | Performance Metric | AGRI-YOLO | Comparison Model | Difference (percentage points) | t-Statistic | p-Value |
|---|---|---|---|---|---|---|
| YOLO v5 | Precision | 84.7% | 84.4% | +0.3 | 2.31 | 0.021 |
|  | Recall | 73.0% | 73.1% | −0.1 | 0.45 | 0.652 |
|  | mAP50 | 83.0% | 83.0% | 0 | 0 | 1.000 |
| YOLO v8 | Precision | 84.7% | 84.2% | +0.5 | 2.87 | 0.004 |
|  | Recall | 73.0% | 74.0% | −1.0 | 3.12 | 0.002 |
|  | mAP50 | 83.0% | 84.2% | −1.2 | 3.59 | <0.001 |
| YOLO v9 | Precision | 84.7% | 84.5% | +0.2 | 1.96 | 0.051 |
|  | Recall | 73.0% | 75.3% | −2.3 | 4.87 | <0.001 |
|  | mAP50 | 83.0% | 84.3% | −1.3 | 4.15 | <0.001 |
| YOLO v10 | Precision | 84.7% | 77.5% | +7.2 | 12.63 | <0.001 |
|  | Recall | 73.0% | 71.1% | +1.9 | 5.24 | <0.001 |
|  | mAP50 | 83.0% | 79.4% | +3.6 | 8.91 | <0.001 |
| YOLO v11n | Precision | 84.7% | 84.7% | 0 | 0 | 1.000 |
|  | Recall | 73.0% | 72.9% | +0.1 | 0.38 | 0.703 |
|  | mAP50 | 82.8% | 83.3% | −0.5 | 1.89 | 0.060 |
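The table does not state the test variant, sample size, or degrees of freedom behind each t-statistic. As a rough consistency check only, a two-sided p-value under a large-sample normal approximation, p = erfc(|t| / √2), reproduces every reported value to within about 0.002. This is not presented as the authors' procedure; with few degrees of freedom the exact t distribution would give noticeably larger p-values.

```python
import math

def two_sided_p_normal(t: float) -> float:
    """Two-sided p-value for a t statistic, approximating the t distribution by the
    standard normal (reasonable only when the degrees of freedom are large)."""
    return math.erfc(abs(t) / math.sqrt(2.0))

# (t-statistic, reported p-value) pairs copied from the significance table
reported = [(2.31, 0.021), (0.45, 0.652), (2.87, 0.004), (3.12, 0.002),
            (1.96, 0.051), (0.38, 0.703), (1.89, 0.060), (0.0, 1.000)]

for t, p_table in reported:
    print(f"t = {t:5.2f}   normal approximation p = {two_sided_p_normal(t):.3f}   table p = {p_table:.3f}")
```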

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peng, G.; Wang, K.; Ma, J.; Cui, B.; Wang, D. AGRI-YOLO: A Lightweight Model for Corn Weed Detection with Enhanced YOLO v11n. Agriculture 2025, 15, 1971. https://doi.org/10.3390/agriculture15181971
