LWCD-YOLO: A Lightweight Corn Seed Kernel Fast Detection Algorithm Based on YOLOv11n

Abstract

1. Introduction

2. Materials and Methods

2.1. Construction of Corn Seed Detection Dataset

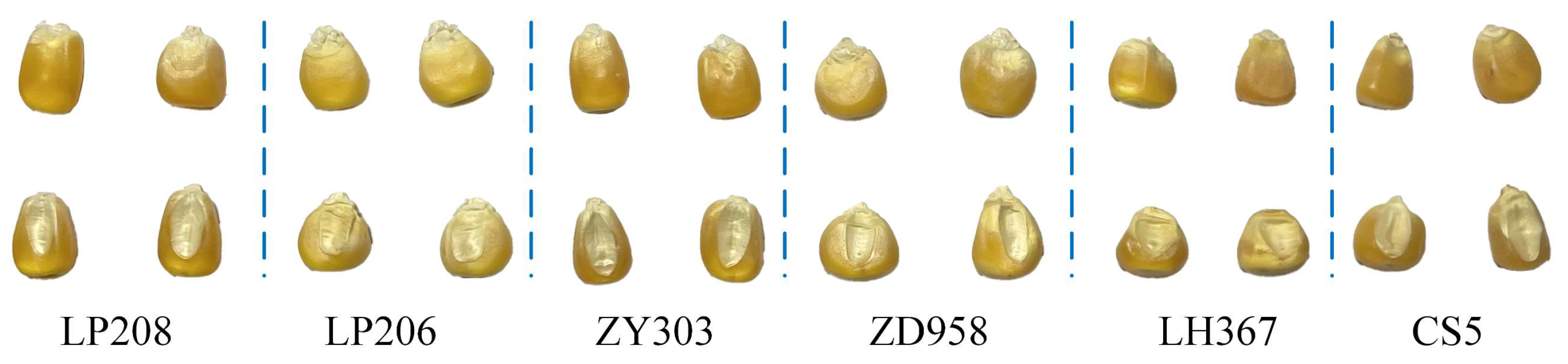

2.1.1. Corn Seed Material

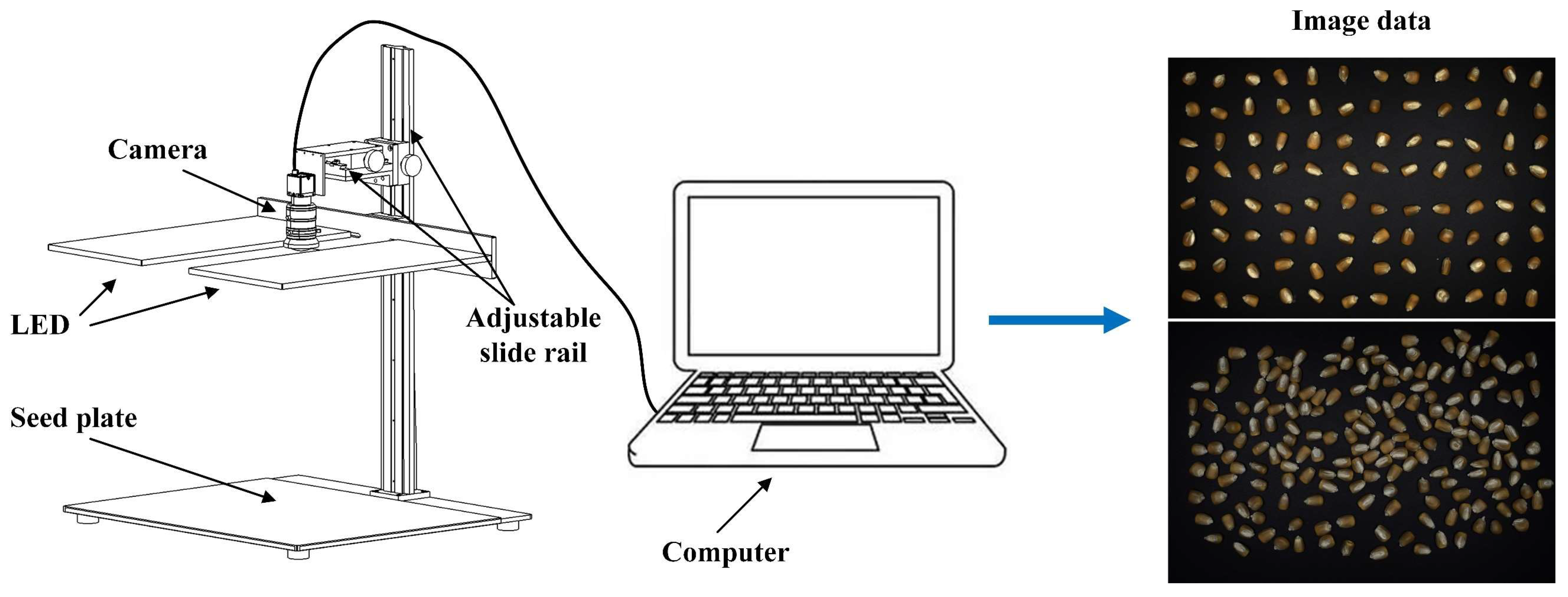

2.1.2. Image Acquisition

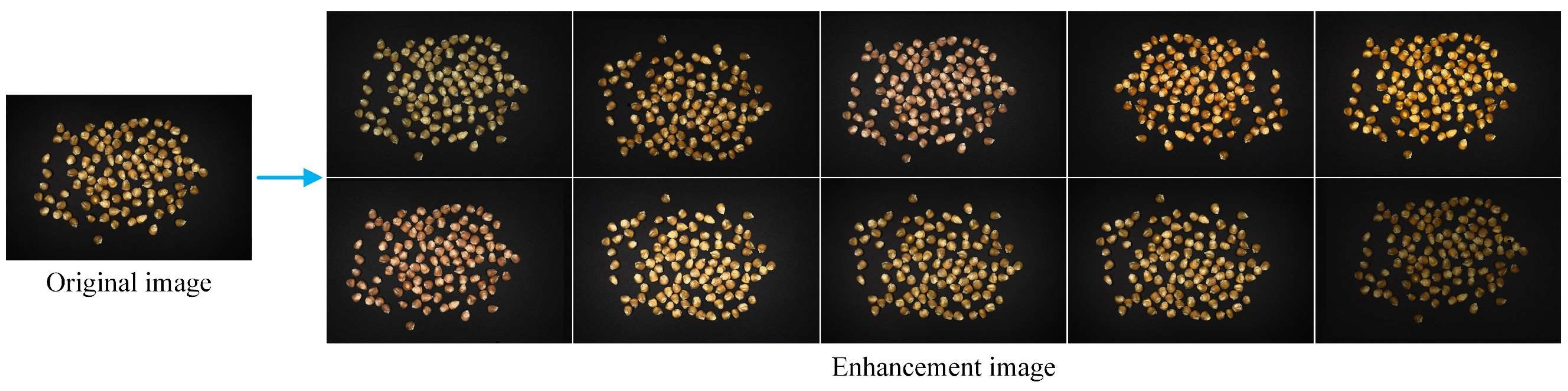

2.1.3. Data Preprocessing

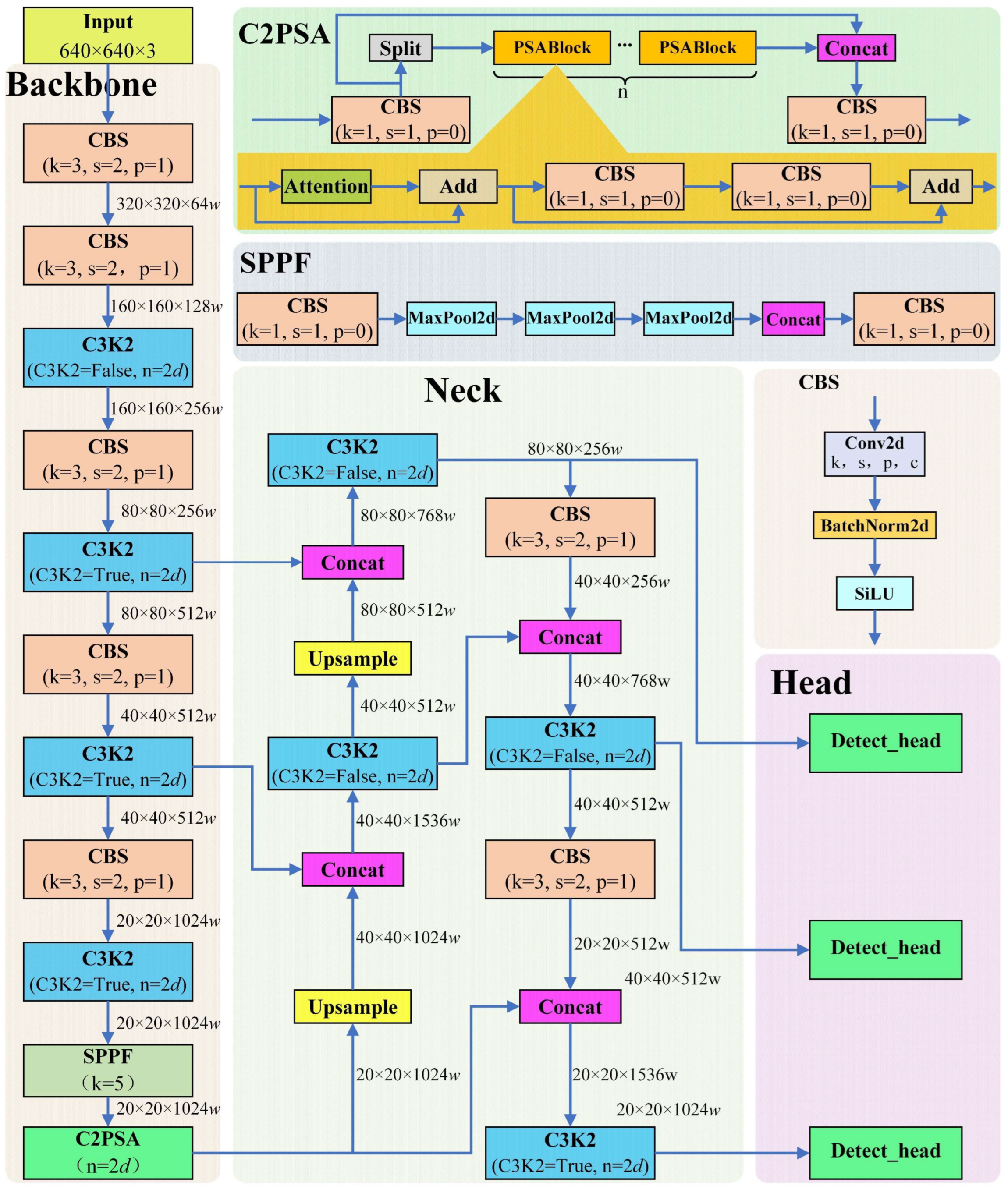

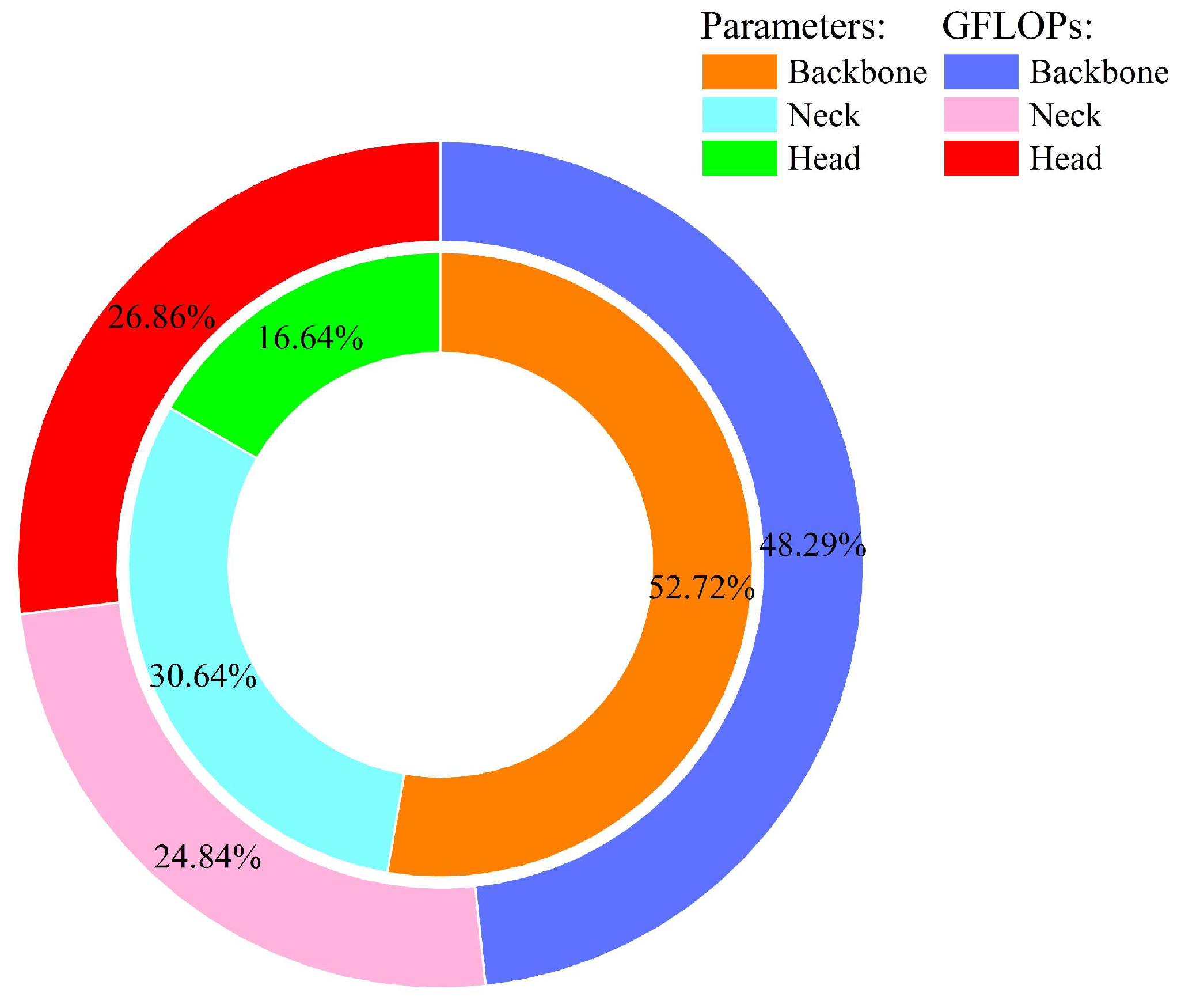

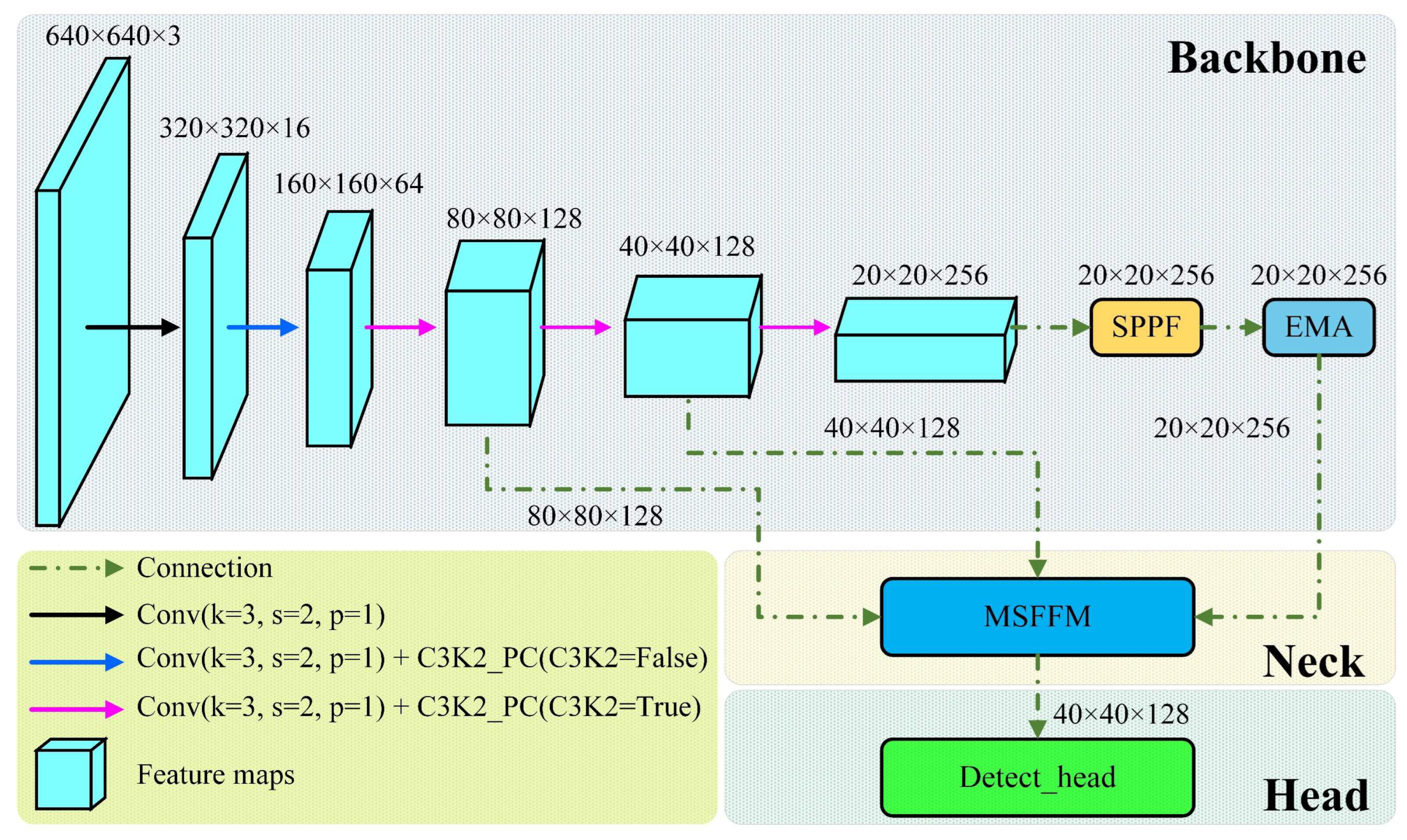

2.2. The Network Structure of LWCD-YOLO

2.2.1. The Object Detection Framework

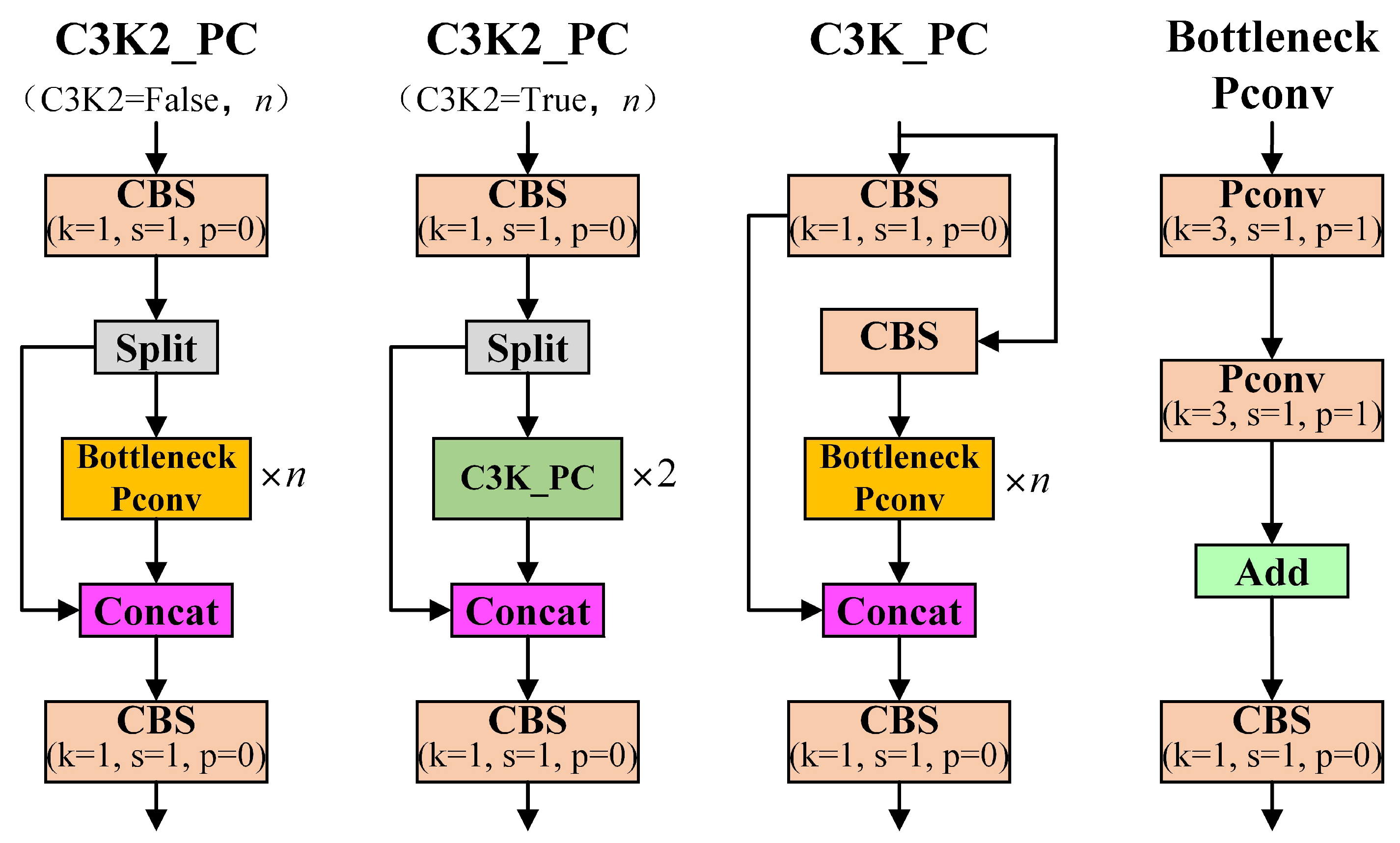

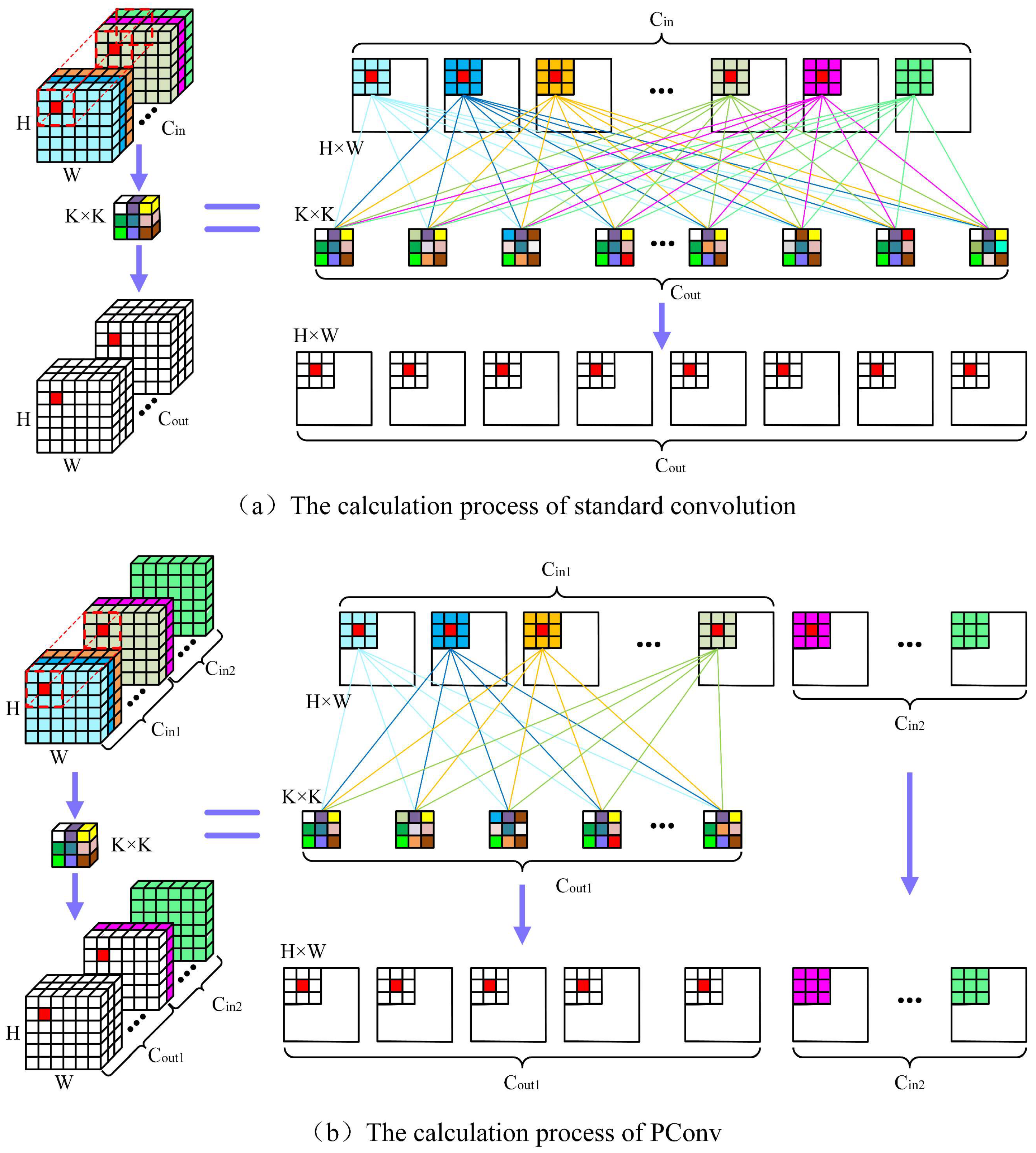

2.2.2. Lightweight Backbone Feature Extraction Network

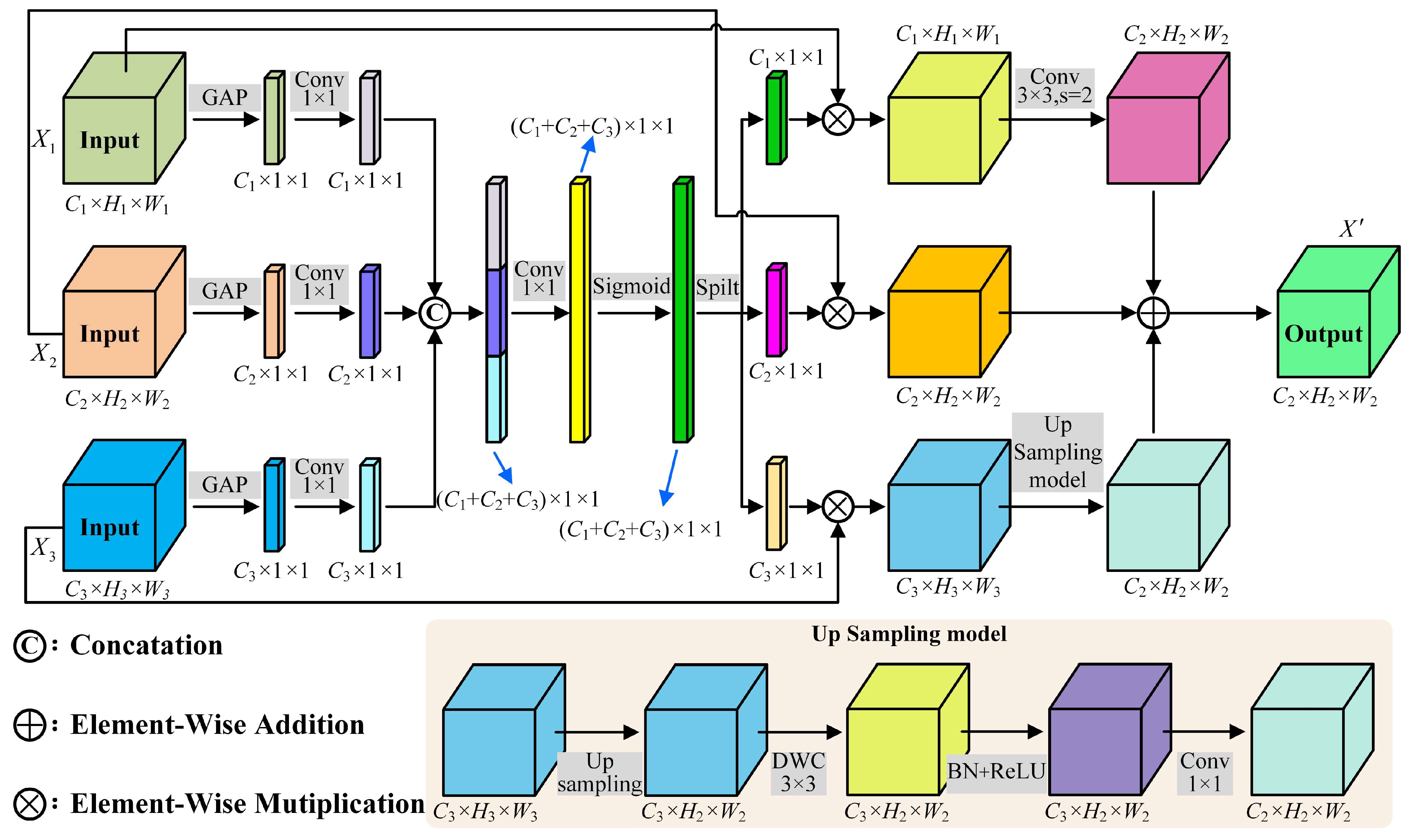

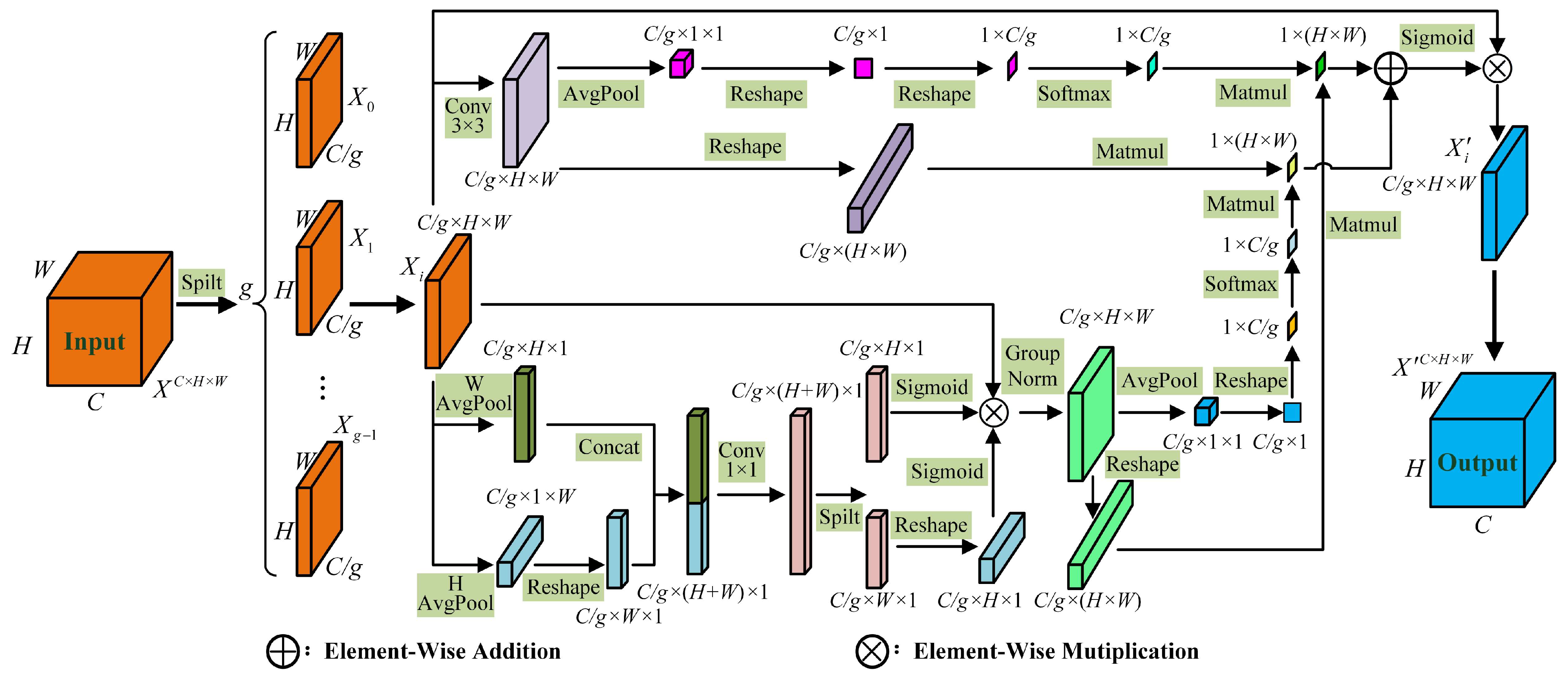

2.2.3. MSFFM Module

2.2.4. Loss Function Optimization

2.2.5. Model Evaluation Metrics

2.3. Experimental Environment and Parameters

3. Results

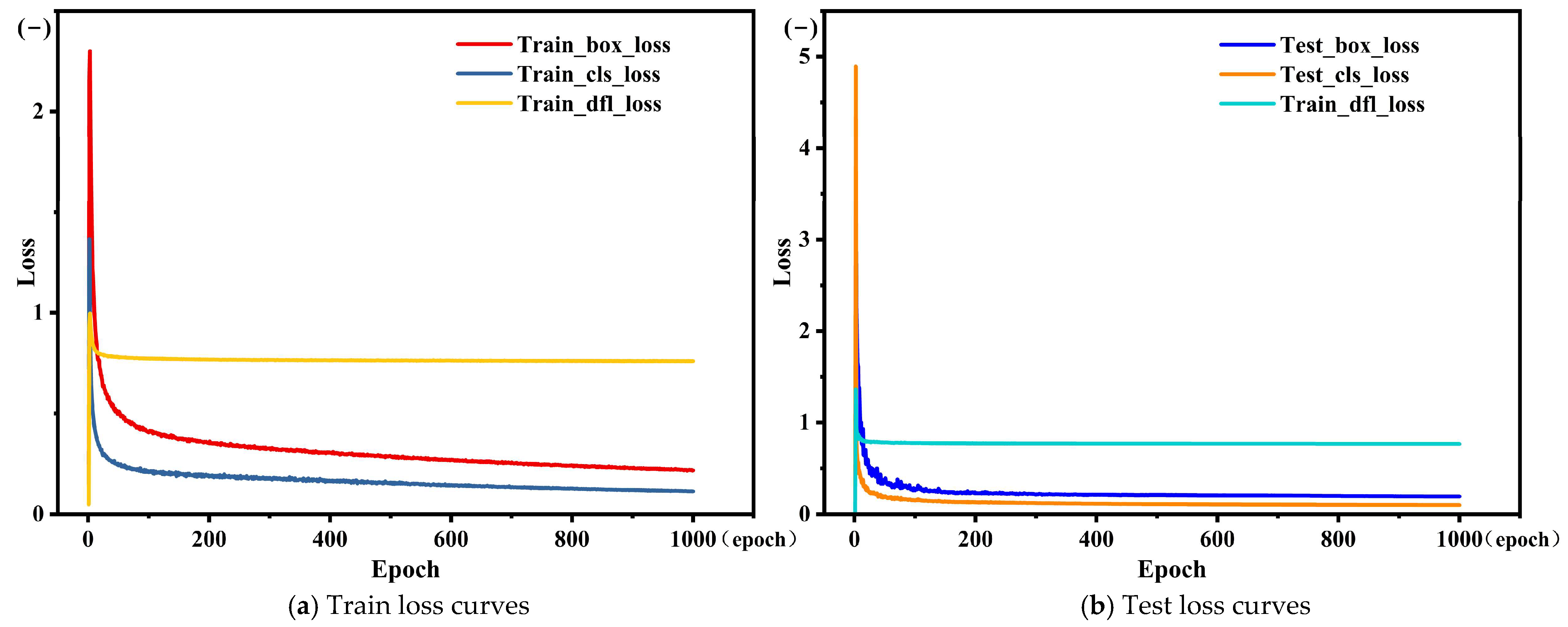

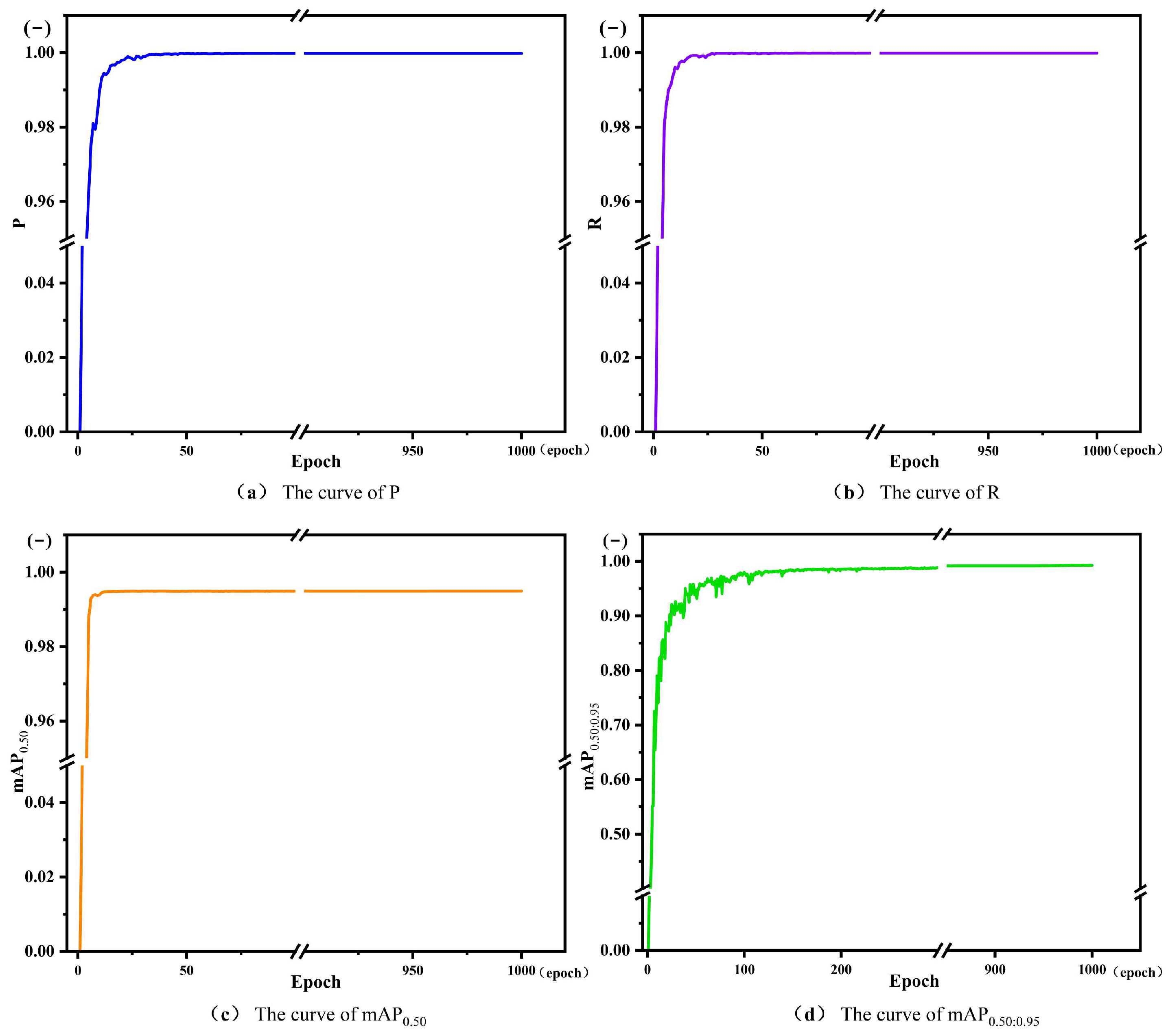

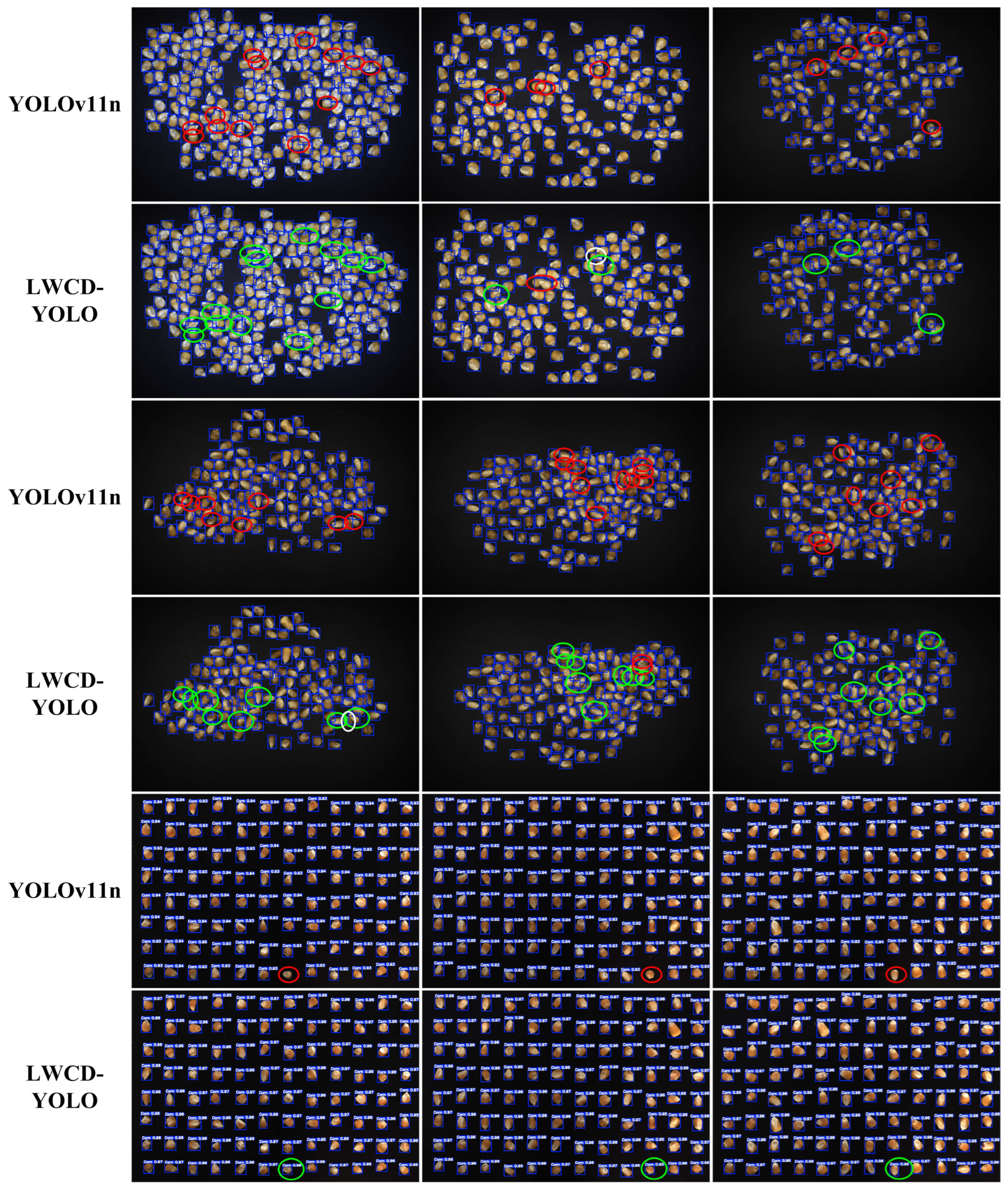

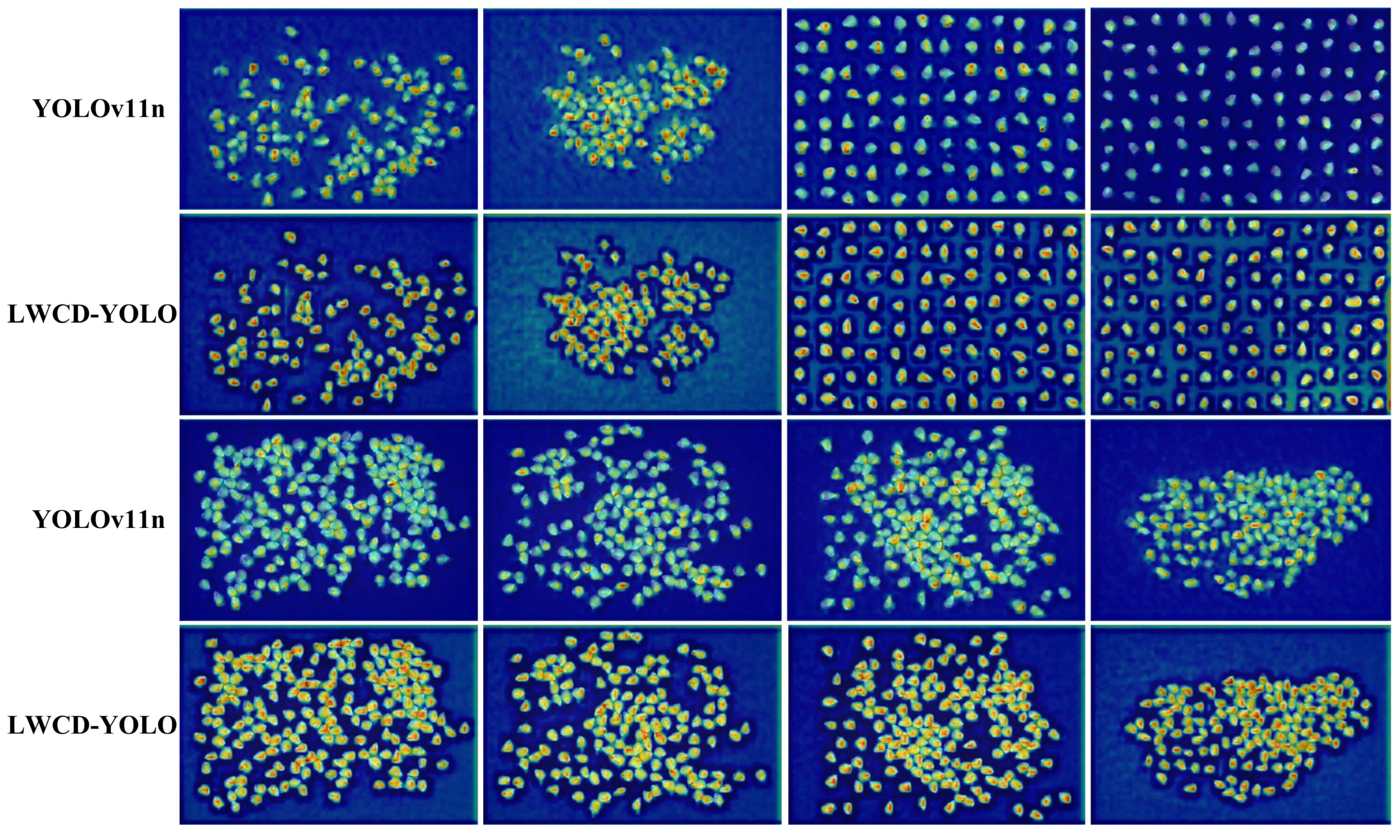

3.1. LWCD-YOLO Test Results and Analysis

3.2. Performance Comparison of State-of-the-Art Models

3.3. Ablation Experiment Results of Proposed Model

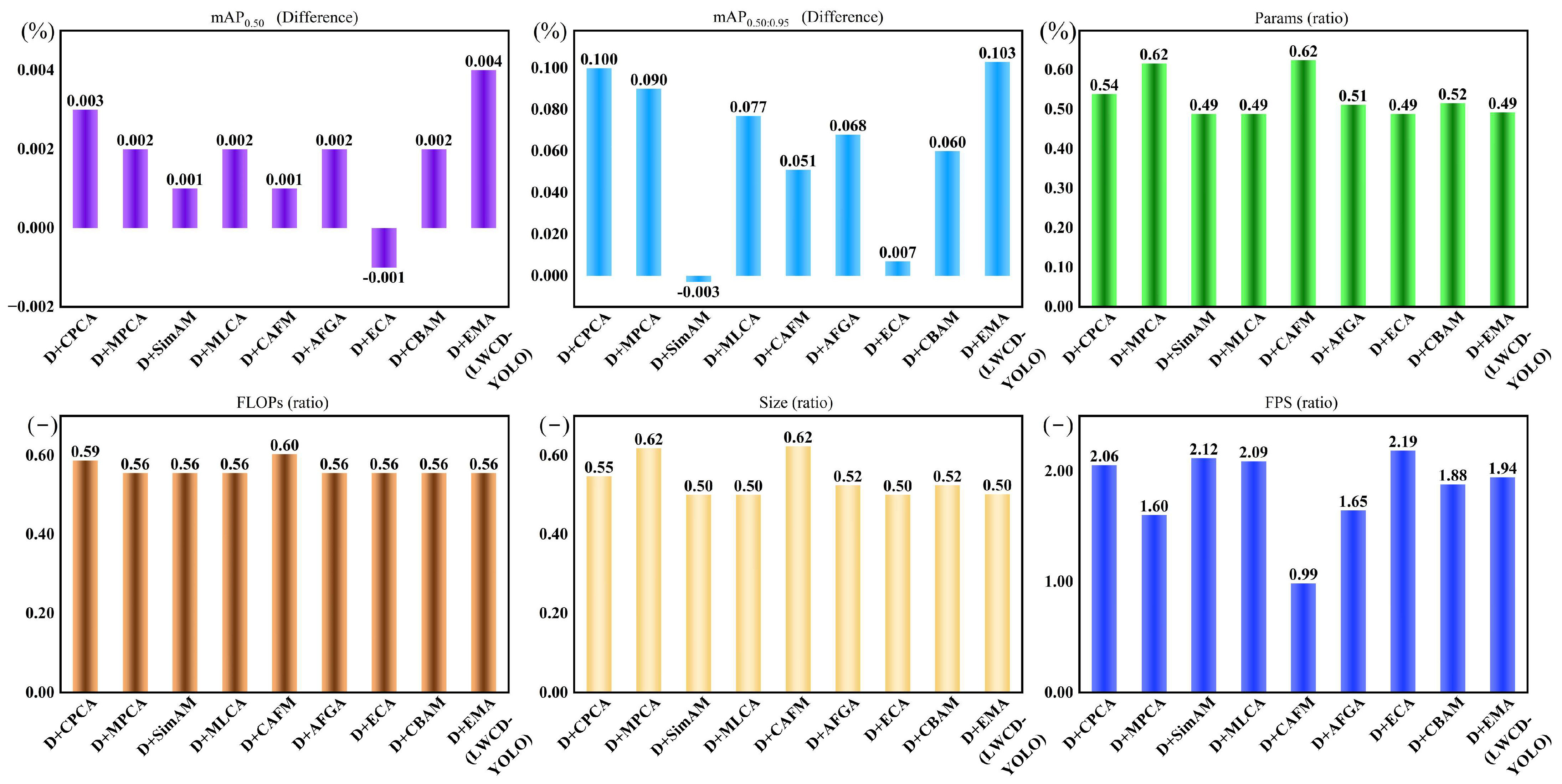

3.4. Comparative Experiments on Different Attention Mechanisms in Backbone Networks

3.5. Comparative Experiments on Different Regression Box Localization Loss Functions

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Data Type | Variety | Simple Environment: Number of Images | Simple Environment: Number of Target Boxes | Complex Environment: Number of Images | Complex Environment: Number of Target Boxes |
|---|---|---|---|---|---|
| Training dataset | LP208 | 24 | 2303 | 20 | 2790 |
| | ZY303 | 24 | 2304 | 15 | 2606 |
| | ZD958 | 22 | 2101 | 24 | 3124 |
| | LH367 | 20 | 1920 | 12 | 1511 |
| | Total | 90 | 8628 | 71 | 10,031 |
| Test dataset | CS5 | 24 | 2304 | 15 | 1633 |
| | LP206 | 24 | 2304 | 20 | 3078 |
| | Total | 48 | 4608 | 35 | 4711 |
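
The totals in the dataset table above can be turned into per-image kernel densities and a train/test split ratio. The following is a minimal sketch that only re-uses the totals listed in the table; the dictionary keys and the helper name `boxes_per_image` are illustrative and do not come from the paper.

```python
# Image and target-box totals copied from the dataset table above.
totals = {
    ("train", "simple"): (90, 8628),
    ("train", "complex"): (71, 10031),
    ("test", "simple"): (48, 4608),
    ("test", "complex"): (35, 4711),
}


def boxes_per_image(images: int, boxes: int) -> float:
    """Average number of annotated target boxes per image."""
    return boxes / images


# Density for each (split, environment) pair.
density = {key: boxes_per_image(*value) for key, value in totals.items()}

# Share of all annotated boxes that belongs to the test split.
train_boxes = totals[("train", "simple")][1] + totals[("train", "complex")][1]
test_boxes = totals[("test", "simple")][1] + totals[("test", "complex")][1]
test_share = test_boxes / (train_boxes + test_boxes)

for key, value in density.items():
    print(key, round(value, 1))
print("test share of boxes:", round(test_share, 3))
```

By these totals, images from the complex environment carry noticeably more target boxes each than images from the simple environment, and roughly one third of all boxes fall in the test split.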

| Layer | Network Layer Architecture | Stride | Number of Output Channels | Number of Modules | Params | FLOPs (G) |
|---|---|---|---|---|---|---|
| 1 | CBS | 2 | 16 | 1 | 464 | 0.10 |
| 2 | CBS | 2 | 32 | 1 | 4672 | 0.24 |
| 3 | C3K2_PC | 1 | 64 | 1 | 4576 | 0.24 |
| 4 | CBS | 2 | 64 | 1 | 36,992 | 0.48 |
| 5 | C3K2_PC | 1 | 128 | 1 | 17,920 | 0.23 |
| 6 | CBS | 2 | 128 | 1 | 14,772 | 0.47 |
| 7 | C3K2_PC | 1 | 128 | 1 | 5224 | 0.17 |
| 8 | CBS | 2 | 256 | 1 | 295,424 | 0.24 |
| 9 | C3K2_PC | 1 | 256 | 1 | 207,360 | 0.17 |
| 10 | SPPF | 1 | 256 | 1 | 164,608 | 0.13 |
| 11 | EMA | 1 | 256 | 1 | 10,368 | 0.06 |
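
The Params column for the CBS rows in the backbone table above can be cross-checked with a short calculation. This is a sketch under stated assumptions rather than the authors' own accounting: each CBS layer is taken to be a bias-free 3 × 3 convolution followed by batch normalization with one scale and one shift per output channel, the first layer is assumed to receive a 3-channel RGB image, and each layer's input width is inferred from the previous row's output channels. The function name `cbs_params` is illustrative.

```python
def cbs_params(c_in: int, c_out: int, kernel: int = 3) -> int:
    """Parameters of a bias-free k x k convolution plus BatchNorm scale and shift."""
    conv = kernel * kernel * c_in * c_out
    batch_norm = 2 * c_out
    return conv + batch_norm


# (layer, assumed input channels, output channels, Params value listed in the table)
cbs_rows = [
    (1, 3, 16, 464),
    (2, 16, 32, 4672),
    (4, 64, 64, 36992),
    (6, 128, 128, 14772),
    (8, 128, 256, 295424),
]

for layer, c_in, c_out, listed in cbs_rows:
    computed = cbs_params(c_in, c_out)
    status = "matches" if computed == listed else "differs"
    print(f"layer {layer}: computed {computed}, listed {listed} ({status})")
```

Under these assumptions, layers 1, 2, 4 and 8 reproduce the listed values exactly. Layer 6 comes to 147,712 rather than the listed 14,772, so that entry may be worth checking against the published table.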

| Models | P (%) | R (%) | F1 (%) | mAP0.50 (%) | mAP0.75 (%) | mAP0.50:0.95 (%) | Params (M) | FLOPs (G) | Size (MB) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv11n | 99.979 | 99.977 | 99.978 | 99.487 | 99.487 | 99.159 | 2.58 | 6.3 | 5.34 | 144 |
| YOLOv11s | 99.972 | 99.979 | 99.975 | 99.479 | 99.468 | 99.186 | 9.41 | 20.2 | 18.41 | 96 |
| LWCD-YOLO | 99.978 | 99.989 | 99.984 | 99.491 | 99.491 | 99.262 | 1.27 | 3.5 | 2.68 | 280 |
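
The lightweighting effect in the table above is easiest to read as a relative change against the YOLOv11n baseline. The sketch below uses only the Params, FLOPs, Size and FPS values from the YOLOv11n and LWCD-YOLO rows; the dictionary keys and the helper `percent_change` are illustrative names.

```python
# Values copied from the YOLOv11n and LWCD-YOLO rows of the table above.
baseline = {"params_m": 2.58, "flops_g": 6.3, "size_mb": 5.34, "fps": 144}
lwcd = {"params_m": 1.27, "flops_g": 3.5, "size_mb": 2.68, "fps": 280}


def percent_change(new: float, old: float) -> float:
    """Signed relative change of `new` with respect to `old`, in percent."""
    return (new - old) / old * 100.0


changes = {name: percent_change(lwcd[name], baseline[name]) for name in baseline}

for name, value in changes.items():
    print(f"{name}: {value:+.1f}%")
```

By this calculation, parameters and model size drop by roughly half, FLOPs by a little under half, and FPS nearly doubles, while the accuracy columns stay within a few hundredths of a percentage point of the baseline.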

| Models | P (%) | R (%) | F1 (%) | mAP0.50 (%) | mAP0.75 (%) | mAP0.50:0.95 (%) | Params (M) | FLOPs (G) | Size (MB) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5n | 99.968 | 99.957 | 99.963 | 99.491 | 99.491 | 98.450 | 1.76 | 4.1 | 3.66 | 250 |
| YOLOv8n | 99.966 | 99.989 | 99.978 | 99.482 | 99.482 | 99.151 | 3.00 | 8.1 | 5.95 | 132 |
| YOLOv9t | 99.952 | 99.979 | 99.965 | 99.493 | 99.490 | 98.837 | 2.80 | 11.7 | 6.5 | 94 |
| YOLOv10n | 99.957 | 99.922 | 99.940 | 99.483 | 99.483 | 99.200 | 2.27 | 6.5 | 5.51 | 121 |
| YOLOv11n | 99.979 | 99.977 | 99.978 | 99.487 | 99.487 | 99.159 | 2.58 | 6.3 | 5.34 | 144 |
| YOLOv12n | 99.946 | 99.946 | 99.946 | 99.486 | 99.485 | 99.124 | 2.53 | 5.8 | 5.27 | 125 |
| YOLOv13n | 99.964 | 99.979 | 99.972 | 99.483 | 99.482 | 99.222 | 2.45 | 6.1 | 5.24 | 112 |
| LWCD-YOLO | 99.978 | 99.989 | 99.984 | 99.491 | 99.491 | 99.262 | 1.27 | 3.5 | 2.68 | 280 |

| Models | P (%) | R (%) | F1 (%) | mAP0.50 (%) | mAP0.75 (%) | mAP0.50:0.95 (%) | Params (M) | FLOPs (G) | Size (MB) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv11n | 99.979 | 99.977 | 99.978 | 99.487 | 99.487 | 99.159 | 2.58 | 6.3 | 5.34 | 144 |
| +A | 99.975 | 99.989 | 99.982 | 99.485 | 99.485 | 99.160 | 2.16 | 5.7 | 4.50 | 160 |
| +B | 99.972 | 99.989 | 99.981 | 99.493 | 99.493 | 99.160 | 1.69 | 4.1 | 3.52 | 218 |
| +C | 99.977 | 99.989 | 99.983 | 99.489 | 99.489 | 99.207 | 2.58 | 6.3 | 5.34 | 164 |
| +A + B | 99.978 | 99.989 | 99.984 | 99.490 | 99.490 | 99.205 | 1.27 | 3.5 | 2.68 | 257 |
| +A + C | 99.968 | 99.979 | 99.973 | 99.482 | 99.482 | 99.170 | 2.16 | 5.7 | 4.50 | 170 |
| +B + C | 99.978 | 99.979 | 99.978 | 99.492 | 99.491 | 99.161 | 1.69 | 4.1 | 3.52 | 245 |
| +A + B + C (LWCD-YOLO) | 99.978 | 99.989 | 99.984 | 99.491 | 99.491 | 99.262 | 1.27 | 3.5 | 2.68 | 280 |

| Models | P (%) | R (%) | F1 (%) | mAP0.50 (%) | mAP0.75 (%) | mAP0.50:0.95 (%) | Params (M) | FLOPs (G) | Size (MB) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv11n | 99.979 | 99.977 | 99.978 | 99.487 | 99.487 | 99.159 | 2.58 | 6.3 | 5.34 | 144 |
| D + CPCA | 99.978 | 99.989 | 99.984 | 99.490 | 99.490 | 99.259 | 1.39 | 3.7 | 2.92 | 296 |
| D + MPCA | 99.978 | 99.979 | 99.978 | 99.489 | 99.486 | 99.249 | 1.59 | 3.5 | 3.30 | 231 |
| D + SimAM | 99.978 | 99.893 | 99.984 | 99.488 | 99.488 | 99.156 | 1.26 | 3.5 | 2.67 | 305 |
| D + MLCA | 99.978 | 99.989 | 99.983 | 99.489 | 99.489 | 99.236 | 1.26 | 3.5 | 2.67 | 301 |
| D + CAFM | 99.975 | 99.979 | 99.977 | 99.488 | 99.488 | 99.210 | 1.61 | 3.8 | 3.33 | 142 |
| D + AFGA | 99.978 | 99.979 | 99.978 | 99.489 | 99.489 | 99.227 | 1.32 | 3.5 | 2.80 | 237 |
| D + ECA | 99.978 | 99.979 | 99.978 | 99.486 | 99.482 | 99.166 | 1.26 | 3.5 | 2.67 | 315 |
| D + CBAM | 99.978 | 99.979 | 99.978 | 99.489 | 99.480 | 99.219 | 1.33 | 3.5 | 2.80 | 271 |
| D + EMA (LWCD-YOLO) | 99.978 | 99.989 | 99.984 | 99.491 | 99.491 | 99.262 | 1.27 | 3.5 | 2.68 | 280 |
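
The F1 column in the table above can be checked against P and R. The sketch assumes the standard definition of F1 as the harmonic mean of precision and recall, which is not restated in this part of the text; the tolerance of 0.0015 percentage points is an illustrative choice that allows for the inputs having been rounded to three decimals.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    return 2 * precision * recall / (precision + recall)


# (model, P, R, F1) copied from the attention-mechanism comparison table above.
rows = [
    ("YOLOv11n", 99.979, 99.977, 99.978),
    ("D + CPCA", 99.978, 99.989, 99.984),
    ("D + MPCA", 99.978, 99.979, 99.978),
    ("D + SimAM", 99.978, 99.893, 99.984),
    ("D + MLCA", 99.978, 99.989, 99.983),
    ("D + CAFM", 99.975, 99.979, 99.977),
    ("D + AFGA", 99.978, 99.979, 99.978),
    ("D + ECA", 99.978, 99.979, 99.978),
    ("D + CBAM", 99.978, 99.979, 99.978),
    ("D + EMA (LWCD-YOLO)", 99.978, 99.989, 99.984),
]


def inconsistent_rows(table, tolerance: float = 0.0015) -> list[str]:
    """Names of rows whose listed F1 differs from the value implied by P and R."""
    return [
        name
        for name, p, r, f1 in table
        if abs(f1_score(p, r) - f1) > tolerance
    ]


print(inconsistent_rows(rows))
```

With these inputs only the D + SimAM row is flagged: its listed P and R imply an F1 near 99.935 rather than 99.984, so either the recall or the F1 entry for that row may contain a transcription slip. The table is reproduced as printed.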

| Models | P (%) | R (%) | F1 (%) | mAP0.50 (%) | mAP0.75 (%) | mAP0.50:0.95 (%) | FPS |
|---|---|---|---|---|---|---|---|
| E + CIoU | 99.979 | 99.977 | 99.978 | 99.487 | 99.487 | 99.159 | 257 |
| E + SIoU | 99.978 | 99.989 | 99.984 | 99.491 | 99.491 | 99.258 | 275 |
| E + GIoU | 99.978 | 99.989 | 99.984 | 99.489 | 99.488 | 99.199 | 267 |
| E + EIoU | 99.978 | 99.979 | 99.978 | 99.496 | 99.496 | 99.259 | 273 |
| E + DIoU | 99.978 | 99.979 | 99.978 | 99.493 | 99.491 | 99.250 | 261 |
| E + ShapeIoU | 99.978 | 99.989 | 99.984 | 99.491 | 99.491 | 99.200 | 241 |
| E + WIoU (LWCD-YOLO) | 99.978 | 99.989 | 99.984 | 99.491 | 99.491 | 99.262 | 280 |
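
All losses compared in the table above build on the intersection over union (IoU) of a predicted and a ground-truth box. The sketch below computes plain IoU and, as one possible reading of the WIoU entry, the v1 form of Wise-IoU, in which the IoU loss is scaled by an exponential of the normalized centre distance. The table does not state which WIoU variant was used, so the choice of v1 is an assumption, and the function names and the corner-format box type are illustrative.

```python
import math

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def wiou_v1_loss(pred: Box, target: Box) -> float:
    """Wise-IoU v1 (assumed variant): exp(centre distance term) * (1 - IoU)."""
    # Squared distance between the two box centres.
    dx = (pred[0] + pred[2]) / 2 - (target[0] + target[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (target[1] + target[3]) / 2
    # Width and height of the smallest box enclosing both inputs.
    enclose_w = max(pred[2], target[2]) - min(pred[0], target[0])
    enclose_h = max(pred[3], target[3]) - min(pred[1], target[1])
    # In a training framework this denominator is detached from the gradient
    # graph; that detail has no effect in this plain-Python sketch.
    focus = math.exp((dx * dx + dy * dy) / (enclose_w**2 + enclose_h**2))
    return focus * (1.0 - iou(pred, target))


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(wiou_v1_loss((0, 0, 2, 2), (1, 1, 3, 3)))
```

A perfectly aligned prediction gives a loss of zero, and the penalty grows both as overlap shrinks and as the centres move apart relative to the enclosing box.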

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, W.; Xu, K.; Chen, D.; Lv, D.; Yang, R.; Yang, S.; Wang, R.; Wang, L.; Chen, L. LWCD-YOLO: A Lightweight Corn Seed Kernel Fast Detection Algorithm Based on YOLOv11n. Agriculture 2025, 15, 1968. https://doi.org/10.3390/agriculture15181968