Abstract

In situ detection of growth information in greenhouse crops is crucial for germplasm resource optimization and intelligent greenhouse management. To address the limitations of poor flexibility and low automation in traditional phenotyping platforms, this study developed a controlled environment inspection robot. By means of a SCARA robotic arm equipped with an information acquisition device consisting of an RGB camera, a depth camera, and an infrared thermal imager, high-throughput and in situ acquisition of lettuce phenotypic information can be achieved. Through semantic segmentation and point cloud reconstruction, 12 phenotypic parameters, such as lettuce plant height and crown width, were extracted from the acquired images as inputs for three machine learning models to predict fresh weight. By analyzing the training results, a Backpropagation Neural Network (BPNN) with an added feature dimension-increasing module (DE-BP) was proposed, achieving improved prediction accuracy. The R2 values for plant height, crown width, and fresh weight predictions were 0.85, 0.93, and 0.84, respectively, with RMSE values of 7 mm, 6 mm, and 8 g, respectively. This study achieved in situ, high-throughput acquisition of lettuce phenotypic information under controlled environmental conditions, providing a lightweight solution for crop phenotypic information analysis algorithms tailored for inspection tasks.

1. Introduction

Lettuce phenotypic information refers to all externally observable traits of lettuce during its growth, including shape, structure, texture, and color [1]. By obtaining lettuce phenotypic information, one can fully understand the plant architecture, growth status, physiological characteristics, and yield of lettuce [2,3]. Therefore, monitoring lettuce phenotypic information is crucial for agronomists and breeders to assess lettuce growth and adjust management strategies [4]. However, traditional methods of obtaining phenotypic information rely on manual measurement, which has drawbacks such as small scale, low efficiency, and strong subjectivity, severely limiting the efficiency of agricultural breeding and production [5]. There is an urgent need to utilize advanced phenotypic analysis technologies and equipment to achieve automated acquisition and intelligent analysis of lettuce phenotypic information [6].

Existing gantry-type [7], track-type [8], and imaging chamber-type [9] phenotyping platforms offer advantages such as high stability and detection accuracy. However, gantry-type and track-type platforms can only collect phenotypic information from crops in fixed areas, and their installation costs are generally high; imaging chamber-type platforms require crops to be moved to designated locations for information collection, resulting in low efficiency. In comparison, inspection robots capable of autonomous navigation and crop information acquisition can overcome these limitations and achieve in situ, non-destructive detection of crops through automated, high-throughput data collection and analysis [10]. For example, Miao et al. [11] designed a phenotyping inspection robot with adjustable wheel tracks, inspired by the structure of gantry-type platforms, and achieved high-throughput image collection through pixel-level registration algorithms, overcoming the fixed working area of traditional gantry-type platforms.

In facility agriculture production, yield is a key indicator of lettuce economic efficiency, and lettuce fresh weight directly determines yield [12]. Lettuce fresh weight refers to the total mass of the plant excluding the root system in its fresh state. Direct measurement of lettuce fresh weight requires destructive sampling methods to remove the root system. Therefore, many researchers have employed imaging devices combined with deep learning methods to predict lettuce fresh weight based on collected images. In lettuce fresh weight prediction models, using multi-view images to perform three-dimensional reconstruction of lettuce can fully capture its complex three-dimensional structural information, which offers significant advantages for fresh weight prediction tasks [13]. With the improvement of computer processing capabilities and the reduction in the size of three-dimensional data measurement devices, three-dimensional plant canopy structure measurement and reconstruction have been deployed on miniaturized platforms [14]. Bloch et al. [15] used four 3D cameras to collect point clouds of lettuce plants, extracted morphological parameters such as plant volume and plant height through point cloud reconstruction, and used these parameters as inputs for linear regression to predict the fresh weight of lettuce, with the model R2 reaching 0.85. However, facility-based phenotyping platforms must maintain a certain level of operational efficiency. Collecting multi-angle information for individual crops consumes excessive time, impairing detection efficiency. Additionally, the complex structural layout of facility environments and the compact planting arrangement of lettuce make idealized multi-angle information collection schemes unsuitable for the high-throughput in situ acquisition of facility crop phenotyping parameters. Therefore, the single-angle, multi-modal imaging approach based on mobile phenotyping platforms is more suitable for in situ monitoring of crop growth in facility environments [16].

Compared to multi-angle imaging, single-angle imaging struggles to comprehensively capture the three-dimensional morphological information of lettuce and also loses a significant amount of color and texture information, which has a substantial impact on the accuracy of fresh weight estimation [17,18]. Currently, many scholars have been exploring the prediction of lettuce fresh weight from single-view images. Tan et al. [19] combined the Mask R-CNN and PoseNet deep learning models to predict fresh weight information from tilted images of lettuce in a controlled environment; Tang et al. [20] combined the Mask3D and PointNet deep learning models to predict actual fresh weight using the three-dimensional point cloud of lettuce.

Using deep learning methods, complex feature mapping relationships can be directly learned from RGB images, depth images, or point clouds through models such as CNN and PointNet to predict the fresh weight of lettuce. Although varying degrees of accuracy have been achieved in prediction, running complex deep learning models on small mobile devices such as inspection robots means more computing time and higher computing power consumption [21]. Therefore, the phenotypic information processing algorithms carried by inspection robots need to develop in the direction of lightweighting [22].

Extracting more phenotypic parameters with higher relevance to fresh weight from single-view, multi-modal information, deeply exploring the potential relationships between phenotypic parameters and fresh weight, and using machine learning algorithms to predict fresh weight based on phenotypic parameters may overcome the high computational demands of directly processing raw images with deep learning models, providing a lightweight solution for crop phenotypic information analysis algorithms tailored to inspection tasks [23,24,25]. However, most current research pursues higher prediction accuracy by using deep learning networks to directly process complex relationships within images, while neglecting the model’s size. Furthermore, few platforms can achieve non-destructive, in situ, high-throughput monitoring of crops in facility environments while simultaneously ensuring automation and simple deployment.

This study aims to develop a facility-based crop information inspection robot, with the following specific research objectives. (1) Develop a robot capable of autonomous navigation and completing image acquisition and analysis tasks in a facility environment. (2) Design single-plant lettuce segmentation algorithms and point cloud reconstruction algorithms to automatically identify and segment individual lettuce plants from raw RGB and depth images and reconstruct their three-dimensional point cloud models. (3) Extract morphological parameters such as lettuce plant height and crown width, as well as vegetation indices such as the excess green index and normalized vegetation index, and analyze the correlation between these parameters and fresh weight. (4) Construct different fresh weight prediction models based on multiple machine learning algorithms and investigate the contribution of different phenotypic parameters to fresh weight prediction.

2. Materials and Methods

2.1. Overview of the Platform

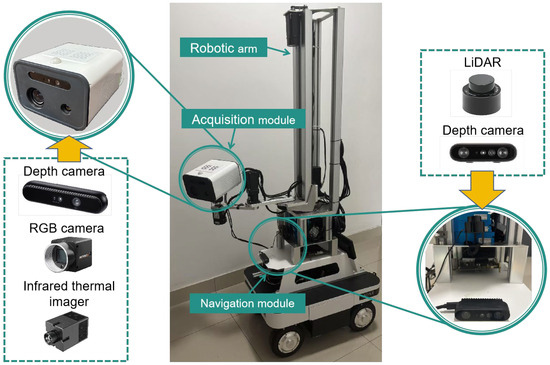

The controlled environment crop information inspection robot designed in this study mainly consists of a four-wheel omnidirectional chassis, a SCARA robotic arm, and information sensing devices. The specific structure is shown in Figure 1.

Figure 1.

Hardware structure of inspection robot.

The robot chassis is a programmable omnidirectional unmanned ground vehicle (UGV) that performs continuous and accurate positioning and navigation inside and outside the facility using a SLAM architecture. The UGV communicates with the onboard NVIDIA Jetson Orin Nano (NVIDIA Corp., Santa Clara, CA, USA) via a CAN bus interface, continuously sending odometry information and receiving driving control signals in real time. An RGB-D camera, Gemini335 (Orbbec, Shenzhen, China), and a single-line LiDAR, LSLIDAR N10P (Leishen Intelligent System Co., Ltd., Shenzhen, China), provide visual information and 3D point clouds for the navigation system, which are used to construct a 2D grid map. The NAV2 navigation architecture under the ROS2 system is adopted. After navigation target points are set via RVIZ and a cost map is established through loopback, the UGV can achieve continuous and precise autonomous navigation. This enables the inspection robot to stably close the "localization-planning-control" loop in controlled environments while ensuring navigation accuracy, obstacle avoidance safety, and task continuity.

To meet the on-site data collection needs for crops of different types, growth stages, and planting methods within the facility, this study designed a five-degree-of-freedom SCARA robotic arm with a vertical lifting range of 0.9 m to 1.8 m relative to the ground and a horizontal reach of 0.7 m. Equipped with a multi-modal imaging device mounted on its end-effector, the arm can achieve 360° horizontal rotation and ±90° vertical tilt.

To achieve precise acquisition of crop information, a pre-trained lightweight semantic segmentation model, YOLOv8n-seg, is used to identify lettuce in the RGB video stream obtained from an RGB-D camera. The identified lettuce is then mapped to the depth video stream in the form of a mask, thereby obtaining the position of the lettuce center point relative to the depth camera. A depth threshold is then set for the lettuce center point. When the lettuce center point falls outside the depth threshold range, the robotic arm targets the lettuce center point and, through inverse kinematics calculation, moves its end effector to a position 1 m away from the lettuce center point. The center point of the RGB image is set as the crosshair. Through forward kinematics control of the robotic arm, the center point of the RGB-D camera at the end of the robotic arm moves toward and aligns with the center point of the lettuce, ensuring that the imaging device always faces the target lettuce at a distance of 1 m.
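The inverse and forward kinematics step can be illustrated for the horizontal joints of a SCARA arm. The following is a minimal sketch, not the controller used on the robot: it treats the arm as a planar two-link mechanism, and the link lengths `L1` and `L2` are hypothetical values chosen only so that their sum matches the stated 0.7 m horizontal reach.

```python
import math

# Hypothetical link lengths in metres (assumption: only their sum, 0.7 m, comes from the text).
L1, L2 = 0.4, 0.3


def scara_ik(x, y, l1=L1, l2=L2):
    """Planar two-link inverse kinematics.

    Returns one of the two joint solutions (theta1, theta2) in radians,
    or None when the target lies outside the reachable workspace.
    """
    r2 = x * x + y * y
    cos_t2 = (r2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(cos_t2) > 1.0:
        return None  # target too far away or too close to the base
    theta2 = math.acos(cos_t2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2


def scara_fk(theta1, theta2, l1=L1, l2=L2):
    """Planar two-link forward kinematics: joint angles to end-effector (x, y)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


if __name__ == "__main__":
    angles = scara_ik(0.5, 0.2)
    print("joint angles (rad):", angles)
    print("reached position:", scara_fk(*angles))
```

Feeding the inverse kinematics solution back through the forward kinematics is a simple way to check that a commanded pose is reachable before the arm moves.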

The information sensing device mounted on the end of the robotic arm consists of an Orbbec Gemini336L (Orbbec, Shenzhen, China), a Hikvision industrial camera MV-CS200-10GC (Hikvision, Hangzhou, China), a Guide IPM630 infrared thermal imager (Guide, Wuhan, China), a humidity sensor, and a light sensor, enabling high-throughput acquisition of crop information. The specific image sensor models are listed in Table 1.

In Table 1, resolution is the most important consideration. The high resolution of 5472 × 3648 of the RGB camera ensures high-quality RGB images; the RGB channel and depth channel resolutions of the depth camera are both 1280 × 800, which facilitates image fusion; and the 640 × 512 pixels of the infrared thermal imager are sufficient for the application of lettuce temperature measurement. The operating range only needs to cover an effective range of 0.5 m to 2 m to meet the imaging requirements of inspection robots; in most cases, the surface temperature of crops will not exceed the temperature measurement range of the infrared thermal imager.

Table 1.

Image sensor model of the acquisition device.

Before performing area inspection tasks, the inspection robot is manually driven through the facility to build a two-dimensional grid map of the facility environment. Each location requiring information acquisition is marked as a navigation point. The inspection robot pauses at each navigation point and starts the vision-based robotic arm control program. Once the robotic arm is aligned with the target lettuce, the image acquisition program is activated to capture RGB images, depth images, and thermal infrared images of the target lettuce from a side-view perspective. After completion, the inspection robot proceeds to the next navigation point and performs the next image acquisition task.

2.2. Experimental Materials

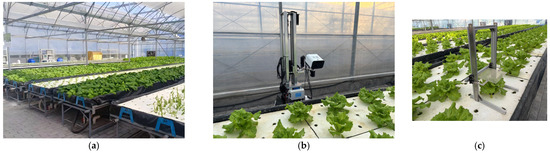

The experimental site is located at the Zijing Farm, Ganghua, Jiangsu Province, China (119°8′21.725″ E, 32°8′27.481″ N). Italian lettuce grown by hydroponics was selected as the experimental subject. Each lettuce plant was spaced 200 mm apart, and the hydroponic rack was 1000 mm above the ground. Other management measures were consistent with local facility management practices.

The experiment was conducted on 11 April 2025, with the lettuce having already been growing in the area for 30 days prior to the experiment. The inspection robot navigated autonomously through the aisles between the hydroponic racks to complete patrols and data collection, with a maximum travel speed of 0.5 m/s. To ensure the imaging quality of all sensors, the distance between the information sensing devices and the target crops was maintained at 1000 mm. A total of 132 RGB images, depth images, and thermal infrared images of Italian lettuce were obtained.

Within 2 h of image acquisition, each lettuce plant was measured manually. A height measurement device (Figure 2c) was used to determine the distance from the highest point of the lettuce to the bottom surface of the hydroponic foam board, which was taken as the plant height. The maximum distance between the leftmost and rightmost ends of the lettuce, parallel to the aisle direction, was taken as the crown width. Finally, the lettuce was removed from the hydroponic rack, its roots were removed, and the remaining mass was measured on a balance (accuracy 0.01 g) as the fresh weight.

Figure 2.

The scenario of the experiment. (a) Lettuce growing environment; (b) inspection robots perform inspection tasks in the greenhouses; (c) height measuring device with an accuracy of 0.5 mm.

2.3. Image Processing Methods

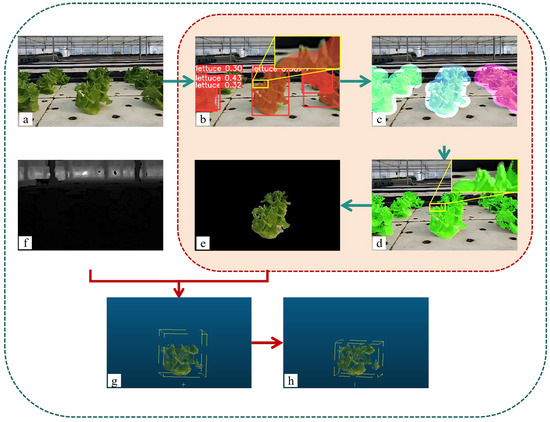

RGB images and depth images of lettuce captured using the Orbbec Gemini336L camera were used as input for the phenotyping estimation algorithm. To enable the image processing algorithm to identify lettuce automatically within the processor's limited computational capacity, this study selected the lightweight model YOLOv8n-seg for embedded deployment. Compared with other YOLO variants, it is more aggressively optimized for light weight and has a more mature architecture. YOLOv8n-seg was trained as a lightweight semantic segmentation model for processing raw RGB images. This model identifies lettuce within images and generates preliminary masks, as shown in Figure 3b.

Figure 3.

Flowchart of image processing algorithms. (a) RGB image obtained from the Gemini336L camera; (b) RGB image processed by YOLOv8n-seg, where the resulting mask has low coverage of the lettuce leaf edges; (c) mask expanded outward by 5%; (d) mask refined using an algorithm based on the excess green feature; (e) RGB image under the center-position mask, which at this point contains the target lettuce and background lettuce that cannot be segmented; (f) depth image obtained from the Gemini336L camera; (g) 3D point cloud of the mask area reconstructed from the RGB and depth images, with background lettuce segmented by a depth threshold; (h) point cloud of an individual lettuce plant after noise removal with the DBSCAN clustering algorithm.

Due to limitations in model accuracy, the mask generated by YOLOv8n-seg does not precisely cover the leaf edges. Therefore, the mask is expanded outward by 5% to ensure it fully covers the lettuce instances (Figure 3c). Then, the excess green (ExG) algorithm is used to reassign values to each pixel within the mask, enhancing the contrast of the G channel relative to the R and B channels. This prevents lettuce pixels whose G channel value is low because of shadows or other factors from being missed. Thresholds are then set for the G channel and the ExG index. Each pixel is tested on whether its G channel value in the original RGB image and its ExG value exceed the respective thresholds, and the two results are combined using a logical OR operation to obtain a lettuce mask with clear boundaries (Figure 3d).
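The mask refinement logic can be sketched as follows. This is an illustrative example under stated assumptions rather than the study's implementation: the index is computed on normalized chromatic coordinates, and the thresholds `g_thresh` and `exg_thresh` are hypothetical values, since the paper does not report the ones it used.

```python
def excess_green(r, g, b):
    """Excess green index on normalized chromatic coordinates: 2g - r - b."""
    total = r + g + b
    if total == 0:
        return 0.0  # pure black pixel: no chromatic information
    return (2 * g - r - b) / total


def refine_mask(pixels, coarse_mask, g_thresh=100, exg_thresh=0.1):
    """Keep a pixel only if it lies inside the expanded coarse mask AND
    passes either the G-channel test or the excess green test (logical OR).

    pixels      : list of (R, G, B) tuples with 8-bit channel values
    coarse_mask : list of booleans, True where the expanded mask covers the pixel
    g_thresh, exg_thresh : hypothetical thresholds chosen for illustration
    """
    refined = []
    for (r, g, b), inside in zip(pixels, coarse_mask):
        is_green = g > g_thresh or excess_green(r, g, b) > exg_thresh
        refined.append(inside and is_green)
    return refined


if __name__ == "__main__":
    pixels = [
        (40, 160, 40),  # well-lit leaf: passes the G-channel test
        (20, 60, 20),   # shadowed leaf: low G value but high excess green
        (90, 80, 70),   # background inside the expanded mask: fails both tests
        (40, 160, 40),  # green pixel outside the coarse mask
    ]
    coarse_mask = [True, True, True, False]
    print(refine_mask(pixels, coarse_mask))
```

The shadowed leaf pixel shows why the two tests are joined with OR: its G value is low, yet its excess green value remains high.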

YOLOv8n-seg has already distinguished each instance of the mask during semantic segmentation. Using the refined lettuce mask, the single lettuce instance located at the center of the image can therefore be extracted directly. The RGB image and the original depth image are then aligned to the same spatial coordinate system through the intrinsic matrix, thereby reconstructing a 3D point cloud with RGB information.

Using the camera intrinsic matrix K obtained from the Orbbec SDK, two-dimensional pixel coordinates combined with depth information are converted into three-dimensional spatial coordinates, thereby reconstructing the three-dimensional colored point cloud of the target lettuce. The coordinate transformation is given in Equation (1):

$$
Z = \frac{d_z}{s}, \qquad
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
= Z \, K^{-1} \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
\tag{1}
$$

where (u, v) represents the pixel coordinates, dz denotes the depth value corresponding to these coordinates in the depth map, s = 1000 is the depth scaling factor, K is the internal parameter matrix, and (X, Y, Z) represents the world coordinate system coordinates corresponding to the pixel coordinates (u, v).
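The pixel-to-space transformation can be illustrated with the standard pinhole camera model. The sketch below assumes that the intrinsic matrix is described by focal lengths `fx`, `fy` and principal point `cx`, `cy`; the numeric values in the example are hypothetical, not the calibrated parameters of the Gemini336L.

```python
def backproject(u, v, dz, fx, fy, cx, cy, s=1000.0):
    """Convert a pixel (u, v) with raw depth dz into 3D coordinates (X, Y, Z).

    fx, fy, cx, cy : entries of the pinhole intrinsic matrix K
    s              : depth scaling factor (raw depth units per metre)
    """
    z = dz / s
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return x, y, z


def depth_to_points(depth, mask, fx, fy, cx, cy, s=1000.0):
    """Back-project every masked pixel with a valid (non-zero) depth value.

    depth : 2D list of raw depth values, indexed as depth[v][u]
    mask  : 2D list of booleans with the same shape
    """
    points = []
    for v, row in enumerate(depth):
        for u, dz in enumerate(row):
            if mask[v][u] and dz > 0:  # zero depth marks a failed measurement
                points.append(backproject(u, v, dz, fx, fy, cx, cy, s))
    return points


if __name__ == "__main__":
    # Hypothetical intrinsics for a 1280 x 800 image.
    fx = fy = 600.0
    cx, cy = 640.0, 400.0
    print(backproject(640, 400, 1000, fx, fy, cx, cy))  # principal point at 1 m
    print(backproject(940, 400, 1000, fx, fy, cx, cy))  # 300 px to the right
```

A pixel at the principal point maps onto the optical axis, and the lateral offset grows linearly with both pixel distance and depth.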

During the image acquisition phase, the distance between the target lettuce and the camera is controlled to be 1 m. By setting a depth threshold (0.8 m–1.2 m) and discarding the points outside it, depth-failed points, noise point clouds, and background lettuce can be effectively removed (Figure 3g). The point cloud within the depth threshold is further processed using the DBSCAN clustering method to remove the remaining noise, thereby obtaining a complete three-dimensional point cloud of a single lettuce plant, as shown in Figure 3h.
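The two filtering stages can be sketched in plain Python. This is a minimal, quadratic-time illustration of depth thresholding followed by DBSCAN, not the implementation used in the study; the `eps` and `min_pts` values are hypothetical, and keeping the largest cluster as the target plant is an assumption.

```python
import math


def depth_filter(points, z_min=0.8, z_max=1.2):
    """Keep only points whose depth (Z, in metres) lies inside the threshold."""
    return [p for p in points if z_min <= p[2] <= z_max]


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point: border point
                continue
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:  # j is a core point, so keep expanding
                queue.extend(reachable)
    return labels


def largest_cluster(points, labels):
    """Return the points of the most populated cluster (assumed to be the plant)."""
    counts = {}
    for label in labels:
        if label >= 0:
            counts[label] = counts.get(label, 0) + 1
    if not counts:
        return []
    best = max(counts, key=counts.get)
    return [p for p, label in zip(points, labels) if label == best]


if __name__ == "__main__":
    plant = [(i * 0.01, j * 0.01, 1.0) for i in range(3) for j in range(3)]
    cloud = plant + [(0.3, 0.3, 1.1), (0.0, 0.0, 1.5)]  # stray point, background
    kept = depth_filter(cloud)
    labels = dbscan(kept, eps=0.015, min_pts=4)
    print(len(largest_cluster(kept, labels)), "points kept for the target plant")
```

For point clouds of realistic size, a spatial index or an optimized library implementation would be preferable to this quadratic neighbor search.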

2.4. Phenotypic Parameter Extraction Method

2.4.1. Morphological Parameter Extraction

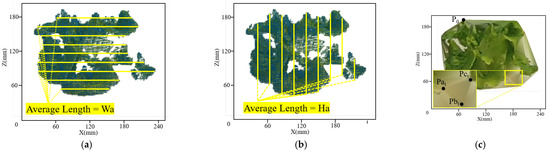

Morphological parameters were extracted from the 3D point cloud model of a single lettuce plant. The number of points in the clustered single-plant point cloud is denoted as Np. The point cloud is projected onto the X-Y plane to obtain a discrete set of projection points. Delaunay triangulation is used to divide the projection point set into multiple triangles, and the sum of the areas of all triangles gives the projected area (Ap) of the target lettuce plant. The difference between the highest and lowest vertical coordinates of the projection is the plant height (Hm), and the difference between the rightmost and leftmost horizontal coordinates is the crown width (Wm).

Using the equidistant segmentation method, the projection is divided into 10 equal intervals along the Z-axis, and the arithmetic mean of the X-axis spans corresponding to the intercept lines is calculated as the average crown width (Wa), as shown in Figure 4a. Similarly, the projection is divided into 10 equal intervals along the X-axis, and the arithmetic mean of the Z-axis spans corresponding to the intercept lines is calculated as the average plant height (Ha), as shown in Figure 4b.
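The height and width parameters can be computed from the projected points as sketched below. The example assumes that each projected point is a (horizontal, vertical) pair, and it approximates the intercept lines with equal-width slabs, skipping empty slabs; both choices are assumptions made for illustration.

```python
def axis_span(points, axis):
    """Difference between the largest and smallest coordinate along one axis."""
    values = [p[axis] for p in points]
    return max(values) - min(values)


def mean_slice_span(points, slice_axis, span_axis, n_slices=10):
    """Split the projection into equal intervals along slice_axis and average
    the span along span_axis over all non-empty intervals."""
    lo = min(p[slice_axis] for p in points)
    hi = max(p[slice_axis] for p in points)
    width = (hi - lo) / n_slices
    if width == 0:
        return axis_span(points, span_axis)  # degenerate case: a single slice
    bins = [[] for _ in range(n_slices)]
    for p in points:
        idx = min(int((p[slice_axis] - lo) / width), n_slices - 1)
        bins[idx].append(p[span_axis])
    spans = [max(b) - min(b) for b in bins if b]
    return sum(spans) / len(spans)


def morphological_parameters(points):
    """points: list of (horizontal, vertical) coordinates of the projection."""
    return {
        "Hm": axis_span(points, 1),           # plant height
        "Wm": axis_span(points, 0),           # crown width
        "Ha": mean_slice_span(points, 0, 1),  # average plant height
        "Wa": mean_slice_span(points, 1, 0),  # average crown width
        "Np": len(points),                    # number of points
    }


if __name__ == "__main__":
    # Synthetic rectangular "plant": 10 units wide and 20 units tall.
    grid = [(float(x), float(z)) for x in range(11) for z in range(21)]
    print(morphological_parameters(grid))
```

For a rectangle the averaged values equal the maxima; for a real canopy outline they are smaller, which is what lets Ha and Wa describe shape beyond the bounding box.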

Figure 4.

Methods for Extracting Morphological Parameters. (a) Schematic of average crown width extraction; (b) schematic of average plant height extraction; (c) the minimum convex polyhedron constructed by the 3D convex hull algorithm that encloses the point cloud of a single lettuce plant.

Using the 3D convex hull algorithm, the minimum convex polyhedron enclosing the point cloud of a single lettuce plant is constructed, as shown in Figure 4c. Each face of this polyhedron is a triangle formed by three vertices from the original point cloud. The volume of the convex polyhedron (Vh) can be expressed using the tetrahedral decomposition method, as shown in Equation (2):

$$
V_h = \sum_{t=1}^{m} \frac{1}{6} \left| \left( P_{t1} - P_0 \right) \cdot \left[ \left( P_{t2} - P_0 \right) \times \left( P_{t3} - P_0 \right) \right] \right|
\tag{2}
$$

where Vh is the volume of the convex polyhedron, m is the total number of faces of the convex hull polyhedron, Pt1, Pt2, and Pt3 are the coordinates of the three vertices of the tth face of the convex polyhedron, and P0 is the coordinate of the highest point of the convex polyhedron.
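The tetrahedral decomposition can be illustrated on a shape with a known volume. The sketch below assumes the triangular hull faces are already available (in practice they would come from a convex hull routine); the unit cube and its face list are hypothetical test data.

```python
def tetra_volume(p0, a, b, c):
    """Volume of the tetrahedron with apex p0 and base triangle (a, b, c)."""
    ax, ay, az = (a[i] - p0[i] for i in range(3))
    bx, by, bz = (b[i] - p0[i] for i in range(3))
    cx, cy, cz = (c[i] - p0[i] for i in range(3))
    # Scalar triple product (a - p0) . [(b - p0) x (c - p0)]
    det = (ax * (by * cz - bz * cy)
           - ay * (bx * cz - bz * cx)
           + az * (bx * cy - by * cx))
    return abs(det) / 6.0


def hull_volume(vertices, faces, p0):
    """Sum the tetrahedra spanned by p0 and every triangular hull face.

    vertices : list of (x, y, z) tuples
    faces    : list of (i, j, k) vertex-index triples, one per triangle
    p0       : reference point on the hull, here its highest vertex
    """
    return sum(tetra_volume(p0, vertices[i], vertices[j], vertices[k])
               for i, j, k in faces)


if __name__ == "__main__":
    # Unit cube: each of the 6 square faces is split into 2 triangles.
    vertices = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
                (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
    quads = [(0, 1, 2, 3), (4, 5, 6, 7), (0, 1, 5, 4),
             (3, 2, 6, 7), (0, 3, 7, 4), (1, 2, 6, 5)]
    faces = [tri for a, b, c, d in quads for tri in ((a, b, c), (a, c, d))]
    p0 = max(vertices, key=lambda p: p[2])  # a highest point of the hull
    print(hull_volume(vertices, faces, p0))
```

Faces that contain the reference point contribute zero volume, so only the faces opposite to it add to the sum.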

The abbreviations for the morphological parameters are shown in Table 2:

Table 2.

Abbreviations corresponding to morphological parameters.

2.4.2. Vegetation Index Extraction

The amount of radiation available for crop photosynthesis exhibits a positive linear relationship with biomass. Vegetation indices, by reflecting the spectral characteristics of crops, directly relate to crop growth status and are therefore widely used in estimating crop fresh weight [26]. This study selected 10 vegetation indices that may be correlated with the fresh weight of lettuce. The specific calculation formulas are shown in Table 3:

Table 3.

List of vegetation index calculation formulas.
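As an illustration of how RGB-based vegetation indices are computed, the sketch below evaluates the five indices later retained for modelling (ExG, ExGR, MExG, VDVI, NGRDI). It uses definitions commonly found in the literature, which are assumed here and may differ in detail from the formulas in Table 3; the input is taken to be the mean channel values over the lettuce mask, which is also an assumption.

```python
def vegetation_indices(R, G, B):
    """Compute RGB vegetation indices from channel values (assumes R + G + B > 0).

    R, G, B : raw channel values, for example means over the lettuce mask
    """
    total = R + G + B
    r, g, b = R / total, G / total, B / total  # normalized chromatic coordinates
    exg = 2 * g - r - b                        # excess green
    exr = 1.4 * r - g                          # excess red
    return {
        "ExG": exg,
        "ExGR": exg - exr,                                # excess green minus excess red
        "MExG": 1.262 * G - 0.884 * R - 0.311 * B,        # modified excess green
        "VDVI": (2 * G - R - B) / (2 * G + R + B),        # visible-band difference index
        "NGRDI": (G - R) / (G + R),                       # normalized green-red difference
    }


if __name__ == "__main__":
    for name, value in vegetation_indices(50, 150, 50).items():
        print(f"{name}: {value:.3f}")
```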

2.5. Fresh Weight Prediction Model

Considering the small size of the lettuce sample dataset and the high correlation between input variables and fresh weight, this study selected four algorithms: Multiple Linear Regression (MLR), Random Forest Regression (RFR), Backpropagation Neural Network (BPNN), and Dimensionality-Enhanced BPNN (DE-BP).

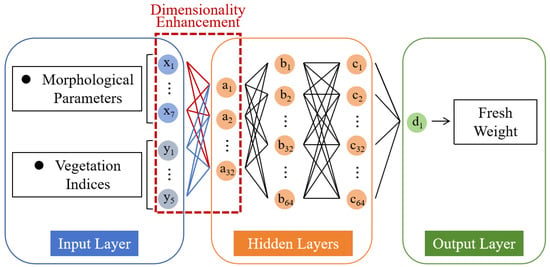

Among these, MLR has a simple structure, strong interpretability, and excellent ability to fit linear relationships, allowing for an intuitive assessment of the contribution of each variable to fresh weight estimation. RFR, through decision tree ensemble and random sampling strategies, exhibits strong resistance to overfitting. BPNN learns complex relationships between inputs and outputs through gradient descent by propagating error signals backward. DE-BP incorporates a feature dimensionality enhancement module consisting of two fully connected layers at the front end of the original BPNN, enhancing the richness of feature interaction information by dimensionality enhancement of lettuce phenotypic parameters, followed by feature dimensionality reduction and regression via the BP neural network, as shown in Figure 5.

Figure 5.

DE-BP Network Architecture Diagram. Building upon the BPNN structure, a feature dimensionality enhancement module has been incorporated. This module employs a branch architecture for independent dimensionality expansion, where morphological parameters undergo separate normalization before being mapped to vegetation indices. This approach enhances the complex mapping relationships of morphological parameters while preserving the original correlations of vegetation indices.

The models were trained using the Adam optimizer with an initial learning rate of 0.01 and a batch size of 32. The number of epochs was determined by monitoring the mean absolute error (MAE) over iterations. Ultimately, the BPNN was trained for 500 epochs and the DE-BP for 600 epochs. This study employed five-fold cross-validation for model training and evaluation, using the average coefficient of determination (R2), average root mean square error (RMSE), and average mean absolute percentage error (MAPE) of the training set as evaluation metrics to compare the training performance of the four models.
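The five-fold partitioning can be sketched as follows. This is an illustrative splitter, assuming a shuffled split with a fixed random seed; the paper does not state how the folds were drawn, and `k_fold_indices` is a hypothetical helper name.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)       # reproducible shuffle
    folds = [indices[i::k] for i in range(k)]  # k nearly equal, disjoint parts
    for i in range(k):
        validation = folds[i]
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, validation


if __name__ == "__main__":
    # 132 samples, as in the lettuce dataset described above.
    for fold, (train, validation) in enumerate(k_fold_indices(132), start=1):
        print(f"fold {fold}: {len(train)} training, {len(validation)} validation")
```

With 132 samples, two folds hold 27 validation samples and three hold 26, so every sample is used for validation exactly once.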

3. Results

3.1. Accuracy of Extracting Plant Height and Crown Width

Using the 132 side-view RGB images and depth maps of lettuce collected by the RGB-D camera, the single-plant lettuce 3D point cloud reconstruction and morphological parameter extraction algorithm proposed in this study was used to obtain the plant height and crown width. R2 was used to evaluate the degree of fit between the estimated values and the actual values; RMSE, MAPE, and mean absolute error (MAE) were used to evaluate the deviation between the estimated values and the actual values. The evaluation results are as follows:

As shown in Table 4, the three-dimensional point cloud reconstruction algorithm for single lettuce plants proposed in this study estimates the plant height and crown width of lettuce with high accuracy: the mean absolute error (MAE) is less than 5 mm for both parameters, which indicates that the extracted values are reliable.

Table 4.

Prediction accuracy of plant height and crown width.
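For reference, the four evaluation metrics can be computed as in the sketch below. The sample values in the example are hypothetical and are not measurements from this study.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return R2, RMSE, MAE and MAPE (in percent) for paired observations.

    Assumes y_true has non-zero variance and contains no zero values.
    """
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                   # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)    # total sum of squares
    return {
        "R2": 1 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(e) for e in errors) / n,
        "MAPE": 100 * sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n,
    }


if __name__ == "__main__":
    measured = [100.0, 200.0, 300.0]   # hypothetical measured values
    estimated = [110.0, 190.0, 300.0]  # hypothetical estimated values
    for name, value in regression_metrics(measured, estimated).items():
        print(f"{name}: {value:.3f}")
```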

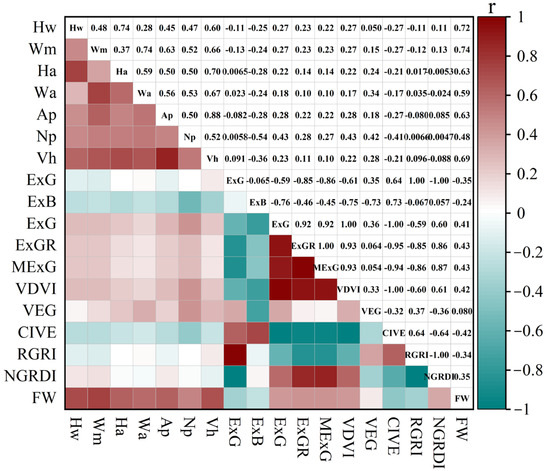

3.2. Correlation Analysis Between Phenotypic Parameters and Fresh Weight

To screen parameters that are significantly correlated with fresh weight as inputs for the subsequent fresh weight prediction models, this study normalized the seven morphological parameters and 10 vegetation indices extracted and performed Pearson correlation analysis with lettuce fresh weight. The analysis results are shown in Figure 6.

As shown in Figure 6, seven morphological parameters (Hm, Wm, Ha, Wa, Ap, Np, Vh) and five vegetation indices (ExG, ExGR, MExG, VDVI, NGRDI) are positively and significantly correlated with fresh weight. All morphological parameters exhibit high correlation coefficients (r > 0.48), with Hm and Wm performing best at 0.72 and 0.74, respectively, indicating that the seven morphological parameters extracted in this study are strongly correlated with fresh weight. Among the vegetation indices, ExG, ExGR, MExG, VDVI, and NGRDI had correlation coefficients ranging from 0.35 to 0.43, showing a moderate correlation; the remaining vegetation indices were negatively or weakly correlated with fresh weight.

Figure 6.

Pearson correlation coefficients between fresh weight and phenotypic parameters. The color scale from green to red represents Pearson correlation coefficients from −1 to 1; the darker the color, the stronger the correlation between the two parameters. Each cell value is the Pearson correlation coefficient between the two parameters, where values in (0, 1) indicate a positive correlation and values in (−1, 0) indicate a negative correlation.

Based on the above correlation analysis results, seven morphological parameters (Hm, Wm, Ha, Wa, Ap, Np, Vh) and five vegetation indices (ExG, ExGR, MExG, VDVI, NGRDI) were selected as inputs for the subsequent fresh weight prediction model.
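The screening step can be sketched as a Pearson correlation followed by a cut-off. The example below is illustrative only: the feature values are hypothetical, the 0.3 cut-off is an assumption, and the significance testing used in the study is not reproduced.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0 or norm_y == 0:
        return 0.0  # a constant feature carries no linear information
    return cov / (norm_x * norm_y)


def select_features(features, target, min_r=0.3):
    """Keep the features whose correlation with the target exceeds min_r.

    features : dict mapping a parameter name to its list of values
    min_r    : hypothetical cut-off for a positive correlation
    """
    return [name for name, values in features.items()
            if pearson(values, target) > min_r]


if __name__ == "__main__":
    fresh_weight = [10.0, 20.0, 30.0, 40.0, 50.0]     # hypothetical targets
    features = {
        "positively_related": [1.0, 2.0, 3.0, 4.0, 5.0],
        "negatively_related": [5.0, 4.0, 3.0, 2.0, 1.0],
        "unrelated": [1.0, 3.0, 2.0, 3.0, 1.0],
    }
    for name, values in features.items():
        print(name, round(pearson(values, fresh_weight), 3))
    print("selected:", select_features(features, fresh_weight))
```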

3.3. Training Results of the Fresh Weight Prediction Model

Four machine learning models, MLR, RFR, BPNN, and DE-BP, were used to predict the fresh weight based on 12 phenotypic parameters extracted from 132 lettuce samples. The average R2, RMSE, and MAPE of the training set in five-fold cross-validation were used as evaluation indicators. The training results are shown in Table 5:

Table 5.

Training results of the fresh weight prediction model.

In the MLR model, when the morphological parameters (MP) are used as the sole input, the model exhibits a high R2 value, indicating a clear linear relationship between MP and fresh weight. However, the vegetation indices (VI) show poor linear correlation with fresh weight. Therefore, the selection of subsequent models should focus on their ability to learn nonlinear relationships.

Compared to the MLR model, the RFR model can capture nonlinear relationships between data. Therefore, in the analysis of the training results of the RFR model, it can be observed that whether MP and VI are used as inputs individually or together, there is a certain improvement compared to the MLR model, indicating that there is a nonlinear correlation between MP, VI, and fresh weight. The model captures some nonlinear relationships on top of the linear relationship, but the improvement in training performance is limited.

The error backpropagation characteristic of the BPNN model further enhances the model's ability to capture cross-relationships among multidimensional data. The training results show that the model's performance improved significantly. When VI is used as a single input, the model's R2 no longer approaches 0 but increases to 0.22, indicating that VI has some potential for fresh weight prediction. Additionally, the training results of these three models show that, however poorly VI performs as the sole input, model performance improves when MP and VI are used together as inputs. This indicates that MP and VI are intrinsically associated in the fresh weight prediction task, but a deeper and more complex feature mapping network is needed to explore this relationship.

Therefore, this study designed a feature dimension-increasing module consisting of two fully connected layers. By adjusting the number of input neurons, 5-dimensional, 7-dimensional, or 12-dimensional feature parameters can be mapped to 64 dimensions, thereby enhancing the ability to express the cross-relationships between multi-dimensional data. The BPNN model (DE-BP) with the added feature dimension-increasing module showed a certain improvement compared to other models when MP was used as the sole input. Under the MP and VI co-input conditions, the DE-BP training results achieved an R2 of 0.84, an RMSE of 8 g, and a MAPE of 7.52% on the test set, significantly outperforming the training effects of other models.
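The idea of a dimension-increasing front end can be sketched as an untrained forward pass. This is a structural illustration only, not the DE-BP network itself: the two-layer expansion to 64 dimensions follows the description above, while the intermediate width of 32, the BPNN hidden width of 16, the ReLU activation, and the random initialization are all assumptions. The branch structure shown in Figure 5 and the training loop are omitted.

```python
import random


def make_layer(n_in, n_out, rng):
    """Fully connected layer as (weights, biases) with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(x, layer, relu=True):
    """Apply one fully connected layer, optionally followed by ReLU."""
    weights, biases = layer
    out = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out


def build_de_bp(n_inputs, rng, expand_hidden=32, expand_out=64, bp_hidden=16):
    """Expansion module (two layers) followed by a small regression network.

    expand_hidden and bp_hidden are hypothetical sizes chosen for illustration.
    """
    return {
        "expand": [make_layer(n_inputs, expand_hidden, rng),
                   make_layer(expand_hidden, expand_out, rng)],
        "bp": [make_layer(expand_out, bp_hidden, rng),
               make_layer(bp_hidden, 1, rng)],
    }


def forward(model, x):
    """Return (predicted value, expanded feature vector) for one sample."""
    h = x
    for layer in model["expand"]:
        h = dense(h, layer)                 # raise the feature dimension
    expanded = h
    h = dense(expanded, model["bp"][0])     # reduce the dimension again
    y = dense(h, model["bp"][1], relu=False)[0]
    return y, expanded


if __name__ == "__main__":
    rng = random.Random(0)
    for n_inputs in (5, 7, 12):  # VI only, MP only, MP and VI together
        model = build_de_bp(n_inputs, rng)
        y, expanded = forward(model, [0.5] * n_inputs)
        print(n_inputs, "inputs ->", len(expanded), "expanded features")
```

Only the number of input neurons changes between the three input configurations; the expanded representation always has 64 dimensions.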

It is worth noting that the DE-BP model performs worse than the BPNN model without the feature dimension-increasing module when VI is the only input. This indicates that cross-mapping the five VI parameters does little to improve fresh weight prediction and may even introduce redundancy among the input features, reducing the model's fitting ability. This warrants further verification and exploration in subsequent studies with expanded sample sizes.

4. Discussion

This study proposes a facility-based crop information inspection robot equipped with SLAM technology, enabling autonomous navigation in facility environments. Based on this, a five-degree-of-freedom SCARA robotic arm was designed. Through forward and inverse kinematic control of the robotic arm combined with machine vision technology, the system can accurately identify lettuce and actively control the end effector to align with the lettuce. The end effector of the robotic arm is equipped with a multi-source information sensing device designed in this study, which can simultaneously acquire RGB images, depth images, and thermal infrared images of the crops. Based on this device, the inspection robot can achieve autonomous navigation in a facility environment and in situ collection of lettuce phenotypic information.

In terms of lettuce phenotypic information analysis, this study designed an automatic extraction algorithm for lettuce phenotypic information. Using the lightweight YOLOv8n-seg, excess green segmentation, and point cloud clustering, it extracts the point cloud model of a single lettuce plant and calculates seven morphological parameters and ten vegetation indices of the lettuce.

Because the complexity of the fresh weight prediction model directly affects the computational load on the robot processor and the inspection efficiency, complex deep learning models with high computational and time requirements are not suitable for deployment on mobile devices such as facility inspection robots. This study selected 17 phenotypic parameters extracted directly from lettuce to analyze their relationship with fresh weight. Notably, to further enhance the description of lettuce morphology, in addition to plant height and crown width, this study proposed dividing the lettuce into equal parts to obtain the average plant height and average crown width. Analysis of the Pearson correlation coefficients between the extracted phenotypic parameters and fresh weight showed that the coefficient for average plant height reached 0.63, while that for average crown width reached 0.59. This demonstrates that further numerical characterization of lettuce morphology is beneficial for fresh weight prediction. From these 17 parameters, this study identified 12 for fresh weight prediction. Three lightweight machine learning models (MLR, RFR, and BPNN) were selected for lettuce fresh weight prediction, and training was conducted using five-fold cross-validation. Analysis of the average R2, RMSE, and MAPE of the training set demonstrated that the cross-relationship between morphological parameters and vegetation indices significantly influences fresh weight prediction. A BPNN model with an added feature dimension-increasing module (DE-BP) was therefore proposed. The training results showed that the model achieved R2, RMSE, and MAPE values of 0.84, 8 g, and 7.52%, respectively, accurately reflecting the growth status of lettuce. Compared with recent literature predicting fresh weight using morphological parameters and vegetation indices, this study demonstrates improved prediction accuracy [33].

The dataset established in this study has certain limitations. To address the insufficient sample size, we selected models such as BPNN that perform well with small training datasets and employed the Adam optimizer and five-fold cross-validation to mitigate overfitting. Although environmental factors in lettuce-growing greenhouses are generally similar, incorporating samples from different greenhouse conditions could further enhance the model’s generalization capability. Expanding the dataset to include more lettuce varieties would also be an effective means of improving its quality.
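The evaluation procedure discussed above (Pearson screening, five-fold cross-validation, and averaged R2, RMSE, and MAPE) can be sketched as follows. This is a minimal sketch under stated assumptions: a single-feature least-squares line stands in for the MLR, RFR, and BPNN models, the data are synthetic rather than measured, and all function names are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return slope, my - slope * mx


def metrics(y_true, y_pred):
    """Return (R2, RMSE, MAPE in percent); y_true must not contain zeros."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    mape = 100.0 / n * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred))
    return 1.0 - ss_res / ss_tot, math.sqrt(ss_res / n), mape


def cross_validate(x, y, k=5, seed=0):
    """Average held-out R2, RMSE, and MAPE over k shuffled folds."""
    idx = list(range(len(x)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    scores = []
    for held_out in folds:
        held = set(held_out)
        train = [i for i in idx if i not in held]
        slope, intercept = fit_line([x[i] for i in train], [y[i] for i in train])
        pred = [slope * x[i] + intercept for i in held_out]
        scores.append(metrics([y[i] for i in held_out], pred))
    return tuple(sum(s[j] for s in scores) / k for j in range(3))


# Synthetic stand-in data: crown width (mm) versus fresh weight (g).
rng = random.Random(1)
crown_width = [rng.uniform(100.0, 300.0) for _ in range(50)]
fresh_weight = [0.5 * w + rng.gauss(0.0, 8.0) for w in crown_width]

print("Pearson r:", round(pearson(crown_width, fresh_weight), 3))
r2, rmse, mape = cross_validate(crown_width, fresh_weight)
print("mean R2, RMSE (g), MAPE (%):", round(r2, 3), round(rmse, 2), round(mape, 2))
```

With a real dataset, the correlation step would be run once per phenotypic parameter to rank candidates, and the line fit would be replaced by each model under comparison while the fold splits stay fixed.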

This study explores the relationship between easily extractable phenotypic parameters of lettuce and fresh weight, and constructs a lettuce fresh weight prediction model using lightweight machine learning algorithms. This addresses the insufficient computing power and excessive computation time encountered when deploying existing deep learning prediction models such as CNN and PointNet on mobile devices. Through the deployment of lightweight models and the optimization of model structure, this study provides a method for acquiring crop phenotypic information that reduces processor hardware costs and improves inspection efficiency for agricultural inspection robots.

5. Conclusions

Facility agriculture currently lacks a platform capable of non-destructive, in situ, high-throughput crop monitoring that is also automated and simple to deploy. This study designed an inspection robot capable of autonomous navigation within facilities to complete inspection tasks. Furthermore, deep learning networks that learn complex nonlinear relationships directly from images tend to produce overly large models for lettuce fresh weight prediction. To address this, this study explores predicting lettuce fresh weight directly from morphological parameters and vegetation indices. It achieves fresh weight prediction with a structurally simple machine learning model, enabling lightweight model development for mobile devices such as inspection robots.

The inspection robot navigates autonomously to pre-set navigation points, where the robotic arm aligns the information collection device on its end-effector with the lettuce and maintains an optimal imaging distance of 1 m, thereby completing the collection of RGB images, depth images, and near-infrared images. Seven morphological parameters and ten vegetation indices were extracted from the collected images, and 12 parameters with high correlation to fresh weight were selected through Pearson correlation analysis. Then, three machine learning methods (MLR, RFR, and BPNN) were used to predict fresh weight based on the selected parameters. Analysis of the training results for different models and feature parameters showed that more complex feature mapping was needed among the 12 lettuce phenotypic parameters to better express the intrinsic relationships in the data. Subsequently, a BPNN model with a two-layer feature dimension-increasing module (DE-BP) was designed to improve model accuracy. The results showed that the R2 values for predicting lettuce plant height, maximum crown width, and fresh weight were 0.85, 0.93, and 0.84, respectively, with RMSE values of 7 mm, 6 mm, and 8 g, respectively. These results accurately reflect the growth status of lettuce, providing a scientific basis for growth analysis and facility environmental control.

Author Contributions

Conceptualization, X.H. and X.Z.; methodology, X.H.; software, X.H. and Y.Z.; validation, X.Z. and L.H.; formal analysis, X.H. and T.L.; investigation, X.H.; resources, X.H. and L.H.; data curation, X.H. and T.L.; writing—original draft preparation, X.H.; writing—review and editing, X.H. and X.Z.; funding acquisition, X.Z. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (Grant No. 2022YFD2002302); the National Key Research and Development Program for Young Scientists (Grant No. 2022YFD2000200); the Agricultural Equipment Department of Jiangsu University (Grant No. NZXB20210106); and the Jiangsu Province Industry Forward-looking Program Project (Grant No. BE2023017).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

The authors express their gratitude to the School of Agricultural Engineering, Jiangsu University, for providing the essential instruments without which this work would not have been possible. The authors also thank the reviewers for their important feedback.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yuan, H.; Song, M.; Liu, Y.; Xie, Q.; Cao, W.; Zhu, Y.; Ni, J. Field Phenotyping Monitoring Systems for High-Throughput: A Survey of Enabling Technologies, Equipment, and Research Challenges. Agronomy 2023, 13, 2832.

- Rahaman, M.M.; Chen, D.; Gillani, Z.; Klukas, C.; Chen, M. Advanced Phenotyping and Phenotype Data Analysis for the Study of Plant Growth and Development. Front. Plant Sci. 2015, 6, 619.

- Taha, M.F.; Mao, H.; Mousa, S.; Zhou, L.; Wang, Y.; Elmasry, G.; Al-Rejaie, S.; Elwakeel, A.E.; Wei, Y.; Qiu, Z. Deep Learning-Enabled Dynamic Model for Nutrient Status Detection of Aquaponically Grown Plants. Agronomy 2024, 14, 2290.

- Zhao, Y.; Zhang, X.; Sun, J.; Yu, T.; Cai, Z.; Zhang, Z.; Mao, H. Low-Cost Lettuce Height Measurement Based on Depth Vision and Lightweight Instance Segmentation Model. Agriculture 2024, 14, 1596.

- Liu, T.; Zhu, S.; Yang, T.; Zhang, W.; Xu, Y.; Zhou, K.; Wu, W.; Zhao, Y.; Yao, Z.; Yang, G.; et al. Maize Height Estimation Using Combined Unmanned Aerial Vehicle Oblique Photography and LIDAR Canopy Dynamic Characteristics. Comput. Electron. Agric. 2024, 218, 108685.

- Ge, X.; Wu, S.; Wen, W.; Shen, F.; Xiao, P.; Lu, X.; Liu, H.; Zhang, M.; Guo, X. LettuceP3D: A Tool for Analysing 3D Phenotypes of Individual Lettuce Plants. Biosyst. Eng. 2025, 251, 73–88.

- Vadez, V.; Kholová, J.; Hummel, G.; Zhokhavets, U.; Gupta, S.K.; Hash, C.T. LeasyScan: A Novel Concept Combining 3D Imaging and Lysimetry for High-Throughput Phenotyping of Traits Controlling Plant Water Budget. J. Exp. Bot. 2015, 66, 5581–5593.

- Hassan, M.A.; Chang, C.Y.Y. PhenoGazer: A High-Throughput Phenotyping System to Track Plant Stress Responses Using Hyperspectral Reflectance, Nighttime Chlorophyll Fluorescence and RGB Imaging in Controlled Environments. Plant Phenomics 2025, 7, 100047.

- Yu, H.; Dong, M.; Zhao, R.; Zhang, L.; Sui, Y. Research on Precise Phenotype Identification and Growth Prediction of Lettuce Based on Deep Learning. Environ. Res. 2024, 252, 118845.

- Jin, Y.; Liu, J.; Xu, Z.; Yuan, S.; Li, P.; Wang, J. Development Status and Trend of Agricultural Robot Technology. Int. J. Agric. Biol. Eng. 2021, 14, 1–19.

- Su, M.; Zhou, D.; Yun, Y.; Ding, B.; Xia, P.; Yao, X.; Ni, J.; Zhu, Y.; Cao, W. Design and Implementation of a High-Throughput Field Phenotyping Robot for Acquiring Multisensor Data in Wheat. Plant Phenomics 2025, 7, 100014.

- Hosoda, Y.; Tada, T.; Goto, H. Lettuce Fresh Weight Prediction in a Plant Factory Using Plant Growth Models. IEEE Access 2024, 12, 97226–97234.

- Ma, H.; Wen, W.; Gou, W.; Lu, X.; Fan, J.; Zhang, M.; Liang, Y.; Gu, S.; Guo, X. 3D Time-Series Phenotyping of Lettuce in Greenhouses. Biosyst. Eng. 2025, 250, 250–269.

- Wang, J.; Zhang, Y.; Gu, R. Research Status and Prospects on Plant Canopy Structure Measurement Using Visual Sensors Based on Three-Dimensional Reconstruction. Agriculture 2020, 10, 462.

- Bloch, V.; Shapiguzov, A.; Kotilainen, T.; Pastell, M. A Method for Phenotyping Lettuce Volume and Structure from 3D Images. Plant Methods 2025, 21, 27.

- Wang, Z.; Li, T.; Du, R.; Yang, N.; Ping, J. A High-Efficiency Lettuce Quality Detection System Based on FPGA. Comput. Electron. Agric. 2025, 231, 109978.

- Li, J.; Wang, Y.; Zheng, L.; Zhang, M.; Wang, M. Towards End-to-End Deep RNN Based Networks to Precisely Regress of the Lettuce Plant Height by Single Perspective Sparse 3D Point Cloud. Expert Syst. Appl. 2023, 229, 120497.

- Gu, W.; Wen, W.; Wu, S.; Zheng, C.; Lu, X.; Chang, W.; Xiao, P.; Guo, X. 3D Reconstruction of Wheat Plants by Integrating Point Cloud Data and Virtual Design Optimization. Agriculture 2024, 14, 391.

- Tan, J.; Hou, J.; Xu, W.; Zheng, H.; Gu, S.; Zhou, Y.; Qi, L.; Ma, R. PosNet: Estimating Lettuce Fresh Weight in Plant Factory Based on Oblique Image. Comput. Electron. Agric. 2023, 213, 108263.

- Yi, Y.A.; Shutao, M.A.; Wending, Z.H.; Houcheng, L.I.; Song, G.U. Segmenting Collective Lettuce to Predict Fresh Weight Using Three-Dimensional Point Cloud. Trans. Chin. Soc. Agric. Eng. 2025, 41, 192–199.

- Wang, Y.; Wang, J.; Zhang, W.; Zhan, Y.; Guo, S.; Zheng, Q.; Wang, X. A Survey on Deploying Mobile Deep Learning Applications: A Systemic and Technical Perspective. Digit. Commun. Netw. 2022, 8, 1–17.

- Chen, Y.; Zheng, B.; Zhang, Z.; Wang, Q.; Shen, C.; Zhang, Q. Deep Learning on Mobile and Embedded Devices: State-of-the-Art, Challenges, and Future Directions. ACM Comput. Surv. 2021, 53, 84.

- Li, Q.; Gao, H.; Zhang, X.; Ni, J.; Mao, H. Describing Lettuce Growth Using Morphological Features Combined with Nonlinear Models. Agronomy 2022, 12, 860.

- Niu, Y.; Han, W.; Zhang, H.; Zhang, L.; Chen, H. Estimating Maize Plant Height Using a Crop Surface Model Constructed from UAV RGB Images. Biosyst. Eng. 2024, 241, 56–67.

- Zhang, L.; Song, X.; Niu, Y.; Zhang, H.; Wang, A.; Zhu, Y.; Zhu, X.; Chen, L.; Zhu, Q. Estimating Winter Wheat Plant Nitrogen Content by Combining Spectral and Texture Features Based on a Low-Cost UAV RGB System throughout the Growing Season. Agriculture 2024, 14, 456.

- Barboza, T.O.C.; Ardigueri, M.; Souza, G.F.C.; Ferraz, M.A.J.; Gaudencio, J.R.F.; Santos, A.F.d. Performance of Vegetation Indices to Estimate Green Biomass Accumulation in Common Bean. AgriEngineering 2023, 5, 840–854.

- Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.J.; Burgos-Artizzu, X.P.; Ribeiro, A. Automatic Segmentation of Relevant Textures in Agricultural Images. Comput. Electron. Agric. 2011, 75, 75–83.

- Burgos-Artizzu, X.P.; Ribeiro, A.; Guijarro, M.; Pajares, G. Real-Time Image Processing for Crop/Weed Discrimination in Maize Fields. Comput. Electron. Agric. 2011, 75, 337–346.

- Meyer, G.E.; Neto, J.C. Verification of Color Vegetation Indices for Automated Crop Imaging Applications. Comput. Electron. Agric. 2008, 63, 282–293.

- Hague, T.; Tillett, N.D.; Wheeler, H. Automated Crop and Weed Monitoring in Widely Spaced Cereals. Precis. Agric. 2006, 7, 21–32.

- Gamon, J.A.; Surfus, J.S. Assessing Leaf Pigment Content and Activity with a Reflectometer. New Phytol. 1999, 143, 105–117.

- Iyoob, A.L.; Norbert, S.A.; Ameer, M.L.F.; Nasar-u-Minallah, M.; Zahir, I.L.M.; Nuskiya, M.H.F. Using UAV RGB Image for Object-based Feature Detection. Procedia Comput. Sci. 2025, 260, 1052–1059.

- Lee, D.-H.; Park, J.-H. Development of a UAS-Based Multi-Sensor Deep Learning Model for Predicting Napa Cabbage Fresh Weight and Determining Optimal Harvest Time. Remote Sens. 2024, 16, 3455.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).