Prediction of Rice Chlorophyll Index (CHI) Using Nighttime Multi-Source Spectral Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design

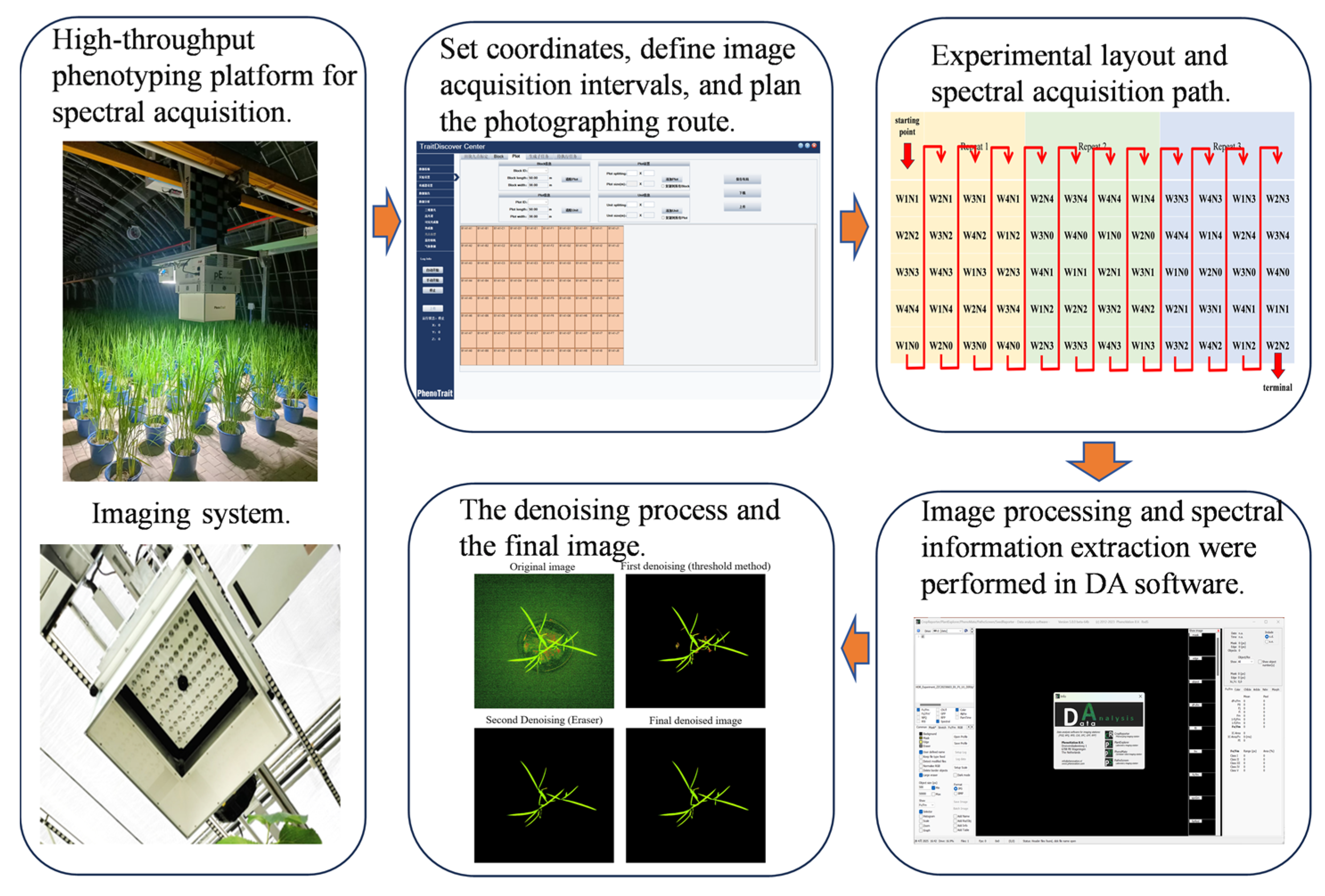

2.2. Spectral Data Acquisition and Preprocessing

2.3. Chlorophyll Index Measurement

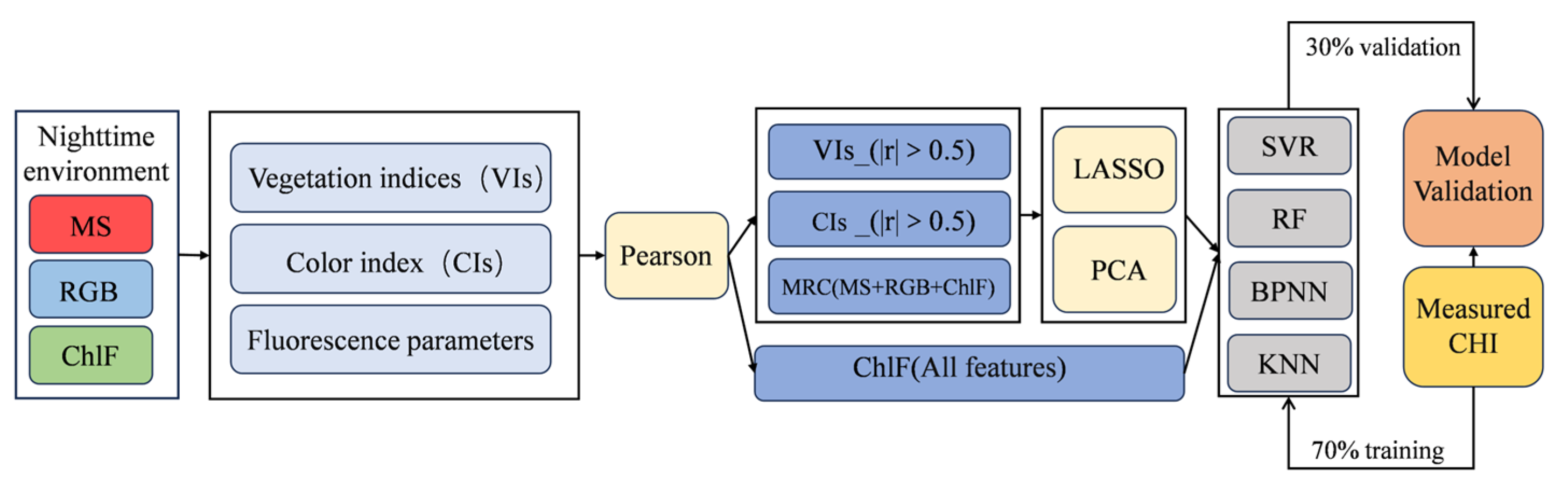

2.4. Spectral Index Selection

2.5. Modeling and Accuracy Evaluation

2.5.1. Machine Learning Models

2.5.2. Model Performance Evaluation

2.6. Feature Selection Methods

3. Results

3.1. Descriptive Statistics

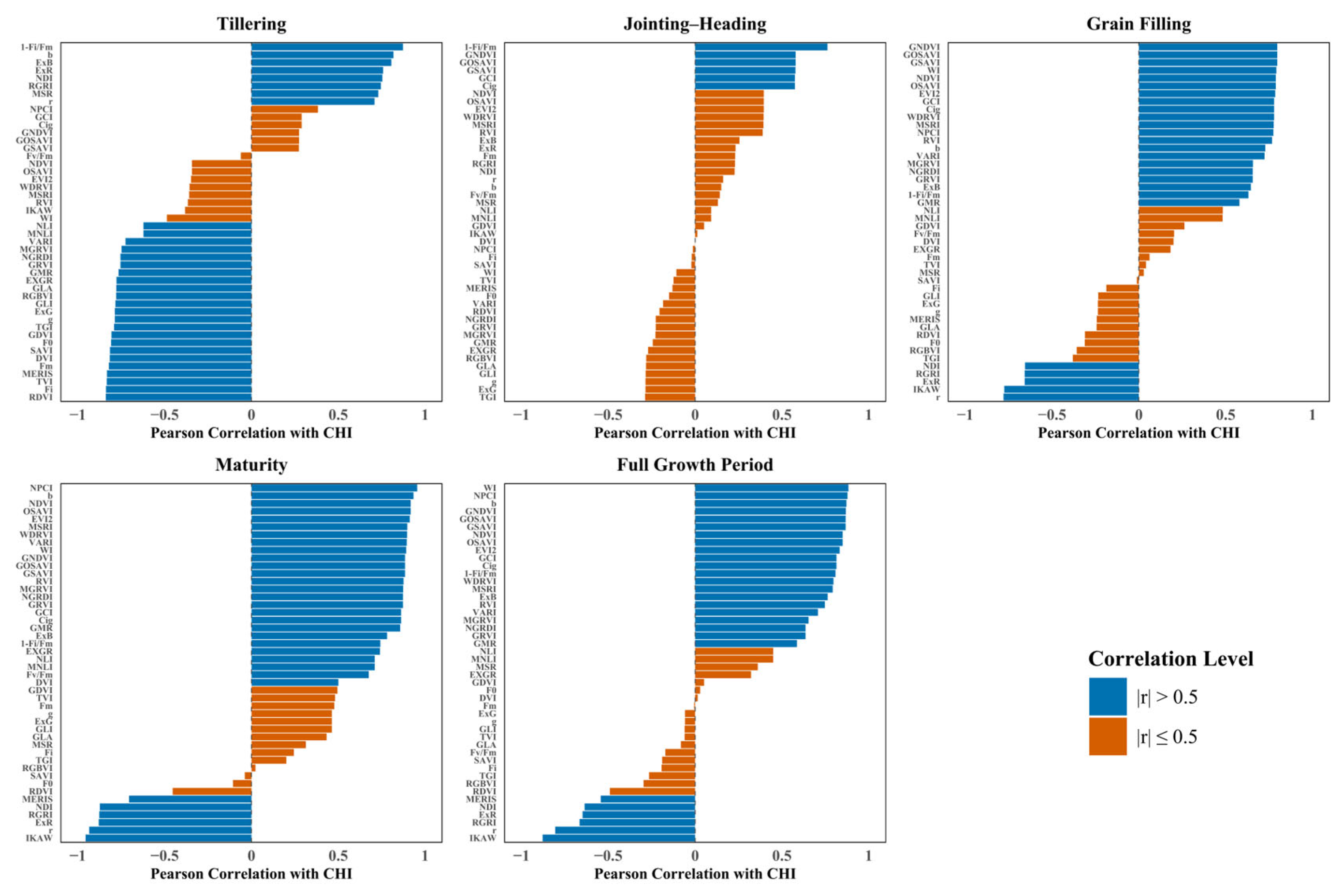

3.2. Correlation Analysis Between Spectral Features and CHI

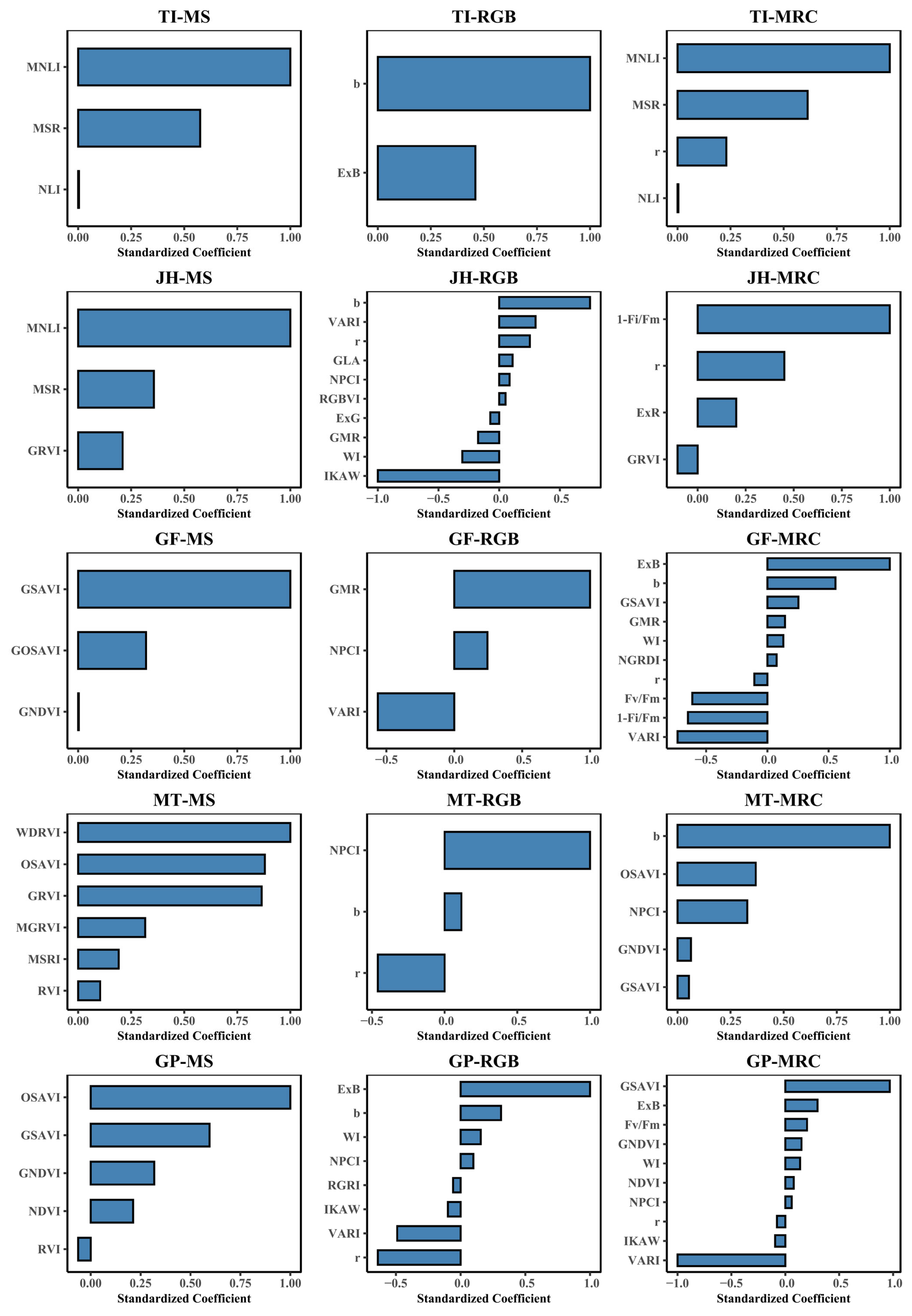

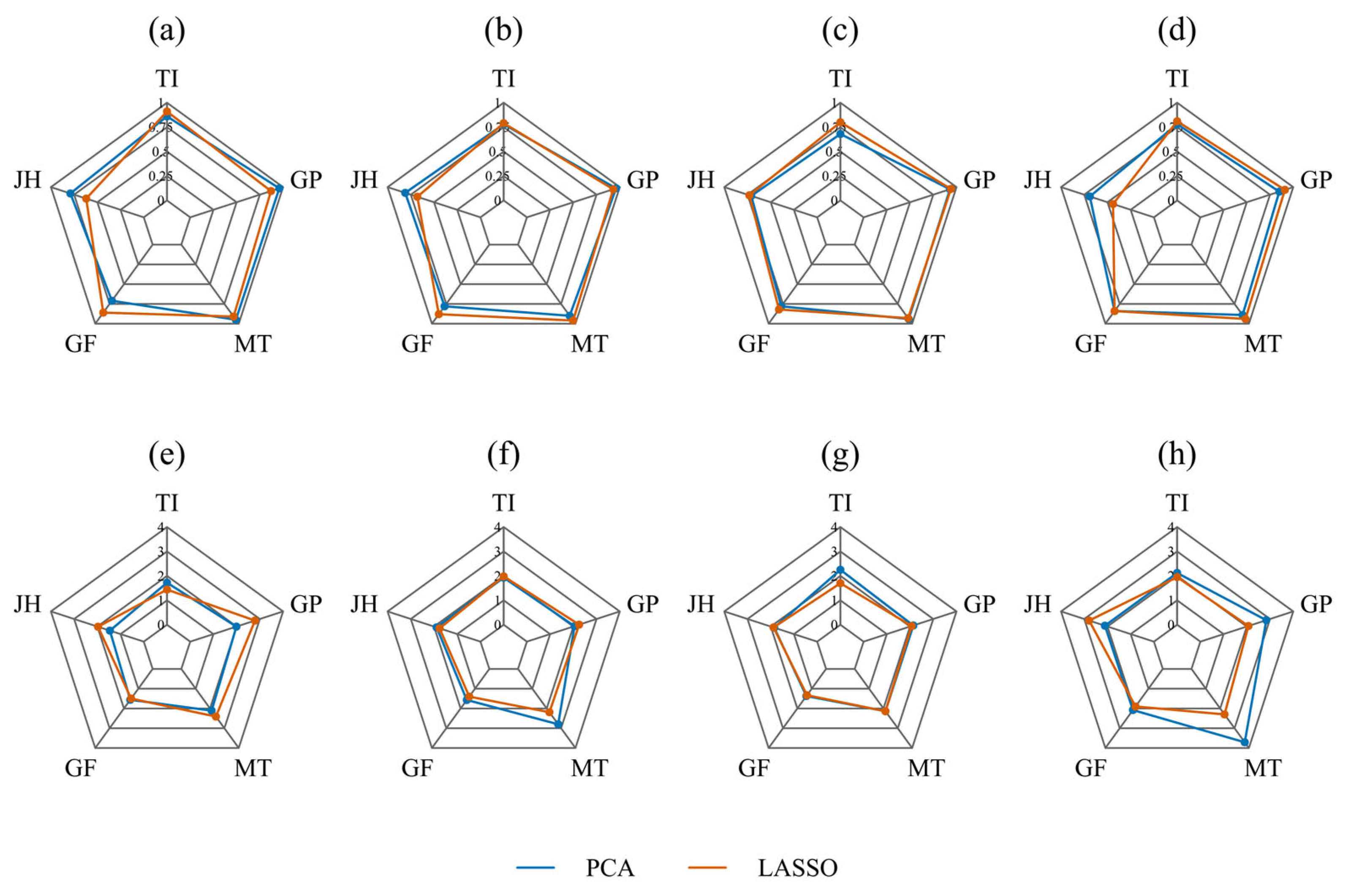

3.3. Feature Selection

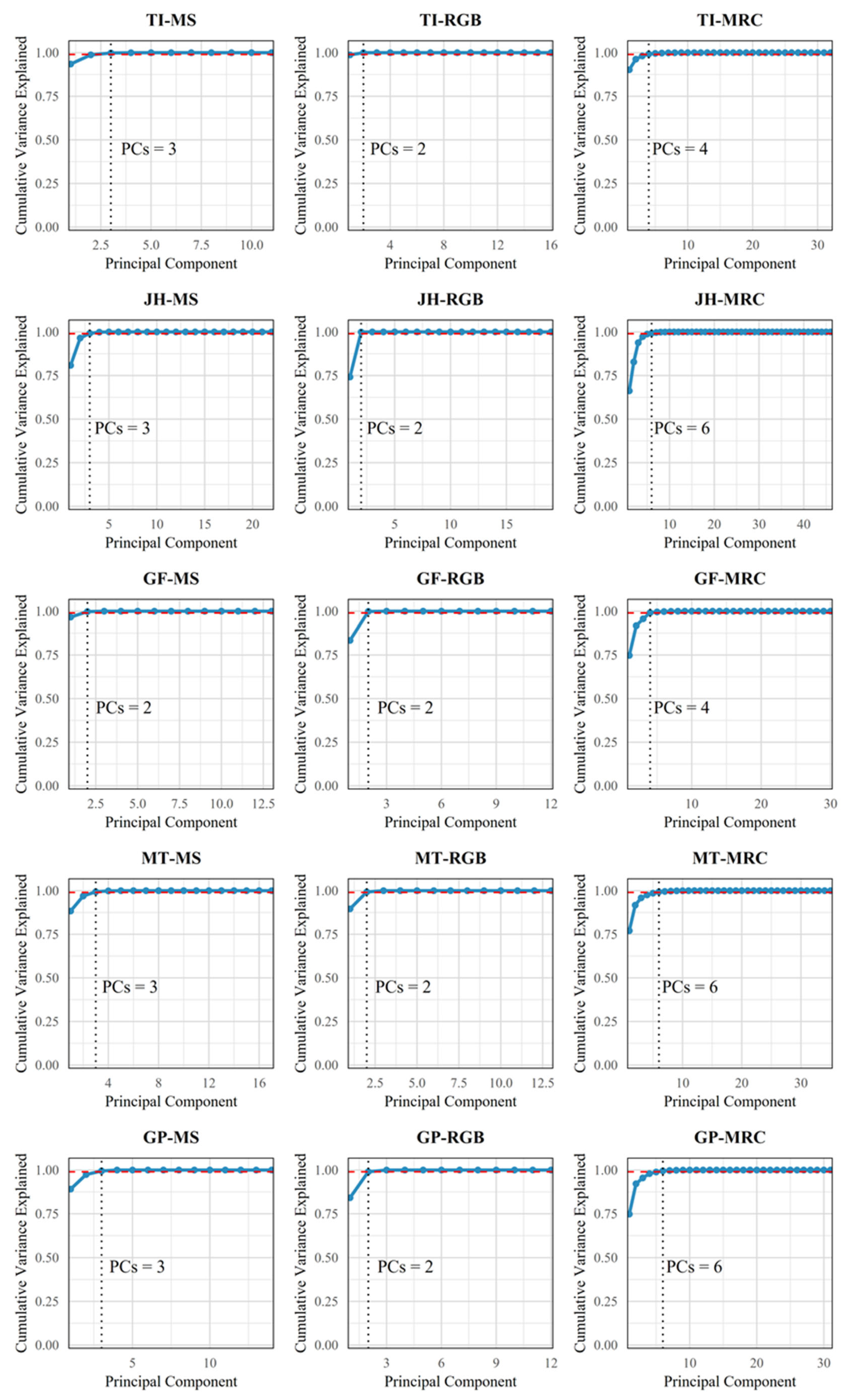

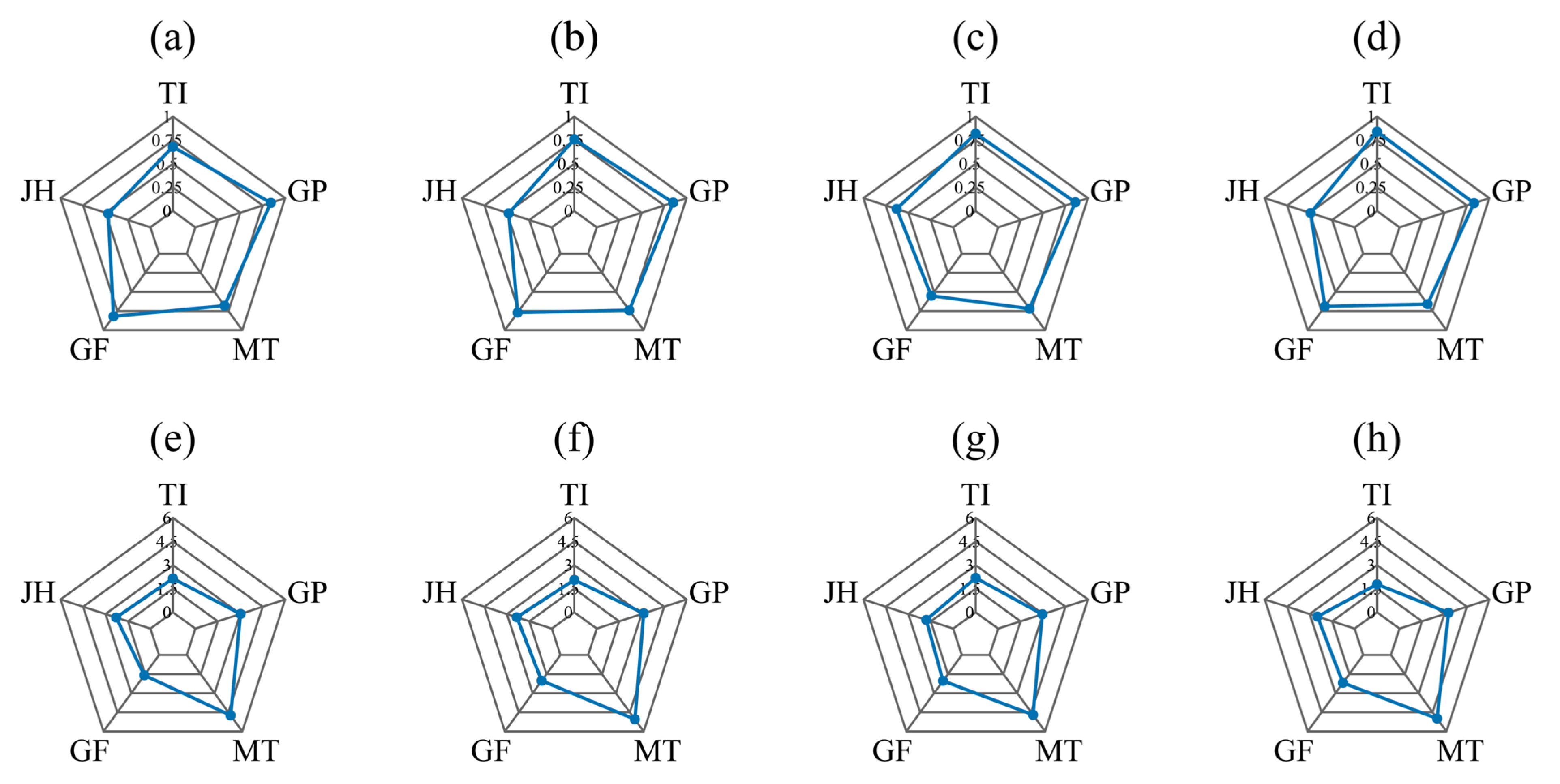

3.3.1. PCA Feature Selection Results

3.3.2. LASSO Feature Selection Results

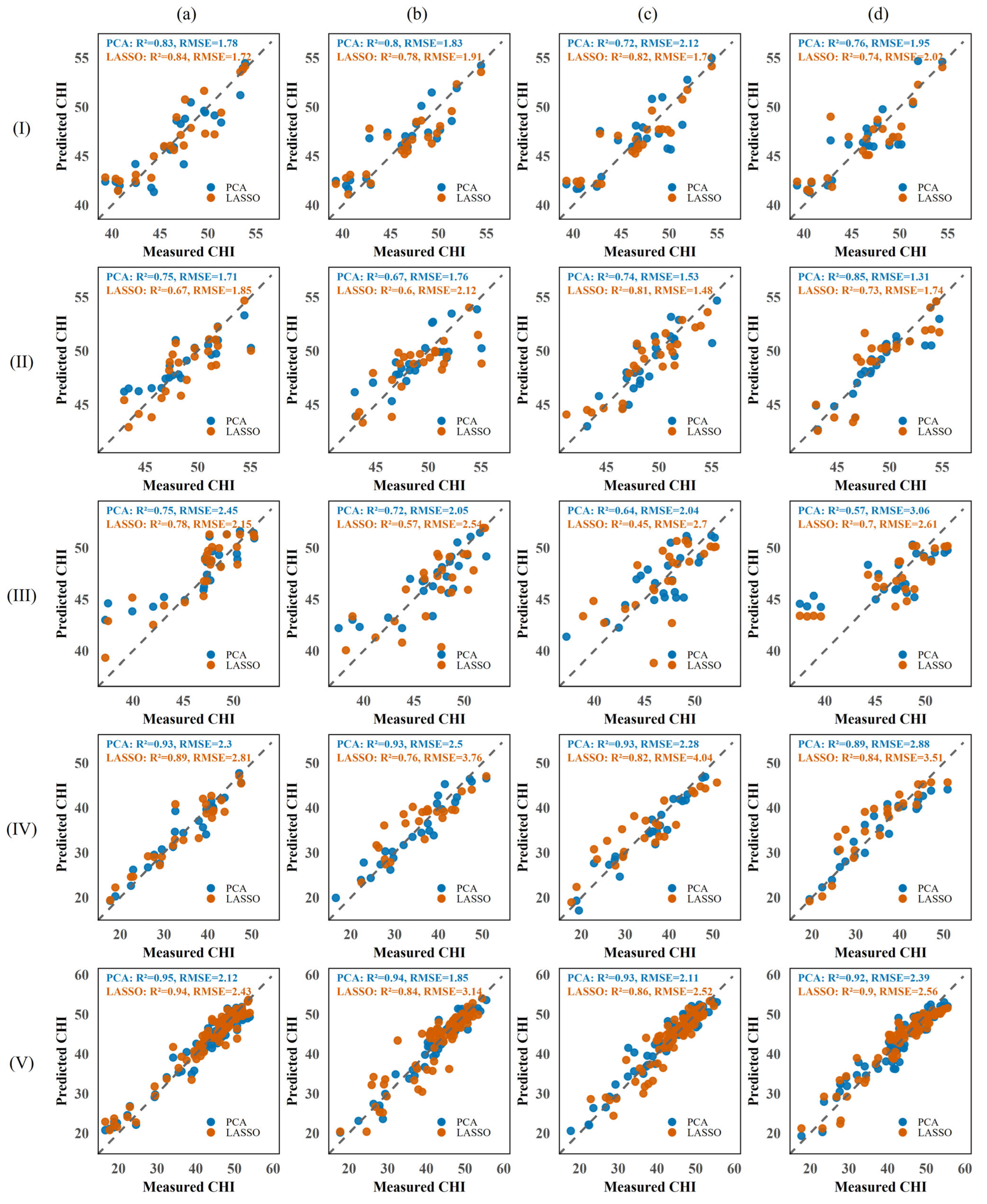

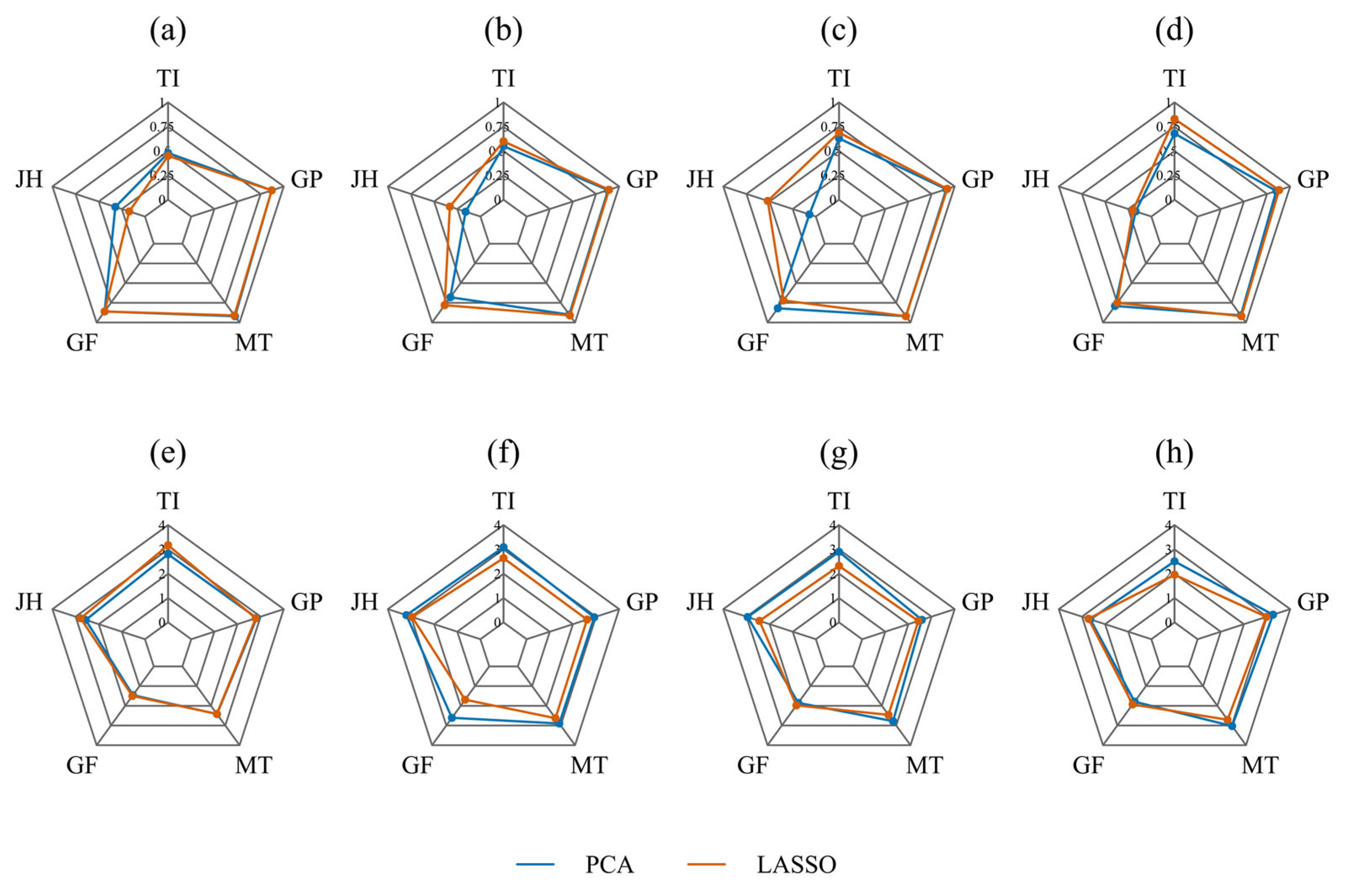

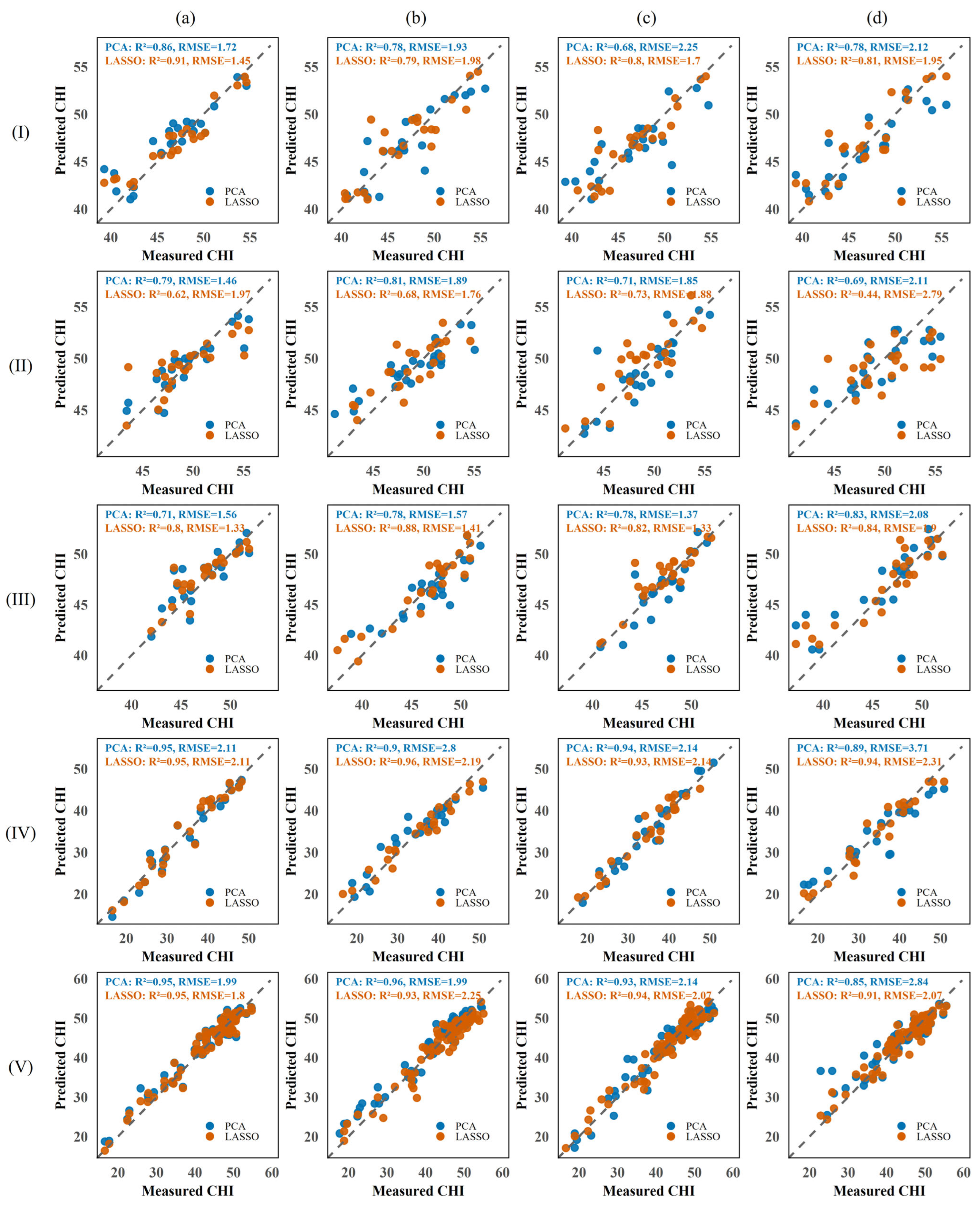

3.4. Model Performance Comparison Across Sensor Types

3.4.1. CHI Modeling Performance Using ChlF Features

3.4.2. CHI Modeling Performance Using Multispectral Data

3.4.3. Performance of CHI Prediction Models Based on RGB Features

3.4.4. Performance of CHI Prediction Models Based on Multi-Source Spectral Features

4. Discussion

4.1. Advantages of Nighttime Spectral Imaging for Chlorophyll Monitoring

| Sensor | Data Collection Time | Modeling Methods | Model Accuracy (R²) | References |
|---|---|---|---|---|
| Multispectral | Daytime | BRT | 0.712 | [57] |
| Multispectral | Daytime | RF | 0.79 | [11] |
| Hyperspectral | Daytime | BP | 0.6717 | [58] |
| Hyperspectral | Daytime | PSO-ELM | 0.791 | [59] |
| Visible light | Daytime | AdaBoost | 0.879 | [60] |
| MS + RGB + ChlF | Nighttime | SVR | 0.96 | This study |

4.2. Analysis of Factors Influencing Model Performance

4.3. Limitations and Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Nguyen, N. Global Climate Changes and Rice Food Security; FAO: Rome, Italy, 2002; Volume 625, pp. 24–30.

2. Brown, L.A.; Williams, O.; Dash, J. Calibration and characterisation of four chlorophyll meters and transmittance spectroscopy for non-destructive estimation of forest leaf chlorophyll concentration. Agric. For. Meteorol. 2022, 323, 109059.

3. Zhang, R.; Yang, P.; Liu, S.; Wang, C.; Liu, J. Evaluation of the methods for estimating leaf chlorophyll content with SPAD chlorophyll meters. Remote Sens. 2022, 14, 5144.

4. Tan, L.; Zhou, L.; Zhao, N.; He, Y.; Qiu, Z. Development of a low-cost portable device for pixel-wise leaf SPAD estimation and blade-level SPAD distribution visualization using color sensing. Comput. Electron. Agric. 2021, 190, 106487.

5. Omia, E.; Bae, H.; Park, E.; Kim, M.S.; Baek, I.; Kabenge, I.; Cho, B.-K. Remote sensing in field crop monitoring: A comprehensive review of sensor systems, data analyses and recent advances. Remote Sens. 2023, 15, 354.

6. Ban, S.; Liu, W.; Tian, M.; Wang, Q.; Yuan, T.; Chang, Q.; Li, L. Rice leaf chlorophyll content estimation using UAV-based spectral images in different regions. Agronomy 2022, 12, 2832.

7. Liu, T.; Xu, T.; Yu, F.; Yuan, Q.; Guo, Z.; Xu, B. A method combining ELM and PLSR (ELM-P) for estimating chlorophyll content in rice with feature bands extracted by an improved ant colony optimization algorithm. Comput. Electron. Agric. 2021, 186, 106177.

8. Yu, F.; Xu, T.; Guo, Z.; Wen, D.; Wang, D.; Cao, Y. Remote sensing inversion of chlorophyll content in rice leaves in cold region based on Optimizing Red-edge Vegetation Index (ORVI). Smart Agric. 2020, 2, 77.

9. Saberioon, M.; Amin, M.; Anuar, A.; Gholizadeh, A.; Wayayok, A.; Khairunniza-Bejo, S. Assessment of rice leaf chlorophyll content using visible bands at different growth stages at both the leaf and canopy scale. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 35–45.

10. Qiao, L.; Tang, W.; Gao, D.; Zhao, R.; An, L.; Li, M.; Sun, H.; Song, D. UAV-based chlorophyll content estimation by evaluating vegetation index responses under different crop coverages. Comput. Electron. Agric. 2022, 196, 106775.

11. Wang, Y.; Tan, S.; Jia, X.; Qi, L.; Liu, S.; Lu, H.; Wang, C.; Liu, W.; Zhao, X.; He, L. Estimating relative chlorophyll content in rice leaves using unmanned aerial vehicle multi-spectral images and spectral–textural analysis. Agronomy 2023, 13, 1541.

12. Nansen, C.; Savi, P.J.; Mantri, A. Methods to optimize optical sensing of biotic plant stress–combined effects of hyperspectral imaging at night and spatial binning. Plant Methods 2024, 20, 163.

13. Xu, B.; Zhang, J.; Tang, Z.; Zhang, Y.; Xu, L.; Lu, H.; Han, Z.; Hu, W. Nighttime environment enables robust field-based high-throughput plant phenotyping: A system platform and a case study on rice. Comput. Electron. Agric. 2025, 235, 110337.

14. Nguyen, H.D.D.; Pan, V.; Pham, C.; Valdez, R.; Doan, K.; Nansen, C. Night-based hyperspectral imaging to study association of horticultural crop leaf reflectance and nutrient status. Comput. Electron. Agric. 2020, 173, 105458.

15. Xiang, R. Image segmentation for whole tomato plant recognition at night. Comput. Electron. Agric. 2018, 154, 434–442.

16. Zhuang, J.; Wang, Q. Estimating Leaf Chlorophyll Fluorescence Parameters Using Partial Least Squares Regression with Fractional-Order Derivative Spectra and Effective Feature Selection. Remote Sens. 2025, 17, 833.

17. Zheng, W.; Lu, X.; Li, Y.; Li, S.; Zhang, Y. Hyperspectral identification of chlorophyll fluorescence parameters of suaeda salsa in coastal wetlands. Remote Sens. 2021, 13, 2066.

18. Porcar-Castell, A.; Tyystjärvi, E.; Atherton, J.; Van der Tol, C.; Flexas, J.; Pfündel, E.E.; Moreno, J.; Frankenberg, C.; Berry, J.A. Linking chlorophyll a fluorescence to photosynthesis for remote sensing applications: Mechanisms and challenges. J. Exp. Bot. 2014, 65, 4065–4095.

19. Deng, Y.; Xin, N.; Zhao, L.; Shi, H.; Deng, L.; Han, Z.; Wu, G. Precision Detection of Salt Stress in Soybean Seedlings Based on Deep Learning and Chlorophyll Fluorescence Imaging. Plants 2024, 13, 2089.

20. Lee, H.; Park, Y.; Kim, G.; Lee, J.H. Pre-symptomatic diagnosis of rice blast and brown spot diseases using chlorophyll fluorescence imaging. Plant Phenomics 2025, 7, 100012.

21. Li, L.; Huang, G.; Wu, J.; Yu, Y.; Zhang, G.; Su, Y.; Wang, X.; Chen, H.; Wang, Y.; Wu, D. Combine photosynthetic characteristics and leaf hyperspectral reflectance for early detection of water stress. Front. Plant Sci. 2025, 16, 1520304.

22. Chen, J.M.; Cihlar, J. Retrieving leaf area index of boreal conifer forests using Landsat TM images. Remote Sens. Environ. 1996, 55, 153–162.

23. Bagheri, N. Application of aerial remote sensing technology for detection of fire blight infected pear trees. Comput. Electron. Agric. 2020, 168, 105147.

24. Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

25. Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

26. Raper, T.; Varco, J. Canopy-scale wavelength and vegetative index sensitivities to cotton growth parameters and nitrogen status. Precis. Agric. 2015, 16, 62–76.

27. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

28. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

29. Gong, P.; Pu, R.; Biging, G.S.; Larrieu, M.R. Estimation of forest leaf area index using vegetation indices derived from Hyperion hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1355–1362.

30. Feng, W.; Wu, Y.; He, L.; Ren, X.; Wang, Y.; Hou, G.; Wang, Y.; Liu, W.; Guo, T. An optimized non-linear vegetation index for estimating leaf area index in winter wheat. Precis. Agric. 2019, 20, 1157–1176.

31. Goel, N.S.; Qin, W. Influences of canopy architecture on relationships between various vegetation indices and LAI and FPAR: A computer simulation. Remote Sens. Rev. 1994, 10, 309–347.

32. Poley, L.G.; McDermid, G.J. A systematic review of the factors influencing the estimation of vegetation aboveground biomass using unmanned aerial systems. Remote Sens. 2020, 12, 1052.

33. Xu, T.; Wang, F.; Shi, Z.; Miao, Y. Multi-scale monitoring of rice aboveground biomass by combining spectral and textural information from UAV hyperspectral images. Int. J. Appl. Earth Obs. Geoinf. 2024, 127, 103655.

34. Sankaran, S.; Zhou, J.; Khot, L.R.; Trapp, J.J.; Mndolwa, E.; Miklas, P.N. High-throughput field phenotyping in dry bean using small unmanned aerial vehicle based multispectral imagery. Comput. Electron. Agric. 2018, 151, 84–92.

35. Roujean, J.-L.; Breon, F.-M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.

36. Wilson, R.J.; Haas, R.; Schelland, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309R.

37. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

38. Sripada, R.P.; Heiniger, R.W.; White, J.G.; Weisz, R. Aerial color infrared photography for determining late-season nitrogen requirements in corn. Agron. J. 2005, 97, 1443–1451.

39. Putra, A.N.; Kristiawati, W.; Mumtazydah, D.C.; Anggarwati, T.; Annisa, R.; Sholikah, D.H.; Okiyanto, D. Pineapple biomass estimation using unmanned aerial vehicle in various forcing stage: Vegetation index approach from ultra-high-resolution image. Smart Agric. Technol. 2021, 1, 100025.

40. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

41. Zhang, Y.; Jiang, Y.; Xu, B.; Yang, G.; Feng, H.; Yang, X.; Yang, H.; Liu, C.; Cheng, Z.; Feng, Z. Study on the Estimation of Leaf Area Index in Rice Based on UAV RGB and Multispectral Data. Remote Sens. 2024, 16, 3049.

42. El Hafyani, M.; Saddik, A.; Hssaisoune, M.; Labbaci, A.; Tairi, A.; Abdelfadel, F.; Bouchaou, L. Weeds detection in a citrus orchard using multispectral UAV data and machine learning algorithms: A Case Study from Souss-Massa basin, Morocco. Remote Sens. Appl. Soc. Environ. 2025, 38, 101553.

43. Gerardo, R.; de Lima, I.P. Applying RGB-based vegetation indices obtained from UAS imagery for monitoring the rice crop at the field scale: A case study in Portugal. Agriculture 2023, 13, 1916.

44. Li, J.; Wang, W.; Sheng, Y.; Anwar, S.; Su, X.; Nian, Y.; Yue, H.; Ma, Q.; Liu, J.; Li, X. Rice Yield Estimation Based on Cumulative Time Series Vegetation Indices of UAV MS and RGB Images. Agronomy 2024, 14, 2956.

45. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

46. Li, Z.; Feng, X.; Li, J.; Wang, D.; Hong, W.; Qin, J.; Wang, A.; Ma, H.; Yao, Q.; Chen, S. Time Series Field Estimation of Rice Canopy Height Using an Unmanned Aerial Vehicle-Based RGB/Multispectral Platform. Agronomy 2024, 14, 883.

47. Roháček, K. Chlorophyll fluorescence parameters: The definitions, photosynthetic meaning, and mutual relationships. Photosynthetica 2002, 40, 13–29.

48. Xia, Q.; Tan, J.; Cheng, S.; Jiang, Y.; Guo, Y. Sensing plant physiology and environmental stress by automatically tracking Fj and Fi features in PSII chlorophyll fluorescence induction. Photochem. Photobiol. 2019, 95, 1495–1503.

49. Awad, M.; Khanna, R. Support vector regression. In Efficient Learning Machines: Theories, Concepts, and Applications for Engineers and System Designers; Apress: Berlin/Heidelberg, Germany, 2015; pp. 67–80.

50. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

51. Song, E.; Shao, G.; Zhu, X.; Zhang, W.; Dai, Y.; Lu, J. Estimation of plant height and biomass of rice using unmanned aerial vehicle. Agronomy 2024, 14, 145.

52. Hemalatha, N.; Akhil, W.; Vinod, R. Computational yield prediction of Rice using KNN regression. In Computer Vision and Robotics: Proceedings of CVR 2022; Springer: Berlin/Heidelberg, Germany, 2023; pp. 295–308.

53. Yang, M.-D.; Hsu, Y.-C.; Tseng, W.-C.; Tseng, H.-H.; Lai, M.-H. Precision assessment of rice grain moisture content using UAV multispectral imagery and machine learning. Comput. Electron. Agric. 2025, 230, 109813.

54. Tian, L.; Li, Y.; Zhang, M. A variable selection method based on multicollinearity reduction for food origin traceability identification. Vib. Spectrosc. 2025, 138, 103804.

55. Xu, S.; Xu, X.; Blacker, C.; Gaulton, R.; Zhu, Q.; Yang, M.; Yang, G.; Zhang, J.; Yang, Y.; Yang, M. Estimation of leaf nitrogen content in rice using vegetation indices and feature variable optimization with information fusion of multiple-sensor images from UAV. Remote Sens. 2023, 15, 854.

56. Wong, C.Y.S.; McHugh, D.P.; Bambach, N.; McElrone, A.J.; Alsina, M.M.; Kustas, W.P.; Magney, T.S. Hyperspectral and Photodiode Retrievals of Nighttime LED-Induced Chlorophyll Fluorescence (LEDIF) for Tracking Photosynthetic Phenology in a Vineyard. J. Geophys. Res. Biogeosci. 2024, 129, e2023JG007742.

57. Gu, Q.; Huang, F.; Lou, W.; Zhu, Y.; Hu, H.; Zhao, Y.; Zhou, H.; Zhang, X. Unmanned aerial vehicle-based assessment of rice leaf chlorophyll content dynamics across genotypes. Comput. Electron. Agric. 2024, 221, 108939.

58. Liu, H.; Lei, X.; Liang, H.; Wang, X. Multi-Model Rice Canopy Chlorophyll Content Inversion Based on UAV Hyperspectral Images. Sustainability 2023, 15, 7038.

59. Cao, Y.; Jiang, K.; Wu, J.; Yu, F.; Du, W.; Xu, T. Inversion modeling of japonica rice canopy chlorophyll content with UAV hyperspectral remote sensing. PLoS ONE 2020, 15, e0238530.

60. Jing, H.; Bin, W.; Jiachen, H. Chlorophyll inversion in rice based on visible light images of different planting methods. PLoS ONE 2025, 20, e0319657.

61. Fei, S.; Li, L.; Han, Z.; Chen, Z.; Xiao, Y. Combining novel feature selection strategy and hyperspectral vegetation indices to predict crop yield. Plant Methods 2022, 18, 119.

62. Elsherbiny, O.; Fan, Y.; Zhou, L.; Qiu, Z. Fusion of Feature Selection Methods and Regression Algorithms for Predicting the Canopy Water Content of Rice Based on Hyperspectral Data. Agriculture 2021, 11, 51.

63. Yuan, Y.; Wang, X.; Shi, M.; Wang, P. Performance comparison of RGB and multispectral vegetation indices based on machine learning for estimating Hopea hainanensis SPAD values under different shade conditions. Front. Plant Sci. 2022, 13, 928953.

64. Lu, S.; Lu, F.; You, W.; Wang, Z.; Liu, Y.; Omasa, K. A robust vegetation index for remotely assessing chlorophyll content of dorsiventral leaves across several species in different seasons. Plant Methods 2018, 14, 15.

65. Karmakar, P.; Teng, S.W.; Murshed, M.; Pang, S.; Li, Y.; Lin, H. Crop monitoring by multimodal remote sensing: A review. Remote Sens. Appl. Soc. Environ. 2024, 33, 101093.

66. Zhu, H.; Liang, S.; Lin, C.; He, Y.; Xu, J.-L. Using Multi-Sensor Data Fusion Techniques and Machine Learning Algorithms for Improving UAV-Based Yield Prediction of Oilseed Rape. Drones 2024, 8, 642.

67. Gong, Y.; Yang, K.; Lin, Z.; Fang, S.; Wu, X.; Zhu, R.; Peng, Y. Remote estimation of leaf area index (LAI) with unmanned aerial vehicle (UAV) imaging for different rice cultivars throughout the entire growing season. Plant Methods 2021, 17, 88.

68. Wan, L.; Cen, H.; Zhu, J.; Zhang, J.; Zhu, Y.; Sun, D.; Du, X.; Zhai, L.; Weng, H.; Li, Y.; et al. Grain yield prediction of rice using multi-temporal UAV-based RGB and multispectral images and model transfer—a case study of small farmlands in the South of China. Agric. For. Meteorol. 2020, 291, 108096.

69. Ram, B.G.; Oduor, P.; Igathinathane, C.; Howatt, K.; Sun, X. A systematic review of hyperspectral imaging in precision agriculture: Analysis of its current state and future prospects. Comput. Electron. Agric. 2024, 222, 109037.

70. Araújo, S.O.; Peres, R.S.; Ramalho, J.C.; Lidon, F.; Barata, J. Machine Learning Applications in Agriculture: Current Trends, Challenges, and Future Perspectives. Agronomy 2023, 13, 2976.

| Period | Max | Min | Mean | SD | n | CV (%) |
|---|---|---|---|---|---|---|
| TI | 55.52 | 39.30 | 46.77 | 4.18 | 80 | 8.93 |
| JH | 55.52 | 41.21 | 49.03 | 3.18 | 80 | 6.48 |
| GF | 53.34 | 37.24 | 46.90 | 3.66 | 80 | 7.79 |
| MT | 50.93 | 16.61 | 34.76 | 8.42 | 80 | 24.23 |
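The CV column in the descriptive-statistics table appears to be the coefficient of variation expressed as a percentage, that is, CV = 100 × SD / Mean. This reading is an assumption inferred from the tabulated numbers, not something stated in the table itself. A minimal Python sketch that recomputes it from the Mean and SD columns follows; the names `rows` and `coefficient_of_variation` are illustrative only.

```python
# Rows copied from the descriptive-statistics table:
# (period, mean, sd, reported_cv)
rows = [
    ("TI", 46.77, 4.18, 8.93),
    ("JH", 49.03, 3.18, 6.48),
    ("GF", 46.90, 3.66, 7.79),
    ("MT", 34.76, 8.42, 24.23),
]


def coefficient_of_variation(mean, sd):
    """Return the coefficient of variation in percent (assumed definition)."""
    return 100.0 * sd / mean


for period, mean, sd, reported in rows:
    cv = coefficient_of_variation(mean, sd)
    # Small differences are expected because Mean and SD are already rounded.
    print(f"{period}: computed CV = {cv:.2f}%, reported CV = {reported:.2f}%")
```

Under this assumption the recomputed values agree with the reported ones to within the rounding of the inputs, and the much larger CV for the MT period simply reflects its lower mean combined with its larger SD.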

| Metric | Source of Variation | Sum of Squares | df | F-Value | p-Value |
|---|---|---|---|---|---|
| R² | Sensor | 4.3591 | 3 | 100.39 | <0.0001 ** |
| R² | Model | 0.0080 | 3 | 0.18 | 0.9068 |
| R² | Feature Selection | 0.2856 | 2 | 9.87 | 0.0001 ** |
| R² | Stage | 2.1867 | 4 | 37.77 | <0.0001 ** |
| RMSE | Sensor | 15.1381 | 3 | 19.35 | <0.0001 ** |
| RMSE | Model | 1.6007 | 3 | 2.05 | 0.1107 |
| RMSE | Feature Selection | 17.1034 | 2 | 32.79 | <0.0001 ** |
| RMSE | Stage | 21.3650 | 4 | 20.48 | <0.0001 ** |
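One way to read the ANOVA table is to check that its rows are mutually consistent. Assuming a standard fixed-effects ANOVA in which every F-value is the effect mean square (sum of squares divided by df) over a single residual mean square per metric, each row implies a value for that residual term. The sketch below back-calculates it; the ANOVA design is an assumption, since the table does not state it, and the names `anova_rows` and `implied_residual_ms` are hypothetical.

```python
# Rows copied from the ANOVA table:
# (metric, source, sum_of_squares, df, f_value)
anova_rows = [
    ("R2", "Sensor", 4.3591, 3, 100.39),
    ("R2", "Model", 0.0080, 3, 0.18),
    ("R2", "Feature Selection", 0.2856, 2, 9.87),
    ("R2", "Stage", 2.1867, 4, 37.77),
    ("RMSE", "Sensor", 15.1381, 3, 19.35),
    ("RMSE", "Model", 1.6007, 3, 2.05),
    ("RMSE", "Feature Selection", 17.1034, 2, 32.79),
    ("RMSE", "Stage", 21.3650, 4, 20.48),
]


def implied_residual_ms(sum_of_squares, df, f_value):
    """Residual mean square implied by F = (SS / df) / MS_residual."""
    return (sum_of_squares / df) / f_value


# Group the implied residual mean squares by metric.
implied = {}
for metric, source, ss, df, f_value in anova_rows:
    ms_res = implied_residual_ms(ss, df, f_value)
    implied.setdefault(metric, []).append(ms_res)
    print(f"{metric:<5} {source:<18} MS = {ss / df:.4f}, implied MS_residual = {ms_res:.4f}")
```

If the assumption holds, the implied residual mean squares should be nearly identical within each metric, differing only through rounding of the reported F-values. That is the pattern the tabulated numbers show.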

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, C.; Wang, L.; Fu, X.; Zhang, J.; Wang, R.; Wang, X.; Chai, N.; Guan, L.; Chen, Q.; Zhang, Z. Prediction of Rice Chlorophyll Index (CHI) Using Nighttime Multi-Source Spectral Data. Agriculture 2025, 15, 1425. https://doi.org/10.3390/agriculture15131425
