Research on Lightweight Algorithm Model for Precise Recognition and Detection of Outdoor Strawberries Based on Improved YOLOv5n

Abstract

1. Introduction

2. Materials and Methods

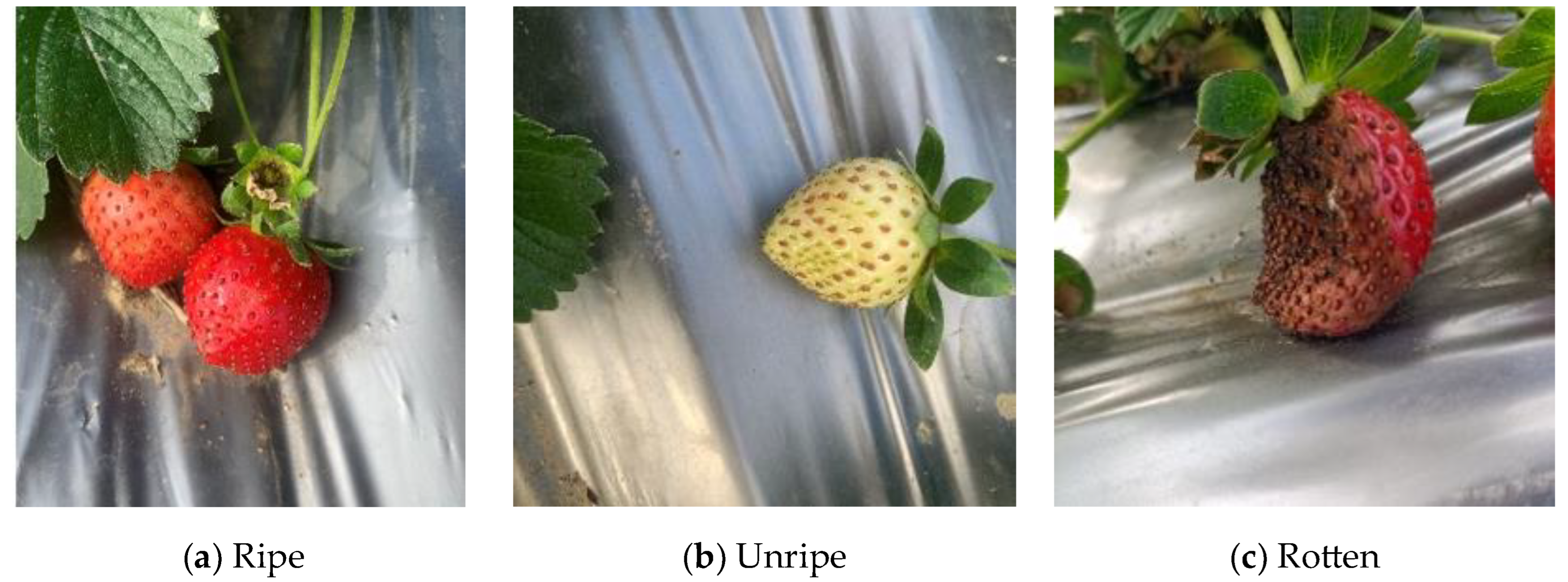

2.1. Data Sources

2.2. Data Processing

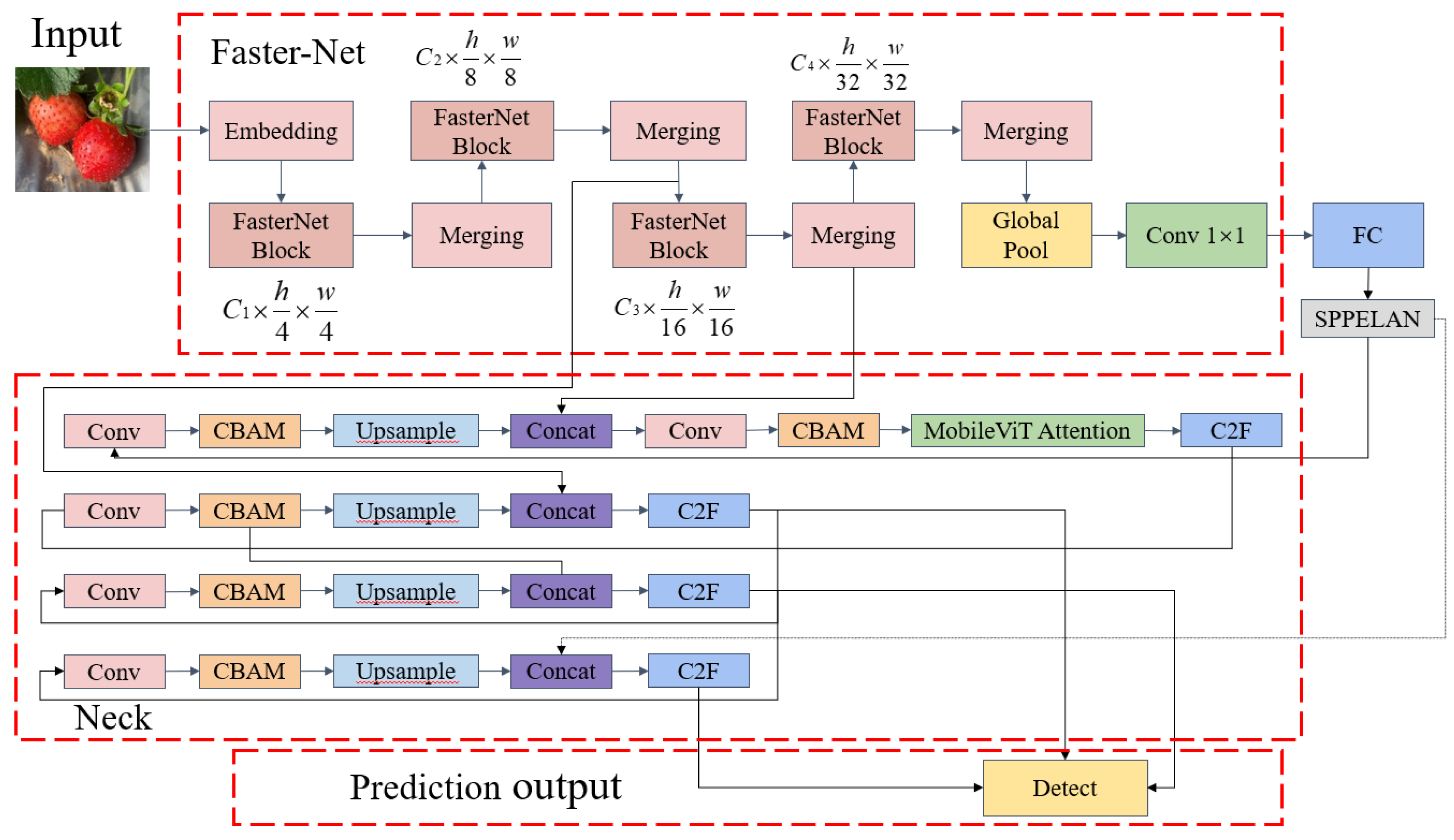

3. Improved YOLOv5n Strawberry Detection Algorithm

3.1. YOLOv5n Model
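YOLOv5n is the smallest member of the YOLOv5 family. In the Ultralytics YOLOv5 configuration files it keeps the same layer layout as the larger variants and scales it with a depth multiple of 0.33 and a width multiple of 0.25. The sketch below reproduces that scaling rule for the backbone. The base channel widths and C3 repeat counts are assumed from the standard YOLOv5 (v6+) layout, and the helper names are hypothetical rather than taken from this paper.

```python
import math

# Scaling factors from the YOLOv5n configuration (depth_multiple, width_multiple).
DEPTH_MULTIPLE = 0.33
WIDTH_MULTIPLE = 0.25


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round a channel count up to the nearest multiple of `divisor`."""
    return math.ceil(x / divisor) * divisor


def scale_repeats(n: int, gd: float = DEPTH_MULTIPLE) -> int:
    """Scale the number of repeated blocks, never dropping below one."""
    return max(round(n * gd), 1) if n > 1 else n


def scale_channels(c: int, gw: float = WIDTH_MULTIPLE) -> int:
    """Scale a channel width and keep it divisible by 8."""
    return make_divisible(c * gw, 8)


# Assumed base (width 1.0) backbone channels and C3 repeat counts shared by YOLOv5 variants.
base_channels = [64, 128, 256, 512, 1024]
base_c3_repeats = [3, 6, 9, 3]

n_channels = [scale_channels(c) for c in base_channels]
n_repeats = [scale_repeats(n) for n in base_c3_repeats]
print(n_channels)  # [16, 32, 64, 128, 256]
print(n_repeats)   # [1, 2, 3, 1]
```

The small channel widths and single C3 repeats are what keep the baseline model at only a few megabytes.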

3.2. Improved YOLOv5n Model

3.2.1. Improvement and Optimization of the Backbone Network

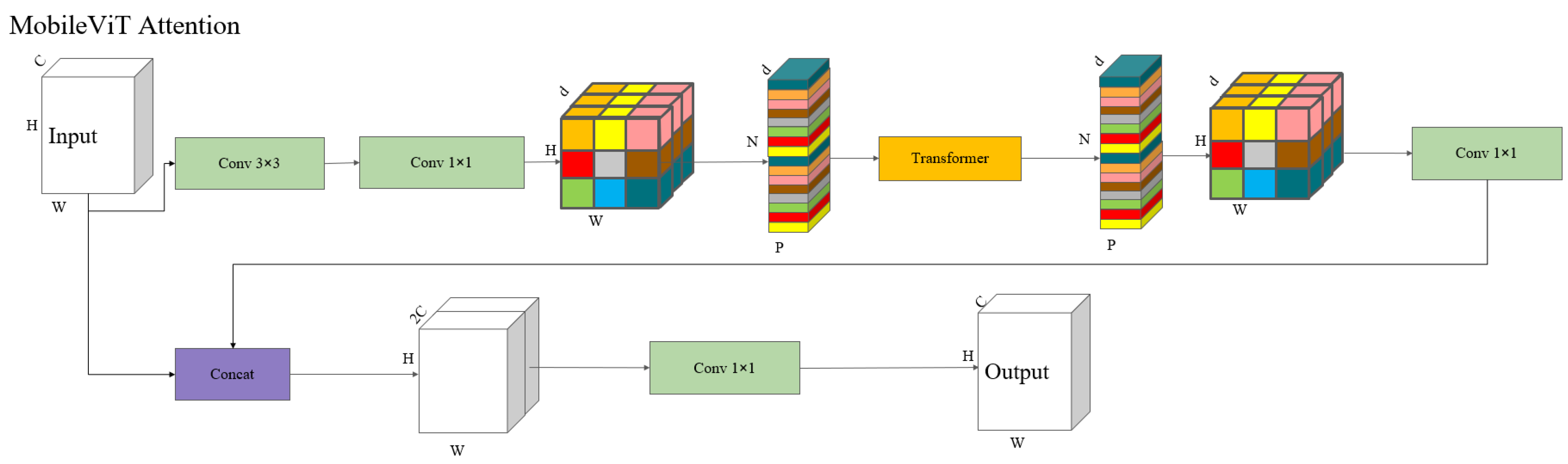
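The ablation results in Section 4.1 list FasterNet as the backbone modification. FasterNet's core operator, partial convolution (PConv), applies a regular k×k convolution to only c_p of the c input channels and passes the remaining channels through unchanged, so its cost scales as h·w·k²·c_p² instead of h·w·k²·c². The arithmetic below uses a hypothetical 80×80 feature map with 64 channels to show why the FasterNet authors quote about 1/16 of the FLOPs at their typical ratio c_p/c = 1/4. It illustrates the operator only and is not the configuration used in this paper.

```python
def conv_flops(h: int, w: int, k: int, c: int) -> int:
    """FLOPs of a regular k x k conv with c input and c output channels (h*w*k^2*c^2 convention)."""
    return h * w * k * k * c * c


def pconv_flops(h: int, w: int, k: int, c: int, ratio: float = 0.25) -> int:
    """FLOPs of a partial conv that convolves only c_p = ratio * c channels."""
    c_p = int(c * ratio)
    return h * w * k * k * c_p * c_p


# Hypothetical feature map: 80 x 80 spatial size, 64 channels, 3 x 3 kernel.
h, w, k, c = 80, 80, 3, 64
regular = conv_flops(h, w, k, c)
partial = pconv_flops(h, w, k, c)
print(regular, partial, partial / regular)  # 235929600 14745600 0.0625
```

Because the untouched channels are simply copied, PConv also reads and writes far less memory than a full convolution, which is the main reason FasterNet reaches a high throughput on edge hardware.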

3.2.2. Improvement and Optimization of the Attention Mechanism

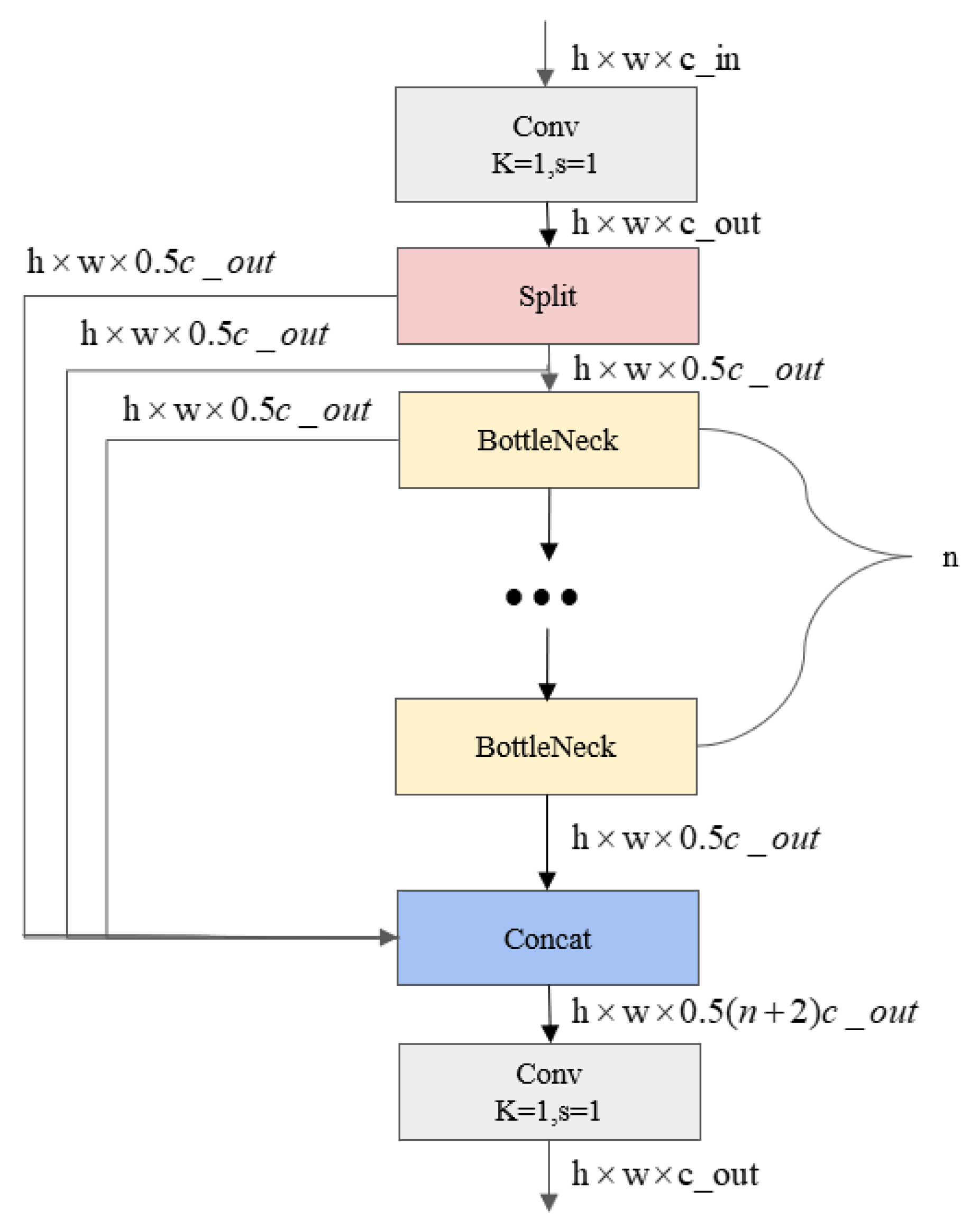
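The ablation results in Section 4.1 pair CBAM with MobileViT for the attention improvement. For reference, the original CBAM formulation refines a feature map F ∈ ℝ^{C×H×W} with a channel attention map M_c ∈ ℝ^{C×1×1}, then a spatial attention map M_s ∈ ℝ^{1×H×W}. Here σ is the sigmoid, the MLP is shared by both pooled descriptors, channel attention pools over the spatial dimensions, spatial attention pools over the channel dimension, f^{7×7} is a 7×7 convolution, and ⊗ is element-wise multiplication with broadcasting. These are the standard CBAM equations, not a description of how the authors combine CBAM with MobileViT.

$$
\begin{aligned}
M_c(F) &= \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big), & F' &= M_c(F) \otimes F,\\
M_s(F') &= \sigma\big(f^{7\times 7}([\mathrm{AvgPool}(F');\ \mathrm{MaxPool}(F')])\big), & F'' &= M_s(F') \otimes F'.
\end{aligned}
$$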

3.2.3. C2f Module
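C2f is the YOLOv8 successor to the C3 block of YOLOv5. In the Ultralytics reference implementation, a 1×1 convolution expands the input to 2c hidden channels, the result is split into two halves, one half passes through n bottlenecks in sequence, and every intermediate output is concatenated before a final 1×1 convolution, so that convolution sees (2 + n)·c channels. The bookkeeping below follows that layout with the default expansion e = 0.5; the function name and example sizes are hypothetical.

```python
def c2f_channel_plan(c_in: int, c_out: int, n: int, e: float = 0.5) -> dict:
    """Channel counts inside a C2f block, assuming the Ultralytics-style layout."""
    c = int(c_out * e)  # hidden width of each branch
    return {
        "cv1_in": c_in,           # input to the first 1x1 conv
        "cv1_out": 2 * c,         # first 1x1 conv output, split into two halves of c
        "bottleneck_io": c,       # each bottleneck maps c -> c channels
        "concat": (2 + n) * c,    # both halves plus the output of every bottleneck
        "cv2_out": c_out,         # final 1x1 conv fuses the concatenation
    }


plan = c2f_channel_plan(c_in=128, c_out=128, n=3)
print(plan)  # {'cv1_in': 128, 'cv1_out': 128, 'bottleneck_io': 64, 'concat': 320, 'cv2_out': 128}
```

Concatenating every bottleneck output, rather than only the last one as C3 does, gives the fusion convolution more gradient paths at the cost of a wider concatenation.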

3.2.4. SPPELAN Module
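SPPELAN originates in YOLOv9. In the YOLOv9 reference code it applies a 1×1 convolution, then three 5×5 max-pooling layers with stride 1 in series, concatenates the four resulting tensors, and fuses them with another 1×1 convolution. Chaining stride-1 pools widens the pooling window at each stage, so the branches cover the same 5, 9 and 13 windows as the parallel SPP of earlier YOLO versions while only ever computing 5×5 pools. The sketch below computes those windows and checks the equivalence on a 1-D signal; k = 5 is assumed from the YOLOv9 code, not stated in this paper, and the helper names are hypothetical.

```python
def cascaded_pool_windows(k: int = 5, stages: int = 3) -> list[int]:
    """Effective window after each of `stages` stride-1 k x k max-pools applied in series.

    Max-pooling a max-pooled map takes the maximum over the union of the windows,
    so each extra stage widens the window by (k - 1).
    """
    return [1 + s * (k - 1) for s in range(1, stages + 1)]


def sppelan_concat_channels(c_hidden: int, stages: int = 3) -> int:
    """Channels entering the final 1x1 conv: the first conv output plus one tensor per pooling stage."""
    return (stages + 1) * c_hidden


def maxpool1d(x: list[float], k: int) -> list[float]:
    """Stride-1 max-pool with 'same' padding (pads with -inf), 1-D for illustration."""
    r = k // 2
    padded = [float("-inf")] * r + list(x) + [float("-inf")] * r
    return [max(padded[i:i + k]) for i in range(len(x))]


print(cascaded_pool_windows())        # [5, 9, 13]
print(sppelan_concat_channels(128))   # 512

# Two 5-wide pools in series equal one 9-wide pool.
x = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5]
print(maxpool1d(maxpool1d(x, 5), 5) == maxpool1d(x, 9))  # True
```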

3.2.5. Soft-NMS Module
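Standard non-maximum suppression deletes every box whose IoU with a higher-scoring box exceeds a fixed threshold, which can discard a genuine strawberry partly hidden behind a neighbouring one. Soft-NMS instead decays the scores of overlapping boxes, for example with the Gaussian rule s_i ← s_i · exp(−IoU(M, b_i)²/σ), and drops a box only when its score falls below a small threshold. The pure-Python sketch below implements the Gaussian variant; σ = 0.5 and the 0.001 score threshold are common defaults assumed for illustration, not parameters reported in this paper.

```python
import math

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms_gaussian(boxes: list[Box], scores: list[float],
                      sigma: float = 0.5, score_thresh: float = 0.001) -> list[tuple[int, float]]:
    """Return (original index, rescored confidence) pairs kept by Gaussian Soft-NMS."""
    remaining = {i: s for i, s in enumerate(scores)}
    kept = []
    while remaining:
        # Select the highest-scoring remaining box M and keep it.
        m = max(remaining, key=remaining.get)
        kept.append((m, remaining.pop(m)))
        # Decay every other box according to its overlap with M.
        for i in list(remaining):
            remaining[i] *= math.exp(-iou(boxes[m], boxes[i]) ** 2 / sigma)
            if remaining[i] < score_thresh:
                del remaining[i]
    return kept


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
kept = soft_nms_gaussian(boxes, scores)
print([(i, round(s, 3)) for i, s in kept])  # [(0, 0.9), (2, 0.7), (1, 0.317)]
```

With standard NMS at an IoU threshold of 0.5, the second box (IoU ≈ 0.68 with the first) would be discarded outright; here it survives with a reduced score of about 0.32, while the distant third box is untouched.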

3.3. Model Training and Testing

3.3.1. Experimental Environment

3.3.2. Evaluation Metrics
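The results tables report precision P = TP/(TP + FP), recall R = TP/(TP + FN), F1, mAP@0.5 and mAP@0.5:0.95. A detection counts as a true positive when its IoU with an unmatched ground-truth box reaches the chosen threshold; AP is the area under the precision–recall curve, and mAP@0.5:0.95 averages AP over the ten IoU thresholds 0.50, 0.55, …, 0.95 (and over classes). The sketch below uses all-point interpolation of the precision envelope, which is one common convention and an assumption here, not necessarily the exact variant used by the authors; the function names are hypothetical.

```python
def average_precision(recall: list[float], precision: list[float]) -> float:
    """All-point interpolated AP: area under the monotone precision envelope.

    `recall` must be non-decreasing, as produced by sweeping detections
    in descending confidence order.
    """
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]
    # Make precision non-increasing from right to left (the envelope).
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


# The ten IoU thresholds averaged by mAP@0.5:0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_per_threshold: dict[float, float]) -> float:
    """Mean of the AP values computed at each of the ten IoU thresholds."""
    return sum(ap_per_threshold[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
print(IOU_THRESHOLDS)  # [0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95]
```

Because mAP@0.5:0.95 also rewards tight localization at high IoU thresholds, it is always at most mAP@0.5 for a given model, which is the pattern visible in both results tables.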

4. Results and Analysis

4.1. Ablation Test Results and Analysis

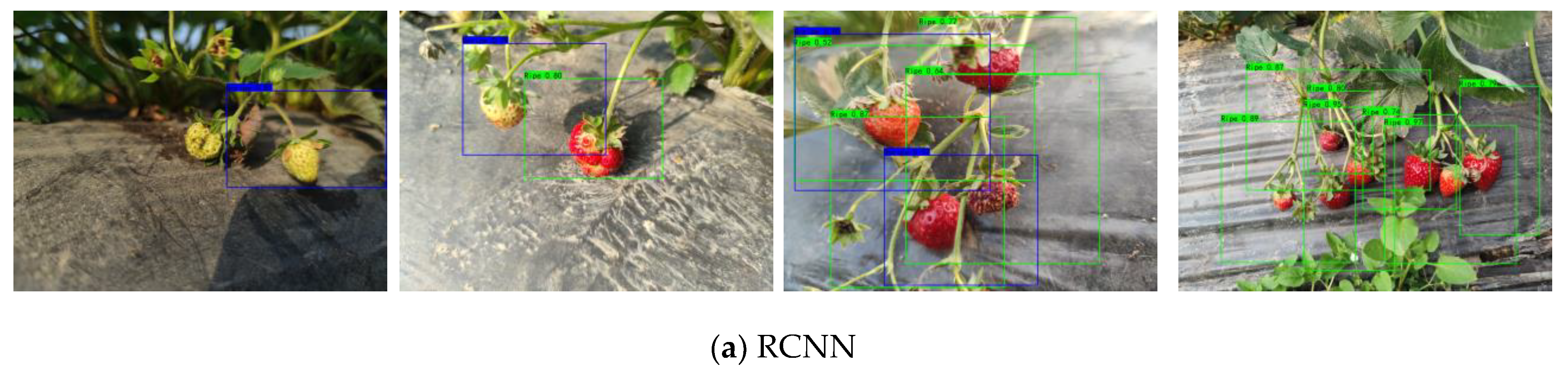

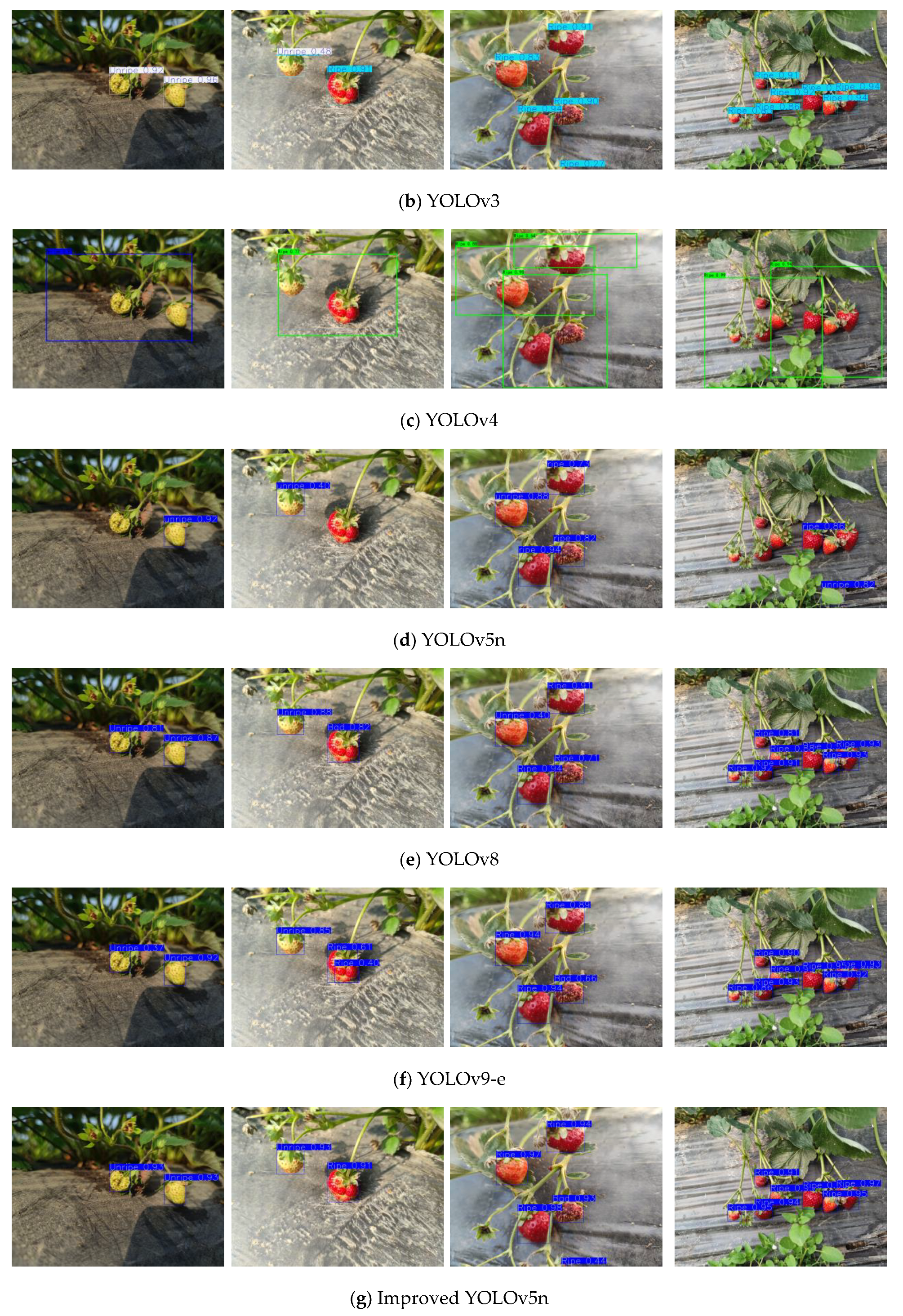

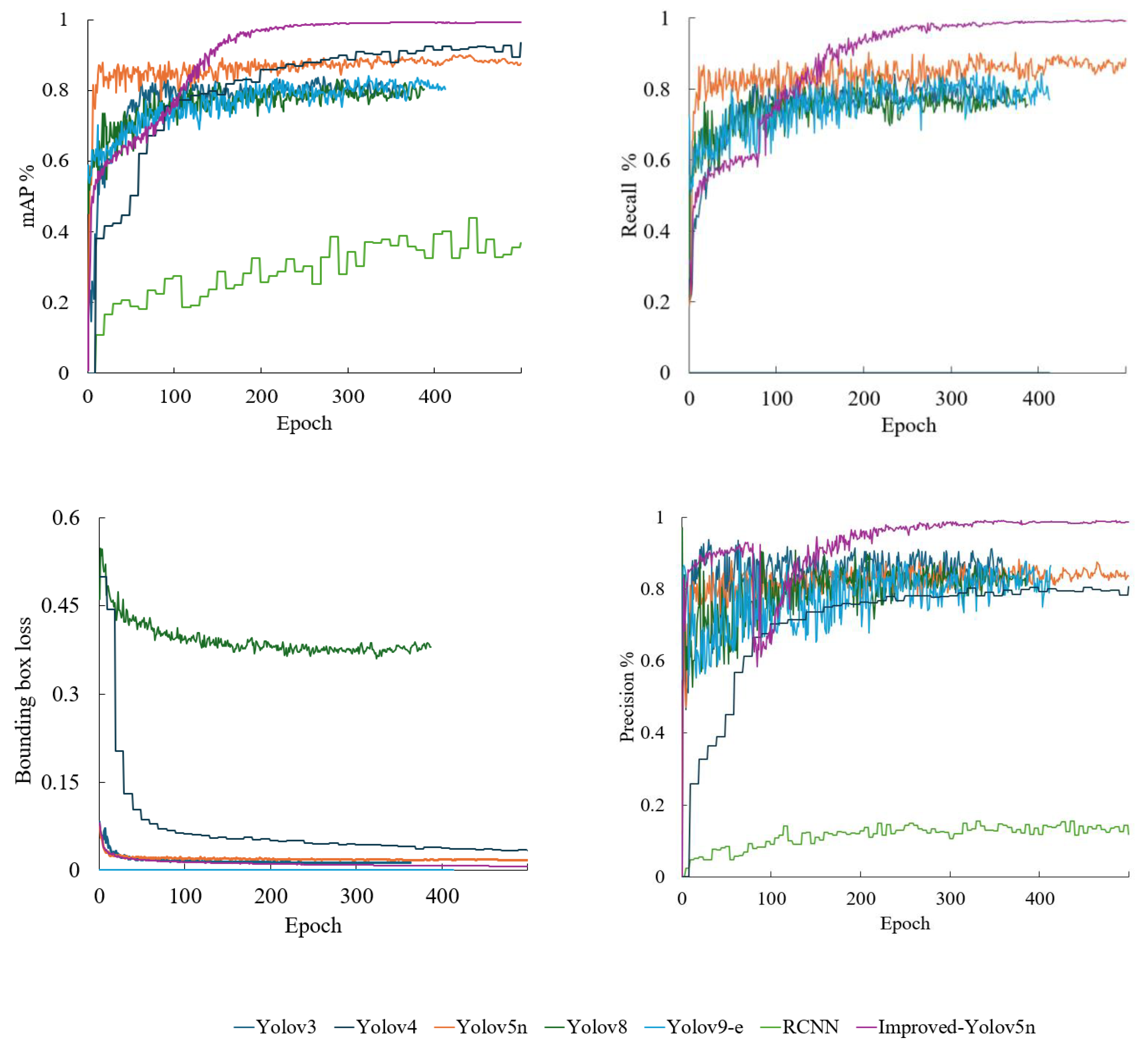

4.2. Comparison of Detection Performance with Different Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| FasterNet | CBAM and MobileViT | Soft-NMS | SPPELAN | C2f | P | R | mAP@0.5 | mAP@0.5:0.95 | Model Size (MB) | FPS (frames·s⁻¹) |
|---|---|---|---|---|---|---|---|---|---|---|
| √ | √ | √ | √ | √ | 98.94% | 99.12% | 99.43% | 97.76% | 53.22 | 16.61 |
| √ | × | × | × | × | 86.64% | 84.87% | 89.75% | 74.77% | 59.33 | 46.08 |
| × | √ | × | × | × | 92.62% | 91.54% | 90.22% | 73.23% | 137.01 | 16.30 |
| × | × | √ | × | × | 84.08% | 87.72% | 90.17% | 85.64% | 74.54 | 25.74 |
| × | × | × | √ | × | 94.09% | 92.77% | 91.48% | 83.82% | 115.66 | 37.04 |
| × | × | × | × | √ | 92.48% | 89.16% | 88.23% | 80.12% | 86.45 | 42.82 |

| No. | Model | mAP@0.5:0.95 | mAP@0.5 | Computation (GFLOPs) | F1 | Model Size (MB) | P | R | FPS (frames·s⁻¹) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | RCNN | 14.01% | 40.31% | 470.58 | 25.48% | 108.01 | 27.52% | 23.73% | 7.17 |
| 2 | YOLOv3 | 74.22% | 83.54% | 12.90 | 84.71% | 17.40 | 89.12% | 80.71% | 22.58 |
| 3 | YOLOv4 | 77.41% | 94.14% | 30.26 | 88.62% | 244.06 | 94.17% | 83.68% | 22.95 |
| 4 | YOLOv5n | 73.13% | 90.15% | 4.12 | 85.94% | 6.72 | 84.22% | 87.73% | 89.32 |
| 5 | YOLOv8 | 76.24% | 83.17% | 8.12 | 81.73% | 6.35 | 84.03% | 79.55% | 47.43 |
| 6 | YOLOv9-e | 77.34% | 83.82% | 240.73 | 81.31% | 140.03 | 79.54% | 83.16% | 10.40 |
| 7 | Improved YOLOv5n | 97.01% | 99.43% | 78.03 | 99.03% | 53.22 | 98.94% | 99.12% | 16.61 |
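The F1 column of the model comparison is the harmonic mean of precision and recall, F1 = 2PR/(P + R). Recomputing it from the reported P and R is a quick consistency check on the table; the short script below does this for every model, using the values exactly as listed (in percent).

```python
# (P, R, reported F1), all in percent, copied from the model comparison table.
REPORTED = {
    "RCNN": (27.52, 23.73, 25.48),
    "YOLOv3": (89.12, 80.71, 84.71),
    "YOLOv4": (94.17, 83.68, 88.62),
    "YOLOv5n": (84.22, 87.73, 85.94),
    "YOLOv8": (84.03, 79.55, 81.73),
    "YOLOv9-e": (79.54, 83.16, 81.31),
    "Improved YOLOv5n": (98.94, 99.12, 99.03),
}


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    return 2 * p * r / (p + r) if p + r else 0.0


for model, (p, r, f1_reported) in REPORTED.items():
    f1 = f1_score(p, r)
    print(f"{model:<17} computed {f1:6.2f}  reported {f1_reported:6.2f}")
```

Every recomputed value rounds to the reported one, so the F1 column is consistent with the P and R columns.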

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, X.; Zhong, P.; Huang, Y.; Huang, M.; Huang, Z.; Zou, T.; Xing, H. Research on Lightweight Algorithm Model for Precise Recognition and Detection of Outdoor Strawberries Based on Improved YOLOv5n. Agriculture 2025, 15, 90. https://doi.org/10.3390/agriculture15010090
