CNN-MLP-Based Configurable Robotic Arm for Smart Agriculture

Abstract

1. Introduction

2. Materials and Methods

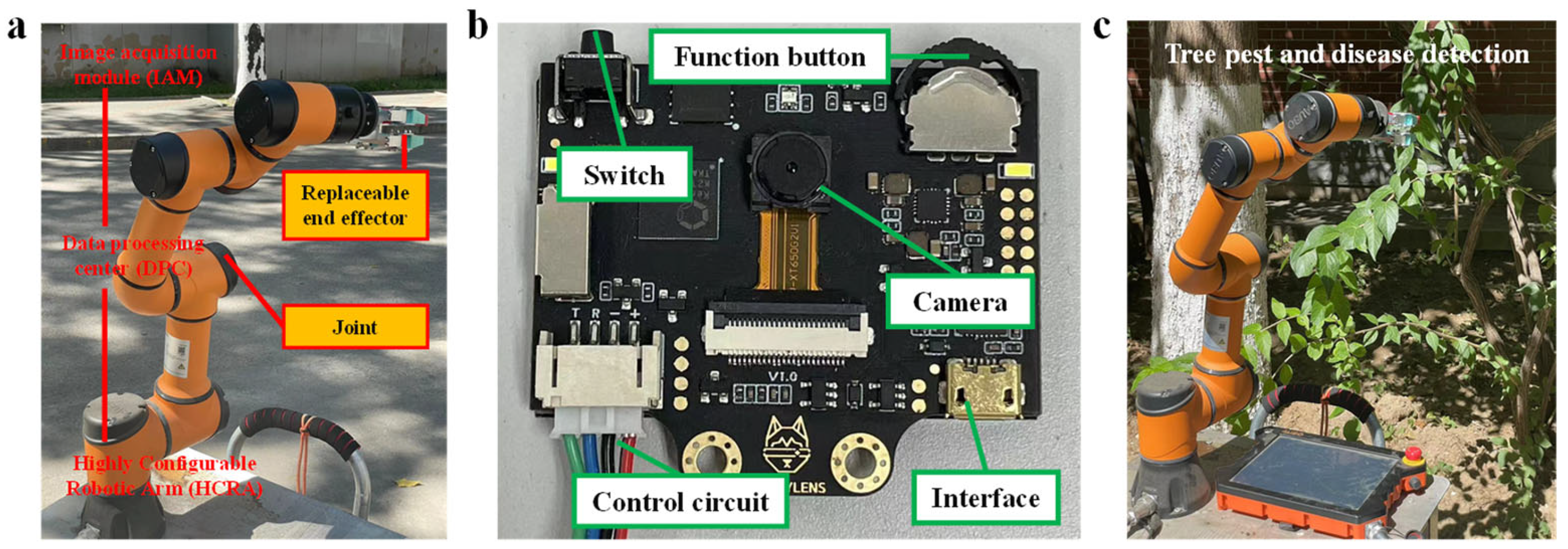

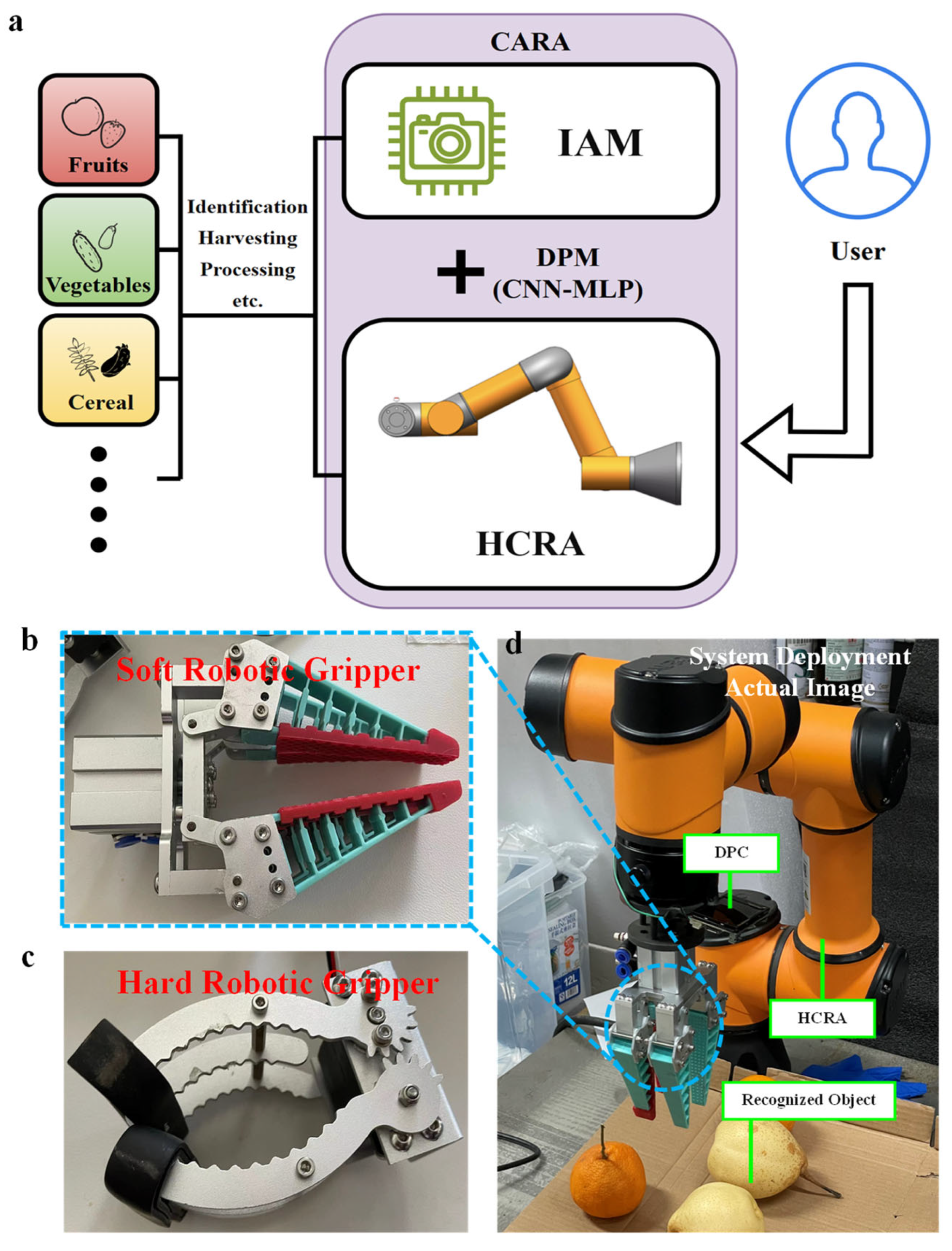

2.1. Structure and Configuration of HCRA

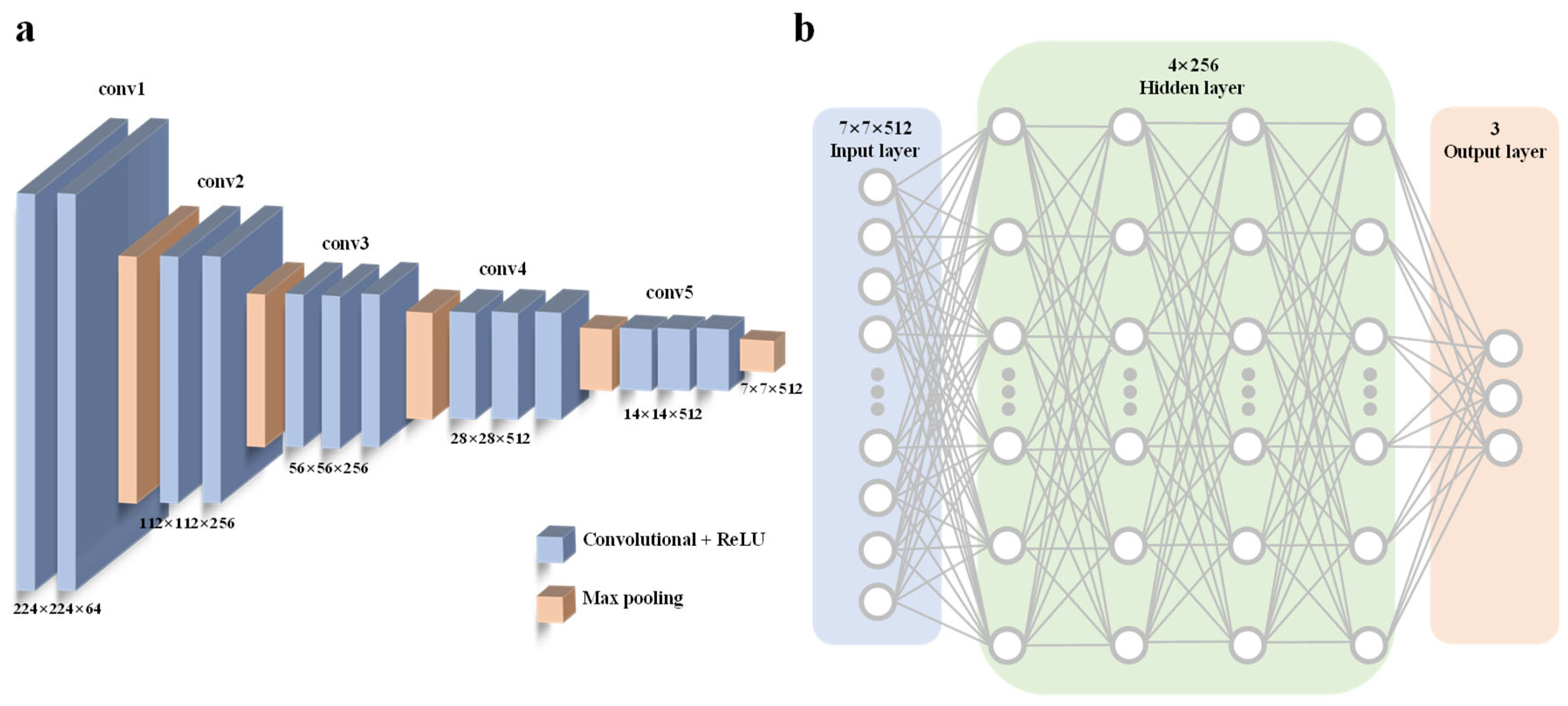

2.2. Structure and Configuration of IAM and DPC

2.3. Experimental Scheme

3. Results and Discussion

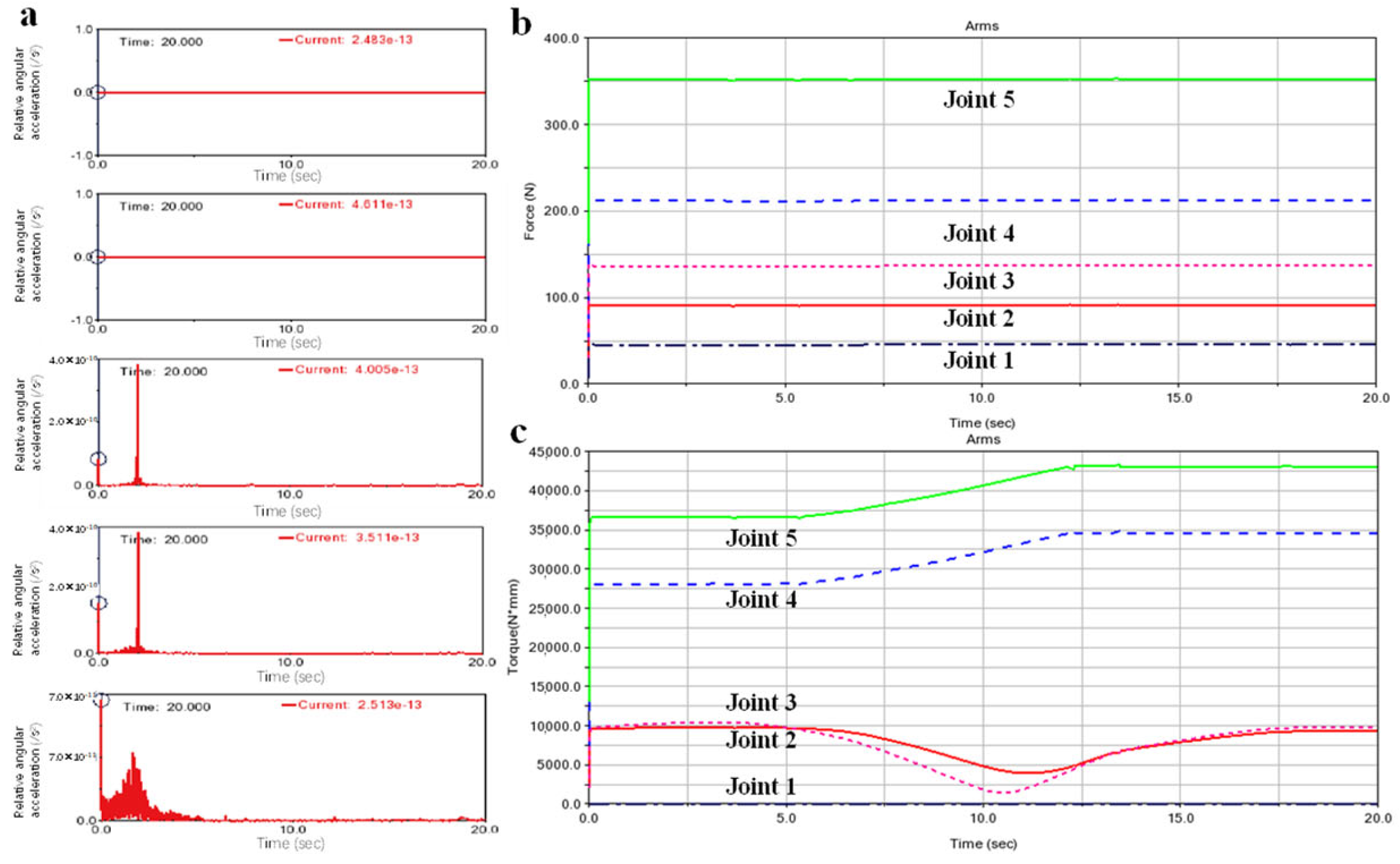

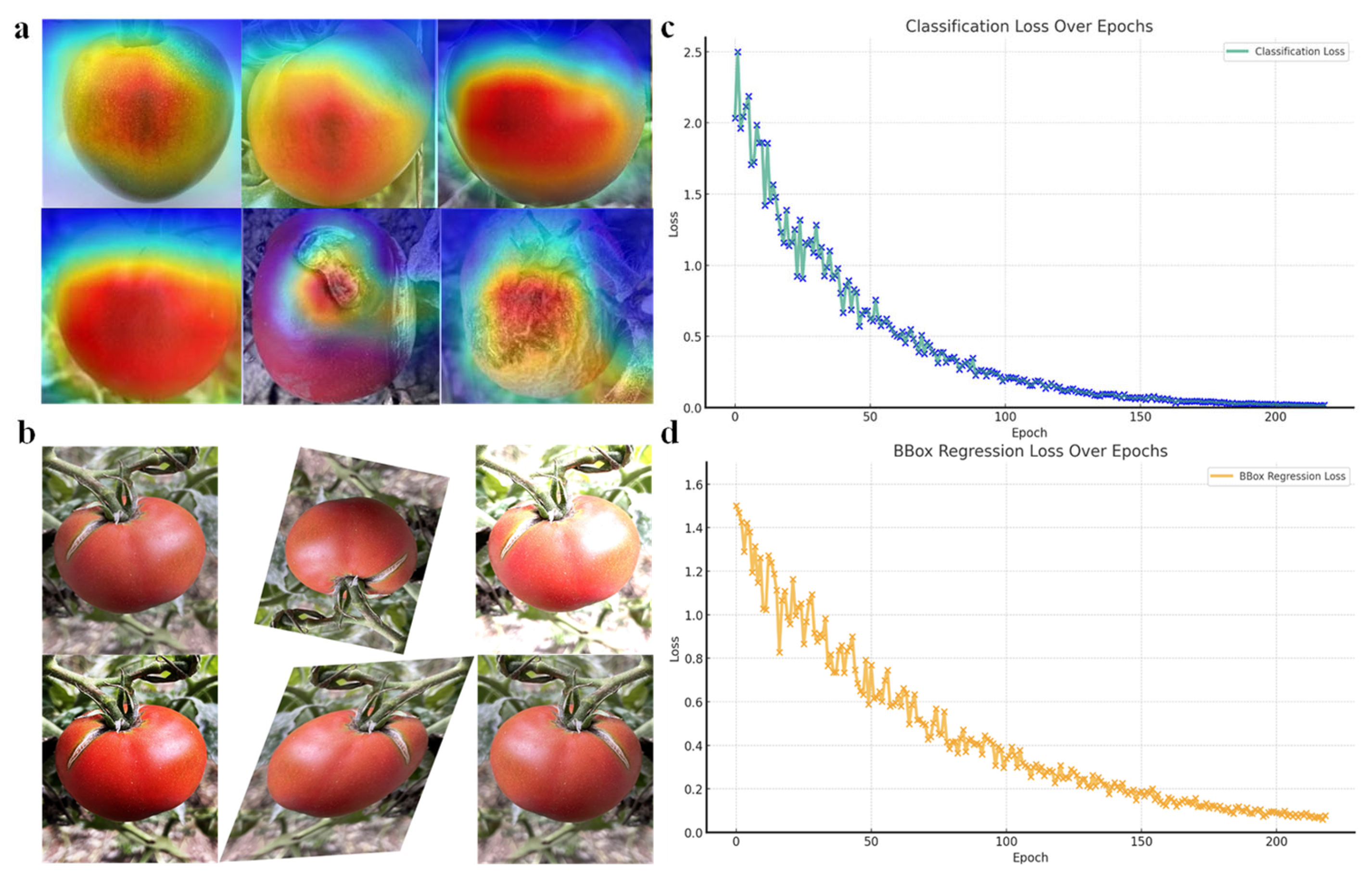

3.1. Performance Analysis of HCRA

3.2. Performance Analysis of DPC

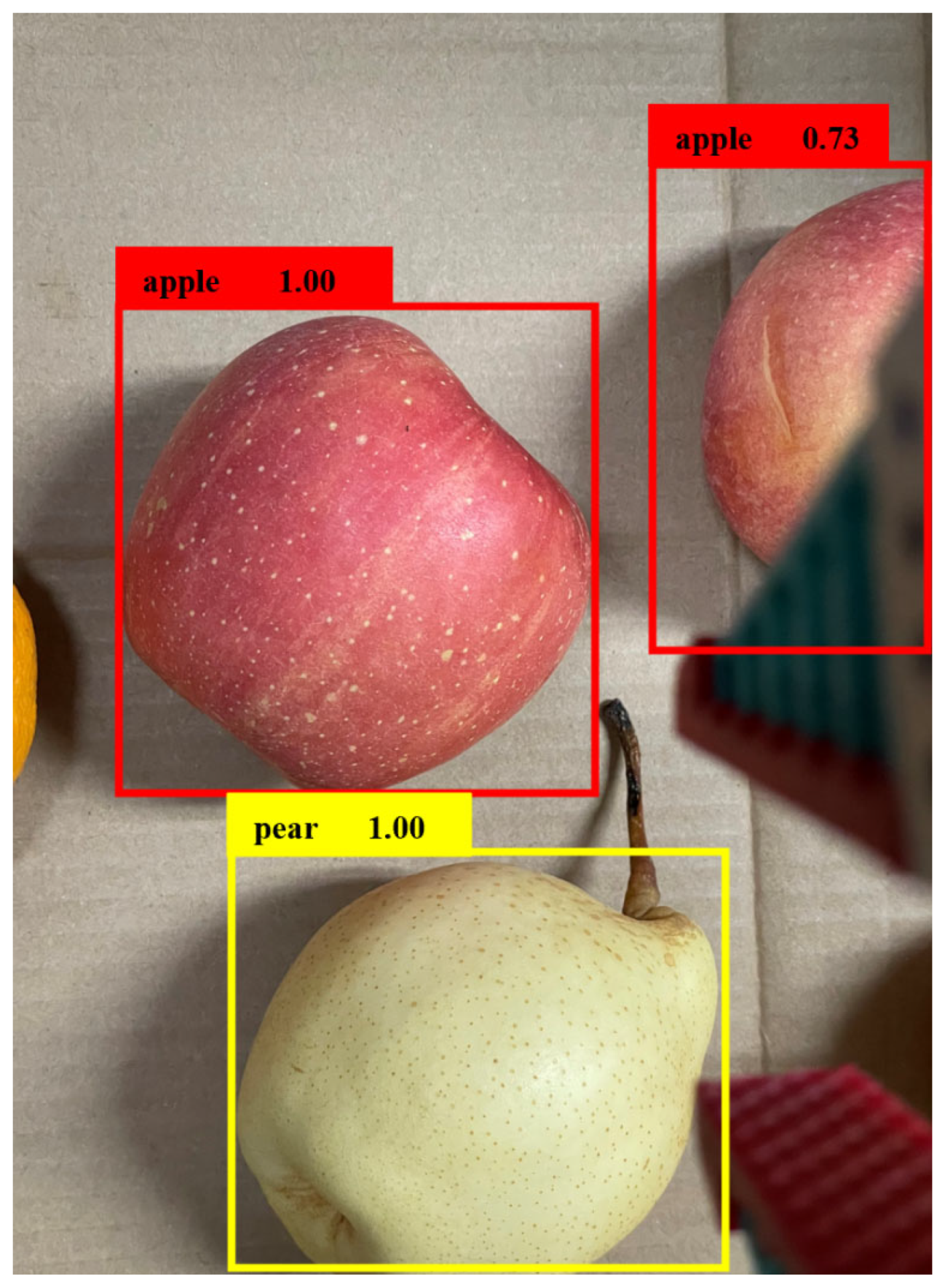

3.3. Performance Analysis of IAM

3.4. Evaluation of CARA

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Degrees of freedom (DOF) | 6 |
| Maximum operational radius (mm) | 625 |
| Weight (kg) | 16 |
| Payload (kg) | 3 |
| Mounting diameter (mm) | φ140 |
| Repeatability (mm) | ±0.02 |
| End-effector velocity (m/s) | ≤1.9 |
| Rated power (W) | 150 |
| Peak power (W) | 1000 |
| Working temperature range (°C) | 0–50 |
| Working environment humidity (RH) | 25–90% |
| Installation method | Arbitrary angle |
| Protection level | IP54 |

| Joint | Motion Limit (°) | Maximum Angular Velocity (°/s) |
|---|---|---|
| Joint 1 | ±360 | 178 |
| Joint 2 | ±360 | 178 |
| Joint 3 | ±360 | 178 |
| Joint 4 | ±360 | 237 |
| Joint 5 | ±360 | 237 |
| Joint 6 | ±360 | 237 |
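The joint motion table can be read as a set of constraints on joint-space moves. The following is a minimal Python sketch, not the authors' controller, that checks a target configuration against the tabulated ±360° limits and estimates the shortest duration of a synchronized move in which all six joints arrive together. It assumes constant joint speeds with instantaneous acceleration (the table gives no acceleration limits), and the names `within_limits`, `min_sync_time` and `joint_speeds` are hypothetical.

```python
"""Illustrative check of a joint-space move against the tabulated joint limits.

Assumption (not from the article): each joint moves at constant speed with
instantaneous acceleration, so the minimum duration of a synchronized move is
set by the joint with the largest |delta angle| / max speed ratio.
"""

# Values transcribed from the joint motion table:
# (motion limit in degrees, maximum angular velocity in deg/s)
JOINT_LIMITS = [
    (360.0, 178.0),  # Joint 1
    (360.0, 178.0),  # Joint 2
    (360.0, 178.0),  # Joint 3
    (360.0, 237.0),  # Joint 4
    (360.0, 237.0),  # Joint 5
    (360.0, 237.0),  # Joint 6
]


def within_limits(q_deg):
    """Return True if every joint angle lies inside its +/- motion limit."""
    return all(abs(q) <= lim for q, (lim, _) in zip(q_deg, JOINT_LIMITS))


def min_sync_time(q_start, q_goal):
    """Minimum time (s) for all joints to reach the goal together."""
    if len(q_start) != len(JOINT_LIMITS) or len(q_goal) != len(JOINT_LIMITS):
        raise ValueError("expected 6 joint angles")
    if not (within_limits(q_start) and within_limits(q_goal)):
        raise ValueError("joint angle outside the ±360° range")
    # The slowest joint (relative to its own max speed) dictates the duration.
    return max(abs(g - s) / vmax
               for s, g, (_, vmax) in zip(q_start, q_goal, JOINT_LIMITS))


def joint_speeds(q_start, q_goal):
    """Per-joint speeds (deg/s) that make all joints finish at the same time."""
    t = min_sync_time(q_start, q_goal)
    if t == 0.0:
        return [0.0] * len(JOINT_LIMITS)
    # Each speed is |delta| / t, which never exceeds that joint's max speed.
    return [abs(g - s) / t for s, g in zip(q_start, q_goal)]


if __name__ == "__main__":
    start = [0, 0, 0, 0, 0, 0]
    goal = [89, -45, 30, 237, 0, 10]
    print(f"{min_sync_time(start, goal):.3f} s")
    print(joint_speeds(start, goal))
```

With this example goal, joint 4 is the bottleneck (237° at 237°/s), so the move takes about 1 s and the other joints are slowed to match. A real trajectory planner would also respect acceleration and jerk limits, which this sketch deliberately ignores.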

| ID | Content | Before Implementation | After Implementation |
|---|---|---|---|
| 1 | Grasping or processing capabilities | None | Precise grasping or processing |
| 2 | Image acquisition functionality | None | Efficient and rapid image extraction |
| 3 | Image processing capabilities | None | Accurate image processing |
| 4 | Image-integrated robotic arm control functionality | None | Precise and stable control |
| 5 | High-configurability end-effector motion capabilities | None | Motion stability and high configurability |

| ID | Suggestion | Suggestion Type |
|---|---|---|
| 1 | Further enhance the stability and robustness of robotic arm movements | Robotic arm functionality |
| 2 | Enrich the variety and functionality of end-effectors | Robotic arm functionality |
| 3 | Further improve recognition frequency and response rate | Image recognition capabilities |
| 4 | Expand CARA’s dimensions and functionalities | Full system performance |
| 5 | Further broaden the recognizable range of agricultural products | Full system performance |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, M.; Wu, F.; Wang, F.; Zou, T.; Li, M.; Xiao, X. CNN-MLP-Based Configurable Robotic Arm for Smart Agriculture. Agriculture 2024, 14, 1624. https://doi.org/10.3390/agriculture14091624
